Abstract

With the rapid development of information technologies, multi-source heterogeneous data has become an open problem, and the data is usually modeled as graphs since the graph structure is able to encode complex relationships among entities. However, in practical applications, such as network security analysis and public opinion analysis over social networks, the structure and the content of graph data are constantly evolving. Therefore, the ability to continuously monitor and detect interesting patterns on massive and dynamic graphs in real-time is crucial for many applications. Recently, a large group of excellent research works has also emerged. Nevertheless, these studies focus on different updates of graphs and apply different subgraph matching algorithms; thus, it is desirable to review these works comprehensively and give a thorough overview. In this paper, we systematically investigate the existing continuous subgraph matching techniques from the aspects of key techniques, representative algorithms, and performance evaluation. Furthermore, the typical applications and challenges of continuous subgraph matching over dynamic graphs, as well as the future development trends, are summarized and prospected.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Over the last decade, information networks such as social networks, communication networks, world wide web, financial transaction networks, etc., have become ubiquitous and pervasive. Apart from their increasing data scale and data types, one of the most important aspects is that the structure and content of the graphs constantly evolve due to the frequent updates in the real world. Taking social networks as an example, there are over 1.4 billion daily active users on Facebook, generating more than 500,000 posts/comments and 4 million likes per minute, leading to a flood of updates on the Facebook social network [2]. In addition, Google+ added 10 million new users in the two weeks since its launch in 2011 [32]. Therefore, the ability to continuously monitor and detect patterns of interest is essential for obtaining meaningful and up-to-date discoveries in such frequently updated graphs. Meanwhile, this monitoring capability is required for many applications from a variety of domains.

-

Social networks. The applications may involve: 1) targeted advertising, spam or fraudulent activities detection [14, 38, 74] and 2) fake news propagation monitoring [66].

-

Protein-interaction network. In a protein-interaction network, by matching a known protein network with a dynamic protein interaction network, the mutated protein structure can be found quickly from the protein interaction network [19, 77].

-

Transportation networks. In transportation networks, we can monitor the real-time traffic accidents by matching the pattern graph with the dynamic road network data graph [66].

-

Computer networks. In dynamic computer networks, based on the continuous subgraph matching technique, we can monitor cyber-attack events or detect anomaly flow in time [17].

-

Knowledge graphs. It can deal with evolving knowledge over time to support continuous question answering [13] and reasoning over RDF graph is also based on such pattern monitoring [6].

Given a dynamic data graph and a query graph, the continuous subgraph matching problem can be described as identifying and monitoring the query graph in the dynamic data graph continuously and reporting the newer up-dates of the matching results. Matching here refers to the same structure and meeting the specific semantic constraint. Now, we take cyber-attack event detection and credit card fraud detection as examples to illustrate how to abstract an event into a pattern graph and the significance of continuous subgraph matching.

Example 1

Figure 1a demonstrates a graph-based description of cyber-attack pattern. A victim browses the compromised website, causing a downloading of malware scripts. Then, the malware scripts establish a communication between the victim and the botnet command and control server. When the victim registers at the botnet command and control server, he receives a command at the same time, leading to an information exfiltration back to the botnet command and control server. Obviously, if we can detect the attack pattern (based on the subgraph matching) in the network, potential malicious activities could be avoided. Recently, Verizon, an American communications company, analyzed 100,000 security incidents over the past decade and found that 90% of them could be easily classified into ten major graph-based attack patterns [7].

Example 2

Figure 1b shows an example of a credit card fraud with a series of transactions modeled by a pattern graph. Criminals try to cash out illegally by making bogus deals with merchants and middlemen. They first establish a credit payment to the merchant; When the merchant receives the real payment from the bank, he transfers the money to the middleman account(s), which further transfers the money back to the criminal to complete the cashing. Apparently, this pattern also can be easily modeled as a query graph. By detecting this pattern query in the financial transaction networks, fraud can be avoided effectively.

Challenges and Limitations. Owing to the importance and practicality of continuous subgraph matching, how to effectively and efficiently find the matching results of a given query graph continuously over the dynamic graphs is a meaningful research topic in the era of big data. By observing its practical application requirements, we identify a list of challenges that the continuous subgraph matching may face:

-

Frequent data updates. The graph data in the network system, such as social networks, data center networks, or financial networks, updates all the time. Thus, traditional static graph matching techniques would need to rebuild an index and rematch the whole graph data for each update, which is a time-consuming and labor-intensive approach.

-

Large-scale data. Due to the constant updates, the amount of dynamic graph data is larger than that of static graph data, increasing the difficulty of graph subgraph matching.

-

Increased demand for real-time analysis. In many real-time analysis applications, whenever the graph is updated, the new matching result requires to be obtained in time; otherwise, the application value of the matching result will be reduced or lost. For example, in network security, real-time monitoring of suspicious data transmission modes is required, and a delay in analyzing the matching results can result in network paralysis.

The primary motivation for conducting this survey is twofold. Firstly, a lot of graph algorithms have been proposed to perform continuous subgraph matchings tasks in recent years. However, existing surveys are somewhat out-of-date, and no new research and recommendations are discussed. The latest survey [39] was published five years ago, and there was only a brief introduction to subgraph matching in the dynamic graph. Secondly, the surveys [33] and [46] only introduce and summarize the existing algorithm from a certain aspect, not comprehensive. Additionally, existing survey [47] solely focus on the subgraph matching algorithms in static graph [36, 63, 85, 87]. These existing surveys did not provide comprehensive reviews on continuous subgraph matching over the dynamic graph data. In particular, Our survey is the first one to illustrate the existing research effort and progress that has been made in the area of continuous subgraph matching in the dynamic graph regarding key techniques, representative algorithms, and performance evaluations. Meanwhile, there are some related surveys, such as pattern mining [28] and community search [27]. However, the former focuses on graph mining in dynamic graphs while the latter focuses on community retrieval over big graphs. Although both of these technologies require subgraph matching algorithms, it is not the focus of their technical optimization.

In this survey, we introduce the existing continuous subgraph matching methods which committed to solving the above problems. First, we categorize the research of continuous subgraph matching systematically according to the different practical application requirements. And then, we discuss two different types of continuous subgraph matching approaches based on the update methods of the dynamic graph. Among them, continuous subgraph matching for graph structure change is the most commonly study, and the incremental matching approach is the most advanced method applied to solve this problem, which attempts to identify changes to previously matched data in response to updated dynamic graph data to meet the increased demand for the real-time analysis.

The rest of the paper is organized as follows. Section 2 provides a detailed definition of continuous subgraph matching and classification. Sections 3 and 4 describe the concepts of and matching techniques for structure-based and content-based changes, respectively. Section 5 compares the performance of different matching algorithms. Section 6 introduces the current applications of continuous subgraph matching. Section 7 presents a list of future topics, and Sect. 8 presents the conclusion.

2 Definition and classification of continuous subgraph matching

In this section, we first formally introduce continuous subgraph matching and then have a comprehensive classification of continuous subgraph matching from a variety of different methodological perspectives.

2.1 Preliminary definition

The graph is usually is defined as (V, E, L), where V is a set of vertices, E is a set of edges between, and L is a label function that associates each vertex v \(\in \) V with a set of labels. Any edge \(e \in E_G\) in the graph can be noted as \((v_i, v_j)\), where \(v_i, v_j \in V_G\). In this paper, we use \(G=(V_G,E_G,L_G)\) to represent data graph, and \(P=(V_P,E_P,L_P)\) to represent pattern graph. Currently, there are two data graph types: super-large graphs, such as social networks, or a combination of many small graphs, such as the AIDS Antiviral dataset [5] that comprises numerous small pictures showing the atomic structure of chemicals. As the current continuous subgraph matching problem mainly relates to super-large graphs, this paper concentrates on continuous subgraph matching for super-large graphs.

A dynamic graph, also known as a streaming graph, is a graph that evolves over time. There are two categories of dynamic graph updates, namely (1) graph structure updates, where the vertices and edges in the graph are inserted or deleted over time, causing changes in the graph structure; (2) graph content updates, where the content or attributes of the data objects in the graph are updated, resulting in content change.

The definition of the dynamic graph is given in the next section. As the current research is mainly focused on the structural update of the data graph, the definition of the dynamic graph is given in the graph structure update.

2.1.1 Graph structure update

Definition 1

(Graph Update Stream) A graph update stream \(\Delta g\) is construct with a sequence of update operations \((\Delta g_1,\Delta g_2,\cdots )\), where \(\Delta g_1\) is a triple \(\langle op, v_i,v_j\rangle \) such that \(op=\{I,D\}\) is the type of operations, with I and D representing edge insertion and edge deletion respectively of an edge \((v_i,v_j)\).

A dynamic graph abstracts an initial graph \(G\) \(=\) \((V_G,E_G,L_G)\) and an update stream \(\Delta g\). After applying \(\Delta g\) to G, it transforms to \(G'\). Note that insertion operation creates new edges between vertices and could also add new vertices. The same does for deletion operations.

Definition 2

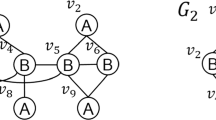

(Subgraph isomorphism) Given two graphs \(G_1\)=\((V_1,E_1,L_1)\) and \(G_2\)=\((V_2,E_2,L_2)\), an embedding of \(G_1\) in \(G_2\) (or, from \(G_1\) to \(G_2\)) is an bijective function f: (1) \(\forall v \in V_1\), \(L_1(v)=L_2(f(v))\); and (2) \(\forall (v_1, v_2)\in E_1\), \((f(v_1),f(v_2))\in E_2\).

Example 3

In Fig. 2, the dashed box shows the subgraph \(M=\) \([(v_1,u_3)\),\((v_2,\) \(u_6)\),\((v_3,u_5)\),\((v_4,u_8)]\) that matches the pattern graph P after the initial data graph G is changed to \(G_1\) with the update operation \(\Delta g=\{\langle I,u_5,u_8\rangle \}\).

Recently, graph functional dependencies [25], keys [21], and association rules [24] are all defined based on subgraph isomorphic matching [62]. However, it is a NP-complete problem due to the exponential search space resulting generated by all possible subgraphs [65] [35]. Subgraph isomorphism often imposes stronger topological constraints on the matching process to obtain more consistent matching results. Some applications, such as social networks, do not require high matching accuracy, which greatly restricts matching efficiency. In view of its intractability, approximate matching has been studied to obtain inexact solutions, which allows vertex/edge mismatching [9, 70]. Differs from approximate matching, simulation matching does not allow vertex/edge mismatching. The simulation matching is described in more detail below.

According to the degree of the matching approximations, it is divided into Bounded simulation [23], Graph simulation [53], Dual simulation [50], Strong simulation [50] and Strict simulation, all above are collectively called simulation matching. Bounded simulation matching relaxes the strict structural constraints of isomorphism matching by moving from edge-to-edge mapping to edge-to-path mapping. If a data vertex u matches a query vertex v, they only need to satisfy that \(L_P(u)\)=\(L_G(v)\) and \(L_P(u')\)=\(L_G(v')\), where \(u'\) is one of the descendant vertex of u and \(v'\) is one of the child vertex have of \(v'\). Compared with bounded simulation, graph simulation requires that \(u'\) is one of the child vertexes of u. It means that vertex u maintains the same successor relationship with the corresponding vertex v. The dual simulation further requires u to maintain the same precursor relationship with v. On the basis of dual simulation, strong simulation requires that the radius of the subgraph containing the matching vertex(the subgraph may contain non-matching vertices) is not larger than the diameter of the pattern graph. Strict simulation matching is the strictest one of simulation matching, and it further requires the subgraph composed entirely of the matching vertices.

Note that simulation matching can tolerate some noise and errors in matching results. Thus, it plays an important role in some applications such as social network and Web network analysis. Although differences between the results of simulation matching and subgraph isomorphism remain, simulation matching results usually meet the needs of practical applications. Hence, it is still regarded as the correct matching result. Take graph simulation as an example, and it is defined as follows:

Definition 3

(Graph simulation) Given a pattern graph \(P=(V_P,E_P,L_P)\) and \(G=(V_G,E_G,L_G)\), graph simulation refers that there is a binary relationship \(S\) \(\subseteq \) \(V_P\) \(\times \) \(V_G\) between P and G, which satisfies that:

-

(1)

\(\forall \) \((v, u)\) \(\subseteq \) \(S\), \(L_G(v)\)=\(L_P(u)\).

-

(2)

\(\forall \) \(v\) \(\in \) \(V_P\), we have: (a) \(\exists \) \(u\) \(\in \) \(V_G\) with \((v,u)\) \(\in \) \(S\), and (b) \(\forall \) \((v, v)\) \(\in \) \(E_G\), \(\exists \) \((u,u')\) \(\in \) \(E_G\) with \((v',u')\) \(\in \) \(S\).

Example 4

As shown in Fig. 3, the vertex \(u_2\) in the initial data graph G has the same label as \(v_2\) in the pattern graph P. Moreover, \({u_2}'s\) successor \(u_4\) has the same label as \({v_2}'s\) successor \(v_4\). Therefore, we can infer that \(u_2\) matches \(v_2\). Similarly, because \({v_3}'s\) successor in the pattern graph P is empty, we can infer that \(v_3\) matches \(u_3\). As there is no vertex in \({u_4}'s\) successor and its label is the same as \({v_4}'s\) successor, \(v_3\) , \(u_4\) and \(v_4\) do not match. Therefore, according to the definition of graph simulation matching, for \(t=1\), after the initial data graph G was updated with the operation \(GC_2=\{\langle I, u_4, u_6\rangle \}\), \(u_4\) and \(v_4\) met the matching conditions. Furthermore, the subgraphs \(M_1=[(v_2, u_2), (v_3, u_3), (v_4, u_4)]\) and \(M_2=[(v_2, u_2), (v_3, u_6), (v_4, u_4)]\) matched the pattern graph generated. Note that, although the structure of \(M_2\) is different from that of the pattern graph P, it is still considered as a match.

2.1.2 Graph content update

Graph content update means that the labels of the vertex/edge or specific object evaluation in the graph can change with time.

Example 5

As shown in Fig. 4, for time \(t=1\), the label of \(u_3\) and \(u_6\) in the initial data graph G were changed. The dotted box shows the subgraph \(M = [(v_1, u_3), (v_2, u_6), (v_3, u_5), (v_4, u_8)]\), which matches the pattern graph after the data graph is updated.

2.2 Classification of continuous subgraph matching

In this subsection, we classify continuous subgraph matching according to five methodological perspectives and introduce the representative algorithms for each class. Since each classification is not independent, there are intersections between the algorithms for each classification.

2.2.1 Dynamic feature of data graph

From the dynamic feature of data graph, continuous subgraph matching is divided into structural change and content change based on whether the topological structure of the graph is changed. Currently, many studies about continuous subgraph matching mainly focus on structural changes caused by the dynamic addition and deletion of vertices or edges in the data graph. It is mainly used in network anomaly attack detection and social network relationship detection. Continuous subgraph matching for structure change was first proposed by Wang et al. [75] in 2009. They constructed a node-neighbor tree (NNT) to filter the matching candidate set, which can effectively reduce the negative matching results. Later, some representative algorithms are proposed, including IncIsoMatch [23], SJ-Tree [16], graph simulation (DDST [66], and IncBMatch [23]), which further improve the execution efficiency of subgraph matching.

Content changes for graph mainly appears in the data center. Many studies have abstracted the network topological structure of the data center into a data graph for data analysis, where each vertex in the graph represents a server, and the edges between vertices represent the links between servers. In this case, the topological structure of the graph data does not change frequently, but the labels of the vertices (i.e., the amount of free memory of the server) and edges (i.e., the effective bandwidth of the link) in the data graph change frequently over time. In order to deal with this situation effectively, BoZhong et al. [88] proposed the Gradin algorithm. It put forward a N-dimensional grid index for tracking the evolution data and can also be directly applied to the frequently updated edge labels in a data graph.

2.2.2 Strength of consistency constraint

From the strength of consistency constraint, according to whether a bijective function or binary relationship is used, continuous subgraph matching can be divided into subgraph isomorphism and simulation matching, respectively. Subgraph isomorphism guarantees that the matching results are completely consistent with the pattern graph. It is mainly used for protein-interaction network analyzing [39], network abnormal behavior monitoring [17] and other data analysis applications with strict structural requirements.

While simulation matching obtains the results via binary relationship, and the matching results are approximate matches. First, it generates a matching candidate set of each query vertex according to the label of the data vertex and then filters out unmatched vertices according to the different approximate matching degrees of the precursor and successor of the vertex in the pattern graph. Representative algorithms include DDST [66] and IncBMatch [23], etc. Particularly, the results obtained by the IncBMatch algorithm satisfy the matching conditions even if the structure of the subgraphs is different from the pattern graph. Meanwhile, the matching process of IncBMatchcan is performed in polynomial time with higher efficiency. Simulation matching is more flexible, improves the matching efficiency, and identifies more useful matching results. Hence, it is mainly used for monitoring traffic accidents on the road network [66], and detecting the relationship between people and various groups [23] (such as the drug transaction relationship network). This graph data analysis application focuses more on mining the relationship between vertices.

2.2.3 Type of matched results

From the type of matched results, a continuous subgraph matching algorithm is classified as an exact algorithm or approximate algorithm due to the accuracy of the obtained matching results. Exact algorithms ensure that the matching results are completely accurate and are mainly used in many fields such as network anomaly detection and biological data analysis, which rely on accurate matching results. The examples of exact algorithms used to match data graphs and pattern graphs include IncIsoMatch [23], SJ-Tree [16], and Gradin [88].

However, many applications that use real-time results require that all matching results be returned quickly, making complicated exact matching algorithms unsuitable. Coincidently, these applications could also tolerate some vertex/edge mismatches (i.e., false-positive results). To address these problems, the approximate subgraph matching algorithm is generated. It is different from simulation matching as it is usually based on mathematical models such as probability statistics. The error ratio of the matching results can be kept within a certain range through parameter adjustment. All research works which presented NNT [75], Replication mechanism [55], and SSD [30], proposed an approximate graph matching algorithm that converts the graph matching problem into a more easily solved problem, trading matching accuracy for matching efficiency.

Note that the exact algorithm and approximate algorithm are two algorithms that determine the accuracy of matching results, while subgraph isomorphism and simulation matching are different types of graph matching models.

2.2.4 Computational environment

From the computational environment, continuous subgraph matching can either be centralized or distributed according to whether it is deployed on a distributed platform or single machine. Centralized continuous subgraph matching runs on a single computer with either join-based or exploration-based matching methods and mainly handles small-scale graph data. The join-based matching method decomposes the query graph, matches each query fragment, and then connects the obtained matching results. Generally, this method needs to build an index. The exploration-based method [69] starts from a vertex in the data graph and explores the entire data graph according to the query graph’s structure. Differ from join-based matching. This method does not need to build an index.

Distributed continuous subgraph matching mainly depends on a distributed parallel graph processing framework to process dynamic graph data with a large scale and high computational complexity. Currently, there are three types of mainstream distributed parallel graph computing systems, namely vertex-centric model (including Pregel [51], Trinity [64], Giraph [1], and GraphLab [49]), block-centric model (Blogel [78], BLADYG [11]), and auto-parallelization model (GRAPE [26]). Among them, vertex-centric and block-centric models need to modify the algorithm according to the characteristics of the model, which is difficult for the unfamiliar user to recast the parallel models. In contrast, the auto-parallelization model does not need to require that; it simply requires the user to provide three sequential (incremental) algorithms for the data graph with a small amount of content. The logic of the existing algorithm does not need to be modified. Additionally, all the three distributed parallel graph computing systems follow the BSP model [72] and the exploration-based approach is generally adopted for distributed matching owing to the need for the incremental updating of matching results.

Table 1 shows the performance of different types of distributed parallel graph computing systems in terms of shortest path query. Using the US road network as a data set, it can be found that the performance of the GRAPE system is significantly better than other types of systems.

2.2.5 Dependency relationship of queries

From the dependency relationship of queries, continuous subgraph matching can also be divided into single query answer or multi-query answer according to whether it expresses the continuous subgraph queries as one or many updated graph streams. The existing algorithms mainly contribute to single query answer, where queries are set to be isolated and evaluated independently. Currently, the single query answer technique for the dynamic graph is quite mature, as seen in NNT [75], SJ-tree [16], and SSD [30], where algorithms use a-query-at-a-time approaches: optimizing and answering each query separately. They do not allow batch processing of multiple queries. However, sequential processing is not always most efficient when multiple queries arrive [62].

Zervakis et al. [83] first proposes a continuous multi-query process engine, TRIC, over the dynamic graph. To make full use of the information from multiple queries, it decomposes them into several covering paths and constructs an index to organize the paths. Whenever an update occurs, it continuously evaluates queries by taking advantage of the shared limitations in the query set. The key point of multiple queries is to (1) quickly detect the affected queries for each update and (2) avoid expensive joins and explore an approach for larger sets of queries.

In summary, each of the algorithms and classifications presented is different and solves different graph matching problems. Table 2 summarizes the representative continuous subgraph matching algorithms and their classifications. The following sections will detail the continuous subgraph matching technique based on structure- and content-based change. In each section, the main problems will be analyzed and compared, and representative algorithms and research status of each matching technique type will be discussed. Since most current research revolves around the problem of continuous subgraph matching for structure-based change, the main focus of this paper will be on the latest research progress on graph matching techniques for structure-based change.

3 Continuous subgraph matching for structure-based change

Continuous subgraph matching for structure-based changes is the most widely used technique currently. From the perspective of algorithm design, two techniques currently exist snapshot-based matching (Sect. 3.1) and incremental matching (Sect. 3.2). The snapshot-based matching technique treats the updated data graph for each timestamp as a static graph to perform a matching algorithm. In contrast, the incremental matching technique only analyzes and matches the updated parts of the data graph, avoiding the unnecessary calculation of rematching the overall data graph. Furthermore, the finer-grained classification of the incremental matching technique is described in detail in Sect. 3.2. In Fig. 5, we summarize the classification and representative algorithms of the continuous subgraph matching technique for structural change.

3.1 Snapshot-based matching technique

Snapshot-based matching technique over dynamic graph is regarded as a large-scale static graph matching problem since each updated data graph for each moment is a static graph. Matching algorithms are then performed on these continuous static graph streams. It is usually suitable the cases where there are numerous added edges. In this case, adding all the edges can be completed simultaneously, and the matching algorithm is performed on the updated data graph snapshot. Therefore, the snapshot-based matching technique includes two parts, an update operation and a matching operation. The following is a discussion of these two operations.

3.1.1 Data graph update

The continuous subgraph matching problem was first discussed in [75] by Wang et al. In this study, continuously updated graph data was regarded as graph streams, and a snapshot-based pattern matching algorithm, the NNT algorithm, is proposed, which has achieved good performance. The NNT algorithm constructed a node-neighbor tree(NNT) for each vertex u in both data graph G and pattern graph P, which is denoted as NNT(u). For the data graph G, given a depth value L, \(NNT(u) (u \in G)\) stores all the paths in the data graph G, which regards u as the root vertex, and the length does not exceed L. As shown in Fig. 5a, \(T_1, T_2, T_3,\) and \(T_4\) are NNTs with a depth value \(L=2\) corresponding to vertices 1, 2, 3, and 4 in the data graph G. The capital letters indicating the labels of the vertices, the contents of \(T_1\) and \(T_2\) are the same. Figure 6b shows an inverted index for NNTs to find the vertices and edges in each NNT that match the vertices and edges in the data graph, where \(*\) represents the omitted part in the inverted index.

The update operations for structure changes over dynamic graph include deleting edge operations and adding edge operations. The following discussions show how each operation uses the inverted index based on the NNT to update the index.

Delete edge operation: Assuming that an edge in a data graph is deleted at a certain time, the corresponding edges and sub-edges in all NNTs have to be deleted simultaneously through the inverted index. For the data graph G in Fig. 5, if edge (1, 3) is deleted, edge (a, c), sub-edges (c, e) and (c, f), and the vertices associated with these edges \( T_1 \& T_2\) are removed from the inverted index.

Add edge operation: If an edge is added to a data graph at a certain time. First, the vertices in each NNT, corresponding to the node of the added edge, are found based on the inverted index. Then, the newly generated path in the data graph is added to the NNT, starting from the vertex of the corresponding node. For the data graph G in Fig. 6, if edge (1, 4) is added, in \(T_1\), the path \((a \rightarrow g \rightarrow h)\) is added from vertex a, where the attributes of vertices g and h are C and B, respectively. In \(T_2\), the path \((b \rightarrow g)\) is added from vertex b, where the attribute of g is C. The adding edge operation is completed when all paths in the NNTs are added. Finally, the inverted index needs to be updated by adding the newly added vertices and edges. Similarly, the same operation needs to be performed on vertex 4 to update the index structure.

The NNT algorithm matches the pattern graph with the snapshot of the data graph at each moment, which does not reflect the evolution process of the graph over time. Yang et al. [81] first proposed an index structure, BR-Index, for large-scale dynamic graphs, which divided the data graph into a series of overlapping index regions. And each region contained several independent core regions. It extracted the maximum features (small subgraphs) of each index region and then established a feature lattice for maintenance and lookup. Meanwhile, it also maintained a vertex lookup table based on the hash principle. The table was used to store the data vertices in each index region and which core region the vertex is in the index region. Thus, the updated part can be located quickly using the lookup table when a data graph is updated.

Additionally, Song et al. [66] proposed a subgraph matching algorithm, DDST, based on a time window, which was the first work to impose a timing order constraint on graph streams, where the window contained all the snapshots that met the time constraint. Moreover, there was a time constant on each edge that recorded the generation time of the edge. Based on this timestamp, it was possible to determine whether a legitimate data subgraph is within a given time window. Only when the legal data subgraph in the window met the matching conditions, it would become a matching result. Compared with the NNT algorithm, the advantage of a time window approach is its ability to evolve the graph over time and that it conforms better to practical applications.

Furthermore, Khurana et al. [42] proposed a tree-like index structure, DeltaGraph. It is a rooted and directed graph where each leaf node stores a snapshot arranged in time order, and the internal nodes store a graph made up of combining lower-level graphs. Here, the root node represented the source node, the leaf node represented the target node, and each edge held information from the source and target nodes. When the data graph performed an add or delete edge operation, this index structure added a snapshot of the data graph at that moment to the leaf node. The information stored with each edge is called edge delta. And it is sufficient to build the source node (i.e., root node) of the graph corresponding to the target node (e.g., leaf node) of the graph, so that a specific snapshot can be created from the root to the snapshot by traversal the path. When the data graph performed an add or delete edge operation, this index structure only needed to add the snapshot of the data graph at that moment to the leaf node.

3.1.2 Subgraph matching

When using the subgraph isomorphism method in the matching process, the time complexity is very high. For example, for the NNT algorithm using the subgraph isomorphism method for matching, the time complexity can be described as \(O(n_1 *n_2(|T_1|^{1.5} / log|T_1|)|T_2|)\) [81], where \(n_1\) represents the number of vertices in the pattern graph, \(n_2\) represents the number of vertices in the data graph at each time, \(T_1\) and \(T_2\) represent the maximum number of NNTs corresponding to the pattern graph and data graph. This shows that the subgraph isomorphism method severely restricts the execution efficiency of the NNT algorithm; therefore, it does not meet the real-time requirements of continuous subgraph matching and should be avoided as much as possible.

NNT algorithm uses an approximate algorithm, which performs subgraph matching by comparing the paths of two vertices in the NNT. If all the paths in the NNT constructed by each pattern graph vertex can find a match in the NNT constructed by the corresponding data graph vertex, it indicates that the pattern graph matches the data subgraph. The time complexity of this subgraph matching method is \(O(n_1n_2r^l)\), where r represents the maximum degree of the data graph node, and l represents the selected depth value. Because each matching operation requires matching all possible vertices, the overhead is still very high. Thus, the algorithm also proposes a coding method to convert the NNT into a numerical vector and counts the number of different paths in all NNTs to further reduce the computing overhead.

As shown in Fig. 6a, there are eight different paths in \(T_1, T_2, T_3\), and \(T_4\); hence, the NNT of each vertex can be represented by an 8-dimensional vector, where the i-th dimension records the number of i-th paths in the NNT. Consequently, the pattern graph and subgraph can be converted into a multidimensional numerical vector. If the value of each dimension in the pattern graph is less than or equal to the value of the dimension in the subgraph, it means the subgraph meets the matching conditions. The matching time complexity based on the above optimization is \(O({{\overline{L}}}n_1n_2)\), where L represents the number of non-zero items in the numerical vector.

During the subgraph matching process, the BR-Index algorithm first extracts the features (subgraphs) set of the pattern graph. Then based on this extracted set, it finds the index regions in the data graph that contain some of these features. Next, it finds the candidate sets of these features in the corresponding index region. Finally, the candidate sets are combined to obtain the matching result.

In comparison, the DDST algorithm uses a simulation matching method to complete subgraph matching. As simulation matching is more flexible than isomorphism matching, it can identify more useful results. However, in subgraph isomorphism traditional graph simulation extends edge-to-edge mapping to edge-to-path mapping and imposes a more flexible topological constraint, resulting in imprecise results. To solve this problem, the DDST algorithm adds two constraints Dual simulation and Locality [50] to graph simulation and constructs a signature for the pattern graph. The signature includes the labels of the edges and vertices, as well as the in- and out-degree information of all vertices. If the signature of the data subgraph is consistent with the pattern graph, it sequentially uses the binary simulation. Simultaneously, it presents the time order of the edges in the pattern graph. If the time attribute of each edge in the data subgraph meets the time order in the pattern graph, it meets the matching condition. Further, DeltaGraph uses a distributed parallel graph processing framework to complete subgraph matching; it stores snapshots in memory. Given a pattern graph, it can directly find a matched snapshot starting from the root using the information on the edge.

In summary, the subgraph isomorphism method has a low computation efficiency and is very time-consuming. Therefore, the approximate matching algorithm and simulation matching are better candidates for the design of snapshot-based matching methods. The approximate algorithm uses a small number of false-positive results to reduce the matching time, and the simulation matching uses the verification of binary relationships to replace the subgraph isomorphism. Simulation matching is mainly applied for applications that focus more on mining relationships between vertices. Finally, the application requirements determine the most suitable subgraph matching algorithm.

3.2 Incremental matching technique

Using the snapshot-based matching method over dynamic graphs will cause many redundant calculations even though there is only a small number of update operations. In response, Wenfei Fan et al. [23] first applied the incremental processing technique to continuous subgraph matching by proposing the IncSimMatch algorithm and IncBMatch algorithm, which only analyzed and matched the updated parts of the data graph. In addition, they compared the two algorithms with their batch processing (snapshot) algorithms, Match\(_{s}\) and Match\(_{bs}\) , respectively. The experimental results showed that when the number of addition or deletion edge operations did not exceed a certain percentage of the total number of edges in the initial data graph, the execution efficiency of the incremental matching technique was higher.

Incremental matching means that given a data graph G, a pattern graph P, an initial pattern graph matching result P(G), and a set of update operations for data graph \(\Delta G\), a newly added matching result set \(\Delta O\) will be calculated after the data graph update. This is \(P(G \oplus \Delta G) = P(G) \oplus \Delta O\), where \(\oplus \) represents the operator used to add the changed content to the original data.

In the matching process, the incremental matching technique can either use direct computation approach or construct an auxiliary data structure to utilize the intermediate results. The advantages and disadvantages of the two methods are discussed in detail below.

3.2.1 Direct computation

When an edge is added or deleted, it may only affect a small part of the subgraph. Therefore, Wenfei Fan et al. [23] proposed an incremental algorithm, IncIsoMat, based on direct computation with the graph simulation method. It identifies and extracts a subgraph \(G'\), which can be affected by \(\Delta O_i\). It executes a subgraph matching method on \(G'\) to obtain the change to the original matches by determining the set difference between the original matches and new matches. Wenfei Fan et al. [22] further proposed another direct computation method, IncISO, for the incremental subgraph isomorphism problem. When the data graph is updated, only the data graph nodes within the diameter range of the pattern graph around the updated edge are rematched to avoid double calculation of the entire data graph. The cost of IncISO can be represented as a function of |P| and \(|G_{d_P}(\Delta G)|\), instead of the entire graph size |G|, where \(G_d(v)\) represents the \(d-\)neighbor subgraph of v that are within d hops.

Although the above algorithms reduce redundant calculations by matching subgraphs in the affected small-scale subgraphs, they also create new performance problems. These approaches based on repeated search may incur significant overhead in extracting the affected subgraph \(G'\) (resp. \(G_d(v)\)), performing subgraph matching on \(G'\) (resp. \(G_d(v)\)) and computing the newer matching for each \(\Delta O_i\). Moreover, due to the average distance between two vertices is extremely small in real-world graphs [71], the extracted subgraph \(G'\) (resp. \(G_d(v)\)) could include most of the nodes and edges in G, leading to a useless optimization.

In terms of graph databases that handle continuous subgraph matching, many existing graph databases, such as Neo4j [3] and OrientDB [4], only support one-time subgraph queries which evaluate the subgraph on each snapshot of the data graph. However, due to the real-time demand, many applications are required to handle continuous subgraph queries. To solve this problem, Kankanamge et al. [40] presented an active graph database, Graphflow, which supports incremental subgraph evaluating for each update. Internally, the system’s query processor is based on Generic Join [58], which is essentially a worst-case optimal join algorithm [57, 73]. In addition, Ammar et al. [10] further extend the worst-case optimal join to the distributed system.

Given a pattern graph \(P=(V(P), E(P))\), the generic join first determines a query node order \(s = \langle u_1, u_2, \ldots ,u_{|E(P)|}\rangle \), and then, implements a multi-way join using the multi-way intersection operation on the query nodes in turn, according to order s. Each order of the query node can also be considered as a query plan. In the problem of subgraph matching, the multi-way intersection can be achieved using the intersection operation on adjacency lists matched by one or more query nodes. In the process of implementation, the order, s, of query nodes ensures that, for any k less than |E(Q)|, the induced subgraph composed by \(s = \langle u_1, u_2, \ldots ,u_k \rangle \) is connected. Here, the induced subgraph is represented as \(P_k\). The entire process of a generic join contains two basic operations:

-

Scan: For first two nodes on the query node, order \(s = \langle u_1, u_2, \ldots ,u_{|E(P)|}\rangle \) is obtained directly by scanning the adjacency list of the graph.

-

Extend/Intersect: When obtaining the \(P_{k-1}\) match, the matches of \(P_k\) can be calculated through the Extend/Intersect operator. For any match of \(P_{k-1}\) , each match of the query nodes adjacent to \(u_k\) starts along its adjacency table to acquire all possible matches for \(u_k\); then, these matches are intersected to obtain the final matches for \(u_k\).

Although Graphflow can handle the continuous subgraph query based on Generic Join without calculating the set difference, it still needs to compute the join operation from scratch for each \(\Delta O_i\) even if the \(\Delta O_i\) does not cause any new matches.

3.2.2 Auxiliary data structure

Although the incremental matching algorithm with direct computation is performed on a small-scale graph affected by \(\Delta O_i\), it still needs to perform subgraph matching on the small graph from scratch for each update, leading to high calculation costs. To solve this problem, an auxiliary data structure can be constructed to keep track of the (partial) results of the previous computation. Then, for each recent update, the newer results can be obtained easily.

In the process of constructing an auxiliary data structure, the intermediate results can either be stored in the query graph or data graph, which is called query-centric representation or data-centric representation, respectively. After the auxiliary data structure is constructed and the candidate set of all query vertices is found, the subgraph matching operation of the query graph is performed. The following is an in-depth introduction to subgraph matching based on the two different auxiliary data structures.

Query-Centric Representation Query-centric representation is the more commonly used, which stores a set of candidate data vertices for each query vertex, and partial matches can be obtained by traversing the query graph. For the subgraph matching process, continuous subgraph matching is similar to static subgraph matching. Based on whether a pattern graph needs to be decomposed, it can use either a join-based matching or an exploration-based matching technique. Further, graph simulation-based matching technique has also obtained good experimental results in continuous subgraph matching. An in-depth introduction to these three methods is provided below.

(1) Join-based matching technique

For a large pattern graph, it can be time-consuming to rematch the entire pattern graph every time the data graph is updated. Therefore, rather than looking for a match to any edge of the entire graph or the query graph, it is better to divide the query graph into smaller subgraphs and then search for them. As shown in Fig. 7, the pattern graph P is decomposed into a series of smaller pattern subgraphs, expressed as \(\langle P_1, \ldots ,P_k\rangle \). These pattern subgraphs have then tracked the matches with individual subgraphs and combined the matches to produce progressively final matching results for the entire pattern graph.

The join-based method is widely used in static subgraph matching. The representative algorithms include the gIndex algorithm [80] and GraphGrep algorithm [31]. The join-based method mines features using recognition capabilities from the data graph, such as path [31, 87], tree [63, 82] and subgraph [15, 37, 80]. Based on these features, it can build an index on the data graph and decompose the pattern graph. However, simply applying this method to the continuous subgraph matching process will produce a series of problems. For example, the gIndex algorithm [80] would need to mine the features of the data graph subgraph at each moment. Therefore, this method is unsuitable for continuous subgraph matching processing with real-time analysis requirements. Although the GraphGrep algorithm [31] meets the real-time requirements, it may produce several negative results by only using the path; therefore, it is not highly recommended for verification and filtering applications. Notably, when applying the join-based method to continuous subgraph matching analysis, the efficient extraction of the data graph features is the main challenge.

Data graphs constructed in social network applications are often multi-relational graphs. The properties of edges represent the connectivity and relationship between entities. Therefore, in this application, the relationship between data graph entities (i.e., type of edge) can be used as a data graph feature to decompose the pattern graph. Choudhury et al. [16] proposed a tree-like auxiliary data structure called the subgraph join tree (SJ-Tree). The SJ-Tree is a binary tree whose root node represents the pattern graph, and its child node represents the subgraph of the pattern graph. And then, the subgraph of each node is further decomposed to obtain their child node. The leaf nodes represent the final decomposition results.

Decomposition of social query in SJ-Tree (Taken from [16])

As shown in Fig. 8, each node in the SJ-Tree stores the information of its sibling and parent nodes and maintains a hash table to store the matching results of the node. When the data graph is updated, an iterative search of all the leaf nodes on the SJ-Tree is conducted to obtain the matching result that contains the newly added edge; this matching result is stored in the hash table of the corresponding node in the SJ-Tree. Simultaneously, where possible, the matching result of the leaf nodes is integrated with the matching result of its sibling nodes to form a larger matching result. The larger matching result is stored in the hash table of the parent node. Furthermore, a join order is defined, where the individual matching subgraphs will be combined. The join operation is complete when a result that matches the entire pattern graph is generated. Figure 8 shows an example of a social query decomposition with SJ-Tree. The leaf nodes candidate set of SJ-Tree {(“George”, “friend”, “Join”)} and {(“Join”, “like”, “Santana”)} can be connected and integrated to be a greater matching result {(“George”, “friend”, “Join”), (“Join”, “like”, “Santana”)}.

Although SJ-Tree can perform graph queries in an exponential time relative to its height, it still has a limitation. In Fig. 8, since the “friend" relationship frequently occurs in the data graph during the subgraph matching process, it will undoubtedly take a considerable amount of time to track all the edges matching “friend". Therefore, matching the “friend" edge in the pattern graph can be postponed, and the decomposition result with relatively infrequent occurrences in the data graph is matched first. Based on this idea, Choudhury et al. [18] proposed a Lazy-search algorithm. Given an initial data graph G, the selectivity of a k-edge subgraph g in graph G is the ratio of the number of occurrences of g to the number of all k-edge subgraphs in G, where g is called the selectivity primitive. To both limit the computational cost of subgraph isomorphism and keep the selectivity primitive effective when a data graph updates, unilateral or bilateral subgraphs are generally selected as the selectivity primitive. The Lazy-search algorithm calculates the selectivity of the types of unilateral or bilateral subgraphs contained in the data graph with an offline method and sorts these selectivity primitives in ascending order according to their selectivity value. When the selectivity value is lower, the recognition ability is higher. Subsequently, the query graph is decomposed according to the order of these selectivity primitives.

In the matching process, in order to ensure that the matching results of adjacent leaf nodes in the SJ-Tree are still adjacent on the data graph, it constructs a bitmap index, as shown in Fig. 9b. The rows represent all the nodes in the data graph, and the columns represent the decomposition results of a pattern graph that satisfies the adjacency relationship. Figure 9a shows the two adjacent decomposition results of the pattern graph P. If the data graph vertices are in the matching result of the query fragment \(g_1\), the corresponding bits are 1, and the others are 0. For example, as \(g_1\) matches the edge \((u_1, u_2)\) in the data graph, the bits of \(u_1\) and \(u_2\) corresponding to \(g_1\) are 1, and the other bit positions are 0. When matching \(g_2\), only the results that meet the matching condition of \(g_2\) around the vertex for which the bitmap vector is 1 in \(g_1\) need to be found. This advantage is that it can use adjacent decomposition results to ensure that the matching results are still adjacent on the data graph. Therefore, it can avoid matching results that do not meet the adjacency relation, reduce the search space, and speed up the matching process.

Based on the decomposition and join methods, Youhuan Li et al. [48] studied continuous subgraph matching with timing order constraints which meant the occurrence of edges in the stream followed the time sequence, and proposed the timing subgraph matching algorithm. All the matching results should meet both structure and timing constraints. An example of query graph Q with two-timing order constraints is presented in Fig. 10, \(\epsilon _1\) \(\prec \) \(\epsilon _2\) indicate that edges matching \(\epsilon _1\) should arrive before edges matching \(\epsilon _2\) in the subgraph matches of Q. In Fig. 10, the query is decomposed into a set of subgraph queries that contain only one timing order constraint called timing connect-query, and a match-store tree (MS-tree) for each subgraph query \(Q_i\) is constructed to store the intermediate results \(G(Q_i)\). Then, all the intermediate results of the subgraphs are joined according to the timing order constraint to obtain the final matching result G(Q)=\(\{G(Q_1),G(Q_2),G(Q_3)\}\).

Based on timed order constraints, MS-Tree can filter out a large number of discardable partial matches, which reduces both space costs and maintenance overhead without incurring any additional data access burden. Unlike the DDST algorithm with the time constraint mentioned earlier, this is based on subgraph isomorphism rather than graph simulation. More importantly, it was designed to perform effective concurrency management, using a fine-granularity locking technique in the computation to improve system throughput. It is the first work that studied subgraph matching with concurrency management over streaming graphs.

Join-based matching techniques use subgraph isomorphism in the matching process, which can obtain accurate matching results, but at an expense. Although the method of query decomposition can control the size of subgraphs and limit the cost of the subgraph isomorphism, it still produces many invalid intermediate results. If an index is built for the data graph to reduce the number of invalid intermediate results, the cost of index construction and maintenance should also be considered. Simultaneously, how to mine feature structures over frequently updated dynamic graph data for query decomposition is still a problem that needs to be solved.

(2) Exploration-based matching technique

Motivated by the above problems, Sun et al. [69] proposed an exploration-based matching technique, where the obtained results are approximate rather than exact. An example of the exploration process is shown in Fig. 11. Given a pattern graph P and a data graph G, this technique starts with vertex a in the pattern graph and finds the matching vertex \(a_1\) in the data graph G through a simple index that maps labels to node IDs. Next, the data graph is explored from vertex \(a_1\) to reach vertex \(b_1\), meeting the requirement of the partial query (a, b). Then, it is explored from \(b_1\) to reach \(c_1\) and \(c_2\), meeting the requirement of the partial query (b, c). In this way, it can obtain the matching results of the pattern graph from the data graph without generating and joining large intermediary results or building and maintaining the index for the data graph like the join-based matching technique does. Certainly, if the approach starts from a node labeled b or c, it may still get some useless intermediate results. But in general, it will not generate as many of them unless it is in the worst case. The meaningful advantage of the exploration-based matching technique is that the join operations are avoided.

Compared with the join-based continuous subgraph matching, the explorat-ion-based continuous subgraph matching is more suitable for the distributed parallel graph processing framework. The mainstream distributed parallel graph processing frameworks, such as Google’s Pregel [51] system, use a node-centric computing model framework. For this framework, each vertex maintains an input queue and an output queue. Additionally, each computing task is composed of a series of super-steps. After receiving the input information from the input queue in each super-step, the vertex will process the information according to the user-defined script program, and finally, output the processing result to the output queue. As the storage and processing units are vertices, this computing model, together with join-based continuous subgraph matching, will produce several intermediate results, thereby increasing the processing, storage, and data transmission costs of vertices. In summary, the join-based continuous subgraph matching technique is unsuitable in a distributed parallel graph processing framework. In comparison, the exploration-based method is more suitable because it does not require index construction, nor does it produce and process numerous intermediate results. Furthermore, it naturally meets the potential needs of incremental computing.

The PathMatch algorithm is an exploration-based matching technique used in a distributed parallel graph processing framework. It finds a Hamiltonian path in the pattern graph that can access each vertex in the graph. Using this path transfers information to complete the exploration (matching). However, if we apply the exploration-based matching technique to a distributed parallel graph processing framework, it still faces the following problems:

-

First of all, the matching results obtained by the exploration-based method are approximate rather than exact;

-

When solving some continuous subgraph matching problems, it is more expensive to use a naive graph exploration than the join operation;

-

Not all queries can be answered by relying only on exploration, for example, when attempting to check whether the next explored vertex is the first matching vertex.

To solve the above problems, Sun et al. [69] presented a novel exploration method, STwigMatch, that maximizes the benefits of both the join-based approach and the exploration-based approach while avoiding their disadvantages. Specifically, this method uses a join-based graph matching framework on the macro level and an exploration-based matching method to avoid useless candidates during the join and find matching results. In other words, it decomposes the pattern graph into a series of small twigs based on the transmitted information in the pattern graph rather than the feature subgraph extracted in the data graph and then stores each decomposition result on a vertex of the distributed parallel graph processing framework. Later, it matches these small twigs through the exploration-based approach. The benefit of this algorithm is the tradeoff between matching calculation cost and matching result accuracy.

Additionally, Gao et al. [30] proposed an exploration-based algorithm, SSD, in the distributed framework, Giraph. Figure 12 is an illustration of the computation process of the SSD algorithm. First, a vertex with the maximum degree is labeled as the sink vertex in the pattern graph (i.e., vertex C in Fig. 12a). It is called a sink, as all the edges satisfied are passed to it, and it does not need to send a message out. After selecting the sink vertex, the direction of the edge is determined via a BFS (breadth-first-searching) strategy. Based on this concept, the pattern graph can be converted into a directed acyclic graph (DAG) with a single sink vertex as in Fig. 12b. And regarding the sink vertex as a cut vertex, the DAG can further be decomposed into three sub-DAGs. In each sub-DAG, there are some source vertices (e.g., vertex a and e in sub-DAG 0), which do not have any incoming edge and can initialize the message transfer.

Figure 12c shows the process of subgraph matching. In sub-DAG 0, information passes to all its neighbors (vertex 1 and vertex 2) to convert from vertex 0. Then, the converted information continues to pass to their downstream neighbors and finally reaches vertex 3. Thus, it can obtain the information transmission rules from the source vertex. Then, it maps the information transmission rules to the data graph and starts from the vertex in the data graph with the same attribute as the source vertex to check whether the subsequent vertices match the query vertices based on the rules. For example, in Fig. 12c, source vertex 0 matches the data graph vertex a, and the matching process is completed by a series of super-steps. In super-step 1, vertex a passes information to its neighbors; in super-step 2, vertices b and c pass the information to vertex d after receiving it. If vertex d receives two pieces of information from the same vertex, which is the same as the information transmission rules of sub-DAG 0, the existence of a matching subgraph can be proved.

Illustration of the SSD algorithm (Taken from [30])

However, there is still a problem with the SSD algorithm. In super-step 2, if edge (a, b) is deleted when vertex b and c pass the information to vertex d, vertex d will return an expired, inaccurate matching result after receiving the information. The problem of inconsistent results is severe in distributed systems since each distributed parallel graph computing framework contains many super-steps. In order to solve this problem, Gao et al. [29] proposed the Stp(Q) algorithm.

The exploration-based matching method is more suitable for processing large-scale graphs; however, inconsistent matching can produce inaccurate matching results. Therefore, this type of method is suitable for applications that do not have strict accuracy requirements for results, such as social network analysis. Determining methods to effectively combine the exploration-based and join-based matching methods to balance efficiency and subgraph matching accuracy is a topical issue in current research.

(3) Simulation-based matching technique

Note that the exploration-based matching technique produces inaccurate matching results, while the join-based matching technique is more expensive. Both methods use the NP-hard subgraph isomorphism matching method, which limits the improvement of the performance. Therefore, the simulation-based matching technique has gained attention in the continuous subgraph matching field. This method generates a matching candidate set for each query vertex according to its label and then filters out the unmatched vertices according to the different approximation degrees of the precursors and successors of the query vertices (see Sect. 2.1 for details on simulation matching).

Wenfei Fan et al. [23] proposed an incremental graph simulation matching (IncSimMatch) algorithm with the auxiliary data structures, match(v) and candt(v), to maintain the intermediate results and accelerate the matching calculation. Here, v represents a vertex in the pattern graph, match(v) represents a vertex in the data graph that matches v, and candt(v) represents a vertex in the data graph that has the same label as that of v but does not meet other matching conditions.

With these auxiliary data structures, unnecessary update operations can be filtered out:

-

For delete edge operation, only when the deleted edge, such as \((u_i, u_j)\), satisfies \(u_i \in match(\cdot )\) and \(u_j \in match(\cdot )\), it will cause the reduction of the matching results.

-

For add edge operation, only when the added edge, such as \((u_i, u_j)\), satisfies \(u_i \in candt(\cdot )\) and \(u_j \in match(\cdot )\) or \(u_i \in candt(\cdot )\) and \(u_j \in candt(\cdot )\), it will cause a new matching result set.

In addition, Wenfei Fan et al. extended the definition of initial simulation matching and proposed the concept of bounded simulation, which redefines the pattern graph with edge weights. Each edge in the pattern graph maintains a constant, k. If there is a vertex in the data graph that matches the successor vertex of the corresponding vertex in the pattern graph within k hops, then there is a match. The author further applied the bounded simulation matching technique to the continuous subgraph matching and proposed the incremental algorithm, IncBMatch. The IncBMatch algorithm is similar to the IncSimMatch algorithm mentioned with the auxiliary data structure match(v) and candt(v), which solves the problem of highly complex isomorphism matching and achieves good results. The advantage of bounded simulation matching is that the matching process can be completed in polynomial time, which effectively improves the efficiency of subgraph matching.

The literature [41] was the first work that applied the IncSimMatch algorithm to the vertex-centric distributed parallel graph processing framework and realized the distributed parallel computing of dynamic graph simulation matching. During the matching process, the updated graph was stored in a vertex of the framework, and an execution script allowed the vertex to filter out edges that would not produce new matching results. Then, the edges were evaluated against the edge constraint relationship in the graph simulation matching by a vertex of the framework. The main process would receive the processing information from all vertices and then evaluate whether these subgraphs meet the edge constraint relationship in the graph simulation matching to obtain the final matching result.

However, the simulation matching method is based on a binary relationship and can produce inconsistent results with the structure of the pattern graph; therefore, it is mainly suitable for applications that do not have very strict requirements for graph structure matching, such as social network analysis. Further, simulation matching can be used to prune candidate sets. Wickramaarachchi et al. [76] proposed a distributed pruning algorithm D-IDS over the dynamic graph, which uses a dual simulation matching method to prune the data graph. The dual simulation requires that all child and parent nodes of the current node conform to the binary relationship. When the data graph is updated, the binary simulation is used to prune the data graph, and a large data graph can be pruned into a relatively small data graph. Meanwhile, the data graph can be maintained continuously. In the matching process, only incremental matching needs to be performed on the small graph.

The matching efficiency of simulation matching is high; it is applicable to both distributed and centralized environments and has some unique advantages. This advantage is more apparent in the processing of continuous subgraph matching. The bidirectional simulation matching based on graph simulation matching can better tradeoff the effectiveness and timeliness of simulation matching results and simultaneously obtain a matching result that is more consistent with the structure of the pattern graph, thereby effectively compensating for the disadvantages of the join-based matching technique and exploration-based matching technique.

Although constructing an auxiliary data structure to store intermediate results can reduce the re-computation overhead and achieve better performance, query-centric representation still has several limitations. When a data graph vertex v has multiple candidate query vertices, v needs to be copied as many times as the number of the matched query vertices. Further, storing many partial results and then joining each other follows a certain order does cause lots of redundancy. Specifically, the worst storage complexity for SJ-tree is \(O(|V(q)|*|E(G)|^{|E(q)|})\), where the |E(G)| represents the number of edge in a graph G, and V(q) represents the number of vertices in a graph q. Finally, when any update occurs for vertex v, it must find all the partial matches containing v in the query-centric representation. Therefore, it needs to design and maintain an additional index of duplicate keys to find corresponding partial matches in the query-centric representation.

Data-Centric Representation All of the above problems of existing methods motivated [43] to investigate the novel concept representation, called data-centric graph (DCG). It stores the corresponding query vertex ID as the incoming edge label for each data vertex in the data graph. Thus, in the DCG, each data vertex appears at most once, and each data edge stores at most |V(q)| edges. Thus, its worst-case storage complexity is \(O(|V(q)|*|E(g)|)\). For a dynamic graph, the execution model allows for fast incremental maintenance. Each edge in the DCG has one of three states: NULL, IMPLICIT, or EXPLICIT. An explicit edge \((v,u',v')\) represents that query vertex \(u'\) is candidate of \(v'\) and the data path and subtree of \(u'\) matches the corresponding data path and subtree of \(v'\). In an implicit edge \((v,u',v')\), only the data path of \(u'\) matches the corresponding data path of \(v'\), and the subtree does not match. When a new/expired edge is inserted/deleted, TurboFlux uses an edge transition model to change the state of each corresponding edge, and finally, it can report positive/negative matches based on the explicit edge. Furthermore, compared with other auxiliary data structures, as DCG itself is a graph, whenever the DCG is updated, it can directly access corresponding vertices; there is no need for an additional duplicate key index like with query-centric representation.

A running example (taken from [43])

Figure 13 shows a running example of DCG. Figure 13 shows the DCG obtained after the transformation of the data graph for the query graph q. When \((v_0,v_1)\) is inserted into g, the state of \((v_0,u_1,v_1)\) in the DCG will be transited from NULL to IMPLICIT, as the inserted edge matches \((u_0, u_1)\) and \(v_0\) has an incoming explicit edge with label \(u_0\) (Fig. 13). Then, the state of \((v_1,u_4,v_4)\) is transited from NULL to IMPLICIT, because \(v_4\) is the child vertex of \(v_1\) and the state of \((v_0,u_1,v_1)\) is IMPLICIT (Fig. 13e). Next, the state of edge \((v_1,u_4,v_4)\) will be transited to EXPLICIT (Fig. 13f). Note that the subtree of \(v_1\) matches that of \(u_1\). Thus, the state of \((v_0,u_1,v_1)\) is transited to EXPLICIT (Fig. 13g). Finally, the state of \((v^{*}_s,u_0,v_0)\) is also transited from IMPLICIT to EXPLICIT for the same reason (Fig. 13h).

In summary, the concise auxiliary data structure and efficient incremental maintenance strategy of TurboFlux make it the most advanced method currently. It can efficiently identify update operations that may cause positive/negative matches with the current partial solutions.

3.2.3 Complexity analysis

Continuous subgraph matching algorithms aim to find the occurrences of a given pattern on a stream of data graphs online. The dynamic nature of the data graph demands that the matching results need to be responded to in a little time. Thus, the incremental continuous subgraph matching algorithms have been widely studied. In Table 3, we summarize the space complexity of the index and the time complexity of updating the index on each update of four latest and representative continuous subgraph matching algorithms, including IncIsoMatch, SJ-Tree, Graphflow, and TurboFlux. IncIsoMatch and Graphflow are the direct computation incremental algorithm, and they do not generate any auxiliary data structure. While SJ-Tree and TurboFlux construct an auxiliary data structure to accelerate the filtration rate.

From Table 3, we can see that for SJ-Tree, the space complexity and time complexity of the auxiliary data structure (index) are exponential, while for TurboFlux, they are linear. This is because SJ-Tree built a tree index and uses a lot of join operations. TurboFlux used a data-centric representation to store the candidate set. Obviously, TurboFlux can effectively reduce the search space.

4 Continuous subgraph matching for content-based change

Besides the structure-based continuous subgraph matching, the content-based continuous subgraph matching techniques have also been studied. For example, using this technique, a server deployment solution that meets user needs can be found in a frequently updated data center network [60]; advertisers can find closely linked groups of people in frequently changing social networks; an HR can construct a team in dynamic social networks based on constrained pattern graph [44]. The labels of nodes or edges in these applications will frequently change with time. Currently, there are few studies on content-based continuous subgraph matching techniques. The following are some typical studies.

4.1 Join-based matching approach

Taking resource allocation in a data center network as the application background, Bo Zong et al. [88] proposed the Gradin algorithm to solve the continuous subgraph matching problem between the user-defined resource pattern graph and data-center network. It adopts a join-based matching approach since the data center network has a relatively stable topology but frequent changes in the node/edge labels, which representing vacant CPU cycles and network bandwidth. The matching process will include the following two stages.

-

Offline index construction: First, the frequent graph structure set is decided by existing structure selection algorithms [80], and then all the graph fragments in data that contain the frequent graph structure were obtained by the subgraph mining technique [79]. Based on these frequent subgraphs, an inverted index is built on the data graph.

-

Online query processing: Gradin uses a join-based approach to search the compatible subgraphs of pattern graphs. The pattern graph is decomposed first based on the frequent subgraphs. Then Gradin searches the candidates for each query fragment to sional vectors and uses indices to efficiently search them. Then, it combines all matched fragments of the query’s subgraphs via join operation to form compatible subgraphs for pattern graphs.

Obviously, the frequent update is a huge challenge that needs to be addressed. Thus, the Gradin algorithm further used a grid-based index, FracFilter, which can be used to construct indices for frequent subgraphs in the graph and avoid redundant comparisons. As shown in Fig. 14, let \(s_1\) represent a frequent subgraph mined from the data graph. Then, all the subgraphs in the data graph with the same structure as \(s_1\) can be converted into two-dimensional vectors based on their node labels and further mapped into the two-dimensional grid indices, i.e., all the black dot in FracFilter. Simultaneously, the subgraph with the same structure as \(s_1\) in the pattern graph is also mapped to the grid index in the same way indicated by a red square. If the subgraphs of the pattern graph match that of the data graph, the labels of corresponding nodes must satisfy the partial order. Therefore, the two-dimensional grid can be divided into three areas according to the position of the red square. The subgraphs of the data graph, corresponding to the black dots that fall in the green shadow area, \(R_1\), will meet the matching requirements with the subgraphs of the pattern graph. Conversely, the subgraphs of the data graph, corresponding to the black dots that fall in the brown shadow area, \(R_3\), do not meet the matching requirements with the subgraphs of the pattern graph. Therefore, it is only necessary to perform matching computation on the subgraphs corresponding to the black dots in the area between the green and brown shadow areas (i.e., \(R_2\)) to obtain the matching result.

In the searching processing, the Gradin algorithm requires matching \(dn_s/{\lambda ^d}\) \([(\lambda +1)/2]^{d-1}\) times on average, where \(\lambda \) is the density of the grid index, d is the dimension of the grid index, and \(n_s\) is the number of pattern graph slices during the offline index construction. When the nodes/edges labels of the data graph are frequently updated, FracFilter has a lower maintenance cost than other index structures. In average, each graph update only requires \(2(1-1/{\lambda ^d})\) operations.

Mapping a frequent subgraph \(s_1\) to the grid index (Taken from [88])

The Gradin algorithm can also be directly extended to the case where the edge labels in the data graph are frequently updated. In addition to using frequent subgraphs mined in the data graph to decompose the pattern graph, current advanced subgraph structures commonly used in academia can also be used to decompose the pattern graph.

4.2 Exploration-based matching approach

However, the join-based approach cannot be directly extended to large dynamic graphs [69], and it is suitable for queries with static or infrequently updated labels. Since the active attributes in dynamic graphs would cause a lot of updates to the join indexes. Thus, Mondal et al. [56] designed an exploration-based query evaluation system, called CASQD, to continuously detect meaningful and up-to-date insights based on subgraph matching over content-changed graphs.

The principle of the exploration-based approach is to select a set of potential pivot nodes and then explore their neighbors to get a match. The key of this method is how to select pivot nodes efficiently and make the best use of additional information about adjacent nodes. Therefore, CASQD used a monitor, explore and trigger-based approach to select pivot nodes and reduce the search space:

-

Monitor: In the monitoring phase, CASQD summarized the activity node and/or its activity neighbors via a set of models. The monitoring policies have two methods to capture the activity information: (1) directly evaluating the node’s activity predicates and tracking the number of its active neighbors in real-time; (2) calculating probabilistic “estimates" of the active nodes by historical information.

-

Explore: In the exploring phase, if there is a match around a node v based on its model and neighbors’ knowledge, it is called a pivot node. The exploration phase searches for a match around the pivot node and finds a possible result. In addition, it further updates the neighborhood knowledge of the pivot node with the information gathered during the exploration phase.

-

Trigger: The trigger phase is used to let the neighbors of a active node know about any significant change and update their knowledge based on it.

Compared with Gradin, the advantage of the design is that there is no need for frequent subgraph mining on the data graph, and the advanced subgraph structures can be integrated into any query language that supports subgraph pattern matching. In addition, CASQD extends simple tree-structured patterns to more complicated structures, e.g., stars, cliques, or bipartite cliques, and designs different exploration algorithms for finding different primitive patterns.

5 Performance comparison of matching algorithms