Abstract

We propose a new first-order method for minimizing nonconvex functions with Lipschitz continuous gradients and Hölder continuous Hessians. The proposed algorithm is a heavy-ball method equipped with two particular restart mechanisms. It finds a solution where the gradient norm is less than \(\varepsilon \) in \(O(H_{\nu }^{\frac{1}{2 + 2 \nu }} \varepsilon ^{- \frac{4 + 3 \nu }{2 + 2 \nu }})\) function and gradient evaluations, where \(\nu \in [0, 1]\) and \(H_{\nu }\) are the Hölder exponent and constant, respectively. This complexity result covers the classical bound of \(O(\varepsilon ^{-2})\) for \(\nu = 0\) and the state-of-the-art bound of \(O(\varepsilon ^{-7/4})\) for \(\nu = 1\). Our algorithm is \(\nu \)-independent and thus universal; it automatically achieves the above complexity bound with the optimal \(\nu \in [0, 1]\) without knowledge of \(H_{\nu }\). In addition, the algorithm does not require other problem-dependent parameters as input, including the gradient’s Lipschitz constant or the target accuracy \(\varepsilon \). Numerical results illustrate that the proposed method is promising.


1 Introduction

This paper studies general nonconvex optimization problems:

$$\begin{aligned} \min _{x \in \mathbb R^d}\ f(x), \end{aligned} \tag{1}$$

where \(f :\mathbb R^d \rightarrow \mathbb R\) is twice differentiable and lower bounded, i.e., \(\inf _{x \in \mathbb R^d} f(x) > - \infty \). Throughout the paper, we impose the following assumption of Lipschitz continuous gradients.

Assumption 1

There exists a constant \(L > 0\) such that \(\Vert \nabla f(x) - \nabla f(y)\Vert \le L\Vert x - y\Vert \) for all \(x, y \in \mathbb R^d\).

First-order methods [3, 31], which access f through function and gradient evaluations, have gained increasing attention because they are suitable for large-scale problems. A classical result is that the gradient descent method finds an \(\varepsilon \)-stationary point (i.e., \(x \in \mathbb R^d\) where \(\Vert \nabla f(x)\Vert \le \varepsilon \)) in \(O(\varepsilon ^{-2})\) function and gradient evaluations under Assumption 1. Recently, more sophisticated first-order methods have been developed to achieve faster convergence for smoother functions. Such methods [2, 6, 28, 33,34,35, 53] have complexity bounds of \(O(\varepsilon ^{-7/4})\) or \(\tilde{O}(\varepsilon ^{-7/4})\) under Lipschitz continuity of Hessians in addition to gradients.Footnote 1

This research stream raises two natural questions:

- Question 1: How fast can first-order methods converge under smoothness assumptions stronger than Lipschitz continuous gradients but weaker than Lipschitz continuous Hessians?

- Question 2: Can a single algorithm achieve both of the following complexity bounds: \(O(\varepsilon ^{-2})\) for functions with Lipschitz continuous gradients and \(O(\varepsilon ^{-7/4})\) for functions with Lipschitz continuous gradients and Hessians?

Question 2 is also crucial from a practical standpoint because it is often challenging for users of optimization methods to check whether a function of interest has a Lipschitz continuous Hessian. Ideally, users should not need to switch among several different algorithms to achieve faster convergence.

Motivated by the questions, we propose a new first-order method and provide its complexity analysis with the Hölder continuity of Hessians. Hölder continuity generalizes Lipschitz continuity and has been widely used for complexity analyses of optimization methods [12, 13, 18, 20, 22,23,24,25, 30, 38]. Several properties and an example of Hölder continuity can be found in [23, Section 2].

Definition 1

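The Hölder constant of \(\nabla ^2 f\) with exponent \(\nu \in [0, 1]\) is defined by

$$\begin{aligned} H_{\nu } {:}{=}\sup _{x, y \in \mathbb R^d,\ x \ne y} \frac{\Vert \nabla ^2 f(x) - \nabla ^2 f(y)\Vert }{\Vert x - y\Vert ^{\nu }}. \end{aligned}$$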

The Hessian \(\nabla ^2 f\) is said to be Hölder continuous with exponent \(\nu \), or \(\nu \)-Hölder, if \(H_\nu < +\infty \).

We should emphasize that f determines the value of \(H_{\nu }\) for each \(\nu \in [0, 1]\) and that \(\nu \) is not a constant determined by f. Under Assumption 1, we have \(H_{0} \le 2 L\) because the assumption implies \(\Vert \nabla ^2 f(x)\Vert \le L\) for all \(x \in \mathbb R^d\) [37, Lemma 1.2.2], and hence \(\Vert \nabla ^2 f(x) - \nabla ^2 f(y)\Vert \le 2L\) by the triangle inequality. For \(\nu \in (0, 1]\), we may have \(H_\nu = +\infty \), and our analysis allows this case. In contrast, all existing first-order methods [2, 6, 28, 33,34,35, 53] with complexity bounds of \(O(\varepsilon ^{-7/4})\) or \(\tilde{O}(\varepsilon ^{-7/4})\) assume \(H_{1} < + \infty \) (i.e., the Lipschitz continuity of \(\nabla ^2 f\)) in addition to Assumption 1. We should note that it is often difficult to compute the Hölder constant \(H_{\nu }\) of a real-world function for a given \(\nu \in [0, 1]\).
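To make Definition 1 concrete, the following Python sketch computes a sampled lower bound on \(H_{\nu }\) for a scalar function by maximizing the ratio in the definition over finitely many pairs; since \(H_{\nu }\) is a supremum, a finite sample can only underestimate it. The helper `holder_constant_lower_bound` and the test function \(f(x) = x^3/6\) restricted to \([-1, 1]\) are illustrative assumptions, not part of the proposed method. On that interval \(f''(x) = x\), so \(|f''| \le 1 = L\), and the sampled \(H_{0}\) equals \(2 = 2L\), showing that the bound \(H_{0} \le 2 L\) can be attained.

```python
import itertools


def holder_constant_lower_bound(hess, points, nu):
    """Sampled lower bound on H_nu for a scalar function f: R -> R.

    `hess` returns f''(x). The true H_nu is a supremum over all pairs
    x != y, so the maximum over finitely many sample pairs can only
    underestimate it.
    """
    best = 0.0
    for x, y in itertools.combinations(points, 2):
        if x == y:
            continue
        ratio = abs(hess(x) - hess(y)) / abs(x - y) ** nu
        best = max(best, ratio)
    return best


# f(x) = x**3 / 6 on [-1, 1]: f''(x) = x, so |f''| <= 1 there (L = 1).
grid = [-1.0, -0.5, 0.0, 0.5, 1.0]
print(holder_constant_lower_bound(lambda x: x, grid, nu=1.0))  # 1.0
print(holder_constant_lower_bound(lambda x: x, grid, nu=0.0))  # 2.0
```

For \(\nu = 1\) the ratio is identically 1 because this Hessian is linear, whereas for \(\nu = 0\) the ratio reduces to the largest change of \(f''\) over the sample.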

The proposed first-order method is a heavy-ball method equipped with two particular restart mechanisms, enjoying the following advantages:

- For \(\nu \in [0, 1]\) such that \(H_{\nu } < + \infty \), our algorithm finds an \(\varepsilon \)-stationary point in

$$\begin{aligned} O \left( { H_{\nu }^{\frac{1}{2 + 2 \nu }} \varepsilon ^{- \frac{4 + 3 \nu }{2 + 2 \nu }} }\right) \end{aligned} \tag{2}$$

function and gradient evaluations under Assumption 1. This result answers Question 1 and covers the classical bound of \(O(\varepsilon ^{-2})\) for \(\nu = 0\) and the state-of-the-art bound of \(O(\varepsilon ^{-7/4})\) for \(\nu = 1\).

- The complexity bound (2) is simultaneously attained for all \(\nu \in [0, 1]\) such that \(H_{\nu } < + \infty \) by a single \(\nu \)-independent algorithm. The algorithm thus automatically achieves the bound with the optimal \(\nu \in [0, 1]\) that minimizes (2). This result affirmatively answers Question 2.

- Our algorithm requires no knowledge of problem-dependent parameters, including the optimal \(\nu \), the Lipschitz constant \(L\), or the target accuracy \(\varepsilon \).
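As a quick check of how bound (2) interpolates between the two classical rates, this sketch evaluates its exponents exactly with Python's `fractions` module: \(\frac{4 + 3 \nu }{2 + 2 \nu }\) on \(\varepsilon ^{-1}\) and \(\frac{1}{2 + 2 \nu }\) on \(H_{\nu }\). The function names are illustrative.

```python
from fractions import Fraction


def epsilon_exponent(nu):
    """Exponent e of eps^{-e} in bound (2): (4 + 3 nu) / (2 + 2 nu)."""
    nu = Fraction(nu)
    return (4 + 3 * nu) / (2 + 2 * nu)


def holder_exponent(nu):
    """Exponent of H_nu in bound (2): 1 / (2 + 2 nu)."""
    nu = Fraction(nu)
    return 1 / (2 + 2 * nu)


for nu in [Fraction(0), Fraction(1, 2), Fraction(1)]:
    print(nu, epsilon_exponent(nu), holder_exponent(nu))
# 0 2 1/2
# 1/2 11/6 1/3
# 1 7/4 1/4
```

The \(\varepsilon \)-exponent decreases monotonically in \(\nu \) (its derivative is \(-2/(2 + 2\nu )^2\)), from 2 at \(\nu = 0\) to 7/4 at \(\nu = 1\).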

Let us describe our ideas for developing such an algorithm. We employ the Hessian-free analysis recently developed for Lipschitz continuous Hessians [35] to estimate the Hessian’s Hölder continuity with only first-order information. The Hessian-free analysis uses inequalities that include the Hessian’s Lipschitz constant \(H_{1}\) but not a Hessian matrix itself, enabling us to estimate \(H_{1}\). Extending this analysis to general \(\nu \) allows us to estimate the Hölder constant \(H_{\nu }\), given \(\nu \in [0, 1]\). We thus obtain an algorithm that requires \(\nu \) as input and has the complexity bound (2) for the given \(\nu \). However, this algorithm is of limited practical use because the value of \(\nu \) that minimizes (2) is generally unknown.

Our main idea for developing a \(\nu \)-independent algorithm is to set \(\nu = 0\) for the above \(\nu \)-dependent algorithm. This may seem strange, but we prove that it works; a carefully designed algorithm for \(\nu = 0\) achieves the complexity bound (2) for any \(\nu \in [0, 1]\). Although we design an estimate for \(H_{0}\), it also has a relationship with \(H_{\nu }\) for \(\nu \in (0, 1]\), as will be stated in Proposition 1. This proposition allows us to obtain the desired complexity bounds without specifying \(\nu \).

To evaluate the numerical performance of the proposed method, we conducted experiments with standard machine-learning tasks. The results illustrate that the proposed method outperforms state-of-the-art methods.

Notation. For vectors \(a, b \in \mathbb R^d\), let \(\langle a,b\rangle \) denote the dot product and \(\Vert a\Vert \) denote the Euclidean norm. For a matrix \(A \in \mathbb R^{m \times n}\), let \(\Vert A\Vert \) denote the operator norm, or equivalently the largest singular value.

2 Related work

This section reviews previous studies from several perspectives and discusses similarities and differences between them and this work.

Complexity of first-order methods. Gradient descent (GD) is known to have a complexity bound of \(O(\varepsilon ^{-2})\) under Lipschitz continuous gradients (e.g., [37, Example 1.2.3]). First-order methods [12, 22] for Hölder continuous gradients have recently been proposed to generalize the bound; they enjoy bounds of \(O(\varepsilon ^{-\frac{1+\mu }{\mu }})\), where \(\mu \in (0, 1]\) is the Hölder exponent of \(\nabla f\). First-order methods have also been studied under stronger assumptions. The methods of [2, 6, 28, 53] enjoy complexity bounds of \({\tilde{O}}(\varepsilon ^{-7/4})\) under Lipschitz continuous gradients and Hessians,Footnote 2 and the bounds have recently been improved to \(O(\varepsilon ^{-7/4})\) [33,34,35]. This paper generalizes the classical bound of \(O(\varepsilon ^{-2})\) in a different direction from [12, 22] and interpolates the existing bounds of \(O(\varepsilon ^{-2})\) and \(O(\varepsilon ^{-7/4})\). Table 1 compares our complexity results with the existing ones.

Complexity of second-order methods using Hölder continuous Hessians. The Hölder continuity of Hessians has been used to analyze second-order methods. Grapiglia and Nesterov [23] proposed a regularized Newton method that finds an \(\varepsilon \)-stationary point in \(O(\varepsilon ^{-\frac{2 + \nu }{1 + \nu }})\) evaluations of f, \(\nabla f\), and \(\nabla ^2 f\), where \(\nu \in [0, 1]\) is the Hölder exponent of \(\nabla ^2 f\). The complexity bound generalizes previous \(O(\varepsilon ^{-3/2})\) bounds under Lipschitz continuous Hessians [10, 11, 14, 40]. We make the same assumption of Hölder continuous Hessians as in [23] but do not compute Hessians in the algorithm. Table 2 summarizes the first-order and second-order methods together with their assumptions.

Universality for Hölder continuity. When Hölder continuity is assumed, it is preferable that algorithms not require the exponent \(\nu \) as input because a suitable value for \(\nu \) tends to be hard to find in real-world problems. Such \(\nu \)-independent algorithms, called universal methods, were first developed as first-order methods for convex optimization [30, 38] and have since been extended to other settings, including higher-order methods and nonconvex problems [12, 13, 20, 22,23,24,25]. Within this research stream, this paper proposes a universal method in a new setting: a first-order method under Hölder continuous Hessians. Because of the differences in settings, the existing techniques for universality cannot be applied directly; we obtain a universal method by setting \(\nu = 0\) for a \(\nu \)-dependent algorithm, as discussed in Sect. 1.

Heavy-ball methods. Heavy-ball (HB) methods are a kind of momentum method first proposed by Polyak [43] for convex optimization. Although some complexity results have been obtained for (strongly) convex settings [21, 32], they are weaker than the optimal bounds given by Nesterov’s accelerated gradient method [36, 39]. For nonconvex optimization, HB and its variants [15, 29, 46, 50] have been practically used with great success, especially in deep learning, while studies on theoretical convergence analysis are few [34, 41, 42]. O’Neill and Wright [42] analyzed the local behavior of the original HB method, showing that the method is unlikely to converge to strict saddle points. Ochs et al. [41] proposed a generalized HB method, iPiano, that enjoys a complexity bound of \(O(\varepsilon ^{-2})\) under Lipschitz continuous gradients, which is of the same order as that of GD. Li and Lin [34] proposed an HB method with a restart mechanism that achieves a complexity bound of \(O(\varepsilon ^{-7/4})\) under Lipschitz continuous gradients and Hessians. Our algorithm is another HB method with a different restart mechanism that enjoys more general complexity bounds than [34], as discussed in Sect. 1.

Comparison with [35]. This paper shares some mathematical tools with [35] because we utilize the Hessian-free analysis introduced in [35] to estimate the Hessian’s Hölder continuity. While the analysis in [35] is for Nesterov’s accelerated gradient method under Lipschitz continuous Hessians, we here analyze Polyak’s HB method under Hölder continuity. Thanks to the simplicity of the HB momentum, our estimate for the Hölder constant is easier to compute than the estimate for the Lipschitz constant proposed in [35], which improves the efficiency of our algorithm. We would like to emphasize that a \(\nu \)-independent algorithm cannot be derived simply by applying the mathematical tools in [35]. We have not, however, shown that developing a \(\nu \)-independent algorithm with Nesterov’s momentum under Hölder continuous Hessians is impossible or even particularly difficult.

Lower bounds. So far, we have discussed upper bounds on worst-case complexity, but there are also papers that study lower bounds. Carmon et al. [8] proved that no deterministic or stochastic first-order method can improve the complexity of \(O(\varepsilon ^{-2})\) with the assumption of Lipschitz continuous gradients alone. (See [8, Theorems 1 and 2] for more rigorous statements.) This result implies that GD is optimal in terms of complexity under Lipschitz continuous gradients. Carmon et al. [9] showed a lower bound of \(\Omega (\varepsilon ^{-12/7})\) for first-order methods under Lipschitz continuous gradients and Hessians. Compared with the upper bound of \(O(\varepsilon ^{-7/4})\) under the same assumptions, there is still a \(\Theta (\varepsilon ^{-1/28})\) gap. Closing this gap would be an interesting research question, though this paper does not focus on it.
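The size of the remaining gap follows from exact fraction arithmetic on the two exponents:

```python
from fractions import Fraction

upper_exponent = Fraction(7, 4)   # O(eps^{-7/4}) upper bound
lower_exponent = Fraction(12, 7)  # Omega(eps^{-12/7}) lower bound of [9]
gap = upper_exponent - lower_exponent
print(gap)  # 1/28
```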

3 Preliminary results

The following lemma is standard for the analyses of first-order methods.

Lemma 1

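(e.g., [37, Lemma 1.2.3]) Under Assumption 1, the following holds for any \(x, y \in \mathbb R^d\):

$$\begin{aligned} \left| f(y) - f(x) - \langle \nabla f(x),y - x\rangle \right| \le \frac{L}{2} \Vert y - x\Vert ^2. \end{aligned}$$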

This inequality helps estimate the Lipschitz constant \(L\) and evaluate the decrease in the objective function per iteration.
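Lemma 1, \(|f(y) - f(x) - \langle \nabla f(x),y - x\rangle | \le \frac{L}{2}\Vert y - x\Vert ^2\), can be sanity-checked numerically. The sketch below assumes the scalar test function \(f = \sin \), whose derivative \(\cos \) is Lipschitz with \(L = 1\), and checks the inequality on random pairs; the helper name is hypothetical. A check of this kind can refute, but never prove, such an inequality.

```python
import math
import random


def lemma1_slack(f, df, x, y, L):
    """(L/2)(y - x)^2 - |f(y) - f(x) - f'(x)(y - x)| for scalar f.

    Nonnegative whenever the inequality of Lemma 1 holds for the pair (x, y).
    """
    remainder = abs(f(y) - f(x) - df(x) * (y - x))
    return 0.5 * L * (y - x) ** 2 - remainder


rng = random.Random(0)
pairs = [(rng.uniform(-10, 10), rng.uniform(-10, 10)) for _ in range(10_000)]
worst = min(lemma1_slack(math.sin, math.cos, x, y, L=1.0) for x, y in pairs)
print(worst >= -1e-12)  # True (up to floating-point rounding)
```

Replacing `L=1.0` with a value below the true Lipschitz constant, such as `L=0.1`, makes the slack negative on some pairs, which illustrates why underestimating \(L\) breaks the descent argument.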

We also use the following inequalities derived from Hölder continuous Hessians.

Lemma 2

For any \(z_1, \dots , z_n \in \mathbb R^d\), let \({\bar{z}} {:}{=}\sum _{i=1}^n \lambda _i z_i\), where \(\lambda _1,\dots ,\lambda _n \ge 0\) and \(\sum _{i=1}^n \lambda _i = 1\). Then, the following holds for all \(\nu \in [0, 1]\) such that \(H_{\nu } < + \infty \):

Lemma 3

For all \(x, y \in \mathbb R^d\) and \(\nu \in [0, 1]\) such that \(H_{\nu } < + \infty \), the following holds:

The proofs are given in Sect. A.1. These lemmas generalize [35, Lemmas 3.1 and 3.2] for Lipschitz continuous Hessians (i.e., \(\nu = 1\)). It is important to note that the inequalities in Lemmas 2 and 3 are Hessian-free; they include the Hessian’s Hölder constant \(H_{\nu }\) but not a Hessian matrix itself. Accordingly, we can adaptively estimate the Hölder continuity of \(\nabla ^2 f\) in the algorithm without computing Hessians.

4 Algorithm

The proposed method, Algorithm 1, is a heavy-ball (HB) method equipped with two particular restart schemes. In the algorithm, the iteration counter k is reset to 0 when HB restarts on Line 8 or 10, whereas the total iteration counter K is not. We refer to the period between one reset of k and the next reset as an epoch. Note that it is unnecessary to implement K in the algorithm; it is included here only to make the statements in our analysis concise.

The algorithm uses estimates \(\ell \) and \(h_k\) for the Lipschitz constant \(L\) and the Hölder constant \(H_{0}\), respectively. The estimate \(\ell \) is fixed during an epoch, while \(h_k\) is updated at each iteration, hence the subscript k.

4.1 Update of solutions

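With an estimate \(\ell \) for the Lipschitz constant \(L\), Algorithm 1 defines a solution sequence \((x_k)\) as follows: \(v_0 = \textbf{0}\) and

$$\begin{aligned} v_k = \theta _k v_{k-1} - \frac{1}{\ell } \nabla f(x_{k-1}), \end{aligned} \tag{3}$$

$$\begin{aligned} x_k = x_{k-1} + v_k \end{aligned} \tag{4}$$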

for \(k \ge 1\). Here, \((v_k)\) is the velocity sequence, and \(0 \le \theta _k \le 1\) is the momentum parameter. Let \(x_{-1} {:}{=}x_0\) for convenience, which makes (4) valid for \(k = 0\). This type of optimization method is called a heavy-ball method or Polyak’s momentum method.

In this paper, we use the simplest parameter setting:

$$\begin{aligned} \theta _k = 1 \end{aligned} \tag{5}$$

for all \(k \ge 1\). Our choice of \(\theta _k\) differs from the existing ones; the existing complexity analyses [16, 17, 21, 32, 34, 43] of HB prohibit \(\theta _k = 1\). For example, Li and Lin [34] proposed \(\theta _k = 1 - 5 (H_{1} \varepsilon )^{1/4} / \sqrt{L}\). Our new proof technique described later in Sect. 5.1 enables us to set \(\theta _k = 1\).
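The following Python sketch implements the plain heavy-ball recursion without restarts, assuming the update rules \(v_k = \theta _k v_{k-1} - \frac{1}{\ell } \nabla f(x_{k-1})\) and \(x_k = x_{k-1} + v_k\) with \(v_0 = \textbf{0}\); this form is consistent with the identity \(\Vert \nabla f(x_0)\Vert = \ell \Vert v_1\Vert \) used in the proof of Lemma 6. The function name and its list-based vectors are illustrative choices, not the authors' implementation.

```python
def heavy_ball(grad, x0, ell, theta=1.0, num_iters=10):
    """Heavy-ball iterations without restarts (illustrative sketch), assuming
        v_k = theta * v_{k-1} - grad(x_{k-1}) / ell,  x_k = x_{k-1} + v_k,  v_0 = 0.
    Returns the iterates [x_0, ..., x_K] and the velocities [v_1, ..., v_K].
    """
    x = list(x0)
    v = [0.0] * len(x)
    xs, vs = [list(x)], []
    for _ in range(num_iters):
        g = grad(x)
        v = [theta * vi - gi / ell for vi, gi in zip(v, g)]  # momentum + gradient step
        x = [xi + vi for xi, vi in zip(x, v)]                # move along the velocity
        xs.append(x)
        vs.append(v)
    return xs, vs


# f(x) = 0.5 * ||x||^2, so grad(x) = x.
xs, vs = heavy_ball(lambda x: list(x), [1.0], ell=4.0, num_iters=200)
print(xs[1], xs[2])  # [0.75] [0.3125]
```

With \(\theta _k = 1\) and no restarts, this quadratic example satisfies \(x_{k+1} - 2x_k + x_{k-1} = -\frac{1}{4} x_k\), whose characteristic roots lie on the unit circle, so the iterates oscillate instead of converging. This illustrates why the restart mechanisms of Sect. 4.3 are essential for the choice \(\theta _k = 1\).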

We will later use the averaged solution

$$\begin{aligned} {\bar{x}}_k {:}{=}\frac{1}{k} \sum _{i=0}^{k-1} x_i \end{aligned}$$

to compute the estimate \(h_k\) for \(H_{0}\) and set the best solution \(x^\star _k\). The averaged solution can be computed efficiently with a simple recursion: \({\bar{x}}_{k+1} = \frac{k}{k+1} {\bar{x}}_k + \frac{1}{k+1} x_k\).
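The recursion for \({\bar{x}}_k\) avoids storing past iterates. The sketch below (the helper name is hypothetical) computes \({\bar{x}}_{k+1} = \frac{k {\bar{x}}_k + x_k}{k+1}\), which is algebraically the same recursion, and returns \({\bar{x}}_1, \dots , {\bar{x}}_K\) starting from \({\bar{x}}_1 = x_0\).

```python
def running_averages(xs):
    """Given iterates [x_0, ..., x_{K-1}], return [xbar_1, ..., xbar_K], where
    xbar_1 = x_0 and xbar_{k+1} = (k * xbar_k + x_k) / (k + 1)."""
    averages = []
    xbar = None
    for k, x in enumerate(xs):  # x is x_k
        if xbar is None:
            xbar = list(x)  # xbar_1 = x_0
        else:
            xbar = [(k * a + b) / (k + 1) for a, b in zip(xbar, x)]
        averages.append(xbar)
    return averages


print(running_averages([[0.0], [3.0], [6.0]]))  # [[0.0], [1.5], [3.0]]
```

Each update costs \(O(d)\) operations and memory, so computing \({\bar{x}}_k\) adds only one extra vector to the state of the method.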

4.2 Estimation of Hölder continuity

Let

$$\begin{aligned} S_k {:}{=}\sum _{i=1}^k \Vert v_i\Vert ^2 \end{aligned}$$

to simplify the notation. Our analysis uses the following inequalities due to Lemmas 2 and 3.

Lemma 4

For all \(k \ge 1\) and \(\nu \in [0, 1]\) such that \(H_{\nu } < + \infty \), the following hold:

Proof

Eq. (6) follows immediately from Lemma 3. The proof of (7) is given in Sect. A.2. \(\square \)

Algorithm 1 requires no information on the Hölder continuity of \(\nabla ^2 f\), automatically estimating it. To illustrate the trick, let us first consider a prototype algorithm that works when a value of \(\nu \in [0, 1]\) such that \(H_{\nu } < + \infty \) is given. Given such a \(\nu \), one can compute an estimate h of \(H_{\nu }\) such that

which come from Lemma 4. This estimation scheme yields a \(\nu \)-dependent algorithm that has the complexity bound (2) for the given \(\nu \), though we omit the details. This algorithm is of limited practical use because it requires \(\nu \in [0, 1]\) such that \(H_{\nu } < + \infty \) as input. However, perhaps surprisingly, setting \(\nu = 0\) for the \(\nu \)-dependent algorithm gives a \(\nu \)-independent algorithm that achieves the bound (2) for all \(\nu \in [0, 1]\). Algorithm 1 is the \(\nu \)-independent algorithm obtained in that way.

Let \(h_0 {:}{=}0\) for convenience. At iteration \(k \ge 1\) of each epoch, we use the estimate \(h_k\) for \(H_{0}\) defined by

so that \(h_k \ge h_{k-1}\) and

The above inequalities were obtained by plugging \(\nu = 0\) into (8) and (9).

Although we designed \(h_k\) to estimate \(H_{0}\), it fortunately also relates to \(H_{\nu }\) for general \(\nu \in [0, 1]\). The following upper bound on \(h_k\) shows the relationship between \(h_k\) and \(H_{\nu }\), which will be used in the complexity analysis.

Proposition 1

For all \(k \ge 1\) and \(\nu \in [0, 1]\) such that \(H_{\nu } < + \infty \), the following holds:

Proof

Lemma 4 gives

Hence, definition (10) of \(h_k\) yields

The desired result follows inductively since \(H_{\nu } (k S_k)^{\frac{\nu }{2}}\) is nondecreasing in k. \(\square \)

For \(\nu = 0\), Proposition 1 gives a natural upper bound, \(h_k \le H_{0}\), since the estimate \(h_k\) is designed for \(H_{0}\) based on Lemma 4. For \(\nu \in (0, 1]\), the upper bound can become tighter when \(k S_k\) is small. Indeed, the iterates \((x_k)\) are expected to move less significantly in an epoch as the algorithm proceeds. Accordingly, \((S_k)\) increases more slowly in later epochs, yielding a tighter upper bound on \(h_k\). This trick improves the complexity bound from \(O(\varepsilon ^{-2})\) for \(\nu = 0\) to \(O(\varepsilon ^{- \frac{4 + 3 \nu }{2 + 2 \nu }})\) for general \(\nu \in [0, 1]\).

4.3 Restart mechanisms

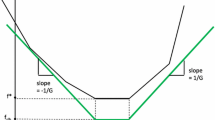

Algorithm 1 is equipped with two restart mechanisms. The first one uses the standard descent condition

to check whether the current estimate \(\ell \) for the Lipschitz constant \(L\) is large enough. If the descent condition (13) does not hold, HB restarts with a larger \(\ell \) from the best solution \(x^\star _k {:}{=}\mathop {\textrm{argmin}}\limits _{x \in \{ x_0,\dots ,x_k,{\bar{x}}_1,\dots ,{\bar{x}}_k \}} f(x)\) during the epoch.
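Selecting the restart point \(x^\star _k\) is a plain minimization of f over the \(2k + 1\) candidates \(x_0,\dots ,x_k,{\bar{x}}_1,\dots ,{\bar{x}}_k\). A minimal sketch, with a hypothetical helper name and the objective passed as a Python callable:

```python
def best_solution(f, xs, xbars):
    """Return the point with the smallest f among the iterates xs = [x_0, ..., x_k]
    and the averaged solutions xbars = [xbar_1, ..., xbar_k]."""
    return min(list(xs) + list(xbars), key=f)


f = lambda x: (x[0] - 1.0) ** 2
print(best_solution(f, xs=[[0.0], [2.5]], xbars=[[1.2]]))  # [1.2]
```

In practice one would keep a running minimum instead of re-evaluating f, since Algorithm 1 already evaluates f at \(x_k\) and \({\bar{x}}_k\) in each iteration.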

We consider not only \(x_0,\dots ,x_k\) but also the averaged solutions \({\bar{x}}_1,\dots ,{\bar{x}}_k\) as candidates for the next starting point because averaging may stabilize the behavior of the HB method. As we will show later in Lemma 6, the gradient norm of averaged solutions is small, which leads to stability. For strongly convex quadratic problems, Danilova and Malinovsky [16] also showed that averaged HB methods have a smaller maximal deviation from the optimal solution than the vanilla HB method. A similar effect is expected for nonconvex problems in the neighborhood of local optima, where a quadratic approximation is justified.

The second restart scheme resets the momentum effect when k becomes large; if

is satisfied, HB restarts from the best solution \(x^\star _k\). At the restart, we can reset \(\ell \) to a smaller value in the hope of improving practical performance, though decreasing \(\ell \) is not necessary for the complexity analysis. This restart scheme guarantees that

holds at iteration k of each epoch.

The Lipschitz estimate \(\ell \) increases only when the descent condition (13) is violated. On the other hand, Lemma 1 implies that condition (13) always holds as long as \(\ell \ge L\). Hence, we have the following upper bound on \(\ell \).

Proposition 2

Suppose that Assumption 1 holds. Then, the following is true throughout Algorithm 1: \(\ell \le \max \{ \ell _{\textrm{init}}, \alpha L \}\).
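The argument behind Proposition 2 can be illustrated by simulation. The sketch assumes, consistently with the relation \(\ell _{\textrm{init}}\beta ^{N_{\textrm{suc}}} \alpha ^{N_{\textrm{unsuc}}} \le \bar{\ell }\) used in the proof of Theorem 1, that \(\ell \) is multiplied by \(\alpha \) after an unsuccessful epoch and by \(\beta \) after a successful one. It adversarially declares epochs unsuccessful as often as Lemma 1 permits, namely only while \(\ell < L\). The function name and the random schedule are hypothetical.

```python
import random


def simulate_lipschitz_estimate(ell_init, alpha, beta, L, num_epochs, seed=0):
    """Trajectory of the estimate ell over epochs (hypothetical model):
    an unsuccessful epoch multiplies ell by alpha and, by Lemma 1, can only
    occur while ell < L; a successful epoch multiplies ell by beta <= 1."""
    rng = random.Random(seed)
    ell = ell_init
    history = [ell]
    for _ in range(num_epochs):
        if ell < L and rng.random() < 0.8:
            ell *= alpha  # descent condition violated: enlarge the estimate
        else:
            ell *= beta   # successful epoch: optionally shrink the estimate
        history.append(ell)
    return history


hist = simulate_lipschitz_estimate(1e-3, alpha=2.0, beta=0.1, L=50.0, num_epochs=1000)
print(max(hist) <= max(1e-3, 2.0 * 50.0))  # True
```

The invariant is simple: \(\ell \) can only grow from a value below \(L\), and one multiplication by \(\alpha \) then yields at most \(\alpha L\).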

5 Complexity analysis

This section proves that Algorithm 1 enjoys the complexity bound (2) for all \(\nu \in [0, 1]\).

5.1 Objective decrease for one epoch

First, we evaluate the decrease in the objective function value during one epoch.

Lemma 5

Suppose that Assumption 1 holds and that the descent condition

holds for all \(1 \le i \le k\). Then, the following holds under condition (15):

Before providing the proof, let us remark on the lemma.

Evaluating the decrease in the objective function is the central part of a complexity analysis. It is also an intricate part because the function value does not necessarily decrease monotonically in nonconvex acceleration methods. To overcome the non-monotonicity, previous analyses have employed different proof techniques. For example, Li and Lin [33] constructed a quadratic approximation of the objective, diagonalized the Hessian, and evaluated the objective decrease separately for each coordinate; Marumo and Takeda [35] designed an elaborate potential function and showed that it is nearly decreasing.

This paper uses another technique to deal with the non-monotonicity. We observe that the solution \(x_k\) does not need to attain a small function value; it is sufficient for at least one of \(x_1,\dots ,x_k\) to do so, thanks to our particular restart mechanism. This observation permits the left-hand side of (17) to be \(\min _{1 \le i \le k} f(x_i)\) rather than \(f(x_k)\) and makes the proof easier. The proof of Lemma 5 calculates a weighted sum of \(2k-1\) inequalities derived from Lemmas 1 and 3, which is elementary compared with the existing proofs. Now, we provide that proof.

Proof of Lemma 5

Combining (16) with the update rules (3) and (4) yields

for \(1 \le i \le k\). For \(1 \le i < k\), we also have

We will calculate a weighted sum of \(2k-1\) inequalities:

- (18) with weight 1 for \(1 \le i \le k\),

- (19) with weight \(2(k-i)\) for \(1 \le i < k\).

The left-hand side of the weighted sum is

On the right-hand side of the weighted sum, some calculations with \(v_0 = \textbf{0}\) show that the inner-product terms of \(\langle v_{i-1},v_i\rangle \) cancel out as follows:

The remaining terms on the right-hand side of the weighted sum are

We now obtain

Finally, we evaluate the coefficient on the right-hand side with (15) as

which completes the proof. \(\square \)

The proof elucidates that the second restart condition (14) was designed to derive the lower bound of \(\ell \frac{2k-1}{4k}\) in (20).

For an epoch that ends at Line 10 in iteration \(k \ge 1\), Lemma 5 gives

For an epoch that ends at Line 8 in iteration \(k \ge 2\), the lemma gives

These bounds will be used to derive the complexity bound.

5.2 Upper bound on gradient norm

Next, we prove the following upper bound on the gradient norm at the averaged solution.

Lemma 6

In Algorithm 1, the following holds at iteration \(k \ge 2\):

Proof

For \(k = 2\), the result follows from \(\Vert \nabla f ({\bar{x}}_1) \Vert = \Vert \nabla f (x_0) \Vert = \ell \Vert v_1\Vert \). Below, we assume that \(k \ge 3\). Let \(A_k {:}{=}\sum _{i=1}^{k-1} i^2\); we have

for \(k \ge 3\). A weighted sum of (12) over k yields

since \(h_k\) and \(S_k\) are nondecreasing in k. Each term can be bounded by the Cauchy–Schwarz inequality as

and thus

where the last inequality uses (15). Using (23) and \(1 + \frac{3}{8 \sqrt{32}} < \frac{2}{\sqrt{3}}\) concludes the proof. \(\square \)
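The numerical inequality used at the end of the proof can be confirmed in floating point:

```python
import math

lhs = 1 + 3 / (8 * math.sqrt(32))  # approx. 1.0663
rhs = 2 / math.sqrt(3)             # approx. 1.1547
print(lhs < rhs)  # True
```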

5.3 Complexity bound

Let \(\bar{\ell }\) denote the upper bound on the Lipschitz estimate \(\ell \) given in Proposition 2: \(\bar{\ell }{:}{=}\max \{ \ell _{\textrm{init}}, \alpha L \}\). The following theorem shows iteration complexity bounds for Algorithm 1. Recall that \(\alpha > 1\) and \(0 < \beta \le 1\) are the input parameters of Algorithm 1.

Theorem 1

Suppose that Assumption 1 holds and \(\inf _{x \in \mathbb R^d} f(x) > - \infty \). Let

In Algorithm 1, when \(\Vert \nabla f({\bar{x}}_k)\Vert \le \varepsilon \) holds for the first time, the total iteration count K is at most

In particular, if we set \(\beta = 1\), then \({c_1} = 0\) and the upper bound simplifies to

Proof

We classify the epochs into three types:

- successful epoch: an epoch that does not find an \(\varepsilon \)-stationary point and ends at Line 10 with the descent condition (13) satisfied,

- unsuccessful epoch: an epoch that does not find an \(\varepsilon \)-stationary point and ends at Line 8 with the descent condition (13) unsatisfied,

- last epoch: the epoch that finds an \(\varepsilon \)-stationary point.

Let \(N_{\textrm{suc}}\) and \(N_{\textrm{unsuc}}\) be the number of successful and unsuccessful epochs, respectively. Let \(K_{\textrm{suc}}\) be the total iteration number of all successful epochs. Below, we fix \(\nu \in [0, 1]\) arbitrarily such that \(H_{\nu } < + \infty \). (Note that there exists such a \(\nu \) since \(H_{0} \le 2 L< + \infty \).)

Successful epochs. Let us focus on a successful epoch and let k denote the total number of iterations of the epoch we are focusing on, i.e., the epoch ends at iteration k.

We then have

as follows: if \(k = 1\), we have \(S_k = \Vert v_1\Vert ^2 = \frac{1}{\ell ^2} \Vert \nabla f(x_0)\Vert ^2 > \frac{\varepsilon ^2}{\ell ^2} \ge \frac{\varepsilon ^2 k^3}{8 \ell ^2}\); if \(k \ge 2\), Lemma 6 gives \(\varepsilon < \ell \sqrt{8 S_{k-1} / k^3} \le \ell \sqrt{8 S_k / k^3}\).

On the other hand, putting the restart condition (14) together with Proposition 1 yields

and hence

Combining (27) and (26) leads to

Plugging them into (21) yields

since \(\nu \ge 0\). Summing these bounds over all successful epochs results in

and hence

Other epochs. Let \(k_1,\dots ,k_{N_{\textrm{unsuc}}}\) be the numbers of iterations of the unsuccessful epochs and \(k_{N_{\textrm{unsuc}} + 1}\) that of the last epoch. Then, the total number of iterations of these epochs can be bounded with the Cauchy–Schwarz inequality as follows:

where \(\sum _{i:\, k_i \ge 2}\) denotes a sum over \(i = 1, \dots , N_{\textrm{unsuc}} + 1\) such that \(k_i \ge 2\). We will evaluate \(N_{\textrm{unsuc}}\) and the sum of \(k_i^2\). First, we have \(\ell _{\textrm{init}}\beta ^{N_{\textrm{suc}}} \alpha ^{N_{\textrm{unsuc}}} \le \bar{\ell }\) and hence

from (28), where \(c_1\) and \(c_2\) are defined by (24). Next, let us focus on an epoch that ends at iteration \(k \ge 2\). Lemma 6 gives \(\varepsilon < \ell \sqrt{8 S_{k-1} / k^3}\) and hence \(S_{k-1} \ge \frac{\varepsilon ^2 k^3}{8 \ell ^2}\). Plugging this bound into (22) yields

Summing this bound over all unsuccessful and last epochs results in

Plugging (30) and (31) into (29) yields

where the last inequality uses \(\sqrt{a + b} \le \sqrt{a} + \sqrt{b}\) for \(a, b \ge 0\). Putting this bound together with (28) gives an upper bound on the total iteration number of all epochs:

where we have used \(2^{\frac{13}{2}} < 91\), \(2^8 = 256\), and \(2^{\frac{5}{2}} < 6\). Since \(\nu \in [0, 1]\) is now arbitrary, taking the infimum completes the proof. \(\square \)
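The three numerical facts used in the final step can likewise be checked directly:

```python
checks = {
    "2^(13/2) < 91": 2 ** 6.5 < 91,  # 2^(13/2) is about 90.51
    "2^8 == 256": 2 ** 8 == 256,
    "2^(5/2) < 6": 2 ** 2.5 < 6,     # 2^(5/2) is about 5.66
}
print(all(checks.values()))  # True
```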

Algorithm 1 evaluates the objective function and its gradient at two points, \(x_k\) and \({\bar{x}}_k\), in each iteration. Therefore, the number of evaluations is of the same order as the iteration complexity in Theorem 1.

The complexity bounds given in Theorem 1 may look somewhat unfamiliar since they involve an \(\inf \)-operation on \(\nu \). Such a bound is a significant benefit of \(\nu \)-independent algorithms. The \(\nu \)-dependent prototype algorithm described immediately after Lemma 4 achieves the bound

only for the given \(\nu \). In contrast, Algorithm 1 is \(\nu \)-independent and automatically achieves the bound with the optimal \(\nu \), as shown in Theorem 1. The fact that the optimal \(\nu \) is difficult to find also points to the advantage of our \(\nu \)-independent algorithm. The complexity bound (25) also gives a looser bound:

where we have taken \(\nu = 0\) and have used \(H_{0} \le 2 L\le 2 \bar{\ell }\). This bound matches the classical bound of \(O(\varepsilon ^{-2})\) for GD. Theorem 1 thus shows that our HB method has a more refined complexity bound than GD.

Remark 1

Although we employed global Lipschitz and Hölder continuity in Assumption 1 and Definition 1, they can be restricted to the region that the iterates reach. More precisely, if we assume that the iterates \((x_k)\) generated by Algorithm 1 are contained in some convex set \(C \subseteq \mathbb R^d\), we can replace all \(\mathbb R^d\) in our analysis with C and obtain the same complexity bound as Theorem 1 under Lipschitz and Hölder continuity on C.Footnote 3

6 Numerical experiments

This section compares the performance of the proposed method with several existing algorithms. The experimental setup, including the compared algorithms and problem instances, follows [35]. We implemented the code in Python with JAX [4] and Flax [26] and executed it on a computer with an Apple M3 chip (12 cores) and 36 GB RAM. The source code used in the experiments is available on GitHub.Footnote 4

6.1 Compared algorithms

We compared the following six algorithms.

- Proposed is Algorithm 1 with parameters set as \((\ell _{\textrm{init}}, \alpha , \beta ) = (10^{-3}, 2, 0.1)\).

- GD is a gradient descent method with Armijo-type backtracking. This method has input parameters \(\ell _{\textrm{init}}\), \(\alpha \), and \(\beta \) similar to those in Proposed, which were set as \((\ell _{\textrm{init}}, \alpha , \beta ) = (10^{-3}, 2, 0.9)\).

- JNJ2018 [28, Algorithm 2] is an accelerated gradient (AG) method for nonconvex optimization. The parameters were set in accordance with [28, Eq. (3)]. The equation involves constants c and \(\chi \), whose values are difficult to determine; we set them as \(c = \chi = 1\).

- LL2022 [33, Algorithm 2] is another AG method. The parameters were set in accordance with [33, Theorem 2.2 and Section 4].

- MT2022 [35, Algorithm 1] is another AG method. The parameters were set in accordance with [35, Section 6.1].

- L-BFGS is the limited-memory BFGS method [5]. We used SciPy [52] for the method, i.e., scipy.optimize.minimize with option method="L-BFGS-B".
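For reference, a gradient descent method with Armijo-type backtracking on the step size \(1/\ell \) can be sketched as follows. The sufficient-decrease test \(f(x - \nabla f(x)/\ell ) \le f(x) - \Vert \nabla f(x)\Vert ^2/(2\ell )\), the use of \(\beta \) to shrink \(\ell \) after each accepted step, and all names are assumptions made for illustration; the exact rules of the GD baseline are not spelled out here. By Lemma 1, the test is guaranteed to pass once \(\ell \ge L\).

```python
def gd_backtracking(f, grad, x0, ell_init=1e-3, alpha=2.0, beta=0.9, tol=1e-6, max_iters=10_000):
    """Gradient descent with step 1/ell and backtracking on ell (hypothetical sketch).

    Each iteration multiplies ell by alpha until the sufficient-decrease test
    f(x - g/ell) <= f(x) - |g|^2 / (2 ell) holds, takes the step, and then
    multiplies ell by beta so that later steps may become longer.
    """
    x, ell = list(x0), ell_init
    for _ in range(max_iters):
        g = grad(x)
        gnorm2 = sum(gi * gi for gi in g)
        if gnorm2 ** 0.5 <= tol:  # approximate stationary point found
            break
        while True:
            y = [xi - gi / ell for xi, gi in zip(x, g)]
            if f(y) <= f(x) - gnorm2 / (2 * ell):
                break
            ell *= alpha  # step too long: increase the Lipschitz estimate
        x, ell = y, ell * beta
    return x


def f(x):
    return x[0] ** 2 + 10 * x[1] ** 2  # L = 20


def grad(x):
    return [2 * x[0], 20 * x[1]]


x_final = gd_backtracking(f, grad, [1.0, 1.0])
```

The default parameters mirror the values \((10^{-3}, 2, 0.9)\) listed above for GD.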

The parameter setting for JNJ2018 and LL2022 requires the values of the Lipschitz constants \(L\) and \(H_{1}\) and the target accuracy \(\varepsilon \). For these two methods, we selected the best \(L\) from \(\{ 10^{-4},10^{-3},\dots ,{10^{10}} \}\) and set \(H_{1} = 1\) and \(\varepsilon = 10^{-16}\), following [33, 35]. Note that if these values deviate from the actual ones, the methods are not guaranteed to converge.

6.2 Problem instances

We tested the algorithms on seven different instances. The first four instances are benchmark functions from [27].

- Dixon–Price function [19]:

$$\begin{aligned} \min _{(x_1,\dots ,x_d) \in \mathbb R^d}\ (x_1 - 1)^2 + \sum _{i=2}^d i (2 x_i^2 - x_{i-1})^2. \end{aligned} \tag{32}$$

The optimum is \(f(x^*) = 0\) at \(x^*_i = 2^{2^{1-i} - 1}\) for \(1 \le i \le d\).

-

Powell function [44]:

$$\begin{aligned}&\min _{(x_1,\dots ,x_d) \in \mathbb R^d}\ \sum _{i=1}^{\lfloor d/4\rfloor } \left( { \left( {x_{4i-3} + 10 x_{4i-2}}\right) ^2 + 5 \left( {x_{4i-1} - x_{4i}}\right) ^2 + \left( {x_{4i-2} - 2 x_{4i-1}}\right) ^4 + 10 \left( {x_{4i-3} - x_{4i}}\right) ^4 }\right) . \end{aligned}$$ (33)
The optimum is \(f(x^*) = 0\) at \(x^* = (0, \dots , 0)\).

-

Qing function [45]:

$$\begin{aligned} \min _{(x_1,\dots ,x_d) \in \mathbb R^d}\ \sum _{i=1}^{d} (x_i^2 - i)^2. \end{aligned}$$ (34)
The optimum is \(f(x^*) = 0\) at \(x^* = (\pm \sqrt{1}, \pm \sqrt{2}, \dots , \pm \sqrt{d})\).

-

Rosenbrock function [47]:

$$\begin{aligned} \min _{(x_1,\dots ,x_d) \in \mathbb R^d}\ \sum _{i=1}^{d-1} \left( { 100 \left( {x_{i+1} - x_i^2}\right) ^2 + (x_i - 1)^2 }\right) . \end{aligned}$$ (35)
The optimum is \(f(x^*) = 0\) at \(x^* = (1, \dots , 1)\).

The dimension d of the above problems was fixed as \(d = 10^6\). The starting point was set as \(x_{\textrm{init}}= x^* + \delta \), where \(x^*\) is the optimal solution, and each entry of \(\delta \) was drawn from the normal distribution \({\mathcal {N}}(0, 1)\). For the Qing function (34), we used \(x^* = (\sqrt{1}, \sqrt{2}, \dots , \sqrt{d})\) to set the starting point.
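The four benchmark objectives (32)–(35), their optima, and the randomized starting point described above can be written in a few lines of NumPy. This is an illustrative sketch, not the experiment code: the helper names, the dictionary layout, the smaller dimension in the usage example, and the seed are our own choices. For the Qing function the sketch sums over \(i = 1, \dots , d\), as in the usual definition, which is the form whose minimizers are \((\pm \sqrt{1}, \dots , \pm \sqrt{d})\).

```python
import numpy as np


def dixon_price(x):
    i = np.arange(2, x.size + 1)  # weights i = 2, ..., d
    return (x[0] - 1.0) ** 2 + np.sum(i * (2.0 * x[1:] ** 2 - x[:-1]) ** 2)


def powell(x):
    m = x.size // 4  # number of complete groups of four coordinates
    a, b, c, e = x[: 4 * m].reshape(m, 4).T  # x_{4i-3}, x_{4i-2}, x_{4i-1}, x_{4i}
    return np.sum((a + 10.0 * b) ** 2 + 5.0 * (c - e) ** 2
                  + (b - 2.0 * c) ** 4 + 10.0 * (a - e) ** 4)


def qing(x):
    i = np.arange(1, x.size + 1)
    return np.sum((x ** 2 - i) ** 2)


def rosenbrock(x):
    return np.sum(100.0 * (x[1:] - x[:-1] ** 2) ** 2 + (x[:-1] - 1.0) ** 2)


BENCHMARKS = {"dixon_price": dixon_price, "powell": powell,
              "qing": qing, "rosenbrock": rosenbrock}


def optimum(name, d):
    i = np.arange(1, d + 1)
    return {
        "dixon_price": 2.0 ** (2.0 ** (1 - i) - 1.0),
        "powell": np.zeros(d),
        "qing": np.sqrt(i),  # positive branch, used to build the starting point
        "rosenbrock": np.ones(d),
    }[name]


def starting_point(name, d, rng):
    # x_init = x* + delta, each entry of delta drawn independently from N(0, 1)
    return optimum(name, d) + rng.standard_normal(d)


rng = np.random.default_rng(0)
d = 1000  # the experiments use d = 10**6
for name, f in BENCHMARKS.items():
    print(name, f(optimum(name, d)), f(starting_point(name, d, rng)))
```

Up to rounding, each objective vanishes at its listed optimum, while the perturbed starting points have clearly positive objective values.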

The other three instances are more practical examples from machine learning.

-

Training a neural network for classification with the MNIST dataset:

$$\begin{aligned} \min _{w \in \mathbb R^d}\ &\frac{1}{N} \sum _{i=1}^N \ell _{\textrm{CE}}(y_i, \phi _1(x_i; w)). \end{aligned}$$ (36)
The vectors \(x_1,\dots ,x_N \in \mathbb R^M\) and \(y_1,\dots ,y_N \in \{ 0, 1 \}^K\) are given data, \(\ell _{\textrm{CE}}\) is the cross-entropy loss, and \(\phi _1(\cdot ; w): \mathbb R^M \rightarrow \mathbb R^K\) is a neural network parameterized by \(w \in \mathbb R^d\). We used a three-layer fully connected network with bias parameters, whose layers have M, 32, 16, and K nodes, respectively, where \(M = 784\) and \(K = 10\). The hidden layers use the logistic sigmoid activation, and the output layer uses the softmax activation. The total number of parameters is \(d = (784 \times 32 + 32 \times 16 + 16 \times 10) + (32 + 16 + 10) = 25818\). The data size is \(N = 10000\).

-

Training an autoencoder for the MNIST dataset:

$$\begin{aligned} \min _{w \in \mathbb R^d}\ &\frac{1}{2MN} \sum _{i=1}^N \Vert x_i - \phi _2(x_i; w)\Vert ^2. \end{aligned}$$ (37)
The vectors \(x_1,\dots ,x_N \in \mathbb R^M\) are given data, and \(\phi _2(\cdot ; w): \mathbb R^M \rightarrow \mathbb R^M\) is a neural network parameterized by \(w \in \mathbb R^d\). We used a four-layer fully connected network with bias parameters, whose layers have M, 32, 16, 32, and M nodes, respectively, where \(M = 784\). The hidden and output layers use the logistic sigmoid activation. The total number of parameters is \(d = (784 \times 32 + 32 \times 16 + 16 \times 32 + 32 \times 784) + (32 + 16 + 32 + 784) = 52064\). The data size is \(N = 10000\).

-

Low-rank matrix completion with the MovieLens-100K dataset:

$$\begin{aligned} \min _{\begin{array}{c} U \in \mathbb R^{p \times r}\\ V \in \mathbb R^{q \times r} \end{array}}\ {}&\frac{1}{2 N} \sum _{(i, j, s) \in \Omega } \left( {(U V^\top )_{ij} - s}\right) ^2 + \frac{1}{2 N} \Vert U^\top U - V^\top V\Vert _{\textrm{F}}^2. \end{aligned}$$ (38)
The set \(\Omega \) consists of \(N = 100000\) observed entries of a \(p \times q\) data matrix, and \((i, j, s) \in \Omega \) means that the (i, j)-th entry is s. The second term, involving the Frobenius norm \(\Vert \cdot \Vert _{\textrm{F}}\), was proposed in [51] to balance U and V. The data matrix is of size \(p \times q\) with \(p = 943\) and \(q = 1682\), and we set the rank to \(r \in \{ 100, 200 \}\). Thus, the number of variables is \(pr + qr \in \{ 262500, 525000 \}\).
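The problem sizes stated for (36)–(38), together with objective (38) and its gradient, can be checked with the NumPy sketch below. The function names are hypothetical, storing \(\Omega \) as three index/value arrays is an assumption about the data layout rather than the experiment code, and the gradient formulas are our own derivation: writing \(D = U^\top U - V^\top V\), the balancing term contributes \(\frac{2}{N} U D\) to the gradient with respect to U and \(-\frac{2}{N} V D\) to the gradient with respect to V.

```python
import numpy as np


def dense_param_count(widths):
    """Weights plus biases of a fully connected network with these layer widths."""
    weights = sum(m * n for m, n in zip(widths[:-1], widths[1:]))
    biases = sum(widths[1:])
    return weights + biases


def completion_loss_grad(U, V, rows, cols, vals):
    """Objective (38) and its gradient; Omega = {(rows[k], cols[k], vals[k])}."""
    N = vals.size
    resid = np.sum(U[rows] * V[cols], axis=1) - vals  # (U V^T)_{ij} - s
    D = U.T @ U - V.T @ V                               # r x r balancing matrix
    loss = (resid @ resid + np.sum(D * D)) / (2.0 * N)
    gU = np.zeros_like(U)
    gV = np.zeros_like(V)
    # Scatter-add one contribution per observed entry (handles repeated rows/cols).
    np.add.at(gU, rows, resid[:, None] * V[cols])
    np.add.at(gV, cols, resid[:, None] * U[rows])
    gU = gU / N + (2.0 / N) * (U @ D)
    gV = gV / N - (2.0 / N) * (V @ D)
    return loss, gU, gV


print(dense_param_count([784, 32, 16, 10]))       # 25818, classifier (36)
print(dense_param_count([784, 32, 16, 32, 784]))  # 52064, autoencoder (37)
print([(943 + 1682) * r for r in (100, 200)])     # [262500, 525000], problem (38)
```

The scatter-add keeps the cost of the data term proportional to \(|\Omega |\) rather than to \(pq\), which matters for a sparse \(943 \times 1682\) matrix with only 100000 observed entries.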

Although we did not check whether the above seven instances have globally Lipschitz continuous gradients or Hessians, we confirmed in our experiments that the iterates generated by each algorithm remained bounded. Since all of the above instances are three times continuously differentiable, both their gradients and Hessians are Lipschitz continuous on any bounded set containing the iterates. In view of Remark 1, the complexity bound of Theorem 1 therefore applies to the proposed algorithm in these experiments.

6.3 Results

Figure 1 illustrates the results with the four benchmark functions.Footnote 5 The horizontal axis is the number of calls to the oracle that computes both f(x) and \(\nabla f(x)\) at a given point \(x \in \mathbb R^d\).

Let us first focus on the methods other than L-BFGS, which is very practical but does not have complexity guarantees for general nonconvex functions, unlike the other methods.

Figures 1a and 1b show that Proposed converged faster than the existing methods other than L-BFGS, and Fig. 1c shows that Proposed and MT2022 converged quickly. Figure 1d shows that GD and LL2022 attained small objective function values, while GD and Proposed converged quickly in terms of the gradient norm. In summary, the proposed algorithm was stable and fast across the four benchmarks.

L-BFGS successfully solved the four benchmarks, but these results do not imply that L-BFGS converged faster than the proposed algorithm in terms of execution time. Figure 2 shows the four plots in the right column of Fig. 1 with the horizontal axis replaced by elapsed time; in terms of time, Proposed converged as fast as or faster than L-BFGS. One reason for the large difference in the apparent performance of L-BFGS between Figs. 1 and 2 is that the computational cost of the non-oracle parts of L-BFGS, such as updating the Hessian approximation and solving linear systems, is not negligible. In contrast, the proposed algorithm requires little computation besides oracle calls and is therefore more advantageous in execution time when function and gradient evaluations are inexpensive.

Figure 3 presents the results with the machine learning instances. As with Fig. 1, Fig. 3 shows that the proposed algorithm performed comparably to or better than the existing methods other than L-BFGS, especially in reducing the gradient norm.

Figure 4 illustrates the objective function value \(f(x_k)\) and the estimates \(\ell \) and \(h_k\) at each iteration of the proposed algorithm for the machine learning instances. The iterations at which a restart occurred are also marked; “successful” and “unsuccessful” refer to restarts at Line 10 and Line 8 of Algorithm 1, respectively. The figure shows that the proposed algorithm restarts frequently in the early stages and less frequently as the iterations progress. The frequent early restarts help update the estimate \(\ell \): \(\ell \) reached suitable values within the first few iterations, even though it was initialized to the rather small value \(\ell _{\textrm{init}}= 10^{-3}\). The infrequent restarts in later stages enable the algorithm to take full advantage of the HB momentum.

Notes

The \({\tilde{O}}\)-notation hides polylogarithmic factors in \(\varepsilon ^{-1}\). For example, the method of [28] has a complexity bound of \(O(\varepsilon ^{-7/4} (\log \varepsilon ^{-1})^6)\).

We note that some methods [1, 7, 48, 49] also attain the complexity of \({\tilde{O}}(\varepsilon ^{-7/4})\), but they use Hessian-vector products and thus are technically not first-order methods (although Hessian-vector products can be approximated by finite differences of gradients, i.e., with a first-order oracle).

We omit the proof, which is essentially the same except that every occurrence of \(\mathbb R^d\) is replaced with C. The convexity of C is necessary to guarantee that the averaged solution \({\bar{x}}_k\) also belongs to C.

To obtain the results of L-BFGS, we ran the SciPy function multiple times with the maximum number of iterations set to \(2^0, 2^1, 2^2,\dots \), because we could not obtain the solution at each iteration from the SciPy implementation of L-BFGS, only the final result. The results are therefore plotted as markers instead of lines in Figs. 1, 2, and 3.
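The re-running procedure described in this note can be sketched with SciPy as follows. Only scipy.optimize.minimize (called with jac=True, so that one call returns both f(x) and its gradient) and the SciPy Rosenbrock helpers rosen and rosen_der are existing APIs; the helper name lbfgs_markers, the test function, the dimension, and the seed are illustrative assumptions, not the experimental settings. Each run contributes one marker of the form (number of oracle calls, f(x), gradient norm).

```python
import numpy as np
from scipy.optimize import minimize, rosen, rosen_der


def lbfgs_markers(fun_and_grad, x0, max_power=10):
    """Run L-BFGS-B from scratch with maxiter = 2^0, 2^1, ..., 2^max_power.

    Each run reports only its final point, so each run yields one plot marker.
    """
    markers = []
    for k in range(max_power + 1):
        res = minimize(fun_and_grad, x0, jac=True, method="L-BFGS-B",
                       options={"maxiter": 2 ** k})
        # With jac=True, res.nfev counts calls that return both f and grad f.
        markers.append((res.nfev, res.fun, np.linalg.norm(res.jac)))
    return markers


x0 = np.ones(10) + np.random.default_rng(0).standard_normal(10)
for calls, fval, gnorm in lbfgs_markers(lambda x: (rosen(x), rosen_der(x)), x0):
    print(calls, fval, gnorm)
```

Because L-BFGS-B is deterministic, a run with a larger iteration cap should repeat the iterates of a shorter run before continuing, so the markers trace a single trajectory at the cost of redundant work.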

References

Agarwal, N., Allen-Zhu, Z., Bullins, B., Hazan, E., Ma, T.: Finding approximate local minima faster than gradient descent. In: Proceedings of the 49th Annual ACM SIGACT Symposium on Theory of Computing, STOC 2017, pp. 1195–1199. Association for Computing Machinery, New York, NY, USA (2017). ISBN 9781450345286. https://doi.org/10.1145/3055399.3055464

Allen-Zhu, Z., Li, Y.: NEON2: finding local minima via first-order oracles. In: S. Bengio, H. Wallach, H. Larochelle, K. Grauman, N. Cesa-Bianchi, and R. Garnett (eds.) Advances in Neural Information Processing Systems, volume 31. Curran Associates, Inc., (2018). https://proceedings.neurips.cc/paper/2018/file/d4b2aeb2453bdadaa45cbe9882ffefcf-Paper.pdf

Beck, A.: First-Order Methods in Optimization. Society for Industrial and Applied Mathematics, Philadelphia, PA (2017). https://doi.org/10.1137/1.9781611974997. https://epubs.siam.org/doi/abs/10.1137/1.9781611974997

Bradbury, J., Frostig, R., Hawkins, P., Johnson, M. J., Leary, C., Maclaurin, D., Necula, G., Paszke, A., VanderPlas, J., Wanderman-Milne, S., Zhang, Q.: JAX: composable transformations of Python+NumPy programs, (2018). https://github.com/google/jax

Byrd, R.H., Lu, P., Nocedal, J., Zhu, C.: A limited memory algorithm for bound constrained optimization. SIAM J. Sci. Comput. 16(5), 1190–1208 (1995). https://doi.org/10.1137/0916069

Carmon, Y., Duchi, J. C., Hinder, O., Sidford, A.: Convex until proven guilty: dimension-free acceleration of gradient descent on non-convex functions. In: D. Precup and Y. W. Teh (eds.) Proceedings of the 34th International Conference on Machine Learning, volume 70 of Proceedings of Machine Learning Research, pp. 654–663. PMLR, (06–11 Aug 2017). URL https://proceedings.mlr.press/v70/carmon17a.html

Carmon, Y., Duchi, J.C., Hinder, O., Sidford, A.: Accelerated methods for nonconvex optimization. SIAM J. Optim. 28(2), 1751–1772 (2018). https://doi.org/10.1137/17M1114296

Carmon, Y., Duchi, J.C., Hinder, O., Sidford, A.: Lower bounds for finding stationary points I. Math. Program. 184(1), 71–120 (2020). https://doi.org/10.1007/s10107-019-01406-y

Carmon, Y., Duchi, J.C., Hinder, O., Sidford, A.: Lower bounds for finding stationary points II: first-order methods. Math. Program. 185(1), 315–355 (2021). https://doi.org/10.1007/s10107-019-01431-x

Cartis, C., Gould, N.I.M., Toint, P.L.: Adaptive cubic regularisation methods for unconstrained optimization. Part I: motivation, convergence and numerical results. Math. Program. 127(2), 245–295 (2011). https://doi.org/10.1007/s10107-009-0286-5

Cartis, C., Gould, N.I.M., Toint, P.L.: Adaptive cubic regularisation methods for unconstrained optimization. Part II: worst-case function- and derivative-evaluation complexity. Math. Program. 130(2), 295–319 (2011). https://doi.org/10.1007/s10107-009-0337-y

Cartis, C., Gould, N.I.M., Toint, P.L.: Worst-case evaluation complexity of regularization methods for smooth unconstrained optimization using Hölder continuous gradients. Optim. Methods Softw. 32(6), 1273–1298 (2017). https://doi.org/10.1080/10556788.2016.1268136

Cartis, C., Gould, N.I.M., Toint, P.L.: Universal regularization methods: varying the power, the smoothness and the accuracy. SIAM J. Optim. 29(1), 595–615 (2019). https://doi.org/10.1137/16M1106316

Curtis, F.E., Robinson, D.P., Samadi, M.: A trust region algorithm with a worst-case iteration complexity of \(O(\epsilon ^{-3/2})\) for nonconvex optimization. Math. Program. 162(1), 1–32 (2017). https://doi.org/10.1007/s10107-016-1026-2

Cutkosky, A., Mehta, H.: Momentum improves normalized SGD. In: H. D. III and A. Singh (eds.) Proceedings of the 37th International Conference on Machine Learning, volume 119 of Proceedings of Machine Learning Research, pp. 2260–2268. PMLR, (13–18 Jul 2020). URL https://proceedings.mlr.press/v119/cutkosky20b.html

Danilova, M., Malinovsky, G.: Averaged heavy-ball method. arXiv preprint, (2021). arxiv:2111.05430

Danilova, M., Kulakova, A., Polyak, B.: Non-monotone behavior of the heavy ball method. In: M. Bohner, S. Siegmund, R. Šimon Hilscher, and P. Stehlík (eds.) Difference Equations and Discrete Dynamical Systems with Applications, pp. 213–230, Cham, (2020). Springer International Publishing. ISBN 978-3-030-35502-9

Devolder, O., Glineur, F., Nesterov, Y.: First-order methods of smooth convex optimization with inexact oracle. Math. Program. 146(1), 37–75 (2014). https://doi.org/10.1007/s10107-013-0677-5

Dixon, L.C.W., Price, R.C.: Truncated Newton method for sparse unconstrained optimization using automatic differentiation. J. Optim. Theory Appl. 60(2), 261–275 (1989). https://doi.org/10.1007/BF00940007

Dvurechensky, P.: Gradient method with inexact oracle for composite non-convex optimization. arXiv preprint, (2017). arxiv:1703.09180

Ghadimi, E., Feyzmahdavian, H. R., Johansson, M.: Global convergence of the heavy-ball method for convex optimization. In: 2015 European Control Conference (ECC), pp. 310–315, (2015). https://doi.org/10.1109/ECC.2015.7330562

Ghadimi, S., Lan, G., Zhang, H.: Generalized uniformly optimal methods for nonlinear programming. J. Sci. Comput. 79(3), 1854–1881 (2019). https://doi.org/10.1007/s10915-019-00915-4

Grapiglia, G.N., Nesterov, Y.: Regularized Newton methods for minimizing functions with Hölder continuous Hessians. SIAM J. Optim. 27(1), 478–506 (2017). https://doi.org/10.1137/16M1087801

Grapiglia, G.N., Nesterov, Y.: Accelerated regularized Newton methods for minimizing composite convex functions. SIAM J. Optim. 29(1), 77–99 (2019). https://doi.org/10.1137/17M1142077

Grapiglia, G.N., Nesterov, Y.: Tensor methods for minimizing convex functions with Hölder continuous higher-order derivatives. SIAM J. Optim. 30(4), 2750–2779 (2020). https://doi.org/10.1137/19M1259432

Heek, J., Levskaya, A., Oliver, A., Ritter, M., Rondepierre, B., Steiner, A., van Zee, M.: Flax: a neural network library and ecosystem for JAX, (2020). https://github.com/google/flax

Jamil, M., Yang, X.-S.: A literature survey of benchmark functions for global optimisation problems. Int. J. Math. Model. Numer. Optim. 4(2), 150–194 (2013). https://doi.org/10.1504/IJMMNO.2013.055204

Jin, C., Netrapalli, P., Jordan, M. I.: Accelerated gradient descent escapes saddle points faster than gradient descent. In: S. Bubeck, V. Perchet, and P. Rigollet (eds.) Proceedings of the 31st Conference On Learning Theory, volume 75 of Proceedings of Machine Learning Research, pp. 1042–1085. PMLR, (06–09 Jul 2018). URL https://proceedings.mlr.press/v75/jin18a.html

Kingma, D. P., Ba, J.: Adam: A method for stochastic optimization. In: Y. Bengio and Y. LeCun (eds.) 3rd International Conference on Learning Representations, (2015). URL http://arxiv.org/abs/1412.6980

Lan, G.: Bundle-level type methods uniformly optimal for smooth and nonsmooth convex optimization. Math. Program. 149(1), 1–45 (2015). https://doi.org/10.1007/s10107-013-0737-x

Lan, G.: First-order and Stochastic Optimization Methods for Machine Learning. Springer, Cham (2020)

Lessard, L., Recht, B., Packard, A.: Analysis and design of optimization algorithms via integral quadratic constraints. SIAM J. Optim. 26(1), 57–95 (2016). https://doi.org/10.1137/15M1009597

Li, H., Lin, Z.: Restarted nonconvex accelerated gradient descent: No more polylogarithmic factor in the \(O(\epsilon ^{-7/4})\) complexity. In: K. Chaudhuri, S. Jegelka, L. Song, C. Szepesvari, G. Niu, and S. Sabato (eds.) Proceedings of the 39th International Conference on Machine Learning, volume 162 of Proceedings of Machine Learning Research, pp. 12901–12916. PMLR, (17–23 Jul 2022). URL https://proceedings.mlr.press/v162/li22o.html

Li, H., Lin, Z.: Restarted nonconvex accelerated gradient descent: No more polylogarithmic factor in the \(O(\epsilon ^{-7/4})\) complexity. J. Mach. Learn. Res. 24(157), 1–37 (2023)

Marumo, N., Takeda, A.: Parameter-free accelerated gradient descent for nonconvex minimization. To appear in SIAM J. Optim. arxiv:2212.06410

Nesterov, Y.: A method for solving a convex programming problem with convergence rate \(O(1/k^2)\). Soviet Mathematics Doklady 269(3), 372–376 (1983)

Nesterov, Y.: Introductory Lectures on Convex Optimization: A Basic Course. Springer, New York (2004)

Nesterov, Y.: Universal gradient methods for convex optimization problems. Math. Program. 152(1), 381–404 (2015). https://doi.org/10.1007/s10107-014-0790-0

Nesterov, Y.: Lectures on Convex Optimization, vol. 137. Springer, Cham (2018)

Nesterov, Y., Polyak, B.T.: Cubic regularization of Newton method and its global performance. Math. Program. 108(1), 177–205 (2006). https://doi.org/10.1007/s10107-006-0706-8

Ochs, P., Chen, Y., Brox, T., Pock, T.: iPiano: Inertial proximal algorithm for nonconvex optimization. SIAM J. Imag. Sci. 7(2), 1388–1419 (2014). https://doi.org/10.1137/130942954

O’Neill, M., Wright, S.J.: Behavior of accelerated gradient methods near critical points of nonconvex functions. Math. Program. 176(1), 403–427 (2019). https://doi.org/10.1007/s10107-018-1340-y

Polyak, B.T.: Some methods of speeding up the convergence of iteration methods. USSR Comput. Math. Math. Phys. 4(5), 1–17 (1964)

Powell, M.J.D.: An iterative method for finding stationary values of a function of several variables. Comput. J. 5(2), 147–151 (1962)

Qing, A.: Dynamic differential evolution strategy and applications in electromagnetic inverse scattering problems. IEEE Trans. Geosci. Remote Sens. 44(1), 116–125 (2006). https://doi.org/10.1109/TGRS.2005.859347

Reddi, S. J., Kale, S., Kumar, S.: On the convergence of Adam and beyond. In: International Conference on Learning Representations, (2018). URL https://openreview.net/forum?id=ryQu7f-RZ

Rosenbrock, H.H.: An automatic method for finding the greatest or least value of a function. Comput. J. 3(3), 175–184 (1960)

Royer, C.W., Wright, S.J.: Complexity analysis of second-order line-search algorithms for smooth nonconvex optimization. SIAM J. Optim. 28(2), 1448–1477 (2018). https://doi.org/10.1137/17M1134329

Royer, C.W., O’Neill, M., Wright, S.J.: A Newton-CG algorithm with complexity guarantees for smooth unconstrained optimization. Math. Program. 180(1), 451–488 (2020). https://doi.org/10.1007/s10107-019-01362-7

Sutskever, I., Martens, J., Dahl, G., Hinton, G.: On the importance of initialization and momentum in deep learning. In: S. Dasgupta and D. McAllester (eds.) Proceedings of the 30th International Conference on Machine Learning, volume 28 of Proceedings of Machine Learning Research, pp. 1139–1147, Atlanta, Georgia, USA, 17–19 Jun 2013. PMLR. URL https://proceedings.mlr.press/v28/sutskever13.html

Tu, S., Boczar, R., Simchowitz, M., Soltanolkotabi, M., Recht, B.: Low-rank solutions of linear matrix equations via Procrustes flow. In: M. F. Balcan and K. Q. Weinberger (eds.) Proceedings of The 33rd International Conference on Machine Learning, volume 48 of Proceedings of Machine Learning Research, pp. 964–973, New York, New York, USA, (20–22 Jun 2016). PMLR. URL https://proceedings.mlr.press/v48/tu16.html

Virtanen, P., Gommers, R., Oliphant, T.E., Haberland, M., Reddy, T., Cournapeau, D., Burovski, E., Peterson, P., Weckesser, W., Bright, J., van der Walt, S.J., Brett, M., Wilson, J., Millman, K.J., Mayorov, N., Nelson, A.R.J., Jones, E., Kern, R., Larson, E., Carey, C.J., Polat, İ, Feng, Y., Moore, E.W., VanderPlas, J., Laxalde, D., Perktold, J., Cimrman, R., Henriksen, I., Quintero, E.A., Harris, C.R., Archibald, A.M., Ribeiro, A.H., Pedregosa, F., van Mulbregt, P.: SciPy 1.0: fundamental algorithms for scientific computing in Python. Nat. Methods 17, 261–272 (2020)

Xu, Y., Jin, R., Yang, T.: NEON+: Accelerated gradient methods for extracting negative curvature for non-convex optimization. arXiv preprint, (2017). arxiv:1712.01033

Acknowledgements

This work was partially supported by JSPS KAKENHI (19H04069) and JST ERATO (JPMJER1903).

Funding

Open Access funding provided by The University of Tokyo.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Omitted proofs

1.1 Proof of Lemmas 2 and 3

Proof of Lemma 2

Since f is twice differentiable, we have

and its weighted average gives

Therefore, we obtain the first inequality as follows:

Next, we will prove the second inequality. Hölder’s inequality gives

since \(\sum _{i=1}^n \lambda _i = 1\). Furthermore, we have \(\sum _{i=1}^n \lambda _i \Vert z_i - {\bar{z}}\Vert ^2 = \sum _{1 \le i < j \le n} \lambda _i \lambda _j \Vert z_i - z_j\Vert ^2\) because

which completes the proof. \(\square \)
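The two elementary facts used in the second half of this proof can be sanity-checked numerically. Assuming, as the surrounding text indicates, that \({\bar{z}} = \sum _{i=1}^n \lambda _i z_i\) with \(\lambda _i \ge 0\) and \(\sum _{i=1}^n \lambda _i = 1\), and that the Hölder step bounds \(\sum _{i=1}^n \lambda _i \Vert z_i - {\bar{z}}\Vert ^{1+\nu }\) by \((\sum _{i=1}^n \lambda _i \Vert z_i - {\bar{z}}\Vert ^2)^{\frac{1+\nu }{2}}\), the following NumPy sketch evaluates both sides of that bound and both sides of the pairwise identity on random data. It is a check on examples, not a proof, and the function name is hypothetical.

```python
import numpy as np


def lemma2_check(Z, lam, nu):
    """Z: (n, d) array of points z_i; lam: nonnegative weights summing to 1."""
    zbar = lam @ Z                                   # weighted average of the z_i
    dev = np.linalg.norm(Z - zbar, axis=1)           # ||z_i - zbar||
    holder_lhs = lam @ dev ** (1.0 + nu)             # sum_i lam_i ||z_i - zbar||^(1+nu)
    holder_rhs = (lam @ dev ** 2) ** ((1.0 + nu) / 2.0)
    n = len(lam)
    pairwise = sum(lam[i] * lam[j] * np.sum((Z[i] - Z[j]) ** 2)
                   for i in range(n) for j in range(i + 1, n))
    return holder_lhs, holder_rhs, lam @ dev ** 2, pairwise


rng = np.random.default_rng(0)
Z = rng.standard_normal((6, 4))
lam = rng.dirichlet(np.ones(6))  # random weights on the probability simplex
for nu in (0.0, 0.5, 1.0):
    print(nu, lemma2_check(Z, lam, nu))
```

For \(\nu = 1\) the Hölder bound holds with equality, and for every \(\nu \) the third and fourth returned values agree up to rounding.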

Proof of Lemma 3

We obtain the desired result as follows:

For the last inequality, we used Lemma 2 with \(n = 2\), \(z_1 = x\), \(z_2 = y\), \(\lambda _1 = t\), and \(\lambda _2 = 1-t\), obtaining

\(\square \)
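For reference, with \(n = 2\), \(z_1 = x\), \(z_2 = y\), \(\lambda _1 = t\), and \(\lambda _2 = 1-t\), the weighted average and the two sides of the identity in the proof of Lemma 2 reduce as follows (our own elementary computation, added for convenience):

$$\begin{aligned} {\bar{z}} = t x + (1-t) y,\qquad x - {\bar{z}} = (1-t)(x - y),\qquad y - {\bar{z}} = -t(x - y), \\ t \Vert x - {\bar{z}}\Vert ^2 + (1-t) \Vert y - {\bar{z}}\Vert ^2 = t(1-t)^2 \Vert x - y\Vert ^2 + (1-t) t^2 \Vert x - y\Vert ^2 = t(1-t) \Vert x - y\Vert ^2 = \lambda _1 \lambda _2 \Vert z_1 - z_2\Vert ^2. \end{aligned}$$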

1.2 Proof of (7)

Inequality (7) adapts [35, Eq. (5.12)], which was originally derived for an accelerated gradient method under Lipschitz continuous Hessians, to our heavy-ball method under Hölder continuous Hessians. The following proof of (7) is based on that of [35, Eq. (5.12)] but is simpler, thanks to our choice of \(\theta _k = 1\).

Proof

Using the triangle inequality and Lemma 2 with \(n = k\), \(z_i = x_i\), and \(\lambda _i = \frac{1}{k}\) yields

and we will evaluate each term. First, it follows from the update rule (3) that

Therefore, the first term on the right-hand side of (39) reduces to \(\frac{\ell }{k} \Vert v_k\Vert \). Next, we bound the second term. Using the triangle inequality and the Cauchy–Schwarz inequality yields

and interchanging the summations leads to

We obtain the desired result by evaluating the right-hand side of (39). \(\square \)

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Marumo, N., Takeda, A. Universal heavy-ball method for nonconvex optimization under Hölder continuous Hessians. Math. Program. (2024). https://doi.org/10.1007/s10107-024-02100-4

DOI: https://doi.org/10.1007/s10107-024-02100-4