Abstract

We propose a new variant of Chubanov’s method for solving the feasibility problem over the symmetric cone by extending Roos’s method (Optim Methods Softw 33(1):26–44, 2018) of solving the feasibility problem over the nonnegative orthant. The proposed method considers a feasibility problem associated with a norm induced by the maximum eigenvalue of an element and uses a rescaling focusing on the upper bound for the sum of eigenvalues of any feasible solution to the problem. Its computational bound is (1) equivalent to that of Roos’s original method (2018) and superior to that of Lourenço et al.’s method (Math Program 173(1–2):117–149, 2019) when the symmetric cone is the nonnegative orthant, (2) superior to that of Lourenço et al.’s method (2019) when the symmetric cone is a Cartesian product of second-order cones, (3) equivalent to that of Lourenço et al.’s method (2019) when the symmetric cone is the simple positive semidefinite cone, and (4) superior to that of Pena and Soheili’s method (Math Program 166(1–2):87–111, 2017) for any simple symmetric cones under the feasibility assumption of the problem imposed in Pena and Soheili’s method (2017). We also conduct numerical experiments that compare the performance of our method with existing methods by generating strongly (but ill-conditioned) feasible instances. For any of these instances, the proposed method is rather more efficient than the existing methods in terms of accuracy and execution time.


1 Introduction

Recently, Chubanov [2, 3] proposed a new polynomial-time algorithm for solving the problem (\(\textrm{P}(A)\)),

\[\textrm{P}(A): \quad \hbox {find } x \in {\mathbb {R}}^n \ \hbox { s.t. } \ Ax = \varvec{0}, \ x > \varvec{0},\]

where A is a given integer (or rational) matrix and \(\hbox {rank} (A) = m\) and \(\varvec{0}\) is an n-dimensional vector of 0s. The method explores the feasibility of the following problem \(\textrm{P}_{S_1}(A)\), which is equivalent to \(\textrm{P}(A)\) and given by

where \(\varvec{1}\) is an n-dimensional vector of 1s. Chubanov’s method consists of two ingredients, the “main algorithm” and the “basic procedure.” Note that the alternative problem \(\textrm{D}(A)\) of \(\textrm{P}(A)\) is given by

where \(\textrm{range} A^\top \) is the orthogonal complement of \(\textrm{ker}A\). The structure of the method is as follows: In the outer iteration, the main algorithm calls the basic procedure, which generates a sequence in \({\mathbb {R}}^n\) using projection onto the set \(\hbox {ker} A := \{x \in {\mathbb {R}}^n \mid Ax= \varvec{0}\}\). The basic procedure terminates in a finite number of iterations, returning one of the following: (i) a solution of problem \(\textrm{P}(A)\), (ii) a solution of problem \(\textrm{D}(A)\), or (iii) a cut of \(\textrm{P}(A)\), i.e., an index \(j \in \{ 1,2,\ldots ,n \}\) for which \(0 < x_j \le \frac{1}{2}\) holds for any feasible solution of problem \(\textrm{P}_{S_1}(A)\).

If result (i) or (ii) is returned by the basic procedure, then the feasibility of problem \(\textrm{P}(A)\) can be determined and the main algorithm stops. If result (iii) is returned, then the main algorithm generates a diagonal matrix \(D \in {\mathbb {R}}^{n \times n} \) with a (j, j) element of 2 and all other diagonal elements of 1 and rescales the matrix as \(AD ^ {-1} \). Then, it calls the basic procedure with the rescaled matrix. Chubanov’s method checks the feasibility of \(\textrm{P}(A) \) by repeating the above procedures.
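
The rescaling step can be illustrated with a minimal sketch. The helper names `rescale_columns` and `apply`, the matrix, and the cut index below are hypothetical choices made for illustration only; the sketch merely shows that dividing column j of A by 2 (i.e., forming \(AD^{-1}\)) maps a kernel vector x to the kernel vector Dx of the rescaled matrix.

```python
def rescale_columns(A, j, factor=2.0):
    """Return A D^{-1}, where D is the identity except for D[j][j] = factor."""
    return [[a / factor if k == j else a for k, a in enumerate(row)]
            for row in A]


def apply(A, x):
    """Matrix-vector product A x for a list-of-rows matrix."""
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]


# Hypothetical data: x lies in ker A, is strictly positive, and has x[1] <= 1/2.
A = [[1.0, 2.0, -3.0],
     [0.0, 4.0, -4.0]]
x = [0.5, 0.5, 0.5]

j = 1                                  # index assumed to be returned as a cut
A_scaled = rescale_columns(A, j)       # A D^{-1}
x_scaled = [2.0 * xi if k == j else xi for k, xi in enumerate(x)]  # D x

print(apply(A, x))                # [0.0, 0.0]
print(apply(A_scaled, x_scaled))  # [0.0, 0.0]
```

Since the cut guarantees \(x_j \le \frac{1}{2}\), the rescaled component \(2x_j\) still does not exceed 1.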

For problem \(\textrm{P}(A)\), Roos [15] proposed a tighter cut criterion for the basic procedure than the one used in [3]. Chubanov [3] used the fact that \(x_j \le \frac{ \sqrt{n} \Vert z\Vert _2}{y_j}\) holds for any \(y \in {\mathbb {R}}^n\) satisfying \(\sum _{i=1}^n y_i = 1 , y \ge 0\) and \(y \notin \hbox {range} A^T \), for the point \(z \in {\mathbb {R}}^n \) obtained by projecting this y onto \(\hbox {ker} A\), and for any feasible solution \(x \in {\mathbb {R}}^n\) of \(\textrm{P}_{S_1}(A)\); the basic procedure is terminated if a y is found for which \(\frac{ \sqrt{n} \Vert z\Vert _2}{y_j} \le \frac{1}{2}\) holds for some index j. On the other hand, Roos [15] showed that for \(v = y - z\), \(x_j \le \min ( 1 , \varvec{1}^T \left[ -v /v_j \right] ^+ ) \le \frac{ \sqrt{n} \Vert z\Vert _2}{y_j}\) holds if \(v_j \ne 0\), where \(\left[ -v / v_j \right] ^+\) is the projection of \(-v/v_j \in {\mathbb {R}}^n\) onto the nonnegative orthant and \(\varvec{1}\) is the vector of ones; in this case, the basic procedure is terminated if a y is found for which \(\varvec{1}^T \left[ -v/v_j \right] ^+ \le \frac{1}{2}\) holds.
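
The two bounds can be compared numerically with a small sketch. To keep it free of linear-algebra libraries, it assumes that A consists of a single row a (so the projection onto \(\hbox {ker} A\) has a closed form). The function names and the data are hypothetical.

```python
import math


def project_onto_kernel_single_row(a, y):
    """Project y onto ker A when A consists of the single row a (assumption)."""
    coeff = sum(ai * yi for ai, yi in zip(a, y)) / sum(ai * ai for ai in a)
    return [yi - coeff * ai for ai, yi in zip(a, y)]


def chubanov_bound(y, z, j):
    """sqrt(n) * ||z||_2 / y_j, the bound attributed to [3] in the text."""
    return math.sqrt(len(y)) * math.sqrt(sum(zi * zi for zi in z)) / y[j]


def roos_bound(v, j):
    """min(1, 1^T [-v / v_j]^+), the bound attributed to [15]; needs v[j] != 0."""
    return min(1.0, sum(max(-vi / v[j], 0.0) for vi in v))


a = [3.0, -1.0, -1.0]                 # hypothetical single-row matrix A
y = [1.0 / 3, 1.0 / 3, 1.0 / 3]       # y >= 0 with components summing to 1
z = project_onto_kernel_single_row(a, y)
v = [yi - zi for yi, zi in zip(y, z)]  # v = y - z lies in range A^T

bounds = [(roos_bound(v, j), chubanov_bound(y, z, j)) for j in range(len(y))]
for j, (r, c) in enumerate(bounds):
    print(j, r, c)
```

For this instance the first component gets the bound 2/3 from the criterion of [15], whereas the bound of [3] exceeds 1 and is therefore uninformative.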

Chubanov’s method has also been extended to include the feasibility problem over the second-order cone [9] and the symmetric cone [10, 13]. The feasibility problem over the symmetric cone is of the form,

\[\textrm{P}({\mathcal {A}}): \quad \hbox {find } x \in {\mathbb {E}} \ \hbox { s.t. } \ {\mathcal {A}}(x) = \varvec{0}, \ x \in \hbox {int} {\mathcal {K}},\]

where \({\mathcal {A}}\) is a linear operator, \({\mathcal {K}}\) is a symmetric cone, and \(\hbox {int} {\mathcal {K}}\) is the interior of the set \({\mathcal {K}}\). As proposed in [10, 13], for problem \(\textrm{P}({\mathcal {A}})\), the structure of Chubanov’s method remains the same; i.e., the main algorithm calls the basic procedure, and the basic procedure returns one of the following in a finite number of iterations: (i) a solution of problem \(\textrm{P}({\mathcal {A}})\), (ii) a solution of the alternative problem of problem \(\textrm{P}({\mathcal {A}})\), or (iii) a recommendation of scaling problem \(\textrm{P}({\mathcal {A}})\). If result (i) or (ii) is returned by the basic procedure, then the feasibility of the problem \(\textrm{P}({\mathcal {A}})\) can be determined and the main algorithm stops. If result (iii) is returned, the problem is scaled appropriately and the basic procedure is called again.

It should be noted that the purpose of rescaling differs between [10] and [13]. In [13], the authors devised a rescaling method so that the following value becomes larger:

where \(\hbox {ker} {\mathcal {A}} :=\{ x \mid {\mathcal {A}}(x) = \varvec{0} \}\) and \(\Vert x\Vert _J\) is the norm induced by the inner product \(\langle x, y \rangle = \textrm{trace}(x \circ y)\) defined in Sect. 2.3. They proposed four updating schemes to be employed in the basic procedure and conducted numerical experiments to compare the effect of these schemes when the symmetric cone is the nonnegative orthant [14].

In [10], the authors assumed that the symmetric cone \({\mathcal {K}}\) is given by the Cartesian product of p simple symmetric cones \({\mathcal {K}}_1, \ldots , {\mathcal {K}}_p\), and they investigated the feasibility of the problem (\(\textrm{P}_{S_{1,\infty }}({\mathcal {A}})\)),

where for each \(x = (x_1, \ldots , x_p) \in {\mathcal {K}} ={\mathcal {K}}_1 \times \cdots \times {\mathcal {K}}_p\), \(\Vert x\Vert _{1,\infty }\) is defined by \(\Vert x\Vert _{1,\infty } := \max \{ \Vert x_1\Vert _1 , \ldots ,\Vert x_p\Vert _1 \}\), and \(\Vert x\Vert _1\) is the sum of the absolute values of all eigenvalues of x. Note that if \(p=1\), then problem \(\textrm{P}_{S_{1,\infty }}({\mathcal {A}})\) turns out to be \(\textrm{P}_{S_1}({\mathcal {A}})\), which is equivalent to \(\textrm{P}({\mathcal {A}})\):

The authors focused on the volume of the feasible region of \(\textrm{P}_{S_{1,\infty }}({\mathcal {A}})\) and devised a rescaling method so that the volume becomes smaller. Their method stops when it either establishes the feasibility of problem \(\textrm{P}_{S_{1,\infty }}({\mathcal {A}})\) or determines that the minimum eigenvalue of any feasible solution of problem \(\textrm{P}_{S_{1,\infty }}({\mathcal {A}})\) is less than \(\varepsilon \).

The aim of this paper is to devise a new variant of Chubanov’s method for solving \(\textrm{P}({\mathcal {A}})\) by extending Roos’s method [15] to the following feasibility problem (\(\textrm{P}_{S_{\infty }}({\mathcal {A}})\)) over the symmetric cone \({\mathcal {K}}\):

\[\textrm{P}_{S_{\infty }}({\mathcal {A}}): \quad \hbox {find } x \in {\mathbb {E}} \ \hbox { s.t. } \ {\mathcal {A}}(x) = \varvec{0}, \ x \in \hbox {int} {\mathcal {K}}, \ \Vert x\Vert _\infty \le 1,\]

where \(\Vert x\Vert _\infty \) is the maximum absolute eigenvalue of x. Throughout this paper, we will assume that \({\mathcal {K}}\) is the Cartesian product of p simple symmetric cones \({\mathcal {K}}_1, \ldots , {\mathcal {K}}_p\), i.e., \({\mathcal {K}} = {\mathcal {K}}_1 \times \cdots \times {\mathcal {K}}_p\). Here, we should mention an important issue about Lemma 4.2 in [15], which is one of the main results of [15]. The proof of Lemma 4.2 given in the paper [15] is incorrect and a correct proof is provided in the paper [19], while this study derives theoretical results without referring to the lemma. Our method has a feature that the main algorithm works while keeping information about the minimum eigenvalue of any feasible solution of \(\textrm{P}_{S_{\infty }}({\mathcal {A}})\) and, in this sense, it is closely related to Lourenço et al.’s method [10]. Using the norm \(\Vert \cdot \Vert _\infty \) in problem \(\textrm{P}_{S_\infty } ({\mathcal {A}})\) makes it possible to

- calculate the upper bound for the minimum eigenvalue of any feasible solution of \(\textrm{P}_{S_\infty } ({\mathcal {A}})\),
- quantify the feasible region of \(\textrm{P} ({\mathcal {A}})\), and hence,
- determine whether there exists a feasible solution of \(\textrm{P} ({\mathcal {A}})\) whose minimum eigenvalue is greater than \(\varepsilon \) as in [10].

Note that the symmetric cone optimization includes several types of problems (linear, second-order cone, and semi-definite optimization problems) with various settings and the computational bound of an algorithm depends on these settings. As we will describe in Sect. 6, the theoretical computational bound of our method is

- equivalent to that of Roos’s original method [15] and superior to that of Lourenço et al.’s method [10] when the symmetric cone is the nonnegative orthant,
- superior to that of Lourenço et al.’s method when the symmetric cone is a Cartesian product of second-order cones,
- equivalent to that of Lourenço et al.’s method when the symmetric cone is the simple positive semidefinite cone, under the assumption that the costs of computing the spectral decomposition and of the minimum eigenvalue are of the same order for any given symmetric matrix, and
- superior to that of Pena and Soheili’s method [13] for any simple symmetric cones under the feasibility assumption of the problem imposed in [13].

Another aim of this paper is to give comprehensive numerical comparisons of the existing algorithms and our method. As described in Sect. 7, we generate strongly feasible ill-conditioned instances, i.e., \(\hbox {ker} {\mathcal {A}} \cap \hbox {int} {\mathcal {K}} \ne \emptyset \) and \(x \in \hbox {ker} {\mathcal {A}} \cap \hbox {int} {\mathcal {K}}\) has positive but small eigenvalues, for the simple positive semidefinite cone \({\mathcal {K}}\), and conduct numerical experiments.

The paper is organized as follows: Sect. 2 contains a brief description of Euclidean Jordan algebras and their basic properties. Section 3 gives a collection of propositions which are necessary to extend Roos’s method to problem \(\textrm{P}_{S_{\infty }}({\mathcal {A}})\) over the symmetric cone. In Sects. 4 and 5, we explain the basic procedure and the main algorithm of our variant of Chubanov’s method. Section 6 compares the theoretical computational bounds of Lourenço et al.’s method [10], Pena and Soheili’s method [13] and our method. In Sect. 7, we conduct numerical experiments comparing our variant with the existing methods. The conclusions are summarized in Sect. 8.

2 Euclidean Jordan algebras and their basic properties

In this section, we briefly introduce Euclidean Jordan algebras and symmetric cones. For more details, see [5]. In particular, the relation between symmetric cones and Euclidean Jordan algebras is given in Chapter III (Koecher and Vinberg theorem) of [5].

2.1 Euclidean Jordan algebras

Let \({\mathbb {E}}\) be a real vector space equipped with an inner product \(\langle \cdot , \cdot \rangle \) and a bilinear operation \(\circ \) : \({\mathbb {E}} \times {\mathbb {E}} \rightarrow {\mathbb {E}}\), and e be the identity element, i.e., \(x \circ e = e \circ x = x\) holds for any \( x \in {\mathbb {E}}\). \(({\mathbb {E}}, \circ )\) is called a Euclidean Jordan algebra if it satisfies

\[x \circ y = y \circ x, \qquad x \circ (x^2 \circ y) = x^2 \circ (x \circ y), \qquad \langle x \circ y , z \rangle = \langle y , x \circ z \rangle \]

for all \(x,y,z \in {\mathbb {E}}\) and \(x^2 := x \circ x\). We denote \(y \in {\mathbb {E}}\) as \(x^{-1}\) if y satisfies \(x \circ y = e\). \(c \in {\mathbb {E}}\) is called an idempotent if it satisfies \(c \circ c = c\), and an idempotent c is called primitive if it cannot be written as a sum of two or more nonzero idempotents. A set of primitive idempotents \(c_1, c_2, \ldots , c_k\) is called a Jordan frame if \(c_1, \ldots , c_k\) satisfy

\[c_i \circ c_j = 0 \ (i \ne j), \qquad \sum _{i=1}^k c_i = e.\]

For \(x \in {\mathbb {E}}\), the degree of x is the smallest integer d such that the set \(\{e,x,x^2,\ldots ,x^d\}\) is linearly dependent. The rank of \({\mathbb {E}}\) is the maximum of the degree of x over all \(x \in {\mathbb {E}}\), which we denote by r. The following properties are known.

Proposition 2.1

(Spectral theorem (cf. Theorem III.1.2 of [5])) Let \(({\mathbb {E}}, \circ )\) be a Euclidean Jordan algebra having rank r. For any \(x \in {\mathbb {E}} \), there exist real numbers \(\lambda _1 , \dots , \lambda _r\) and a Jordan frame \(c_1 , \dots , c_r \) for which the following holds:

\[x = \sum _{i=1}^r \lambda _i c_i.\]

The numbers \(\lambda _1 , \dots , \lambda _r\) are uniquely determined eigenvalues of x (with their multiplicities). Furthermore, \(\textrm{trace}(x) := \sum _{i=1}^r \lambda _i\), \(\det (x) := \prod _{i=1}^r \lambda _i\).

2.2 Symmetric cone

A proper cone is symmetric if it is self-dual and homogeneous. It is known that the set of squares \({\mathcal {K}} = \{ x^2 : x \in {\mathbb {E}} \}\) is the symmetric cone of \({\mathbb {E}}\) (cf. Theorems III.2.1 and III.3.1 of [5]). The following properties can be derived from the results in [5], as in Corollary 2.3 of [21]:

Proposition 2.2

Let \(x \in {\mathbb {E}}\) and let \(\sum _{j=1}^r \lambda _j c_j\) be a decomposition of x given by Proposition 2.1. Then

(i) \(x \in {\mathcal {K}}\) if and only if \(\lambda _j \ge 0 \ (j=1,2,\ldots ,r)\),

(ii) \(x \in \textrm{int} {\mathcal {K}}\) if and only if \(\lambda _j > 0 \ (j=1,2,\ldots ,r)\).

From Propositions 2.1 and 2.2 for any \(x \in {\mathbb {E}}\), its projection \(P_{\mathcal {K}} (x)\) onto \({\mathcal {K}}\) can be written as an operation to round all negative eigenvalues of x to 0, i.e., \(P_{\mathcal {K}} (x) = \sum _{i=1}^r [\lambda _i]^+ c_i\), where \([\cdot ]^+\) denotes the projection onto the nonnegative orthant. Using \(P_{\mathcal {K}}\), we can decompose any \(x \in {\mathbb {E}}\) as follows.

Lemma 2.3

Let \(x \in {\mathbb {E}}\), and \({\mathcal {K}}\) be the symmetric cone corresponding to \({\mathbb {E}}\). Then, x can be decomposed into \(x = P_{\mathcal {K}} (x) - P_{\mathcal {K}} (-x)\).

Proof

From Proposition 2.1, let x be given as \(x = \sum _{i=1}^r \lambda _i c_i\). Let \(I_1\) be the set of indices such that \(\lambda _i \ge 0\) and \(I_2\) be the set of indices such that \(\lambda _i < 0\). Then, we have \(P_{\mathcal {K}} (x) = \sum _{i \in I_1} \lambda _i c_i\) and \(P_{\mathcal {K}} (-x) = \sum _{i \in I_2} - \lambda _i c_i\), which implies that \(x = \sum _{i \in I_1} \lambda _i c_i + \sum _{i \in I_2} \lambda _i c_i = P_{\mathcal {K}} (x) - P_{\mathcal {K}} (-x)\). \(\square \)

A Euclidean Jordan algebra \(({\mathbb {E}} , \circ )\) is called simple if it cannot be written as any Cartesian product of non-zero Euclidean Jordan algebras. If the Euclidean Jordan algebra \(({\mathbb {E}} , \circ )\) associated with a symmetric cone \({\mathcal {K}}\) is simple, then we say that \({\mathcal {K}}\) is simple. In this paper, we will consider that \({\mathcal {K}}\) is given by a Cartesian product of p simple symmetric cones \({\mathcal {K}}_\ell \), \({\mathcal {K}} := {\mathcal {K}}_1 \times \cdots \times {\mathcal {K}}_p\), whose rank and identity element are \(r_\ell \) and \(e_\ell \) \((\ell =1, \ldots , p)\). The rank r and the identity element of \({\mathcal {K}}\) are given by

\[r = \sum _{\ell =1}^p r_\ell , \qquad e = (e_1, \ldots , e_p).\]

In what follows, \(x_\ell \) stands for the \(\ell \)-th block element of \(x \in {\mathcal {K}}\), i.e., \(x = (x_1, \dots , x_p) \in {\mathcal {K}}_1 \times \cdots \times {\mathcal {K}}_p\). For each \(\ell =1, \ldots , p\), we define \(\lambda _{\min }(x_\ell ) := \min \{ \lambda _1, \ldots , \lambda _{r_\ell } \}\) where \(\lambda _1, \ldots , \lambda _{r_\ell }\) are eigenvalues of \(x_\ell \). The minimum eigenvalue \(\lambda _{\min }(x)\) of \(x \in {\mathcal {K}}\) is given by \(\lambda _{\min }(x) = \hbox {min} \{ \lambda _{\min }(x_1), \ldots , \lambda _{\min }(x_p) \}\).

Next, we consider the quadratic representation \(Q_v(x)\) defined by \(Q_v(x) := 2 v \circ ( v \circ x ) - v^2 \circ x\). For the cone \({\mathcal {K}} = {\mathcal {K}}_1 \times \cdots \times {\mathcal {K}}_p\), the quadratic representation \(Q_v(x)\) of \(x \in {\mathcal {K}}\) is denoted by \(Q_v(x) = \left( Q_{v_1} (x_1) , \dots , Q_{v_p}(x_p) \right) \). Letting \(I_\ell \) be the identity operator of the Euclidean Jordan algebra \(({\mathbb {E}}_\ell , \circ _\ell )\) associated with the cone \({\mathcal {K}}_\ell \), we have \(Q_{e_\ell } = I_\ell \) for \(\ell =1, \ldots , p\). The following properties can also be retrieved from the results in [5] as in Proposition 3 of [10]:

Proposition 2.4

For any \(v \in \textrm{int}{\mathcal {K}}\), \( Q_v ({\mathcal {K}}) = {\mathcal {K}}\).

It is also known that the following relations hold for the quadratic representation \(Q_v\) and \(\det (\cdot )\) [5].

Proposition 2.5

(cf. Proposition II.3.3 and III.4.2-(i), [5]) For any \(v,x \in {\mathbb {E}}\),

1. \(\det Q_v(x) = \det (v)^2 \det (x)\),

2. \(Q_{Q_v(x)} = Q_v Q_x Q_v\) (i.e., if \(x =e\) then \(Q_{v^2} = Q_v Q_v\)).

More detailed descriptions, including concrete examples of symmetric cone optimization, can be found in, e.g., [1, 5, 6, 16]. Here, we will use concrete examples of symmetric cones to explain the bilinear operation, the identity element, the inner product, the eigenvalues, the primitive idempotents, the projection onto the symmetric cone and the quadratic representation on the cone.

Example 2.6

(\({\mathcal {K}}\) is the semidefinite cone \({\mathbb {S}}^n_+\)). Let \({\mathbb {S}}^n\) be the set of \(n \times n\) symmetric matrices. The semidefinite cone \({\mathbb {S}}^n_+\) is given by \({\mathbb {S}}^n_+ = \{ X \in {\mathbb {S}}^n : X \succeq O \}\). For any symmetric matrices \(X , Y \in {\mathbb {S}}^n\), define the bilinear operation \(\circ \) and inner product as \(X \circ Y = \frac{ XY + YX }{2}\) and \(\langle X , Y \rangle = \hbox {tr}(XY) = \sum _{i=1}^n \sum _{j=1}^n X_{ij} Y_{ij}\), respectively. For any \(X \in {\mathbb {S}}^n\), perform the eigenvalue decomposition and let \(u_1 , \dots , u_n\) be the corresponding normalized eigenvectors for the eigenvalues \(\lambda _1 , \dots , \lambda _n\): \(X = \sum _{i=1}^n \lambda _i u_i u_i^T\). The eigenvalues of X in the Jordan algebra are \(\lambda _1 , \dots , \lambda _n\) and the primitive idempotents are \(c_1 = u_1 u_1^T , \dots , c_n = u_n u_n^T\), which implies that the rank of the semidefinite cone \({\mathbb {S}}^n_+\) is \(r=n\). The identity element is the identity matrix I and the projection onto \({\mathbb {S}}^n_+\) is given by \(P_{{\mathbb {S}}^n_+}(X) = \sum _{i=1}^n [ \lambda _i ]^+ u_i u_i^T\). The quadratic representation of \(V \in {\mathbb {S}}^n\) is given by \(Q_V(X) = V X V\).

Example 2.7

(\({\mathcal {K}}\) is the second-order cone \({\mathbb {L}}_n\)). The second order cone is given by \({\mathbb {L}}_n = \{ ( x_1, \varvec{{\bar{x}}}^\top )^\top \in {\mathbb {R}}^n : x_1 \ge \Vert \varvec{{\bar{x}}} \Vert _2\}\). For any \(x , y \in {\mathbb {R}}^n\), define the bilinear operation \(\circ \) and the inner product as \(x \circ y = ( x^\top y, ( x_1\varvec{{\bar{y}}} + y_1\varvec{{\bar{x}}})^\top )^\top \) and \(\langle x , y \rangle = 2 \sum _{i=1}^n x_i y_i\), respectively. For any \(x \in {\mathbb {R}}^n\), by the decomposition

we obtain the eigenvalues and the primitive idempotents as follows:

where \(z \in {\mathbb {R}}^{n-1}\) is an arbitrary vector satisfying \(\Vert z\Vert _2=1\). The above implies that the rank of the second-order cone \({\mathbb {L}}_n\) is \(r=2\). The identity element is given by \(e = (1, \varvec{0}^\top )^\top \in {\mathbb {R}}^n\). The projection \(P_{{\mathbb {L}}_n}(x)\) onto \({\mathbb {L}}_n\) is given by

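Letting \(I_{n-1}\) be the identity matrix of order \(n-1\), the quadratic representation \(Q_v(\cdot )\) of \(v \in {\mathbb {R}}^n\) is as follows:

\[Q_v = \begin{pmatrix} \Vert v \Vert _2^2 & 2 v_1 \varvec{{\bar{v}}}^\top \\ 2 v_1 \varvec{{\bar{v}}} & \det (v) I_{n-1} + 2 \varvec{{\bar{v}}} \varvec{{\bar{v}}}^\top \end{pmatrix}, \qquad \det (v) = v_1^2 - \Vert \varvec{{\bar{v}}} \Vert _2^2.\]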
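
The objects of Example 2.7 can be checked numerically with the following sketch. It assumes the standard closed forms for the second-order cone, namely eigenvalues \(x_1 \pm \Vert \varvec{{\bar{x}}} \Vert _2\) and primitive idempotents \(\frac{1}{2}(1, \pm \varvec{{\bar{x}}}/\Vert \varvec{{\bar{x}}}\Vert _2)\), and evaluates \(Q_v\) directly from its definition \(Q_v(x) = 2 v \circ ( v \circ x ) - v^2 \circ x\). All function names and test vectors are hypothetical.

```python
import math


def jordan_product(x, y):
    """x o y = (x^T y, x_1 * ybar + y_1 * xbar) on R^n, n >= 2."""
    head = sum(a * b for a, b in zip(x, y))
    tail = [x[0] * b + y[0] * a for a, b in zip(x[1:], y[1:])]
    return [head] + tail


def spectral(x):
    """Return the eigenvalues (l1, l2) and primitive idempotents (c1, c2) of x."""
    norm = math.sqrt(sum(t * t for t in x[1:]))
    if norm > 0:
        u = [t / norm for t in x[1:]]
    else:
        u = [1.0] + [0.0] * (len(x) - 2)  # any unit vector works if xbar = 0
    c1 = [0.5] + [0.5 * t for t in u]
    c2 = [0.5] + [-0.5 * t for t in u]
    return (x[0] + norm, x[0] - norm), (c1, c2)


def project(x):
    """Projection onto the cone: negative eigenvalues are rounded to 0."""
    (l1, l2), (c1, c2) = spectral(x)
    return [max(l1, 0.0) * a + max(l2, 0.0) * b for a, b in zip(c1, c2)]


def quad_rep(v, x):
    """Q_v(x) = 2 v o (v o x) - v^2 o x, evaluated from the definition."""
    a = jordan_product(v, jordan_product(v, x))
    b = jordan_product(jordan_product(v, v), x)
    return [2.0 * s - t for s, t in zip(a, b)]


def det(x):
    l1, l2 = spectral(x)[0]
    return l1 * l2


x = [1.0, 2.0, -2.0]   # not in the cone: one negative eigenvalue
v = [2.0, 1.0, 0.0]    # interior point of the cone

# Lemma 2.3: x = P_K(x) - P_K(-x)
neg_x = [-t for t in x]
reconstructed = [p - q for p, q in zip(project(x), project(neg_x))]

# Proposition 2.5 (1): det Q_v(x) = det(v)^2 det(x)
lhs = det(quad_rep(v, x))
rhs = det(v) ** 2 * det(x)
print(reconstructed, lhs, rhs)
```

Evaluating \(Q_v\) from the definition rather than from a matrix formula keeps the check independent of any particular closed form.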

2.3 Notation

This subsection summarizes the notations used in this paper. For any \(x,y \in {\mathbb {E}}\), we define the inner product \(\langle \cdot ,\cdot \rangle \) and the norm \(\Vert \cdot \Vert _{J}\) as \(\langle x , y \rangle := \textrm{trace}(x \circ y)\) and \(\Vert x \Vert _J := \sqrt{ \langle x , x \rangle }\), respectively. For any \(x \in {\mathbb {E}}\) having decomposition \(x = \sum _{i=1}^r \lambda _i c_i\) as in Proposition 2.1, we also define \(\Vert x \Vert _1 := |\lambda _1| + \cdots + |\lambda _r|\), \(\Vert x\Vert _\infty := \max \{|\lambda _1| , \dots , |\lambda _r| \}\). For \(x \in {\mathcal {K}}\), we obtain the following equivalent representations: \(\Vert x\Vert _1 = \langle e , x \rangle \), \(\Vert x\Vert _\infty = \lambda _{\max } (x)\). The following is a list of other definitions and frequently used symbols in the paper.

- d: the dimension of the Euclidean space \({\mathbb {E}}\) corresponding to \({\mathcal {K}}\),
- \(F_{\textrm{P}_{S_{\infty }}({\mathcal {A}})}\): the feasible region of \(\textrm{P}_{S_{\infty }}({\mathcal {A}})\),
- \(P_{{\mathcal {A}}} (\cdot ) \): the projection map onto \(\hbox {ker} \hspace{0.3mm} {\mathcal {A}}\),
- \({\mathcal {P}}_{\mathcal {K}} (\cdot ) \): the projection map onto \({\mathcal {K}}\),
- \(\lambda (x) \in {\mathbb {R}}^r\): an r-dimensional vector composed of the eigenvalues of \( x \in {\mathcal {K}}\),
- \(\lambda (x_\ell ) \in {\mathbb {R}}^{r_\ell }\): an \(r_\ell \)-dimensional vector composed of the eigenvalues of \(x_\ell \in {\mathcal {K}}_\ell \) (\(\ell =1,\ldots ,p\)),
- \(c(x_\ell )_i \in {\mathcal {K}}_\ell \): the i-th primitive idempotent of \(x_\ell \in {\mathbb {E}}_\ell \); when \({\mathcal {K}}\) is simple, it is abbreviated as \(c_i\),
- \(\left[ \cdot \right] ^+\): the projection map onto the nonnegative orthant, and
- \({\mathcal {A}}^*(\cdot )\): the adjoint operator of the linear operator \({\mathcal {A}}(\cdot )\), i.e., \(\langle {\mathcal {A}}(x) , y \rangle = \left\langle x , {\mathcal {A}}^* (y) \right\rangle \) for all \(x \in {\mathcal {K}}\) and \(y \in {\mathbb {R}}^m\).

3 Extension of Roos’s method to the symmetric cone problem

3.1 Outline of the extended method

We focus on the feasibility of the following problem \(\textrm{P}_{S_{\infty }}({\mathcal {A}})\), which is equivalent to \(\textrm{P}({\mathcal {A}})\):

\[\textrm{P}_{S_{\infty }}({\mathcal {A}}): \quad \hbox {find } x \in {\mathbb {E}} \ \hbox { s.t. } \ {\mathcal {A}}(x) = \varvec{0}, \ x \in \hbox {int} {\mathcal {K}}, \ \Vert x\Vert _\infty \le 1.\]

The alternative problem \(\textrm{D}({\mathcal {A}})\) of \(\textrm{P}({\mathcal {A}})\) is

\[\textrm{D}({\mathcal {A}}): \quad \hbox {find } y \in {\mathbb {E}} \ \hbox { s.t. } \ y \in \hbox {range} {\mathcal {A}}^*, \ y \in {\mathcal {K}}, \ y \ne \varvec{0},\]

where \(\hbox {range} {\mathcal {A}}^*\) is the orthogonal complement of \(\hbox {ker} {\mathcal {A}}\). As we mentioned in Sect. 2.2, we assume that \({\mathcal {K}}\) is given by a Cartesian product of p simple symmetric cones \({\mathcal {K}}_\ell \ (\ell =1, \ldots , p)\), i.e., \({\mathcal {K}} = {\mathcal {K}}_1 \times \cdots \times {\mathcal {K}}_p \). In our method, the upper bound for the sum of eigenvalues of a feasible solution of \(\textrm{P}_{S_{\infty }}({\mathcal {A}})\) plays a key role, whereas the existing work focuses on the volume of the feasible region [10] or the condition number of a feasible solution [13]. Before describing the theoretical results, let us outline the proposed algorithm when \({\mathcal {K}}\) is simple. The algorithm repeats two steps: (i) find a cut for \(\textrm{P}_{S_{\infty }}({\mathcal {A}})\), and (ii) scale the problem to an isomorphic problem equivalent to \(\textrm{P}_{S_{\infty }}({\mathcal {A}})\) such that the region narrowed by the cut is efficiently explored. Given a feasible solution x of \(\textrm{P}_{S_{\infty }}({\mathcal {A}})\) and a constant \(0<\xi <1\), our method first searches for a Jordan frame \(\{ c_1 , \dots , c_r \}\) such that the following is satisfied:

where \(H \subseteq \{1, \dots ,r\}\) and \(|H|>0\). In this case, instead of \(\textrm{P}_{S_{\infty }}({\mathcal {A}})\), we may consider \(\textrm{P}^{\textrm{Cut}}_{S_{\infty }}({\mathcal {A}})\) as follows:

Here, we define the set \(SR^{\textrm{Cut}} = \{x \in {\mathbb {E}} : x \in \hbox {int} {\mathcal {K}}, \ \Vert x\Vert _\infty \le 1, \ \langle c_i, x \rangle \le \xi \ (i \in H), \ \langle c_i, x \rangle \le 1 \ (i \notin H) \}\) as the search range for the solutions of the problem \(\textrm{P}^{\textrm{Cut}}_{S_{\infty }}({\mathcal {A}})\). The proposed method then creates a problem equivalent and isomorphic to \(\textrm{P}_{S_{\infty }}({\mathcal {A}})\) such that \(SR^{\textrm{Cut}}\), the region narrowed by the cut, can be searched efficiently. Such a problem is obtained as follows:

where g is given by \(g = \sqrt{\xi } \sum _{i \in H} c_i + \sum _{i \notin H} c_i \in \textrm{int} {\mathcal {K}}\) for which \(e = Q_{g^{-1}} (u)\) holds for \(u = \sum _{i \in H} \xi c_i + \sum _{i \notin H} c_i\).

In the succeeding sections, we explain how the cut for \(\textrm{P}_{S_{\infty }}({\mathcal {A}})\) is obtained from some \(v \in \hbox {range} {\mathcal {A}}^*\); we also explain the scaling method for the problem in detail. To simplify our discussion, we will assume that \({\mathcal {K}}\) is simple, i.e., \(p=1\), in Sect. 3.2. Then, in Sect. 3.3, we will generalize our discussion to the case of \(p \ge 2\).

3.2 Simple symmetric cone case

Let us consider the case where \({\mathcal {K}}\) is simple. It is obvious that, for any feasible solution x of \(\textrm{P}_{S_{\infty }}({\mathcal {A}})\), the constraint \(\Vert x\Vert _\infty \le 1\) implies that \(\langle e , x\rangle \le r\), since \(x \in {\mathcal {K}}\). In Proposition 3.3, we show that this bound may be improved as \(\langle e , x\rangle <r\) by using a point \(v \in \hbox {range} \hspace{0.3mm} {\mathcal {A}}^*\setminus \{0\}\). To prove Proposition 3.3, we need the following Lemma 3.1 and Proposition 3.2.

Lemma 3.1

Let \(({\mathbb {E}}, \circ )\) be a Euclidean Jordan algebra with the associated symmetric cone \({\mathcal {K}}\). For any \(y \in {\mathbb {E}}\), the following equation holds:

Proof

Using the decomposition \(y = \sum _{i=1}^r \lambda _i c_i\) obtained by Proposition 2.1, we see that

Note that \(\varvec{0} \le \lambda (x) \le \varvec{1}\) implies \(x \in {\mathcal {K}}\) and \(e-x \in {\mathcal {K}}\). Since each \(c_i \in {\mathcal {K}}\) is a primitive idempotent, we find that \(\langle c_i,x \rangle \ge 0\) and \(\langle c_i, e-x \rangle \ge 0\), which implies that \(0 \le \langle c_i,x \rangle \le 1\). Thus, letting \(I_1\) be the set of indices for which \(\lambda _i \le 0\) and \(I_2\) be the set of indices for which \(\lambda _i > 0\), if there exists an x satisfying

then such an x is an optimal solution of (2). In fact, if we define \(x^* = \sum _{i \in I_2} c_i\), then by the definition of the Jordan frame, \(x^*\) satisfies (3) and \(\varvec{0} \le \lambda (x^*) \le \varvec{1}\), and hence it is an optimal solution of (2). In this case, the optimal value of (2) turns out to be

\(\square \)
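
For the nonnegative orthant, where \(\langle x , y \rangle = x^\top y\), \(e = \varvec{1}\) and the eigenvalues of x are its components, the statement can be checked by brute force. The sketch below assumes that Lemma 3.1 asserts \(\max \{ \langle y , x \rangle : \varvec{0} \le \lambda (x) \le \varvec{1} \} = \langle {\mathcal {P}}_{\mathcal {K}}(y) , e \rangle \), which is the form used later in the proof of Proposition 3.3; the function names and the vector y are hypothetical.

```python
from itertools import product


def box_max(y):
    """Brute-force maximum of <y, x> over the vertices of the box [0, 1]^n.

    A linear function attains its maximum over a box at a vertex, so
    enumerating the vertices is sufficient.
    """
    return max(sum(yi * xi for yi, xi in zip(y, x))
               for x in product((0.0, 1.0), repeat=len(y)))


def projected_trace(y):
    """<P_K(y), e> for the nonnegative orthant: the sum of positive parts."""
    return sum(max(yi, 0.0) for yi in y)


y = [0.5, -1.0, 2.0, 0.0]
print(box_max(y), projected_trace(y))  # 2.5 2.5
```

The maximizing vertex has ones exactly at the indices with positive \(y_i\), which mirrors the choice \(x^* = \sum _{i \in I_2} c_i\) in the proof.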

Proposition 3.2

Let \(({\mathbb {E}}, \circ )\) be a Euclidean Jordan Algebra with the corresponding symmetric cone \({\mathcal {K}}\). For a given \(c \in {\mathbb {E}}\), consider the problem

The dual problem of the above is

Proof

Define the Lagrangian function L(x, w) as \(L(x,w) := \langle c , x \rangle - w^\top {\mathcal {A}}(x)\) where \(w \in {\mathbb {R}}^m\) is the Lagrange multiplier. Suppose that \(x^*\) is an optimal solution of the primal problem. Then, for any \(w \in {\mathbb {R}}^m\), we have \(\langle c,x^* \rangle = L(x^*,w) \le \max _{\varvec{0} \le \lambda (x) \le \varvec{1}} L(x,w)\), and hence,

\(\square \)

Proposition 3.3

Suppose that \(v \in \hbox {range} \mathcal {A}^*\) is given by \(v = \sum _{i=1}^r \lambda _i c_i\) as in Proposition 2.1. For each \(i \in \{ 1, \dots , r \}\) and \(\alpha \in {\mathbb {R}}\), define \(q_i(\alpha ) := \left[ 1-\alpha \lambda _i \right] ^+ + \sum _{j \ne i }^r \left[ - \alpha \lambda _j \right] ^+\). Then, the following relations hold for any \(x \in F_{\textrm{P}_{S_{\infty }}({\mathcal {A}})}\) and \(i \in \{ 1, \dots , r \}\):

Proof

For each \(i \in \{ 1, 2, \dots , r \}\), we have

and hence,

Note that, since \(q_i(\alpha )\) is a piece-wise linear convex function, if \(\lambda _i = 0\), it attains the minimum at \(\alpha = 0\) with \(q_i(0) = 1\), and if \(\lambda _i \ne 0\), it attains the minimum at \(\alpha = 0\) with \(q_i(0) = 1\) or at \(\alpha = \frac{1}{\lambda _i}\) with

Thus, we obtain equivalence in (4). Since \(\alpha v \in \hbox {range} \hspace{0.3mm} {\mathcal {A}}^*\) for all \(\alpha \in {\mathbb {R}}\), for each \(i \in \{ 1, \dots , r \}\), Proposition 3.2 and (5) ensure that \(\langle c_i , x \rangle \le \left\langle {\mathcal {P}}_{\mathcal {K}} \left( c_i - \alpha v \right) , e \right\rangle = q_i(\alpha )\) for all \(\alpha \in {\mathbb {R}}\), which implies the inequality in (4). \(\square \)
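
The role of the two candidate points \(\alpha = 0\) and \(\alpha = \frac{1}{\lambda _i}\) can be illustrated numerically. The sketch below works only with the eigenvalues \(\lambda _1, \dots , \lambda _r\) of v; the function names, the eigenvalue vector, and the grid are hypothetical choices for illustration.

```python
def q(i, alpha, lam):
    """q_i(alpha) = [1 - alpha*lam_i]^+ + sum_{j != i} [-alpha*lam_j]^+."""
    total = max(1.0 - alpha * lam[i], 0.0)
    total += sum(max(-alpha * lj, 0.0) for j, lj in enumerate(lam) if j != i)
    return total


def best_bound(i, lam):
    """Minimum of q_i over the two breakpoints alpha = 0 and alpha = 1/lam_i."""
    candidates = [q(i, 0.0, lam)]
    if lam[i] != 0:
        candidates.append(q(i, 1.0 / lam[i], lam))
    return min(candidates)


lam = [1.0 / 11, -1.0 / 33, -1.0 / 33]          # hypothetical eigenvalues of v
grid = [k / 10.0 for k in range(-500, 501)]     # alpha in [-50, 50]

for i in range(len(lam)):
    grid_min = min(q(i, alpha, lam) for alpha in grid)
    print(i, best_bound(i, lam), grid_min)
```

Because \(q_i\) is convex and piecewise linear with breakpoints only at these two values, no grid point should give a smaller value than `best_bound`.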

Since \(\sum _{i=1}^r c_i = e\) holds, Proposition 3.3 allows us to compute upper bounds for the sum of eigenvalues of x. The following proposition gives us information about indices whose upper bound for \(\langle c_i , x \rangle \) in Proposition 3.3 is less than 1.

Proposition 3.4

Suppose that \(v \in \hbox {range} {\mathcal {A}}^*\) is given by \(v = \sum _{i=1}^r \lambda _i c_i\) as in Proposition 2.1. If v satisfies \(\left\langle e , {\mathcal {P}}_{{\mathcal {K}}} \left( - \frac{1}{\lambda _i} v \right) \right\rangle = \xi \) for some \(\xi < 1\) and for some \(i \in \{ 1, \dots , r \}\) for which \(\lambda _i \ne 0\) holds, then \(\lambda _i\) has the same sign as \(\langle e ,v \rangle \).

Proof

First, we consider the case where \(\lambda _i > 0\). Since the assumption implies that \(\langle e , {\mathcal {P}}_{{\mathcal {K}}} (-v) \rangle = \lambda _i \xi \), we have

where the first equality comes from Lemma 2.3.

For the case where \(\lambda _i < 0\), since the assumption also implies that \(\langle e , {\mathcal {P}}_{{\mathcal {K}}} (v) \rangle = - \lambda _i \xi \), we have

This completes the proof. \(\square \)
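
Proposition 3.4 can be sanity-checked on eigenvalue data alone, since \(\left\langle e , {\mathcal {P}}_{{\mathcal {K}}} \left( - \frac{1}{\lambda _i} v \right) \right\rangle = \sum _{j=1}^r \left[ - \lambda _j / \lambda _i \right] ^+\) and \(\langle e , v \rangle = \sum _{j=1}^r \lambda _j\). The following sketch uses hypothetical function names and eigenvalue vectors.

```python
def cut_value(i, lam):
    """<e, P_K(-v / lam_i)> = sum_j [-lam_j / lam_i]^+ (requires lam_i != 0)."""
    return sum(max(-lj / lam[i], 0.0) for lj in lam)


def sign(t):
    return (t > 0) - (t < 0)


def cut_indices(lam):
    """Indices i with lam_i != 0 whose cut value is strictly less than 1."""
    return [i for i, li in enumerate(lam)
            if li != 0 and cut_value(i, lam) < 1.0]


for lam in ([1.0 / 11, -1.0 / 33, -1.0 / 33], [-2.0, 0.5, 0.25, 0.0]):
    trace_v = sum(lam)
    for i in cut_indices(lam):
        # The proposition predicts matching signs for every such index.
        print(i, cut_value(i, lam), sign(lam[i]) == sign(trace_v))
```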

The above two propositions imply that, for any \(v \in \hbox {range} \hspace{0.3mm} {\mathcal {A}}^*\) with \(v = \sum _{i=1}^r \lambda _i c_i\), if we compute the upper bound for \(\langle c_i, x \rangle \) according to Proposition 3.3 for each index \(i \in \{ 1, \dots , r \}\) whose eigenvalue \(\lambda _i\) has the same sign as \(\langle e , v \rangle \), we obtain an upper bound for the sum of eigenvalues of x over the set \(F_{\textrm{P}_{S_{\infty }}({\mathcal {A}})}\). The following proposition concerns the scaling method of problem \(\textrm{P}_{S_{\infty }}({\mathcal {A}})\) when we find such a \(v \in \hbox {range} \hspace{0.3mm} {\mathcal {A}}^*\).

Proposition 3.5

Let \(H \subseteq \{1 , \dots , r \}\) be a nonempty set, \(c_1 , \dots , c_r \) be a Jordan frame, and \(\xi \) be a real number satisfying \(0< \xi < 1\). Let us define \(g \in \hbox {int} {\mathcal {K}}\) as

\[g := \sqrt{\xi } \sum _{i \in H} c_i + \sum _{i \notin H} c_i. \qquad (6)\]

For the two sets \(SR^{\textrm{Cut}} = \{x \in {\mathbb {E}} : x \in \hbox {int} {\mathcal {K}}, \ \Vert x\Vert _\infty \le 1, \ \langle c_i, x \rangle \le \xi \ (i \in H), \ \langle c_i, x \rangle \le 1 \ (i \notin H) \}\) and \(SR^{\textrm{Scaled}} = \{ {\bar{x}} \in {\mathbb {E}} : {\bar{x}} \in \hbox {int} {\mathcal {K}}, \ \Vert {\bar{x}} \Vert _\infty \le 1 \}\), the inclusion \(Q_g( SR^{\textrm{Scaled}} ) \subseteq SR^{\textrm{Cut}}\) holds.

Proof

Let \({\bar{x}}\) be an arbitrary point of \(SR^{\textrm{Scaled}}\). It suffices to show that (i) \(Q_g({\bar{x}}) \in {\textrm{int}} \hspace{0.75mm} {\mathcal {K}}\), (ii) \(\Vert Q_g({\bar{x}}) \Vert _\infty \le 1\), (iii) \(\langle c_i, Q_g({\bar{x}}) \rangle \le \xi \ (i \in H)\) and (iv) \(\langle c_i, Q_g({\bar{x}}) \rangle \le 1 \ (i \notin H)\) hold.

(i): Let us show that \(Q_g({\bar{x}}) \in {\textrm{int}} \hspace{0.75mm} {\mathcal {K}}\). Since g and \({\bar{x}}\) lie in the set \({\textrm{int}} \hspace{0.75mm} {\mathcal {K}}\), from Propositions 2.4 and 2.5, we see that

which implies that \(Q_g({\bar{x}}) \in {\textrm{int}} {\mathcal {K}}\).

(ii): Next, let us show that \(\Vert Q_g({\bar{x}}) \Vert _\infty \le 1\). Since \({\bar{x}} \in SR^{\textrm{Scaled}}\), we see that \({\bar{x}} \in \hbox {int} {\mathcal {K}}\), \(\Vert {\bar{x}} \Vert _\infty \le 1\) and hence \(e-{\bar{x}} \in {\mathcal {K}}\). Since \(g \in \hbox {int} {\mathcal {K}}\), Proposition 2.4 guarantees that

$$\begin{aligned} Q_g(e - {\bar{x}}) \in {\mathcal {K}}. \end{aligned}$$(7)

By the definition (6) of g, the following equations hold for \(c_1 , \dots , c_r\):

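Thus, we obtain \(Q_g(e) = \xi \sum _{i \in H} c_i + \sum _{i \notin H} c_i\). Combining this with the facts \(c_i \in {\mathcal {K}}\) and \((1-\xi )>0\) and (7), we have

$$\begin{aligned} e - Q_g({\bar{x}}) = e - Q_g(e) + Q_g(e - {\bar{x}}) = (1-\xi ) \sum _{i \in H} c_i + Q_g(e - {\bar{x}}) \in {\mathcal {K}}. \end{aligned}$$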

Since we have shown that \(Q_g({\bar{x}}) \in {\textrm{int}} {\mathcal {K}}\), we can conclude that \(\Vert Q_g({\bar{x}})\Vert _\infty \le 1\).

(iii) and (iv): Finally, we compute an upper bound for the value \(\langle Q_g({\bar{x}}) , c_i \rangle \) over the set \(SR^{\textrm{Scaled}}\). It follows from \(c_i \in {\mathcal {K}}\) and (7) that \(\langle Q_g(e - {\bar{x}}) , c_i \rangle \ge 0\), i.e., \(\langle Q_g(e) , c_i \rangle \ge \langle Q_g({\bar{x}}) , c_i \rangle \) holds. Since we have shown that \(Q_g(e) = \xi \sum _{i \in H} c_i + \sum _{i \notin H} c_i\), this implies \(\langle Q_g({\bar{x}}) , c_i \rangle \le \xi \) holds if \(i \in H\) and \(\langle Q_g({\bar{x}}) , c_i \rangle \le 1\) holds if \(i \notin H\). \(\square \)

Note that Proposition 3.5 implies that if a cut is obtained for \(\textrm{P}_{S_{\infty }}({\mathcal {A}})\) based on Proposition 3.3, we can expect a more efficient search for solutions to problem \(\textrm{P}_{S_{\infty }}({\mathcal {A}}Q_g)\) rather than trying to solve problem \(\textrm{P}_{S_{\infty }}({\mathcal {A}})\).
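To make Proposition 3.5 concrete, the following minimal sketch specializes it to the nonnegative orthant \({\mathcal {K}} = {\mathbb {R}}^n_+\); this specialization is an assumption made only for illustration. In that setting the Jordan product is componentwise, the standard unit vectors form a Jordan frame, and the quadratic representation reduces to \(Q_g(x)_i = g_i^2 x_i\), so \(Q_g\) multiplies the coordinates indexed by H by \(\xi \). The function names `scaling_element`, `quad_rep` and `in_cut_set` are hypothetical.

```python
import math
import random

def scaling_element(n, H, xi):
    """g = sqrt(xi) * sum_{i in H} c_i + sum_{i not in H} c_i, with c_i the unit vectors."""
    return [math.sqrt(xi) if i in H else 1.0 for i in range(n)]

def quad_rep(g, x):
    """Q_g(x) for the componentwise Jordan product on R^n: (Q_g x)_i = g_i^2 * x_i."""
    return [gi * gi * xv for gi, xv in zip(g, x)]

def in_cut_set(x, H, xi, tol=1e-12):
    """Membership test for SR^Cut in the orthant, where ||x||_inf is the largest coordinate."""
    interior = all(xv > 0.0 for xv in x)
    bounded = max(x) <= 1.0 + tol
    cut = all(x[i] <= xi + tol for i in H)
    return interior and bounded and cut

# Sample points of SR^Scaled = {0 < x <= 1} and check that Q_g maps them into SR^Cut.
random.seed(0)
n, H, xi = 5, {1, 3}, 0.5
g = scaling_element(n, H, xi)
samples = [[random.uniform(1e-6, 1.0) for _ in range(n)] for _ in range(1000)]
print(all(in_cut_set(quad_rep(g, x), H, xi) for x in samples))
```

The small tolerance only absorbs the rounding error in squaring \(\sqrt{\xi }\); it is not part of the proposition.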

3.3 Non-simple symmetric cone case

In this section, we consider the case where the symmetric cone is not simple. Propositions 3.6 and 3.7 are extensions of Propositions 3.3 and 3.4, respectively.

Proposition 3.6

Suppose that, for any \(v \in \hbox {range} {\mathcal {A}}^*\), the \(\ell \)-th block element \(v_\ell \) of \(v \in {\mathbb {E}}\) is decomposed into \(v_\ell = \sum _{i=1}^{r_\ell } {\lambda (v_\ell )}_i {c(v_\ell )}_i\) as in Proposition 2.1. For each \(\ell \in \{1,\ldots ,p \}\) and \(i \in \{1,\ldots ,r_\ell \}\), define

Then, the following relations hold for any feasible solution x of \(\textrm{P}_{S_{\infty }}({\mathcal {A}})\), \(\ell \in \{1,\ldots ,p \}\) and \(i \in \{1,\ldots ,r_\ell \}\).

Proof

Let \(c \in {\mathbb {E}}\) be an element whose \(\ell \)-th block element is \(c_\ell = c (v_\ell )_i\) and other block elements take 0. For any real number \(\alpha \in {\mathbb {R}}\), Proposition 3.2 ensures that

We obtain (9) by following a similar argument to the one used in the proof of Proposition 3.3. \(\square \)

The next proposition follows similarly to Proposition 3.4, by noting that \(\langle e, {\mathcal {P}}_{\mathcal {K}}(-v) \rangle = {\lambda (v_\ell )}_i \xi \) holds if \({\lambda (v_\ell )}_i >0\) and that \(\langle e, {\mathcal {P}}_{\mathcal {K}}(v) \rangle = - {\lambda (v_\ell )}_i \xi \) if \({\lambda (v_\ell )}_i <0\).

Proposition 3.7

Suppose that, for any \(v \in \hbox {range} \hspace{0.3mm} {\mathcal {A}}^*\), each \(\ell \)-th block element \(v_\ell \) of v is decomposed into \(v_\ell = \sum _{i=1}^{r_\ell } {\lambda (v_\ell )}_i {c(v_\ell )}_i\) as in Proposition 2.1. If v satisfies

for some \(\xi < 1\), \(\ell \in \{1 , \dots , p \} \) and \( i \in \{1 , \dots , r_\ell \} \), then \({\lambda (v_\ell )}_i\) has the same sign as \(\langle e , v \rangle \).

From Proposition 3.6, if we obtain \(v \in \hbox {range} \hspace{0.3mm} {\mathcal {A}}^*\) satisfying (11) for a block \(\ell \in \{1 , \dots , p \} \) with an index \(i \in \{1 , \dots , r_\ell \} \), then the upper bound for the sum of the eigenvalues of any feasible solution x of \(\textrm{P}_{S_{\infty }}({\mathcal {A}})\) is reduced to \(r-1 + \xi _\ell \), i.e., \(\langle e , x \rangle \le r-1 + \xi _\ell < r\). In this case, as described below, we can find a scaling such that the sum of eigenvalues of any feasible solution of the scaled problem is again bounded by r. Let \(H_\ell \) be the set of indices i satisfying (11) for each block \(\ell \). According to Proposition 3.5, set \(g_\ell = \sqrt{\xi _\ell } \sum _{h \in H_\ell } {c(v_\ell )}_h + \sum _{h \notin H_\ell } {c(v_\ell )}_h\) and define the linear operator Q as follows:

where \(I_\ell \) is the identity operator of the Euclidean Jordan algebra \({\mathbb {E}}_\ell \) associated with the symmetric cone \({\mathcal {K}}_\ell \). From Proposition 3.5 and its proof, we can easily see that

and the sum of eigenvalues of any feasible solution of the scaled problem \(\textrm{P}_{S_\infty }({\mathcal {A}}Q)\) is bounded by \(\langle e,e \rangle = r = \sum _{\ell =1}^{p} r_\ell \).

4 Basic procedure of the extended method

4.1 Outline of the basic procedure

In this section, we describe the details of our basic procedure. First, we introduce our stopping criteria and explain how to update \(y^k\) when the stopping criteria are not satisfied. Next, we show that the stopping criteria are satisfied within a finite number of iterations. Our stopping criteria are new and differ from the ones used in [10, 13], while the method of updating \(y^k\) is similar to the one used in [10] or in the von Neumann scheme of [13]. Algorithm 1 is a full description of our basic procedure.

4.2 Termination conditions of the basic procedure

For \(z^k = P_{\mathcal {A}}(y^k)\), \(v^k = y^k - z^k\) and a given \(\xi \in (0,1)\), our basic procedure terminates when any of the following four cases occurs:

1. \(z^k \in \hbox {int} {\mathcal {K}} \), meaning that \(z^k\) is a solution of \(\textrm{P}({\mathcal {A}})\),

2. \(z^k = \varvec{0}\), meaning that \(y^k\) is feasible for \(\textrm{D}({\mathcal {A}})\),

3. \(y^k - z^k \in {\mathcal {K}}\) and \(y^k - z^k \ne \varvec{0}\), meaning that \(y^k - z^k\) is feasible for \(\textrm{D}({\mathcal {A}})\), or

4. there exist \(\ell \in \{ 1 , \dots , p\}\) and \(i \in \{1 , \dots , r_\ell \}\) for which

$$\begin{aligned} \begin{array}{lll} {\lambda (v^k_\ell )}_i \ne 0&\hbox {and}&\left\langle e , {\mathcal {P}}_{{\mathcal {K}}} \left( - \frac{1}{{\lambda (v^k_\ell )}_i} v^k \right) \right\rangle = \xi _\ell \le \xi < 1, \end{array} \end{aligned}$$(13)

meaning that \(\langle e,x \rangle < r\) holds for any feasible solution x of \(\textrm{P}_{S_\infty }({\mathcal {A}})\) (see Proposition 3.6).

Cases 1 and 2 are direct extensions of the cases in [3], while case 3 was proposed in [9, 10]. Case 3 helps us to determine the feasibility of \(\textrm{P}({\mathcal {A}})\) efficiently, although checking it requires a spectral decomposition of \(y^k-z^k\). If the basic procedure ends with case 1, 2, or 3, it returns a solution of \(\textrm{P}({\mathcal {A}})\) or \(\textrm{D}({\mathcal {A}})\) to the main algorithm. If the basic procedure ends with case 4, it returns to the main algorithm the p index sets \(H_1 , \dots , H_p\), each of which consists of the indices i satisfying (13), and the set of primitive idempotents \(C_\ell = \{ {c(v^k_\ell )}_1, \dots ,{c(v^k_\ell )}_{r_\ell } \}\) of \(v^k_\ell \) for each \(\ell \).
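The four termination cases can be sketched for the nonnegative orthant, viewed as a product of n copies of \({\mathbb {R}}_+\) (so \(p = n\) and \(r_\ell = 1\)); this specialization is an assumption for illustration only. Here \({\lambda (v_\ell )}_1 = v_\ell \), \({\mathcal {P}}_{\mathcal {K}}\) is the componentwise positive part, and condition (13) reads \(v_\ell \ne 0\) and \(\sum _j [-v_j / v_\ell ]^+ \le \xi \). The function name `termination_case` and the tolerance are hypothetical, and \(z = P_{\mathcal {A}}(y)\) is assumed to be supplied by the caller.

```python
def termination_case(y, z, xi, tol=1e-12):
    """Classify (y, z) with z = P_A(y); returns (case, H) where H lists cut indices for case 4."""
    v = [yi - zi for yi, zi in zip(y, z)]
    # Case 1: z lies in the interior of the orthant.
    if all(zi > tol for zi in z):
        return 1, []
    # Case 2: z is (numerically) zero.
    if all(abs(zi) <= tol for zi in z):
        return 2, []
    # Case 3: v = y - z lies in the orthant and is nonzero.
    if all(vi >= -tol for vi in v) and any(abs(vi) > tol for vi in v):
        return 3, []
    # Case 4: look for blocks l with v_l != 0 and <e, P_K(-v / v_l)> <= xi.
    H = []
    for l, vl in enumerate(v):
        if abs(vl) <= tol:
            continue
        xi_l = sum(max(-vj / vl, 0.0) for vj in v)
        if xi_l <= xi:
            H.append(l)
    return (4, H) if H else (None, [])

# Example with the single constraint x_1 + 2 x_2 - x_3 = 0, for which v is a multiple of (1, 2, -1).
y = [0.1, 0.8, 0.1]
z = [0.1 - 4.0 / 15.0, 0.8 - 8.0 / 15.0, 0.1 + 4.0 / 15.0]
print(termination_case(y, z, xi=0.6))
```

In this example the cut found on the second coordinate reflects that \(x_1 + 2 x_2 = x_3 \le 1\) forces \(x_2 \le 1/2\) for feasible x.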

4.3 Update of the basic procedure

The basic procedure updates \(y^k \in \hbox {int} {\mathcal {K}}\) with \(\langle y^k , e \rangle = 1\) so as to reduce the value of \(\Vert z^k \Vert _J\). The following proposition is essentially the same as Proposition 13 in [10], so we will omit its proof.

Proposition 4.1

(cf. Proposition 13, [10]). For \(y^k \in \hbox {int} \hspace{0.75mm} {\mathcal {K}}\) satisfying \(\langle y^k , e \rangle = 1\), let \(z^k = P_{\mathcal {A}}(y^k)\). If \(z^k \notin \hbox {int} {\mathcal {K}} \) and \(z^k \ne \varvec{0}\), then the following hold.

1. There exists \( c \in {\mathcal {K}}\) such that \(\langle c , z^k \rangle = \lambda _{\min } (z^k) \le 0\) and \(\langle e , c \rangle =1\).

2. For the above c, suppose that \(P_{\mathcal {A}}(c) \ne \varvec{0}\) and define

$$\begin{aligned} \alpha = \langle P_{\mathcal {A}}(c) , P_{\mathcal {A}}(c) - z^k \rangle \Vert z^k-P_{\mathcal {A}}(c) \Vert ^{-2}_J. \end{aligned}$$(14)

Then, \(y^{k+1} := \alpha y^k + (1-\alpha ) c\) satisfies \(y^{k+1} \in \hbox {int} \hspace{0.75mm} {\mathcal {K}}\), \(\Vert y^{k+1}\Vert _{1,\infty } \ge 1/p\), \(\langle y^{k+1} , e \rangle = 1\), and \(z^{k+1} := P_{\mathcal {A}}(y^{k+1})\) satisfies \(\Vert z^{k+1} \Vert ^{-2}_J \ge \Vert z^k\Vert ^{-2}_J + 1\).

A method of accelerating the update of \(y^k\) is provided in [15]. For \(\ell \in \{1,2,\ldots ,p\}\), let \(I_\ell := \{ i \in \{1,2,\ldots ,r_\ell \} \mid \lambda _i (z^k_\ell ) \le 0\} \) and set \(N = \sum _{\ell =1}^p | I_\ell | \). Define the \(\ell \)-th block element of \(c \in {\mathcal {K}}\) as \(c_\ell = \frac{1}{N} \sum _{i \in I_\ell } {c(z^k_\ell )}_i\). Using \(P_{\mathcal {A}} \left( c \right) \), the acceleration method computes \(\alpha \) by (14) so as to minimize the norm of \(z^{k+1}\) and update y by \(y^{k+1} = \alpha y^k + (1-\alpha ) c\). We incorporate this method in the basic procedure of our computational experiment. As described in [13], we can also use the smooth perceptron scheme [17, 18] to update \(y^k\) in the basic procedure. As explained in the next section, using the smooth perceptron scheme significantly reduces the maximum number of iterations of the basic procedure. A detailed description of our basic procedure is given in Appendix A.
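A single update step with the step size (14) can be sketched as follows. The sketch assumes the nonnegative orthant and a single linear constraint \(\langle a, x \rangle = 0\), so that \(P_{\mathcal {A}}\) is an elementary projection and \(\Vert \cdot \Vert _J\) is the Euclidean norm; c is taken to be the unit vector at a coordinate attaining \(\lambda _{\min }(z^k)\). This is the plain update of Proposition 4.1, not the accelerated variant of [15], and the names `project_kernel`, `dot` and `update` are hypothetical.

```python
def dot(u, w):
    return sum(a * b for a, b in zip(u, w))

def project_kernel(a, y):
    """Orthogonal projection of y onto {x : <a, x> = 0} (operator A with a single row a)."""
    s = dot(a, y) / dot(a, a)
    return [yi - s * ai for ai, yi in zip(a, y)]

def update(a, y):
    """One step y -> alpha*y + (1-alpha)*c with alpha from (14); assumes z not in int K, z != 0, P_A(c) != 0."""
    z = project_kernel(a, y)
    i = min(range(len(z)), key=lambda j: z[j])           # coordinate attaining lambda_min(z)
    c = [1.0 if j == i else 0.0 for j in range(len(z))]  # primitive idempotent with <e, c> = 1
    pc = project_kernel(a, c)
    diff = [pj - zj for pj, zj in zip(pc, z)]
    alpha = dot(pc, diff) / dot(diff, diff)
    y_next = [alpha * yj + (1.0 - alpha) * cj for yj, cj in zip(y, c)]
    z_next = project_kernel(a, y_next)
    return y_next, z, z_next

a = [1.0, 2.0, -1.0]
y = [0.1, 0.8, 0.1]
y_next, z, z_next = update(a, y)
print(1.0 / dot(z, z), 1.0 / dot(z_next, z_next))
```

Comparing the two printed values shows the growth of \(\Vert z \Vert _J^{-2}\) by at least one per step, which is what drives the iteration bound of the basic procedure.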

4.4 Finite termination of the basic procedure

In this section, we show that the basic procedure terminates in a finite number of iterations. To do so, we need to prove Lemma 4.2 and Proposition 4.3.

Lemma 4.2

Let \(({\mathbb {E}}, \circ )\) be a Euclidean Jordan algebra with the corresponding symmetric cone \({\mathcal {K}}\) given by the Cartesian product of p simple symmetric cones. For any \(x \in {\mathbb {E}}\) and \(y \in {\mathcal {K}}\), \([ \langle x , y \rangle ]^+ \le \langle {\mathcal {P}}_{\mathcal {K}} (x) , y \rangle \) holds.

Proof

Let \(x \in {\mathbb {E}}\) and suppose that each \(\ell \)-th block element \(x_\ell \) of x is given by \(x_\ell = \sum _{i=1}^{r_\ell } {\lambda (x_\ell )}_i {c(x_\ell )}_i\) as in Proposition 2.1. Then, we can see that

where the inequality follows from the fact that \({c(x_\ell )}_1 , \dots , {c(x_\ell )}_{r_\ell }\), and \(y_\ell \) lie in \({\mathcal {K}}_\ell \). \(\square \)
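As a quick sanity check of Lemma 4.2, the inequality can be tested numerically in the nonnegative orthant, where \({\mathcal {P}}_{\mathcal {K}}(x)\) is the componentwise positive part. The orthant setting and the helper names below are assumptions for illustration; the check is not a substitute for the proof.

```python
import random

def positive_part(x):
    """Projection onto the nonnegative orthant."""
    return [max(xv, 0.0) for xv in x]

def dot(u, w):
    return sum(a * b for a, b in zip(u, w))

def lemma_gap(x, y):
    """<P_K(x), y> - [<x, y>]^+, which Lemma 4.2 asserts is nonnegative for y in K."""
    return dot(positive_part(x), y) - max(dot(x, y), 0.0)

random.seed(1)
gaps = []
for _ in range(1000):
    x = [random.uniform(-1.0, 1.0) for _ in range(4)]  # arbitrary element of E
    y = [random.uniform(0.0, 1.0) for _ in range(4)]   # element of K
    gaps.append(lemma_gap(x, y))
print(min(gaps) >= -1e-12)
```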

Proposition 4.3

For a given \(y \in {\mathcal {K}}\), define \(z = P_{\mathcal {A}} (y)\) and \(v = y - z\). Suppose that \(v \ne 0\) and each \(\ell \)-th element \(v_\ell \) is given by \(v_\ell = \sum _{i=1}^{r_\ell } {\lambda (v_\ell )}_i {c(v_\ell )}_i\), as in Proposition 2.1. Then, for any \(x \in F_{\textrm{P}_{S_{\infty }}({\mathcal {A}})}\), \(\ell \in \{ 1, \dots , p \}\) and \( i \in \{ 1, \dots , r_\ell \}\),

hold where \(q_{\ell ,i}(\alpha )\) is defined in (8).

Proof

The first inequality of (15) follows from (10) in the proof of Proposition 3.6. The second inequality is obtained by evaluating \(q_{\ell , i}(\alpha )\) at \(\alpha = \frac{1}{ \langle y_\ell , {c(v_\ell )}_i \rangle }\), as follows:

\(\square \)

Proposition 4.4

Let \(r_{\max } = \max \{ r_1 , \dots , r_p\}\). The basic procedure (Algorithm 1) terminates in at most \(\frac{p^2r_{\max }^2}{\xi ^2} \) iterations.

Proof

Suppose that \(y^k\) is obtained at the k-th iteration of Algorithm 1. Proposition 4.1 implies that \(\Vert y^k\Vert _{1,\infty } \ge \frac{1}{p}\) and an \(\ell \)-th block element exists for which \(\langle y_\ell , e_\ell \rangle \ge \frac{1}{p}\) holds. Thus, by letting \(v^k = y^k - z^k\) and the \(\ell \)-th block element \(v_\ell ^k\) of \(v^k\) be \(v_\ell ^k = \sum _{i=1}^{r_\ell } {\lambda (v^k_\ell )}_i {c(v^k_\ell )}_i\) as in Proposition 2.1, we have

Since Proposition 4.1 ensures that \(\frac{1}{\Vert z^k\Vert ^2_J} \ge k\) holds at the k-th iteration, by setting \(k=\frac{p^2r_{\max }^2}{\xi ^2}\), we see that \(\xi \ge p r_{\max } \Vert z^k\Vert _J\), and combining this with (16), we have

The above inequality and Proposition 4.3 imply that for any \(\ell \in \{1,\ldots ,p \}\) and \(i \in \{1,\ldots ,r_\ell \}\),

From the equivalence in (9) and the setting \(\xi \in (0,1)\), we conclude that Algorithm 1 terminates in at most \(\frac{p^2r_{\max }^2}{\xi ^2} \) iterations by satisfying (13) in the fourth termination condition at an \(\ell \)-th block and an index i. \(\square \)

An upper bound for the number of iterations of Algorithm 5, which uses the smooth perceptron scheme, can be found as follows.

Proposition 4.5

Let \(r_{\max } = \max \{ r_1 , \dots , r_p\}\). The basic procedure (Algorithm 5) terminates in at most \(\frac{2 \sqrt{2} p r_{\max }}{\xi }\) iterations.

Proof

From Proposition 6 in [13], after \(k \ge 1\) iterations, we obtain the inequality \(\Vert z^k\Vert _J^2 \le \frac{8}{(k+1)^2}\). Similarly to the previous proof of Proposition 4.4, if \(\xi \ge p r_{\max } \Vert z^k\Vert _J\) holds, then Algorithm 5 terminates. Thus, \(k \le \frac{2 \sqrt{2} p r_{\max }}{\xi }\) holds for a given k satisfying \(\left( \frac{\xi }{p r_{\max }} \right) ^2 \le \frac{8}{(k+1)^2}\). \(\square \)

Here, we discuss the computational cost per iteration of Algorithm 1. At each iteration, the two most expensive operations are computing the spectral decomposition on line 5 and computing \(P_{{\mathcal {A}}}(\cdot )\) on lines 24 and 26. Let \(C_{\ell }^{\textrm{sd}}\) be the computational cost of the spectral decomposition of an element of \({\mathcal {K}}_\ell \). For example, \(C_{\ell }^{\textrm{sd}}={\mathcal {O}}(r_\ell ^3)\) if \({\mathcal {K}}_\ell = {\mathbb {S}}^{r_\ell }_+\) and \(C_{\ell }^{\textrm{sd}}={\mathcal {O}}(r_\ell )\) if \({\mathcal {K}}_\ell = {\mathbb {L}}_{r_\ell }\), where \({\mathbb {L}}_{r_\ell }\) denotes the \(r_\ell \)-dimensional second-order cone. Then, the cost \(C^{\textrm{sd}}\) of computing the spectral decomposition of an element of \({\mathcal {K}}\) is \(C^{\textrm{sd}} = \sum _{\ell =1}^p C_{\ell }^{\textrm{sd}}\). Next, let us consider the computational cost of \(P_{{\mathcal {A}}}(\cdot )\). Recall that d is the dimension of the Euclidean space \({\mathbb {E}}\) corresponding to \({\mathcal {K}}\). As discussed in [10], we can compute \(P_{{\mathcal {A}}} = I - {\mathcal {A}}^*({\mathcal {A}}{\mathcal {A}}^*)^{-1}{\mathcal {A}}\) by using the Cholesky decomposition of \(({\mathcal {A}}{\mathcal {A}}^*)^{-1}\). Suppose that \(({\mathcal {A}}{\mathcal {A}}^*)^{-1} = LL^*\), where L is an \(m \times m\) matrix and we store \(L^*{\mathcal {A}}\) in the main algorithm. Then, we can compute \(P_{{\mathcal {A}}}(\cdot )\) on lines 24 and 26, which costs \({\mathcal {O}}(md)\). The operation \(u_\mu (\cdot ) : {\mathbb {E}} \rightarrow \{ u \in {\mathcal {K}} \mid \langle u,e \rangle = 1\}\) in Algorithm 1 can be performed within the cost \(C^{\textrm{sd}}\) [13, 18]. 
From the above discussion and Propositions 4.4 and 4.5, the total costs of Algorithm 1 and Algorithm 5 are given by \({\mathcal {O}} \left( \frac{p^2 r_{\max }^2}{\xi ^2} \max ( C^{\textrm{sd}} , md ) \right) \) and \({\mathcal {O}} \left( \frac{p r_{\max }}{\xi } \max ( C^{\textrm{sd}} , md ) \right) \), respectively.
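The two iteration bounds of Propositions 4.4 and 4.5 are easy to compare numerically. The helper below simply evaluates \(p^2 r_{\max }^2 / \xi ^2\) and \(2 \sqrt{2} p r_{\max } / \xi \) for a given list of block ranks; the function name and the example instance are hypothetical.

```python
import math

def bp_iteration_bounds(ranks, xi):
    """Return (bound of Proposition 4.4, bound of Proposition 4.5) for block ranks r_1..r_p."""
    p = len(ranks)
    r_max = max(ranks)
    von_neumann = (p * r_max / xi) ** 2
    smooth_perceptron = 2.0 * math.sqrt(2.0) * p * r_max / xi
    return von_neumann, smooth_perceptron

# Example: a 10-dimensional nonnegative orthant (p = 10, r_l = 1) with xi = 1/2.
print(bp_iteration_bounds([1] * 10, 0.5))
```

The quadratic versus linear dependence on \(p r_{\max } / \xi \) is what the text refers to when it says that the smooth perceptron scheme reduces the maximum number of iterations.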

5 Main algorithm of the extended method

5.1 Outline of the main algorithm

In what follows, for a given accuracy \(\varepsilon > 0\), we call a feasible solution of \({\textrm{P}_{S_{\infty }}}({\mathcal {A}})\) whose minimum eigenvalue is \(\varepsilon \) or more an \(\varepsilon \)-feasible solution of \({\textrm{P}_{S_{\infty }}}({\mathcal {A}})\). This section describes the main algorithm. To obtain an upper bound for the minimum eigenvalue of any feasible solution x of \(\textrm{P}_{S_{\infty }}({\mathcal {A}})\), Algorithm 2 focuses on the product \(\det ({\bar{x}})\) of the eigenvalues of an arbitrary feasible solution \({\bar{x}}\) of the scaled problem \(\textrm{P}_{S_{\infty }}({\mathcal {A}}^k Q^k)\). Algorithm 2 works as follows. First, we calculate the corresponding projection \(P_{{\mathcal {A}}}\) onto \(\hbox {ker} {\mathcal {A}}\) and generate an initial point as input to the basic procedure. Next, we call the basic procedure and determine whether to end the algorithm with an \(\varepsilon \)-feasible solution or to perform problem scaling according to the returned result, as follows:

1. If a feasible solution of \(\textrm{P}({\mathcal {A}})\) or \(\textrm{D}({\mathcal {A}})\) is returned from the basic procedure, the feasibility of \(\textrm{P}({\mathcal {A}})\) can be determined, and we stop the main algorithm.

2. If the basic procedure returns the sets of indices \(H_1 , \dots , H_p\) and the sets of primitive idempotents \(C_1 , \dots , C_p\) that construct the corresponding Jordan frames, then, with \(\textrm{num}_\ell \) denoting the total number of cuts obtained in the \(\ell \)-th block,

(a) if \(\hbox {num}_\ell \ge r_\ell \frac{\log \varepsilon }{\log \xi }\) holds for some \(\ell \in \{ 1, \dots , p \}\), we determine that \({\textrm{P}_{S_{\infty }}}({\mathcal {A}})\) has no \(\varepsilon \)-feasible solution according to Proposition 5.1 and stop the main algorithm,

(b) if \(\hbox {num}_\ell < r_\ell \frac{\log \varepsilon }{\log \xi }\) holds for any \(\ell \in \{ 1, \dots , p \}\), we rescale the problem and call the basic procedure again.

Note that our main algorithm is similar to Lourenço et al.’s method [10] in the sense that it keeps information about the possible minimum eigenvalue of any feasible solution of the problem. In contrast, Pena and Soheili’s method [13] does not keep such information. Algorithm 2 terminates after no more than \(-\frac{r}{\log \xi } \log \left( \frac{1}{\varepsilon }\right) - p + 1\) iterations, so our main algorithm is a polynomial-time algorithm. The proof is given in Sect. 5.2. We should also mention that step 24 in Algorithm 2 is theoretically unreachable. We have added this step to account for the influence of numerical error in practice.
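The decision between steps 2(a) and 2(b) depends only on the cut counters \(\textrm{num}_\ell \), the block ranks \(r_\ell \), and the parameters \(\varepsilon \) and \(\xi \). The following minimal sketch evaluates the threshold \(r_\ell \frac{\log \varepsilon }{\log \xi }\) and the resulting decision; the function names and return strings are hypothetical and do not reproduce the bookkeeping of Algorithm 2.

```python
import math

def cut_threshold(r_l, eps, xi):
    """Number of cuts in a block of rank r_l after which no eps-feasible solution can exist."""
    return r_l * math.log(eps) / math.log(xi)

def main_step_decision(num, ranks, eps, xi):
    """Return 'stop' if some block has reached its threshold (step 2(a)), else 'rescale' (step 2(b))."""
    for num_l, r_l in zip(num, ranks):
        if num_l >= cut_threshold(r_l, eps, xi):
            return "stop"
    return "rescale"

# Example: two blocks of rank 2 with eps = 1e-6 and xi = 1/2.
print(cut_threshold(2, 1e-6, 0.5))
print(main_step_decision([39, 12], [2, 2], 1e-6, 0.5))
print(main_step_decision([40, 12], [2, 2], 1e-6, 0.5))
```

Because \(\log \varepsilon \) and \(\log \xi \) are both negative for \(\varepsilon , \xi \in (0,1)\), the threshold is positive and grows as \(\varepsilon \) shrinks.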

5.2 Finite termination of the main algorithm

Here, we discuss how many iterations are required until we can determine that the minimum eigenvalue \(\lambda _{\textrm{min}} (x)\) is less than \(\varepsilon \) for any \(x \in F_{\textrm{P}_{S_{\infty }}({\mathcal {A}})}\). Before going into the proof, we explain Algorithm 2 in more detail than in Sect. 5.1. At each iteration, Algorithm 2 accumulates the number of cuts \(|H_\ell ^k|\) obtained in the \(\ell \)-th block and stores the value in \(\textrm{num}_\ell \). Using \(\textrm{num}_\ell \), we can compute an upper bound for \(\lambda _{\textrm{min}} (x)\) (Proposition 5.1). On line 18, \({\bar{Q}}_\ell \) is updated to \({\bar{Q}}_\ell \leftarrow Q_{g_\ell ^{-1}} {\bar{Q}}_\ell \), where \({\bar{Q}}_\ell \) plays the role of an operator that gives the relation \({\bar{x}}_\ell = {\bar{Q}}_\ell (x_\ell )\) for the solution x of the original problem and the solution \({\bar{x}}\) of the scaled problem. For example, if \(|H^1_\ell | > 0\) for \(k=1\) (that is, a cut was obtained in the \(\ell \)-th block), then the proposed method scales the problem via \({\mathcal {A}}^1_\ell Q^1_\ell \), which yields \({\bar{x}}_\ell = Q_{g_\ell ^{-1}}(x_\ell )\) for the feasible solution x of the original problem. If \(|H^2_\ell | > 0\) also holds for \(k=2\), then the proposed method scales \({\bar{x}}\) again, so that \(\bar{{\bar{x}}}_\ell = Q_{g_\ell ^{-1}}({{\bar{x}}_\ell }) = {\bar{Q}}_\ell (x_\ell )\) holds. Note that \({\bar{Q}}_\ell \) is used only for a concise proof of Proposition 5.1, so it is not essential.

Now, let us derive an upper bound for the minimum eigenvalue \(\lambda _{\min }(x_\ell )\) of each \(\ell \)-th block of x obtained after the k-th iteration of Algorithm 2. Proposition 5.2 gives an upper bound for the number of iterations of Algorithm 2.

Proposition 5.1

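After k iterations of Algorithm 2, for any feasible solution x of \(\textrm{P}_{S_{\infty }}({\mathcal {A}})\) and \( \ell \in \{1 , \dots , p\}\), the \(\ell \)-th block element \(x_\ell \) of x satisfies

$$\begin{aligned} \log \left( \lambda _{\min }(x_\ell ) \right) \le \frac{\hbox {num}_\ell }{r_\ell } \log \xi . \end{aligned}$$(17)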

Proof

At the end of the k-th iteration, any feasible solution \({\bar{x}}\) of the scaled problem \(\textrm{P}_{S_{\infty }}({\mathcal {A}}^{k+1}) = \textrm{P}_{S_{\infty }}({\mathcal {A}}^kQ^k)\) obviously satisfies

Note that \(\det {\bar{x}}_\ell \) can be expressed in terms of \(\det x_\ell \). For example, if \(|H_\ell ^1|>0\) when \(k=1\), then using Proposition 2.5, for any feasible solution \({\bar{x}}\) of \(\textrm{P}_{S_{\infty }}({\mathcal {A}}^{2})\), we find that

This means that \(\det {\bar{x}}_\ell \) can be determined from \(\det x_\ell \) and the number of cuts obtained so far in the \(\ell \)-th block. In Algorithm 2, the value of \(\hbox {num}_\ell \) is updated only when \(|H_\ell ^k|>0\). Since \({\bar{x}}\) satisfies \({\bar{x}}_\ell = {\bar{Q}}_\ell (x_\ell ) \ (\ell =1,2,\ldots ,p)\) for each feasible solution x of \(\textrm{P}_{S_{\infty }}({\mathcal {A}})\), we can see that

Therefore, (18) implies \(\det x_\ell \le \xi ^{\hbox {num}_\ell } \det e_\ell = \xi ^{\hbox {num}_\ell }\) and the fact \({\left( \lambda _{\min }(x_\ell ) \right) }^{r_\ell } \le \det x_\ell \) implies \({\left( \lambda _{\min }(x_\ell ) \right) }^{r_\ell } \le {\xi }^{\hbox {num}_\ell }\). By taking the logarithm of both sides of this inequality, we obtain (17). \(\square \)

Proposition 5.2

Algorithm 2 terminates after no more than \(-\frac{r}{\log \xi } \log \left( \frac{1}{\varepsilon }\right) - p + 1\) iterations.

Proof

Let us call iteration k of Algorithm 2 good if \(|H_\ell ^k| > 0\) for some \(\ell \in \{1,2, \dots , p\}\) at that iteration. Suppose that at least \(-\frac{r_\ell }{\log \xi } \log \left( \frac{1}{\varepsilon }\right) \) good iterations are observed for a cone \({\mathcal {K}}_\ell \). Then, by substituting \(-\frac{r_\ell }{\log \xi } \log \left( \frac{1}{\varepsilon }\right) \) into \(\hbox {num}_\ell \) of inequality (17) in Proposition 5.1, we have \(\log {\left( \lambda _{\min }(x_\ell ) \right) } \le \log \varepsilon \) and hence, \(\lambda _{\min }(x_\ell ) \le \varepsilon \). This implies that Algorithm 2 terminates after no more than

$$\begin{aligned} \sum _{\ell =1}^{p} \left( -\frac{r_\ell }{\log \xi } \log \left( \frac{1}{\varepsilon }\right) - 1 \right) + 1 = -\frac{r}{\log \xi } \log \left( \frac{1}{\varepsilon }\right) - p + 1 \end{aligned}$$

iterations. \(\square \)
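For a feel of the magnitude of the bound in Proposition 5.2, the expression \(-\frac{r}{\log \xi } \log \left( \frac{1}{\varepsilon }\right) - p + 1\) can be evaluated directly. The helper name and the two example cones below are hypothetical choices for illustration.

```python
import math

def main_iteration_bound(ranks, eps, xi):
    """Evaluate -(r / log xi) * log(1 / eps) - p + 1 with r = sum of block ranks and p = number of blocks."""
    r = sum(ranks)
    p = len(ranks)
    return -r / math.log(xi) * math.log(1.0 / eps) - p + 1

# Nonnegative orthant in R^10 (p = 10, r_l = 1) versus a simple cone of rank 10 (p = 1).
print(main_iteration_bound([1] * 10, 1e-6, 0.5))
print(main_iteration_bound([10], 1e-6, 0.5))
```

Both instances have the same rank \(r = 10\); the bound for the product cone is smaller by \(p - 1\).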

6 Computational costs of the algorithms

This section compares the computational costs of Algorithm 2, Lourenço et al.’s method [10] and Pena and Soheili’s method [13]. Section 6.1 compares the computational costs of Algorithm 2 and Lourenço et al.’s method, and Sect. 6.2 compares those of Algorithm 2 and Pena and Soheili’s method under the assumption that \(\hbox {ker}\, {\mathcal {A}} \cap \hbox {int} {\mathcal {K}} \ne \emptyset \).

Both the proposed method and the method of Lourenço et al. guarantee finite termination of the main algorithm by termination criteria indicating the nonexistence of an \(\varepsilon \)-feasible solution, so that it is possible to compare the computational costs of the methods without making any special assumptions. This is because both methods proceed by making cuts to the feasible region using the results obtained from the basic procedure. On the other hand, Pena and Soheili’s method cannot be simply compared because the upper bound of the number of iterations of their main algorithm includes an unknown value of \(\delta (\hbox {ker} {\mathcal {A}} \cap \hbox {int} {\mathcal {K}}) := \max _x \left\{ \textrm{det}(x) \mid x \in \hbox {ker} {\mathcal {A}} \cap \hbox {int} {\mathcal {K}} , \Vert x\Vert _J^2 = r \right\} \). However, by making the assumption \(\hbox {ker} {\mathcal {A}} \cap \hbox {int} {\mathcal {K}} \ne \emptyset \) and deriving a lower bound for \(\delta (\hbox {ker} {\mathcal {A}} \cap \hbox {int} {\mathcal {K}})\), we make it possible to compare Algorithm 2 with Pena and Soheili’s method without knowing the specific values of \(\delta (\hbox {ker} {\mathcal {A}} \cap \hbox {int} {\mathcal {K}})\).

6.1 Comparison of Algorithm 2 and Lourenço et al.’s method

Let us consider the computational cost of Algorithm 2. At each iteration, the most expensive operation is computing \(P_{\mathcal {A}}\) on line 4. Recall that d is the dimension of the Euclidean space \({\mathbb {E}}\) corresponding to \({\mathcal {K}}\). As discussed in [10], by considering \(P_{\mathcal {A}}\) to be an \(m \times d\) matrix, we find that the computational cost of \(P_{{\mathcal {A}}}\) is \({\mathcal {O}}(m^3+m^2d)\). Therefore, by taking the computational cost of the basic procedure (Algorithm 1) and Proposition 5.2 into consideration, the cost of Algorithm 2 turns out to be

where \(C^{\textrm{sd}}\) is the computational cost of the spectral decomposition of \(x \in {\mathbb {E}}\).

Note that, in [10], the authors showed that the cost of their algorithm is

where \(C^{\textrm{min}}\) is the cost of computing the minimum eigenvalue of \(x \in {\mathbb {E}}\) with the corresponding idempotent, \(\rho \) is an input parameter used in their basic procedure (like \(\xi \) in the proposed algorithm) and \(\varphi (\rho ) = 2 - 1/\rho - \sqrt{3-2/\rho }\).

When the symmetric cone is simple, by setting \(\xi = 1/2\) and \(\rho = 2\), the maximum number of iterations of the basic procedure is bounded by the same value in both algorithms. Accordingly, we will compare the two computational costs (19) and (20) by supposing \(\xi = 1/2\) and \(\rho = 2\) (hence, \(- \log \xi \simeq 0.69\) and \(\varphi (\rho ) \simeq 0.09\)). As we can see below, the cost (19) of our method is smaller than (20) in the cases of linear programming and second-order cone problems and is equivalent to (20) in the case of semidefinite problems. First, let us consider the case where \({\mathcal {K}}\) is the n-dimensional nonnegative orthant \({\mathbb {R}}^n_+\). Here, we see that \(r=p=d=n\), \(r_1 = \cdots = r_p = r_{\max }=1\), and \(\max \left( C^{\textrm{sd}} , md \right) = \max \left( C^{\textrm{min}} , md \right) = md\) hold. By substituting these values, the bounds (19) and (20) turn out to be

and

This implies that for the linear programming case, our method (which is equivalent to Roos’s original method [15]) is superior to Lourenço et al.’s method [10] in terms of bounds (19) and (20).

Next, let us consider the case where \({\mathcal {K}}\) is composed of p simple second-order cones \({\mathbb {L}}^{n_i} \ (i= 1, \ldots , p)\). In this case, we see that \(d = \sum _{i=1}^p n_i\), \(r_1 = \cdots = r_p = r_{\max }=2\) and \(\max \left( C^{\textrm{sd}} , md \right) = \max \left( C^{\textrm{min}} , md \right) = md\) hold. By substituting these values, the bounds (19) and (20) turn out to be

and

Note that \(\varepsilon \) is expected to be very small (\(10^{-6}\) or even \(10^{-12}\) in practice) and \(\frac{1}{0.69} \log \left( \frac{1}{\varepsilon } \right) \le \frac{1}{0.09} \left( \log \left( \frac{1}{\varepsilon } \right) - \log 2 \right) \) if \(\varepsilon \le 0.451\). Thus, even in this case, we may conclude that our method is superior to Lourenço et al.’s method in terms of the bounds (19) and (20).
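The constants in this comparison can be checked with a few lines of arithmetic. The sketch below evaluates \(\varphi (\rho ) = 2 - 1/\rho - \sqrt{3-2/\rho }\) at \(\rho = 2\) and tests the inequality \(\frac{1}{0.69} \log \frac{1}{\varepsilon } \le \frac{1}{0.09} \left( \log \frac{1}{\varepsilon } - \log 2 \right) \) using the rounded constants quoted in the text; the function names are hypothetical.

```python
import math

def phi(rho):
    """phi(rho) = 2 - 1/rho - sqrt(3 - 2/rho), the quantity appearing in the bound of [10]."""
    return 2.0 - 1.0 / rho - math.sqrt(3.0 - 2.0 / rho)

def inequality_holds(eps, neg_log_xi=0.69, phi_rho=0.09):
    """Check (1/0.69) log(1/eps) <= (1/0.09) (log(1/eps) - log 2) with the rounded constants."""
    t = math.log(1.0 / eps)
    return t / neg_log_xi <= (t - math.log(2.0)) / phi_rho

def crossover_eps(neg_log_xi=0.69, phi_rho=0.09):
    """Largest eps for which the inequality holds, obtained by solving it with equality."""
    return math.exp(-neg_log_xi * math.log(2.0) / (neg_log_xi - phi_rho))

print(-math.log(0.5), phi(2.0))
print(crossover_eps())
print([inequality_holds(e) for e in (1e-12, 1e-6, 0.45, 0.46)])
```

The crossover value depends on the rounding of the two constants, so it should be read as an approximation of the threshold stated above.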

Finally, let us consider the case where \({\mathcal {K}}\) is a simple \(n \times n\) positive semidefinite cone. We see that \(p=1\), \(r = n\), and \(d = \frac{n(n+1)}{2}\) hold, and upon substituting these values, the bounds (19) and (20) turn out to be

and

From the discussion in Sect. 6.3, we can assume \({\mathcal {O}}\left( C^{\textrm{sd}} \right) = {\mathcal {O}}\left( C^{\textrm{min}} \right) \), and the computational bounds of the two methods are equivalent.

6.2 Comparison of Algorithm 2 and Pena and Soheili’s method

In this section, we assume that \({\mathcal {K}}\) is simple since [13] has presented an algorithm for the simple form. We also assume that \({\textrm{ker}} {\mathcal {A}} \cap {\textrm{int}} {\mathcal {K}} \ne \emptyset \), because Pena and Soheili’s method does not terminate if \({\textrm{ker}} {\mathcal {A}} \cap {\textrm{int}} {\mathcal {K}} = \emptyset \) and \({\textrm{range}} {\mathcal {A}}^* \cap {\textrm{int}} {\mathcal {K}} = \emptyset \). Furthermore, for the sake of simplicity, we assume that the main algorithm of Pena and Soheili’s method applies only to \({\textrm{ker}} {\mathcal {A}} \cap {\textrm{int}} {\mathcal {K}}\). (Their original method applies the main algorithm to \({\textrm{range}} {\mathcal {A}}^* \cap {\textrm{int}} {\mathcal {K}}\) as well.)

First, we will briefly explain the idea of deriving an upper bound for the number of iterations required to find \(x \in {\textrm{ker}} {\mathcal {A}} \cap {\textrm{int}} {\mathcal {K}}\) in Pena and Soheili’s method. Pena and Soheili derive it by focusing on the indicator \(\delta (\hbox {ker} {\mathcal {A}} \cap \hbox {int} {\mathcal {K}}) := \max _x \left\{ \textrm{det}(x) \mid x \in \hbox {ker} {\mathcal {A}} \cap \hbox {int} {\mathcal {K}} , \Vert x\Vert _J^2 = r \right\} \). If \({\textrm{ker}} {\mathcal {A}} \cap {\textrm{int}} {\mathcal {K}} \ne \emptyset \), then \(\delta (\hbox {ker} {\mathcal {A}} \cap \hbox {int} {\mathcal {K}}) \in (0,1]\) holds, and if \(e \in {\textrm{ker}} {\mathcal {A}} \cap {\textrm{int}} {\mathcal {K}}\), then \(\delta (\hbox {ker} {\mathcal {A}} \cap \hbox {int} {\mathcal {K}}) =1\) holds. If \(e \in {\textrm{ker}} {\mathcal {A}} \cap {\textrm{int}} {\mathcal {K}}\), then the basic procedure terminates immediately and returns \(\frac{1}{r}e\) as a feasible solution. Then, they prove that \(\delta (Q_v \left( \hbox {ker} {\mathcal {A}} \right) \cap \hbox {int} {\mathcal {K}}) \ge 1.5 \cdot \delta (\hbox {ker} {\mathcal {A}} \cap \hbox {int} {\mathcal {K}})\) holds if the parameters are appropriately set, and derive an upper bound on the number of scaling steps, i.e., the number of iterations, required to obtain \(\delta (Q_v \left( \hbox {ker} {\mathcal {A}} \right) \cap \hbox {int} {\mathcal {K}}) = 1\).

In the following, we obtain an upper bound for the number of iterations of Algorithm 2 using the index \(\delta ^{\textrm{supposed}} \left( {\textrm{ker}} {\mathcal {A}} \cap \hbox {int} {\mathcal {K}} \right) := \max _x \left\{ \textrm{det}(x) \mid x \in \hbox {ker} {\mathcal {A}} \cap \hbox {int} {\mathcal {K}} , \Vert x\Vert _J^2 = 1 \right\} \). Note that \(\delta \left( {\textrm{ker}} {\mathcal {A}} \cap \hbox {int} {\mathcal {K}} \right) = r^{\frac{r}{2}} \cdot \delta ^{\textrm{supposed}} \left( {\textrm{ker}} {\mathcal {A}} \cap \hbox {int} {\mathcal {K}} \right) \). In fact, if \(x^*\) is the point giving the maximum value of \(\delta ^{\textrm{supposed}} \left( {\textrm{ker}} {\mathcal {A}} \cap \hbox {int} {\mathcal {K}} \right) \), then the point giving the maximum value of \(\delta \left( {\textrm{ker}} {\mathcal {A}} \cap \hbox {int} {\mathcal {K}} \right) \) is \(\sqrt{r} x^*\). Also, if \({\textrm{ker}} {\mathcal {A}} \cap {\textrm{int}} {\mathcal {K}} \ne \emptyset \), then \(\delta ^{\textrm{supposed}} (\hbox {ker} {\mathcal {A}} \cap \hbox {int} {\mathcal {K}}) \in (0,1/ r^{\frac{r}{2}} ]\), and if \(\frac{1}{\sqrt{r}} e \in {\textrm{ker}} {\mathcal {A}} \cap {\textrm{int}} {\mathcal {K}}\), then \(\delta ^{\textrm{supposed}} (\hbox {ker} {\mathcal {A}} \cap \hbox {int} {\mathcal {K}}) =1/ r^{\frac{r}{2}}\).

The outline of this section is as follows: First, we show that a lower bound for \(\delta ^{\textrm{supposed}} \left( {\textrm{ker}} {\mathcal {A}} \cap \hbox {int} {\mathcal {K}} \right) \) can be derived using the index value \(\delta ^{\textrm{supposed}} \left( Q_{g^{-1}} \left( {\textrm{ker}} {\mathcal {A}} \right) \cap \hbox {int} {\mathcal {K}} \right) \) for the problem after scaling (Proposition 6.5). Then, using this result, we derive an upper bound for the number of operations required to obtain \(\delta ^{\textrm{supposed}} \left( Q_{g^{-1}} \left( {\textrm{ker}} {\mathcal {A}} \right) \cap \hbox {int} {\mathcal {K}} \right) = 1/ r^{\frac{r}{2}}\) (Proposition 6.6). Finally, we compare the proposed method with Pena and Soheili’s method. To prove Proposition 6.3 used in the proof of Proposition 6.5, we use the following propositions from [8].

Proposition 6.1

(Theorem 3 of [8]). Let \(c \in {\mathbb {E}}\) be an idempotent and let \(N_\lambda (c) = \{ x \in {\mathbb {E}} \mid c \circ x = \lambda x\}\). Then \(N_\lambda (c)\) is a linear manifold, and if \(\lambda \notin \{0, \frac{1}{2}, 1\}\), then \(N_\lambda (c)\) consists of zero alone. Each \(x \in {\mathbb {E}}\) can be represented in the form

\[ x = u + v + w, \quad u \in N_0(c), \ v \in N_{\frac{1}{2}}(c), \ w \in N_1(c), \]

in one and only one way.

Proposition 6.2

(Theorem 11 of [8]). An idempotent \(c \in {\mathbb {E}}\) is primitive if and only if \(N_1(c) =\{ x \in {\mathbb {E}} \mid c \circ x = x\} = {\mathbb {R}} c\).

Proposition 6.3

Let \(c \in {\mathbb {E}}\) be a primitive idempotent. Then, for any \(x \in {\mathbb {E}}\), \(\langle x, Q_c(x) \rangle = \langle x,c \rangle ^2\) holds.

Proof

From Propositions 6.1 and 6.2, for any \(x \in {\mathbb {E}}\), there exist a real number \(\lambda \in {\mathbb {R}}\) and elements \(u \in N_0(c)\) and \(v \in N_{\frac{1}{2}}(c)\) such that \(x =u + v + \lambda c \).

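First, we show that \(\langle x,c \rangle = \lambda \). For \(v \in N_\frac{1}{2} (c)\), we see that \(\langle v,c \rangle = \langle v, c \circ c \rangle = \langle v \circ c, c \rangle = \langle c \circ v, c \rangle = \frac{1}{2} \langle v, c \rangle \), which implies that \(\langle v,c \rangle =0\). Thus, since \(u \in N_0(c)\) and \(u \circ c = 0\), and since \(\langle c,c \rangle = 1\) for a primitive idempotent, \(\langle x,c \rangle \) is given by

\[ \langle x,c \rangle = \langle u + v + \lambda c, c \rangle = \langle u, c \circ c \rangle + \langle v, c \rangle + \lambda \langle c, c \rangle = \langle u \circ c, c \rangle + \lambda = \lambda . \]

On the other hand, by using the facts \(x = u + v + \lambda c\), \(c^2 = c\), \(c \circ u =0\) and \(c \circ v =\frac{1}{2} v\) repeatedly, we have

\[ Q_c(x) = 2 c \circ (c \circ x) - c^2 \circ x = 2 c \circ \left( \tfrac{1}{2} v + \lambda c \right) - \left( \tfrac{1}{2} v + \lambda c \right) = \lambda c, \quad \text{and hence} \quad \langle x, Q_c(x) \rangle = \lambda \langle x, c \rangle = \lambda ^2 . \]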

Thus, we have shown that \(\langle x, Q_c(x) \rangle = \langle x,c \rangle ^2\) holds. \(\square \)
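The identity of Proposition 6.3 can be checked numerically in the special case \({\mathbb {E}} = {\mathbb {S}}^n\), where \(X \circ Y = (XY+YX)/2\), \(\langle X, Y \rangle = \textrm{tr}(XY)\), a primitive idempotent is a rank-one projector \(c = vv^\top \) with \(\Vert v\Vert = 1\), and \(Q_c(X) = cXc\). The following is only a sanity check under these assumptions, with a hand-picked symmetric matrix; the helper names are illustrative.

```python
import math

def matmul(a, b):
    """Product of two square matrices stored as nested lists."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]

def trace_inner(a, b):
    """Inner product <A, B> = tr(AB)."""
    n = len(a)
    return sum(a[i][j] * b[j][i] for i in range(n) for j in range(n))

# Primitive idempotent c = v v^T built from a unit vector v.
v = [1.0, 2.0, -2.0]
norm_v = math.sqrt(sum(t * t for t in v))
v = [t / norm_v for t in v]
c = [[vi * vj for vj in v] for vi in v]

# An arbitrary symmetric matrix; it does not need to be positive semidefinite.
x = [[2.0, -1.0, 0.5],
     [-1.0, 3.0, 1.0],
     [0.5, 1.0, -4.0]]

q_c_x = matmul(matmul(c, x), c)     # Q_c(X) = c X c
lhs = trace_inner(x, q_c_x)         # <X, Q_c(X)>
rhs = trace_inner(x, c) ** 2        # <X, c>^2
print(lhs, rhs)
```

Both sides reduce to \((v^\top X v)^2\) in this setting, so they agree up to rounding error.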

Remark 6.4

It should be noted that the proof of Proposition 3 in [13] is not correct, since equation (14) there does not necessarily hold. Proposition 6.3 above also yields a correct proof of Proposition 3 in [13]; see the computation of \(\langle y , Q_{g^{-2}} (y) \rangle \) in the proof of Proposition 6.5.

Proposition 6.5

Suppose that \({\textrm{ker}} {\mathcal {A}} \cap {\textrm{int}} {\mathcal {K}} \ne \emptyset \) and that, for a given nonempty index set \(H \subseteq \{1 , \dots , r \}\), a Jordan frame \(c_1 , \dots , c_r \), and \(0< \xi < 1\),

holds for any \(x \in F_{\textrm{P}_{S_{\infty }}({\mathcal {A}})}\). Define \(g \in \hbox {int} {\mathcal {K}}\) as \(g := \sqrt{\xi } \sum _{h \in H} c_h + \sum _{h \notin H} c_h\). Then, the following inequality holds:

Proof

For simplicity of discussion, let \(|H|=1\), i.e., \(H =\{i\}\). Let us define the points \(x^*\), \(y^*\), and \({\bar{x}}^*\) as follows:

Note that the feasible region with respect to y is the set of solutions whose norm is 1 after scaling. First, we show that \(\Vert y\Vert _J^2 < 1\), and then \(\det (x^*) > \det (y^*)\). Proposition 2.5 ensures that \(\Vert Q_{g^{-1}} (y)\Vert _J^2 = \langle Q_{g^{-1}} (y) , Q_{g^{-1}} (y) \rangle = \langle y , Q_{g^{-2}} (y) \rangle \). To expand \(Q_{g^{-2}} (y)\), we expand the following equations by letting \(a = \frac{1}{\sqrt{\xi }} - 1 \):

Thus, \(Q_{g^{-2}} (y)\) turns out to be

and hence, we obtain \(\Vert Q_{g^{-1}} (y)\Vert _J^2\) as

where the second equality follows from Proposition 6.3. Here, \(y \in \textrm{int} {\mathcal {K}}\) and \(c_i \in {\mathcal {K}}\) imply that \(\langle y,c_i \rangle >0\), and \(y \circ y = y^2 \in \textrm{int} {\mathcal {K}}\) implies \(\langle y \circ y,c_i \rangle > 0\). Noting that \(a > 0\) and \(\Vert Q_{g^{-1}} (y)\Vert _J^2 = 1\), we see that \(\Vert y\Vert _J^2 < 1\) must hold, and hence \(\frac{1}{\Vert y^*\Vert _J} >1\), which implies that \(\det \left( \frac{1}{\Vert y^*\Vert _J} y^* \right) > \det (y^*)\). Since \(\left\| \frac{1}{\Vert y^*\Vert _J} y^* \right\| _J^2 = 1\) holds, we find that \(\det (x^*) > \det (y^*)\). Next, we describe the lower bound for \(\det (y^*)\) using \(\det ({\bar{x}}^*)\). Since the largest eigenvalue of \({\bar{x}}\) satisfying \(\Vert {\bar{x}} \Vert _J^2 =1\) is less than 1, by Proposition 3.5, we have:

This implies \(\det (y^*) \ge \det \left( Q_g ({\bar{x}}^*) \right) \), and by Proposition 2.5, we have \(\det (y^*) \ge \det (g)^2 \det ({\bar{x}}^*) = \xi ^{|H|} \det ({\bar{x}}^*) = \xi \det ({\bar{x}}^*)\). Thus, \(\det (x^*) > \det (y^*) \ge \xi \det ({\bar{x}}^*)\) holds, and we can conclude that \(\delta ^{\textrm{supposed}} \left( {\textrm{ker}} {\mathcal {A}} \cap \textrm{int} {\mathcal {K}} \right) > \xi \cdot \delta ^{\textrm{supposed}} \left( Q_{g^{-1}} \left( {\textrm{ker}} {\mathcal {A}} \right) \right. \left. \cap \textrm{int} {\mathcal {K}} \right) \). \(\square \)
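The determinant identity \(\det (Q_g({\bar{x}})) = \det (g)^2 \det ({\bar{x}}) = \xi ^{|H|} \det ({\bar{x}})\) used above can be illustrated in the nonnegative orthant, where the Jordan frame is the standard basis, \(Q_g(x)\) acts componentwise as \(g_i^2 x_i\), and the determinant is the product of the entries. This is a minimal sketch under that assumption; the index set `H`, the point `x_bar`, and the value of `xi` are arbitrary illustrative choices.

```python
import math

xi = 0.25            # scaling parameter, 0 < xi < 1
r = 4                # rank of the orthant R^r_+
H = {0, 2}           # hypothetical index set of coordinates to be rescaled

# g = sqrt(xi) * sum_{h in H} c_h + sum_{h not in H} c_h, with c_h the unit vectors.
g = [math.sqrt(xi) if i in H else 1.0 for i in range(r)]

# An arbitrary interior point of the orthant.
x_bar = [0.5, 0.3, 0.7, 0.4]

# In the orthant, Q_g(x) is the componentwise product g^2 * x.
q_g_x = [gi * gi * t for gi, t in zip(g, x_bar)]

det_scaled = math.prod(q_g_x)
det_expected = xi ** len(H) * math.prod(x_bar)
print(det_scaled, det_expected)
```

Each rescaled coordinate contributes one factor \(\xi \), which is where the exponent \(|H|\) comes from.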

Next, using Proposition 6.5, we derive the maximum number of iterations until the proposed method finds \(x \in {\textrm{ker}} \hspace{0.3mm} {\mathcal {A}} \cap \textrm{int} \hspace{0.75mm} {\mathcal {K}}\) by using \(\delta \left( {\textrm{ker}} \hspace{0.3mm} {\mathcal {A}} \cap \textrm{int} \hspace{0.75mm} {\mathcal {K}} \right) \) as in Pena and Soheili’s method.

Proposition 6.6

Suppose that \({\textrm{ker}} {\mathcal {A}} \cap {\textrm{int}} \hspace{0.75mm} {\mathcal {K}} \ne \emptyset \) holds. Algorithm 2 returns \(x \in {\textrm{ker}} {\mathcal {A}} \cap \textrm{int} {\mathcal {K}}\) after at most \(\log _\xi \delta \left( {\textrm{ker}} {\mathcal {A}} \cap \textrm{int} {\mathcal {K}} \right) \) iterations.

Proof

Let \({\textrm{ker}} \bar{{\mathcal {A}}}\) be the linear subspace at the start of k iterations of Algorithm 2 and suppose that \(\delta ^{\textrm{supposed}} \left( {\textrm{ker}} \bar{{\mathcal {A}}} \cap \textrm{int} {\mathcal {K}} \right) = 1/r^{\frac{r}{2}}\) holds. Then, from Proposition 6.5, we find that \(\delta ^{\textrm{supposed}} \left( {\textrm{ker}} {\mathcal {A}} \cap \textrm{int} {\mathcal {K}} \right) > \xi ^k / r^{\frac{r}{2}}\). This implies that \(\delta \left( {\textrm{ker}} {\mathcal {A}} \cap \hbox {int} {\mathcal {K}} \right) > \xi ^k\) since \(\delta \left( {\textrm{ker}} {\mathcal {A}} \cap \hbox {int} {\mathcal {K}} \right) = r^{\frac{r}{2}} \cdot \delta ^{\textrm{supposed}} \left( {\textrm{ker}} {\mathcal {A}} \cap \hbox {int} {\mathcal {K}} \right) \) holds. By taking the logarithm base \(\xi \), we obtain \(\log _\xi \delta \left( {\textrm{ker}} {\mathcal {A}} \cap \hbox {int} {\mathcal {K}} \right) > k\). \(\square \)

From here on, using the above results, we compare the computational complexities of the two methods in the case that \({\mathcal {K}}\) is simple and \({\textrm{ker}} {\mathcal {A}} \cap {\textrm{int}} {\mathcal {K}} \ne \emptyset \) holds. Table 1 summarizes the upper bounds on the number of iterations of the main algorithm (UB#iter) of the two methods and the computational costs required per iteration (CC/iter). As in the previous section, the main algorithm requires \({\mathcal {O}} (m^3+m^2d)\) to compute the projection \({\mathcal {P}}_{\mathcal {A}}\). Here, BP denotes the computational cost of the basic procedure in each method.

The upper bound on the number of iterations of Algorithm 2 is given by \(\log _\xi \delta \left( {\textrm{ker}} {\mathcal {A}} \cap \textrm{int} {\mathcal {K}} \right) = \log _{1.5} \delta \left( {\textrm{ker}} {\mathcal {A}} \cap \textrm{int} {\mathcal {K}} \right) /\log _{1.5} \xi \), where we should note that \(0< \xi < 1\). Since \(0 < \frac{1}{-\log _{1.5} \xi } \le 1\) when \(\xi \le 2/3\), if \(\xi \le 2/3\), then the upper bound on the number of iterations of Algorithm 2 is smaller than that of the main algorithm of Pena and Soheili’s method.

Next, Table 2 summarizes upper bounds on the number of iterations of basic procedures in the proposed method (UB#iter) and Pena and Soheili’s method and the computational cost required per iteration (CC/iter). It shows cases of using the von Neumann scheme and the smooth perceptron in each method (corresponding to Algorithm 1 and Algorithm 5 in the proposed method). As in the previous section, \( C^{\textrm{sd}}\) denotes the computational cost required for spectral decomposition, and \(C^{\min }\) denotes the computational cost required to compute only the minimum eigenvalue and the corresponding primitive idempotent.

Note that by setting \(\xi = (4r)^{-1}\), the upper bounds on the number of iterations of the basic procedure of the two methods are the same. If \(\xi = (4r)^{-1}\), then \(\frac{1}{-\log _{1.5} \xi } = \frac{1}{\log _{1.5} 4r} \le \frac{1}{\log _{1.5} 4} \approx 0.292\), so the upper bound on the number of iterations of Algorithm 2 is less than 0.3 times the upper bound on the number of iterations of the main algorithm of Pena and Soheili’s method; moreover, the larger the value of r is, the smaller the ratio of those bounds becomes. From the discussion in Sect. 6.3, we can assume \({\mathcal {O}}(C^{\textrm{sd}}) = {\mathcal {O}}(C^{\min })\), and Table 2 shows that the proposed method is superior for finding a point \(x \in {\textrm{ker}} \hspace{0.3mm} {\mathcal {A}} \cap \textrm{int} \hspace{0.75mm} {\mathcal {K}}\).
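The ratio \(\frac{1}{-\log _{1.5} \xi }\) discussed above is easy to evaluate for concrete values of \(\xi \). The sketch below assumes, as the change of base in the text suggests, that the reference bound for the main algorithm of Pena and Soheili’s method has the form \(-\log _{1.5} \delta \); the function names are illustrative.

```python
import math

def bound_ratio(xi):
    """Return 1 / (-log_{1.5} xi) for 0 < xi < 1."""
    return math.log(1.5) / -math.log(xi)

def bound_algorithm2(delta, xi):
    """Upper bound log_xi(delta) on the iterations of Algorithm 2."""
    return math.log(delta) / math.log(xi)

def bound_reference(delta):
    """Assumed reference bound -log_{1.5}(delta)."""
    return -math.log(delta) / math.log(1.5)

ratio_two_thirds = bound_ratio(2 / 3)     # threshold case xi = 2/3
ratio_quarter = bound_ratio(1 / 4)        # xi = 1/4, i.e. (4r)^{-1} with r = 1
ratios_by_rank = {r: bound_ratio(1 / (4 * r)) for r in (1, 10, 100)}

print(ratio_two_thirds, ratio_quarter, ratios_by_rank)
```

The ratio equals 1 at \(\xi = 2/3\), is about 0.292 at \(\xi = 1/4\), and decreases as r grows when \(\xi = (4r)^{-1}\).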

6.3 Computational costs of \( C^{\textrm{sd}}\) and \(C^{\min }\)

This section discusses the computational cost required for spectral decomposition \( C^{\textrm{sd}}\) and the computational cost required to compute only the minimum eigenvalue and the corresponding primitive idempotent \(C^{\min }\).

Algorithms for computing eigenvalues are classified into so-called direct and iterative methods, which are briefly described on pp. 139–140 of [4]. (It is also noted there that, strictly speaking, no direct method exists for eigenvalue computation, since finding eigenvalues is mathematically equivalent to finding zeros of polynomials.)

In general, when using a direct method of \({\mathcal {O}}(n^3)\), we see that \( C^{\textrm{sd}}={\mathcal {O}}(n^3)\) and \(C^{\min }={\mathcal {O}}(n^3)\). The Lanczos algorithm is a typical iterative algorithm used for sparse matrices. Each of its iterations computes one matrix-vector product, which costs \({\mathcal {O}}(n^2)\). If the number of iterations needed to obtain a sufficiently accurate solution is constant with respect to the matrix size, then the overall computational cost of the algorithm is \({\mathcal {O}}(n^2)\). Corollary 10.1.3 in [7] discusses the number of iterations that yields sufficient accuracy. It shows that we can expect fewer iterations when the ratio of the gap between the smallest and second smallest eigenvalues to the gap between the second smallest and largest eigenvalues is larger. However, it is generally difficult to assume that this ratio does not depend on the matrix size and is sufficiently large. Thus, even in this case, we cannot take advantage of the condition that we only need the minimum eigenvalue, and we conclude that it is reasonable to consider that \({\mathcal {O}}(C^{\textrm{sd}})={\mathcal {O}}(C^{\min })\).
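The dependence of the iteration count on the eigenvalue gap can be seen in a small experiment. The sketch below does not implement the Lanczos algorithm; it uses plain power iteration on the shifted matrix \(\sigma I - A\) as a simpler stand-in, with one \({\mathcal {O}}(n^2)\) matrix-vector product per iteration. The test matrices, the shift \(\sigma \) (taken as the known largest eigenvalue), and the stopping rule are all illustrative assumptions.

```python
import math

def matvec(a, x):
    """Dense matrix-vector product, the O(n^2) step of each iteration."""
    return [sum(aij * xj for aij, xj in zip(row, x)) for row in a]

def build_symmetric(eigs):
    """Dense symmetric matrix H diag(eigs) H, with H a Householder reflector."""
    n = len(eigs)
    w = [1.0 / math.sqrt(n)] * n
    h = [[(1.0 if i == j else 0.0) - 2.0 * w[i] * w[j] for j in range(n)]
         for i in range(n)]
    return [[sum(h[i][k] * eigs[k] * h[k][j] for k in range(n))
             for j in range(n)] for i in range(n)]

def min_eig_power(a, sigma, tol=1e-8, max_iter=100000):
    """Power iteration on sigma*I - a; returns (min eigenvalue estimate, iterations)."""
    n = len(a)
    x = [float(i + 1) for i in range(n)]
    nrm = math.sqrt(sum(t * t for t in x))
    x = [t / nrm for t in x]
    prev = math.inf
    lam = math.inf
    for k in range(1, max_iter + 1):
        ax = matvec(a, x)
        lam = sum(xi * axi for xi, axi in zip(x, ax))   # Rayleigh quotient
        if abs(prev - lam) < tol:                       # stop when the estimate stalls
            return lam, k
        prev = lam
        y = [sigma * xi - axi for xi, axi in zip(x, ax)]
        nrm = math.sqrt(sum(t * t for t in y))
        x = [t / nrm for t in y]
    return lam, max_iter

eigs_large_gap = [1.0, 2.0, 3.0, 5.0, 10.0]
eigs_small_gap = [1.0, 1.01, 3.0, 5.0, 10.0]
lam_large, iters_large = min_eig_power(build_symmetric(eigs_large_gap), sigma=10.0)
lam_small, iters_small = min_eig_power(build_symmetric(eigs_small_gap), sigma=10.0)
print(iters_large, iters_small)
```

With the smaller gap the same tolerance takes many more iterations, which illustrates why a constant iteration count cannot be assumed in general.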

7 Numerical experiments

7.1 Outline of numerical implementation

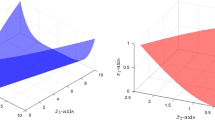

Numerical experiments were performed using the authors’ implementations of the algorithms on a positive semidefinite optimization problem with one positive semidefinite cone \({\mathcal {K}} = {\mathbb {S}}^n_{+}\) of the form

where \({\mathbb {S}}^n_{++}\) denotes the interior of \({\mathcal {K}} = {\mathbb {S}}^n_{+}\). We created strongly feasible ill-conditioned instances, i.e., instances for which \(\hbox {ker} {\mathcal {A}} \cap {\mathbb {S}}^n_{++} \ne \emptyset \) and \(X \in \hbox {ker} {\mathcal {A}} \cap {\mathbb {S}}^n_{++}\) has positive but small eigenvalues. We explain how to generate such instances in Sect. 7.2. In what follows, we refer to Lourenço et al.’s method [10] as Lourenço (2019), and Pena and Soheili’s method [13] as Pena (2017). We set the termination parameter to \(\xi = 1/4\) in our basic procedure. This value was chosen so that the square root of \(\xi \) is not an infinite decimal and so that the upper bound on the number of iterations of the basic procedure does not become too large. We also set the accuracy parameter to \(\varepsilon = 10^{-12}\), both in our main algorithm and in Lourenço (2019), and determined whether \({\textrm{P}_{S_\infty } ({\mathcal {A}})}\) or \({\textrm{P}_{S_1} ({\mathcal {A}})}\) has a solution whose minimum eigenvalue is greater than or equal to \(\varepsilon \). Note that [13] proposed various update methods for the basic procedure. In our numerical experiments, all methods employed the modified von Neumann scheme (Algorithm 4) with the identity matrix as the initial point and the smooth perceptron scheme (Algorithm 5). This implies that the basic procedures used in the three methods differ only in the termination conditions for moving to the main algorithm and that all other steps are the same. All executions were performed using MATLAB R2022a on an Intel(R) Core(TM) i7-6700 CPU @ 3.40GHz machine with 16GB of RAM. Note that we computed the projection \({\mathcal {P}}_{\mathcal {A}}\) using the MATLAB function for the singular value decomposition.
The projection \({\mathcal {P}}_{\mathcal {A}}\) is given by \({\mathcal {P}}_{\mathcal {A}} = I - A^\top (AA^\top )^{-1} A\) using the matrix \(A \in {\mathbb {R}}^{m \times d}\) which represents the linear operator \({\mathcal {A}}(\cdot )\) and the identity matrix I. Here, suppose that the singular value decomposition of the matrix A is given by \( A = U \Sigma V^\top = U (\Sigma _m \ O) V^\top \) where \(U \in {\mathbb {R}}^{m \times m}\) and \(V \in {\mathbb {R}}^{d \times d}\) are orthogonal matrices, and \(\Sigma _m \in {\mathbb {R}}^{m \times m}\) is a diagonal matrix with the m singular values on the diagonal. Substituting this decomposition into \(A^\top (AA^\top )^{-1} A\), we have

\[ A^\top (AA^\top )^{-1} A = V \begin{pmatrix} \Sigma _m \\ O \end{pmatrix} U^\top \left( U \Sigma _m^2 U^\top \right) ^{-1} U (\Sigma _m \ O) V^\top = V \begin{pmatrix} \Sigma _m \\ O \end{pmatrix} \Sigma _m^{-2} (\Sigma _m \ O) V^\top = V \begin{pmatrix} I_m & O \\ O & O \end{pmatrix} V^\top = V_{:, 1:m} V_{:,1:m}^\top , \]

where \(V_{:, 1:m}\) represents the submatrix from column 1 to column m of V. Thus, for any \(x \in {\mathbb {E}}\), we can compute \({\mathcal {P}}_{\mathcal {A}}(x) = x - V_{:, 1:m} V_{:,1:m}^\top x\).
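The formula \({\mathcal {P}}_{\mathcal {A}}(x) = x - V_{:, 1:m} V_{:,1:m}^\top x\) only needs an orthonormal basis of the row space of A. The following dependency-free sketch illustrates this; it is not the authors’ MATLAB code, and it obtains the basis by modified Gram-Schmidt on the rows of A rather than by the singular value decomposition, which spans the same subspace when A has full row rank. The matrix `A` and the vector `x` are arbitrary examples.

```python
import math

def orthonormal_rows(a, tol=1e-12):
    """Modified Gram-Schmidt on the rows of a: orthonormal basis of the row space."""
    basis = []
    for row in a:
        v = list(row)
        for q in basis:
            coeff = sum(vi * qi for vi, qi in zip(v, q))
            v = [vi - coeff * qi for vi, qi in zip(v, q)]
        nrm = math.sqrt(sum(t * t for t in v))
        if nrm > tol:                      # skip numerically dependent rows
            basis.append([t / nrm for t in v])
    return basis

def project_onto_kernel(basis, x):
    """Return x - Q Q^T x, where the rows of `basis` are the columns of Q."""
    out = list(x)
    for q in basis:
        coeff = sum(xi * qi for xi, qi in zip(x, q))
        out = [oi - coeff * qi for oi, qi in zip(out, q)]
    return out

A = [[1.0, 2.0, 0.0, -1.0],
     [0.0, 1.0, 1.0, 3.0]]                # m = 2, d = 4, full row rank
x = [1.0, -2.0, 0.5, 4.0]

Q = orthonormal_rows(A)
p = project_onto_kernel(Q, x)
residual = [sum(aij * pj for aij, pj in zip(row, p)) for row in A]
print(p, residual)
```

The residual \(A p\) vanishes up to rounding error, and projecting p again leaves it unchanged, as expected of an orthogonal projection onto \({\textrm{ker}} {\mathcal {A}}\).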