Abstract

We consider the bound-constrained global optimization of functions with low effective dimensionality, that are constant along an (unknown) linear subspace and only vary over the effective (complement) subspace. We aim to implicitly explore the intrinsic low dimensionality of the constrained landscape using feasible random embeddings, in order to understand and improve the scalability of algorithms for the global optimization of these special-structure problems. A reduced subproblem formulation is investigated that solves the original problem over a random low-dimensional subspace subject to affine constraints, so as to preserve feasibility with respect to the given domain. Under reasonable assumptions, we show that the probability that the reduced problem is successful in solving the original, full-dimensional problem is positive. Furthermore, in the case when the objective’s effective subspace is aligned with the coordinate axes, we provide an asymptotic bound on this success probability that captures its polynomial dependence on the effective and, surprisingly, ambient dimensions. We then propose X-REGO, a generic algorithmic framework that uses multiple random embeddings, solving the above reduced problem repeatedly, approximately and possibly, adaptively. Using the success probability of the reduced subproblems, we prove that X-REGO converges globally, with probability one, and linearly in the number of embeddings, to an \(\epsilon \)-neighbourhood of a constrained global minimizer. Our numerical experiments on special structure functions illustrate our theoretical findings and the improved scalability of X-REGO variants when coupled with state-of-the-art global—and even local—optimization solvers for the subproblems.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

In this paper, we address the bound-constrained global optimization problem

where \(f: {\mathbb {R}}^D \rightarrow {\mathbb {R}}\) is continuous, possibly non-convex and deterministic,Footnote 1 and where, without loss of generality,Footnote 2\({\mathcal {X}} := [-1,1]^D\subseteq {\mathbb {R}}^D\).

In an attempt to alleviate the curse of dimensionality of generic global optimization, we focus on objective functions with ‘low effective dimensionality’ [58], namely, those that only vary over a low-dimensional subspace (which may not necessarily be aligned with standard axes), and remain constant along its orthogonal complement. These functions are also known as objectives with ‘active subspaces’ [14] or ‘multi-ridge’ [25, 55]. They are frequently encountered in applications, typically when tuning (over)parametrized models and processes, such as in hyper-parameter optimization for neural networks [3], heuristic algorithms for combinatorial optimization problems [34], complex engineering and physical simulation problems [14] as in climate modelling [37], and policy search and dynamical system control [26, 61].

When the objective has low effective dimensionality and the effective subspace of variation is known, it is straightforward to cast (P) into a lower-dimensional problem which has the same global minimum \(f^*\) by restricting it to and solving (P) only within this important subspace. Typically, however, the effective subspace is unknown, and random embeddings have been proposed to reduce the size of (P) and hence the cost of its solution, while attempting to preserve the problem’s (original) global minimum values. In this paper, we investigate the following feasible formulation of the reduced randomised problem,

where \({{\varvec{A}}}\) is a \(D\times d\) Gaussian random matrix (see Definition A.1) with \(d \ll D\), and where \({{\varvec{p}}} \in {\mathcal {X}}\) is user-defined and provides additional flexibility that we exploit algorithmically. Our approach needs the following clarification.

Definition 1.1

We say that (\({\mathrm{RP}}{\mathcal {X}}\)) is successful if there exists \({{\varvec{y}}}^* \in {\mathbb {R}}^d\) such that \(f({{\varvec{A}}}{{\varvec{y}}}^*+{{\varvec{p}}}) = f^*\) and \({{\varvec{A}}}{{\varvec{y}}}^* + {{\varvec{p}}} \in {\mathcal {X}}\).

We derive a lower bound on the probability that (\({\mathrm{RP}}{\mathcal {X}}\)) is successful in the case when d lies between \(d_e\) and \(D-d_e\), where \(d_e\) is the dimension of the effective subspace. We show that this success probability is positive and that it depends on both the effective subspace and the ambient dimensions.Footnote 3 However, in the case when the effective subspace is aligned with the coordinate axes, we show that the dependence on D in this lower bound is at worst polynomial. We then propose X-REGO (\({\mathcal {X}}\)-Random Embeddings for Global Optimization), a generic algorithmic framework for solving (P) using multiple random embeddings. Namely, X-REGO solves (\({\mathrm{RP}}{\mathcal {X}}\)) repeatedly with different \({{\varvec{A}}}\) and possibly different \({{\varvec{p}}}\), and can use any global optimization algorithm for solving the reduced problem (\({\mathrm{RP}}{\mathcal {X}}\)). Using the computed lower bound on the probability of success of (\({\mathrm{RP}}{\mathcal {X}}\)), we derive a global convergence result for X-REGO, showing that as the number of random embeddings increases, X-REGO converges linearly, with probability one, to an \(\epsilon \)-neighbourhood of a global minimizer of (P).

Existing relevant literature Optimization of functions with low effective dimensionality has been recently studied primarily as an attempt to remedy the scalability challenges of Bayesian Optimization (BO), such as in [17, 21, 28, 41, 58]. Investigations of these special-structure problems have been extended beyond BO, to derivative-free optimization [48], multi-objective optimization [47] and evolutionary methods [15, 50]. As the effective subspace is generally unknown, some existing approaches learn the effective subspace beforehand [17, 21, 25, 55], while others estimate it during the optimization, updating the estimate as new information becomes available on the objective function [13, 15, 28, 61]. We focus here on an alternative approach, bypassing the subspace learning phase, and optimizing directly over random low-dimensional subspaces, as proposed in [6, 7, 36, 58].

Wang et al. [58] propose the REMBO algorithm, that solves, using Bayesian methods, a single reduced subproblem,

where \({{\varvec{A}}}\) is as above, and \(\delta > 0\). They evaluate the probability that the solution of (RP) corresponds to a solution of the original problem (P) in the case when the effective subspace is aligned with coordinate axes and when \(d=d_e\), where \(d_e\) denotes the dimension of the effective subspace; they show that this probability of success of (RP) depends on the parameter \(\delta \) (the size of the \({\mathcal {Y}}\) box), and it decreases as \(\delta \) shrinks. Conversely, setting \(\delta \) large may result in large computational costs to solve (RP). Thus, a careful calibration of \(\delta \) is needed for good algorithmic performance. The theoretical analysis in [58] has been extended by Sanyang and Kabán [50], where the probability of success of (RP) is quantified in the case \(d \ge d_e\); an algorithm, called REMEDA, is also proposed in [50] that uses Gaussian random embeddings in the framework of evolutionary methods for high-dimensional unconstrained global optimization.

In the recent preprint [9], we further extend these analyses to arbitrary effective subspaces (i.e., not necessarily aligned with the coordinate axes) and random embeddings of dimension \(d\ge d_e\), and consider the wider framework of generic unconstrained high-dimensional global optimization. We propose the REGO algorithm, that replaces the high-dimensional problem (P) (with \({\mathcal {X}} = {\mathbb {R}}^{D})\), by a single reduced problem (RP), and solves (RP) using any global optimization algorithm. Instead of estimating solely the norm of an optimal solution of (RP), as in [50, 58], we derive its exact probability distribution. Furthermore, we show that its squared Euclidean norm (when appropriately scaled) follows an inverse chi-squared distribution with \(d - d_e +1\) degrees of freedom, and use a tail bound on the chi-squared distribution to get a lower bound on the probability of success of (RP). Our theory and numerical experiments indicate that, under suitable assumptions, the success of (RP) is essentially independent on D, but depends mainly on two factors: the gap between the subspace dimension d and the effective dimension \(d_e\), and the ratio between \(\delta \) (the size of the low-dimensional domain), and the distance from the origin (the centre of the original domain \({\mathcal {X}}\)) to the closest affine subspace of global minimizers.

In contrast to [9, 50], the present case of the constrained problem (P) poses a new challenge: a solution \({{\varvec{y^*}}}\) of (RP) is not necessarily feasible for the full-dimensional problem (P) (i.e., \({{\varvec{A}}} {{\varvec{y}}}^* \notin {\mathcal {X}}\)). To remedy this, Wang et al. [58] endow REMBO with an additional step that projects \({{\varvec{A}}} {{\varvec{y}}}^*\) onto \({\mathcal {X}}\). However, the lack of injectivity of the projection may not be beneficial to the proposed Bayesian optimization algorithm as some parts of the domain might be over-explored when using a classical kernel (such as the squared exponential kernel) directly on the low-dimensional domain \({\mathcal {Y}}\). The design of kernels avoiding this over-exploration has been tackled in [6, 7]. Binois et al. [7] further advances the discussion regarding the choice of the low-dimensional domain \({\mathcal {Y}}\) in (RP) and computes an ‘optimal’ set \({\mathcal {Y}}^* \subset {\mathbb {R}}^d\), i.e., a set that has minimum (here, infimum) volume among all the sets \({\mathcal {Y}} \subset {\mathbb {R}}^d\) for which the image of the mapping \({\mathcal {Y}} \rightarrow {\mathcal {X}}: {{\varvec{y}}} \mapsto p_{\mathcal {X}}({{\varvec{A}}} {{\varvec{y}}})\) contains the ‘maximal embedded set’ \(\{ p_{\mathcal {X}}({{\varvec{A}}} {{\varvec{y}}}) : {{\varvec{y}}} \in {\mathbb {R}}^d \}\), where \(p_{{\mathcal {X}}}({{\varvec{x}}})\) is the classical Euclidean projection of \({{\varvec{x}}}\) on \({\mathcal {X}}\). They show that \(\mathcal {Y^*}\) has an intricate representation when the dimension of the full-dimensional problem is large, and propose to replace the Euclidean projection map \(p_{{\mathcal {X}}}\) suggested by Wang et al. [58] by an alternative mapping for which an ‘optimal’ low-dimensional domain has nicer properties. Nayebi et al. [43] circumvent the projection step by replacing the Gaussian random embeddings of (RP) by random embeddings defined using hashing matrices, and choose \({\mathcal {Y}} = [-1,1]^d\). This choice guarantees that any solution of the low-dimensional problem provides an admissible solution for the full-dimensional problem in the case \({\mathcal {X}} = [-1,1]^D\).

The need to combine optimization algorithms that rely on random Gaussian embeddings with a projection step has also been recently discussed in [40], where it is suggested to replace the formulation (RP) by (\({\mathrm{RP}}{\mathcal {X}}\)), that we also consider in this paper. However, Letham et al. [40] do not provide analytical estimates of the probability of success of this new formulation, solely evaluating it numerically using Monte-Carlo simulations; they also do not use multiple random embeddings as proposed in Wang et al. [58] and employed here as well. Our proposed X-REGO algorithmic framework (and more precisely, the adaptive variant A-REGO described in Sect. 5) is closely related to the sequential algorithm proposed by Qian et al. [48], in the framework of unconstrained derivative-free optimization of functions with approximate low-effective dimensionality, and to the algorithm proposed in [36] for constrained Bayesian optimization of functions with low-effective dimension, using one-dimensional random embeddings. However, our results rely on the assumption that the subspace dimension d is larger than the effective dimension \(d_e\), and so our approach significantly differs from [36]. Very recently, Tran-The et al. [54] have proposed an algorithm that uses several low-dimensional (deterministic) embeddings in parallel for Bayesian optimization of high-dimensional functions.

Randomized subspace/projections methods have recently attracted much interest for local or convex optimization problems; see for example, [30, 33, 38, 42, 44, 57]; no low effective dimensionality assumption is made in these works. Finally, we note that the main step in our convergence analysis consists in deriving a lower bound on the probability that a random subspace of given dimension intersects a given set (the set of approximate global minimizers), which is an important problem in stochastic geometry, see, e.g., the extensive discussion by Oymak and Tropp [46]. Unlike the results presented in [46], our results do not involve statistical dimensions of sets, which are unknown and, in our case, problem dependent.

We have recently explored the applicability of X-REGO to global optimization of Lipschitz-continuous objectives, that do not necessarily have low effective dimension, for arbitrary (non-empty) domain \({\mathcal {X}}\) and arbitrary d. We prove in [12] that X-REGO converges almost surely to an \(\epsilon \)-neighbourhood of a (constrained) global minimizer of (P), relying on tools from conic integral geometry to bound the probability of success of the random subproblem. The latter bound is understandably exponential in the big problem dimension and does not provide a distributional result for the size of the reduced minimizer. Even asymptotically, the general success bound is exponential in D while when there is an effective subspace aligned with coordinate axes, the bound here is polynomial. We also address in [12] the unconstrained case when the objective has low effective dimension but the effective dimension \(d_e\) is unknown, and propose a variant of X-REGO that explores random subspaces of increasing dimension until finding the effective dimension of the problem.

Our contributions Here we investigate a general random embedding framework for the bound-constrained global optimization of functions with low effective dimensionality. This framework replaces the original, potentially high-dimensional problem (P) with several reduced and randomized subproblems of the form (\({\mathrm{RP}}{\mathcal {X}}\)), which directly ensures feasibility of the iterates with respect to the constraints.

Using various properties of Gaussian matrices and a useful result from [9], we derive a lower bound on the probability of success of (\({\mathrm{RP}}{\mathcal {X}}\)) when \(d_e \le d \le D-d_e\). To achieve this, we provide a sufficient condition for the success of (\({\mathrm{RP}}{\mathcal {X}}\)) that depends on a random vector \({{\varvec{w}}}\), which in turn, is a function of the embedding matrix \({{\varvec{A}}}\), the parameter \({{\varvec{p}}}\) of (\({\mathrm{RP}}{\mathcal {X}}\)) and an arbitrary global minimizer \({{\varvec{x}}}^*\) of (P). We show that \({{\varvec{w}}}\) follows a \((D-d_e)\)-dimensional t-distribution with \(d - d_e +1\) degrees of freedom, and provide a lower bound on the probability of success of (\({\mathrm{RP}}{\mathcal {X}}\)) in terms of the integral of the probability density function of \({{\varvec{w}}}\) over a given closed domain. In the case when the effective subspace is aligned with the coordinate axes, the closed domain simplifies to a \((D-d_e)\)-dimensional box, and we provide an asymptotic expansion of the integral of the probability density function over the box, when \(D\rightarrow \infty \) (and d and \(d_e\) are fixed). Our theoretical analysis, backed by numerical testing, indicates that the probability of success of (\({\mathrm{RP}}{\mathcal {X}}\)) decreases with the dimension D of the original problem (P). However, in the case when the effective subspace is aligned with the coordinate axes, we show that it decreases at most in a polynomial way with the ambient dimension D for some useful choices of \({{\varvec{p}}}\).

We also propose the X-REGO algorithm, a generic framework for the constrained global optimization problem (P) that sequentially or in parallel solves multiple subproblems (\({\mathrm{RP}}{\mathcal {X}}\)), varying \({{\varvec{A}}}\) and also possibly \({{\varvec{p}}}\). We prove global convergence of X-REGO to a set of approximate global minimizers of (P) with probability one, with linear rate in terms of the number of subproblems solved. This result requires mild assumptions on problem (P) (f is Lipschitz continuous and (P) admits a strictly feasible solution) and on the algorithm used to solve the reduced problem (namely, it must solve (\({\mathrm{RP}}{\mathcal {X}}\)) globally and approximately, to required accuracy), and allows a diverse set of possible choices of \({{\varvec{p}}}\) (random, fixed, adaptive, deterministic). Our convergence proof crucially uses our result that the probability of success of (\({\mathrm{RP}}{\mathcal {X}}\)) is positive and uniformly bounded away from zero with respect to the choice of \({{\varvec{p}}}\), and hence, assumes that \(d_e \le d \le D-d_e\).

We provide an extensive numerical comparison of several variants of X-REGO on a set of test problems with low effective dimensionality, using three different solvers for (\({\mathrm{RP}}{\mathcal {X}}\)), namely, BARON [49], DIRECT [24] and (global and local) KNITRO [8]. We find that X-REGO variants show significantly improved scalability with most solvers, as the ambient problem dimension grows, compared to directly using the respective solvers on the test set. Notable efficiency was obtained in particular when local KNITRO was used to solve the subproblems and the points \({{\varvec{p}}}\) were updated to the ‘best’ point (with the smallest value of f) found so far.

Paper outline In Sect. 2, we recall the definition of functions with low effective dimensionality and some existing results that we will use in our analysis. Section 3 derives lower bounds for the probability of success of (\({\mathrm{RP}}{\mathcal {X}}\)). The X-REGO algorithm and its global convergence are then presented in Sect. 4, while in Sect. 5, different X-REGO variants are compared numerically on benchmark problems using three optimization solvers (DIRECT, BARON and KNITRO) for the subproblems. Our conclusions are drawn in Sect. 6.

Notation We use bold capital letters for matrices (\({{\varvec{A}}}\)) and bold lowercase letters (\({{\varvec{a}}}\)) for vectors. In particular, \({{\varvec{I}}}_D\) is the \(D \times D\) identity matrix and \({{\varvec{0}}}_D\), \({{\varvec{1}}}_D\) (or simply \({{\varvec{0}}}\), \({{\varvec{1}}}\)) are the D-dimensional vectors of zeros and ones, respectively. We write \(a_i\) to denote the ith entry of \({{\varvec{a}}}\) and write \({{\varvec{a}}}_{i:j}\), \(i<j\), for the vector \((a_i \; a_{i+1} \cdots a_{j})^T\). We let \({{\,\mathrm{range}\,}}({{\varvec{A}}})\) denote the linear subspace spanned in \({\mathbb {R}}^D\) by the columns of \({{\varvec{A}}} \in {\mathbb {R}}^{D \times d}\). We write \(\langle \cdot , \cdot \rangle \), \(\Vert \cdot \Vert \) and \(\Vert \cdot \Vert _{\infty }\) for the usual Euclidean inner product, the Euclidean norm and the infinity norm, respectively. Where emphasis is needed, for the Euclidean norm we also use \(\Vert \cdot \Vert _2\).

Given two random variables (vectors) x and y (\({{\varvec{x}}}\) and \({{\varvec{y}}}\)), the expression \(x {\mathop {=}\limits ^{law}} y\) (\({{\varvec{x}}} {\mathop {=}\limits ^{law}} {{\varvec{y}}}\)) means that x and y (\({{\varvec{x}}}\) and \({{\varvec{y}}}\)) have the same distribution. We reserve the letter \({{\varvec{A}}}\) for a \(D\times d\) Gaussian random matrix (see Definition A.1) and write \(\chi ^2_n\) to denote a chi-squared random variable with n degrees of freedom (see Definition A.5).

Given a point \({{\varvec{a}}} \in {\mathbb {R}}^D\) and a set S of points in \({\mathbb {R}}^D\), we write \({{\varvec{a}}}+S\) to denote the set \(\{ {{\varvec{a}}}+{{\varvec{s}}}: {{\varvec{s}}} \in S \}\). Given functions \(f(x):{\mathbb {R}}\rightarrow {\mathbb {R}}\) and \(g(x):{\mathbb {R}}\rightarrow {\mathbb {R}}^+\), we write \(f(x) = \Theta (g(x))\) as \(x \rightarrow \infty \) to denote the fact that there exist positive reals \(M_1,M_2\) and a real number \(x_0\) such that, for all \(x \ge x_0\), \(M_1g(x)\le |f(x)| \le M_2g(x)\).

2 Preliminaries

2.1 Functions with low effective dimensionality

Definition 2.1

(Functions with low effective dimensionality [58]) A function \(f : {\mathbb {R}}^D \rightarrow {\mathbb {R}}\) has effective dimension \(d_e\) if there exists a linear subspace \({\mathcal {T}}\) of dimension \(d_e\) such that for all vectors \({{\varvec{x}}}_{\top }\) in \({\mathcal {T}}\) and \({{\varvec{x}}}_{\perp }\) in \({\mathcal {T}}^{\perp }\) (the orthogonal complement of \({\mathcal {T}}\)), we have

and \(d_e\) is the smallest integer satisfying (2.1).

The linear subspaces \({\mathcal {T}}\) and \({\mathcal {T}}^{\perp }\) are called the effective and constant subspaces of f, respectively. In this paper, we make the following assumption on the function f.

Assumption 2.2

The function \(f:{\mathbb {R}}^D \rightarrow {\mathbb {R}}\) is continuous and has effective dimensionality \(d_e\) such that \(d_e < D\), with effective subspaceFootnote 4\({\mathcal {T}}\) and constant subspace \({\mathcal {T}}^{\perp }\) spanned by the columns of the orthonormal matrices \({{\varvec{U}}} \in {\mathbb {R}}^{D \times d_e}\) and \({{\varvec{V}}} \in {\mathbb {R}}^{D\times (D-d_e)}\), respectively. We let \({{\varvec{x}}}_\top = {{\varvec{U}}} {{\varvec{U}}}^T {{\varvec{x}}}\) and \({{\varvec{x}}}_\perp = {{\varvec{V}}} {{\varvec{V}}}^T {{\varvec{x}}}\), the unique Euclidean projections of any vector \({{\varvec{x}}} \in {\mathbb {R}}^D\) onto \({\mathcal {T}}\) and \({\mathcal {T}}^\perp \), respectively.

We define the set of feasible global minimizers of problem (P),

Note that, for any \({{\varvec{x}}}^* \in G\) with Euclidean projection \({{\varvec{x}}}_\top ^*\) on the effective subspace \({\mathcal {T}}\), and for any \(\tilde{{{\varvec{x}}}} \in {\mathcal {T}}^\perp \), we have

The minimizer \({{\varvec{x}}}_{\top }^*\) may lie outside \({\mathcal {X}}\), and furthermore, there may be multiple points \({{\varvec{x}}}_{\top }^*\) in \({\mathcal {T}}\) satisfying \(f^* = f({{\varvec{x}}}^*_\top )\) as illustrated in [9, Example 1.1]. Thus, the set G is (generally)Footnote 5 a union of (possibly infinitely many) (\(D-d_e\))-dimensional simply-connected polyhedral sets, each corresponding to a particular \({{\varvec{x}}}_{\top }^*\). If \({{\varvec{x}}}_{\top }^*\) is unique, i.e., every global minimizer \({{\varvec{x}}}^* \in G\) has the same Euclidean projection \({{\varvec{x}}}_\top ^*\) on the effective subspace, then G is the \((D-d_e)\)-dimensional set \(\{ {{\varvec{x}}} \in {\mathcal {X}} : {{\varvec{x}}} \in {{\varvec{x}}}_{\top }^* + {\mathcal {T}}^{\perp } \}\).

Definition 2.3

Suppose Assumption 2.2 holds. For any global minimizer \({{\varvec{x}}}^* \in G\), let \(G^* := \{ {{\varvec{x}}} \in {\mathcal {X}} : {{\varvec{x}}} \in {{\varvec{x}}}_{\top }^* + {\mathcal {T}}^{\perp } \}\) be the simply connected subset of G that contains \({{\varvec{x}}}_{\top }^* = {{\varvec{U}}} {{\varvec{U}}}^T {{\varvec{x}}}^*\), and \({\mathcal {G}}^* := \{ {{\varvec{x}}} \in {\mathbb {R}}^D: {{\varvec{x}}} \in {{\varvec{x}}}_{\top }^* + {\mathcal {T}}^{\perp } \}\), the (\(D-d_e\))-dimensional affine subspace that contains \(G^*\).

We can express \(G^* = {\mathcal {G}}^* \cap {\mathcal {X}} = \{{{\varvec{x}}}_{\top }^*+{{\varvec{V}}} {{\varvec{g}}}: -{{\varvec{1}}} \le {{\varvec{x}}}_{\top }^* + {{\varvec{V}}} {{\varvec{g}}} \le {{\varvec{1}}}, {{\varvec{g}}} \in {\mathbb {R}}^{D-d_e} \}\), where \({{\varvec{V}}}\) is defined in Assumption 2.2. For each \(G^*\), we define the corresponding set of “admissible” \((D-d_e)\)-dimensional vectors as

Note that the set \(G^*\) is \((D-d_e)\)-dimensional if and only if the volume of the set \({\bar{G}}^*\) in \({\mathbb {R}}^{D-d_e}\), denoted by \({{\,\mathrm{Vol}\,}}({\bar{G}}^*)\), is non-zero. In some particular cases, when the global minimizer \({{\varvec{x}}}^*\) in Definition 2.3 is on the boundary of \({\mathcal {X}}\), the corresponding simply connected component \(G^*\) may be of dimension strictly lower than \((D-d_e)\) and, hence, \({{\,\mathrm{Vol}\,}}({\bar{G}}^*) = 0\); a case we need to sometimes exclude from our analysis.

Definition 2.4

Let \(G^*\) and \({\bar{G}}^*\) be defined as in Definition 2.3 and (2.4), respectively. We say that \(G^*\) is non-degenerate if \({{\,\mathrm{Vol}\,}}({\bar{G}}^*) > 0\).

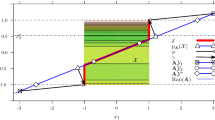

The definitions and assumptions introduced in this section are illustrated next in Fig. 1.

Abstract illustration of the embedding of an affine d-dimensional subspace \({{\varvec{p}}}+{{\,\mathrm{range}\,}}({{\varvec{A}}})\) into \({\mathbb {R}}^D\). The red line represents the feasible set of solutions along \({{\varvec{p}}}+{{\,\mathrm{range}\,}}({{\varvec{A}}})\) and the blue line represents the set \(G^*\). The random subspace intersects \({\mathcal {G}}^*\) at \({{\varvec{x}}}^*\), which is infeasible

Geometric description of the problem Figure 1 sketches the linear mapping \( {{\varvec{y}}} \rightarrow {{\varvec{A}}}{{\varvec{y}}} + {{\varvec{p}}}\) that maps points from \({\mathbb {R}}^d\) to points in the affine subspace \({{\varvec{p}}}+{{\,\mathrm{range}\,}}({{\varvec{A}}})\) in \({\mathbb {R}}^D\). This figure also illustrates the case of a non-degenerate simply-connected component \(G^*\) of global minimizers (blue line; Definition 2.3), which here has dimension \(D-d_e=1\). Degeneracy of \(G^*\) (Definition 2.4) would occur if \({{\varvec{x}}}^*_{\top }\) was a vertex of the domain \({\mathcal {X}}\), in which case the corresponding \(G^*\) would be a singleton.

For (\({\mathrm{RP}}{\mathcal {X}}\)) to be successful in solving the original problem (P), Fig. 1 illustrates it is sufficient that the red line segment (the feasible set of (reduced) solutions in \({\mathbb {R}}^d\) mapped to \({\mathbb {R}}^D\)) intersects the blue line segment (the set \(G^*\)). If \(G^* = G\), this sufficient condition is also necessary, so that (\({\mathrm{RP}}{\mathcal {X}}\)) is not successful; else, we need to check the other simply connected components of G to decide whether (\({\mathrm{RP}}{\mathcal {X}}\)) is successful or not.

Note that, while the blue and red line segments do not intersect in Fig. 1, their prolongations outside \({\mathcal {X}}\) (\({\mathcal {G}}^*\) and \({{\varvec{p}}}+{{\,\mathrm{range}\,}}({{\varvec{A}}})\)) do. This is related to [58, Theorem 2], which says that if the dimension of the embedded subspace (d) is greater than the effective dimension (\(d_e\)) of f then \({\mathcal {G}}^*\) and \({{\varvec{p}}}+{{\,\mathrm{range}\,}}({{\varvec{A}}})\) intersect with probability one. Wang et al. [58] have shown this result for the case \({{\varvec{p}}} = {{\varvec{0}}}\), but it can easily be generalized to arbitrary \({{\varvec{p}}}\). In other words, in the unconstrained setting when \({\mathcal {X}} = {\mathbb {R}}^D\) and \(d \ge d_e\), it is known that the reduced problem (\({\mathrm{RP}}{\mathcal {X}}\)) is successful with probability one [9, 58]: one random embedding typically suffices to solve the original problem (P). The main contribution of this paper is to quantify the probability of the random embedding to contain a feasible solution of the original problem, in the bound-constrained setting. For this, we rely on results of [9] that characterize the distribution of a random reduced minimizer \({{\varvec{y}}}^*\) such that \({{\varvec{A}}}{{\varvec{y}}}^*+{{\varvec{p}}}\) is an (unconstrained) minimizer of f, thus quantifying a specific intersection between the random subspace and \({\mathcal {G}}^*\). We recall these results in Sect. 2.2, and use this characterization in Sect. 3 to derive a lower bound on the probability of \({{\varvec{A}}} {{\varvec{y}}}^* + {{\varvec{p}}}\) to belong to \(G^*\), namely, to be feasible for the original problem (P).

2.2 Characterization of (unconstrained) minimizers in the reduced space

Let \({\mathcal {S}}^* := \{ {{\varvec{y}}}^* \in {\mathbb {R}}^d : {{\varvec{A}}}{{\varvec{y}}}^* + {{\varvec{p}}} \in {\mathcal {G}}^* \}\), with \({\mathcal {G}}^*\) defined in Definition 2.3, be a subset of points \({{\varvec{y}}}^*\) corresponding to solutions of minimizing f over the entire \({\mathbb {R}}^D\). With probability one, \({\mathcal {S}}^*\) is a singleton if \(d = d_e\) and has infinitely many points if \(d>d_e\) [9, Corollary 3.3]. It is sufficient to find one of the reduced minimizers in \({\mathcal {S}}^*\), ideally one that is easy to analyse, and that is close to the origin (i.e., the centre of the domain \({\mathcal {X}}\)) in some norm so as to encourage the feasibility with respect to \({\mathcal {X}}\) of its image through \({{\varvec{A}}}\). An obvious candidate is the minimal Euclidean norm solution,

The following result provides a useful characterization of \({{\varvec{y}}}_2^*\) as the minimum Euclidean norm solution to a random linear system.

Theorem 2.5

[9, Theorem 3.1] Suppose Assumption 2.2 holds. Let \({{\varvec{x}}}^*\) be any global minimizer of (P) with Euclidean projection \({{\varvec{x}}}_\top ^*\) on the effective subspace, and \({{\varvec{p}}}\in {\mathcal {X}}\), a given vector. Let \({{\varvec{A}}}\) be a \(D \times d\) Gaussian matrix, with \(d \ge d_e\). Then \({{\varvec{y}}}_2^{*}\) defined in (2.5) is given by

which is the minimum Euclidean norm solution to the system

where \( {{\varvec{B}}} = {{\varvec{U}}}^T {{\varvec{A}}}\) and \({{\varvec{z}}}^* \in {\mathbb {R}}^{d_e} \) is uniquely defined by

Proof

The full proof is given in the technical report version of this paper, see [11]. \(\square \)

Remark 2.6

Note that \({{\varvec{B}}} = {{\varvec{U}}}^T{{\varvec{A}}}\) is a \(d_e \times d\) Gaussian matrix, since \({{\varvec{U}}}\) has orthonormal columns (see Theorem A.2). Also, (2.8) implies \(\Vert {{\varvec{z}}}^* \Vert _2 = \Vert {{\varvec{x}}}_{\top }^* - {{\varvec{p}}}_{\top }\Vert _2\).

Using (2.6) and various properties of Gaussian matrices, [9] shows that the squared Euclidean norm of \({{\varvec{y}}}_2^*\) follows the (appropriately scaled) inverse chi-squared distribution. We rely on this result in the next section (see the proof of Theorem 3.3) to quantify the probability of success of (\({\mathrm{RP}}{\mathcal {X}}\)), which we will lower bound by the probability that \({{\varvec{A}}} {{\varvec{y}}}_2^* + {{\varvec{p}}} \in {\mathcal {X}}\), i.e., that \({{\varvec{A}}}{{\varvec{y}}}_2^* + {{\varvec{p}}}\), the representation of \({{\varvec{y}}}_2^*\) in the high-dimensional domain, is feasible for (P).

Theorem 2.7

([9, Theorem 3.7]) Suppose Assumption 2.2 holds. Let \({{\varvec{x}}}^*\) be any global minimizer of (P) and \({{\varvec{p}}}\in {\mathcal {X}}\) a given vector, with respective projections \({{\varvec{x}}}_\top ^*\) and \({{\varvec{p}}}_\top \) on the effective subspace. Let \({{\varvec{A}}}\) be a \(D \times d\) Gaussian matrix, with \(d \ge d_e\). Then, \({{\varvec{y}}}^*_2\) defined in (2.5) satisfies

If \({{\varvec{x}}}_{\top }^* = {{\varvec{p}}}_{\top }\), then \({{\varvec{y}}}^*_2 = {{\varvec{0}}}\) .

3 Estimating the success of the reduced problem

This section derives lower bounds on the probability of success of (\({\mathrm{RP}}{\mathcal {X}}\)). Lemma 3.1 lower bounds this probability by that of a non-empty intersection between the random subspace \({{\varvec{p}}}+{{\,\mathrm{range}\,}}({{\varvec{A}}})\) and an arbitrary simply-connected component \(G^*\) of the set of global minimizers (Definition 2.3). This probability is further expressed in Corollary 3.4 in terms of a random vector \({{\varvec{w}}}\) that follows a multivariate t-distribution. From Sect. 3.1 onwards, we derive positive and/or quantifiable lower bounds on the probability of success of (\({\mathrm{RP}}{\mathcal {X}}\)), while also trying to eliminate, wherever possible, the dependency of the lower bounds on the choice of \({{\varvec{p}}}\) and \(G^*\).

Lemma 3.1

Suppose Assumption 2.2 holds. Let \({{\varvec{x}}}^*\) be a(ny) global minimizer of (P), \({{\varvec{p}}}\in {\mathcal {X}}\), a given vector, and \({{\varvec{A}}}\), a \(D \times d\) Gaussian matrix with \(d \ge d_e\). Let \({{\varvec{y}}}_2^*\) be defined in (2.5). The reduced problem (\({\mathrm{RP}}{\mathcal {X}}\)) is successful in the sense of Definition 1.1 if \({{\varvec{A}}}{{\varvec{y}}}_2^*+{{\varvec{p}}} \in {\mathcal {X}}\), namely

Proof

This is an immediate consequence of Definition 1.1 and (2.5), as the latter implies \({{\varvec{A}}} {{\varvec{y}}}_2^* + {{\varvec{p}}} \in {\mathcal {G}}^*\) and so \(f({{\varvec{A}}} {{\varvec{y}}}_2^* + {{\varvec{p}}}) = f^*\). \(\square \)

Let us further express (3.1) as follows. Let \({{\varvec{Q}}} = ({{\varvec{U}}} \; {{\varvec{V}}})\), where \({{\varvec{U}}}\) and \({{\varvec{V}}}\) are defined in Assumption 2.2. Since \({{\varvec{Q}}}\) is orthogonal, we have

Using (2.6), we get \({{\varvec{U}}}^T{{\varvec{A}}} {{\varvec{y}}}_2^* = {{\varvec{z}}}^*\). Letting

we get

where in the last equality, we used (2.8). By substituting \({{\varvec{p}}} = {{\varvec{p}}}_{\top }+{{\varvec{p}}}_{\perp }\) and (3.4) in (3.1), we obtain

According to this derivation, all the randomness within the lower bound (3.5) is contained in the random vector \({{\varvec{w}}}\). The next theorem, derived in Appendix B, provides the probability density function of this random vector.

Remark 3.2

Suppose that Assumption 2.2 holds and recall (2.2). If there exists \({{\varvec{x}}}^*\in G\) such that \({{\varvec{x}}}_{\top }^* = {{\varvec{p}}}_{\top }\), where the subscript represents the respective Euclidean projections on the effective subspace, then \(f({{\varvec{p}}}) = f({{\varvec{p}}}_{\top }+{{\varvec{p}}}_{\perp }) = f({{\varvec{x}}}_{\top }^* + {{\varvec{p}}}_{\perp }) = f^*\), where \({{\varvec{p}}}_{\perp }\) is the Euclidean projection of \({{\varvec{p}}}\) on the constant subspace \({\mathcal {T}}^{\perp }\) of the objective function. Thus \({{\varvec{p}}}\in G\) so that, for any embedding \({{\varvec{A}}}\), (\({\mathrm{RP}}{\mathcal {X}}\)) is successful with the trivial solution \({{\varvec{y}}}^* = {{\varvec{0}}}\). Therefore, in our next result, without loss of generality, we make the assumption \({{\varvec{x}}}_{\top }^* \ne {{\varvec{p}}}_{\top }\).

Theorem 3.3

(The p.d.f. of \({{\varvec{w}}}\)) Suppose that Assumption 2.2 holds. Let \({{\varvec{x}}}^*\) be a(ny) global minimizer of (P), \({{\varvec{p}}}\in {\mathcal {X}}\), a given vector, and \({{\varvec{A}}}\), a \(D \times d\) Gaussian matrix with \(d_e \le d \le D-d_e\). Assume that \({{\varvec{p}}}_\top \ne {{\varvec{x}}}_\top ^*\), where the subscript represents the Euclidean projection on the effective subspace. The random vector \({{\varvec{w}}}\) defined in (3.3) follows a \((D-d_e)\)-dimensional t-distribution with parameters \(d-d_e+1\) and \(\frac{\Vert {{\varvec{x}}}_{\top }^* - {{\varvec{p}}}_{\top }\Vert ^2}{d-d_e+1}{{\varvec{I}}}\), and with p.d.f. \(g({{\varvec{{\bar{w}}}}})\) given by

where \(m = D-d_e\) and \(n = d-d_e+1\).

Proof

See Appendix B. \(\square \)

Let us now summarize the above analysis in the following corollary.

Corollary 3.4

Suppose that Assumption 2.2 holds. Let \({{\varvec{x}}}^*\) be a(ny) global minimizer of (P), \({{\varvec{p}}}\in {\mathcal {X}}\), a given vector, and \({{\varvec{A}}}\), a \(D \times d\) Gaussian matrix with \(d_e \le d \le D-d_e\). Assume that \({{\varvec{p}}}_\top \ne {{\varvec{x}}}_\top ^*\), where the subscript represents the Euclidean projection on the effective subspace. Then

where \({{\varvec{w}}}\) is a random vector that follows a \((D-d_e)\)-dimensional t-distribution with parameters \(d-d_e+1\) and \(\frac{\Vert {{\varvec{x}}}_{\top }^* - {{\varvec{p}}}_{\top }\Vert ^2}{d-d_e+1}{{\varvec{I}}}\).

Proof

The result follows from derivations (3.1)–(3.5) and Theorem 3.3. \(\square \)

Here is a roadmap for the results in the remainder of this section. We aim to answer the following two questions:

-

Is the probability of success of (\({\mathrm{RP}}{\mathcal {X}}\)) positive for any \({{\varvec{p}}}\)? In other words: Does the random subspace \(\mathrm {range}({{\varvec{A}}}) + {{\varvec{p}}}\) intersect a subspace of (unconstrained) global minimizers of (P) inside \({\mathcal {X}}\) with positive probability for all \({{\varvec{p}}}\)? Answering this question allows to quantify the number of random embeddings required for finding a global minimizer of (P) when \({{\varvec{p}}}\) is chosen arbitrarily and kept fixed (if several embeddings are used, they all share the same \({{\varvec{p}}}\)). We show that this question can be answered positively, and provide a lower bound on the probability of success of (\({\mathrm{RP}}{\mathcal {X}}\)) that depends on \({{\varvec{p}}}\) in Theorem 3.6.

-

Furthermore, can we derive a positive lower bound on the probability of success of (\({\mathrm{RP}}{\mathcal {X}}\)) that does not depend on \({{\varvec{p}}}\)? Ideally, we aim to prove convergence of X-REGO irrespectively of the choice of \({{\varvec{p}}}\). Indeed, it could be useful algorithmically to let \({{\varvec{p}}}\) vary from one random embedding to another, for example by setting \({{\varvec{p}}}\) to the best point found so far as proposed in Sect. 4. In the specific case where the effective subspace is aligned with the coordinate axes, we provide in Theorem 3.8 a lower bound that is independent of \({{\varvec{p}}}\), and asymptotically quantify its dependence on D, \(d_e\) and d. Deriving such a bound for arbitrary effective subspaces seems, unfortunately, too challenging; we bypass this difficulty by assuming Lipschitz-continuity of f and providing in Theorem 3.15 a uniform lower bound on the probability of \(\epsilon \)-success of (\({\mathrm{RP}}{\mathcal {X}}\)), a weaker condition that requires the random subspace to contain an \(\epsilon \)-minimizer, i.e., a feasible point \({{\varvec{x}}}\) such that \(f(x) \le f^*+ \epsilon \). This key result will allow us to prove almost sure convergence of X-REGO to an \(\epsilon \)-minimizer in Sect. 4, for any choice of \({{\varvec{p}}} \in {\mathcal {X}}\), including adaptive ones.

3.1 Positive probability of success of the reduced problem (\({\mathrm{RP}}{\mathcal {X}}\))

To prove that the probability of success of (\({\mathrm{RP}}{\mathcal {X}}\)) is strictly positive, we need the following additional assumption, that says that the function has low effective dimension and there exists a set of feasible optimal solutions of (P) that is “large enough”, i.e., of dimension at least \(D-d_e\); a counter-example of this assumption would be a case when the feasible global minimizers of (P) are isolated (for an illustration, translate the set \({\mathcal {G}}^*\) in Fig. 1 to the upper right so that the blue line segment shrinks to the upper right corner of the box).

Assumption 3.5

Assume that Assumption 2.2 holds, and that there is a set \(G^*\) defined in Definition 2.3 that is non-degenerate according to Definition 2.4.

The next result then proves that for all \({{\varvec{p}}}\), with a strictly positive probability, the random embedding contains a feasible global minimizer of (P).

Theorem 3.6

Suppose that Assumption 3.5 holds, and let \({{\varvec{A}}}\) be a \(D \times d\) Gaussian matrix with \(d_e \le d \le D-d_e\). Then, for any \({{\varvec{p}}} \in {\mathcal {X}}\),

Proof

We consider two cases, \({{\varvec{p}}}\in G\) and \({{\varvec{p}}} \in {\mathcal {X}}\setminus G\). Firstly, assume that \({{\varvec{p}}} \in G\). Then, \( {{\,\mathrm{{\mathbb {P}}}\,}}[({{\mathrm{RP}}{\mathcal {X}}}) \,\text {is successful}\,] = 1\) since taking \({{\varvec{y}}} = {{\varvec{0}}}\) in (\({\mathrm{RP}}{\mathcal {X}}\)) yields \(f({{\varvec{p}}}) = f^*\).

Assume now that \({{\varvec{p}}}\in {\mathcal {X}}\setminus G\). Assumption 3.5 implies that there exists a global minimizer \({{\varvec{x}}}^*\) and associated \(G^*\) for which \({{\,\mathrm{Vol}\,}}({\bar{G}}^*) > 0\), where \(G^*\) and \({\bar{G}}^*\) are defined in Definition 2.3 and (2.4), respectively. Using (3.7) with this particular \({{\varvec{x}}}^*\) and noting that \({{\varvec{p}}}_{\perp } = {{\varvec{V}}}{{\varvec{V}}}^T{{\varvec{p}}}\) gives us

where \(g({{\varvec{{\bar{w}}}}})\) is the p.d.f. of \({{\varvec{w}}}\) given in (3.6). The latter integral is positive since \(g({{\varvec{{\bar{w}}}}}) > 0\) for any \({{\varvec{{\bar{w}}}}} \in {\mathbb {R}}^{D-d_e}\) and since \({{\,\mathrm{Vol}\,}}(-{{\varvec{V}}}^T{{\varvec{p}}}+{\bar{G}}^*) = {{\,\mathrm{Vol}\,}}({\bar{G}}^*) > 0\) (invariance of volumes under translations) by Assumption 3.5. \(\square \)

Note that the proof of Theorem 3.6 illustrates that the success probability of (\({\mathrm{RP}}{\mathcal {X}}\)), though positive, depends on the choice of \({{\varvec{p}}}\).Footnote 6 Next, under additional problem assumptions, we derive lower bounds on the success probability of (\({\mathrm{RP}}{\mathcal {X}}\)) that are independent of \({{\varvec{p}}}\) and/or quantifiable.

3.2 Quantifying the success probability of (\({\mathrm{RP}}{\mathcal {X}}\)) in the special case of coordinate-aligned effective subspace

Provided the effective subspace \({\mathcal {T}}\) is aligned with coordinate axes and without loss of generality, we can write the orthonormal matrices \({{\varvec{U}}}\) and \({{\varvec{V}}}\), whose columns span \({\mathcal {T}}\) and \({\mathcal {T}}^\perp \), as \({{\varvec{U}}} = [{{\varvec{I}}}_{d_e} \; {{\varvec{0}}}]^T\) and \({{\varvec{V}}} = [{{\varvec{0}}} \; {{\varvec{I}}}_{D-d_e}]^T\). The following result quantifies the probability of the random embedding to contain a feasible global solution of (P) in this case, in terms of the cumulative distribution function of \({{\varvec{w}}}\).

Theorem 3.7

Let Assumption 2.2 hold with \({{\varvec{U}}} = [{{\varvec{I}}}_{d_e} \; {{\varvec{0}}}]^T\) and \({{\varvec{V}}} = [{{\varvec{0}}} \; {{\varvec{I}}}_{D-d_e}]^T\). Let \({{\varvec{x}}}^*\) be a(ny) global minimizer of (P), \({{\varvec{p}}}\in {\mathcal {X}}\), a given vector, and \({{\varvec{A}}}\), a \(D \times d\) Gaussian matrix with \(d_e \le d \le D-d_e\). Assume that \({{\varvec{p}}}_\top \ne {{\varvec{x}}}_\top ^*\), where the subscript represents the Euclidean projection on the effective subspace. Then

where \({{\varvec{w}}}\) is a random vector that follows a \((D-d_e)\)-dimensional t-distribution with parameters \(d-d_e+1\) and \(\frac{\Vert {{\varvec{x}}}_{\top }^* - {{\varvec{p}}}_{\top }\Vert ^2}{d-d_e+1}{{\varvec{I}}}\).

Proof

For \({{\varvec{x^*}}}\in G^*\), we have

Furthermore,

Note that \({{\varvec{x}}}^* \in [-1,1]^{D}\) implies that \({{\varvec{x}}}^*_{1:d_e} \in [-1,1]^{d_e}\). Corollary 3.4 then yields

which immediately gives (3.10). \(\square \)

Note that the right-hand side of (3.10) can be written as the integral of the p.d.f. of \({{\varvec{w}}}\) over the hyperrectangular region \(-{{\varvec{1}}} - {{\varvec{p}}}_{d_e+1:D} \le {{\varvec{w}}} \le {{\varvec{1}}} - {{\varvec{p}}}_{d_e+1:D} \). Instead of directly computing this integral, we analyse its asymptotic behaviour for large D, assuming that \(d_e\) and d are fixed. We obtain the following main result, with its proof provided in Appendix C.

Theorem 3.8

Let Assumption 2.2 hold with \({{\varvec{U}}} = [{{\varvec{I}}}_{d_e} \; {{\varvec{0}}}]^T\) and \({{\varvec{V}}} = [{{\varvec{0}}} \; {{\varvec{I}}}_{D-d_e}]^T\). Let \(d_e\) and d be fixed, with \(d_e \le d \le D-d_e\), and let \({{\varvec{A}}}\) be a \(D \times d\) Gaussian matrix. For all \({{\varvec{p}}} \in {\mathcal {X}}\), we have

where \(\tau \) satisfies

and the constants in \(\Theta (\cdot )\) depend only on \(d_e\) and d.

Proof

See Appendix C. \(\square \)

The next result shows that, in the particular case when \({{\varvec{p}}} = {{\varvec{0}}}\), the center of the full-dimensional domain \({\mathcal {X}}\), the probability of success decreases at worst in a polynomial wayFootnote 7 with the ambient dimension D.

Theorem 3.9

Let Assumption 2.2 hold with \({{\varvec{U}}} = [{{\varvec{I}}}_{d_e} \; {{\varvec{0}}}]^T\) and \({{\varvec{V}}} = [{{\varvec{0}}} \; {{\varvec{I}}}_{D-d_e}]^T\). Let \(d_e\) and d be fixed, with \(d_e \le d \le D-d_e\), and let \({{\varvec{A}}}\) be a \(D \times d\) Gaussian matrix. Let \({{\varvec{p}}} = {{\varvec{0}}}\). Then

where

and where the constants in \(\Theta (\cdot )\) depend only on \(d_e\) and d.

Proof

See Appendix C. \(\square \)

Remark 3.10

Unlike Theorem 3.6, the above result does not require Assumption 3.5. In this specific case, as the effective subspace is aligned with the coordinate axes, Assumption 3.5 is satisfied. The latter follows from \({{\bar{G}}}^* = \{ {{\varvec{g}}} \in {\mathbb {R}}^{D-d_e} : -{{\varvec{1}}} \le {{\varvec{x}}}_\top ^* + {{\varvec{V}}} {{\varvec{g}}} \le {{\varvec{1}}}\} = \{ {{\varvec{g}}} \in [-1,1]^{D-d_e}\}\), as \({{\varvec{V}}} = [{{\varvec{0}}} \; {{\varvec{I}}}_{D-d_e}]^T\) and the last \(D-d_e\) components of the vector \({{\varvec{x}}}_\top ^*\) are zero; see the proof of Theorem 3.7.

Remark 3.11

We note that (3.10) holds with equality if \(d=d_e\) and \(G = G^*\). Our numerical experiments in Sect. 5 also clearly illustrate that the success probability decreases with growing problem dimension D.

Remark 3.12

Our particular choice of asymptotic framework here is due to its practicality as well as to the ready-at-hand analysis of a similar integral to (C.2) in [60]. The scenario (\(d_e\) and d fixed, D large) is a familiar one in practice, where commonly, \(d_e\) is small compared to D, and d is limited by computational resources available to solve the reduced subproblem. Other asymptotic frameworks that could be considered in the future are \(d_e = O(1)\), \(d = O(\log (D))\) or \(d_e = O(1)\), \(d = \beta D\) where \(\beta \) is fixed. For more details on how to obtain asymptotic expansions similar to (3.13) and (3.15) for such choices of \(d_e\) and d, refer to [53, 60].

3.3 Uniformly positive lower bound on the success probability of (\({\mathrm{RP}}{\mathcal {X}}\)) in the general case

As mentioned in the last paragraph of Sect. 3.1, it is difficult to derive a uniformly positive lower bound on the probability of success of (\({\mathrm{RP}}{\mathcal {X}}\)) that does not depend on \({{\varvec{p}}}\). However, assuming Lipschitz continuity of the objective function, we are able to achieve such a guarantee for (\({\mathrm{RP}}{\mathcal {X}}\)) to be approximately successful, a weaker notion that is defined as follows.

Definition 3.13

For a(ny) \(\epsilon >0\), we say that (\({\mathrm{RP}}{\mathcal {X}}\)) is \(\epsilon \)-successful if there exists \({{\varvec{y}}}^* \in {\mathbb {R}}^d\) such that \(f({{\varvec{A}}}{{\varvec{y}}}^*+{{\varvec{p}}}) \le f^* + \epsilon \) and \({{\varvec{A}}}{{\varvec{y}}}^* + {{\varvec{p}}} \in {\mathcal {X}}\).

Let

be the set of feasible \(\epsilon \)-minimizers. The reduced problem (\({\mathrm{RP}}{\mathcal {X}}\)) is thus \(\epsilon \)-successful if it contains a feasible \(\epsilon \)-minimizer.

Assumption 3.14

The objective function \(f : {\mathbb {R}}^D \rightarrow {\mathbb {R}}\) is Lipschitz continuous with Lipschitz constant L, that is, \(|f({{\varvec{x}}}) - f({{\varvec{y}}})| \le L\Vert {{\varvec{x}}} - {{\varvec{y}}} \Vert _2\) for all \({{\varvec{x}}}\) and \({{\varvec{y}}}\) in \({\mathbb {R}}^D\).

The next theorem shows that the probability that (\({\mathrm{RP}}{\mathcal {X}}\)) is \(\epsilon \)-successful is uniformly bounded away from zero for all \({{\varvec{p}}} \in {\mathcal {X}}\).

Theorem 3.15

Suppose that Assumption 3.5 and Assumption 3.14 hold. Let \({{\varvec{A}}}\) be a \(D \times d\) Gaussian matrix with \(d_e \le d \le D-d_e\) and \(\epsilon >0\), an accuracy tolerance. Then there exists a constant \(\tau _{\epsilon } > 0\) such that, for all \({{\varvec{p}}} \in {\mathcal {X}}\),

Proof

Assumption 3.5 implies that there exists a global minimizer \({{\varvec{x}}}^* \in {\mathcal {X}}\), with corresponding sets \(G^*\) (Definition 2.3) and \({\bar{G}}^*\) in (2.4) such that \({{\,\mathrm{Vol}\,}}({\bar{G}}^*) > 0\). Let \(N_{\eta }(G^*):= \{ {{\varvec{x}}} \in {\mathcal {X}}: \Vert {{\varvec{x}}}_{\top }^* - {{\varvec{U}}}{{\varvec{U}}}^T {{\varvec{x}}}\Vert _2 \le \eta \}\) be a neighbourhood of \(G^*\) in \({\mathcal {X}}\), for some \(\eta > 0\), where as usual, \({{\varvec{x}}}_{\top }^* = {{\varvec{U}}} {{\varvec{U}}}^T {{\varvec{x}}}^*\) is the Euclidean projection of \({{\varvec{x}}}^*\) on the effective subspace.

Firstly, assume that \({{\varvec{p}}} \in N_{\epsilon /L}(G^*)\). Then, \(\Vert {{\varvec{x}}}_{\top }^* - {{\varvec{p}}}_{\top } \Vert \le \epsilon /L\), and by Assumption 3.14, \(|f({{\varvec{p}}}) - f^*| = |f({{\varvec{p}}}_{\top }) - f({{\varvec{x}}}_{\top }^*)| \le L\Vert {{\varvec{x}}}_{\top }^* - {{\varvec{p}}}_{\top } \Vert \le \epsilon \). Thus \({{\varvec{p}}} \in G_{\epsilon }\) and, hence, \({{\,\mathrm{{\mathbb {P}}}\,}}[({\mathrm{RP}}{\mathcal {X}}) \ \text {is}\,\epsilon \text {-successful}] = 1\).

Otherwise, \({{\varvec{p}}}\in {\mathcal {X}}\setminus N_{\epsilon /L}(G^*)\). Using the proof of Theorem 3.6, we have

where \(g({{\varvec{{\bar{w}}}}})\) is the p.d.f. of \({{\varvec{w}}}\) given by (3.6), and where the first inequality is due to the fact that (\({\mathrm{RP}}{\mathcal {X}}\)) being successful implies that (\({\mathrm{RP}}{\mathcal {X}}\)) is \(\epsilon \)-successful (by letting \(\epsilon := 0\) in Definition 3.13). To prove (3.17), it is thus sufficient to lower bound \(g({{\varvec{{\bar{w}}}}})\) by a positive constant, independent of \({{\varvec{p}}}\). Since \({{\varvec{p}}} \notin N_{\epsilon /L}(G^*)\), we have

where the last inequality follows from \(\Vert {{\varvec{U}}}{{\varvec{U}}}^T\Vert _2 = 1\), since \({{\varvec{U}}}\) has orthonormal columns, and from \(-{{\varvec{2}}} \le {{\varvec{x}}}^* - {{\varvec{p}}} \le {{\varvec{2}}}\) since \({{\varvec{x}}}^*,{{\varvec{p}}} \in [-1,1]^D\). Furthermore, note that, for any \({{\varvec{{\bar{w}}}}} \in -{{\varvec{V}}}^T {{\varvec{p}}} + {\bar{G}}^*\), we have

and, hence,

where the last inequality follows from \(\Vert {{\varvec{U}}}{{\varvec{U}}}^T\Vert _2 = 1\) and \(\Vert {{\varvec{V}}}{{\varvec{V}}}^T\Vert _2 = 1\) (as \({{\varvec{U}}}\) and \({{\varvec{V}}}\) are orthonormal) and from \({{\varvec{x}}}^*, {{\varvec{p}}} \in [-1,1]^D\). Thus,

By combining (3.6), (3.19) and (3.20), we finally obtain

where \(C(m,n) = \Gamma ((m+n)/2)/(\pi ^{m/2}\Gamma (n/2))\) and where in the last equality we used the fact \({{\,\mathrm{Vol}\,}}(-{{\varvec{V}}}^T{{\varvec{p}}}+{\bar{G}}^*) = {{\,\mathrm{Vol}\,}}({\bar{G}}^*)\) for any \({{\varvec{p}}} \in {\mathbb {R}}^D\) (invariance of volumes under translations). The result follows from the assumption that \({{\,\mathrm{Vol}\,}}({\bar{G}}^*)~>~0\). \(\square \)

4 The X-REGO algorithm and its global convergence

In the case of random embeddings for unconstrained global optimization, the success probability of the reduced problem is independent of the ambient dimension [9]. However, in the constrained case of problem (P), the analysis in Sect. 3 shows that the probability of success of the reduced problem (\({\mathrm{RP}}{\mathcal {X}}\)) decreases with D. It is thus imperative in any algorithm that uses feasible random embeddings in order to solve (P) to allow multiple such subspaces to be explored, and it is practically important to find out what are efficient and theoretically-sound ways to choose these subspaces iteratively. This is the aim of our generic and flexible algorithmic framework, X-REGO (Algorithm 1). Furthermore, as an additional level of generality and practicality, we allow the reduced, random subproblem to be solved stochastically, so that a sufficiently accurate global solution of this problem is only guaranteed with a certain probability. This covers the obvious case when a (convergent) stochastic global optimization algorithm would be employed to solve the reduced subproblem, but also when a deterministic global solver is used but may sometimes fail to find the required solution due to a limited computational budget, processor failure and so on.

In X-REGO, for \(k\ge 1\), the kth embedding is determined by a realization \(\tilde{{{\varvec{A}}}}^k = {{\varvec{A}}}^k({{\varvec{\omega }}}^k)\) of the random Gaussian matrix \({{\varvec{A}}}^k\), and it is drawn at the point \(\tilde{{{\varvec{p}}}}^{k-1} = {{\varvec{p}}}^{k-1}({{\varvec{\omega }}}^{k-1}) \in {\mathcal {X}}\), a realization of the random variable \({{\varvec{p}}}^{k-1}\) (which, without loss of generality, includes the case of deterministic choices by writing \({{\varvec{p}}}^{k-1}\) as a random variable with support equal to a singleton).

X-REGO can be seen as a stochastic process, so that in addition to \(\tilde{{{\varvec{p}}}}^k\) and \(\tilde{{{\varvec{A}}}}^k\), each algorithm realization provides sequences \(\tilde{{{\varvec{x}}}}^k = {{\varvec{x}}}^k({{\varvec{\omega }}}^k)\), \(\tilde{{{\varvec{y}}}}^k = {{\varvec{y}}}^k({{\varvec{\omega }}}^k)\) and \({\tilde{f}}_{min}^k = f_{min}^k({{\varvec{\omega }}}^k)\), for \(k \ge 1\), that are realizations of the random variables \({{\varvec{x}}}^k\), \({{\varvec{y}}}^k\) and \(f_{min}^k\), respectively. Each iteration of X-REGO solves—approximately and possibly, with a certain probability—a realization (\(\widetilde{\text {RP}{\mathcal {X}}^k}\)) of the random problem

To calculate \(\tilde{{{\varvec{y}}}}^k\), (\(\widetilde{\text {RP}{\mathcal {X}}^k}\)) may be solved to some required accuracy using a deterministic global optimization algorithm that is allowed to fail with a certain probability; or employing a stochastic algorithm, so that \(\tilde{{{\varvec{y}}}}^k\) is only guaranteed to be an approximate global minimizer of (\(\widetilde{\text {RP}{\mathcal {X}}^k}\)) (at least) with a certain probability.

Several variants of X-REGO can be obtained by specific choices of the random variable \({{\varvec{p}}}^k\) (assumed throughout the paper to have support contained in \({\mathcal {X}}\)). A first possibility consists in simply defining \({{\varvec{p}}}^k\) as a random variable with support \(\{{{\varvec{0}}}\}\), so that \(\tilde{{{\varvec{p}}}}^{k} = {{\varvec{0}}}\) for all k. It is also possible to preserve the progress achieved so far by defining \({{\varvec{p}}}^k = {{\varvec{x}}}_{opt}^k\), where

the random variable corresponding to the best point found over the k first embeddings. We compare numerically several choices of \({{\varvec{p}}}\) on benchmark functions in Sect. 5.

The termination in Line 2 could be set to a given maximum number of embeddings, or could check that no significant progress in decreasing the objective function has been achieved over the last few embeddings, compared to the value \(f(\tilde{{{\varvec{x}}}}^k_{opt})\). For generality, we leave it unspecified here.

4.1 Global convergence of the X-REGO algorithm to the set of global \(\epsilon \)-minimizers

For a(ny) given tolerance \(\epsilon >0\), let \(G_\epsilon \) be the set of approximate global minimizers of (P) defined in (3.16). We show that \({{\varvec{x}}}_{opt}^k\) in (4.2) converges to \(G_{\epsilon }\) almost surely as \(k \rightarrow \infty \) (see Theorem 4.7).

Intuitively, our proof relies on the fact that any vector \(\tilde{{{\varvec{x}}}}^k\) defined in (4.1) belongs to \(G_\epsilon \) if the following two conditions hold simultaneously: (a) the reduced problem (\(\text {RP}{\mathcal {X}}^k\)) is \((\epsilon - \lambda )\)-successful in the sense of Definition 3.13,Footnote 8 namely,

(b) the reduced problem (\(\widetilde{\text {RP}{\mathcal {X}}^k}\)) is solved (by a deterministic/stochastic algorithm) to an accuracy \(\lambda \in (0,\epsilon )\) in the objective function value, namely,

holds (at least) with a certain probability. We introduce two additional random variables that capture the conditions in (a) and (b) above,

where \(\mathbbm {1}\) is the usual indicator function for an event.

Let \({\mathcal {F}}^k = \sigma ({{\varvec{A}}}^1, \dots , {{\varvec{A}}}^k, {{\varvec{y}}}^1, \dots , {{\varvec{y}}}^k, {{\varvec{p}}}^0, \dots , {{\varvec{p}}}^k)\) be the \(\sigma \)-algebra generated by the random variables \({{\varvec{A}}}^1, \dots , {{\varvec{A}}}^k, {{\varvec{y}}}^1, \dots , {{\varvec{y}}}^k, {{\varvec{p}}}^0, \dots , {{\varvec{p}}}^k\) (a mathematical concept that represents the history of the X-REGO algorithm as well as its randomness until the kth embedding),Footnote 9 with \({\mathcal {F}}^0 = \sigma ({{\varvec{p}}}^0)\). We also construct an ‘intermediate’ \(\sigma \)-algebra, namely,

with \({\mathcal {F}}^{1/2} = \sigma ({{\varvec{p}}}^0, {{\varvec{A}}}^{1})\). Note that \({{\varvec{x}}}^k\), \(R^k\) and \(S^k\) are \({\mathcal {F}}^{k}\)-measurable,Footnote 10 and \(R^k\) is also \({\mathcal {F}}^{k-1/2}\)-measurable; thus they are well-defined random variables.

Remark 4.1

The random variables \({{\varvec{A}}}^1, \dots , {{\varvec{A}}}^k\), \({{\varvec{y}}}^1, \dots , {{\varvec{y}}}^k\), \({{\varvec{x}}}^1, \dots , {{\varvec{x}}}^k\), \({{\varvec{p}}}^0, {{\varvec{p}}}^1, \dots , {{\varvec{p}}}^k\), \(R^1\), \(\dots \), \(R^k\), \(S^1, \dots , S^{k}\) are \({\mathcal {F}}^{k}\)-measurable since \({\mathcal {F}}^0 \subseteq {\mathcal {F}}^1 \subseteq \cdots \subseteq {\mathcal {F}}^{k}\). Also, \({{\varvec{A}}}^1, \dots , {{\varvec{A}}}^k\), \({{\varvec{y}}}^1, \dots , {{\varvec{y}}}^{k-1}\), \({{\varvec{x}}}^1, \dots , {{\varvec{x}}}^{k-1}\), \({{\varvec{p}}}^0, {{\varvec{p}}}^1, \dots , {{\varvec{p}}}^{k-1}\), \(R^1\), \(\dots \), \(R^k\), \(S^1, \dots , S^{k-1}\) are \({\mathcal {F}}^{k-1/2}\)-measurable since \({\mathcal {F}}^0 \subseteq {\mathcal {F}}^{1/2} \subseteq {\mathcal {F}}^1 \subseteq \cdots \subseteq {\mathcal {F}}^{k-1} \subseteq {\mathcal {F}}^{k-1/2}\).

A weak assumption is given next, that is satisfied by reasonable techniques for the subproblems; namely, the reduced problem (\(\text {RP}{\mathcal {X}}^k\)) needs to be solved to required accuracy with some positive probability.

Assumption 4.2

There exists \(\rho \in (0,1]\) such that, for all \(k \ge 1\),Footnote 11

i.e., with (conditional) probability at least \(\rho > 0\), the solution \({{\varvec{y}}}^k\) of (\(\text {RP}{\mathcal {X}}^k\)) satisfies (4.4).

Remark 4.3

If a deterministic (global optimization) algorithm is used to solve (\(\widetilde{\text {RP}{\mathcal {X}}^k}\)), then \(S^k\) is always \({\mathcal {F}}_k^{k-1/2}\)-measurable and Assumption 4.2 is equivalent to \(S^k\ge \rho \). Since \(S^k\) is an indicator function, this further implies that \(S^k\equiv 1\), provided a sufficiently large computational budget is available.

The results of Sect. 3 provide a lower bound on the (conditional) probability of the reduced problem (\(\text {RP}{\mathcal {X}}^k\)) to be \((\epsilon -\lambda )\)-successful, with the consequence given in the first part of the next corollary.

Corollary 4.4

If Assumptions 3.5 and 3.14 hold and if \(d_e \le d \le D-d_e\), then

If Assumption 4.2 holds, then

Proof

Recall that the support of the random variable \({{\varvec{p}}}^k\) is contained in \({\mathcal {X}}\). For each embedding, we apply Theorem 3.15 (setting \({{\varvec{p}}} = \tilde{{{\varvec{p}}}}^{k-1}\) and replacing \(\epsilon \) by \(\epsilon -\lambda \)) to deduce that there exists \(\tau \in (0,1]\) such that \({{\,\mathrm{{\mathbb {P}}}\,}}[R^k = 1 | {\mathcal {F}}^{k-1} ] \ge \tau \), for \(k\ge 1\). Then, in terms of conditional expectation, we have \({\mathbb {E}}[R^k | {\mathcal {F}}^{k-1}] = 1 \cdot {{\,\mathrm{{\mathbb {P}}}\,}}[ R^k = 1 | {\mathcal {F}}^{k-1}] + 0 \cdot {{\,\mathrm{{\mathbb {P}}}\,}}[ R^k = 0 | {\mathcal {F}}^{k-1} ] \ge \tau \).

If Assumption 4.2 holds, then \({\mathbb {E}}[R^k S^k | {\mathcal {F}}^{k-1/2}] = R^k {\mathbb {E}}[ S^k | {\mathcal {F}}^{k-1/2}] \ge \rho R^k\), where the equality follows from the fact that \(R^k\) is \({\mathcal {F}}^{k-1/2}\)-measurable (see [20, Theorem 4.1.14]). \(\square \)

4.1.1 Global convergence proof

A useful property is given next.

Lemma 4.5

Let Assumptions 3.5, 3.14 and 4.2 hold, and let \(d_e \le d \le D-d_e\). Then, for \(K\ge 1\), we have

Proof

We define an auxiliary random variable, \( J^K := \mathbbm {1} \Big (\bigcup _{k=1}^K \big \{ \{R^k = 1\} \cap \{ S^k = 1 \} \big \} \big ). \) Note that \(J^K = 1- \prod _{k=1}^{K} (1-R^k S^k)\). We have

where

-

\((*)\) follow from the tower property of conditional expectation (see (4.1.5) in [20]),

-

\((\circ )\) is due to the fact that \(R^1, \dots , R^{K-1}\) and \(S^1,\dots ,S^{K-1}\) are \({\mathcal {F}}^{K-1/2}\)- and \({\mathcal {F}}^{K-1}\)-measurable (see Theorem 4.1.14 in [20]),

We repeatedly expand the expectation of the product for \(K-1\), \(\ldots \), 1, in exactly the same manner as above, to obtain the desired result. \(\square \)

In the next lemma, we show that if (\(\text {RP}{\mathcal {X}}^k\)) is \((\epsilon -\lambda )\)-successful and is solved to accuracy \(\lambda \) in objective value, then the solution \({{\varvec{x}}}^k\) must be inside \(G_{\epsilon }\); thus proving our intuitive statements (a) and (b) at the start of Sect. 4.1.

Lemma 4.6

Suppose Assumptions 3.5, 3.14 and 4.2 hold, and let \(d_e \le d \le D-d_e\). Then

Proof

By Definition 3.13, if (\(\text {RP}{\mathcal {X}}^k\)) is \((\epsilon -\lambda )\)-successful, then there exists \({{\varvec{y}}}^k_{int} \in {\mathbb {R}}^d\) such that \({{\varvec{A}}}^k {{\varvec{y}}}^k_{int} + {{\varvec{p}}}^{k-1} \in {\mathcal {X}}\) and

Since \({{\varvec{y}}}^k_{int}\) is in the feasible set of (\(\text {RP}{\mathcal {X}}^k\)) and \( f^k_{min}\) is the global minimum of (\(\text {RP}{\mathcal {X}}^k\)), we have

Then, for \({{\varvec{x}}}^k\), (4.4) gives the first inequality below,

where the second and third inequalities follow from (4.10) and (4.9), respectively. This shows that \({{\varvec{x}}}^k \in G_\epsilon \). \(\square \)

In the following theorem, we show that X-REGO converges almost surely to an \(\epsilon \)-minimizer of (P), i.e., a feasible solution of (P) with cost at most \(f^*+\epsilon \).

Theorem 4.7

(Global convergence) Suppose Assumptions 3.5, 3.14 and 4.2 hold, and let \(d_e \le d \le D-d_e\). Then

where \({{\varvec{x}}}^k_{opt}\) and \(G_{\epsilon }\) are defined in (4.2) and (3.16), respectively.

Furthermore, for any \(\xi \in (0,1)\),

where \(K_\xi := \Big \lceil {\frac{|\log (1-\xi )|}{\tau \rho }}\Big \rceil \).

Proof

Lemma 4.6 and the definition of \({{\varvec{x}}}^k_{opt}\) in (4.2) provide

for \(k = 1, 2,\dots , K\) and for any integer \(K\ge 1\). Hence,

Note that the sequence \(\{ f({{\varvec{x}}}^1_{opt}), f({{\varvec{x}}}^2_{opt}), \dots , f({{\varvec{x}}}^K_{opt})\}\) is monotonically decreasing. Therefore, if \({{\varvec{x}}}^k_{opt} \in G_{\epsilon }\) for some \(k \le K\) then \({{\varvec{x}}}^i_{opt} \in G_{\epsilon }\) for all \(i = k, \dots , K\); and so the sequence \((\{ {{\varvec{x}}}^k_{opt} \in G_{\epsilon } \})_{k = 1}^K\) is an increasing sequence of events. Hence,

From (4.13) and (4.12), we have for all \(K\ge 1\),

where the second inequality follows from Lemma 4.5. Finally, passing to the limit with K in (4.14), we deduce \( 1 \ge \lim _{K \rightarrow \infty } {{\,\mathrm{{\mathbb {P}}}\,}}[\{ {{\varvec{x}}}^K_{opt} \in G_{\epsilon } \}] \ge \lim _{K \rightarrow \infty } \left[ 1 - (1- \tau \rho )^K\right] = 1\), as required.

Note that if

then (4.14) implies \({{\,\mathrm{{\mathbb {P}}}\,}}[ {{\varvec{x}}}^k_{opt} \in G_{\epsilon } ] \ge \xi \). Since (4.15) is equivalent to \( k \ge \displaystyle \frac{\log (1-\xi )}{\log (1-\tau \rho )}\), (4.15) holds for all \(k\ge K_\xi \) since \(K_\xi \ge \displaystyle \frac{\log (1-\xi )}{\log (1-\tau \rho )}\). \(\square \)

Remark 4.8

Crucially, we note that X-REGO (Algorithm 1) is a generic framework that can be applied to a general, continuous objective f in (P). Furthermore, the convergence result in Theorem 4.7 also continues to hold in this general case provided (4.7) can be shown to hold; this is where we crucially use the special structure of low effective dimensionality of the objective that we investigate in this paper. In [12], we apply X-REGO to global optimization of Lipschitz continuous objectives, under no special structure assumption and for arbitrary values of d and arbitrary domains; there, we also prove almost sure convergence to \(\epsilon \)-minimizers using tools from conic integral geometry.

Remark 4.9

If f is a convex function (and known a priori to be so), then clearly, a local (deterministic or stochastic) optimization algorithm may be used to solve (\(\widetilde{\text {RP}{\mathcal {X}}^k}\)) and achieve (4.4). Apart from this important speed-up and simplification, it is difficult to exploit this additional special structure of f in our analysis, in order to improve the success bounds and convergence.

Quantifiable rates of convergence when the effective subspace is aligned with coordinate axes Using the estimates for \(\tau \) in Theorem 3.8, we can estimate precisely the rate of convergence of X-REGO as a function of problem dimension, assuming that \({\mathcal {T}}\) is aligned with coordinate axes.

Theorem 4.10

Suppose Assumption 2.2 holds with \({{\varvec{U}}} = [{{\varvec{I}}}_{d_e} \; {{\varvec{0}}}]^T\) and \({{\varvec{V}}} = [{{\varvec{0}}} \; {{\varvec{I}}}_{D-d_e}]^T\), as well as Assumption 4.2. Let \(\xi \in (0,1)\), and \(d_e\) and d be fixed with \(d_e \le d \le D-d_e\). Then (4.11) holds with

If \({{\varvec{p}}}^k = {{\varvec{0}}}\) for \(k\ge 0\), then (4.11) holds with

Proof

Firstly, note our remark regarding assumptions below. The result follows from Theorem 4.7, (3.13) and (3.15). \(\square \)

Remark 4.11

Assumptions 3.5 and 3.14 were required to prove Theorem 3.15 and, consequently, (4.7). If the effective subspace is aligned with coordinate axes, we no longer need Assumptions 3.14 and 3.5 to prove (4.7). In this case, (4.7) follows from Theorem 3.8, together with the fact that (\(\text {RP}{\mathcal {X}}^k\)) being successful implies (\(\text {RP}{\mathcal {X}}^k\)) is \(\epsilon \)-successful for any \(\epsilon \ge 0\).

5 Numerical experiments

5.1 Setup

Algorithms We test different variants of Algorithm 1 against the no-embedding framework, in which (P) is solved directly without using random embeddings and with no explicit exploitation of its special structure. Each variant of X-REGO corresponds to a specific choice of \({{\varvec{p}}}^k\), \(k \ge 0\):

-

Adaptive X-REGO (A-REGO). In X-REGO, the point \({{\varvec{p}}}^k\) is chosen as the best point found up to the kth embedding: if \(f({{\varvec{A}}}^k {{\varvec{y}}}^k+{{\varvec{p}}}^{k-1}) < f({{\varvec{p}}}^{k-1})\) then \({{\varvec{p}}}^{k} := {{\varvec{A}}}^k {{\varvec{y}}}^k+{{\varvec{p}}}^{k-1}\), otherwise, \({{\varvec{p}}}^k := {{\varvec{p}}}^{k-1}\).

-

Local Adaptive X-REGO (LA-REGO). In X-REGO, we solve (\(\widetilde{\text {RP}{\mathcal {X}}^k}\)) using a local solver (instead of a global one as in N-REGO). Then, if \(|f({{\varvec{A}}}^k {{\varvec{y}}}^k+{{\varvec{p}}}^{k-1}) - f({{\varvec{p}}}^{k-1})| > \gamma \) for some small \(\gamma \) (here, \(\gamma = 10^{-5}\)), we let \({{\varvec{p}}}^{k} := {{\varvec{A}}}^k {{\varvec{y}}}^k+{{\varvec{p}}}^{k-1}\), otherwise, \({{\varvec{p}}}^k\) is chosen uniformly at random in \({\mathcal {X}}\).

-

Nonadaptive X-REGO (N-REGO). In X-REGO, all the random subspaces are drawn at the origin: \({{\varvec{p}}}^k := {{\varvec{0}}}\) for all k.

-

Local Nonadaptive X-REGO (LN-REGO). In X-REGO, the low-dimensional problem (\(\widetilde{\text {RP}{\mathcal {X}}^k}\)) is solved using a local solver, and the point \({{\varvec{p}}}^k\) is chosen uniformly at random in \({\mathcal {X}}\) for all k.

Solvers We test the aforementioned X-REGO variants using three solvers for solving the reduced problem (\(\widetilde{\text {RP}{\mathcal {X}}^k}\))—or for solving the original problem (P) in the no-embedding case—namely, DIRECT ([24, 27, 35]), BARON ([49, 52]) and KNITRO ([8]).

DIRECT ([24, 27, 35]) version 4.0 (DIviding RECTangles) is a deterministicFootnote 12 global optimization solver, that does not require information about the gradient nor about the Lipschitz constant.

BARON ([49, 52]) version 17.10.10 (Branch-And-Reduce Optimization Navigator) is a state-of-the-art branch- and-bound type global optimization solver for nonlinear and mixed-integer programs, that is highly competitive [45]. However, it accepts only a few (general) classes of functions (e.g., no trigonometric functions, no black box functions).

KNITRO ([8]) version 10.3.0 is a large-scale nonlinear local optimization solver that makes use of objective derivatives. KNITRO has a multi-start feature, referred here as mKNITRO, allowing it to aim for global minimizers.

We refer to [9] for a detailed description of the solvers. We test A-REGO and N-REGO using DIRECT, BARON and mKNITRO and test LA-REGO and LN-REGO using only local KNITRO, with no multi-start.

In addition to the comparison with ‘no embedding’, we compare X-REGO with the REMBO approach proposed in [58], which we modify so that it matches our present framework. Namely, we replace the Bayesian solver used in REMBO with (m)KNITRO (in its local and global incarnations). Our proposal is tested within the N-REGO (with mKNITRO) and LN-REGO (with KNITRO) frameworks where instead of solving (\(\widetilde{\text {RP}{\mathcal {X}}^k}\)) we solve the following problem

where \({\mathcal {P}}_{{\mathcal {X}}}({{\varvec{x}}}) := \arg \min _{{{\varvec{z}}} \in {\mathcal {X}}} \Vert {{\varvec{z}}} - {{\varvec{x}}} \Vert _2 \) is the standard projection operator that projects an infeasible \({{\varvec{x}}}\) (that is outside \({\mathcal {X}}\)) onto the nearest feasible point in \({\mathcal {X}}\); note that \({\mathcal {P}}_{{\mathcal {X}}}\) ensures that the computed solutions are feasible and thus there is no need for linear constraints as in (\(\widetilde{\text {RP}{\mathcal {X}}^k}\)). However, to restrict the search space, we follow the original REMBO proposal [58] and impose the bound constraint \({{\varvec{y}}} \in [-\delta , \delta ]^{d_e}\), where \(\delta = \delta _{opt} := 8 \times \sqrt{d_e}\)—an optimal value for \(\delta \) determined based on the theoretical bounds in [9]. Note that \(\delta _{opt}\) is not the same for every problem in the test set as \(\delta _{opt}\) depends on \(d_e\).

Test set The methodology of these constructions is given in [9, 58] and summarized here in Appendix D. Our synthetic test set contains 19 D-dimensional functions with low effective dimension, with \(D = 10,100\) and 1000. We construct these high-dimensional functions from 19 global optimization problems (Table 4, of dimensions 2–6) with known global minima [5, 22, 29], some of which are in the Dixon-Szego test set [16]. The construction process consists in artificially adding coordinates to the original functions, and then applying a rotation to ensure that the effective subspace is not aligned with the coordinate axes.

Experimental setup We solve the entire test set with each version of X-REGO and its paired solvers. Let f be a function from the test set with the global minimum \(f^*\). When applying any version of X-REGO to minimize f, we let \(d=d_e\) for the size of the random embedding. We terminate either after \(K = 100\) embeddings, or earlier, as soon asFootnote 13

We then record the computational cost, which we measure in terms of either function evaluations or CPU time in seconds. To compare with ‘no-embedding’, we solve the full-dimensional problem (P) directly with DIRECT, BARON and mKNITRO with no use of random embeddings. The budget and termination criteria used for each solver to solve (\(\widetilde{\text {RP}{\mathcal {X}}^k}\)) within X-REGO, or to solve (P) in the ‘no-embedding’ framework are outlined in Table 1.

Remark 5.1

The experiments are done not to compare solvers but to contrast ‘no-embedding’ and REMBO with the X-REGO variants. All the experiments were run in MATLAB on the 16 cores (2 \(\times \) 8 Intel with hyper-threading) Linux machines with 256 GB RAM and 3300 MHz speed.

We compare the results using performance profiles (Dolan and Moré [18]), which measure the proportion of problems solved by the algorithm in less than a given budget defined based on the best performance among the algorithms considered. More precisely, for each solver (BARON, DIRECT and KNITRO), and for each algorithm \({\mathcal {A}}\) (the above-mentioned variants of X-REGO and ‘no-embedding’), we record \({\mathcal {N}}_p({\mathcal {A}})\), the computational cost (see Table 1) of running algorithm \({\mathcal {A}}\) to solve problem p within accuracy \(\epsilon \). Let \({\mathcal {N}}^*_p\) be the minimum computational cost required for problem p by any algorithm \({\mathcal {A}}\). The performance (probability) of algorithm \({\mathcal {A}}\) on the problem set \({\mathcal {P}}\) is defined as

with performance ratio \(\alpha \ge 1\).

5.2 Numerical results

DIRECT Figure 2 compares the adaptive and non-adaptive random embedding algorithms (A-REGO and N-REGO) to the no-embedding framework, when using the DIRECT solver for the reduced problem (\(\widetilde{\text {RP}{\mathcal {X}}^k}\)) (and for the full-dimensional problem in the case of the no-embedding framework). We find that the no-embedding framework outperforms the two X-REGO variants. We also note that this behaviour is more pronounced when the dimension of the problem (P) is small. In that regime, it is also difficult to determine which version of X-REGO performs the best. When D is large, the no-embedding framework still outperforms the two variants of X-REGO, but among these two, the adaptive one (A-REGO) performs generally better than N-REGO. The average number of function evaluations and random embeddings/subspaces required by the algorithms are given in Tables 2 and 3, respectively.

BARON Figure 3 compares A-REGO and N-REGO to the no-embedding framework, when using BARON to solve the reduced problem (\(\widetilde{\text {RP}{\mathcal {X}}^k}\)). We find that the no-embedding framework is clearly outperformed by the two variants of X-REGO in the large-dimensional setting. Then, it is also clear that the adaptive variant of X-REGO outperforms the non-adaptive one. Table 2 also indicates that the CPU time used by the different algorithms increases with the dimension of the problem, and that the increase is most rapid for ’no-embedding’.

KNITRO The comparison between the X-REGO variants, using mKNITRO to solve (\(\widetilde{\text {RP}{\mathcal {X}}^k}\)), is given in Fig. 4. Here, we also compare the local variants of X-REGO (namely, LA-REGO and LN-REGO), for which the reduced problem is solved using local KNITRO, with no multi-start feature, and the REMBO framework described above with (m)KNITRO. We find that the local variants outperform the global ones, and the no-embedding framework when the dimension of the problem is sufficiently large. Figure 4 also indicates that the local non-adaptive variant (LN-REGO) outperforms the adaptive one in this high-dimensional setting. This behaviour can also be observed in Tables 2 and 3, which indicate that less function evaluations and embeddings are needed for LA-REGO compared to LN-REGO for large D; this may be a feature of the test set we used/devised. Finally, the modified REMBO framework behaves comparatively well on this problem set. We note however, that its performance depends on the choice of a domain in the reduced space whose size \(\delta \) needs careful choosing and it is typically not known a priori.Footnote 14 By contrast, such careful reduced domain choices are not needed for X-REGO; which is a clear practical advantage for the latter. Furthermore, to the best of our knowledge, the convergence of the REMBO framework (with multiple embeddings) still needs to be developed, and we delegate this question to future work.

Conclusions to numerical experiments The numerical experiments presented in this paper indicate that, as expected, the X-REGO algorithm is mostly beneficial for high-dimensional problems, when D is large. In this setting, X-REGO variants paired with the BARON and mKNITRO solvers outperform the ‘no-embedding’ approach, of applying these solvers directly to the problems. It is less obvious to decide which variant of X-REGO is best, but it seems that, at least on the problem set considered, the local variants outperform the global ones.

We have conducted additional numerical experiments where d is chosen to be strictly greater than \(d_e\) (for example, \(d=d_e+2\) is reasonable for our test set given the small dimension of the original Dixon-Szëgo test set); the performance of methods—particularly with BARON and (m)KNITRO—was similar to the \(d=d_e\) presented here, as can be seen in the results given in Appendix E. Furthermore, we also considered five different realizations of the test set (namely, a different random rotation of the effective subspace was performed); again, similar results to the ones presented here were observed as illustrated in Appendix E and in the ArXiv version (arXiv:2009.10446) of this paper.

We also compared X-REGO (paired with KNITRO) with the simplest version of Random Search—uniform sampling inside the domain \({\mathcal {X}}\). The maximum budget of samples is set to \(10^5\) in our experiment. We sample until either we have successfully found a global minimum (i.e. \(f({{\varvec{x}}}_{sample}) \le f^* + 10^{-3}\)) or we have exhausted the budget. The results of this experiment (including average number of samples by Random Search) are provided in Appendix E in Fig. 8. As expected, our X-REGO variants perform reassuring well by comparison.