Abstract

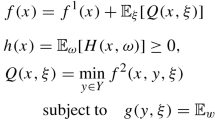

We investigate sample average approximation (SAA) for two-stage stochastic programs without relatively complete recourse, i.e., for problems in which there are first-stage feasible solutions that are not guaranteed to have a feasible recourse action. As a feasibility measure of the SAA solution, we consider the “recourse likelihood”, which is the probability that the solution has a feasible recourse action. For \(\epsilon \in (0,1)\), we demonstrate that the probability that an SAA solution has recourse likelihood below \(1-\epsilon \) converges to zero exponentially fast with the sample size. Next, we analyze the rate of convergence of optimal solutions of the SAA to optimal solutions of the true problem for problems with a finite feasible region, such as bounded integer programming problems. For problems with a non-finite feasible region, we propose modified “padded” SAA problems and demonstrate in two cases that such problems can yield, with high confidence, solutions that are certain to have a feasible recourse decision. Finally, we conduct a numerical study on a two-stage resource planning problem that illustrates the results, and also suggests there may be room for improvement in some of the theoretical analysis.

Acknowledgements

The authors thank two anonymous referees for comments that helped improve this paper.

The authors dedicate this paper to Shabbir Ahmed. This work was supported by NSF Award CMMI-1634597.

Appendix

1.1 Proof of Corollary 5

For any \(\nu \in (0,1)\),

The third inequality can be justified by observing that \(\frac{\nu N\epsilon }{n_1}\ge 1+\log \frac{\nu N\epsilon }{n_1}\). The fifth inequality can be justified by observing that \(\log (k!)\ge k(\log k -1)\) for \(k\ge 1\). The result follows by setting \(\nu =1/2\).
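The two elementary bounds used above, \(t\ge 1+\log t\) for \(t>0\) and \(\log (k!)\ge k(\log k-1)\) for \(k\ge 1\), can be spot-checked numerically. The sketch below is only an illustration and not part of the proof; the function names and the sample points are hypothetical choices made for this check.

```python
import math

def gap_log_bound(t):
    """Slack in t >= 1 + log(t); nonnegative for every t > 0."""
    return t - (1.0 + math.log(t))

def gap_factorial_bound(k):
    """Slack in log(k!) >= k*(log(k) - 1), with log(k!) computed as lgamma(k + 1)."""
    return math.lgamma(k + 1) - k * (math.log(k) - 1.0)

# Sample points are arbitrary; t = 1 is where the first bound is tight.
min_log_gap = min(gap_log_bound(t) for t in (0.01, 0.5, 1.0, 2.0, 50.0))
min_factorial_gap = min(gap_factorial_bound(k) for k in range(1, 200))

print(min_log_gap, min_factorial_gap)
```

Both slacks stay nonnegative on the sampled points, consistent with the first bound being tight only at \(t=1\).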

1.2 Proof of Proposition 11

We only need to prove that \(H(x,\xi )\) is Lipschitz continuous in \((W(\xi ),T(\xi ),h(\xi ))\) for all \(x\in X\) under the infinity (matrix) norm. The result then follows from the assumption that \((W(\xi ),T(\xi ),h(\xi ))\) is Lipschitz continuous in \(\xi \).

For fixed \(x\in X\), assume \((y^*,\eta ^*)\) and \((y',\eta ')\) are optimal solutions of (11) for \(M:=({\overline{W}},{\overline{T}},{\overline{h}}):=({\overline{W}}(\xi ),{\overline{T}}(\xi ),{\overline{h}}(\xi ))\) and \(M':=({\overline{W}}',{\overline{T}}',{\overline{h}}'):=({\overline{W}}(\xi '),{\overline{T}}(\xi '),{\overline{h}}(\xi '))\), respectively. We consider the three cases separately.

1. If the problem has only right-hand side randomness, then

$$\begin{aligned} \eta ^*&=\min _y\Big \{\max _{i \in I} \big \{{\overline{h}}_i - {\overline{W}}_iy-{\overline{T}}_ix\big \} : Dy \ge d - Cx \Big \}\\&\le \max _{i \in I} \big \{{\overline{h}}_i -{\overline{W}}_iy'-{\overline{T}}_ix\big \}\\&\le \max _{i \in I} \big \{{\overline{h}}'_i-{\overline{W}}_iy'-{\overline{T}}_ix\big \}+\Vert M-M'\Vert _\infty \left\Vert \left( \begin{array}{c} 0\\ 0\\ 1 \end{array} \right) \right\Vert _\infty \\&\le \eta '+\Vert M-M'\Vert _\infty . \end{aligned}$$

2. If the problem has fixed recourse and X is bounded, then

$$\begin{aligned} \eta ^*&=\min _y\Big \{\max _{i \in I} \big \{{\overline{h}}_i - {\overline{W}}_iy-{\overline{T}}_ix\big \}:Dy\ge d-Cx \Big \}\\&\le \max _{i \in I} \big \{{\overline{h}}_i -{\overline{W}}_iy'-{\overline{T}}_ix\big \}\\&\le \max _{i \in I} \big \{{\overline{h}}'_i-{\overline{W}}_iy'-{\overline{T}}'_ix\big \}+\Vert M-M'\Vert _\infty \left\Vert \left( \begin{array}{c} 0\\ x\\ 1 \end{array} \right) \right\Vert _\infty \\&\le \eta '+R\Vert M-M'\Vert _\infty \end{aligned}$$

for some \(R>0\) since X is bounded.

3. If \(\{(x,y):x\in X,Dy\ge d-Cx \}\) is bounded, then similarly

$$\begin{aligned} \eta ^*&=\min _y\Big \{\max _{i \in I} \big \{{\overline{h}}_i - {\overline{W}}_iy-{\overline{T}}_ix\big \}:Dy\ge d-Cx \Big \}\\&\le \max _{i \in I} \big \{{\overline{h}}_i -{\overline{W}}_iy'-{\overline{T}}_ix\big \}\\&\le \max _{i \in I} \big \{{\overline{h}}'_i-{\overline{W}}'_iy'-{\overline{T}}'_ix\big \}+\Vert M-M'\Vert _\infty \left\Vert \left( \begin{array}{c} y'\\ x\\ 1 \end{array} \right) \right\Vert _\infty \\&\le \eta '+R\Vert M-M'\Vert _\infty \end{aligned}$$

for some \(R>0\) since \(\{(x,y):x\in X,Dy\ge d-Cx \}\) is bounded.

So for each case, there exists \(R>0\) such that

$$H(x,\xi )-H(x,\xi ')\le R\Vert M-M'\Vert _\infty .$$

Similarly, \(H(x,\xi ')-H(x,\xi )\le R\Vert M-M'\Vert _\infty \). Therefore,

$$|H(x,\xi )-H(x,\xi ')|\le R\Vert M-M'\Vert _\infty ,$$

which implies that \(H(x,\xi )\) is a Lipschitz continuous function in \((W(\xi ),T(\xi ),h(\xi ) )\) for all \(x\in X\) under the infinity norm. Therefore, Assumption 4 holds.
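The right-hand side case of this argument can be illustrated on a toy instance. The sketch below is not the paper's problem (11): it assumes scalar \(x\) and \(y\), replaces the polyhedral set \(\{y: Dy\ge d-Cx\}\) by a finite list of candidate values, and uses made-up integer data. The bound \(|\eta ^*-\eta '|\le \Vert h-h'\Vert _\infty \) still applies, because the argument only needs the same feasible set of \(y\) for both right-hand sides.

```python
def min_max_violation(h, W, T, x, y_candidates):
    """Return min over y of max_i (h_i - W_i*y - T_i*x) for scalar x and y.

    y_candidates is a hypothetical finite stand-in for {y : Dy >= d - Cx}.
    """
    return min(
        max(h_i - W_i * y - T_i * x for h_i, W_i, T_i in zip(h, W, T))
        for y in y_candidates
    )

# Illustrative data only: fixed W and T, two right-hand sides h and h_prime.
W = (1, -1, 2)
T = (1, 0, -1)
x = 1
y_candidates = range(-5, 6)
h = (4, -3, 7)
h_prime = (5, -1, 6)

eta = min_max_violation(h, W, T, x, y_candidates)
eta_prime = min_max_violation(h_prime, W, T, x, y_candidates)
perturbation = max(abs(a - b) for a, b in zip(h, h_prime))  # infinity norm of h - h'

print(eta, eta_prime, perturbation)
```

On this instance the two optimal values differ by less than the size of the perturbation in \(h\), as the case 1 chain of inequalities predicts with \(R=1\).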

1.3 Proof of Proposition 12

First observe that the linear program (11) is always feasible. Thus,

where we adopt the convention that if the dual linear program is infeasible then the optimal value is defined to be \(-\infty \). Thus,

Next, introduce binary variables \(\delta _{qj}\) for \(q \in [d]\) and \(j \in [N]\), where \(\delta _{qj}=1\) implies that \(J_q=j\). This leads to the mixed-integer nonlinear program:

Using the assumptions that \({\overline{W}}(\xi )\), \({\overline{T}}(\xi )\) and \({\overline{h}}(\xi )\) are linear in \(\xi \), problem (36) can be written as the following mixed-integer bilinear program:

We next use (38) to substitute out the variables \(\xi _q\) in this formulation. Observe that once this is done, the only nonlinear terms are of the form \(\alpha _p\delta _{qj}\) for \(p \in I, q \in [d], j \in [N]\). Thus, introduce new variables \(z_{pqj}\) to represent this product for each \(p \in I\), \(q \in [d]\), and \(j \in [N]\). Using constraint (41) we derive the linear constraints:

Using constraints (39) we derive the linear constraints:

Observe that constraints (43) and (44), together with (42) and (39), are sufficient to imply \(z_{pqj} = \alpha _p \delta _{qj}\). Indeed, for any fixed q, suppose \(j^*_q\) is the index such that \(\delta _{qj^*_q}=1\). Then (43) implies that \(z_{pqj}=0=\alpha _p\delta _{qj}\) for any \(j \ne j^*_q\) and all p, and (44) implies that \(\sum _{j \in [N]} z_{pqj} = z_{pqj^*_q} = \alpha _p = \alpha _p \delta _{qj^*_q}\) also for all p. Also note that constraints (41), (43) and (44) imply

for \(q\in [d]\). Therefore, constraints (39) are redundant when constraints (41), (43) and (44) are present. Thus the mixed-integer bilinear program (37)–(42) can be reformulated as the MILP given in Proposition 12 by using (38) to substitute out the variables \(\xi \), using \(z_{pqj}\) to replace the bilinear terms \(\alpha _p\delta _{qj}\), and adding the constraints (43)–(44).
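The linearization step in this proof can be illustrated by brute force for a single pair \((p,q)\). The constraints (43) and (44) are not reproduced here, so the sketch assumes a standard form consistent with the argument: \(0\le z_j\le U\delta _j\) for each \(j\), and \(\sum _j z_j=\alpha \), where \(U\) is an assumed upper bound on \(\alpha \). The function name, the integer grid, and the data are hypothetical.

```python
from itertools import product

def feasible_products(alpha, delta, upper, step=1):
    """List grid points z with 0 <= z_j <= upper*delta_j and sum_j z_j == alpha.

    The two conditions are an assumed stand-in for constraints (43) and (44).
    """
    levels = range(0, upper + 1, step)
    return [
        z
        for z in product(levels, repeat=len(delta))
        if all(z_j <= upper * d_j for z_j, d_j in zip(z, delta))
        and sum(z) == alpha
    ]

delta = (0, 1, 0)  # one-hot selector: the second value of xi_q is chosen
alpha = 3          # dual multiplier in integer units, with assumed bound upper = 5
solutions = feasible_products(alpha, delta, upper=5)

print(solutions)  # [(0, 3, 0)]
```

The only feasible point is \(z=\alpha \delta \), which mirrors the claim that the linear constraints recover the bilinear product once \(\delta \) is binary with exactly one nonzero entry per \(q\).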

1.4 Proof of Proposition 13

Note that \(H({\hat{x}},\cdot )\) is convex under the assumptions, and the maximum of a convex function over a bounded polyhedral set is attained at an extreme point. Therefore, we can reformulate (27) as

Introduce binary variables \(\delta _{q1}\) and \(\delta _{q2}\) for \(q\in [d]\), where \(\delta _{q1}=1\) implies that \(\xi _q=\xi ^{\min }_{q}\) and \(\delta _{q2}=1\) implies that \(\xi _q=\xi ^{\max }_{q}\). We can then rewrite (45) as a mixed-integer bilinear program:

Similar to Proposition 12, we introduce variables \(z_{pqj}\) to represent \(\alpha _p\delta _{qj}\) for \(p\in I,q\in [d],j\in \{1,2\}\). Then problem (45) can be written as the following mixed-integer linear program:

Finally, we apply the reformulation-linearization technique to strengthen the MILP formulation (47)–(52). We introduce new variables \(w_{pqj}\ge 0\) to represent the product \(\beta _p\delta _{qj}\) for \(p\in [m_2]{\setminus } I,q\in [d],j\in \{1,2\}\). Note that \(w_{pqj}=\beta _p\delta _{qj}\) and \(\alpha ^T{\overline{W}}+\beta ^TD=0\) imply

and constraints \(\delta _{q1}+\delta _{q2}=1\) for \(q\in [d]\) imply

Note that constraints (49), (53) and (54) imply (50). Therefore, adding new variables \(w\ge 0\) together with constraints (53) and (54) into the original MILP formulation yields a strengthened formulation in the lifted space, as the MILP given in Proposition 13.
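The extreme-point argument at the start of this proof can be sketched on a small example: to maximize a convex function over a box, it suffices to evaluate the \(2^d\) vertices. The function below is a hypothetical piecewise-linear convex function standing in for \(H({\hat{x}},\cdot )\), and the box bounds play the role of \(\xi ^{\min }\) and \(\xi ^{\max }\); none of the data comes from the paper.

```python
from itertools import product

def max_over_box_vertices(f, lower, upper):
    """Evaluate f at every vertex of the box [lower, upper]; return (best value, vertex)."""
    best_value, best_vertex = None, None
    # Each coordinate independently takes its lower or upper bound.
    for vertex in product(*zip(lower, upper)):
        value = f(vertex)
        if best_value is None or value > best_value:
            best_value, best_vertex = value, vertex
    return best_value, best_vertex

def convex_example(xi):
    """Hypothetical convex function: a maximum of affine pieces."""
    return max(2 * xi[0] - xi[1], -xi[0] + 3 * xi[1], 1)

value, vertex = max_over_box_vertices(convex_example, lower=(0, 0), upper=(2, 1))
print(value, vertex)  # 4 (2, 0)
```

Enumeration grows as \(2^d\), which is why the proof encodes the vertex choice with the binary variables \(\delta _{q1}\) and \(\delta _{q2}\) instead of listing the vertices explicitly.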

Cite this article

Chen, R., Luedtke, J. On sample average approximation for two-stage stochastic programs without relatively complete recourse. Math. Program. 196, 719–754 (2022). https://doi.org/10.1007/s10107-021-01753-9
