Abstract

We consider stochastic programs conditional on some covariate information, where the only knowledge of the possible relationship between the uncertain parameters and the covariates is reduced to a finite data sample of their joint distribution. By exploiting the close link between the notion of trimmings of a probability measure and the partial mass transportation problem, we construct a data-driven Distributionally Robust Optimization (DRO) framework to hedge the decision against the intrinsic error in the process of inferring conditional information from limited joint data. We show that our approach is computationally as tractable as the standard (without side information) Wasserstein-metric-based DRO and enjoys performance guarantees. Furthermore, our DRO framework can be conveniently used to address data-driven decision-making problems under contaminated samples. Finally, the theoretical results are illustrated using a single-item newsvendor problem and a portfolio allocation problem with side information.

1 Introduction

Today’s decision makers not only collect observations of the uncertainties directly affecting their decision-making processes, but also gather data about measurable exogenous variables that may have some predictive power on those uncertainties [5]. In Statistics, Operations Research and Machine Learning, these variables are often referred to as covariates, explanatory variables, side information or features [39].

In the framework of Optimization Under Uncertainty, the side information acts by changing the probability measure of the uncertainties. In fact, if the joint distribution of the features and the uncertainties were known, this measure change would correspond to conditioning that distribution on the side information given. Unfortunately, in practice, the decision maker only has an incomplete picture of such a joint distribution in the form of a finite data sample. The development of optimization methods capable of exploiting the side information to make improved decisions, in a context of limited knowledge of its explanatory power on the uncertainties, defines the ultimate purpose of the so-called Prescriptive Stochastic Programming or Conditional Stochastic Optimization paradigm. This paradigm has recently become very popular in the technical literature, see, for instance, [5, 7, 39] and references therein. More specifically, a data-driven approach to address the newsvendor problem, whereby the decision is explicitly modeled as a parametric function of the features, is proposed in [5]. This approach thus seeks to optimize said function. In contrast, the authors in [7] formulate and formalize the problem of minimizing the conditional expectation cost given the side information, and develop various schemes based on machine learning methods (typically used for regression and prediction) to get data-driven solutions. Their approach is non-parametric in the sense that the optimal decision is not constrained to be a member of a certain family of the features’ functions. The inspiring work in [7] has been subject to further study and improvement in two principal directions, namely, the design of efficient algorithms to trim down the computational burden of the optimization [16] and the development of strategies to reduce the variance and bias of the decision obtained and its associated cost (the pairing of both interpreted as a statistical estimator). 
In the latter case, we can cite the work in [12], whose authors leverage ideas from bootstrapping and machine learning to confer robustness on the decision and acquire asymptotic performance guarantees. Similarly, the authors in [8] and [39] propose regularization procedures to reduce the variance of the data-driven solution to the conditional expectation cost minimization problem that is formalized and studied in [7]. A scheme to robustify the data-driven methods introduced in [7] is also proposed in [9] for dynamic decision-making.

A different, but related thrust of research focuses on developing methods to construct predictions specifically tailored to the optimization problem that is to be solved and where those predictions are then used as input information. Essentially, the predictions are intended to yield decisions with a low disappointment or regret. This framework is known in the literature as (smart) Predict-then-Optimize, see, e.g., [4, 17, 18, 36], and references therein.

Our research, in contrast, builds upon Distributionally Robust Optimization (DRO), which is a powerful modeling paradigm to protect the task of decision-making against the ambiguity of the underlying probability distribution of the uncertainty [40]. Nevertheless, the technical literature on the use of DRO to address Prescriptive or Conditional Stochastic Programming problems is still relatively scarce. We highlight papers [9, 15, 28, 31, 33, 37, 38] (see Footnote 1), with [38] being a generalization of [37]. In [15], the authors resort to a scenario-dependent ambiguity set to exploit feature information in a DRO framework. However, their objective is to minimize a joint expectation and, consequently, their approach cannot directly handle the Conditional Stochastic Optimization setting we consider here. In [28], the authors deal with a stochastic control problem with time-dependent data. They extend the idea of [29] to a fully dynamic setting and robustify the control policy against the worst-case weight vector that is within a certain \(\chi ^2\)-distance from the one originally given by the Nadaraya-Watson estimator. In the case of [9], the authors propose using the conditional empirical distribution given by a local predictive method as the center of the Wasserstein ball that characterizes the DRO approach in [35]. This proposal, nonetheless, fails to explicitly account for the inference error associated with the local estimation. In [31, 33], the authors develop a two-step procedure whereby a regression model between the uncertainty and the features is first estimated and then a distributionally robust decision-making problem is formulated, considering a Wasserstein ball around the empirical distribution of the residuals. Finally, the authors in [38] also consider a Wasserstein-ball ambiguity set as in [9, 31, 33], but centered at the empirical distribution of the joint data sample of the uncertainty and the features. In addition, they further constrain the ambiguity set by imposing that the worst-case distribution assigns some probability mass to the support of the uncertainty conditional on the values taken on by the features.

Against this background, our main contributions are:

1. Modeling power: We develop a general framework to handle prescriptive stochastic programs within the DRO paradigm. Our DRO framework is based on a new class of ambiguity sets that exploit the close and convenient connection between trimmings and the partial mass problem to immunize the decision against the error incurred in the process of inferring conditional information from joint (limited) data. We also show that our approach serves as a natural framework for the application of DRO in data-driven decision-making under contaminated samples.

2. Computational tractability: Our framework is as complex as the Wasserstein-metric-based DRO approach proposed in [35] without side information. Therefore, we extend the mass-transportation approach to the realm of Conditional Stochastic Optimization while preserving its appealing tractability properties.

3. Theoretical results and performance guarantees: Leveraging theory from probability trimmings and optimal transport, we show that our DRO model enjoys a finite sample guarantee and is asymptotically consistent.

4. Numerical results: We evaluate our DRO approach on the single-item newsvendor problem and the portfolio allocation problem, and compare it with the KNN method described in [7], the robustified KNN proposed in [9], and a KNN followed by the standard Wasserstein-distance-based DRO model introduced in [35], as also suggested in [9]. Unlike all these approaches, ours explicitly accounts for the cost impact of the potential error made when inferring conditional information from a joint sample of the uncertainty and the covariates. To this end, we minimize the worst-case cost over a Wasserstein ball of probability measures with an ambiguous center.

The rest of the paper is organized as follows. In Sect. 2, we formulate our DRO framework to address decision-making problems under uncertainty in the presence of side information and show that it is as tractable as the standard Wasserstein-metric-based DRO approach developed in [35]. In Sect. 3.1, we deal with the case in which the side information corresponds to an event of known and positive probability and discuss its application to data-driven decision-making under contaminated samples. The situation in which the probability of such an event is positive, but unknown, is treated in Sect. 3.2. Section 3.3 elaborates on the case in which the side information reduces to a specific realization of the feature vector, more precisely, the instance where the side information represents an event of zero probability. Section 4 provides results from numerical experiments and, finally, Sect. 5 concludes the paper.

Notation. We use \(\overline{{\mathbb {R}}}\) to represent the extended real line, and adopt the conventions of its associated arithmetic. Moreover, \({\mathbb {R}}_+\) stands for the set of non-negative real numbers. We employ lower-case bold face letters to represent vectors. The inner product of two vectors \( {\mathbf {u}}, {\mathbf {v}} \) is denoted as \(\langle {\mathbf {u}}, {\mathbf {v}}\rangle = {\mathbf {u}}^T {\mathbf {v}}\) and by \(\left\| {\mathbf {u}}\right\| \) we denote the norm of the vector \({\mathbf {u}}\). For a set A, the indicator function \({\mathbb {I}}_A({\mathbf {a}})\) is defined through \({\mathbb {I}}_A({\mathbf {a}})=1\) if \({\mathbf {a}}\in A\); \(=0\) otherwise. The Lebesgue measure in \({\mathbb {R}}^d\) is denoted as \(\lambda ^d\). We use the symbol \(\delta _{\varvec{\xi }}\) to represent the Dirac distribution supported on \(\varvec{\xi }\). Additionally, we reserve the symbol “\(\;\widehat{}\;\)” for objects which are dependent on the sample data. The K-fold product of a distribution \({\mathbb {Q}}\) will be denoted as \({\mathbb {Q}}^K\). Finally, the symbols \({\mathbb {E}}\) and \({\mathbb {P}}\) denote, respectively, “expectation” and “probability” (the context will give us the measure under which that expectation or probability is taken).

2 Data-driven distributionally robust optimization with side information

In this paper, we propose a general framework for data-driven distributionally robust optimization with side information that relies on two related tools, namely, the optimal mass transport theory and the concept of trimming of a probability measure. Next, we introduce some preliminaries that help motivate our proposal. All the proofs that are missing in the main text are compiled in the “Appendix”.

2.1 Preliminaries and motivation

Let \({\mathbf {x}}\in X \subseteq {\mathbb {R}}^{d_{{\mathbf {x}}}}\) be the decision variable vector and \({\mathbf {y}}\), with support set \(\varXi _{{\mathbf {y}}} \subseteq {\mathbb {R}}^{d_{{\mathbf {y}}}}\), the random vector that models the uncertainty affecting the value of the decision. Let \({\mathbf {z}}\), with support set \(\varXi _{{\mathbf {z}}} \subseteq {\mathbb {R}}^{d_{{\mathbf {z}}}}\), be the (random) feature vector and denote the objective function to be minimized as \(f({\mathbf {x}}, \varvec{\xi })\), where \(\varvec{\xi }:=({\mathbf {z}},{\mathbf {y}})\).

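Given a new piece of information in the form of the event \(\varvec{\xi } \in {\widetilde{\varXi }}\), the decision maker seeks to compute the optimal decision that minimizes the (true) conditional expected cost:

\[
\inf _{{\mathbf {x}}\in X} \; {\mathbb {E}}_{{\mathbb {Q}}}\left[ f({\mathbf {x}}, \varvec{\xi }) \mid \varvec{\xi } \in {\widetilde{\varXi }}\right] = \inf _{{\mathbf {x}}\in X} \; {\mathbb {E}}_{{\mathbb {Q}}_{{\widetilde{\varXi }}}}\left[ f({\mathbf {x}}, \varvec{\xi })\right] \tag{1}
\]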

where \({\mathbb {Q}}\) is the true joint distribution of \(\varvec{\xi }:=({\mathbf {z}},{\mathbf {y}})\) with support set \(\varXi \subseteq {\mathbb {R}}^{d_{{\mathbf {z}}}+d_{{\mathbf {y}}}}\) and \({\mathbb {Q}}_{{\widetilde{\varXi }}}\) is the associated true distribution of \(\varvec{\xi }\) conditional on \(\varvec{\xi } \in {\widetilde{\varXi }}\). Hence, we implicitly assume that \({\mathbb {Q}}_{{\widetilde{\varXi }}}\) is a regular conditional distribution and that the conditional expectation (1) is well defined.

An example of \({\widetilde{\varXi }}\) would be \({\widetilde{\varXi }}:=\{ \varvec{\xi }=({\mathbf {z}},{\mathbf {y}}) \in \varXi \; : \; {\mathbf {z}}\in {\mathscr {Z}}\}\), with \({\mathscr {Z}}\subseteq \varXi _{{\mathbf {z}}} \) being an uncertainty set built from the information on the features. We note that this definition includes the case in which \({\mathscr {Z}}\) reduces to a singleton \({\mathbf {z}}^*\) representing a particular realization of the features.

Unfortunately, when it comes to solving problem (1), neither the true distribution \({\mathbb {Q}}\) nor, even less so, the conditional one \({\mathbb {Q}}_{{\widetilde{\varXi }}}\) is generally known to the decision maker. Actually, the decision maker can typically rely only on a data sample consisting of N observations \(\widehat{\varvec{\xi }}_i:=(\widehat{{\mathbf {z}}}_{i}, \widehat{{\mathbf {y}}}_{i})\) for \(i=1, \ldots , N\), which we assume are i.i.d. Therefore, the solution to problem (1) per se is, in practice, out of reach and the best the decision maker can do is to approximate the solution to (1) with some (probabilistic) performance guarantees. Within this context, Distributionally Robust Optimization (DRO) emerges as a powerful modeling framework to achieve that goal. In brief, the DRO approach aims to find a decision \({\mathbf {x}}\in X\) that is robust against all conditional probability distributions that are somehow plausible given the information at the decision maker’s disposal. This is mathematically stated as follows:

\[
\inf _{{\mathbf {x}}\in X} \; \sup _{Q_{{\widetilde{\varXi }}} \in \widehat{{\mathscr {U}}}_N} \; {\mathbb {E}}_{Q_{{\widetilde{\varXi }}}}\left[ f({\mathbf {x}}, \varvec{\xi })\right] \tag{2}
\]

where \(\widehat{{\mathscr {U}}}_N\) is a so-called ambiguity set that contains all those plausible conditional distributions. This ambiguity set must be built from the available information on \(\varvec{\xi }\), which, in our case, comprises the N observations \(\{\widehat{\varvec{\xi }}_i\}_{i=1}^{N}\). The subscript N in \(\widehat{{\mathscr {U}}}_N\) is intended to underline this issue. Furthermore, the condition \(Q_{{\widetilde{\varXi }}}({\widetilde{\varXi }}) = 1\) for all \(Q_{{\widetilde{\varXi }}} \in \widehat{{\mathscr {U}}}_N\) is implicit in the construction of that set. In our setup, however, problem (2) poses a major challenge, which has to do with the fact that the observations \(\{\widehat{\varvec{\xi }}_i\}_{i=1}^{N}\) pertain to the true joint distribution \({\mathbb {Q}}\), and not to the conditional one \({\mathbb {Q}}_{{\widetilde{\varXi }}}\). Consequently, we need to build an ambiguity set \(\widehat{{\mathscr {U}}}_N\) for the plausible conditional distributions from the limited joint information on \({\mathbb {Q}}\) provided by the data \(\{\widehat{\varvec{\xi }}_i\}_{i=1}^{N}\).

At this point, we should note that there are several approaches in the technical literature to handle the conditional stochastic optimization problem (1) for the particular case in which \({\widetilde{\varXi }}\) is defined as \({\widetilde{\varXi }}:=\{ \varvec{\xi }=({\mathbf {z}},{\mathbf {y}}) \in \varXi \; : \; {\mathbf {z}}= {\mathbf {z}}^*\}\). For example, the authors of [7] approximate (1) by the following conditional estimate

\[
\inf _{{\mathbf {x}}\in X} \; \sum _{i=1}^{N} w_N^i({\mathbf {z}}^*) \, f\left( {\mathbf {x}}, ({\mathbf {z}}^*, \widehat{{\mathbf {y}}}_i)\right) \tag{3}
\]

where \(w_N^i({\mathbf {z}}^*)\) is a weight function that can be given by various non-parametric machine learning methods such as K-nearest neighbors, kernel regression, CART, and random forests. Formulation (3) can be naturally interpreted as a (conditional) Sample-Average-Approximation (SAA) of problem (1).
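To make the weighted estimate concrete, the following is a minimal sketch of a conditional SAA with K-nearest-neighbor weights. It is not the implementation used in [7]: the newsvendor cost, its unit costs `holding` and `backorder`, and the function names are assumptions chosen for illustration. Because the weighted newsvendor objective is convex and piecewise linear in the order quantity, the sketch only evaluates it at the demand observations that carry positive weight.

```python
import math

def knn_weights(z_samples, z_star, k):
    """KNN weights: 1/k for the k feature samples closest to z_star, 0 otherwise."""
    dists = [math.dist(z, z_star) for z in z_samples]
    nearest = sorted(range(len(z_samples)), key=lambda i: dists[i])[:k]
    weights = [0.0] * len(z_samples)
    for i in nearest:
        weights[i] = 1.0 / k
    return weights

def newsvendor_cost(x, y, holding=1.0, backorder=3.0):
    """Hypothetical newsvendor cost for order quantity x and demand y."""
    return holding * max(x - y, 0.0) + backorder * max(y - x, 0.0)

def conditional_saa(z_samples, y_samples, z_star, k):
    """Minimize the KNN-weighted sample-average cost over the order quantity."""
    weights = knn_weights(z_samples, z_star, k)

    def objective(x):
        return sum(w * newsvendor_cost(x, y) for w, y in zip(weights, y_samples))

    # The objective is convex piecewise linear, so a minimizer lies at a
    # demand observation with positive weight.
    candidates = [y for w, y in zip(weights, y_samples) if w > 0.0]
    best_x = min(candidates, key=objective)
    return best_x, objective(best_x)
```

Other weight functions (kernel regression, trees, forests) would only change `knn_weights`; the optimization step stays the same.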

The authors in [8] extend the work in [7] to accommodate the setting in which the outcome of the uncertainty \({\mathbf {y}}\) may be contingent on the decision \({\mathbf {x}}\) taken. For this purpose, they work with an enriched data set comprising observations of the uncertainty \({\mathbf {y}}\), the decision \({\mathbf {x}}\) and the covariates \({\mathbf {z}}\), and allow the weights in (3) to depend on \({\mathbf {x}}\) too. Besides, they add terms to the objective function of (3) to penalize estimates of its variance and bias. The case in which the weight function in (3) is given by the Nadaraya-Watson (NW) kernel regression estimator is considered in [29, 39]. In [39], in addition, the authors leverage techniques from moderate deviations theory to design a regularization scheme that reduces the optimistic bias of the NW approximation and to provide insight into its out-of-sample performance. The work in [12] focuses on conditional estimators (3) where the weights are provided by the NW or KNN method. Its authors use DRO based on the relative entropy distance for discrete distributions to get decisions from (3) that perform well on a large portion of resamples bootstrapped from the empirical distribution of the available data set.

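Finally, the authors in [9] provide a robustified version of the conditional estimator (3), which takes the following form

\[
\inf _{{\mathbf {x}}\in X} \; \sum _{i=1}^{N} w_N^i({\mathbf {z}}^*) \, \sup _{{\mathbf {y}} \in {\mathscr {U}}^i_N} f\left( {\mathbf {x}}, ({\mathbf {z}}^*, {\mathbf {y}})\right) \tag{4}
\]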

where \({\mathscr {U}}^i_N:= \{ {\mathbf {y}} \in \varXi _{{\mathbf {y}}} : \left\| {\mathbf {y}}-\widehat{{\mathbf {y}}}_i \right\| _p \leqslant \varepsilon _N \}\). This problem can be seen as a robust SAA method capable of exploiting side information and has also been used in [10, 11].

In our case, however, we follow a different path to address the conditional stochastic optimization problem (1) by way of (2). More precisely, we leverage the notion of trimmings of a distribution and the related theory of partial mass transportation.

2.2 The partial mass transportation problem and trimmings

This section introduces some concepts about trimmings and the partial mass transportation problem that help us construct the ambiguity set \(\widehat{{\mathscr {U}}}_N\) in (2) from the sample data \(\{\widehat{\varvec{\xi }}_i\}_{i=1}^{N}\). For simplicity, we restrict ourselves to probability measures defined in \({\mathbb {R}}^d\).

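If \({\mathbb {Q}}({\widetilde{\varXi }}) = \alpha > 0\) (our analysis, though, will also cover the case \(\alpha = 0\) later in Sect. 3.3), problem (1) can be recast as

\[
\inf _{{\mathbf {x}}\in X} \; \frac{1}{\alpha } \, {\mathbb {E}}_{{\mathbb {Q}}}\left[ f({\mathbf {x}}, \varvec{\xi }) \, {\mathbb {I}}_{{\widetilde{\varXi }}}(\varvec{\xi }) \right] \tag{5}
\]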

which only requires that \({\mathbb {E}}_{{\mathbb {Q}}}\left[ |f({\mathbf {x}}, \varvec{\xi }){\mathbb {I}}_{{\widetilde{\varXi }}}(\varvec{\xi })| \right] <\infty \) for all \({\mathbf {x}}\in X\) (see [27, Eq. 6.2]).
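The recast rests on the identity \({\mathbb {E}}_{{\mathbb {Q}}}[f \mid \varvec{\xi } \in {\widetilde{\varXi }}] = \frac{1}{\alpha }{\mathbb {E}}_{{\mathbb {Q}}}[f \, {\mathbb {I}}_{{\widetilde{\varXi }}}]\) for a fixed decision. As a sanity check, the sketch below evaluates both sides for a finitely supported joint distribution; the function name and the representation of atoms as `(z, y)` pairs are assumptions made for illustration only.

```python
def conditional_expectation(atoms, probs, in_event, cost):
    """Return (alpha, E[cost | event], (1/alpha) * E[cost * indicator]).

    atoms    : list of points xi = (z, y) carrying the probability mass
    probs    : probability of each atom (non-negative, summing to one)
    in_event : function xi -> bool, membership in the conditioning set
    cost     : function xi -> float, the cost for a fixed decision
    """
    alpha = sum(p for a, p in zip(atoms, probs) if in_event(a))
    if alpha <= 0.0:
        raise ValueError("the conditioning event must have positive probability")
    # Direct route: renormalize the mass inside the event, then average.
    direct = sum((p / alpha) * cost(a) for a, p in zip(atoms, probs) if in_event(a))
    # Recast route: joint expectation of cost times indicator, divided by alpha.
    recast = sum(p * cost(a) * (1.0 if in_event(a) else 0.0)
                 for a, p in zip(atoms, probs)) / alpha
    return alpha, direct, recast
```

The two routes agree whenever \(\alpha > 0\); the case \(\alpha = 0\) is rejected here because the division is undefined.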

Now we introduce the notion of a trimming of a distribution, which is at the core of our proposed DRO framework.

Definition 1

(\((1-\alpha )\)-trimmings, Definition 1.1 from [6]) Given \(0 \leqslant \alpha \leqslant 1\) and probability measures P, Q on \({\mathbb {R}}^d\), we say that Q is a \((1-\alpha )\)-trimming of P if Q is absolutely continuous with respect to P, and the Radon-Nikodym derivative satisfies \(\frac{dQ}{dP} \leqslant \frac{1}{\alpha }\). The set of all \((1-\alpha )\)-trimmings (or trimming set of level \(1-\alpha \)) of P will be denoted by \({\mathscr {R}}_{1-\alpha }(P)\).

As extreme cases, we have that for \(\alpha = 1, \; {\mathscr {R}}_0(P)\) is just P, while, for \(\alpha = 0\), \({\mathscr {R}}_1(P)\) is the set of all probability measures absolutely continuous with respect to P. Given a probability P on \({\mathbb {R}}^d\), if \(\alpha _1 \leqslant \alpha _2\), then \({\mathscr {R}}_{1-\alpha _2}(P) \subset {\mathscr {R}}_{1-\alpha _1}(P) \). Especially useful is the fact that a trimming set is a convex set, which is, besides, compact under the topology of weak convergence. We refer the reader to [3, Proposition 2.7] for other interesting properties about the set \({\mathscr {R}}_{1-\alpha }(P)\).

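Consider now the following minimization problem:

\[
D\left( {\mathscr {R}}_{1-\alpha }(P), Q\right) := \min _{R \in {\mathscr {R}}_{1-\alpha }(P)} D(R, Q) \tag{6}
\]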

where D is a probability metric.

Problem (6) is known as the \((D,1-\alpha )-\)partial (or incomplete) mass problem [6]. While there is a variety of probability metrics we could choose from to play the role of D in (6), here we work with the space \({\mathscr {P}}_p({\mathbb {R}}^d)\) of probability distributions supported on \({\mathbb {R}}^d\) with finite p-th moment and restrict ourselves to the \(p-\)Wasserstein metric, \({\mathscr {W}}_p\), for its tractability and theoretical advantages. In such a case (i.e., when \(D={\mathscr {W}}_p\)), problem (6) is referred to as a partial mass transportation problem and interpolates between the classical optimal mass transportation problem (when \(\alpha =1\)) and the random quantization problem (when \(\alpha =0\)).

Intuitively, the partial optimal transport problem goes as follows. We have an excess supply of mass at the origin and a demand for mass that must be met at the destination, so that not all of the supply needs to be shipped (demand\(= \alpha \times \)supply). In other words, a \((1-\alpha )\)-fraction of the mass at the origin can be left unserved. The goal is to perform this task at the cheapest transportation cost. If we represent the demand at destination by a target probability distribution R, we can model the supply at origin as \(\frac{P}{\alpha }\), where P is another probability distribution and the mass required at destination is \(\alpha \) times the mass at origin. This way, a partial optimal transportation plan is a probability measure \(\varPi \) on \({\mathbb {R}}^d \times {\mathbb {R}}^d\) with first marginal in \({\mathscr {R}}_{1-\alpha }(P)\) and with second marginal equal to R, which solves the following cost minimization problem:

\[
{\mathscr {W}}_p^p\left( {\mathscr {R}}_{1-\alpha }(P), R\right) = \min _{\varPi } \left\{ \int _{{\mathbb {R}}^d \times {\mathbb {R}}^d} \left\| \varvec{\xi }_1 - \varvec{\xi }_2 \right\| ^p \, \varPi (d\varvec{\xi }_1, d\varvec{\xi }_2) \; : \; \varPi \text { has first marginal in } {\mathscr {R}}_{1-\alpha }(P) \text { and second marginal } R \right\}
\]

The following lemma allows us to characterize the connection between the joint distribution \({\mathbb {Q}}\) and the conditional distribution \({\mathbb {Q}}_{{\widetilde{\varXi }}}\) in problem (1) above in terms of the partial mass problem.

Lemma 1

Let Q be a probability on \({\mathbb {R}}^d\) such that \(Q({\widetilde{\varXi }}) = \alpha >0\) and let \(Q_{{\widetilde{\varXi }}}\) be the Q-conditional probability distribution given the event \(\varvec{\xi } \in {\widetilde{\varXi }}\). Also, assume that for a given probability metric D, \({\mathscr {R}}_{1-\alpha }(Q)\) is closed for D over an appropriate set of probability distributions. Then, \(Q_{{\widetilde{\varXi }}}\) is the unique distribution that satisfies \(Q_{{\widetilde{\varXi }}}({\widetilde{\varXi }})=1\) and \(D\left( {\mathscr {R}}_{1-\alpha }(Q), Q_{{\widetilde{\varXi }}}\right) = 0\).

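By way of Lemma 1, we can reformulate problem (1) as follows:

\[
\begin{aligned}
\inf _{{\mathbf {x}}\in X} \; \sup _{Q_{{\widetilde{\varXi }}}} \quad & {\mathbb {E}}_{Q_{{\widetilde{\varXi }}}}\left[ f({\mathbf {x}}, \varvec{\xi })\right] && \text {(7a)}\\
\text {s.t.} \quad & {\mathscr {W}}_p^p\left( {\mathscr {R}}_{1-\alpha }({\mathbb {Q}}), Q_{{\widetilde{\varXi }}}\right) = 0 && \text {(7b)}\\
& Q_{{\widetilde{\varXi }}}({\widetilde{\varXi }}) = 1 && \text {(7c)}
\end{aligned}
\]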

which now presents a form which is much more suited to our purpose, that is, to get to the DRO-type of problem (2) we propose. The change, nonetheless, has been essentially cosmetic, because problem (7) still relies on the true joint distribution \({\mathbb {Q}}\) and therefore, is of no use in practice as it stands right now. To make it practical, we need to rewrite it not in terms of the unknown \({\mathbb {Q}}\), but in terms of the information available to the decision maker, i.e., the sample data \(\{\widehat{\varvec{\xi }}_i\}_{i=1}^{N}\). For that purpose, it seems sensible and natural to replace \({\mathbb {Q}}\) in (7b) with its best approximation taken directly from the data, namely, the empirical measure of the sample, \(\widehat{{\mathbb {Q}}}_N\). Logically, to accommodate the approximation, we will need to introduce a budget \({\widetilde{\rho }}\) in equation (7b), that is,

\[
\begin{aligned}
(\mathrm{P}) \quad \inf _{{\mathbf {x}}\in X} \; \sup _{Q_{{\widetilde{\varXi }}}} \quad & {\mathbb {E}}_{Q_{{\widetilde{\varXi }}}}\left[ f({\mathbf {x}}, \varvec{\xi })\right] && \text {(8a)}\\
\text {s.t.} \quad & {\mathscr {W}}_p^p\left( {\mathscr {R}}_{1-\alpha }(\widehat{{\mathbb {Q}}}_N), Q_{{\widetilde{\varXi }}}\right) \leqslant {\widetilde{\rho }} && \text {(8b)}\\
& Q_{{\widetilde{\varXi }}}({\widetilde{\varXi }}) = 1 && \text {(8c)}
\end{aligned}
\]

Hereinafter we will use \(\widehat{{\mathscr {U}}}_{N}(\alpha ,{\widetilde{\rho }})\) to denote the ambiguity set defined by constraints (8b)–(8c). Under certain conditions, this uncertainty set enjoys nice topological properties, as we state in [19, Proposition EC.2].

Now we define what we call the minimum transportation budget, which plays an important role in the selection of budget \({\widetilde{\rho }}\) in problem (P).

Definition 2

(Minimum transportation budget) Given \(\alpha >0\) in problem \(\left( \mathrm{P}\right) \), the minimum transportation budget, which we denote as \({\underline{\epsilon }}_{N\alpha }\), is the p-Wasserstein distance between the set \({\mathscr {P}}_p({\widetilde{\varXi }})\) and the \((1-\alpha )\)-trimming of the empirical distribution \(\widehat{{\mathbb {Q}}}_N\) that is the closest to that set, i.e., \( \inf \{ {\mathscr {W}}_p(P,Q) \;:\; P \in {\mathscr {R}}_{1-\alpha }( \widehat{{\mathbb {Q}}}_N), \; Q \in {\mathscr {P}}_p({\widetilde{\varXi }})\}\), which is given by

where \(\varvec{\xi }_{k:N}\) is the k-th nearest data point from the sample to set \({\widetilde{\varXi }}\) and \( \text {dist} (\varvec{\xi }_j, {\widetilde{\varXi }}):= \inf _{\varvec{\xi }\in {\widetilde{\varXi }}} \text {dist} (\varvec{\xi }_j, \varvec{\xi }) = \inf _{\varvec{\xi }\in {\widetilde{\varXi }}}||\varvec{\xi }_j-\varvec{\xi }||. \) If \(\alpha = 0\), then \( {\underline{\epsilon }}_{N0} = \text {dist}(\varvec{\xi }_{1:N}, {\widetilde{\varXi }})\).

Importantly, the minimum transportation budget to the power of p, i.e., \({\underline{\epsilon }}_{N\alpha }^{p}\), is the minimum value of \({\widetilde{\rho }}\) in \(\left( \mathrm{P}\right) \) for this problem to be feasible. Furthermore, \({\underline{\epsilon }}_{N\alpha }\) is random, because it depends on the available data sample, but realizes before the decision \({\mathbf {x}}\) is to be made. It constitutes, therefore, input data to problem \(\left( \mathrm{P}\right) \).
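The feasibility threshold can be computed directly from the sample. The sketch below is one way to do it, under the following reasoning (an assumption of this sketch, derived from Definition 2 rather than quoted from it): each data point is moved to its nearest point in \({\widetilde{\varXi }}\) at cost \(\text {dist}(\varvec{\xi }_j, {\widetilde{\varXi }})^p\) per unit of mass, and the cheapest trimming loads the maximum admissible weight \(\frac{1}{N\alpha }\) on the closest points until the total mass equals one. The function name and the choice to pass precomputed distances are illustrative.

```python
def min_transport_budget_pow(distances, alpha, p=1):
    """Return the p-th power of the minimum transportation budget.

    distances : dist(xi_j, target set) for each of the N data points
    alpha     : probability level in [0, 1]
    p         : order of the Wasserstein metric (p >= 1)
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    ordered = sorted(distances)
    n = len(ordered)
    if alpha == 0.0:
        # All the mass may sit on the single closest data point.
        return ordered[0] ** p
    cap = 1.0 / (n * alpha)  # largest weight a trimming can give one point
    remaining = 1.0          # probability mass still to be allocated
    total = 0.0
    for d in ordered:
        mass = min(cap, remaining)
        total += mass * d ** p
        remaining -= mass
        if remaining <= 1e-12:
            break
    return total
```

Any budget \({\widetilde{\rho }}\) below the returned value leaves the ambiguity set empty; the budget \({\underline{\epsilon }}_{N\alpha }\) itself is the p-th root of the returned value.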

We note that, if the random vector \({\mathbf {y}}\) takes values in a set that is independent of the feature vector \({\mathbf {z}}\), i.e., for all \({\mathbf {z}}^* \in \varXi _{{\mathbf {z}}} \), \(\{{\mathbf {y}} \in \varXi _{{\mathbf {y}}} : \varvec{\xi } = ({\mathbf {z}}^*, {\mathbf {y}}) \in \varXi \} = \varXi _{{\mathbf {y}}} \), then \(\text {dist}(\varvec{\xi }_j,{\widetilde{\varXi }}) = \inf _{\varvec{\xi }\in {\widetilde{\varXi }}}||\varvec{\xi }_j-\varvec{\xi }|| = \inf _{\varvec{\xi } = ({\mathbf {z}},{\mathbf {y}}) \in {\widetilde{\varXi }}}||{\mathbf {z}}_j-{\mathbf {z}}||\).

Furthermore, in what follows, we assume that \(\text {dist}(\varvec{\xi }_j,{\widetilde{\varXi }})\) (interpreted as a random variable) conditional on \(\varvec{\xi }_j \notin {\widetilde{\varXi }}\) has a continuous distribution function. This ensures that, in the case \({\mathbb {Q}}({\widetilde{\varXi }}) = 0\), which we study in Sect. 3.3, there will be exactly K nearest data points to \({\widetilde{\varXi }}\) with probability one.

Next we present an interesting result, which deals with the inner supremum of problem (P) and adds more meaning to this problem by linking it to an alternative formulation more in the style of the Wasserstein data-driven DRO approach proposed in [35], where, however, no side information is taken into account. In fact, the distributionally robust approach to conditional stochastic optimization that is proposed in [38] is based on this alternative formulation (see Proposition A.4 in that work; see also Footnote 2).

Proposition 1

Given \(N\geqslant 1\), \({\mathbb {Q}}({\widetilde{\varXi }}) = \alpha >0\), and any positive value of \({\widetilde{\rho }}\), problem (SP2) is a relaxation of (SP1), where (SP1) and (SP2) are given by

and where by “relaxation” it is meant that any solution Q feasible in (SP1) can be mapped into a solution \(Q_{{\widetilde{\varXi }}}\) feasible in (SP2) with the same objective function value.

Moreover, if \(\widehat{{\mathbb {Q}}}_{N}({\widetilde{\varXi }}) = 0\) or \(\alpha = 1\), then (SP1) and (SP2) are equivalent.

Among other things, Proposition 1 reveals that parameter \({\widetilde{\rho }}\) in problem (SP2), and hence in problem (P), can be understood as a cost budget per unit of transported mass. Likewise, parameter \(\alpha \) can be interpreted as the minimum amount of mass (in per unit) of the empirical distribution \(\widehat{{\mathbb {Q}}}_N\) that must be transported to the support \({\widetilde{\varXi }}\). This interpretation of parameters \({\widetilde{\rho }}\) and \(\alpha \) will be useful to follow the rationale behind the DRO solution approaches that we develop later on.

On the other hand, despite the connection between problems (SP1) and (SP2) that Proposition 1 unveils, the latter is qualitatively more amenable to further generalization and analysis. Examples of this are given by the relevant cases \(\alpha = 0\), for which problem (SP1) is ill-posed, while problem (SP2) is not, and \(\alpha \) unknown, for which the use of trimming sets in (SP2) allows for a more straightforward treatment. We will deal with both cases in Sects. 3.3 and 3.2, respectively. Before that, we provide an implementable reformulation of the proposed DRO problem (P).

2.3 Towards a tractable reformulation of the partial mass transportation problem

In this section, we put the proposed DRO problem (P) in a form more suited to tackle its computational implementation and solution. For this purpose, we first need to introduce a technical result whereby we characterize the trimming sets of an empirical probability measure.

Lemma 2

Consider the sample data \(\{\widehat{\varvec{\xi }}_i\}_{i=1}^{N}\) and their associated empirical measure \(\widehat{{\mathbb {Q}}}_{N} = \frac{1}{N}\sum _{i=1}^{N}{\delta _{\widehat{\varvec{\xi }}_i}}\). If \(\alpha > 0\), the set of all \((1-\alpha )\)-trimmings of \(\widehat{{\mathbb {Q}}}_{N}\) is given by all probability distributions in the form \(\sum _{i=1}^{N}{b_i\delta _{\widehat{\varvec{\xi }}_i}}\) such that \(0\le b_i\le \frac{1}{N\alpha }\), \(\forall i =1, \ldots , N\), and \(\sum _{i=1}^{N}{b_i}=1\). Furthermore, if \(\alpha = 0\), the set \({\mathscr {R}}_{1-\alpha }(\widehat{{\mathbb {Q}}}_{N})\) of \((1-\alpha )\)-trimmings of \(\widehat{{\mathbb {Q}}}_{N}\) becomes \({\mathscr {R}}_{1}(\widehat{{\mathbb {Q}}}_{N}) = \{\sum _{i=1}^{N}{b_i\delta _{\widehat{\varvec{\xi }}_i}}\) such that \(b_i \geqslant 0\), \(\forall i =1, \ldots , N\), and \(\sum _{i=1}^{N}{b_i}=1\}\).

Proof

If \(\alpha > 0\), the form of any \((1-\alpha )\)-trimming of \(\widehat{{\mathbb {Q}}}_{N}\) as \(\sum _{i=1}^{N}{b_i\delta _{\widehat{\varvec{\xi }}_i}}\), along with the condition \(b_i\le \frac{1}{N\alpha }\), follows directly from Definition 1 of a \((1-\alpha )\)-trimming. Naturally, \(b_{i} \ge 0\) and \(\sum _{i=1}^{N}{b_i}=1\) are then required because any \((1-\alpha )\)-trimming is a probability distribution.

On the other hand, if \(\alpha = 0\), the resulting trimming set \({\mathscr {R}}_{1}(\widehat{{\mathbb {Q}}}_{N})\) is simply the family of all probability distributions supported on the data points \(\{\widehat{\varvec{\xi }}_i\}_{i=1}^{N}\). \(\square \)
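The characterization in Lemma 2 is easy to check numerically. The following minimal sketch tests whether a weight vector \((b_1, \ldots , b_N)\) on the data points defines a \((1-\alpha )\)-trimming of the empirical measure; the function name and the numerical tolerance are assumptions of the sketch.

```python
def is_trimming(weights, alpha, tol=1e-9):
    """Check whether weights b_i on the N data points form a
    (1 - alpha)-trimming of the empirical measure."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    n = len(weights)
    # Any trimming is a probability distribution on the data points.
    if any(b < -tol for b in weights):
        return False
    if abs(sum(weights) - 1.0) > tol:
        return False
    if alpha == 0.0:
        # No upper bound: every distribution on the data points qualifies.
        return True
    cap = 1.0 / (n * alpha)
    return all(b <= cap + tol for b in weights)
```

For example, with \(N = 4\) the weights `[0.5, 0.5, 0.0, 0.0]` pass for \(\alpha = 0.5\) but fail for \(\alpha = 1\), where only the uniform weights remain admissible. This illustrates how the trimming set shrinks as \(\alpha \) grows.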

In short, Lemma 2 tells us that trimming a data sample of size N with level \(1-\alpha \) involves reweighting the empirical distribution of such data by giving a new weight less than or equal to \(\frac{1}{N\alpha }\) to each data point. Therefore, we can recast constraint \({\mathscr {W}}_p^p({\mathscr {R}}_{1-\alpha }( \widehat{{\mathbb {Q}}}_N),Q_{{\widetilde{\varXi }}})\leqslant {\widetilde{\rho }}\) in problem (P) as

We are now ready to introduce the main result of this section.

Theorem 1

(Reformulation based on strong duality) For \(\alpha > 0\) and any value of \({\widetilde{\rho }}\geqslant {\underline{\epsilon }}^p_{N\alpha }\), subproblem (SP2) is equivalent to the following one:

Surely the most important takeaway message of Theorem 1 is that problem (P) is as tractable as the standard Wasserstein-metric-based DRO formulation proposed in [35] and [34]. In these two papers, conditions under which the inner supremum in \(\mathrm{(SP2')}\) can be recast in a more tractable form are provided. As an example, in Theorem EC.2 in the extended version of this paper [19], we provide a more refined reformulation of \(\mathrm{(SP2')}\), whereby the problems we solve in Sect. 4 can be directly handled.

In the following section, we show that problem (P) works, under certain conditions, as a statistically meaningful surrogate decision-making model for the target conditional stochastic program (1).

3 Finite sample guarantee and asymptotic consistency

Next we argue that the worst-case optimal expected cost provided by problem (P) for a fixed sample size N and a suitable choice of parameters \((\alpha , {\widetilde{\rho }})\) (dependent on N) leads to an upper confidence bound on the out-of-sample performance attained by the optimizers of (P) (finite sample guarantee) and that those optimizers almost surely converge to an optimizer of the true optimal expected cost as N grows to infinity (asymptotic consistency).

To be more precise, the out-of-sample performance of a given data-driven candidate solution \(\widehat{{\mathbf {x}}}_N\) to problem (1) is defined as \({\mathbb {E}}_{{\mathbb {Q}}} [f(\widehat{{\mathbf {x}}}_N , \varvec{\xi })\mid \varvec{\xi } \in {\widetilde{\varXi }}] = {\mathbb {E}}_{{\mathbb {Q}}_{{\widetilde{\varXi }}}} [f(\widehat{{\mathbf {x}}}_N , \varvec{\xi })]\). We say that a data-driven method built to address problem (1) enjoys a finite sample guarantee, if it produces pairs \((\widehat{{\mathbf {x}}}_N, {\widehat{J}}_{N})\) satisfying a relation in the form

and \({\widehat{J}}_N\) is a certificate for the out-of-sample performance of \(\widehat{{\mathbf {x}}}_N \) (i.e., an upper bound that is generally contingent on the data sample). The probability on the right-hand side of (10), i.e., \(1-\beta \), is known as the reliability of \((\widehat{{\mathbf {x}}}_N, {\widehat{J}}_N )\) and can be understood as a confidence level.

Our analysis relies on the lemma below, which immediately follows from setting \(P_1:=\widehat{{\mathbb {Q}}}_N,Q:={\mathbb {Q}}_{{\widetilde{\varXi }}}, P_2:={\mathbb {Q}}\) in Lemma 3.13 on probability trimmings in [1].

Lemma 3

Assume that \({\mathbb {Q}}_{{\widetilde{\varXi }}}, {\mathbb {Q}} \in {\mathscr {P}}_p({\mathbb {R}}^d)\), and take \(p \geqslant 1\), then

We notice that the term \({\mathscr {W}}_p({\mathscr {R}}_{1-\alpha }({\mathbb {Q}}),{\mathbb {Q}}_{{\widetilde{\varXi }}})\) in (11) is not random and depends exclusively on the true distributions \({\mathbb {Q}}_{{\widetilde{\varXi }}}\), \({\mathbb {Q}}\), and the trimming level \(\alpha \). It is, therefore, independent of the data sample (unlike the other two terms involved).

Inequality (11) reveals an interesting trade-off. On the one hand, the distance \({\mathscr {W}}_p({\mathscr {R}}_{1-\alpha }({\mathbb {Q}}),{\mathbb {Q}}_{{\widetilde{\varXi }}})\) diminishes as \(\alpha \) decreases to zero, because the trimming set \({\mathscr {R}}_{1-\alpha }({\mathbb {Q}})\) grows in size. On the other hand, the term \(\frac{1}{\alpha ^{1/p}}{\mathscr {W}}_p(\widehat{{\mathbb {Q}}}_N,{\mathbb {Q}})\) becomes larger as \(\alpha \) approaches zero. As we will see later on, controlling this trade-off is key to endowing problem (P) with performance guarantees. To this end, we will make use of Proposition 2 below.
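The trade-off can be made explicit by writing the bound in the shape suggested by the terms named above. The display below is a reconstruction from the surrounding discussion, offered as a reading aid and not as a verbatim copy of (11):

$$\begin{aligned} {\mathscr {W}}_p({\mathscr {R}}_{1-\alpha }(\widehat{{\mathbb {Q}}}_N),{\mathbb {Q}}_{{\widetilde{\varXi }}}) \leqslant {\mathscr {W}}_p({\mathscr {R}}_{1-\alpha }({\mathbb {Q}}),{\mathbb {Q}}_{{\widetilde{\varXi }}}) + \frac{1}{\alpha ^{1/p}}{\mathscr {W}}_p(\widehat{{\mathbb {Q}}}_N,{\mathbb {Q}}) \end{aligned}$$

Read this way, the first term on the right-hand side is deterministic and shrinks as \(\alpha \) decreases, whereas the second is a sampling error that is inflated by the factor \(1/\alpha ^{1/p}\).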

Assumption 1

Suppose that the true joint probability distribution \({\mathbb {Q}}\) is light-tailed, i.e., there exists a constant \(a > p \geqslant 1\) such that \({\mathbb {E}}_{{\mathbb {Q}}}\left[ \exp (\Vert \varvec{\xi }\Vert ^{a})\right] < \infty \).

Proposition 2

(Concentration tail inequality) Suppose that Assumption 1 holds. Then, there are constants \(c,C>0\) such that, for all \(\epsilon> 0, \alpha >0\), and \(N \geqslant 1\), it holds

where

with \(d = d_{{\mathbf {z}}} + d_{{\mathbf {y}}}\).

Proof

Because of Lemma 3 we have

where the right-hand side of this inequality is upper bounded by (13) according to [23, Theorem 2]. \(\square \)

Assuming \(p\ne d/2\), if we equate \(\beta \) to \(\beta _{p, \epsilon , \alpha }(N)\) and solve for \(\epsilon \), we get:

In what follows, we distinguish three general setups that may appear in the real-life use of Conditional Stochastic Optimization, namely, the case \({\mathbb {Q}}({\widetilde{\varXi }}) = \alpha > 0\) with \(\alpha \) known, the case \({\mathbb {Q}}({\widetilde{\varXi }}) = \alpha > 0\) with \(\alpha \) unknown, and the case \({\mathbb {Q}} \ll \lambda ^{d}\) with \({\mathbb {Q}}({\widetilde{\varXi }}) = \alpha = 0\).

3.1 Case \({\mathbb {Q}}({\widetilde{\varXi }}) = \alpha > 0\). Applications in data-driven decision making under contaminated samples

When \({\mathbb {Q}}({\widetilde{\varXi }}) = \alpha > 0\) and known, we can solve the following DRO problem:

As we show below, problem \(\left( \mathrm{P}_{(\alpha , {\widetilde{\rho }}_N)}\right) \) enjoys a finite sample guarantee and produces solutions that are asymptotically consistent, i.e., that converge to the true solution (under complete information) given by problem (1). This is somewhat hinted at by the connection between problems (SP1) and (SP2) highlighted in Proposition 1.

Theorem 2

(Case \(\alpha > 0\): Finite sample guarantee) Suppose that the assumptions of Proposition 2 hold and take \(p \ne d/2\). Given \(N \geqslant 1\) and \(\alpha > 0\), choose \(\beta \in \left( 0,1\right) \), and determine \(\epsilon _{N,p, \alpha }(\beta )\) through (14). Then, for all \({\widetilde{\rho }}_N \geqslant \max (\epsilon ^p_{N,p, \alpha }(\beta ),{\underline{\epsilon }}_{N\alpha }^p)\), where \({\underline{\epsilon }}_{N\alpha }^p\) is the minimum transportation budget as in Definition 2, the pair \((\widehat{{\mathbf {x}}}_N\), \({\widehat{J}}_N)\) that is solution to problem \(\left( \mathrm{P}_{(\alpha ,{\widetilde{\rho }}_N)}\right) \) enjoys the finite sample guarantee (10).

Proof

For problem \(\left( \mathrm{P}_{(\alpha ,{\widetilde{\rho }}_N)}\right) \) to be feasible, we must have \({\widetilde{\rho }}_N \geqslant {\underline{\epsilon }}_{N\alpha }^p\). Furthermore, \({\mathscr {W}}_p({\mathscr {R}}_{1-\alpha }({\mathbb {Q}}),{\mathbb {Q}}_{{\widetilde{\varXi }}}) = 0\) in (12) because of Lemma 1. Hence, Proposition 2 ensures that \({\mathbb {Q}}^{N}\left( {\mathbb {Q}}_{{\widetilde{\varXi }}} \in \widehat{{\mathscr {U}}}_N(\alpha ,{\widetilde{\rho }}_N)\right) \geqslant 1-\beta \) for any \({\widetilde{\rho }}_N \geqslant \epsilon ^p_{N,p, \alpha }(\beta )\). It follows then

with probability at least \(1-\beta \). \(\square \)

We point out that, in the case \(\alpha >0\), data points may fall into the set \({\widetilde{\varXi }}\). Logically, the contribution of these points to the minimum transportation budget \({\underline{\epsilon }}_{N\alpha }^p\) is null and their order (the way their tie is broken) is irrelevant to our purpose.
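To illustrate why points inside \({\widetilde{\varXi }}\) contribute nothing to the minimum transportation budget, the sketch below computes the cheapest cost of moving a trimmed version of the sample onto \({\widetilde{\varXi }}\). It assumes that this cheapest cost, \(\min \sum _i b_i \, \mathrm{dist}(\widehat{\varvec{\xi }}_i, {\widetilde{\varXi }})^p\) over the weights allowed by Lemma 2, is what Definition 2 (not reproduced in this section) denotes by \({\underline{\epsilon }}^p_{N\alpha }\). The function name and the input `dists` (precomputed distances from each data point to \({\widetilde{\varXi }}\)) are hypothetical.

```python
def min_transport_budget(dists, alpha, p=1):
    """Greedy evaluation of min sum_i b_i * dists[i]**p subject to
    0 <= b_i <= 1/(N*alpha) and sum_i b_i = 1.

    Assumption: this quantity is taken to coincide with the p-th power of
    the minimum transportation budget; dists[i] is the distance from data
    point i to the conditioning set (0.0 if the point lies in it).
    """
    if not 0 < alpha <= 1:
        raise ValueError("alpha must lie in (0, 1]")
    n = len(dists)
    cap = 1.0 / (n * alpha)   # largest weight a single point may carry
    remaining = 1.0           # probability mass still to be assigned
    cost = 0.0
    # Filling the nearest points first is optimal because the objective is
    # linear in the weights and every weight has the same cap.
    for d in sorted(dists):
        b = min(cap, remaining)
        cost += b * d ** p
        remaining -= b
        if remaining <= 1e-15:
            break
    return cost


dists = [0.0, 0.0, 1.0, 2.0, 3.0]        # two of five points lie in the set
print(min_transport_budget(dists, 0.4))  # the two points can carry all the mass
print(min_transport_budget(dists, 0.6))  # a third point at distance 1 is needed
```

In the first call the two points in the set can each carry weight \(1/(N\alpha ) = 0.5\), so no transport is needed; in the second the cap drops to \(1/3\) and the nearest outside point must be used.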

Now we show that the solutions of the distributionally robust optimization problem \(\left( \mathrm{P}_{(\alpha ,{\widetilde{\rho }}_N)}\right) \) converge to the solution of the target conditional stochastic program (1) as N increases, for a careful choice of the budget \({\widetilde{\rho }}_N\). This result is underpinned by the fact that, under that selection of \({\widetilde{\rho }}_N\), any distribution in \(\widehat{{\mathscr {U}}}_N(\alpha ,{\widetilde{\rho }}_N)\) converges to the true conditional distribution \({\mathbb {Q}}_{{\widetilde{\varXi }}}\). This is formally stated in the following lemma.

Lemma 4

(Case \(\alpha >0\): Convergence of conditional distributions) Suppose that the assumptions of Proposition 2 hold. Choose a sequence \(\beta _N \in \left( 0,1\right) \), \(N \in {\mathbb {N}}\), such that \(\sum _{N=1}^{\infty }{\beta _N} < \infty \) and \(\lim _{N\rightarrow \infty }{\epsilon _{N,p, \alpha }(\beta _N)} = 0\). Then,

for any sequence \(Q^N_{{\widetilde{\varXi }}}\), \(N \in {\mathbb {N}}\), such that \(Q^N_{{\widetilde{\varXi }}}\in \widehat{{\mathscr {U}}}_N(\alpha ,{\widetilde{\rho }}_N)\) with \({\widetilde{\rho }}_N = \max (\epsilon ^p_{N,p, \alpha }(\beta _N),{\underline{\epsilon }}_{N\alpha }^p)\).

Proof

Take N large enough and let \({\widehat{Q}}_{N/{\widetilde{\varXi }}}\) be the conditional probability distribution of \(\widehat{{\mathbb {Q}}}_N\) given \(\varvec{\xi } \in {\widetilde{\varXi }}\). We have

We show that the two terms on the right-hand side of the above inequality vanish with probability one as N grows to infinity. We start with \({\mathscr {W}}_p({\widehat{Q}}_{N/{\widetilde{\varXi }}},{\mathbb {Q}}_{{\widetilde{\varXi }}})\).

Let I denote the subset of observations \(\widehat{\varvec{\xi }}_i:=(\widehat{{\mathbf {z}}}_{i}, \widehat{{\mathbf {y}}}_{i})\) for \(i=1, \ldots , N\), such that \(\widehat{\varvec{\xi }}_i \in {\widetilde{\varXi }}\). It follows from the Strong Law of Large Numbers that \(\widehat{{\mathbb {Q}}}_N({\widetilde{\varXi }}) = \frac{|I|}{N} = \alpha _N \rightarrow \alpha \) almost surely. Besides, since the sequence \(\beta _N, N \in {\mathbb {N}}\) is summable and \(\lim _{N\rightarrow \infty }{\epsilon _N(\beta _N)} = 0\), the Borel-Cantelli Lemma and Proposition 2 imply

Then, from Lemma 1, we deduce that \({\mathscr {W}}_p({\widehat{Q}}_{N/{\widetilde{\varXi }}},{\mathbb {Q}}_{{\widetilde{\varXi }}}) \rightarrow 0\) with probability one.

We can deal with the term \({\mathscr {W}}_p(Q^N_{{\widetilde{\varXi }}},{\widehat{Q}}_{N/{\widetilde{\varXi }}})\) in a similar fashion, except for the subtle difference that, in this case, we require \({\widetilde{\rho }}_N = \max (\epsilon ^p_{N,p, \alpha }(\beta _N),{\underline{\epsilon }}_{N\alpha }^p)\), so that, for all \(N \in {\mathbb {N}}\), problem \(P_{(\alpha , {\widetilde{\rho }}_N)}\) delivers a feasible \(Q^N_{{\widetilde{\varXi }}}\) in the sequence. Hence, in order to prove that \({\mathscr {W}}_p(Q^N_{{\widetilde{\varXi }}},{\widehat{Q}}_{N/{\widetilde{\varXi }}}) \rightarrow 0\) almost surely, we need to show that \(\lim _{N\rightarrow \infty }{\underline{\epsilon }}_{N\alpha } = 0\) with probability one. This is something that can be directly deduced from the definition of \({\underline{\epsilon }}_{N\alpha }\), namely,

\(\square \)

Note that, by Eq. (9) in Definition 2, we have that \({\underline{\epsilon }}_{N\alpha } > 0\) if and only if

Once the convergence of \(Q^{N}_{{\widetilde{\varXi }}}\) to the true conditional distribution \({\mathbb {Q}}_{{\widetilde{\varXi }}}\) in the p-Wasserstein metric has been established by the previous lemma, the following asymptotic consistency result, which is analogous to that of [35, Theorem 3.6], can also be derived.

Theorem 3

(Asymptotic consistency) Consider that the conditions of Theorem 2 hold. Take a sequence \({\widetilde{\rho }}_N\) as in Lemma 4. Then, we have

(i) If for any fixed value \({\mathbf {x}}\in X\), \(f({\mathbf {x}}, \varvec{\xi })\) is continuous in \(\varvec{\xi }\) and there is \(L\geqslant 0\) such that \(|f({\mathbf {x}}, \varvec{\xi })|\leqslant L(1+ \left\| \varvec{\xi } \right\| ^p) \) for all \({\mathbf {x}}\in X\) and \(\varvec{\xi } \in {\widetilde{\varXi }}\), then we have that \({\widehat{J}}_N \rightarrow J^*\) almost surely when N grows to infinity.

(ii) If the assumptions in (i) are satisfied, \(f({\mathbf {x}}, \varvec{\xi })\) is lower semicontinuous on X for any fixed \(\varvec{\xi }\in {\widetilde{\varXi }}\), and the feasible set X is closed, then we have that any accumulation point of the sequence \(\{ \widehat{{\mathbf {x}}}_N \}_N\) is almost surely an optimal solution of problem (1).

Proof

We omit the proof, because it is essentially the same as the one in [35, Theorem 3.6], except that, since we are working with \(p \geqslant 1\), we additionally require that \(f({\mathbf {x}}, \varvec{\xi })\) be continuous in \(\varvec{\xi }\) so that we can make use of Theorem 7.12 from [45]. \(\square \)

In the following remark, we show how problem \(P_{(\alpha , {\widetilde{\rho }}_N)}\) can be used to make distributionally robust decisions in a context where the data available to the decision maker is contaminated.

Remark 1

(Data-driven decision-making under contaminated samples) Suppose that the dataset \(\widehat{\varvec{\xi }}_i:=(\widehat{{\mathbf {z}}}_{i}, \widehat{{\mathbf {y}}}_{i})\) for \(i=1, \ldots , N\) is composed of correct and contaminated samples. The decision maker only knows that a sample is correct with probability \(\alpha \) and contaminated with probability \(1-\alpha \), but does not know which type each sample belongs to. Thus, the data have been generated from a mixture distribution given by \(P=\alpha Q^*+(1-\alpha )R\), where \(Q^*\) is the correct distribution and R a contamination.

In our context, this is equivalent to stating that \(Q^*\in {\mathscr {R}}_{1-\alpha }(P)\), which, in turn, can be formulated as \({\mathscr {W}}_p({\mathscr {R}}_{1-\alpha }(P), Q^*)=0\). Since we only have limited information on P in the form of the empirical distribution \({\widehat{P}}_N\), we propose to solve problem \(\mathrm{P}_{(\alpha , {\widetilde{\rho }}_N)}\), that is,

where we have assumed that the correct distribution \(Q^*\), the contamination R and the data-generating distribution P are all supported on \(\varXi \).
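The membership \(Q^*\in {\mathscr {R}}_{1-\alpha }(P)\) can be checked on a discrete toy mixture. The sketch below is illustrative only: the atom labels, the value \(\alpha = 0.8\) and the helper names are assumptions of ours, and the trimming condition is applied atom by atom, in the spirit of Lemma 2, as \(Q(x) \leqslant P(x)/\alpha \).

```python
def mixture(q_star, r, alpha):
    """Return P = alpha * Q* + (1 - alpha) * R for discrete distributions
    given as dicts mapping atoms to probabilities."""
    atoms = set(q_star) | set(r)
    return {x: alpha * q_star.get(x, 0.0) + (1 - alpha) * r.get(x, 0.0)
            for x in atoms}


def is_trimming_of(q, p_mix, alpha, tol=1e-12):
    """Check q(x) <= p_mix(x) / alpha on every atom of q (discrete case)."""
    return all(mass <= p_mix.get(x, 0.0) / alpha + tol
               for x, mass in q.items())


alpha = 0.8                          # probability that a sample is correct
q_star = {"low": 0.5, "high": 0.5}   # hypothetical correct distribution
r = {"outlier": 1.0}                 # hypothetical contamination
p_mix = mixture(q_star, r, alpha)

print(is_trimming_of(q_star, p_mix, alpha))  # Q* passes the atom-wise check
print(is_trimming_of(r, p_mix, alpha))       # R needs 1.0 <= 0.2 / 0.8, which fails
```

The correct distribution always passes the check, since \(P \geqslant \alpha Q^*\) by construction, while the contamination does not whenever it carries less than a fraction \(\alpha \) of the mixture.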

The decision maker can profit from the finite sample guarantee that the solution to problem (18a)–(18b) satisfies as per Theorem 2, with \({\widetilde{\rho }}_N \geqslant \epsilon ^p_{N,p, \alpha }(\beta )\), \(\beta \in (0,1)\), since \({\underline{\epsilon }}^p_{N\alpha } = 0\) in this case. Furthermore, if we choose a summable sequence of \(\beta _N \in (0,1)\), \(N \in {\mathbb {N}}\), such that \(\lim _{N\rightarrow \infty }\epsilon _{N}(\beta _N) = 0\), then we have that

In plain words, for N large enough, the decision vector \({\mathbf {x}}\) is being optimized by way of problem (18a)–(18b) over the “smallest” ambiguity set that almost surely contains the correct distribution \(Q^*\) of the data (in the absence of any other information on \(Q^*\)). In fact, this means our DRO approach deals with contaminated samples in a way that is distinctly more convenient than that of [14, 22]. Essentially, they suggest optimizing over a 1-Wasserstein ball centered at \({\widehat{P}}_N\) of radius \({\widetilde{\rho }}\), that is,

under the argument that for \({\widetilde{\rho }}\) sufficiently large, the Wasserstein ball contains the true distribution of the data \(Q^*\) with a certain confidence level. For instance, the author of [22] uses the triangle inequality and the convexity property of the Wasserstein distance to establish that \({\mathscr {W}}_1({\widehat{P}}_{N}, Q^*) \leqslant {\mathscr {W}}_1({\widehat{P}}_{N}, P) + (1-\alpha ){\mathscr {W}}_1(R, Q^*)\), so that the extra budget \((1-\alpha ){\mathscr {W}}_1(R, Q^*)\) would ensure that \(Q^*\) is within the Wasserstein ball with a given confidence level (a similar argument is made in [14]). In practice, though, this extra budget as such cannot be computed, because neither the correct distribution \(Q^*\) nor the contamination R is known to the decision maker. However, our approach naturally encodes it in the ambiguity set (18b). Indeed, for N large enough, result (19) tells us that the correct distribution \(Q^*\) belongs, almost surely, to the \((1-\alpha )\)-trimming set of the empirical distribution \({\widehat{P}}_N\). It follows precisely from this and Proposition 4 in “Appendix A” that \({\mathscr {W}}_{p}({\widehat{P}}_N, Q^*) \rightarrow {\mathscr {W}}_{p}(\alpha Q^*+(1-\alpha )R, Q^*) \leqslant \alpha {\mathscr {W}}_{p}(Q^*, Q^*) + (1-\alpha ){\mathscr {W}}_p(R,Q^*)\), i.e., \({\mathscr {W}}_{p}({\widehat{P}}_N, Q^*) \leqslant (1-\alpha ){\mathscr {W}}_p(R,Q^*)\).

In short, our approach offers probabilistic guarantees in the finite-sample regime and, in the asymptotic one, naturally exploits all the information we have on \(Q^*\), namely, \(Q^* \in {\mathscr {R}}_{1-\alpha }(P)\), to robustify the decision \({\mathbf {x}}\) under contamination.

3.2 The case of unknown \({\mathbb {Q}}({\widetilde{\varXi }}) = \alpha > 0\)

In this section, we discuss how we can use the proposed DRO approach to deal with the case in which \({\mathbb {Q}}({\widetilde{\varXi }}) = \alpha > 0\) is unknown. For this purpose, we first introduce a proposition that will allow us to design a distributionally robust strategy to tackle problem (1) by means of problem (P).

Proposition 3

Suppose that \({\mathbb {Q}}({\widetilde{\varXi }}) = \alpha >0\). Take \(0< \alpha ' < \alpha \) and any positive value of \({\widetilde{\rho }}\). Given \(N \geqslant 1\), the following problem

is either fully equivalent to (SP2), if \(\frac{1}{N} \geqslant \alpha \), or a relaxation of it otherwise.

Proof

The proof of the proposition is trivial and directly follows from the fact that \({\mathscr {R}}_{1-\alpha }(\widehat{{\mathbb {Q}}}_N) \subset {\mathscr {R}}_{1-\alpha '}(\widehat{{\mathbb {Q}}}_N)\), if \(\alpha ' \leqslant \alpha \), and that \({\mathscr {R}}_{1-\alpha }(\widehat{{\mathbb {Q}}}_N) = {\mathscr {R}}_{1-\alpha '}(\widehat{{\mathbb {Q}}}_N)\) if, besides, \(\frac{1}{N\alpha } \geqslant 1\). \(\square \)

Based on Proposition 3, we could use the following two-step safe strategy to handle the case of unknown \({\mathbb {Q}}({\widetilde{\varXi }}) = \alpha > 0\):

1. First, solve the following uncertainty quantification problem (see [25, 35] for further details),

$$\begin{aligned} \alpha _N:=\inf _{Q \in {\mathbb {B}}_{\epsilon _N}(\widehat{{\mathbb {Q}}}_N)} Q(\varvec{\xi }\in {\widetilde{\varXi }})=1-\sup _{Q \in {\mathbb {B}}_{\epsilon _N}(\widehat{{\mathbb {Q}}}_N)} Q(\varvec{\xi }\notin {\widetilde{\varXi }}) \end{aligned} \tag{22}$$

where the radius \(\epsilon _N\) of the Wasserstein ball has been chosen so that \(\alpha _N\) represents the minimum probability that the joint true distribution \({\mathbb {Q}}\) of the data assigns to the event \(\varvec{\xi } \in {\widetilde{\varXi }}\) with confidence \(1-\beta _N\), \(\beta _N \in (0,1)\).

2. Next, solve problem \((P_{(\alpha _N, {\widetilde{\rho }}_N)})\), that is,

$$\begin{aligned} \inf _{{\mathbf {x}}\in X} \sup _{Q_{{\widetilde{\varXi }}}}&\;\; {\mathbb {E}}_{Q_{{\widetilde{\varXi }}}} \left[ f({\mathbf {x}}, \varvec{\xi }) \right] \end{aligned} \tag{23a}$$

$$\begin{aligned}&\text {s.t.} \ {\mathscr {W}}_p^p({\mathscr {R}}_{1-\alpha _N}( \widehat{{\mathbb {Q}}}_N),Q_{{\widetilde{\varXi }}}) \le {\widetilde{\rho }}_N \end{aligned} \tag{23b}$$

$$\begin{aligned}&\qquad \ Q_{{\widetilde{\varXi }}}({\widetilde{\varXi }})=1 \end{aligned} \tag{23c}$$

with \({\widetilde{\rho }}_N \geqslant \epsilon ^p_{N}(\beta _N)/\alpha _N\).

Now suppose that \({\mathbb {Q}} \in {\mathbb {B}}_{\epsilon _N(\beta _N)}(\widehat{{\mathbb {Q}}}_N)\) and therefore, \(\alpha _N \leqslant \alpha \) (this is a random event that occurs with probability at least \(1-\beta _N\)). According to Lemma 3, we have

Hence, \({\mathbb {Q}}_{{\widetilde{\varXi }}} \in \widehat{{\mathscr {U}}}_N(\alpha _N, {\widetilde{\rho }}_N)\) with probability at least \(1-\beta _N\). In other words, the two-step procedure described here does not degrade the reliability of the DRO solution. Furthermore, the minimum transportation budget \({\underline{\epsilon }}_{N\alpha _N}\) that makes problem \((P_{(\alpha _N, {\widetilde{\rho }}_N)})\) feasible is always zero here, if the event \(\varvec{\xi } \in {\widetilde{\varXi }}\) has been observed at least once. This is so because the uncertainty quantification problem of step 1 ensures that \(\alpha _N\) is less than or equal to the fraction of training data points falling in \({\widetilde{\varXi }}\). Moreover, when N grows to infinity, this uncertainty quantification problem reduces to computing such a fraction of points, which, by the Strong Law of Large Numbers, converges to the true \(\alpha \), i.e., \(\alpha _N \rightarrow \alpha \) with probability one. Therefore, in the asymptotic regime, this case resembles that of known \(\alpha >0\).
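For a finitely supported center \(\widehat{{\mathbb {Q}}}_N\), the worst-case probability in step 1 can be approximated by a simple greedy argument: mass is pushed out of \({\widetilde{\varXi }}\) starting from the data points that are cheapest to move. The sketch below follows that argument; it is not taken from [25, 35], whose formulations may differ. It assumes that the distances from each data point to the complement of \({\widetilde{\varXi }}\) are available as the hypothetical input `dist_to_complement`, and it returns the infimum, which need not be attained.

```python
def worst_case_alpha(dist_to_complement, epsilon, p=1):
    """Greedy sketch of inf Q(xi in set) over a p-Wasserstein ball of radius
    epsilon centered at the empirical measure.

    dist_to_complement[i]: distance from data point i to the complement of
    the set (0.0 for points that already lie outside the set). This input
    and the function name are illustrative assumptions.
    """
    n = len(dist_to_complement)
    budget = epsilon ** p     # transport budget in terms of W_p^p
    moved = 0.0               # mass that can be placed outside the set
    for d in sorted(dist_to_complement):
        cost = d ** p         # cost per unit of mass moved from this point
        if cost == 0.0:
            mass = 1.0 / n    # already outside (or on the boundary): free
        else:
            mass = min(1.0 / n, budget / cost)
        moved += mass
        budget = max(0.0, budget - mass * cost)
    return max(0.0, 1.0 - moved)


# Four samples: two outside the set, two inside at distances 0.5 and 1.0.
dists = [0.0, 0.0, 0.5, 1.0]
print(worst_case_alpha(dists, 0.0))    # empirical fraction of points in the set
print(worst_case_alpha(dists, 0.125))  # one inside point can be pushed out
```

With \(\epsilon _N = 0\) the function returns the fraction of points in \({\widetilde{\varXi }}\), and the returned value can only decrease as the radius grows, in line with the discussion above.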

We notice, however, that, in practice, setting \({\widetilde{\rho }}_N \geqslant \epsilon ^p_{N}(\beta _N)/\alpha _N\) may result in too large budgets \({\widetilde{\rho }}_N\), and thus, in overly conservative solutions, because, as \(\epsilon _{N}\) is increased, \(\alpha _N\) decreases to zero. For this reason, in the supplementary material, we provide an alternative data-driven procedure to address the case \(\alpha >0\), in which we simply set \(\alpha _N = \widehat{{\mathbb {Q}}}_N({\widetilde{\varXi }})\) in problem \((P_{(\alpha _N, {\widetilde{\rho }}_N)})\) and use the data to tune parameter \({\widetilde{\rho }}_N\).

3.3 The case \({\mathbb {Q}} \ll \lambda ^{d}\) and \({\mathbb {Q}}({\widetilde{\varXi }}) = \alpha = 0\)

Suppose that the true joint distribution \({\mathbb {Q}}\) governing the random vector \(\varvec{\xi }:=({\mathbf {z}},{\mathbf {y}})\) admits a density function with respect to the Lebesgue measure \(\lambda ^d\), with \(d = d_{{\mathbf {z}}}+d_{{\mathbf {y}}}\). Without loss of generality, consider the event \(\varvec{\xi } \in {\widetilde{\varXi }}\), where \({\widetilde{\varXi }}\) is defined as \({\widetilde{\varXi }}=\{ \varvec{\xi }=({\mathbf {z}},{\mathbf {y}}) \in \varXi \; : \; {\mathbf {z}}= \mathbf {z^*}\}\). This means that \({\mathbb {Q}}({\widetilde{\varXi }}) = \alpha = 0\).

Therefore, our focus in this case is on the particular variant of problem (1) given by

Problem (24) has become a central object of study in what has recently come to be known as Prescriptive Stochastic Programming or Conditional Stochastic Optimization (see, e.g., [5, 7, 8, 9, 12, 16, 39, 43], and references therein).

Devising a DRO approach to problem (24) using the standard Wasserstein ball \({\mathscr {W}}_p(\widehat{{\mathbb {Q}}}_N, Q)\leqslant \varepsilon \) is of no use here, because any point from the support of \(\widehat{{\mathbb {Q}}}_N\) with an arbitrarily small mass can be transported to the set \({\widetilde{\varXi }}\) at an arbitrarily small cost in terms of \({\mathscr {W}}_p(\widehat{{\mathbb {Q}}}_N, Q)\). This way, one could always place this arbitrarily small particle at a point \((\mathbf {z^*}, \mathbf {y'}) \in \underset{({\mathbf {z}},{\mathbf {y}}) \in {\widetilde{\varXi }}}{{\mathrm{arg\,max}}}f({\mathbf {x}}, ({\mathbf {z}},{\mathbf {y}}))\). In contrast, problem (P), which is based on partial mass transportation, offers a richer framework in which to seek a distributionally robust solution to (24). To see this, consider again inequality (11). If we could set \(\alpha = 0\), the term \({\mathscr {W}}_p({\mathscr {R}}_{1-\alpha }({\mathbb {Q}}),{\mathbb {Q}}_{{\widetilde{\varXi }}})\) would vanish, because we could take random variables \(\varvec{\xi } \sim {\mathbb {Q}}_{{\widetilde{\varXi }}}\), \(\varvec{\xi }_m \sim {\mathbb {Q}}_m \in {\mathscr {R}}_{1}({\mathbb {Q}}), m \in {\mathbb {N}}\), such that \({\mathscr {W}}_p({\mathbb {Q}}_m, {\mathbb {Q}}_{{\widetilde{\varXi }}}) \rightarrow 0\). Unfortunately, fixing \(\alpha \) to zero is not a real option due to the term \(\frac{1}{\alpha ^{1/p}}{\mathscr {W}}_p(\widehat{{\mathbb {Q}}}_N,{\mathbb {Q}})\) in the inequality. Therefore, what we propose instead is to solve a sequence of optimization problems in the form

with both \(\alpha _N\) and \({\widetilde{\rho }}_N\) tending to zero appropriately as N increases. Next we show that, under certain conditions, problem \(\left( {\text{ P }}_{(\alpha _N,{\widetilde{\rho }}_N)}\right) \) enjoys a finite sample guarantee and is asymptotically consistent.

Assumption 2

(Condition (3.6) from [21]) Let \(B({\mathbf {z}}^*, r):= \{{\mathbf {z}} \in \varXi _{{\mathbf {z}}} : ||{\mathbf {z}}-{\mathbf {z}}^*|| \leqslant r\}\) denote the closed ball in \({\mathbb {R}}^{d_{{\mathbf {z}}}}\) with center \({\mathbf {z}}^*\) and radius r. The random vector \(\varvec{\xi }:= ({\mathbf {z}},{\mathbf {y}})\) has a joint density \(\phi \) that verifies the following for some \(r_0 > 0\).

1. It admits uniformly for \(r \in [0, r_0]\) and \({\mathbf {y}}\in {\mathbb {R}}^{d_{{\mathbf {y}}}}\) the following expansion:

$$\begin{aligned} \phi ({\mathbf {z}}^*+r \,{\mathbf {u}}, {\mathbf {y}})= \phi ({\mathbf {z}}^*, {\mathbf {y}})\left[ 1+r \langle {\mathbf {u}}, \ell _1({\mathbf {y}})\rangle + O(r^2\ell _2({\mathbf {y}}))\right] \end{aligned} \tag{26}$$

where \({\mathbf {u}} \in {\mathbb {R}}^{d_{{\mathbf {z}}}}\) with \(||{\mathbf {u}}||= 1\), and where \(\ell _1:{\mathbb {R}}^{d_{{\mathbf {y}}}}\rightarrow {\mathbb {R}}^{d_{{\mathbf {z}}}}\) and \(\ell _2:{\mathbb {R}}^{d_{{\mathbf {y}}}}\rightarrow {\mathbb {R}}\) satisfy \(\int (||\ell _1({\mathbf {y}})||^2 +|\ell _2({\mathbf {y}})|^2) \phi ({\mathbf {z}}^*,{\mathbf {y}}) d{\mathbf {y}}<\infty \).

2. The marginal density of \({\mathbf {z}}\) is bounded away from zero in \(B({\mathbf {z}}^*, r_0)\).

Assumption 3

(Regularity and boundedness) We assume that

1. There exists \({\widetilde{C}}>0\) and \(r_0 >0\) such that \({\mathbb {P}}(\Vert {\mathbf {z}}^*-{\mathbf {z}} \Vert \leqslant r) \geqslant {\widetilde{C}} r^{d_{{\mathbf {z}}}}\), for all \(0 < r \leqslant r_0\).

2. The uncertainty \({\mathbf {y}}\) is bounded, that is, \(\Vert {\mathbf {y}}\Vert \leqslant M\) a.s. for some constant \(M >0\).

We note that Assumption 3.1 is automatically implied by Assumption 2, but we explicitly state it here for ease of readability. Furthermore, under the boundedness condition established in Assumption 3.2, Assumption 2 is satisfied, for example, by a twice differentiable joint density \(\phi ({\mathbf {z}},{\mathbf {y}})\) with continuous and bounded partial derivatives in \(B({\mathbf {z}}^*,r) \times \varXi _{{\mathbf {y}}}\) and bounded away from zero in that set. These are standard regularity conditions in the technical literature on kernel density estimation and regression [39].

Theorem 4

(Case \(\alpha = 0\): Finite sample guarantee) Suppose that Assumptions 2, 3 and those of Proposition 2 hold. Set \(\alpha _0:= {\widetilde{C}}r_0^{d_{{\mathbf {z}}}}\). Given \(N \geqslant 1\), choose \(\alpha _{N} \in (0, \alpha _0]\), \(\beta \in \left( 0,1\right) \), and determine \(\epsilon _{N,p, \alpha _N}(\beta )\) through (14).

Then, for all

we have that the pair \((\widehat{{\mathbf {x}}}_N\), \({\widehat{J}}_N)\) delivered by problem \(\left( \mathrm{P}_{(\alpha _N,{\widetilde{\rho }}_N)}\right) \) with parameters \({\widetilde{\rho }}_N \) and \(\alpha _N\) enjoys the finite sample guarantee (10).

Proof

For problem \(\left( {\text{ P }}_{(\alpha _N,{\widetilde{\rho }}_N)}\right) \) to be feasible, we need \({\widetilde{\rho }}_N \geqslant {\underline{\epsilon }}^p_{N\alpha _N}\).

The proof essentially relies on upper bounding the term \({\mathscr {W}}_p({\mathscr {R}}_{1-\alpha }({\mathbb {Q}}),{\mathbb {Q}}_{{\widetilde{\varXi }}})\) that appears in Equation (12) of Proposition 2. To that end, define \(\alpha (r)= {\widetilde{C}}r^{d_{{\mathbf {z}}}}\), for all \(0 < r \leqslant r_0\). Set \(\alpha _0:= \alpha (r_0)\). Let \({\mathbb {Q}}_{B({\mathbf {z}}^*, r)\times \varXi _{{\mathbf {y}}}}\) be the probability measure of \(({\mathbf {z}},{\mathbf {y}})\) conditional on \(({\mathbf {z}},{\mathbf {y}}) \in B({\mathbf {z}}^*, r)\times \varXi _{{\mathbf {y}}}\) and let \({\mathbb {Q}}_{B({\mathbf {z}}^*, r)}\) be its \({\mathbf {y}}\)-marginal. Note that, by Assumption 3.1, \({\mathbb {Q}}_{B({\mathbf {z}}^*, r) \times \varXi _{{\mathbf {y}}}} \in {\mathscr {R}}_{1-\alpha (r)}({\mathbb {Q}})\) provided that \(0 < r \leqslant r_0\).

Furthermore, according to Theorem 3.5.2 in [21], there exists a positive constant A such that

uniformly for \(0< r < r_0\), where Hell stands for the Hellinger distance.

From Equation (5.1) in [42] and Assumption 3.2 we know that

In turn, from [26] we have that \({\mathscr {W}}_1({\mathbb {Q}}_{B({\mathbf {z}}^*, r)}, {\mathbb {Q}}_{{\widetilde{\varXi }}})\leqslant M\cdot \mathrm{Hell}({\mathbb {Q}}_{B({\mathbf {z}}^*, r)}, {\mathbb {Q}}_{{\widetilde{\varXi }}})\). Hence,

Thus,

Since \({\mathbb {Q}}_{B({\mathbf {z}}^*, r)\times \varXi _{{\mathbf {y}}}} \in {\mathscr {R}}_{1-\alpha (r)}({\mathbb {Q}})\) for all \(0<r\leqslant r_0\), it holds

which we can express in terms of \(\alpha \) as

provided that \(0 < \alpha \leqslant \alpha _0\). \(\square \)

Remark 2

There are conditions on the smoothness of the true joint distribution \({\mathbb {Q}}\) around \({\mathbf {z}} ={\mathbf {z}}^*\), other than those stated in Assumptions 2 and 3, for which we can also upper bound the distance \({\mathscr {W}}_p({\mathscr {R}}_{1-\alpha }({\mathbb {Q}}),{\mathbb {Q}}_{{\widetilde{\varXi }}})\). We provide below two examples of these conditions, which have been invoked in [9, 32, 33], respectively, and neither of which requires the boundedness of the uncertainty \({\mathbf {y}}\).

Example 1

Suppose that the true data-generating model is given by \({\mathbf {y}} = f^*({\mathbf {z}}) +{\mathbf {e}}\), where \(f^*({\mathbf {z}}):={\mathbb {E}}[ {\mathbf {y}} \mid {\mathbf {z}}]\) is the regression function and \({\mathbf {e}}\) is a zero-mean random error. Furthermore, suppose that Assumption 3.1 holds and there exists a positive constant L such that \(\Vert f^*({\mathbf {z}}')-f^*({\mathbf {z}})\Vert \leqslant L\Vert {\mathbf {z}}'-{\mathbf {z}}\Vert \), for all \(0 \leqslant \Vert {\mathbf {z}}'-{\mathbf {z}}\Vert \leqslant r_0\).

Take \(\alpha (r)= {\widetilde{C}}r^{d_{{\mathbf {z}}}}\), for all \(0 < r \leqslant r_0\) and set \(\alpha _0:= \alpha (r_0)\). With abuse of notation, we can write for any event within \(B({\mathbf {z}}^*, r) \times \varXi _{{\mathbf {y}}}\)

where \({\mathbb {Q}}_{{\mathbf {z}}}\) is the probability law of the feature vector \({\mathbf {z}}\) and \({\mathbb {Q}}_{{\mathbf {z}}={\mathbf {z}}'}\) is the conditional measure of \({\mathbb {Q}}\) given that \({\mathbf {z}}={\mathbf {z}}'\).

Since \({\mathbb {Q}}_{B({\mathbf {z}}^*, r)\times \varXi _{{\mathbf {y}}}} \in {\mathscr {R}}_{1-\alpha (r)}({\mathbb {Q}})\) for all \(0<r\leqslant r_0\), by the convexity of the Wasserstein distance, we have

for all \(0 < \alpha \leqslant \alpha _0\).

Example 2

Take \(p=1\). Suppose that there exists a positive constant L such that \({\mathscr {W}}_{1}({\mathbb {Q}}_{{\mathbf {z}}={\mathbf {z}}'}, {\mathbb {Q}}_{{\mathbf {z}}={\mathbf {z}}^*}) \leqslant L\Vert {\mathbf {z}}'-{\mathbf {z}}^*\Vert \), for all \(0 \leqslant \Vert {\mathbf {z}}'-{\mathbf {z}}^*\Vert \leqslant r_0\), and that Assumption 3.1 holds.

Following a line of reasoning that is parallel to that of the previous example, we also get

for all \(0 < \alpha \leqslant \alpha _0\), with \(\alpha _0:= \alpha (r_0)\).

Equation (27) and Examples 1 and 2 reveal that our finite sample guarantee is affected by the curse of dimensionality. Recently, powerful ideas to break this curse have been introduced in [24] under the standard Wasserstein-metric-based DRO scheme. In our setup, however, we also need distributional robustness against the (uncertain) error incurred when inferring conditional information from a sample of the true joint distribution. This implies increasing the robustness budget in our approach by an amount linked to the term \({\mathscr {W}}_p({\mathscr {R}}_{1-\alpha }({\mathbb {Q}}),{\mathbb {Q}}_{{\widetilde{\varXi }}})\). Consequently, we might need stronger assumptions on the data-generating model to break the dependence of this term with the dimension of the feature vector and thus extend the ideas in [24] to the realm of conditional stochastic optimization.
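The dependence on the feature dimension can be seen by inverting the relation \(\alpha (r)= {\widetilde{C}}r^{d_{{\mathbf {z}}}}\) used in the proof of Theorem 4. The sketch below is purely illustrative: the value \({\widetilde{C}} = 1\) and the function names are arbitrary choices of ours. It shows how the radius of the neighborhood of \({\mathbf {z}}^*\) associated with a fixed trimming level \(\alpha \) grows with \(d_{{\mathbf {z}}}\).

```python
def alpha_of_radius(r, c_tilde, d_z):
    """Trimming level alpha(r) = C~ * r**d_z from the proof of Theorem 4."""
    return c_tilde * r ** d_z


def radius_of_alpha(alpha, c_tilde, d_z):
    """Inverse map: radius r such that alpha(r) = alpha."""
    return (alpha / c_tilde) ** (1.0 / d_z)


c_tilde = 1.0   # arbitrary constant, chosen only for illustration
alpha = 0.01
for d_z in (1, 2, 5, 10):
    r = radius_of_alpha(alpha, c_tilde, d_z)
    print(f"d_z = {d_z:2d}: radius for alpha = {alpha} is {r:.3f}")
```

For a fixed \(\alpha < {\widetilde{C}}\), the radius \((\alpha /{\widetilde{C}})^{1/d_{{\mathbf {z}}}}\) approaches one as \(d_{{\mathbf {z}}}\) increases, so the data points that carry the conditional information lie farther from \({\mathbf {z}}^*\) in high dimensions.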

Now we state the conditions under which the sequence of problems \(\left( \mathrm{P}_{(\alpha _N,{\widetilde{\rho }}_N)}\right) \), \(N \rightarrow \infty \), is asymptotically consistent.

Lemma 5

(Convergence of conditional distributions) Suppose that the support \(\varXi \) of the true joint distribution \({\mathbb {Q}}\) is compact and that Assumptions 2 and 3.1 hold. Take \((\alpha _N, {\widetilde{\rho }}_N)\) such that \(\alpha _N \rightarrow 0\), \(\frac{N\alpha _N^2}{\log (N)}\rightarrow \infty \), and \({\widetilde{\rho }}_N \downarrow {\underline{\epsilon }}^p_{N\alpha _N}\), where \({\underline{\epsilon }}_{N\alpha _N}\) is the minimum transportation budget as in Definition 2. Then, we have that

where \(Q^N_{{\widetilde{\varXi }}}\) is any distribution from the ambiguity set \( \widehat{{\mathscr {U}}}_N(\alpha _N, {\widetilde{\rho }}_N)\).

Proof

First, we need to provide conditions under which \({\mathscr {W}}_p\left( {\mathscr {R}}_{1-\alpha }(\widehat{{\mathbb {Q}}}_N),{\mathbb {Q}}_{{\widetilde{\varXi }}} \right) \rightarrow 0\) a.s. Since \(\varXi \) is compact and \({\mathscr {W}}_{p-1}\left( {\mathscr {R}}_{1-\alpha }(\widehat{{\mathbb {Q}}}_N),{\mathbb {Q}}_{{\widetilde{\varXi }}} \right) \leqslant {\mathscr {W}}_p\left( {\mathscr {R}}_{1-\alpha }(\widehat{{\mathbb {Q}}}_N),{\mathbb {Q}}_{{\widetilde{\varXi }}} \right) \), we can take \(p > d/2\) and \(\alpha _N\) such that \(\frac{N\alpha _N^2}{\log (N)}\rightarrow \infty \), so that the probabilities (12) become summable over N for any arbitrarily small \(\epsilon \). In this way, we can choose a sequence \(\beta _N \in \left( 0,1\right) \), \(N \in {\mathbb {N}}\), such that \(\sum _{N=1}^{\infty }{\beta _N} < \infty \) and \(\lim _{N\rightarrow \infty }{\epsilon _{N,p, \alpha _N}(\beta _N)} = 0\). With this choice, we have

because \({\mathscr {W}}_p\left( {\mathscr {R}}_{1-\alpha _N}({\mathbb {Q}}),{\mathbb {Q}}_{{\widetilde{\varXi }}}\right) = O\left( \alpha _N^{2/pd_{{\mathbf {z}}}}\right) \rightarrow 0\) for \(\alpha _N \rightarrow 0\).

Since \({\mathbb {Q}}_{{\widetilde{\varXi }}} \in {\mathscr {R}}_{1-\alpha _N}(\widehat{{\mathbb {Q}}}_N)\) a.s. in the limit and, by definition, \({\mathbb {Q}}_{{\widetilde{\varXi }}}({\widetilde{\varXi }}) = 1\), we have that \({\mathbb {Q}}_{{\widetilde{\varXi }}} \in \widehat{{\mathscr {U}}}_N(\alpha _N, {\widetilde{\rho }}_N)\) for N sufficiently large, with both \(\alpha _N, {\widetilde{\rho }}_N \rightarrow 0\).

In turn, since \(Q^N_{{\widetilde{\varXi }}} \in \widehat{{\mathscr {U}}}_N(\alpha _N, {\widetilde{\rho }}_N)\), we have \({\mathscr {W}}_p\left( {\mathscr {R}}_{1-\alpha _N}(\widehat{{\mathbb {Q}}}_N),Q^N_{{\widetilde{\varXi }}}\right) \leqslant {\widetilde{\rho }}_N\). Take N large enough, set \({\widetilde{\rho }}_N\) arbitrarily close to \({\underline{\epsilon }}^p_{N\alpha _N}\) and notice that \(\widehat{{\mathscr {U}}}_N(\alpha _N, {\underline{\epsilon }}^p_{N\alpha _N})\) boils down to one single probability measure, the one made up of the \(N\alpha _N\) data points of \(\widehat{{\mathbb {Q}}}_N\) that are the closest to \({\widetilde{\varXi }}\). In addition, we have \({\underline{\epsilon }}^p_{N\alpha _N} \rightarrow 0\) with probability one. To see this, take \(K:= \lceil N\alpha _N\rceil \) and note that

almost surely provided that \(\alpha _N \rightarrow 0\) (see [13, Lemmas 2.2 and 2.3]), where \(\mathbf {{\widehat{z}}}_{K:N}\) is the \({\mathbf {z}}\)-component of the K-th nearest neighbor to \({\mathbf {z}}^*\) after reordering the data sample \(\{\widehat{\varvec{\xi }}_i:=(\mathbf {{\widehat{z}}}_i, \mathbf {{\widehat{y}}}_i)\}_{i=1}^{N}\) in terms of \(\Vert \mathbf {{\widehat{z}}}_{i}-{\mathbf {z}}^*\Vert \) only.

Therefore, it must hold that \({\mathscr {W}}_p( Q^N_{{\widetilde{\varXi }}}, {\mathbb {Q}}_{{\widetilde{\varXi }}}) \rightarrow 0\) a.s. \(\square \)

Remark 3

The compactness of the support set \(\varXi \) is assumed here just to simplify the proof. In fact, in Appendix EC.2 of the extended version of this paper [19], we use results on nearest neighbors to show that the convergence of conditional distributions can be attained under the less restrictive condition \(\frac{N\alpha _N}{\log (N)}\rightarrow \infty \), even in some cases for which the uncertainty \({\mathbf {y}}\) and the feature vector \({\mathbf {z}}\) are unbounded. In addition, we also make use of those results to demonstrate that distributionally robust versions of some local nonparametric predictive methods, such as Nadaraya-Watson kernel regression and K-nearest neighbors, naturally emerge from our approach.

Remark 4

The convergence of conditional distributions allows us to establish an asymptotic consistency result analogous to that of Theorem 3, by simply replacing “Theorem 2”, “\({\widetilde{\rho }}_N\)” and “Lemma 4” with “Theorem 4”, “\((\alpha _N, {\widetilde{\rho }}_N)\)” and “Lemma 5”, respectively.

Remark 5

Suppose that the event \({\widetilde{\varXi }}\) on which we condition problem (1) is given by \({\widetilde{\varXi }}:=\{ \varvec{\xi }=({\mathbf {z}}_1,{\mathbf {z}}_2,{\mathbf {y}}) \in \varXi \; : \; {\mathbf {z}}_1 = {\mathbf {z}}^*_1, \ {\mathbf {z}}_2 \in {\mathscr {Z}}_2\}\), with \({\mathbb {Q}}({\widetilde{\varXi }})=0\) and \({\mathbb {P}}({\mathbf {z}}_2 \in {\mathscr {Z}}_2) > 0\). Let \({\mathbb {Q}}_{{\mathscr {Z}}_2}\) be the probability measure of \(({\mathbf {z}}_1, {\mathbf {y}})\) conditional on \({\mathbf {z}}_2 \in {\mathscr {Z}}_2\). If we have that there is \({\widetilde{C}}>0\) and \(r_0 >0\) such that \({\mathbb {P}}(\Vert {\mathbf {z}}^*_1-{\mathbf {z}}_1 \Vert \leqslant r) \geqslant {\widetilde{C}} r^{d_{{\mathbf {z}}_1}}\), for all \(0 < r \leqslant r_0\), and that \({\mathbb {Q}}_{{\mathscr {Z}}_2}\) satisfies the smoothness condition invoked in either Theorem 4, Example 1 or Example 2, then the analysis in this section extends to that type of event by setting \(\alpha (r)= {\widetilde{C}} r^{d_{{\mathbf {z}}_1}} \cdot {\mathbb {P}}({\mathbf {z}}_2 \in {\mathscr {Z}}_2)\) and noticing that \({\mathbb {Q}}_{B({\mathbf {z}}_1^*, r) \times {\mathscr {Z}}_2 \times \varXi _{{\mathbf {y}}}} \in {\mathscr {R}}_{1-\alpha (r)}({\mathbb {Q}})\), \(0 < r \leqslant r_0\), where \({\mathbb {Q}}_{B({\mathbf {z}}_1^*, r) \times {\mathscr {Z}}_2 \times \varXi _{{\mathbf {y}}}}\) is the probability measure of \(({\mathbf {z}}_1,{\mathbf {z}}_2,{\mathbf {y}})\) conditional on \(({\mathbf {z}}_1,{\mathbf {z}}_2,{\mathbf {y}}) \in B({\mathbf {z}}_1^*, r)\times {\mathscr {Z}}_2 \times \varXi _{{\mathbf {y}}}\).

4 Numerical experiments

The following simulation experiments are designed to provide numerical evidence on the performance of the DRO framework with side information that we propose, with respect to other methods available in the technical literature. Here we only consider the case \(\alpha = 0\), while additional numerical experiments for the case \(\alpha > 0\) can be found in the supplementary material.

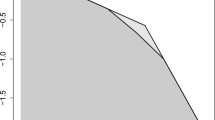

To numerically illustrate the setting \({\mathbb {Q}}({\widetilde{\varXi }}) = \alpha = 0\), we consider two well-known problems, namely, the (single-item) newsvendor problem and the portfolio allocation problem, both posed in the form \(\inf _{{\mathbf {x}}\in X} {\mathbb {E}}_{{\mathbb {Q}}} \left[ f({\mathbf {x}}, \varvec{\xi })\mid \varvec{\xi } \in {\widetilde{\varXi }}\right] \) to allow for side information. We compare four data-driven approaches to solve these two problems: our approach, i.e., problem \(\text {P}_{(\alpha _N,{\widetilde{\rho }}_N)}\) with \(\alpha _N=K_N/N\), which we denote “DROTRIMM”; a Sample Average Approximation method based on a local predictive technique, in particular, the \(K_N\) nearest neighbors, which we refer to as “KNN” (see [7] for further details); this very same local predictive method followed by a standard Wasserstein-metric-based DRO approach to robustify it, as suggested in [9, Section 5], which we call “KNNDRO”; and the robustified KNN method (4), also proposed in [9], which we term “KNNROBUST.” We clarify that KNNDRO uses the K nearest neighbors projected onto the set \({\widetilde{\varXi }}\) as the nominal “empirical” distribution that serves as the center of the Wasserstein ball in [35].

We also note that the newsvendor problem and the portfolio optimization problem are structurally different if seen through the lens of the standard Wasserstein-metric-based DRO approach. Indeed, the newsvendor problem features an objective function with a Lipschitz constant with respect to the uncertainty that is independent of the decision \({\mathbf {x}}\). Consequently, as per [35, Remark 6.7], KNNDRO renders the same minimizer for this problem as that of KNN whenever the support set \({\widetilde{\varXi }}\) is equal to the whole space. This is, in contrast, not true for the portfolio allocation problem, which has an objective function with a Lipschitz constant with regard to the uncertainty that depends on the decision \({\mathbf {x}}\).

In all the numerical experiments, we take the p-norm with \(p=1\) and, accordingly, we use the Wasserstein distance of order 1. Thus, all the optimization problems that we solve are linear programs. We consider a series of different values for the size N of the sample data. Unless stated otherwise in the text, for each N, we choose as the number of neighbors, \(K_N\), the value \(\lfloor N/\log (N+1) \rfloor \), where \(\lfloor \cdot \rfloor \) stands for the floor function. Nevertheless, for the portfolio allocation problem, we also test the values \(\lfloor N^{0.9}\rfloor \) and \(\lfloor \sqrt{N} \rfloor \) to assess the impact of the number of neighbors on the out-of-sample performance of the four methods we compare.
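As a quick illustration of how the three neighbor-count rules scale with the sample size, the following Python sketch evaluates them for a few values of N. The function names are ours and purely illustrative; only the formulas come from the text. For \(N = 10{,}000\), the logarithmic rule gives 1085 neighbors, which agrees with the size of the proxy used for the newsvendor problem.

```python
import math


def k_log(n: int) -> int:
    """Logarithmic rule: floor(N / log(N + 1)), with the natural logarithm."""
    return math.floor(n / math.log(n + 1))


def k_power(n: int, exponent: float = 0.9) -> int:
    """Power rule: floor(N ** exponent); the text tests exponent = 0.9."""
    return math.floor(n ** exponent)


def k_sqrt(n: int) -> int:
    """Square-root rule: floor(sqrt(N)), computed exactly on integers."""
    return math.isqrt(n)


if __name__ == "__main__":
    for n in (100, 1000, 10000):
        print(n, k_log(n), k_power(n), k_sqrt(n))
```

The square-root rule keeps the neighborhood very local, whereas the \(N^{0.9}\) rule uses most of the sample; the logarithmic rule lies in between.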

We estimate \({\mathbf {x}}^* \in {\mathrm{arg\,min}}_{{\mathbf {x}}\in X}\; {\mathbb {E}}_{{\mathbb {Q}}_{{\widetilde{\varXi }}}} \left[ f({\mathbf {x}}, \varvec{\xi })\right] \) and \(J^* = {\mathbb {E}}_{{\mathbb {Q}}_{{\widetilde{\varXi }}}} \left[ f({\mathbf {x}}^*, \varvec{\xi })\right] \) using a discrete proxy of the true conditional distribution \({\mathbb {Q}}_{{\widetilde{\varXi }}}\). In the newsvendor problem, this proxy is made up of 1085 data points, resulting from applying the KNN method (with the logarithmic rule) to 10,000 samples from the true data-generating joint distribution. In the portfolio optimization problem, we have an explicit form of \({\mathbb {Q}}_{{\widetilde{\varXi }}}\), which we utilize to directly construct a 10,000-data-point approximation. To compare the four data-driven approaches under consideration, we use two performance metrics, specifically, the out-of-sample performance of the data-driven solution and its out-of-sample disappointment. The former is given by \(J={\mathbb {E}}_{{\mathbb {Q}}_{{\widetilde{\varXi }}}} \left[ f(\widehat{{\mathbf {x}}}_{N}^{m}, \varvec{\xi })\right] \), while the latter is calculated as \(J -{\widehat{J}}_{N}^{m}\), where \(m \in \{\text {KNN, KNNROBUST, DROTRIMM, KNNDRO}\}\) and \({\widehat{J}}_{N}^{m}\) is the objective function value yielded by the data-driven optimization problem solved by method m. We note that a negative out-of-sample disappointment represents a favorable outcome.
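The two metrics can be sketched in a few lines of Python once a discrete proxy of \({\mathbb {Q}}_{{\widetilde{\varXi }}}\) is available. This is a minimal sketch under stated assumptions: the function names are ours, and the newsvendor-type cost with unit holding cost `h` and unit backorder cost `b` is a hypothetical stand-in, not the cost function or the parameter values used in the experiments.

```python
from statistics import mean


def out_of_sample_performance(cost, x_hat, proxy):
    """Estimate J = E[f(x_hat, xi)] by averaging the cost over the proxy points."""
    return mean(cost(x_hat, xi) for xi in proxy)


def out_of_sample_disappointment(cost, x_hat, j_hat, proxy):
    """Return J - J_hat; a negative value means the certificate J_hat was not exceeded."""
    return out_of_sample_performance(cost, x_hat, proxy) - j_hat


def newsvendor_cost(x, demand, h=1.0, b=4.0):
    """Hypothetical newsvendor-type cost (h and b are illustrative assumptions)."""
    return h * max(x - demand, 0.0) + b * max(demand - x, 0.0)


if __name__ == "__main__":
    proxy = [1.0, 2.0, 3.0]          # toy proxy of the conditional distribution
    x_hat, j_hat = 2.0, 2.0          # toy decision and its in-sample certificate
    print(out_of_sample_performance(newsvendor_cost, x_hat, proxy))
    print(out_of_sample_disappointment(newsvendor_cost, x_hat, j_hat, proxy))
```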

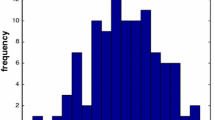

Since \({\mathbb {E}}_{{\mathbb {Q}}_{{\widetilde{\varXi }}}} \left[ f(\widehat{{\mathbf {x}}}_{N}^{m}, \varvec{\xi })\right] \) and \({\widehat{J}}_{N}^{m}\) are functions of the sample data, we conduct a certain number of runs (400 for the newsvendor problem and 200 for the portfolio optimization problem) for every N, each run with an independent sample of size N. This way we obtain (visual) estimates of the distribution of the out-of-sample performance and disappointment across independent runs for several values of the sample size N. These estimates are illustrated in the form of box plots in a series of figures, where the dotted black horizontal line corresponds either to the optimal solution \({\mathbf {x}}^*\) (only in the newsvendor problem) or to its associated optimal cost \(J^*\) with complete information.

As is customary in practice, we use a data-driven procedure to tune the robustness parameter of each method. In particular, for a desired value of reliability \(1-\beta \in (0,1)\) (in our numerical experiments, we set \(\beta \) to 0.15), and for each method j, where \(j \in \{\text {KNNROBUST, KNNDRO, DROTRIMM}\}\), we aim for the value of the robustness parameter for which the estimate of the objective value \({\widehat{J}}_{N}^{j}\) given by method j provides an upper \((1-\beta )\)-confidence bound on the out-of-sample performance of its respective optimal solution (see Eq. (10)), while delivering the best out-of-sample performance. As the optimal robustness parameter is unknown and depends on the available data sample, we need to derive an estimator \(param^{\beta ,j}_N\) that is also a function of the training data. We construct \(param^{\beta ,j}_N\) and the corresponding reliability-driven solution as follows:

1. We generate kboot resamples (with replacement) of size N, each playing the role of a different training set. In our experiments we set \(kboot = 50\). Moreover, we build a validation dataset determining the \(K_{N_{val}}\)-neighbors of the \(N_{val}\) data points of the original sample of size N that have not been used to form the training set.

2. For each resample \(k=1, \ldots ,kboot\) and each candidate value for param, we compute a solution by method j with parameter param on the k-th resample. The resulting optimal decision is denoted as \({\widehat{x}}^{j,k}_N(param)\) and its corresponding objective value as \({\widehat{J}}^{j,k}_N(param)\). Thereafter, we calculate the out-of-sample performance \(J({\widehat{x}}^{j,k}_N(param))\) of the data-driven solution \({\widehat{x}}^{j,k}_N(param)\) over the validation set.

3. From among the candidate values for param such that \({\widehat{J}}^{j,k}_N(param)\) exceeds the value \(J({\widehat{x}}^{j,k}_N(param))\) in at least \((1-\beta )\times kboot\) different resamples, we take as \(param^{\beta ,j}_N\) the one yielding the best out-of-sample performance averaged over the kboot validation datasets.

4. Finally, we compute the solution given by method j with parameter \(param^{\beta ,j}_N\), \({\widehat{x}}^j_N:={\widehat{x}}^{j}_N(param^{\beta ,j}_N)\) and the respective certificate \({\widehat{J}}^j_N:={\widehat{J}}^{j}_N(param^{\beta ,j}_N)\).
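The tuning procedure above can be sketched generically in Python as follows. This is a simplified illustration rather than the code used in the experiments. The callables `solve` and `evaluate` are hypothetical placeholders for "method j" and for the validation step. In particular, the validation set here is simply the set of out-of-bag points of each resample, and any nearest-neighbor selection on it is assumed to happen inside `evaluate`. Returning `None` when no candidate is reliable enough is also our own convention.

```python
import random
from statistics import mean


def tune_parameter(sample, candidates, solve, evaluate,
                   beta=0.15, kboot=50, seed=0):
    """Pick a robustness parameter by the bootstrap-based reliability rule.

    solve(train, param) -> (x_hat, j_hat)   # decision and its certificate
    evaluate(x_hat, validation) -> j_out    # out-of-sample cost estimate
    """
    rng = random.Random(seed)
    n = len(sample)
    hits = {c: 0 for c in candidates}    # resamples where the certificate holds
    perf = {c: [] for c in candidates}   # validation performance per resample

    for _ in range(kboot):
        # Step 1: resample with replacement; out-of-bag points form the validation set.
        idx = [rng.randrange(n) for _ in range(n)]
        used = set(idx)
        train = [sample[i] for i in idx]
        validation = [sample[i] for i in range(n) if i not in used]
        if not validation:               # extremely unlikely; skip this resample
            continue
        # Step 2: solve for each candidate and score it on the validation set.
        for c in candidates:
            x_hat, j_hat = solve(train, c)
            j_out = evaluate(x_hat, validation)
            perf[c].append(j_out)
            if j_hat >= j_out:           # certificate upper-bounds the validation cost
                hits[c] += 1

    # Step 3: keep candidates that are reliable in at least (1 - beta) * kboot resamples
    # and choose the one with the best (lowest) average validation cost.
    admissible = [c for c in candidates if hits[c] >= (1 - beta) * kboot]
    if not admissible:
        return None
    return min(admissible, key=lambda c: mean(perf[c]))
```

Step 4 then amounts to calling `solve(sample, best_param)` once on the full sample with the selected parameter to obtain the final decision and its certificate.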

Recall that, in our approach, the robustness parameter \({\widetilde{\rho }}_{N}\) must be greater than or equal to the minimum transportation budget to the power of p, that is, \({\underline{\epsilon }}^p_{N\alpha _N}\). Hence, if we decompose \({\widetilde{\rho }}_{N}\) as \({\widetilde{\rho }}_{N} = {\underline{\epsilon }}^p_{N\alpha _N} + \varDelta {\widetilde{\rho }}_N\), what one really needs to tune in DROTRIMM is the budget excess \(\varDelta {\widetilde{\rho }}_N\). Furthermore, for the same amount of budget \(\varDelta {\widetilde{\rho }}_N\), our approach will lead to more robust decisions \({\mathbf {x}}\) than KNNDRO, because the worst-case distribution in KNNDRO is also feasible in DROTRIMM. Consequently, in practice, the tuning of one of these methods could guide the tuning of the other.
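To make the decomposition concrete, the sketch below computes the budget \({\widetilde{\rho }}_{N}\) from a budget excess for the case \({\widetilde{\varXi }} = \{{\mathbf {z}} = {\mathbf {z}}^*\}\). It rests on an assumption that should be checked against Definition 2: when \(N\alpha _N = K\) is an integer, the minimum transportation budget to the power of p is taken to be the average of the p-th powers of the distances from the K nearest feature vectors to \({\mathbf {z}}^*\). The function names are ours, and the 1-norm is used to match the experimental setup.

```python
def minimum_budget_p(z_samples, z_star, k, p=1):
    """Assumed form of the minimum budget to the power p: the average p-th power
    1-norm distance from the k nearest feature vectors to z_star."""
    dists = sorted(
        sum(abs(a - b) for a, b in zip(z, z_star)) for z in z_samples
    )
    return sum(d ** p for d in dists[:k]) / k


def robustness_budget(z_samples, z_star, k, delta_rho, p=1):
    """Total budget = minimum budget (to the power p) + nonnegative excess."""
    if delta_rho < 0:
        raise ValueError("the budget excess must be nonnegative")
    return minimum_budget_p(z_samples, z_star, k, p) + delta_rho


if __name__ == "__main__":
    z_samples = [(0.0,), (1.0,), (3.0,), (10.0,)]   # toy one-dimensional features
    print(robustness_budget(z_samples, (0.0,), k=2, delta_rho=0.25))
```

Under this parametrization only `delta_rho` is tuned, which is what allows the same grid of excess values to be reused when tuning KNNDRO.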