Abstract

The selection of branching variables is a key component of branch-and-bound algorithms for solving mixed-integer programming (MIP) problems, since the quality of the selection procedure is likely to have a significant effect on the size of the enumeration tree. State-of-the-art procedures base the selection of variables on their “LP gains”, that is, the dual bound improvements obtained after branching on them. There are various ways of selecting variables based on their LP gains; however, all of these methods have been evaluated only empirically. In this paper we present a theoretical model for the selection of branching variables. It is based on an abstraction of MIPs to a simpler setting in which the dual bound improvement of choosing a given variable can be evaluated analytically. We then discuss how the analytical results can be used to choose branching variables for MIPs, and we give experimental results that demonstrate the effectiveness of the method on MIPLIB 2010 “tree” instances, where we achieve a \(5\%\) improvement in the geometric means of solving time and number of nodes over the default rule of SCIP, a state-of-the-art MIP solver.


Change history

07 February 2017

An erratum to this article has been published.

References

Achterberg, T.: Constraint Integer Programming. Ph.D. thesis, Technische Universität Berlin (2007)

Achterberg, T.: SCIP: solving constraint integer programs. Math. Program. Comput. 1(1), 1–41 (2009)

Achterberg, T., Berthold, T.: Hybrid branching. In: Hoeve, W.J., Hooker, J.N. (eds.) Integration of AI and OR Techniques in Constraint Programming for Combinatorial Optimization Problems, Volume 5547 of Lecture Notes in Computer Science, pp. 309–311. Springer, Berlin (2009)

Achterberg, T., Koch, T., Martin, A.: MIPLIB 2003. Oper. Res. Lett. 34(4), 361–372 (2006)

Achterberg, T., Wunderling, R.: Mixed Integer Programming: Analyzing 12 Years of Progress. Springer, Berlin (2013)

Conforti, M., Cornuéjols, G., Zambelli, G.: Integer Programming, Volume 271 of Graduate Texts in Mathematics. Springer, Berlin (2014)

Cormen, T.H., Leiserson, C.E., Rivest, R.L., Stein, C.: Introduction to Algorithms, 3rd edn. MIT Press, Cambridge (2009)

Jacobson, N.: Basic Algebra I: Second Edition. Dover, Mineola (2009)

Koch, T., Achterberg, T., Andersen, E., Bastert, O., Berthold, T., Bixby, R.E., Danna, E., Gamrath, G., Gleixner, A.M., Heinz, S., Lodi, A., Mittelmann, H., Ralphs, T., Salvagnin, D., Steffy, D.E., Wolter, K.: MIPLIB 2010. Math. Program. Comput. 3(2), 103–163 (2011)

Kullmann, O.: Fundaments of branching heuristics. In: Biere, A., Heule, M., van Maaren, H., Walsh, T. (eds.) Handbook of Satisfiability, Volume 185 of Frontiers in Artificial Intelligence and Applications, pp. 205–244. IOS Press, Amsterdam (2009)

Land, A.H., Doig, A.G.: An automatic method of solving discrete programming problems. Econometrica 28(3), 497–520 (1960)

Lodi, A., Tramontani, A.: Performance variability in mixed-integer programming. Tutor. Oper. Res. Theory Driven Infl. Appl. 2, 1–12 (2013)

Nemhauser, G.L., Wolsey, L.A.: Integer and Combinatorial Optimization. Wiley, New York (1988)

Press, W.H., Teukolsky, S.A., Vetterling, W.T., Flannery, B.P.: Numerical Recipes 3rd Edition: The Art of Scientific Computing, 3rd edn. Cambridge University Press, New York (2007)

Stockmeyer, L.J.: The polynomial-time hierarchy. Theor. Comput. Sci. 3(1), 1–22 (1976)

Toda, S.: PP is as hard as the polynomial-time hierarchy. SIAM J. Comput. 20(5), 865–877 (1991)

Acknowledgements

The authors would like to thank Eduardo Uchoa for pointing out reference [10] and Graham Farr for helping with the discussion at the end of Sect. 5.1. The authors would also like to thank the reviewers for the numerous improvements they contributed through their comments. This research was funded by AFOSR grant FA9550-12-1-0151 of the Air Force Office of Scientific Research and the National Science Foundation Grant CCF-1415460 to the Georgia Institute of Technology.


Additional information

The original version of this article has been revised: the format of Table 1 was incorrect and has now been corrected.

An erratum to this article is available at https://doi.org/10.1007/s10107-017-1118-7.


Appendices

Appendix 1: Proofs

1.1 Proof of Theorem 2 and Corollary 1

Theorem

When G tends to infinity, both sequences \(\root l \of {\frac{t(G+l)}{t(G)}}\) and \(\root r \of {\frac{t(G+r)}{t(G)}}\) converge to \(\varphi \), which is the unique root greater than 1 of the equation \(p(x)= x^r - x^{r-l} -1=0\).
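The statement can be checked numerically. The sketch below is only an illustration under stated assumptions: it assumes the single-variable recursion \(t(G) = 1\) for \(G \le 0\) and \(t(G) = 1 + t(G-l) + t(G-r)\) otherwise (our reading of definition (1), which is not reproduced in this section), restricts the tree computation to integer gains, and finds \(\varphi\) by bisection. The helper names `phi` and `tree_sizes` are hypothetical.

```python
def phi(l: float, r: float) -> float:
    """Root greater than 1 of p(x) = x**r - x**(r - l) - 1 (assumes 0 < l <= r).

    For x > 0, p(x) has the same sign as q(x) = p(x) / x**r = 1 - x**(-l) - x**(-r).
    q is increasing with q(1) = -1, so we widen the bracket until q >= 0 and bisect.
    Working with q also avoids overflow of x**r for large gains.
    """
    if not 0 < l <= r:
        raise ValueError("expected 0 < l <= r")

    def q(x: float) -> float:
        return 1 - x ** (-l) - x ** (-r)

    lo, hi = 1.0, 2.0
    while q(hi) < 0:              # root lies above hi: move the bracket up
        lo, hi = hi, 2 * hi
    for _ in range(200):          # plenty of halvings for double precision
        mid = (lo + hi) / 2
        if q(mid) < 0:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


def tree_sizes(g_max: int, l: int, r: int) -> list[int]:
    """t(G) for G = 0..g_max under the assumed recursion
    t(G) = 1 if G <= 0, else 1 + t(G - l) + t(G - r)  (integer gains only)."""
    t = [1] * (g_max + 1)         # t[0] = 1 also stands in for every G < 0
    for g in range(1, g_max + 1):
        t[g] = 1 + t[max(g - l, 0)] + t[max(g - r, 0)]
    return t


if __name__ == "__main__":
    l, r, G = 2, 3, 200
    t = tree_sizes(G + r, l, r)
    print(phi(l, r))                       # real root of x^3 - x - 1
    print((t[G + l] / t[G]) ** (1 / l))    # l-th root of t(G+l)/t(G)
    print((t[G + r] / t[G]) ** (1 / r))    # r-th root of t(G+r)/t(G)
```

For \(l=2\), \(r=3\) the polynomial is \(p(x) = x^3 - x - 1\), and the three printed values agree to many digits. Bisection is used instead of a closed form because \(l\) and \(r\) need not be integers in \(p\).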

Proof

Proposition 2 proves the case \(l=r\), so we assume throughout the proof that \(r>l\). First, we define the notation

Using the recursive definition (1) of t between the first and the second lines, we can easily establish:

Similarly, \(\bar{L} = 1 + \frac{1}{\bar{R} - 1}\). Next, we obtain

Also, we have

Together, these yield

Following the same steps, we can show \(\bar{R}^l \ge \underline{L}^r\). Starting from this inequality, we prove

Likewise, we can show that the inequality \(\bar{R} \le 1 + \frac{1}{\underline{R}^\frac{l}{r} -1}\) holds.

We now introduce two monotonic sequences \(\alpha _n\) and \(\omega _n\) that bound \(\underline{R}\) from below and \(\bar{R}\) from above, respectively, and we prove that they converge to the same limit. For all non-negative n, let \(\alpha _n\) and \(\omega _n\) be defined as follows:

\[ \alpha _0 = 2, \qquad \omega _n = f(\alpha _n), \qquad \alpha _{n+1} = f(\omega _n), \]

where \(f(x) = 1 + \frac{1}{x^\frac{l}{r} -1}\) for all \(x \in (1, \infty )\). We first prove by induction that for all non-negative integers n, \(\alpha _n\) and \(\omega _n\) satisfy \(\alpha _n \le \underline{R} \le \bar{R} \le \omega _n\). Proposition 2 ensures that \(\alpha _0=2\) is a lower bound on \(\underline{R}\). Suppose that for a given non-negative n, the inequality \(\alpha _n \le \underline{R}\) holds. We prove that \(\bar{R} \le \omega _n\) holds too:

\[ \bar{R} \le 1 + \frac{1}{\underline{R}^{\frac{l}{r}} - 1} = f(\underline{R}) \le f(\alpha _n) = \omega _n, \]

where the second inequality holds because f is decreasing on \((1, \infty )\) and \(\alpha _n \le \underline{R}\).

The same reasoning also proves that for all non-negative integers n, \(\omega _n \ge \bar{R}\) implies \(\alpha _{n+1} \le \underline{R}\). The sequence \(\alpha _n\) thus bounds \(\underline{R}\) from below, and \(\omega _n\) bounds \(\bar{R}\) from above.
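Both implications, like the monotonicity argument that follows, rest on f being strictly decreasing on \((1, \infty )\) when \(0 < l \le r\). A one-line check via the derivative:

\[ f'(x) = -\frac{\frac{l}{r}\, x^{\frac{l}{r}-1}}{\left(x^{\frac{l}{r}} - 1\right)^{2}} < 0 \qquad \text{for all } x > 1, \]

so \(x < y\) implies \(f(x) > f(y)\): applying f turns a lower bound on \(\underline{R}\) into an upper bound on \(\bar{R}\), and symmetrically.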

We now prove that \(\alpha _n\) is monotonically increasing. Consider the inequality:

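Suppose now that for a given positive n, \(\alpha _n > \alpha _{n-1}\). Since f is strictly decreasing on \((1, \infty )\), this implies that \(\omega _n < \omega _{n-1}\):

\[ \omega _n = f(\alpha _n) < f(\alpha _{n-1}) = \omega _{n-1}. \]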

In turn, for that given n, \(\omega _n < \omega _{n-1}\) implies \(\alpha _{n+1} > \alpha _{n}\). The sequences \(\alpha _{n}\) and \(\omega _n\) are thus increasing and decreasing, respectively. Since they are bounded, each of them converges to one of the solutions of the fixed-point equation \(x=f(x)\). We now prove that this equation has a unique solution:

\[ x = f(x) \iff (x - 1)\left(x^{\frac{l}{r}} - 1\right) = 1 \iff x^{1+\frac{l}{r}} - x - x^{\frac{l}{r}} = 0 \iff X^{l}\left(X^{r} - X^{r-l} - 1\right) = 0 \iff p(X) = 0, \]

where \(X = x^{\frac{1}{r}}\). We establish in Theorem 3 that the polynomial p has a unique root in \((1, \infty )\); hence the fixed-point equation \(x=f(x)\) also has a unique solution. Consequently, both sequences \(\alpha _{n}\) and \(\omega _n\) must converge to this unique fixed point, and thus \(\underline{R} = \bar{R}\). Furthermore, the sequence \(\root r \of {\frac{t(G+r)}{t(G)}}\) converges to the root \(\varphi > 1\) of the polynomial p.

Since we have established that \(\underline{L} = 1 + \frac{1}{\underline{R} - 1}\) and \(\bar{L} = 1 + \frac{1}{\bar{R} - 1}\), it follows that \(\underline{L} = \bar{L}\). Since \(p(\varphi )=0\), \(\varphi \) equivalently satisfies \(\varphi ^{l} = 1 + \frac{1}{\varphi ^{r} - 1}\); therefore \(\underline{L} = \bar{L} = \varphi ^l\). \(\square \)
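The equivalence used in the last step can be checked directly. Multiplying out gives a form that is symmetric in l and r, which also shows that \(\varphi ^r = 1 + \frac{1}{\varphi ^l - 1} = f(\varphi ^r)\), i.e. that \(\varphi ^r\) is the fixed point of f:

\[ \varphi ^{l} = 1 + \frac{1}{\varphi ^{r} - 1} \iff \left(\varphi ^{l} - 1\right)\left(\varphi ^{r} - 1\right) = 1 \iff \varphi ^{l}\left(\varphi ^{r} - \varphi ^{r-l} - 1\right) = 0 \iff p(\varphi ) = 0, \]

where the first equivalence uses \(\varphi ^r > 1\) and the last uses \(\varphi ^l > 0\).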

Corollary

A numerical approximation of \(\varphi ^r\) is given by the fixed-point iteration

\[ x \leftarrow 1 + \frac{1}{x^{\frac{l}{r}} - 1} \]

with the starting point \(x=2\).

Proof

Recall the definition of the sequences \(\alpha _n\) and \(\omega _n\) given in the proof of Theorem 2 (the function f is the same). The sequence \(f_n\) generated by the fixed-point iteration is \((2, f(2), f(f(2)), f(f(f(2))), \dots )\), which is equal to \((\alpha _0, \omega _0, \alpha _1, \omega _1, \dots )\). Formally, the sequence \(f_n\) satisfies

\[ f_{2n} = \alpha _n \quad \text{and} \quad f_{2n+1} = \omega _n \qquad \text{for all } n \ge 0. \]

In the proof of Theorem 2, we prove that both \(\alpha _n\) and \(\omega _n\) converge to \(\varphi ^r\) when n tends to infinity, therefore \(f_n\) also converges to \(\varphi ^r\). \(\square \)
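A small Python sketch of Corollary 1, assuming \(0 < l \le r\); the function names are ours. It also exposes the interleaving \(f_{2n} = \alpha _n\), \(f_{2n+1} = \omega _n\), so one can observe the even-indexed terms increasing and the odd-indexed terms decreasing. The stopping rule relies on consecutive iterates bracketing \(\varphi ^r\), which the proof of Theorem 2 gives for \(r > l\) (for \(l = r\) every iterate equals 2, which is \(\varphi ^r\) in that case).

```python
def f(x: float, l: float, r: float) -> float:
    """f(x) = 1 + 1/(x**(l/r) - 1), defined for x > 1."""
    return 1 + 1 / (x ** (l / r) - 1)


def iterates(l: float, r: float, n: int) -> list[float]:
    """First n terms of f_0 = 2, f_{k+1} = f(f_k).

    Even-indexed terms are alpha_0, alpha_1, ...; odd-indexed terms are omega_0, omega_1, ...
    """
    xs = [2.0]
    while len(xs) < n:
        xs.append(f(xs[-1], l, r))
    return xs


def phi_fixed_point(l: float, r: float, tol: float = 1e-13, max_iter: int = 10_000) -> float:
    """Approximate phi as the r-th root of the limit of the iteration (assumes 0 < l <= r).

    Consecutive iterates bracket phi**r, so |x_next - x| bounds the remaining error.
    """
    if not 0 < l <= r:
        raise ValueError("expected 0 < l <= r")
    x = 2.0
    for _ in range(max_iter):
        x_next = f(x, l, r)
        if abs(x_next - x) < tol:
            return x_next ** (1 / r)
        x = x_next
    raise RuntimeError("no convergence within max_iter iterations")


if __name__ == "__main__":
    xs = iterates(1, 2, 8)
    print("alpha_n:", [round(a, 4) for a in xs[0::2]])   # increasing
    print("omega_n:", [round(w, 4) for w in xs[1::2]])   # decreasing
    print(phi_fixed_point(1, 2))   # golden ratio, root of x^2 - x - 1
    print(phi_fixed_point(2, 3))   # real root of x^3 - x - 1
```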

1.2 Proof of Theorem 4

Recall that z is the least common multiple of all \(l_i\) and \(r_i\).

Theorem

\(\varphi = \min _i \varphi _i\)

Proof

Let \(\alpha \) be such that for all positive integers \(x \le z\),

A possible value is \(\alpha =1\). As in the proof of Theorem 2, we use the notation

For all variables i, we have:

where the last line follows from the lower bound on \(\alpha ^{-x}\) from (6).

Suppose that there exists a variable i such that \(\alpha > \varphi _i\). Then, using Theorem 3, we establish:

This contradicts expression (6); hence, for all variables i, \(\alpha \le \varphi _i\). Now suppose that there exists a variable i such that \(\varphi _i^z < \bar{Z}\). Then

which is a contradiction, hence for all variables i, \(\bar{Z} \le \varphi _i^z\). In addition, for each variable i,

Hence there must exist a variable j such that

Suppose that \(\alpha < \varphi _j\). Then, using Theorem 3 in the first line:

Since \(\varphi _j^z > \alpha ^z\) implies \(\underline{Z} > \alpha ^z\), the inequality \(\underline{Z} \ge \varphi _j^z\) must hold. This can be proven briefly by writing

Since \(\varphi _i^z \ge \bar{Z}\) holds for all variables i, variable j satisfies \(\varphi _j^z \le \underline{Z} \le \bar{Z} \le \varphi _i^z\) for all variables i. We can finally conclude that

\(\square \)
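To see Theorem 4 at work numerically, the sketch below assumes a multi-variable recursion in which every node branches on the variable that minimizes the sum of its two subtree sizes, \(t(G) = 1 + \min _i [t(G-l_i) + t(G-r_i)]\) for \(G > 0\) and \(t(G) = 1\) otherwise. This recursion, the restriction to integer gains, and the names `growth_rate` and `mvb_tree_sizes` are our assumptions for illustration, not definitions taken from this section. The sketch compares \(\min _i \varphi _i\) with the empirical rate \(\root z \of {t(G+z)/t(G)}\), with z the least common multiple of the gains (`math.lcm` needs Python 3.9 or later).

```python
import math


def growth_rate(l: float, r: float) -> float:
    """phi_i: root > 1 of q(x) = 1 - x**(-l) - x**(-r), i.e. of p_i(x) / x**r, by bisection."""
    def q(x: float) -> float:
        return 1 - x ** (-l) - x ** (-r)

    lo, hi = 1.0, 2.0
    while q(hi) < 0:              # q is increasing with q(1) = -1: widen the bracket
        lo, hi = hi, 2 * hi
    for _ in range(200):
        mid = (lo + hi) / 2
        if q(mid) < 0:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


def mvb_tree_sizes(g_max: int, variables: list[tuple[int, int]]) -> list[int]:
    """Hypothetical recursion: t(G) = 1 if G <= 0,
    else 1 + min_i [t(G - l_i) + t(G - r_i)] (best variable at every node)."""
    t = [1] * (g_max + 1)         # t[0] = 1 also stands in for every G < 0
    for g in range(1, g_max + 1):
        t[g] = 1 + min(t[max(g - l, 0)] + t[max(g - r, 0)] for l, r in variables)
    return t


if __name__ == "__main__":
    variables = [(2, 3), (1, 4)]
    z = math.lcm(*(gain for pair in variables for gain in pair))
    G = 300
    t = mvb_tree_sizes(G + z, variables)
    print(min(growth_rate(l, r) for l, r in variables))   # min_i phi_i
    print((t[G + z] / t[G]) ** (1 / z))                    # empirical growth rate
```

With the pairs \((2,3)\) and \((1,4)\), the minimum is attained by \((2,3)\), whose \(\varphi _i\) is the real root of \(x^3 - x - 1\), and the empirical rate approaches that value.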

Appendix 2: Additional numerical simulations

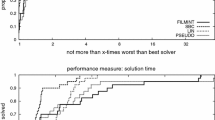

We give an additional set of simulations on the MVB model, using the same target gaps as in the simulations on GVB, so that the two experimental setups can be compared easily. The results of this experiment are presented in two tables, Tables 7 and 8, which follow the format of Tables 2 and 3, respectively. Tables 7 and 8 thus differ in two respects: the presence or absence of the last column, LB, and the reference used for relative performance (the minimum tree size for Table 7, and the tree size produced by product for Table 8).

These results show that ratio generally performs better than product and linear, and that its advantage becomes more pronounced as the gap to close increases. Comparing Table 8 with Table 3 suggests that ratio has an even larger advantage on GVB than on MVB. One reason may be that, for a given instance, the best variable for ratio and for product may be the same, or nearly so, and in the MVB experiments this best variable is branched on at every node. In GVB, however, this variable is branched on only at the root node, and the subsequent best variables chosen by ratio and product are likely to differ. Indeed, there are many more ties in the MVB experiments than in the GVB experiments.


About this article

Cite this article

Le Bodic, P., Nemhauser, G. An abstract model for branching and its application to mixed integer programming. Math. Program. 166, 369–405 (2017). https://doi.org/10.1007/s10107-016-1101-8


DOI: https://doi.org/10.1007/s10107-016-1101-8