Abstract

The personalization of user experiences through recommendation systems has been extensively explored in Internet applications, but it has yet to be fully addressed in Virtual Reality (VR) environments. The complexity of managing geometric 3D data, computational load, and natural interactions poses significant challenges for real-time adaptation in these immersive experiences. However, tailoring VR environments to individual user needs and interests holds promise for enhancing user experiences. In this paper, we present Virtual Reality Environment Adaptation through Recommendations (VR-EAR), a framework designed to address this challenge. VR-EAR employs customizable object metadata and a hybrid recommendation system that models implicit user feedback in VR environments. We use VR optimization techniques to ensure efficient performance. To evaluate our framework, we designed a virtual store where product locations dynamically adjust based on user interactions. Our results demonstrate the effectiveness of VR-EAR in adapting and personalizing VR environments in real time.

1 Introduction

Virtual Reality (VR) or Augmented Reality (AR) environments are designed and developed by experts to ensure an optimal user experience and seamless adaptation to diverse requirements (Ashtari et al. 2020; Mayor et al. 2021). However, users possess their own unique interaction preferences and mental models. Having an environment that adapts in real time to the users’ needs based on their present and past actions enables true customization of the virtual experience to each individual.

In digital scenarios like e-commerce, interfaces and products are often tailored to users’ interests (Nguyen and Hsu 2022). Integrating VR and AR technology into e-commerce and 3D store recreation offers numerous advantages. It facilitates online shopping experiences, natural interactions, and comprehensive data collection. Digital twins of physical stores enable cost-effective simulations, enriching personalized virtual environments and elevating customer experiences (Augustine 2020). Gathering user interaction data enables profiling users and understanding or predicting their behavior. This makes it possible to personalize each individual shopping experience and to use recommendation systems that suggest products the user may like, thereby increasing shop sales.

The first well-known virtual environments for e-commerce, such as Roblox or Decentraland, do not deliver personalized content and experiences adapted to customers and their actions (Rane et al. 2023), as is usually the case in Internet applications. Unfortunately, the computational cost of virtual environments has made it difficult to implement recommendation systems that allow virtual scenarios to be personalized in real time.

Compared to physical stores, virtual stores facilitate customer tracking and data collection. While Internet shopping applications, such as websites or smartphone apps, also allow interaction data collection, e.g., clicks, server requests, etc. (Fan et al. 2022), they lack the fidelity of virtual reality environments (Al-Jundi and Tanbour 2022). Moreover, extrapolating behavior from digital interactions to the real world can be challenging.

In this work we introduce a framework called VR-EAR, which stands for Virtual Reality Environment Adaptation through Recommendations. VR-EAR integrates a hybrid recommendation system into a virtual reality environment that contains customizable objects with which users can interact. By leveraging the objects’ metadata and measuring the user’s actions in the 3D environment, VR-EAR allows setting a personalized initial state for the virtual world, as well as adjusting it in real time. Thus, the customizable objects and the appearance of the scenario adapt according to the user’s actions.

VR-EAR introduces a novel methodology that models users’ implicit VR feedback by combining a new set of proposed VR metrics that provide an attention and interest value for each object. This allows us to better understand the user’s preferences and needs. Periodically, the recommendation system uses the collected metrics to update the placement of the virtual objects based on the user’s actions. In this way, the virtual world iteratively adapts to the user’s preferences. We also present a series of VR optimization techniques that enable VR-EAR to run smoothly during the entire VR experience in a standalone setup (i.e., executing the framework directly in a Head Mounted Display, HMD) across different environments and object scales.

We believe that e-commerce is the main scenario that can benefit from our framework, although we have generalized its implementation to any virtual reality environment with customizable objects. To analyze the results of our framework, we have built a virtual reality environment with the appearance of an online shop.

The rest of the paper is organized as follows. Sect. 2 reviews the state of the art and current applications in recommendation systems and virtual environment personalization. Sect. 3 describes the design of VR-EAR, and Sect. 4 presents its evaluation. Finally, Sect. 5 draws the main conclusions and discusses future work.

2 Related work

2.1 Recommendation systems

Recommendation systems have become very common in recent years. These systems help customers to discover new products that may be of interest to them.

One of the simplest recommendation approaches is the non-personalized, popularity-based recommendation system (Chaudhary and Anupama 2020). These systems check which items are most popular among all users and recommend those. Their main advantage is that they provide a simple solution to the user cold-start problem, i.e., recommending relevant items to new users without any historical data about their preferences (Lika et al. 2014). Their main disadvantage is that they do not adapt to the user, as they recommend the same items to all users based on general statistics.

Two other very popular techniques are content-based filtering and collaborative filtering. Content-based filtering methods treat recommendation as a user-specific classification problem. They are based on a description of the item and a profile of the user’s preferences (Aggarwal 2016). Keywords are used to describe the items, and a user profile is built to indicate the type of items that the user likes. Since the feature representation of the items is hand-engineered to some extent, this technique requires a lot of domain knowledge (Thorat et al. 2015).

Collaborative filtering uses similarities between users and items simultaneously to provide recommendations. The feature representation of the items can be learned automatically, without relying on hand-engineered features (Thorat et al. 2015). Nevertheless, a major disadvantage of collaborative filtering methods is that they are prone to user and item cold-start problems (difficulty in making recommendations when users or products are new).

Some recommendation systems use hybrid approaches, combining collaborative filtering, content-based filtering, or other methods (Çano and Morisio 2017).

Recommendation systems share a common concern: modeling the user’s preferences. These can be captured via either implicit or explicit user feedback (Zhao et al. 2018). Explicit feedback is generally considered to be of higher quality, since it relies on explicit input from users regarding their interest in products (e.g., ratings). However, the cognitive effort required to assign accurate ratings acts as a disincentive, making it difficult to assemble large user populations and contributing to data sparsity. Implicit feedback techniques seek to avoid this bottleneck by inferring the user’s preferences from observations that are available to the system and are often more abundant, e.g., purchase history, browsing history, search patterns, mouse movements, etc. (Jawaheer et al. 2014).

In our work, we use a hybrid recommendation system that combines a popularity-based recommendation system with a content-based recommendation system. VR-EAR infers user’s preferences through implicit VR feedback.

2.2 Virtual environment personalization

To achieve effective personalization of VR environments, an essential step involves measuring users’ behavior to gain insights into their preferences.

Previous research works have focused on collecting users’ measurements in VR applications to classify them. Khatri et al. (2022) classify consumers using a machine learning classifier based on eye-tracking, navigation, posture, and interaction data. Pfeiffer et al. (2020) use eye-tracking to train a machine learning model to classify customers’ search behavior.

The aforementioned works are similar to ours in the sense that they involve measuring users’ behavior in VR environments. Nevertheless, they do not use their classification results to build personalized VR experiences. Another difference with our work is that we do not consider eye-tracking methodologies. Although eye-tracking is becoming more common in different HMD models, most commercial models do not include it yet. Additionally, we do not consider eye-tracking cues to be as informative as head and hand movements for measuring users’ interests. Eye movements may be involuntary responses to any attention-getting stimulus and do not necessarily demonstrate overt interest each time users shift their gaze (Adhanom et al. 2023).

Mayor et al. (2022) adapt the locomotion method to the user’s gait and tolerance to cybersickness, with the aim of optimizing the workspace in the virtual world in a personalized way. However, the adaptation is done in a pre-calibration process and is not modified according to the user’s actions in real time.

Other works have focused on modeling users through physiological signals, and in some cases using them to personalize VR environments. Quintero (2023) models users by measuring motion trajectories, brain, and heart signals, with the objective of enabling adaptive VR experiences. Tasnim et al. (2024) propose an algorithm for cybersickness prediction using eye, heart rate and electrodermal activity data. Kritikos et al. (2021) introduce a system that readjusts a VR simulation environment by measuring real-time feedback from an electrodermal activity sensor. Guo and Elgendi (2013) present a recommendation system for 3D e-commerce based on feedback captured through electroencephalogram (EEG) signals. Contrary to our proposal, all of these works model users through physiological signals collected through devices that are not accessible in regular VR environments and are difficult to generalize in e-commerce.

Some research works (Contreras et al. 2018; Huang et al. 2022; Shravani et al. 2021) use recommendation systems to personalize VR experiences. To the best of our knowledge, these proposals are the most similar to our framework.

Contreras et al. (2018) use a conversational recommendation system based on the user’s explicit feedback to recommend products. Once a recommendation is made, the user may ask for modifications (e.g., “a cheaper camera”, or “a different manufacturer”). The system then offers the user a new product that matches their explicit preferences. The recommended products are shown to users as textures on 2D billboards. Instead of explicit feedback from the user, VR-EAR models users using the implicit VR feedback provided through their interactions with the virtual objects. Previous research has shown that asking users for explicit feedback is detrimental to the user experience, as it requires additional work from them (Jawaheer et al. 2014; Balakrishnan et al. 2016; Xiao et al. 2021).

Huang et al. (2022) propose a VR supermarket with a recommendation system. When the user buys an item, the system uses this information to present recommendations. It also takes into account the user’s position to adjust preferences (when the user stands in front of a product shelf for more than 10 s, the products on the shelf are used to recommend content). The recommendations are presented to the users as textures displayed on 2D panels. We argue that acquiring or not acquiring a product is not the only information that can be leveraged from individual product-level interactions for future recommendations. VR-EAR also measures whether users look at products for certain periods of time or grab them. These other types of interactions provide useful feedback as well, as they demonstrate user interest in certain products, even if the user does not acquire them in the end.

Shravani et al. (2021) propose a VR supermarket with an integrated recommendation system, which is built using user’s purchase history and interaction data. The purchase data is obtained through transaction history stored in databases and user interaction data is obtained through Unity Analytics, a service that tracks users throughout their session in the VR environment. The authors specify that the application is developed as a series of scenes, which the user navigates through to perform a transaction. The recommendations are shown to the users in the form of 2D panels. The paper does not specify the type of interactions that are logged, so it is difficult to determine whether the recommendation system can be effective in terms of what the user does.

Contrary to all of these works, our framework does not use 2D panels to present recommendations. We maintain that 2D panels can have a negative effect on the sense of presence, which is critical in VR environments (Sra and Pattanaik 2023; Weber et al. 2021). Moreover, the use of 2D billboards defeats one of the main advantages of virtual reality e-commerce applications: the visualization of 3D models of real-world products, which allows an enhanced perception of product size, color or style compared to traditional 2D Internet e-commerce applications (Lau and Lee 2019). Instead, VR-EAR leverages the recommendation system’s output to adapt the virtual world in real time based on the user’s actions. Our framework places products that users may like closer to them according to their interactions. We adapt the virtual objects and the 3D scenarios using optimized graphical techniques.

Table 1 summarizes the goals and unresolved limitations, collected user data and the methods used to present the recommendations for the discussed research works.

3 Proposed design

VR-EAR is a framework designed to facilitate an immersive shopping experience tailored to individual user preferences. This section outlines its design, the challenges VR-EAR addresses, and its impact on enhancing VR e-commerce experiences.

VR-EAR models user preferences through a set of feedback interaction metrics, including object fixations, manipulations, and acquisitions, without the need for invasive eye-tracking or physiological measurements. It integrates recommendations into the 3D space using object reallocation.

We provide an overview of the design of VR-EAR in Sect. 3.1, and then, in Sect. 3.2, we discuss a series of VR optimization techniques that we use to ensure that VR-EAR runs smoothly, regardless of its scale.

3.1 Design overview

In this section, we provide an overview of the design of our framework explaining the main components and features of VR-EAR. We outline the main components in Sect. 3.1.1, discuss how to model user’s feedback combining a set of VR metrics that measure the user’s attention and interest for each object in Sect. 3.1.2, describe the framework’s execution flow in Sect. 3.1.3, and introduce the recommendation system in Sect. 3.1.4.

3.1.1 Main components

Figure 1 shows an overview of the main components of VR-EAR. It leverages three core components to create a personalized VR e-commerce experience: the recommendation system, customizable objects along with their metadata, and the VR environment itself.

These three components interact seamlessly to adapt the virtual space in real time, based on user interactions. They are implemented as decoupled components, so that they can be modified independently. For example, the objects’ data and the recommendation system can be modified to add more objects without affecting the design of the VR environment.

The recommendation system is the brain behind VR-EAR’s ability to personalize the VR e-commerce environment. It leverages users’ implicit VR feedback, based on their interactions with the VR environment, to make informed predictions about their preferences. This system dynamically tailors the VR environment, ensuring that users are presented with objects that resonate with their interests, making it a pivotal component for enhancing user experience and aligning with e-commerce goals by potentially driving up sales. The recommendation system automatically places objects of interest closer to the user and less valued objects farther away. We chose to provide recommendations through object reallocation because the sense of presence is critical in VR environments (Sra and Pattanaik 2023); this way, presence is not affected by unnatural 2D panels presenting the recommendations, as in other works (Contreras et al. 2018; Shravani et al. 2021; Huang et al. 2022).

The customizable objects stand at the core of personalizing the VR e-commerce experience, as they are the primary elements that the recommendation system reallocates. They represent products and are designed with detailed 3D meshes and textures that offer a lifelike view, simulating a real-world shopping experience. They also encompass a comprehensive set of metadata, which can include product popularity scores, categories, descriptions, etc.

The VR environment is a fully immersive 3D space designed to mimic a physical store, but with the added capability of dynamically adapting to user preferences. As users interact with the customizable objects, the VR environment evolves, highlighting items of potential interest and subtly guiding users through a personalized shopping journey.

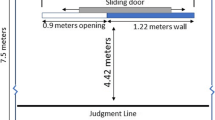

We use Unity’s XR Interaction Toolkit to create the customizable objects as Grab Interactables, which allows us to detect when the user interacts with each object using the HMD controllers. We program the candidate locations to which the objects can be moved as Socket Interactors that can hold the Grab Interactables.

As an example of a virtual e-commerce environment, we have designed a virtual shop in Unity, where the customizable 3D mesh objects are movie products, although any virtual environment could be generated with metadata associated with the virtual objects. This e-commerce prototype is detailed in Sect. 4.

3.1.2 Measurement of user’s feedback

To measure user-level preferences in the VR environment, we introduce a set of novel interaction metrics that model implicit users’ VR feedback. For each customizable object i, we define and collect the following metrics:

(i) \(\textit{NF}_{ft,i}\): The number of times that the user looks at object i for a fixed amount of time ft (i.e., the number of fixations). To calculate this in a VR environment, we cast a ray (Pietroszek 2018) from the center of the user’s camera and check whether it collides with any visible customizable object. Once a raycast collision takes place, we increment \(\textit{NF}_{ft,i}\) every ft seconds for as long as the collision lasts.

(ii) \(\textit{NG}_{i}\): The number of times that the user grabs object i, detected as the selection of a Grab Interactable by Unity’s XR Interaction Toolkit.

(iii) \(\textit{NA}_{i}\): The number of times that the user has acquired object i. This can be implemented as a user basket that stores the information of the objects that the user acquires during the visit.
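The counting rules for the three metrics can be illustrated with an engine-agnostic sketch. In Unity this logic would live in a per-frame update driven by a camera-centered raycast; here, as an assumption, the caller passes the id of the customizable object hit by the ray in each frame (or `None`) together with the frame duration. The class name, its methods, and the default value of `ft` are hypothetical and only illustrate the counting rule described above.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class InteractionMetrics:
    """Hypothetical per-object counters for NF_{ft,i}, NG_i and NA_i."""
    ft: float = 2.0  # fixation threshold in seconds (illustrative value only)
    nf: Dict[str, int] = field(default_factory=dict)
    ng: Dict[str, int] = field(default_factory=dict)
    na: Dict[str, int] = field(default_factory=dict)
    _target: Optional[str] = None  # object currently hit by the gaze ray
    _elapsed: float = 0.0          # collision time not yet turned into a fixation

    def on_raycast(self, hit: Optional[str], dt: float) -> None:
        """Call once per frame with the object hit by the camera-centered ray."""
        if hit != self._target:
            # The collision started, ended or switched objects: restart the timer.
            self._target, self._elapsed = hit, 0.0
        if hit is None:
            return
        self._elapsed += dt
        while self._elapsed >= self.ft:
            # One fixation for every full ft seconds of uninterrupted collision.
            self.nf[hit] = self.nf.get(hit, 0) + 1
            self._elapsed -= self.ft

    def on_grab(self, obj: str) -> None:
        """Would be hooked to the Grab Interactable selection event."""
        self.ng[obj] = self.ng.get(obj, 0) + 1

    def on_acquire(self, obj: str) -> None:
        """Would be hooked to the 'add to basket' action."""
        self.na[obj] = self.na.get(obj, 0) + 1
```

With `ft = 2.0` and frames of 0.5 s, eight consecutive frames on the same object yield two fixations, while looking away resets the partial timer.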

The values of these metrics are stored by our framework both during runtime and afterwards. Depending on the scenario, the metrics are also weighted according to the properties and importance of the actions. To allow this, we consider the possibility of applying different weights to each interaction type: WF, WG and WA. In our e-commerce example, acquiring an object implies a higher user preference than grabbing it, and grabbing an object also generally implies a higher user preference than just looking at it.

Since the above metrics require measuring user interactions, we may face the user cold-start problem for new users. To make recommendations while we collect these metrics, we also define popularity scores for the objects. These could be measured through historical interaction data.
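The text leaves the exact popularity measure open. As a hedged illustration only, one option is to sum a per-object interaction strength over all historical sessions of all users and normalize by the maximum, so that the most popular object scores 1. The aggregation rule and the function name are assumptions, not the framework’s definition.

```python
from typing import Dict, Iterable


def popularity_scores(sessions: Iterable[Dict[str, float]]) -> Dict[str, float]:
    """Hypothetical popularity score in [0, 1] from historical sessions.

    Each session maps an object id to an interaction strength for that session
    (for instance, a weighted count of fixations, grabs and acquisitions).
    """
    totals: Dict[str, float] = {}
    for session in sessions:
        for obj, strength in session.items():
            totals[obj] = totals.get(obj, 0.0) + strength
    peak = max(totals.values(), default=0.0)
    if peak == 0.0:
        # No informative history: every known object gets the same score.
        return {obj: 0.0 for obj in totals}
    return {obj: value / peak for obj, value in totals.items()}
```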

3.1.3 Framework’s execution flow

Figure 2 shows the execution flow of VR-EAR. When a user enters the virtual shop, the framework checks whether there are previous interaction metrics linked to that user from other visits. Depending on this, we run two different types of recommendation systems:

1. Popularity-based recommendation: this type of recommendation is executed when there is no previous interaction data linked to the user. It orders the customizable objects according to their popularity: the most popular objects are placed near the user, while the least popular ones are placed farther away.

2. Hybrid recommendation: this type of recommendation is executed when there is previous interaction data linked to the user, giving preference to this data since it is user-specific. If the user has previously made a considerable number of interactions, a fully content-based recommendation system is employed. If the user has made few interactions, some of the customizable objects are placed close to the user using a content-based recommendation approach, while the remaining objects (which are located farther away) are sorted using the popularity-based recommendation approach.

The interactions that the user performs during the visit are monitored and the values of the interaction metrics (\(\textit{NF}_{ft,i}\), \(\textit{NG}_{i}\) and \(\textit{NA}_{i}\)) are updated accordingly. Each time a period of time P elapses, the recommendation system is rerun with the updated values of the interaction metrics. The value for P is carefully chosen to ensure the optimal performance of the framework, with a methodology that we discuss in Sect. 3.2.
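The periodic rerun can be pictured as a timer that accumulates frame time and triggers the recommender once P seconds have elapsed. The sketch below simulates this with a list of frame durations; in Unity the same effect could be obtained by accumulating `Time.deltaTime` in `Update` or with a coroutine that yields `WaitForSeconds`. The function name and signature are hypothetical.

```python
from typing import Callable, List


def run_session(frame_times: List[float], period: float,
                recommend: Callable[[], None]) -> int:
    """Call `recommend` every time `period` seconds (P) of session time elapse.

    `frame_times` are per-frame durations in seconds; returns the number of reruns.
    """
    since_last = 0.0
    reruns = 0
    for dt in frame_times:
        # Per-frame updates of NF, NG and NA would happen here.
        since_last += dt
        if since_last >= period:
            recommend()  # rerun with the updated interaction metrics
            since_last = 0.0
            reruns += 1
    return reruns
```

A larger P means fewer (potentially expensive) reruns at the cost of slower adaptation, which is the trade-off behind the choice of P.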

Once the user completes the VR experience, the interaction metric values are saved for potential future readings. If the same user visits the virtual shop again, we use the previously collected values to set a personalized initial state of the virtual world by executing the hybrid recommendation system at the beginning of the virtual experience with the already collected user’s preference information.

3.1.4 Recommendation system

This section provides a detailed overview of the recommendation system. Figure 3 shows a flow graph of its key steps.

First, if there is no previous interaction data available for the current user, a fully popularity-based recommendation system is executed to tackle the user cold-start problem by sorting the objects according to their popularity. With this method, we are assuming that the user will like top-rated objects.

Second, if previous interaction data is available, it is used to calculate a recommendation score \(\textit{rs}_{i}\) for each object i. This score is initially zero, and increases if the user has interacted with objects that are similar to i. The score \(\textit{rs}_{i}\) represents the confidence of recommending object i (the larger it is, the more confident we are when recommending it).

After calculating \(\textit{rs}_{i}\), it is checked whether the user is grabbing, can see, or has object i in a shopping basket. If any of these conditions are met, object i is not reallocated. This is because we do not want to reallocate an object that the user is currently interacting with, already has the intention of acquiring, or is looking at, since we believe that this would negatively impact the user experience.

A final evaluation is conducted on the value of \(\textit{rs}_{i}\) if none of the previous three conditions are satisfied. If \(\textit{rs}_{i}\) does not exceed zero, this implies that the user did not interact with objects similar to i. In this case, i is added to a list for the application of a popularity-based recommendation. Otherwise, if \(\textit{rs}_{i}\) is positive, i is included in a separate list for the implementation of a content-based recommendation.

Finally, we use the objects in the two lists to perform a hybrid recommendation. We first recommend the objects in the content-based list, prioritizing objects with higher recommendation scores. Then, we recommend the objects in the popularity-based list, prioritizing objects with higher popularity scores. Note that, when the number of interactions is high, it is possible that all objects end up in the content-based list, and therefore the algorithm becomes fully content-based.

The pseudo-code of the recommendation system is shown in Algorithm 1. We now proceed to explain it in detail.

First, the distance between all candidate customizable objects’ locations and the user is calculated (Algorithm 1, lines 2–4), and the list of object locations is ordered according to the distance to the user in ascending order (Algorithm 1, line 5). The idea is that, in the end, the closest candidate locations to the user will be used for the objects that the user will likely prefer, while the less interesting objects will be placed farther away.
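This first step amounts to sorting candidate locations by their distance to the user. A minimal sketch, assuming locations are given as 3D points keyed by a location id and that plain Euclidean distance is used (the paper does not state the metric):

```python
import math
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]


def sort_locations_by_distance(user: Vec3, locations: Dict[str, Vec3]) -> List[str]:
    """Return candidate location ids ordered from closest to farthest from the user."""
    distances = {loc_id: math.dist(user, pos) for loc_id, pos in locations.items()}
    return sorted(distances, key=distances.get)
```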

If there is no previous interaction data available for the current user (Algorithm 1, line 6), a popularity-based recommendation system is executed (Algorithm 1, lines 7–8). It places the most popular objects close to the user and the least popular ones farther away. If, on the contrary, previous interaction data is available, a hybrid recommendation system is executed (Algorithm 1, lines 9–36).
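The popularity-based branch pairs the most popular objects with the closest locations. A minimal sketch, assuming the candidate locations are already sorted by ascending distance and the output is a mapping from object id to location id (both representations are our assumption):

```python
from typing import Dict, List


def popularity_placement(sorted_locations: List[str],
                         popularity: Dict[str, float]) -> Dict[str, str]:
    """Map object id -> location id, most popular objects in the closest locations.

    `sorted_locations` must be ordered by ascending distance to the user.
    """
    ranked = sorted(popularity, key=popularity.get, reverse=True)
    # zip stops at the shorter list, so surplus objects or locations stay unassigned.
    return dict(zip(ranked, sorted_locations))
```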

We can profile the user with different levels of accuracy depending on the number of previous interactions that we have. If the number of previous interactions is not high, our hybrid recommendation system mixes a content-based recommender (Algorithm 1, lines 22–30) with a popularity-based recommender (Algorithm 1, lines 32–35). If, on the contrary, there is a considerable number of previous interactions linked to the current user, a fully content-based recommendation system is employed (Algorithm 1, lines 22–30) and the popularity-based recommendation part gets skipped (Algorithm 1, lines 32–35). We now proceed to explain the details of the hybrid recommendation system.

For the content-based recommendation part (Algorithm 1, lines 22–30), a recommendation score is used for each of the n objects. These recommendation scores are first initialized to zero for all objects (Algorithm 1, line 10):

\[ \textit{rs}_{i} = 0, \quad \forall i \in \{1, \dots, n\} \]

For each object i, three types of interaction metrics are collected (\(\textit{NF}_{ft,i}\), \(\textit{NG}_{i}\) and \(\textit{NA}_{i}\)), and a different weight is applied to each type of interaction (WF, WG and WA). For each object, we then define \(\textit{WOI}_{i}\), the total weighted object interaction, and calculate it (Algorithm 1, line 12) as:

\[ \textit{WOI}_{i} = \textit{WF} \cdot \textit{NF}_{ft,i} + \textit{WG} \cdot \textit{NG}_{i} + \textit{WA} \cdot \textit{NA}_{i} \]

Our aim is to recommend objects that are similar to the ones that the user mostly interacts with. We define the pair-object similarity between objects i and j as:

As with any content-based recommendation system, the computation of \(S_{i,j}\) is application-specific and requires domain knowledge. It can be calculated using object keywords and attributes. For example, for a clothes store, we could define \(S_{i,j}\) in terms of the item category, dressing style, color, price, etc.
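To make the clothing-store example concrete, here is a hypothetical \(S_{i,j}\) that averages three categorical matches (category, style, color) with a price-closeness term, giving a symmetric value in [0, 1]. The attribute set, the equal weighting and the price term are all illustrative assumptions; any application-specific similarity could be substituted.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Garment:
    """Hypothetical product metadata for a clothing store."""
    category: str
    style: str
    color: str
    price: float


def similarity(a: Garment, b: Garment) -> float:
    """Illustrative S_{i,j}: equal-weight mean of attribute matches and price closeness."""
    matches = [a.category == b.category, a.style == b.style, a.color == b.color]
    top = max(a.price, b.price)
    price_closeness = 1.0 if top == 0 else 1.0 - abs(a.price - b.price) / top
    return (sum(matches) + price_closeness) / 4
```

For a blue casual shirt at 20 and a blue formal shirt at 40, two attributes match and the price closeness is 0.5, so the similarity is 0.625.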

For each object i that the user interacts with, we can then increment \(\textit{rs}_{j}\) (the recommendation score for j, an object that is similar to i), with a quantity that considers both \(\textit{WOI}_{i}\) (the interaction strength for object i) and \(S_{i,j}\) (the similarity between object i, the object that users interact with, and object j, the object that is similar to it):

Specifically, for each object that users interact with, the recommendation scores of the nr objects most similar to it are increased (Algorithm 1, lines 11–17); the nr most similar objects to object i can be obtained by sorting \(S_{i,j}\) in descending order and taking the first nr elements. After this process has been followed for all objects, the final recommendation scores are sorted in descending order, and the list of objects is ordered accordingly (Algorithm 1, lines 18–19).
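The score computation can be sketched as follows, assuming a precomputed similarity matrix stored as nested dictionaries. Two details are assumptions on our part: the increment of \(\textit{rs}_{j}\) is taken to be the product \(\textit{WOI}_{i} \cdot S_{i,j}\), and object i itself is excluded from its own nr neighbors. The function name is hypothetical.

```python
from typing import Dict, List, Tuple

Similarity = Dict[str, Dict[str, float]]


def recommendation_scores(nf: Dict[str, int], ng: Dict[str, int], na: Dict[str, int],
                          sim: Similarity, wf: float, wg: float, wa: float,
                          nr: int) -> List[Tuple[str, float]]:
    """Return (object, rs) pairs sorted by descending recommendation score."""
    objects = list(sim)
    rs = {obj: 0.0 for obj in objects}  # rs_i = 0 for every object
    for i in objects:
        # Total weighted object interaction WOI_i.
        woi = wf * nf.get(i, 0) + wg * ng.get(i, 0) + wa * na.get(i, 0)
        if woi == 0:
            continue  # no interaction with i, so it boosts no other object
        # The nr objects most similar to i (excluding i itself is an assumption).
        others = [j for j in objects if j != i]
        nearest = sorted(others, key=lambda j: sim[i][j], reverse=True)[:nr]
        for j in nearest:
            rs[j] += woi * sim[i][j]  # assumed update: strength times similarity
    return sorted(rs.items(), key=lambda kv: kv[1], reverse=True)
```

Objects whose score stays at zero are exactly those that fall through to the popularity-based part of the hybrid algorithm.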

For each object i, it is then checked whether four conditions are met at the same time (Algorithm 1, line 22): that the final value of its recommendation score \(\textit{rs}_{i}\) is positive; that it is not currently in the user’s VR view (to compute this, we first extract the planes that form the view frustum of the user’s camera in Unity, and then determine which customizable objects have their bounds inside these planes (Unity 2022)); that it is not in the user’s basket (the list of objects that the user already intends to acquire); and that the user is not currently grabbing it.
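In Unity, the view check can rely on `GeometryUtility.CalculateFrustumPlanes` and `GeometryUtility.TestPlanesAABB`. The sketch below reproduces the underlying idea in plain Python: an axis-aligned bounding box counts as visible unless it lies entirely behind one of the frustum planes. As an assumption of this sketch, each plane is a pair (normal, d) with the normal pointing into the frustum, so a point p is on the inner side when n·p + d ≥ 0. Like the usual plane/AABB test, it is conservative: boxes near frustum corners may be reported visible.

```python
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]
Plane = Tuple[Vec3, float]  # (inward normal n, offset d): inside when n.p + d >= 0


def aabb_visible(planes: Sequence[Plane], center: Vec3, extents: Vec3) -> bool:
    """Return False only if the box lies completely behind some plane."""
    for normal, d in planes:
        # Box corner furthest along the plane normal (the "positive vertex").
        corner = [c + (e if n >= 0 else -e) for c, e, n in zip(center, extents, normal)]
        if sum(n * p for n, p in zip(normal, corner)) + d < 0:
            return False  # even the most favorable corner is outside
    return True
```

Objects for which this test returns `True` would be treated as in the user’s view and therefore left in place.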

If all of these conditions are met, the object is ranked according to its recommendation score: the larger the score, the closer to the user the object is placed (Algorithm 1, lines 23–25). This is a content-based recommendation. If the user is currently grabbing the object, the object is in the user’s basket, or it is in the user’s VR view, the object is not moved and its location is not considered as a candidate for other objects (Algorithm 1, lines 27–29).

Depending on the type of virtual experience, it is worthwhile to consider two different cases for the list of objects that are in the user’s basket (input OB of Algorithm 1). If it makes sense to acquire the same object across two different visits (e.g., a supermarket, where the item previously acquired might have already been fully consumed since the last visit, and therefore the user may be interested in acquiring it again), we may want to consider for OB the objects that are in the user’s basket during the current visit. If, on the contrary, it does not make sense to acquire the same object across two different visits (e.g., a clothes store, where the user may have already acquired a T-shirt and will likely not be interested in acquiring it again), we may want to consider for OB the objects that were in the user’s basket during all historical visits. Note that, in both cases, if the user has already acquired an object i during the current visit or historical visits, this will benefit future recommendations for similar objects because \(\textit{NA}_{i} > 0\) in Algorithm 1, line 12.

If the user is not grabbing object i and the object is neither in the user’s basket nor in the user’s view, the only way the check (Algorithm 1, line 22) can fail is that the recommendation score for object i (\(\textit{rs}_{i}\)) is zero. This happens when object i is not similar to any of the objects that the user has interacted with, and it will often occur for some objects while the number of objects that the user has interacted with is still low. In this case, the remaining objects are ordered according to their popularity (Algorithm 1, lines 32–35) and placed after the objects that were recommended based on their content, since we are less confident about recommending them (the popularity scores are not user-specific). This is the hybrid part of the algorithm, which leverages both content-based and popularity-based recommendations while the number of user interactions is still low.
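The placement logic of the hybrid step can be summarized in a short sketch. Locked objects (grabbed, in view, or in the basket OB) keep their current location, which is withheld from the others; movable objects with a positive score are placed first by descending \(\textit{rs}_{i}\), followed by the zero-score objects by descending popularity, filling the free locations from closest to farthest. The dictionary-based representation and the function name are assumptions for illustration, not the code of Algorithm 1.

```python
from typing import Dict, List, Set


def hybrid_placement(sorted_locations: List[str], current: Dict[str, str],
                     rs: Dict[str, float], popularity: Dict[str, float],
                     locked: Set[str]) -> Dict[str, str]:
    """Return a new object -> location mapping.

    sorted_locations: candidate locations, closest to the user first.
    current: current object -> location mapping.
    locked: objects being grabbed, in view or in the basket; they stay put.
    """
    placement = {obj: current[obj] for obj in locked}
    taken = set(placement.values())
    free = [loc for loc in sorted_locations if loc not in taken]
    movable = [obj for obj in current if obj not in locked]
    # Content-based part: positive scores, highest score closest to the user.
    content = sorted((o for o in movable if rs.get(o, 0.0) > 0),
                     key=lambda o: rs[o], reverse=True)
    # Popularity-based part: zero-score objects go after the content-based ones.
    popular = sorted((o for o in movable if rs.get(o, 0.0) <= 0),
                     key=lambda o: popularity.get(o, 0.0), reverse=True)
    placement.update(zip(content + popular, free))
    return placement
```

When every movable object ends up with a positive score, the popularity list is empty and the placement is fully content-based, as described above.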

For some VR environments, it may be preferable to execute Algorithm 1 independently for different object categories. For example, a supermarket is typically divided into different sections: meat, bakery, dairy, vegetables, etc. In this case, the recommendation system could be executed independently per category, so that objects are only moved between candidate locations that belong to the same object category. Even in this case, however, it may be worth considering pair-object similarities (\(S_{i,j}\)) between different categories of objects. For instance, if a user has acquired cereal, then she/he may be interested in acquiring milk as well.

3.2 VR optimization

To avoid cybersickness, we aim for VR-EAR to run at a minimum of 60 frames per second (FPS) during the entire VR experience in a standalone setup (i.e., executing the framework directly in the HMD). To achieve that, we have used the following VR optimization techniques in Unity:

(i) GPU instancing: a draw call optimization method that renders multiple copies of a mesh with the same material in a single draw call. This is useful for drawing objects that appear many times in a scene.

(ii) Texture compression: Unity enables configuring the texture compression format for each texture asset. Textures with fewer bits per pixel (bpp) consume less GPU memory bandwidth, which helps to avoid frame rate bottlenecks.

(iii) Occlusion culling: by default, cameras in Unity perform frustum culling, which excludes all renderers that fall outside the camera’s view frustum. However, frustum culling does not check whether a renderer is hidden behind other game objects, so we additionally use occlusion culling, which skips rendering such occluded renderers.

Every frame, the update loop of the Interaction Manager in Unity’s XR Interaction Toolkit queries interactors and interactables and handles their hover and selection states. This becomes expensive when the number of interactors and interactables is large (e.g., when the VR experience has many grabbable objects), potentially causing a substantial frame rate bottleneck. To tackle this issue, we devised the following optimization technique:

(iv) Interactor/interactable triggers: by default, we deactivate all Unity interactable and interactor components and create triggers around the objects that carry these components. As soon as we detect that the user’s hand enters one of these triggers, we activate the associated interactable or interactor component. This way, the user can always use the interactables and interactors, but the large majority of them are kept out of the Interaction Manager’s update loop, reducing its per-frame processing cost.

We re-run Algorithm 1 every time a period P elapses, where \(P_{min} \le P \le P_{max}\), and the value of P varies for every re-execution. For VR-EAR to provide recommendations that reflect the user’s actions in real time, Algorithm 1 should be re-run as often as possible. However, the algorithm involves a series of steps that can be costly to compute, so it is best to execute it in moments of low resource usage to avoid frame rate drops. To select an appropriate value of P each time, we employ the following logic:

(v) Re-execution of Algorithm 1: once \(P_{min}\) seconds have passed since the last execution, we check the velocity of both of the user’s controllers (which control the movement of the user’s hands) and the magnitude of the 2D axis of both controllers (which controls the user’s movement and rotation in the VR environment). If all four values are low at the same time (we use a threshold of 0.01 for the velocity and 0.1 for the 2D axis magnitude), the user’s hands are still and the user is neither moving nor rotating through the environment. In that case, we execute Algorithm 1 immediately, since consecutive VR frames are similar to each other and the user will not notice performance drops. If, on the contrary, any of the four values exceeds its threshold, we keep checking the condition until \(P_{max}\) seconds have passed since the last execution, at which point we re-execute Algorithm 1 regardless.

As mentioned above, we consider running Algorithm 1 independently and sequentially for each object category to support scalability when the number of objects is large. To do so, we first measure the distance between the user and the areas of the VR experience that contain the objects of each category. Then, we run Algorithm 1 independently for each category, starting with the categories closest to the user. Specifically, we employ the following methodology to perform these sequential independent executions:

(vi) Execution of Algorithm 1 through coroutines: Unity’s coroutines enable spreading tasks across several frames. In Unity, a coroutine is a method that can pause execution and return control to Unity, and then continue where it left off on the following frame. The computation of \(\textit{rs}_{j}\) (Algorithm 1, line 14) scales poorly with n, the number of objects in the virtual environment: its cost grows quadratically with n. To mitigate potential performance issues, we adopt a coroutine-based approach in which each independent execution of Algorithm 1 corresponds to an individual object category. If necessary, the execution of each coroutine can be further subdivided into batches of objects within the same category. Distributing the calculation of \(\textit{rs}_{j}\) across multiple frames keeps its per-frame overhead under control. Whether a consistent 60 FPS is achieved depends significantly on the value of n. We recommend dividing the algorithm’s execution across the fewest frames necessary to maintain smooth performance. Over-fragmentation should be avoided, as it could become perceptible to users (each coroutine execution involves relocating objects). We prioritize the coroutines associated with object categories physically closer to the user, as these are the most noticeable elements in the VR environment.
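Below is a minimal sketch of the re-execution rule in item (v). It is written in Python for readability rather than as Unity C# code. The thresholds 0.01 and 0.1 come from the text. Treating each controller velocity as a scalar speed, counting a value equal to its threshold as "low", and the function name `should_rerun` are assumptions. The function would be called once per frame with the time elapsed since the last execution.

```python
from typing import Sequence

VELOCITY_THRESHOLD = 0.01  # controller velocity threshold stated in the text
AXIS_THRESHOLD = 0.1       # 2D-axis magnitude threshold stated in the text


def should_rerun(elapsed: float,
                 controller_speeds: Sequence[float],
                 axis_magnitudes: Sequence[float],
                 p_min: float,
                 p_max: float) -> bool:
    """Decide whether Algorithm 1 should be re-executed in the current frame."""
    if elapsed < p_min:
        return False  # too soon since the last execution
    if elapsed >= p_max:
        return True   # forced re-execution, regardless of user motion
    hands_idle = all(s <= VELOCITY_THRESHOLD for s in controller_speeds)
    locomotion_idle = all(m <= AXIS_THRESHOLD for m in axis_magnitudes)
    # Run only while hands, movement and rotation are all (nearly) still.
    return hands_idle and locomotion_idle
```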

4 Evaluation

To evaluate VR-EAR, we have developed a prototype virtual shop that personalizes the location of its products based on the user’s experience and interactions in the environment. The VR environment has been developed using the Unity engine (version 2020.3.10f1) and tested on the Meta Quest 2 headset.

The prototype contains the three components defined by our framework: a virtual environment, a product dataset and a recommendation system, which are described in the following subsections. The integration of these components is discussed in Sect. 4.4. The framework behaves differently according to the values that we set for some variables. The chosen values and the reasons to choose them are detailed in Sect. 4.5. Finally, we evaluate the recommendation system’s performance and demonstrate its successful integration into the VR environment with an illustrative example in Sect. 4.6.

4.1 Virtual environment

As a case study, we have developed a virtual reality video rental shop to test our framework design. We chose this scenario because movie datasets are a popular option for building and testing recommendation systems, which makes high-quality movie benchmark datasets easily accessible. Moreover, movies are not complex in terms of 3D modeling, they can be categorized according to their genres, their popularity scores are available across several online platforms, and they can be described in terms of item features that are easy to understand without complex domain knowledge: actors, director, plot keywords and description, etc. Nevertheless, we have made sure to design the case study scenario with elements that would also apply to other types of virtual environments.

Figures 4a and b show the 3D environment that we have developed using the Unity game engine. We have included 384 movies belonging to the following genres: drama, comedy, horror, romance, animation, science fiction, crime, and adventure. The movies belonging to the same genre (48 movies per genre) are located together in the same corridor. We have also developed an initial user selector screen that shows existing users along with their former visit statistics (see Fig. 4c) and included avatars (see Fig. 4d).

As shown in Fig. 5a, the movies are Grab Interactables, and they are held by Socket Interactors that act as movie stands. Users can also turn the movies around to read their plot overview (see Fig. 5b).

In Algorithm 1, the movies and the sockets correspond to inputs O and L respectively. The sockets act as the new candidate locations when the movies are relocated using the recommendation system.

To calculate \(\textit{NF}_{ft,i}\) (see Fig. 6a), when the movie is held by a socket (movie stand), we only consider raycast collisions with its front cover as valid. When the movie is being grabbed by the user, we consider raycast collisions with both the front cover and the back cover as valid, since the user may be reading the movie’s overview.
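The validity rule above could be implemented along the lines of the Python sketch below, together with the distance threshold fd and the fixation time ft from Sect. 4.5. This section does not fully specify how \(\textit{NF}_{ft,i}\) is incremented. The counting rule in `FixationCounter` is therefore an assumption: one fixation per ft seconds of continuous valid gaze, with the timer reset when gaze is lost or the movie is beyond fd. All names are hypothetical.

```python
from enum import Enum


class Holder(Enum):
    SOCKET = "socket"  # movie resting on a movie stand
    USER = "user"      # movie held in the user's hand


def is_valid_hit(hit_part: str, holder: Holder) -> bool:
    """hit_part is the movie part hit by the gaze raycast, e.g. 'front' or 'back'."""
    if holder is Holder.SOCKET:
        return hit_part == "front"       # only the cover counts on a stand
    return hit_part in ("front", "back")  # in hand, the user may read the overview


class FixationCounter:
    """Hypothetical per-movie counter for NF_{ft,i} (counting rule assumed)."""

    def __init__(self, ft: float, fd: float):
        self.ft = ft        # required continuous gaze time, in seconds
        self.fd = fd        # maximum distance at which gaze time is counted
        self.timer = 0.0
        self.count = 0

    def update(self, dt: float, valid_hit: bool, distance: float) -> None:
        if not valid_hit or distance > self.fd:
            self.timer = 0.0  # gaze lost, or movie too far to discern details
            return
        self.timer += dt
        if self.timer >= self.ft:
            self.count += 1
            self.timer = 0.0
```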

There is also a basket near the user where movies can be dropped (see Fig. 6c). This basket automatically follows the user throughout the visit. When the user drops a movie in the basket, we consider that the user intends to acquire the movie and update the value of \(\textit{NA}_{i}\) accordingly. When executing Algorithm 1, we calculate input OB (list of objects in the user’s basket) as the list of movies that are inside the basket.

One of the inputs of Algorithm 1 is PV, the list of movies in the user’s view. To calculate it, we extract the movies that have their bounds inside the planes that form the user’s camera’s view frustum in Unity (Unity 2022).
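Unity provides `GeometryUtility.CalculateFrustumPlanes` and `GeometryUtility.TestPlanesAABB` for this kind of bounds-versus-frustum test. The Python sketch below re-implements the same idea outside Unity to make the logic explicit. It also applies the distance threshold vd from Sect. 4.5. The plane convention (inward-pointing normals), measuring distance to the box center, and all function names are assumptions.

```python
import math
from typing import Iterable, List, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Plane = Tuple[Vec3, float]  # (inward normal n, offset d): point p is inside if n.p + d >= 0
Movie = Tuple[str, Vec3, Vec3]  # (movie_id, bounds min corner, bounds max corner)


def aabb_in_frustum(planes: Sequence[Plane], bmin: Vec3, bmax: Vec3) -> bool:
    """Conservative AABB test: False only if the box is fully outside some plane."""
    for n, d in planes:
        # Corner of the box farthest along the plane normal (the "positive vertex").
        corner = tuple(bmax[k] if n[k] >= 0 else bmin[k] for k in range(3))
        if sum(n[k] * corner[k] for k in range(3)) + d < 0:
            return False
    return True


def movies_in_view(planes: Sequence[Plane], camera_pos: Vec3,
                   movies: Iterable[Movie], vd: float) -> List[str]:
    """Compute PV: visible movies, excluding those farther than vd."""
    in_view = []
    for movie_id, bmin, bmax in movies:
        center = tuple((bmin[k] + bmax[k]) / 2 for k in range(3))
        if math.dist(camera_pos, center) > vd:
            continue  # beyond vd: may be relocated even if visible
        if aabb_in_frustum(planes, bmin, bmax):
            in_view.append(movie_id)
    return in_view
```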

We use all of the VR optimization techniques described in Sect. 3.2 to ensure that the framework runs smoothly. Specifically, we use GPU instancing for the movie stands (they all share the same material), we compress the movie textures, and we use occlusion culling to avoid rendering any movies or objects that are occluded by other movies or objects. As shown in Fig. 7, we also create triggers for the movies and the movie stands, to be able to deactivate their Grab Interactables and Socket Interactors components when the user’s hands are not inside the triggers.
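In Unity, the triggers would typically rely on `OnTriggerEnter`/`OnTriggerExit` events. The Python sketch below instead polls hand positions each frame against hypothetical spherical trigger zones, to show which interactable and interactor components would be enabled. The trigger shapes, radii, and names are illustrative assumptions.

```python
import math
from dataclasses import dataclass
from typing import Dict, Iterable, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class TriggerZone:
    center: Vec3
    radius: float  # hypothetical spherical trigger around a movie or movie stand


def enabled_components(zones: Dict[str, TriggerZone],
                       hands: Iterable[Vec3]) -> Dict[str, bool]:
    """Map each interactable/interactor ID to whether it should be enabled.

    A component is enabled only while at least one hand is inside its trigger,
    so all other components stay out of the Interaction Manager's update loop.
    """
    hand_list = list(hands)
    return {
        obj_id: any(math.dist(h, zone.center) <= zone.radius for h in hand_list)
        for obj_id, zone in zones.items()
    }
```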

We also run Algorithm 1 independently and sequentially for each movie genre using Unity’s coroutines (i.e., the calculation of \(\textit{rs}_{j}\) is spread across 8 frames, one per genre). This division was enough to keep the frame rate at or above 60 FPS at all times, and spreading the execution across several frames was practically unnoticeable in our tests.
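Unity coroutines are C# iterator methods. Python generators behave in a similar way, so the sketch below uses one to illustrate the per-category execution. Categories are visited from nearest to farthest, and each `yield` marks the end of one frame's share of work. The callback `run_batch`, the `batch_size` parameter, and the category-center positions are hypothetical. If the batch size is at least as large as each genre (48 movies), the eight genres take eight frames, as in our prototype.

```python
import math
from typing import Callable, Dict, Iterator, List, Tuple

Vec3 = Tuple[float, float, float]


def recommendation_coroutine(
    user_pos: Vec3,
    category_centers: Dict[str, Vec3],
    objects_by_category: Dict[str, List[str]],
    run_batch: Callable[[List[str]], None],
    batch_size: int,
) -> Iterator[str]:
    """Generator standing in for a Unity coroutine (hypothetical sketch).

    The caller advances it with next() once per frame, so every batch of
    Algorithm 1 work runs in its own frame.
    """
    # Closest categories first: their relocations are the most noticeable.
    order = sorted(category_centers,
                   key=lambda c: math.dist(user_pos, category_centers[c]))
    for category in order:
        objs = objects_by_category[category]
        for start in range(0, len(objs), batch_size):
            run_batch(objs[start:start + batch_size])  # e.g. compute rs_j for this batch
            yield category  # hand control back until the next frame
```

In a game loop, one would call `next()` on the generator once per frame until it raises `StopIteration`.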

4.2 Product dataset

The metadata for the movies has been extracted from The Movies Dataset available in Kaggle (Banik 2017). This dataset contains metadata for approximately 45,000 movies released on or before July 2017, and is an ensemble of data collected from TMDB (TMDB 2008) and GroupLens (University of Minnesota 1992). It includes information for, among others, the cast, crew, plot keywords, budget, revenue, posters, release dates, languages, production companies, countries, TMDB vote counts and vote averages.

For the VR experience, we have extracted 384 movies from this dataset (48 movies belonging to 8 different movie genres). We have kept movies with high vote counts, as these are expected to be popular and recognizable movies, which helps to evaluate the relevance of the recommendations.

4.3 Recommendation system

As explained in Sect. 3, the recommendation system that we propose can be a fully popularity-based recommender, a hybrid recommender that mixes a popularity-based recommender with a content-based recommender, or a fully content-based recommender.

For popularity-based recommendations, we use TMDB vote averages as popularity scores. When two or more movies have the same vote average, we order them randomly.

For content-based recommendations, we need to calculate \(S_{i,j}\) (pair-object similarity) for all movie pairs, which first requires feature representations of the movies. The dataset that we are using contains several fields that describe the movies. To create the feature representations, we combine the director, the cast (main 3 actors or actresses), a maximum of 10 top keywords, and a maximum of 3 movie genres (a movie can belong to several genres at the same time). An example can be found in Table 2. We use lowercase letters and remove spaces from compound words or names, so that they count as one word (e.g., “James Cameron” is transformed to “jamescameron”). Then, we convert the resulting collection of words into a matrix of token counts using Python scikit-learn’s CountVectorizer. As a result, each movie is represented as a vector whose entries are the counts, in that movie’s feature text, of each word in the vocabulary built from all movies.
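The feature construction above can be sketched in plain Python as follows. `MovieMeta`, `feature_soup`, and `count_matrix` are hypothetical names, and the toy movie data are invented. The keywords are assumed to be already sorted by relevance. `count_matrix` is a simplified stand-in for the bag-of-words counting that CountVectorizer performs. It splits only on whitespace, whereas CountVectorizer's default tokenizer also splits on punctuation and drops one-character tokens. In the actual pipeline, the strings would instead be passed to scikit-learn's `CountVectorizer().fit_transform(...)`.

```python
from collections import Counter
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class MovieMeta:
    director: str
    cast: List[str]
    keywords: List[str]  # assumed already sorted by relevance
    genres: List[str]


def squash(name: str) -> str:
    # Lowercase and drop spaces so compound names form one token:
    # "James Cameron" -> "jamescameron"
    return name.lower().replace(" ", "")


def feature_soup(m: MovieMeta) -> str:
    """Director + top-3 cast + up to 10 keywords + up to 3 genres, as one string."""
    tokens = [squash(m.director)]
    tokens += [squash(a) for a in m.cast[:3]]
    tokens += [squash(k) for k in m.keywords[:10]]
    tokens += [squash(g) for g in m.genres[:3]]
    return " ".join(tokens)


def count_matrix(docs: List[str]) -> Tuple[List[str], List[List[int]]]:
    """Token counts per document over a sorted vocabulary (simplified bag of words)."""
    vocab = sorted({tok for doc in docs for tok in doc.split()})
    rows = []
    for doc in docs:
        counts = Counter(doc.split())
        rows.append([counts[tok] for tok in vocab])
    return vocab, rows
```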

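We compute \(S_{i,j}\) for each movie pair as the cosine similarity (i.e., normalized dot product) of \(\varvec{v_{i}}\) and \(\varvec{v_{j}}\), the vectors that represent movies i and j:

\[ S_{i,j} = \frac{\varvec{v_{i}} \cdot \varvec{v_{j}}}{\lVert \varvec{v_{i}} \rVert \, \lVert \varvec{v_{j}} \rVert} \]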
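For illustration, the all-pairs similarity and the selection of each movie's nr most similar movies can be computed with NumPy as below. The function names are hypothetical, and the paper does not state which routine was used; scikit-learn's `sklearn.metrics.pairwise.cosine_similarity` computes the same matrix. If two movies have identical feature vectors, both have similarity 1, and the stable sort may then list another movie before the movie itself.

```python
import numpy as np


def cosine_similarity_matrix(counts: np.ndarray) -> np.ndarray:
    """S[i, j] = v_i . v_j / (||v_i|| ||v_j||) for every pair of rows."""
    norms = np.linalg.norm(counts, axis=1, keepdims=True)
    norms[norms == 0] = 1.0  # avoid division by zero for empty feature vectors
    unit = counts / norms
    return unit @ unit.T


def top_similar(S: np.ndarray, nr: int) -> list:
    """Indices of the nr most similar movies for each movie (self included)."""
    return [[int(j) for j in np.argsort(-S[i], kind="stable")[:nr]]
            for i in range(S.shape[0])]
```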

Movies can belong to several genres, as shown in Table 2. Nevertheless, we have grouped our 384 movies into 8 different sections, each corresponding to a single movie genre, by considering the first genre listed for each movie in the dataset as its main genre. As introduced in Sect. 3.2, we run Algorithm 1 independently and sequentially for each movie genre. This means that, every time we execute the recommendation system, its inputs O (list of movies) and L (candidate movie locations) are the movies and sockets that belong to a single genre. However, we consider the interactions with all movies when calculating \(WOI_{i}\) (Algorithm 1, line 12), and the input \(S_{i}\) may contain movie pairs belonging to different genres. For example, if the user showed interest in “The Martian” (whose main genre is “drama”), the user may also be interested in having a look at “Interstellar” (whose main genre is “adventure”), because both take place in space and both feature actress Jessica Chastain.

Table 3 shows the top 11 movies most similar to “Harry Potter and the Prisoner of Azkaban” (those with the highest \(S_{i,j}\) values). The most similar movie is the movie itself (i = j in \(S_{i,j}\)), since all its feature values are identical. We do not exclude this self-similarity case from the recommendation system because the user may be interacting with a set of movies M while being undecided about which one to acquire. Keeping self-similarity increases the recommendation score of all movies in M in Algorithm 1, line 14. As a result, the movies in M “follow the user around” every time the movies are reallocated through Algorithm 1, unless the user is looking at them, acquiring them, or grabbing them (because of the condition in Algorithm 1, line 27).

As expected, the next most similar movies are the other Harry Potter movies, since they share part of the cast, the director (in some cases), some of the keywords, etc. After the Harry Potter movies, the next ones belong to similar genres and share some keywords.

Table 4 shows another example with the top 11 most similar movies to “Mulan”. All of them are animation films, most of them also musical films produced by Walt Disney. The top ones feature other Disney princesses as well.

4.4 Integration of the components

As depicted in Fig. 1, we designed the VR environment, recommendation system and product data as decoupled components linked to each other, to be able to modify them individually.

Specifically, the movie metadata is read from an external file (in a real enterprise deployment, it could be read from a database). At the start of the experience, no movie is placed in the VR environment yet. The initial placement occurs when Algorithm 1 is executed at the beginning of the visit, placing the movies using the popularity-based logic of the recommendation system (when no previous information is available for the user) or the hybrid logic (when previous information is available for the user). This makes it possible to provide a personalized initial state of the virtual world for users for whom we have interaction data. It also makes it possible to change the list of movies that appear in the VR environment simply by modifying the movie metadata read from the external file.

In the same way, input \(S_{i}\) of Algorithm 1 is read from an external file, and is calculated using Python with the methodology explained in Sect. 4.3. This makes it possible to update the recommendation system with the new movies that are added to the movie dataset, or tweak the logic to calculate the movie pair similarity values without affecting the VR environment.
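The decoupling described above only requires the Python side to write the similarity lists in a format that the Unity side can parse. The paper does not describe the file format. The JSON schema below (movie ID mapped to a list of `id`/`similarity` entries) and the function name are therefore hypothetical.

```python
import json
from typing import Dict, List, Tuple


def similarities_to_json(top: Dict[str, List[Tuple[str, float]]]) -> str:
    """Serialize each movie's nr most similar movies with their S_{i,j} values.

    The schema is hypothetical; the VR side would parse the same structure.
    """
    payload = {
        movie_id: [{"id": other, "similarity": round(s, 4)} for other, s in pairs]
        for movie_id, pairs in top.items()
    }
    return json.dumps(payload, indent=2, sort_keys=True)
```

The returned string can then be written to the external file that the VR environment reads at start-up.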

4.5 Variable value settings

VR-EAR behaves differently according to the values that we set for some variables. Table 5 contains the values that we have chosen for the prototype virtual environment. We now proceed to explain the different variables in detail.

(a) ft: this variable is used to calculate \(\textit{NF}_{ft,i}\). According to Microsoft (2015), the average human attention span is 8 s. As the abundance of sensory information in VR environments can lead to a heightened risk of distraction compared with traditional media or digital interfaces (LaViola et al. 2017), we empirically chose the slightly lower value of 5 s for ft.

(b) fd: a distance threshold that we use to compute \(\textit{NF}_{ft,i}\). While the movie that the user is looking at is farther from the user’s camera than this threshold, we do not count time towards \(\textit{NF}_{ft,i}\). This is because, when testing the framework with the Meta Quest 2, we found it hard to comfortably discern details on a movie cover beyond this distance. This parameter therefore ensures that \(\textit{NF}_{ft,i}\) is only updated when users can reasonably be expected to gather visual information from the movie objects.

(c) vd: a distance threshold that we use to compute PV (the list of movies in the user’s view). PV is used in Algorithm 1 to avoid relocating movies that the user can currently see, and therefore to avoid causing disorientation or missed interactions. However, restricting candidate locations through PV reduces our ability to personalize the VR environment according to the user’s preferences. We use vd to relax this restriction: movies farther than vd from the user are not included in PV, even if they are visible. We empirically set vd to 4 m, since product movements occurring beyond this distance were hardly noticeable when testing the framework with the Meta Quest 2. This variable balances the need for a dynamic, personalized virtual environment with the need to maintain user orientation and comfort.

(d) WF: the weight that we use for \(\textit{NF}_{ft,i}\) in (1). When measuring the user’s preferences, looking at a movie for a given amount of time is less informative than grabbing or buying it, so we have chosen the lowest weight for this interaction type. By assigning the lowest weight to fixations, the framework acknowledges their importance in capturing attentional focus (David-John et al. 2021), but distinguishes them from more definitive actions.

(e) WG: the weight that we use for \(\textit{NG}_{i}\) in (1). Grabbing a movie shows a potential interest for that particular movie that is stronger than just looking at it, so we apply a weight that is higher than WF. This is aligned with previous research showing that interactive actions require a high degree of cognitive processing and decision-making, which are indicative of interest and engagement with the content (Dede et al. 2017).

(f) WA: the weight that we use for \(\textit{NA}_{i}\) in (1). Placing an item in a shopping basket is a strong indicator of user interest in any e-commerce application, so we apply the highest weight for this interaction type.

(g) \(P_{min}\), \(P_{max}\): every time a period P elapses, we re-execute the recommendation system with the updated interaction metric values. The value that we choose for P varies for every re-execution, but we always choose \(P_{min} \le P \le P_{max}\).

(h) nr: the number of most similar movies that we consider to compute input \(\textit{S}_{i}\) in Algorithm 1. The most similar movie to each movie is always itself, since all feature values are the same, as shown in Tables 3 and 4. With a value of 5, we therefore include the top 4 other most similar movies. We show in Sect. 4.6 that this value yields appropriate recommendation results.
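Equation (1) is not reproduced in this section. The weight names suggest a weighted sum of the three interaction counts, and the sketch below assumes exactly that linear form. The numeric weights are placeholders; only the ordering WF < WG < WA comes from the text. The class and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Interactions:
    nf: int  # NF_{ft,i}: fixations on movie i
    ng: int  # NG_i: grabs of movie i
    na: int  # NA_i: acquisitions (drops into the basket) of movie i


# Placeholder weights; only the ordering WF < WG < WA is taken from the text.
WF, WG, WA = 1.0, 2.0, 3.0


def weighted_interest(x: Interactions) -> float:
    """Assumed linear form of Eq. (1): WF*NF + WG*NG + WA*NA."""
    return WF * x.nf + WG * x.ng + WA * x.na
```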

4.6 Results

In this section, we first evaluate the performance of the recommendation system as a standalone component. Subsequently, we demonstrate its successful integration into the VR environment with an illustrative example.

As mentioned in Sect. 4.2, our product dataset is a subset of The Movies Dataset, which contains approximately 26 million ratings from 270,000 users for 45,000 movies. Given its large scale, we use this dataset to evaluate the performance of the recommendation system as a standalone component. We focus on evaluating the content-based recommendations, as these are the ones that are personalized for individual users.

Precision@k and Recall@k are two of the most widely used metrics to evaluate the quality and coverage of recommendation systems (Shani and Gunawardana 2011; Isinkaye et al. 2015), where k refers to the number of items that are recommended to a user when a recommendation is triggered.

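These metrics are defined in terms of recommended items and relevant items. Recommended items (in this case, movies) are those that the recommendation system suggests to a particular user. Relevant items are those that are actually selected (in this case, acquired) by that user. Following Isinkaye et al. (2015), the metrics are calculated as:

\[ \text{Precision@}k = \frac{|\{\text{top-}k\ \text{recommended items}\} \cap \{\text{relevant items}\}|}{k}, \qquad \text{Recall@}k = \frac{|\{\text{top-}k\ \text{recommended items}\} \cap \{\text{relevant items}\}|}{|\{\text{relevant items}\}|} \]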
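The two per-user metrics and their average over users can be computed as in the Python sketch below. It assumes each user's recommendation list is already ranked and already excludes self-similarity cases. The function names are hypothetical.

```python
from statistics import mean
from typing import Dict, Sequence, Set, Tuple


def precision_at_k(recommended: Sequence[str], relevant: Set[str], k: int) -> float:
    hits = sum(1 for item in recommended[:k] if item in relevant)
    return hits / k


def recall_at_k(recommended: Sequence[str], relevant: Set[str], k: int) -> float:
    if not relevant:
        return 0.0
    hits = sum(1 for item in recommended[:k] if item in relevant)
    return hits / len(relevant)


def average_metrics(recs: Dict[str, Sequence[str]],
                    rels: Dict[str, Set[str]], k: int) -> Tuple[float, float]:
    """Average user Precision@k and Recall@k over all users in recs."""
    users = list(recs)
    return (mean(precision_at_k(recs[u], rels[u], k) for u in users),
            mean(recall_at_k(recs[u], rels[u], k) for u in users))
```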

Since our dataset contains user ratings for movies, we can infer that users have “acquired” these movies at some point, and therefore all the movies that a user has rated would have been relevant recommendations for that user. We could restrict relevance to the most highly rated movies, but from the point of view of our framework, a recommendation is relevant if it raises enough interest for the user to acquire the product and later consume it.

In our case, k is linked to variable nr in Sect. 4.5. As explained in Sect. 4.3, we have not excluded self-similarity cases (i = j in \(S_{i,j}\)) from the recommendation system. Nevertheless, we do not include self-similarity cases in the calculation of Precision@k and Recall@k, since for this calculation, we are using a dataset with movies that users have rated to recommend movies similar to those. If we included the self-similarity cases, the values of Precision@k and Recall@k would artificially increase, because it would always be the case that we would recommend a relevant movie for the self-similarity cases. Therefore, for the calculation of Precision@k and Recall@k, \({k} = {nr} -1\) because of the exclusion of self-similarity cases.

While Precision@k focuses on measuring the quality of the recommendations, Recall@k focuses on measuring the coverage of the recommendations. These are two different objectives that often exhibit an inverse relationship, meaning that as one metric increases, the other tends to decrease (Avazpour et al. 2014). Achieving high precision often involves recommending a smaller, more targeted set of items that are highly likely to be relevant to the user. Achieving high coverage often involves recommending more items, including those that may not be as relevant to every user. Therefore, we iterate with different values of k (particularly, with \( k \in \{1, \ldots , 10\} \)) to find a value of k that offers an appropriate balance between the quality and the coverage of the recommendations.

From the ratings dataset, we only consider rating records for the 384 movies included in our product dataset (see Sect. 4.2) when calculating Precision@k and Recall@k, as those 384 movies are the only ones leveraged in our recommendations. We also exclude users who have rated fewer than 10 (the maximum value that we consider for k) of the 384 movies, since Precision@k could never reach its maximum value of 1.0 for these users (the number of relevant items would always be less than \(k = 10\)). This leaves us with 112,122 users.

Figure 8 shows the obtained average user Precision@k–Recall@k curve for \( k \in \{1, \ldots , 10\} \) with the data of the 112,122 users. As expected, the two metrics exhibit an inverse relationship. We can observe that with k = 4, we obtain the best balance between these two objectives, and this is why we set \({nr}={k}+1=5\) in Sect. 4.5 when considering self-similarity cases. Our chosen value of k = 4 prioritizes precision over recall. This is aligned with our system’s purpose, as a focus on precision directly supports enhancing the user experience by minimizing the risk of presenting irrelevant options.

With k = 4, we achieve an average user Precision@4 of 0.1556 and an average user Recall@4 of 0.0199. This means that, on average, 15.56% of the movies recommended by the system are relevant to the user, and the recommendations cover 1.99% of all the movies that are relevant to the user.

Figure 9 shows the distribution of Precision@4 and Recall@4 for the 112,122 users. Both metrics vary greatly across users, which underscores the diverse range of user preferences and behaviors. By incorporating implicit feedback from users within the VR environment into our recommendation system, we aim to address this diversity. This method allows us to tailor recommendations more closely to individual user preferences based on their interactions within the VR setting, thereby potentially improving the performance of the overall system.

We now demonstrate the successful integration of the recommendation system into the VR environment with an illustrative example. Figure 10a shows the moment in which the user grabs “Harry Potter and the Prisoner of Azkaban” during a visit in which, for the sake of this example, he has not interacted with any other movie. When our framework is executed, it detects that the user is interested in the grabbed movie, and the movies most similar to it (see Table 3) are automatically placed closer to him, as shown in Fig. 10b.

This example shows that our framework is capable of adapting the VR environment according to the measured users’ interactions, and products that they could reasonably be interested in are automatically located closer to them, facilitating their discovery.

As our design considers the recommendation system as a decoupled component, the recommendation results can be easily tuned to facilitate future potential recommendation improvements.

Fig. 10 (a) The user grabs “Harry Potter and the Prisoner of Azkaban”. (b) Our framework reallocates the most similar movies close to the user. The numbers in the image are the ranks of the most similar movies (see Table 3). In this example, we have set nr = 11 to show more recommendations.

5 Conclusions

Personalized user experiences enable applications to not only differentiate themselves, but also to gain a sustainable competitive advantage. Applications that excel at delivering personalized experiences can generally expect loyal customers, increased revenue, and a boost to their bottom line.

The personalization of user experiences through recommendation systems has been extensively explored in Internet applications, but this has yet to be fully addressed in VR environments. The complexity of managing 3D geometric data, computational load, and natural interactions poses significant challenges in real-time adaptation in these immersive environments.

In this work, we have introduced VR-EAR, a framework that models the user’s preferences through a novel methodology for measuring implicit user feedback in VR. We leverage the users’ interactions in the VR environment, such as the products that they look at, grab, or acquire, to understand their preferences. These interactions are taken into account to reallocate customizable VR objects in real time, placing the objects most likely to be relevant to the users closer to them.

We have proposed a general framework design that can be tuned to different types of VR environments. By leveraging different VR optimization techniques, our design is also capable of running smoothly in real time across different virtual world scales and product sizes. As a case study of this general design, we have implemented it in the form of a VR movie rental shop.

Throughout our research, we encountered several challenges. Firstly, addressing user diversity posed a significant challenge, as users have diverse interaction mental models and preferences. Balancing computational costs while maintaining a seamless VR experience was another challenge, which we mitigated through optimization techniques, particularly for executing the framework in HMDs. Additionally, modeling implicit user feedback in VR environments proved challenging, as traditional Internet e-commerce implicit feedback metrics, such as click-through rate, browsing history, bookmarking, scroll depth, etc., do not directly translate to VR. Developing accurate metrics to capture user attention and interest in VR was crucial for effective personalization. Lastly, evaluating the effectiveness of the VR-EAR framework required building a VR environment that accurately reflected the complexities of real-world e-commerce scenarios.

Based on our evaluation results, which test the adaptation of the VR environment according to the measured user interactions, we conclude that our framework is capable of effectively adapting the environment and making relevant, non-intrusive object recommendations that are coherent with the measured interactions.

Our framework makes it easier for relevant products to be discovered. This is beneficial to both customers and businesses, since it can potentially lead to a better user experience in terms of choice satisfaction and perceived framework effectiveness, as well as increased revenue in the case of e-commerce applications.

The VR-EAR framework, while innovative, has certain limitations. It primarily relies on the computational power available to process real-time data and adapt the VR environment accordingly. The complexity of managing 3D geometric data and ensuring smooth user interactions poses challenges, especially when scaling to larger virtual environments or integrating more complex user feedback mechanisms. Despite these constraints, VR-EAR demonstrates potential in enhancing e-commerce experiences by personalizing VR environments.

As future work, we aim to focus on optimizing computational efficiency and to explore various avenues to enhance the capabilities of our framework. Firstly, we intend to investigate alternative filtering algorithms, such as collaborative filtering, which may yield superior performance, especially when dealing with large datasets and user populations. Furthermore, we seek to enhance user interaction within VR environments by exploring advanced techniques such as hand tracking, body gestures, and voice commands. These approaches can facilitate more natural and intuitive interactions, further enhancing the user experience. Moreover, we aim to investigate the integration of multi-modal feedback, combining different sensory inputs like haptic, auditory, visual, and olfactory stimuli. This integration could lead to more immersive and effective personalized experiences, catering to a wider range of user preferences and needs. Lastly, we are interested in exploring the integration of our framework into collaborative virtual environments, such as our immersive data visualisation environment (Morales-Vega et al. 2024). By supporting multi-user interactions and co-presence, our framework could enable collaborative experiences while accommodating individual preferences, fostering teamwork, and enhancing overall engagement.

Data availability

The dataset analysed during the current study is a subset of The Movies Dataset, available on Kaggle at https://www.kaggle.com/datasets/rounakbanik/the-movies-dataset, which combines data collected from TMDB (https://www.themoviedb.org/) and GroupLens (https://grouplens.org/).

References

Adhanom IB, MacNeilage P, Folmer E (2023) Eye tracking in virtual reality: a broad review of applications and challenges. Virtual Real 27(2):1481–1505

Aggarwal C (2016) Recommender systems: the textbook. Springer

Al-Jundi H, Tanbour E (2022) A framework for fidelity evaluation of immersive virtual reality systems. Virtual Real 26:1–20. https://doi.org/10.1007/s10055-021-00618-y

Ashtari N, Bunt A, McGrenere J, Nebeling M, Chilana PK (2020) Creating augmented and virtual reality applications: current practices, challenges, and opportunities. In: Proceedings of the 2020 CHI conference on human factors in computing systems. Association for Computing Machinery, New York. https://doi.org/10.1145/3313831.3376722

Augustine P (2020) The industry use cases for the digital twin idea. In: Raj P, Evangeline R (eds) The digital twin paradigm for smarter systems and environments: the industry use cases. Advances in computers, vol 117. Elsevier, pp 79–105. https://doi.org/10.1016/bs.adcom.2019.10.008

Avazpour I, Pitakrat T, Grunske L, Grundy J (2014) Dimensions and metrics for evaluating recommendation systems. In: Recommendation systems in software engineering. Springer, pp 245–273

Balakrishnan V, Ahmadi K, Ravana SD (2016) Improving retrieval relevance using users’ explicit feedback. Aslib J Inf Manag 68(1):76–98

Banik R (2017) The movies dataset. Kaggle. https://www.kaggle.com/datasets/rounakbanik/the-movies-dataset. (Accessed: 2022-12-27)

Çano E, Morisio M (2017) Hybrid recommender systems: a systematic literature review. Intell Data Anal 21(6):1487–1524

Chaudhary S, Anupama C (2020) Recommendation system for big data software using popularity model and collaborative filtering. In: Artificial intelligence and evolutionary computations in engineering systems, pp 551–559

Contreras D, Salamó M, Rodríguez I, Puig A (2018) Shopping decisions made in a virtual world: defining a state-based model of collaborative and conversational user-recommender interactions. IEEE Consum Electron Mag 7(4):26–35

David-John B, Peacock C, Zhang T, Murdison TS, Benko H, Jonker TR (2021) Towards gaze-based prediction of the intent to interact in virtual reality. In: ACM symposium on eye tracking research and applications, pp 1–7

Dede CJ, Jacobson J, Richards J (2017) Introduction: virtual, augmented, and mixed realities in education. Springer

Fan Z, Ou D, Gu Y, Fu B, Li X, Bao W, Liu Q (2022) Modeling users’ contextualized page-wise feedback for click-through rate prediction in e-commerce search. In: Proceedings of the fifteenth ACM international conference on web search and data mining, pp 262–270

Guo G, Elgendi M (2013) A new recommender system for 3D e-commerce: an EEG based approach. J Adv Manag Sci 1(1):61–65

Huang J, Zhang H, Lu H, Yu X, Li S (2022) A novel position-based VR online shopping recommendation system based on optimized collaborative filtering algorithm. arXiv preprint arXiv:2206.15021

Isinkaye FO, Folajimi YO, Ojokoh BA (2015) Recommendation systems: principles, methods and evaluation. Egypt Inform J 16(3):261–273

Jawaheer G, Weller P, Kostkova P (2014) Modeling user preferences in recommender systems: a classification framework for explicit and implicit user feedback. ACM Trans Interact Intell Syst (TiiS) 4(2):1–26

Khatri J, Marín-Morales J, Moghaddasi M, Guixeres J, Giglioli IAC, Alcañiz M (2022) Recognizing personality traits using consumer behavior patterns in a virtual retail store. Front Psychol 13:752073

Kritikos J, Alevizopoulos G, Koutsouris D (2021) Personalized virtual reality human–computer interaction for psychiatric and neurological illnesses: a dynamically adaptive virtual reality environment that changes according to real-time feedback from electrophysiological signal responses. Front Hum Neurosci 15:596980

Lau KW, Lee PY (2019) Shopping in virtual reality: a study on consumers’ shopping experience in a stereoscopic virtual reality. Virtual Real 23(3):255–268

LaViola Jr JJ, Kruijff E, McMahan RP, Bowman D, Poupyrev IP (2017) 3D user interfaces: theory and practice. Addison-Wesley Professional

Lika B, Kolomvatsos K, Hadjiefthymiades S (2014) Facing the cold start problem in recommender systems. Expert Syst Appl 41(4):2065–2073

Mayor J, Raya L, Bayona S, Sanchez A (2022) Multi-technique redirected walking method. IEEE Trans Emerg Top Comput 10(2):997–1008. https://doi.org/10.1109/TETC.2021.3062285

Mayor J, Raya L, Sanchez A (2021) A comparative study of virtual reality methods of interaction and locomotion based on presence, cybersickness, and usability. IEEE Trans Emerg Top Comput 9(3):1542–1553. https://doi.org/10.1109/TETC.2019.2915287

Microsoft (2015) Attention spans. Microsoft Canada, Spring

Morales-Vega J, Raya L, Sánchez-Rubio M, Sanchez A (2024) A virtual reality data visualization tool for dimensionality reduction methods. Virtual Real. https://doi.org/10.1007/s10055-024-00939-8

Nguyen TK, Hsu P-F (2022) More personalized, more useful? Reinvestigating recommendation mechanisms in e-commerce. Int J Electron Commer 26(1):90–122. https://doi.org/10.1080/10864415.2021.2010006

Pfeiffer J, Pfeiffer T, Meißner M, Weiß E (2020) Eye-tracking-based classification of information search behavior using machine learning: evidence from experiments in physical shops and virtual reality shopping environments. Inf Syst Res 31(3):675–691

Pietroszek K (2018) Raycasting in virtual reality. In: Encyclopedia of computer graphics and games. Springer

Quintero L (2023) User modeling for adaptive virtual reality experiences: personalization from behavioral and physiological time series (Unpublished doctoral dissertation). Stockholm University, Department of Computer and Systems Sciences

Rane N, Choudhary S, Rane J (2023) Metaverse marketing strategies: enhancing customer experience and analysing consumer behaviour through leading-edge metaverse technologies, platforms, and models. SSRN Electron J. https://doi.org/10.2139/ssrn.4624199

Shani G, Gunawardana A (2011) Evaluating recommendation systems. In: Recommender systems handbook. Springer, pp 257–297

Shravani D, Prajwal Y, Atreyas PV, Shobha G (2021) VR supermarket: a virtual reality online shopping platform with a dynamic recommendation system. In: 2021 IEEE international conference on artificial intelligence and virtual reality (AIVR), pp 119–123

Sra M, Pattanaik SN (2023) Enhancing the sense of presence in virtual reality. IEEE Comput Gr Appl 43(4):90–96

Tasnim U, Islam R, Desai K, Quarles J (2024) Investigating personalization techniques for improved cybersickness prediction in virtual reality environments. IEEE Trans Vis Comput Gr. https://doi.org/10.1109/TVCG.2024.3372122

Thorat PB, Goudar RM, Barve S (2015) Survey on collaborative filtering, content-based filtering and hybrid recommendation system. Int J Comput Appl 110(4):31–36

TMDB (2008) The movie database. https://www.themoviedb.org/. (Accessed: 2022-12-27)

Unity (2022) GeometryUtility.TestPlanesAABB. https://docs.unity3d.com/ScriptReference/GeometryUtility.TestPlanesAABB.html. (Accessed: 2022-12-27)

University of Minnesota (1992) GroupLens. https://grouplens.org/. (Accessed: 2022-12-27)

Weber S, Weibel D, Mast FW (2021) How to get there when you are there already? Defining presence in virtual reality and the importance of perceived realism. Front Psychol 12:628298

Xiao Z, Mennicken S, Huber B, Shonkoff A, Thom J (2021) Let me ask you this: How can a voice assistant elicit explicit user feedback? Proc ACM Hum–Comput Interact 5(CSCW2):1–24

Zhao Q, Harper FM, Adomavicius G, Konstan JA (2018) Explicit or implicit feedback? Engagement or satisfaction? A field experiment on machine-learning-based recommender systems. In: Proceedings of the 33rd annual ACM symposium on applied computing, pp 1331–1340

Funding

This research was funded by the Spanish Research Agency, Grant Number PID2021-122392OB-I00.

Author information

Contributions

SV: Writing—Original Draft, Methodology, Software, Investigation, Formal analysis; LR: Conceptualization, Writing—Reviewing and Editing, Investigation, Validation; AS: Conceptualization, Writing—Reviewing and Editing, Formal analysis, Supervision, Funding acquisition

Ethics declarations

Conflict of interest

The authors have no conflicts of interest relevant to the content of this article to declare. This study did not involve any human participants.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Supplementary file 5 (MP4 135 kb)

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Valmorisco, S., Raya, L. & Sanchez, A. Enabling personalized VR experiences: a framework for real-time adaptation and recommendations in VR environments. Virtual Reality 28, 128 (2024). https://doi.org/10.1007/s10055-024-01020-0


DOI: https://doi.org/10.1007/s10055-024-01020-0