Abstract

Handwriting with digital pens is a common way to facilitate human–computer interaction through the use of online handwriting (OH) trajectory reconstruction. In this work, we focus on a digital pen equipped with sensors, from whose signals we aim to reconstruct the OH trajectory. Such a pen allows one to write on any surface while obtaining the digital trace. This can help with learning to write on paper and is useful for many other applications, such as collaborative meetings. In this paper, we introduce a novel processing pipeline that maps the sensor signals of the pen to the corresponding OH trajectory. Notably, to handle the difference in sampling rates between the pen and the tablet (which provides the ground truth), our preprocessing pipeline relies on Dynamic Time Warping (DTW) to align the signals. We introduce a dedicated neural network architecture, inspired by the Temporal Convolutional Network (TCN), to reconstruct the online trajectory from the pen sensor signals. Finally, we present a new benchmark dataset on which our method is evaluated both qualitatively and quantitatively, showing a clear improvement over its main competitor.

Notes

Available free of charge to the research community on request, for research purposes only.

References

Azimi, H., Chang, S., Gold, J., et al.: Improving accuracy and explainability of online handwriting recognition. arXiv preprint arXiv:2209.09102 (2022)

Bai, S., Kolter, J.Z., Koltun, V.: An empirical evaluation of generic convolutional and recurrent networks for sequence modeling. arXiv preprint arXiv:1803.01271 (2018)

Bu, Y., Xie, L., Yin, Y., et al.: Handwriting-assistant: reconstructing continuous strokes with millimeter-level accuracy via attachable inertial sensors. Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies (2021)

Chen, Z., Yang, D., Liang, J., et al.: Complex handwriting trajectory recovery: evaluation metrics and algorithm. In: Proceedings of the Asian Conference on Computer Vision (ACCV), pp. 1060–1076 (2022)

Dai, R., Xu, S., Gu, Q., et al.: Hybrid spatio-temporal graph convolutional network: improving traffic prediction with navigation data. In: Proceedings of the 26th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining (2020)

Derrode, S., Li, H., Benyoussef, L.: Unsupervised pedestrian trajectory reconstruction from IMU sensors. In: TAIMA 2018: Traitement et Analyse de l’Information Méthodes et Applications, Hammamet, Tunisia (2018)

Gopali, S., Abri, F., Siami-Namini, S., et al.: A comparative study of detecting anomalies in time series data using LSTM and TCN models. arXiv preprint arXiv:2112.09293 (2021)

Guirguis, K., Schorn, C., Guntoro, A., et al.: SELD-TCN: sound event localization & detection via temporal convolutional networks. In: 2020 28th European Signal Processing Conference (EUSIPCO). IEEE (2021)

Har-Peled, S., et al.: New similarity measures between polylines with applications to morphing and polygon sweeping. Discrete Comput. Geom. 28, 535–569 (2002)

Huang, H., Yang, D., Dai, G., et al.: AGTGAN: unpaired image translation for photographic ancient character generation. In: Proceedings of the 30th ACM International Conference on Multimedia, pp. 5456–5467 (2022)

Klaß, A., Lorenz, S.M., Lauer-Schmaltz, M., et al.: Uncertainty-aware evaluation of time-series classification for online handwriting recognition with domain shift. arXiv preprint arXiv:2206.08640 (2022)

Kreß, F., Serdyuk, A., Hotfilter, T., et al.: Hardware-aware workload distribution for AI-based online handwriting recognition in a sensor pen. In: 2022 11th Mediterranean Conference on Embedded Computing (MECO), IEEE, pp. 1–4 (2022)

Krichen, O., Corbillé, S., Anquetil, É., et al.: Combination of explicit segmentation with seq2seq recognition for fine analysis of children handwriting. International Journal on Document Analysis and Recognition (IJDAR) (2022)

Liu, Y., Huang, K., Song, X., et al.: MagHacker: eavesdropping on stylus pen writing via magnetic sensing from commodity mobile devices. In: Proceedings of the 18th International Conference on Mobile Systems, Applications, and Services (MobiSys ’20), Association for Computing Machinery, New York, NY, USA (2020)

McIntosh, J., Marzo, A., Fraser, M.: SensIR: detecting hand gestures with a wearable bracelet using infrared transmission and reflection. In: Proceedings of the 30th Annual ACM Symposium on User Interface Software and Technology, New York, NY, USA (2017)

Mustafid, A., Younas, J., Lukowicz, P., et al.: IAMonSense: multi-level handwriting classification using spatio-temporal information (2022)

Nan, M., Trăscău, M., Florea, A.M., et al.: Comparison between recurrent networks and temporal convolutional networks approaches for skeleton-based action recognition. Sensors 21(6), 2051 (2021)

Nguyen, H.T., Nakamura, T., Nguyen, C.T., et al.: Online trajectory recovery from offline handwritten Japanese kanji characters of multiple strokes. In: 2020 25th International Conference on Pattern Recognition (ICPR), IEEE, pp. 8320–8327 (2021)

Ott, F., Rügamer, D., Heublein, L., et al.: Benchmarking online sequence-to-sequence and character-based handwriting recognition from IMU-enhanced pens. arXiv preprint arXiv:2202.07036 (2022)

Ott, F., Rügamer, D., Heublein, L., et al.: Domain adaptation for time-series classification to mitigate covariate shift. In: Proceedings of the 30th ACM International Conference on Multimedia, pp. 5934–5943 (2022)

Ott, F., Rügamer, D., Heublein, L., et al.: Joint classification and trajectory regression of online handwriting using a multi-task learning approach. In: Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, pp. 266–276 (2022)

Ott, F., Wehbi, M., Hamann, T., et al.: The OnHW dataset: online handwriting recognition from IMU-enhanced ballpoint pens with machine learning. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 4(3), 1–20 (2020)

Pan, T.-Y., Kuo, C.-H., Hu, M.-C.: A noise reduction method for IMU and its application on handwriting trajectory reconstruction. In: 2016 IEEE International Conference on Multimedia & Expo Workshops (ICMEW), pp. 1–6 (2016)

Pan, T.-Y., Kuo, C.-H., Liu, H.-T., et al.: Handwriting trajectory reconstruction using low-cost IMU. IEEE Trans. Emerg. Top. Comput. Intell. 3(3), 261–270 (2018)

Sakoe, H., Chiba, S.: Dynamic programming algorithm optimization for spoken word recognition. IEEE Trans. Acoust. Speech Signal Process. 26(1), 43–49 (1978)

Simonnet, D., Girard, N., Anquetil, E., et al.: Evaluation of children cursive handwritten words for e-education. Pattern Recognit. Lett. 121, 133–139 (2019)

Wegmeth, L., Hoelzemann, A., Van Laerhoven, K.: Detecting handwritten mathematical terms with sensor based data. arXiv preprint arXiv:2109.05594 (2021)

Wehbi, M., Hamann, T., Barth, J., et al.: Towards an IMU-based pen online handwriting recognizer. In: International Conference on Document Analysis and Recognition, pp. 289–303. Springer (2021)

Wehbi, M., Luge, D., Hamann, T., et al.: Surface-free multi-stroke trajectory reconstruction and word recognition using an IMU-enhanced digital pen. Sensors 22(14), 5347 (2022)

Wilhelm, M., Krakowczyk, D., Albayrak, S.: PeriSense: ring-based multi-finger gesture interaction utilizing capacitive proximity sensing. Sensors 20(14), 3990 (2020)

Yan, J., Mu, L., Wang, L., et al.: Temporal convolutional networks for the advance prediction of ENSO. Scientific Reports (2020)

Acknowledgements

This project is funded by the French-German bilateral KIHT project ANR-21-FAI2-0007-01 and its four partners: IRISA, KIT, Learn & Go and Stabilo. This work was performed using HPC resources from GENCI-IDRIS (Grant No. 2021-AD011013148).

Author information

Contributions

WS and FI wrote the main manuscript text and prepared figures. All authors reviewed the manuscript.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix

1.1 DTW alignment

The DTW algorithm [25] uses dynamic programming to assess the similarity between time series. For two multivariate time series \(x \in \mathbb {R}^{T_x \times z}\) and \(y \in \mathbb {R}^{T_y \times z}\) of equal feature dimensionality z and respective lengths \(T_x\) and \(T_y\), DTW is defined as follows:

\[DTW(x, y) = \min_{\pi \in E(x, y)} \sum_{(i, j) \in \pi} d(x_i, y_j)\]

where E(x, y) represents the set of all admissible alignments between x and y and d is a distance metric in \(\mathbb {R}^z\). Commonly, the squared Euclidean distance \(d(x_i, y_j) = \Vert x_i -y_j\Vert ^2\) is used.

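An alignment is a sequence of pairs of timestamps. It is admissible if (i) it matches the first indices of x and y together, and likewise the last indices, (ii) it is monotonically increasing, and (iii) it connects the two time series, i.e. every index of each series is matched to at least one index of the other. Using dynamic programming, the optimal admissible alignment path can be computed with the following recurrence:

\[DTW(x_{\rightarrow i}, y_{\rightarrow j}) = d(x_i, y_j) + \min \left\{ DTW(x_{\rightarrow i-1}, y_{\rightarrow j}),\; DTW(x_{\rightarrow i}, y_{\rightarrow j-1}),\; DTW(x_{\rightarrow i-1}, y_{\rightarrow j-1}) \right\}\]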

where \(x_{\rightarrow i}\) denotes time series x observed up to timestamp i.
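The recurrence above fills a cost table cell by cell. The following is a minimal pure-Python sketch of that standard DTW cost. It assumes the squared Euclidean distance and time series given as lists of equal-length feature vectors. The names `dtw` and `sq_euclidean` are illustrative and are not taken from the authors' code.

```python
import math
from typing import Callable, Sequence

Vector = Sequence[float]


def sq_euclidean(a: Vector, b: Vector) -> float:
    """Squared Euclidean distance d(x_i, y_j) = ||x_i - y_j||^2."""
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b))


def dtw(x: Sequence[Vector], y: Sequence[Vector],
        d: Callable[[Vector, Vector], float] = sq_euclidean) -> float:
    """Classic DTW cost using the three-predecessor recurrence."""
    tx, ty = len(x), len(y)
    # cost[i][j] holds DTW(x_{->i}, y_{->j}); row 0 and column 0 are boundary cells.
    cost = [[math.inf] * (ty + 1) for _ in range(tx + 1)]
    cost[0][0] = 0.0
    for i in range(1, tx + 1):
        for j in range(1, ty + 1):
            best_prev = min(cost[i - 1][j],      # DTW(x_{->i-1}, y_{->j})
                            cost[i][j - 1],      # DTW(x_{->i},   y_{->j-1})
                            cost[i - 1][j - 1])  # DTW(x_{->i-1}, y_{->j-1})
            cost[i][j] = d(x[i - 1], y[j - 1]) + best_prev
    return cost[tx][ty]
```

For example, `dtw([[0.0], [1.0], [2.0]], [[0.0], [2.0]])` returns `1.0`. The middle point of x is matched to one of the points of y at a squared cost of 1.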

Applied to the timestamps, standard DTW links every sensor signal point to at least one tablet signal point, and vice versa. As a result, several points of one signal may be associated with a single point of the other. In our case, we do not allow several points of the tablet signal to be associated with a single point of the sensor signal. In other words, taking x as the sensor signal and y as the tablet signal, the predecessor \(DTW(x_{\rightarrow i}, y_{\rightarrow j-1})\) is removed from the previous recurrence formula, so that each sensor point is matched to exactly one tablet point. Following the resulting alignment path, each timestamp of the sensor signal is aligned with its corresponding timestamp of the tablet signal.
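The restricted variant can be sketched as follows. This is a minimal sketch under stated assumptions, not the authors' implementation:

- x is the sensor signal and y is the tablet signal.
- Timestamps are passed as one-dimensional vectors.
- x has at least as many points as y. Without the \((i, j-1)\) move, every step advances in x, so no admissible path exists otherwise.

The function name `restricted_dtw_path` is hypothetical.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def restricted_dtw_path(x: Sequence[Vector], y: Sequence[Vector]) -> List[int]:
    """DTW without the (i, j-1) predecessor.

    Every step advances in x, so each x[i] is matched to exactly one y[j].
    Returns match[i] = index of the y point aligned with x[i].
    """
    tx, ty = len(x), len(y)
    if tx < ty:
        raise ValueError("restricted DTW needs len(x) >= len(y)")
    cost = [[math.inf] * (ty + 1) for _ in range(tx + 1)]
    cost[0][0] = 0.0
    for i in range(1, tx + 1):
        for j in range(1, ty + 1):
            dist = sum((a - b) ** 2 for a, b in zip(x[i - 1], y[j - 1]))
            # Only two predecessors remain: (i-1, j) and (i-1, j-1).
            cost[i][j] = dist + min(cost[i - 1][j], cost[i - 1][j - 1])
    # Backtrack from (tx, ty) to recover the y index used by each x index.
    match = [0] * tx
    j = ty
    for i in range(tx, 0, -1):
        match[i - 1] = j - 1
        # Move diagonally if that predecessor is at least as cheap.
        if i > 1 and cost[i - 1][j - 1] <= cost[i - 1][j]:
            j -= 1
    return match
```

With hypothetical lists `sensor_ms` and `tablet_ms`, the aligned tablet timestamps would be `[tablet_ms[m] for m in restricted_dtw_path([[t] for t in sensor_ms], [[t] for t in tablet_ms])]`.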

1.2 Datasets description

Thirty-five adult writers contributed to the data acquisition. Each recording consists of 34 samples, which are randomly selected scripts of characters, words, equations, shapes and word groups. In the following, this new dataset is called IRISA-KIHT. Since a writer may make several recordings, the IRISA-KIHT dataset is writer-imbalanced.

To evaluate the generalization capacity of our approach, we train and test our model on different writers. The training set of IRISA-KIHT contains the recordings of 25 writers (3,770 samples), i.e. about 71.4% of all writers. The recordings of the remaining 10 writers, never seen during training, form the test set, representing 28.6% of the writers (8.3% of the samples). Figure 10 shows some samples of the IRISA-KIHT dataset.
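A writer-independent split of this kind can be sketched as below. It assumes each sample is tagged with a writer identifier. The `(writer_id, payload)` tuple layout and the function names are hypothetical. The second function checks that no writer leaks across splits and reports the writer and sample shares of the test set.

```python
from typing import Dict, Hashable, List, Sequence, Set, Tuple

Sample = Tuple[Hashable, object]  # hypothetical layout: (writer_id, payload)


def split_by_writer(samples: Sequence[Sample], test_writers: Set[Hashable]
                    ) -> Tuple[List[Sample], List[Sample]]:
    """Writer-independent split: every sample of a test writer goes to the test set."""
    train = [s for s in samples if s[0] not in test_writers]
    test = [s for s in samples if s[0] in test_writers]
    return train, test


def writer_and_sample_shares(train: Sequence[Sample], test: Sequence[Sample]
                             ) -> Dict[str, float]:
    """Percentage of writers and of samples that fall in the test set."""
    train_writers = {w for w, _ in train}
    test_writers = {w for w, _ in test}
    assert not train_writers & test_writers, "writer leakage between splits"
    n_writers = len(train_writers) + len(test_writers)
    n_samples = len(train) + len(test)
    return {
        "test_writers_pct": 100 * len(test_writers) / n_writers,
        "test_samples_pct": 100 * len(test) / n_samples,
    }
```

The writer and sample shares can differ widely in a writer-imbalanced dataset. Writers with many recordings stay in training, so 10 of 35 writers (\(100 \cdot 10/35 \approx 28.6\%\)) can account for only a small fraction of the samples.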

The IRISA-KIHT-S dataset, a subset of the IRISA-KIHT dataset, is available on request (Footnote 3). It is composed of 30 recordings and is writer-balanced, with one recording per writer. Table 8 presents descriptive statistics of the IRISA-KIHT and IRISA-KIHT-S datasets.

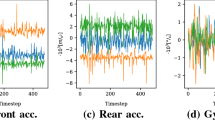

Each 34-sample recording session produces several files from the data acquisition mobile app. The sensor signals file has 15 columns and N rows, one row per sensor reading. Its columns include the timestamp in milliseconds and 13 signal channels: front accelerometer (x, y, z), rear accelerometer (x, y, z), gyroscope (x, y, z), magnetometer (x, y, z) and force. Tablet signal files contain the timestamp in milliseconds, the position coordinates (x, y, z) and the pressure force. The transcription (labels) file contains the label and the start and stop timestamps of every sample. Additional files describing the sensor calibration and the recording metadata are also provided. The dataset website describes the file formats and metadata.
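The start and stop timestamps in the transcription file can be used to cut a continuous recording into individual samples. A minimal sketch follows. The channel names and their order are hypothetical and simply follow the column list above; consult the dataset website for the real file layout. The sketch assumes the sensor timestamps are sorted in increasing order.

```python
from bisect import bisect_left, bisect_right
from typing import List, Sequence, Tuple

# Hypothetical channel names, in the order listed in the text.
CHANNELS = (
    ["acc_front_" + a for a in "xyz"]
    + ["acc_rear_" + a for a in "xyz"]
    + ["gyro_" + a for a in "xyz"]
    + ["mag_" + a for a in "xyz"]
    + ["force"]
)
assert len(CHANNELS) == 13  # same count as the TCN input channels


def cut_sample(ms: Sequence[float], rows: Sequence[Sequence[float]],
               start: float, stop: float) -> List[Sequence[float]]:
    """Return the sensor rows whose timestamp lies in [start, stop].

    `ms` must be sorted and aligned row by row with `rows`.
    """
    lo = bisect_left(ms, start)
    hi = bisect_right(ms, stop)
    return list(rows[lo:hi])


def cut_all(ms: Sequence[float], rows: Sequence[Sequence[float]],
            labels: Sequence[Tuple[str, float, float]]
            ) -> List[Tuple[str, List[Sequence[float]]]]:
    """Split one recording into (label, rows) pairs using (label, start, stop) triples."""
    return [(label, cut_sample(ms, rows, start, stop))
            for label, start, stop in labels]
```

Binary search keeps each cut logarithmic in the recording length. This matters little for 34 samples but avoids rescanning the whole stream for every label.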

1.3 TCN architecture

A TCN (Temporal Convolutional Network)-like layer consists of dilated, residual, non-causal 1D convolutional layers with equal input and output lengths. The input tensor of our TCN implementation has the shape (batch_size=None, input_length=None, channels_num=13) and the output tensor has the shape (batch_size=None, output_length=None, channels_num=2). Since each TCN layer has the same input and output length, only the third (channel) dimension differs between the input and output tensors. "None" denotes dimensions that may vary, here the batch size and the sequence length. The TCN layer consists of 4 blocks in TCN-49 and 3 blocks in TCN-373. Each block contains 100 filters followed by a ReLU activation function and uses "same" padding, so that the output length equals the input length. A batch normalization layer is inserted between each pair of consecutive blocks. The performance of a TCN-based model depends on the size of the network's receptive field (RF). Since a TCN's receptive field depends on the network depth N, the filter size k and the dilation factors d, stabilizing deeper and larger TCNs becomes important [2]. The receptive field of our TCN-based model is computed as:

\[RF = 1 + 2\,(k-1)\,N \sum_{i} d_i\]

The kernel size k is chosen so that the receptive field covers enough context for the predictions. For a regular convolution, the dilation d equals 1; larger dilations let an output at the top level depend on a wider range of inputs. The factor 2 in the equation comes from the two 1D convolutional layers in each residual block of the TCN architecture. For the trajectory reconstruction task, we set the stride to 1. For TCN-49 we set k=3, N=4 and d=1, 2, and for TCN-373 we set k=3, N=3 and d=1, 2, 4, 8, 16. These settings give receptive fields of \(1 + 2\cdot 2\cdot 4\cdot (1+2) = 49\) and \(1 + 2\cdot 2\cdot 3\cdot (1+2+4+8+16) = 373\) time steps, matching the model names.
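The sketch below illustrates the building blocks described above. It is a simplified single-channel version with no learned filters bank, batch normalization or dropout, so it is not the authors' Keras model. It shows three things:

1. A non-causal dilated convolution with "same" zero padding keeps the sequence length.
2. A residual block chains two such convolutions, which is the source of the factor 2.
3. The receptive-field formula reproduces the values 49 and 373.

All function names are hypothetical.

```python
from typing import List, Sequence


def dilated_conv_same(x: Sequence[float], w: Sequence[float], d: int) -> List[float]:
    """Non-causal 1D dilated convolution with zero 'same' padding (odd kernel size).

    Taps are centred on t and spaced d samples apart; output length == input length.
    """
    k = len(w)
    assert k % 2 == 1, "an odd kernel size keeps the window centred"
    half = (k - 1) // 2
    out = []
    for t in range(len(x)):
        acc = 0.0
        for m in range(k):
            s = t + (m - half) * d  # input index read by tap m
            if 0 <= s < len(x):     # zero padding outside the sequence
                acc += w[m] * x[s]
        out.append(acc)
    return out


def relu(v: Sequence[float]) -> List[float]:
    return [max(0.0, a) for a in v]


def residual_block(x: Sequence[float], w1: Sequence[float],
                   w2: Sequence[float], d: int) -> List[float]:
    """Two dilated conv + ReLU layers with a skip connection (single channel)."""
    h = relu(dilated_conv_same(x, w1, d))
    h = relu(dilated_conv_same(h, w2, d))
    return [a + b for a, b in zip(x, h)]


def receptive_field(k: int, n: int, dilations: Sequence[int]) -> int:
    """RF = 1 + 2 (k - 1) N sum(d_i)."""
    return 1 + 2 * (k - 1) * n * sum(dilations)
```

`receptive_field(3, 4, [1, 2])` gives 49 and `receptive_field(3, 3, [1, 2, 4, 8, 16])` gives 373, in line with the TCN-49 and TCN-373 settings.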

1.4 Fréchet distance

The Fréchet distance is formally defined as follows. Let S be a metric space with distance function d. A curve A in S is a continuous map from the unit interval into S, i.e. \(A:[0,1]\rightarrow S \). A reparameterization \(\alpha \) of [0, 1] is a continuous, non-decreasing surjection \(\alpha :[0,1]\rightarrow [0,1]\). Let A and B be two given curves in S. The Fréchet distance F between A and B is the infimum, over all reparameterizations \(\alpha \) and \(\beta \) of [0, 1], of the maximum over all \(t\in [0,1]\) of the distance in S between \(A(\alpha (t))\) and \(B(\beta (t))\):

\[F(A, B) = \inf_{\alpha , \beta } \max_{t \in [0,1]} d\big (A(\alpha (t)), B(\beta (t))\big )\]

Appropriate assessment metrics are needed to evaluate trajectory reconstruction and give students useful feedback. Unlike DTW and RMSE, the Fréchet distance does not contradict visual evaluation. For instance, Figure 11 shows that the upper-left loop of the f is better reconstructed, and only the Fréchet distance reflects this improvement.
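On sampled trajectories, the continuous definition is commonly approximated by the discrete Fréchet distance. This variant couples the two point sequences monotonically and computes the result by dynamic programming. The sketch below implements that discrete variant for 2D points; it is not necessarily the implementation used to produce the reported results. The function name is hypothetical, and `math.dist` requires Python 3.8 or later.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def discrete_frechet(p: Sequence[Point], q: Sequence[Point]) -> float:
    """Discrete Fréchet distance between two polylines given as point lists.

    ca[i][j] is the smallest possible maximum point distance over all
    monotone couplings of p[0..i] with q[0..j].
    """
    n, m = len(p), len(q)
    ca = [[0.0] * m for _ in range(n)]
    for i in range(n):
        for j in range(m):
            dist = math.dist(p[i], q[j])
            if i == 0 and j == 0:
                ca[i][j] = dist
            elif i == 0:
                ca[i][j] = max(ca[i][j - 1], dist)
            elif j == 0:
                ca[i][j] = max(ca[i - 1][j], dist)
            else:
                ca[i][j] = max(min(ca[i - 1][j], ca[i - 1][j - 1], ca[i][j - 1]), dist)
    return ca[n - 1][m - 1]
```

The score is a maximum over the coupling rather than a sum or mean. A single badly reconstructed stroke, such as a collapsed loop, therefore dominates it, whereas RMSE averages such a local error over the whole trajectory.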

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Swaileh, W., Imbert, F., Soullard, Y. et al. Online handwriting trajectory reconstruction from kinematic sensors using temporal convolutional network. IJDAR 26, 289–302 (2023). https://doi.org/10.1007/s10032-023-00430-1

DOI: https://doi.org/10.1007/s10032-023-00430-1