Abstract

Large components made of nodular cast iron (GJS) enable lightweight construction in wind turbines owing to the high design freedom of the casting process. Compared with sand casting, casting into a permanent metal mould, i.e. chill casting, leads to a finer microstructure, higher quasi-static mechanical properties and higher fatigue strength. Unfortunately, current design methodologies provide specific fatigue data only for sand cast GJS, not for chill cast GJS. Consequently, the lightweight design potential of large chill cast components cannot be fully exploited, which led to the publicly funded project “Gusswelle”. Based on material investigations of chill cast EN-GJS-400-18-LT, an optimized hollow rotor shaft is developed. The design process and the resulting shaft design are presented. The optimized hollow rotor shaft prototype will be tested on a full-scale test bench to validate the design methodology; the intended validation plan and the test bench setup are shown in this paper. Furthermore, the reduced wall thickness influences the interference fit between main bearing and hollow rotor shaft, so that under the applied bending moment inner ring creep in the main bearing seat becomes more likely. The creeping behaviour is investigated with finite element simulations, and a measuring method is presented.

Summary

Large components made of nodular cast iron (GJS) in wind turbines enable lightweight design owing to the high design freedom of the casting process. Compared with sand casting, the chill casting process leads to a finer microstructure, higher quasi-static mechanical properties and higher fatigue strength. Unfortunately, current design guidelines provide specific fatigue data only for sand casting and not for chill casting. Therefore, the lightweight design potential of large cast components produced by chill casting cannot be fully exploited; this shortcoming led to the publicly funded research project “Gusswelle”. Based on material investigations of chill cast EN-GJS-400-18-LT, an optimized hollow rotor shaft is developed. The design process and the resulting shaft geometry are presented. The optimized hollow rotor shaft prototype will be tested on a full-scale test bench to validate the design methodology. The intended validation plan and the test bench itself are presented in this paper. In addition, the decreasing wall thickness influences the interference fit between main bearing and hollow rotor shaft. As a consequence, the applied bending moment makes creep of the main bearing inner ring more likely. The creeping behaviour is investigated with finite element simulations, and a measuring method is presented.

1 Introduction

The German federal government plans to expand offshore wind power to a capacity of 15,000 MW by 2030 in order to reduce CO2 emissions [1, 2]. This leads to more installed power and requires a lower levelized cost of energy. Lightweight construction concepts for drive train components aim at increasing the power-to-weight ratio (W/kg). Besides a better understanding of the load situation, high-strength materials can be used to achieve lightweight construction. This yields substantial material savings and reduces the costs of the components as well as of the wind turbine support structure. The casting process offers potential for lightweight construction because it allows the component design to be adapted to the individual load situation. Since the density of cast iron is about 8% lower than that of steel [3], the choice of material also contributes to lightweight design, provided the same material strength is assumed. Moreover, cast components require less energy and cause lower CO2 emissions owing to the lower melting temperature of cast iron [4]. Despite all the advantages of cast iron, wind turbines mostly use forged main shafts [5, 6], because the mechanical properties of forged steel materials are higher than those of nodular cast iron [7].

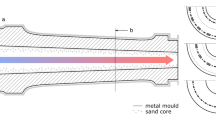

Compared to the conventional sand-casting process, chill casting leads to a microstructure with smaller graphite nodules due to fast heat dissipation, resulting in higher fatigue strength [8]. Components manufactured with this technology solidify rapidly in regions close to the chill mould. In a hollow rotor shaft, these regions correspond to the highly stressed outer geometry. The chill cast technology is therefore likely to yield higher fatigue strength and a higher material utilisation. However, this technology has so far not been considered in current guidelines such as [7, 9, 10].

The potential of GJS cast in permanent metal moulds, together with its so far unknown specific material properties, led to the research project “Gusswelle”. Its main objective is to improve the design possibilities for wind turbine rotor shafts. For this purpose, a raw design of a hollow rotor shaft made of chill cast EN-GJS-400-18-LT is developed. The project partner Fraunhofer LBF investigates the cyclic material behaviour of chill cast nodular cast iron. Based on these material strength results and the raw design, the optimized prototype of the hollow rotor shaft is developed. A component fatigue test with strain and deformation measurements will validate the design concept and the corresponding numerical finite element model.

As a result of the lightweight design, the overall stiffness of the main shaft decreases, which influences the contact between the main bearing inner ring and the main shaft bearing seat. In this fit, there is a high risk of inner ring creep and fretting corrosion, as observed in the previous project “BeBen XXL” [11]. Finite element simulations are carried out to determine the creeping tendency in the test bench setup, and a method to experimentally prove the occurrence of inner ring creep is developed.

The scope of this paper is the optimization of a highly stressed drive train component of a wind turbine, a hollow rotor shaft, taking into account the influence of high-strength cast material. Moreover, the influence of a thin-walled main shaft on the behaviour of the main bearing interference fit and on possible inner ring creep is examined theoretically.

The remainder of the paper is structured as follows: Sect. 2 introduces the hollow rotor shaft design methodology and presents the intended validation plan as well as the optimized hollow rotor shaft design. Sect. 3 addresses inner ring creep, its creeping tendency on the test bench and a method to prove the phenomenon experimentally. Finally, the main conclusions of the work and an outlook are provided.

2 Hollow rotor shaft design methodology

The hollow rotor shaft design methodology is divided into several interdependent steps (Fig. 1). Fraunhofer LBF, Fraunhofer IWES and a participating foundry are involved in the design process. Fraunhofer LBF determines the cyclic material behaviour of chill cast EN-GJS-400-18-LT. Fraunhofer IWES designs the raw hollow rotor shaft, on the basis of which the foundry develops the semi-finished part and carries out solidification simulations. The results from Fraunhofer LBF and the foundry are used for the subsequent development and optimization of the hollow rotor shaft. Due to time constraints, preliminary material investigation results are used at the present stage.

An optimized hollow rotor shaft made of chill cast EN-GJS-400-18-LT is developed. The general optimization goal is to reduce material and component costs by combining lightweight design for large cast components with the chill cast technology for nodular cast iron. The investigations include a subsequent full-scale fatigue test on the main shaft fatigue test bench of Fraunhofer IWES to validate the design methodology. In this test, the bending moment applied by the test rig is intended to provoke a technical crack resulting from fatigue damage in a predefined area.

2.1 Raw hollow rotor shaft design and subsequent optimization process

The raw hollow rotor shaft design is developed taking into account several boundary conditions. It is derived from an existing shaft design suited for wind turbines with a power rating of 3–4 MW. Because an existing main bearing and housing are used, the main bearing seat of the hollow rotor shaft is predefined. Furthermore, the rotor flange and the corresponding bolted connection are predefined.

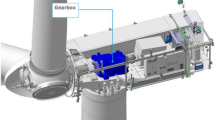

Further geometric boundary conditions result from the design space of the main shaft fatigue test bench (Fig. 2), which will be used for the full-scale endurance test. The test rig was developed by Fraunhofer IWES in the prior project “BeBen XXL” and enables full-scale fatigue tests of wind turbine drive train components, in this case main shafts. Major aims of that project were the comparison of theoretical and experimental endurance strengths, the adjustment of certification guidelines and material savings for drive train components. Forged main shafts were investigated, with the result that existing dimensioning guidelines are highly conservative: the bending load spectrum can be increased by more than 50% if the damage calculation is based on the fatigue test data instead of a synthetic S‑N curve (Wöhler curve) from DNV-GL [12].

The main function of the test bench is to apply realistic, damage-relevant loads to a wind turbine main shaft. For this purpose, the test bench provides a realistic three-point suspension in which the gearbox is replaced by a four-row cylindrical roller bearing (support bearing in Fig. 2) that follows the shaft deformation. In the initial hollow rotor shaft design, the gearbox was connected to the main shaft via a flange. Investigations of this bolted connection under the maximum test bench load showed that the flange connection had to be replaced. The gearbox support is therefore now realised by an extended hollow rotor shaft with directly mounted inner rings of the cylindrical roller bearing. Because the section of interest for the optimization is the trumpet section of the hollow rotor shaft, which is located far from the modified gearbox connection, this design modification does not influence the results or their transferability.

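
The effect of a higher fatigue strength on the admissible load spectrum can be illustrated with an elementary Palmgren-Miner damage sum. The sketch below is an illustration under strong assumptions, not the calculation from “BeBen XXL” [12]: it uses a single-slope S‑N curve without endurance limit, and all parameter values (slope k, knee-point cycles n_d, load spectrum) are hypothetical. Under these assumptions the damage sum grows with the load level to the power k, so the admissible scaling of the spectrum grows in direct proportion to the fatigue strength used in the S‑N curve.

```python
# Elementary Palmgren-Miner damage sum with a single-slope S-N curve.
# Hypothetical sketch: slope k, knee-point cycles n_d and the spectrum are
# placeholders, not data from "BeBen XXL" or the DNV GL guideline.


def cycles_to_failure(s_amp, s_d, n_d, k):
    """Basquin-type S-N curve N = n_d * (s_d / s_amp)**k (no endurance limit)."""
    return n_d * (s_d / s_amp) ** k


def damage_sum(spectrum, s_d, n_d, k):
    """Miner damage D = sum(n_i / N_i) for (amplitude, cycles) load blocks."""
    return sum(n / cycles_to_failure(s, s_d, n_d, k) for s, n in spectrum)


def allowable_scale(spectrum, s_d, n_d, k, d_allow=1.0):
    """Factor by which all amplitudes may be scaled so that D equals d_allow.

    With a single slope, D grows with scale**k, so scale = (d_allow / D)**(1/k).
    """
    return (d_allow / damage_sum(spectrum, s_d, n_d, k)) ** (1.0 / k)


spectrum = [(100.0, 1e5), (60.0, 1e6)]  # hypothetical (amplitude in MPa, cycles)
synthetic = allowable_scale(spectrum, s_d=50.0, n_d=2e6, k=5)
test_based = allowable_scale(spectrum, s_d=80.0, n_d=2e6, k=5)
print(f"gain in admissible load level: {test_based / synthetic:.2f}")
```

In this idealised case the admissible load level rises exactly by the ratio of the two fatigue strengths (80/50, printed as 1.60). Guideline curves with two slopes and a knee point lead to a less simple relation.
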
Since the main shaft fatigue test bench has a modular design, only specific parts need to be modified or developed to adapt it to the needs of the project “Gusswelle”. The bending moment is applied to the device under test through a load lever and a cable pulley connected to a hydraulic load application system. The maximum shear-force-induced bending moment of the test bench is 15 MNm at a shear force of 3 MN, with a test frequency of up to 1 Hz. Due to the new test setup, the maximum bending moment is limited to 13 MNm.
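
The quoted ratings imply an effective lever arm if the moment is taken simply as shear force times a fixed lever arm. This is a back-of-the-envelope sketch under that assumption; the lever arm of the modified setup is not given in the text, so the second value is only illustrative.

```python
# Effective lever arm implied by the quoted test bench ratings, assuming the
# bending moment is shear force times a fixed lever arm (M = F * l).
M_MAX_MNM = 15.0  # maximum shear-force-induced bending moment in MNm (from the text)
F_MAX_MN = 3.0    # corresponding shear force in MN (from the text)

lever_arm_m = M_MAX_MNM / F_MAX_MN  # MNm / MN = m


def shear_force_for(moment_mnm, arm_m=lever_arm_m):
    """Shear force needed for a target moment if the lever arm were unchanged (assumption)."""
    return moment_mnm / arm_m


print(f"lever arm: {lever_arm_m:.1f} m")                             # lever arm: 5.0 m
print(f"force for 13 MNm at that arm: {shear_force_for(13.0):.2f} MN")  # 2.60 MN
```
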

A load report of a 4 MW wind turbine is used as the load boundary condition. A rainflow count of the loads, carried out in the prior project “BeBen XXL”, identified the bending moment as the critical load for provoking fatigue damage in the shaft [12]. Therefore, the test rig applies a bending moment via a shear force. Furthermore, the maximum bending moment of the test bench is of the same order of magnitude as the extreme bending moment that a 4 MW wind turbine is designed to withstand. Therefore, the maximum shear-force-induced bending moment of the main shaft fatigue test bench is set as the load boundary condition. The raw hollow rotor shaft design is developed with regard to these boundary conditions (Fig. 3).
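
Rainflow counting reduces a load time series (for example the bending moment from a load report) to closed load cycles with range and mean. The following is a minimal sketch of the three-point algorithm described in ASTM E1049-85, not the evaluation code used in “BeBen XXL”; the function names and the short example history are illustrative.

```python
# Rainflow cycle counting (three-point method after ASTM E1049-85).
# Illustrative sketch only; input would be e.g. a bending moment time series.
from collections import defaultdict


def turning_points(series):
    """Reduce a load history to its sequence of peaks and valleys."""
    pts = []
    for x in series:
        if pts and x == pts[-1]:
            continue  # drop plateaus
        if len(pts) >= 2 and (pts[-1] - pts[-2]) * (x - pts[-1]) > 0:
            pts[-1] = x  # same direction: extend the current half-cycle
        else:
            pts.append(x)
    return pts


def rainflow(series):
    """Return (range, mean, count) tuples; count is 1.0 or 0.5."""
    stack, cycles = [], []
    for point in turning_points(series):
        stack.append(point)
        while len(stack) >= 3:
            x = abs(stack[-1] - stack[-2])  # most recent range
            y = abs(stack[-2] - stack[-3])  # previous range
            if x < y:
                break
            if len(stack) == 3:
                # range Y contains the starting point: count a half cycle
                cycles.append((y, (stack[0] + stack[1]) / 2, 0.5))
                stack.pop(0)
            else:
                # range Y is closed by X: count a full cycle, remove its two points
                cycles.append((y, (stack[-2] + stack[-3]) / 2, 1.0))
                last = stack.pop()
                del stack[-2:]
                stack.append(last)
    # the remaining residue is counted as half cycles
    for a, b in zip(stack, stack[1:]):
        cycles.append((abs(b - a), (a + b) / 2, 0.5))
    return cycles


def range_histogram(series):
    """Sum the cycle counts per load range."""
    hist = defaultdict(float)
    for rng, _mean, count in rainflow(series):
        hist[rng] += count
    return dict(hist)


print(range_histogram([-2, 1, -3, 5, -1, 3, -4, 4, -2]))
```

For this short history the histogram contains the ranges 3 (0.5 cycles), 4 (1.5), 6 (0.5), 8 (1.0) and 9 (0.5). The counted cycles would then feed a damage calculation such as a Palmgren-Miner sum.
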

Based on the raw hollow rotor shaft design, the foundry develops the corresponding chill mould design and carries out the associated solidification simulations of the casting process. In later tasks of the project “Gusswelle”, local stresses in the hollow rotor shaft will be correlated with local solidification times. For now, the objective is to achieve fast heat dissipation, especially in highly stressed sections, and directional solidification during casting. Directional solidification reduces the risk of cavities in the cast component.

The objective of optimizing the hollow rotor shaft is to provoke a technical crack resulting from fatigue damage during the test bench run. To ensure safe test bench operation and reasonable sensor coverage, the area of highest stress, and thus of most probable fatigue crack initiation, must be well defined by a suitable hollow rotor shaft design. The trumpet section (Fig. 3) is defined as the area of expected crack initiation. Moreover, the tapered section between the main bearing and the support bearing is not locally highly stressed. Due to these restrictions, the optimization process focuses on the trumpet section, where high stresses occur due to the shear-force-induced bending moment on the test bench.

During the iterative design process, the principal stresses calculated by finite element simulations for each investigated trumpet geometry are evaluated with a focus on their magnitude and local expansion, i.e. the size of the region in which high stresses occur. This region needs to be as large as possible to achieve lightweight design and a high material utilisation rate. However, it must also be limited because of the test bench application, in which strain gauges are installed around the circumference of the trumpet section to measure principal strains.

The geometry of the trumpet section is designed in two steps: first the outer and then the inner geometry is defined. In the first step, the maximum principal stress of the trumpet geometry, its local expansion and the corresponding solidification time are evaluated. If these results meet the expectations, the inner geometry is designed in the second step to adjust the nominal stress and the notch factor.
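
For a given bending moment, the nominal stress adjusted in the second step can be estimated from the elastic section modulus of the hollow circular cross-section. The sketch below assumes that the nominal stress refers to the net section of the trumpet, which the text does not specify; all input values are hypothetical. It shows why enlarging the bore (the inner geometry) raises the nominal stress and thereby changes the notch factor K_t = σ_max / σ_n.

```python
import math


def section_modulus_hollow(d_outer, d_inner):
    """Elastic section modulus W = pi * (D**4 - d**4) / (32 * D) of a hollow circle."""
    return math.pi * (d_outer**4 - d_inner**4) / (32.0 * d_outer)


def nominal_bending_stress(moment, d_outer, d_inner):
    """Nominal bending stress sigma_n = M / W (e.g. N*mm and mm give MPa)."""
    return moment / section_modulus_hollow(d_outer, d_inner)


# Hypothetical net section (mm) and moment (N*mm): a larger bore raises sigma_n.
for bore in (600.0, 700.0):
    print(bore, nominal_bending_stress(5e9, 900.0, bore))
```
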

The boundary conditions for the trumpet geometry are defined as follows. To initiate a technical fatigue crack, the maximum principal stress in the trumpet section must be larger than the material’s fatigue strength at a failure probability of 97.5%. The local expansion should be as large as possible, but the maximum principal stress should be concentrated to ensure reasonable sensor coverage. The notch factor should be lower than the expected notch factor of the main bearing seat. Because solidification proceeds from the chill mould on the outside of the shaft towards the inside, as little material as possible should be removed from the outer side. This preserves as much as possible of the fine microstructure, with short solidification times and higher fatigue strength, at the highly stressed outer surface.

Moreover, the highly stressed volume (HBV90%) of the trumpet section is calculated for use in the subsequent validation. The HBV90% comprises the material volume in which 90% to 100% of the maximum stress occurs [13,14,15]. The HBV90% describes the influence of the statistical and geometrical size effect: the fatigue strength decreases with increasing highly stressed volume, because it becomes more probable that this volume contains a failure-relevant weak point [13, 15].
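
Given element results exported from an FE model, the HBV90% can be summed as sketched below. The data format (pairs of element maximum principal stress and element volume) is a hypothetical assumption; with element-averaged stresses, the result also depends on the mesh refinement.

```python
def highly_stressed_volume(elements, fraction=0.9):
    """Volume of all elements whose stress is at least `fraction` of the peak stress.

    elements: iterable of (max_principal_stress, element_volume) pairs
    (hypothetical export format from an FE post-processor).
    """
    elements = list(elements)
    if not elements:
        raise ValueError("no element results given")
    sigma_peak = max(stress for stress, _ in elements)
    threshold = fraction * sigma_peak
    return sum(volume for stress, volume in elements if stress >= threshold)


# Hypothetical element results (MPa, mm^3): only the first two lie within 90-100 %.
print(highly_stressed_volume([(295.0, 10.0), (270.0, 5.0), (260.0, 7.0), (100.0, 50.0)]))
```
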

2.2 Intended validation plan

The validation plan relies on the accompanying full-scale test on the main shaft fatigue test bench of Fraunhofer IWES (Fig. 2). The objective of the test bench run is to validate the finite element model and the design concept by means of strain measurements. The validation plan includes the test plan, which comprises three load stages and one back-up stage. The magnitudes of the first three load stages depend on the cyclic material behaviour. To provoke a technical crack in the trumpet section as a result of fatigue damage, a constant rotating bending moment will be applied to the device under test for a specified number of cycles. Passing the first load stage fulfils the project objective of proving the stability under load. The subsequent load stages are intended to maximize the scientific findings. The intended load stages are defined as follows:

- Stage 1: The induced maximum principal stress is equal to the specific fatigue strength at a failure probability of 2.5%.
- Stage 2: The induced maximum principal stress is equal to the specific fatigue strength at a failure probability of 50.0%.
- Stage 3: The induced maximum principal stress is equal to the specific fatigue strength at a failure probability of 97.5%.
- Stage 4: The induced maximum principal stress is equal to 295 MPa, which exceeds the yield strength of the material; running the test bench at this stage is not desirable. Only if the material proves much more resistant than expected is it theoretically possible to test the full-scale specimen until failure occurs.
- General: If the number of cycles reaches the defined limit without failure, the load is increased to the next stage.
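
The stage logic of the test plan, together with the maximum test frequency of 1 Hz, determines the test duration. A minimal sketch, assuming a constant test frequency, a hypothetical cycle limit per stage (the limit is not stated in the text) and a placeholder failure model supplied by the caller:

```python
SECONDS_PER_DAY = 86_400


def stage_duration_days(cycles, frequency_hz=1.0):
    """Pure running time for a number of cycles at constant test frequency."""
    return cycles / frequency_hz / SECONDS_PER_DAY


def run_staircase(stages, cycle_limit, cycles_to_failure, frequency_hz=1.0):
    """Apply stages in order; escalate only if a stage reaches cycle_limit without failure.

    cycles_to_failure(stage) is a hypothetical model returning the cycle count at
    which the shaft fails on that stage, or None if it survives.
    Returns (failed_stage_or_None, total_cycles, total_days).
    """
    total = 0
    for stage in stages:
        n_fail = cycles_to_failure(stage)
        if n_fail is not None and n_fail <= cycle_limit:
            total += n_fail
            return stage, total, stage_duration_days(total, frequency_hz)
        total += cycle_limit  # stage passed: move on to the next load level
    return None, total, stage_duration_days(total, frequency_hz)


# Hypothetical: 1e6 cycles per stage, failure after 5e5 cycles on stage 3.
print(run_staircase([1, 2, 3, 4], 1_000_000, lambda s: 500_000 if s == 3 else None))
```

With a hypothetical limit of one million cycles per stage at 1 Hz, each passed stage adds about 11.6 days of pure running time.
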

The measurement concept is also part of the validation plan and has already proven itself in the prior project. Strain gauges applied in representative areas will be used to validate the finite element model. Furthermore, evaluating the decrease in measured strain allows an emerging crack to be detected and possibly its depth to be predicted. For this purpose, 40 strain gauges will measure the strain around the circumference of the trumpet section. Additional strain gauges will be installed at further positions to measure nominal strains, and laser distance sensors will measure the deflection of the load lever and the adapter disc. Together, these measured values make the validation of the finite element model possible.
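
Crack detection from the strain decrease could be automated along the following lines. This is a hypothetical sketch: the relative threshold of 10%, the equal circumferential spacing of the 40 gauges and the use of strain amplitudes are assumptions, not part of the described measurement concept.

```python
def gauge_angle_deg(index, n_gauges=40):
    """Circumferential position of a gauge, assuming equal spacing (hypothetical)."""
    return index * 360.0 / n_gauges


def flag_strain_drop(baseline, current, rel_drop=0.1):
    """Indices of gauges whose strain amplitude dropped by more than rel_drop."""
    if len(baseline) != len(current):
        raise ValueError("baseline and current must have the same length")
    return [i for i, (b, c) in enumerate(zip(baseline, current))
            if b > 0 and (b - c) / b > rel_drop]


baseline = [100.0] * 40       # hypothetical strain amplitudes in µm/m
current = list(baseline)
current[5] = 80.0             # 20 % drop at one gauge
for i in flag_strain_drop(baseline, current):
    print(i, gauge_angle_deg(i))
```
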

2.3 Optimized hollow rotor shaft design

The optimized hollow rotor shaft design is based on the raw design. The optimization focuses on the trumpet section with the aim of initiating a technical crack through fatigue damage. The trumpet geometry is developed taking into account the solidification simulation and the cyclic material behaviour. The hollow rotor shaft consists of a rotor flange, one main bearing seat and one support bearing seat, as shown in Fig. 4.

The inner diameter of the four-row cylindrical roller support bearing is 820 mm. The section between main bearing and support bearing is tapered with a constant wall thickness. A spherical roller bearing with an inner diameter of 1120 mm is used as the main bearing. The rotor flange has two bolt circles with 48 M42 bolts each. As mentioned above, the rotor flange and the main bearing seat are predefined. The optimized section of the hollow rotor shaft is the trumpet section between the main bearing seat and the rotor flange. As a result of the optimization process described above, the inner diameter in this section is increased and the outer diameter is decreased (Fig. 5).

Fig. 6 shows the maximum principal stress and its local expansion at the maximum load. The maximum principal stress is 295 MPa and exceeds both the fatigue strength and the yield strength of the material. It is therefore possible to test the hollow rotor shaft until a technical crack occurs. The corresponding nominal stress is approximately 130 MPa, which leads to a notch factor of 2.26.
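
As a quick consistency check of the quoted values, assuming the notch factor is defined as the ratio of maximum principal stress to nominal stress (the text does not state the definition explicitly):

$$K_t = \frac{\sigma_{1,\max}}{\sigma_\mathrm{n}} \approx \frac{295\ \mathrm{MPa}}{130\ \mathrm{MPa}} \approx 2.27$$

The stated value of 2.26 corresponds to a nominal stress of about 130.5 MPa, so the small difference is presumably due to rounding of the nominal stress.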

The relevant load case for calculating the highly stressed volume is rotary bending. Therefore, a 1° sector is evaluated and accumulated to represent the highly stressed volume during one rotation (Fig. 7). The HBV90% is 291,864 mm³, which is 48 times larger than the HBV90% of the specimens used for the preliminary fatigue test results. Crack initiation in this volume is therefore much more probable than in the specimens.
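
The accumulation over one revolution and the consequence of the 48-fold volume ratio can be sketched as follows. The sketch assumes that the trumpet section is rotationally symmetric, so that under rotary bending every 1° sector passes the peak-stress orientation once per revolution and the sector HBV can simply be multiplied by 360. The size-effect function uses a Weibull-type weakest-link power law as a stand-in model; the text cites [13, 15] but does not state which model applies, and the Weibull modulus m is a hypothetical parameter.

```python
def rotary_bending_hbv(hbv_sector, sector_deg=1.0):
    """HBV over one revolution from one sector, assuming rotational symmetry."""
    return hbv_sector * 360.0 / sector_deg


def weakest_link_size_factor(volume_ratio, weibull_modulus):
    """Assumed Weibull scaling: sigma_component / sigma_specimen = ratio**(-1/m)."""
    if volume_ratio <= 0 or weibull_modulus <= 0:
        raise ValueError("volume ratio and Weibull modulus must be positive")
    return volume_ratio ** (-1.0 / weibull_modulus)


# Volume ratio of 48 from the text; the Weibull moduli are hypothetical.
for m in (10.0, 20.0):
    print(m, weakest_link_size_factor(48.0, m))
```

A smaller Weibull modulus, i.e. a larger scatter of the weak points, gives a stronger reduction of the fatigue strength for the same volume ratio.
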

In the chill casting process, the material solidifies from the outside inwards, as shown by the solidification time increasing towards the inside. In the trumpet section, the solidification time reaches values in the range of 4800 to 5280 s (Fig. 8), whereas in the critical area of the trumpet section it is only between 1440 and 1920 s. At the location of the maximum principal stress, solidification takes approximately 1700 s.

It is expected that the fatigue strength derived from the specimen tests overestimates that of the optimized hollow rotor shaft, making a technical crack more likely, because the shaft has a larger HBV90% and longer solidification times than the specimens used in the material investigations. Thus, the design makes it more probable to achieve the additional project objective of initiating a technical crack through fatigue damage.

Compared with the initial design, the presented optimization procedure saves 20%, or 760 kg, of material in the trumpet section. Note that this result applies to the project-specific objective of initiating a technical crack in the trumpet section through fatigue damage, for which an extreme load is applied as a fatigue load. Nevertheless, the general conclusion is that applying the chill casting technology to wind turbine drive train components leads to lightweight design, material savings and high material utilisation rates.
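
Assuming the 20% refers to the mass of the trumpet section in the raw design, the stated saving implies:

$$m_\mathrm{raw} = \frac{760\ \mathrm{kg}}{0.20} = 3800\ \mathrm{kg}, \qquad m_\mathrm{opt} = 3800\ \mathrm{kg} - 760\ \mathrm{kg} = 3040\ \mathrm{kg}$$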

Compared with a forged shaft, using a cast shaft saves material in the low double-digit percentage range. Besides the material savings, the production costs of a cast hollow rotor shaft are highly relevant for deciding whether the next shaft generation will be cast or forged. On the one hand, the production costs of a forged shaft do not decrease with quantity. On the other hand, the production costs of a cast shaft depend strongly on the number of shafts and on the reusability of the casting moulds. Because of the many influencing factors, no general conclusion can be drawn. However, a break-even point exists, particularly when the additional forging process step and its higher energy demand are taken into account.
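
The break-even point mentioned above can be illustrated with a deliberately simple cost model: the forged shaft has a constant unit cost, while the cast shaft has a variable cost plus the cost of the reusable chill mould shared across the batch. All cost figures are hypothetical, and energy costs, mould life and other influencing factors are ignored.

```python
import math


def unit_cost_cast(n_shafts, mould_cost, variable_cost):
    """Cast shaft: reusable chill mould cost amortised over the batch."""
    return variable_cost + mould_cost / n_shafts


def break_even_quantity(mould_cost, variable_cost_cast, unit_cost_forged):
    """Smallest batch size for which casting costs no more than forging."""
    margin = unit_cost_forged - variable_cost_cast
    if margin <= 0:
        return None  # casting never breaks even in this simple model
    return math.ceil(mould_cost / margin)


# Hypothetical costs in arbitrary units.
n_star = break_even_quantity(mould_cost=100.0, variable_cost_cast=6.0, unit_cost_forged=10.0)
print(n_star, unit_cost_cast(n_star, 100.0, 6.0))
```
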

Moreover, continuous strain measurements during the test bench run make it possible to validate the design methodology. Furthermore, safe test bench operation is ensured by clearly defining the area of highest stress and most probable failure.

3 Inner ring creep in the main bearing seat

Ring creep is a known issue that can affect both the outer and the inner ring of a bearing [11, 16,17,18,19]. Inner ring creep can be described as a continuous circumferential movement of the inner ring relative to the main shaft, caused by a travelling surface wave that creeps in the direction of rotation. It can thus be understood as an accumulated strain moving in the direction of rotation. An alternative definition describes this phenomenon as a caterpillar-like movement of the bearing ring, which also occurs without applied torque [18]. This relative movement or sliding leads to fretting, abrasive wear and surface cracks in the bearing seat [17, 18, 20].

This phenomenon was observed in the prior project “BeBen XXL”, see Fig. 9, in which forged main shafts of a 2 MW wind turbine were fatigue-tested as specimens on the main shaft fatigue test bench [11].

In the current project, a chill cast hollow rotor shaft will be tested on the main shaft fatigue test bench. Due to the lower Young’s modulus of GJS and the bore of the hollow shaft, the overall stiffness of the hollow rotor shaft is lower than that of a solid shaft, so inner ring creep is more likely to occur [16]. Another important influencing factor on inner ring creep is the contact pressure of the interference fit: the larger the contact pressure, the lower the probability of inner ring creep [18]. However, the achievable interference is limited by the assembly procedure: the entire bearing is heated (to a limited temperature) before it is joined to the main shaft.
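
How the bore of a hollow shaft and a lower Young’s modulus reduce the contact pressure of the interference fit can be estimated with the classical thick-walled cylinder (Lamé) equations for shrink fits. This is a simplified sketch: the bearing inner ring is idealised as a plain cylinder, the external load is ignored, and apart from the seat diameter of 1120 mm all values (interference, ring outer diameter, shaft bore, typical elastic constants for bearing steel and GJS, thermal expansion coefficient) are assumptions. The second function estimates the temperature rise needed to mount the heated bearing with a given assembly clearance.

```python
def interference_pressure(delta_d, d, d_ring_outer, d_shaft_bore,
                          e_ring, nu_ring, e_shaft, nu_shaft):
    """Contact pressure of a shrink fit from thick-walled cylinder (Lame) theory.

    delta_d: diametral interference, d: nominal fit diameter,
    d_ring_outer: outer diameter of the idealised ring,
    d_shaft_bore: bore of the hollow shaft (0 for a solid shaft).
    Consistent units, e.g. mm and MPa -> MPa.
    """
    ring = ((d_ring_outer**2 + d**2) / (d_ring_outer**2 - d**2) + nu_ring) / e_ring
    shaft = ((d**2 + d_shaft_bore**2) / (d**2 - d_shaft_bore**2) - nu_shaft) / e_shaft
    return delta_d / (d * (ring + shaft))


def mounting_temperature_rise(delta_d, clearance_d, d, alpha):
    """Temperature rise of the ring so that its bore exceeds the shaft by clearance_d."""
    return (delta_d + clearance_d) / (alpha * d)


# Hypothetical inputs in mm and MPa; only the 1120 mm seat diameter is from the text.
solid = interference_pressure(0.2, 1120.0, 1250.0, 0.0, 210e3, 0.3, 169e3, 0.275)
hollow = interference_pressure(0.2, 1120.0, 1250.0, 800.0, 210e3, 0.3, 169e3, 0.275)
print(f"solid: {solid:.1f} MPa, hollow: {hollow:.1f} MPa")
print(f"heating: {mounting_temperature_rise(0.2, 0.2, 1120.0, 12e-6):.0f} K")
```

Since the shaft term grows with the bore diameter and with 1/E of the shaft material, the hollow GJS shaft yields a lower contact pressure for the same interference; compensating with a larger interference is limited by the admissible heating temperature.
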

The typical creeping phenomenon occurs when the bearing rotates and the contact between inner ring and shaft opens locally due to the Hertzian contact pressure of the rolling elements, see Fig. 10. The part of the inner ring where the contact between ring and shaft is open is pushed in the direction of rotation as the rolling element rolls over it. This mechanism is mostly relevant for clearance fits.

In the wind turbine industry, main bearing seats are usually realized as interference fits. In this case, important influencing factors on inner ring creep are the magnitude of the radial load and bending moment, the wall thickness of the shaft and the contact pressure of the interference fit [18]. The risk of inner ring creep decreases when (a) the radial load and bending moment are reduced, (b) the wall thickness is increased, and (c) the contact pressure is increased. Therefore, inner ring creep is highly relevant for thin-walled hollow rotor shafts of wind turbines with low contact pressure.

3.1 A numerical method to predict inner ring creep

The prediction methodology for inner ring creep used in this case is based on the finite element simulation of the main shaft fatigue test bench. The test bench with all relevant parts is modelled in Ansys Workbench and consists of the following components: hollow rotor shaft, main bearing, distance rings, shaft nut, adapter disc, load lever and main bearing housing, see Fig. 11.

The main bearing housing is included in the model to account for the stiffness of the surrounding structure, which influences the deformation of the bearing. The simulation focuses on the main bearing seat between inner ring and hollow rotor shaft, see Fig. 12.

Contacts in creep-prone regions, such as the main bearing seat, are modelled with a friction coefficient of 0.3, corresponding to an oil-lubricated steel-steel contact (100Cr6 E vs. 42CrMo4 +QT) [21]. Other contact regions, such as the contact between rollers and inner ring, are defined as bonded contacts. To ensure correct behaviour of the spherical roller bearing, a frictionless contact is defined between outer ring and rollers. This simplification is acceptable because only a static structural analysis is performed, in which a shear-force-induced bending moment is applied to the test bench. The shear force is applied at the end of the load lever via the cable pulley, see Fig. 2. The reaction load in the main bearing seat is a combination of shear force and bending moment. This leads to axial compression on the lower side and axial expansion on the upper side of the main bearing seat, as can be seen from the deformation of the hollow rotor shaft in x‑direction, see Fig. 13.
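
The role of the friction coefficient can be illustrated with the Coulomb criterion underlying frictional contact: a contact point sticks as long as the tangential traction stays below μ times the contact pressure. The sketch below classifies exported nodal results (hypothetical data format) and computes the share of the seat that is not sticking, the kind of quantity behind a statement such as “the sliding zone exceeds half of the bearing seat”.

```python
def contact_status(pressure, shear, mu=0.3):
    """Coulomb classification of one contact point.

    pressure: normal contact pressure (compression positive);
    shear: magnitude of the tangential traction (same unit).
    """
    if pressure <= 0.0:
        return "open"
    return "sliding" if shear >= mu * pressure else "sticking"


def non_sticking_fraction(points, mu=0.3):
    """Share of contact points that are open or sliding."""
    states = [contact_status(p, t, mu) for p, t in points]
    return sum(state != "sticking" for state in states) / len(states)


# Hypothetical nodal results (pressure, tangential traction) in MPa.
nodes = [(10.0, 1.0), (10.0, 4.0), (0.0, 0.0), (10.0, 2.0)]
print(non_sticking_fraction(nodes))
```
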

Ring creep has already been examined for bearings with diameters of up to 100 mm [16]. The criterion applied there for the occurrence of ring creep relies on the sliding distance in the bearing seat: if the sliding distance exceeds a threshold of 0.1 µm, ring creep probably occurs [16]. However, the applicability of this threshold needs further investigation, because the inner diameter of the main bearing examined here is more than ten times larger than in those investigations. Furthermore, the sliding distance caused by the interference fit alone is already 29 µm, which clearly exceeds the threshold. To define the interference fit in the finite element simulation, the model is set up in the assembled state and an offset for the interference is defined. Initially, this offset is a penetration in the contact, which is pushed out during the first load step, when the interference fit is generated. The deformation of the contact and target elements results in a relative movement between them, which is output as the sliding distance, an unsigned scalar. Because an unsigned scalar does not retain the direction of the movement, simply subtracting the sliding distance of the first load step from that of the subsequent load steps does not yield the creep caused by the external load. In further investigations, the sliding distances therefore need to be calculated vectorially. Thus, neither the expected creep distance per rotation nor the accumulated creep distance over the test bench run has been determined yet.
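
Why an unsigned sliding distance cannot simply be corrected by subtraction is shown by the following hypothetical example for a single contact node. Each entry is the tangential relative displacement in one load step; the values and the split into circumferential and axial components are assumptions, with the 29 µm of the assembly step taken from the text.

```python
import math


def path_length(increments):
    """Unsigned accumulated sliding distance: sum of increment magnitudes."""
    return sum(math.hypot(du, dv) for du, dv in increments)


def net_slip(increments):
    """Signed (vectorial) net relative displacement: component-wise sum."""
    return (sum(du for du, _ in increments), sum(dv for _, dv in increments))


# Hypothetical increments of one contact node in µm as (circumferential, axial):
# step 1 = assembly of the interference fit, steps 2-3 = load applied and removed.
steps = [(0.0, 29.0), (2.0, 0.0), (-2.0, 0.0)]
print(path_length(steps) - path_length(steps[:1]))  # scalar "difference": 4.0
print(net_slip(steps[1:]))                           # net movement: (0.0, 0.0)
```

The scalar difference reports 4 µm of sliding although the node ends where it started; only the signed, vectorial sum reveals the net creep.
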

Evaluating the sliding distances in the interference fit shows larger sliding induced by axial deformation on the upper side of the main bearing seat than on the lower side, cf. Fig. 14. The shear force acts in the negative z‑direction. On the unloaded upper side of the bearing seat, the sliding distance is larger than on the loaded lower side. This can be explained by the contact pressure varying with the external load: the lower contact pressure on the unloaded upper side offers less frictional resistance to sliding. This sliding results in wear in the main bearing seat, signs of which were likewise observed in the prior project after disassembly, see Fig. 9. The areas with lower sliding distance between the two roller rows (Fig. 14) correspond to the areas with less extensive signs of wear (Fig. 9).

Due to the rotary bending load case, there is no sliding-free zone along the circumference of the main bearing seat. Consequently, the fatigue marks in the main bearing seat extend over the entire circumference, see Fig. 9. Because bending and shear force are superimposed in the main bearing seat, it is not possible to determine which mechanism causes the creep: the shear force with the resulting partial opening of the contact, or the axial compression and expansion of the hollow rotor shaft.

These results do not allow a reliable prediction of the direction and distance of the ring movement. Nevertheless, fretting fatigue and inner ring creep will probably occur on the main shaft fatigue test bench of Fraunhofer IWES if the test bench applies at least 57% of the load corresponding to load stage 4, because at this load the sliding zone extends over more than half of the bearing seat.

In further investigations, the main bearing will be modelled in a 3D kinematic finite element simulation. This approach is expected to also allow the prediction of the direction and distance of inner ring creep resulting from the test bench run.

3.2 Experimental proof of inner ring creep

There are several measuring principles to quantify the creeping behaviour. Inner ring creep can easily be detected qualitatively by inspecting the bearing seat after the test bench run and disassembly. This was done in the prior project, where fretting was observed in parts of the bearing seat.

Inner ring creep may be quantified with existing automated measuring principles that measure the rotational frequencies of the inner ring and the shaft; the creep can then be determined from the difference between these frequencies [18]. The rotational frequencies could be measured with inductive or optical sensors. However, quantifying inner ring creep at this scale requires high accuracy, high resolution and minimal drift of the measured values. Experience with the previous measurement concept of the main shaft fatigue test bench indicates that these requirements cannot be met with the presented measuring principles. Furthermore, the available installation space is limited, so this measuring method is not applicable.
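
The accuracy requirement can be made tangible: at the main bearing seat diameter of 1120 mm, one full revolution of relative rotation corresponds to about 3.5 m of arc length. The sketch below converts a difference in rotation into a creep distance and, conversely, shows which relative resolution a hypothetical creep of 1 µm per revolution would demand (on the order of 10^-7).

```python
import math


def creep_distance(rev_shaft, rev_ring, d_seat):
    """Creep of the ring relative to the shaft, as arc length at the seat diameter."""
    return (rev_shaft - rev_ring) * math.pi * d_seat


def relative_resolution(creep_per_rev, d_seat):
    """Relative difference in rotation that a given creep per revolution represents."""
    return creep_per_rev / (math.pi * d_seat)


# Seat diameter of 1120 mm from the text; 1 µm (0.001 mm) creep per revolution is hypothetical.
print(f"{relative_resolution(0.001, 1120.0):.1e}")
```
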

Another way to quantify inner ring creep is to install a distance sensor in the distance ring that measures the tangential relative movement of a reference plane attached to the inner ring. However, because the magnitude of the sliding is unknown, the reference plane could shear off. Therefore, this quantitative measuring principle is not considered applicable either.

The chosen measuring method relies on regular visual inspection of the inner ring position relative to the shaft. For this purpose, the bearing is permanently marked and a measuring tape is installed to quantify the movement.

The measurement accompanies the test bench run, and the measured value is the accumulated creeping distance of the inner ring. With this simple approach, the creeping distance per revolution cannot be resolved; however, the measurement works regardless of the magnitude of the creeping distance.
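Because the tape only yields the accumulated creeping distance, a mean creep per revolution can at best be estimated for each interval between two inspections by dividing the change in the tape reading by the number of shaft revolutions in that interval. The following sketch assumes a hypothetical inspection log of cumulative shaft revolutions and tape readings; the data structure and numbers are illustrative only, and the result is an interval average, not the creep of a single revolution.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Inspection:
    shaft_revolutions: float  # cumulative shaft revolutions at the inspection
    tape_reading_mm: float    # accumulated ring position read from the measuring tape


def mean_creep_per_revolution(log: List[Inspection]) -> List[Tuple[float, float]]:
    """Return (revolutions in interval, mean creep per revolution in mm)
    for each interval between consecutive inspections.

    Only interval averages are obtainable; the creep of an individual
    revolution is not resolved by this method.
    """
    results = []
    for prev, curr in zip(log, log[1:]):
        revs = curr.shaft_revolutions - prev.shaft_revolutions
        if revs <= 0:
            raise ValueError("inspections must be ordered by increasing revolutions")
        # The sign of the reading change indicates the creep direction
        delta = curr.tape_reading_mm - prev.tape_reading_mm
        results.append((revs, delta / revs))
    return results


if __name__ == "__main__":
    # Hypothetical readings, not test bench data
    log = [Inspection(0, 0.0), Inspection(50_000, 2.0), Inspection(120_000, 5.5)]
    for revs, rate in mean_creep_per_revolution(log):
        print(f"{revs:>8.0f} rev: {rate:.1e} mm/rev")
```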

4 Conclusions and outlook

In the development of optimized cast components, the higher fatigue strength of chill cast nodular cast iron should be taken into account. The design methodology relies on accompanying material investigations of specimens with different highly stressed volumes and solidification times. Chill casting, which offers faster heat dissipation and therefore higher strength than sand casting, enables a lightweight design of cast GJS components. This design methodology requires a simulation of the solidification during casting as well as finite element simulations to calculate the principal stresses and the highly stressed volume.
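The highly stressed volume mentioned above is commonly defined, in the sense of Kuguel and Sonsino, as the material volume in which the stress reaches at least a fixed fraction of the maximum stress; Sonsino's formulation typically uses 90% (V90). The sketch below computes this volume from finite element results, assuming per-element maximum principal stresses and element volumes have already been exported. The function name, the default threshold and the example values are assumptions for illustration, not the project's post-processing.

```python
from typing import Sequence


def highly_stressed_volume(stresses: Sequence[float], volumes: Sequence[float],
                           fraction: float = 0.9) -> float:
    """Sum the volumes of all elements whose stress is at least `fraction`
    times the maximum stress (V90 for fraction = 0.9).

    `stresses` are assumed to be per-element maximum principal stresses with a
    positive (tensile) maximum, e.g. in MPa; `volumes` are the matching element
    volumes, e.g. in mm^3.
    """
    if len(stresses) != len(volumes) or not stresses:
        raise ValueError("need matching, non-empty stress and volume lists")
    threshold = fraction * max(stresses)
    return sum(v for s, v in zip(stresses, volumes) if s >= threshold)


if __name__ == "__main__":
    # Hypothetical element data: only 200 MPa and 185 MPa exceed 0.9 * 200 MPa
    sigma = [200.0, 185.0, 179.0, 150.0]
    vol = [10.0, 20.0, 30.0, 40.0]
    print(highly_stressed_volume(sigma, vol))
```

Keeping the threshold as a parameter allows the same routine to be used with other definitions, such as Kuguel's original 95% criterion.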

By applying the lightweight design methodology to the rotor shaft of a wind turbine, 20% of the material in the trumpet section of the optimized hollow rotor shaft could be saved compared to the raw design, while the failure criterion is still fulfilled. The defined failure criterion is the occurrence of a technical crack due to fatigue damage. Although this optimization focuses only on the highly stressed section, with the aim of initiating a technical crack, it reveals a high potential for material savings. Applying this methodology to the whole hollow rotor shaft will probably yield additional material savings in the less stressed sections.

Lighter and probably smaller hollow rotor shafts with high material utilisation offer material savings, lower energy consumption and CO2 emissions during manufacturing, and reduced production costs. These benefits can also be exploited in general product development, even for non-rotationally symmetric parts, by locally increasing the fatigue strength with cooling chills in highly stressed regions during casting. Possible applications include large gears and cast planet carriers.

Applying the lightweight design methodology allows the entire hollow rotor shaft to become smaller, which also affects the main bearing size. Since the dynamic load rating of the main bearing used is more than twice the dynamic shear force of the test bench, a considerably smaller main bearing could be used. A smaller main bearing would reduce the costs of the bearing and its housing. However, a smaller bearing combined with a less stiff hollow rotor shaft may lead to other challenges such as inner ring creep and fretting fatigue in the main bearing seat, because these damage mechanisms become more probable with increasing specific bearing load and decreasing stiffness of the hollow rotor shaft. Therefore, further investigations of inner ring creep in the main bearing seat of thin-walled hollow rotor shafts are necessary.
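To put the ratio between dynamic load rating and load into perspective, the basic rating life from ISO 281, L10 = (C/P)^p in millions of revolutions with p = 10/3 for roller bearings and p = 3 for ball bearings, can be evaluated. This sketch assumes that the test bench's dynamic shear force may stand in for the equivalent dynamic load P, which neglects axial load components and life modification factors; it is a rough indication only, not a bearing verification, and the function names are hypothetical.

```python
def basic_rating_life_mrev(c_over_p: float, roller: bool = True) -> float:
    """Basic rating life L10 in millions of revolutions, L10 = (C/P)**p.

    p = 10/3 for roller bearings, p = 3 for ball bearings (ISO 281).
    """
    if c_over_p <= 0:
        raise ValueError("C/P must be positive")
    exponent = 10.0 / 3.0 if roller else 3.0
    return c_over_p ** exponent


def required_c_over_p(target_life_mrev: float, roller: bool = True) -> float:
    """Inverse relation: load ratio C/P needed to reach a target L10."""
    if target_life_mrev <= 0:
        raise ValueError("target life must be positive")
    exponent = 10.0 / 3.0 if roller else 3.0
    return target_life_mrev ** (1.0 / exponent)


if __name__ == "__main__":
    # Illustrative: a roller bearing loaded at half its dynamic load rating
    print(f"L10 at C/P = 2: {basic_rating_life_mrev(2.0):.2f} million revolutions")
```

In this simplified estimate, a roller bearing with C/P = 2 reaches about 10 million revolutions; how much smaller the main bearing could be therefore depends on the required life, which the sketch leaves as an input.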

In the next steps of the project “Gusswelle”, the main shaft fatigue test bench will be commissioned. To this end, the mechanical assembly, the automation and the implementation of the measurement systems will be completed. After commissioning, the full-scale test will be carried out.

References

Bundesministerium für Umwelt, Naturschutz und nukleare Sicherheit (BMU) (2016) Klimaschutzplan 2050 – Klimaschutzpolitische Grundsätze und Ziele der Bundesregierung

§4 Absatz 1 Satz 1 EEG (2017). https://www.gesetze-im-internet.de/eeg_2014/EEG_2017.pdf

The Engineering Mindset (2020) Homepage. https://theengineeringmindset.com/density-of-metals/. Accessed 30 Nov 2020

Herrmann J, Rauert T, Dalhoff P, Sander M (2016) Fatigue life on a full scale test rig: forged versus cast wind turbine rotor shafts. J Phys Conf Ser. https://doi.org/10.1088/1742-6596/753/7/072021

Herrmann J, Rauert T, Dalhoff P, Sander M (2016) Fatigue and fracture mechanical behaviour of a wind turbine rotor shaft made of cast iron and forged steel. Proc Struct Integr. https://doi.org/10.1016/j.prostr.2016.06.369

Chen X, Yang G, Yang Y, Yin DQ (2014) Study on casting instead of traditional forging for main shaft of megawatt wind turbine. Appl Mech Mater 494/495:587–592. https://doi.org/10.4028/www.scientific.net/AMM.494-495.587

Forschungskuratorium Maschinenbau (2012) FKM-Richtlinie – Rechnerischer Festigkeitsnachweis für Maschinenbauteile aus Stahl, Eisenguss- und Aluminiumwerkstoffen, 6th edn. VDMA, Frankfurt am Main

Berns H, Theisen W (2008) Eisenwerkstoffe – Stahl und Gusseisen, 4th edn. Springer, Berlin, Heidelberg

DIN EN 1563:2019-04 (2019) Gießereiwesen – Gusseisen mit Kugelgraphit (Deutsche Fassung EN 1563:2018)

DNV GL (2016) Machinery for wind turbines, DNVGL-ST-0361, DNV GL AS

Rauert T, Herrmann J, Dalhoff P, Sander M (2016) Fretting fatigue induced surface cracks under shrink fitted main bearings in wind turbine rotor shafts. Proc Struct Integr. https://doi.org/10.1016/j.prostr.2016.06.449

Hochschule für Angewandte Wissenschaften Hamburg, Fraunhofer IWES, Suzlon Energy Ltd. (2018) Final report for the project: BeBen XXL – beschleunigter experimenteller Betriebsfestigkeitsnachweis von Windenergieanlagen-Großkomponenten am Beispiel der Hauptwelle. https://doi.org/10.2314/KXP:1666359084

Sonsino CM (1993) Zur Bewertung des Schwingfestigkeitsverhaltens von Bauteilen mit Hilfe örtlicher Beanspruchungen. Konstruktion 45:25–33

Kuguel R (1961) A relation between theoretical stress concentration factor and fatigue notch factor deduced from the concept of highly stressed volume. ASTM Proc 61:732–748

Sonsino CM, Kaufmann H, Grubisic V (1995) Übertragbarkeit von Werkstoffkennwerten am Beispiel eines betriebsfest auszulegenden geschmiedeten Nutzfahrzeug-Achsschenkels. Konstruktion 47:222–232

Forschungsvereinigung Antriebstechnik e.V., Arbeitskreis Wälzlager (2008) Wälzlagerwandern – Wandernde Wälzlager Innen- und Außenringe unter verschiedenen Einsatzbedingungen. Forschungsvorhaben Nr. 479 I, Heft 852

Niwa T (2013) A creep mechanism of rolling bearings. https://www.ntnglobal.com/en/products/review/pdf/NTN_TR81_en.pdf. Accessed 26 Nov 2020

Schiemann T, Pörsch S, Leidich E, Sauer B (2018) Intermediate layer as measure against rolling bearing creep. Wind Energy. https://doi.org/10.1002/we.2170

Aul E (2008) Analyse von Relativbewegungen in Wälzlagersitzen. Technische Universität Kaiserslautern, Kaiserslautern

Maiwald A, Leidich E (2013) FE simulations of irreversible relative movements (creeping) in rolling bearing seats – influential parameters and remedies. In: Proceedings of the World Congress on Engineering and Computer Science 2013, vol II, 23–25 October 2013

Babbick T (2012) Wandern von Wälzlagerringen unter Punktlast. TU Kaiserslautern

Acknowledgements

The research project “Gusswelle” is carried out in collaboration with Fraunhofer LBF, TU Freiberg and the industry partners Nordex Energy and Walzengießerei Coswig. We kindly acknowledge the funding by the German Federal Ministry for Economic Affairs and Energy (BMWi).

Funding

Open Access funding enabled and organized by Projekt DEAL.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Kirsch, J., Kyling, H. Optimized cast components in the drive train of wind turbines and inner ring creep in the main bearing seat. Forsch Ingenieurwes 85, 199–210 (2021). https://doi.org/10.1007/s10010-021-00458-x

DOI: https://doi.org/10.1007/s10010-021-00458-x