Abstract

Motivated by the challenges related to the calibration of financial models, we consider the problem of numerically solving a singular McKean–Vlasov equation
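
\[ dX_{t} = \sigma(t,X_{t})\,X_{t}\,\frac{\sqrt{v_{t}}}{\sqrt{\mathbb{E}[v_{t} \mid X_{t}]}}\,dW_{t}, \]
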
where \(W\) is a Brownian motion and \(v\) is an adapted diffusion process. This equation can be considered as a singular local stochastic volatility model. While such models are quite popular among practitioners, their well-posedness is unfortunately not yet fully understood and may in general fail altogether. We develop a novel regularisation approach based on the reproducing kernel Hilbert space (RKHS) technique and show that the regularised model is well posed. Furthermore, we prove propagation of chaos. We demonstrate numerically that a model regularised in this way is able to perfectly replicate option prices coming from typical local volatility models. Our results are also applicable to more general McKean–Vlasov equations.



1 Introduction

The present article is motivated by Guyon and Henry-Labordère [13], where the authors proposed a particle method for the calibration of local stochastic volatility models (e.g. stock price models). For ease of presentation, let us assume zero interest rates and recall that local volatility models

\[ dX_{t} = \sigma(t,X_{t})\,X_{t}\,dW_{t}, \tag{1.1} \]

where \(W\) denotes a one-dimensional Brownian motion under a risk-neutral measure and \(X\) the price of a stock, can replicate any sufficiently regular implied volatility surface, provided that we choose the local volatility according to Dupire’s formula, symbolically \(\sigma := \sigma _{\text{Dup}}\); see Dupire [8]. (In the case of deterministic nonzero interest rates, the discussion below remains virtually unchanged after passing to forward stock and option prices.) Unfortunately, it is well understood that Dupire’s model exhibits unrealistic price dynamics despite perfect fits to market prices of options. On the other hand, stochastic volatility models

\[ dX_{t} = \sqrt{v_{t}}\,X_{t}\,dW_{t}, \]

for a suitably chosen stochastic variance process \((v_{t})\), may lead to realistic (in particular, time-homogeneous) dynamics, but are typically difficult or impossible to fit to observed implied volatility surfaces. We refer to Gatheral [11] for an overview of stochastic and local volatility models. Local stochastic volatility models can combine the advantages of both local and stochastic volatility models. Indeed, if the stock price is given by

\[ dX_{t} = \sigma(t,X_{t})\,\sqrt{v_{t}}\,X_{t}\,dW_{t}, \]

then it exactly fits the observed market option prices provided that

\[ \sigma(t,x) = \frac{\sigma_{\text{Dup}}(t,x)}{\sqrt{\mathbb{E}[v_{t} \mid X_{t}=x]}}. \]

This is a simple consequence of Gyöngy’s celebrated Markovian projection theorem; see Gyöngy [14, Theorem 4.6] and also Brunick and Shreve [4, Corollary 3.7]. With this choice of \(\sigma \), we have
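
\[ dX_{t} = \sigma_{\text{Dup}}(t,X_{t})\,\frac{\sqrt{v_{t}}}{\sqrt{\mathbb{E}[v_{t} \mid X_{t}]}}\,X_{t}\,dW_{t}. \tag{1.2} \]
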
Note that \(v\) in (1.2) can be any integrable and positive adapted stochastic process. In a sense, (1.2) may be considered as an inversion of the Markovian projection due to [14], applied to Dupire’s local volatility model, i.e., (1.1) with \(\sigma = \sigma _{\text{Dup}}\).

Thus the local stochastic volatility model of McKean–Vlasov type (1.2) solves the smile calibration problem. However, equation (1.2) is singular in a sense explained below and is very hard to analyse and solve. Even proving existence or uniqueness for (1.2) (under various assumptions on \(v\)) has turned out to be notoriously difficult, and only a few results are available; we refer to Lacker et al. [17] for an extensive discussion and literature review. Let us recall that the theory of standard McKean–Vlasov equations of the form

\[ dZ_{t} = \widetilde{H}(t,Z_{t},\mu_{t})\,dt + \widetilde{F}(t,Z_{t},\mu_{t})\,dW_{t} \]

with \(\mu _{t} = \mathrm{Law}(Z_{t})\) is well understood under appropriate regularity conditions, in particular, Lipschitz-continuity of \(\widetilde{H}\) and \(\widetilde{F}\) with respect to the standard Euclidean distances in the first two arguments and with respect to the Wasserstein distance in \(\mu _{t}\); see Funaki [10], Carmona and Delarue [6, Chap. 4.2], Mishura and Veretennikov [20]. Denoting \(Z_{t} := (X_{t}, Y_{t})\), it is not difficult to see that the conditional expectation \((x, \mu _{t}) \mapsto \mathbb{E}\left [ A(Y_{t}) \mid X_{t} = x \right ]\) is in general not Lipschitz-continuous in the above sense. Therefore the standard theory does not apply to (1.2).

There are a number of results available in the literature where the Lipschitz condition on drift and diffusion is not imposed. Bossy and Jabir [3] considered singular McKean–Vlasov (MV) systems of the form:

or, alternatively, the seemingly even less regular equation

where \(p(t, \,\cdot \,)\) denotes the density of \(X_{t}\). Bossy and Jabir [3] establish well-posedness of (1.3)–(1.5) under suitable regularity conditions (in particular, ellipticity) based on energy estimates of the corresponding nonlinear PDEs. Interestingly, these techniques break down when the roles of \(X\) and \(Y\) are reversed in (1.3), (1.4), that is, when \(\mathbb{E}[\gamma (X_{t}) | Y_{t}]\) is replaced by \(\mathbb{E}[\gamma (Y_{t}) | X_{t}]\) in (1.3), and similarly for the drift term. Hence the results of [3] do not imply well-posedness of (1.2). Lacker et al. [17] studied the two-dimensional SDE

where \(W\) and \(B\) are two independent one-dimensional Brownian motions. Clearly, this can be seen as a generalisation of (1.2) with a nonzero drift and with the process \(v\) chosen in a special way. The authors proved strong existence and uniqueness of solutions to (1.6), (1.7) in the stationary case. This requires strong conditions on \(b_{1}\) and \(b_{2}\), and also requires the initial value \((X_{0},Y_{0})\) to be random with the stationary distribution. Existence and uniqueness of solutions to (1.6), (1.7) in the general case (without the stationarity assumption) remain open. Finally, let us mention the result of Jourdain and Zhou [16, Theorem 2.2], which establishes weak existence of a solution to (1.2) when \(v\) is a jump process taking finitely many values.

Another question, apart from well-posedness of these singular McKean–Vlasov equations, is how to solve them numerically (in a certain sense). Let us recall that even for standard SDEs with singular or irregular drift, where existence and uniqueness have been known for quite some time, convergence of the corresponding Euler scheme with a nonvanishing rate has been established only very recently; see Butkovsky et al. [5], Jourdain and Menozzi [15]. The situation for the singular McKean–Vlasov equations presented above is much more complicated, and very few results are available in the literature. In particular, the results of Lacker et al. [17] do not provide a way to construct a numerical algorithm for solving (1.2), even in the stationary case considered there.

In this paper, we study the problem of numerically solving singular McKean–Vlasov (MV) equations of a more general form than (1.2), namely
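
\[ dX_{t} = H\big(t,X_{t},Y_{t},\mathbb{E}[A_{1}(Y_{t}) \mid X_{t}]\big)\,dt + F\big(t,X_{t},Y_{t},\mathbb{E}[A_{2}(Y_{t}) \mid X_{t}]\big)\,dW_{t}, \tag{1.8} \]
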
where \(H\), \(F\), \(A_{1}\), \(A_{2}\) are sufficiently regular functions, \(W\) is a \(d\)-dimensional Brownian motion and \(Y\) is a given stochastic process, for example a diffusion process. A key issue is how to approximate the conditional expectations \(\mathbb{E}[A_{i}(Y_{t}) | X_{t} = x]\), \(i=1,2\), \(x\in \mathbb{R}^{d}\).

In their seminal paper, Guyon and Henry-Labordère [13] suggested an approach to tackle this problem (see also Antonelli and Kohatsu-Higa [1]). They used the “identity”
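
\[ \mathbb{E}[A(Y_{t}) \mid X_{t}=x] = \frac{\mathbb{E}[A(Y_{t})\,\delta_{x}(X_{t})]}{\mathbb{E}[\delta_{x}(X_{t})]}, \]
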
where \(\delta _{x}\) is the Dirac delta function concentrated at \(x\). This suggests the approximation
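
\[ \mathbb{E}[A(Y_{t}) \mid X_{t}=x] \approx \frac{\sum_{i=1}^{N} A(Y^{i,N}_{t})\,k_{\varepsilon}(X^{i,N}_{t}-x)}{\sum_{i=1}^{N} k_{\varepsilon}(X^{i,N}_{t}-x)}. \tag{1.9} \]
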
Here \((X^{i,N}, Y^{i,N})_{i=1,\dots , N}\) is a particle system, \(k_{\varepsilon}(\,\cdot \,) \approx \delta _{0}(\,\cdot \,)\) is a regularising kernel and \(\varepsilon >0\) is a small parameter. This technique for solving (1.8) (assuming for the moment that (1.8) has a solution) works very well in practice, especially when combined with interpolation on a grid in \(x\)-space. Due to the local nature of the regression, the method can be justified under only weak regularity assumptions on the conditional expectation. Note, however, that the interpolation step might require higher-order regularity.
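For illustration, here is a minimal sketch of the local estimator (1.9). It assumes a Gaussian regularising kernel \(k_{\varepsilon}(z)=\exp(-z^{2}/(2\varepsilon^{2}))\) (its normalising constant cancels in the ratio) and synthetic one-dimensional data for which \(\mathbb{E}[A(Y)\mid X=x]=x\) is known; the function name `local_conditional_expectation` and the test model are hypothetical and not taken from [13].

```python
import numpy as np

def local_conditional_expectation(x_eval, x_part, a_part, eps):
    """Kernel estimate (1.9) of E[A(Y) | X = x] from particles.

    x_eval: evaluation points x, shape (M,)
    x_part: particle positions X^{i,N}, shape (N,)
    a_part: values A(Y^{i,N}), shape (N,)
    eps:    bandwidth of the Gaussian regularising kernel k_eps
    """
    # Unnormalised Gaussian weights k_eps(X^i - x); constants cancel in the ratio
    w = np.exp(-((x_part[None, :] - x_eval[:, None]) ** 2) / (2.0 * eps ** 2))
    return (w @ a_part) / w.sum(axis=1)

# Synthetic data: Y = X + noise and A(y) = y, so E[A(Y) | X = x] = x
rng = np.random.default_rng(0)
X = rng.standard_normal(20_000)
Y = X + 0.5 * rng.standard_normal(20_000)
grid = np.linspace(-1.0, 1.0, 5)
est = local_conditional_expectation(grid, X, Y, eps=0.1)
print(np.round(est, 2))  # should be close to grid, up to smoothing bias and noise
```

Only particles within a few multiples of `eps` of each evaluation point receive non-negligible weight, which is exactly the locality discussed next.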

On the other hand, the method has an important disadvantage shared by all local regression methods: For any given point \(x\), only points \((X^{i},Y^{i})\) in a neighbourhood around \(x\) of size proportional to \(\varepsilon \) contribute to the estimate of \(\mathbb{E}[A(Y_{t}) | X_{t} = x]\) as we have \(k_{\varepsilon}(\,\cdot \, - x)\approx 0\) outside that neighbourhood. Hence local regression cannot take advantage of “global” information about the structure of the function \(x \mapsto \mathbb{E}[A(Y_{t}) | X_{t} = x]\). If for example the conditional expectation can be globally approximated via a polynomial, it is highly inefficient (from a computational point of view) to approximate it locally using (1.9). Taken to the extreme, if we assume a compactly supported kernel \(k\) and formally take \(\varepsilon = 0\), then the estimator (1.9) collapses to \(\mathbb{E}[A(Y_{t}) | X_{t} = X^{i,N}_{t}] \approx A(Y^{i,N}_{t})\) since only \(X^{i,N}_{t}\) is close enough to itself to contribute to the estimator. In the context of the stochastic local volatility model (1.2), this means that the dynamics silently collapses to a pure local volatility dynamics if \(\varepsilon \) is chosen too small.
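The collapse for small \(\varepsilon\) can be observed directly. The sketch below redefines the same hypothetical Gaussian-kernel estimator (with the exponents shifted by their row-wise maximum so that tiny bandwidths do not produce 0/0) and evaluates (1.9) at the particle locations themselves, on synthetic data.

```python
import numpy as np

def local_conditional_expectation(x_eval, x_part, a_part, eps):
    # Gaussian-kernel estimate (1.9); shifting exponents by their row-wise maximum
    # avoids 0/0 when eps is so small that all weights would underflow.
    z = -((x_part[None, :] - x_eval[:, None]) ** 2) / (2.0 * eps ** 2)
    w = np.exp(z - z.max(axis=1, keepdims=True))
    return (w @ a_part) / w.sum(axis=1)

rng = np.random.default_rng(1)
X = rng.standard_normal(1_000)
Y = X + 0.5 * rng.standard_normal(1_000)      # A(y) = y, true E[A(Y) | X = x] = x

tiny = local_conditional_expectation(X, X, Y, eps=1e-6)
moderate = local_conditional_expectation(X, X, Y, eps=0.2)

# Fraction of particles for which the "regression" simply returns A(Y^i)
collapsed = np.mean(np.abs(tiny - Y) < 1e-10)
print(collapsed)
# Mean squared distance to the true conditional expectation x
print(np.mean((tiny - X) ** 2), np.mean((moderate - X) ** 2))
```

With the tiny bandwidth almost every particle only "sees" itself, so the estimate carries the full noise of \(A(Y^{i,N})\), whereas the moderate bandwidth averages over neighbours.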

This disadvantage of local regression methods can be avoided by using global regression techniques. Indeed, taking advantage of global regularity and global structural features of the unknown target function, global regression methods are often seen to be more efficient than their local counterparts; see e.g. Bach [2]. On the other hand, the global regression methods require more regularity (e.g. global smoothness) than the minimal assumptions needed for local regression methods. In addition, the choice of basis functions can be crucial for global regression methods.

In fact, the starting point of this work was to replace (1.9) by global regression based on, say, \(L\) basis functions. However, it turns out that the Lipschitz constants of the resulting approximation to the conditional expectations in terms of the particle distribution explode as \(L \to \infty \), unless the basis functions are carefully chosen.

As an alternative to Guyon and Henry-Labordère [13], we propose in this paper a novel approach based on ridge regression in reproducing kernel Hilbert spaces (RKHSs), which in particular suffers from neither of the above-mentioned disadvantages, even when the number of basis functions is infinite.

Recall that an RKHS ℋ is a Hilbert space of real-valued functions \(f\colon \mathcal{X}\rightarrow \mathbb{R}\) such that the evaluation map \(\mathcal{H}\ni f \mapsto f(x)\) is continuous for every \(x\in \mathcal{X}\). This crucial property implies that there exists a symmetric positive definite kernel \(k\colon \mathcal{X}\times \mathcal{X}\rightarrow \mathbb{R}\), i.e., for any \(n\in \mathbb{N}\), \(c_{1},\dots ,c_{n}\in \mathbb{R}\) and \(x_{1},\dots ,x_{n}\in \mathcal{X}\), one has

\[ \sum_{i,j=1}^{n} c_{i}c_{j}\,k(x_{i},x_{j}) \ge 0, \]

such that \(k_{x} := k(\,\cdot \,,x) \in \mathcal{H}\) for every \(x\in \mathcal{X}\), and one has \(\langle f,k_{x} \rangle _{\mathcal{H}}= f(x)\) for all \(f\in \mathcal{H}\). As a main feature, any positive definite kernel \(k\) uniquely determines an RKHS ℋ and vice versa. In our setting, we consider \(\mathcal{X}\subseteq \mathbb{R}^{d}\). For a detailed introduction and further properties of RKHSs, we refer to the literature, for example Steinwart and Christmann [25, Chap. 4]. We recall that the RKHS framework is popular in machine learning, where it is widely used for computing conditional expectations. In the learning context, kernel methods are most prominently used to avoid the curse of dimensionality when dealing with high-dimensional features, via the kernel trick. We stress that this issue is not relevant in the application to calibration of equity models, but it might be interesting for more general, high-dimensional singular McKean–Vlasov systems.
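As a quick numerical illustration of the positivity condition above, the sketch assumes the Gaussian kernel \(k(x,y)=\exp(-|x-y|^{2}/(2\ell^{2}))\) on \(\mathbb{R}^{3}\) with a hypothetical length scale \(\ell=0.7\) (a standard example, not a choice made in the text). By the reproducing property, \(c^{\top}Kc=\|\sum_{i}c_{i}k(\,\cdot\,,x_{i})\|_{\mathcal{H}}^{2}\) for the Gram matrix \(K_{ij}=k(x_{i},x_{j})\), so every Gram matrix must be symmetric with nonnegative eigenvalues up to rounding.

```python
import numpy as np

def gaussian_gram(points, lengthscale=1.0):
    """Gram matrix K_ij = exp(-|x_i - x_j|^2 / (2 * lengthscale^2)) for points in R^d."""
    sq = np.sum((points[:, None, :] - points[None, :, :]) ** 2, axis=-1)
    return np.exp(-sq / (2.0 * lengthscale ** 2))

rng = np.random.default_rng(2)
pts = rng.standard_normal((200, 3))       # x_1, ..., x_n in R^3
K = gaussian_gram(pts, lengthscale=0.7)
eigs = np.linalg.eigvalsh(K)              # K is symmetric, so eigvalsh applies
c = rng.standard_normal(200)
quad = c @ K @ c                          # = ||sum_i c_i k(., x_i)||_H^2 >= 0
print(eigs.min(), quad)
```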

Consider a pair of random variables \((X,Y)\) taking values in \(\mathcal{X}\times \mathcal{X}\) with finite second moments and denote \(\nu := \operatorname{Law}(X,Y)\). Suppose that \(A\colon \mathcal{X} \to \mathbb{R}\) is sufficiently regular and ℋ is large enough so that we have \(\mathbb{E}[ A(Y) | X = \,\cdot \, ] \in \mathcal{H}\). Then formally,
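
\[ \mathbb{E}[A(Y) \mid X=\,\cdot\,] = (\mathcal{C}^{\nu})^{-1}\int_{\mathcal{X}\times\mathcal{X}} A(y)\,k(x,\,\cdot\,)\,\nu(dx,dy), \]
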
where
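
\[ \mathcal{C}^{\nu}f := \int_{\mathcal{X}\times\mathcal{X}} f(x)\,k(x,\,\cdot\,)\,\nu(dx,dy), \qquad f\in\mathcal{H}. \]
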
Unfortunately, in general, the operator \(\mathcal{C}^{\nu}\) is not invertible. As \(\mathcal{C}^{\nu}\) is positive semidefinite, it is, however, possible to regularise the inversion by replacing \(\mathcal{C}^{\nu}\) with \(\mathcal{C}^{\nu }+ \lambda I_{\mathcal{H}}\) for some \(\lambda > 0\), where \(I_{\mathcal{H}}\) is the identity operator on ℋ. Indeed, it turns out that
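
\[ m^{\lambda}_{A}(\,\cdot\,;\nu) := (\mathcal{C}^{\nu}+\lambda I_{\mathcal{H}})^{-1}\int_{\mathcal{X}\times\mathcal{X}} A(y)\,k(x,\,\cdot\,)\,\nu(dx,dy) \tag{1.10} \]
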
is the solution to the minimisation problem
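
\[ \min_{f\in\mathcal{H}} \bigg( \int_{\mathcal{X}\times\mathcal{X}} \big(A(y)-f(x)\big)^{2}\,\nu(dx,dy) + \lambda\|f\|_{\mathcal{H}}^{2} \bigg); \]
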
see Proposition 3.3. On the other hand, one also has
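
\[ \mathbb{E}[A(Y) \mid X=\,\cdot\,] = \mathop{\mathrm{arg\,min}}_{f\in L_{2}(\operatorname{Law}(X))} \int_{\mathcal{X}\times\mathcal{X}} \big(A(y)-f(x)\big)^{2}\,\nu(dx,dy), \]
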
and therefore it is natural to expect that if \(\lambda >0\) is small enough and ℋ is large enough, then \(m^{\lambda}_{A}(\,\cdot \,; \nu )\approx \mathbb{E}[A(Y) | X = \, \cdot \,]\), that is, \(m^{\lambda}_{A}(\,\cdot \,; \nu )\) is close to the true conditional expectation.
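When \(\nu\) is replaced by the empirical measure \(\nu_{N}=\frac{1}{N}\sum_{i=1}^{N}\delta_{(X_{i},Y_{i})}\) of a sample, the regularised problem becomes standard kernel ridge regression, and its minimiser can be written as \(f=\sum_{i}\alpha_{i}k(X_{i},\,\cdot\,)\) with \(\alpha=(K+N\lambda I)^{-1}a\), where \(K_{ij}=k(X_{i},X_{j})\) and \(a_{i}=A(Y_{i})\). The sketch below assumes a Gaussian kernel with a hypothetical length scale and synthetic data; it is a textbook finite-sample illustration and is not meant to reproduce the efficient scheme of Sect. 4.

```python
import numpy as np

def gaussian_kernel(x, y, lengthscale):
    """Matrix of k(x_i, y_j) = exp(-(x_i - y_j)^2 / (2 l^2)) for 1-d inputs."""
    return np.exp(-((x[:, None] - y[None, :]) ** 2) / (2.0 * lengthscale ** 2))

def rkhs_conditional_expectation(x_eval, x_part, a_part, lam, lengthscale):
    """Evaluate m^lambda_A(x; nu_N) for the empirical measure nu_N of the sample.

    Minimises (1/N) sum_i (A(Y_i) - f(X_i))^2 + lam * ||f||_H^2 over f in H;
    the minimiser is f = sum_i alpha_i k(X_i, .) with alpha = (K + N lam I)^{-1} a.
    """
    n = x_part.shape[0]
    K = gaussian_kernel(x_part, x_part, lengthscale)
    alpha = np.linalg.solve(K + n * lam * np.eye(n), a_part)
    return gaussian_kernel(x_eval, x_part, lengthscale) @ alpha

rng = np.random.default_rng(3)
X = rng.standard_normal(2_000)
Y = np.sin(X) + 0.3 * rng.standard_normal(2_000)   # A(y) = y, E[A(Y) | X = x] = sin(x)
grid = np.linspace(-1.0, 1.0, 5)
est = rkhs_conditional_expectation(grid, X, Y, lam=1e-3, lengthscale=0.5)
print(np.round(est - np.sin(grid), 3))              # residuals w.r.t. the true sin(x)
```

Unlike (1.9), every particle contributes to the estimate at every point through the global coefficient vector `alpha`.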

The main result of the article is that the regularised MV system obtained by replacing the conditional expectations in (1.8) with their regularised versions (1.10) is well posed and that propagation of chaos holds for the corresponding particle system; see Theorems 2.2 and 2.3. To establish these results, we study the joint regularity of \(m^{\lambda}_{A}(x;\nu )\) in the space variable \(x\) and the measure \(\nu \) for fixed \(\lambda >0\). Such results are almost absent in the literature on RKHSs, and we fill this gap here. In particular, we prove that under suitable conditions, \(m^{\lambda}_{A}(x; \nu )\) is Lipschitz in both arguments, that is, with respect to the standard Euclidean norm in \(x\) and the Wasserstein-1 distance in \(\nu \) (see Sect. 2), and that it can be calculated numerically in an efficient way (see Sect. 4). Additionally, in Sect. 3, we study the convergence of \(m^{\lambda}_{A}(\,\cdot \,; \nu )\) in (1.10) to the true conditional expectation for fixed \(\nu \) as \(\lambda \searrow 0\).

Let us note that, as a further nice feature of the RKHS approach compared to the kernel method of [13], one may incorporate, at least in principle, global prior information concerning properties of \(\mathbb{E}[ A(Y) | X = \,\cdot \,]\) into the choice of the RKHS-generating kernel \(k\). In a nutshell, if one anticipates beforehand that \(\mathbb{E}[ A(Y) | X = x]\approx f(x)\) for some known “nice” function \(f\), one may pass to a new kernel defined by \(\widetilde{k}(x,y) := k(x,y)+f(x)f(y)\). This degree of freedom is similar to, for example, the possibility of choosing basis functions adapted to the problem under consideration in the usual regression methods for American options. We also note that the Lipschitz constants for \(m^{\lambda}_{A}(x;\nu )\) with respect to both arguments depend only on bounds related to \(A\) and the kernel \(k\); see Theorem 2.4. In contrast, had we used standard ridge regression, that is, ridge regression based on a fixed system of basis functions, we would have had to impose restrictions on the regression coefficients, leading to a nonconvex constrained optimisation problem.
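The effect of the modified kernel \(\widetilde{k}(x,y)=k(x,y)+f(x)f(y)\) can be sketched with empirical kernel ridge regression. Everything here is an illustrative assumption: a Gaussian base kernel with length scale 0.3, a true conditional expectation \(2x\), a prior guess \(f(x)=x\) with the right shape but the wrong scale, and a deliberately large \(\lambda\) so that the shrinkage effect is visible.

```python
import numpy as np

def gaussian_kernel(x, y, lengthscale=0.3):
    return np.exp(-((x[:, None] - y[None, :]) ** 2) / (2.0 * lengthscale ** 2))

def prior_kernel(x, y, prior):
    """k~(x, y) = k(x, y) + f(x) f(y) for a user-supplied prior guess f."""
    return gaussian_kernel(x, y) + np.outer(prior(x), prior(y))

def krr(kernel, x_eval, x_part, targets, lam):
    """Empirical RKHS ridge regression with an arbitrary kernel function."""
    n = x_part.shape[0]
    alpha = np.linalg.solve(kernel(x_part, x_part) + n * lam * np.eye(n), targets)
    return kernel(x_eval, x_part) @ alpha

rng = np.random.default_rng(4)
X = rng.standard_normal(500)
Y = 2.0 * X + 0.3 * rng.standard_normal(500)      # true E[Y | X = x] = 2x

def f(x):
    return x                                      # prior guess: right shape, wrong scale

def k_tilde(a, b):
    return prior_kernel(a, b, f)

grid = np.linspace(-1.0, 1.0, 11)
lam = 0.1                                         # deliberately strong regularisation
plain = krr(gaussian_kernel, grid, X, Y, lam)
with_prior = krr(k_tilde, grid, X, Y, lam)

def rms(err):
    return np.sqrt(np.mean(err ** 2))

print(rms(plain - 2.0 * grid), rms(with_prior - 2.0 * grid))
```

Since \(f\) has small norm in the RKHS of \(\widetilde{k}\), the penalty hardly shrinks the component along \(f\), whereas the Gaussian RKHS alone pays a large norm to represent a linear function.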

In summary, the contribution of the current work is fourfold. First, we propose an RKHS-based approach to regularise (1.8) and prove the well-posedness of the regularised equation. Second, we show convergence of the approximation (1.11) to the true conditional expectation as \(\lambda \searrow 0\). Third, we suggest a particle-based approximation of the regularised equation and analyse its convergence. Finally, we apply our algorithm to the problem of smile calibration in finance and illustrate its performance on simulated data. In particular, we validate our results by solving numerically a regularised version of (1.2) (with \(m^{\lambda}_{A}\) in place of the conditional expectation). We show that our system is indeed an approximate solution to (1.2) in the sense that we get very close fits of the implied volatility surface – the final goal of the smile calibration problem.

The rest of the paper is organised as follows. Our main theoretical results are given in Sect. 2. Convergence properties of the regularised conditional expectation \(m^{\lambda}_{A}\) are established in Sect. 3. A numerical algorithm for solving (1.8) and an efficient implementable approximation of \(m^{\lambda}_{A}\) are discussed in Sect. 4. Section 5 contains numerical examples. The results of the paper are summarised in Sect. 6. Finally, all the proofs are placed in Sect. 7.

Convention on constants. Throughout the paper, \(C\) denotes a positive constant whose value may change from line to line. The dependence of constants on parameters, if needed, will be indicated e.g. by \(C(\lambda )\).

2 Main results

We begin by introducing the basic notation. For \(a\in \mathbb{R}\), we set \(a^{+}:=\max (a,0)\). Let \((\Omega , \mathcal{F},\mathbb{P})\) be a probability space. For \(d\in \mathbb{N}\), let \(\mathcal{X}\subseteq \mathbb{R}^{d}\) be an open subset and \(\mathcal{P}_{2}(\mathcal{X})\) the set of all probability measures on \((\mathcal{X},\mathcal{B}(\mathcal{X}))\) with finite second moment. If \(\mu ,\nu \in \mathcal{P}_{2}(\mathcal{X}) \), \(p\in [1,2]\), we denote the Wasserstein-p (Kantorovich) distance between them by

\[ \mathcal{W}_{p}(\mu,\nu) := \inf \big(\mathbb{E}|X-Y|^{p}\big)^{1/p}, \]

where the infimum is taken over all random variables \(X\), \(Y\) with \(\operatorname{Law}(X)=\mu \) and \({\operatorname{Law}(Y)=\nu}\). Let \(k:\mathcal{X}\times \mathcal{X}\to \mathbb{R}\) be a symmetric, positive definite kernel and ℋ a reproducing kernel Hilbert space of functions \(f\colon \mathcal{X}\to \mathbb{R}\) associated with the kernel \(k\). That is, for any \(x\in \mathcal{X}\), \(f\in \mathcal{H}\), one has

\[ f(x) = \langle f, k(x,\,\cdot\,)\rangle_{\mathcal{H}}. \]

In particular, \(\langle k(x,\,\cdot \,),k(y,\,\cdot \,)\rangle _{\mathcal{H}}=k(x,y)\) for any \(x,y\in \mathcal{X}\). We refer to Steinwart and Christmann [25, Chap. 4] for further properties of RKHSs.
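The Wasserstein distance defined above is in general hard to compute, but for two empirical measures on \(\mathbb{R}\) with the same number of equally weighted atoms, the monotone (sorted) coupling is optimal for every \(p\ge 1\). A small sketch under exactly that assumption (the function name is hypothetical):

```python
import numpy as np

def wasserstein_p_1d(x, y, p=1.0):
    """W_p between (1/n) sum_i delta_{x_i} and (1/n) sum_i delta_{y_i} on R.

    Valid only for equal sample sizes and uniform weights: the sorted
    (monotone) coupling is then optimal for any p >= 1.
    """
    x = np.sort(np.asarray(x, dtype=float))
    y = np.sort(np.asarray(y, dtype=float))
    if x.shape != y.shape:
        raise ValueError("equal sample sizes required for this shortcut")
    return np.mean(np.abs(x - y) ** p) ** (1.0 / p)

rng = np.random.default_rng(5)
sample = rng.standard_normal(1_000)
w_shift = wasserstein_p_1d(sample, sample + 0.3, p=1)   # a pure shift by 0.3
w_scale = wasserstein_p_1d(sample, 2.0 * sample, p=2)   # coupling x -> 2x
print(w_shift, w_scale)
```

For the scaled sample, the monotone coupling pairs \(x\) with \(2x\), so \(\mathcal{W}_{2}\) equals the empirical root mean square of the sample.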

Let \(A\colon \mathcal{X}\to \mathbb{R}\) be a measurable function such that \(|A(x)|\le C(1+|x|)\) for some universal constant \(C>0\) and all \(x\in \mathcal{X}\). For \(\nu \in \mathcal{P}_{2}(\mathcal{X}\times \mathcal{X})\), \(\lambda \ge 0\), consider the optimisation problem (ridge regression)
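
\[ m^{\lambda}_{A}(\,\cdot\,;\nu) := \mathop{\mathrm{arg\,min}}_{f\in\mathcal{H}} \bigg( \int_{\mathcal{X}\times\mathcal{X}} \big(A(y)-f(x)\big)^{2}\,\nu(dx,dy) + \lambda\|f\|_{\mathcal{H}}^{2} \bigg). \tag{2.1} \]
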
We fix \(T>0\), \(d\in \mathbb{N}\) and consider the system
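
\[ dX_{t} = H\big(t,X_{t},Y_{t},\mathbb{E}[A_{1}(Y_{t}) \mid X_{t}]\big)\,dt + F\big(t,X_{t},Y_{t},\mathbb{E}[A_{2}(Y_{t}) \mid X_{t}]\big)\,dW^{X}_{t}, \tag{2.2} \]

\[ dY_{t} = b(t,Y_{t})\,dt + \sigma(t,Y_{t})\,dW^{Y}_{t}, \tag{2.3} \]
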
where \(H\colon [0,T]\times \mathbb{R}^{d}\times \mathbb{R}^{d} \times \mathbb{R}\to \mathbb{R}^{d}\), \(F\colon [0,T]\times \mathbb{R}^{d}\times \mathbb{R}^{d} \times \mathbb{R}\to \mathbb{R}^{d}\times \mathbb{R}^{d}\), \(A_{i}\colon \mathbb{R}^{d}\to \mathbb{R}\), \(b\colon [0,T]\times \mathbb{R}^{d}\to \mathbb{R}^{d}\), \(\sigma \colon [0,T]\times \mathbb{R}^{d}\to \mathbb{R}^{d}\times \mathbb{R}^{d}\) are measurable functions, \(W^{X}\), \(W^{Y}\) are two (possibly correlated) \(d\)-dimensional Brownian motions on \((\Omega , \mathcal{F},\mathbb{P})\), and \(t\in [0,T]\). We note that our choice of \(Y\) as a diffusion process in (2.3) is mostly for convenience, and we expect our results to hold in more generality when appropriately modified.

Denote \(\mu _{t}:=\operatorname{Law}(X_{t},Y_{t})\). As mentioned above, the functional

\[ (x,\mu_{t}) \mapsto \mathbb{E}[A_{i}(Y_{t}) \mid X_{t}=x], \qquad i=1,2, \]

is not Lipschitz-continuous even if \(A_{i}\) is smooth. Therefore the classical results on well-posedness of McKean–Vlasov equations are not applicable to (2.2), (2.3). The main idea of our approach is to replace the conditional expectation by the corresponding RKHS approximation (2.1), which has “nice” properties (in particular, it is Lipschitz-continuous). This yields strong existence and uniqueness of solutions to the new system. Furthermore, we demonstrate numerically that the solution to the new system is still “close” to the solution of (2.2), (2.3) in a certain sense. Thus we consider the system
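
\[ d\widehat{X}_{t} = H\big(t,\widehat{X}_{t},Y_{t},m^{\lambda}_{A_{1}}(\widehat{X}_{t};\widehat{\mu}_{t})\big)\,dt + F\big(t,\widehat{X}_{t},Y_{t},m^{\lambda}_{A_{2}}(\widehat{X}_{t};\widehat{\mu}_{t})\big)\,dW^{X}_{t}, \tag{2.4} \]

\[ dY_{t} = b(t,Y_{t})\,dt + \sigma(t,Y_{t})\,dW^{Y}_{t}, \tag{2.5} \]

\[ \widehat{\mu}_{t} = \operatorname{Law}(\widehat{X}_{t},Y_{t}), \tag{2.6} \]
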
where \(t\in [0,T]\). We need the following assumptions on the kernel \(k\) (formulated in a slightly redundant manner for ease of notation).

Assumption 2.1

The kernel \(k\) is twice continuously differentiable in both variables, \(k(x,x)>0\) for all \(x\in \mathcal{X}\), and

Let \(\mathcal{C}^{1}(\mathcal{X};\mathbb{R})\) be the space of all functions \(f\colon \mathcal{X}\to \mathbb{R}\) such that

Now we are ready to state our main results. Their proofs are given in Sect. 7.

Theorem 2.2

Suppose that Assumption 2.1 is satisfied for the kernel \(k\) with \(\mathcal{X}= \mathbb{R}^{d}\) and

1) \(A_{i}\in \mathcal{C}^{1}(\mathbb{R}^{d};\mathbb{R})\), \(i=1,2\);

2) there exists a constant \(C>0\) such that for any \(t\in [0,T]\), \({x,y,x',y'\in \mathbb{R}^{d}}\), \({z,z'\in \mathbb{R}}\), we have

3) for any fixed \(x,y\in \mathbb{R}^{d}\), \(z\in \mathbb{R}\), we have

4) \(\mathbb{E}[ |\widehat{X}_{0}|^{2} ] + \mathbb{E}[|Y_{0}|^{2}]<\infty \).

Then for any \(\lambda >0\), the system (2.4)–(2.6) with the initial condition \((\widehat{X}_{0}, Y_{0})\) has a unique strong solution.

To analyse a numerical scheme solving (2.4)–(2.6), we consider the particle system

where \(N\in \mathbb{N}\), \(n=1,\ldots ,N\), \(t\in [0,T]\) and the pairs of \(d\)-dimensional Brownian motions \((W^{X,n},W^{Y,n})\), \(n=1,\ldots ,N\), are independent and have the same law as \((W^{X},W^{Y})\). The following propagation of chaos result holds; it establishes both weak and strong convergence of \(X^{N,n}\).
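A minimal Euler-type sketch of such a particle system, specialised to the one-dimensional local stochastic volatility case \(H\equiv 0\), \(F(t,x,y,z)=\sigma_{\text{Dup}}(t,x)\,x\,\sqrt{y/z}\), \(A_{2}(y)=y\). Several ingredients are assumptions made purely for illustration: a constant \(\sigma_{\text{Dup}}\), a hypothetical log-Ornstein–Uhlenbeck variance \(v=e^{L}\) driven by a Brownian motion independent of \(W^{X}\), a Gaussian kernel, the values of \(\lambda\) and the length scale, and a small positivity floor for the denominator. The conditional expectation is estimated from all \(N\) particles at each step; as a hypothetical variant of (2.1), the targets are centred and their sample mean is added back, so that shrinkage pulls towards the mean rather than towards zero.

```python
import numpy as np

def gaussian_kernel(x, y, lengthscale):
    """Matrix of k(x_i, y_j) = exp(-(x_i - y_j)^2 / (2 l^2)) for 1-d inputs."""
    return np.exp(-((x[:, None] - y[None, :]) ** 2) / (2.0 * lengthscale ** 2))

def empirical_rkhs_cond_exp(x_part, targets, lam, lengthscale):
    """RKHS ridge estimate of E[target | X = x] at the particle positions, using
    the empirical measure of the particles (centred-target variant, see above)."""
    n = x_part.shape[0]
    K = gaussian_kernel(x_part, x_part, lengthscale)
    mean = targets.mean()
    alpha = np.linalg.solve(K + n * lam * np.eye(n), targets - mean)
    return mean + K @ alpha

def simulate_lsv_particles(n_particles=400, n_steps=50, T=1.0, x0=1.0,
                           sigma_dup=0.2, kappa=1.0, eta=0.5,
                           lam=1e-3, lengthscale=0.1, seed=0):
    """Euler scheme for dX^n = sigma_dup * X^n * sqrt(v^n / m(X^n)) dW^{X,n}, where
    m estimates E[v | X] from all particles and log v^n is a hypothetical OU
    process dL = -kappa * L dt + eta dW^{Y,n} with W^{Y,n} independent of W^{X,n}."""
    rng = np.random.default_rng(seed)
    dt = T / n_steps
    x = np.full(n_particles, x0)
    log_v = np.zeros(n_particles)
    for _ in range(n_steps):
        v = np.exp(log_v)
        cond = empirical_rkhs_cond_exp(x, v, lam, lengthscale)
        cond = np.maximum(cond, 1e-6)            # keep the denominator positive
        dw_x = np.sqrt(dt) * rng.standard_normal(n_particles)
        dw_y = np.sqrt(dt) * rng.standard_normal(n_particles)
        x = x + sigma_dup * x * np.sqrt(v / cond) * dw_x
        log_v = log_v - kappa * log_v * dt + eta * dw_y
    return x

x_T = simulate_lsv_particles()
print(x_T.mean(), x_T.std())
```

With these illustrative parameters, the sample mean should stay close to `x0` (each increment has conditional mean zero), and the sample standard deviation should be roughly of the order `sigma_dup * x0`, as for the target local volatility model. The cost per step is dominated by an \(N\times N\) linear solve; Sect. 4 discusses more efficient approximations of \(m^{\lambda}_{A}\).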

Theorem 2.3

Suppose that all the conditions of Theorem 2.2 are satisfied. Suppose the initial values \((X_{0}^{N,n},Y_{0}^{N,n})\) are independent and have the same law as \((\widehat{X}_{0},Y_{0})\). Moreover, suppose that \(\mathbb{E}[ |\widehat{X}_{0}|^{q}] + \mathbb{E}[|Y_{0}|^{q}]<\infty \) for some \(q>4\). Then there exists a constant \(C=C(\lambda ,T,\mathbb{E}[|\widehat{X}_{0}|^{q}],\mathbb{E}[|Y_{0}|^{q}])>0\) such that for any \(n=1,\ldots ,N\), \(N\in \mathbb{N}\), we have

where the process \(\widehat{X}^{n}\) solves (2.4)–(2.6) with \(W^{X,n}\), \(W^{Y,n}\) in place of \(W^{X}\), \(W^{Y}\), respectively, and where

A crucial step which allows us to obtain these results is the Lipschitz-continuity of \(m^{\lambda}\). The following holds.

Theorem 2.4

Assume that the kernel \(k\) satisfies Assumption 2.1. Let \(A\in \mathcal{C}^{1}(\mathcal{X};\mathbb{R})\). Then for any \(x,y\in \mathcal{X}\), \(\mu ,\nu \in \mathcal{P}_{2}(\mathcal{X}\times \mathcal{X})\), we have

where

may be considered to be (possibly suboptimal) Lipschitz constants with respect to the Wasserstein metric and Euclidean norm, respectively.

This result is interesting for at least two reasons. First, it shows that \(m^{\lambda}_{A}\) is Lipschitz-continuous in both arguments, provided that the kernel \(k\) is smooth enough. That is, the Lipschitz-continuity property depends on ℋ only through the smoothness of the kernel \(k\). Second, this result gives an explicit dependence of the corresponding (possibly suboptimal) Lipschitz constants on \(\lambda \) and \(k\).

Remark 2.5

Let us stress that Theorem 2.2 establishes the existence and uniqueness of a solution to the regularised system (2.4)–(2.6) only for a fixed regularisation parameter \(\lambda >0\) and cannot be used to study the limiting case \(\lambda \to 0\). Indeed, the Lipschitz constants of \(m^{\lambda}_{A}\) given by Theorem 2.4 blow up as \(\lambda \to 0\). However, Theorem 2.4 does not imply that the optimal Lipschitz constants blow up as \(\lambda \to 0\), or that the solution to (2.4)–(2.6) blows up. We demonstrate numerically in Sect. 5 that, in the examples considered there, the solution to (2.4)–(2.6) does not blow up as \(\lambda \to 0\). On the contrary, it converges weakly to a limit; this suggests that (at least) weak existence of a solution to (2.2), (2.3) may hold. Verifying this theoretically remains, however, an important open problem.

Remark 2.6

A natural question is whether (2.2), (2.3) can be formulated for a different state space, that is, for \(X\), \(Y\) taking values in \(\mathcal{X}\), \(\mathcal{Y}\) rather than \(\mathbb{R}^{d}\). Indeed, for equity models, \(\mathcal{X}= \mathcal{Y} = \mathbb{R}_{+}\) is clearly a more natural choice for both the price process and the variance process. Heuristically, the theory should hold for more general \(\mathcal{X}\) and \(\mathcal{Y}\), provided that those sets are invariant under the dynamics (2.2), (2.3) as well as under the regularised dynamics. It is, however, difficult to derive meaningful assumptions guaranteeing this kind of invariance, which prompts us to work with \(\mathbb{R}^{d}\) instead.

3 Approximation of conditional expectations

In this section, we study the approximation \(m^{\lambda}_{A}\) introduced in (2.1) in more detail. Throughout this section, we fix an open set \(\mathcal{X}\subseteq \mathbb{R}^{d}\) and a measure \(\nu \in \mathcal{P}_{2}(\mathcal{X}\times \mathcal{X})\), and impose the following relatively weak assumptions on the function \(A\colon \mathcal{X}\to \mathbb{R}\) and the positive kernel \(k\colon \mathcal{X}\times \mathcal{X}\to \mathbb{R}\).

Assumption 3.1

The function \(A\) has at most linear growth, i.e., there exists a constant \(C>0\) such that \(|A(x)|\le C(1+|x|)\) for all \(x\in \mathcal{X}\).

Assumption 3.2

The kernel \(k(\,\cdot \,,\,\cdot \,)\) is continuous on \(\mathcal{X}\times \mathcal{X}\) and satisfies

for some \(C>0\).

It is easy to see that Assumption 3.2 implies for any \(x\in \mathcal{X}\) that

Due to Assumption 3.2 and Steinwart and Christmann [25, Lemma 4.33], ℋ is a separable RKHS and one has for any \(f\in \mathcal{H}\), \(x\in \mathcal{X}\) that

where we also used (3.1). Hence every \(f\in \mathcal{H}\) has at most linear growth and, as a consequence, the objective functional in (2.1) is finite for any fixed \(\nu \in \mathcal{P}_{2}(\mathcal{X}\times \mathcal{X})\). It is also easy to see that (3.2) and (3.1) imply that for any \(x,y\in \mathcal{X}\),

Therefore, the Bochner integrals
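
\[ \int_{\mathcal{X}\times\mathcal{X}} A(y)\,k(x,\,\cdot\,)\,\nu(dx,dy) \qquad\text{and}\qquad \mathcal{C}^{\nu}f := \int_{\mathcal{X}\times\mathcal{X}} f(x)\,k(x,\,\cdot\,)\,\nu(dx,dy) \]
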
are well-defined functions in ℋ for every \(f\in \mathcal{H}\). Moreover, it is clear that the operator \({\mathcal{C}^{\nu}\colon \mathcal{H}\to \mathcal{H}}\) is symmetric and positive semidefinite since
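
\[ \langle \mathcal{C}^{\nu}f, g\rangle_{\mathcal{H}} = \int_{\mathcal{X}\times\mathcal{X}} f(x)\,g(x)\,\nu(dx,dy) = \langle f, \mathcal{C}^{\nu}g\rangle_{\mathcal{H}}, \qquad f,g\in\mathcal{H}. \]
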
Thus by the Hellinger–Toeplitz theorem (see e.g. Reed and Simon [21, Sect. III.5]), \(\mathcal{C}^{\nu}\) is a bounded self-adjoint linear operator on ℋ. As a consequence, for any \(\lambda \ge 0\), the operator \(\mathcal{C}^{\nu}+\lambda I_{\mathcal{H}}\) is a bounded self-adjoint operator on ℋ with spectrum contained in the interval \([ \lambda ,\|\mathcal{C}^{\nu}\| +\lambda ]\). Hence if \(\lambda >0\), then \((\mathcal{C}^{\nu}+\lambda I_{\mathcal{H}})^{-1}\) exists and is a bounded self-adjoint operator on ℋ with norm

\[ \big\|(\mathcal{C}^{\nu}+\lambda I_{\mathcal{H}})^{-1}\big\| \le \lambda^{-1}. \]

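A finite-sample illustration of this spectral picture, under the assumption that \(\nu\) is an empirical measure \(\frac{1}{n}\sum_{i}\delta_{(X_{i},Y_{i})}\) and \(k\) is a Gaussian kernel: on \(\operatorname{span}\{k(X_{j},\,\cdot\,)\}\), the operator \(\mathcal{C}^{\nu}\) acts on the coefficients \(\beta\) of \(f=\sum_{j}\beta_{j}k(X_{j},\,\cdot\,)\) as \(\beta\mapsto K\beta/n\), and it vanishes on the orthogonal complement. Hence the spectrum of \(\mathcal{C}^{\nu}+\lambda I_{\mathcal{H}}\) is \(\{\lambda\}\) together with the eigenvalues of \(K/n\) shifted by \(\lambda\).

```python
import numpy as np

rng = np.random.default_rng(6)
X = rng.standard_normal(300)
n = X.size
K = np.exp(-((X[:, None] - X[None, :]) ** 2) / 2.0)   # Gaussian kernel, length scale 1
lam = 1e-2

# Nonzero spectrum of C^nu for the empirical measure = eigenvalues of K / n
spec = np.linalg.eigvalsh(K / n) + lam         # spectrum of C^nu + lam I on the span
resolvent_norm = 1.0 / spec.min()              # operator norm of (C^nu + lam I)^{-1}
print(spec.min(), spec.max(), resolvent_norm, 1.0 / lam)
```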
We are now ready to state the following useful representation for the solution to (2.1).

Proposition 3.3

Under Assumptions 3.1, 3.2, for any fixed \(\nu \in \mathcal{P}_{2}(\mathcal{X}\times \mathcal{X})\) and \(\lambda >0\), the solution to (2.1) can be represented as
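
\[ m^{\lambda}_{A}(\,\cdot\,;\nu) = (\mathcal{C}^{\nu}+\lambda I_{\mathcal{H}})^{-1}\int_{\mathcal{X}\times\mathcal{X}} A(y)\,k(x,\,\cdot\,)\,\nu(dx,dy). \]
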
This representation may be seen as an infinite sample version of the usual solution representation for a ridge regression problem based on finite samples. We thus consider it as not essentially new, but in order to keep our paper as self-contained as possible, we present a proof in Sect. 7. Proposition 3.3 allows us to prove Lipschitz-continuity of \(m^{\lambda}_{A}\), that is, Theorem 2.4.
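The finite-sample counterpart can be checked numerically. Assuming an empirical measure with atoms \((X_{i},Y_{i})\), values \(a_{i}=A(Y_{i})\) and a Gaussian kernel (all synthetic, with hypothetical parameters), the operator formula of Proposition 3.3 reads \((K/n+\lambda I)\beta=a/n\) in the coefficients of \(f=\sum_{j}\beta_{j}k(X_{j},\,\cdot\,)\). The sketch verifies that this \(\beta\) satisfies the first-order condition of the ridge problem and beats random perturbations of it.

```python
import numpy as np

rng = np.random.default_rng(7)
n, lam = 200, 1e-2
X = rng.uniform(-2.0, 2.0, n)
a = np.cos(X) + 0.2 * rng.standard_normal(n)              # synthetic values A(Y_i)
K = np.exp(-((X[:, None] - X[None, :]) ** 2) / (2 * 0.5 ** 2))

# Operator form of Proposition 3.3 for the empirical measure, in coefficients of
# f = sum_j beta_j k(X_j, .):  (K / n + lam I) beta = a / n
beta = np.linalg.solve(K / n + lam * np.eye(n), a / n)

def objective(coef):
    """(1/n) sum_i (a_i - f(X_i))^2 + lam * ||f||_H^2, with ||f||_H^2 = coef' K coef."""
    resid = a - K @ coef
    return resid @ resid / n + lam * coef @ K @ coef

# Gradient of the (convex) objective at beta, and random perturbations for comparison
grad = -2.0 / n * K @ (a - K @ beta) + 2.0 * lam * K @ beta
trials = [objective(beta + 1e-2 * rng.standard_normal(n)) for _ in range(20)]
print(np.abs(grad).max(), objective(beta), min(trials))
```

Note that \(\beta\) coincides with the usual kernel ridge coefficients \((K+n\lambda I)^{-1}a\), which is the "finite sample version" mentioned above.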

Let us now proceed with investigating when the function \(m^{\lambda}_{A}=m^{\lambda}_{A}(\,\cdot \,;\nu )\) is a “good” approximation to the true conditional expectation

\[ m_{A}(x;\nu) := \mathbb{E}[A(Y) \mid X=x], \qquad (X,Y)\sim\nu, \tag{3.5} \]

for small enough \(\lambda >0\). Consider the Hilbert space \(\mathcal{L}_{2}^{\nu}:=L_{2}(\mathcal{X}, \nu (dx,\mathcal{X}))\) with \(\nu (U,\mathcal{X}) := \nu (U \times \mathcal{X}) >0\). For \(f\in \mathcal{L}_{2}^{\nu}\), put

\[ T^{\nu}f := \int_{\mathcal{X}} f(x)\,k(x,\,\cdot\,)\,\nu(dx,\mathcal{X}). \tag{3.6} \]

Recalling (3.3), it is easy to see that \(T^{\nu}\) is a linear operator \(\mathcal{L}_{2}^{\nu}\to \mathcal{L}_{2}^{\nu}\). Note that \(\mathcal{H}\subseteq \mathcal{L}_{2}^{\nu}\) due to (3.2); thus \(\mathcal{C}^{\nu}\) is the restriction of \(T^{\nu}\) to ℋ. Further, since

the kernel \(k\) is Hilbert–Schmidt on \(\mathcal{L}_{2}(\mathcal{X\times X},\nu (dx,\mathcal{X}) \otimes \nu (dy,\mathcal{X}))\), i.e.,

due to Assumption 3.2. As a consequence of standard results from functional analysis, one then has (see for example [21, Sect. VI]) that

(i) the operator \(T^{\nu}\) is self-adjoint and compact;

(ii) there exists an orthonormal system \(\left ( a_{n}\right ) _{n\in \mathbb{N}}\) in \(\mathcal{L}^{\nu}_{2}\) of eigenfunctions corresponding to nonnegative eigenvalues \(\sigma _{n}\) of \(T^{\nu}\), and \(\sigma _{1}\ge \sigma _{2}\ge \sigma _{3}\ge \cdots \);

(iii) if \(J:=\{n\in \mathbb{N}: \sigma _{n}>0\}\), one has
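
\[ T^{\nu}f = \sum_{n\in J} \sigma_{n}\,\langle f,a_{n}\rangle_{\mathcal{L}_{2}^{\nu}}\,a_{n}, \qquad f\in\mathcal{L}_{2}^{\nu}, \tag{3.7} \]
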
with \(\lim _{n\rightarrow \infty}\sigma _{n}=0\) if \(J=\mathbb{N}\).
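The eigenvalues \(\sigma_{n}\) can be approximated from a sample by the eigenvalues of the scaled Gram matrix \(K/N\) (a Nyström-type approximation). The sketch assumes \(\nu(dx,\mathcal{X})=N(0,1)\) and the Gaussian kernel with unit length scale; for this particular pair, the exact eigenvalues are known to decay geometrically, \(\sigma_{n}=g^{2n-1}\) with \(g=(\sqrt{5}-1)/2\), and they sum to \(\int k(x,x)\,\nu(dx,\mathcal{X})=1\).

```python
import numpy as np

rng = np.random.default_rng(8)
N = 1_000
X = rng.standard_normal(N)                               # sample from nu(dx, X) = N(0, 1)
K = np.exp(-((X[:, None] - X[None, :]) ** 2) / 2.0)      # Gaussian kernel, length scale 1
sigma_hat = np.sort(np.linalg.eigvalsh(K / N))[::-1]     # sigma_1 >= sigma_2 >= ...

# Closed-form eigenvalues for this Gaussian kernel / Gaussian measure pair
g = (np.sqrt(5.0) - 1.0) / 2.0
exact = g ** (2 * np.arange(1, 11) - 1)                  # sigma_n = g^(2n - 1)
print(sigma_hat[:5], exact[:5], sigma_hat.sum())
```

The empirical eigenvalues are nonnegative up to rounding, nonincreasing, and their sum equals the trace \(\frac{1}{N}\sum_{i}k(X_{i},X_{i})=1\).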

A generalisation of Mercer’s theorem to unbounded domains, see Sun [26], implies the following statement.

Proposition 3.4

Let \(k\) be a kernel satisfying Assumption 3.2 and assume that \(\nu (\,\cdot \,,\mathcal{X})\) is a nondegenerate Borel measure, that is, for every nonempty open set \(U\subseteq \mathcal{X}\), one has \(\nu (U, \mathcal{X})>0\). Then one may take the eigenfunctions \(a_{n}\) in (3.7) to be continuous and \(k\) has a series representation

\[ k(x,y) = \sum_{n\in J} \sigma_{n}\,a_{n}(x)\,a_{n}(y), \qquad x,y\in\mathcal{X}, \tag{3.8} \]

with uniform convergence on compact sets. Moreover, \((\widetilde{a}_{n})_{n\in J}\) with \(\widetilde{a}_{n}:=\sqrt{\sigma _{n}}\,a_{n}\) is an orthonormal basis of ℋ, and the scalar product in ℋ takes the form
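
\[ \langle f,g\rangle_{\mathcal{H}} = \sum_{n\in J} \frac{\langle f,a_{n}\rangle_{\mathcal{L}_{2}^{\nu}}\,\langle g,a_{n}\rangle_{\mathcal{L}_{2}^{\nu}}}{\sigma_{n}}, \qquad f,g\in\mathcal{H}. \tag{3.9} \]
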
Now we are ready to present the main result of this section, which quantifies the convergence properties of \(m^{\lambda}_{A}(\,\cdot \,;\nu )\) as \(\lambda \to 0\) for a fixed measure \(\nu \). Recall the notation (3.5). Let \(P_{\overline{\mathcal{H}}}\) denote the orthogonal projection in \(\mathcal{L}_{2}^{\nu}\) onto \(\overline{\mathcal{H}}\), the closure of ℋ in \(\mathcal{L}_{2}^{\nu}\). Then for any \(f\in \mathcal{L}_{2}^{\nu}\),
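
\[ P_{\overline{\mathcal{H}}}f = \sum_{n\in J} \langle f,a_{n}\rangle_{\mathcal{L}_{2}^{\nu}}\,a_{n}, \]
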
since \((a_{n})_{n\in J}\) is an orthonormal system in \(\mathcal{L}_{2}^{\nu}\).

Theorem 3.5

Assume that the kernel \(k\) satisfies Assumption 3.2, \(\nu (\,\cdot \,,\mathcal{X})\) is a nondegenerate Borel measure, and that \(m_{A}(\,\cdot \,;\nu )\in \mathcal{L}_{2}^{\nu}\) (for instance when \(A\) is bounded and measurable). Then for any \(\lambda >0\),
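
\[ \big\| P_{\overline{\mathcal{H}}}m_{A}(\,\cdot\,;\nu)-m_{A}^{\lambda}(\,\cdot\,;\nu) \big\|_{\mathcal{L}_{2}^{\nu}}^{2} = \sum_{n\in J} \Big(\frac{\lambda}{\sigma_{n}+\lambda}\Big)^{2} \langle m_{A}(\,\cdot\,;\nu),a_{n}\rangle_{\mathcal{L}_{2}^{\nu}}^{2}. \tag{3.10} \]
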
In particular, \(\| P_{\overline{\mathcal{H}}}m_{A}(\,\cdot \,;\nu )-m_{A}^{\lambda}( \,\cdot \,;\nu ) \|_{\mathcal{L}_{2}^{\nu}}\rightarrow 0\) as \(\lambda \searrow 0\). If we have in addition \(P_{ \overline{\mathcal{H}}}m_{A}(\,\cdot \,;\nu )\in \mathcal{H}\), then
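
\[ \big\| P_{\overline{\mathcal{H}}}m_{A}(\,\cdot\,;\nu)-m_{A}^{\lambda}(\,\cdot\,;\nu) \big\|_{\mathcal{H}}^{2} = \sum_{n\in J} \Big(\frac{\lambda}{\sigma_{n}+\lambda}\Big)^{2} \frac{\langle m_{A}(\,\cdot\,;\nu),a_{n}\rangle_{\mathcal{L}_{2}^{\nu}}^{2}}{\sigma_{n}}, \tag{3.11} \]
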
and thus \(\| P_{\overline{\mathcal{H}}}m_{A}(\,\cdot \,;\nu )-m_{A}^{\lambda }( \,\cdot \,;\nu ) \|_{\mathcal{H}}\rightarrow 0\) for \(\lambda \searrow 0\).
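In the eigenbasis of Proposition 3.4, the right-hand side of Proposition 3.3 equals \(T^{\nu}m_{A}\), so \(m^{\lambda}_{A}\) has coefficients \(\frac{\sigma_{n}}{\sigma_{n}+\lambda}\langle m_{A},a_{n}\rangle_{\mathcal{L}_{2}^{\nu}}\), and the squared \(\mathcal{L}_{2}^{\nu}\) error is \(\sum_{n\in J}(\lambda/(\sigma_{n}+\lambda))^{2}\langle m_{A},a_{n}\rangle_{\mathcal{L}_{2}^{\nu}}^{2}\). The sketch evaluates this spectral filter for a hypothetical eigenvalue decay \(\sigma_{n}=n^{-2}\) and hypothetical coefficients \(\langle m_{A},a_{n}\rangle=1/n\), truncated at \(10^{5}\) terms.

```python
import numpy as np

def l2_error_sq(lam, sigma, coef):
    """Squared L2(nu) distance between P m_A and m_A^lambda in the eigenbasis (a_n):
    sum_n (lam / (sigma_n + lam))^2 <m_A, a_n>^2."""
    return np.sum((lam / (sigma + lam)) ** 2 * coef ** 2)

n = np.arange(1, 100_001, dtype=float)
sigma = n ** -2.0           # hypothetical eigenvalue decay sigma_n = n^(-2)
coef = 1.0 / n              # hypothetical coefficients <m_A, a_n> = 1/n
lams = 10.0 ** -np.arange(1, 7)
errs = np.array([l2_error_sq(lam, sigma, coef) for lam in lams])
print(errs)
```

Each term increases with \(\lambda\), so the error decreases monotonically as \(\lambda\searrow 0\), but without a rate in general. Here \(\sum_{n}\langle m_{A},a_{n}\rangle^{2}/\sigma_{n}^{\theta}=\sum_{n}n^{2\theta-2}\) is finite only for \(\theta<1/2\), which is the kind of condition under which Corollary 3.6 below yields a rate.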

Theorem 3.5 establishes convergence of \(m_{A}^{\lambda}(\,\cdot \,;\nu )\) as \(\lambda \to 0\), but without a rate. Its proof is placed in Sect. 7. Additional assumptions are needed to guarantee a convergence rate. This is done in the following corollary.

Corollary 3.6

Suppose that the conditions of Theorem 3.5 are satisfied and that, moreover, for some \(\theta \in (0,1]\), we have
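
\[ \sum_{n\in J} \frac{\langle m_{A}(\,\cdot\,;\nu),a_{n}\rangle_{\mathcal{L}_{2}^{\nu}}^{2}}{\sigma_{n}^{\theta}} < \infty. \tag{3.12} \]
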
Then
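
\[ \big\| P_{\overline{\mathcal{H}}}m_{A}-m_{A}^{\lambda} \big\|_{\mathcal{L}_{2}^{\nu}}^{2} \le \Big(1-\frac{\theta}{2}\Big)^{2} \Big(\frac{\lambda\theta}{2-\theta}\Big)^{\theta} \sum_{n\in J} \frac{\langle m_{A},a_{n}\rangle_{\mathcal{L}_{2}^{\nu}}^{2}}{\sigma_{n}^{\theta}}. \tag{3.13} \]
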
In particular, if \(\theta =1\), then \(P_{\overline{\mathcal{H}}}m_{A} \in \mathcal{H}\) and we get
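
\[ \big\| P_{\overline{\mathcal{H}}}m_{A}-m_{A}^{\lambda} \big\|_{\mathcal{L}_{2}^{\nu}}^{2} \le \frac{\lambda}{4}\,\big\| P_{\overline{\mathcal{H}}}m_{A} \big\|_{\mathcal{H}}^{2}. \tag{3.14} \]
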
Proof

Inequality (3.13) follows from (3.10), (3.12) and the fact that the maximum of the function \(x\mapsto \lambda ^{2}x^{\theta}/(x+\lambda )^{2}\), \(x>0\), is equal to \({(1-\theta /2) ^{2} (\lambda \theta /(2-\theta ) ) ^{\theta}} \). Inequality (3.14) follows from (3.8), (3.9) and (3.13). □
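The elementary maximum used in the proof can be double-checked numerically. The sketch evaluates \(x\mapsto\lambda^{2}x^{\theta}/(x+\lambda)^{2}\) on a fine logarithmic grid (the maximiser is \(x^{*}=\lambda\theta/(2-\theta)\)) and compares the grid maximum with the closed-form value \((1-\theta/2)^{2}(\lambda\theta/(2-\theta))^{\theta}\) for a few values of \(\theta\); the choice \(\lambda=10^{-2}\) is arbitrary.

```python
import numpy as np

def g(x, lam, theta):
    """The function x -> lam^2 x^theta / (x + lam)^2 from the proof above."""
    return lam ** 2 * x ** theta / (x + lam) ** 2

def claimed_max(lam, theta):
    return (1.0 - theta / 2.0) ** 2 * (lam * theta / (2.0 - theta)) ** theta

lam = 1e-2
x = np.logspace(-8, 2, 200_001)          # fine logarithmic grid on (0, 100]
checks = []
for theta in (0.25, 0.5, 1.0):
    checks.append((g(x, lam, theta).max(), claimed_max(lam, theta)))
    print(theta, *checks[-1])
```

For \(\theta=1\), the maximum is \(\lambda/4\), attained at \(x=\lambda\).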

Remark 3.7

If the operator \(T^{\nu}\) defined in (3.6) is injective, that is, \(T^{\nu}f = 0\) for \(f\in \mathcal{L}_{2}^{\nu}\) implies \(f = 0\) \(\nu \)-a.s., then \(P_{\overline{\mathcal{H}}}=I_{\mathcal{L}_{2}^{\nu}}\). In this case, \(J=\mathbb{N}\) and Theorem 3.5 and Corollary 3.6 quantify the convergence to the true conditional expectation. A sufficient condition for \(T^{\nu}\) to be injective is that the kernel \(k\) is integrally strictly positive definite (ispd) in the sense that

\[ \int_{\mathcal{X}}\int_{\mathcal{X}} k(x,y)\,\mu(dx)\,\mu(dy) > 0 \]

for all nonzero finite signed Borel measures \(\mu \) defined on \(\mathcal{X}\). Indeed, for any \(f\in \mathcal{L}_{2}^{\nu}\), we may define a signed Borel measure \(\mu _{f}(B):=\int _{B}f(x)\,\nu (dx,\mathcal{X})\), \(B\in \mathcal{B}(\mathcal{X})\), which is finite since, by the Cauchy–Schwarz inequality, \(\vert \mu _{f}(B) \vert \leq \int |f(x)|\,\nu (dx,\mathcal{X}) \leq \big(\int f^{2}(x)\,\nu (dx,\mathcal{X})\big)^{1/2}<\infty \). Hence if \(k\) is an ispd kernel, then \(T^{\nu }f = 0\) implies

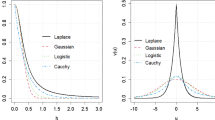

which in turn implies \(\mu _{f}=0\), i.e., \(f=0\) \(\nu \)-a.s. Furthermore, it should be noted that any ispd kernel is strictly positive definite in the usual sense, but the converse is not true. Examples of ispd kernels are Gaussian kernels, Laplace kernels and many more. For details on ispd kernels, we refer to Sriperumbudur et al. [24].
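For a discrete signed measure \(\mu =\sum _{i}c_{i}\delta _{x_{i}}\) with distinct points \(x_{i}\), the double integral \(\int \int k(x,y)\,\mu (dx)\,\mu (dy)\) reduces to the quadratic form \(c^{\top}Kc\) with Gram matrix \(K=(k(x_{i},x_{j}))_{i,j}\), so on such measures the ispd property is exactly strict positive definiteness in the usual sense. The Python sketch below checks this numerically for a Gaussian and a Laplace kernel in one dimension. The kernel parametrisations, bandwidths and points are illustrative assumptions, and a finite check of this kind says nothing about general (non-discrete) measures.

```python
import numpy as np

def gaussian_kernel(x, y, var=0.1):
    """Gaussian kernel exp(-|x - y|^2 / (2 var)); this parametrisation is an assumption."""
    return np.exp(-((x[:, None] - y[None, :]) ** 2) / (2.0 * var))

def laplace_kernel(x, y, scale=0.3):
    """Laplace kernel exp(-|x - y| / scale)."""
    return np.exp(-np.abs(x[:, None] - y[None, :]) / scale)

def double_integral(kernel, points, weights):
    """Integral of k against mu x mu for the signed measure mu = sum_i weights_i delta_{points_i}."""
    return weights @ kernel(points, points) @ weights

rng = np.random.default_rng(0)
points = np.linspace(0.0, 3.0, 8)          # distinct support points
results = {}
for kernel in (gaussian_kernel, laplace_kernel):
    min_eig = np.linalg.eigvalsh(kernel(points, points)).min()
    # Random non-zero signed weights (some of them negative).
    quad_forms = [double_integral(kernel, points, rng.standard_normal(points.size))
                  for _ in range(100)]
    results[kernel.__name__] = (min_eig, min(quad_forms))
    print(kernel.__name__, "smallest Gram eigenvalue:", min_eig,
          "smallest quadratic form:", min(quad_forms))
```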

In summary, we have shown in this section that, under the conditions of Theorem 3.5 and Remark 3.7, \(m^{\lambda}_{A}(\,\cdot \,;\nu )\) converges, at least in the \({\mathcal{L}_{2}^{\nu}}\)-sense, to the true conditional expectation \(m_{A}(\,\cdot \,;\nu )\) as \({\lambda \to 0}\). This makes the heuristic discussion around (1.10) and (1.11) in Sect. 1 more rigorous.

Remark 3.8

Note that the measure \(\widehat{\mu}_{t}\) in the solution of (2.4)–(2.6) depends on \(\lambda \), so that in fact \(\widehat{\mu}_{t}=\widehat{\mu}_{t}^{\lambda}\). Therefore, even when \(m^{\lambda}_{A}(\,\cdot \,;\nu )\to m_{A}(\,\cdot \,;\nu )\) for fixed \(\nu \) and \({\lambda \searrow 0}\), the question whether \(m^{\lambda}_{A_{i}}(\,\cdot \,;\widehat{\mu}_{t}^{\lambda})\) converges in some sense remains open. We believe that this question is intimately linked to the existence of a solution to (2.2), (2.3). As already explained, the latter is an open problem, which we consider beyond the scope of this paper. However, loosely speaking, if that system does have a solution (in some sense) with solution measure \(\mu _{t}\), say, it is natural to expect that for a suitable “rich enough” RKHS, we obtain \(m^{\lambda}_{A_{i}}(\,\cdot \,;\mu _{t})\to m_{A_{i}}(\,\cdot \,;\mu _{t})\) (the true conditional expectation) as \(\lambda \searrow 0\).

4 Numerical algorithm

Let us now describe in detail our numerical algorithm to construct solutions to (1.8). We begin by discussing an efficient way of calculating \(m^{\lambda}_{A}\).

4.1 Estimation of the conditional expectation

Let us recall that in order to solve the particle system (2.7)–(2.9), we need to compute

for \(t\) belonging to a certain partition of \([0,T]\) and fixed large \(N\in \mathbb{N}\); here \(A=A_{1}\) or \(A=A_{2}\). It follows from the representer theorem for RKHSs in Schölkopf et al. [23, Theorem 1] that \(m^{\lambda}_{A}\) has the representation

for some \(\alpha = (\alpha _{1}, \ldots , \alpha _{N})^{\top }\in \mathbb{R}^{N}\). Note that the optimal \(\alpha \) can be calculated explicitly by plugging the representation (4.2) into the minimisation problem (4.1) in place of \(f\) and minimising over \(\alpha \). However, computing the optimal \(\alpha \) directly requires \(O(N^{3})\) operations, which is prohibitively expensive since the number \(N\) of particles is going to be very large. Furthermore, even evaluating (4.2) at \(X_{t}^{N,n}\), \(n=1,\ldots , N\), for a given \(\alpha \in \mathbb{R}^{N}\) requires \(O(N^{2})\) operations, which is infeasible for the values of \(N\) we have in mind.
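To make the cost of the direct approach concrete, here is a minimal Python sketch of the full representer solution. It assumes that (4.1) is the regularised least-squares problem of minimising \(\frac{1}{N}\sum _{n}(A(Y_{t}^{N,n})-f(X_{t}^{N,n}))^{2}+\lambda \|f\|_{\mathcal{H}}^{2}\), which is consistent with the matrix \(K^{\top}K+N\lambda R\) appearing in Remark 4.2. Plugging in (4.2) gives \(\frac{1}{N}\|G-\mathbf{K}\alpha \|^{2}+\lambda \alpha ^{\top}\mathbf{K}\alpha \) with the full \(N\times N\) Gram matrix \(\mathbf{K}\), which is minimised by \(\alpha =(\mathbf{K}+N\lambda I)^{-1}G\). The Gaussian kernel form, the synthetic data and all function names are illustrative assumptions.

```python
import numpy as np

def gaussian_gram(x, z, var=0.1):
    """Matrix (k(x_n, z_j))_{n,j} for the Gaussian kernel exp(-|x - z|^2 / (2 var)) (assumed form)."""
    return np.exp(-((x[:, None] - z[None, :]) ** 2) / (2.0 * var))

def full_representer_fit(x, g, lam, var=0.1):
    """Minimise (1/N) sum_n (g_n - f(x_n))^2 + lam ||f||_H^2 over f = sum_i alpha_i k(x_i, .).

    The minimiser is alpha = (K + N lam I)^{-1} g.  The linear solve costs O(N^3)
    operations, and evaluating the fit at all N particles costs O(N^2).
    """
    n = x.size
    gram = gaussian_gram(x, x, var)                          # N x N, O(N^2) memory
    alpha = np.linalg.solve(gram + n * lam * np.eye(n), g)   # O(N^3) operations
    return alpha, gram

def objective(alpha, gram, g, lam):
    """Empirical objective (1/N)||g - K alpha||^2 + lam alpha^T K alpha."""
    resid = g - gram @ alpha
    return resid @ resid / g.size + lam * alpha @ gram @ alpha

rng = np.random.default_rng(1)
n, lam = 400, 1e-4
x = rng.uniform(-2.0, 2.0, n)
g = np.sin(x) + 0.05 * rng.standard_normal(n)   # synthetic data with E[g | x] = sin(x)
alpha, gram = full_representer_fit(x, g, lam)

grid = np.linspace(-1.5, 1.5, 61)
fit_on_grid = gaussian_gram(grid, x) @ alpha
print("max error on grid:", np.abs(fit_on_grid - np.sin(grid)).max())
```

With \(N\) in the millions, neither the \(N\times N\) Gram matrix nor the cubic solve is affordable, which motivates the reduction below.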

To develop an efficient algorithm, let us note that many particles \(X_{t}^{N,i}\) – and as a consequence the implied basis functions \(k(X_{t}^{N,i}, \,\cdot \,)\) – will be close to each other. Therefore we can considerably reduce the computational cost by only using \({L\ll N}\) rather than \(N\) basis functions as suggested in (4.2). More precisely, we choose \(Z^{1}, \ldots , Z^{L}\) among \(X_{t}^{N,1}, \ldots , X_{t}^{N,N}\) – e.g. by random choice or by taking every \(\frac{N}{L}\)th point among the ordered sequence \(X_{t}^{N,(1)}, \ldots , X_{t}^{N,(N)}\) when \(X\) is one-dimensional – and approximate

where \(\beta = (\beta _{1}, \ldots , \beta _{L})^{\top }\in \mathbb{R}^{L}\). It is easy to see that

where \(R:= (k(Z^{j},Z^{\ell}))_{j,\ell =1,\ldots ,L}\) is an \(L\times L\) matrix. Thus recalling (4.1), we see that we have to solve

where \(G:=(A(Y_{t}^{N,n}))_{n=1,\ldots ,N}\) and \(K := (k(Z^{j},X_{t}^{N,n}))_{n=1,\ldots , N, j=1,\ldots ,L}\) is an \((N\times L)\)-matrix. Differentiating with respect to \(\beta \), we obtain that the optimal value \(\widehat{\beta}=\widehat{\beta}((X_{t}^{N}),(Y_{t}^{N}))\) satisfies

and we approximate the expectation as

Remark 4.1

The method of choosing basis points \(Z^{1}, \ldots , Z^{L}\) can be seen as a systematic and adaptive approach to choosing basis functions \(k(Z^{j}, \,\cdot \,)\), \(j=1, \ldots , L\), in a global regression method. We note that the technique suggested in Guyon and Henry-Labordère [13], namely evaluating the conditional expectation only at the points of a grid \(G_{f,t}\) and interpolating between grid points with splines, is motivated by similar concerns regarding the explosion of computational costs.
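The reduced estimator can be sketched in a few lines of Python. Assuming, as above, that (4.1) is the regularised least-squares problem \(\frac{1}{N}\sum _{n}(A(Y_{t}^{N,n})-f(X_{t}^{N,n}))^{2}+\lambda \|f\|_{\mathcal{H}}^{2}\), plugging \(f=\sum _{j}\beta _{j}k(Z^{j},\,\cdot \,)\) into it gives \(\frac{1}{N}\|G-K\beta \|^{2}+\lambda \beta ^{\top}R\beta \), whose gradient vanishes when \((K^{\top}K+N\lambda R)\beta =K^{\top}G\). The Gaussian kernel parametrisation, the choice of \(Z^{j}\) as mid-quantile order statistics of one-dimensional particles, the least-squares solve (used because the system becomes nearly singular when particles cluster) and the synthetic test data are our own illustrative assumptions.

```python
import numpy as np

def gaussian_kernel_matrix(x, z, var=0.1):
    """(k(z_j, x_n))_{n, j} for the Gaussian kernel exp(-|x - z|^2 / (2 var)) (assumed form)."""
    return np.exp(-((x[:, None] - z[None, :]) ** 2) / (2.0 * var))

def choose_basis_points(x, n_basis):
    """Pick L basis points among 1-d particles: roughly every (N/L)-th order statistic."""
    x_sorted = np.sort(x)
    idx = ((np.arange(n_basis) + 0.5) * x.size / n_basis).astype(int)
    return x_sorted[idx]

def fit_conditional_expectation(x, a_of_y, lam, n_basis, var=0.1):
    """Return a callable approximating x -> E[A(Y) | X = x] via the reduced L x L system.

    Cost: O(N L) for K, O(L^2) for R, O(N L^2) for K^T K, O(L^3) for the solve.
    """
    n = x.size
    z = choose_basis_points(x, n_basis)
    K = gaussian_kernel_matrix(x, z, var)      # N x L
    R = gaussian_kernel_matrix(z, z, var)      # L x L
    beta = np.linalg.lstsq(K.T @ K + n * lam * R, K.T @ a_of_y, rcond=None)[0]
    return lambda x_new: gaussian_kernel_matrix(np.atleast_1d(x_new), z, var) @ beta

rng = np.random.default_rng(2)
x = rng.uniform(-2.0, 2.0, 4000)
y = np.sin(x) + 0.1 * rng.standard_normal(x.size)   # synthetic: E[Y | X = x] = sin(x)
m_hat = fit_conditional_expectation(x, y, lam=1e-5, n_basis=20)
grid = np.linspace(-1.5, 1.5, 61)
print("max error on grid:", np.abs(m_hat(grid) - np.sin(grid)).max())
```

Evaluating the returned callable at all \(N\) particles costs \(O(NL)\) operations, so the bottleneck remains the formation of \(K^{\top}K\).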

Remark 4.2

Let us see how many operations we need to calculate \(\widehat{\beta}\), taking into account that \(L\ll N\). We need \(O(NL)\) to calculate \(K\), \(O(L^{2})\) to calculate \(R\), \(O(N L^{2})\) to calculate \(K^{\top }K\) (this is the bottleneck), \(O(L^{3})\) to invert \(K^{\top }K+N\lambda R\) and \(O(NL)\) to calculate \(K^{\top }G\) and solve (4.3). Thus in total, we need \(O(N L^{2})\) operations.
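For orientation, here is the order-of-magnitude arithmetic behind Remark 4.2 for the values \(N=10^{6}\) and \(L=100\) used in Sect. 5. Constants hidden in the \(O\)-notation are ignored, so these are rough operation counts rather than timings:

```latex
\begin{aligned}
&\text{direct solve for } \alpha \text{ in (4.2):} && N^{3} = (10^{6})^{3} = 10^{18},\\
&\text{evaluating (4.2) at all particles:} && N^{2} = 10^{12},\\
&\text{reduced system, forming } K^{\top}K\text{:} && N L^{2} = 10^{6}\cdot 10^{4} = 10^{10},\\
&\text{inverting } K^{\top}K+N\lambda R\text{:} && L^{3} = 10^{6},\\
&\text{whole Euler scheme with } M=500\text{:} && M N L^{2} = 5\cdot 10^{12}.
\end{aligned}
```

Per time step, the reduced system is thus cheaper than the direct solve by a factor of about \(N^{2}/L^{2}=10^{8}\).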

4.2 Solving the regularised McKean–Vlasov equation

With the function \(\widehat{m}_{A}^{\lambda}\) in hand, we now consider the Euler scheme for the particle system (2.7)–(2.9). We fix a time interval \([0,T]\) and the number \(M\) of time steps, and for simplicity consider a uniform time increment \(\delta :=T/M\). For \(n=1, \ldots , N\) and \(i=0,\ldots , M-1\), let the pairs \((\Delta W_{i}^{X,n},\Delta W_{i}^{Y,n})\) be independent copies of \((W^{X}_{(i+1) \delta} - W^{X}_{i\delta},\,W^{Y}_{(i+1) \delta} - W^{Y}_{i \delta})\). Within each pair, the two increments need not be independent: for stochastic volatility models, the Brownian motions driving the stock price and the variance process are usually correlated. We now define \(\widetilde{X}_{0}^{n}=X_{0}^{n}\), \(\widetilde{Y}_{0}^{n}=Y_{0}^{n}\) and for \(i=0,\ldots , M-1\),

where \(\widetilde{\mu}_{i}^{N}=\frac{1}{N}\sum _{n=1}^{N}\delta _{( \widetilde{X}_{i}^{n},\widetilde{Y}_{i}^{n})}\). Thus at each time step of the discretisation (4.5), (4.6), we need to compute approximations of the conditional expectations \(\widehat{m}^{\lambda}_{A_{r}}(\widetilde{X}^{n}_{i}; \widetilde{\mu}^{N}_{i})\), \({r=1,2}\). This is done using the algorithm discussed in Sect. 4.1 and takes \(O(NL^{2})\) operations; see Remark 4.2. Hence the total number of operations needed to implement (4.5), (4.6) is \(O(MNL^{2})\).

5 Numerical examples and applications to local stochastic volatility models

As the main application of the regularisation approach presented above, we consider the problem of calibrating local stochastic volatility models to market data. Fix a time period \(T>0\). To simplify the calculations, we suppose that the interest rate is \(r=0\). Let \(C(t,K)\), \(t\in [0,T]\), \(K\ge 0\), be the price at time 0 of a European call option with strike \(K\) and maturity \(t\) on a non-dividend-paying stock. We assume that the market prices \((C(t,K))_{t\in [0,T], K\ge 0}\) are given and satisfy the following conditions: (i) \(C\) is continuous and increasing in \(t\) and twice continuously differentiable in \(x\), (ii) \(\partial _{xx}C(t,x)>0\), (iii) \(C(t,x)\to 0\) as \(x\to \infty \) for any \(t\ge 0\) and \(C(t,0)={\mathrm{const}}\). It follows from Lowther [19, Theorem 1.3 and Sect. 2.1] and Dupire [8] that under these conditions, there exists a diffusion process \((S_{t})_{t\in [0,T]}\) which perfectly replicates the given call option prices, that is, \(\mathbb{E}[ (S_{t}-K)^{+}]=C(t,K)\). Furthermore, \(S\) solves the stochastic differential equation

where \(W\) is a Brownian motion and \(\sigma _{\text{Dup}}\) is the Dupire local volatility given by

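In the zero-rate setting of this section, Dupire's formula takes the standard form \(\sigma _{\text{Dup}}^{2}(t,x)=2\,\partial _{t}C(t,x)/(x^{2}\,\partial _{xx}C(t,x))\); we assume this is the form meant by (5.2). The Python sketch below evaluates it with central finite differences on a call-price function and, as a sanity check, feeds in Black–Scholes prices with \(\sigma =0.3\) and \(S_{0}=1\), for which the local volatility must be flat at 0.3. The step sizes and evaluation points are illustrative choices.

```python
import math

def bs_call(t, k, s0=1.0, sigma=0.3):
    """Black-Scholes call price with zero interest rate."""
    if t <= 0.0:
        return max(s0 - k, 0.0)
    sd = sigma * math.sqrt(t)
    d1 = (math.log(s0 / k) + 0.5 * sd**2) / sd
    d2 = d1 - sd
    ncdf = lambda z: 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))
    return s0 * ncdf(d1) - k * ncdf(d2)

def dupire_local_vol(call, t, k, h_t=1e-4, h_k=1e-3):
    """sigma_Dup(t, k) = sqrt(2 dC/dt / (k^2 d2C/dk2)) via central differences (r = 0)."""
    dc_dt = (call(t + h_t, k) - call(t - h_t, k)) / (2.0 * h_t)
    d2c_dk2 = (call(t, k + h_k) - 2.0 * call(t, k) + call(t, k - h_k)) / h_k**2
    return math.sqrt(2.0 * dc_dt / (k**2 * d2c_dk2))

for t, k in [(0.5, 0.9), (1.0, 1.0), (2.0, 1.2)]:
    print(t, k, dupire_local_vol(bs_call, t, k))   # should be close to 0.3
```

For market or Heston prices the second strike derivative is small in the wings, so in practice the finite differences are usually taken on a smoothed price or implied-volatility surface.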
We study local stochastic volatility (LSV) models. That is, we assume that the stock price \(X\) follows the dynamics

where \(W^{X}\) is a Brownian motion and \((Y_{t})_{t \in [0,T]}\) is a strictly positive variance process, both adapted to some filtration \(( \mathcal{F}_{t} )_{t\geq 0}\). If the function \(\sigma _{\text{LV}}\) is given by

and \(\int _{0}^{T} \mathbb{E}[ Y_{t}\sigma _{\text{LV}}(t,X_{t})^{2} X_{t}^{2} ] \,dt<\infty \), then the one-dimensional marginal distributions of \(X\) coincide with those of \(S\) (see Gyöngy [14, Theorem 4.6], Brunick and Shreve [4, Corollary 3.7]). Thus

In particular, the choice \(Y\equiv 1\) recovers the local volatility model. If \(Y\) is a diffusion process,

where \(W^{Y}\) is a Brownian motion possibly correlated with \(W^{X}\), we see that the model (5.3)–(5.5) is a special case of the general McKean–Vlasov equation (2.2), (2.3). To solve (5.3)–(5.5), we implement the algorithm described in Sect. 4; see (4.5), (4.6) together with (4.4). We validate our results by two different checks. First, we verify that the one-dimensional distribution of \(\widetilde{X}^{1}_{M}\) is close to the correct marginal distribution \(\operatorname{Law}(X_{T})=\operatorname{Law}(S_{T})\). To do this, we compare the call option prices obtained by the algorithm (that is, \(N^{-1} \sum _{n=1}^{N}(\widetilde{X}_{M}^{n}-K)^{+}\)) with the given prices \(C(T,K)\) for various \(T>0\) and \(K>0\). If the algorithm is correct and \(\widetilde{\mu}^{N}_{M}\approx \operatorname{Law}(X_{T},Y_{T})\), then according to (5.4), one must have

On the other hand, if the algorithm is not correct and \(\operatorname{Law}(X_{T},Y_{T})\) is very different from \(\widetilde{\mu}^{N}_{M}\), then (5.6) will in general fail.

Second, we also check the multivariate distribution of \((\widetilde{X}_{i})_{i=0,\dots ,M}\). Recall that for any \(t\in [0,T]\), we have \(\operatorname{Law}(X_{t})=\operatorname{Law}(S_{t})\). We want to make sure that the dynamics of the process \(\widetilde{X}\) are different from those of the local volatility process \(S\). As a test case, we compare prices of options on the quadratic variation of the logarithm of the price. More precisely, for each \(K>0\), we compare European options on quadratic variation,

and verify that these two curves are different. Here, \((S^{n}_{i})_{i=0,\dots ,M}\), \(n=1,\dots ,N\), are Euler approximations of (5.1). We also check that the prices of European options on quadratic variation converge as \(N \to \infty \).
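The quadratic-variation check can be sketched as follows. On the time grid, \(\langle \log X\rangle _{T}\) is approximated by the realised variance \(\sum _{i}(\log \widetilde{X}_{i+1}-\log \widetilde{X}_{i})^{2}\), and the option price \(\mathbb{E}[(\langle \log X\rangle _{T}-K)^{+}]\) by an average over particles. The Python sketch applies this to exact Black–Scholes paths with \(\sigma =0.3\), for which \(\langle \log S\rangle _{T}=\sigma ^{2}T\), so the estimates should be close to \((\sigma ^{2}T-K)^{+}\). The numbers of paths and time steps, the strikes and the function names are illustrative assumptions.

```python
import numpy as np

def realised_variance(paths):
    """Sum of squared log-increments along each row; approximates <log X>_T."""
    log_increments = np.diff(np.log(paths), axis=1)
    return (log_increments**2).sum(axis=1)

def qv_option_prices(paths, strikes):
    """Particle estimate of E[(<log X>_T - K)^+] for each strike K."""
    rv = realised_variance(paths)
    return np.array([np.maximum(rv - k, 0.0).mean() for k in strikes])

# Exact Black-Scholes paths (zero rate): log S_{i+1} - log S_i = -sigma^2 dt / 2 + sigma dW.
rng = np.random.default_rng(3)
sigma, T, M, N = 0.3, 1.0, 500, 1000
dt = T / M
log_incr = -0.5 * sigma**2 * dt + sigma * np.sqrt(dt) * rng.standard_normal((N, M))
paths = np.exp(np.concatenate([np.zeros((N, 1)), np.cumsum(log_incr, axis=1)], axis=1))

strikes = np.array([0.0, 0.05, 0.08, 0.1])
print(qv_option_prices(paths, strikes))
print(np.maximum(sigma**2 * T - strikes, 0.0))   # Black-Scholes reference (sigma^2 T - K)^+
```

Applied to the particles of the regularised model instead of Black–Scholes paths, the same estimator gives the curves that are compared with \((\sigma ^{2}T-K)^{+}\).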

We consider two different ways to generate market prices \(C(T,K)\). First, we assume that the stock follows the Black–Scholes (BS) model, that is, we assume that \(\sigma _{\text{Dup}}\equiv {\mathrm{const}} =0.3\) and \(S_{0}=1\). Second, we consider a stochastic volatility model for the market, that is, we set \(C(T,K):=\mathbb{E}[ (\overline{S}_{T}-K)^{+} ]\), where \((\overline{S}_{t})_{t\geq 0}\) follows the Heston model

with the following parameters: \(\kappa = 2.19\), \(\theta = 0.17023\), \(\xi = 1.04\) and correlation \(\rho = -0.83\) between the driving Brownian motions \(W\) and \(B\), with initial values \(\overline{S}_{0}=1\), \(v_{0}=0.0045\); cf. similar parameter choices in Lemaire et al. [18, Table 1]. We compute option prices based on (5.7), (5.8) with the COS method; see Fang and Oosterlee [9]. We then calculate \(\sigma _{\text{Dup}}\) from \(C(T,K)\) using (5.2).
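A minimal Python sketch of this pricing step is given below. It uses a standard numerically stable form of the Heston characteristic function of \(\log (\overline{S}_{T}/\overline{S}_{0})\) with zero rate, and the COS expansion of Fang and Oosterlee [9]: the density of \(y=\log (\overline{S}_{T}/K)\) is expanded in a cosine series on a truncation interval \([a,b]\) containing 0, and the call payoff \(K(e^{y}-1)^{+}\) is integrated against it term by term. The symmetric truncation interval, the number of terms and the function names are our own assumptions. As a check, the same routine is applied to the Black–Scholes characteristic function and to a Heston model with negligible volatility of variance, both of which should reproduce the Black–Scholes price.

```python
import numpy as np

def heston_cf(u, T, kappa, theta, xi, rho, v0):
    """Characteristic function of log(S_T / S_0) in the Heston model with zero rate."""
    iu = 1j * u
    beta = kappa - rho * xi * iu
    d = np.sqrt(beta**2 + xi**2 * (u**2 + iu))
    g = (beta - d) / (beta + d)
    e = np.exp(-d * T)
    C = kappa * theta / xi**2 * ((beta - d) * T - 2.0 * np.log((1.0 - g * e) / (1.0 - g)))
    D = (beta - d) / xi**2 * (1.0 - e) / (1.0 - g * e)
    return np.exp(C + D * v0)

def cos_call(cf, s0, strike, half_width, n_terms=256):
    """COS approximation of E[(S_T - K)^+] from the characteristic function of log(S_T / S_0).

    The density of y = log(S_T / K) is expanded on [a, b] = [x - half_width, x + half_width],
    x = log(s0 / K); this requires a < 0 < b.
    """
    x = np.log(s0 / strike)
    a, b = x - half_width, x + half_width
    u = np.arange(n_terms) * np.pi / (b - a)
    # chi_k = int_0^b e^y cos(u_k (y - a)) dy,  psi_k = int_0^b cos(u_k (y - a)) dy
    chi = (np.cos(u * (b - a)) * np.exp(b) - np.cos(u * a)
           + u * np.sin(u * (b - a)) * np.exp(b) + u * np.sin(u * a)) / (1.0 + u**2)
    psi = np.empty(n_terms)
    psi[0] = b
    psi[1:] = (np.sin(u[1:] * (b - a)) + np.sin(u[1:] * a)) / u[1:]
    weights = 2.0 / (b - a) * strike * (chi - psi)
    terms = np.real(cf(u) * np.exp(1j * u * (x - a))) * weights
    terms[0] *= 0.5                      # the first term of the cosine series is halved
    return terms.sum()

# Parameters of the data-generating Heston model quoted above.
params = dict(T=1.0, kappa=2.19, theta=0.17023, xi=1.04, rho=-0.83, v0=0.0045)
heston = lambda u: heston_cf(u, **params)
width = 12.0 * np.sqrt(params["theta"])
heston_prices = [cos_call(heston, 1.0, k, width) for k in (0.8, 1.0, 1.2)]
print(heston_prices)
```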

As our baseline stochastic volatility model for \(Y\), we choose a capped-from-below Heston-type model, but with parameters different from those of the data-generating Heston model. Specifically, we set \(b(t,x)=\lambda (\mu -x)\) and \(\sigma (t,x)=\eta \sqrt{x}\) in (5.5), where \(Y_{0}=0.0144\), \(\lambda =1\), \(\mu =0.0144\) and \(\eta =0.5751\). We cap the solution of (5.5) from below at the level \(\varepsilon _{{\mathrm{CIR}}}=10^{-3}\) to avoid the singularity at 0; numerical experiments have shown that such capping is necessary. We take the correlation between \(W^{X}\) and \(W^{Y}\) to be strongly negative, namely −0.9, which makes calibration more difficult. Since the variance process has different parameters from the price-generating stochastic volatility model, a non-trivial local volatility function is required to match the implied volatility. Hence, even though the generating model belongs to the same class, the calibration problem is still non-trivial and involves a singular McKean–Vlasov SDE.

We took ℋ to be the RKHS associated with the Gaussian kernel \(k\) with variance 0.1. We fix the number of time steps as \(M=500\) and take \(\lambda =10^{-9}\), \(L=100\). At each time step of the Euler scheme, we choose \((Z^{j}_{m})_{j=1,\dots , L}\) by the rule that

an approach comparable to the choice of the evaluation grid \(G_{f,t}\) suggested in Guyon and Henry-Labordère [13].

Figure 1 compares the theoretical and the calculated prices (in terms of implied volatilities) in the (a) Black–Scholes and (b)–(d) Heston settings for various strikes and maturities. That is, we first calculate \(C(T,K)\) using the Black–Scholes model (“Black–Scholes setting”) or (5.7), (5.8) (“Heston setting”); then we calculate \(\sigma ^{2}_{\text{Dup}}\) by (5.2); then we calculate \(\widetilde{X}^{n}_{M}\), \(n=1,\ldots ,N\), using the algorithm (4.5), (4.6) with \(H\equiv 0\), \(A_{2}(x)=x\) and

where \(\varepsilon =10^{-3}\) (see also Reisinger and Tsianni [22]); then we calculate \(\widetilde{C}(T,K)\) using (5.6); finally, we convert the prices \(C(T,K)\) and \(\widetilde{C}(T,K)\) into implied volatilities. We note that this additional capping of the function \(F\) is less critical than the capping of the baseline process \(Y\).
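Putting the pieces together, the following Python sketch runs the Euler particle scheme in the Black–Scholes setting (\(\sigma _{\text{Dup}}\equiv 0.3\), \(S_{0}=1\)) with the capped CIR baseline variance described above (\(Y_{0}=\mu =0.0144\), mean reversion 1, \(\eta =0.5751\), correlation −0.9, \(\varepsilon _{{\mathrm{CIR}}}=10^{-3}\)). Since the display defining \(F\) is not reproduced here, we assume that the diffusion coefficient of \(X\) is \(\sigma _{\text{Dup}}(t,x)\,x\sqrt{Y/\max (\widehat{m}(x),\varepsilon )}\), where \(\widehat{m}\) estimates \(\mathbb{E}[Y_{t}\,|\,X_{t}=x]\) (that is, \(A_{2}(x)=x\)) via the reduced system of Sect. 4.1. This capping form, the Gaussian kernel parametrisation, the quantile choice of basis points (in place of the rule (5.9)) and the small values of \(N\), \(M\), \(L\) (chosen so that it runs in seconds) are illustrative assumptions; this is a sketch, not the authors' implementation.

```python
import numpy as np

def kernel(x, z, var=0.1):
    """Gaussian kernel matrix (k(z_j, x_n))_{n,j}; the parametrisation is assumed."""
    return np.exp(-((x[:, None] - z[None, :]) ** 2) / (2.0 * var))

def cond_exp(x, g, lam, n_basis):
    """Reduced kernel ridge estimate of x -> E[g | X = x]: solve (K^T K + N lam R) beta = K^T g."""
    z = np.sort(x)[((np.arange(n_basis) + 0.5) * x.size / n_basis).astype(int)]
    K, R = kernel(x, z), kernel(z, z)
    beta = np.linalg.lstsq(K.T @ K + x.size * lam * R, K.T @ g, rcond=None)[0]
    return lambda x_new: kernel(x_new, z) @ beta

def simulate_lsv(n_particles=2000, n_steps=50, T=1.0, sigma_dup=0.3, rho=-0.9,
                 y0=0.0144, mean_rev=1.0, mu=0.0144, eta=0.5751,
                 eps=1e-3, eps_cir=1e-3, lam=1e-9, n_basis=20, seed=0):
    """Euler particle scheme for the LSV model; returns the particles X_T."""
    rng = np.random.default_rng(seed)
    dt = T / n_steps
    x = np.ones(n_particles)          # X_0 = S_0 = 1
    y = np.full(n_particles, y0)      # Y_0
    for _ in range(n_steps):
        m_hat = cond_exp(x, y, lam, n_basis)(x)                      # E[Y_t | X_t] at the particles
        leverage = sigma_dup * np.sqrt(y / np.maximum(m_hat, eps))   # assumed capping form
        z1 = rng.standard_normal(n_particles)
        z2 = rng.standard_normal(n_particles)
        dw_x = np.sqrt(dt) * z1
        dw_y = np.sqrt(dt) * (rho * z1 + np.sqrt(1.0 - rho**2) * z2)  # correlated increments
        x_new = x + leverage * x * dw_x
        # CIR Euler step for the variance, capped from below at eps_cir.
        y = np.maximum(y + mean_rev * (mu - y) * dt + eta * np.sqrt(y) * dw_y, eps_cir)
        x = x_new
    return x

x_T = simulate_lsv()
atm_call = np.maximum(x_T - 1.0, 0.0).mean()
print("particle ATM call price:", atm_call)   # the Black-Scholes reference is about 0.1192
```

Each step costs \(O(NL^{2})\) operations for the regression, in line with the \(O(MNL^{2})\) total noted in Sect. 4.2.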

We plot in Fig. 1 implied volatilities for a wide range of strikes and maturities. More precisely, we consider all strikes \(K\) such that \({\mathbb{P}[S_{T}< K]}\in [0.05,0.95]\); this excludes only deep in-the-money and deep out-of-the-money options. One can see from Fig. 1 that already for \(N=10^{3}\) trajectories, the identity (5.6) holds up to a small error for all considered strikes and maturities. This error diminishes further as the number of trajectories increases. At \(N=10^{5}\), the true implied volatility curve and the one calculated from our approximation model are almost indistinguishable.
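The conversion of prices into implied volatilities and the strike range can be sketched as follows. Implied volatilities are obtained by inverting the zero-rate Black–Scholes formula in \(\sigma \) by bisection, which works because the call price is strictly increasing in \(\sigma \). In the Black–Scholes setting, \(\log S_{T}\) is normal, so \(\mathbb{P}[S_{T}<K]=p\) holds for \(K=S_{0}\exp (\sigma \sqrt{T}\,\Phi ^{-1}(p)-\sigma ^{2}T/2)\). The bisection bracket and tolerance and the function names are illustrative choices; in the Heston setting the strike range would instead be read off the model distribution.

```python
import math
from statistics import NormalDist

_N = NormalDist()

def bs_call(s0, k, t, sigma):
    """Black-Scholes call price with zero interest rate."""
    sd = sigma * math.sqrt(t)
    d1 = (math.log(s0 / k) + 0.5 * sd**2) / sd
    return s0 * _N.cdf(d1) - k * _N.cdf(d1 - sd)

def implied_vol(price, s0, k, t, lo=1e-6, hi=5.0, tol=1e-12):
    """Invert bs_call in sigma by bisection (the call price is increasing in sigma)."""
    if not max(s0 - k, 0.0) < price < s0:
        raise ValueError("price outside the no-arbitrage bounds")
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if bs_call(s0, k, t, mid) < price:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

def bs_strike_range(s0, t, sigma, p_lo=0.05, p_hi=0.95):
    """Strikes K with P[S_t < K] in [p_lo, p_hi] for lognormal S_t (Black-Scholes, r = 0)."""
    sd = sigma * math.sqrt(t)
    to_strike = lambda p: s0 * math.exp(sd * _N.inv_cdf(p) - 0.5 * sd**2)
    return to_strike(p_lo), to_strike(p_hi)

k_lo, k_hi = bs_strike_range(1.0, 1.0, 0.3)
print(k_lo, k_hi)
print(implied_vol(bs_call(1.0, 1.1, 1.0, 0.3), 1.0, 1.1, 1.0))
```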

We plot the prices of the options on the quadratic variation of the logarithm of the price in Fig. 2. In the Black–Scholes model ((5.1) with \(\sigma _{{\mathrm{Dup}}}\equiv \sigma \)), we have \(\langle \log S\rangle _{T}=\sigma ^{2} T\) and thus \(\mathbb{E}[(\langle \log S\rangle _{T} -K)^{+}]=(\sigma ^{2} T-K)^{+}\). As shown in Fig. 2(a), the prices of the options on the quadratic variation of \(X\) are markedly different. This implies that although the marginal distributions of \(X\) and \(S\) are identical, their dynamics differ considerably. We also see that these curves converge as the number of particles increases. This indicates that the dynamics of \((\widetilde{X}^{n})\) are stable with respect to the number of particles. Options on the quadratic variation of the logarithm of the price in the Heston setting are presented in Fig. 2(b). As expected, the dynamics of \(X\) and \(S\) again differ, and the dynamics of \((\widetilde{X}^{n})\) are again stable.

It is interesting to compare our approach with the algorithms of Guyon and Henry-Labordère [13, 12]. We consider a numerical setup similar to [12, p. 10], taking \(N=10^{6}\) particles to calculate implied volatilities. However, we calibrate our model and calculate the approximation of the conditional expectation using only \(N_{1}=1000\) of these particles. We compare our results in the Black–Scholes (a) and Heston (b) settings against implied volatilities calculated via the Euler method for the local volatility model \(S\). Figure 3 shows close agreement between the results of the two methods.

Fig. 3 Comparison with Guyon and Henry-Labordère [13]: (a) Black–Scholes setting, \(T=1\) year; (b) Heston setting, \(T=1\) year

Remark 5.1

The computational time needed for running our algorithm is comparable with the algorithm of [12], but highly dependent on implementation details in both cases.

Figure 4 shows that not only do the marginal distributions of \(X\) calculated with our method and with [13] agree with each other, but so, as far as options on quadratic variation can reveal, do the distributions of the processes. We also observe that in both settings, the dynamics of \(X\) differ from those of \(S\).

Fig. 4 Comparison with Guyon and Henry-Labordère [13]. Options on quadratic variation: (a) Black–Scholes setting, \(T=1\) year; (b) Heston setting, \(T=1\) year

Now let us discuss the stability of our model as the regularisation parameter \(\lambda \to 0\). We studied the absolute error in the implied volatility of the 1-year ATM call option for various \(\lambda \in [10^{-9},1]\) in the Black–Scholes and Heston settings described above. We used \(N=10^{6}\) trajectories and \(L=100\) at each step according to (5.9), and performed 100 repetitions at each considered value of \(\lambda \). The results are presented in Fig. 5. The vertical bars in Figs. 5–7 indicate the standard deviation of the absolute errors of the implied volatilities. We observe that in both settings, the error initially drops as \(\lambda \) decreases and then stabilises around \(\lambda \approx 10^{-9}\). Therefore, we took \(\lambda =10^{-9}\) for all our calculations. In particular, the error does not blow up as \(\lambda \) becomes very small.

Let us examine how the error in call option prices in (5.6) (and therefore the distance between the laws of the true and approximated solutions) depends on the number \(N\) of trajectories. Recall that Theorem 2.3 guarantees that this error decreases at least as fast as \(N^{-1/4}\) (note the square on the left-hand side of (2.10)). Figure 6 shows how the absolute error in the implied volatility of a 1-year ATM call option decreases as the number of trajectories increases in (a) the Black–Scholes setting and (b) the Heston setting. We took \(\lambda =10^{-9}\), \(L=100\), \(N\in [250, 2^{8}\times 250]\) and performed 100 repetitions at each value of \(N\). We see that the error decreases as \(O(N^{-1/2})\) in both settings, which is faster than the rate guaranteed by the theory.
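An empirical rate such as \(O(N^{-1/2})\) is typically read off as the slope of a straight-line fit of \(\log (\text{error})\) against \(\log N\). A minimal Python sketch, in which synthetic errors following exact power laws stand in for measured ones (they are not the data behind Fig. 6), and in which the particle numbers \(N=250\cdot 2^{j}\), \(j=0,\ldots ,8\), are our reading of the range quoted above:

```python
import numpy as np

def empirical_order(n_values, errors):
    """Least-squares slope of log(error) against log(N); slope s means error ~ C N^s."""
    slope, _intercept = np.polyfit(np.log(n_values), np.log(errors), deg=1)
    return slope

# Particle numbers N = 250 * 2^j, j = 0, ..., 8.
n_values = 250 * 2 ** np.arange(9)
# Synthetic power-law errors, for illustration only.
print(empirical_order(n_values, 0.5 * n_values**-0.5))    # approximately -0.5
print(empirical_order(n_values, 0.5 * n_values**-0.25))   # approximately -0.25
```

A fitted slope near −1/2 is therefore twice the exponent −1/4 that follows from taking the square root of the bound (2.10).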

We collect average errors in implied volatilities of 1-year European call options for different strikes in Table 1. We considered the Heston setting and as above, we used \(\lambda =10^{-9}\), \(L=100\), \(N=10^{5}\).

We also investigate how the error in the implied volatility depends on the number \(L\) of basis functions in the representation (4.4). Recall that the number of operations grows quadratically in \(L\) (it is \(O(MNL^{2})\)), so that large values of \(L\) are very expensive. In Fig. 7, we plot the absolute error in the implied volatility of a 1-year ATM call option as a function of \(L\). We used \(N=10^{6}\) trajectories, \(\lambda =10^{-9}\), \(L\in \{1,\ldots ,100\}\) and performed 100 repetitions for each number of basis functions. We see that as the number of basis functions increases, the error first drops significantly, but then stabilises at \(L\approx 80\).

5.1 On the choice of \((\varepsilon ,\varepsilon _{{\mathrm{CIR}}})\)

We recall that there are two different truncations involved in the model. First, we cap the CIR process from below at the level of \(\varepsilon _{{\mathrm{CIR}}}=10^{-3}\). Second, in the Euler scheme (4.5), (4.6), we take as a diffusion coefficient

with \(\varepsilon =10^{-3}\). We claim that both of these truncations are necessary.

Figure 8 shows the fit of the smile for 1-year European call options depending on \(\varepsilon \) and \(\varepsilon _{{\mathrm{CIR}}}\). We use the model of Sect. 5 with \(M=500\) time steps and \(N=10^{6}\) trajectories. We see from these plots that if \(\varepsilon \) or \(\varepsilon _{{\mathrm{CIR}}}\) is either too small or too large, the smile produced by the model may not closely match the true implied volatility curve. Therefore a lower capping of the CIR process at a suitably chosen level is indeed necessary, and the level \(\varepsilon \) also has to be chosen with care.

6 Conclusion and outlook

In this paper, we study the problem of calibrating local stochastic volatility models via the particle approach pioneered in Guyon and Henry-Labordère [13]. We suggest a novel RKHS-based regularisation method and prove that this regularisation guarantees well-posedness of the underlying McKean–Vlasov SDE and the propagation of chaos property. Our numerical results suggest that the proposed approach is efficient for the calibration of various local stochastic volatility models and can achieve efficiency similar to that of widely used local regression methods; see [13]. Some questions remain open. First, it remains unclear whether the regularised McKean–Vlasov SDE remains well posed when the regularisation parameter \(\lambda \) tends to zero. This limiting case needs a separate study. Another important issue is the choice of the RKHS and of the number of basis functions, which ideally should be adapted to the problem at hand. This problem of adaptation is left for future research.

7 Proofs

In this section, we present the proofs of the results from Sects. 2 and 3.

Proof of Proposition 3.3

Since ℋ is separable, there exist an index set \(I\subseteq \mathbb{N}\) and a total orthonormal system \(e:=(e_{i})_{i\in I}\) in ℋ (note that \(I\) is finite if ℋ is finite-dimensional). Define the vector \(\gamma ^{\nu}\in \ell _{2}(I)\) by

Since the operator \(\mathcal{C}^{\nu}\) is bounded, it may be described by the (possibly infinite) symmetric matrix

which acts as a bounded positive semidefinite operator on \(\ell _{2}(I)\). Denote

For \(f\in \mathcal{H}\), write \(f=\sum _{i\in I} \beta _{i} e_{i}\). Then, recalling (7.1) and (7.2), we derive

where we inserted the definition (7.3) and used the fact that \(B^{\nu}+\lambda I\) is strictly positive definite for \(\lambda >0\). To complete the proof, it remains to note that

which shows (3.4). □

Proof of Theorem 2.4

Let us write

Working with respect to the orthonormal basis introduced in the proof of Proposition 3.3, see (7.3), we derive for the first term in (7.4) that

where we used (3.1) and Assumption 2.1.

Denote \(Q^{\nu}:=B^{\nu}+\lambda I\) and \(Q^{\mu}:= B^{\mu}+\lambda I\). Recalling that these are bounded operators from \(\ell _{2}(I)\) to \(\ell _{2}(I)\) with bounded inverses, it is easy to see that

Therefore we get

Now observe that for any \(i,j\in I\), we have

Hence by using the identity

we get

By the Kantorovich–Rubinstein duality formula (see Villani [27, Chap. 1]), for every \(h:\mathcal{X} \rightarrow \mathbb{R}\) with \(h\in C^{1}(\mathcal{X})\), one has

where \(\partial _{x}\) denotes the gradient with respect to \(x\). So we continue (7.8) with

and for each particular \(x\in \mathcal{X}\), we have similarly

where the last inequality follows from Assumption 2.1. Combining this with (7.9), we deduce

By a similar argument, using (7.7), we derive

where again Assumption 2.1 was used. Next note that

due to Assumption 2.1. Substituting now (7.10)–(7.12) into (7.6) and then into (7.5), we finally get

Now let us bound \(I_{2}\) in (7.4). We clearly have

Note that

with \(\partial _{1}\), \(\partial _{2}\) denoting the vectors of derivatives of \(k\) with respect to the first and second argument, respectively. Recalling Assumption 2.1, we derive

Further, using (7.12), we see that

Combining this with (7.15) and substituting into (7.14), we get

This together with (7.13) and (7.4) finally yields

where \(C_{1}=(\lambda ^{-1}D_{k}+1)\lambda ^{-1}D_{k}^{2}d\|A\|_{ \mathcal{C}^{1}}\) and \(C_{2}=\sqrt {d} \,\lambda ^{-1}D_{k}^{2} \|A\|_{\mathcal{C}^{1}}\). This completes the proof. □
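Two generic ingredients of this proof can be sanity-checked numerically in finite dimensions. The first is the second resolvent identity \((Q^{\nu})^{-1}-(Q^{\mu})^{-1}=(Q^{\nu})^{-1}(B^{\mu}-B^{\nu})(Q^{\mu})^{-1}\) for \(Q=B+\lambda I\) with positive semidefinite \(B\), together with the bound \(\|(B+\lambda I)^{-1}\|\leq \lambda ^{-1}\). The second is the Kantorovich–Rubinstein inequality \(\vert \int h\,d\nu -\int h\,d\mu \vert \leq \sup \vert \partial _{x}h\vert \,\mathbb{W}_{1}(\nu ,\mu )\). We assume these are the forms used in the displays omitted above. The random matrices, the test function \(h=\sin \) and the empirical measures are illustrative; for two one-dimensional empirical measures with equally many equally weighted atoms, \(\mathbb{W}_{1}\) is the mean absolute difference of the sorted samples.

```python
import numpy as np

rng = np.random.default_rng(4)
dim, lam = 6, 0.05

def random_psd(dim):
    """Random symmetric positive semidefinite matrix."""
    a = rng.standard_normal((dim, dim))
    return a @ a.T

b_nu, b_mu = random_psd(dim), random_psd(dim)
q_nu_inv = np.linalg.inv(b_nu + lam * np.eye(dim))
q_mu_inv = np.linalg.inv(b_mu + lam * np.eye(dim))
# Second resolvent identity: Q_nu^{-1} - Q_mu^{-1} = Q_nu^{-1} (B_mu - B_nu) Q_mu^{-1}.
resolvent_gap = np.abs((q_nu_inv - q_mu_inv) - q_nu_inv @ (b_mu - b_nu) @ q_mu_inv).max()
# Spectral norms of the inverses are at most 1 / lam because B is positive semidefinite.
norms = (np.linalg.norm(q_nu_inv, 2), np.linalg.norm(q_mu_inv, 2))

def w1_empirical(u, v):
    """W_1 between two 1-d empirical measures with the same number of equally weighted atoms."""
    return np.abs(np.sort(u) - np.sort(v)).mean()

u = rng.standard_normal(1000)
v = 0.5 + 1.5 * rng.standard_normal(1000)
kr_lhs = abs(np.sin(u).mean() - np.sin(v).mean())   # h = sin satisfies sup |h'| = 1
kr_rhs = w1_empirical(u, v)
print(resolvent_gap, norms, 1.0 / lam, kr_lhs, kr_rhs)
```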

Now we are ready to prove the main results of Sect. 2. They follow from Theorem 2.4 obtained above.

Proof of Theorem 2.2

By Theorem 2.4, the assumptions of Theorem 2.2 and the fact that the \(\mathbb{W}_{1}\)-metric is bounded from above by the \(\mathbb{W}_{2}\)-metric, the drift and diffusion coefficients of the system (2.4)–(2.6) are Lipschitz-continuous and satisfy the conditions of Carmona and Delarue [6, Theorem 4.21]. Hence the system has a unique strong solution. □

Proof of Theorem 2.3

We see that Theorem 2.4 and the conditions of Theorem 2.3 imply that all the assumptions of Carmona and Delarue [7, Theorem 2.12] hold (note that the total state dimension is \(2d\) in our case). This implies (2.10). □

Proof of Theorem 3.5

Consider the operator \(\mathcal{C}^{\nu}\) in the orthonormal basis \((\widetilde{a}_{n})_{n\in J}\) of ℋ. Put

since \(\widetilde{a}_{j}\) is an eigenvector of \(T^{\nu}\) with eigenvalue \(\sigma _{j}\). Since \(\mathcal{C}^{\nu}\) is diagonal in this basis, we see that for \(\lambda >0\), one has for \(i \in J\) that

Consider also the function \(c^{\nu}_{A}\) in this basis. We write for \(i\in J\) similarly to (7.1)

and we clearly have \(c^{\nu}_{A}=\sum _{i\in J}\eta _{i}^{\nu }\widetilde{a}_{i}\). Then, using Proposition 3.3 and (7.16), we derive for \(\lambda >0\) that

Next, since \(m_{A}\in \mathcal{L}_{2}^{\nu}\), we have

Further, for \(i\in J\), we deduce that

where we used that \(\widetilde{a}_{n}=\sqrt{\sigma _{n}}\,a_{n}\). Substituting this into (7.18) and combining with (7.17), we get

Thus the orthonormality of the \(\widetilde{a}_{i}\) gives

which is (3.10). Similarly, recalling (3.8), we get

which is finite whenever \(P_{\overline{\mathcal{H}}} m_{A} \in \mathcal{H}\), that is, \(\sum _{i\in J} \langle m_{A} ,a_{i}\rangle _{\mathcal{L}_{2}^{\nu}}^{2} \sigma _{i}^{-1}<\infty \). This shows (3.11). It is easily seen by dominated convergence that the left-hand side of (3.10) goes to zero, and if \(P_{\overline{\mathcal{H}}} m_{A} \in \mathcal{H}\), the left-hand side of (3.11) goes to zero as well. □

References

Antonelli, F., Kohatsu-Higa, A.: Rate of convergence of a particle method to the solution of the McKean–Vlasov equation. Ann. Appl. Probab. 12, 423–476 (2002)

Bach, F.: Are all kernels cursed? (2019). Available online at https://francisbach.com/cursed-kernels/

Bossy, M., Jabir, J.-F.: On the wellposedness of some McKean models with moderated or singular diffusion coefficient. In: Cohen, S.N., et al. (eds.) Frontiers in Stochastic Analysis – BSDEs, SPDEs and Their Applications, Edinburgh, July 2017, pp. 43–87. Springer, Cham (2019)

Brunick, G., Shreve, S.: Mimicking an Itô process by a solution of a stochastic differential equation. Ann. Appl. Probab. 23, 1584–1628 (2013)

Butkovsky, O., Dareiotis, K., Gerencsér, M.: Approximation of SDEs – a stochastic sewing approach. Probab. Theory Relat. Fields 181, 975–1034 (2021)

Carmona, R., Delarue, F.: Probabilistic Theory of Mean Field Games with Applications I. Mean Field FBSDEs, Control, and Games. Springer, Cham (2018)

Carmona, R., Delarue, F.: Probabilistic Theory of Mean Field Games with Applications II. Mean Field Games with Common Noise and Master Equations. Springer, Cham (2018)

Dupire, B.: Pricing with a smile. Risk 7, 18–20 (1994)

Fang, F., Oosterlee, C.W.: A novel pricing method for European options based on Fourier-cosine series expansions. SIAM J. Sci. Comput. 31, 826–848 (2009)

Funaki, T.: A certain class of diffusion processes associated with nonlinear parabolic equations. Z. Wahrscheinlichkeitstheor. Verw. Geb. 67, 331–348 (1984)

Gatheral, J.: The Volatility Surface: A Practitioner’s Guide. Wiley, New York (2011)

Guyon, J., Henry-Labordère, P.: The smile calibration problem solved (2011). Preprint. Available online at https://ssrn.com/abstract=1885032

Guyon, J., Henry-Labordère, P.: Being particular about calibration. Risk Mag. 25(1), 88–93 (2012)

Gyöngy, I.: Mimicking the one-dimensional marginal distributions of processes having an Itô differential. Probab. Theory Relat. Fields 71, 501–516 (1986)

Jourdain, B., Menozzi, S.: Convergence rate of the Euler–Maruyama scheme applied to diffusion processes with \({L_{Q}}\)–\({L_{\rho}}\) drift coefficient and additive noise. Ann. Appl. Probab. 34, 1663–1697 (2024)

Jourdain, B., Zhou, A.: Existence of a calibrated regime switching local volatility model. Math. Finance 30, 501–546 (2020)

Lacker, D., Shkolnikov, M., Zhang, J.: Inverting the Markovian projection, with an application to local stochastic volatility models. Ann. Probab. 48, 2189–2211 (2020)

Lemaire, V., Montes, T., Pagès, G.: Stationary Heston model: calibration and pricing of exotics using product recursive quantization. Quant. Finance 22, 611–629 (2022)

Lowther, G.: Fitting martingales to given marginals (2008). Preprint, Available online at https://arxiv.org/abs/0808.2319

Mishura, Y.S., Veretennikov, A.Y.: Existence and uniqueness theorems for solutions of McKean–Vlasov stochastic equations. Theory Probab. Math. Stat. 103, 59–101 (2020)

Reed, M., Simon, B.: Functional Analysis. Revised and Enlarged Edition. Academic Press, San Diego (1980)

Reisinger, C., Tsianni, M.O.: Convergence of the Euler–Maruyama particle scheme for a regularised McKean–Vlasov equation arising from the calibration of local-stochastic volatility models (2023). Preprint, Available online at https://arxiv.org/abs/2302.00434

Schölkopf, B., Herbrich, R., Smola, A.J.: A generalized representer theorem. In: Helmbold, D., Williamson, B. (eds.) International Conference on Computational Learning Theory, pp. 416–426. Springer, Berlin (2001)

Sriperumbudur, B.K., Gretton, A., Fukumizu, K., Schölkopf, B., Lanckriet, G.R.G.: Hilbert space embeddings and metrics on probability measures. J. Mach. Learn. Res. 11, 1517–1561 (2010)

Steinwart, I., Christmann, A.: Support Vector Machines. Springer, Berlin (2008)

Sun, H.: Mercer theorem for RKHS on noncompact sets. J. Complex. 21, 337–349 (2005)

Villani, C.: Topics in Optimal Transportation. Am. Math. Soc., Providence (2021)

Acknowledgements

The authors would like to thank the referees and the Associate Editor for their helpful comments and feedback. We are also grateful to Peter Friz and Mykhaylo Shkolnikov for useful discussions. OB would like to thank Vadim Sukhov for very helpful conversations regarding implementing parallelised algorithms in Python.

Funding

Open Access funding enabled and organized by Projekt DEAL. DB acknowledges the financial support from Deutsche Forschungsgemeinschaft (DFG), Grant Nr. 497300407. CB, OB and JS are supported by the DFG Research Unit FOR 2402. OB is funded by the Deutsche Forschungsgemeinschaft (DFG, German Research Foundation) under Germany’s Excellence Strategy – The Berlin Mathematics Research Center MATH+ (EXC-2046/1, project ID: 390685689, sub-project EF1-22).

Ethics declarations

Competing Interests

The authors declare no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Bayer, C., Belomestny, D., Butkovsky, O. et al. A reproducing kernel Hilbert space approach to singular local stochastic volatility McKean–Vlasov models. Finance Stoch 28, 1147–1178 (2024). https://doi.org/10.1007/s00780-024-00541-5
