Abstract

We introduce a particular heterogeneous formulation of a class of contagious McKean–Vlasov systems, whose inherent heterogeneity comes from asymmetric interactions with a natural and highly tractable structure. It is shown that this formulation characterises the limit points of a finite particle system, deriving from a balance-sheet-based model of solvency contagion in interbank markets, where banks have heterogeneous exposure to and impact on the distress within the system. We also provide a simple result on global uniqueness for the full problem with common noise under a smallness condition on the strength of interactions, and we show that in the problem without common noise, there is a unique differentiable solution up to an explosion time. Finally, we discuss an intuitive and consistent way of specifying how the system should jump to resolve an instability when the contagious pressures become too large. This is known to happen even in the homogeneous version of the problem, where jumps are specified by a ‘physical’ notion of solution, but no such notion currently exists for a heterogeneous formulation of the system.



1 Introduction

This paper studies a family of contagious McKean–Vlasov problems, modelling large clouds of stochastically evolving mean-field particles, for which contagion materialises through asymmetric interactions. More concretely, the different particles suffer a negative impact on their ‘healthiness’ (measured by their distance from the origin) as the probability of absorption increases for the other particles to which they are linked. Naturally, the degree to which the particles are affected depends on the strength of the links.

The study of this problem is motivated by the macroscopic quantification of systemic risk in large financial markets, when taking into account the heterogeneous nature of how financial institutions have an effect on and are exposed to the distress of other entities at the microscopic level. Specifically, in Feinstein and Søjmark [10], the authors of the present paper have proposed a dynamic balance-sheet-based model for solvency contagion in interbank markets building on the Gai–Kapadia approach (see Gai and Kapadia [11]) to financial contagion. When passing from a finite setting to a mean-field approximation, we show here that this model leads to precisely the type of contagious McKean–Vlasov system with heterogeneity that we now describe.

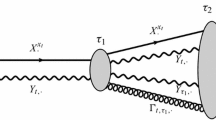

For given parameters, to be specified below, the mean-field problem is formulated as a coupled system of conditional McKean–Vlasov equations

where each \(B^{u,v}\) is a Brownian motion independent of the ‘common’ Brownian motion \(B^{0}\). We take the initial conditions to be independent of the Brownian motions, and we require solutions of (1.1) to satisfy \(L^{v}_{0}=0\) for all \(v\) in the support of \(\pi \).

Note that \(B^{0}\) serves as a ‘common factor’ in the sense that the dynamics of the entire system are conditional upon its movements. In contrast, each \(B^{u,v}\) models the random fluctuations that are specific to a given mean-field particle \(X^{u,v}\). Notice also that since \(L\) is required to be \(B^{0}\)-measurable, computing \(t\mapsto \mathbb{P}[t\geq \tau _{u,v} | B^{0}]\) only relies on \(B^{u,v}\) being a Brownian motion independent of \(B^{0}\). Any relationship between the \(B^{u,v}\) is irrelevant for this, and solving the system for a different set of particle-specific Brownian drivers \(B^{u,v}\) does not change \(L\) as long as they are all independent of \(B^{0}\) and the initial conditions. When the exogenous correlation parameter \(\rho \) is zero, we see that the common factor \(B^{0}\) plays no role and \(L\) becomes deterministic.
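
As a purely illustrative sketch of the conditioning described above, the following Python snippet estimates \(t\mapsto \mathbb{P}[t\geq \tau _{u,v} | B^{0}]\) on a time grid by holding one path of \(B^{0}\) fixed and averaging over freshly simulated idiosyncratic paths. Since the displayed system (1.1) is not reproduced here, we assume toy dynamics with no drift and no contagion term, \(X_{t}=x_{0}+\sigma (\sqrt{1-\rho ^{2}}B_{t}+\rho B^{0}_{t})\); the function name and all parameter values are hypothetical. With \(\rho =0\), the estimate does not depend on which path of \(B^{0}\) is supplied, consistent with the observation that \(L\) is then deterministic.

```python
import numpy as np

def conditional_absorption_curve(b0_increments, rho, x0=0.5, sigma=1.0,
                                 n_paths=5_000, seed=0):
    """Monte Carlo estimate of t -> P[t >= tau | B^0] on the grid t_j = j/N, j = 1..N.

    The path of B^0 is held fixed through its increments; only the idiosyncratic
    Brownian motions are resampled. Assumed toy dynamics (no drift, no contagion):
        X_t = x0 + sigma * (sqrt(1 - rho^2) * B_t + rho * B^0_t).
    Absorption is only monitored on the grid, which slightly underestimates it.
    """
    n_steps = len(b0_increments)
    dt = 1.0 / n_steps
    rng = np.random.default_rng(seed)
    db = rng.normal(0.0, np.sqrt(dt), size=(n_paths, n_steps))
    # The same B^0 increments are broadcast across all idiosyncratic paths.
    dx = sigma * (np.sqrt(1.0 - rho**2) * db + rho * b0_increments)
    x = x0 + np.cumsum(dx, axis=1)
    # tau <= t_j  iff  the running minimum of X up to t_j is <= 0
    hit = np.minimum.accumulate(x, axis=1) <= 0.0
    return hit.mean(axis=0)

# Two different (hypothetical) paths of the common factor on 200 grid steps.
rng0 = np.random.default_rng(1)
path_a = rng0.normal(0.0, np.sqrt(1 / 200), size=200)
path_b = rng0.normal(0.0, np.sqrt(1 / 200), size=200)

curve_a = conditional_absorption_curve(path_a, rho=0.0)
curve_b = conditional_absorption_curve(path_b, rho=0.0)
```

The same idiosyncratic seed is reused on purpose, so that any difference between the two curves can only come from \(B^{0}\).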

The key reason for our interest in (1.1) is that it has a quite general, but also highly tractable, heterogeneous structure. This contrasts with the focus on purely symmetric formulations of the McKean–Vlasov problem in most of the existing literature; see e.g. Bayraktar et al. [3], Cuchiero et al. [6], Delarue et al. [7, 8], Delarue et al. [9], Hambly et al. [15], Ledger and Søjmark [20, 21] and Nadtochiy and Shkolnikov [22]. As regards the measure \(\pi \) in (1.1), this is taken to be a probability distribution on \(\mathbb{R}^{k}\times \mathbb{R}^{k}\) specifying the density or discrete support of the ‘indexing’ vectors \((u,v)\in \mathbb{R}^{k}\times \mathbb{R}^{k}\). One should think of each \(X^{u,v}\) as a tagged ‘infinitesimal’ mean-field particle within a heterogeneous ‘cloud’ or ‘continuum’ of such particles. In this respect, the role of \(\pi \) is to model how these infinitesimal particles are distributed along a possible continuum of types, as specified by the ‘indexing’ vectors that describe how the particles interact through the interaction kernel \(\kappa \).

Naturally, if \(\pi \) is supported on a finite set of indexing vectors, then (1.1) consists in effect only of a finite number of mean-field particles, but one should still think of it as there being an infinitude of identical particles for each ‘type’, where the values that \(\pi \) assigns to the indexing vectors give the proportions of these types (see also Remark 2.1 and the work of Nadtochiy and Shkolnikov [23]).

The strength with which a given ‘infinitesimal’ mean-field particle \(X^{u,v}\) feels the impact of another one, say \(X^{\hat{u},\hat{v}}\), is specified by the value \(\kappa (\hat{u},v)\geq 0\), modelling an ‘exposure’ of \(X^{u,v}_{t}\) to the probability of \(X^{\hat{u},\hat{v}}\) being absorbed by time \(t\). This is the nature of the contagious element in this system: a higher likelihood of absorption for any given \(X^{\hat{u},\hat{v}}\) puts upward pressure on the likelihood of absorption for \(X^{u,v}\) in proportion to \(\kappa (\hat{u},v)\geq 0\), and likewise throughout the system, in turn forming a positive feedback loop. Notice that for a given particle \(X^{u,v}\), the vector \(v\) influences only its exposure to impacts from other particles, while the vector \(u\) influences only how it impacts the other particles (but of course, the full exposure and impact depend also on the indexing vectors of all the other particles). This decomposition highlights why it is natural to work with a pair of indexing vectors, and we further exploit this structure below.

Although we have not yet stated it explicitly, the above interpretation of the contagious element implicitly assumes that the functions \(t\mapsto g_{u,v}(t)\) are all nonnegative and that the map \(x\mapsto F(x)\) is both nonnegative and increasing. In that way, an increasing probability of absorption always decreases the ‘healthiness’ of each mean-field particle. The precise assumptions for the various parameters are presented in the next subsection; see Assumptions 1.1–1.3.

1.1 A specific formulation and structural conditions

For notational simplicity, given a pair of random vectors \((U,V)\) valued in \(\mathbb{R}^{k}\times \mathbb{R}^{k}\) and distributed according to \(\pi \), we let \(S(U)\), \(S(V)\) and \(S(U,V)\) denote the support of \(U\), \(V\) and \((U,V)\), respectively. The heterogeneity of the interactions in (1.1) is then structured according to the interaction kernel \((u,v)\mapsto \kappa (u,v)\) with a continuous nonnegative function \(\kappa : S(U) \times S(V) \rightarrow \mathbb{R}_{+}\). We rely on this notation throughout the paper. For the applications we are interested in, it is natural to take \(\kappa (u,v):=u \cdot v\) together with a distribution \(\pi \) such that \(u \cdot v \geq 0\) for all \(u\in S(U)\) and \(v \in S(V) \). For concreteness, we thus restrict to this case throughout, but we note that our arguments are performed in a way that easily extends to cover a general continuous nonnegative interaction kernel \(\kappa \).

Restricting to the case \(\kappa (u,v)=u \cdot v\), the system (1.1) can be rewritten as

where we insist on \(\mathcal{L}^{h}_{0}=0\) for \(h=1,\ldots ,k\). A nice feature of this formulation is the decomposition of \(L^{v}_{t}\) into a weighted sum of \(k\) contagion processes \(\mathcal{L}^{h}_{t}\) for \(h=1,\ldots ,k\), thereby organising the feedback felt by each particle according to \(k\) distinct characteristics modelled by the dimension \(k\) of the indexing vectors \((u,v)\in \mathbb{R}^{k}\times \mathbb{R}^{k}\). More generally, (1.2) fixes a sensible choice of the interaction kernel \(\kappa \), thereby eliminating any further free parameters. Of course, we are still left with a single free parameter \(k \in \mathbb{N}\), but this should rightly be seen as a scale for the granularity of the analysis. We refer to Feinstein and Søjmark [10] for further details.
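
For a finitely supported \(\pi \), the decomposition of \(L^{v}_{t}\) can be checked numerically. In this sketch we assume, consistently with the description of (1.1) above, that \(L^{v}_{t}=\int \kappa (\hat{u},v)\,\mathbb{P}[t\geq \tau _{\hat{u},\hat{v}} | B^{0}]\,d\pi (\hat{u},\hat{v})\) and that \(\mathcal{L}^{h}_{t}=\int \hat{u}_{h}\,\mathbb{P}[t\geq \tau _{\hat{u},\hat{v}} | B^{0}]\,d\pi (\hat{u},\hat{v})\); the absorption probabilities and support points are arbitrary hypothetical numbers. With \(\kappa (u,v)=u\cdot v\), the identity \(L^{v}_{t}=\sum _{h=1}^{k}v_{h}\mathcal{L}^{h}_{t}\) then reduces to linearity of the dot product.

```python
import numpy as np

def loss_via_kernel(v, u_hat, weights, absorbed_prob):
    """L^v_t computed directly from the kernel kappa(u_hat, v) = u_hat . v."""
    return float(np.sum(weights * absorbed_prob * (u_hat @ v)))

def contagion_processes(u_hat, weights, absorbed_prob):
    """The k processes calL^h_t = sum_i pi_i * u_hat_{i,h} * P[t >= tau_i]."""
    return u_hat.T @ (weights * absorbed_prob)

# Hypothetical data: 4 support points in R^2 x R^2 with nonnegative impact vectors.
rng = np.random.default_rng(0)
u_hat = rng.uniform(0.0, 1.0, size=(4, 2))      # rows are the impact vectors u_hat
weights = np.array([0.1, 0.2, 0.3, 0.4])        # pi-masses of the support points
absorbed_prob = rng.uniform(0.0, 1.0, size=4)   # P[t >= tau | B^0] at a fixed time t
v = np.array([0.5, 1.5])                        # exposure vector of the tagged particle

direct = loss_via_kernel(v, u_hat, weights, absorbed_prob)
decomposed = float(v @ contagion_processes(u_hat, weights, absorbed_prob))
```

Computing the \(k\) processes once and reusing them for every exposure vector \(v\) is what makes this formulation cheap to evaluate across a continuum of types.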

The relevant coefficients for the model of interbank contagion considered in [10] are \(F(x)=\log (1+x)\) and \(g_{u,v}(t)=c_{u,v}\psi (t,T)\) for \(t\in [0,T]\), where each \(c_{u,v}>0\) is a constant and \(\psi (\,\cdot \,,T):[0,T]\rightarrow \mathbb{R}_{+}\) is a nonnegative continuous decreasing function, modelling the rate at which outstanding liabilities are gradually settled over the period \([0,T]\). There are also closely related applications in neuroscience (see Delarue et al. [7, 8] and Inglis and Talay [18]), for which the relevant choices are \(F(x)=x\) and \(g_{u,v}(t)=c_{u,v}\) for constants \(c_{u,v}>0\), although in that case one would also need to account for the resetting of particles when they hit the origin. These considerations motivate the following assumptions.

Assumption 1.1

For a given probability distribution \(\pi \) on \(\mathbb{R}^{k}\times \mathbb{R}^{k}\) determining the network structure, we write \((U,V)\sim \pi \). We then assume that (i) the marginal supports \(S(U)\) and \(S(V)\) are both compact, and (ii) we have \(u\cdot v\geq 0\) for all \(u\in S(U)\) and \(v\in S(V)\). We write \(S(U,V)\) for the joint support, which is also compact.

Assumption 1.2

The function \(x \mapsto F(x)\) is Lipschitz-continuous, nonnegative and nondecreasing with \(F(0)=0\). Writing \(b_{u,v}(s)=b(u,v,s)\), \(\sigma _{u,v}(s)=\sigma (u,v,s)\) and \(g_{u,v}(s)=g(u,v,s)\), these are deterministic continuous functions in \((u,v,s)\). Each \(s\mapsto g_{u,v}(s)\) is nonnegative and nonincreasing. Finally, we impose the nondegeneracy conditions \(\rho \in [0,1)\) and \(c\leq \sigma _{u,v}\leq C\), for given \(c,C>0\), uniformly in \((u,v) \in S(U,V)\).

Assumption 1.3

Let \(P_{0}(\,\cdot \,|u,v)\) denote the law of \(X^{u,v}_{0}\). We assume there is a density \(p_{0}(\,\cdot \,|u,v)\in L^{\infty}(0,\infty )\), so that \(dP_{0}(x|u,v)=p_{0}(x|u,v)dx\), with the properties \(p_{0}(x|u,v) \leq C_{1} x^{\beta}\) for all \(x\) near 0 and some \(\beta >0\), as well as \(\Vert p_{0}(\,\cdot \,|u,v) \Vert _{\infty }\leq C_{2}\) and \(\int _{0}^{\infty }x dP_{0}(x|u,v) \leq C_{3}\), uniformly in \((u,v)\in S(U,V)\), for given constants \(C_{1},C_{2},C_{3}>0\).

In Sect. 2.2, we present some well-posedness results under these assumptions. Thereafter, we introduce a finite interacting particle system in Sect. 2.3, which will correspond to the coupled McKean–Vlasov system (1.2) in the mean-field limit. We prove this under Assumption 2.4, which in particular ensures that the above Assumptions 1.1–1.3 are all satisfied in the limit.

1.2 A further look at applications and related literature

In many practical applications, heterogeneity plays a critical role—something that becomes particularly pertinent when seeking to understand the outcomes of contagion spreading through a complex system. In short, a homogeneous version of the McKean–Vlasov problem would be at risk of oversimplifying the conclusions that one can draw. At the same time, the streamlined analysis of a mean-field formulation can be highly instructive, so it becomes important to look for ways of exploiting such macroscopic ‘averaging’ while not throwing away all the microscopic heterogeneity.

For some concrete examples of how (1.2) can capture features of realistic core-periphery networks in interbank markets, we refer to the discussion at the start of Feinstein and Søjmark [10, Sect. 3.1] as well as [10, Online Supplement C]. Another tractable special case is the multi-type system with homogeneity within each type, considered by Nadtochiy and Shkolnikov [23] (for details on this, see Remark 2.1). In relation to this, it should be noted that [23] also studies a mean-field type game, whereby jumps are ruled out due to the strategic possibility of disconnecting gradually from a given particle as it gets closer to absorption. We do not consider any game components in this paper.

The formulation (1.2) can also be highly relevant for integrate-and-fire models in mathematical neuroscience. For example, Gerstner et al. [12, Chap. 13.4] introduce a multi-type mean-field model, while Grazieschi et al. [13] consider a random graph model, where dependent random synaptic weights determine whether the spiking of neuron \(i\) impacts the voltage potential of another neuron \(j\neq i\). Phrasing [13, Eq. (12)] in the language of the particle system (2.8) in Sect. 2.3, this corresponds to having \(k=1\) and letting both \(v^{i}:=\chi ^{i}\) and \(u^{i}:=\chi ^{i}\) for a family \((\chi ^{i})_{i=1,\dots ,n}\) of i.i.d. Bernoulli random variables, up to a scalar multiple. Thus the directed connection from \(i\) to \(j\) is either active or inactive depending on the realisation of \(\chi ^{i} \chi ^{j}\). It is conjectured in [13] that the mean-field limit of this particle system should take a particular form (see [13, Eq. (13)]), and we note that this conjecture is indeed confirmed by our convergence result, which shows that the limit is (1.2) with \(k=1\) and \(\pi =\text{Law}(\chi ,\chi )\) on \(\mathbb{R}\times \mathbb{R}\) for a Bernoulli random variable \(\chi \) with the same parameter as the \(\chi ^{i}\)’s. As far as we are aware, Inglis and Talay [18] were the first to study mean-field convergence for an integrate-and-fire model with excitatory feedback and asymmetric synaptic weights, but there the scaling is chosen in such a way that the mean-field limit becomes homogeneous.
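
To make the Bernoulli example concrete, the following sketch samples \(\chi ^{1},\dots ,\chi ^{n}\) i.i.d. and forms the matrix of interaction weights \(u^{i}\cdot v^{j}=\chi ^{i}\chi ^{j}\) for \(k=1\). The scalar multiple mentioned above is set to one, and the function name and parameter values are hypothetical. The empirical law of the indexing pairs \((u^{i},v^{i})=(\chi ^{i},\chi ^{i})\) sits on the diagonal points \((0,0)\) and \((1,1)\), with the mass on \((1,1)\) approaching the Bernoulli parameter as \(n\) grows.

```python
import numpy as np

def bernoulli_network(n, p, seed=0):
    """Sample chi^i ~ Bernoulli(p) i.i.d. and set u^i = v^i = chi^i (k = 1).

    Returns chi and the n x n matrix W with W[i, j] = u^i * v^j = chi^i * chi^j,
    so W[i, j] = 1 exactly when the directed connection i -> j is active.
    """
    rng = np.random.default_rng(seed)
    chi = rng.binomial(1, p, size=n).astype(float)
    W = np.outer(chi, chi)
    return chi, W

chi, W = bernoulli_network(n=2_000, p=0.3)
share_on_11 = chi.mean()   # empirical pi-mass of the point (1, 1)
```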

Finally, we mention an interesting line of research on agent-based modelling for macroeconomic business cycles by Gualdi et al. [14] and Sharma et al. [25]. Looking at the average over a large class of agents, the authors derive a nonlinear PDE formulation that is essentially the evolution equation for a homogeneous version of (1.2). Our framework highlights a tractable route to rigorously incorporating heterogeneity in these models, even after averaging, as the analysis takes place at the mean-field level from the beginning.

1.3 Summary of the main technical contributions

The most important contribution of this paper is to give a rigorous justification of the convergence discussed in Feinstein and Søjmark [10], connecting a finite particle system to a suitable notion of solution for the mean-field problem (1.2). The precise statements are given in Sect. 2 below, while the proofs follow in Sects. 3–5. As per Remark 2.1, our analysis also covers the mean-field problem of Nadtochiy and Shkolnikov [23].

Our main technical contributions concern the proof of Theorem 2.6, which provides the rigorous mean-field convergence result. Overall, the path we follow is similar to Delarue et al. [8], Ledger and Søjmark [21] and Nadtochiy and Shkolnikov [22], but new ideas are needed to deal with the heterogeneity and higher generality, as we discuss in what follows.

Since (1.2) allows a continuum of types, it is not immediately clear that we can hope to have solutions so that the processes \(X^{u,v}\) are measurable in the type vectors \((u,v)\). In relation to this, a first novel aspect compared to [8, 21, 22] is the identification of a suitable notion of solution, given by the formulation (2.14) and (2.15). This addresses the aforementioned issue by working instead with the laws of the processes through a notion of random Markov kernels (see Definition 2.5). Here the random aspect must also address both the fact that the common factor produces conditional limiting laws and the fact that the finite empirical measures need not become adapted to the common factor in the limit; note that this last point is analogous to Ledger and Søjmark [21].

Once we have a workable notion of solution, the second main difference from [8, 21, 22] lies in how we set up the probabilistic framework for working with the limit points in Sect. 3.3. This is ultimately what allows us to characterise the limit points as solutions to the desired McKean–Vlasov system. The approach is inspired in part by [21], but the precise constructions are closely tied in with the identification of our new notion of solution, and the reliance of this on the concept of random Markov kernels necessitates a more careful approach based on disintegration of measures.

The details of the constructions in Sect. 3.3 are crucial to the proof of the key continuity result in Lemma 3.5, as well as the subsequent martingale arguments in Propositions 3.7 and 3.8, which complete the proof of Theorem 2.6. The proofs of these two propositions draw inspiration from Ledger and Søjmark [21], but the arguments need to be tailored to the setting of Sect. 3.3 and, most importantly, we need a more delicate use of Skorokhod’s representation theorem to exploit the form of Lemma 3.5. In particular, the required continuity of the relevant functionals does not hold in general almost surely for the limiting laws, but only when passing along suitable sequences.

In terms of ensuring that limit points exist, the nonexchangeability of the particle system means that a little more care is needed in the arguments related to M1-tightness in Lemma 3.1 and Proposition 3.2. However, this does not present significant new challenges once it is observed that we can obtain good estimates for each particle that are uniform across the type space.

Finally, we return to the key Lemma 3.5, where we confront the convergence of the contagious component of the particle system. In an earlier version of this paper, we relied on an adaptation of [21, Lemma 3.13], but a referee brought our attention to an unfortunate lacuna in the proof of that lemma. We have resolved this through Proposition 3.4 and its use in the proof of Lemma 3.5. In relation to the original work of Delarue et al. [8], Proposition 3.4 plays a role akin to [8, Lemma 5.9] and Lemma 3.5 then plays a role akin to [8, Lemma 5.6 and Proposition 5.8]. Already because of the common Brownian motion, the proof of [8, Lemma 5.9] does not apply to our setting. Nevertheless, inspired by their approach, we can instead average over the laws of the particles (accounting also for the heterogeneity) and arrive at an almost sure continuity result for the first hitting time of zero as a function on the path space with respect to the M1-topology. We then give a short self-contained proof of the key Lemma 3.5, which is more streamlined than its analogues [8, Lemma 5.6 and Proposition 5.8] in the sense that it follows more directly from the critical use of the M1-topology in Proposition 3.4 rather than relying both on this and another technical M1-argument as in [8, Proposition 5.8].

2 Well-posedness and mean-field convergence

As is known already from the homogeneous versions, we cannot in general expect (1.2) to be well posed globally as a continuously evolving system; see e.g. Hambly et al. [15, Theorem 1.1]. Due to the feedback effect from the gradual loss of mass, the rate of change for the contagion processes \(t\mapsto \mathcal{L}^{h}_{t}\) can explode in finite time, and jumps in the loss of mass may materialise from within the system itself.

First we show that this need not always be the case, by presenting a simple uniqueness result under a smallness condition on the interactions which guarantees that (1.2) evolves continuously in time regardless of the realisations of the common factor. Moreover, we show that when restricting to the idiosyncratic setting of \(\rho =0\) where the common factor \(B^{0}\) disappears, one can obtain a local result on uniqueness and regularity up to an explosion time. Next, we introduce a finite particle system which is a general formulation of the one coming from the interbank model in Feinstein and Søjmark [10]. It is this particle system that underpins our interest in (1.2), and our main result comes down to showing that in a suitable sense, there is weak convergence to the heterogeneous McKean–Vlasov system (1.2) as the number of particles tends to infinity.

Remark 2.1

Also motivated by the modelling of contagion in financial markets, Nadtochiy and Shkolnikov [23] were the first to study a multi-type system very close to (1.2). Specifically, they use a generalised Schauder fixed point approach to show existence of solutions for a coupled McKean–Vlasov system of the form

where \(\mathcal{X}\) is an abstract finite set and each \(Z^{x}\) is an exogenous stochastic process with suitable regularity properties. A typical example is \(dZ^{x}_{t}=b_{x}(t)dt+\sigma _{x}(t)dW^{x}_{t}\). As per [23, Remark 2.5], their analysis also allows a common Brownian motion. By the assumptions on \(G\) in [23], we can define a nondecreasing and nonnegative function \(\tilde{F}:[0,1]\rightarrow \mathbb{R}_{+}\) by \(\tilde{F}(s):=-G(1-s)\) for \(s\in [0,1]\), with \(\tilde{F}(0)=0\). Taking \(k:=|\mathcal{X}|\), it is then straightforward to define \(\pi \) as a convex combination of \(k\) point masses on fixed nonnegative vectors \((\mathrm{u}^{1},\mathrm{v}^{1}),\ldots ,(\mathrm{u}^{k},\mathrm{v}^{k})\) in such a way that (2.1) becomes equivalent to (1.2) with \(F=\mathrm{Id}\), \(g_{u,v} \equiv 1\) and

Since \(\pi \) defined from (2.1) has finite support, (1.2) further simplifies to a system of \(k\) representative processes \(X^{\mathrm{u}^{h},\mathrm{v}^{h}}_{t}\) for \(h=1,\ldots ,k\) in this case. We note that it would not pose any problems to incorporate a function \(\tilde{F}\) as above into our analysis (in addition to \(F\)), but we leave this out to avoid clouding the notation (noting also that our main motivating applications do not call for it).

2.1 On the jumps of the heterogeneous McKean–Vlasov system

It is known already for the simplest homogeneous version of (1.2) (as studied in Delarue et al. [8], Hambly et al. [15] and Nadtochiy and Shkolnikov [22]) that if the feedback effect is strong enough, then solutions cannot be continuous globally in time [15, Theorem 1.1] and one is furthermore left with a non-unique choice of the jump times and jump sizes even when restricting to càdlàg solutions (see [15, Example 2.2] for a stylised example).

For clarity, let us consider the multi-type version of (1.2) with \(F=\mathrm{Id}\) and \(g_{u,v}\equiv 1\), as just discussed in Remark 2.1 above (concerning (2.1), we also take \(\tilde{F}:=\mathrm{Id}\)). Due to the finite support of \(\pi \), we can then let \(\pi _{h} := \pi (\{ (\mathrm{u}^{h} ,\mathrm{v}^{h}) \}) \) and observe that the contagion processes simplify to

In the homogeneous problem, it is natural to let the jump times and jump sizes be fixed by the so-called physical jump condition, first introduced in [8]. A possible analogue of this for the multi-type system (2.1) was briefly discussed in Nadtochiy and Shkolnikov [23]. The authors did not attempt to work with this condition, but rather emphasised that in the multi-type setting it is less clear whether any one condition is more natural than the others. After some straightforward manipulations of [23, Eqs. (2.16) and (2.17)], the condition discussed in [23] can be seen to take the simpler form

where we have set \(X^{h}:=X^{\mathrm{u}^{h},\mathrm{v}^{h}}\) and \(\tau _{h}:=\tau _{\mathrm{u}^{h},\mathrm{v}^{h}}\) for \(h=1,\ldots , k\). If \(k=1\), this is precisely the physical jump condition for the homogeneous problem. When \(k\geq 2\), however, an issue presents itself, which highlights some of the new difficulties in the multi-type setting. Indeed, (2.2) dictates that for each type \(h=1,\ldots ,k\), the jump size of \(\mathcal{L}^{h}\) at time \(t\) is equal to a multiple \(\pi _{h}\) of the total mass given by the density of \(X^{h}_{t-}\) on \([0,D_{t}]\). At the same time, a careful inspection of the dynamics in (1.2) reveals that such jump sizes must cause the mean-field particles of type \(j\) to shift exactly the mass given by their density on \([0,\sum _{i=1}^{k}\mathrm{v}^{i}_{j}\pi _{i}\Delta \mathcal{L}^{i}_{t}]\) through the origin, meaning that it is this amount of mass that ends up being absorbed. This does not agree with the previous observation unless \(D_{t}\) happens to equal \(\sum _{i=1}^{k}\mathrm{v}^{i}_{j}\pi _{i}\Delta \mathcal{L}^{i}_{t}\) for all \(j=1,\ldots ,k\) at the given time. Thus the jump condition (2.2) is in general not consistent with the prescribed dynamics of the mean-field particles.

Throughout the paper, we often refer to the total loss process

defined in agreement with the general formulation (1.1). With this notation, it was suggested in Feinstein and Søjmark [10] that a sensible condition for the jump sizes could amount to insisting that for every \(t\geq 0\), almost surely,

where the maps \(\Xi _{h}\) for \(h=1,\ldots , k\) are defined by

for all functions \(f: \mathbb{R}^{k} \rightarrow \mathbb{R}_{+}\). This corresponds to shocking the system by a small amount and tracking the contagious effects for infinitely many rounds (\(m\rightarrow \infty \)) before sending the size of the shock to zero (\(\varepsilon \downarrow 0\)). It was observed in [10, Proposition 3.5] that any càdlàg solution to (1.2) must satisfy \(\Delta L^{v}_{t} = \sum _{h=1}^{k} v_{h} \Xi _{h} (t, \Delta L^{(\, \cdot \,)}_{t} )\) for all \(v \in S(V)\) at every \(t\geq 0\). Noting that \(\Delta _{t,v}^{(m,\varepsilon )}\) is a bounded sequence increasing in both \(m\) and \(\varepsilon \), we can apply dominated convergence in (2.4) to see that the aforementioned constraint is satisfied; so (2.4) is consistent with the dynamics in (1.2).
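
The ‘shock and iterate’ description of (2.4) can be sketched for the finite multi-type system of Remark 2.1 with \(F=\mathrm{Id}\) and \(g_{u,v}\equiv 1\). Since the displays (2.4)–(2.6) are not reproduced here, the map `xi` below is a hypothetical stand-in: we assume that \(\Xi _{h}(t,f)=\sum _{i}\pi _{i}\mathrm{u}^{i}_{h}M_{i}(f(\mathrm{v}^{i}))\), where \(M_{i}(x)\) denotes the (assumed known) mass of surviving type-\(i\) particles within distance \(x\) of the origin at time \(t-\). The iteration starts from zero, adds the shock \(\varepsilon \) in every round and stops once successive rounds agree, which approximates \(m\rightarrow \infty \); a fixed small \(\varepsilon \) stands in for the limit \(\varepsilon \downarrow 0\).

```python
import numpy as np

def xi(f_vals, pi, U, mass):
    """Hypothetical Xi_h for a finite multi-type system with F = Id and g = 1.

    f_vals[i] : proposed jump in the loss felt by type i, i.e. f(v^i)
    pi[i]     : proportion of type i
    U[i]      : impact vector u^i
    mass(i, x): assumed mass of surviving type-i particles in (0, x] at time t-
    Returns the vector (Xi_1, ..., Xi_k).
    """
    absorbed = np.array([mass(i, x) for i, x in enumerate(f_vals)])
    return U.T @ (pi * absorbed)

def cascade_jump(pi, U, V, mass, eps=1e-8, max_rounds=10_000, tol=1e-14):
    """Shock every type by eps and iterate contagion rounds until they stabilise."""
    delta = np.zeros(len(pi))                      # Delta^{(0, eps)} = 0
    for _ in range(max_rounds):
        # Delta^{(m+1, eps)}_v = sum_h v_h Xi_h(eps + Delta^{(m, eps)}), one entry per type
        new = V @ xi(eps + delta, pi, U, mass)
        if np.max(np.abs(new - delta)) < tol:
            return new
        delta = new
    return delta

# A single homogeneous type, with hypothetical uniform densities 0.5 and 2 near the origin.
one_type = dict(pi=np.array([1.0]), U=np.array([[1.0]]), V=np.array([[1.0]]))
weak = cascade_jump(**one_type, mass=lambda i, x: min(1.0, 0.5 * x))
strong = cascade_jump(**one_type, mass=lambda i, x: min(1.0, 2.0 * x))
```

With density 0.5 near the origin the shock dies out and the resulting jump is of the order of \(\varepsilon \), whereas with density 2 each round more than doubles the previous one and the cascade exhausts all the surviving mass.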

In Sect. 2.3, we work with a similar-looking condition (2.12) for the jump sizes of the finite particle system. We suspect that (2.4) will be satisfied by the limit points of this particle system. However, we have not yet been able to prove this. Compared to the arguments in [8, 21], which show that limit points of the homogeneous particle system must satisfy the physical jump condition mentioned above, it becomes harder to work with notions of minimality for comparing solutions and it is complicated to keep track of the influence of the heterogeneity.

In this paper, the only result we prove involving (2.4) is the last part of Theorem 2.3 below. Any further analysis of jump size conditions is left for future research, along with the question of whether (2.4) is satisfied by the limit points of the particle system.

2.2 Two results on uniqueness and regularity

In Feinstein and Søjmark [10, Theorem 3.4], a simple smallness condition for the feedback was derived for a particular version of (1.2), ensuring continuity globally in time for all realisations of the common noise \(B^{0}\). Here we show that this condition naturally leads to pathwise global uniqueness of (1.2).

Theorem 2.2

Let Assumptions 1.1 and 1.2 be satisfied. Moreover, suppose that all the mean-field particles \(X^{u,v}\) have initial densities \(p_{0}(\,\cdot \,|u,v)\) satisfying the condition

for all \((u,v) \in S(U, V)\), where \(1/\max \emptyset =+\infty \). Then each \(X^{u,v}\) must be continuous in time, for any solution to (1.2), and there is uniqueness of solutions.

We note that (2.7) corresponds to Feinstein and Søjmark [10, (3.15)] except for an explicit choice of \(g_{u,v}\) in [10]. Thus the statement about continuity in time of each \(X^{u,v}\) follows precisely as in [10, Theorem 3.4]. The uniqueness part of the theorem is proved in Sect. 4, using the ideas from Ledger and Søjmark [20]. Unfortunately, we are unable to say much about general uniqueness and regularity properties of (1.2) in the absence of the above smallness condition (2.7). Nevertheless, we do have the following local uniqueness and regularity result for the purely ‘idiosyncratic’ problem without the common noise.

Theorem 2.3

Let Assumptions 1.1–1.3 be in place, and let \(\rho =0\). Then there exists a solution to (1.2) on some interval \([0,T_{\star})\) for which the contagion processes \(\mathcal{L}^{1},\ldots ,\mathcal{L}^{k}\) are continuously differentiable up to the explosion time

and for every \(t< T_{\star}\), we have \(\partial _{s} \mathcal{L}^{h}_{s} \leq K s^{-(1-\beta )/2}\) on \([0,t]\) for some constant \(K> 0\), for each \(h=1,\ldots , k\). Moreover, if there is another càdlàg solution to (1.2) with jump sizes smaller than or equal to those given by the cascade condition (2.4), then such a solution must coincide with the above solution on \([0,T_{\star})\).

The proof of Theorem 2.3, given in Sect. 5, suitably adapts the arguments from Hambly et al. [15]. For Theorem 2.3 to be truly interesting, one would need to know that the cascade condition (2.4) is satisfied by the limit points of the particle system presented in the next section. We believe this to be true, but the question is left for future research. Likewise, this paper does not address whether there exist solutions satisfying (2.4) beyond their first jump time.

2.3 The connection to a finite particle system

In this section, we introduce a general form of the particle system studied in Feinstein and Søjmark [10]. For details on the motivating application to solvency contagion, we refer to the balance-sheet-based formulation in [10, Sect. 2] and the reformulation as a stochastic particle system in [10, Proposition 3.1]. Here we consider the general system of interacting real-valued càdlàg processes \((X^{i})_{i=1, \dots ,n}\) satisfying

where \((B^{i})_{i=0, \dots ,n}\) is a family of independent Brownian motions, the vectors \(\bar{u}^{i},\bar{v}^{i} \in \mathbb{R}^{k}\) are given by

and \((\xi _{i})_{i=1,\dots ,n}\) is a sequence of i.i.d. random variables with common law \(\mathbb{P}_{\xi}\). As in the mean-field problem, we require solutions to satisfy \(\mathcal{L}^{h;i,n}_{0}=0\) for all \(h=1,\ldots ,k\) and all \(i=1,\ldots , n\). The coefficients \(b_{i}\), \(\sigma _{i}\) and \(g_{i}\) are taken to be continuous functions of the form

with continuity in all variables. In order to have convergence of this system as \(n\,{\rightarrow}\, \infty \), the key requirement is that there is an underlying distribution \(\pi \in \mathcal{P}(\mathbb{R}^{k}\times \mathbb{R}^{k})\) for the indexing vectors such that we have weak convergence

in \(\mathcal{P}(\mathbb{R}^{k}\times \mathbb{R}^{k})\), and in turn also weak convergence

in \(\mathcal{P}(\mathbb{R}^{k}\times \mathbb{R}^{k}\times (0,\infty ))\), where \(P_{0} (\,\cdot \, |u,v):=\mathbb{P}_{\xi}\circ \tilde{\varphi}^{-1}_{u,v}\) for

with the usual notation \((U,V)\sim \pi \). This holds e.g. when \((u^{i},v^{i})_{i \in \mathbb{N}}\) are i.i.d. samples from a desired distribution \(\pi \), drawn independently of \((\xi _{i})_{i \in \mathbb{N}}\), which is the setting of the financial model in [10].
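
When \(\pi \) has finite support, the weak convergence of the empirical measures of the indexing vectors under i.i.d. sampling reduces to convergence of the empirical frequencies of the support points, and hence of integrals of any function of \((u,v)\). The following sketch illustrates this; the support points, probabilities and function name are hypothetical.

```python
import numpy as np

def empirical_type_frequencies(probs, n, seed=0):
    """Draw n i.i.d. labels of support points of a finitely supported pi and return
    the empirical weights (1/n) * #{i : (u^i, v^i) equals support point l}."""
    rng = np.random.default_rng(seed)
    labels = rng.choice(len(probs), size=n, p=probs)
    return np.bincount(labels, minlength=len(probs)) / n

# Hypothetical pi on three support points; only the impact vectors u are listed here.
U_pts = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
probs = np.array([0.2, 0.5, 0.3])

freqs = empirical_type_frequencies(probs, n=200_000)
mean_u = freqs @ U_pts    # integral of u against the empirical measure
```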

The full set of assumptions for the particle system is collected in a single statement below. As we detail in Lemma 3.3, these assumptions automatically ensure that the limiting distribution \(\pi \) in (2.9) satisfies Assumption 1.1 from above.

Assumption 2.4

We assume the coefficients satisfy Assumption 1.2, where \(g\) is now of the slightly more general form \(g(u,v,\bar{u},\bar{v},t)\). Additionally, we assume that \(\varphi \) in the definition of \(X^{i}_{0}\) is a measurable function, and that \(\sigma _{u^{i},v^{i}}(\,\cdot \,) \in \mathcal{C}^{\beta}([0,T])\) for some \(\beta >1/2\) with the Hölder norms bounded uniformly in \(i=1,\ldots ,n\) and \(n\geq 1\). Furthermore, we assume there is a constant \(C>0\) so that \(|u^{i}|+|v^{i}|\leq C\) for \(i=1,\ldots ,n\) and \(n\geq 1\), and we assume \(u^{i}\cdot v^{j}\geq 0\) for \(i,j=1,\ldots ,n\) and \(n\geq 1\). Finally, we ask that the weak convergence (2.9) and (2.10) hold with \(P_{0}\) satisfying Assumption 1.3.

As it stands, the particle system (2.8) is not uniquely determined, since the dynamics may allow different sets of absorbed particles whenever a particle reaches the origin. It is therefore necessary to make a choice. In the interbank model of [10], it was shown that a Tarski fixed point argument gives a greatest and a least càdlàg clearing capital solution to (2.8), and [10, Proposition 3.1] established that selecting the greatest clearing capital solution of this system amounts to letting the sets of absorbed particles be given by the discrete ‘cascade condition’ that we now describe.

By analogy with the total loss process (2.3), we also introduce a total loss process

for the finite particle system, and similarly to (2.5) and (2.6), we then define the maps

for \(h=1,\ldots ,k\) and \(j=1,\ldots ,n\), where we stress that at any time \(t\), the values of \(\Xi ^{n}(t,f,v)\) and \(\Theta _{j}^{n}(t,f)\) are completely specified in terms of the ‘left-limiting state’ of the particle system. That is, the maps are well defined at time \(t\) without any a priori knowledge of which particles (if any) will be absorbed at time \(t\). Armed with these definitions, we declare that at any time \(t\), the set of absorbed particles (possibly the empty set) is given by

with corresponding jump sizes

where the mapping \(v\mapsto \Delta L^{v,n}_{t}\) in (2.11) is determined by the cascade condition

Together, (2.11) and (2.12) specify the set of absorbed particles at any given time and the corresponding jumps in the total feedback felt by the particles. Rephrasing the main conclusion of Feinstein and Søjmark [10, Proposition 3.1], the two defining properties of this cascade condition for the contagion mechanism are that (i) the specification of the set \(\mathcal{D}_{t}\) of absorbed particles is consistent with the dynamics (2.8), and (ii) it gives the càdlàg solution with the greatest values of \((X^{1}_{t},\ldots ,X^{n}_{t})\) in the sense that any other consistent càdlàg specification of \(\mathcal{D}_{t}\) would yield lower values for at least one of the particles while not increasing the values of any of them. This is what gives us the greatest clearing capital in the interbank system from [10].
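The cascade condition can be read as the outcome of a monotone fixed-point iteration started from the left-limiting state. The sketch below implements such an iteration in a hypothetical simplified setting with linear feedback, in which an absorbed particle \(j\) lowers particle \(i\) by \(u^{j}\cdot v^{i}/n\). This linear form is our assumption for illustration and is not the exact form of the maps \(\Xi ^{n}\) and \(\Theta ^{n}_{j}\) (whose displays are not reproduced here); the names `cascade_defaults`, `x_left` and `alive` are likewise hypothetical.

```python
from typing import Sequence, Set


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def cascade_defaults(x_left: Sequence[float],
                     alive: Sequence[bool],
                     u: Sequence[Sequence[float]],
                     v: Sequence[Sequence[float]]) -> Set[int]:
    """Smallest self-consistent set of particles absorbed at time t.

    Hypothetical simplified setting (not the exact maps of the paper):
    x_left[i] is the left limit X^i_{t-}, alive[i] says whether particle i
    has not been absorbed before t, and an absorbed particle j pushes
    particle i down by dot(u[j], v[i]) / n, with dot(u[j], v[i]) >= 0.
    """
    n = len(x_left)
    # Round 0: alive particles that have reached the origin at time t.
    absorbed = {i for i in range(n) if alive[i] and x_left[i] <= 0}
    while True:
        # Particles pushed to (or below) zero by the current absorbed set.
        newly = {i for i in range(n)
                 if alive[i] and i not in absorbed
                 and x_left[i] - sum(dot(u[j], v[i]) for j in absorbed) / n <= 0}
        if not newly:
            return absorbed
        absorbed |= newly
```

Because the feedback is nonnegative (mirroring \(u^{i}\cdot v^{j}\geq 0\) in Assumption 2.4), the absorbed set only grows, so the loop stops after at most \(n\) rounds. An induction shows that the result is contained in every other self-consistent absorbed set, and fewer absorptions mean higher surviving positions, which is the finite analogue of property (ii) above in this simplified setting.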

To connect the particle system (2.8) with the coupled McKean–Vlasov problem (1.2), we work with the empirical measures

where the family \((X^{i})_{i=1, \dots ,n}\) is the unique strong solution to (2.8) equipped with the cascade condition (2.11), (2.12). We stress that each \(X^{i}\) is viewed as a random variable with values in the Skorokhod path space \(D_{\mathbb{R}}=D_{\mathbb{R}}([0,T])\) for a given terminal time \(T>0\). When working to identify the limiting behaviour of (2.13) as \(n\rightarrow \infty \), it will be helpful to have a precise concept of a random Markov kernel, which we introduce next. In terms of notation, we remark that as in (2.13), we use boldface notation whenever we define a random probability measure.

Definition 2.5

By a Markov kernel \(P=(P_{x})_{x\in \mathcal{X}}\) on \(D_{\mathbb{R}}\), for a given Polish space \(\mathcal{X}\), we understand a probability-measure-valued mapping \(x\mapsto P_{x}\in \mathcal{P}(D_{\mathbb{R}})\) such that \(x \mapsto P_{x}[A] \) is Borel-measurable for any \(A \in \mathcal{B}(D_{\mathbb{R}})\). We say that \(\mathbf{P}=(\mathbf{P}_{ x})_{x\in \mathcal{X}}\) is a random Markov kernel on \(D_{\mathbb{R}}\) if the mapping \(x\mapsto \mathbf{P}_{ x}\) assigns to each \(x\in \mathcal{X}\) a random probability measure \(\mathbf{P}_{ x}: \Omega \rightarrow \mathcal{P}(D_{\mathbb{R}})\) on a fixed Polish background space such that \((x,\omega )\mapsto \mathbf{P}_{ x}(\omega )[A]\) is Borel-measurable for all \(A\in \mathcal{B}(D_{\mathbb{R}})\).

We can now state our main result about the limit points of the empirical measures (2.13) as we send the number of particles to infinity.

Theorem 2.6

Let \((\mathbf{P}^{n})_{n\geq 1}\) be the sequence of empirical measures defined in (2.13) and let Assumption 2.4 be satisfied. Then any subsequence of \((\mathbf{P}^{n})_{n\geq 1}\) has a further subsequence, still indexed by \(n\), such that \((\mathbf{P}^{n},B^{0})\) converges in law to a limit point \((\mathbf{P}^{\star},B^{0})\). For any such limiting pair, we can identify \(\mathbf{P}^{\star}\) with a random Markov kernel \((\mathbf{P}^{\star}_{u,v})_{(u,v)\in \mathbb{R}^{k}\times \mathbb{R}^{k}}\) on \(D_{\mathbb{R}}\) (in the sense of Definition 2.5) satisfying

for all Borel-measurable functions \(\phi : D_{\mathbb{R}}\rightarrow \mathbb{R}\), where the processes \(X^{u,v;\star}\) are càdlàg solutions to the coupled McKean–Vlasov system

Furthermore, the pair \((\mathbf{P}^{\star},B^{0})\), the particle-specific Brownian motions \(B^{u,v}\) and the initial conditions \(X^{u,v}_{0}\) are mutually independent.

The proof of Theorem 2.6 is the subject of Sect. 3. Here we only briefly discuss the intuition behind the identification of \(\mathbf{P}^{\star}\) as a random Markov kernel and what the proof of Theorem 2.6 then reduces to. Firstly, note that the empirical measures \(\mathbf{P}^{n}\) are random probability measures on \(\mathbb{R}^{k}\times \mathbb{R}^{k} \times D_{\mathbb{R}}\), so it is natural to work with weak convergence on this space. Thus a given limit point \((\mathbf{P}^{\star }, B^{0})\) yields in the first instance a random probability measure on \(\mathbb{R}^{k}\times \mathbb{R}^{k} \times D_{\mathbb{R}}\). Nevertheless, due to (2.9), we can consider \(\mathbf{P}^{\star}\) as a random variable \(\mathbf{P}^{\star }: \Omega \rightarrow \mathcal{P}_{ \pi}( D_{ \mathbb{R}})\), where the range \(\mathcal{P}_{\pi}(D_{\mathbb{R}})\) denotes the space of all Borel probability measures \(\mu \in \mathcal{P}(\mathbb{R}^{k}\times \mathbb{R}^{k} \times D_{ \mathbb{R}})\) with fixed marginal \(\mu \circ \mathfrak{p}_{1,2}^{-1}=\pi \) for \(\mathfrak{p}_{1,2}(u,v,\eta )=(u,v)\). This space \(\mathcal{P}_{ \pi}(D_{\mathbb{R}})\) can then be seen to be isomorphic to the space of all Markov kernels \((\nu _{u,v})_{(u,v)\in \mathbb{R}^{k} \times \mathbb{R}^{k}}\) on \(D_{\mathbb{R}}\) under the identification

For any limit point of the empirical measures, we thus have a random probability measure \(\mathbf{P}^{\star}: \Omega \rightarrow \mathcal{P}_{\pi}(D_{ \mathbb{R}})\) corresponding to a random Markov kernel for \(D_{\mathbb{R}}\) via the identification

which is to be understood \(\omega \) by \(\omega \), where the key requirement is that the mapping \((u,v,\omega )\mapsto \mathbf{P}^{\star}_{u,v}( \omega )[A]\) must be measurable for every \(A\in \mathcal{B}(D_{\mathbb{R}})\).
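When \(\pi \) is supported on finitely many types, the identification between \(\mathcal{P}_{\pi}(D_{\mathbb{R}})\) and Markov kernels reduces to elementary conditioning. The sketch below illustrates this for an empirical joint law over a hypothetical finite set of type labels and a discretised path outcome (for example, whether a path is absorbed by time \(T\)). The labels and outcomes are illustrative assumptions; for general \(\pi \), the existence of the kernel rests instead on the disintegration theorem for Polish spaces.

```python
from collections import Counter, defaultdict
from fractions import Fraction


def joint_to_kernel(samples):
    """Disintegrate the empirical law of (type, outcome) pairs.

    samples: list of (type, outcome) pairs; 'type' is a hashable stand-in
    for (u, v) and 'outcome' a discretised path feature (hypothetical).
    Returns (pi, kernel): pi[t] is the type marginal and kernel[t][o] the
    conditional probability of outcome o given type t.
    """
    n = len(samples)
    type_counts = Counter(t for t, _ in samples)
    kernel = defaultdict(dict)
    for (t, o), count in Counter(samples).items():
        kernel[t][o] = Fraction(count, type_counts[t])
    pi = {t: Fraction(c, n) for t, c in type_counts.items()}
    return pi, dict(kernel)


def kernel_to_joint(pi, kernel):
    """Inverse map: mu[(t, o)] = pi[t] * kernel[t][o]."""
    return {(t, o): pi[t] * p for t in pi for o, p in kernel[t].items()}
```

Exact fractions are used so that the round trip \(\mu \mapsto (\nu _{u,v}) \mapsto \mu \) can be checked without rounding error.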

In view of the above, checking that a limit point \(\mathbf{P}^{\star}\) yields a solution to the desired McKean–Vlasov system (2.15) amounts to checking that each \(\mathbf{P}^{\star}_{u,v}\) realises the conditional law of \(X^{u,v;\star} \) on the path space \(D_{\mathbb{R}}\) given the pair \((B^{0},\mathbf{P}^{\star})\), which is precisely (2.14). In particular, we are looking for the relations

for all Borel-measurable \(f:\mathbb{R}^{k} \times \mathbb{R}^{k} \rightarrow \mathbb{R}\) and \(\phi : D_{\mathbb{R}}\rightarrow \mathbb{R}\). The proof of Theorem 2.6 is implemented in this spirit, by constructing a random Markov kernel from a given limiting pair \((\mathbf{P}^{\star},B^{0})\) in a way that the above relations can be conveniently verified (see in particular Proposition 3.8).

Remark 2.7

We note that the empirical measures \(\mathbf{P}^{n}\) become independent of the idiosyncratic noise in the limit, but the theorem does not guarantee that the corresponding limit points become strictly a function of the common noise, which explains the appearance of \(\mathbf{P}^{\star}\) on the right-hand side of (2.14) and (2.15). Nonetheless, \((\mathbf{P}^{\star},B^{0})\) being independent of the idiosyncratic noise means that this system is qualitatively the same as (1.2). Analogously to Ledger and Søjmark [21], the system (2.14), (2.15) constitutes a ‘relaxed’ solution of the heterogeneous system (1.2). This is similar to the weak solution concepts for mean-field games introduced in Carmona et al. [5] and later considered for general McKean–Vlasov SDEs in Hammersley et al. [17].

Whenever there is pathwise uniqueness of (2.15), we get a unique mean-field limit, given by a pair \((\mathbf{P}^{\star},B^{0})\) for which \(\mathbf{P}^{\star}\) is in fact \(B^{0}\)-measurable. In particular, we then have full convergence of the particle system, and the additional appearance of \(\mathbf{P}^{\star}\) in the conditioning on the right-hand sides of (2.14) and (2.15) can be dropped. Since Theorem 2.2 provides conditions for uniqueness of solutions with continuous dynamics, we get the following result.

Theorem 2.8

Under the assumptions of Theorem 2.2, there is uniqueness of the limit points in Theorem 2.6, and therefore \((\mathbf{P}^{n},B^{0})\) converges in law to a unique limit \((\mathbf{P}^{\star},B^{0})\). Moreover, this limit is now characterised by a Brownian motion \(B^{0}\) and a random Markov kernel \((\mathbf{P}^{\star}_{u,v})_{(u,v)\in \mathbb{R}^{k}\times \mathbb{R}^{k}}\) satisfying

for all Borel-measurable \(\phi : D_{\mathbb{R}}\rightarrow \mathbb{R}\), where the system \((X^{u,v})_{(u,v)\in \mathbb{R}^{k}\times \mathbb{R}^{k}}\) constitutes the unique family of continuous processes obeying the dynamics (1.2) with \(B^{0}\) independent of each \(B^{u,v}\).

Following the arguments in Ledger and Søjmark [20, Theorem 2.3], the proof of Theorem 2.8 is a consequence of Theorem 2.6 together with the estimates in Sect. 4.

3 Limit points of the particle system

This section is dedicated to the proof of Theorem 2.6. Our first task is to establish a suitable tightness result for the pairs \((\mathbf{P}^{n},B^{0})\), which is done in Sect. 3.2, and then we conclude in Sect. 3.3 that the resulting limit points \((\mathbf{P}^{\star},B^{0})\) can be characterised as solutions to (2.14), (2.15).

To implement these arguments, we follow the broad approach of Ledger and Søjmark [21], which in turn builds on several antecedent ideas from Delarue et al. [8]. Since [21] deals with a symmetric particle system, substantial adjustments to the arguments are needed, but the overall flavour remains that of identifying a limiting martingale problem, as is typical for convergence results of this type. More generally, we stress that our particle system is not exchangeable and the positive feedback from defaults is of a rather singular nature; so the setting is quite different from the classical frameworks for propagation of chaos. In particular, the singular interaction leads us to work with Skorokhod’s M1-topology as in [8, 21, 22]. This is very different from the related work by Inglis and Talay [18], discussed in the next subsection, where the particles are smoothly interacting. For a careful introduction to the M1-topology, we refer to the excellent monograph by Whitt [27, Sects. 3.3 and 12.3].

3.1 A different approach to the heterogeneity

As we mentioned in the introduction, the first paper to look at heterogeneity in particle systems with a contagion mechanism similar to (2.8) is [18], and this remains to the best of our knowledge the only paper to have examined the issue of mean-field convergence for such particle systems.

The analysis in [18], however, differs quite substantially from ours, since the contagion mechanism is smoothed out in time. So there are no explosion times or jumps to consider, either in the approximating particle system or in the mean-field limit; see the dynamics in (3.1) below. Also, there is no common noise to deal with, as the system is driven by fully independent Brownian motions. Unsurprisingly, there are some similarities in the proofs of tightness and convergence, but we need a different topology in order to have tightness, and, as we turn to next, the heterogeneity in our system plays out very differently in the analysis.

Indeed, the ‘philosophy’ of how the heterogeneity is dealt with in [18] when passing to a mean-field limit is entirely different from ours. While our aspiration is to see the heterogeneous structure reflected in the limiting problem, [18] is interested in justifying how a homogeneous limiting problem can also serve as a reasonable approximation to a large particle system with heterogeneous interactions. Slightly simplified and reformulated for the positive half-line, the contagious particle system in [18] takes the form

with \(\tau _{i}=\inf \{ t>0 : X^{i}_{t} \leq 0 \}\) and \(S_{i}^{n}:=\sum _{j=1}^{n} J_{ij}\) for \(i=1,\ldots ,n\). Clearly, the asymmetry of the so-named synaptic weights \(J_{ij}\) means that each particle in (3.1) can feel the contagion in a very different way. However, following on from the above, [18] is interested in connecting the limiting behaviour of this heterogeneous system to a single homogeneous McKean–Vlasov problem

where as usual \(\tau =\inf \{ t>0 : X_{t} \leq 0 \}\). After some results on the well-posedness of (3.2), [18] attacks the aforementioned idea by tracking the particle system (3.1) through the particular families of weighted empirical measures

The main result then says that provided we have

each \((P_{i}^{n})_{n\geq 1}\) converges weakly to the law of the unique solution to (3.2), independently of the index \(i\) and independently of any other aspects of the heterogeneity.

As a minor caveat, however, there seems to be a problem with the proof of [18, Theorem 2.4] underlying the above convergence. Specifically, the first objective is to verify that for any given \(i\), the weighted empirical measures \(P_{i}^{n}\) converge to some \(P\) as \(n\rightarrow \infty \), where \(P\) solves a suitable nonlinear martingale problem associated with the desired McKean–Vlasov limit independently of \(i\). On close inspection, a crucial step in [18, Sect. 5.3] towards affirming the aforementioned result relies on having

for \(i\neq j\), for a suitable time- and measure-dependent differential operator \(\mathfrak{L}\), yielding the martingale problem. However, since \(\mathfrak{L}_{(\cdot ,P_{i}^{n})}\) can be seen to give the generator of the \(i\)th particle and \(P_{i}^{n}\) can differ significantly from \(P_{j}^{n}\) depending on the structure of the synaptic weights \((J_{ij})_{i,j\leq n}\), the above equality would not appear to hold in general. It is not clear that this discrepancy can be fixed given just the assumptions mentioned above, but one should certainly be able to handle it by placing additional constraints on the \(J_{ij}\) so that an error term can be singled out which becomes negligible in the limit. Alternatively, one could apply the methodology of the present paper in order to arrive at a heterogeneous mean-field limit, using a kernel structure \(J_{ij}=\kappa (u^{j},v^{i})\) to capture the asymmetry of the synaptic weights.
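To make the suggested kernel structure concrete, the following sketch assembles weights \(J_{ij}=\kappa (u^{j},v^{i})\) from type vectors, together with the row sums \(S_{i}^{n}\). The bilinear choice \(\kappa (u,v)=u\cdot v\) is a hypothetical illustration, not a choice made in [18], and the helper names are ours.

```python
from typing import List, Sequence

Vector = Sequence[float]


def kappa(u: Vector, v: Vector) -> float:
    """Hypothetical interaction kernel kappa(u, v) = u . v (illustrative choice)."""
    return sum(a * b for a, b in zip(u, v))


def synaptic_weights(us: List[Vector], vs: List[Vector]) -> List[List[float]]:
    """J[i][j] = kappa(u^j, v^i): impact type of j paired with exposure type of i."""
    n = len(us)
    return [[kappa(us[j], vs[i]) for j in range(n)] for i in range(n)]


def row_sums(J: List[List[float]]) -> List[float]:
    """S_i^n = sum_j J_ij, the normalising constants appearing in (3.1)."""
    return [sum(row) for row in J]
```

Each \(J_{ij}\) depends on \(i\) only through \(v^{i}\) and on \(j\) only through \(u^{j}\), so the asymmetry is carried by type parameters that can survive in a heterogeneous mean-field limit.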

3.2 Tightness for the finite particle systems

We wish to work with convergence in the Skorokhod space on compact time intervals \([0,T]\). This involves pointwise convergence at the initial time \(t=0\) with the limiting system having zero loss at this time, as required by our notion of solution. To ensure this, we establish uniform control over the smallness of the total feedback near the initial time, which is the subject of the next lemma. Concerning the final time, we only obtain convergence for continuity points of the limiting system. To establish tightness without an analogue of the next lemma at the endpoint, we extend the particle system continuously beyond its final time \(T\) as in Delarue et al. [7]. If one is not interested in ensuring initial regularity, one could also consider continuously embedding the particle system in the larger time interval \([-1,T]\) as in the recent paper by Cuchiero et al. [6], allowing more general initial conditions.

Lemma 3.1

Let Assumption 2.4 be satisfied and define

for all \(t\geq 0\). For any \(\epsilon >0\) and \(\delta >0\), there is a small enough \(t=t(\epsilon ,\delta )>0\) such that

Proof

Let \(\epsilon ,\delta >0\) be given. Define for \(i\leq n\) the positive constants

Then \(F^{i,n}\) in (3.3) satisfies \(F^{i,n}_{s}-F^{i,n}_{s-}\leq M_{i}|\mathcal{D}_{s}|\) at any time \(s\geq 0\), where \(|\mathcal{D}_{s}|\) is the number of particles absorbed at time \(s\), given by the cascade condition (2.11), (2.12). By Assumption 2.4, the set \(S(V)\) is compact and the \(u^{i}\) likewise belong to the compact set \(S(U)\); so we can take \(M>0\) large enough such that \(M_{i}\leq M\) uniformly in \(i\leq n\) and \(n\geq 1\). This yields a bound on the jumps of each particle, namely

for any \(s > 0\). Next, we consider the number of particles starting within a distance of \(\delta \) from the origin, as given by

and we then split the probability of interest (3.4) into

By the weak convergence (2.10) as enforced by Assumption 2.4, we get

provided that

Using the bound on \(p_{0}(\,\cdot \,|u,v)\) from Assumption 1.3, we see that the left-hand side of the above inequality is of order \(O(\delta ^{1+\beta})\) as \(\delta \downarrow 0\), for some \(\beta >0\). Since decreasing \(\delta >0\) only increases the probability (3.4), we can therefore assume without loss of generality that (3.7) is satisfied for our fixed \(\delta >0\).

Now let \(\varsigma _{i}=\inf \{s>0 : F^{i,n}_{s}>\delta /2 \}\). For any \(i\leq n\), we claim that on each of the events \(\{ F^{i,n}_{t}\geq \delta , N^{n}_{0,\delta}\leq \lfloor n\delta /4M \rfloor \}\), there must be at least \(\lceil n\delta /8M \rceil \) of the at least \(n-\lfloor n\delta /4M\rfloor \) particles \(X^{j}\) with initial positions \(X^{j}_{0}> \delta \) which also satisfy

Indeed, if there were fewer than \(\lceil n\delta /8M \rceil \) such particles (on any of the given events), then there could be at most a total of

particles \(X^{j}\) with \(\tau _{j}<\varsigma _{i}\land t\) or \(X^{j}_{\varsigma _{i}\land t-}=0\) (on that event), which by (3.5) can only cause a downward jump of all other particles by at most \(3\delta /8\). But this would be insufficient for any particle \(X^{j}\) with \(X^{j}_{0}> \delta \) that does not satisfy (3.8) to be absorbed before or at time \(\varsigma _{i}\land t\). Thus, again by (3.5), we would end up with \(F^{i,n}_{\varsigma _{i}\land t}\leq 3\delta /8< \delta /2\). Since right-continuity gives \(F^{i,n}_{\varsigma _{i}}\geq \delta /2\) whenever \(\varsigma _{i}\leq t\), this forces \(\varsigma _{i}>t\), and hence \(F^{i,n}_{t} \leq 3\delta /8\), which contradicts \(F^{i,n}_{t} \geq \delta \). It follows that for each \(i\leq n\), we must in particular have

where

By definition of each \(\varsigma _{i}\) and Assumption 2.4, there is a uniform \(c>0\) such that (3.8) implies

and hence

for all \(i\leq n\), where \(Y^{i}_{s} :=\int _{0}^{s}\sigma _{i}(r) \sqrt{1-\rho ^{2}} \,dB^{i}_{r}\) and \(Z^{i}_{s} :=\int _{0}^{s}\rho \,\sigma _{i}(r) \,dB^{0}_{r}\). Taking \(t<\delta 2^{-5}\) and defining

we thus have

Using the weak convergence (2.9) from Assumption 2.4 and the strong law of large numbers for the independent Brownian motions \(B^{i}\), we can deduce that

provided that

where \(B\) is a standard Brownian motion. As \(\sigma \) is bounded uniformly in \((u,v,r)\), this can certainly be achieved by taking \(t\) small enough relative to \(\delta \). Moreover, taking \(t\) small enough relative to \(\delta \) also ensures that we can make

as small as we like. Consequently, recalling (3.6) and (3.7), we can indeed find a small enough \(t=t(\epsilon ,\delta )>0\) such that

which completes the proof. □
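The final step of the proof rests on the fact that, for fixed \(\delta \), a bounded-volatility stochastic integral is very unlikely to travel a distance of order \(\delta \) over \([0,t]\) once \(t\) is small. As an illustration (not the exact display used in the proof), if \(|\sigma |\leq \bar{\sigma}\), then by the Dambis–Dubins–Schwarz theorem and the reflection principle, \(\mathbb{P}(\sup _{s\leq t}|\int _{0}^{s}\sigma _{r}\,dB_{r}|\geq a)\leq 2\,\mathrm{erfc}(a/(\bar{\sigma}\sqrt{2t}))\). The function name and the constant \(\bar{\sigma}\) below are our own notation.

```python
import math


def sup_tail_bound(a: float, t: float, sigma_bar: float = 1.0) -> float:
    """Upper bound on P(sup_{s <= t} |int_0^s sigma_r dB_r| >= a) for |sigma| <= sigma_bar.

    The stochastic integral is a time-changed Brownian motion beta run up to
    time at most sigma_bar**2 * t (Dambis-Dubins-Schwarz), and the reflection
    principle gives P(sup_{r <= T} |beta_r| >= a) <= 4 P(beta_T >= a)
    = 2 erfc(a / sqrt(2 T)). Assumes a > 0.
    """
    if t <= 0:
        return 0.0
    horizon = sigma_bar ** 2 * t
    return min(1.0, 2.0 * math.erfc(a / math.sqrt(2.0 * horizon)))
```

With \(a\) proportional to \(\delta \), the bound decays like \(\exp (-a^{2}/(2\bar{\sigma}^{2}t))\) as \(t\downarrow 0\), which is one way to make precise the phrase "taking \(t\) small enough relative to \(\delta \)".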

As already mentioned in (2.13), given a family of solutions to the particle system (2.8), on an arbitrary time interval \([0,T]\), we define the empirical measures

where each \(X^{i}\) is a random variable with values in \(D_{\mathbb{R}}=D_{\mathbb{R}}([0,T])\) for the given \(T>0\). Note that each \(\mathbf{P}^{n}\) is then a random probability measure with values in \(\mathcal{P}(\mathbb{R}^{k}\times \mathbb{R}^{k}\times D_{ \mathbb{R}})\).

Our next result shows that these empirical measures are tight in a suitable sense. The previous lemma serves as a crucial ingredient in the proof.

Proposition 3.2

Let \(T>0\) be given and consider the solutions \(((X^{i}_{t})_{t\in [0,T]})_{i=1,\dots ,n}\) of (2.8), for all \(n\geq 1\), where we assume that Assumption 2.4 is satisfied. For an arbitrary \(S>T\), we extend the paths of \(X^{i}\) from \([0,T]\) to \([0,S]\) by setting \(X^{i}_{t}:=X^{i}_{T}\) for all \(t\in [T,S]\). Let \(\mathbf{P}^{n}\) be the empirical measures defined as in (3.9), but with each \(X^{i}\) taking values in \(D_{\mathbb{R}}=D_{\mathbb{R}}([0,S])\), where we endow \(D_{\mathbb{R}}\) with Skorokhod’s M1-topology (see e.g. Avram and Taqqu [2, Proposition 2] and Whitt [27, Sect. 12.3]). Moreover, let \(\mathcal{P}(\mathbb{R}^{k}\times \mathbb{R}^{k}\times D_{\mathbb{R}})\) be endowed with the topology of weak convergence of measures as induced by the M1-topology on \(D_{\mathbb{R}}\). Then the empirical measures \(\mathbf{P}^{n}\) for \(n\geq 1\) form a tight sequence of random variables with values in \(\mathcal{P}(\mathbb{R}^{k}\times \mathbb{R}^{k}\times D_{\mathbb{R}})\).

Proof

As in Avram and Taqqu [2, Sect. 2], we define

and work with the oscillation function for the M1-topology given by

Recalling the definition of \(F^{i,n}_{t}\) in (3.3), it follows from Assumption 2.4 that the paths \(t\mapsto F^{i,n}_{t}\) are increasing. Exploiting this fact, we can check that

and hence, using the bounds on the coefficients given by Assumption 2.4, the nice continuous dynamics of

allow a simple application of Markov’s inequality and the Burkholder–Davis–Gundy inequality to deduce

for a fixed constant \(C_{0}>0\) that is uniform in \(i\leq n\) and \(n\geq 1\). Armed with this estimate, it follows from [2, Theorem 1] that we get the bound

for all \(\delta ,\varepsilon >0\) and \(n\geq 1\), for another fixed constant \(\tilde{C}_{0}>0\). Moreover, we can apply Lemma 3.1 to deduce that

which is the key part of why we can get tightness for the M1-topology. At the other endpoint, we automatically have

as the probability vanishes for all \(\delta < S-T\) by our continuous extension \(X^{i}_{t}=X^{i}_{T}\) for \(t\in [T,S]\). Next, the dynamics of each \(X^{i}\) and Assumption 2.4 are easily seen to imply the compact containment condition

By Assumption 2.4, there is a uniform constant \(c>0\) such that \(|u^{i}|+|v^{i}|\leq c \) for all \(i=1,\ldots ,n\) and \(n\geq 1\). If we consider any set \(K \in \mathcal{B}(\mathbb{R}^{k}\times \mathbb{R}^{k})\otimes \mathcal{B}( D_{\mathbb{R}})\) of the form \(K = \bar{B}_{c}(0) \times A\), where \(\bar{B}_{c}(0)\) is the closed ball of radius \(c>0\) around the origin in \(\mathbb{R}^{k}\times \mathbb{R}^{k} \), we thus have

and so it remains to identify a suitable set \(A\) which we can control uniformly in \(i=1,\ldots ,n\) as \(n\rightarrow \infty \). To this end, let

It follows from (3.10)–(3.12) that we can find \(\delta _{\epsilon ,m}>0\) such that

Using also (3.13) and setting

for a large enough \(R_{\varepsilon}>0\), it follows from the above and (3.14) that

for any \(\varepsilon >0\). By construction, each \(A_{\varepsilon ,j}\) is relatively compact in \(D_{\mathbb{R}}\) for the M1-topology, as follows e.g. from the characterisation in Whitt [27, Theorem 12.12.2]; so each \(K_{\varepsilon ,j}\) is compact for the M1-topology. Moreover, (3.15) yields

It remains to note that the set \(\bigcap _{j=1}^{\infty }\{\mu :\mu (K^{c}_{\varepsilon ,j})\leq 2^{-j} \}\) is closed in \(\mathcal{P}(D_{\mathbb{R}})\) by the Portmanteau theorem (under the topology of weak convergence of measures induced by the M1-topology on \(D_{\mathbb{R}}\)) as each \(K^{c}_{\varepsilon ,j}\) is open, and that it forms a tight family of probability measures by construction, as each \(K_{\varepsilon ,j}\) is compact. Therefore, since \(\mathcal{P}(D_{\mathbb{R}})\) is a Polish space for the topology we are working with, Prokhorov’s theorem shows that the set is relatively compact, and hence compact, being closed. In turn, we can conclude that \((\mathbf{P}^{n})_{n\geq 1}\) is indeed a tight sequence of random probability measures when \(\mathcal{P}(D_{\mathbb{R}})\) is given the topology of weak convergence induced from the M1-topology on \(D_{\mathbb{R}}\). □
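The oscillation function in the proof follows Avram and Taqqu [2]. Since its display is not reproduced here, the sketch below assumes the standard form for real-valued paths, \(\omega (x,\delta )=\sup \{\|x(t_{2})-[x(t_{1}),x(t_{3})]\| : t_{1}<t_{2}<t_{3},\ t_{3}-t_{1}\leq \delta \}\), evaluated on a finite time grid, where \([a,b]\) denotes the segment between \(a\) and \(b\). It also illustrates why monotone paths such as \(t\mapsto F^{i,n}_{t}\) contribute no M1 oscillation.

```python
from itertools import combinations
from typing import Sequence


def segment_distance(x1: float, x2: float, x3: float) -> float:
    """Distance from x2 to the segment between x1 and x3."""
    lo, hi = min(x1, x3), max(x1, x3)
    if lo <= x2 <= hi:
        return 0.0
    # Outside the segment, the nearest point is one of its endpoints.
    return min(abs(x2 - x1), abs(x2 - x3))


def m1_oscillation(times: Sequence[float], values: Sequence[float], delta: float) -> float:
    """Sup of segment_distance over grid triples t1 < t2 < t3 with t3 - t1 <= delta.

    times must be strictly increasing; the brute-force O(N^3) search is only
    meant for small illustrative grids.
    """
    best = 0.0
    for a, b, c in combinations(range(len(times)), 3):
        if times[c] - times[a] <= delta:
            best = max(best, segment_distance(values[a], values[b], values[c]))
    return best
```

A single upward jump costs nothing here, because the jump value lies between its neighbours; only a path that goes up and comes back down within time \(\delta \) is penalised. This is why the M1-topology tolerates the jumps caused by the cascade.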

3.3 Identifying a suitable probabilistic setup for the mean-field limit

Recall that the empirical measures \(\mathbf{P}^{n}\) are random variables taking values in the space \(\mathcal{P}(\mathbb{R}^{k}\times \mathbb{R}^{k}\times D_{\mathbb{R}})\) of probability measures. For \((u,v,\eta )\in \mathbb{R}^{k}\times \mathbb{R}^{k}\times D_{ \mathbb{R}} \), we define the coordinate projections

as well as

Writing \(\mathbf{P}^{n}_{t}:=\mathbf{P}^{n} \circ \mathfrak{p}_{t}^{-1}\) for \(t\geq 0\) and \(\pi ^{n}:=\mathbf{P}^{n} \circ \mathfrak{p}_{(1,2)}^{-1}\), the conditions (2.9) and (2.10) from Assumption 2.4 read as

where the mode of convergence is weak convergence of measures. Given this, we can make the following simple observation, guaranteeing that the limiting distribution \(\pi \) behaves as we should like it to behave.

Lemma 3.3

Let Assumption 2.4 be in place. Writing \((U,V)\sim \pi \), let \(S(U)\) and \(S(V)\) denote the supports of \(U\) and \(V\), respectively. Then \(S(U)\) and \(S(V)\) are both compact in \(\mathbb{R}^{k}\), and we have that

Proof

First of all, the compactness follows by noting that (3.16) gives

for a large enough \(C>0\), due to the Portmanteau theorem and Assumption 2.4. Moreover, again by Assumption 2.4, it holds for each \(n\geq 1\) that

Since the marginals are weakly convergent, by (3.16), we have weak convergence of the product measures \(\pi ^{n}_{1} \otimes \pi ^{n}_{2}\) to \(\pi _{1} \otimes \pi _{2}\), and hence the Portmanteau theorem gives

by the previous equality. In turn, for \(\pi _{1}\)-a.e. \(v\in \mathbb{R}^{k}\), it holds that \(u \cdot v \geq 0\) for \(\pi _{2}\)-a.e. \(u\in \mathbb{R}^{k}\), which finishes the proof. □

Throughout the rest of the paper, we fix a terminal time \(T>0\) and an arbitrary \(S>T\) as in Proposition 3.2. Naturally, our arguments will apply for any \(T>0\).

Proposition 3.2 gives that any subsequence of \((\mathbf{P}^{n},B^{0})_{n\geq 1}\) has a further subsequence that converges in law (by Prokhorov’s theorem) to a limit point \((\mathbf{P}^{\star},B^{0})\), whose law we denote by \(\mathbb{P}^{\star}_{0}\). The limiting law \(\mathbb{P}^{\star}_{0}\) is realised as a Borel probability measure on the product-\(\sigma \)-algebra \(\mathcal{B}(\mathcal{P}(\mathbb{R}^{k}\times \mathbb{R}^{k} \times D_{ \mathbb{R}})) \otimes \mathcal{B}(C_{\mathbb{R}}) \) such that the second marginal of \(\mathbb{P}^{\star}_{0}\) is the law of a standard Brownian motion \(B^{0}:\Omega \rightarrow C_{\mathbb{R}}\) with \(C_{\mathbb{R}}=C_{\mathbb{R}}([0,S])\), while the first marginal is the law of a random probability measure \(\mathbf{P}^{\star}:\Omega \rightarrow \mathcal{P}(\mathbb{R}^{k} \times \mathbb{R}^{k} \times D_{\mathbb{R}})\) with \(D_{\mathbb{R}}=D_{\mathbb{R}}([0,S])\). Moreover, as Assumption 2.4 gives \(\mathbf{P}^{\star}(\omega ) \circ \mathfrak{p}_{(1,2)}^{-1} =\pi \) for all \(\omega \in \Omega \), we can write \(\mathbf{P}^{\star}:\Omega \rightarrow \mathcal{P}_{\pi}(D_{ \mathbb{R}})\), where \(\mathcal{P}_{\pi}(D_{\mathbb{R}})\) denotes the subspace of all \(\mu \in \mathcal{P}(\mathbb{R}^{k}\times \mathbb{R}^{k} \times D_{ \mathbb{R}})\) with fixed marginal \(\mu \circ \mathfrak{p}_{(1,2)}^{-1}=\pi \), as in the discussion after the statement of Theorem 2.6. Later, we use this to identify \(\mathbf{P}^{\star}\) with a random Markov kernel, as per Definition 2.5.

Throughout what follows, we fix a given limit point \((\mathbf{P}^{\star},B^{0})\). For concreteness, we take this limit point \((\mathbf{P}^{\star},B^{0})\) to be defined on the canonical background space \((\Omega _{0},\mathcal{B}(\Omega _{0}),\mathbb{P}^{\star}_{0})\), where we set \(\Omega _{0}:=\mathcal{P}_{\pi}(D_{\mathbb{R}})\times C_{\mathbb{R}}\) and, as discussed above, we let \(\mathbb{P}^{\star}_{0}\in \mathcal{P}(\Omega _{0})\) denote the limit of the joint laws of \((\mathbf{P}^{n},B^{0})_{n\geq 1}\) along the given subsequence. Then we can simply write \((\mathbf{P}^{\star},B^{0})\) as the identity map \((\mathbf{P}^{\star},B^{0})(\mu ,w)=(\mu ,w)\) on \(\Omega _{0}\) and we have \(\mathcal{B}(\Omega _{0})=\sigma (\mathbf{P}^{\star},B^{0})\).

Given \(\mathbb{P}_{0}^{\star}\), we can define a probability measure \(\mathbb{P}^{\star}\) on

by letting

for \(O\in \mathcal{B}(\mathbb{R}^{k} \times \mathbb{R}^{k})\), \(E\in \mathcal{B} ( \mathcal{P}_{\pi}(D_{\mathbb{R}}) \times C_{ \mathbb{R}} )\) and \(A\in \mathcal{B}( D_{\mathbb{R}})\). Consider the disintegration of \(\mathbb{P}^{\star}\) with respect to the projection \(\hat{\mathfrak{p}}_{1,2}((u,v),(\mu ,w),\eta ):=((u,v),(\mu ,w))\). This yields a Markov kernel

such that

for any \(O\times E \times A \in \mathcal{B}(\mathbb{R}^{k} \times \mathbb{R}^{k}) \otimes \mathcal{B} ( \mathcal{P}_{\pi}\times C_{\mathbb{R}} ) \otimes \mathcal{B}(D_{\mathbb{R}}) \) by Tonelli’s theorem, since

Indeed, we have \(\mu [O\times D_{\mathbb{R}}]=\pi [O]\) for \(\mathbb{P}_{0}^{\star}\)-a.e. \((\mu ,w)\in E\), and hence

for any given set \(O\times E\) as above. From here, we define a family of probability measures \(\mathbb{P}^{\star}_{u,v}\) on \(\mathcal{B} ( \mathcal{P}_{\pi}(D_{\mathbb{R}})\times C_{\mathbb{R}} ) \otimes \mathcal{B}( D_{\mathbb{R}})\) by

where the joint measurability of each \(\mathbb{P}_{u,v}^{\mu , w}[A]\) ensures that

is a Markov kernel. Notice also that the marginal of \(\mathbb{P}_{u,v}^{\star}\) on \((\Omega _{0},\mathcal{B}(\Omega _{0}))\) is always \(\mathbb{P}_{0}^{ \star}\) since for any \(E\in \mathcal{B}(\Omega _{0})\),

The family of probability measures \(\mathbb{P}^{\star}_{u,v}\) will play a critical role in what follows.

On the background space \((\Omega _{\star},\mathcal{B}(\Omega _{\star}))\), we define the three random variables

By construction, for any \((u,v)\in \mathbb{R}^{k}\times \mathbb{R}^{k}\), we then have

and

for any \(A\in \mathcal{B}(D_{\mathbb{R}})\), \(E_{1}\in \mathcal{B}(\mathcal{P}_{\pi})\) and \(E_{2}\in \mathcal{B}(C_{\mathbb{R}})\). Consequently, we have

for all \(A\in \mathcal{B}(D_{\mathbb{R}})\), where the joint law of \((\mathbf{P}^{\star}, B^{0})\) is the same under every \(\mathbb{P}_{u,v}^{\star}\).

Now consider the hitting-time map \(\tau _{0}: D_{\mathbb{R}} \rightarrow \mathbb{R}\) given by

Then \(\tau _{0}(Z):\Omega _{\star}\rightarrow \mathbb{R}\) is the first hitting time of zero for the process \(Z\) defined above. The above leads us to define candidates for the limiting feedback in our mean-field problem, namely \(\mathcal{L}^{h;\star} : \Omega _{0} \rightarrow D_{\mathbb{R}}\), for \(h=1,\ldots ,k\), given by

on the background space \((\Omega _{0},\mathcal{B}(\Omega _{0}))\). Naturally, we may also view these as stochastic processes defined on \((\Omega _{\star},\mathcal{B}(\Omega _{\star}))\). In the next subsection, we confirm that these candidates indeed induce the limiting laws of the empirical feedback \(\mathcal{L}^{h,n}\) for \(h=1,\ldots ,k\) when considered on the probability space \((\Omega _{\star},\mathcal{B}(\Omega _{\star}), \mathbb{P}_{u,v}^{ \star})\), for \(\pi \)-almost every pair of type vectors \((u,v)\in \mathbb{R}^{k}\times \mathbb{R}^{k}\). To this end, it will be useful to consider the particular set of continuity times

Observe that the complement of \(\mathbb{T}_{\star}\) in \([0,T]\) is at most countable. Indeed, the assignment \(A\mapsto \int _{\mathbb{R}^{k}\times \mathbb{R}^{k}}\mathbb{P}^{ \star}_{u,v}[A]d\pi (u,v)\) yields a well-defined probability measure on \(\mathcal{B}(\Omega _{\star})\), and since \(Z\) is càdlàg by definition, dominated convergence also shows that each \(\mathcal{L}^{h;\star}\) is càdlàg. Hence the claim follows from Billingsley [4, Sect. 13]. We make abundant use of this fact in our convergence arguments.

Throughout what follows, we always endow the Skorokhod space \(D_{\mathbb{R}}\) with Skorokhod’s M1-topology. Moreover, we let \(\mathfrak{T}_{{\mathrm{wk}}}^{\text{M1}}\) denote the topology of weak convergence of measures on \(\mathcal{P}_{\pi}(D_{\mathbb{R}}) \cong \mathcal{P}(\mathbb{R}^{k} \times \mathbb{R}^{k} \times D_{\mathbb{R}})\) induced by the M1-topology on \(D_{\mathbb{R}}\).

3.4 Convergence of the feedback along with the empirical measures

In this subsection, we study the feedback from defaults felt by each institution as the number of institutions tends to infinity along a convergent subsequence of the empirical measures. These results are essential to the motivating applications, and given tightness of the system, they constitute the main technical step towards obtaining the mean-field limit.

Proposition 3.4

As in Sect. 3.3, let \(\mathbf{P}^{\star}\) be a given limit point in law of the empirical measures \(\mathbf{P}^{n}\), and consider the resulting Markov kernel \((\mathbb{P}^{\star}_{u,v})_{(u,v)\in \mathbb{R}^{k}\times \mathbb{R}^{k}}\) on \(D_{\mathbb{R}}\) defined in (3.20). For \(\pi \)-almost every \((u,v)\in \mathbb{R}^{k}\times \mathbb{R}^{k}\), the hitting-time map \(\tau _{0} : D_{\mathbb{R}}\rightarrow \mathbb{R}\) from (3.21) is continuous in the M1-topology on \(D_{\mathbb{R}}\) at \(\mathbb{P}^{\star}_{u,v}\)-almost every \(\eta \in D_{\mathbb{R}}\).

Proof

Let \(Y^{i}_{t}:=\int _{0}^{t}\sigma (s)dW^{i}_{s}\), where \(W^{i}_{t}=\rho B^{0}_{t} + \sqrt{1-\rho ^{2}}B^{i}_{t}\). Then consider the family of probability measures \(\mathbb{Q}^{n}\) on the Borel \(\sigma \)-algebra of \((\mathbb{R}^{k}\times \mathbb{R}^{k}) \times D_{\mathbb{R}}\times C_{ \mathbb{R}}\), defined by

where we are averaging over the joint laws of each particle and its martingale part for the particles within a given set of types \(O\). As in the proof of Proposition 3.2, we can show that \((\mathbb{Q}^{n})_{n\geq 1}\) is tight. Following the procedure in Sect. 3.3 and exploiting the continuity of the marginal projections, we can then deduce that there is a limit point \(\mathbb{Q}^{\star }\cong (\mathbb{Q}^{\star }_{u,v})_{(u,v)\in \mathbb{R}^{k} \times \mathbb{R}^{k}} \) in \(\mathcal{P}_{\pi}( D_{\mathbb{R}}\times C_{\mathbb{R}})\) such that

for all \(A\in \mathcal{B}(D_{\mathbb{R}})\), which we utilise at the end of the proof. Moreover, we see that

gives the law of Brownian motion time-changed by \(t\mapsto \int _{0}^{t}\sigma (s)^{2}ds\) for all \(n \geq 1\). Noting also that future increments of the \(Y^{i}\) are independent of the filtration generated by all the particles up to any given time, we can therefore let \((Z^{\star},Y^{\star})(\eta ,w):=(\eta ,w)\) for all \((\eta ,w)\in D_{\mathbb{R}}\times C_{\mathbb{R}}\), and conclude from the weak convergence that \(Y^{\star}\) has the law of a time-changed Brownian motion under \(\mathbb{Q}_{u,v}^{\star}\) with respect to the filtration generated by the pair \((Z^{\star},Y^{\star})\). Let \(\mathbb{T}:=\{t\geq 0 : \int _{\mathbb{R}^{k}\times \mathbb{R}^{k}} \mathbb{Q}^{\star}_{u,v}[Z^{\star}_{t} = Z^{\star}_{t-}]d\pi (u,v) = 1 \}\) and consider the events

in \(\mathcal{B}(D_{\mathbb{R}}\times C_{\mathbb{R}})\). The definition of \(\mathbb{T}\), the continuity of \((s,u,v)\mapsto b_{u,v}(s)\), and the M1-continuity of the marginal projections at continuity points (see Whitt [27, Theorem 12.4.1]) together imply that \((u,v,\eta ,w)\mapsto \mathbf{1}_{\{E_{u,v}\}}(\eta ,w)\) is upper semicontinuous with probability 1 under \(\mathbb{Q}^{\star}\) (for the product topology induced by the M1-topology on \(D_{\mathbb{R}}\) and the uniform topology on \(C_{\mathbb{R}}\)). In turn, the weak convergence of \(\mathbb{Q}^{n}\) to \(\mathbb{Q}^{\star}\) implies that

where the last two equalities simply follow from the definition of \(\mathbb{Q}^{n}\) and the definition of the particle system. For the rest of the proof, fix an arbitrary pair \((u,v)\) such that \(\mathbb{Q}^{\star}_{u,v}[E_{u,v}]=1\). By the previous observation, such pairs \((u,v)\) have full measure under \(\pi \). By the right-continuity of \(Z^{\star}\) and the continuity of \(Y^{\star}\), we can then conclude that \(\mathbb{Q}^{\star}_{u,v}\)-almost surely, the increment bounds in the definition of \(E_{u,v}\) hold for all pairs of times. In particular, we know that \(Z^{\star}\) can only jump downwards, with probability 1 under \(\mathbb{Q}^{\star}_{u,v}\). Moreover, we know that the restarted process \(Y^{0,\star}_{t}:=Y^{\star}_{t+\tau _{0}(Z^{\star})}-Y^{\star}_{\tau _{0}(Z^{ \star})}\) defines a new time-changed Brownian motion under \(\mathbb{Q}^{\star}_{u,v}\); so it follows from the law of the iterated logarithm that we have

\(\mathbb{Q}^{\star}_{u,v}\)-almost surely with \(h(t)=c\sqrt{t\ln \ln (1/t)}\) for some constant \(c>0\) that only depends on \(t\mapsto \sigma (t)\).
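The law of the iterated logarithm is what forces the restarted process to take strictly negative values immediately after \(\tau _{0}(Z^{\star})\). As a rough numerical illustration only (it plays no role in the argument), the Python sketch below simulates \(Y_{t}=\int _{0}^{t}\sigma (s)dW_{s}\) with \(W=\rho B^{0}+\sqrt{1-\rho ^{2}}B\) on a short window \((0,\varepsilon ]\) and reports the fraction of paths that dip below zero. The volatility profile \(\sigma (t)=1+t\), the value \(\rho =0.3\) and all discretisation parameters are hypothetical choices. On a finite grid the fraction is close to, but not exactly, 1, and the shortfall shrinks as the grid is refined.

```python
import numpy as np


def sigma(t):
    # Hypothetical deterministic volatility profile (not taken from the paper).
    return 1.0 + t


def fraction_negative_near_zero(rho=0.3, eps=0.01, n_steps=1000,
                                n_paths=2000, seed=0):
    """Monte Carlo estimate of P[min_{0 < t <= eps} Y_t < 0] for
    Y_t = int_0^t sigma(s) dW_s with W = rho*B0 + sqrt(1 - rho^2)*B."""
    rng = np.random.default_rng(seed)
    dt = eps / n_steps
    t_left = np.arange(n_steps) * dt  # left endpoints of the Ito sums
    # Independent increments of the common noise B0 and the idiosyncratic noise B.
    dB0 = rng.normal(0.0, np.sqrt(dt), size=(n_paths, n_steps))
    dB = rng.normal(0.0, np.sqrt(dt), size=(n_paths, n_steps))
    # rho^2 + (1 - rho^2) = 1, so W is again a standard Brownian motion.
    dW = rho * dB0 + np.sqrt(1.0 - rho**2) * dB
    Y = np.cumsum(sigma(t_left) * dW, axis=1)  # Y on the grid dt, 2dt, ..., eps
    return float(np.mean(Y.min(axis=1) < 0.0))


print(fraction_negative_near_zero())
```

Each simulated path uses its own realisation of \(B^{0}\), since we are only looking at the law of a single particle's martingale part.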

Now consider the set

in \(D_{\mathbb{R}}\). Clearly, if a path \(\eta \) comes with a (nonempty) right neighbourhood of \(\tau _{0}(\eta )\) where it only takes nonnegative values, then there are infinitely many uniformly convergent sequences \(\eta _{n} \rightarrow \eta \) such that \(\tau _{0}(\eta _{n})\) does not converge to \(\tau _{0}(\eta )\) as \(n\rightarrow \infty \), and so \(\eta \notin E_{0}\). Conversely, one can easily deduce from the parametric representations in the definition of M1-convergence that if a given path \(\eta \) assumes strictly negative values on every right neighbourhood of \(\tau _{0}(\eta )\), then all M1-convergent sequences \(\eta _{n} \rightarrow \eta \) must satisfy \(\tau _{0}(\eta _{n})\rightarrow \tau _{0}(\eta )\) as \(n\rightarrow \infty \), which implies \(\eta \in E_{0}\). Hence the set \(E_{0}\) coincides with the event that \(Z^{\star}\) assumes strictly negative values on every right neighbourhood of \(\tau _{0}(Z^{\star})\). We can readily express this event in terms of countable unions and intersections of Borel sets, and so this event is an element of \(\mathcal{B}(D_{\mathbb{R}})\). Moreover, it is immediate from (3.25) that this event has probability 1 under \(\mathbb{Q}^{\star}_{u,v}\). Since we fixed an arbitrary pair \((u,v)\) in a set of full measure under \(\pi \), we can conclude from (3.24) that

and hence \(\mathbb{P}^{\star}_{u,v}[ E_{0}]=1\) for \(\pi \)-almost every \((u,v)\in \mathbb{R}^{k} \times \mathbb{R}^{k}\). So the proof is complete. □
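The dichotomy used above to identify \(E_{0}\) can be seen on a time grid. The Python sketch below assumes the convention \(\tau _{0}(\eta )=\inf \{t\geq 0:\eta _{t}\leq 0\}\) (an assumption made for illustration, returning \(+\infty \) if the sampled path never reaches zero) and compares two hypothetical paths: one that reaches zero at \(t=1\) and stays at zero until \(t=1.5\), and one that crosses zero at \(t=1\). Adding the uniformly vanishing perturbation \(1/n\) keeps the hitting time of the first path near \(1.5\) for every \(n\), whereas the hitting time of the crossing path converges to \(1\).

```python
import numpy as np


def tau0(t, eta):
    """Grid version of tau_0(eta) = inf{t >= 0 : eta_t <= 0} (assumed convention);
    returns +inf if the sampled path never reaches zero."""
    hits = np.nonzero(eta <= 0.0)[0]
    return float(t[hits[0]]) if hits.size else np.inf


t = np.linspace(0.0, 2.0, 2001)
# Hypothetical path that reaches zero at t = 1, stays at zero until t = 1.5,
# and only then becomes negative (nonnegative on a right neighbourhood of tau_0).
touching = np.where(t < 1.5, np.maximum(1.0 - t, 0.0), -2.0 * (t - 1.5))
# Hypothetical path that crosses zero at t = 1 (negative right after tau_0).
crossing = 1.0 - t

for n in (10, 100, 1000):
    # eta + 1/n -> eta uniformly, hence also in the M1-topology.
    print(n, tau0(t, touching + 1.0 / n), tau0(t, crossing + 1.0 / n))
```

Only the crossing path behaves like the paths charged by \(\mathbb{Q}^{\star}_{u,v}\), which is why \(E_{0}\) has full measure.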

The above proposition is interesting in its own right, but most importantly, it allows us to take a generalised continuous mapping approach to the convergence of the feedback, when seen as suitable functionals of the laws of the empirical measures. The starting point is the following lemma.

Lemma 3.5

Suppose \((\mathbf{Q}^{n},B^{n})\rightarrow (\mathbf{Q}^{\star}, B^{\star})\) a.s. in the product space

for a given probability space \((\Omega _{1}, \mathcal{F}_{1}, \mathbb{P}_{1})\), with \((\mathbf{Q}^{n}, B^{n})\) having the same law as \((\mathbf{P}^{n}, B^{0} )\) for all \(n\geq 1\) and the limit \((\mathbf{Q}^{\star}, B^{\star})\) having the same law as \((\mathbf{P}^{\star}, B^{0} )\). Let \((U^{n},V^{n})\rightarrow (U,V)\) be an a.s. convergent sequence in \(\mathbb{R}^{k} \times \mathbb{R}^{k}\) on a probability space \((\Omega _{2},\mathcal{F}_{2},\mathbb{P}_{2})\) for which the joint law of \((U^{n},V^{n})\) is \(\pi ^{n}\). Writing

for \(\mu \in \mathcal{P}(\mathbb{R}^{k}\times \mathbb{R}^{k}\times D_{ \mathbb{R}})\), there is an event \(E \in \mathcal{F}_{1} \) with \(\mathbb{P}_{1}[E]=1\) such that for every \(\omega \in E\), we have in ℝ the marginal convergence, as \(n\rightarrow \infty \),

\(\mathbb{P}_{2}\)-a.s., whenever \(t\) is a continuity point of each \(s\mapsto \mathcal{L}(\mathbf{Q}^{\star}(\omega ))^{h}_{s}\), \(h=1,\ldots ,k\).

Proof

By assumption, we can take an event \(E \in \mathcal{F}_{1}\) with \(\mathbb{P}_{1}[E]=1\) on which there is pointwise convergence \((\mathbf{Q}^{n}(\omega ),B^{n}(\omega ))\rightarrow (\mathbf{Q}^{ \star}(\omega ), B^{\star}(\omega ))\) for all \(\omega \in E\). Moreover, we have

as in the proof of Lemma 3.3, and hence we can restrict the event \(E\) in such a way that we still have \(\mathbb{P}_{1}[E]=1\), while also having that for all \(\omega \in E\),

\(\mathbb{P}_{2}\)-almost surely. We can view \(\mathbf{Q}^{n}(\omega )\rightarrow \mathbf{Q}^{\star}(\omega )\) as convergence in law for suitable random variables and hence apply Skorokhod’s representation theorem to yield

for \(n\geq 1\), where \(\hat{U}^{n} \rightarrow \hat{U}\) almost surely in \(\mathbb{R}^{k}\) and \(Z^{n}\rightarrow Z\) almost surely in \((D_{\mathbb{R}},\text{M1})\). Additionally, (3.27) ensures that \(V^{n}\cdot \hat{U}^{n} \geq 0\), and \(V^{n}\) and \(\hat{U}^{n}\) are bounded uniformly in \(n\geq 1\). In particular, each \(t\mapsto \Phi (\mathbf{Q}^{n}(\omega ), V^{n} , t)\) is of finite variation with total variation bounded by a constant uniformly in \(n\geq 1\) on any compact time interval, which we use at the end of the proof.

Next, by the constructions in Sect. 3.3, it follows from Proposition 3.4 that

and \(\mathbf{Q}^{\star}\) has the same law as \(\mathbf{P}^{\star}\) by assumption. So we may further restrict \(E\) such that taking an arbitrary \(\omega \in E\) in (3.28) implies \(\tau _{0}(Z^{n})\rightarrow \tau _{0}(Z)\) almost surely, while retaining \(\mathbb{P}_{1}[E]=1\) (the laws of \(Z^{n}, Z\) are fixed by the realisations \(\mathbf{Q}^{n}(\omega ), \mathbf{Q}^{\star}(\omega )\)). Consequently, on the common probability space provided by Skorokhod’s representation, we have \(\mathbf{1}_{\{t\geq \tau _{0}(Z^{n})\}}\rightarrow \mathbf{1}_{\{t \geq \tau _{0}(Z)\}}\) almost surely on the complement of the event \(\{\tau _{0}(Z)=t\}\). Now fix an arbitrary \(\omega \in E\) and let \(t\) be an arbitrary continuity point of \(s\mapsto \mathcal{L}(\mathbf{Q}^{\star}(\omega ))^{h}_{s}\) for each \(h=1,\ldots ,k\). Then (3.26) and dominated convergence (along with right-continuity of each \(\mathcal{L}(\mathbf{Q}^{\star}(\omega ))^{h}\)) imply

Fixing a realisation \(v\) of \(V\), if \(v\cdot \hat{U}\) is non-zero (hence strictly positive) on an event of non-negligible probability (for \(\mathbb{P}_{1}\)), we must therefore have \(\tau _{0}(Z)\neq t\) on that event (up to a \(\mathbb{P}_{1}\)-nullset). Therefore, we can conclude from dominated convergence that

for our arbitrary \(\omega \in E\), \(\mathbb{P}_{2}\)-almost surely, for any common continuity point of \(s\mapsto \mathcal{L}(\mathbf{Q}^{\star}(\omega ))^{h}_{s}\) for \(h=1,\ldots ,k\). Using integration by parts for Riemann–Stieltjes integrals, we get

By Assumption 2.4, each function \(t\mapsto g_{u,v}(t)\) is continuous and nondecreasing. In particular, it is a standard fact of real analysis that the pointwise convergence \(g_{U^{n},V^{n}}(s) \rightarrow g_{U,V}(s) \) is in fact uniform over \(s\in [0,t]\) (alternatively, in the spirit of the present paper, one gets M1-relative compactness from the monotonicity, and the a priori pointwise convergence to a continuous limit then yields the uniform convergence to that limit). Since the total variation of \(s\mapsto \Phi (\mathbf{Q}^{n}(\omega ), V^{n} , s)\) on \([0,t]\) is bounded uniformly in \(n\geq 1\), the first term on the right-hand side of (3.30) vanishes as \(n\rightarrow \infty \). By (3.29), the second term on the right-hand side tends to \(g_{U,V}(t)\Phi (\mathbf{Q}^{\star}(\omega ), V , t)\) whenever \(t\) is a continuity point. Finally, \(dg_{U,V}(s)\) induces a well-defined Lebesgue–Stieltjes measure, and we get pointwise convergence of the integrands on a dense set of times \(s\in [0,t]\) by (3.29); so dominated convergence and another integration by parts complete the proof. □
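The last step of the proof rests on two elementary facts: integration by parts for Riemann–Stieltjes integrals, and uniform convergence of nondecreasing functions to a continuous limit. The Python sketch below checks both on toy data. The functions \(g(s)=s^{2}\), \(\Phi (s)=s+\mathbf{1}_{\{s\geq 1/2\}}\) and \(g_{n}(s)=s^{1+1/n}\) are hypothetical stand-ins, not the \(g_{u,v}\) and \(\Phi \) of the paper. The discrete identity (summation by parts) holds exactly up to rounding, even though the integrator jumps.

```python
import numpy as np


def stieltjes_sum(g, phi):
    """Left-point Riemann-Stieltjes sum: sum_i g(s_i) * (phi(s_{i+1}) - phi(s_i))."""
    return float(np.sum(g[:-1] * np.diff(phi)))


def by_parts(g, phi):
    """The same sum after summation by parts:
    g(t)phi(t) - g(0)phi(0) - sum_i phi(s_{i+1}) * (g(s_{i+1}) - g(s_i))."""
    return float(g[-1] * phi[-1] - g[0] * phi[0] - np.sum(phi[1:] * np.diff(g)))


def sup_gap(n, s):
    """Sup-distance on the grid s between g_n(s) = s**(1 + 1/n) and its limit s."""
    return float(np.max(np.abs(s ** (1.0 + 1.0 / n) - s)))


s = np.linspace(0.0, 1.0, 1001)
g = s ** 2                # continuous, nondecreasing integrand (toy choice)
phi = s + (s >= 0.5)      # nondecreasing integrator with a unit jump at 1/2
print(stieltjes_sum(g, phi), by_parts(g, phi))
print([sup_gap(n, s) for n in (1, 10, 100, 1000)])
```

Each \(g_{n}\) is nondecreasing and the pointwise limit \(s\mapsto s\) is continuous, so the sup-distances decrease to zero, mirroring the uniform convergence of \(g_{U^{n},V^{n}}\) to \(g_{U,V}\) on \([0,t]\).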

We use the previous lemma several times. A first application is the following convergence result for the total feedback felt by each particle. This result is important for practical implementations of the model, showing that after fixing a particular type of bank from the true financial system, the actual feedback from defaults felt by this bank can be approximated via only the \(k\) feedback processes for the mean-field model.

Proposition 3.6

Let Assumption 2.4 be satisfied and fix any given pair of indexing vectors \((u^{i},v^{i})\). Let the limit point \((\mathbf{P}^{\star},B^{0})\) be achieved along a subsequence (still indexed by \(n\)). Let \(g_{u,v}(s) := g(u,v,\mathbb{E}[U],\mathbb{E}[V],s)\). As \(n\rightarrow \infty \), the total feedback

felt by the \(i\)th particle converges in law at the process level on \(D_{\mathbb{R}}([0,T_{0}])\), for any \(T_{0}\in \mathbb{T}_{\star}\), to

where \(\mathcal{L}^{1,\star},\ldots ,\mathcal{L}^{k,\star}\) are defined by (3.22) on \((\Omega _{0},\mathcal{B}(\Omega _{0}),\mathbb{P}^{\star}_{0})\).

Proof

First of all, Assumption 2.4 implies that the total feedback processes (3.31) are nondecreasing on \([0,T]\) for each \(i=1,\ldots ,n\) and \(n\geq 1\). Due to Lemma 3.1, it is thus straightforward to verify the conditions (3.10)–(3.13) for these processes in place of the different particle trajectories \(t\mapsto X^{i}_{t}\), and so the arguments of Proposition 3.2 yield tightness of (3.31) in \(D_{\mathbb{R}}([0,T_{0}])\), for any \(T_{0}\in \mathbb{T}_{\star}\), under the M1-topology. Note that we can write

where each \(\mathcal{L}(\mu )\) is defined as in (3.26). Sending \(n\rightarrow \infty \), the second term on the right-hand side vanishes uniformly in \(i\leq n \) and \(t\in [0,T]\) by the assumptions on \(u^{i}\), \(v^{i}\) and \(g_{i}\) in Assumption 2.4, and so we only need to consider the convergence of the first term. To this end, we can see from the definition of \(\mathbb{P}_{u,v}^{\mu ,w}\) in Sect. 3.3 that as stochastic processes,

almost surely for the probability space \((\Omega _{0},\mathcal{B}(\Omega _{0}),\mathbb{P}^{\star}_{0})\) from Sect. 3.3. That is, we have in fact \(\mathcal{L}^{h;\star}=\mathcal{L}(\mathbf{P}^{\star})^{h}\) for each \(h=1,\ldots ,k\). From the definition of \(\mathbb{T}_{\star}\) in (3.23) and the relation between \(\mathbb{P}^{\star}_{u,v}\) and \(\mathbb{P}_{0}^{\star}\) in (3.20), we can furthermore see that