Abstract

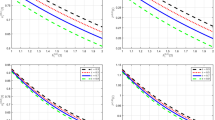

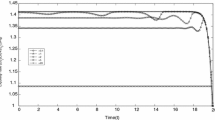

We consider the problem of maximizing the expected utility for a power investor who can allocate his wealth among a stock, a defaultable security, and a money market account. The dynamics of these security prices are governed by geometric Brownian motions modulated by a hidden continuous-time finite-state Markov chain. We reduce the partially observed stochastic control problem to a complete observation risk-sensitive control problem via the filtered regime switching probabilities. We separate the latter into predefault and postdefault dynamic optimization subproblems and obtain two coupled Hamilton–Jacobi–Bellman (HJB) partial differential equations. We prove the existence and uniqueness of a globally bounded classical solution to each HJB equation and give the corresponding verification theorem. We provide a numerical analysis showing that the investor increases his holdings in stock as the filter probability of being in high-growth regimes increases, and decreases his credit risk exposure as the filter probability of being in high-default-risk regimes gets larger.


References

[1] Bélanger, A., Shreve, S., Wong, D.: A general framework for pricing credit risk. Math. Finance 14, 317–350 (2004)

[2] Bielecki, T., Jang, I.: Portfolio optimization with a defaultable security. Asia-Pac. Financ. Mark. 13, 113–127 (2006)

[3] Bielecki, T., Rutkowski, M.: Credit Risk: Modelling, Valuation and Hedging. Springer, New York (2001)

[4] Bo, L., Capponi, A.: Optimal investment in credit derivatives portfolio under contagion risk. Math. Finance (2014, forthcoming). doi:10.1111/mafi.12074. Available online at http://onlinelibrary.wiley.com/doi/10.1111/mafi.12074/abstract

[5] Bo, L., Wang, Y., Yang, X.: An optimal portfolio problem in a defaultable market. Adv. Appl. Probab. 42, 689–705 (2010)

[6] Capponi, A., Figueroa-López, J.E.: Dynamic portfolio optimization with a defaultable security and regime-switching markets. Math. Finance 24, 207–249 (2014)

[7] Carr, P., Linetsky, V., Mendoza-Arriaga, R.: Time-changed Markov processes in unified credit-equity modeling. Math. Finance 20, 527–569 (2010)

[8] Di Francesco, M., Pascucci, A., Polidoro, S.: The obstacle problem for a class of hypoelliptic ultraparabolic equations. Proc. R. Soc., Math. Phys. Eng. Sci. 464, 155–176 (2008)

[9] El Karoui, N., Jeanblanc, M., Jiao, Y.: What happens after a default: the conditional density approach. Stoch. Process. Appl. 120, 1011–1032 (2010)

[10] Elliott, R.J., Aggoun, L., Moore, J.B.: Hidden Markov Models: Estimation and Control. Springer, Berlin (1994)

[11] Elliott, R.J., Siu, T.K.: A hidden Markov model for optimal investment of an insurer with model uncertainty. Int. J. Robust Nonlinear Control 22, 778–807 (2012)

[12] Frey, R., Runggaldier, W.J.: Credit risk and incomplete information: a nonlinear-filtering approach. Finance Stoch. 14, 495–526 (2010)

[13] Frey, R., Runggaldier, W.J.: Nonlinear filtering in models for interest-rate and credit risk. In: Crisan, D., Rozovski, B. (eds.) Oxford Handbook on Nonlinear Filtering, pp. 923–959. Oxford University Press, London (2011)

[14] Frey, R., Schmidt, T.: Pricing and hedging of credit derivatives via the innovations approach to nonlinear filtering. Finance Stoch. 16, 105–133 (2012)

[15] Friedman, A.: Partial Differential Equations of Parabolic Type. Prentice Hall, New York (1964)

[16] Fujimoto, K., Nagai, H., Runggaldier, W.J.: Expected log-utility maximization under incomplete information and with Cox-process observations. Asia-Pac. Financ. Mark. 21, 35–66 (2014)

[17] Fujimoto, K., Nagai, H., Runggaldier, W.J.: Expected power-utility maximization under incomplete information and with Cox-process observations. Appl. Math. Optim. 67, 33–72 (2013)

[18] Giesecke, K., Longstaff, F., Schaefer, S., Strebulaev, I.: Corporate bond default risk: a 150-year perspective. J. Financ. Econ. 102, 233–250 (2011)

[19] Jacod, J., Shiryaev, A.: Limit Theorems for Stochastic Processes. Springer, New York (2003)

[20] Jiao, Y., Kharroubi, I., Pham, H.: Optimal investment under multiple defaults risk: a BSDE-decomposition approach. Ann. Appl. Probab. 23, 455–491 (2013)

[21] Jiao, Y., Pham, H.: Optimal investment with counterparty risk: a default density approach. Finance Stoch. 15, 725–753 (2011)

[22] Kharroubi, I., Lim, T.: Progressive enlargement of filtrations and backward SDEs with jumps. J. Theor. Probab. 27, 683–724 (2014)

[23] Kliemann, W., Koch, G., Marchetti, F.: On the unnormalized solution of the filtering problem with counting process observations. IEEE Trans. Inf. Theory 36, 1415–1425 (1990)

[24] Kraft, H., Steffensen, M.: Portfolio problems stopping at first hitting time with application to default risk. Math. Methods Oper. Res. 63, 123–150 (2005)

[25] Kraft, H., Steffensen, M.: Asset allocation with contagion and explicit bankruptcy procedures. J. Math. Econ. 45, 147–167 (2009)

[26] Kraft, H., Steffensen, M.: How to invest optimally in corporate bonds. J. Econ. Dyn. Control 32, 348–385 (2008)

[27] Liechty, J., Roberts, G.: Markov chain Monte Carlo methods for switching diffusion models. Biometrika 88, 299–315 (2001)

[28] Linetsky, V.: Pricing equity derivatives subject to bankruptcy. Math. Finance 16, 255–282 (2006)

[29] Nagai, H., Runggaldier, W.: PDE approach to utility maximization for market models with hidden Markov factors. In: Dalang, R.C., Dozzi, M., Russo, F. (eds.) Seminar on Stochastic Analysis, Random Fields, and Applications V. Progress in Probability, vol. 59, pp. 493–506. Birkhäuser, Basel (2008)

[30] Pascucci, A.: PDE and martingale methods in option pricing. Bocconi & Springer Series. Springer, Milan; Bocconi University Press, Milan (2011)

[31] Pham, H.: Stochastic control under progressive enlargement of filtrations and applications to multiple defaults risk management. Stoch. Process. Appl. 120, 1795–1820 (2010)

[32] Protter, P., Shimbo, K.: No arbitrage and general semimartingales. In: Ethier, S.N., Feng, J., Stockbridge, R.H. (eds.) Markov Processes and Related Topics: A Festschrift for Thomas G. Kurtz. IMS Collections, vol. 4, pp. 267–283 (2008)

[33] Rogers, C., Williams, D.: Diffusions, Markov Processes, and Martingales: Itô Calculus. Wiley, New York (1987)

[34] Sass, J., Haussmann, U.: Optimizing the terminal wealth under partial information: the drift process as a continuous time Markov chain. Finance Stoch. 8, 553–577 (2004)

[35] Siu, T.K.: A BSDE approach to optimal investment of an insurer with hidden regime switching. Stoch. Anal. Appl. 31, 1–18 (2013)

[36] Sotomayor, L., Cadenillas, A.: Explicit solutions of consumption-investment problems in financial markets with regime switching. Math. Finance 19, 251–279 (2009)

[37] Tamura, T., Watanabe, Y.: Risk-sensitive portfolio optimization problems for hidden Markov factors on infinite time horizon. Asymptot. Anal. 75, 169–209 (2011)

[38] Wong, E., Hajek, B.: Stochastic Processes in Engineering Systems. Springer, Berlin (1985)

[39] Zariphopoulou, T.: Investment–consumption models with transaction fees and Markov-chain parameters. SIAM J. Control Optim. 30, 613–636 (1992)

Acknowledgements

The authors gratefully acknowledge two anonymous reviewers and Wolfgang Runggaldier for providing constructive and insightful comments, which significantly improved the quality of the manuscript. Agostino Capponi would also like to thank Ramon van Handel for very useful discussions and insights provided in the original model setup.

Additional information

The second author’s research was partially supported by NSF Grant DMS-1149692.

Appendices

Appendix A: Proofs related to Sect. 3

Lemma A.1

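Let

$$ q_{t}^{i} := \mathbb{E}^{\hat{\mathbb{P}}}\big[L_{t}\mathbf{1}_{\{X_{t}=e_{i}\}} \,\big|\, \mathcal{G}_{t}^{I}\big],\quad i=1,\dots,N. \tag{A.1} $$

Then the dynamics of \((q_{t}^{i})_{t\geq0}\), \(i=1,\dots,N\), under the measure \(\hat{\mathbb{P}}\) are given by the system of stochastic differential equations (SDEs)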

Proof

Let us introduce the notation \(H_{t}^{i}:=\mathbf{1}_{\{X_{t}=e_{i}\}}\). Note that \(X_{t}=(H^{1}_{t},\dots,H^{N}_{t})^{\top}\) and, by (2.1),

From (3.14) and (3.10) we deduce that, under \({\hat{\mathbb{P}}}\),

which yields that

Since \((Y_{t})_{t\geq0}\) and \((H_{t})_{t\geq0}\) are independent of \((X_{t})_{t\geq0}\) (and hence of \(H^{i}\)), under \({\hat{\mathbb{P}}}\), we have (see also [38, Lemma 7.3.1]) that, \({\hat{\mathbb {P}}}\)-almost surely,

Thus, applying Itô’s formula, we obtain

Since \((\varphi_{i}(t))_{t\geq0}\) is an \(((\mathcal{F}_{t}^{X})_{t\geq 0}, {\hat{\mathbb{P}}})\)-martingale and \(\mathcal{G}_{T}^{I}\) is independent of \(\mathcal{F}_{T}^{X}\) under \({\hat{\mathbb{P}}}\), we have that \(\mathbb{E}^{{\hat{\mathbb{P}}}}[\int_{0}^{t} L_{s-} d{\varphi_{i}(s)} | {\mathcal{G}_{t}^{I}} ] = 0\). Therefore, taking the \(\mathcal{G}_{t}^{I}\)-conditional expectations in (A.3), we obtain

where we have used that if \(\phi\) is \(\mathbb{G}\)-predictable, then (see, e.g., [38, Lemma 7.3.2])

Observing that \(dY_{t} = {\varSigma_{Y}} d \hat{W}_{t}\) under \({\hat{\mathbb {P}}}\), using that \(Q(t,{e_{i}},\pi_{t})\) and \(\eta(t,e_{i}, \pi_{t})\) are \((\mathcal {G}_{t}^{I})_{t\geq0}\)-adapted and that the Markov chain generator \(A(t)\) is deterministic, we obtain (A.2) upon taking the differential of (A.4). □

Lemma A.2

We have the following identities:

where \(q_{t}^{i}\), \(\hat{L}_{t}\), and \(p_{t}^{i}\) are defined, respectively, by (A.1), (3.20), and (3.18).

Proof

We first establish relation (A.5) by comparing the dynamics of \((q_{t}^{i})\) and of \(({\hat{L}_{t} p_{t}^{i}})\). The former is known from Lemma A.1 and given in (A.2). Next, we derive the latter. We have

From (3.21) and (3.19) we obtain

Using these equations, along with (3.19), we obtain

Next, observe that

Moreover,

Using (A.8), (A.9), and straightforward algebra, we may simplify (A.7) to

Using that \(dY_{t} = \varSigma_{Y} d\hat{W}_{t}\), we have that (A.5) holds via a direct comparison of (A.10) and (A.2). Next, we establish (A.6). Using (A.5) and \(\sum _{i=1}^{N} p_{t}^{i} = 1\), we deduce that

hence obtaining that \(\sum_{i=1}^{N} q_{t}^{i} = \hat{L}_{t}\). Using again (A.5), this gives

This completes the proof. □
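To make the identities \(q_t^i = \hat{L}_t p_t^i\) and \(p_t^i = q_t^i/\sum_j q_t^j\) concrete, here is a minimal discrete-time sketch in Python (NumPy). It is an assumption-laden analogue, not the continuous-time filter of the paper: the hidden chain moves with the Euler transition matrix \(I + A\,\Delta t\), a single observed log-price has regime-dependent drift \(\mu_i\) and constant volatility \(\sigma\), and each step multiplies by a Gaussian likelihood ratio against a zero-drift reference measure (playing the role of \(\hat{\mathbb{P}}\)). The helper names and all parameter values are hypothetical.

```python
import numpy as np

def likelihood_ratio(d, mu, sigma, dt):
    # Discrete Girsanov weight of each regime against a zero-drift reference measure
    return np.exp(mu * d / sigma**2 - 0.5 * mu**2 * dt / sigma**2)

def unnormalized_filter(dy, mu, sigma, dt, gen, q0):
    """Hypothetical discrete analogue of q_k^i = E[L_k 1{X_k = e_i} | observations]."""
    trans = np.eye(len(mu)) + gen * dt   # first-order approximation of exp(A dt)
    q = np.empty((len(dy) + 1, len(mu)))
    q[0] = q0
    for k, d in enumerate(dy):
        # predict with the chain's transition matrix, then reweight by the likelihood
        q[k + 1] = likelihood_ratio(d, mu, sigma, dt) * (trans.T @ q[k])
    return q

def normalized_filter(dy, mu, sigma, dt, gen, p0):
    """Same recursion, renormalized at every step so the entries sum to one."""
    trans = np.eye(len(mu)) + gen * dt
    p = np.empty((len(dy) + 1, len(mu)))
    p[0] = p0
    for k, d in enumerate(dy):
        unnorm = likelihood_ratio(d, mu, sigma, dt) * (trans.T @ p[k])
        p[k + 1] = unnorm / unnorm.sum()
    return p

# Usage on a simulated two-regime path (illustrative parameters only).
rng = np.random.default_rng(0)
gen = np.array([[-0.5, 0.5], [1.0, -1.0]])   # generator A of the hidden chain
mu = np.array([0.3, -0.2])                   # regime-dependent drift of the observation
sigma, dt, n = 0.25, 0.01, 500
trans = np.eye(2) + gen * dt
state, dy = 0, np.empty(n)
for k in range(n):
    state = rng.choice(2, p=trans[state])
    dy[k] = mu[state] * dt + sigma * np.sqrt(dt) * rng.standard_normal()

p0 = np.array([0.5, 0.5])
q = unnormalized_filter(dy, mu, sigma, dt, gen, p0)
L_hat = q.sum(axis=1)              # analogue of sum_i q^i = L-hat
p_from_q = q / L_hat[:, None]      # analogue of p^i = q^i / sum_j q^j
p = normalized_filter(dy, mu, sigma, dt, gen, p0)
print(np.max(np.abs(p_from_q - p)))  # agreement up to rounding
```

Dividing the unnormalized weights by their total mass reproduces the renormalized recursion step by step, which is the discrete counterpart of (A.5) and (A.6).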

Proof of Proposition 3.3

Using (3.13), (A.1), and relation (A.5) established in Lemma A.2, we have that

thus proving the statement. □

Appendix B: Proofs related to Sect. 5

We start with a lemma that will be needed in the proofs below, in particular in the proof of the verification theorem.

Lemma B.1

For any \(T>0\) and \(i\in\{1,\dots,N\}\), we have:

(1) \({\mathbb{P}}[p^{i}_{t}> 0\ \text{for all}\ t\in[0,T)]=1\);

(2) \({\mathbb{P}}[p^{i}_{t}< 1\ \text{for all}\ t\in[0,T)]=1\).

Proof

Define \(\varsigma= \inf\{t : p^{i}_{t} = 0\} \wedge T\). If \(p^{i}\) can hit zero, then \(\mathbb{P}[p^{i}_{\varsigma} = 0] > 0\). Recall that \(p_{t}^{i} = \frac{q_{t}^{i}}{\sum_{j} q_{t}^{j}}\) from (A.6); hence, \(p_{\varsigma}^{i} = \frac{q_{\varsigma}^{i}}{\sum_{j} q_{\varsigma}^{j}}\), where

by the optional projection property; see [33, Thm. VI.7.10]. Define the two-dimensional (observed) log-price process \(Y_{t} = (\log S_{t}, {\log P_{t}})^{\top}\). Since \(q_{\varsigma}^{i} = \mathbb{E}^{\hat{\mathbb{P}}} [L_{\varsigma} \mathbf{1}_{\{X_{\varsigma}=e_{i}\}} \mid\mathcal {G}_{\varsigma}^{I} ]\) and \(L_{\varsigma}>0\), we can choose a modification \(g(Y,H,X_{\varsigma})\) of \(\mathbb{E}^{{\hat{\mathbb{P}}}}[L_{\varsigma} \mid\mathcal {G}_{\varsigma}^{I}, X_{\varsigma} ]\) such that \(g>0\) and, for each \(e_{i}\), \(g(Y,H,{e_{i}})\) is \(\mathcal{G}_{\varsigma}^{I}\)-measurable. By the tower property,

where the first equality follows because \(\varsigma\) is \(\mathcal {G}^{I}_{\varsigma}\)-measurable, and the last two because \(X\) is independent of \(\mathcal{G}^{I}\) under \(\hat{\mathbb{P}}\). Since \(\mathbb{P}[X_{t}=e_{i}] >0\) and \(g>0\), we get that \(q_{\varsigma}^{i}>0\), and hence \(p_{\varsigma}^{i}>0\), a.s., which contradicts \(\mathbb{P}[p_{\varsigma}^{i}=0]>0\). This proves the first statement in the lemma. Next, we notice that

where the last equality follows from the first statement. This immediately yields the second statement. □
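Lemma B.1 concerns exact quantities. In floating point, the unnormalized weights \(q^i\) can underflow to zero over long horizons, which would make the computed ratio \(q^i/\sum_j q^j\) undefined even though \(q^i>0\) in theory. The Python sketch below uses a hypothetical discrete-time setup (Euler transition matrix \(I + A\,\Delta t\), Gaussian likelihood ratios against a zero-drift reference measure, illustrative parameters, and a flat observed path) to contrast the raw product with a recursion that renormalizes at every step; only the renormalized filter keeps its entries strictly inside \((0,1)\), in line with the lemma.

```python
import numpy as np

def filter_paths(dy, mu, sigma, dt, gen, p0):
    """Run a hypothetical discrete regime filter two ways.

    Returns (q, p): q is the unnormalized recursion, p renormalizes every step.
    In exact arithmetic p = q / q.sum(axis=1), but q can underflow in floating point.
    """
    trans = np.eye(len(mu)) + gen * dt          # first-order approximation of exp(A dt)
    q = np.empty((len(dy) + 1, len(mu)))
    p = np.empty_like(q)
    q[0] = p[0] = p0
    for k, d in enumerate(dy):
        lik = np.exp(mu * d / sigma**2 - 0.5 * mu**2 * dt / sigma**2)
        q[k + 1] = lik * (trans.T @ q[k])       # total mass shrinks by a factor < 1 here
        unnorm = lik * (trans.T @ p[k])
        p[k + 1] = unnorm / unnorm.sum()        # renormalize to keep values representable
    return q, p

# Flat observed path over many steps; parameter values are illustrative only.
gen = np.array([[-0.5, 0.5], [1.0, -1.0]])
mu = np.array([0.3, -0.2])
q, p = filter_paths(np.zeros(2000), mu, sigma=0.05, dt=0.1, gen=gen,
                    p0=np.array([0.5, 0.5]))

print(q[-1])             # both unnormalized weights have underflowed to 0.0
print(p.min(), p.max())  # the renormalized filter stays strictly inside (0, 1)
```

Renormalizing at each step costs nothing extra and is the natural way to compute \(p^i\) numerically when the horizon is long.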

Proof of (5.14)

Let us first analyze the first term \(\beta_{\gamma}^{\top} \nabla_{\tilde{p}} w\) in the sup of (5.12). For brevity, we write \(\beta_{\varpi} := \beta_{\varpi}(t,\tilde{p},0)\). By the definition of \(\beta_{\gamma}\) and using the maximizer \(\pi:=\pi^{*}\) in (5.13), we have

Further, again using the expression for \(\pi=\pi^{*}\), the second term in the sup is equal to

The third term in the sup may be simplified to

Using (B.1)–(B.3), we obtain that

and therefore, after rearrangement, we obtain (5.14). □

Proof of Theorem 5.3

In order to ease the notational burden, throughout the proof, we write \(\tilde{p}\) for \(\tilde{p}^{\circ}\), \(\tilde{p}_{s}\) for \(\tilde{p}^{t}_{s}\), \(\pi\) for \(\pi^{t}\), \(\tilde{\mathbb{P}}\) for \({\tilde{\mathbb{P}}^{t}}\), ℙ for \({\mathbb{P}^{t}}\), \(\tilde {W}\) for \(\tilde{W}^{t}\), \(X\) for \(X^{t}\), and \(\mathcal{G}_{s}^{I}\) for \(\mathcal{G}_{s}^{t,I}\). Let us first remark that

Indeed, set \(\tilde{p}^{N}_{s}=1-\sum_{j=1}^{N-1}\tilde{p}^{j}_{s}\) and recall from Remark 3.4 that the process \(\tilde{p}^{i}\) is given by

Therefore, using Lemma B.1, we deduce that all the \(\tilde{p}^{i}\), \(i=1,\dots,N\), remain positive in \([t,T]\) a.s., and hence (B.4) is satisfied.

Next, we prove that the feedback strategy \(\widetilde{\pi}_{s}:=(\tilde {\pi}^{S}_{s},\tilde{\pi}^{P}_{s})^{\top}\), \(\tilde{\pi}^{P}_{s} := 0\), is admissible, that is,

Property (B.5) follows from (B.4) and the fact that \((\widetilde{\pi}^{S}(s,\tilde{p}))^{2}\) is uniformly bounded on \([0,T]\times\widetilde{\Delta}_{N-1}\). To see the latter property, note that

The first term on the right-hand side is clearly bounded since \(|\tilde{\mu}(\tilde{p})|\leq\max_{i}|\mu_{i}|\) for any \(\tilde{p}\in \widetilde{\Delta}_{N-1}\). For the second term, using the definition of \(\underline{\kappa}\) given in (5.3), we have

where \(\tilde{p}^{N}:=1-\sum_{i=1}^{N-1}\tilde{p}^{i}\). The last expression is bounded since each partial derivative \(\partial_{\tilde{p}^{j}}\underline{w}(s,\tilde{p})\), \(j=1,\dots,N-1\), is bounded on \([0,T]\times\widetilde{\Delta}_{N-1}\) by Lemma 5.1 and the subsequent Remark 5.2, where the \(\mathcal{C}_{P}^{2,\alpha}\) regularity of \(\underline{w}(s,\tilde{p})\) is established; this regularity implies bounded first- and second-order space derivatives on \(\widetilde{\Delta}_{N-1}\). Now fix an arbitrary feedback control \(\pi_{s}^{S}:=\pi^{S}(s,\tilde {p}_{s})\) such that \((\pi^{S},\pi^{P})\in{\mathcal{A}}(t,T;\tilde{p},1)\), where \(\pi^{P}_{s}\equiv0\) and \({\mathcal{A}}(t,T;\tilde{p},1)\) is as in Definition 4.1, and define the process

where

In what follows, we write for simplicity \(M^{\pi}\) for \(M^{\pi^{S}}\) and \(\pi\) for \(\pi^{S}\). Note that the process \((M^{\pi}_{s})_{t\leq s\leq T}\) is uniformly bounded. Indeed, (B.7) is convex in \(\pi^{S}\), and by minimizing it over \(\pi^{S}\), it follows that for any \(\tilde{p}\in\tilde{\Delta}_{N-1}\),

Therefore, since \(\underline{w}\in C([0,T]\times\tilde{\Delta }_{N-1})\), there exists a constant \(K<\infty\) for which

We prove the two assertions of Theorem 5.3 through the following steps:

(i) Define the process \(\mathcal{Y}_{s}=e^{\underline{w}(s,\tilde {p}_{s})}\). By Itô’s formula and the generator formula (4.7) with \(f(s,\tilde{p})=e^{\underline{w}(s,\tilde{p})}\),

Using the expression for \(\underline{\eta}\) in (B.7) and some rearrangement, we may write \(M^{\pi}\) as

with

Clearly, \(R(u,\tilde{p},\pi)\) is a concave function of \(\pi\) since \(R_{\pi\pi}=-\sigma^{2}\gamma(1-\gamma) < 0\). Maximizing \(R(u,\tilde{p},\pi)\) over \(\pi\) for each \((u,\tilde{p})\), we find that the optimum is given by (5.7). Upon substituting (5.7) into (B.9), we get that

where the last equality follows from (5.5). Therefore, we get the inequality

with equality if \(\pi=\widetilde{\pi}^{S}\). From (5.3), \(\sup_{\tilde{p}\in\tilde{\Delta }_{N-1}}\|\underline{\kappa}(\tilde{p})\|^{2}\leq2\max_{i}\{\mu_{i}\} /\sigma\). Since the partial derivatives \(\partial_{\tilde {p}^{j}}\underline{w}(s,\tilde{p})\) are uniformly bounded on \([0,T]\times\tilde{\Delta}_{N-1}\) (see also the argument after (B.6)), (B.8) implies that

for some nonrandom constant \(B<\infty\). We conclude that

with equality if \(\pi=\widetilde{\pi}^{S}\).
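For intuition about the pointwise maximization, the following Python sketch isolates it under strong simplifying assumptions. It takes a hypothetical concave quadratic \(R(\pi) = b\,\pi - \tfrac12\sigma^2\gamma(1-\gamma)\pi^2\), which has the curvature \(R_{\pi\pi}=-\sigma^2\gamma(1-\gamma)\) stated above, and, as a further simplification not taken from the paper, drops the term involving \(\nabla_{\tilde p}\underline{w}\) and sets \(b=\gamma(\tilde\mu(\tilde p)-r)\), with \(r\) an assumed money-market rate and \(\tilde\mu(\tilde p)=\sum_i p^i\mu_i\) assumed to be the filter-weighted drift. The result is a Merton-type ratio, not formula (5.7); the function names and numbers are illustrative.

```python
import numpy as np

def filtered_drift(p_tilde, mu):
    """Filter-weighted drift sum_i p^i mu_i, with p^N = 1 - sum_{j<N} p~^j (assumed form)."""
    p = np.append(p_tilde, 1.0 - np.sum(p_tilde))
    return float(p @ mu)

def quadratic_maximizer(b, sigma, gamma):
    """Maximizer of R(pi) = b*pi - 0.5*sigma**2*gamma*(1-gamma)*pi**2, concave for 0 < gamma < 1."""
    return b / (sigma**2 * gamma * (1.0 - gamma))

def myopic_stock_fraction(p_tilde, mu, r, sigma, gamma):
    # Hypothetical simplification: gradient (hedging) term dropped, b = gamma * (mu~ - r)
    b = gamma * (filtered_drift(p_tilde, mu) - r)
    return quadratic_maximizer(b, sigma, gamma)

mu = np.array([0.12, 0.02])      # illustrative high-growth and low-growth drifts
r, sigma, gamma = 0.01, 0.2, 0.5

# Brute-force check of the first-order condition on a fine grid
b = gamma * (filtered_drift(np.array([0.7]), mu) - r)
grid = np.linspace(-5.0, 5.0, 100001)
R = b * grid - 0.5 * sigma**2 * gamma * (1.0 - gamma) * grid**2
print(grid[np.argmax(R)], quadratic_maximizer(b, sigma, gamma))

# Stock fraction as the filtered probability of the high-growth regime increases
fractions = [myopic_stock_fraction(np.array([prob]), mu, r, sigma, gamma)
             for prob in (0.1, 0.5, 0.9)]
print(fractions)
```

In this simplified setting the stock fraction grows linearly with the filtered probability of the high-growth regime, consistent with the qualitative finding stated in the abstract; the full strategy (5.7) also carries the gradient term.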

(ii) For simplicity, let us write \(\widetilde{\pi}_{s}:=\widetilde{\pi }^{S}(s,\tilde{p}_{s})\). First, since equality holds in (B.10) when \(\pi=\tilde{\pi }\), it follows that

Similarly, for every feedback control \(\pi_{s}=\pi(s,\tilde{p}_{s})\) such that \((\pi,0)\in\mathcal{A}(t,T;\tilde{p},1)\),

where the inequality comes from (B.10) and the last equality follows from (B.11). These relations show the optimality of \(\tilde{\pi}\) and prove assertions (1) and (2). □

Proof of Theorem 5.4

For brevity, define the operator

and denote by

the nonlinear term of the PDE (5.17). Notice that since \(\tilde{h} > 0\) by construction, we have \(H\le0\). Moreover, \(u\mapsto H(t,{\tilde{p}},u)\) is smooth and Lipschitz-continuous on \([\bar{c},+\infty)\) for any \(\bar{c}>0\), uniformly with respect to \((t,{\tilde{p}})\). We set

where \(c\) is a suitably large positive constant such that

Then we define recursively the sequence \((\bar{\psi}_{j})_{j \in\mathbb {N}}\) by

where \(\lambda\) is the Lipschitz constant of \(u\mapsto H(\cdot,\cdot,u)\) on \([\bar{c},+\infty)\), and \(\bar{c}\) is the strictly positive constant defined as

Let us recall that the linear problem (B.13) has a classical solution in \(C^{2,\alpha}_{P}\), whose existence can be proved as in Lemma 5.1; see also Remark 5.2. Next, we prove by induction that

(i) \((\bar{\psi}_{j})\) is a decreasing sequence, that is,

$$ \bar{\psi}_{j+1}\le\bar{\psi}_{j},\quad j\ge0; \tag{B.15} $$

(ii) \((\bar{\psi}_{j})\) is uniformly strictly positive, and in particular

$$ \bar{\psi}_{j+1}\ge\bar{c},\quad j\ge0, \tag{B.16} $$

with \(\bar{c}\) as in (B.14).

First, we observe that

Next, we prove (B.15) and (B.16) for \(j=0\). By (B.17) and (B.12) we have

where the inequality follows from the fact that \(c\) is chosen as in (B.12) and \(\bar{\psi}_{0} \geq1\) as observed in (B.17). Since the process \(\tilde{p}\) never reaches the boundary of the simplex by Lemma B.1, it follows from the Feynman–Kac representation theorem (or, equivalently, the maximum principle) that \(\bar{\psi}_{1}\le \bar{\psi}_{0}\). Indeed, we have

where the last inequality follows directly from (B.18). This proves (B.15) when \(j=0\). Using the recursive definition (B.13) along with the facts that \(H\le0\) and \(\lambda>0\) and inequality (B.19), we obtain

Then (B.16) with \(j=0\) follows again from the Feynman–Kac theorem; indeed, by (B.20) we have

where the last inequality follows from the positivity of the first expectation guaranteed by (B.20).

Next, we assume the inductive hypothesis, that is,

and prove (B.15), (B.16). Recalling that \(\lambda\) is the Lipschitz constant of the function \(u\mapsto H(\cdot,\cdot,u)\) on \([\bar{c},+\infty)\), by (B.22) we have

Thus, (B.15) follows from the Feynman–Kac theorem using the same procedure as in (B.18) and (B.19). Moreover, we have

where the inequality above follows from (B.15), \(H\le 0\), and \(\lambda>0\). Then, as in (B.21), (B.16) follows from the Feynman–Kac theorem.

In conclusion, for \(j\in\mathbb{N}\), we have

Now the claim follows by proceeding as in the proof of Theorem 3.3 in [8]. Indeed, let us denote by \(\bar{\psi}\) the pointwise limit of \((\bar{\psi}_{j})\) as \(j\to+\infty\), which exists because, by (B.15) and (B.16), the sequence is decreasing and bounded below by \(\bar{c}\). Since each \(\bar{\psi}_{j}\) solves (B.13) and satisfies the uniform estimate (B.23), we can apply standard a priori Morrey–Sobolev-type estimates (see Theorems 2.1 and 2.2 in [8]) to conclude that, for any \(\alpha\in(0,1)\), \(\|\bar{\psi}_{j}\|_{C_{P}^{1,\alpha}((0,T)\times\tilde{\Delta}_{N-1})}\) is bounded by a constant depending only on \(\mathcal{B}\), \(\alpha\), and \(\lambda\). Hence, by the classical Schauder interior estimate (see, e.g., Theorem 2.3 in [8]), \(\|\bar{\psi}_{j}\|_{C_{P}^{2,\alpha}((0,T)\times\tilde{\Delta}_{N-1})}\) is bounded uniformly in \(j\in\mathbb{N}\). It follows that \((\bar{\psi}_{j})_{j\in\mathbb{N}}\) admits a subsequence (still denoted by \((\bar{\psi}_{j})\)) that converges in \(C^{2,\alpha}\). Thus, passing to the limit in (B.13) as \(j\to \infty\), we have

and \(\bar{\psi}(T,\cdot)=1\).
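The mechanism of the monotone iteration can be seen on a toy problem. The Python sketch below drops the spatial variable and the operator \(\mathcal{B}\) and solves the scalar terminal-value problem \(\psi'(t)+H(\psi(t))=0\), \(\psi(T)=1\), with the hypothetical nonlinearity \(H(u)=-a/u\), which is nonpositive and Lipschitz on \([\bar c,\infty)\) with constant \(a/\bar c^2\). Each iterate solves the linear problem \(\psi_{j+1}'-\lambda\psi_{j+1}=-(\lambda\psi_j+H(\psi_j))\) by variation of constants, a deterministic counterpart of the Feynman–Kac step used above. Since \(u\mapsto\lambda u+H(u)\) is nondecreasing, the iterates decrease from the supersolution \(\psi_0\equiv1\) and remain above the exact solution \(\psi(t)=\sqrt{1-2a(T-t)}\), up to discretization error. This illustrates the idea only; it is not the scheme (B.13) itself.

```python
import numpy as np

def monotone_iteration(H, lam, T=1.0, n=1000, iters=40):
    """Toy scalar monotone scheme for psi' + H(psi) = 0 on [0, T], psi(T) = 1.

    Each step freezes lam*psi + H(psi) at the previous iterate and solves
    psi' - lam*psi = -(lam*psi_prev + H(psi_prev)) exactly in time, i.e.
    psi(t) = exp(-lam (T - t)) + int_t^T exp(-lam (s - t)) F(s) ds,
    with the integral approximated by the trapezoidal rule.
    """
    t = np.linspace(0.0, T, n + 1)
    dt = t[1] - t[0]
    decay = np.exp(-lam * dt)
    psi = np.ones_like(t)            # psi_0 = 1 is a supersolution because H <= 0
    history = [psi]
    for _ in range(iters):
        F = lam * psi + H(psi)       # nondecreasing in psi when lam >= Lipschitz const of H
        I = np.zeros_like(t)         # I[k] ~ int_{t_k}^T exp(-lam (s - t_k)) F(s) ds
        for k in range(n - 1, -1, -1):
            I[k] = decay * I[k + 1] + 0.5 * dt * (F[k] + decay * F[k + 1])
        psi = np.exp(-lam * (T - t)) + I
        history.append(psi)
    return t, history

a = 0.2

def H(u):
    # Hypothetical nonlinearity: H <= 0 for u > 0, Lipschitz on [c_bar, inf)
    return -a / u

c_bar = np.sqrt(1.0 - 2.0 * a)      # minimum of the exact solution on [0, 1]
lam = a / c_bar**2                  # Lipschitz constant of H on [c_bar, inf)
t, hist = monotone_iteration(H, lam)
exact = np.sqrt(1.0 - 2.0 * a * (1.0 - t))
print(np.max(np.abs(hist[-1] - exact)))
```

The two properties proved by induction above, monotonicity (B.15) and a uniform positive lower bound (B.16), are exactly what makes the pointwise limit of the iterates well defined in this toy setting as well.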

Finally, in order to prove that \(\bar{\psi}\in C((0,T]\times\tilde{\Delta}_{N-1})\), we use the standard barrier-function argument. Recall that \(w\) is a barrier function for the operator \((\mathcal{B}+\frac{\bar{\varPsi}}{1-\gamma})\) on the domain \((0,T]\times\tilde{\Delta}_{N-1}\) at the point \((T,\bar{p})\) if \(w\in C^{2} (V \cap((0,T]\times\tilde{\Delta}_{N-1}))\), where \(V\) is a neighborhood of \((T,\bar{p})\), and the following hold:

(i) \((\mathcal{B}+\frac{\bar{\varPsi}}{1-\gamma}) w \leq-1\) in \(V \cap((0,T)\times\tilde{\Delta}_{N-1})\);

(ii) \(w > 0\) in \(V \cap((0,T]\times\tilde{\Delta}_{N-1}) \setminus \{(T,\bar{p})\}\) and \(w(T,\bar{p}) = 0\).

Next, we fix \(\bar{p}\in\tilde{\Delta}_{N-1}\). Following [15, Sect. 3.4], it is not difficult to check that

is a barrier at \((T,\bar{p})\), provided that \(c_{1},c_{2}\) are sufficiently large. Then we set

where \(k\) is a suitably large positive constant, independent of \(j\), such that

and \(\bar{\psi}_{j}\le v^{+}\) on \(\partial(V \cap((0,T)\times\tilde{\Delta}_{N-1}))\). The maximum principle yields \(\bar{\psi}_{j}\le v^{+}\) on \(V \cap ((0,T)\times\tilde{\Delta}_{N-1})\); analogously, \(\bar{\psi}_{j}\ge v^{-}\) on the domain \(V \cap ((0,T)\times\tilde{\Delta}_{N-1})\), and letting \(j\to\infty\), we get

Therefore, we deduce that

which concludes the proof. □

Proof of Theorem 5.5

As in the proof of Theorem 5.3, to ease the notational burden, we write \(\tilde{p}\) for \(\tilde{p}^{\circ}\), \(\tilde{p}_{s}\) for \(\tilde{p}^{t}_{s}\), \(\pi\) for \(\pi^{t}\), \(\tilde{W}\) for \(\tilde{W}^{t}\), \(\tilde{\mathbb{P}}\) for \(\tilde{\mathbb{P}}^{t}\), ℙ for \(\mathbb{P}^{t}\), and \(\mathcal{G}_{s}^{I}\) for \(\mathcal{G}_{s}^{t,I}\). Similarly to the proof of Theorem 5.3, it is easy to see that the strategy \({\widetilde{\pi}_{s}:=(\widetilde{\pi}^{S}_{s},\widetilde{\pi }^{P}_{s})^{\top}}=(\widetilde{\pi}^{S}(s,{\tilde{p}_{s- },H_{s- }}), {\widetilde{\pi}^{P}(s,{\tilde{p}_{s- },H_{s- }})})^{\top}\) defined by (5.20), (5.21) is admissible, that is, satisfies (4.5). This essentially follows from condition (2.6) and the fact that both \(\underline{w}(s,\tilde{p})\) and \(\bar{w}(s,\tilde{p})\) belong to \(\mathcal{C}_{P}^{2,\alpha}\); hence, their first- and second-order space derivatives are bounded on \([0,T]\times\widetilde{\Delta}_{N-1}\). Here, it is also useful to recall that \({\mathbb{P}}[\tilde{p}_{s}\in \tilde{\Delta}_{N-1},\ t\leq s\leq T]=1\), as shown in the proof of Theorem 5.3.

For a feedback control \(\pi_{s}:=(\pi_{s}^{S},\pi^{P}_{s}):=(\pi ^{S}(s,\tilde{p}_{s- },H_{s- }),\pi^{P}(s,\tilde{p}_{s- },H_{s- }))\) such that \((\pi^{S},\pi^{P})\in\bar{\mathcal{A}}(t,T;\tilde{p},0)\), define the process

where \(w(s,\tilde{p},z):=(1-z)\bar{w}(s,\tilde{p})+z\underline {w}(s,\tilde{p})\), and \(\tilde{\eta}\) is defined as in (4.13). Note that \(\tilde{\eta}\) can be written as

and thus \(-\tilde{\eta}\) is concave in \(\pi\). This in turn implies that there exists a nonrandom constant \(A<\infty\) such that

since \(\underline{w}, \bar{w}\in C([0,T]\times\tilde{\Delta}_{N-1})\). We prove the two assertions of Theorem 5.5 through the following steps:

(i) Define the processes \(\mathcal{Y}_{s}=e^{w(s,\tilde{p},H_{s})}\) and \(\mathcal{U}_{s}=e^{-\gamma\int_{t}^{s} {\tilde{\eta}(u,\tilde {p}_{u},\pi_{u})} \,du}\). By Itô’s formula, the generator formula (4.7) with \(f(s,\tilde{p},z)=e^{w(s,\tilde{p},z)}\), and the same arguments as those used to derive (4.11),

where

Using the expression for \({\eta}\) in (4.13) and arguments similar to those used to derive (4.13), we may write \(M^{\pi}\) as

with

Clearly, \(R(u,\tilde{p},\pi,z)\) is a concave function of \(\pi\) for each \((u,\tilde{p},z)\), and it attains its maximum at \(\tilde{\pi }(u,\tilde{p},z)=(\tilde{\pi}^{S}(u,\tilde{p},z),\tilde{\pi }^{P}(u,\tilde{p},z))\) as defined in (5.19), (5.20). Substituting this maximizer into (B.26) and performing rearrangements similar to those leading to (5.5) or (5.14) (according to whether \(z=1\) or \(z=0\)), we get

in light of (5.5) or (5.14), respectively. Therefore, we get the inequality

with equality if \(\pi=\widetilde{\pi}\). Note that \(\mathbb{E}^{{\tilde{\mathbb{P}}}}[\mathcal{M}^{c}_{T}]=0\) since it is possible to find a nonrandom constant \(B\) such that

in view of (B.24) and the fact that the partial derivatives of \(\underline{w}\) and \(\bar{w}\) are uniformly bounded on \([0,T]\times\widetilde{\Delta}_{N-1}\). The latter statement follows from the fact that both \(\underline{w}\) and \(\bar{w}\) are \(\mathcal{C}_{P}^{2,\alpha}\) on \(\widetilde{\Delta}_{N-1}\) in light of Lemma 5.1 and Theorem 5.4. To deal with \(\mathcal{M}^{d}\), note that since \(\underline{w},\bar{w}\in C([0,T]\times\tilde{\Delta}_{N-1})\) and \((\mathcal{U}_{s})_{t\leq s\leq T}\) is uniformly bounded (due to the fact that \(-\tilde{\eta}\) is concave), we have that the integrand of the second integral in (B.25) is uniformly bounded, and thus \(\mathbb{E}^{\tilde{\mathbb{P}}} [\mathcal{M}^{d}_{T}]=0\) as well. The two previous facts, together with the initial conditions \(H_{t}=0\) and \(\tilde{p}_{t}=\tilde{p}\), lead to

with equality if \(\pi=\widetilde{\pi}\).

(ii) The rest of the proof is similar to the postdefault case. Concretely, since equality holds in (B.28) when \(\pi =\tilde{\pi}\), we get

since \(w(T,\tilde{p}_{T},H_{T})=(1-H_{T})\bar{w}(T,\tilde {p}_{T})+H_{T}\underline{w}(T,\tilde{p}_{T})\equiv0\). Also, by (B.28), for every feedback control \(\pi_{s}=\pi(s,\tilde{p}_{s},H_{s})\in \mathcal{A}(t,T;\tilde{p},0)\), we have

where the last equality follows from (B.29). This proves assertions (1) and (2). □

About this article

Cite this article

Capponi, A., Figueroa-López, J.E. & Pascucci, A. Dynamic credit investment in partially observed markets. Finance Stoch 19, 891–939 (2015). https://doi.org/10.1007/s00780-015-0272-0
