Abstract

This article describes F-IVM, a unified approach for maintaining analytics over changing relational data. We exemplify its versatility in four disciplines: processing queries with group-by aggregates and joins; learning linear regression models using the covariance matrix of the input features; building Chow-Liu trees using pairwise mutual information of the input features; and matrix chain multiplication. F-IVM has three main ingredients: higher-order incremental view maintenance; factorized computation; and ring abstraction. F-IVM reduces the maintenance of a task to that of a hierarchy of simple views. Such views are functions mapping keys, which are tuples of input values, to payloads, which are elements from a ring. F-IVM supports efficient factorized computation over keys, payloads, and updates. It treats uniformly seemingly disparate tasks: While in the key space, all tasks require general joins and variable marginalization, in the payload space, tasks differ in the definition of the sum and product ring operations. We implemented F-IVM on top of DBToaster and show that it can outperform classical first-order and fully recursive higher-order incremental view maintenance by orders of magnitude while using less memory.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Supporting modern applications that rely on accurate and real-time analytics computed over large and continuously evolving databases is a challenging data management problem [4]. Special cases are the classical problems of incremental view maintenance (IVM) [15, 32] and stream query processing [1, 36].

Recent efforts studied the problem of computing machine learning (ML) tasks over static databases. The predominant approach loosely integrates the database systems with the statistical packages [26, 34, 40, 54, 55]: First, the database system computes the input to the statistical package by joining the database relations. It then exports the join result to the statistical package for training ML models. This approach precludes real-time analytics due to the expensive export/import steps. Systems like Morpheus [35] and LMFAO [59] push the ML task inside the database and learn ML models over static normalized data. In particular, LMFAO and its precursors F [58] and AC/DC [30] decompose the task of learning classification and regression models over arbitrary joins into factorized computation of aggregates over joins and fixpoint computation of model parameters. This factorization may significantly lower the complexity by avoiding the computation of Cartesian products lurking within joins [5, 52]. Both the tight integration of the database computation step and of the statistical computation step as well as the factorized computation are pre-requisites for real-time analytics.

This article describes F-IVM,Footnote 1 a unified approach for maintaining analytics over changing relational data. We exemplify its versatility in four disciplines: processing queries with group-by aggregates and joins; learning linear regression models using the covariance matrix of the input features; building Chow-Liu trees using pairwise mutual information matrix of the input features; and matrix chain multiplication.

F-IVM has three main ingredients: higher-order incremental view maintenance (IVM); factorized computation and data representation; and ring abstraction.

The first ingredient reduces the maintenance task to that of a hierarchy of simple views. Such views are functions mapping keys, which are tuples of input values, to payloads, which are elements from a ring. In contrast to classical (first-order) IVM, which computes changes to the query result on the fly and does not use extra views, F-IVM can significantly speed up the maintenance task and lower its complexity by using carefully chosen views. Yet F-IVM can use substantially fewer views than the fully recursive IVM, which is used by the state-of-the-art IVM system DBToaster [32]. In our experiments, F-IVM outperforms first-order and higher-order IVM by up to two orders of magnitude in both runtime and memory requirements.

The second ingredient supports efficient computation and representation for keys, payloads, and updates. F-IVM exploits insights from query evaluation algorithms with best known complexity and optimizations that push aggregates past joins [3, 5, 52]. It can process bulk updates expressed as low-rank decompositions [33, 61] and maintain a factorized representation of query results, which is essential to achieve low complexity for free-connex acyclic and q-hierarchical queries.

The third ingredient allows F-IVM to treat uniformly seemingly disparate tasks. In the key space, all tasks require joins and variable marginalization. In the payload space, tasks differ in the ring operations. To maintain linear regression models and Chow-Liu trees under updates, F-IVM uses a new ring that captures the maintenance of a covariance matrix over continuous and categorical features from the input database. Furthermore, it composes rings to capture the data-dependent computation for complex analytics. Thanks to the ring abstraction, F-IVM is highly extensible: efficient maintenance for new analytics over relational databases is readily available as long as they come with appropriate sum and product ring operations.

F-IVM was introduced in prior work [46]. This article complements it as follows. Section 2 overviews the F-IVM design. Section 7 shows how F-IVM achieves the best known complexity for q-hierarchical and free-connex acyclic queries. Section 8 introduces the covariance ring over continuous and categorical features. Section. 9 includes new experiments on: the covariance matrix for continuous and categorical features; end-to-end linear regression models; Chow-Liu trees; q-hierarchical queries with eager and lazy approaches and payloads carrying the listing or the factorized representation of the query result; and path queries of increasing length on graph data to stress-test the scalability of the IVM engines.

1.1 F-IVM by example

Consider the following SQL query over a database \({\mathcal {D}}\) with relations R(A, B), S(A, C, E), and T(C, D):

A naïve query evaluation approach first computes the join and then the aggregate. This takes \({\mathcal {O}}(N^3)\) time, where N is the size of \({\mathcal {D}}\). An alternative approach exploits the distributivity of SUM over multiplication to partially push the aggregate past joins and then combine the partial aggregates. For instance, one such partial sum over S can be expressed as the view V\(_\texttt {S}\):

In the view V\(_\texttt {S}\), we identify keys, which are tuples over (A, C), and payloads, which are aggregate values S\(_\texttt {E}\). Similarly, we compute partial sums over R and T as views V\(_\texttt {R}\) and V\(_\texttt {T}\). These views are joined as depicted by the view tree in Fig. 1, which is akin to a query plan with aggregates pushed past joins. This view tree computes the result of Q in \({\mathcal {O}}(N)\) time.

View tree for the query in Ex. 1.1. The propagation paths for updates to S (right red) and to T (left blue)

Consider now the problem of learning, for each pair (a, c) of (A, C)-values in the natural join of R, S, and T, a linear function \(f_{a,c}\) with parameters \(\theta _{0}\), \(\theta _{D}\) and \(\theta _{E}\) that predicts the label B given the features D and E:

Our insight is that the same view tree in Fig. 1 can compute the gradient vector used for learning \(f_{a,c}\), where we replace the SQL SUM and * operators.

As shown in Sect. 8.1, the gradient of the square loss objective function needs the computation of three types of aggregates: the scalar \(c \) that is the count aggregate SUM(1); the vector \(\varvec{s} \) of linear aggregates SUM(i), for \(i\in \{\texttt {B},\texttt {D},\texttt {E}\}\); and the matrix \(\varvec{Q} \) of quadratic aggregates SUM(\(i*j\)), where \(i,j\in \{\texttt {B},\texttt {D},\texttt {E}\}\). These aggregates capture the correlation between the features and the label.

We treat these aggregates as one compound aggregate \((c,\varvec{s},\varvec{Q})\) so we can share computation across them. This compound aggregate can be partially pushed past joins similarly to the SUM aggregate discussed before. Its values are carried in the key payloads of views in the view tree from Fig. 1. For instance, the partial compound aggregate \((c _\texttt {T},\varvec{s} _\texttt {T},\varvec{Q} _\texttt {T})\) at the view V\(_\texttt {T}\) computes, for each C-value, the count, sum, and sum of squares of the D-values in T. Similarly, the partial aggregate \((c _\texttt {S},\varvec{s} _\texttt {S},\varvec{Q} _\texttt {S})\) at the view V\(_\texttt {S}\) computes, for each pair (A, C), the count, sum, and sum of squares of E-values in S. In the view V\(_\texttt {ST}\), which is the join of V\(_\texttt {T}\) and V\(_\texttt {S}\), each key (a, c) is associated with the multiplication of the payloads for the keys c in V\(_\texttt {T}\) and (a, c) in V\(_\texttt {S}\). This multiplication works on compound aggregates: The scalar \(c _\texttt {ST}\) is the arithmetic multiplication of \(c _\texttt {T}\) and \(c _\texttt {S}\); the vector of linear aggregates \(\varvec{s} _\texttt {ST}\) is the sum of the scalar-vector products \(c _\texttt {T}\varvec{s} _\texttt {S}\) and \(c _\texttt {S}\varvec{s} _\texttt {T}\); finally, the matrix \(\varvec{Q} _\texttt {ST}\) of quadratic aggregates is the sum of the scalar-matrix products \(c _\texttt {T}\varvec{Q} _\texttt {S}\) and \(c _\texttt {S}\varvec{Q} _\texttt {T}\), and of the outer products of the vectors \(\varvec{s} _\texttt {T}\) and the transpose of \(\varvec{s} _\texttt {S}\) and also of \(\varvec{s} _\texttt {S}\) and the transpose of \(\varvec{s} _\texttt {T}\). Our approach shares the computation across the aggregates: The scalar aggregates are used to scale up the linear and quadratic aggregates, while the linear aggregates are used to compute the quadratic aggregates.

We now turn to incremental view maintenance. F-IVM operates over view trees. Whereas for non-incremental computation we only materialize the top view in the tree and the input relations, for incremental computation we may materialize additional views to speed up the maintenance task. Our approach is an instance of higher-order IVM, where an update to one relation may trigger the maintenance of several views.

Figure 1 shows the leaf-to-root maintenance paths under changes to \(\texttt {S}\) and \(\texttt {T}\). For updates \(\delta {\texttt {S}}\) to \(\texttt {S}\), each delta view \(\delta {V_\texttt {S}}\), \(\delta {V_\texttt {ST}}\), and \(\delta {\texttt {Q}}\) is computed using delta rules:

An update may consist of both inserts and deletes, which are encoded as keys with positive and respectively negative payloads. For the count aggregate, the payload is 1 for an insert and \(-1\) for a delete. For the compound aggregate, the payload is \((1, \textbf{0}_{5 \times 1}, \textbf{0}_{5 \times 5})\) for an insert and \((-1, \textbf{0}_{5 \times 1}, \textbf{0}_{5 \times 5})\) for a delete, where \(\textbf{0}_{n \times m}\) is the n-by-m matrix with all zero values.

F-IVM materializes and maintains views depending on the update workload. For updates to all input relations, it materializes each view in the view tree. For updates to R only, it materializes V\(_\texttt {ST}\); for updates to S only, it materializes V\(_\texttt {R}\) and V\(_\texttt {T}\); for updates to T only, it materializes V\(_\texttt {R}\) and V\(_\texttt {S}\). F-IVM takes constant time for updates to S and linear time for updates to R and T; these complexities are in the number of distinct keys in the views. In contrast, the first-order IVM computes one delta query per each updated relation and without the use of extra views. It takes linear time for updates to any of the three relations for our example query. The fully recursive higher-order IVM constructs a view tree for each delta query, so overall more views, including the view materializing the join of V\(_\texttt {R}\), V\(_\texttt {S}\), and \(\delta {\texttt {T}}\).

F-IVM thus needs the same view tree and views for our query with one SUM aggregate and even for the learning task with the ten SUM aggregates. In contrast, the first-order IVM needs to compute a distinct delta query for each of these aggregates for updates to any of the three relations. DBToaster, which is the state-of-the-art fully recursive IVM, computes 31 views, ten top views and 21 auxiliary ones. Whereas F-IVM shares the computation across these aggregates, the other IVM approaches do not. This significantly widens the performance gap between F-IVM and its competitors.

2 Overview of the F-IVM system

Figure 2 overviews the main components of F-IVM, annotated with the numbers of sections where they are discussed. Applications (Sect. 8), e.g., database analytics, training linear regression model and Chow-Liu trees, and linear algebra computation, rely on queries with natural joins and group-by aggregates, where each aggregate is expressed using the sum and product operations in a ring. Queries and rings serve as input to F-IVM, together with a stream of updates (tuple inserts and deletes) to the underlying database. Section 3 details the data model, the query language supported by F-IVM, and the ring algebraic structure.

The logical optimizer creates a variable order for the input query (Sect. 4). This is akin to a query plan, albeit expressed as a partial order on the query variables as opposed to a partial order on the relations to join. Classical query evaluation uses query plans that dictate the order in which the relations are joined. F-IVM uses variable orders that dictate the order in which the variables are marginalized. For each join variable, all relations with that variable are joined. This choice is motivated by the observation that relation-at-a-time query plans is suboptimal in general, whereas the evaluation by variable orders is worst-case optimal [43].

Finding a good variable order is a hard problem. Q-hierarchical queries [9] admit asymptotically best variable orders that can be found efficiently (Sect. 7). This also applies to queries, which become q-hierarchical on databases that satisfy functional dependencies.

Given a variable order for a query, the physical optimizer creates a view tree (Sect. 4), which is a tree of views to support the maintenance and output enumeration of the query. Updates to base relations are propagated bottom-up in the tree, while output enumeration requires top-down access in the view tree. Depending on which base relations are updatable (dynamic) or non-updatable (static), F-IVM decides to materialize and maintain views in the view tree to support efficient propagation of the updates and avoid recomputation. Section 5 discusses the view materialization problem, whereas Sect. 6 discusses efficient update propagation.

Each view is accessed via indices with key-payload entries. Its primary index is a hash map over all its keys (Sect. 3). F-IVM may also need secondary and even tertiary indices, which are hash maps over different subsets of its keys. Such indices are updated lazily: the index updates are buffered and only executed when index access is required. The views for q-hierarchical queries require the primary indices to support updates that are propagated bottom-up in the view tree, and secondary indices to support output enumeration that proceeds top-down in the view tree (Sect. 7). F-IVM implements equality-based joins using in-memory hash-based join operators. Aggregation is performed using variable marginalization. To marginalize a variable, F-IVM enumerates the entries with the same key, except for the marginalized variable, and applies the aggregation on these entries on the fly.

For a view tree and ring specification for each variable to be marginalized, the compiler outputs code in DBToaster’s intermediate representation language M3. DBToaster has its own optimizer and compiler that turns M3 code into highly optimized C++ code. This code takes the stream of input data updates, maintains the views, and enumerates the query output, relying on DBToaster’s runtime library for data ingestion.

3 Data model and query language

The data model of F-IVM is based on relations over rings and its query language allows for natural joins and group-by aggregates over such relations.

Definition 1

A ring \((\textbf{D}, +, *, \varvec{0}, \varvec{1})\) is a set \(\textbf{D} \) with two closed binary operations \(+\) and \(*\), the additive identity \(\varvec{0}\), and the multiplicative identity \(\varvec{1}\) such that for all \(a,b,c\in \textbf{D} \), the following axioms are satisfied:

-

1.

\(a +b = b+a\).

-

2.

\((a +b)+c = a +(b +c)\).

-

3.

\(\varvec{0}+a = a +\varvec{0}= a\).

-

4.

\(\exists -a \in \textbf{D}: a +(-a) = (-a) +a = \varvec{0}\).

-

5.

\((a *b) *c = a *(b *c)\).

-

6.

\(a *\varvec{1}= \varvec{1}* a = a\).

-

7.

\(a *(b +c) = a *b +a *c\) and \((a +b) *c = a *c +b *c\).

A semiring \((\textbf{D}, +, *, \varvec{0}, \varvec{1})\) satisfies all of the above properties except the additive inverse property (Property 4) and adds the axiom \(\varvec{0}*a = a *\varvec{0}= \varvec{0}\). A (semi)ring for which \(a *b = b *a\) is commutative. \(\square \)

Example 1

The number sets \({\mathbb {Z}}\), \({\mathbb {Q}}\), \({\mathbb {R}}\), and \({\mathbb {C}}\) with arithmetic operations \(+\) and \(\cdot \) and numbers 0 and 1 form commutative rings. The set \({\mathcal {M}}\) of \((n \times n)\) matrices forms a non-commutative ring \(({\mathcal {M}}, \cdot , +, 0_{n,n}, I_{n})\), where \(0_{n,n}\) and \(I_{n}\) are the zero matrix and the identity matrix of size \((n \times n)\). The set \({\mathbb {N}}\) of natural numbers is a commutative semiring but not a ring because it has no additive inverse. Further examples are the max-product semiring \(({\mathbb {R}}_{+}, \max , \times , 0, 1)\), the Boolean semiring \((\{ \text {true}, \text {false} \}, \vee , \wedge , \text {false}, \text {true})\), and the set semiring \((2^{U}, \cup , \cap , \emptyset , U)\) of all possible subsets of a given set U. \(\square \)

Data. A schema \({\mathcal {S}}\) is a set of variables. Let \(\textsf {Dom}(X)\) denote the domain of a variable X. A tuple \(\textbf{t} \) over schema \({\mathcal {S}}\) has the domain \(\textsf {Dom}({\mathcal {S}}) = \prod _{X \in {\mathcal {S}}}{\textsf {Dom}(X)}\). The empty tuple \(()\) is the tuple over the empty schema.

Let \((\textbf{D},\hspace{-0.05em} +,\hspace{-0.05em} *,\hspace{-0.05em} \varvec{0},\hspace{-0.05em} \varvec{1})\) be a ring. A relation \(\textsf{R}\) over schema \({\mathcal {S}}\) and the ring \(\textbf{D} \) is a function \(\textsf{R}: \textsf {Dom}({\mathcal {S}}) \rightarrow \textbf{D} \) mapping tuples over schema \({\mathcal {S}}\) to values in \(\textbf{D} \) such that \(\textsf{R}[\textbf{t} ] \ne \varvec{0}\) for finitely many tuples \(\textbf{t} \). The tuple \(\textbf{t} \) is called a key, while its mapping \(\textsf{R}[\textbf{t} ]\) is the payload of \(\textbf{t} \) in \(\textsf{R}\). We use \(\textsf {sch}(\textsf{R})\) to denote the schema of \(\textsf{R}\). The statement \(\textbf{t} \in \textsf{R}\) tests if \(\textsf{R}[\textbf{t} ] \ne \varvec{0}\). The size \(|\textsf{R}|\) of \(\textsf{R}\) is the size of the set \(\{ \textbf{t} \mid \textbf{t} \in \textsf{R} \}\), which consists of all keys with non-\(\varvec{0}\) payloads. A database \({\mathcal {D}}\) is a collection of relations over the same ring. Its size \(|{\mathcal {D}}|\) is the sum of the sizes of its relations. This data model is in line with prior work on K-relations over provenance semirings [24], generalized multiset relations [31], and factors over semirings [3].

Each relation or materialized view \(\textsf{R}\) over schema \({\mathcal {S}}\) is implemented as a hash map or a multidimensional array that stores key-payload entries \((\textbf{t},\textsf{R}[\textbf{t} ])\) for each tuple \(\textbf{t} \) with \(\textsf{R}[\textbf{t} ] \ne \varvec{0}\). The data structure can: (1) look up, insert, and delete entries in amortized constant time, and (2) enumerate all stored entries in \(\textsf{R}\) with constant delay, i.e., the following times are constant: (i) the time between the start of the enumeration and outputting the first tuple, (ii) the time between outputting any two consecutive tuples, and (iii) the time between outputting the last tuple and the end of the enumeration [19]. For a schema \({\mathcal {X}} \subset {\mathcal {S}}\), we use an index data structure that for any \(\textbf{t} \in \textsf {Dom}({\mathcal {X}})\) can: (4) enumerate all tuples in \(\sigma _{{\mathcal {X}}=\textbf{t}} \textsf{R}\) with constant delay, (5) check \(\textbf{t} \in \pi _{{\mathcal {X}}}\textsf{R}\) in amortized constant time; and (7) insert and delete index entries in amortized constant time.

We give a hash-based example data structure that supports the above operations with the stated complexities. Consider a relation R over schema \({\mathcal {S}}\). A hash table with chaining stores key value entries of the form \((\textbf{t},R(\textbf{t}))\) for each tuple \(\textbf{t} \) over \({\mathcal {S}}\) with \(R(\textbf{t}) \ne \varvec{0}\). The entries are doubly linked to support enumeration with constant delay. The hash table can report the number of its entries in constant time and supports lookups, inserts, and deletes in amortized constant time. To support index operations on a schema \({\mathcal {X}} \subset {\mathcal {S}}\), we create another hash table with chaining where each table entry stores an \({\mathcal {X}}\)-value \(\textbf{t} \) as key and a doubly linked list of pointers to the entries in R having \(\textbf{t} \) as \({\mathcal {X}}\)-value. Looking up an index entry given \(\textbf{t} \) takes amortized constant time, and its doubly linked list enables enumeration of the matching entries in R with constant delay. Inserting an index entry into the hash table additionally prepends a new pointer to the doubly linked list for a given \(\textbf{t} \); overall, this operation takes amortized constant time. For efficient deletion of index entries, each entry in R also stores back-pointers to its index entries (one back-pointer per index for R). When an entry is deleted from R, locating and deleting its index entries in doubly linked lists takes constant time per index.

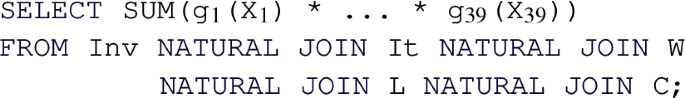

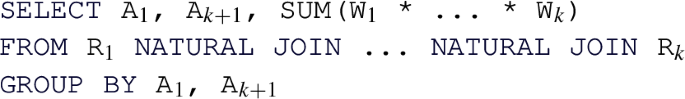

Query Language. We consider queries with natural joins and group-by aggregates:

The group-by variables \(X_1,\ldots ,X_f\) are free, while the other variables \(X_{f+1},\ldots ,X_m\) are bound. The SUM aggregate values are from a ring \((\textbf{D}, +, *, \varvec{0}, \varvec{1})\). The SUM operator uses the addition \(+\) from \(\textbf{D} \). Further aggregates can be expressed using the sum and product operations from the ring. A lifting function \(g_{k}: \textsf {Dom}(X_k) \rightarrow \textbf{D} \), for \(f < k \le m\), maps \(X_k\)-values to elements in \(\textbf{D} \): when marginalizing \(X_k\), we aggregate the values \(g_{k}(x)\) from \(\textbf{D} \) and not the values \(x\) from \(\textsf {Dom}(X_k)\).

Instead of the verbose SQL notation, we use the following more compact encoding:

where \(\bigotimes \) is the join operator, \(\textstyle \bigoplus _{X_{f+1}}\) is the aggregation operator that marginalizes over the variable \(X_{f+1}\), and each relation \(\textsf {R}_{\textsf {i}}\) is a function mapping keys over schema \({\mathcal {S}}_i\) to payloads in \(\textbf{D} \). We also need a union operator \(\uplus \) to express updates (insert/delete) to relations.

Example 2

The SQL query

over tables R(A, B), S(A, C, E), and T(C, D) can be encoded as follows in our formalism. The table R is encoded as a relation \(\textsf{R}: \textsf {Dom}(A) \times \textsf {Dom}(B) \rightarrow {\mathbb {Z}}\) that maps tuples (a, b) to their multiplicity in R; similarly, we encode the tables S and T as relations \(\textsf{S}\) and \(\textsf{T}\). We translate the SQL query into:

where \(\underset{A,B,C,D,E}{\textstyle \bigoplus }\) abbreviates \(\textstyle \bigoplus _A \cdots \textstyle \bigoplus _E\). The lifting functions used for marginalization map all values to 1. Recall that by definition \(\textsf{R}\), \(\textsf{S}\), and \(\textsf{T}\) are finite. The relation \(\textsf{Q}\) maps the empty tuple () to the count. \(\square \)

Given a ring \((\textbf{D}, +, *, \varvec{0}, \varvec{1})\), relations \(\textsf{R}\) and \(\textsf{S}\) over schema \({\mathcal {S}}_1\) and relation \(\textsf{T}\) over schema \({\mathcal {S}}_2\), a variable \(X \in {\mathcal {S}}_1\), and a lifting function \(g_{X}:\textsf {Dom}(X) \rightarrow \textbf{D} \), we define the three operators as follows:

where \({\textsf {D}}_1 = \textsf {Dom}({\mathcal {S}}_1)\), \({\textsf {D}}_2 = \textsf {Dom}({\mathcal {S}}_1 \cup {\mathcal {S}}_2)\), and \({\textsf {D}}_3 = \textsf {Dom}({\mathcal {S}}_1 {\setminus } \{X\})\), and \(\pi _{{\mathcal {S}}}(\textbf{t})\) is a tuple representing the projection of tuple \(\textbf{t} \) on the schema \({\mathcal {S}}\).

Example 3

Consider relations over a ring \((\textbf{D},\hspace{-0.05em} +,\hspace{-0.05em} *,\hspace{-0.05em} \varvec{0},\hspace{-0.05em} \varvec{1})\):

The values \(r_1\), \(r_2\), \(s_1\), \(s_2\), \(t_1\), \(t_2\) are non-\(\varvec{0}\) values from \(\textbf{D} \). The operators \(\uplus \), \(\otimes \), and \(\oplus \) are akin to union, join, and aggregation (\(g_A:\hspace{-0.1em} \textsf {Dom}(A) \hspace{-0.1em}\rightarrow \hspace{-0.1em} \textbf{D} \) is the lifting for A):

Example 4

Let us consider the SQL query from Sect. 1.1, which computes SUM(R.B * T.D * S.E) grouped by A, C. Assume that B, D, and E take values from \({\mathbb {Z}}\). We model the tables R, S, and T as relations mapping tuples to their multiplicity, as in Ex. 2. The variables A and C are free, while B, D, and E are bound.

When marginalizing over the bound variables, we apply the same lifting function to these variables: \(\forall x \in {\mathbb {Z}}: g_{B}(x) = g_{D}(x) = g_{E}(x) = x\). The SQL query can be expressed in our formalism as follows:

The computation of the aggregate SUM(R.B * T.D * S.E) now happens over payloads. \(\square \)

By using relations over rings, we avoid the intricacies of incremental computation under multiset semantics caused by the non-commutativity of inserts and deletes. We simplify delta processing by representing both inserts and deletes as tuples, with the distinction that they map to positive and respectively negative ring values. This uniform treatment allows for simple delta rules for the three operators of our query language.

4 Factorized ring computation

This section introduces a framework for query evaluation based on factorized computation and data rings. The next section extends it to incremental maintenance.

Variable Orders. Given a join query Q, a variable X depends on a variable Y if both are in the schema of a relation in Q.

Definition 2

(adapted from [52]) A variable order \(\omega \) for a join query Q is a pair (F, dep), where F is a rooted forest with one node per variable in Q, and dep is a function mapping each variable X to a set of variables in F. It satisfies the following constraints:

-

For each relation in Q, all of its variables lie along a root-to-leaf path in F.

-

For each variable X, dep(X) is the subset of its ancestors in F on which the variables in the subtree rooted at X depend.

Example 5

Consider the query from Ex. 2 that joins the relations \(\textsf{R}[A,B]\), \({{\textsf{S}}}{[{ A,C,E }]}\), and \({{\textsf{T}}}{[{ C,D }]}\). Figure 3 gives a variable order (top left) for the query. Variable D has ancestors A and C, yet it only depends on C since C and D appear in the same relation \(\textsf{T}\) and D does not occur in any relation together with A. Thus, \(dep(D)=\{C\}\). Given C, the variables D and E are independent of each other. \(\square \)

For a query Q with free variables, a variable order is free-top if no bound variable is an ancestor of a free variable [28]. Variable orders are a different syntax [52] for hypertree decompositions [23]. They are more natural for algorithms that proceed one variable at a time.

View Trees. Our framework relies on a variable order \(\omega \) for the input query Q to describe the structure of the computation and indicate which variable marginalizations are pushed past joins. Based on \(\omega \), we construct a tree of views that represent F-IVM ’s data structure to support query maintenance and enumeration.

Figure 4 gives a function \(\tau \) that constructs a view tree \(\tau \) for a variable order \(\omega \) and the set \({\mathcal {F}}\) of free variables of the query Q. Without loss of generality, we assume that \(\omega \) is a single rooted tree. Otherwise, we apply \(\tau \) to each tree in \(\omega \) to obtain a set of view trees. For simplicity, we assume that \(\omega \) was first extended with relations as children under their lowest variable.

The function \(\tau \) maps the variable order to a view tree of the same tree structure, yet with each variable X replaced by a view \({{\mathsf {V^{@X}_{rels}}}}{[{ keys }]}\). This notation states that the view \(\textsf{V}\) is (recursively) defined over the input relations rels, has free variables keys, and it corresponds to the variable X in \(\omega \); in case of a view for an input relation \(\textsf{R}\), we use the simplified notation \({{\textsf{R}}}{[{ \textsf {sch}(\textsf{R}) }]}\).

The base case (leaf in the extended variable order) is that of an input relation: We construct a view that is the relation itself. At a variable X (inner node), we distinguish two cases: If X is a bound variable, then we construct a view that marginalizes out X in the natural join of the views that are children of the current view; we thus first join on X, then apply the lifting function for X on its values, and aggregate X away. If X is a free variable, however, then we retain it in the view schema without applying the lifting function to its values. The schema of the view consists of dep(X) and the free variables in the subtree of \(\omega \) rooted at X.

(Left) view tree over the variable order \(\omega \) in Fig. 3 and \({\mathcal {F}} = \{A,C\}\); (right) View definitions

Contents of the views in the view tree from Fig. 3 in case the relations \(\textsf{R}\), \(\textsf{S}\), and \(\textsf{T}\) are over a ring \((\textbf{D}, +, *, \varvec{0}, \varvec{1})\) with \(p_i \in \textbf{D} \) for \(i \in [12]\)

Example 6

Figure 3 shows the view tree constructed by the function \(\tau \) from Fig. 4 over the variable order \(\omega \) and the empty set of free variables. Figure 5 depicts the view tree constructed over the same variable order but for the set \({\mathcal {F}} = \{A,C\}\) of free variables.

Figure 6 gives the contents of the views in the view tree from Fig. 3, where \(\textsf{R}\), \(\textsf{S}\), and \(\textsf{T}\) are relations over a ring \(\textbf{D} \) with payloads \(p_i \in \textbf{D} \) for \(i \in [12]\). Assume that \(\textbf{D} \) is the \({\mathbb {Z}}\) ring, each tuple in these relations is mapped to 1, i.e., \(p_i = 1\) for \(i \in [12]\), and the lifting functions map all values to 1. Then, the view tree computes the COUNT query from Ex. 2 and the root view \(\mathsf {V_{RST}^{@A}}\) maps the empty tuple to the overall count 10. \(\square \)

By default, the function \(\tau \) in Fig. 4 constructs one view per variable in the variable order \(\omega \). A wide relation (with many variables) leads to long branches in \(\omega \) with variables that are only local to this relation. This is, for instance, the case of our retailer dataset used in Sect. 9. Such long branches create long chains of views, where each view marginalizes one bound variable over its child view in the chain. For practical reasons, we compose such long chains into a single view that marginalizes several variables at a time.

5 Factorized higher-order IVM

We introduce incremental view maintenance in our factorized ring computation framework. Unlike evaluation, the incremental maintenance of the query result may require the materialization and maintenance of views. An update to a relation \(\textsf{R}\) triggers changes in all views from the leaf \(\textsf{R}\) to the root of the view tree.

Updates. The insertion (deletion) of a tuple \(\textbf{t} \) into (from) a relation \(\textsf{R}\) is expressed as a delta relation \(\delta \textsf{R}\) that maps \(\textbf{t} \) to \(\varvec{1}\) (and respectively \(-\varvec{1}\)). In general, \(\delta \textsf{R}\) can be a relation, thus a collection of tuples mapped to payloads. The updated relation is then the union of the old relation and the delta relation: \(\textsf{R}:= \textsf{R} \uplus \delta \textsf{R}\).

Delta Views. For each view \(\textsf{V}\) affected by an update, a delta view \(\mathsf {\delta {V}}\) defines the change in the view content. In case the view \(\textsf{V}\) represents a relation \(\textsf{R}\), then \(\mathsf {\delta {V}}=\mathsf {\delta {R}}\) if there are updates to \(\textsf{R}\) and \(\mathsf {\delta {V}}=\emptyset \) otherwise. If the view is defined using operators on other views, \(\delta \textsf{V}\) is derived using the following delta rules:

The correctness of the rules follows from the associativity of \(\uplus \) and the distributivity of \(\otimes \) over \(\uplus \); \(\textstyle \bigoplus _{X}\) is equivalent to the repeated application of \(\uplus \) for the possible values of X. The derived delta views are subject to standard simplifications: If \(\textsf{V}\) is not defined over the updated relation \(\textsf{R}\), then its delta view \(\mathsf {\delta {V}}\) is empty, and then we propagate this information using the identities \(\emptyset \uplus \textsf{V} = \textsf{V} \uplus \emptyset = \textsf{V}\) and \(\emptyset \otimes \textsf{V} = \textsf{V} \otimes \emptyset = \emptyset \).

Delta Trees. Under updates to one relation, a view tree becomes a delta tree where the affected views become delta views. The function \(\varDelta \) in Fig. 7 replaces the views along the path from the updated relation to the root with delta views. The Optimize method rewrites delta view expressions to exploit factorized updates by avoiding the materialization of Cartesian products and pushing marginalization past joins (see Sect. 6).

Example 7

Consider again the query from Ex. 2, its view tree in Fig. 3, and the same relations over the \({\mathbb {Z}}\) ring and the lifting functions that map all values to 1 as in Ex. 6. An update \(\mathsf {\delta {T}}[C,D]=\{ (c_1,d_1) \rightarrow -1, (c_2, d_2) \rightarrow 3 \}\) triggers delta computation at each view from the leaf \(\textsf{T}\) to the root of the view tree:

Given that \(\mathsf {V^{@E}_{S}} =\) \(\{(a_1,c_1) \rightarrow 2,\) \((a_1,c_2) \rightarrow 1,\) \((a_2,c_2) \rightarrow 1\}\) and \(\mathsf {V^{@B}_{R}} =\) \(\{a_1 \rightarrow 2,\) \(a_2 \rightarrow 1,\) \(a_3 \rightarrow 1\}\), we obtain \(\delta \mathsf {V^{@D}_{T}}[C] = \{c_1 \rightarrow -1,\) \(c_2 \rightarrow 3\}\), \(\delta \mathsf {V^{@C}_{ST}}[A] = \{a_1 \rightarrow 1,\) \(a_2 \rightarrow 3\}\), and \(\delta \mathsf {V^{@A}_{RST}} = \{() \rightarrow 5\}\).

A single-tuple update to \(\textsf{T}\) fixes the values for C and D. Computing \(\delta \mathsf {V^{@D}_{T}}\) then takes constant time. The delta view \(\delta \mathsf {V^{@C}_{ST}}\) iterates over all possible A-values for a fixed C-value, which takes linear time; \(\delta \mathsf {V^{@A}_{RST}}\) incurs the same linear-time cost. A single-tuple update to \(\textsf{R}\) or \(\textsf{S}\) fixes all variables on a leaf-to-root path in the delta view tree, giving a constant view maintenance cost. \(\square \)

In contrast to classical (first-order) IVM that only requires maintenance of the query result [15], our approach is higher-order IVM as updates may trigger maintenance of several interrelated views. The fully recursive IVM scheme of DBToaster [31, 32] creates one materialization hierarchy per relation in the query, whereas we use one view tree for all relations. This view tree relies on variable orders to decompose the query into views and factorize its computation and maintenance.

Which Views to Materialize and Maintain? The answer to this question depends on which relations may change. The set of the updatable relations determines the possible delta propagation paths in a view tree, and these paths may use materialized views.

Propagating changes along a leaf-to-root path is computationally most effective if each delta view joins with sibling views that are already materialized. Figure 8 gives an algorithm that reflects this idea: Given a view tree \(\tau \) and a set of updatable relations \({\mathcal {U}}\), the algorithm traverses the tree top-down to discover the views that need to be materialized. The root of the view tree \(\tau \) is always stored as it represents the query result. Every other view \(\mathsf {V_i}\) is stored only if there exists a sibling view \(\mathsf {V_j}\) defined over an updatable relation.

Example 8

We continue with our query from Ex. 7. For updates to \(\textsf{T}\) only, i.e., \({\mathcal {U}} = \{ \textsf{T} \}\), we store the root \(\mathsf {V^{@A}_{RST}}\) and the views \(\mathsf {V^{@E}_{S}}\) and \(\mathsf {V^{@B}_{R}}\) used to compute the deltas \(\mathsf {\delta {V^{@C}_{ST}}}\) and \(\mathsf {\delta {V^{@A}_{RST}}}\). Only the root view is affected: \({{\mathsf {V^{@A}_{RST}}}}{[{ ~ }]} = {{\mathsf {V^{@A}_{RST}}}}{[{ ~ }]} \uplus {{\mathsf {\delta {V^{@A}_{RST}}}}}{[{ ~ }]}\). It is not necessary to maintain other views. To also support updates to \(\textsf{R}\) and \(\textsf{S}\), we need to materialize \(\mathsf {V^{@C}_{ST}}\) and \(\mathsf {V^{@D}_{T}}\). If no updates are supported, then only the root view is stored. \(\square \)

For queries with free variables, several views in their (delta) view trees may be identical: This can happen when all variables in their keys are free and thus cannot be marginalized. For instance, a variable order \(\omega \) for the query from Ex. 4 may have the variables A and C above all other variables, in which case their views are the same in the view tree for \(\omega \). We then store only the top view out of these identical views.

IVM Triggers. For each updatable relation \(\textsf{R}\), F-IVM constructs a trigger procedure that takes as input an update \(\mathsf {\delta {R}}\) and implements the maintenance schema of the corresponding delta view tree. This procedure also maintains all materialized views needed for the given update workload.

A bulk of updates to several relations is handled as a sequence of updates, one per relation. Update sequences can also happen when updating a relation \(\textsf{R}\) that occurs several times in the query. The instances representing the same relation are at different leaves in the delta tree and lead to changes along multiple leaf-to-root paths.

6 Factorizable updates

Our focus so far has been on supporting updates represented by delta relations. We next consider an alternative approach that decomposes a delta relation into a union of factorizable relations. The cumulative size of the decomposed relations can be much less than the size of the original delta relation. Also, the complexity of propagating a factorized update can be much lower than that of its unfactorized (listing) representation, since the factorization makes explicit the independence between query variables and enables optimizations of delta propagation such as pushing marginalization past joins. Besides the factorized view computation, this is the second instance where F-IVM exploits factorization.

Factorizable updates arise in many domains such as linear algebra and machine learning. Section 8 demonstrates how our framework can be used for the incremental evaluation of matrix chain multiplication, recovering prior work on this [44]. Matrix chain computation can be phrased in our language of joins and aggregates, where matrices are binary relations. Changes to one row/column in an input matrix may be expressed as a product of two vectors. In general, an arbitrary update matrix can be decomposed into a sum of rank-1 matrices, each of them expressible as products of vectors, using low-rank tensor decomposition methods [33, 61].

Example 9

Arbitrary relations can be decomposed into a union of factorizable relations. The relation \({{\textsf{R}}}{[{ A,B }]}\) \(= \{(a_i,b_j) \rightarrow 1\mid i\in [n],j\in [m]\}\) can be decomposed as \({{\mathsf {R_1}}}{[{ A }]}\otimes {{\mathsf {R_2}}}{[{ B }]}\), where \({{\mathsf {R_1}}}{[{ A }]}=\{(a_i) \rightarrow 1\mid i\in [n]\}\) and \({{\mathsf {R_2}}}{[{ B }]}=\{(b_j) \rightarrow 1\mid j\in [m]\}\). We thus reduced a relation of size nm to two relations of cumulative size \(n+m\). If \(\textsf{R}\) were a delta relation, the delta views on top of it would now be expressed over \({{\mathsf {R_1}}}{[{ A }]}\otimes {{\mathsf {R_2}}}{[{ B }]}\) and their computation can be factorized as done for queries in Sect. 4. Product decomposition of relations can be done in linearithmic time in both the number of variables and the size of the relation [49].

Consider now \({{\mathsf {R'}}}{[{ A,B }]}\) \(=\) \({{\textsf{R}}}{[{ A,B }]}\) \(\uplus \) \(\{(a_{n+1},b_j) \rightarrow 1\mid j\in [m-1]\}\) with \(\textsf{R}\) as above. We can decompose each of the two terms in \(\mathsf {R'}\) similarly to \(\textsf{R}\), yielding overall \(n+2m\) values instead of \(nm+m-1\). A different decomposition with \(n+m+3\) values is given by a factorizable over-approximation of \(\mathsf {R'}\) compensated by a small product with negative payload: \(\{(a_i)\rightarrow 1\mid i\in [n+1]\}\otimes \{(b_j)\rightarrow 1\mid j\in [m]\}\uplus \{(a_{n+1})\rightarrow 1\}\otimes \{(b_m)\rightarrow -1\}\). \(\square \)

The Optimize method used in the delta view tree algorithm in Fig. 7 exploits the distributivity of join \(\otimes \) over marginalization \(\textstyle \bigoplus _{X}\) to push the latter past the former and down to the views with variable X. This optimization is reminiscent of pushing aggregates past joins in databases and variable elimination in probabilistic graphical models [3]. In case the delta views express Cartesian products, then they are not materialized but instead kept factorized.

Example 10

Consider the query \(\textsf{Q}\) from Example 7 and its view tree in Fig. 3. In the delta view tree derived for updates to \(\textsf{S}\), the top-level delta is computed as:

A single-tuple update \(\mathsf {\delta {S}}\) binds variables A, C, and E, and computing \(\mathsf {\delta {V^{@A}_{RST}}}\) requires \({\mathcal {O}}(1)\) lookups in \(\mathsf {V^{@D}_{T}}\) and \(\mathsf {V^{@B}_{R}}\). An arbitrary-sized update \(\mathsf {\delta {S}}\) can then be processed in \({\mathcal {O}}(|\mathsf {\delta {S}}|)\) time.

Assume now that \(\mathsf {\delta {S}}\) is factorizable as \({{\mathsf {\delta {S}}}}{[{ A,C,E }]} = {{\mathsf {\delta {S_{A}}}}}{[{ A }]} \otimes {{\mathsf {\delta {S_{C}}}}}{[{ C }]} \otimes {{\mathsf {\delta {S_{E}}}}}{[{ E }]}\). In the construction of the delta view tree, the Optimize method exploits this factorization to push the marginalization past joins at each variable; for example, the delta at E becomes:

We also transform the top-level delta into a product of three views:

The computation time for this delta is proportional to the sizes of the three views representing the update: \({\mathcal {O}}(\min (|\mathsf {V^{@B}_{R}}|, {|\mathsf {\delta {S_{A}}}|}) + \min (|\mathsf {V^{@D}_{T}}|, |\mathsf {\delta {S_{C}}}|) + |\mathsf {\delta {S_{E}}}|)\). \(\square \)

7 F-IVM for special query classes

This section shows how F-IVM maintains free-connex (\(\alpha \)-)acyclic queries [27] and q-hierarchical queries [9]. The analysis for these queries is refined into: (i) the preprocessing phase, where the view tree is constructed; (ii) the enumeration phase, where we present the query result one tuple at a time; and (iii) the update phase, where we update the view tree. The following data complexityFootnote 2 claims assume that the ring operations require constant time; otherwise, the complexity results stated in this section have an extra multiplying factor to account for the complexity of the ring operations.

Theorem 1

Let a query \(\textsf{Q}\) and a database of size N.

F-IVM can maintain \(\textsf{Q}\) with O(N) preprocessing, O(1) enumeration delay, and O(N) single-tuple update in case \(\textsf{Q}\) is free-connex acyclic.

F-IVM can maintain \(\textsf{Q}\) with O(N) preprocessing, O(1) enumeration delay, and O(1) single-tuple update in case \(\textsf{Q}\) is q-hierarchical.

Remark. F-IVM has a special treatment for cyclic queries. Whereas for acyclic join queries the size of each view is asymptotically upper-bounded by the size of the query result, for cyclic queries views may be larger than the query result. In prior work [46], we show how to reduce the size of intermediate views for cyclic queries by adding indicator projections [3] to view trees. Such projections do not affect the query result but can constrain views (e.g., create cycles) and bring asymptotic savings in space and time. To decide which indicator projections to use, we apply a variant of the GYO reduction [7] that discovers cyclic parts in the query. \(\square \)

7.1 Free-connex acyclic queries

We first introduce the class of free-connex acyclic queries and then explain how F-IVM maintains them.

Definition 3

([10, 65]) A join tree for a query is a tree, where each node is a relation and if any two nodes have variables in common, then all nodes along the path between them also have these variables.

A query is (\(\alpha \)-)acyclic if it admits a join tree. A query is free-connex acyclic if it is acyclic and remains acyclic after adding a new relation whose schema consists of the free variables of the query.

Example 11

Consider the query \({{\textsf{Q}}}{[{ A,B,C }]} = \textstyle \bigoplus _{D}\textstyle \bigoplus _{E}\) \({{\textsf{R}}}{[{ A,B }]} \otimes {{\textsf{S}}}{[{ A,C,E }]} \otimes {{\textsf{T}}}{[{ C,D }]}\). A possible join tree for \(\textsf{Q}\) is \({{\textsf{R}}}{[{ A,B }]} - {{\textsf{S}}}{[{ A,C,E }]} - {{\textsf{T}}}{[{ C,D }]}\), where “−" denotes the parent–child relationship. Hence, \(\textsf{Q}\) is acyclic.

Consider the triangle query \({{\mathsf {Q_{\vartriangle }}}}{[{ ~ }]} = \textstyle \bigoplus _{A}\textstyle \bigoplus _{B}\textstyle \bigoplus _{C}\) \({{\textsf{R}}}{[{ A,B }]} \otimes {{\textsf{S}}}{[{ B,C }]} \otimes {{\textsf{T}}}{[{ A,C }]}\). A possible tree built from the relations of \(\mathsf {Q_{\vartriangle }}\) is \({{\textsf{R}}}{[{ A,B }]} - {{\textsf{S}}}{[{ B,C }]} - {{\textsf{T}}}{[{ A,C }]}\). The variable A occurs in the first and last relations but not in the middle relation; thus, this tree is not a join tree for \(\mathsf {Q_{\vartriangle }}\). One can show that any tree built from the relations of \(\mathsf {Q_{\vartriangle }}\) is not a join tree. Hence, \(\mathsf {Q_{\vartriangle }}\) is not acyclic.

The tree \({{\textsf{R}}}{[{ A,B }]} - {{\textsf{U}}}{[{ A,B,C }]} - {{\textsf{S}}}{[{ A,C,E }]} - {{\textsf{T}}}{[{ C,D }]}\) is a join tree of \(\textsf{Q}\) extended with the relation U whose schema consists of the free variables of \(\textsf{Q}\). Hence, \(\textsf{Q}\) is free-connex acyclic. Consider now the variant \(\mathsf {Q'}\) of \(\textsf{Q}\) where only the variables B and C are free. Adding a fresh relation \(U'\) with schema (B, C) to \(\mathsf {Q'}\) turns it into a cyclic query \(\mathsf {Q''}\) that does not admit a join tree. \(\square \)

We next detail how F-IVM achieves the complexity from Theorem 1 for a free-connex acyclic query \(\textsf{Q}\).

Preprocessing. In the preprocessing phase, we create a view tree that compactly represent the result of \(\textsf{Q}\). Given a variable order, the function \(\tau \) in Fig. 4 constructs a view tree where the root view consists of all tuples over the free variables. While this view allows for constant enumeration delay, it may require superlinear computation and maintenance time as the free variables may originate from different input relations. We would like to avoid this super-linearity.

To keep the preprocessing and update times linear, we proceed as follows. We construct view trees such that the query result is kept and maintained factorized over several views at the top of the view tree. This approach still allows for constant enumeration delay, using a known enumeration approach for factorized representations [52]. We construct the view tree following a free-top variable order of the query \(\textsf{Q}\) and materialize a view over the schema \(\{X\} \cup \textit{dep}(X)\) for each variable X in the variable order. A key insight is that every free-connex acyclic query admits a free-top variable order where for each variable X, the set \(\{X\} \cup \textit{dep}(X)\) is covered by the variables of a single relation [8]. This ensures linear preprocessing and maintenance time for all views in view trees following such variable orders.

The function \(\nu \) in Fig. 9 constructs a view tree for a given free-top variable order of a free-connex query. If a variable X has at least two children, it proceeds as follows. It creates at X a view \(\mathsf {H^{@X}_{rels}}\) with schema \(\{X\} \cup \textit{dep}(X)\) that joins the child views of X. If X has at least one sibling, it additionally creates a view \(\mathsf {V^{@X}_{rels}}\) on top of \(\mathsf {H^{@X}_{rels}}\) obtained from \(\mathsf {H^{@X}_{rels}}\) by marginalizing X. The first view enables efficient enumeration of X-values in the query result given a value tuple over \(\textit{dep}(X)\); the second view enables efficient updates coming from the subtrees rooted at siblings of X. If X has only one child, the creation of the view \(\mathsf {H^{@X}_{rels}}\) is not needed for efficient enumeration. In this case, the function creates a view \(\mathsf {V^{@X}_{rels}}\) marginalizing X in the child view if X has siblings.

Example 12

Consider the free-connex acyclic query \(\textsf{Q}\) from Ex. 11. Figure 3 gives a free-top variable order \(\omega \) for \(\textsf{Q}\). Figure 10 (left) depicts the view tree \(\nu (\omega )\). The view \(\mathsf {H_{ST}^{@C}}\) can be computed by iterating over the (A, C)-tuples in \(\mathsf {V_{S}^{@E}}\) and multiplying the payload of each such tuple with the payload of the matching C-value in \(\mathsf {V_{T}^{@D}}\). Since each such (A, C)-tuple must be in \(\textsf{S}\), we need to iterate over only linearly many such tuples. Similarly, the view \(\mathsf {H_{RST}^{@A}}\) can be computed by iterating over the A-values in one of the child views and doing lookups in the other child view to retrieve the payloads. For the computation of both views \(\mathsf {H_{ST}^{@C}}\) and \(\mathsf {H_{RST}^{@A}}\), we iterate over linearly many tuples and do a constant-time lookup for each such tuple. All other views are obtained by marginalizing one variable from their child views. Hence, all views can be computed in linear time. \(\square \)

Updates. The construction of delta view trees under single-tuple updates is exactly as described by the function \(\varDelta \) in Fig. 7 (Sect. 5). Since the view trees can be constructed in linear time, the delta view trees can also be constructed in linear time.

Example 13

Continuing Ex. 12, we consider a single-tuple update \(\delta \textsf{T}[c,d]\) to relation \(\textsf{T}\). Figure 10 depicts the original view tree (left) and the delta view tree for updates to \(\textsf{T}\) (right). The difference is that along the path from \(\textsf{T}\) to the root, we now have delta views. The delta view \(\delta \mathsf {V^{@D}_{T}}\) results from \(\delta {{\textsf{T}}}{[{ c,d }]}\) by marginalizing D, which takes constant time since D is fixed to the constant d. To compute \(\delta \mathsf {H^{@C}_{ST}}\), we iterate over all A-values paired with c in \(\mathsf {V^{@E}_{S}}\). This operation takes linear time with the support of an index on variable C built for this view. We obtain \(\delta \mathsf {V^{@C}_{ST}}\) from \(\delta \mathsf {H^{@C}_{ST}}\) by marginalizing the variable C. This requires constant time because C is fixed to the constant c. The top delta view \(\delta \mathsf {H^{@A}_{RST}}\) is obtained by intersecting the two child views, e.g., by iterating over \(\delta \mathsf {V^{@C}_{ST}}\) and doing lookups in \(\mathsf {V^{@B}_R}\). This requires linear time. We conclude that the delta views can be computed in linear time. \(\square \)

Enumeration. Consider a view tree \(\tau \) constructed using the function \(\nu \) from Fig. 9 for a free-top variable order of a query \(\textsf{Q}\). We first describe how to enumerate with constant delay the distinct tuples in the result of \(\textsf{Q}\) using \(\tau \). Then, we explain how to compute the payload of each result tuple in constant time.

Let \(X_1, \ldots , X_n\) be an ordering of the free variables of the query that is compatible with a top-down traversal of the free-top variable order. We use the views \(\mathsf {V_1}, \ldots , \mathsf {V_n}\) to enumerate the distinct tuples in the result of \(\textsf{Q}\), where \(\mathsf {V_j}\) is \(\mathsf {H^{@X_j}_{rels}}\) if \(X_j\) has at least two children and it is the child view of \(X_j\) otherwise. We retrieve from \(\mathsf {V_1}\) the first \(X_1\)-value in the result. When we arrive at a view \(\mathsf {V_j}\) with \(j > 1\), we have already fixed the values of the variables above \(X_j\) in the variable order. We retrieve from \(\mathsf {V_j}\) the first \(X_j\)-value paired with these values. Once the values over all free variables are fixed, we have a complete result tuple that we output. Then, we iterate over the remaining distinct \(X_n\)-values in \(\mathsf {V_n}\) paired with the fixed values over the ancestor variables of \(X_n\) and output a new tuple for each such value. After all \(X_n\)-values are exhausted, we backtrack, i.e., we move to the next \(X_{n-1}\)-value and restart the iteration of the matching \(X_n\)-values, and so on.

Given a complete tuple \(\textbf{t} \) constructed from the view tree \(\tau \), we use the function payload from Fig. 11 to compute its payload. The function first checks whether the schema of the root view is exactly the schema \(\textsf {sch}(\textbf{t})\) of \(\textbf{t} \). If so, it returns the payload of \(\textbf{t} \) in this view. Otherwise, the root view covers only a subset of the schema of the tuple. In this case, the function recursively computes the payload for each subtree \(\tau _i\) of the root view and the projection of \(\textbf{t} \) onto the variables in \(\tau _i\). The final payload is the product of the payloads returned for the subtrees. The returned payloads are from the lowest views in the view tree whose schemas consist of free variables only. If all variables are free, then these lowest views are the input relations themselves.

Remark 1

The enumeration procedure needs the payloads of the lowest views whose schemas consist of free variables. The payloads from the views above these views thus need not be maintained, beyond keeping track of the multiplicities of each of their tuples. The maintenance of multiplicities is important for correctness, as it tells whether a tuple is to be removed from a view or still has at least one possible derivation from the input. For expensive payloads, such as those introduced in Sect. 8, it is therefore more efficient to only maintain them for the views from the input relations up to the views used to compute the payloads. Their ancestor views only need maintenance of tuple multiplicities. \(\square \)

Example 14

We enumerate the distinct result tuples of the query \({{\textsf{Q}}}{[{ A,B,C }]}\) from Ex. 11 using the view tree in Fig. 10 (left). We iterate with constant delay over the A-values in \({{\mathsf {H^{@A}_{RST}}}}{[{ A }]}\). For each such A-value a, we iterate with constant delay over the B-values in \({{\textsf{R}}}{[{ a,B }]}\) and over the C-values in \({{\mathsf {H^{@C}_{ST}}}}{[{ a,C }]}\). Each triple (a, b, c) obtained in this way is a result tuple of \(\textsf{Q}\). Its payload is \({{\textsf{R}}}{[{ a,b }]} \cdot {{\mathsf {H^{@C}_{ST}}}}{[{ a,c }]}\). \(\square \)

Remark 2

To efficiently support enumeration and updates, we may need several indices for the views in a view tree for a free-connex acyclic query. Each view (and input relation) in the view tree in Fig. 10 (left) needs an index that can retrieve the payload for a given tuple of values over its variables. This is a primary index. For (top-down) enumeration, we may also need a secondary index per view to lookup for tuples that have as prefix a tuple of values over the variables shared with its parent view. Yet in case of some views, we may also need a tertiary index to support updates, which are propagated bottom-up. For instance, the view \({{\mathsf {V^{@E}_{S}}}}{[{ A,C }]}\) requires: a primary index to retrieve the payload for each (A, C)-tuple; a secondary index to enumerate the C-values paired with a given A-value fixed by the parent view; and a tertiary index to obtain all A-values paired with a given C-value c fixed by the delta of its left sibling \(\delta {{\mathsf {V^{@D}_{T}}}}{[{ c }]}\). All other views only require primary and secondary indices and no tertiary index. \(\square \)

7.2 Q-hierarchical queries

Q-hierarchical queries form a strict subclass of the free-connex acyclic queries. They admit linear preprocessing time, constant update time, and constant enumeration delay [9]. Under widely held complexity theoretic assumptions, there is no algorithm that achieves constant update time and enumeration delay for queries that are not q-hierarchical and have no repeating relation symbols [9]. F-IVM recovers the aforementioned complexities using exactly the same approach as for free-connex acyclic queries detailed in Sect. 7.1. This directly implies linear preprocessing time and constant enumeration delay. Constant update time follows from the following observation. Every q-hierarchical query admits a free-top variables order, where each root-to-leaf path consists of variables that represent precisely the schema of a relation in the query. A single-tuple update to that relation then sets all these variables to constants, effectively making each delta view along that path of constant size. Our view tree construction also ensures that the computation of each delta view only requires one constant-time lookup per child view.

We first define q-hierarchical queries and then show how F-IVM achieves constant-time update for them. For a variable X in a query, we denote by \(\textsf {rels}(X)\) the set of relations that contain X in their schema.

Definition 4

([9, 62]) A query is hierarchical if for any two variables X and Y, it holds \(\textsf {rels}(X) \subseteq \textsf {rels}(Y)\), \(\textsf {rels}(Y) \subseteq \textsf {rels}(X)\), or \(\textsf {rels}(X) \cap \textsf {rels}(Y) = \emptyset \).

A query is q-hierarchical if it is hierarchical and for any two variables X and Y, it holds: if \(\textsf {rels}(X) \supset \textsf {rels}(Y)\) and Y is free, then X is free.

Every q-hierarchical query admits a canonical free-top variable order, where (i) each root-to-leaf path consists of variables that form the schema of a relation and (2) no bound variable is above a free variable [28]. We can construct such a variable order in polynomial time in the query size as follows. We start with the empty variable order. For each relation R, we add to the variable order a root-to-leaf path made up of R’s variables ordered as follows: a variable X is before a variable Y if (1) \(\textsf {rels}(X) \supset \textsf {rels}(Y)\) or (2) \(\textsf {rels}(X) \not \supset \textsf {rels}(Y)\), \(\textsf {rels}(X) \not \subset \textsf {rels}(Y)\), X is free, and Y is bound.

(Left) canonical free-top variable order of the query \(\mathsf {Q_h}\) from Ex. 15; (right) Corresponding view tree

Example 15

The free-connex acyclic query \({{\textsf{Q}}}{[{ A,B,C }]}\) \(=\) \(\textstyle \bigoplus _{D}\textstyle \bigoplus _{E}\) \({{\textsf{R}}}{[{ A,B }]} \otimes {{\textsf{S}}}{[{ A,C,E }]} \otimes {{\textsf{T}}}{[{ C,D }]}\) from Ex. 11 is not hierarchical: the sets \(\textsf {rels}(A)= \{\textsf{R}, \textsf{S}\}\) \(\textsf {rels}(C)= \{\textsf{S}, \textsf{T}\}\) are not disjoint, nor one is included in the other. By extending the schema of \(\textsf{T}\) with A, we obtain the q-hierarchical query \({{\mathsf {Q_h}}}{[{ A,B,C }]}\) \(=\) \(\textstyle \bigoplus _{D}\textstyle \bigoplus _{E}\) \({{\textsf{R}}}{[{ A,B }]} \otimes {{\textsf{S}}}{[{ A,C,E }]} \otimes {{\textsf{T}}}{[{ A,C,D }]}\) whose canonical free-top variable order is given in Fig. 12 (left). The variant of the query, where variable A is bound, is hierarchical but not q-hierarchical because the set \(\textsf {rels}(A)= \{\textsf{R}, \textsf{S}, \textsf{T}\}\) for the bound variable A is a strict superset of the set \(\textsf {rels}(B)= \{\textsf{R}\}\) for the free variable B. \(\square \)

We next exemplify how F-IVM achieves constant-time update for a q-hierarchical query.

Example 16

Fig. 12 shows the view tree (right) modeled on the canonical free-top variable order (left) of the q-hierarchical query \(\mathsf {Q_h}\) in Ex. 15. Figure 13 shows the delta view trees under single-tuple updates to \(\textsf{R}\) and \(\textsf{T}\).

In the delta view tree for \(\textsf{R}\), the delta view \(\delta \mathsf {H^{@A}_{RST}}\) can be computed by a constant-time lookup in \(\mathsf {V^{@C}_{ST}}\). In the delta view tree for \(\textsf{T}\), the delta views \(\delta \mathsf {H^{@C}_{ST}}\) and \(\delta \mathsf {H^{@A}_{RST}}\) can be computed by constant-time lookups in \(\mathsf {V^{@E}_{S}}\) and \(\mathsf {V^{@B}_{R}}\), respectively. All other delta views are computed by marginalizing a variable with a single value. \(\square \)

Delta view trees derived from the view tree in Fig. 12 for single-tuple updates to relations \(\textsf{R}\) (left) and \(\textsf{T}\) (right)

Remark 3

Q-hierarchical queries admit view trees whose views only need primary indices to support payload lookup and updates and possibly secondary indices to support enumeration. Consider the view tree in Fig. 12. Enumeration proceeds top-down: We iterate over the A-values in the top view and for each such value a, we look up in \({{\textsf{R}}}{[{ a,B }]}\) to enumerate over all the B-values paired with a, and also look up into \({{\mathsf {H^{@C}_{ST}}}}{[{ a,C }]}\) to enumerate over all C-values paired with a. All these look-ups require primary or secondary indices.

Figure 13 shows the delta view trees for single-tuple updates to \(\textsf{R}\) and \(\textsf{T}\). To compute a delta view along the path from the delta relation to the root of the delta view tree, we either perform a projection on a delta view or a lookup in the primary index of a sibling view (so with all keys of the index set to constants). \(\square \)

7.3 Queries under functional dependencies

Non-hierarchical queries may become hierarchical under functional dependencies (fds) [48].

Given a set \(\varSigma \) of fds, we denote by \(\textsf {CLOSURE}_\varSigma ({\mathcal {S}})\) the closure of the set \({\mathcal {S}}\) of variables under \(\varSigma \) [2]. For instance, given the fds \(\varSigma =\{A\rightarrow D;BD \rightarrow E\}\), we have \(\textsf {CLOSURE}_\varSigma (\{A,B,C\})=\{A,B,C,D,E\}\).

Definition 5

(adapted from [48]) Given a set \(\varSigma \) of fds and a query \({{\textsf{Q}}}{[{ {\mathcal {S}} }]} = \textstyle \bigoplus _{{\mathcal {B}}} {{\mathsf {R_1}}}{[{ {\mathcal {S}}_1 }]}\otimes \cdots \otimes {{\mathsf {R_n}}}{[{ {\mathcal {S}}_n }]}\), the \(\varSigma \)-reduct of \(\textsf{Q}\) under \(\varSigma \) is:

The \(\varSigma \)-reduct of a query is thus another query, where the schema of each relation is extended to include all variables in the closure of this schema under \(\varSigma \). Since the added variables are functionally determined by the original schema, they do not add more information. So, we could extend these schemas and the underlying database without increasing the number of tuples in the relations. For any database D with fds \(\varSigma \) and a query \(\textsf{Q}\), the query result \(\textsf{Q}(D)\) is the same as the result of its \(\varSigma \)-reduct over the extended database. The benefit of this rewriting is that queries may admit free-connex acyclic or even q-hierarchical \(\varSigma \)-reducts. We need not physically extend the database to reap this benefit. Instead, we use the \(\varSigma \)-reduct of \(\textsf{Q}\) to infer a free-top variable order or even a canonical free-top variable order for \(\textsf{Q}\) in case the \(\varSigma \)-reduct is free-connex acyclic or q-hierarchical, respectively. Using this variable order, we construct a view tree for \(\textsf{Q}\) that enjoys the preprocessing, update, and enumerate times as for its \(\varSigma \)-reduct.

Theorem 1 can be generalized to account for fds.

Theorem 2

Let a query \(\textsf{Q}\) and a database of size N and with a set \(\varSigma \) of functional dependencies.

F-IVM can maintain \(\textsf{Q}\) with O(N) preprocessing, O(1) enumeration delay, and O(N) single-tuple updates in case the \(\varSigma \)-reduct of \(\textsf{Q}\) is free-connex acyclic.

F-IVM can maintain \(\textsf{Q}\) with O(N) preprocessing, O(1) enumeration delay, and O(1) single-tuple updates in case the \(\varSigma \)-reduct of \(\textsf{Q}\) is q-hierarchical.

From left to right: hypergraph of the query \(\textsf{Q}\) and its \(\varSigma \)-reduct for \(\varSigma = \{B \rightarrow C, C \rightarrow D\}\) from Ex. 17; canonical variable order \(\omega \) for \(\textsf{Q}\); view tree modeled on \(\omega \)

Delta view trees derived from the view tree in Fig. 14 for single-tuple updates to \(\textsf{R}\), \(\textsf{S}\), and \(\textsf{T}\) (left to right). The values b and c functionally determine c and d, respectively

Example 17

Consider \(\varSigma = \{B \rightarrow C, C \rightarrow D\}\) and the free-connex acyclic but not hierarchical query

The \(\varSigma \)-reduct of \(\textsf{Q}\) is

Figure 14 depicts the hypergraphs of \(\textsf{Q}\) and \(\mathsf {Q'}\) (left), a free-top variable order for \(\textsf{Q}\) that is also canonical for \(\mathsf {Q'}\) (middle), and the view tree for \(\textsf{Q}\) modeled on this variable order (right). Since \(\textsf{Q}\) is free-connex acylic, we can compute the view tree in linear time and enumerate the result tuples of \(\textsf{Q}\) with constant delay, as explained in Sect. 7.1. We next describe how to achieve constant-time update by exploiting the fds. Figure 15 shows the delta view trees obtained from the view tree for \(\textsf{Q}\) for single-tuple updates to \(\textsf{R}\), \(\textsf{S}\), and \(\textsf{T}\).

Consider first the update \(\delta {{\textsf{R}}}{[{ a,b }]}\) to relation \(\textsf{R}\). The delta view \(\delta {{\mathsf {V^{@A}_{R}}}}{[{ b }]}\) is just a projection of the update tuple. The delta view \(\delta {{\mathsf {H^{@B}_{RS}}}}{[{ b,c }]}\) requires a lookup in \({{\textsf{S}}}{[{ B,C }]}\) for \(B=b\). In general, there may be many C-values paired with b. However, under the fd \(B\rightarrow C\), there is at most one C-value c paired with b. Hence, the construction of this delta view takes constant time. Similarly, the delta view \(\delta {{\mathsf {H^{@C}_{RST}}}}{[{ c,d }]}\) requires a lookup in \({{\textsf{T}}}{[{ C,D }]}\) for \(C=c\). Again, there may be many D-values paired with c, yet under the fd \(C\rightarrow D\), there is at most one D-value d paired with c. Hence, the construction of this delta view takes constant time, too.

Similar reasoning applies to the update \(\delta {{\textsf{S}}}{[{ b,c }]}\). To compute the delta view \(\delta {{\mathsf {H^{@B}_{RS}}}}{[{ c,b }]}\), we need a constant-time lookup in the view \({{\mathsf {V^{@A}_R}}}{[{ B }]}\) with \(B=b\). Computing \(\delta {{\mathsf {H^{@C}_{RST}}}}{[{ c,d }]}\) takes constant time due to the fd \(C\rightarrow D\), as with updates to \(\textsf{R}\). Processing the update \(\delta {{\textsf{T}}}{[{ c,d }]}\) takes constant time without exploiting the fds: it only requires a lookup in the view \({{\mathsf {V^{@B}_{RS}}}}{[{ C }]}\) with \(C=c\). \(\square \)

8 Applications

This section highlights four applications of F-IVM, including learning regression models, building Chow-Liu trees, computing listing or factorized representations of the results of conjunctive queries, and multiplying a sequence of matrices. They behave the same in the key space, yet differ in the rings used to define the payloads.

8.1 Covariance matrix and linear regression

We next introduce the covariance matrix ring used for training linear regression models.

Linear Regression. Consider a training dataset that consists of k samples with \((X_i)_{i\in [m-1]}\) features and a label \(X_m\) arranged into a design matrix \(\textbf{M}\) of size \(k \times m\); in our setting, this design matrix is the result of a join query. The goal of linear regression is to learn the parameters \(\varvec{\theta } = [\theta _1 \ldots \theta _m]^{\text {T}}\) of a linear functionFootnote 3\(f(X_1,...,X_{m-1}) = \sum _{i\in [m-1]}\theta _iX_i\) best satisfying \(\textbf{M} \varvec{\theta } \approx \textbf{0}_{k\times 1}\), where \(\textbf{0}_{k\times 1}\) is the zero matrix of size \(k \times 1\).

We can solve this optimization problem using batch gradient descent. This method iteratively updates the model parameters in the direction of the gradient to decrease the squared error loss and eventually converge to the optimal value. Each convergence step iterates over the entire training dataset to update the parameters, \(\varvec{\theta }:= \varvec{\theta }- \alpha \textbf{M}^{\text {T}}{} \textbf{M}\varvec{\theta } \), where \(\alpha \) is an adjustable step size. The complexity of each step is \({\mathcal {O}}(mk)\). The covariance matrix \(\textbf{M}^{\text {T}}{} \textbf{M}\) quantifies the degree of correlation for each pair of features (or feature and label) in the data. Its computation can be done once for all convergence steps [58]. This is crucial for performance in case \(m \ll k\) as each iteration step now avoids processing the entire training dataset and takes time \({\mathcal {O}}(m^2)\).

We next show how to compute the covariance matrix assuming all features have continuous domains; we consider the case with categorical features later on.

The covariance matrix \(\textbf{M}^{\text {T}}{} \textbf{M}\) accounts for the interactions SUM(X*Y) of variables X and Y with continuous domains. We can factorize their computation over training datasets defined by arbitrary join queries [58]. We can further share their computation by casting the covariance matrix computation as the computation of one compound aggregate. This compound aggregate is a triple \((c,\varvec{s},\varvec{Q})\), where \(c \) is the number of tuples in the training dataset (size k of the design matrix), \(\varvec{s} \) is an \(m\times 1\) matrix (or vector) with one sum of values per variable, and \(\varvec{Q} \) is an \(m\times m\) matrix of sums of products of values for any two variables. The covariance matrix computation can be captured by a ring.

Definition 6

Fix a ring \((\textbf{D}, +, *, \varvec{0}, \varvec{1})\) and \(m \in {\mathbb {N}}\). Let \({\textsf {C}}\) denote the set of triples \((\textbf{D}, \textbf{D}^{m}, \textbf{D}^{m \times m})\), \(\varvec{0}^{{\textsf {C}}} = (\varvec{0}, \varvec{0}_{m \times 1}, \varvec{0}_{m \times m})\), and \(\varvec{1}^{{\textsf {C}}} = (\varvec{1}, \varvec{0}_{m \times 1}, \varvec{0}_{m \times m})\), where \(\varvec{0}_{m \times n}\) is an \(m \times n\) matrix with all zeros from \(\textbf{D} \). For \(a = (c _a, \varvec{s} _a, \varvec{Q} _a) \in {{\textsf {C}}}\) and \(b = (c _b, \varvec{s} _b, \varvec{Q} _b) \in {{\textsf {C}}}\), define the operations \(+^{{\textsf {C}}}\) and \(*^{{\textsf {C}}}\) over \({{\textsf {C}}}\) as:

using matrix addition, scalar multiplication, and matrix multiplication over \(\textbf{D} \). We refer to \(({\textsf {C}}, +^{{\textsf {C}}}, *^{{\textsf {C}}}, \varvec{0}^{\textsf {C}}, \varvec{1}^{\textsf {C}})\) as the covariance structure of degree m over \(\textbf{D} \).

Theorem 3

For \(m\in {\mathbb {N}}\) and a ring \(\textbf{D} \), the covariance structure of degree m over \(\textbf{D} \) forms a commutative ring.

Definition 7

The continuous covariance ring of degree m is the covariance structure of degree m over \({\mathbb {R}}\).

We next show how to use this ring to compute the covariance matrix over a training dataset defined by a join with relations \((\mathsf {R_i})_{i\in [n]}\) over variables \((X_j)_{j\in [m]}\). The payload of each tuple in a relation is the identity \(\varvec{1}^{{\textsf {C}}}\) from the continuous covariance ring of degree m. The query computing the covariance matrix is:

For each \(X_j\)-value x, the lifting function is \(g_{X_j}(x) = (1, \varvec{s}, \varvec{Q})\), where \(\varvec{s} \) is an \(m \times 1\) vector with all zeros except the value of x at position j, i.e., \(\varvec{s} _j=x\), and \(\varvec{Q} \) is an \(m \times m\) matrix with all zeros except the value \(x^2\) at position (j, j): \(\varvec{Q} _{(j,j)}=x^2\).

Example 18

We show how to compute the covariance matrix using the join and view tree from Fig. 3 and the database from Fig. 6. We assume alphabetical order of the five variables in the covariance matrix. The leaf relations \(\textsf{R}\), \(\textsf{S}\), and \(\textsf{T}\) map tuples to \(\varvec{1}^{{\textsf {C}}}\) from the continuous covariance ring of degree 5.

In the view \(\mathsf {V^{@D}_{T}}\), each D-value d is lifted to a triple \((1, \varvec{s}, \varvec{Q})\), where \(\varvec{s} \) is a \(5\times 1\) vector with one non-zero element \(\varvec{s} _4=d\), and \(\varvec{Q} \) is a \((5 \times 5)\) matrix with one non-zero element \(\varvec{Q} _{(4,4)} = d^2\). Those covariance triples with the same key c are summed up, yielding:

The views \(\mathsf {V^{@B}_{R}}\) and \(\mathsf {V^{@E}_{S}}\) are computed similarly. The view \(\mathsf {V^{@C}_{ST}}\) joins \(\mathsf {V^{@D}_{T}}\) and \(\mathsf {V^{@E}_{S}}\) and marginalizes C. For instance, the payload for the key \(a_2\) is:

The root view \(\mathsf {V^{@A}_{RST}}\) maps the empty tuple to the ring element \(\sum _{i\in [2]}{{\mathsf {V^{@B}_{R}}}}{[{ a_i }]} *^{{\textsf {C}}} {{\mathsf {V^{@C}_{ST}}}}{[{ a_i }]} *^{{\textsf {C}}} g_{A}(a_i)\). This payload has aggregates for the entire join result: the count of tuples in the result, the vector with one sum of values per variable, and the covariance matrix. \(\square \)

Linear Regression with Categorical Variables. Real-world datasets consists of both continuous and categorical variables. The latter take on values from predefined sets of possible values (categories). It is common practice to one-hot encode categorical variables as indicator vectors. This encoding can blow up the size of the covariance matrix and increase its sparsity.

Instead of blowing up the covariance matrix with one-hot encoding, we can capture the interactions between continuous and categorical variables as group-by queries: SUM(X) group by Y, when X is continuous and Y is categorical, and SUM(1) group by X and Y, when X and Y are categorical. Using the group-by queries ensures a compact representation of such interactions by considering only those categories and interactions that exist in the join result. We can encode those interactions as values from the relational data ring, introduced next.

Definition 8

Let \({\mathbb {F}}[{\mathbb {R}}]\) denote the set of relations over the \({\mathbb {R}}\) ring, the zero \(\varvec{0}\) in \({\mathbb {F}}[{\mathbb {R}}]\) is the empty relation \(\{\}\), which maps every tuple to \(0\in {\mathbb {R}}\), and the identity \(\textbf{1}\) is the relation \(\{ () \rightarrow 1 \}\), which maps the empty tuple to \(1 \in {\mathbb {R}}\) and all other tuples to \(0 \in {\mathbb {R}}\). The structure \(({\mathbb {F}}[{\mathbb {R}}], \uplus , \otimes , \varvec{0}, \varvec{1})\) forms the relational data ring.Footnote 4

We generalize the continuous covariance ring from Definition 7 to uniformly treat continuous and categorical variables as follows: we use relations from the relational data ring as values in c, \(\varvec{s} \), and \(\varvec{Q} \) instead of scalars; we use union and join instead of scalar addition and multiplication; we use the empty relation \(\varvec{0}\) instead of the zero scalar. The operations \(+^{\textsf {C}}\) and \(*^{\textsf {C}}\) over triples \((c, \varvec{s}, \varvec{Q})\) remain unchanged.

Definition 9

The generalized covariance ring of degree m is the covariance structure of degree m over \({\mathbb {F}}[{\mathbb {R}}]\).

For clarity, we show the operations \(+^{\textsf {C}}\) and \(*^{\textsf {C}}\) of the generalized covariance ring \({\textsf {C}}\) of degree m.

where \(c = c' \uplus c''\), \(\varvec{s} _j = \varvec{s} '_{j} \uplus \varvec{s} ''_j\), \(\varvec{Q} _{(i,j)} = \varvec{Q} ''_{(i,j)} \uplus \varvec{Q} ''_{(i,j)}\);

where \(c = c' \otimes c''\), \(\varvec{s} _j = (c '' \otimes \varvec{s} '_{j}) \uplus (c ' \otimes \varvec{s} ''_j)\), and \(\varvec{Q} _{(i,j)} = (c '' \otimes \varvec{Q} '_{(i,j)}) \uplus (c ' \otimes \varvec{Q} ''_{(i,j)}) \uplus (\varvec{s} '_{i} \otimes \varvec{s} ''_{j}) \uplus (\varvec{s} ''_{i} \otimes \varvec{s} '_{j})\).

The lifting function \(g_{X_j}\) now depends on whether \(X_j\) is continuous or categorical. For each \(X_j\)-value x, \(g_{X_j}(x) = (\varvec{1}, \varvec{s}, \varvec{Q})\), where \(\varvec{1} = \{() \rightarrow 1\}\), \(\varvec{s}\) is an \(m\times 1\) vector with all \(\varvec{0}\)s except \(\varvec{s} _j = \{ () \rightarrow x \}\) if \(X_j\) is continuous and \(\varvec{s} _j =\{ x \rightarrow 1 \}\) otherwise, and \(\varvec{Q} \) is an \(m \times m\) matrix with all \(\varvec{0}\)s except \(\varvec{Q} _{(j,j)} = \{ () \rightarrow x^2 \}\) if \(X_j\) is continuous and \(\varvec{Q}_{(j,j)} = \{ x \rightarrow 1 \}\) otherwise.

Example 19

We compute the covariance matrix using the view tree and database from Ex. 18 assuming that \(C\) is categorical.