Abstract

The Mid-Atlantic region of the USA has experienced increasing annual precipitation amounts in recent decades, along with more frequent extreme events of greater magnitude. Unlike many US regions that have suffered increasing drought conditions from higher evapotranspiration demand, positive trends in the Mid-Atlantic accumulated precipitation are greater than the recent increases in reference evapotranspiration. The temporal correlation between precipitation events and soil moisture capacity is essential for determining how the nature of drought has changed in the region. This analysis has shown that soil moisture scarcity has declined in nine of ten subregions of the Mid-Atlantic that were analyzed from 1985 to 2019. Two algorithms were deployed to draw this conclusion: Climatol enabled the use of the FAO-56 Penman-Monteith equation on daily observation station data for which complete records were unavailable, and the second algorithm calculated soil moisture levels on a daily basis, more accurately capturing drought conditions than common methods using weekly or monthly summaries. Although the declining drought trends were not statistically significant, a result of more extreme events and higher evapotranspiration rates, the inclusion of direct data from an expanded set of locations provides greater clarity from the trends, allowing policymakers and landowners to anticipate changes in future Mid-Atlantic irrigation water demand.

1 Introduction

Climate change has exacerbated drought in many regions of the USA (Prein et al. 2016; Seager et al. 2018), a trend that is forecast to continue as temperatures further warm (Easterling et al. 2017; Gowda et al. 2018). Three different definitions of drought—meteorological, hydrological, and agricultural—although correlated, rely on different metrics and measure distinct impacts (Wehner et al. 2017). Meteorological drought arises from precipitation deficits, agricultural drought measures deficiencies in direct vegetative water supply, and hydrologic drought quantifies inadequate flow and volume in bodies of water. Large-scale climate models projecting changes in seasonal or annual precipitation totals must relate these simulations to other climatic conditions for stakeholders to understand the real-life implications of the results (Lehner et al. 2017; Collins et al. 2013). This analysis will describe how soil moisture levels have recently changed in the Mid-Atlantic region in the USA, quantifying trends in agricultural drought.

The Mid-Atlantic USA is known for its temperate climate with abundant precipitation falling throughout the year (Dupigny-Giroux et al. 2018). Before European settlement, the region was 95% forested (Stolte 2000), and roughly half of this land has been converted into agricultural plots (the majority of which are rain-fed), pasture, and urban areas. Most remaining forests are privately held and yield commercial timber (USDA 2012). In addition to the importance of crop and timber production for the US economy, the region houses the most heavily populated metropolitan area in the USA, the Nation’s largest and sixth-largest cities, and the seat of the federal government. Southern parts of the Mid-Atlantic, like the Virginia Tidewater, lie firmly in the Southeast, while northern areas of metropolitan New York City are considered to be part of New England.

While other areas that are highly susceptible to wildfire and water scarcity, such as the Western USA and Mediterranean region (Hoerling et al. 2012), have received greater attention than the Mid-Atlantic, capturing trends in climatic parameters that measure drought conditions and using the findings to estimate changes in water scarcity are consequential even for an area that is less vulnerable to extreme future drying (Berkowitz and Blanco 2019). In the “Scope” section, existing literature relating to trends in the Mid-Atlantic moisture levels will be summarized, and the central questions answered by this analysis will be outlined. This will be followed by a “Methodology” section in which two algorithms deployed in the analysis are introduced and described. The results are then discussed and relevant conclusions drawn.

2 Scope

A wide range of studies on changes in precipitation and other climatic factors affecting soil moisture levels in the Mid-Atlantic region has been conducted and provides context for this work. That research has found that the region has been experiencing higher average annual amounts of rainfall and frozen precipitation, indicating a wetter climate as defined in the meteorological context (Howarth et al. 2019). The magnitude of this increase depends on the period of study, selection of states, utilized datasets, and time series data methodology, but relatively large trends have been recorded. Walsh et al. (2014) found that the northeast region (an area that includes the northern two-thirds of the Mid-Atlantic) has experienced the largest overall growth in precipitation in the Continental USA, with the post-1991 period having 8% higher totals relative to the 1901–1960 timeframe. Other authors have used different methods to draw the same conclusion. Hayhoe et al. (2007) found an increase of 10 mm decade−1 when examining an earlier period (1900–1999) that covered New England and the northern Mid-Atlantic, while Kunkel et al. (2013) found a similar positive trend of 10.2 mm decade−1 when adding southern Mid-Atlantic states and extending the period of analysis to 2011. These results have been confirmed elsewhere (Horton et al. 2014).

Along with findings that the region has experienced enhanced precipitation, studies also have related the meteorological data to agriculturally defined dryness. Krakauer et al. (2019) found that a typical year in 2015 could expect 125 mm of additional precipitation relative to that same year at the start of the twentieth century and used the standardized precipitation evapotranspiration index (SPEI) to temporally relate how the amount of moisture hitting the surface compares to the amount transferred from the ground into the atmosphere. The authors used monthly SPEI trends estimated for gridded data to demonstrate a decrease in the frequency of long-duration drought in the northern Mid-Atlantic states, grouped with New England. Yet, hydrologic intensification was also evident by positive trends in SPEI variance. Therefore, reductions in agricultural drought intensity and duration were smaller than expected under the wetter climate if all other conditions had remained at status quo. Additional literature provided differing findings on soil dryness in the region, with some articles using data and methodology that yielded declines in drought severity (Apurv and Cai 2019) while other authors drew the opposite conclusion (Ahmadalipour et al. 2016). Using an alternative drought indicator, the Palmer Drought Severity Index (PDSI), Ficklin et al. (2015) found decreasing drought conditions, with the region wedged between wetter trends in New England and drying trends in the Southeast. The heterogeneity of these findings is unsurprising and can be attributed to the different assumptions described earlier (Frei et al. 2015).

Huang et al. (2017) demonstrated how the choice of data and timeframe can influence results by conducting concurrent analyses using three datasets on different timescales. Direct observation records were analyzed as well as values from two gridded sets; the obtained trends varied. The study also demonstrated that precipitation trend slopes became successively steeper as the analysis period incorporated more recent data and excluded observations from the early twentieth century. In addition to these robust increases, a greater number of stations displayed positive trends in the later period, comprising 90% of the 525 locations selected. The authors postulated that the annual precipitation in the Northeast could more accurately be modeled by a changepoint in the sample mean occurring in 2002, after which time accumulated summer and fall precipitation remained at elevated levels. In a later analysis, Huang et al. (2018) found that 48% of the precipitation falling during 273 post-1996 extreme events could be attributed to tropical cyclones, with another 25% caused by frontal passages.

As precipitation totals have increased, so have the number and severity of extreme events (Griffiths and Bradley 2007; Brown et al. 2010). Walsh et al. (2014) documented that from 1958 to 2012, the amount of rain and melted snow falling during the top 1% of wettest days grew by 71%. Howarth et al. (2019) found that from 1979 to 1996, there were six 24-h periods that received 150 mm or more of precipitation; this number grew to 25 instances between 1997 and 2014. This paper does not discuss the large-scale patterns of atmospheric circulation influencing the frequency, magnitude, and location of extreme events. Connections have been documented (Marquart Collow 2016, Agel et al. 2015), although the extent of anthropogenic influence on weather patterns remains uncertain (Vallis et al. 2014). Ahn and Steinschneider (2019) correlated increases in regional precipitation amounts and intensities to changes in the frequency of six prevailing weather states, finding that occurrences of the driest pattern have declined as the number of days in the wettest state has grown.

In a region prone to flooding (Wehner et al. 2017), changes in rainfall intensity are highly consequential. While drought was responsible for 38% of crop losses and damages in the Northeast USA, excess moisture caused 34% of the total (Wolfe et al. 2017). In urban areas, these trends have been exacerbated by sprawl that has grown the footprint of impervious surfaces. An analysis found that the share of the Accotink Creek watershed in Fairfax County, Virginia covered by impervious surfaces rose from 3% to 33% over 45 years (Jennings and Jarnagin 2002). In addition to causing erosion and raising the level of pollutants, higher amounts of runoff have also been linked to agricultural and hydrological drought, as streamflow variance has increased the frequency of low- and high-flow periods (Kang and Sridhar 2017). Accurately quantifying how extreme precipitation events have affected soil moisture levels requires an hourly rainfall rate estimate. For example, Howarth et al. (2019) calculated that the top 1% of daily precipitation events from 1979 to 2014 corresponded to 51 mm (approximately 2 in.). While this represents a large amount of water falling in a 24-h period, absorption rates cannot be calculated without a finer timescale resolution. If rain fell at a steady rate of 2 mm per hour over the entire day, unsaturated ground could fully absorb the water; but if that same amount of water fell within an hour, much would be lost to runoff and could not directly replenish soil moisture. Since hourly data is sparse, this analysis will assume that all precipitation reaching the unsaturated ground can replenish soil moisture on a daily basis.

As described above, the literature has shown high levels of agreement that the Mid-Atlantic has become meteorologically wetter (although most studies include areas of New England) and more susceptible to intensifying extreme events, with both trends accelerating in recent years. Drought studies have indicated decreases in dry periods, but there is lesser confidence in such trends due to higher variability in wet and dry patterns. Moreover, global and regional climate change models predict that higher evapotranspiration demand will lead to drier soils even in areas with positive precipitation trends (Brown and DeGaetano 2013), as increasing soil moisture scarcity has already been documented in areas of the USA that have experienced stable rainfall (Smith and Chang 2020). In temperate regions like the Mid-Atlantic, evapotranspiration will also rise as warming temperatures increase the length of the growing season (DeGaetano 1996; Kramer et al. 2015). Studies using the SPEI and PDSI to measure agricultural drought rely on monthly or weekly historical summaries that can miss the development of flash droughts. These droughts have an incubation time of less than 1 week (Ford and Labosier 2017) and are fueled by increasingly common warm temperatures.

To more accurately understand how climatic changes have affected soil moisture levels in the Mid-Atlantic, two algorithms were deployed for this analysis. The first, Climatol (Footnote 1), was used to homogenize observation station data to ensure a large sample size that offers sufficient coverage. The second, a recursive daily soil moisture algorithm, estimated watering demand to preserve vegetation in a well-watered state and aggregated measures of daily moisture deficits. The methodology allows for greater sensitivity and accuracy when measuring drought conditions, without sacrificing coverage found in gridded sets. While the soil moisture algorithm has been used at a small number of the Mid-Atlantic observation sites and has been introduced in previous studies (Smith and Chang 2020), it has not been applied to region-wide data capturing the majority of certified locations. Similarly, while Climatol has been utilized in studies on several continents (e.g., Zhang et al. 2020), it had not yet been deployed with North American data nor with trends in evapotranspiration. This analysis is also the first in which the algorithms have been paired.

3 Methodology

Many of the analyses mentioned above used station precipitation records from the Global Historical Climatology Network (GHCN) (Footnote 2), a highly accurate dataset maintained by the National Centers for Environmental Information (NCEI). Huang et al. (2017) compared trends in GHCN data with gridded values from Livneh et al. (2013) and high-resolution reanalysis data from the North American Regional Reanalysis (NARR) (Mesinger et al. 2006). While seasonal trends in precipitation were generally in the same direction from all three sources, differences were observed with values that occasionally diverged by 2- or 3-fold. In order to preserve accuracy when estimating soil moisture balance, it was determined that climate data should originate from recorded observational data. Since GHCN records do not include dewpoint data, more limited Global Summary of the Day (GSOD) (Footnote 3) observations, also maintained by the NCEI, supplemented the GHCN precipitation and wind data used here.

The Mid-Atlantic region was divided into 10 subregions (subsequently referred to as grids) in which homogenized station data was averaged to estimate the amount of water penetrating the ground compared to moisture lost to the atmosphere on a daily basis over the period 1 January 1985 to 31 December 2019. GHCN observation locations, supplying wind and precipitation values, and GSOD locations, providing maximum temperature, minimum temperature, and dewpoint (from which relative humidity was calculated), are shown in Figs. 1 and 2, respectively. In order to be included in the average, each station needed a minimum of 3650 daily observations, or 28.5% period coverage. Stations with shorter periods of record were found to have more frequent inhomogeneities and irregularities and were therefore excluded. Each station’s record was checked for homogeneity by applying the Standard Normal Homogeneity Test (SNHT) (Alexanderson 1986) via the R package Climatol (Footnote 4). Climatol used a default SNHT threshold of 25 on data that was aggregated into monthly average values when determining if inhomogeneities existed. Since both GSOD and GHCN data has been subjected to quality checks by the National Oceanic and Atmospheric Administration, there was a low risk of erroneous outlier observations (which Climatol searched for and removed), but inhomogeneities were still present in station records.

Inhomogeneities arise as observation equipment is replaced, if stations are relocated to a different part of a campus, or if the surrounding environment experiences changes in vegetation or other outside disturbances (Aguilar et al. 2003). For example, a grove of trees growing in close proximity to a recording station can partially shelter the station from wind and rain. Washington, DC offers an illustrative case: there is a periodic discussion about the suitability of Ronald Reagan Washington National Airport as the District’s official observation site. While much of the controversy has focused on the location’s abnormally warm temperatures or the fact that it is not located within Washington (Foster and Leffler 1981), some believe that the increasing number of tall buildings located about 1 km to the west in Crystal City may influence precipitation or wind readings. The National Airport is the driest reporting station within 90 km of the Washington Metropolitan Area (Footnote 5). Indirect obstruction, equipment changes, or recalibrations that may influence observed values are more common in non-first-order stations with reporting gaps lasting several months or years.

Using SNHT criteria, Climatol identified breakpoints and split the series at such occurrences. As a result, the station would then have two or more partial series, depending on the number of inhomogeneities. Once disaggregated back into a daily timescale, all series were then reconstructed to have a complete record throughout the 1985–2019 period. Distance weighting, given in Eq. 1, was used in each daily reconstruction, with records from nearby neighboring stations having higher weights.
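To make the breakpoint criterion concrete, the following is a minimal sketch of the classical single-shift SNHT statistic applied to one anomaly series. It is not Climatol's implementation; the function name `snht_statistic`, the constant `SNHT_THRESHOLD`, and the synthetic series are hypothetical, and the threshold of 25 is taken from the text.

```python
from statistics import mean, pstdev

SNHT_THRESHOLD = 25.0  # threshold quoted in the text for monthly aggregates


def snht_statistic(series):
    """Return (T_max, k): the largest SNHT value and the split index k.

    For a standardized series z of length n, the statistic at split k is
    T(k) = k * mean(z[:k])**2 + (n - k) * mean(z[k:])**2.
    """
    n = len(series)
    mu, sigma = mean(series), pstdev(series)
    if sigma == 0:
        return 0.0, None  # a constant series has no detectable shift
    z = [(x - mu) / sigma for x in series]
    best_t, best_k = 0.0, None
    for k in range(1, n):
        t = k * mean(z[:k]) ** 2 + (n - k) * mean(z[k:]) ** 2
        if t > best_t:
            best_t, best_k = t, k
    return best_t, best_k


# Hypothetical anomaly series: 60 values around 0, then 60 values around 1.
noise = [0.1 * ((i % 5) - 2) for i in range(60)]
shifted = noise + [1.0 + v for v in noise]
t_max, split = snht_statistic(shifted)
has_break = t_max > SNHT_THRESHOLD  # the series would be split at `split`
```

A series flagged this way would be cut at the detected index and each part tested again, which is the sense in which the procedure is applied repeatedly.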

where $d_{k,j}$ is the distance between stations $k$ and $j$, and $h$ is equal to 100 km.
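As a rough illustration of the distance weighting, the sketch below assumes the form w = 1 / (1 + d²/h²), which is the weighting function described in Climatol's documentation and gives a neighbor at distance h half the weight of a co-located one. Whether it matches Eq. 1 term for term is an assumption, and the function name `distance_weight` is hypothetical.

```python
def distance_weight(d_km, h_km=100.0):
    """Weight of a neighboring station at distance d_km (assumed form).

    With h_km = 100, a station 100 km away receives half the weight of a
    station at zero distance.
    """
    return 1.0 / (1.0 + (d_km / h_km) ** 2)


# Weights fall off smoothly with distance: 0 km, 100 km, and 200 km.
weights = [distance_weight(d) for d in (0.0, 100.0, 200.0)]
```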

However, this process was not applied to the original data but to normal ratio values (each series was “normalized” by dividing by its respective average), using the idea developed by Paulhus and Kohler (1952), but without taking into consideration any balance in the directions of the surrounding stations. This procedure does not make use of correlations between stations, which were assumed to decay with distance; this allows the adaptive use of neighboring data available at each time step even when the common observation period is short or absent. Normalized estimations of all data in each station record were obtained in this way, allowing the calculation of spatial anomalies by subtracting the estimated values from the observed series. It is on this series of anomalies that outliers could be deleted and inhomogeneities detected by applying the SNHT recursively, splitting the series into more homogeneous sub-periods. Finally, newly estimated data were used to infill any missing data in the sub-series and obtain full-length reconstructions, although only those adjusted from the last homogeneous sub-period were used. All reconstructed, complete, homogeneous series were then averaged across each grid to produce daily measures of temperature, relative humidity, windiness, and precipitation. Further discussion of this approach will be presented later in the paper, but Climatol enabled a more inclusive approach for stations that did not continuously report during the analysis period and prevented inclusion bias. Resulting daily values were then utilized in the water demand algorithm.
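The normal ratio estimate for a single day can be sketched as follows. This is an illustrative simplification rather than Climatol's code: the function name `estimate_from_neighbors`, its arguments, and the example numbers are hypothetical, and the weighting form 1 / (1 + d²/h²) is assumed.

```python
def estimate_from_neighbors(target, day_values, station_means,
                            distances_km, h_km=100.0):
    """Estimate one day's value at station `target` from its neighbors.

    day_values    -- observations for the day (None where missing)
    station_means -- long-term average of each station's series
    distances_km  -- distance from the target to each station
    Each neighbor is normalized by its own mean, the ratios are averaged
    with distance weights, and the result is rescaled by the target mean.
    """
    num = den = 0.0
    for j, value in enumerate(day_values):
        if j == target or value is None:
            continue  # skip the target itself and missing neighbors
        w = 1.0 / (1.0 + (distances_km[j] / h_km) ** 2)  # assumed weight form
        num += w * (value / station_means[j])
        den += w
    if den == 0.0:
        return None  # no neighbor reported on this day
    return (num / den) * station_means[target]


# Hypothetical day: station 0 is missing; both neighbors report twice
# their own long-term mean, so the estimate is twice station 0's mean.
estimate = estimate_from_neighbors(
    target=0,
    day_values=[None, 4.0, 8.0],
    station_means=[3.0, 2.0, 4.0],
    distances_km=[0.0, 50.0, 120.0],
)
```

Subtracting such an estimate from an observed value gives the spatial anomaly on which outlier and inhomogeneity checks operate.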

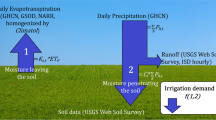

For each gridded area, reference daily evapotranspiration (ET0) (mm day−1) was estimated from the FAO-56 Penman-Monteith equation (Zotarelli et al. 2010). The standard vegetative surface covering of well-watered, clipped, cool-season fescue grass 0.12 m in height (Wright 1993) was used. In order to model the effects of the lengthening growing season, an adjustment was made on days during which the average temperature remained below 4.5 °C and grass was unable to grow: a multiplier of 0.2 was applied to ET0 (Jensen and Allen 2016). Solar radiation values are not tracked by the NCEI, so daily NARR values (Footnote 6) obtained at each gridded area’s centroid and four vertices were averaged for the final required input of the Penman-Monteith equation.
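The daily calculation can be sketched with the standard FAO-56 formulas. The sketch below is a simplified illustration, not the authors' code: it takes net radiation as a direct input (the conversion from NARR solar radiation is omitted), assumes sea-level pressure and zero daily soil heat flux, and uses hypothetical function and parameter names.

```python
import math


def sat_vapor_pressure(t_c):
    """Saturation vapor pressure (kPa) at temperature t_c (deg C), FAO-56."""
    return 0.6108 * math.exp(17.27 * t_c / (t_c + 237.3))


def relative_humidity(t_mean_c, t_dew_c):
    """Relative humidity (%) derived from mean and dewpoint temperature."""
    return 100.0 * sat_vapor_pressure(t_dew_c) / sat_vapor_pressure(t_mean_c)


def reference_et0(t_max_c, t_min_c, t_dew_c, wind_2m, net_radiation,
                  pressure_kpa=101.3, soil_heat_flux=0.0):
    """Daily FAO-56 Penman-Monteith ET0 (mm/day) for the grass reference.

    wind_2m is in m/s; net_radiation and soil_heat_flux in MJ m-2 day-1.
    Pressure and soil heat flux defaults are assumptions of this sketch.
    """
    t_mean = (t_max_c + t_min_c) / 2.0
    es = (sat_vapor_pressure(t_max_c) + sat_vapor_pressure(t_min_c)) / 2.0
    ea = sat_vapor_pressure(t_dew_c)
    delta = 4098.0 * sat_vapor_pressure(t_mean) / (t_mean + 237.3) ** 2
    gamma = 0.000665 * pressure_kpa  # psychrometric constant (kPa/deg C)
    radiation_term = 0.408 * delta * (net_radiation - soil_heat_flux)
    wind_term = gamma * (900.0 / (t_mean + 273.0)) * wind_2m * (es - ea)
    et0 = (radiation_term + wind_term) / (delta + gamma * (1.0 + 0.34 * wind_2m))
    if t_mean < 4.5:
        et0 *= 0.2  # cold-day adjustment described in the text
    return max(et0, 0.0)


# Hypothetical inputs for a warm day and a cold day.
warm_day = reference_et0(30.0, 18.0, 15.0, wind_2m=2.0, net_radiation=15.0)
cold_day = reference_et0(5.0, 0.0, -2.0, wind_2m=2.0, net_radiation=3.0)
```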

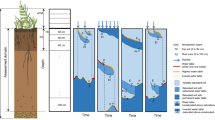

Beyond measured trends in climate parameters and ET0, all of which were aggregated into accumulated or averaged monthly values, the water demand algorithm required specific soil moisture holding capacities for its estimation of daily water availability for the reference grassy surface. Each grid’s centroid was entered into the USDA Natural Resources Conservation Service’s soil survey database, and a 10,000 ha plot using the weighted average of the soil types’ total available water (TAW) (mm) capacity was generated for the top 1 m of soil, the depth to which fescue roots are assumed to penetrate. TAW, the difference between field capacity (saturated ground) and the wilting point (the moisture level at which plants are no longer able to draw any water and lose turgidity), can be divided into two stages. The stages can be illustrated by a period that begins with saturated conditions and during which no precipitation falls. Grass will draw water at ET0 until accumulated soil moisture depletion (ASMD) reaches 40% of TAW (Wright 1993). At this point, the vegetation begins to experience moisture stress and evapotranspiration occurs at a slower rate of $K_{s,t} \times$ ET0. The moisture stress coefficient, $K_{s,t}$, which ranges between zero and one, is given as the following:

where $(t-1)$ is the previous day and $RAW_{t-1} = 0.4 \times TAW_{t-1}$.
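A minimal sketch of the stress coefficient follows, assuming the standard FAO-56 form: the coefficient equals 1 while the previous day's ASMD is at or below RAW, and otherwise equals (TAW − ASMD) / (TAW − RAW). That this matches Eq. 2 exactly is an assumption, and the function name `stress_coefficient` and the example TAW of 150 mm are hypothetical.

```python
def stress_coefficient(asmd_prev_mm, taw_mm, raw_fraction=0.4):
    """Moisture stress coefficient for today from yesterday's depletion.

    asmd_prev_mm -- accumulated soil moisture depletion on day t-1 (mm)
    taw_mm       -- total available water of the soil profile (mm)
    raw_fraction -- readily available share of TAW (0.4 in the text)
    """
    raw_mm = raw_fraction * taw_mm
    if asmd_prev_mm <= raw_mm:
        return 1.0  # first stage: grass draws water at the full ET0 rate
    ks = (taw_mm - asmd_prev_mm) / (taw_mm - raw_mm)
    return max(0.0, min(1.0, ks))  # second stage: reduced uptake


# With TAW = 150 mm, stress begins once depletion exceeds 60 mm and the
# coefficient reaches zero at the wilting point (depletion = 150 mm).
examples = [stress_coefficient(d, 150.0) for d in (0.0, 60.0, 105.0, 150.0)]
```

Under this form, the 0.7 irrigation trigger mentioned in the text corresponds to a depletion of 58% of TAW, since 1 − 0.7 × 0.6 = 0.58.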

Equation 2 constitutes the recursive portion of the water demand algorithm, as ASMD and $K_{s,t}$ are functions of the previous day’s conditions as well as any precipitation or hypothetical irrigation water introduced on day $t$ at the end stage of analysis. At $K_{s,t}$ values below 0.7, the visual appearance of the grass begins to degrade, acting as a trigger for irrigation when estimating required water usage (Rodriguez-Iturbe et al. 2001). Other algorithm assumptions, including the ability of past precipitation events to satiate current demand and the ability of unsaturated soils to absorb falling precipitation, can be found in Smith and Chang (2020). In summary, all moisture that reached soils below their field capacities was assumed to be retained.

Autoregressive (AR) time series modeling was performed on the seasonally adjusted data. As with the time series reconstruction performed in Climatol, all trends were calculated in R. The order of each AR model, guided by the Akaike information criterion, ranged from zero to four, with first-order AR models most commonly used. With drought variability increasing, instances of heteroskedasticity were expected and found during modeling. The Breusch-Pagan test was deployed, and when there was less than a 10% chance that sample variances were random rather than dependent on time, a robust standard error procedure was completed that provided corrected significance levels of the regression coefficients.
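The heteroskedasticity check can be illustrated on a plain linear trend. The sketch below is not the authors' R workflow and omits the AR terms: it computes the studentized (Koenker) form of the Breusch-Pagan statistic, n·R² from regressing squared residuals on time, and compares it with the 10% critical value of a chi-square distribution with one degree of freedom (2.706). All function names and the synthetic series are hypothetical.

```python
CHI2_1DF_10PCT = 2.706  # 10% critical value, chi-square with 1 d.o.f.


def ols_line(x, y):
    """Return (intercept, slope) of the least-squares line y = a + b*x."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    slope = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y)) / sxx
    return my - slope * mx, slope


def r_squared(x, y):
    """Coefficient of determination of the least-squares line."""
    a, b = ols_line(x, y)
    my = sum(y) / len(y)
    ss_tot = sum((yi - my) ** 2 for yi in y)
    if ss_tot == 0:
        return 0.0
    ss_res = sum((yi - (a + b * xi)) ** 2 for xi, yi in zip(x, y))
    return 1.0 - ss_res / ss_tot


def breusch_pagan_lm(t, y):
    """Studentized Breusch-Pagan LM statistic for variance depending on t."""
    a, b = ols_line(t, y)
    squared_residuals = [(yi - (a + b * ti)) ** 2 for ti, yi in zip(t, y)]
    return len(t) * r_squared(t, squared_residuals)


# Synthetic series: constant spread versus spread that grows with time.
time_index = list(range(40))
steady = [(-1) ** i for i in time_index]
widening = [(-1) ** i * (1 + i) for i in time_index]
needs_robust_errors = breusch_pagan_lm(time_index, widening) > CHI2_1DF_10PCT
```

When the statistic exceeds the critical value, as for the widening series, heteroskedasticity-robust standard errors would be used for the trend coefficient.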

By summing the difference between 1 and $K_{s,t}$ for all days of the seasonally adjusted month, a measure of drought with a daily resolution was obtained. Finally, if $K_{s,t} \le 0.7$ and no precipitation was recorded on day $t$, a simulated watering occurred, introducing 25.4 mm (one inch) of supplemental moisture into the ground. Such events were aggregated, and trends were modeled through the AR process. By estimating the water demand of preserving the well-watered reference crop, the results can provide insight to stakeholders in the agricultural and municipal water sectors into how climatic factors have changed hypothetical demand.
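The daily recursion, the drought measure, and the simulated waterings can be sketched together. This is an illustrative reading of the description above, not the authors' code. The assumed pieces are the update order (today's stress coefficient comes from yesterday's depletion), the start at field capacity, the clamping of depletion between 0 and TAW, and the FAO-56 form of the stress coefficient; the function name and inputs are hypothetical.

```python
def simulate_soil_moisture(precip_mm, et0_mm, taw_mm, irrigate=False,
                           raw_fraction=0.4, trigger_ks=0.7,
                           irrigation_mm=25.4):
    """Run the daily balance; return (ks_series, drought_index, waterings).

    drought_index is the sum of (1 - Ks) over the days supplied, so a
    30.4-day month ranges from 0 (no stress) to 30.4 (wilting point).
    """
    raw_mm = raw_fraction * taw_mm
    asmd = 0.0  # assumption: the simulation starts at field capacity
    drought_index = 0.0
    waterings = 0
    ks_series = []
    for rain, et0 in zip(precip_mm, et0_mm):
        # Stress coefficient from the previous day's depletion.
        if asmd <= raw_mm:
            ks = 1.0
        else:
            ks = max(0.0, (taw_mm - asmd) / (taw_mm - raw_mm))
        # Simulated watering: stressed grass on a day without precipitation.
        added = 0.0
        if irrigate and ks <= trigger_ks and rain == 0.0:
            added = irrigation_mm
            waterings += 1
        # Depletion grows by actual evapotranspiration and shrinks by inputs;
        # water beyond field capacity is not stored.
        asmd = min(max(asmd + ks * et0 - rain - added, 0.0), taw_mm)
        drought_index += 1.0 - ks
        ks_series.append(ks)
    return ks_series, drought_index, waterings


# Hypothetical 30-day dry spell on a soil with TAW = 150 mm.
dry_rain, dry_et0 = [0.0] * 30, [5.0] * 30
_, dry_index, _ = simulate_soil_moisture(dry_rain, dry_et0, 150.0)
_, _, dry_waterings = simulate_soil_moisture(dry_rain, dry_et0, 150.0,
                                             irrigate=True)
```

Running the balance once without irrigation yields the drought measure, and once with irrigation yields the count of simulated waterings; treating these as separate runs is also an assumption of this sketch.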

4 Results

Table 1 shows the number of included observation series for each climate parameter that was modeled. Reference evapotranspiration in all regions has increased, with four of ten grids showing statistically significant increases over the 1985–2019 period (Fig. 3). Statistical significance is defined as an apparent trend having less than a 10% chance of being caused by white noise in the time series. Interestingly, the only climate parameter input in the Penman-Monteith equation to show universally statistically significant trends in all regions was solar radiation, which averaged an increase of 0.26 MJ m−2 day−1 decade−1; the seasonally adjusted Mid-Atlantic average was 16.02 MJ m−2 day−1, with higher amounts in the South. Were it not for increasing solar radiation, none of the grids would have shown statistically significant ET0 trends, and four of 10 would have been negative despite higher temperatures and the lengthening growing season. This is largely due to positive trends in relative humidity in seven of ten subregions, averaging 0.62% decade−1; the 1985–2019 average value is 68.16%.

When trends in precipitation are compared with reference evapotranspiration, all grids show more robust increases in precipitation (Fig. 4). Only grid 6 has a precipitation trend that is within 200% of its respective ET0 trend. Grids 2 and 6 also are the only areas with statistically significant ET0 trends and non-significant increases in precipitation. Figures 5 and 6 illustrate the magnitude to which rainfall in an average year would increase between 1985 and 2019. These figures are not historical values of the 2 years, but rather show how much rain and frozen precipitation would be expected during normal conditions based on how the average has changed from calculated trends. While this confirms the Mid-Atlantic region is now wetter from a meteorological definition and suggests it is also wetter from an agricultural definition, trends from the water algorithm must be analyzed to examine the temporal relationship between events and soil moisture holding capacity. If, for example, the additional precipitation is associated with prolonged wet periods interspersed with deeper drought, moisture hitting saturated ground would run off or percolate into the water table, ineligible to satiate vegetative demand unless captured and stored as reserve.

Figure 7 shows trends in a unitless drought indicator, measured as the area between y = 1 and the daily value of $K_{s,t}$. As all data has been seasonally adjusted, the value of this drought indicator can hypothetically range between 0 (if the entire month experienced saturated soil conditions) and 30.4 (if the soil remained at its wilting point for the month). The 1986–2019 Mid-Atlantic monthly average is 3.76 (ranging from 3.18 in grid 1 to 4.32 in grid 3). The first year of the analysis period is excluded as it is used to calibrate soil moisture levels. The figure shows declining water scarcity within the region. From another perspective, there were just under 6 days out of 30.4 days (4.97 in grid 1 to 6.90 in grid 3) in a seasonally adjusted month when $K_{s,t}$ remained below 0.7, the point at which the reference grass begins to degrade without supplemental water. The regional averaged trend, while not statistically significant, is −0.32 days decade−1. This suggests a decline in drought frequency and duration. Decadal trends in the number of simulated waterings per month to maintain the reference grass were negative in all grids (−0.01 in grid 6 to −0.06 in grid 10), although universally statistically insignificant. The average number of monthly events from 1986 to 2019 ranged from 0.40 in grid 1 to 0.55 in grid 3. The amount of modeled irrigation water demand in an average year at the beginning and end of the analysis period is mapped in Figs. 8 and 9, with Fig. 10 showing the difference in expected average 1986 usage relative to expected normal 2019 usage. Although no individual grid’s trend is statistically significant, the trends were negative in every grid.

5 Discussion

The combination of Climatol and the water demand algorithm provided advantages over existing approaches: using relatively complete daily observation series or relying on reconstructed gridded data. Studying a larger geographic area, Howarth et al. (2019) found 58 eligible first-order and cooperative observer network stations missing less than 5% of daily precipitation records over the 1979–2014 period. In contrast, this analysis was able to utilize 243 GHCN stations to track precipitation. After an initial analysis of all Mid-Atlantic stations in Climatol, the decision was made to only incorporate those with 10 years or more of data, but this can be adjusted. The ability to choose the SNHT criteria from which breakpoints originate and adjust the distance weighting used in series completion offered user flexibility. Moreover, Climatol’s reconstruction of partial series mitigated the risks of inclusion bias. As each subregion has small geographic climatological variations or stations with potential reporting biases, normal values differ within the partition. Thus, a warm, wet station with reporting data for the final 5 years of a period is not able to falsely influence precipitation and temperature trends. Finally, since GHCN data does not measure relative humidity or dewpoint temperature, any large-scale deployment of the FAO-56 Penman-Monteith equation is reliant on GSOD sets or prepackaged data. As part of earlier research, attempting to capture Mid-Atlantic trends using GSOD records having a minimum of 90% coverage yielded about two dozen locations over all 10 grids, with some partitions having one or no station of record.

The use of gridded sets or alternative drought metrics is subject to limitations. The discrepancies in results obtained by Huang et al. (2017) from different prepackaged products show that their composition methodology and resolution vary. Even though the authors noted a perceived bias in NARR data that underestimated coastal annual precipitation amounts and resulted in suspicious negative trends, revisions of NARR methodology could not be incorporated into the analysis. There were also advantages from the water demand algorithm, which calculated daily soil moisture deficits rather than relying on trend analysis of drought indices. The algorithm relies on the Penman-Monteith equation, which has demonstrated robustness in capturing rapidly developing drought conditions (Peel and McMahon 2014). It also affords users flexibility: they may choose different levels of $K_{s,t}$ at which to add irrigation water, adjust basic Penman-Monteith coefficients to reflect individual crop and soil characteristics, conduct comparisons of water demand by different vegetation, and reproduce this methodology at specific plots if accurate on-site weather observation equipment is available.

Challenges related to this methodology are briefly discussed: The averaging of recorded daily rainfall across a subregion resulted in a moderating effect that increased the number of days during which measurable precipitation fell, decreased the magnitude of extreme events, and likely underestimated modeled soil moisture deficits. For example, a first-order observation site in grid 3 had no measurable precipitation for 68.2% of the days in the 1985–2019 period and 132 mm of rain fell during its most severe 1-day precipitation event. In contrast, only 45.6% of the days in aggregated grid 3 data were dry and the largest single-day rainfall was 85 mm. Even though this resulted in a large difference in the number of wet days, the precipitation amounts were close to negligible: if a small number of stations out of 30 reported measurable precipitation, a non-zero daily value would be recorded, but this had little effect on soil moisture trends and resulting irrigation water demand. Since neither algorithm handles hourly data, the extreme rainfall moderation by grid averaging is less consequential, as the model assumes that any precipitation falling on unsaturated soils is eligible to replenish moisture levels. Given the relatively short analysis period, exceptionally dry or wet years in the beginning or end of the period can also falsely influence trends. For nearly all subregions, 2001 was the driest year on record. While 2018 was the wettest year on record in some areas, 2016, 2017, and 2019 were mostly dry to near-average years. As homogenized records were averaged across each gridded partition, there was a lower risk of outlier years exerting oversize influence, as weather systems would not have had an identical effect at all locations.

Future research is planned to estimate hourly infiltration rates of different soils and topographies. Quantifying extreme events at an hourly resolution can enhance runoff modeling and determine whether greater amounts of precipitation cannot be absorbed into the ground, providing insight into Mid-Atlantic flooding issues. However, early investigation shows that fewer than one dozen Mid-Atlantic stations have sufficient hourly coverage, so probabilistic modeling would be required, and a determination of feasibility has not yet been initiated. As this area of study is focused on a coastal plain with relatively homogeneous topography, the assumption that soil moisture levels in each gridded partition are independent of each other does not compromise accuracy, even as watersheds and supply are interconnected. Although this methodology is easily reproducible for many global regions where agricultural production is concentrated, without the aforementioned enhancements it can be applied neither to valleys that receive runoff from nearby elevated terrain nor to other mountainous areas with highly variable and overall lower absorption rates. Also important in the agricultural context is the source from which landowners obtain their irrigation water, as runoff that is captured in infiltration basins is eligible for reuse (Sweet et al. 2017). This issue is left for future work.

6 Conclusion

The deployment of two algorithms produced a regional analysis that used historical climate station data to evaluate trends in meteorological and agricultural drought. Using an approach that expanded the number of included observation stations reporting climate parameters necessary for the FAO-56 Penman-Monteith equation, the analysis was able to utilize high-quality data with brief periods of record that have been excluded from previous studies. The homogenized, averaged data was used to determine daily soil moisture levels and the demand for irrigation water to preserve vegetation in a well-watered state. By incorporating a high number of observation series and a daily drought estimation procedure, this methodology captured developing drought conditions that can be underrepresented in monthly or weekly historical summary analysis.

This combined methodology was used to study the Mid-Atlantic, which straddles the Northeast and Southeast regions of the USA. Existing literature provided definitive evidence that the Northeast has become wetter in a meteorologically defined context in recent years, with the trend accelerating, but similar precipitation analyses for the Southeast have not shown robust increases (Easterling et al. 2017). While the Mid-Atlantic reference evapotranspiration trends are positive, largely due to increased levels of solar radiation and not attributed to declines in relative humidity, average annual precipitation has increased at a faster rate. Via the recursive soil moisture algorithm, these two trends interacted on a daily timescale, producing accurate trends of soil dryness. Results showed near-universal declines in the measure of accumulated drought severity across the Mid-Atlantic and universal declines in hypothetical irrigation water demand related to soil dryness. Only two trends in either of these categories are statistically significant, suggesting that rain is more frequently falling on saturated soils during prolonged wet periods, leading to higher variability in soil dryness than would otherwise be seen if precipitation patterns had not evolved. Interestingly, southern portions of the Mid-Atlantic had declines in drought that exceeded the decreases in the North, even though previous analyses have shown higher confidence in the moistening of locations at northern latitudes. As the southern Mid-Atlantic has fewer cold days with an average temperature below 4.5 °C, the threshold assumed for reference crop dormancy, the effect of the longer growing season on reference evapotranspiration in the North was more pronounced. In contrast to other regions of the USA that have shown increased drought vulnerability from climate change, the Mid-Atlantic is experiencing less drought and, if trends continue, will require less irrigation moisture per hectare to preserve vegetation in a well-watered state.

Notes

https://CRAN.R-project.org/package=climatol

References

Agel L, Barlow M, Qian J, Colby F, Douglas E, Eicher T (2015) Climatology of daily precipitation and extreme precipitation events in the northeast United States. J Hydrometeorol 16:2537–2557

Aguilar E, Auer I, Brunet M, Peterson T, Wieringa J (2003) Guidelines on climate metadata and homogenization. WCDMP-No. 53, WMO-TD No.1186. World Meteorological Organization, Geneve

Ahmadalipour A, Moradkhani H, Svoboda M (2016) Centennial drought outlook over the CONUS using NASA-NEX downscaled climate ensemble. Int J Climatol 37:2477–2491

Ahn K, Steinschneider S (2019) Seasonal predictability and change of large-scale summer precipitation patterns over the northeast United States. J Hydrometeorol 20:1275–1292

Alexandersson H (1986) A homogeneity test applied to precipitation data. J Climatol 6:661–675

Apurv T, Cai X (2019) Evaluation of the stationarity assumption for meteorological drought risk estimation at the multi-decadal scale in the contiguous U.S. Water Resour Res

Berkowitz B, Blanco A (2019) Mapping the strain on our water. The Washington Post. http://www.washingtonpost.com/climate-environment/2019/08/06/mapping-strain-our-water. Accessed 2 Mar 2020

Brown P, DeGaetano A (2013) Trends in the U.S. surface humidity, 1930-2010. J Clim Appl Meteor 52:147–163

Brown P, Bradley R, Keimig F (2010) Changes in extreme climate indicators for the northeastern United States, 1870-2005. J Clim 23:6555–6572

Collins M, Arblaster J, Dufresne J, Fichefet T, Friedlingstein P, Gao X, Gutowski W, Johns T, Krinner G, Shongwe M, Tebaldi C, Weaver A, Wehner M et al. (2013) Long-term climate change: projections, commitments and irreversibility. In Climate change 2013: the physical science basis. Contribution of Working Group I to the Fifth Assessment Report of the Intergovernmental Panel on Climate Change. Cambridge University Press, Cambridge, United Kingdom and New York, NY

DeGaetano A (1996) Recent trends in maximum and minimum temperature threshold exceedances in the northeastern United States. J Clim 9:1646–1657

Dupigny-Giroux L, Lemcke-Stampone M, Hodgkins G, Lentz E, Mills K, Lane E, Miller R, Hollinger D, Solecki W, Wellenius G, Sheffield P, MacDonald A, Caldwell C (2018) Northeast. In impacts, risks, and adaptation in the United States: fourth national climate assessment, volume II. [Reidmiller D, Avery C, Easterling D, Kunkel K, Lewis K, Maycock T, Stewart B (eds.)]. U.S. Global Change Research Program, Washington, DC, 669–742. https://doi.org/10.7930/NCA4.2018.CH18

Easterling D, Kunkel K, Arnold J, Knutson T, LeGrande A, Leung L, Vose R, Waliser D, Wehner M (2017) Precipitation change in the United States. In climate science special report: fourth national climate assessment, volume I. [Wuebbles D, Fahey D, Hibbard K, Dokken D, Stewart B, Maycock T (eds.)]. U.S. Global Change Research Program, Washington, DC, 207–230. https://doi.org/10.7930/J0H993CC

Ficklin D, Maxwell J, Letsinger S (2015) A climatic deconstruction of recent drought trends in the United States. Environ Res Lett 10:1–10

Ford T, Labosier C (2017) Meteorological conditions associated with the onset of flash drought in the Eastern United States. Agric For Meteorol 247:414–423

Foster J, Leffler R (1981) Unrepresentative temperatures at a first-order meteorological station: Washington National Airport. Bull Amer Meteor Soc 62:1002–1006

Frei A, Kunkel K, Matonse A (2015) The seasonal nature of extreme hydrological events in the northeastern United States. J Hydrometeorol 16:2065–2085

Gowda P, Steiner J, Farrigan T, Grusak M, Boggess M (2018) Agriculture and rural communities. In climate science special report: fourth national climate assessment, volume II. [Reidmiller D, Avery C, Easterling D, Kunkel K, Lewis K, Maycock T, Stewart B (eds.)]. U.S. Global Change Research Program, Washington, DC, 391–437. https://doi.org/10.7930/NCA4.2018.CH10

Griffiths M, Bradley R (2007) Variations of twentieth-century temperature and precipitation extreme indicators in the northeast states. J Clim 20:5401–5417

Hayhoe K, Wake C, Huntington T, Luo L, Schwartz M, Sheffield J, Wood E, Anderson B, Bradbury J, DeGaetano A, Troy T, Wolfe D (2007) Past and future changes in climate and hydrological indicators in the U.S. Northeast. Clim Dyn 28:381–407

Hoerling M, Eischeid J, Perlwitz J, Quan X, Zhang T, Pegion P (2012) On the increased frequency of Mediterranean drought. J Clim 25:2146–2161

Horton R, Yohe G, Easterling W, Kates R, Ruth M, Sussman E, Whelchel A, Wolfe D, Lipschultz F (2014) Northeast. In climate change impacts in the United States: third national climate assessment. [Melillo J, Richmond T, Yohe G (eds.)]. U.S. Global Change Research Program, Washington, DC, 371–395. https://doi.org/10.7930/J0SF2T3P

Howarth M, Thorncroft C, Bosart L (2019) Changes in extreme precipitation in the Northeast United States: 1979-2014. J Hydrometeorol 20:673–689

Huang H, Winter J, Osterberg E, Horton R, Beckage B (2017) Total and extreme precipitation changes over the Northeastern United States. J Hydrometeorol 18:1783–1798

Huang H, Winter J, Osterberg E, Horton R, Beckage B (2018) Mechanisms of abrupt extreme precipitation change over the Northeastern United States. J Geophys Res Atmos 123:7179–7192

Jennings D, Jarnagin T (2002) Changes in anthropogenic impervious surfaces, precipitation and daily streamflow discharge: a historical perspective in a mid-atlantic watershed. J Landsc Ecol 17:471–489

Jensen M, Allen R (2016) Evaporation, evapotranspiration, and irrigation water requirements. 2nd ed. ASCE, Reston, VA. https://doi.org/10.1061/9780784414057

Kang H, Sridhar V (2017) Description of future drought indices in Virginia. Data Brief 14:278–290

Krakauer N, Lakhankar T, Hudson D (2019) Trends in drought over the Northeast United States. Water 11:1834–1851

Kramer R, Bounoua L, Zhang P, Wolfe R, Huntington T, Imhoff M, Thome K, Noyce G (2015) Evapotranspiration trends over the Eastern United States during the 20th century. Hydrology 2:93–111

Kunkel L, Stevens L, Stevens C, Sun L, Janssen E, Wuebbles D, Rennells J, DeGaetano A, Dobson J (2013) Regional climate trends and scenarios for the U.S. National Climate Assessment: Part 1: Climate of the Northeast U.S. NOAA Tech Rep NESDIS 142–1, p 79

Lehner F, Coats S, Stocker T, Pendergrass A, Sanderson B, Raible C, Smerdon J (2017) Projected drought risk in 1.5°C and 2°C warmer climates. Geophys Res Lett 44:7419–7428

Livneh B, Rosenberg E, Lin C, Nijssen B, Mishra V, Andreadis K, Maurer E, Lettenmaier D (2013) A long-term hydrologically based dataset of land surface fluxes and states for the conterminous United States. Update and extensions. J Clim 26:9384–9392

Marquart Collow A (2016) Large-scale influences on summertime extreme precipitation in the northeastern United States. J Hydrometeorol 17:3045–3061

Mesinger F, DiMego G, Kalnay E, Mitchell K, Shafran P, Ebisuzaki W, Jovic D, Woollen J, Rogers E, Berbery E et al (2006) North American regional reanalysis. Bull Amer Meteor Soc 87:343–360

Paulhus JLH, Kohler MA (1952) Interpolation of missing precipitation records. Month Weath Rev 80:129–133

Peel M, McMahon T (2014) Estimating evaporation based on standard meteorological data – progress since 2007. Prog Phys Geogr 38:241–250

Prein A, Holland G, Rasmussen R, Clark M, Tye M (2016) Running dry: The U.S. Southwest’s drift into a drier climate state. Geophys Res Lett 43:1272–1279

Rodriguez-Iturbe I, Porporato A, Laio F, Ridolfi L (2001) Plants in water-controlled ecosystems: active role in hydrologic processes and response to water stress: I. Scope and general outline. Adv Water Resour 24:695–705

Seager R, Lis N, Feldman J, Ting M, Williams A, Nakamura J, Liu H, Henderson N (2018) Whither the 100th meridian? The once and future physical and human geography of America’s arid-humid divide. Part I: the story so far. Earth Interact 22:1–22

Smith R, Chang D (2020) Utilizing recent climate data in eastern Texas to calculate trends in measures of aridity and estimate changes in watering demand for landscape preservation. J Clim Appl Meteor 59:143–152

Stolte K (2000) State of Mid-Atlantic region forests in 2000: summary report. U.S. Department of Agriculture, Forest Service, Washington, D.C.

Sweet S, Wolfe D, DeGaetano A, Benner R (2017) Anatomy of the 2016 drought in the Northeastern United States: implications for agriculture and water resources in humid climate. Agric For Meteorol 247:571–581

United States Department of Agriculture, Economic Research Service (2012) Major Land Uses. https://www.ers.usda.gov/data-products/major-land-uses. Accessed 4 Apr 2020

Vallis G, Zurita-Gotor P, Cairns C, Kidston J (2014) Response of the large-scale structure of the atmosphere to global warming. Q J Roy Meteor Soc 141:1479–1501

Walsh J, Wuebbles D, Hayhoe K, Kossin J, Kunkel K, Stephens G, Thorne P, Vose R, Wehner M, Willis J, Anderson D, Doney S, Feely R, Hennon P, Kharin V, Knutson T, Landerer F, Lenton T, Kennedy J, Somerville R (2014) Our Changing Climate. In Climate Change Impacts in the United States: The Third National Climate Assessment. [Melillo J, Richmond T, Yohe G, (eds.)]. U.S. Global Change Research Program, Washington, DC, pp 19–67. https://doi.org/10.7930/J0KW5CXT

Wehner M, Arnold J, Knutson T, Kunkel K, LeGrande A (2017) Droughts, floods, and wildfires. In climate science special report: fourth national climate assessment, volume I. [Wuebbles D, Fahey D, Hibbard K, Dokken D, Stewart B, Maycock T (eds.)]. U.S. Global Change Research Program, Washington, DC, pp 231–256. https://doi.org/10.7930/J0CJ8BNN

Wolfe D, DeGaetano A, Peck G, Carey M, Ziska L, Lea-Cox J, Kemanian A, Hoffman M, Hollinger D (2017) Unique challenges and opportunities for northeastern US crop production in a changing climate. Clim Chang 146:231–245

Wright J (1993) Nongrowing season ET from irrigated fields. [Allen R, Neale C (eds.)]. Management of irrigation and drainage systems: integrated perspectives, asce irrigation and drainage division, Park City, UT, pp 1005–1014

Zhang G, Azorin-Molina C, Chen D, Guijarro J, Kong F, Minola L, McVicar T, Son S, Shi P (2020) Variability of daily maximum wind speed across China, 1975-2016: An Examination of Likely Causes. J Clim 33:2793–2816

Zotarelli L, Dukes M, Romero C, Migliaccio K, Morgan K (2010) Step by step calculation of the Penman-Monteith evapotranspiration (FAO-56 method). University of Florida Institute of Food and Agricultural Sciences Doc AE459, p 10. http://edis.ifas.ufl.edu/ae459. Accessed 2 Feb 2020

Acknowledgments

The authors would like to recognize Kyle Belott for his assistance with the graphics.

Funding

Der-Chen Chang’s research is partially supported by a National Science Foundation grant (DMS-1408839) and the McDevitt Endowment Fund at Georgetown University.

Contributions

Dr. Robert Kennedy Smith was the primary author, with technical support from Drs. José A. Guijarro and Der-Chen Chang.

Ethics declarations

Conflict of interest

The authors declare that they have no conflicts of interest.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Smith, R.K., Guijarro, J.A. & Chang, DC. Utilizing homogenized observation records and reconstructed time series data to estimate recent trends in Mid-Atlantic soil moisture scarcity. Theor Appl Climatol 143, 1063–1076 (2021). https://doi.org/10.1007/s00704-020-03467-y

DOI: https://doi.org/10.1007/s00704-020-03467-y