Abstract

Autism Spectrum Disorder (ASD) is a neurodevelopmental condition with a wide spectrum of symptoms, mainly characterized by social, communication, and cognitive impairments. Latest diagnostic criteria according to DSM-5 (Diagnostic and Statistical Manual of Mental Disorders, Fifth Edition, 2013) now include sensory issues among the four restricted/repetitive behavior features defined as “hyper- or hypo-reactivity to sensory input or unusual interest in sensory aspects of environment”. Here, we review auditory sensory alterations in patients with ASD. Considering the updated diagnostic criteria for ASD, we examined research evidence (2015–2022) of the integrity of the cognitive function in auditory-related tasks, the integrity of the peripheral auditory system, and the integrity of the central nervous system in patients diagnosed with ASD. Taking into account the different approaches and experimental study designs, we reappraise the knowledge on auditory sensory alterations and reflect on how these might be linked with behavior symptomatology in ASD.

Introduction

Autism spectrum disorder

Autism spectrum disorder (ASD) is a neurodevelopmental condition characterized by deficits in social communication and interaction, and by restricted/repetitive behavioral features (Peça et al. 2011), with first symptoms typically appearing around three years of age (Robertson and Baron-Cohen 2017). In 2018, the Centers for Disease Control and Prevention (CDC) reported that approximately one in 44 children had been diagnosed with ASD, with a four times higher prevalence in boys than in girls. There is a wide variation in the type and severity of symptoms in ASD. Unlike other diagnoses, such as a specific phobia, it is not easy to draw a straight line from symptoms to diagnostic criteria. Each neurodivergent individual with ASD is unique, and the diagnosis is based on behavioral observation.

ASD diagnosis evolution: DSM-5 and ICD-11

The year 2013 was an important landmark for the conceptualization of autism, with the release of the latest version of the Diagnostic and Statistical Manual of Mental Disorders (DSM-5) (American Psychiatric Association (APA) 2013). Before DSM-5, diagnostic criteria were poor, with limited reliability in assigning subcategory diagnoses (Walker et al. 2004). The diagnosis of autism was divided into subcategories (e.g., autistic disorder, Asperger’s disorder, and pervasive developmental disorder not otherwise specified). With the fifth edition of DSM, there was an important conceptual shift: autism became a single diagnosis based on multiple dimensions. The DSM-5 refers to autism as a spectrum disorder, embracing an umbrella of symptoms of wide variety and severity. The new diagnosis of ASD includes persistent deficits in social communication and social interaction across multiple contexts, and restricted and repetitive patterns of behavior, interests, or activities (American Psychiatric Association (APA) 2013). These symptoms are present in the early developmental period and significantly interfere with individuals’ daily functioning (American Psychiatric Association (APA) 2013).

In 2018, the World Health Organization updated the classification of autism in the International Classification of Diseases (ICD-11) (Organization 2018), to be more in line with DSM-5. The latest version of ICD-11 came into effect in January 2022 (Organization 2018). Both guidebooks, which are used by medical professionals for the diagnosis and treatment of diseases and disorders, collapse autism into a single diagnosis of Autism Spectrum Disorder, embracing the same two major aspects: difficulties in initiating and sustaining social communication and social interaction, and restricted interests and repetitive behaviors (Rosen et al. 2021).

The evolution of the diagnostic criteria for autism reflects the evolving concerns of clinicians and, particularly, researchers. If the diagnostic criteria are not well defined, people with autistic-like traits might be included, leading to misleading clinical cohorts that might hinder a clear understanding of autism neurobiology. As an example, like DSM-IV (American Psychiatric Association (APA) 1994), the previous version of the International Classification of Diseases, ICD-10 (Organization 1993), included a third category for language problems. The ICD-10 subdivided communication and social interaction into different clusters. Given that clinicians found it hard to assign symptoms to one cluster or the other, both DSM-5 and ICD-11 now combine social and language deficits into a single measure. These deficits seem to be interrelated, on the understanding that a child with limited language or communication problems would have limited social interaction. However, the cause of these communication and language impairments is still unclear.

Sensory processing in ASD

Over the years, the focus of ASD diagnosis was mainly related to cognitive, communication, and social impairments. But more recently, the criteria of diagnosis started to include another feature: the sensory processing domain (Robertson and Baron-Cohen 2017). The DSM-5, and more recently the ICD-11, now include sensory hyper- and hypo-sensitivities as part of the restricted and repetitive behavior domain (American Psychiatric Association (APA) 2013; Organization 2018). Atypical responses to sensory stimuli can also help to differentiate ASD from other developmental disorders (Stewart et al. 2016).

Sensory integration is a neurobiological process that refers to the integration and interpretation by the brain of sensory stimuli from the surrounding context. Atypical sensory experience seems to occur in 85% of individuals with ASD and can be noticeable early in development (Robertson and Baron-Cohen 2017). Symptoms can include hypersensitivity, avoidance, diminished responses, or even sensory seeking behavior (Robertson and Baron-Cohen 2017; Sinclair et al. 2017). These alterations in sensory processing may interfere with the typical development of higher order functions such as social communication, which requires quick, accurate integration of sensory cues in real time (Robertson and Baron-Cohen 2017; Siemann et al. 2020). Many studies have looked into multisensory processing, highlighting the role of temporal binding windows as a critical factor in information integration (Robertson and Baron-Cohen 2017). The concept of temporal binding window refers to a window of time within which stimuli from different modalities are perceptually bound (Hillock et al. 2011). A recent review describes numerous findings related to the presence of multisensory and temporal processing deficits in individuals with ASD (Siemann et al. 2020), suggesting that multisensory temporal processing becomes more atypical as stimulus complexity increases. Although atypical sensory processing can be present across several sensory domains, atypical behavioral response to environmental sounds is among the most prevalent and disabling sensory features of autism, with more than 50% of individuals exhibiting impaired sound tolerance (Williams et al. 2021).

Auditory sensory processing in ASD

Many individuals diagnosed with ASD have auditory sensitivities, and it is common to observe children with ASD covering their ears, even in the absence of salient background noise. Individuals with ASD can present hyper- or hypo-sensitivity to a variety of sensory stimuli, which can cause a wide range of behavioral manifestations and maladaptations (Sinclair et al. 2017). Children who are underresponsive to sensory input appear to be unaware of auditory stimuli that are salient to others. Studies have shown that pronounced to profound bilateral hearing loss or deafness seems to be present in 3.5% of all cases, and hyperacusis (increased sensitivity or decreased tolerance to sound) affects nearly 18–40% of children with autism (Williams et al. 2021; Rosenhall et al. 1999; Wilson et al. 2017). Individuals with auditory hypersensitivity can notice auditory stimuli at intensity levels that are not salient to others or would not trouble them, which may cause sensory overload. For a person experiencing sensory overload, everyday sounds can be unpleasant and overwhelming, which may lead to poor emotional and social regulation (Wilson et al. 2017) and to impairments in filtering out irrelevant input. The appropriate filtering of sensory information is crucial for healthy brain function, allowing awareness of what is salient and focus on relevant social cues. Deficits in auditory processing can thus affect behavior, with profound effects on a person’s life, especially in the development of higher level skills such as social communication. However, knowledge about the phenomenology or neurocognitive underpinnings of these auditory sensory processing deficits is still scarce. In fact, many studies were performed before the DSM-5 criteria were introduced and may therefore be confounded by potential misdiagnosis or comorbidity with other disorders.

To avoid such potential confounds, we opted for a systematic review of auditory sensory function in patients with ASD, considering studies performed between 2015 and 2022 (from 2 years after DSM-5 implementation), thus taking into account the recent adoption of the diagnosis of autism as a spectrum disorder.

The following questions will be addressed:

A. What is known about the integrity of Cognitive Function in auditory-related tasks?

B. What is known about the integrity of Peripheral Auditory System in auditory-related tasks?

C. What is known about the integrity of Central Auditory Nervous System in auditory-related tasks?

Methods

Protocol

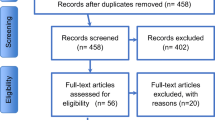

This systematic review follows the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines (Page et al. 2021), and review methods were established before initiating literature screening.

Search strategy and screening criteria

The literature search started in September 2021 and used MeSH terms from PubMed. The databases searched were PubMed, Web of Science (all databases/collections), Scopus, and Scielo. Search terms included “autis*” AND “auditory”, and were limited to studies published between 2015 and March 2022. The year 2015 was chosen as the lower limit because it marks the first experimental studies using DSM-5 criteria for the diagnosis of Autism Spectrum Disorders (American Psychiatric Association (APA) 2013).
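The term and date limits described above can be illustrated with a minimal sketch. It assumes that the truncation wildcard “autis*” behaves like a word-prefix match and that the publication window runs from 1 January 2015 to 31 March 2022 (the exact boundary days are not stated). The function name and the sample records are hypothetical and are not part of the review protocol.

```python
import re
from datetime import date

# Regex equivalents of the search terms; "autis*" is treated as a word-prefix match.
AUTISM_TERM = re.compile(r"\bautis\w*", re.IGNORECASE)
AUDITORY_TERM = re.compile(r"\bauditory\b", re.IGNORECASE)

# Assumed boundaries for "between 2015 and March 2022" (exact days are not stated).
WINDOW_START = date(2015, 1, 1)
WINDOW_END = date(2022, 3, 31)

def matches_search(text, published):
    """True if the text contains both terms and the date falls inside the window."""
    has_terms = bool(AUTISM_TERM.search(text)) and bool(AUDITORY_TERM.search(text))
    return has_terms and WINDOW_START <= published <= WINDOW_END

# Hypothetical records (title, publication date), for illustration only.
records = [
    ("Auditory attention in autistic children", date(2018, 5, 1)),
    ("Auditory attention in autistic children", date(2014, 12, 31)),
    ("Visual search in autism", date(2019, 2, 10)),
]
screened = [matches_search(title, published) for title, published in records]
```

Only the first hypothetical record passes: the second falls before the window and the third lacks the term “auditory”. Database search engines apply their own indexing rules, so this sketch only approximates the actual queries.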

Study design

Studies were eligible if they consisted of original and reported data, with comparison group design, written in English and published in peer-reviewed journals. Conference abstracts, books and documents, editorials, letters, pilot studies, case studies, reviews, and meta-analyses were excluded.

Participants

Only studies with human participants with a clear diagnosis of ASD were considered. To avoid confounding results, studies in which ASD participants were also diagnosed with other symptomatology and/or disorders were excluded: epilepsy, Williams Syndrome, ADHD, Rett Syndrome, Angelman Syndrome, Fragile X Syndrome, etc. Participants diagnosed with ASD in early childhood but without current ASD symptoms, or participants whose diagnosis was based on the DSM-IV without other assessments validating the diagnosis (e.g., ICD-11 and ADOS), were also excluded. No restrictions were placed on age, gender, race, ethnicity, or socioeconomic group.

Outcomes

Studies that did not include relevant outcome data were excluded (e.g., ASD parents’ symptomatology; studies focused on visual processing that used auditory cues in the task that are not referred to in the results; studies focused on pilot training or intervention programs).

Results and critical discussion

The tools most often used to confirm ASD diagnosis in the studies included in this review were the Short Sensory Profile (SSP) (Dunn 1999), the Childhood Autism Rating Scale (CARS) (Schopler et al. 2010), the Autism Diagnostic Observation Schedule, Second Edition (ADOS-2) (Lord et al. 2012), and the Peabody test (Dunn and Dunn 1965). To estimate global intellectual ability (full-scale IQ), the most frequently used tests were the Wechsler Adult Intelligence Scale or the Wechsler Intelligence Scale for Children (Wechsler and Kodama 1949), and Raven’s Colored Progressive Matrices Test (Measso et al. 1993). Although there is no official diagnosis that describes ASD as low or high functioning, some papers identified certain ASD groups as having high-functioning autism (usually subjects with a mean IQ higher than 85).

Integrity of cognitive function in auditory-related tasks

Cognitive function can be assessed by mental processes such as perception, attention, memory, decision-making, or language comprehension, and in the domain of social cognition (e.g., theory of mind, social and emotional processing). People with ASD have a profile of cognitive strengths and weaknesses, demonstrated by different levels of deficits in social and non-social cognition patterns. To understand how auditory input might influence cognitive function in ASD, it is useful to assess cognitive integrity at several domains, while performing auditory-related tasks.

Attention, detection, and discrimination

Communication in everyday life depends crucially on the ability to detect stimuli and dynamically shift attention between competing auditory streams. We use attention to direct our perceptual systems toward certain stimuli for further processing. Difficulties in attention processes can directly affect social interaction and communication abilities. When compared to neurotypical controls, individuals with ASD seem to have poorer performance in many auditory attention tasks: divided attention (ability to attend to two or more stimuli at the same time), sustained attention (ability to attend to a stimulus over longer periods), selective attention (ability to ignore unattended details of a stimulus), and spatial attention (selecting a stimulus based on its spatial location). The main findings are summarized in Table 1.

Low-level auditory discrimination ability seems to vary widely within ASD. When different paradigms were compared, no differences were found for adults with ASD regarding selective attention (maintain vs switch tasks) (Emmons et al. 2022). However, infants and children with ASD presented poorer performances for sustained and control attention, attentional disengagement, and reorienting (Keehn et al. 2021), when compared with typically developing peers (TD). Spatial attention, a key component of orienting to social sounds, was measured by having participants attend to target sounds at central or peripheral locations while ignoring nearby sounds (Soskey et al. 2017). Adults diagnosed with ASD show diffuse auditory spatial attention independently of stimulus complexity: simple tones, speech sounds (vowels), and complex non-speech sounds (Soskey et al. 2017). Interestingly, children with ASD show more diffuse auditory spatial attention, indicated by increased responding to sound at adjacent non-target locations (Soskey et al. 2017). Subjects with ASD also seem to be less sensitive to global interference, or mixed cues, when compared with just one cue (local targets processed slower due to the presence of inconsistent global information) (Foster et al. 2016).

Responses to sensory stimuli: visual versus auditory—distinctive multisensory integration

Information from different sensory modalities, such as auditory and visual stimuli, can be easily integrated simultaneously by the nervous system. The visual sensory system of ASD individuals seems to be similar to TD peers, as opposed to their auditory system (Little et al. 2018). Different sensory modalities combined can create multisensory facilitation. As an example, speech perception is boosted when a listener can see the mouth of a speaker and integrate auditory and visual speech information. However, multisensory integration (MSI) is thought to be distinctive in ASD (Ainsworth et al. 2020) (Table 2), with temporal processing deficits that are not generalized across multiple sensory domains (Ganesan et al. 2016).

Low-level perceptual processes

An experimental design based on Miller’s race model (Miller 1982) was tested by assessing the accuracy and reaction times of unisensory and bisensory (visual and auditory) trials and by comparing children with ASD with typically developing peers (TD) as a control group (Stewart et al. 2016). Findings did not support impaired bisensory processing for simple non-verbal stimuli in high-functioning children with ASD (Stewart et al. 2016). Reduced bisensory facilitation was found for both ASD and TD groups, suggesting intact low-level audiovisual integration. Another study assessed the reaction time of target stimuli detection task (auditory, 3500 Hz tone; visual, white disk ‘flash’; and audiovisual, simultaneous tone and flash) by comparing younger (age 14 or younger) and older participants (age 15 and older) (Ainsworth et al. 2020). The authors found greater multisensory reaction time facilitation for neurotypical (NT) adults, increased for older participants, and reduced multisensory facilitation for ASD, both in younger and older participants.
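Miller's race model is usually tested through an inequality: at every time t, the probability of having responded to a bisensory stimulus should not exceed the sum of the two unisensory probabilities, P(RT_AV ≤ t) ≤ P(RT_A ≤ t) + P(RT_V ≤ t). Bisensory responses that are faster than this bound are taken as evidence of integration. The following is a minimal sketch of that check, not the analysis pipeline of the reviewed studies; the function names and reaction times are hypothetical.

```python
from bisect import bisect_right

def ecdf(samples, t):
    """Empirical P(RT <= t) for a list of reaction times in ms."""
    ordered = sorted(samples)
    return bisect_right(ordered, t) / len(ordered)

def race_model_violation(rt_a, rt_v, rt_av, t):
    """Return P(RT_AV <= t) minus the race-model bound at time t.

    Bound: min(1, P(RT_A <= t) + P(RT_V <= t)).
    A positive value means bisensory responses are faster than the bound allows.
    """
    bound = min(1.0, ecdf(rt_a, t) + ecdf(rt_v, t))
    return ecdf(rt_av, t) - bound

# Hypothetical reaction times in ms (illustration only, not study data).
rt_auditory = [320, 340, 360, 380, 400]
rt_visual = [330, 350, 370, 390, 410]
rt_audiovisual = [250, 260, 270, 300, 340]

violation = race_model_violation(rt_auditory, rt_visual, rt_audiovisual, t=300)
```

In practice the inequality is evaluated at several time points or quantiles and tested statistically across participants; the single time point here only illustrates the comparison.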

High-level perceptual processes

Audiovisual integration of basic speech and object stimuli was compared in children and adolescents diagnosed with ASD (Smith et al. 2017). To measure audiovisual perception, a temporal window of integration was established, where individuals identify which video (temporally aligned or not) matched the auditory stimuli. Results showed similar tolerance of asynchrony for the simple speech and object stimuli for controls, while ASD adolescents showed decreased tolerance of asynchrony for speech stimuli. These results were associated with higher levels of symptom severity (Smith et al. 2017). A similar result was found for instantaneous adaptation to audiovisual asynchrony in ASD adults (Turi et al. 2016). This suggests that a reduced adaptation effect in ASD individuals, together with poorer multisensory temporal acuity, may hinder speech comprehension and consequently affect social communication.

Social interaction implies attentional shift, such as joint attention (when a person intentionally focuses attention on another person). A study tested whether impairments in joint attention were influenced by self-relevant processing. Results showed that gaze-triggered attention is influenced by self-relevant processing and symptom severity in ASD individuals, with reduced cueing effect to voice compared to tone targets (Zhao et al. 2019).

The stimulus presentation modality can lead to differences in figurative language comprehension, even in high-verbal ASD individuals (matched with TD peers on intelligence and language level) (Chahboun et al. 2016). When visual and auditory modalities are compared, individuals with ASD perform worst in the auditory modality, showing difficulties in understanding culturally based expressions (Chahboun et al. 2016). Greater difficulties in language processing seem to be correlated with higher auditory gap detection thresholds (the minimum interval between sequential stimuli needed for individuals to perceive an interruption between the stimuli) (Foss-Feig et al. 2017).

The influence of noise on cognitive performance

Multisensory facilitation can be highly valuable, especially in noisy environments. A diminished capacity to integrate sensory information across modalities can potentially contribute to impaired social communication.

Cognitive performance in ASD was assessed with experimental manipulation of noise (quiet and 75 dB gated broadband noise) adding different levels of task difficulty (easier and harder) (Keith et al. 2019). Results show that for NT adolescents, it is easier to adapt to the effect of noise. In contrast, the ASD group shows a detrimental effect of noise and increased arousal in the harder condition (Keith et al. 2019). Children with ASD who attend more time to the stimulus, such as looking longer to the speaker’s face, show better listening performance (Newman et al. 2021). When different levels of signal-to-noise ratio (SNR) are compared, ASD and NT groups exhibited greater benefit at low SNRs relative to high SNRs in phoneme recognition tasks (Stevenson et al. 2017). The ASD group shows a reduced performance in both auditory and audiovisual modalities for whole-word recognition tasks with high SNRs (Stevenson et al. 2017), when compared with TD peers. High-functioning ASD adults show a speech comprehension rate of nearly 100% in the absence of noise, similar to IQ-matched controls (Piatti et al. 2021; Dwyer et al. 2021). However, under noise conditions, the ASD group presents worse speech comprehension and neural over-responsiveness (Piatti et al. 2021; Dwyer et al. 2021). Such results suggest that noise may increase the threshold needed to extract meaningful information from sensory inputs, affecting speech comprehension.
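For readers less familiar with the signal-to-noise ratio (SNR) used in these speech-in-noise tasks, the sketch below shows the standard decibel definition based on root-mean-square (RMS) amplitudes. The function name and the example values are hypothetical and do not correspond to the stimuli of any reviewed study.

```python
import math

def snr_db(signal_rms, noise_rms):
    """SNR in decibels from RMS amplitudes: 20 * log10(signal / noise)."""
    if signal_rms <= 0 or noise_rms <= 0:
        raise ValueError("RMS amplitudes must be positive")
    return 20.0 * math.log10(signal_rms / noise_rms)

# Hypothetical amplitudes in arbitrary units (illustration only).
equal_levels = snr_db(1.0, 1.0)     # speech and noise at the same level
speech_louder = snr_db(10.0, 1.0)   # speech amplitude ten times the noise
speech_quieter = snr_db(1.0, 10.0)  # noise amplitude ten times the speech
```

An SNR of 0 dB means speech and noise have equal level; negative values mean the noise is more intense than the speech, which corresponds to the low-SNR (harder) listening conditions.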

Increased auditory perceptual ability: differences in auditory stimuli detection and discrimination

Regarding auditory perceptual capacity, there is a wide variety of experimental designs, paradigms, and tested parameters, yielding different results (Table 3). When ASD and TD individuals were compared, significant differences were found in the ASD group regarding several acoustic parameters, such as deficits in the intensity or loudness of stimuli, higher auditory duration discrimination threshold of stimuli, and enhanced memory for vocal melodies (Kargas and Lo 2015; Isaksson et al. 2018; Weiss et al. 2021). Musical and vocal timbre perception seems to be intact for older ASD participants (Schelinski et al. 2016), with one study showing decreased musical ability, related to deficits in working memory and to hyperactivity/inattention, in young ASD children (Sota et al. 2018).

One of the common parameters used to test auditory perceptual capacity is pitch detection, where pitch is the degree of highness or lowness of a tone. Studies have shown a heightened pitch detection in the ASD group, for expected and unexpected sounds (Remington and Fairnie 2017). Regarding the developmental trajectory of pitch perception, findings reveal enhanced pitch discrimination in childhood with stability across development in ASD (Mayer et al. 2014). Interestingly, this low-level auditory ability seems to vary widely within ASD, being related to IQ level and severity of symptoms (Kargas and Lo 2015). Adults diagnosed with ASD show superior pitch perception associated with sensory deficits (Mayer et al. 2014). Individual differences in low-level pitch discrimination tasks can predict performance on the higher level global–local tasks (Germain et al. 2018). Results seem to vary between detection and discrimination tasks across studies. A discrimination task assesses the ability to detect the presence of a difference between two or more stimuli. Interestingly, no differences were found between low and higher level pitch processing (Chowdhury et al. 2017) when ASD children were matched in terms of IQ levels and verbal and non-verbal cognitive abilities, as measured by WASI subtests (Wechsler and Kodama 1949). For ASD adults without intellectual impairment, when participants were asked to identify modulation-depth differences (e.g., − 3 dB), no differences were found in auditory modulation–depth discrimination tasks (Haigh et al. 2016). ASD adults showed difficulties in discriminating, learning, and recognizing unfamiliar voices (particularly pronounced for learning novel voices) (Schelinski et al. 2016). These results may allow us to speculate that an increased auditory capacity, such as heightened pitch detection, may lead to better performance at detection or discrimination tasks, but may also entail a sensory overload, greatly enhancing the difficulty of the task for different stimuli parameters.

Auditory processing and language-related impairments

Atypical sound perception and auditory processing deficits may underlie language and learning difficulties in ASD, impairing social communication skills. Studies have found that ASD patients tend to display worse performance with whispered speech than with normal speech (Venker et al. 2019; Georgiou 2020), and several differences have been reported in terms of language processing and speech perception (Table 4).

The integration of information for successful language processing and speech comprehension relies on precise and accurate temporal integration of auditory and visual cues. Children with ASD seem to possess a wider window of audiovisual temporal integration (Stevenson et al. 2017; Noel et al. 2017), and higher auditory gap detection thresholds (Foss-Feig et al. 2017). Deficits at the level of temporal integration may contribute to impaired speech perception. Studies of auditory processing and language paradigms show that language processing can be facilitated in children with ASD using contextual references such as semantically-constrained verbs compared to neutral verbs (Venker et al. 2019).

Language components, such as phonemes, syntax, and context, work together with features to create meaningful communication among individuals. All languages use pitch and contour to carry information about emotions and to communicate non-verbally. The way we say something affects its meaning, just by using different emphasis and intonation. Phonological use of pitch dissimilarities is distinct between tone and non-tone languages at several levels of their phonological hierarchy (prosodic word, phonological phrase, intonational phrase, and utterance tiers) (Beckman and Pierrehumbert 1986). In tone languages, tones are associated with lexical meaning to distinguish words. English, as a non-tone language, uses contrastive pitch specifications at a segmental level, to express syntactic, discourse, grammatical, and attitudinal functions. A tone language, like Mandarin, uses contrastive pitch specifications at every level of the phonological hierarchy (Best 2019). Pitch processing was assessed in individuals who speak Mandarin, by testing melodic contour and speech intonation (Jiang et al. 2015). ASD individuals presented superior melodic contour identification but comparable contour discrimination, and when compared with controls, ASD individuals performed worse on both identification and discrimination of speech intonation (Jiang et al. 2015), suggesting a differential pitch processing in music that does not compensate for speech intonation perception deficits.

Auditory processing and cognitive function assessment: EEG and ERP

Many studies focusing on language processing use electroencephalography (EEG) to measure brain activity in real time. EEG signals reflect electrical activity produced by the brain (brain waves). An approach known as event-related potential (ERP) uses EEG activity that is time-locked to ongoing sensory, motor, or cognitive events, to help identify and classify perceptual, memory, and linguistic operations (Sur and Sinha 2009). To avoid confounding effects in the interpretation of ERP results, most studies only include children with normal or corrected-to-normal vision, normal hearing, and absence of genetic or neurological disorders, history of seizures, or past head injury. To check for hearing disabilities, many studies also perform a hearing screening with pure-tone audiometry at 20 dB. However, there is a wide variation regarding the inclusion criteria of participants (Table 5).

Event-related potential waveforms have a series of positive and negative voltage deflections, referred to by a letter indicating polarity (N, negative; P, positive) and a number indicating their latency in milliseconds (Luck and Kappenman 2012). As an example, N100 (also known as N1) indicates a negative deflection between 75 and 130 ms, usually associated with pre-attentive perceptual processing, followed by P200, associated with stimulus detection (150–275 ms after stimulus presentation) (Key et al. 2005). Other components will be addressed in this review, such as P300 (P3; stimulus categorization and memory updating), N400 (semantics), and P600 (syntactic processing). Mismatch negativity (MMN) is the negative component of a waveform obtained by subtracting event-related potential responses to a frequent stimulus (standard) from those to a rare stimulus (deviant).
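The subtraction that defines the MMN can be sketched as follows. This is a minimal illustration, assuming averaged ERPs sampled at the same time points; the 100–250 ms search window, the function names, and the voltage values are assumptions made for the example and are not taken from the reviewed studies.

```python
def difference_wave(deviant_erp, standard_erp):
    """Deviant-minus-standard difference wave, sample by sample (microvolts)."""
    if len(deviant_erp) != len(standard_erp):
        raise ValueError("ERPs must have the same number of samples")
    return [d - s for d, s in zip(deviant_erp, standard_erp)]

def mmn_peak(diff_wave, times_ms, window=(100, 250)):
    """Most negative point of the difference wave inside a latency window.

    The default window is an assumption for illustration; studies choose
    their own windows. Returns (amplitude, latency_ms).
    """
    candidates = [(v, t) for v, t in zip(diff_wave, times_ms)
                  if window[0] <= t <= window[1]]
    if not candidates:
        raise ValueError("no samples inside the window")
    return min(candidates)

# Hypothetical averaged ERPs in microvolts (illustration only, not study data).
times_ms = [0, 50, 100, 150, 200, 250, 300]
standard = [0.0, 0.5, -1.0, -0.5, 0.2, 0.4, 0.1]
deviant = [0.0, 0.4, -1.5, -2.5, -1.0, 0.3, 0.2]

diff = difference_wave(deviant, standard)
amplitude, latency = mmn_peak(diff, times_ms)
```

With these hypothetical values the most negative deflection of the difference wave falls at 150 ms. A reduced MMN amplitude, as reported for some ASD groups, would appear as a less negative peak in this difference wave.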

Some ASD studies have focused on early ERP components (Table 6), mainly showing reduced P1 latencies and amplitude (Patel et al. 2019; Arnett et al. 2018; Bidet-Caulet et al. 2017). Looking particularly at patterns of auditory habituation, data suggest reduced habituation for ASD children in the P1 component and a decrease in MMN amplitude (Ruiz-Martínez et al. 2019). Pronounced habituation slopes have been observed for neurotypical subjects, with the greatest difference at channel Fp1 and in the frontal and fronto-central electrodes (Jamal et al. 2020). For neurotypical subjects, P1 is stronger in the initial section of the stimulus sequence, showing a gradual reduction in ERP amplitude over time (habituation) (Jamal et al. 2020). On the other hand, the ASD group shows an absence of reduction between the first and last ERP, or even a positive slope toward the end of the experiment (reduced habituation) (Jamal et al. 2020). Regarding temporal processing with gap detection tasks, when compared with TD peers, ASD children with intact cognitive skills present reduced P2 amplitude (Foss-Feig et al. 2018). The P2 component is associated with attention and stimulus classification, suggesting that the presence of a gap enhances the difficulty of primary level detection, and subsequent perceptual processes may fail to engage. In speech-in-noise tasks, high-functioning ASD adults present higher P2 amplitudes (Borgolte et al. 2021). These results suggest that the P2 component can be affected by the audiovisual process and specificities of both stimuli and condition. Early MMN (around 120 ms) shows enlarged responses only for pure-tone context, with no other modulations dependent on action sound context (Piatti et al. 2021). Individual differences in auditory ERPs with four different loudness intensities (50, 60, 70, 80 dB SPL) (Dwyer et al. 2020) were tested using hierarchical clustering analysis based on ERP responses. It was possible to verify a pattern of linear increases in response strength accompanied by a disproportionately strong response to 70 dB stimuli for ASD young children, correlated with auditory distractibility (Dwyer et al. 2020). In a similar study, some clusters of ASD individuals presented weak or absent N2 by 60 dB and increased strength with higher intensities (70 or 80 dB) (Dwyer et al. 2021). However, there was an overlap between ASD and TD participants in several clusters (Dwyer et al. 2020, 2021), suggesting that sensory impairments in ASD might be largely accounted for by the wide interindividual variability that exists within the spectrum.
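The habituation pattern described above, a gradual reduction of ERP amplitude across repeated stimuli, is often summarized as a slope. The sketch below fits an ordinary least-squares line to amplitude against trial (or block) index; it is an illustration under that assumption and not the method of the cited studies. The function name and amplitude values are hypothetical.

```python
def habituation_slope(amplitudes):
    """Least-squares slope of amplitude against trial index (microvolts per trial).

    Negative slope: amplitude decreases over repetitions (habituation).
    Zero or positive slope: no reduction (reduced habituation).
    """
    n = len(amplitudes)
    if n < 2:
        raise ValueError("need at least two amplitudes")
    mean_x = (n - 1) / 2
    mean_y = sum(amplitudes) / n
    sxy = sum((i - mean_x) * (a - mean_y) for i, a in enumerate(amplitudes))
    sxx = sum((i - mean_x) ** 2 for i in range(n))
    return sxy / sxx

# Hypothetical P1 amplitudes across four successive blocks (illustration only).
declining_response = [5.0, 4.0, 3.0, 2.0]  # amplitude shrinks with repetition
flat_response = [3.0, 3.0, 3.0, 3.0]       # no change across blocks

slope_declining = habituation_slope(declining_response)
slope_flat = habituation_slope(flat_response)
```

Here the declining series gives a slope of -1 microvolt per block and the flat series a slope of 0, which mirrors the contrast between pronounced habituation slopes and the absence of reduction described in the text.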

A large-scale study tested 133 children and young adults in an auditory oddball task, comparing mismatch negativity and P3a results, as well as temporal patterns of habituation (N1 and P3a) (Hudac et al. 2018). The P3a component is indexed by different levels of attentional orienting. Results showed heightened sensitivity to change to novel sounds in ASD subjects, increased activation upon repeated auditory stimuli, and dynamic ERP differences driven by early sensitivity and prolonged processing (Ruiz-Martínez et al. 2019; Hudac et al. 2018). To test the neural indices of early perceptual and later attentional factors underlying tactile and auditory processing, ASD and age- and non-verbal IQ-matched TD peers were compared (Kadlaskar et al. 2021). Using an oddball paradigm where children watched a silent video while being presented with tactile and auditory stimuli, results showed reduced amplitudes in early ERP responses for auditory stimuli but not tactile stimuli in ASD children. The differences in neural responsivity were associated with social skills (Kadlaskar et al. 2021). Regarding top–down and bottom–up attentional processes, young ASD children with no developmental delay seemed to present more negative MMN voltages and an attenuated response of P3a mean voltages when deviant tones were presented in speech (Piatti et al. 2021). When compared with TD peers, bilingual children with ASD seemed to be less sensitive to lexical stress, with reduced MMN amplitude at the right central–parietal, temporal–parietal, and temporal sites (Zhang et al. 2019). Children with developmental delay did not differ from the control group in the P3a component (Piatti et al. 2021). Compared to adults, there was enhanced bottom–up processing of sensory stimuli in participants with high-functioning autism, with early ERP responses positively correlated to increased sensory sensitivity (Xiaoyue et al. 2017). In ASD children, more positive MMR was observed in the processing of speech pitch height (Zhang et al. 2019), suggesting hypersensitivity to higher frequency tones.

Another relevant feature for auditory processing is prosody, the rhythmic and intonational aspect of language. Event-related potential (ERP) studies show preserved processing of musical cues in ASD individuals, but with prosodic impairments (Depriest et al. 2017). Differences in prosody such as intonation are highly relevant for language-related impairments in ASD with a significant impact on communication. To test prosodic processing, many studies record brain responses to neutral and emotional prosodic deviances reflecting change detection (MMN) and orientation of attention toward change (P3a).
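As a rough illustration of how a change-detection response such as the MMN is commonly quantified, the sketch below computes a deviant-minus-standard difference wave and locates its most negative point within a search window. The waveforms, the 50 ms sampling step, and the 100–250 ms window are illustrative assumptions, not values taken from the studies reviewed here.

```python
def difference_wave(deviant, standard):
    """Point-by-point deviant-minus-standard difference (same length, in microvolts)."""
    if len(deviant) != len(standard):
        raise ValueError("waveforms must have the same number of samples")
    return [d - s for d, s in zip(deviant, standard)]


def most_negative_peak(wave, times_ms, window_ms):
    """Return (latency_ms, amplitude) of the most negative sample inside window_ms."""
    start, end = window_ms
    candidates = [(amp, t) for amp, t in zip(wave, times_ms) if start <= t <= end]
    if not candidates:
        raise ValueError("no samples fall inside the search window")
    amp, t = min(candidates)
    return t, amp


# Hypothetical averaged ERPs sampled every 50 ms from 0 to 400 ms (not real data).
times_ms = [0, 50, 100, 150, 200, 250, 300, 350, 400]
standard = [0.0, 0.5, -1.0, -0.5, 0.2, 0.4, 0.3, 0.1, 0.0]
deviant = [0.0, 0.5, -1.5, -2.5, -1.0, 0.9, 1.2, 0.4, 0.0]

mmn_wave = difference_wave(deviant, standard)
mmn_latency, mmn_amplitude = most_negative_peak(mmn_wave, times_ms, (100, 250))
print(mmn_latency, round(mmn_amplitude, 2))
```

In this toy example, a group with a "diminished" or "delayed" MMN would show a smaller negative amplitude or a later latency on the same two measures.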

Children with ASD present atypical neural prosody discrimination, with distinct patterns depending on the experimental design. For instance, the P3a component becomes more prominent as the difference between stimuli increases. Studies using sound-discrimination tasks with lexical tone contrasts based on naturally produced words (e.g., a vowel with neutral or emotional prosody such as sadness) show different P3a responses depending on the IQ level of ASD children. A study with high-functioning ASD children shows diminished amplitude of P3a (Lindström et al. 2018). In a study comparing children and adults, low-functioning children revealed a larger P3a compared to controls, with shorter P3a latencies in ASD adults (Charpentier et al. 2018). Regarding change detection, ASD adults presented an earlier MMN (Charpentier et al. 2018). Both results suggest atypical neural prosody discrimination and deficits in pre-attentional orientation toward any change in the auditory environment (Lindström et al. 2018; Charpentier et al. 2018). When vowels and pure tones are compared within tone languages (smaller physical difference between standard and deviants compared to non-tone languages), ASD children still presented diminished response amplitudes and delayed latency of MMN for pure tones, and a smaller P3a for vowels (Huang et al. 2018). At a pre-attentive perceptual processing level, low-functioning ASD children present increased response onset latencies during sustained vowel production, with reduced P1 ERP amplitudes (Patel et al. 2019; Bidet-caulet et al. 2017; Charpentier et al. 2018), lacking neural enhancement in formant-exaggerated speech tasks (Chen et al. 2021). When a non-speech sound is followed by a speech sound, TD children present match/mismatch effects at approximately 600 ms, in contrast to children with ASD (Galilee et al. 2017).
Interestingly, when speech and non-speech sounds are compared between high-functioning ASD children and a TD group matched on age, gender, and non-verbal IQ, ASD children show impaired processing of speech pitch information, but no differences are observed for non-speech sounds (Zhang et al. 2019; Chen et al. 2021). Regarding speech differentiation, there seem to be no differences in voice perception. Low-functioning children with ASD seem to present an atypical response to non-vocal sounds (Bidet-caulet et al. 2017), with atypical consonant differentiation in the 84- to 308-ms period, related to individual differences in non-verbal versus verbal abilities (Key et al. 2016).

There is EEG evidence for altered semantic processing in children with ASD, characterized by delayed processing speed and limited integration with mental representations (Piatti et al. 2021; Distefano et al. 2019). Semantic processing refers to encoding the meaning of a word and relating it to similar words or meanings. During passive and active listening, there is a pre-attentive stage of sound segregation. When individuals attend to auditory stimuli, some ERP components can distinguish the stage of neural processing. The subject's own name (SON) is a unique auditory stimulus for triggering an orienting response. Sounds need to stand out from the background to elicit an orienting response. SON is automatically distinguished from other names without reaching awareness, causing involuntary attention capture (Tateuchi et al. 2015). Compared with TD peers, children with ASD are able to respond selectively to their own name, with greater negativity over frontal regions, reflecting early automatic pre-attentive detection (Thomas et al. 2019). After the pre-attentional level, there is an orienting response that causes a shift of attention, where the auditory system makes use of amplitude and timing cues to differentiate sounds from different spatial locations, giving them context. Although high-functioning adults with ASD do not seem to have a general impairment in auditory object formation, they seem to display alterations in attention-dependent aspects of auditory object formation (P400 missing) (Thomas et al. 2019), as well as disruption of auditory filtering (Huang et al. 2018). These deviations in top–down processing might be linked with deficits in perceptual decision-making.

Some studies highlight the importance of the ability to anticipate upcoming sensory stimuli. ASD adults seem to be less flexible than controls in the modulation of their local predictions (Goris et al. 2018), affecting the functioning of global top–down expectations. Adults diagnosed with ASD present deficits in predictive coding of sounds and words in an action–sound context, with no deficits in predictive coding for neutral, non-action sounds (Grisoni et al. 2019; Laarhoven et al. 2020). When varied auditory rhythms were used, both ASD and TD groups presented decreased MMN, with no difference in error prediction (Knight et al. 2020).

There are some interesting data regarding cognitive function lateralization in children with ASD. When comparing ERP responses for speech and non-speech sounds, speech-related events were only detected over temporal electrodes in the left hemisphere (Galilee et al. 2017), compared to bilateral N330 match/mismatch responses in neurotypical children (Galilee et al. 2017). Regarding ASD children with impairments specifically at the language level, results show hypersensitivity to sounds with decreased MMN latency in the left hemisphere (Green et al. 2020). Older children with non-verbal IQ impairments presented bilateral attenuation in a word-learning task, as opposed to the attenuated P1 amplitude present in the left hemisphere of TD children (Arnett et al. 2018). Young children with high-functioning ASD seem to fail to activate right-hemisphere cognitive mechanisms, probably associated with social or emotional features of speech detection.

Increased auditory perceptual capacity: impact on social and emotion perception

Impairments at the level of emotional perception can be related to internal distractions or overload of sensory information that hinder social communication. Studies using sympathetic skin response show that children diagnosed with ASD exhibit delayed habituation to auditory stimuli (Bharath et al. 2021). The predominant state of sympathetic nerves can affect the predisposition to filter and perceive cues, and also influence anxiety levels or valence of social cues. Individuals with ASD seem to present higher levels of perceptual capacity, which might be correlated with higher levels of sensory sensitivity (processing more information at any one time) (Brinkert and Remington 2020).

Emotional expressiveness is highly important for emotional perception, carrying non-verbal information that helps forming social judgments, and predisposing individuals for further social engagement. A study focusing on emotion recognition in intellectually disabled children with ASD found that these children displayed poorer performance in recognizing surprise and anger in comparison to happiness and sadness (Golan et al. 2018). A different study tested emotion performance by comparing ASD children with siblings without ASD diagnosis and TD peers (Waddington et al. 2018). Interestingly, the authors found not only poorer emotion performance in terms of speed and accuracy in the ASD group, but also poorer performance in the ASD sibling group compared to TD controls (Waddington et al. 2018), suggesting a possible contextual influence in emotional perception.

Children with ASD seem to present lower autonomic reactivity to the human voice, with impairments in vocal emotion recognition tests, albeit with normal pro-social functioning (social awareness and social motivation) (Anna et al. 2015; Schelinski and Kriegstein 2019). This interesting result raises the question of whether social impairments in ASD could be a consequence of hyperarousal from sensory overload.

Regarding other assessment paradigms, children with ASD seem to struggle with face-to-face matching, when compared to voice-face and word-face combinations (Golan et al. 2018), with worse performance in noisy environments (Newman et al. 2021). The ability to integrate facial-voice cues seems to be correlated with socialization skills in children with ASD (Golan et al. 2018).

Together, these studies highlight the importance of reappraising cognitive function in light of the sensory systems, such as the auditory system: the Peripheral Auditory System, where the auditory pathway starts, as well as the Central Auditory Nervous System, where all auditory information is integrated and processed.

Assessing the integrity of peripheral auditory system

Sounds are produced by acoustic waves that reach the external auditory canal and travel to the eardrum (tympanic membrane), causing it to vibrate at specific frequencies (the typical hearing frequency range in humans is 20 to 20,000 Hertz, cycles per second) (Peterson et al. 2022). The vibration of the tympanic membrane causes vibration of tiny bones in the middle ear that amplify the signal and send it to the cochlea. The signal travels to fluid-filled sections of the cochlea, the scala vestibuli and the scala tympani, and oscillations of these sections transmit energy to the scala media, causing shifts between the tectorial and basilar membrane. The basilar membrane contains receptor hair cells that can be either activated or deactivated by shifts that open or close potassium channels. Cells near the base of the cochlea respond to high frequencies, with increased flexibility to respond to lower frequencies toward the apex of the cochlea (Peterson et al. 2022; Zhao and Müller 2015; Delacroix and Malgrange 2015). Inner hair cells are responsible for the majority of auditory processing. Outer hair cells synapse only on 10% of the spiral ganglion neurons (Delacroix and Malgrange 2015). Neurons within the spiral ganglion mostly synapse at the base of hair cells and project to the auditory nerve (cochlear nerve). The cochlear nerve then sends information up to the cerebral cortex through a series of nuclei in the brainstem and thalamus: the cochlear nuclei (medulla), superior olivary complex (pons), lateral lemniscus (pons), inferior colliculus (midbrain), and medial geniculate nucleus (thalamus) (Felix et al. 2018). Although the primary auditory pathway mostly ascends to the cortex through the contralateral side of the brainstem, all levels of the auditory system have crossing fibers, receiving and processing information from both the ipsilateral and contralateral sides (Peterson et al. 2022).

The auditory brainstem response (ABR) in ASD

Reported cognitive deficits in ASD regarding speed and accuracy of sound stimuli assessment (Distefano et al. 2019; Waddington et al. 2018) might be partly explained by impairments in impulse initiation at the cochlear nerve, or by impairments in the transmission and conduction of signals along the brainstem, as occurs in demyelinating diseases. One tool used to measure neural functionality of the auditory brainstem is the auditory brainstem response (ABR) (Celesia 2015; Jewett and Williston 1971). Participants usually undergo hearing screenings to exclude hearing disabilities, such as lesions below or within the cochlear nuclei. Of note, few experimental studies since 2015 have assessed the integrity of the peripheral auditory system in ASD.

The auditory brainstem response (ABR) measures electrical signals associated with the propagation of sound information through the auditory nerve to higher auditory centers, after an acoustic stimulus. Major alterations in neuronal firing along this pathway can be detected as changes in auditory brain response ([99]). Complementary to the use of a short click as a classical acoustic stimulus, ABR is also often performed with complex sounds, such as syllables, which incorporate an array of complexity more similar to speech. Two major categories of ABR stimulus are detailed below: click-ABR and speech-ABR.

Most ABR studies report differences in auditory brainstem processing for ASD individuals when compared with control groups (Table 7). Results are discussed taking into consideration the age of participants and different time-points, their cognitive pattern, type of stimuli, and experimental design.

Click-evoked brainstem responses (click-ABR) in ASD

In the first 10 ms after a click, click-ABR produces five to seven waves (waves I–VII). These wave peaks reflect the propagation of electrical activity as it travels along the auditory pathway, providing information in terms of latency (speed of transmission), amplitude of the peaks (interpreted as the number of neurons firing), inter-peak latency (time between peaks), and interaural latency (correlation between left and right ear) (Musiek and Lee 1995).
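To make these measures concrete, the sketch below derives inter-peak latencies and a left/right comparison from a set of peak latencies. The latency values are hypothetical, and the interaural measure is simplified here to an absolute left/right latency difference for a single wave, which is an assumption of this example rather than the procedure of any study cited in this review.

```python
def interpeak_latency(latencies_ms, first, second):
    """Time between two ABR waves (e.g. waves I and V), in milliseconds."""
    return latencies_ms[second] - latencies_ms[first]


def interaural_difference(left_ms, right_ms, wave):
    """Absolute left/right difference in the latency of one wave, in milliseconds."""
    return abs(left_ms[wave] - right_ms[wave])


# Hypothetical peak latencies (ms) for one participant; not data from any study.
left_ear = {"I": 1.6, "III": 3.8, "V": 5.7}
right_ear = {"I": 1.6, "III": 3.7, "V": 5.6}

ipl_I_V = interpeak_latency(left_ear, "I", "V")
ipl_III_V = interpeak_latency(left_ear, "III", "V")
ild_V = interaural_difference(left_ear, right_ear, "V")
print(round(ipl_I_V, 2), round(ipl_III_V, 2), round(ild_V, 2))
```

A prolonged I–V or III–V interval in such a table is the kind of result reported as a longer inter-peak latency.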

Some studies with click-ABR (Table 8) show longer latencies for ASD participants in wave V, and longer latency of inter-peak intervals in waves I–V and III–V (Tecoulesco et al. 2020; Jones et al. 2020). However, these experiments were done with toddlers. Interestingly, a recent study tested older children in a click-ABR paradigm (Claesdotter-Knutsson et al. 2019). Results do not show differences in ABR latency, but reveal a higher amplitude of wave III in ASD, suggesting functional alterations at the pons region (Claesdotter-Knutsson et al. 2019). This experiment used binaural sound exposure, showing a higher degree of correlation between left and right ear in the ASD group. A different study found absence of asymmetry in the latency of wave V between the right and left sides, both in ASD and control groups (ElMoazen et al. 2020). The authors further report a reduced amplitude in the binaural interaction component in younger children with ASD, which might reflect reduced binaural interaction at younger ages not related to artificial latency shift (ElMoazen et al. 2020). A recent study looking at newborns later diagnosed with ASD at the age of 3–5 years showed ABR latency delays (Delgado et al. 2021), suggesting the emergence of differences in acoustic processing, at the brainstem level, right after birth. A more recent study tested adults with ASD and found no differences in absolute ABR wave latencies (Fujihira et al. 2021). The authors showed a shorter summating potential (SP), suggesting normal auditory processing in the brainstem for ASD adults (Fujihira et al. 2021).

Speech-evoked brainstem responses (speech-ABR) in ASD

The most common speech-ABR stimulus used is the universal syllable /da/. After the stimulus, a subcortical response emerges as an ABR waveform of seven peaks (V, A, C, D, E, F, O). Peaks can reflect either a change in the stimulus (i.e., onset, offset, or transition) or the periodicity of the stimulus. There are two main components in speech-ABR: the onset response (waves V and A) and the frequency-following response (FFR; waves D, E, and F). Wave C represents the consonant–vowel transition, while wave O represents the end of the vowel. Analysis of FFR includes measurements of response timing (peaks), magnitude (robustness of encoding of specific frequencies), and fidelity (comparison of FFR consistency across sessions, which gives an index of how stable the FFR is from trial to trial (Krizman and Kraus 2019)).
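One possible way to express trial-to-trial stability as a single number is a split-half correlation: the trials are divided into two sub-averages, and the two averaged waveforms are correlated. The sketch below is a minimal illustration on synthetic sinusoidal "trials"; the 100 Hz frequency, 2 kHz sampling rate, and phase drift are arbitrary assumptions, and the exact consistency metric used in the cited studies may differ.

```python
import math


def mean_waveform(trials):
    """Average a list of equal-length trials sample by sample."""
    n = len(trials)
    return [sum(samples) / n for samples in zip(*trials)]


def pearson_r(x, y):
    """Pearson correlation between two equal-length sequences."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


def split_half_consistency(trials):
    """Correlate the average of even-indexed trials with that of odd-indexed trials."""
    return pearson_r(mean_waveform(trials[0::2]), mean_waveform(trials[1::2]))


def make_trials(n_trials, phase_step):
    """Synthetic trials: a 100 Hz sinusoid sampled at 2 kHz (40 samples per trial).

    phase_step = 0 gives identical trials; a non-zero value makes the phase
    drift from one trial to the next, mimicking unstable neural timing.
    """
    trials = []
    for k in range(n_trials):
        phase = phase_step * k
        trials.append([math.sin(2 * math.pi * 100 * t / 2000 + phase) for t in range(40)])
    return trials


stable = split_half_consistency(make_trials(20, phase_step=0.0))
jittered = split_half_consistency(make_trials(20, phase_step=1.0))
print(round(stable, 3), round(jittered, 3))
```

The identical trials yield a correlation close to 1, whereas the drifting trials yield a clearly lower value, which is the sense in which a response is described as less stable.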

Similarly to click-ABR, speech-ABR studies (Table 8) also show longer latencies for ASD participants, including high-functioning ASD individuals (Ramezani et al. 2019). Recently, a longitudinal study included two assessment time-points (interval of 9.68 months) to look for longitudinal changes in speech-ABR (Chen et al. 2019). No differences were found in the TD group, whereas differences were found in the ASD group (shorter wave V latency and increased wave A and C amplitudes), suggesting an age effect for ASD (Chen et al. 2019). Another study had two assessment time-points to investigate neural response stability, a metric measured by trial-by-trial consistency in the neural encoding of acoustic stimuli (Tecoulesco et al. 2020). Results showed that children with a more stable neural encoding of speech sounds, in both the ASD and TD groups, demonstrated better language processing in a phonetic discrimination task (Tecoulesco et al. 2020). In a different study, individuals' performance was assessed through structured and repetitive listening exercises with increasing difficulty levels (Ramezani et al. 2021). Results showed gradual improvement in ASD individuals’ temporal auditory skills (Ramezani et al. 2021).

A relevant association has been found between sensory overload and behavioral measures in ASD, but without a relevant association with auditory processing (Font-Alaminos et al. 2020). For children with ASD, the FFR signal has been described as unstable across trials (Otto-Meyer et al. 2018), tending to increase with stimulus repetition (Font-Alaminos et al. 2020), which suggests unstable neural tracking at the level of the subcortical auditory system (Table 9). The developmental pattern of auditory information processing was assessed in preschool children with ASD (Chen et al. 2019). Results show a positive correlation between wave A and the Gesell Developmental Diagnosis Schedules (GDDS) language score (Chen et al. 2019). According to the authors, the latency between the V and A complex could suggest a weakened synchronization of the neural response at the beginning of the speech stimulus (Chen et al. 2019). A different study also revealed longer latencies of the transient FFR components (which include ABR waves D, E, and F) in the ASD group (Ramezani et al. 2019). These results suggest a possible disturbance in brain pathways implicated in FFR generation [the direct pathway to the contra-lateral IC via the lateral lemniscus, and the ipsilateral pathway via superior olivary complex and the lateral lemniscus (Ramezani et al. 2019)], further raising the question of whether this could be a potential compensatory mechanism in ASD.

Otoacoustic emission (OAE) studies in ASD

The integrity of the peripheral auditory system can also be evaluated using otoacoustic emissions (OAEs). Evoked otoacoustic emissions are produced by healthy ears in response to an acoustic stimulus delivered into a sealed ear canal. The acoustic stimulus causes basilar membrane motion which triggers an electromechanical amplification process by the cochlear outer hair cells, producing a sound that echoes back into the middle ear (otoacoustic emissions). These nearly inaudible emissions are measured using a sensitive microphone and help evaluate normal cochlear function. A study tested a group of children and adolescents with ASD (ASD with Full-Scale IQs higher than 85) with normal audiometric thresholds (Bennetto et al. 2016). Results showed that children with ASD presented reduced OAEs at 1 kHz frequency range, with no differences outside this critical range (at 0.5 and 4–8 kHz regions) (Bennetto et al. 2016), thus suggesting reduced outer hair cell function at 1 kHz. Outer hair cells synapse directly with neurons originating in the nuclei of the superior olivary complex. A morphological post-mortem study of subjects with ASD showed significantly fewer neurons in the Medial Superior Olive (MSO), a specialized nucleus from the superior olivary complex (Mansour and Kulesza 2020). Results also showed that the existing fewer neurons in the MSO are smaller, rounder, and with abnormal dendritic orientations (Mansour and Kulesza 2020). However, the small sample size, variability of post-mortem tissue origin and quality (7 post-mortem samples from drowning, seizure, or other death causes), may hinder conclusions from this study. Previously, a different study found no evidence regarding asymmetrical or reduced middle ear muscle (MEM) reflexes and binaural efferent suppression of transient evoked otoacoustic emissions responses (Bennetto et al. 2016). 
However, a more recent study showed OAE asymmetry, with the medial olivocochlear system being apparently more effective in the right than the left ear in ASD (Aslan et al. 2022).

Assessing the integrity of central auditory nervous system

ASD is a neurodevelopmental disorder with behavior and cognitive traits associated with atypicalities in the central nervous system (CNS). Integrity of the central nervous system can be assessed experimentally by several techniques, such as magnetoencephalography (MEG; Table 10), magnetic resonance imaging (MRI; Table 11), as well as other multimodal tools (Table 12).

Magnetic resonance imaging (MRI) is an imaging technique that is used to assess the anatomy and physiology of brain circuits. Magnetoencephalography (MEG) is a functional neuroimaging technique that detects, records, and analyzes the magnetic fields produced by electrical currents occurring naturally in the brain (Cohen 1972). While EEG records brain electrical fields, MEG records magnetic fields. Similar to EEG and ERPs, MEG signals can be also time-locked to particular events, being called event-related magnetic fields (ERFs). As previously described for N100 (EEG signal), M100 (MEG) refers to a peak signal occurring at a latency of about 100 ms after stimulus onset. Both MEG and EEG are non-invasive methods for recording neural activity providing data with high temporal resolution (measured in milliseconds), thus providing unique information in terms of timing, synchrony, and connectivity of neural activity (Port et al. 2015). Despite the fact that EEG signals might display superimposed sources of activity, EEG is useful to quickly determine how brain activity can change in response to stimuli and to directly detect abnormal activity, having the advantage of being fully or semi-portable with an accessible cost for researchers. MEG has the advantage that the local variations in conductivity of different brain matter do not attenuate the signal, providing more accurate spatial resolution of neural activity than EEG (Landini et al. 2018). Regarding EEG and MEG use in young children, these two techniques offer advantages over some neuroimaging techniques, including fewer physical constraints and the absence of radiation and noise (Port et al. 2015). Still, EEG and MEG have limited spatial resolution, making it difficult to determine the precise location of neuronal activity with confidence. 
In contrast, MRI provides data with good spatial resolution, but lacks a good temporal resolution at the electrophysiological level and cannot provide frequency band discrimination. Functional magnetic resonance imaging (fMRI) uses MRI to measure the oxygenation of blood flowing near active neurons, being a valuable tool for delineating the human neural functional architecture (Cole et al. 2010). Combining EEG/MEG with MRI can increase the spatial resolution of electromagnetic source imaging, while tracing the rapid neural processes and information pathways within the brain (Liu et al. 2006), making them good candidates for multimodal integration.
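The time-locking idea behind ERPs and ERFs can be sketched in a few lines: segments of the recording around each stimulus are cut out and averaged, so that activity unrelated to the stimulus tends to cancel while the stimulus-related response remains. The example below is a simplified illustration on a synthetic signal; the function name `average_epochs`, the event positions, and the response shape are all hypothetical, and real pipelines add filtering, baseline correction, and artifact rejection.

```python
def average_epochs(signal, event_samples, pre, post):
    """Average segments of `signal` time-locked to each event.

    Each epoch spans `pre` samples before the event to `post` samples after it;
    events too close to the edges of the recording are skipped.
    """
    epochs = [
        signal[e - pre : e + post]
        for e in event_samples
        if e - pre >= 0 and e + post <= len(signal)
    ]
    if not epochs:
        raise ValueError("no complete epochs available")
    return [sum(samples) / len(epochs) for samples in zip(*epochs)]


# Synthetic recording: an alternating +1/-1 background (standing in for activity
# unrelated to the stimulus) plus a fixed 3-sample response after each event.
n_samples = 200
events = [20, 61, 100, 141]
response = [2.0, 4.0, 1.0]

signal = [1.0 if i % 2 == 0 else -1.0 for i in range(n_samples)]
for e in events:
    for k, value in enumerate(response):
        signal[e + k] += value

evoked = average_epochs(signal, events, pre=2, post=5)
print(evoked)
```

Because the events fall on both even and odd samples, the background cancels in the average and only the response that is time-locked to the events survives.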

Auditory evoked magnetic fields’ studies in ASD

Results from studies with high-functioning ASD individuals show delayed latencies at M50 and M100 auditory evoked responses, suggesting impairments at early auditory processes in the primary and secondary auditory cortex (Claesdotter-Knutsson et al. 2019; Matsuzaki et al. 2020; Roberts et al. 2019; Port et al. 2016; Edgar et al. 2013). It is thought that the major activity underlying M100 is located in the supratemporal plane, with the superior temporal gyrus (STG) as the primary M50 generator (Edgar et al. 2015). STG results from the ASD group suggest increased pre-stimulus abnormalities across multiple frequencies with an inability to rapidly return to a resting state before the following stimulus (Edgar et al. 2013). Neurotypical individuals present a negative association between age and latency of M50 and M100 (Matsuzaki et al. 2020; Roberts et al. 2020), which indicates a functional decrease with age. Results show a similar pattern for children with ASD regarding M50, but not M100, latencies (Matsuzaki et al. 2020). A group of ASD children and TD peers were compared at two time-points, from approximately 8 to 11 years old, showing similar M100 latency and gamma-band maturation rates in both groups (Port et al. 2016). A study of cascading effects on speech sound processing found lower brain synchronization in the early stage of the M100 component for ASD children (Brennan et al. 2016). A group of younger ASD children, aged approximately 5 years, was assessed in a different study, showing shorter M100 latencies in the left hemisphere (Yoshimura et al. 2021). The M200, considered an endogenous response associated with attention and cognition, seems to have a maximum amplitude around 8 years old, decaying with age. This pattern of age-dependent decrease in neurotypical children was less clear in the ASD group (Edgar et al. 2015), indicating perhaps a maturational delay.

Looking at data across the lifespan from individuals with ASD without intellectual disability, delayed latencies were found above 10 years old versus shorter latencies in younger children, suggesting atypical brain maturation in ASD. Minimally verbal or non-verbal children with ASD (ASD-MVNV) seem to present greater latency delays in M50 and M100 (components associated with language and communication skills) compared to ASD children without intellectual disabilities (Roberts et al. 2019). A similar association with verbal comprehension was found in the left auditory cortex regarding M200 latency response (Matsuzaki et al. 2017; Demopoulos et al. 2017). In auditory vowel-contrast mismatch field experiments (MMF), an association was found between MMF delay and language impairments in children with ASD (Berman et al. 2016). Furthermore, in a study testing pre-attentive discrimination of changes in speech tone, the amplitude of the early MMF component (100–200 ms) seems to be decreased in left temporal auditory areas for ASD children (Yoshimura et al. 2017). This group of ASD children, also diagnosed with speech delay, seems to have increased activity in the left frontal cortex compared to other ASD children without speech delay (Yoshimura et al. 2017). Deficits in auditory discrimination have also been reported in children with ASD, namely bilaterally delayed MMF latencies (Matsuzaki et al. 2019a) as well as rightward lateralization of MMF amplitude (Matsuzaki et al. 2019b) contrasting with the leftward lateralization found in NT children (Matsuzaki et al. 2019a). ASD children with abnormal auditory sensitivity seem to have longer temporal and frontal residual M100/MMF latencies (Matsuzaki et al. 2017). These findings were correlated with the severity of auditory sensitivity in temporal and frontal areas for both hemispheres (Matsuzaki et al. 2017).
Taking these data together, ASD seems to be characterized by atypical neural activity in the auditory cortex, together with impaired auditory discrimination in brain areas related to attention and inhibitory processing. Such findings seem to be highly associated with language and comprehension deficits.

EEG and MEG frequency bands in auditory tasks

Raw EEG and MEG signals can be decomposed into frequency band components. In adults, the typical frequency bands and their approximate spectral boundaries are delta (δ, from 1 to 3 Hz), theta (θ, from 4 to 7 Hz), alpha (α, from 8 to 12 Hz), beta (β, from 13 to 30 Hz), and gamma (γ, from 30 to 100 Hz) (Saby and Marshall 2012). Regarding ASD studies, decreased resting alpha power has been observed in children with ASD (Pierce et al. 2021). Under noisy experimental conditions, ASD children seem to have increased recruitment of neural resources, with reduced beta band top–down modulation (required to mitigate the impact of noise on auditory processing) (Mamashli et al. 2017). Interestingly, in quiet conditions, no differences were found between ASD and TD peers (Mamashli et al. 2017). A different study using sensory distracters (distracters that disrupt the interpretation of social cues) found decreased activation in auditory language and frontal regions in high-functioning youth with ASD (Green et al. 2018).
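As a minimal sketch of what decomposing a signal into frequency bands means, the example below sums the spectral power of a synthetic signal within the approximate adult band boundaries listed above. It uses a naive discrete Fourier transform written out for clarity; the signal, the 256 Hz sampling rate, and the handling of the shared 30 Hz boundary are assumptions of this example, and dedicated signal-processing libraries would normally be used instead.

```python
import math

# Approximate adult band boundaries in Hz, as listed in the text. The text gives
# 30 Hz as both the top of beta and the bottom of gamma; here the first matching
# band wins, so exactly 30 Hz is counted as beta (an arbitrary choice).
BANDS = {
    "delta": (1, 3),
    "theta": (4, 7),
    "alpha": (8, 12),
    "beta": (13, 30),
    "gamma": (30, 100),
}


def band_power(signal, sampling_rate_hz):
    """Sum the DFT power of `signal` within each band (naive O(n^2) transform)."""
    n = len(signal)
    power = {name: 0.0 for name in BANDS}
    for k in range(1, n // 2):
        freq = k * sampling_rate_hz / n
        re = sum(x * math.cos(2 * math.pi * k * i / n) for i, x in enumerate(signal))
        im = sum(x * math.sin(2 * math.pi * k * i / n) for i, x in enumerate(signal))
        for name, (low, high) in BANDS.items():
            if low <= freq <= high:
                power[name] += re * re + im * im
                break
    return power


# Synthetic one-second "recording" at 256 Hz: a strong 10 Hz rhythm plus a weaker 40 Hz one.
fs = 256
signal = [
    math.sin(2 * math.pi * 10 * i / fs) + 0.3 * math.sin(2 * math.pi * 40 * i / fs)
    for i in range(fs)
]
power = band_power(signal, fs)
dominant_band = max(power, key=power.get)
print(dominant_band)
```

A finding such as "decreased resting alpha power" corresponds to a lower value for the alpha entry of such a per-band summary in one group relative to another.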

In auditory habituation studies measuring galvanic skin response, an aversion effect has been reported in ASD, likely due to sensory information overload. Results show consistent patterns of reduced habituation in ASD individuals, without the predicted steady decline that would be expected for habituation experiments. Instead, ASD adults showed a steady increase in the galvanic skin response over the course of the sessions (Gandhi et al. 2021). Regarding phase-lock auditory stimuli, no differences were found in the low gamma frequency range between ASD and TD children around 8 years old. A decrease in low gamma-power was observed in TD subjects around 17 years old but not in the ASD group (Stefano et al. 2019). The atypical gamma-band network in ASD seems to be located around the left ventral central sulcus (vCS) in children around 10 years old (Floris et al. 2016). Older ASD participants showed more pronounced low gamma deficits (Stefano et al. 2019), suggesting an increased background gamma-power that, similar to noise, can affect proper processing of stimuli. Interestingly, a recent study tested ASD children with and without atypical audiovisual behavior and found that only children with atypical audiovisual behavior showed increased theta to low gamma oscillatory power in the bilateral superior temporal sulcus and temporal region (Matsuzaki et al. 2022).

Cortical excitatory–inhibitory balance in ASD

Proper development of the central nervous system requires a fine balance between excitatory and inhibitory (E/I) neurotransmission. This E/I balance seems to be very important for cortical gamma-band activity, given that gamma waves are generated through connections between GABAergic inhibitory interneurons and excitatory pyramidal cells (Stefano et al. 2019; Port et al. 2017). A multimodal imaging study combined MEG, MRI, and GABA magnetic resonance spectroscopy (MRS) to assess physiological mechanisms associated with auditory processing efficiency in high-functioning children/adolescents with ASD. The study found longer M50 latency combined with decreased GABA in the left hemisphere for ASD individuals, suggesting an association between sensory response latency and synaptic activity (Roberts et al. 2020). Similar results were shown in a multimodal study that assessed cortical GABA concentrations and gamma-band coherence in the auditory cortex and superior temporal gyrus. Decreased GABA1/Creatine levels were found in children/adolescents with ASD, without the gamma-band coherence association typically seen in neurotypical subjects (Port et al. 2017). A different study combining EEG and MRS showed reduced glutamine in the temporal–parietal cortex associated with greater hypersensitivity to sensory input (Pierce et al. 2021). A post-mortem study assessed the cytoarchitecture of the anterior superior temporal area (area of Brodmann), involved in auditory processing and social cognition, by quantifying the number and soma volume of pyramidal neurons in the supragranular and infragranular layers (Kim et al. 2015). Results showed no differences between ASD adolescents and adults and an age-matched neurotypical group (Kim et al. 2015). A different study looked at cortical neural inhibition for auditory, visual, and motor stimulation (Murray et al. 2020).
Results showed no increase in the initial transient response in ASD individuals, with widespread changes in stimulus offset responses (Murray et al. 2020). Although results show similar patterns of transient response in ASD and TD groups for all cortical regions, larger fMRI amplitudes were found at later response components, approximately 6–8 s after stimulus presentation (stimulus duration of 20 s) (Murray et al. 2020). These studies suggest cortical excitatory–inhibitory imbalance in areas related to auditory processing in subjects with ASD.

Auditory sensory sensitivities in ASD

Sensory sensitivities can be assessed at three different stages of sensory processing: initial response to the stimuli, habituation, and generalization of response to novel stimuli. Brain imaging studies in individuals with ASD have found auditory discrimination deficits (Matsuzaki et al. 2019a; Abrams et al. 2019), as well as increased neural responsiveness upon repeated stimuli, with larger fMRI response in the auditory cortex that seems specific to temporal patterns of stimulation (Millin et al. 2018). Children with high sensory over-responsivity showed reduced ability to maintain habituation in the amygdala (Green et al. 2019), together with increased resting-state functional connectivity between salience network nodes and brain regions implicated in primary sensory processing and attention (Green et al. 2018). Children with low sensory over-responsivity showed atypical neural response patterns, with increased prefrontal–amygdala regulation across sensory exposure (Green et al. 2019). A different study found intact temporal prediction responses with altered neural entrainment and anticipatory processes in children with ASD (Beker et al. 2021). These results might explain atypical behavioral responses observed in ASD during sensory processing, mediated by top–down regulatory mechanisms.

Differences in response to sound familiarity have also been reported in children with ASD, with reduced activity in right-hemisphere planum polare for unfamiliar voices, reduced activity in a broad extent of fusiform gyrus bilaterally, and less activity in the right-hemisphere posterior hippocampus (Abrams et al. 2019). A different fMRI study compared brain responses to vocal changes with different levels of stimulus saliency (deviancy or novelty) and different emotional content (neutral, angry) (Charpentier et al. 2020). Results show no differences between ASD and neurotypical adults regarding vocal stimuli and novelty processing, independently of emotional content. Brain processing appears typical in both groups, with activation in the superior temporal gyrus, and with larger activation for emotional compared to neutral prosody in the right hemisphere (Charpentier et al. 2020). These results suggest that the processing of emotional cues may be placed at later processing stages, such as insular activation, or at the hippocampus level. Interestingly, a recent study shows altered voice processing in ASD, which seems to be present already at the midbrain level of the auditory pathway (Schelinski et al. 2022).

Deficits in auditory discrimination have also been found in both MEG and brain imaging studies (Ganesan et al. 2016; Claesdotter-Knutsson et al. 2019; Abrams et al. 2019). Individuals diagnosed with ASD seem to have deficits in predicting the offset of standard tones, engaging fewer resources for duration tasks compared with pitch discrimination tasks (Lambrechts et al. 2018). Spectrally complex sounds can trigger two continuous neural MEG responses in the auditory cortex: the auditory steady-state response (ASSR) at the frequency of stimulation, and the sustained deflection of the magnetic field (sustained field).

The ASSR is an oscillatory response phase-locked to the onset of the stimulus, where the frequency of stimulation is represented by the same frequency in the primary auditory cortex (Stroganova et al. 2020). Both ASD and neurotypical children aged 5–6 years seem to show a right-dominant 40 Hz ASSR (Ono et al. 2020). No differences were found regarding neural synchrony at 20 Hz for both ASD and TD children between 5 and 12 years old (Stroganova et al. 2020; Ono et al. 2020), suggesting a normal maturation of ASSR for low frequencies. Interestingly, the right-side 40 Hz ASSR increased with age in the neurotypical group, as opposed to ASD children (Ono et al. 2020). Reduced 40 Hz power was also found in adolescents with ASD at both right and left primary auditory cortex, with no difference in gamma-band responses (Seymour et al. 2021). Diminished auditory gamma-band responses were found in ASD children, indicating that peak frequencies likely vary with developmental age (Roberts et al. 2021).
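To make the notion of a phase-locked steady-state response more concrete, the following minimal sketch computes two quantities often used to describe an ASSR: spectral power at the stimulation frequency and inter-trial phase coherence. It is an illustration on synthetic data only, not the analysis pipeline of any study cited here; the function names, the 1000 Hz sampling rate, the noise level, and the number of trials are all assumptions chosen for the example.

```python
import numpy as np

def spectral_power_at(signal, fs, freq):
    """Power of `signal` at the FFT bin closest to `freq` (Hz)."""
    spectrum = np.fft.rfft(signal)
    freqs = np.fft.rfftfreq(len(signal), d=1.0 / fs)
    idx = int(np.argmin(np.abs(freqs - freq)))
    return float((np.abs(spectrum[idx]) / len(signal)) ** 2)

def inter_trial_coherence(trials, fs, freq):
    """Phase consistency across trials at `freq`.

    Values near 0 mean random phase across trials; values near 1 mean
    the response is phase-locked to stimulus onset.
    """
    trials = np.asarray(trials)
    spectra = np.fft.rfft(trials, axis=1)
    freqs = np.fft.rfftfreq(trials.shape[1], d=1.0 / fs)
    idx = int(np.argmin(np.abs(freqs - freq)))
    unit_phasors = spectra[:, idx] / np.abs(spectra[:, idx])
    return float(np.abs(unit_phasors.mean()))

# Synthetic example (all values hypothetical): 50 one-second trials at 1000 Hz.
rng = np.random.default_rng(0)
fs = 1000.0
t = np.arange(0.0, 1.0, 1.0 / fs)
n_trials = 50

# Phase-locked 40 Hz response buried in noise.
locked = np.array([np.sin(2 * np.pi * 40 * t) + rng.normal(0, 1.0, t.size)
                   for _ in range(n_trials)])
# Same 40 Hz amplitude, but with a random phase on every trial.
jittered = np.array([np.sin(2 * np.pi * 40 * t + rng.uniform(0, 2 * np.pi))
                     + rng.normal(0, 1.0, t.size)
                     for _ in range(n_trials)])

itc_locked = inter_trial_coherence(locked, fs, 40.0)
itc_jittered = inter_trial_coherence(jittered, fs, 40.0)
power_40 = spectral_power_at(locked.mean(axis=0), fs, 40.0)
power_20 = spectral_power_at(locked.mean(axis=0), fs, 20.0)
```

In this toy setting, both trial sets contain the same 40 Hz energy on single trials, but only the phase-locked set yields high inter-trial coherence and survives averaging across trials, which is why reduced ASSR power and reduced phase consistency are usually reported together.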

The sustained field (SF) is a baseline shift in the electrical and magnetic signals upon exposure to a sound lasting for several seconds. The SF adapts to the probability of a sound pattern, reflecting the integration of pitch information across frequencies within the tonotopic map of the primary auditory cortex (A1) (Stroganova et al. 2020). Children diagnosed with ASD seem to have atypical higher order processing in the left hemisphere of the auditory cortex, with cortical sources of SF located in the left and right Heschl’s gyri (primary auditory cortex) (Stroganova et al. 2020).

The severity of sensory processing deficits in ASD also seems to be correlated with reduced inter-hemispheric connectivity of auditory cortices (Linke et al. 2018; Tanigawa et al. 2018) and lower verbal IQ (Linke et al. 2018). Interestingly, increased connectivity between the thalamus and the auditory cortex was found in patients with reduced cognitive and behavioral symptomatology (Linke et al. 2018; Tanigawa et al. 2018), which suggests high thalamocortical connectivity as a potential compensatory mechanism in ASD (Linke et al. 2018). Individuals diagnosed with ASD also seem to present abnormal modulation of connectivity between pulvinar and cortex, with greater increases in pulvinar connectivity with the amygdala (Green et al. 2017). Regarding processing of social stimuli, reduced connectivity between the left temporal voice area (TVA) and the superior and medial frontal gyrus was found in ASD patients (Hoffmann et al. 2016). Decreased connectivity between the left TVA and the limbic lobe, anterior cingulate, and medial frontal gyrus, as well as between the right TVA and the frontal lobe, anterior cingulate, limbic lobe, and caudate, seems to be associated with increased symptom severity (Hoffmann et al. 2016).

Language and speech processing in ASD

Language acquisition involves the integration of top–down and bottom–up processes. Language deficits present in ASD diagnostic criteria may be related to one of these integration processes or a combination of both. ASD individuals can have sensory deficits in the bottom–up early sensory processing and/or prediction deficits related to higher order assessment.

Phonological processing seems to be disrupted in ASD children, who display an attenuated MEG response in the right auditory cortex to both legal and illegal phonotactic sequences (Brennan et al. 2016). Interestingly, ASD children do not seem to differ in phonological competence but differ significantly in other measures, such as attention, syntax, and pragmatics (Brennan et al. 2016; Wagley et al. 2020), suggesting impairments in knowledge of language structure. An event-related desynchrony of the auditory cortex in the 5–20 Hz range seems to index language ability in both children with ASD and neurotypical controls (Bloy et al. 2019). When speech and non-speech were compared, ASD children with poorer language composite scores presented a general auditory processing deficit, with atypical left hemisphere responses in the high order time-window of 200–400 ms (Yau et al. 2016), and different neural and behavioral effects of syllable-to-syllable processing in speech segmentation (Wagley et al. 2020).

When considering visual-speech recognition tasks, ASD individuals seem to have difficulties in extracting speech information from face movements (Borowiak et al. 2018). Decreased blood-oxygenation-level-dependent (BOLD) responses were detected in the right visual area 5 and left temporal visual-speech area, as well as lower functional connectivity between these two brain regions implicated in visual-speech perception (Borowiak et al. 2018). This multimodal fMRI study combined with eye-tracking data showed that the ASD group had reduced responses not only for emotional but also for neutral facial movements (Borowiak et al. 2018). High-functioning adults with ASD seem to have typical responses for voice-identity tasks, and dysfunctional speech recognition in the right posterior temporal sulcus (Schelinski et al. 2016).

Predictive processing was tested in a naturalistic environment by measuring surprisal values, that is, how much information a word contributes given its linguistic context (Brennan et al. 2019). Results showed a bilateral temporal effect for sentence-context linguistic predictions in an early time-window (from 26 to 254 ms), for both high-functioning ASD children and TD peers aged 3–6 years (Brennan et al. 2019). A different study showed that as surprisal values increase, the amplitude of the neural response also increases in the left primary auditory cortex, posterior superior temporal gyrus (pSTG), and inferior frontal gyrus (IFG) in neurotypical children (Wagley et al. 2020). However, ASD children with lower IQ levels did not display such a learning pattern (Wagley et al. 2020).
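Surprisal is conventionally defined as the negative log probability of a word given its preceding context, so that less predictable words carry higher values. The sketch below illustrates the idea with a toy bigram model and add-one smoothing. It is an assumption-laden example only: the cited studies do not necessarily use a bigram model, and the function name, corpus, and test sentences are hypothetical.

```python
import math
from collections import Counter

def bigram_surprisal(corpus_sentences, sentence):
    """Surprisal in bits of each word given the previous word.

    Uses a bigram model with add-one (Laplace) smoothing, estimated from
    `corpus_sentences`. Returns a list of (word, surprisal) pairs.
    """
    context_counts = Counter()
    bigram_counts = Counter()
    vocab = set()
    for s in corpus_sentences:
        tokens = ["<s>"] + s.lower().split()
        vocab.update(tokens)
        for prev, word in zip(tokens, tokens[1:]):
            context_counts[prev] += 1
            bigram_counts[(prev, word)] += 1

    tokens = ["<s>"] + sentence.lower().split()
    vocab.update(tokens)  # unseen words still receive a small probability
    vocab_size = len(vocab)

    result = []
    for prev, word in zip(tokens, tokens[1:]):
        p = (bigram_counts[(prev, word)] + 1) / (context_counts[prev] + vocab_size)
        result.append((word, -math.log2(p)))
    return result

# Toy corpus (hypothetical).
corpus = ["the dog barks", "the dog sleeps", "the cat sleeps"]
expected = bigram_surprisal(corpus, "the dog barks")
unexpected = bigram_surprisal(corpus, "the dog flies")
```

Here the final word of "the dog flies" receives a higher surprisal than the final word of "the dog barks", because "flies" never follows "dog" in the toy corpus. In studies of the kind described above, word-by-word values like these are typically used as regressors against the neural response.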

A longitudinal study tested infants in the first months of life (around 4 months old), some of whom were later diagnosed with ASD at 3 years old (Lloyd-Fox et al. 2018). Using functional near-infrared spectroscopy (fNIRS), the authors found that infants later diagnosed with ASD showed reduced activation to visual social stimuli across the inferior frontal (IFG) and posterior temporal (pSTS-TPJ) regions of the cortex, reduced activation to vocal sounds, and enhanced activation to non-vocal sounds within left lateralized temporal regions (Lloyd-Fox et al. 2018). These results suggest that atypical ASD cortical responses may be detectable at early stages.

A right ASD brain? Lateralization and inter-hemispheric connectivity

The inferior frontal and superior temporal areas in the left hemisphere are crucial for human language processing (Yoshimura et al. 2017). Some striking findings report ASD group differences in left and right hemispheres (Edgar et al. 2013; Sadeghi Bajestani et al. 2017). Results show lower right–left hemispheric synchronization in young children with ASD (Brennan et al. 2016), stronger rightward lateralization within the inferior parietal lobule, and reduced leftward lateralization extending along the auditory cortex (Floris et al. 2016). Neurotypical peers show stronger activation in the left auditory cortex for semantic categorization tasks (Tietze et al. 2019), and a hemispheric advantage that seems to be absent in ASD children (Edgar et al. 2015). Adolescents diagnosed with ASD show weaker cortical activation in the left ventral central sulcus during word comprehension tasks (Tanigawa et al. 2018), indicating atypical hemispheric functional asymmetries.

Resting-state studies of the auditory network in patients with ASD

Resting-state connectivity refers to correlated spontaneous signal fluctuations between functionally related brain regions in the absence of any stimulus. Results show altered dynamic functional brain networks in children diagnosed with ASD, including cognitive control, subcortical, auditory, visual, bilateral limbic, and default-mode networks (Stickel et al. 2019). High-functioning adults with ASD also present alterations in the anterior sector of the left insula and the middle ventral sub-region of the right insula (Yamada et al. 2016). Atypical spread of activity seems to be present in ASD individuals, indicated by altered dynamic lag patterns in salience, executive, visual, and default-mode networks (Raatikainen et al. 2020). Regarding intrinsic neural timescales, both high-functioning adults with ASD and TD peers presented longer timescales in frontal and parietal cortices and shorter timescales in sensorimotor, visual, and auditory areas (Watanabe et al. 2019). Individuals with ASD also seem to display a volumetric increase in the right insula (Yamada et al. 2016). Given that the right insula is primarily specialized for sensory and auditory-related functions, such volumetric expansion might be functionally correlated with previously reported ASD alterations in auditory stimuli processing and auditory sensitivity.
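As a simple illustration of what "correlated signal between regions" means in practice, static resting-state functional connectivity is often summarized as a matrix of Pearson correlations between regional time series. The sketch below uses synthetic data; the region labels, number of time points, and noise levels are assumptions for the example and do not correspond to any dataset or pipeline from the studies cited above.

```python
import numpy as np

def functional_connectivity(timeseries):
    """Pearson correlation matrix between regional time series.

    `timeseries` has shape (n_timepoints, n_regions); the result has
    shape (n_regions, n_regions) with ones on the diagonal.
    """
    return np.corrcoef(np.asarray(timeseries), rowvar=False)

# Synthetic example (all values hypothetical): 300 time points, 3 regions.
rng = np.random.default_rng(42)
n_timepoints = 300
shared = rng.normal(size=n_timepoints)  # common spontaneous fluctuation

left_auditory = shared + 0.3 * rng.normal(size=n_timepoints)
right_auditory = shared + 0.3 * rng.normal(size=n_timepoints)
unrelated_region = rng.normal(size=n_timepoints)  # no shared component

fc = functional_connectivity(
    np.column_stack([left_auditory, right_auditory, unrelated_region])
)
inter_hemispheric = fc[0, 1]  # strong, driven by the shared signal
control = fc[0, 2]            # close to zero
```

Dynamic connectivity and lag analyses of the kind mentioned above extend this idea by computing such correlations in sliding windows or at different time shifts, rather than once over the whole recording.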

Diffusion MRI studies of the auditory network in patients with ASD

A recent study in children with ASD reports decreased gray matter volume in the fronto-parietal network, associated with the severity of the communication score and restricted behaviors. In adults, an increase of gray matter was reported in regions of the auditory and visual networks, with the auditory network being correlated with the severity of the communication score, and the visual network with the severity of repetitive and restricted behavior (Yamada et al. 2016). Uncoupled structure–function relationships in both auditory and language networks have also been reported in ASD (Berman et al. 2016), with changes in the relative weight of white matter contribution to structure–function relationships (Roberts et al. 2020).

Final remarks

Experimental design in ASD studies