Abstract

In the light of the adverse effects of climate change, data analysis and Machine Learning (ML) techniques can provide accurate forecasts, which enable efficient scheduling and operation of energy usage. Especially in the built environment, Energy Load Forecasting (ELF) enables Distribution System Operators or Aggregators to accurately predict the energy demand and generation trade-offs. This paper focuses on developing and comparing predictive algorithms based on historical data from a near Zero Energy Building. This involves energy load, as well as temperature data, which are used to develop and evaluate various base ML algorithms and methodologies, including Artificial Neural Networks and Decision-trees, as well as their combination. Each algorithm is fine-tuned and tested, accounting for the unique data characteristics, such as the presence of photovoltaics, in order to produce a robust approach for One-Step-Ahead ELF. To this end, a novel hybrid model utilizing ensemble methods was developed. It combines multiple base ML algorithms the outputs of which are utilized to train a meta-model voting regressor. This hybrid model acts as a normalizer for any new data input. An experimental comparison of the model against unseen data and other ensemble approaches, showed promising forecasting results (mean absolute percentage error = 5.39%), particularly compared to the base algorithms.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

As part of the efforts to mitigate climate change, the push for more energy-efficient buildings and cities is largely predicated on energy systems’ capacity to cope with greater penetration of intermittent renewable energy resources under high energy efficiency, adaptability, and resilience requirements [1]. At the same time, the daily interchange of data and information between systems and people has become the major driving force behind the technological development of the so-called ‘smart’ devices. In particular, the urban building infrastructure is at the center of the efforts to create smart grids, and to that end, benefiting from recent Internet of Things (IoT) technological advances, on-site smart monitoring systems, services, and advanced communication technologies have been developed and installed today to facilitate this ‘smart’ transition [2, 3].

Energy Load Forecasting (ELF) for buildings, has attracted research attention in order to improve energy efficiency and reduce environmental impacts. Monitoring the energy load of a building, not only enables occupants to have insights into their consumption patterns, but also acts as an important tool to provide accurate information to energy sector stakeholders to improve their distribution strategies and scheduling of electricity supply to the grid, despite possible fluctuations or shortage [4,5,6]. For example, Demand Response (DR) aggregators are able to accurately predict the potential demand reduction capabilities, and grid operators to effectively plan ahead for their short and long-term supply requirements [7]. The abrupt increase in the amount of data in this new era of digitalization and IoT applications has empowered such decision-making via data analytics techniques, most prominently Machine Learning (ML) methods, which utilize historical data to accurately forecast future energy load.

However, due to the constantly changing environment, energy load patterns of residential as well as commercial buildings often come with non-stationary characteristics and display seasonal trends [8]. Hence, there is added complexity in developing a single generalized forecasting approach that can adjust to the consumption patterns of each building [9].

In the context of ELF, this paper deals with analyzing the energy load time-series patterns of a smart building infrastructure and explores various predictive models and approaches to provide the most accurate predictions possible. The algorithms implemented can be categorized into shallow ML (sML) and Deep Learning (DL) techniques and finally, an ensemble approach for One-Step Ahead ELF (OSA-ELF). Our aim was to develop a fine-tuned time series forecasting model by benefiting from the basic principle of the ensemble approach, i.e. combining many predictions produced from different base models and utilizing the ones that result in overall superior forecasts. The main contributions of our work can be summarized as:

-

The developed hybrid model consists of heterogeneous pre-trained base algorithms. Their outputs are used to train a voting regressor meta-model, which acts as a normalizer for new data input. This ensemble technique improves model generalization and mitigates potential overfitting, without adding complexity.

-

The model achieves above-average results compared to other closely related literature findings. In particular, it demonstrates superior accuracy when compared to a research publication that employed the same training dataset and target variable (energy load). Furthermore, the developed hybrid model is validated against new unseen data (with similar properties), showing similar results as our original dataset.

The remainder of this paper is structured as follows: Sect. 2 presents background information and reviews the literature, Sect. 3 provides the problem definition along with a brief description of the datasets we used, the pre-processing steps, and methodology of the proposed approach for ELF. Section 4 presents the results of the experimental modeling and comparative results. In Sects. 5 and 6 experimental results are discussed along with final thoughts, implications, and future prospects.

2 Background

This section presents a review of relevant literature and reports on the predictive algorithms, ensemble methods and evaluation metrics associated with ELF.

2.1 Energy load forecasting (ELF)

The technological advancements regarding IoT devices have enabled the continuous monitoring of a building’s energy load via smart meters [10] in various resolutions, which form a so-called time series. At the same time, ML has become a key enabler of data analytics and enhanced control of energy systems (Fig. 1). ELF with high precision can lead to the proactive optimization of control choices in order to achieve improved energy efficiency, and lower operating costs in various domains and applications [9]. Overall, ELF can be categorized into four general cohorts, based on the time horizon of their forecasts, as follows [11, 12]:

-

1.

Very short-term load forecasting (VSTLF), aims at very-short time intervals, with their prediction ranging from a few minutes to an hour

-

2.

Short-term load forecasting (STLF), which typically deals with a prediction range of one hour up to 2 weeks and is a very commonly used forecasting horizon

-

3.

Medium-term load forecasting (MTLF), extends the prediction range from a couple of weeks to one year

-

4.

Long-term load forecasting (LTLF), which targets predictions extending over a year

Accurate STLF can be utilized to improve a building’s energy management plan [13]. MTLF is often used for scheduling maintenance and power supply [14]. Since practical limitations exist on the storage of electricity, electricity generation, transmission, and distribution must occur concurrently with demand. As a result, for the uninterrupted operation of national rids, the electric energy supply and demand must constantly maintain a dynamic equilibrium [7]. To that end, LTLF empowers energy producers and grid distributors to dynamically adjust their generation and transaction plans in the market [15].

2.2 Forecasting methods

In time series forecasting, the goal is to estimate how the sequence of historical values of the target variable will continue in the future. The complexity ranges from one-step forecasting which estimates a single future value (e.g. one hour ahead), to multi-step forecasting, which predicts a sequence of future values at each time step [15]. With the emergence of big data in energy-related domains, two main methods can be singled-out for performing time series ELF [10, 16] i.e., Statistical-based methods (Sect. 2.2.1) and ML methods (Sect. 2.2.2).

2.2.1 Statistical based methods

The basic concept behind such methods is to generate a stationary time series (e.g., a series with constant mean/variance), by filtering out the relevant pattern from the series (trend, seasonality, etc.). Some of the most widely used methods for time series analysis and forecasting are the Error Trend Seasonality (ETS), AutoRegressive Integrated Moving Average (ARIMA), and other variants thereof [17, 18]. These are well-established methods for time series forecasting, that are well understood, effective, and relatively easy to implement even by non-experts [19].

Despite their more simplistic nature and high interpretability, statistical methods, often do not yield convincing forecasting accuracy for complicated data patterns, compared to the more advanced ML models, especially regarding Deep Learning. As ML techniques are increasingly gaining prominence in recent times there have been numerous studies and research papers [12, 20,21,22,23,24,25,26], which highlight the weaknesses of these traditional statistical methods compared to the state-of-the-art ML algorithms, in terms of predictive performance. This is mainly due to the fact that statistical methods fail to robustly address potential nonlinear dependencies within the data [27, 28].

Moreover, whereas statistical methods are mostly designed to handle univariate inputs, ML models and ANNs, in particular, can process multiple inputs, hence they are more suitable to incorporate additional exogenous variables [29]. The so-called ‘M’competitions are widely known in the field of time series forecasting, with the most recent being the M5 ‘Accuracy’ competition. M5 was the first competition in which all of the top-ranked methods were based solely on ML algorithms that outperformed all statistical-based algorithms and their combinations. The ML methods utilized processed several correlated series and exogenous input variables and managed to provide the highest reductions in forecasting errors [30].

2.2.2 ML algorithms

The prediction methods and algorithms that we experimented within our work can be divided into shallow Machine Learning (sML or ML), for example, Decision Trees, Gradient Boosted Trees, Random Forest and Deep Learning (DL) techniques i.e., Artificial Neural Networks (ANNs).

Extreme gradient boosting (XGBoost) The XGBoost algorithm implements the weak learner by optimizing the structured loss function, and the XGBoost algorithm does not use the linear search method, it directly uses the first derivative and the second derivative of the loss function. XGBoost has some key advantages such as better regularization in order to avoid overfitting, automatized handling of missing values, and cross-validation at each iteration step [31].

Light gradient-boosting machine (LGBM) Compared to other decision tree algorithms, LightGBM employs a novel method called gradient-based one-side sampling to pinpoint the optimal split for input data. LightGBM expands on a leaf-basis in contrast with other boosting algorithms that have a level-wise or depth-wise decision tree growth [32]. It is considered that the LGBM is lightweight and requires fewer resources than other decision tree algorithms, resulting in faster training times, while maintaining high accuracy [33]. In fact, in the M5 forecasting competition, the LGBM was the most utilized method in the top-ranked submissions [30].

CatBoost CatBoost (for “Categorical boosting”) builds symmetric (balanced) trees and the leaves from the previous tree are divided using the same criteria in each phase. The feature-split pair with the lowest loss is chosen and utilized for all nodes in the level. Such a balanced tree structure contributes towards the efficient use of computational resources, decreases training times, and reduces overfitting as the structure serves as a regularization method. Furthermore, CatBoost is designed for both numerical and categorical features and takes advantage of dealing with them during training as opposed to pre-processing time [34, 35], making it overall highly effective in forecasting cases involving categorical, heterogeneous data.

Random forest The Random Forest algorithm utilized both for classification and regression problems is based on bagging ensemble (i.e. resampling from the original data different training data to feed the algorithm). Hence, it creates bootstrap samples of the data and then utilizes them to grow a decision tree, a random subsample of the features is used in each fitting process [7]. This process occurs a predefined number of times and the prediction is then the aggregation of the individual trees’ predictions, which for regression problems involves some type of averaging [36].

Artificial neural networks (ANN): multi-layer perceptron (MLP) A very common ANN architecture is known as a Multilayer Perceptron (MLP), consisting of a network of individual nodes, called perceptrons, organized in a series of layers, one input layer, a single hidden layer, and an output layer. The overall learning process is based on the backpropagation error utilizing the using the gradient descent search method. Despite its simple concept and design, MLP can learn any function, particularly by approximating non-linear correlations between input and output variables [37]. MLP has a very good capability of accurately learning from the series of past observations of a time series and making predictions of the next value or sequence of steps. However, the training process may require a high computational burden to be processed with a slow convergence of weights.

Artificial neural networks (ANN): long short-term memory (LSTM) Recurrent Neural Networks(RNNs) are designed to remember parts of past data through a methodology, called feedback, in which the training takes place not only from input to output but also utilizes a loop in the network to preserve some information and thus functions like a memory [26]. Long Short-Term Memory (LSTM), is a unique RNN architecture that can be utilized to learn temporal sequences and deal with long-term dependencies [38]. LSTM was designed to overcome the vanishing gradient problem of the standard RNN when dealing with long-term dependencies. In contrast to the standard RNN which has a series of repeating modules with a relatively simple structure, the hidden layers of LSTM have a more complicated format. Specifically, instead of neurons, LSTM networks have memory blocks that are connected through layers and introduce the concepts of gate and memory cells in each hidden layer.

2.2.3 Ensemble methods

In ML, ensemble learning techniques combine several simpler base algorithms to produce one predictive model with optimal performance results [39]. By combining predictions, ensemble techniques can deliver more reliable and robust forecasts than individual prediction models [40]. Two commonly applied ensemble approaches are Stacking and Voting.

Stacking Stacking Generalization is an ML technique that combines conventional predictive algorithms to produce a more accurate prediction by using the predictions of the trained base algorithms as a new input [41]. The architecture of the stacking method consists of two stages. The aim of the first stage is to combine predictions of base ML algorithms to generate the training data for a meta-model. The aim of the second stage is to generate and train the meta-model [41]. For regression and classification applications, the stacking ensemble technique has been shown to outperform individual ML algorithms [42, 43].

Voting To forecast the final prediction based on the voting strategy, a voting ensemble approach aggregates the predictions of multiple base algorithms. By gathering the knowledge of each base learner, voting can create more accurate predictions than any individual predictive algorithm. Furthermore, combining the predictions of multiple base algorithms contributes in addressing the issue of overfitting. [44]. In regression-based models built with voting, the predictions of each regression base model are averaged to produce a final prediction. Hence, a voting regressor is an ensemble meta-estimator that fits many base algorithm regressors, one at a time, on the whole dataset, and then averages the individual forecasts to generate a final prediction [45].

2.2.4 Performance evaluation metrics

The evaluation metrics produced to assess the performance of our predictive algorithms and ensemble hybrid model, in terms of time series forecasting, are summarized in the following points.

Mean absolute error (MAE) MAE calculates the mean of the non-negative differences between observed and predicted values. All differences have equal weight, as a result, MAE fails to punish large errors in prediction. MAE calculates the error on the same scale as the target, hence it is more interpretable [46]. MAE is defined by the formula (Eq. 1).

where \(y_i\) is the actual energy load value, \(\hat{y_i}\) is the energy load prediction and n is the total number of prediction values.

Root mean square error (RMSE) RMSE calculates the square root of the average squared difference between the observed and predicted values. This metric is able to identify and heavily penalize large errors, while it evaluates the fluctuation of model response in terms of variance [46] (Eq. 2).

Mean absolute percentage error (MAPE) MAPE calculates the absolute value of the difference between observed and predicted. Typically, it quantifies accuracy in percentage terms and is effective for evaluating the performance of the prediction model by applying more weight to positive errors in relation to negative ones [47] (Eq. 3).

Coefficient of determination or R-squared \((R^{2})\) R-squared is often referred to as the goodness of fit (of a regression model), as it determines the proportion of the variance in the dependent variable that is predictable from the independent variables. A high R-squared value indicates the predicted values fit the observed values quite well. Many factors influence the statistical measure’s quality, including the type of variables included in the model, the units of measurement of the variables, and the data transformation used [48] (Eq. 4).

2.3 Related work

A comprehensive literature search was conducted by creating key research queries as an initial screening process. This was followed by a short analysis, which involved examining the scope, objectives, and approach of each scientific work. Over recent years, researchers have been developing and experimenting with various ML algorithms, including various regression-based models, ANNs, Decision-tree based models, and Support Vector Machines (SVM) for ELF with promising results [18]. Especially in the context of building ELF, we present in this section key relevant studies.

In [49] the authors used data consisting of building occupancy levels, hourly measurements from energy meters, and weather conditions to experiment with Ensemble Bagging Trees (EBT). Their findings show that both computation speeds and forecasting accuracy have increased, making this approach a viable option for ELF as well as failure detection diagnostics applications in buildings.

Researchers in [39, 50, 51] developed predictive algorithms based on the XGBoost regressor, accompanied by pre-processing techniques (e.g. clustering), to accurately forecast energy load. The models utilized both energy load and exogenous parameters such as weather-related data. In all cases, XGBoost exhibits promising results over other base algorithms, while maintaining low execution times.

Authors in [11] experimented with data from the same nZEB smart building as in this paper, aiming to improve its energy load management. They deployed a robust approach for OSA-ELF consisting of various ensemble-based methods and forecasting algorithms. Their approach produced promising results, regardless of the time resolution, with low execution times, even without the presence of exogenous features.

The work of [40], developed ensemble-based forecasting models for the next-day energy demand, of a Hong Kong commercial building. The experimental data included both energy load and meteorological variables (such as mean temperature, relative humidity, etc), after outlier removal. In addition, extra features were extracted to represent the daily energy demand (e.g. min, max, mean). A recursive feature elimination (RFE) is utilized to choose the optimal inputs to the base prediction models, which are then optimized via leave-group-out cross-validation (LGOCV). The developed ensemble model was optimized with the genetic algorithm (GA) method and produced high accuracy, indicating its suitability for energy load management operations

The research of [52] implemented an ensemble approach utilizing Radial Basis Function (RBF) ANNs to predict the energy load of a Smart Home nZEB infrastructure in California. The RBF models were deployed, in a Nonlinear AutoRegressive with exogenous inputs(NARX) configuration. Apart from the energy load historical values, they used as inputs two exogenous variables (outdoor temperature, day unique code) and their lagged values. The results showed that the ensemble approach performs better than the individual algorithms, achieving good accuracy.

Researchers in [15] developed an STLF approach in which data from the Greek power system are processed by clustering algorithms and then fed as input to MLP models. The resulting evaluation metrics showed a clear improvement over other STLF methods in the relevant literature.

In particular, regarding LSTM, several research papers [5, 10, 26, 38, 53,54,55,56,57] have emerged, that thoroughly explore the performance of LSTM particularly in time series forecasting (for various forecasting horizons) and particularly ELF. A notable example is [16], which deployed an LSTM-based model with various configurations to forecast models for STLF to MTLF, with regard to metropolitan electricity consumption. To optimize the LSTM model hyperparameters and identify the optimal number of lagged features, a genetic algorithm (GA) was utilized. The results indicated that the proposed method showed lower forecast errors compared to other methods.

In the context of ensembling, we explored different approaches implemented in the field of ELF. Phyo et al. [58] implemented a voting regression mechanism to forecast energy load for a Korean region, with an average MAPE of 4.28%. Zhao et al. [59] focused on day-ahead STLF in Australia and developed a novel model based on the Transformer network, achieving an average MAPE of 1.13%. Moon et al. [60] developed a hybrid STLF model for an educational building complex, achieving a MAPE ranging from 3 to 4.7%. Massaoudi et al. [61] implemented a stacked generalization approach for STLF regarding power supply industry data.

As a summary, emphasizing performance and relevance, a structured format of the gathered key literature in terms of ELF and notable performance metrics, is illustrated in Table 1. Each of the listed forecasting approaches has its limitations and none can be considered directly applicable to all case studies. This listing comprises a detailed assessment of the results published in the respective publications, rather than an experimental evaluation by replication of the reported results. Hence the referenced metrics should be treated as a cumbersome point of comparison for the results of the developed models of the present paper.

3 Research design

3.1 Data preparation

3.1.1 Dataset description

The open-access dataset [63] includes measurements and raw data collected from and for the CERTH-ITI Smart Home infrastructure located in Greece. This prototype building is equipped with a variety of devices such as smart meters, environmental sensors, occupancy sensors, smart appliances, weather stations, etc. Hence, energy-related aspects are included in the publicly available datasets utilized, covering Energy load, Generation, and Storage, as well as weather data information, between Oct. 2018 and Sept. 2020, with a 15-minute resolution (Table 2).

3.1.2 Pre-processing

Missing values and resampling Our dataset contained a great number of missing values, in many cases for prolonged periods. This sort of irregularity in the data can have a significant impact on the accuracy of a forecasting model, hence data pre-processing procedures were used prior to the building of forecasting models. First, we proceeded to re-sample the 15-min time series to a one hour resolution. Based on the review of literature, multiple relevant studies had an input of 1 h for VSTLF/STLF. Using the sum for energy-related values (Energy load and PV Generation) and the average for ambient temperature recordings, as provides a more robust forecasting baseline for modelling. However, very long periods of missing values still existed in the re-sampled dataset(e.g.15 consecutive days). Hence, due to the many missing values, a hybrid approach was chosen using a forward and backward time-based interpolation method, for time intervals of up to four hours and omitting missing data extending to larger time periods than four hours. This procedure resulted in producing a full dataset for both target (energy load) and exogenous features. This contributed to the dataset representing a more normalized behavior and realistic daily load pattern, capturing both periodicity and trend.

Outlier detection Identifying and removing outliers is a vital component in developing forecasting models, which avoid large errors. In particular, the analysis of outliers in time series data examines anomalous behaviors across time. For example, in our dataset, there are extreme outliers such as temperatures well above 45\(^\circ\)C. Hence, an outlier detection strategy was followed, which identifies values above the 99.99th and the 99.8th percentiles for all the temperature and energy load data points and replaces them with their equivalent max values equaling the percentiles

3.2 Exploratory data analysis

This section presents certain key visualizations along with supplementary comments, to provide a holistic view of our training dataset. Figure 2, shows the Smart Home hourly Energy load in kWh, after outliers were removed.

Some notable patterns and a clear periodicity can be observed. During the winter and summer months, the average hourly energy load is higher, which is to be expected due to the increased cooling and heating demands.

It is important to highlight, that the CERTH-ITI Smart Home, is utilized as an active workspace for researchers, hence it resembles an office-type of building, rather than a typical residential home. Thus there are periods in December and August when the energy load significantly drops due to Christmas and summer vacations of employees accordingly. Figure 3 illustrates the hourly resolution of both energy load and generation on a weekly basis. We notice here that the first day of the week (Monday) shows a higher energy load, while the energy load is significantly reduced during the weekend, as there is no employee activity.

3.3 Feature engineering and selection

Overall our approach to the selection process of the most relevant input variables can be summarized in the following points:

-

1.

Target variable’s characteristics: In the context of ELF, a comprehensive understanding of the critical factors affecting energy load, specifically within the built environment, becomes imperative. Empirical observations reveal that building energy load is influenced by various exogenous factors. These factors encompass the day of the week, time of the day, month of the year, occupancy rates, and weather/climate conditions. Thus, it becomes essential to incorporate these factors into the training of the prediction model, in addition to utilizing historical load data that captures trends and periodic patterns [64].

-

2.

Visualization of data input correlations: To facilitate the process in the first point, we identified potential input variables that exhibit strong correlations or relationships with the target variable (energy load). To that end, visualizations such as scatter plots, heatmaps and correlation matrices were illustrated.

-

3.

Feature importance rating: Feature importance aims at quantifying the influence of a specific variable on the predictive outcomes generated by a given model. One widely implemented approach, utilized in our experimental modelling, is permutation feature importance. This method entails permuting (i.e., randomly shuffling) the feature values associated with a particular feature, denoted as F1. By executing this permutation process, the relationship between F1 and the remaining features is severed, thereby inducing alterations in the model’s predictive performance [65]. As a notable example, for the Random Forest and LGBM algorithms, feature importance is measured by evaluating the degree to which each feature diminishes the impurity of tree splits.

-

4.

Evaluation of model performance: Iteratively evaluate models utlising different combinations of input variables. Assess the model’s performance metrics, such as RMSE, MAPE, and compare the results. By systematically evaluating models with different input variables, we identified the set of variables that consistently lead to the best forecasting performance.

3.3.1 Temporal features

As our timeseries dataset also implements the notion of time as a feature, the time information was encoded via dummy variables, to account for the periodicity. Apart from the typically created temporal features such as the hour of the day, the day of the week, and the month of each load data point, some specific features were synthesized based on the characteristics of our dataset, acknowledging that the CERTH-ITI Smart Home is used as a work-space, such as a distinct feature of office hours (i.e. 9:00–18:00) and non-peak hours (i.e. after 18:00) (Table 3).

3.3.2 Time series transformation to supervised

The sliding window technique was implemented for feature extraction, which is a standard approach for time series forecasting. This entails transforming the data into lagged observations. That is, n prior values (t-n, t-n+1,...,t) were utilized to forecast the future value (t+1). This is accomplished by iteratively traversing the data, accepting n items as input and the next entry as output. As time-series data often exhibit trends or seasonal patterns, the relation between the model’s input variables (independent variable) and the predicted value (output variable) fluctuates with time. In order to discover the data points where the values of independent variables are substantially related, the time-lag value must be carefully chosen [66].

3.3.3 Final feature selection

In the development of supervised ML algorithms, it is important to select the features that are most associated with the prediction outcome, for dimensionality reduction. To that end, permutation feature importance scores played a key role, as a filter method to remove extraneous features from our model, thereby retaining only the variables that exhibit the strongest relationship with our target variable.An example of such feature importance scoring is presented in Fig. 4

for the LGBM base algorithm. Another important parameter is to select inputs that can facilitate the reproducibility and generalization of the developed forecasting model, in particular parameters that are often available (or easily extracted) in building case studies. Temporal features, which capture time-related patterns and dynamics, and statistical features, derived from applying statistical methods to the dataset, are prime examples of such inputs.

In our case study, the load lagged values of the preceding hours, were steadily in the top ranks in terms of contribution towards enhancing the accuracy of the forecasting models. By considering these lagged features, our model captures the temporal dependencies and patterns in the load data, further improving the accuracy of load forecasts. That said, ambient temperature proved to be a factor that is directly correlated with the energy load. Furthermore, PV generation is also an important feature as high PV power generation indicates sunny weather, thus a lower need for heating in the winter period or prolonged sunlight periods in the summer period, which typically translates to increased cooling needs. Both these exogenous features are used in addition to the historical data of the load to make a more accurate forecast.

Hence, after reviewing relevant literature, extensive experimental modelling and trials, each accompanied by a feature importance rating process, we concluded on the final training feature space. Supplementary to the temporal features (discussed in Sect. 3.3.1), the final selection of time-lagged features, to forecast one step ahead (i.e. one hour ahead) included:

-

1.

The energy load value of the day preceding the hour for which the prediction is made i.e., 24 lagged features (T-1,T-2,T-3,\(\ldots\)T-24)

-

2.

Similarly, 12 lagged features from 2 additional exogenous features (PV energy generation and ambient temperature)

-

3.

The energy load value 48 h before (T-48Cons)

-

4.

The energy load value 72 h before (T-72Cons)

-

5.

Absolute standard deviation (std) between the previous hour energy load and the previous 12 h energy load (Eq. 5)

$$\begin{aligned} \Vert \frac{Cons_{(t-1)}+Cons_{(t-12)}}{2} - Cons_{(t-1)}\Vert \end{aligned}$$(5) -

6.

Similarly, the same absolute standard deviation (std) between the previous hour energy load and the previous 24 h and 48 h, accordingly

By integrating historical load data with exogenous features like ambient temperature and temporal features such as the hour of the day, we adopt a comprehensive approach that facilitates more accurate predictions of energy load. This approach mitigates the risk of relying solely on training features that are too specific to our case study. Incorporating these additional factors enables a broader analysis, encompassing influential variables beyond the immediate context. Consequently, the prediction model becomes more robust and capable of capturing the nuanced relationships between the target variable and various exogenous and temporal features.

3.4 Modelling process overview

3.4.1 Base algorithms

In predictive analytics, hyperparameter optimization is an integral part of the modelling process. In general, models can perform well with the default parameters, however, can have a significant impact on model performance, including the potential for overfitting. Hyperparameters, such as L2 regularization, learning rate, and maximum depth, also play a role in finding the right balance between model complexity and generalization. To that end, we attempted to identify the proper parameter grids for our selected modeling algorithms, by reviewing the relevant documentation for each algorithm as well as the bibliography. To achieve this objective, we employed the Grid Search Cross-Validation (GSCV) method, which explores a predefined set of hyper-parameter combinations to identify the optimal configuration that maximizes the model’s performance [67]. Table 4 presents a summarized view of the final selection of hyperparameters for each algorithm.

For the XGBoost model, we focused on tuning the number of trees (‘n_estimators’), the maximum of a tree (‘max_depth’), the learning rate i.e. the step size shrinkage used to prevent overfitting (‘learning_rate’), the subsample (‘subsample’) which denotes the fraction of observations to be randomly sampled for each tree. Furthermore, we tuned the subsample ratio of columns when constructing each tree (‘colsample_bytree’) and the ‘objective’ which defines how the loss function will be minimized.

For Random Forest, apart from the number of estimators and the maximum depth, we also focused on tuning the ‘min_samples_leaf’, which determines the minimum number of samples required to split an internal node, and ‘min_samples_split’, which indicates the minimum number of samples required to be at a leaf node.

Regarding LGBM, the number of leaves, the minimum data samples contained in a leaf, and the max number of bins (‘max_bin’) that feature values were further tweaked. Typically, a smaller number of bins may reduce training accuracy but may increase general power (i.e. dealing with overfitting).

The parameters fine-tuned for the CatBoost algorithm were the number of iterations, the learning rate which determines the reduction of the gradient descent step, the tree depth (‘depth’), and the L2 regularization parameter (‘l2_leaf_reg’), which deals with overfitting by forcing weights to be small, but not equaling them to zero.

For the MLP, the number of neurons defines the size of the hidden layers used. The initial learning rate controls the step size in updating the weights, The activation function dictates the standard neuron activation function per layer. The optimizer specifies the gradient descent optimizer. The ‘alpha’ parameter represents the strength of the L2 regularization term, which decreases the likelihood of overfitting.

Finally, the LSTM model consists of 2 hidden layers, with 128 units each. The RMSProp optimizer was selected, as a gradient-based optimization technique, which deals with the vanishing or exploding gradient of very complex functions like ANNs, by using a moving average of the squared gradient to normalize the gradient. Another important parameter is the dropout rate, which acts as a regularizer for LSTM to avoid potential overfitting.

After the data pre-processing, we finalized the training dataset, including both temporal and lagged features of the three separate time series (Energy load, PV generation, Temperature). Since we are dealing with time series forecasting, we preserved the order of time while splitting the data (temporal train test split). The first 80% of historical data is used for training and the remaining 20% of data points are used for testing the predictions of the models. For the entire dataset, this translates to roughly 18 months for training and the remaining 6 months for testing.

3.4.2 Hybrid model design

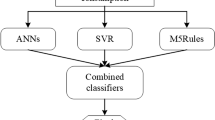

Aiming to further improve the accuracy of the predictions of the already trained base models, we developed a OSA-ELF Hybrid Model (OSA-ELF HM) which combines the benefits of both stacking and voting methods. A two-level stacking-based approach was implemented that combines the outputs of the first-level models on the second level with a meta-model, a voting regressor algorithm. We choose the three best overall performing base algorithms to synthesize our voting regressor, the CatBoost, the LGBM, and the Random Forest, selecting similar hyperparameters as our base algorithms. The overall architecture of our approach is depicted in Fig. 5. The Level-1 learners consist of all six trained base models while the Level-2 models average the predictions of three selected regressors.

Our strategy is to benefit from the forecasting potential of each heterogeneous base model and reduce their generalization error through the meta-model. At Level-1, the test feature dataset (X) is fed to the six already trained and tuned base learners. The outputs of the Level-1 models form a new training feature vector (\(\hat{y_1}, \hat{y_2},\ldots , \hat{y_6}\)). The new vector is then fed as training parameters into the Level-2 voting regressor model which outputs our final target \({\hat{y}}\), i.e., the one-step ahead load forecast.

In terms of ELF time horizon, both the base models and the hybrid model predict for one hour (i.e. 1 step) ahead, hence our approach falls in-between the VSTLF and STLF categories.

3.5 Experimental validation and comparison

3.5.1 Validation on new data

To further access the performance of our hybrid model approach we aimed to benchmark it against new and unseen data, that have similar properties as the CERTH-ITI Smart Home dataset, in order to fully utilize the capabilities and research design of our approach. In recent years, a lot of efforts focusing on building energy analytics have been made in order to create publicly available energy-related data measurements for providing test datasets or benchmarking modeling algorithms. One such prominent effort is linked with the Building Data Genome Project 2 (BDGP2) dataset, led by an international collaboration of building energy-related academics and experts [68]. BDGP2 is an open data repository derived from 1636 non-residential buildings. It includes hourly whole-building data from different kinds of (smart) meters: electricity load, heating, cooling water, steam, energy from solar (PV), and other meter data. In addition, this data set integrates outdoor temperature, humidity, and other climatic factors that can affect energy load. Hence, it matches the characteristics of the CERTH-ITI Smart Home dataset in order to benchmark our OSA-ELF HM. From the BDGP2 dataset, we selected the ‘Bobcat_education_Coleman’ building, an academic building located in the US. Two important reasons for choosing this particular building are that it exhibits solar power production (i.e. from PV) and matches the usage profile of the CERTH-ITI Smart Home as an office/academic type of building. The interval extracted (one-hour resolution), ranges from 01/2016 to 31/2016, to which we applied the same outlier detection strategy as our original dataset and removed extreme anomalies. A statistical comparison between the two datasets is summarized in Table 5.

3.5.2 Comparison with other methods

Considering the literature review presented in Sect. 2, there are several research studies that employ similar pre-processing steps, techniques and various ML algorithms, particularly ensemble methods, like our proposed approach. In most cases, research methods or approaches are carefully chosen, adjusted, and tailored to suit the specific nature of the data they are applied to, with the goal of achieving optimal results. However, in order to further validate the effectiveness of our method, we sought to conduct an experimental comparison with a relevant alternative method using the same dataset (CERTH-ITI) and under the same inputs.

To that end, we conducted an in-depth analysis of key relevant literature and approaches, particularly focusing on building applications, that can be directly compared to our results. After careful consideration, we selected the ensemble method developed by [40] to apply to our dataset for comparison purposes. The authors presented an ensemble method that emphasizes on preprocessing steps and feature selection. Similarly to our approach, their method combines eight base algorithms, and the weights of these algorithms are selected utilizing a Genetic Algorithm [69] as an optimizer. However, it is important to note that their forecasting horizon is one-day ahead, whereas ours is one-hour ahead. This difference limited our ability to extract similar temporal features for different parts of the day. While the approach presented by [40] achieved promising results (2.32% MAPE) on the tested dataset, there are two important differences from our method:

-

1.

Apart from temperature and other weather-related data, they utilized peak-power demand as a feature/input value, which further enhanced the performance of their model due to high correlation

-

2.

National holidays (i.e. employee absence days) were excluded as outliers, while our approach incorporated them in the training process, without any feature specifically distinguishing them

By conducting this experimental comparison, we aim to determine which approach yields better results in terms of forecasting accuracy, based on our dataset.

Overall, the complete workflow of our modelling approach is depicted in Fig. 6.

4 Experimental results

4.1 Base algorithms

The performance of the base algorithms was evaluated by the following evaluation metrics, MAPE, MAE, RMSE, R2, and ET, as shown in Table 6. In both cases, with and without hyperparameter tuning, overall, the sML algorithms demonstrate the best performance, compared to the ANNs. More precisely, regarding the entire dataset training and validation, all the produced evaluation metrics highlight CatBoost having a slight edge, compared to the other models, followed closely by the LGBM which offers similar results but with a reduced training elapsed time (ET).

Regarding the LGBM model, the impact of hyperparameter tuning on performance metrics was relatively modest. Specifically, MAPE exhibited an improvement of approximately -1% for LGBM and -11% for CatBoost. Likewise, MAE displayed a marginal enhancement of around \(-\)0.8% for LGBM and a more substantial improvement of \(-\)5.5% for CatBoost. These results suggest that while LGBM experienced limited advancements, CatBoost demonstrated significant progress through hyperparameter tuning. On the other hand, the Random Forest, Multi-Layer Perceptron (MLP), and Long Short-Term Memory (LSTM) models showcased higher gains in accuracy due to hyperparameter tuning and optimization. Notably, MLP, Random Forest, and LSTM exhibited the most substantial improvements among the base models. Figure 7, provides a visualization of the one-step forecasts for a typical 48 h time interval, for each of the implemented models.

The results for the Cross-Validation (CV) averaged metrics concerning the entire test dataset are presented in Table 7.

The cross-validated (CV) averaged metrics, obtained after hyperparameter tuning, exhibit slightly lower performance compared to the results of individual model runs. This observation suggests a potential presence of overfitting and could be attributed to the hyperparameter optimization process being conducted on the entire dataset’s training and test splits.Notably, the best-performing model based on the CV results aligns with the outcome of the single base learners case, namely CatBoost, which is followed closely by the second-best model, LGBM. Another noteworthy finding is the consistent performance of the LSTM, which maintains similar levels of accuracy compared to the single model results. This indicates that LSTM is more effective in mitigating potential overfitting and is better suited for handling new and unseen data that may possess conflicting patterns or frequent extremes.

4.2 Hybrid model performance

The OSA-ELF HM improves the forecasting accuracy of our target (Energy load) as all evaluation metrics are improved across the board compared with each individual base algorithm’s performance over the entire test dataset (Table 8). Focusing on MAPE and R2, the more on MAPE and R-squared, the OSA-ELF HM shows similar performance on the new unseen data as the original dataset. The ELF curves of the proposed method vs. the actual historical load for a typical 72-hour window are shown in Figs. 8 and 9.

In terms of experimental comparison with other notable ensemble methods, Table 9 illustrates the evaluation metrics of our approach and the ensemble approach implemented by [40], both tested against the CERTH-ITI Smart Home Dataset.

5 Discussion

Focusing on the performance of the base algorithms, CATBoost and LGBM produced the best forecasting results, with minimal execution times. At the same time, the ANNs developed (MLP, LSTM) required longer training/execution times since they incorporate more expensive computations, and failed to achieve improved performance over the sML base algorithms.

The most important features/predictors, at least for the sML algorithms were the previous two-hour energy load value (T-1, T-2) and the hour of the day. Overall, proper feature selection seems to be more important than the selection of base algorithms or evaluation techniques. To that end, a customized and heterogeneous feature set was synthesized, based on relevant literature findings and trial and error benchmarking. This contributed to a significant increase in the forecasting accuracy of the developed model, even more than the hyperparameter tuning exercise. The developed OSA-ELF HM model is superior to the base ML algorithms, as it improves forecasting accuracy. The ensemble technique we implemented, enhances the generalization of the model and better tackles potential overfitting. By utilizing the outcomes of the pre-trained base algorithms to retrain a second (meta) model (regressor), enables it to be able to better adapt to new data. This creates a more robust and interpretable approach, without adding excessive complexity.

In terms of comparative analysis, as a general indication, most of the relevant scientific papers identified by reviewing the literature demonstrated a MAPE in the range of 5–15%, hence our developed model falls closer to the higher end of this performance margin. We consider MAPE as the most representative metric due to its independence from the scale and magnitude of the target variable (Energy load). It also allows for direct comparisons with other pertinent scientific research, providing a standardized measure of forecasting accuracy. Moreover, one of the primary motivations behind our work and research goals was a very relevant publication [11] that involved experimental modelling of the same dataset, produced a forecasting model that achieved a MAPE of around 13%. Our aim was to develop a forecasting approach that could produce better forecasting accuracy, as direct comparisons can be made, which was achieved, without adding excessive complexity

Seeking further validation, the hybrid model was tested on unseen data, and performed well, achieving a MAPE of around 5.39%. This strongly indicates, that our model can be applied to similar smart building case studies for ELF. Furthermore, we directly compared our approach with an alternative ensemble approach, also applied to a building case study. The evaluation was performed on our original dataset, and the results demonstrated that our hybrid model outperformed the alternative approach. In such direct comparisons, it is important to highlight that typically alternative techniques are selected, customized, and adapted to match the unique characteristics of the data being analyzed in order to attain optimal results. Factors such as data granularity and scale are influential in this process. For instance, when examining the load consumption profile of an entire region, the volatility in terms of abnormal fluctuations and outliers, on a daily basis, may be considerably lower, compared to a single building.

6 Conclusions

This paper investigated relevant scientific literature, reviewing factors affecting building energy load patterns, pre-processing, and evaluation techniques. We aimed to develop the best possible forecasting approach regarding a smart building’s energy load, taking into account its unique characteristics, such as the presence of photovoltaics and the office usage nature of the building.

To achieve this, we focused on examining some of the well-known ML forecasting algorithms and ensemble methods, followed by the eventual deployment of interpretable base models. Extensive parameter tuning, feature selection, synthesis, and transformation took place to maximize model performance. The end product was a one-step ahead forecasting approach that includes the integration of multiple sML and DL algorithms, utilizing ensemble methods, which was benchmarked against new unseen data.

The novel aspect of this work is attributed to a robust ensemble approach which employs the best-performing predictive algorithms and produces a fine-tuned hybrid model. The developed model effectively addresses the issue of overfitting and demonstrates adaptability to energy load timeseries datasets of varying sizes and patterns. This stands due to the combination of heterogeneous trained base algorithms, the outputs of which are used to train a voting regressor meta-model. This meta-model acts as a normalization mechanism for new data inputs. In comparison to closely related literature findings, our approach showed above-average results. In addition, beyond theoretical claims, we conducted experimental comparisons to assess the performance of our hybrid model, by:

-

1.

Validating our hybrid model on new (unseen) field data

-

2.

Applying a similar ensemble approach, from a relevant research study, on our dataset and under the same inputs

Both comparisons served as an important test of the model’s robustness and its ability to handle novel data inputs. The result of the comparative analysis, not only confirmed the improved performance of our hybrid model, but also further validated its potential for practical applications in ELF, particularly in the context of building case studies.

6.1 Limitations-biases

The findings are subject to certain limitations or biases that might affect the validity of the results, the most important ones are summarized in the following items.

-

A.

The first potential bias is related to the training dataset itself. The initial dataset contained many missing or non-valid values, that were partly filled out via interpolation and aggregation, which limited the size of the training data. This is especially relevant to the performance of DL models (e.g. LSTM), which, as the literature suggests, typically require large amounts of data to achieve higher levels of accuracy. Furthermore, our dataset originates from an office space building, with distinct business hours patterns, hence it limits the overall robustness of our model, against, for example, data deriving from residential buildings.

-

B.

Another potential limitation is related to the exogenous parameters our developed model requires. More specifically the model requires ambient temperature measurements and energy generation from PV. The second attribute may be more rarely available, especially for a conventional or older building. However, we consider that the accuracy loss of removing this feature would be minimal, based on its feature importance score.

-

C.

Our paper recognizes the existence of various alternative time series forecasting algorithms, ensemble techniques, and approaches. However, the selection and development of the predictive algorithms presented are considered up-to-date, popular and efficient methods with several applications for time series forecasting. In the case of predictive algorithms, representative and dissimilar configurations are presented, for example, ANNs, such as MLP, and LSTM, as well as traditional ML models (e.g. XGBoost, Random Forest, LGBM).

6.2 Implications

Building-related ELF, produces predictions, not only on the lower brackets of the electricity networks, namely residential complexes, but also for large-scale consumers, such as commercial and high-tech industrial buildings. Overall, highly-accuracy ELF remains an open challenge for energy sector stakeholders, becoming even more critical, in light of recent policy updates towards further mitigating carbon emissions and augmenting the penetration of Renewable Energy Resources (RES) regarding the building sector. Such is the newly revised Energy Performance of Building Directive 2021/12/14EU (EPBD) which obligates EU Member States to achieve a minimum 55% reduction in greenhouse gas (GHG) emissions by 2030, compared with 1990 levels [70].

One of the primary challenges in the energy sector is ensuring the most efficient and dependable functioning of power systems. The integration of RES, for instance, can reduce daily energy demand changes. However, the operation of such systems is intermittent. Novel Decision Support Systems (DSSs) are necessary to facilitate their seamless integration. A promising innovative approach to overcome these obstacles focuses on the flexibility that may be achieved from distributed loads, such as buildings, which may provide Demand Response Services (DRSs). Such services, are increasingly adopted in smart buildings, allow users to monetize their flexibility, and utilize electricity in a highly efficient and financially rewarding manner, while also supporting an optimal energy balance. This may potentially contribute to i) energy cost reduction in energy transactions between Aggregators and Distribution System Operators (DSOs) and ii) improved energy distribution by allowing energy trade-offs between prosumers [71, 72].

Moreover, STLF has now gained paramount importance with the inclusion of smart grids in cities, and smart energy management systems also integrating electric vehicle charging infrastructure. This transition enables the real-time collection of load data at higher geographical granularity and frequency than in the past [73]. Such increased data availability allows the development of more localized ELF models, towards improving response times and rapid energy resource allocation to maintain optimal grid function. This research aimed to further improve building ELF accuracy and establishes a concrete baseline for experimenting with data for real-world ELF assessments regarding the build environment, towards improving energy operational security and energy savings.

6.3 Future work

he scope of experimental comparisons within our work could be further extended. An extensive experimental comparison could be a subject for future work. Such an undertaking would involve a meticulous evaluation of our method by implementing other relevant and established ensembl approaches, on the same dataset and under the same inputs. Additionally, potential enhancements and refinements to our existing methodology could be incorporated into this comprehensive analysis, further augmenting its effectiveness and applicability. This would contribute to a more comprehensive understanding of the strengths, limitations, and overall performance of our method.

Additional real-world energy load data entries for training our base algorithms could contribute towards improving the accuracy and robustness of our approach. Furthermore, the buildings examined in our case study are used primarily as office spaces, hence data from other types of buildings (e.g. residential or industrial) with PV installations and ideally weather information would provide a more comprehensive view of how to increase the forecasting accuracy, robustness, and overall applicability.

Regarding ANNs implementation, larger, more diverse training datasets and a more thorough selection of hyperparameters are needed to better assess the potential in ELF. Future work should also expand towards testing additional DL architectures and supplementing the data representation possibly creating other alternative features to further enhance the model accuracy. Another research direction is to expand the proposed single-step approach to a multi-step forecasting approach, focusing more on the STLF horizon.

References

Nik V M, Perera ATD, Chen D (2021) Towards climate resilient urban energy systems: a review. Natl Sci Rev 8. https://doi.org/10.1093/nsr/nwaa134

Jing Z, Cai M, Pipattanasomporn M, Rahman S, Kothandaraman R, Malekpour A, Paaso EA, Bahramirad S ( 2019) Commercial building load forecasts with artificial neural network. In: 2019 IEEE power and energy society innovative smart grid technologies conference, ISGT 2019 https://doi.org/10.1109/ISGT.2019.8791654

Al-Obaidi K, Hossain M, Alduais N, Al-Duais H, Omrany H, Ghaffarianhoseini A (2022) A review of using IoT for energy efficient buildings and cities: a built environment perspective. Energies 15. https://doi.org/10.3390/en15165991

de Mattos Neto PSG, de Oliveira JFL, Bassetto P, Siqueira HV, Barbosa L, Alves EP, Marinho MHN, Rissi, GF, Li F (2021) Energy consumption forecasting for smart meters using extreme learning machine ensemble. Sensors 21. https://doi.org/10.3390/s21238096

Shohan MJA, Faruque MO, Foo SY(2022) Forecasting of electric load using a hybrid lstm-neural prophet model. Energies 15. https://doi.org/10.3390/en15062158

Koukaras P, Gkaidatzis P, Bezas N, Bragatto T, Carere F, Santori F, Antal M, Ioannidis D, Tjortjis C, Tzovaras D (2021) A tri-layer optimization framework for day-ahead energy scheduling based on cost and discomfort minimization. Energies 14. https://doi.org/10.3390/en14123599

Mystakidis A, Ntozi E, Afentoulis K, Koukaras P, Giannopoulos G, Bezas N, Gkaidatzis PA, Ioannidis D, Tjortjis C, Tzovaras D ( 2022) One step ahead energy load forecasting: a multi-model approach utilizing machine and deep learning. In: 2022 57th International universities power engineering conference (UPEC), pp 1– 6. https://doi.org/10.1109/UPEC55022.2022.9917790

Bennett C, Stewart RA, Lu J (2014) Autoregressive with exogenous variables and neural network short-term load forecast models for residential low voltage distribution networks. Energies 7:2938–2960. https://doi.org/10.3390/en7052938

Wahab A, Tahir MA, Iqbal N, Ul-Hasan A, Shafait F, Kazmi SMR (2021) A novel technique for short-term load forecasting using sequential models and feature engineering. IEEE Access 9:96221–96232. https://doi.org/10.1109/ACCESS.2021.3093481

Marino DL, Amarasinghe K, Manic M (2016) Building energy load forecasting using deep neural networks. In: IECON Proceedings (Industrial Electronics Conference), 7046–7051. https://doi.org/10.1109/IECON.2016.7793413

Koukaras P, Bezas N, Gkaidatzis P, Ioannidis D, Tzovaras D, Tjortjis C (2021) Introducing a novel approach in one-step ahead energy load forecasting. Sustain Comput: Inform Syst32. https://doi.org/10.1016/j.suscom.2021.100616

Hsiao YH (2015) Household electricity demand forecast based on context information and user daily schedule analysis from meter data. IEEE Trans Ind Inf 11:33–43. https://doi.org/10.1109/TII.2014.2363584

Hou T, Fang R, Tang J, Ge G, Yang D, Liu J, Zhang W (2021) A novel short-term residential electric load forecasting method based on adaptive load aggregation and deep learning algorithms. Energies 14. https://doi.org/10.3390/en14227820

Alamaniotis M, Bargiotas D, Tsoukalas LH (2016) Towards smart energy systems: application of kernel machine regression for medium term electricity load forecasting. Springerplus 5:1–15. https://doi.org/10.1186/s40064-016-1665-z

Arvanitidis AI, Bargiotas D, Daskalopulu A, Kontogiannis D, Panapakidis IP, Tsoukalas LH (2022) Clustering informed MLP models for fast and accurate short-term load forecasting. Energies 15. https://doi.org/10.3390/en15041295

Bouktif S, Fiaz A, Ouni A, Serhani MA (2018) Optimal deep learning lstm model for electric load forecasting using feature selection and genetic algorithm: comparison with machine learning approaches. Energies 11. https://doi.org/10.3390/en11071636

Khairalla MA, Ning X, AL-Jallad NT, El-Faroug MO (2018) Short-term forecasting for energy consumption through stacking heterogeneous ensemble learning model. Energies 11. https://doi.org/10.3390/en11061605

Kuster C, Rezgui Y, Mourshed M (2017) Electrical load forecasting models: a critical systematic review. Sustain Cities Soc 35:257–270. https://doi.org/10.1016/j.scs.2017.08.009

Hewamalage H, Bergmeir C, Bandara K (2021) Recurrent neural networks for time series forecasting: current status and future directions. Int J Forecast 37:388–427. https://doi.org/10.1016/j.ijforecast.2020.06.008

Mystakidis A, Tjortjis C (2019) Big data mining for smart cities: predicting traffic congestion using classification. In: The 10th international conference on information, intelligence, systems, and applications: 15–17 July 2019, Patras, Greece

Salamanis AI, Xanthopoulou G, Bezas N, Timplalexis C, Bintoudi AD, Zyglakis L, Tsolakis AC, Ioannidis D, Kehagias D, Tzovaras D (2020) Benchmark comparison of analytical, data-based and hybrid models for multi-step short-term photovoltaic power generation forecasting. Energies 13. https://doi.org/10.3390/en13225978

Dubey AK, Kumar A, García-Díaz V, Sharma AK, Kanhaiya K (2021) Study and analysis of SARIMA and LSTM in forecasting time series data. Sustain Energy Technol Assessments 47. https://doi.org/10.1016/j.seta.2021.101474

Masum S, Liu Y, Chiverton J (2018) Multi-step time series forecasting of electric load using machine learning models. Proc ICAISC 2018:158–159

Papadopoulos S, Karakatsanis I (2015) Short-term electricity load forecasting using time series and ensemble learning methods. In: 2015 IEEE power and energy conference, PECI 2015. https://doi.org/10.1109/PECI.2015.7064913

Menculini L, Marini A, Proietti M, Garinei A, Bozza A, Moretti C, Marconi M (2021) Comparing prophet and deep learning to ARIMA in forecasting wholesale food prices. Forecasting 3:644–662. https://doi.org/10.3390/forecast3030040

Siami-Namini S, Tavakoli N, Namin AS ( 2019) The performance of LSTM and BiLSTM in forecasting time series. In: 2019 IEEE international conference on big data (big data)

Bezas N, Timplalexis C, Salamanis A, Karapatsias V, Ioannidis D, Kehagias D (2021) Novel feature extraction and model retraining techniques for short-term and day-ahead residential load forecasting

Kohzadi N, Boyd MS, Kermanshahi B, Kaastra I (1996) A comparison of artificial neural network and time series models for forecasting commodity prices. Neurocomputing 10:169–181

Calkoen F, Luijendijk A, Rivero CR, Kras E, Baart F (2021) Traditional vs. machine-learning methods for forecasting sandy shoreline evolution using historic satellite-derived shorelines. Remote Sensing 13:1–21. https://doi.org/10.3390/rs13050934

Makridakis S, Spiliotis E, Assimakopoulos V (2022) M5 accuracy competition: results, findings, and conclusions. Int J Forecast 38:1346–1364. https://doi.org/10.1016/j.ijforecast.2021.11.013

Chen T, Guestrin C (2016) Xgboost : a scalable tree boosting system. In: Proceedings of the ACM SIGKDD international conference on knowledge discovery and data mining 13–17-August–2016, 785– 794. https://doi.org/10.1145/2939672.2939785

Ke G, Meng Q, Finely T, Wang T, Chen W, Ma W, Ye Q, Liu T-Y ( 2017) Lightgbm: a highly efficient gradient boosting decision tree. In: Advances in neural information processing systems 30 (NIP 2017)

Ju Y, Sun G, Chen Q, Zhang M, Zhu H, Rehman MU (2019) A model combining convolutional Neural Network and LightGBM algorithm for ultra-short-term wind power forecasting. IEEE Access 7:28309–28318. https://doi.org/10.1109/ACCESS.2019.2901920

Dorogush AV, Ershov V, Gulin A (2018) Catboost: gradient boosting with categorical features support. CoRR arXIv: abs/1810.11363 (2018)

Prokhorenkova L, Gusev G, Vorobev A, Dorogush AV, Gulin A (2017) Catboost: unbiased boosting with categorical features

Breiman L (2001) Random forests. Mach Learn 45:5–32. https://doi.org/10.1023/A:1010933404324

Murtagh F (1991) Multilayer perceptrons for classification and regression. Neurocomputing 2(5):183–197. https://doi.org/10.1016/0925-2312(91)90023-5

Hochreiter SJ, Schmidhuber J (1997) Long short-term memory. Neural Comput 9:1735–1780

Ruiz-Abellón, M.D.C., Gabaldón, A., Guillamón, A (2018) Load forecasting for a campus university using ensemble methods based on regression trees. Energies 11. https://doi.org/10.3390/en11082038

Fan C, Xiao F, Wang S (2014) Development of prediction models for next-day building energy consumption and peak power demand using data mining techniques. Appl Energy 127:1–10. https://doi.org/10.1016/j.apenergy.2014.04.016

Wolpert DH (1992) Stacked generalization. Neural Netw 5:241–259. https://doi.org/10.1016/S0893-6080(05)80023-1

Luo X, Sun J, Wang L, Wang W, Zhao W, Luo AAX, Wu J, Wang J-H, Zhang Z, Member S (2018) Short-term wind speed forecasting via stacked extreme extreme learning machine with generalized correntropy. IEEE Trans Ind Inf 2018:14. https://doi.org/10.1109/TII.2018.2854549

Ma Z, Dai Q (2016) Selected an stacking ELMs for time series prediction. Neural Process Lett 44:831–856. https://doi.org/10.1007/s11063-016-9499-9

Osamor VC, Okezie AF (2021) Enhancing the weighted voting ensemble algorithm for tuberculosis predictive diagnosis. Sci Rep 11. https://doi.org/10.1038/s41598-021-94347-6

An K, Meng J (2010) Voting-averaged combination method for regressor ensemble. In: 6th International conference on intelligent computing: advanced intelligent computing theories and applications 6215:540–546. https://doi.org/10.1007/978-3-642-14922-1_67

Botchkarev A (2019) A new typology design of performance metrics to measure errors in machine learning regression algorithms. Interdiscip J Inf Knowl Manag 14:45–79. https://doi.org/10.28945/4184

de Myttenaere A, Golden B, Grand BL, Rossi F (2016) Mean absolute percentage error for regression models. Neurocomputing 192:38–48. https://doi.org/10.1016/j.neucom.2015.12.114

Colin Cameron A, Windmeijer FAG (1997) An r-squared measure of goodness of fit for some common nonlinear regression models. J Econom 77(2):329–342

Wang Z, Wang Y, Srinivasan RS (2018) A novel ensemble learning approach to support building energy use prediction. Energy Buildings 159:109–122. https://doi.org/10.1016/j.enbuild.2017.10.085

Liu Y, Luo H, Zhao B, Zhao X, Han Z ( 2018) Short-term power load forecasting based on clustering and XGBoost Method. In: 2018 IEEE 9th international conference on software engineering and service science (ICSESS), pp 536– 539. https://doi.org/10.1109/ICSESS.2018.8663907

Liao X, Cao N, Li M, Kang X (2019) Research on short-term load forecasting using xgboost based on similar days. Proceedings—2019 international conference on intelligent transportation, big data and Smart City, ICITBS 2019, 675–678. https://doi.org/10.1109/ICITBS.2019.00167

Bot K, Ruano A, da Graça Ruano M ( 2020) Forecasting electricity consumption in residential buildings for home energy management systems. In: Communications in Computer and information science 1237 CCIS, 313– 326. https://doi.org/10.1007/978-3-030-50146-4_24

Zheng J, Xu C, Zhang Z, Li X ( 2017) Electric load forecasting in smart grid using LSTM based recurrent neural network. In: 51st Annual conference on information sciences and systems (CISS). https://doi.org/10.1109/CISS.2017.7926112

Peñaloza AA, Leborgne RC, Balbinot A (2022) Comparative analysis of residential load forecasting with different levels of aggregation. In: The 8th international conference on time series and forecasting, 29. https://doi.org/10.3390/engproc2022018029

Wang Y, Zhang N, Chen X (2020) A short-term residential load forecasting model based on lstm recurrent neural network considering weather features. Energies 14. https://doi.org/10.3390/en14102737

Zang H, Xu R, Cheng L, Ding T, Liu L, Wei Z, Sun G (2021) Residential load forecasting based on LSTM fusing self-attention mechanism with pooling. Energy 229. https://doi.org/10.1016/j.energy.2021.120682

Han L, Peng Y, Li Y, Yong B, Zhou Q, Shu L (2019) Enhanced deep networks for short-term and medium-term load forecasting. IEEE Access 7:4045–4055. https://doi.org/10.1109/ACCESS.2018.2888978

Phyo PP, Byun YC, Park N (2022) Short-term energy forecasting using machine-learning-based ensemble voting regression. Symmetry 14. https://doi.org/10.3390/sym14010160

Zhao Z, Xia C, Chi L, Chang X, Li W, Yang T, Zomaya AY (2021) Short-term load forecasting based on the transformer model. Information (Switzerland) 12. https://doi.org/10.3390/INFO12120516

Moon J, Kim Y, Son M, Hwang E (2018) Hybrid short-term load forecasting scheme using random forest and mlp. Energies 11. https://doi.org/10.3390/en11123283

Massaoudi M, Refaat SS, Chihi I, Trabelsi M, Oueslati FS, Abu-Rub H (2021) A novel stacked generalization ensemble-based hybrid LGBM-XGB-MLP model for short-term load forecasting. Energy 214. https://doi.org/10.1016/j.energy.2020.118874

Din GMU, Marnerides AK ( 2017) Short term power load forecasting using deep neural networks. In: 2017 International conference on computing, networking and communications (ICNC). https://doi.org/10.1109/ICCNC.2017.7876196

Zyglakis L, Zikos S, Kitsikoudis K, Bintoudi AD, Tsolakis AC, Ioannidis D, Tzovaras D (2020) Greek smart house nanogrid dataset Zenodo. https://doi.org/10.5281/ZENODO.4246525

Spiliotis E, Assimakopoulos V, Makridakis S, Assimakopoulos V (2020) The M5 accuracy competition: results, findings and conclusions. Int J Forecast 38. https://doi.org/10.1016/j.ijforecast.2021.11.013

Oh S (2022) Predictive case-based feature importance and interaction. Inf Sci 593:155–176. https://doi.org/10.1016/j.ins.2022.02.003

Surakhi O, Zaidan MA, Fung PL, Motlagh NH, Serhan S, Alkhanafseh M, Ghoniem RM, Hussein T (2021) Time-lag selection for time-series forecasting using neural network and heuristic algorithm. Electronics (Switzerland) 10. https://doi.org/10.3390/electronics10202518

Gowriswari S, Brindha S ( 2022) Hyperparameters optimization using gridsearch cross validation method for machine learning models in predicting diabetes mellitus risk. In: 2022 International conference on communication, computing and Internet of Things (IC3IoT), pp 1– 4. https://doi.org/10.1109/IC3IOT53935.2022.9768005

Miller C, Kathirgamanathan A, Picchetti B, Arjunan P, Park JY, Nagy Z, Raftery P, Hobson BW, Shi Z, Meggers F (2020) The Building data genome project 2, energy meter data from the ASHRAE Great Energy Predictor III competition. Sci Data 7. https://doi.org/10.1038/s41597-020-00712-x

Katoch S, Chauhan SS, Kumar V (2020) A review on genetic algorithm: past, present, and future. Multimedia Tools Appl 80(5):8091–8126. https://doi.org/10.1007/s11042-020-10139-6

Attia S, Kurnitski J, Kosiński P, Borodiņecs A, Belafi ZD, István K, Krstić H, Moldovan M, Visa I, Mihailov N, Evstatiev B, Banionis K, Čekon M, Vilčeková S, Struhala K, Brzoň R, Laurent O (2022) Overview and future challenges of nearly zero-energy building (nZEB) design in Eastern Europe. Energy Build 267. https://doi.org/10.1016/j.enbuild.2022.112165

Mystakidis A, Ntozi E, Afentoulis K, Koukaras P, Gkaidatzis P, Ioannidis D, Tjortjis C, Tzovaras D (2023) Energy generation forecasting: elevating performance with machine and deep learning. Computing. https://doi.org/10.1007/s00607-023-01164-y

Koukaras P, Tjortjis C, Gkaidatzis P, Bezas N, Ioannidis D, Tzovaras D (2022) An interdisciplinary approach on efficient virtual microgrid to virtual microgrid energy balancing incorporating data preprocessing techniques. Computing 104:209–250. https://doi.org/10.1007/s00607-021-00929-7

Dang-Ha T-H, Bianchi FM, Olsson R (2017) Local short term electricity load forecasting: automatic approaches

Acknowledgements

This research is co-financed by Greece and the European Union (European Social Fund-SF) through the Operational Programme «Human Resources Development, Education and Lifelong Learning 2014-2020» in the context of the project “Support for International Actions of the International Hellenic University”, (MIS 5154651).

Funding

Open access funding provided by HEAL-Link Greece.

Author information

Authors and Affiliations

Contributions

Nikolaos Tsalikidis: Conceptualization, Investigation, Formal analysis, Methodology, Implementation, Writing-Original draft and Editing. Aristeidis Mystakidis: Supervision, Methodology, Writing-Reviewing and Editing Christos Tjortjis: Supervision, Conceptualization, Editing, Validation Paraskevas Koukaras: Methodology, Writing-Reviewing and Editing, Validation Dimosthenis Ioannidis: Conceptualization, Data acquisition, Reviewing.

Corresponding author

Ethics declarations

Competing interests

Researchers in Information Technologies Institute, Centre of Research & Technology or School of Science and Technology, International Hellenic University.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Tsalikidis, N., Mystakidis, A., Tjortjis, C. et al. Energy load forecasting: one-step ahead hybrid model utilizing ensembling. Computing 106, 241–273 (2024). https://doi.org/10.1007/s00607-023-01217-2

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00607-023-01217-2

Keywords

- Energy load forecasting

- Time series forecasting

- Machine learning

- Short-term prediction

- Ensemble methods

- Smart building