Abstract

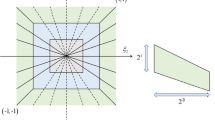

Deep belief networks (DBNs) are used in many applications, such as image processing and pattern recognition. However, they require the input data to be vectorized, which discards the high-dimensional structure of the data and valuable spatial information. The classical DBN model is built from restricted Boltzmann machines (RBMs) with full connectivity between the visible units and the hidden units. As a result, it has a large number of parameters that must be trained on a large number of training samples, and in practice it is difficult to obtain so many samples. To solve this problem, this paper proposes a matrix-variate deep belief network (MVDBN) model built from matrix-variate restricted Boltzmann machines (MVRBMs) whose parameters are constrained to a canonical polyadic (CP) decomposition. An MVDBN stacks two or more MVRBMs, whose input and latent variables are in matrix form. MVDBNs have far fewer model parameters, which helps avoid overfitting, and their deeper layers extract better features, so an accurate model is easier to learn. We demonstrate the capacity of MVDBNs on handwritten digit classification and medical image processing. We also extend MVRBMs to a multimodal case for image super-resolution.
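To make the parameter-count argument concrete, here is a minimal sketch, not the authors' algorithm. It assumes a binary MVRBM with an I x J visible matrix X and a K x L hidden matrix Y, hidden conditionals P(Y_kl = 1 | X) = σ(β_kl + Σ_ij W_ijkl X_ij), and a rank-R CP weight tensor W_ijkl = Σ_r A_ir B_jr C_kr D_lr. The exact parameterization used in the paper is not given in this excerpt. All function names, the bias symbol β, the hidden size, and the rank are hypothetical. The sketch counts weights for a dense RBM on vectorized input versus the CP factors. It also computes the hidden probabilities directly from the factors, using Σ_ij W_ijkl X_ij = Σ_r C_kr D_lr (a_rᵀ X b_r), so the full tensor is never formed.

```python
import math
import random

# Hypothetical sketch: a matrix-variate RBM whose 4-way weight tensor
# W[i][j][k][l] is ASSUMED to have the rank-R CP form
#   W_ijkl = sum_r A[i][r] * B[j][r] * C[k][r] * D[l][r].


def param_counts(I, J, K, L, R):
    """Weight counts (biases excluded) for an I x J input and a K x L hidden layer."""
    dense = I * J * K * L        # classical RBM on vectorized input: (I*J) x (K*L) matrix
    cp = R * (I + J + K + L)     # four CP factor matrices: I x R, J x R, K x R, L x R
    return dense, cp


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def hidden_probs_cp(X, A, B, C, D, beta):
    """P(Y_kl = 1 | X) computed directly from the CP factors, never forming W."""
    I, J, R = len(X), len(X[0]), len(A[0])
    # s[r] = a_r^T X b_r is computed once and shared by every hidden unit (k, l).
    s = [sum(A[i][r] * X[i][j] * B[j][r] for i in range(I) for j in range(J))
         for r in range(R)]
    return [[sigmoid(beta[k][l] + sum(C[k][r] * D[l][r] * s[r] for r in range(R)))
             for l in range(len(D))]
            for k in range(len(C))]


def cp_to_dense(A, B, C, D):
    """Materialize the full I x J x K x L tensor (only for checking small cases)."""
    R = len(A[0])
    return [[[[sum(A[i][r] * B[j][r] * C[k][r] * D[l][r] for r in range(R))
               for l in range(len(D))] for k in range(len(C))]
             for j in range(len(B))] for i in range(len(A))]


def hidden_probs_dense(X, W, beta):
    """Reference: the same conditional computed with the explicit 4-way tensor."""
    I, J = len(X), len(X[0])
    return [[sigmoid(beta[k][l] + sum(W[i][j][k][l] * X[i][j]
                                      for i in range(I) for j in range(J)))
             for l in range(len(beta[0]))]
            for k in range(len(beta))]


def rand_matrix(rows, cols):
    return [[random.uniform(-1.0, 1.0) for _ in range(cols)] for _ in range(rows)]


if __name__ == "__main__":
    # 28 x 28 inputs (MNIST-sized); the 25 x 25 hidden layer and rank R = 10 are arbitrary.
    print(param_counts(28, 28, 25, 25, 10))  # (490000, 1060)

    # Check that the factored computation matches the dense tensor on a small case.
    random.seed(0)
    I, J, K, L, R = 4, 3, 2, 5, 2
    X = [[random.randint(0, 1) for _ in range(J)] for _ in range(I)]
    A, B, C, D = rand_matrix(I, R), rand_matrix(J, R), rand_matrix(K, R), rand_matrix(L, R)
    beta = rand_matrix(K, L)
    p_cp = hidden_probs_cp(X, A, B, C, D, beta)
    p_dense = hidden_probs_dense(X, cp_to_dense(A, B, C, D), beta)
    print(max(abs(p - q) for rc, rd in zip(p_cp, p_dense) for p, q in zip(rc, rd)))
```

Under these assumptions, the dense weight count grows with the product I·J·K·L, while the CP count grows only with the sum I + J + K + L. The maximum difference printed by the check should be at the level of floating-point rounding.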

Acknowledgements

We thank Dr. Zaiwen Liu and all our reviewers for their valuable discussions and suggestions.

Funding

This work was supported by the National Natural Science Foundation of China (NSFC) [Grant Number 11901319].

Additional information

Communicated by I. IDE.

X. Hou, G. Qi: Joint first author.

About this article

Cite this article

Hou, X., Qi, G. Matrix variate deep belief networks with CP decomposition algorithm and its application. Multimedia Systems 26, 571–583 (2020). https://doi.org/10.1007/s00530-020-00666-5