Abstract

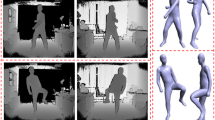

Human pose reconstruction from depth images has received much attention for human-centric applications, and pixel-level body-part labeling has been shown to be efficient for this task. This paper presents an accurate method for reconstructing human pose from a single depth image by combining body-part labeling with nearest pose-matching techniques. New pixel-level depth-difference and local curvature-encoding features are introduced to provide more contextual depth information for pixel-level body-part labeling. To reduce misclassification error, a corrective step inspired by pose-matching techniques is also proposed: the method extracts depth region proposals from a reference pose and finds the best match using PCT coefficients to correct uncertain labels. Tests on a set of synthetic and natural depth poses showed improved body-part labeling accuracy compared with state-of-the-art methods. In addition, the method measured human range of motion more accurately than previous methods and the Kinect camera.

Additional information

Communicated by Y. Zhang.

Appendix

Definitions of the upper-extremity functional exercises evaluated in this paper and the corresponding range-of-motion (ROM) measurement formulations.

The figure and measurement criterion for each movement are described below.

1. Waist bending: The person stands with the hands on the waist and the legs slightly apart, and then bends at the waist. The angle calculated for this exercise is indicated in Fig. 10:

$${\text{Waist bending}}={\theta _4}={\tan ^{ - 1}}\left( {\frac{{l{x_{hd}}}}{{l{z_{rd}}}}} \right).$$ (13)

2. Elbow flexion: The arms hang at the sides and are then bent at the elbow joint, as shown in Fig. 9. The movement is complete when the fingers touch the shoulder:

$${\text{Elbow flexion 1}}={\theta _1}={\cos ^{ - 1}}\left( {\frac{{D_{{\text{eh}}}^{2}+D_{{\text{es}}}^{2} - D_{{\text{sh}}}^{2}}}{{2{D_{{\text{eh}}}}{D_{{\text{es}}}}}}} \right)$$ (14)

$${\text{Elbow flexion 2}}={\theta _2}={\cos ^{ - 1}}\left( {\frac{{D_{{\text{eh}}}^{2}+D_{{\text{es}}}^{2} - D_{{\text{sh}}}^{2}}}{{2{D_{{\text{eh}}}}{D_{{\text{es}}}}}}} \right).$$ (15)

3. Shoulder horizontal abduction/adduction: In this exercise, the hands are moved horizontally with respect to the camera until they reach the opposite shoulder (adduction) and then return to the initial position (abduction), as shown in Fig. 9:

$${\text{Shoulder horizontal abduction}}={\theta _1}={\cos ^{ - 1}}\left( {\frac{{D_{{\text{se}}}^{2}+D_{{\text{ns}}}^{2} - D_{{\text{ne}}}^{2}}}{{2{D_{{\text{ns}}}}{D_{{\text{se}}}}}}} \right).$$ (16)

4. Head rotation: In this exercise, the head is rotated about the X-, Y-, and Z-axes. The head is treated as a local coordinate frame that defines the X-, Y-, and Z-axes (Fig. 9):

$${\text{Head rotation}}_x={\tan ^{ - 1}}\left( {\frac{{l{y_{hd}}}}{{l{z_{nd}}}}} \right)$$ (17)

$${\text{Head rotation}}_y={\tan ^{ - 1}}\left( {\frac{{l{y_{hd}}}}{{l{z_{nd}}}}} \right)$$ (18)

$${\text{Head rotation}}_z={\tan ^{ - 1}}\left( {\frac{{l{x_{hd}}}}{{l{y_{nd}}}}} \right).$$ (19)
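The ROM formulas in this appendix reduce to two computations: the law of cosines applied to inter-joint distances (Eqs. 14 to 16) and an inverse tangent of a ratio of two lengths (Eqs. 13 and 17 to 19). The Python sketch below illustrates both under stated assumptions. It reads each D term as the Euclidean distance between two 3D joint positions (with e, h, and s taken to mean elbow, hand, and shoulder), and it takes the two length terms of the arctangent formulas as given inputs, since their definitions are not reproduced in this appendix. It also uses `atan2` in place of a plain arctangent of the quotient, which gives the same value when the denominator is positive and avoids a division by zero. The function names and sample coordinates are hypothetical and do not come from the paper.

```python
import math


def joint_angle_deg(vertex, end_a, end_b):
    """Angle at `vertex` (degrees) of the triangle vertex/end_a/end_b.

    Law of cosines, the form used in Eqs. 14 to 16:
    cos(theta) = (d_va^2 + d_vb^2 - d_ab^2) / (2 * d_va * d_vb)
    """
    d_va = math.dist(vertex, end_a)
    d_vb = math.dist(vertex, end_b)
    d_ab = math.dist(end_a, end_b)
    if d_va == 0 or d_vb == 0:
        raise ValueError("vertex coincides with an end point")
    cos_theta = (d_va**2 + d_vb**2 - d_ab**2) / (2 * d_va * d_vb)
    # Clamp to [-1, 1] to absorb floating-point rounding before acos.
    cos_theta = max(-1.0, min(1.0, cos_theta))
    return math.degrees(math.acos(cos_theta))


def ratio_angle_deg(numerator, denominator):
    """Inverse tangent of numerator/denominator in degrees.

    Assumption: atan2 is substituted for tan^-1(a/b); the two agree
    whenever the denominator is positive.
    """
    return math.degrees(math.atan2(numerator, denominator))


# Hypothetical joint positions in metres (illustrative only).
shoulder = (0.0, 0.0, 0.0)
elbow = (0.0, -0.3, 0.0)
hand = (0.3, -0.3, 0.0)

# Elbow angle in the form of Eq. 14: vertex at the elbow.
elbow_angle = joint_angle_deg(elbow, hand, shoulder)

# Arctangent form of Eqs. 13 and 17 to 19 with two hypothetical lengths.
example_rotation = ratio_angle_deg(0.1, 0.1)
```

With these sample values the forearm is perpendicular to the upper arm, so `elbow_angle` is approximately 90 degrees, and equal length terms give an `example_rotation` of approximately 45 degrees.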

Cite this article

Farnoosh, A., Ali, NG. Accurate body-part reconstruction from a single depth image. Multimedia Systems 25, 165–176 (2019). https://doi.org/10.1007/s00530-018-0594-9
