Abstract

We aim to investigate how closely neural networks (NNs) mimic human thinking. As a step in this direction, we study the behavior of artificial neuron(s) that fire most when the input data score high on some specific emergent concepts. In this paper, we focus on music, where the emergent concepts are those of rhythm, pitch and melody as commonly used by humans. As a black box to pry open, we focus on Google’s MusicVAE, a pre-trained NN that handles music tracks by encoding them in terms of 512 latent variables. We show that several hundreds of these latent variables are “irrelevant” in the sense that can be set to zero with minimal impact on the reconstruction accuracy. The remaining few dozens of latent variables can be sorted by order of relevance by comparing their variance. We show that the first few most relevant variables, and only those, correlate highly with dozens of human-defined measures that describe rhythm and pitch in music pieces, thereby efficiently encapsulating many of these human-understandable concepts in a few nonlinear variables.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

To better understand the manner in which artificial intelligence organizes information according to emergent concepts similar to those used by humans (as opposed to simply memorizing answers thanks to large processing power), we perform several tests on a neural network (NN) designed to reproduce (and interpolate between) music tracks.

More specifically, our study proceeds in two steps:

-

1.

Consider a pre-trained NN as a black box, open it and see if we can extract patterns from the way it has encoded information, so that we can begin to make some basic sense of its inner workings.

-

2.

If we find such patterns, ask whether this organization can be compared with pre-defined quantities used by humans to describe emergent concepts.

Other authors have shown how to take specific NNs and extract from them variables that are meaningful to humans for various types of data, showing for instance which neurons fire specifically when a CNN is shown images of ski resorts [1], asking whether some neuron’s output encapsulate concepts such as conserved quantities in mechanics problems [2], finding the number of independent variables in a physical system [3], or quantifying the type and degree of symmetry in paintings [4].

To focus on a different type of data, we choose the following NN as a testing ground: Google Magenta’s MusicVAE [5], a Variational Auto-Encoder (VAE) [6] which uses a 512-dimensional latent space to represent a few bars of music. We remind the reader that a VAE is a deep NN trained to produce outputs that closely match the input data. For this, it uses layers adapted to the data type at hand: dense layers for basic cases, convolution layers for images, recurrent layers for sequences such as music. Other examples of exploring the latent space of a VAE trained with music can be found in Refs. [7,8,9,10,11, 11,12,13].

If one were to simply use the reconstruction error as the loss function, the NN could simply learn to memorize each of the individual inputs. To avoid this, the VAE includes two related modifications: one of the intermediate layers (called the latent space) is taken to be stochastic, and the loss function is modified to balance the effects of that stochastic layer’s probability distribution against the reconstruction error.

At inference time, data are fed into the VAE from the “encoder” side (the part of the NN that is upstream of the latent space, i.e., to the left in Fig. 1). Each individual data input produces a probability distribution that is defined as a multivariate Gaussian, the means and variances of each of the (512) dimensions being given by (1024) neurons in this layer, see Fig. 1. The 512 dimensions are called latent dimensions.

A schematic depiction of the task performed by MusicVAE: a piece of music is encoded into a (multivariate Gaussian) distribution in a 512-dimensional latent space. That distribution can then be sampled from and decoded back to produce a similar “avatar” piece of music. In practice, the model we use focuses on monophonic melodies and uses recurrent networks in both its encoder and decoder

To “decode” this latent encoding back into the original data space (and hopefully produce a close match the original input), a sample is taken from the random distribution for this particular input, and inference is run through the second (decoder) part of the NN (the one downstream from the latent space, i.e., to the right in Fig. 1). For the stochastic property of the layer to play its role and spread each input’s encoding to ensure continuity and avoid memorization, the VAE is encouraged to keep each data point’s encoded probability distribution close to a common prior—the identity multivariate Gaussian in the present case. This is achieved by redefining the full loss function as the sum of the reconstruction error and of a measure of the distance between the latent distribution encoding a given input, and the target prior (namely the Kullback–Leibler divergence) Footnote 1.

The specific neurons we are interested in analyzing are the 512 that define the latent space distribution’s central value for each music input, and which we call latent variables: our aim is to study what musical information they represent. For most of this paper, we are not interested in the 512 neurons that produce the variances, except insofar as they help us learn about the neurons encoding the 512 central values.

In practice, we ask:

-

1.

Whether the latent dimensions can be ordered by relevance/importance,

-

2.

How many latent dimensions are really necessary for the VAE to perform well, i.e., can some latent dimensions be classified as irrelevant?

-

3.

Whether some of the relevant latent dimensions can be singled out as particularly important?

-

4.

If these most relevant latent dimensions correspond to some concepts of musicality as understood by humans?

Minimizing the size of latent spaces and the number of units in an NN in general may be useful to design nimbler models that are faster to train. Yet the implications of this work go beyond practical simplifications and beyond applications to music. They should be understood in the broader concept of other studies that have shown that some neurons in a NN encode information that is highly correlated with concepts developed by humans to describe the same data, be it music, painting, physical laws or images, as it helps us understand better how NNs (and humans) might learn and encode information. We believe it is important to be able to extract patterns of organization within NNs and to pinpoint neurons that encode specific information to provide another step toward Explainable AI. This could have practical implications in terms of ethics for instance, as pruning specific neurons could help in removing unwanted biases.

In Sect. 2, we illustrate how MusicVAE works on the first 2 bars of the melody “Twinkle, twinkle, little star”. This allows us to introduce the structure of the latent space, hinting at a division of latent dimensions into two sets: relevant and irrelevant.

In Sect. 3, we take a large sample of musical tunes to show that this ordering of latent dimensions according to relevance carries through for music tracks beyond “Twinkle, twinkle, little star”.

In Sect. 4, we show which latent dimensions encode the information of the human-defined quantities of rhythm and pitch.

In Sect. 5, we illustrate how sequences of random notes are encoded in the latent space and use this to test our assumptions about the difference between relevant dimensions and irrelevant dimensions.

In Sect. 6, we show how the analysis carries over to chunks of 16 bars of music and discuss the concept of melody.

2 “Twinkle, twinkle, little star” and MusicVAE.

To introduce some basic ideas, we focus as an example on the melody “Twinkle, twinkle, little star”, starting from its sheet music, or rather piano-roll representation as depicted at the top of Fig. 2, where the x-axis depicts time and the y-axis encodes frequency in the logarithmic scale of MIDI notes (equivalent to numbering the corresponding keys on a piano keyboard from left to right, including black keys).

As for any input, “Twinkle, twinkle” gets encoded into a vector \(\mu _{[1,\ldots ,512]}^{\text{twinkle}}\) of 512 central values, and a vector \(\sigma _{[1,\ldots ,512]}^{\text{twinkle}}\) of 512 standard deviations, defining a 512-dimensional Gaussian distribution in latent space.

Sampling from this 512-dimensional distribution and passing it through the decoder part of MusicVAE yields back another 2-bar note sequence that is similar but not necessarily identical to the original track, such as the one in the lower plot in Fig. 2.

Given the stochastic nature of the VAE, the output may change between two inferences on the same input. Figure 3 illustrates this in the case of our “Twinkle, twinkle” example. The top plot depicts the standard deviations \(\sigma _{[0,\ldots ,511]}^{\text{twinkle}}\) of the multivariate Gaussian distribution for the first 100 of the 512 latent dimensions, sorted from smallest \(\sigma \) to largest. The middle plot shows the first 100 central values \(\mu _{[1,\ldots ,512]}^\text{twinkle}\) in the same order.

Latent encoding for the note sequence in Fig. 2. We only show the 100 dimensions with the smallest variance, in order. Standard deviations are at the top, means in the middle, and a random sample from the distribution at the bottom. The vertical red dashed line hints at a possible split between relevant latent dimensions (to the left), and irrelevant latent dimensions to the right

Together, these lists of 512 \(\mu \)’s and 512 \(\sigma \)’s entirely specify a multivariate Gaussian distribution from which we can sample and decode to obtain variations on the original input (“Twinkle, twinkle”): the bottom plot in Fig. 3 represents a sample drawn from this distribution. Once decoded, this sample yields the melody at the bottom of Fig. 2. On the other hand, decoding the central values themselves (i.e., the values in the middle plot of Fig. 3) yields back the original version of “Twinkle, twinkle, little star”, i.e., the piano-roll at the top of Fig. 2 Footnote 2.

From the top plot of Fig. 3, we can see that the encoding of “Twinkle, twinkle” is very precisely specified (small values of \(\sigma \)) along the first few dimensions in this ordering, indicating that MusicVAE uses these dimensions to encode a lot of important information about this particular piece of music, whereas the dimensions with large spreads could be approximated by standard Gaussians with \(\sigma \approx 1\) and \(\mu \approx 0\): in the top two plots of Fig. 3. What remains to be seen is whether this is also the case for other music tracks beyond “Twinkle, twinkle”, and whether the latent dimensions remain in the same order of relevance from song to song.

3 The structure of MusicVAE’s latent space

For the application described here, we used MusicVAE’s 2-bar model, which considers monophonic sequences of notes quantized down to 16th notes: this yields 32 discrete time stamps to choose from for a note to start. As for the pitches, MIDI files specify \(2^7 = 128\) discrete MIDI notes, i.e., slightly more than the 88 keys on a piano, to which we need to add the possibility of starting a silence, and the possibility of holding a frequency for the next interval, i.e., 130 discrete possibilities. With this quantization, there are \(4 \times 10^{67}\) possible 2-bar note sequences, whereas a latent space of 512 dimensions—even if these dimensions were perceptrons (i.e., binary neurons)—can describe over \(2^7 \approx 10^{154}\) possibilities and is thus over-dimensioned to describe all possible note sequences, let alone all the ones that can be considered music to a human ear. The subspace of note sequences that can be called “music” is even smaller.

The question is: how many latent dimensions do we really need in order to describe music?

To begin answering this question, we can ask whether the pattern observed in Fig. 3 is true for other pieces of music, i.e., if one latent dimension is specified with great precision (small spread) for one music piece, will that also be true for other tracks? The answer is a resounding “YES”.

To establish this, we pick at random from the 100,000 tracks in the Lakh MIDI Dataset [15]. We use about 10,000 tracks, from which the standard algorithm in MusicVAE extracts 5 melodies each, yielding 50,000 melodies. We then encode these melodies and study their encodings in latent space Footnote 3.

While for a given music track, we could order the latent dimensions as in Fig. 3, this may not be ideal, as each music track may produce a slightly different ordering of the \(\sigma \) values. We could order the latent dimensions according to the average over music tracks of the width of each latent dimension. Yet, it is logical to think that what matters is not simply that the width is large on average, but that it is larger than the typical central value for that same latent dimension.

For instance, latent dimensions which typically have \(\mu \approx 0\) and \(\sigma \approx 1\) are dimensions for which the training did not require a trade-off between the reconstruction loss and the KL divergence, and so the training produced a situation where this dimension essentially remains close to the default values of \(\mu = 0\) and \(\sigma = 1\) as enforced by the KL divergence with a normal prior: for these specific dimensions, the posterior is equal to the prior, i.e., it has collapsed [16]. Such latent dimensions play little role in the reconstruction or generation of data, and will therefore be called “irrelevant” and can be identified by \(|\mu |\ll \sigma \).

On the other hand, latent dimensions which encode relevant information will have required a trade-off between the reconstruction term and the KL term in the loss during training and, therefore, will typically produce \(\sigma < 1\) as well as nonzero values of \(\mu \ne 0\) for many music tracks (though not necessarily for all).

In summary, a latent dimension which encodes a song with \(|\mu |\gtrsim \sigma \) is one that needs to be very precisely specified in order to reproduce that music track, i.e., it has minimized the reconstruction error at the cost of the KL term. If this is true for most music tracks, we will label this latent dimension as “relevant”.

In fact, if we look across a large number of music tracks, we can compare the variability of the central value across music tracks, compared to the average of the widths, and thus order the latent dimensions k according to the value of:

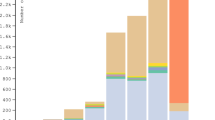

Selecting latent dimensions with relevance above a given cut-off should allow us to separate between relevant and irrelevant latent dimensions, but this first requires setting the cut-off. From Fig. 4, we can see one latent dimension with particularly high relevance \(> 15\), then 36 more dimensions with relevance larger than 0.5, then one latent dimension with relevance around 0.5, and finally a group of hundreds of latent dimensions with relevance lower than 0.5. Taking a cut-off of about 0.5 in relevance will separate the two groups: latent dimensions with high relevance (i.e., relevance above 0.5) and latent dimensions with a lower relevance (below 0.5). In this Figure, we can also see that the separation between relevant and irrelevant is well-defined as soon as we have about 100 encodings to average over, while the exact ordering of the 37 relevant dimensions is quite robust once we have gathered over 1000 encodings.

Latent dimension relevance calculated by applying the statistical estimator defined in 1 on samples of 10 to 10000 melodies. The chosen cut-off of 0.5 is depicted as a horizontal dashed black line

As a consequence of our definition of relevance, one can expect that ablating an irrelevant dimensions (setting it to zero, which is nearly its central value) will have very little effect on the accuracy of the music reproduction, while setting a relevant dimension to zero will drastically impact accuracy.

We can see in Fig. 5 that ablating all 475 irrelevant latent dimensions has very little effect, whereas ablating one of the first two relevant latent dimensions noticeably decreases the accuracy. There we use a “realistic” metric of accuracy as an intersection over union of non-trivial tokens: we count how many non-trivial tokens are common between input and output, dividing by the number of instants for which either input or output included a non-trivial tokenFootnote 4.

We can check this by looking at how much the central values of each melody vary along these same dimensions. In the lower plot of Fig. 6, we depict the central values for a random set of tracks in our database, using the same 100 latent coordinates as the top plot that depicts the variability from song to song for the width of each latent dimension. The first thing to notice is that, for all dimensions with a wide spread (the ones with \(\sigma \approx 1\) in the upper plot of the same Figure , i.e., to the right of the vertical dashed red line), the central value is always close to 0: all note sequences are encoded with the same unit Gaussian for these hundreds of dimensions, and hence, we have hundreds of irrelevant dimensions in latent space that carry very little musical information, more specifically 475 such dimensions (give or take 1 depending on the definition of the cut-off from Fig. 4).

On the other hand, for the relevant dimensions, i.e., the 37 latent dimensions to the left of the vertical dashed red line in Fig. 6, which have narrow distributions, we find that the central value fluctuates from track to track: these are the dimensions that contain the actual information about music. Not only are the central values of the relevant dimensions well-specified for each song, they vary a lot from song to song.

For our dataset, the correlation matrix in latent space is shown in Fig. 7: the 37 relevant latent dimensions we identified in Fig. 6 are quite uncorrelated. This is the subspace we will be most interested in the remainder of this paperFootnote 5 .

4 Latent variables for pitch and rhythm

In this Section, we attempt to disentangle how MusicVAE stores the information about music’s most fundamental qualities: rhythm and pitch.

For the present study, we do not need to delve into technical details of the definitions of “rhythm”, “pitch” or “melody”: suffice it to say that pitch is related to the notes’ basic frequencies (or alternatively, their location on the keyboard, with high-pitched notes to the right and lower frequencies to the left), independently of the moment/time they are played or of their relation to each other. On the other hand, rhythm is related to the temporal structure of music, i.e., the duration and arrangement of the succession of notes in time, independently of their pitch/frequency (i.e., independently of which key on the keyboard they correspond to). Finally, melody refers to relations or comparisons between the pitches of various notes played at different times in the sequence.

What the reader needs to know beyond this basic distinction is that musicologists have constructed dozens of variables to quantify various qualities of music, called “music features”Footnote 6: for monophonic music (no more than a single note played at any given time), these can be categorized under one of the three umbrellas of rhythm, pitch and melody. This implies that rhythm, pitch and melody are not usually thought of as uni-dimensional quantities by a human listenerFootnote 7.

We use the Python music21 libraryFootnote 8 to extract human-defined music features. To compute correlations that capture nonlinear dependencies, we use the correlation coefficient defined in the Python phik library [17].

The result is shown in Fig. 8. We can see that rhythm features (starting with the letter R at the bottom right in the Figure) are heavily correlated among themselves, as are some subsets of pitch features (from P11 to P15). Other pitch and melody features also form subsets of highly correlated features, but tend to correlate with other groups as well, even groups starting with a different letter (R or P).

Within our set of music tracks, we can compute correlations between latent dimension central values and human-defined music features, as displayed in Fig. 9. The salient points to notice are as follows:

-

1.

The first relevant latent variable correlates more with several rhythm music feature than any other relevant variable does.

-

2.

The second relevant latent variable correlates more with several pitch music feature than any other relevant variable does.

Figure 10 explicitly displays the high correlations between the most relevant latent variable and rhythm features. In Fig. 11, we can check that the second latent variable is (non-monotonously) correlated with several features.

Whereas humans require several music features to describe rhythm (respectively pitch), it seems as though MusicVAE condenses all of these into a single latent variable that encapsulates a lot of the information that we would intuitively qualify as rhythm (respectively pitch).

This is akin to what happens in other studies of emergent concepts in NNs, where single neurons quantify complicated concepts that may seem intuitive to humans but would be hard to describe explicitly [1,2,3]. Beyond the difference in analytic power due to its nonlinear abilities, the NN also seems to confirm that these concepts are very relevant (as indicated by the relevance of the corresponding latent dimensions) to correctly reproduce music, and it in fact suggests that rhythm is even more relevant than pitch in describing 2 bars of musicFootnote 9.

5 Random sequences of notes

MusicVAE has been trained on more than a million tracks of music of various genres, and it encodes these tracks using essentially the 37 relevant dimensions. We also saw that MusicVAE mostly uses 2 latent dimensions to represent rhythm and pitch. We can now ask what happens if we provide an input that is not real music: where does it get encoded in latent space? Are rhythm, pitch or the 37 relevant dimensions enough to distinguish music from noise?

5.1 Generating the data

We create 50,000 note sequences by switching on a random number of notes. Given that 32 sixteenth notes would fill exactly two bars at tempo 4/4, we draw the number of note switching-on events from a uniform distribution on integers from 2 to 32. Notes can only be switched on at discrete intervals (every sixteenth note), and we make sure that there is always exactly one note being played at any given timeFootnote 10.

The pitch for each note is selected from a uniform distribution over the integers from 30 to 100 in MIDI notation. This means that the pitches of the various notes in the sequence are uncorrelated and, as such, follow no melodic structure.

5.2 Distinguishing music and noise using individual latent dimensions

In Fig. 12, we show the histograms for the excitation of the four most relevant latent variables we identified above, in the case of the 50,000 melodies extracted from real music, and the 50,000 random note sequences we generated.

In the second plot from left in Fig. 12, we see that the pitches (second most relevant latent variable) of our random sequences differ from those of real music, as could be expected given the very broad distribution of pitches in our random sequences, whereas real melodies have narrower ranges of pitches.

As for rhythm on the other hand (leftmost plot in Fig. 12), the distribution of our random sequences of notes does not seem to differ that much from that of real music. This could be because we are only looking at 2 bars of music, admittedly a short sequence of notes most of the time, or because looking at one single latent dimension at a time is not enough to distinguish real music from random notes.

5.3 Counting excitations

Instead of looking at individual latent variables for each encoding, we first consider all latent variables at once, and ask whether it is possible to distinguish real music from random notes without even having identified relevant and non-relevant latent variables. We could do this at the level of samples for each encoded note sequence, but that would introduce more randomness, so we stick to using central values here. In practice, since we are asking whether the VAE can be used as a detector for music versus other sounds, we might as well use directly the information of the central values, which is provided by the VAE when performing a forward pass.

The result is shown in Fig. 13 where we see that, on average, random note sequences excite more latent dimensions than real music, as expected since the random notes are outliers that do not belong to the training distribution modeled by the VAE.

Since we know which latent dimensions actually encode the most relevant information about music, we can actually go one step further and refine this analysis by performing separate averages on the 37 most relevant latent dimensions and on the remaining 475, as shown in Fig. 14. There we see that the average excitation of relevant latent dimensions is larger than 1 for random notes, whereas it is slightly lower than 1 for real music. For the case of the irrelevant latent dimensions, the split is around 0.1, again with real music providing less excitation than random notesFootnote 11.

6 Looking for melody (variables)

As we can see from Fig. 9, we have not found a variable that can be conclusively said to encapsulate the melody information in the 2-bar case, at least not independently from rhythm: the second relevant dimension was correlated with many melody (M) features, but even more so to rhythm (R) features. This could be due to the fact that 2 bars for music are not enough to give a strong melodic signal, and we therefore turn to the 16-bar case.

6.1 Specifics of the 16-bar case

For the 16 bar case, the analysis of relevance of latent dimensions in Fig. 15, looking for two well-separated sets of latent dimensions, leads us to choose a cut-off of about 2 in relevance, see Fig. 15. We see that the 16-bar case requires about double the number of relevant dimensions than the 2-bar case: precisely 77 relevant dimensions, and that there are four highly relevant dimensions (relevance > 50).

Figure 16 collects the information that was presented in the previous sections for the case of 2 bars of music, but applied to sequences of 16 bars. We can pick out relevant dimensions 1 and 2, which are heavily correlated with pitch features, and relevant dimensions 3 and 4, which are heavily correlated with rhythm features. This exhausts the set of 4 highly relevant dimensions identified in Fig. 15.

Looking for latent variables that represent melody, we notice that the third and fourth most relevant latent dimensions are correlated with melody, but, as in the 2-bar case, they are mostly correlated with rhythm (see Fig. 9. Possible melody latent dimensions show up much later in Fig. 16, in relevance positions 27, 49, 63, 65 and 70. This might indicate that MusicVAE does not rely strongly on melody to organize its understanding of music, but that melody either appears as a consequence of the more basic concepts of pitch and rhythm, or only plays a secondary role.

7 Conclusion

We have studied how a VAE trained on a million music tracks organizes its 512-dimensional latent space into hundreds of “irrelevant dimensions” that are barely used to encode music, and a few dozens of “relevant dimensions” that actually encode musical information. It does make sense that the VAE only uses a fraction of its 512 dimensions for music, as even the space of random note sequences would not require such a large latent space.

Returning to the case of real music, and in particular 2-bar melodies, we found that MusicVAE uses 37 relevant dimensions to actually encode musical information, and of these, the first two in order of importance can be clearly identified as corresponding to pitch and rhythm.

Indeed, we have shown that several quantities defined in the literature to describe rhythm are correlated almost exclusively with the most relevant dimension, which nonlinearly encodes this complex information into a single real variable. The same occurred for pitch with the second most relevant latent variable, but not for melody, which does not appear to be encoded independently from rhythm for such short music tracks.

Moving on to chunks of 16 bars of music, we saw that MusicVAE uses 77 relevant dimensions to encode music, but most pitch information is encoded nonlinearly into the first two most important relevant dimensions. The next two relevant dimensions by order of importance encode several rhythm features. Dedicated latent dimensions that only encode melody only show up much further in order of relevance.

Whereas previous approaches have focused on enforcing a linear mapping of the human-defined quantities onto the latent space, we suggest that the nonlinear change of representation is what allows the VAE to extract “principal coordinates” that diagonalize the problem, thereby simplifying and extending the human-defined variables.

Data availability statement

Data will be made available on reasonable request.

Notes

The respective weights of the two terms in the loss function can be adjusted to emphasize reconstruction accuracy or continuity by introducing a relative coefficient \(\beta \) [14], but we will not delve into that in the present paper, since we view MusicVAE as a pre-trained black box.

While decoding the central value of the distribution for “Twinkle, twinkle” reproduces exactly the input, this will not generally be the case for all music pieces, although we typically expect the most accurate reconstruction to be obtained when using the central values of the distribution instead of a random sample.

Note that the Lakh MIDI Dataset is itself contained in the larger dataset that was used to train Google’s MusicVAE [5]. This will pretty much be the case for any dataset we can lay our hands on, since the authors of [5] scraped the web for MIDI tracks, gathering a private dataset of 1.5 million unique MIDI files. It is unlikely that we can find a dataset that is not contained within theirs. However, this is not a problem for the present study, as we are studying quantities such as correlations between latent variables or the effects of ablation, and are not concerned with evaluating the actual performance of the model on an independent test set.

The (optimistic) measure of accuracy used for training counts the proportion of correctly predicted tokens, including the (most-common) trivial token that corresponds to the information “keep playing the same note or silence”.

Note that these are correlations between central values of the encoding for each melody, not between samples from the distributions for each melody. The correlation matrix at the level of samples is essentially diagonal across the whole \(512 \times 512\) matrix, not just the subset of \(37 \times 37\). This is due to the model being designed with a 512-dimensional Gaussian prior with a diagonal covariance matrix: i.e., it is entirely specified by 512 central values and 512 standard deviations, without cross-correlations. In other words, by working at the level of central values and variances instead of using samples, we directly have access to the information we want, avoiding the noise from the random sampling.

See the whole list of music features here: https://jmir.sourceforge.net/manuals/jSymbolic_manual/featureexplanations_files/featureexplanations.html. To reduce the number of music features considered, we only include scalar music features and exclude vector features.

Even though one can sometimes hear it said that a music piece has “rhythm” or does not have it: we believe this is more often an expression of taste than an objective measurement.

Available at: https://web.mit.edu/music21/.

Instead of debating whether this statement should be understood as a fundamental truth about music, we remind the reader that the setup of the current study is still quite contrived, with notes quantized in pitch and in duration, and the training data being heavily biased toward Western music. We also note that a different ordering will be obtained in Section 6 for 16 bars of music.

To achieve this, each note is extended from it switch-on time (note-on event) until the start of the next note. To avoid boundary issues, the first note starts at time 0, while the last one extends until the end of the second bar. The total duration of the two bars is set by drawing from a uniform distribution of integer number of seconds between 1 and 8 (both inclusive).

Note that real music tracks statistically do excite some of these 475 latent dimensions: whereas the central values of these 475 latent dimensions for most music tracks are close to zero, there is some variability from melody to melody, as seen in Fig. 6. In fact, standard deviation of the central value for real music is typically of order 0.07, which provides the scale for the RMS of central values for these irrelevant dimensions seen in the right-hand side of Fig. 14.

References

Bau D, Zhu J-Y, Strobelt H, Lapedriza A, Zhou B, Torralba A (2020) Understanding the role of individual units in a deep neural network. Proc Natl Acad Sci 117(48):30071–30078

Iten Raban, Metger Tony, Wilming Henrik, del Rio Lídia, Renner Renato (2020) Discovering physical concepts with neural networks. Phys Rev Lett 124:010508

Chen B, Huang K, Raghupathi S, Chandratreya I, Du Q, Lipson H (2021) Discovering state variables hidden in experimental data

Gabriela Barenboim, Johannes Hirn, Veronica Sanz (2021) Symmetry meets AI. SciPost Phys 11:014

Roberts A, Engel JH, Raffel C, Hawthorne C (2018) and Douglas Eck. A hierarchical latent vector model for learning long-term structure in music. CoRR, arXiv:abs/1803.05428

Kingma DP, Welling M (2013) Auto-encoding variational bayes. 2nd International Conference on Learning Representations, ICLR 2014 - Conference Track Proceedings

Roberts A, Engel JH, Oore S, Eck D (2018) Learning latent representations of music to generate interactive musical palettes. In: IUI Workshops.

Pati A, Lerch A, Hadjeres G (2019) Learning to traverse latent spaces for musical score inpainting. arXiv preprint arXiv:1907.01164

Pati A, Lerch A (2019) Latent space regularization for explicit control of musical attributes. In ICML Machine Learning for Music Discovery Workshop (ML4MD), Extended Abstract, Long Beach, CA, USA

Mezza AI, Zanoni M, Sarti A (2023) A latent rhythm complexity model for attribute-controlled drum pattern generation. EURASIP J Audio, Speech, Music Process 1:11

Daniel Rivero, Iván Ramírez-Morales, Enrique Fernandez-Blanco, Norberto Ezquerra, Alejandro Pazos (2020) Classical music prediction and composition by means of variational autoencoders. Appl Sci 10(9):3053

Bryan-Kinns N, Ford C, Chamberlain A, Benford SD, Kennedy H, Li Z, Qiong W, Xia GG, Rezwana J (2023) Explainable ai for the arts: Xaixarts. In: Proceedings of the 15th Conference on Creativity and Cognition, pages 1–7

Bryan-Kinns N, Banar B, Ford C, Reed CN, Zhang Y, Colton S, Armitage J (2023) Exploring xai for the arts: Explaining latent space in generative music. arXiv preprint arXiv:2308.05496

Burgess CP, Higgins I, Pal A, Matthey L, Watters N, Desjardins G, Lerchner A (2018) Understanding disentangling in \(\beta \)-vae

Raffel C (2016) Learning-based methods for comparing sequences, with applications to audio-to-midi alignment and matching

Lucas J, Tucker G, Grosse G, Norouzi M (2019) Understanding posterior collapse in generative latent variable models

Baak M, Koopman R, Snoek H, Klous S (2020) A new correlation coefficient between categorical, ordinal and interval variables with Pearson characteristics. Comput Stat Data Anal 152:107043

Acknowledgements

GB and JH acknowledge support from the Spanish grants PID2020-113334GB-I00 / AEI / 10.13039/501100011033 and CIPROM/2021/054 (Generalitat Valenciana). JH acknowledges support from the RESECARIN project TED2021-129682B-I00 from the Spanish Ministerio de Ciencia e Innovación. VS acknowledges support from the Generalitat Valenciana PROMETEO/2021/083 and the Ministerio de Ciencia e Innovacion PID2020-113644GB-I00. This project has received funding /support from the European Union’s Horizon 2020 research and innovation programme under the Marie Skłodowska-Curie grant agreement 860881-HIDDeN.

Funding

Open Access funding provided thanks to the CRUE-CSIC agreement with Springer Nature.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Barenboim, G., Debbio, L.D., Hirn, J. et al. Exploring how a generative AI interprets music. Neural Comput & Applic (2024). https://doi.org/10.1007/s00521-024-09956-9

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s00521-024-09956-9