Abstract

Clinical decision support systems (CDSSs) can effectively detect illnesses such as breast cancer (BC) using a variety of medical imaging techniques. BC is a key factor contributing to the rise in the death rate among women worldwide. Early detection will lessen its impact, which may motivate patients to have quick surgical therapy. Computer-aided diagnosis (CAD) systems are designed to provide radiologists recommendations to assist them in diagnosing BC. However, it is still restricted and limited, the interpretability cost, time consumption, and complexity of architecture are not considered. These limitations limit their use in healthcare devices. Therefore, we thought of presenting a revolutionary deep learning (DL) architecture based on recurrent and convolutional neural networks called Bi-xBcNet-96. In order to decrease carbon emissions while developing the DL model for medical image analysis and meet the objectives of sustainable artificial intelligence, this study seeks to attain high accuracy at the lowest computing cost. It takes into consideration the various characteristics of the pathological variation of BC disease in mammography images to obtain high detection accuracy. It consists of six stages: identifying the region of interest, detecting spatial features, discovering the effective features of the BC pathological types that have infected nearby cells in a concentrated area, identifying the relationships between distantly infected cells in some BC pathological types, weighing the extracted features, and classifying the mammography image. According to experimental findings, Bi-xBcNet-96 beat other comparable works on the benchmark datasets, attaining a classification accuracy of 98.88% in DDSM dataset, 100% in INbreast dataset with 5.08% and 0.3% improvements over the state-of-the-art methods, respectively. Furthermore, a 95.79% reduction in computing complexity was achieved.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

BC is one of the most widespread cancers in women and the top factor in cancer-related deaths worldwide. Early detection of BC improves the patient’s quality of life and raises their chances of survival. Additionally, it is possible to lower the afflicted patients’ death rate [1]. According to a study just released by the American Cancer Society [2], BC alone is thought to be the cause of 31% of all female cancer occurrences. It has the highest fatality rate of any kind of cancer in women worldwide. It is a tumor that is malignant and grows in the breast cells [3,4,5]. A tumor has the potential to spread to other parts of the body. BC is a global disease that causes havoc in the lives of women aged 25–50. As stated by the World Health Organization (WHO) [6], there are 2,261,419 new cases in 2020. It is also the primary factor of mortality among females worldwide [7, 8]. With 11.7% new cases in both sexes and 24.5% in women, BC has the greatest prevalence of any other cancer type on the globe in 2020, as shown in Figs. 1 and 2. If certain symptoms appear, BC is typically easy to recognize. On the other hand, some BC patients are women who exhibit no symptoms. Regular BC screening is therefore essential for early detection [7].

Early cancer detection, diagnosis, and treatment are crucial to the patient’s life since they can lower the risk of mortality. The period between getting a BC diagnosis and treatment starting is critical since any delay in detecting cancer in its early stages causes tumor development and treatment issues [9].

For the correct diagnosis of BC, several approaches have been devised. Breast screening, often known as mammography, is a method for detecting BC. It follows that accurate categorization of benign tumors is necessary for motivating patients to request the right treatment and receive an improved prognosis. Radiologists make judgments concerning breast masses based on their morphology and boundary characteristics in practice. The more irregular the form, the more likely it is that the lump is cancerous. In general, classification outcomes are strongly dependent on segmentation findings, and this consumes time and effort. As a result, several machine learning (ML) [10,11,12] and DL [13,14,15,16,17,18,19,20] methods have been utilized to make medical prognoses.

The majority of them call for the creation of a region of interest (ROI) before classifying lesions within it. As opposed to the ML techniques outlined above, DL systems that use an end-to-end network [21] may combine feature extraction and classification while also realizing automated feature learning. Despite the obvious benefits of DL and the apparent advancement in BC classification reported in [22,23,24], its applications in the field of healthcare are still restricted and limited. The softmax-cross-entropy loss, a popular loss function for training DL models, often overestimates probability predictions for training samples with one-hot labels [25]. These traditional DL models, such as AlexNet [26], GoogleNet [27], ResNet [28] VGGNet [29], and Inception [30], that have been extensively utilized in pathological picture analysis are hence prone to overfitting.

A Scopus analysis of publications in this field from 2018 to the present using the keyword as the following: diagnosis AND prognosis AND breast AND cancer AND DL OR ML; indicates that there is a need for further research to achieve new advances in this field. The statistics of Scopus publications are presented in Figs. 3 and 4. Although several methods have been proven, none of them can deliver an accurate and reliable outcome.

In addition, new improved DL algorithms are still required to acquire alternate responses to complicated BC real-world data or other medical data to achieve the sustainability goals and take serious steps toward Green-AI goals. These algorithms must accommodate resource limitations and the cost of time and energy to suit the nature of healthcare systems.

The need for AI processors has surged as a result of the AI explosion. Chipmaker NVIDIA announced in August 2023 that its second-quarter sales, driven by AI, reached a record $13.5 billion for the 3 months ending in July 2023 [31]. The company’s data center division showed a 141% growth from the previous quarter, indicating the growing demand for AI products and the potential for a substantial increase in AI’s energy footprint [31]. Concerns over the amount of electricity used and the possible effects of AI and data centers on the environment are brought up by this rapid development [32]. Sustainability research in AI has focused on the training phase of AI models, which is frequently thought to be the most energy expensive [33].

The insatiable appetite of CNNs for computational resources presents a growing challenge in today’s sustainability-conscious world. Training and deploying these powerful models require vast amounts of energy, generating substantial carbon footprints [34, 35]. However, a fascinating synergy emerges when we prioritize reducing the computational demands of CNNs. By streamlining these DL models, the world gain benefits for both performance and the environment. Thus, smaller, more efficient CNNs consume less energy during training and inference, directly translating to reduced carbon emissions. This aligns perfectly with the goals of green computing, where minimizing energy consumption is paramount [36, 37].

Additionally, optimized models require less powerful hardware, further lowering the environmental impact associated with manufacturing and maintenance. This shift also opens doors for deploying AI on resource-constrained devices, democratizing access to powerful technology without sacrificing sustainability [38]. Building medical technological systems that consume fewer resources and fewer costs is an essential step toward sustainability and reducing emissions resulting from the operation of these medical systems. Therefore, we seek to close the gap by addressing both challenges simultaneously, great precision must be attained with the least computational complexity, to meet the objectives of green artificial intelligence.

In this study, new model is proposed called Bi-xBcNet-96 to identify BC through X-rays, and this model aims to achieve high accuracy with the least complexity. This model consists of six stages as follows: (1) extracts region of interest, (2) detects spatial features, (3) reduces the computational complexity and discovers the effective features of the pathological types that have infected nearby cells in a concentrated area, (4) concentrates only on the most crucial infected cells in a sequence, (5) discovers the relationships between infected cells that are far apart in some pathological types of BC. (6) Weighs the extracted features and overcomes overfitting. Then, a sigmoid node is used to classify the mammography image.

The main contributions of this study are:

-

A novel model of DL is structured called Bi-xBcNet-96 to detect BC through X-rays images.

-

Two blocks of convolution neural network layers are designed to extract spatial features.

-

Three concatenated Xception blocks followed by Inception block are constructed to decrease computations and extract the effective characteristics of the pathological forms that have infected neighboring cells in a concentrated area.

-

Attention layers are also employed to reduce the computational complexity by successively focusing only on the most significant infected cells in succession.

-

Bi-directional Long Short-Term Memory (Bi-LSTM) layer is structured to discover the relationships between infected cells that are far apart in some pathological types of BC.

-

A dropout layer is also used to overcome the overfitting, followed by fully connected layers to weigh the extracted features of different pathological types of BC.

-

To address the issue of unbalanced class distribution, cost-sensitive learning is applied.

Six experiments are carried out to evaluate the structural efficiency of the proposed models. Experimental comparative studies are also implemented to test the efficiency of the proposed model with the DL models and state-of-the-art algorithms across DDSM and INbreast datasets. The experimental results showed that the accuracy of the system is 98.88% which is higher than other state-of-the-art algorithms; while, the degree of complexity is reduced by 95% to fit green AI goals. The remainder of this paper is organized as follows: The basic terminologies of breast tumors and ML are discussed in section two; while, the literature review is presented by examining the results of research methodologies connected to BCs and ML in section three. On the other hand, section four is concerned with the description of the proposed model. Section five analyzed and discussed the experimental results gleaned through real-world datasets. Finally, the paper is concluded in section six. Figure 5 presents paper outlines.

2 Background

There are many regions in the breast where BC might first start. Although some BCs (lobular cancers) can begin in the glands that produce breast milk, the majority of BCs (known as ductal cancers) grow in the ducts that bring milk to the nipple. Less frequent forms of BC include angiosarcoma and phyllodes tumors [4, 39, 40]. Sometimes, BCs appear in tissues other than the breast. Malignancies such as lymphomas and sarcomas are not commonly thought of as BC [41]. The next subsections go through the many categories of BC, its causes, symptoms, risk factors, and ways to prevent it. The key role of technology in BC diagnosis and detection will also be covered.

2.1 Overview of breast cancer

BC is a condition in which cancerous cells proliferate in the breast tissues. A tumor is a collection of unhealthy tissue. Breast tumors can be classified as “benign” (non-cancerous) or “malignant” (cancerous). Sometimes, the process of cell division goes wrong, causing new cells to develop or injured cells to stop dying when the body does not need them [42]. A medical expert skilled in the detection of breast illness, which is frequently an indication of BC, should then keep an eye on any new breast changes, including lumps, bumps, or skin changes [43].

Female age, sex, heredity, and having thick breasts are the most common and well-known risk factors for BC. The term “breast density” refers to the number of different tissues that make up a woman’s breasts as well as how they appear on mammography. Women with dense breasts are more likely to develop BC, and there is a correlation between women’s age and breast density; hence, younger women have denser breasts than older women [44].

Doctors analyze the size of the tumor and whether it has spread to lymph nodes or other places of the body to determine the cancer’s stage [45]. BC has the potential to be staged in numerous ways [46]. Stages 0–4 are grouped together in a single category, with subcategories at each step. Each of these important stages is discussed further below. Substages can offer information about the characteristics of a tumor, such as its HER2 receptor status [47]. (1) Stage 0: The cancer is still confined to the ducts of the breast and has not spread to the surrounding tissue. This is also known as ductal carcinoma in situ. (2) Stage 1: The tumor is small, up to 2 cm in diameter. It has not spread to the lymph nodes, but there may be small clusters of cancer cells in the lymph nodes. (3) Stage 2: The tumor is larger than 2 cm in diameter. It may have spread to the lymph nodes near the breast, but not too distant lymph nodes. (3) Stage 3: The tumor is large, up to 5 cm in diameter, and has spread to the lymph nodes near the breast. It may also have spread to distant lymph nodes. (4) Stage 4: The cancer has spread to other parts of the body, such as the liver, bones, lungs or brain.

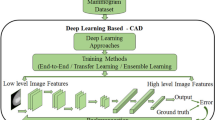

2.2 Computer-aided diagnostic systems of breast cancer

There are many studies that diagnose BC using radiographic data by a computer-aided diagnostic (CAD) system (e.g., benign vs. malignant) [48,49,50]. Breast cancer CAD systems make use of a variety of pattern recognition methods [51]. It is important to make these systems support Green-AI to make them eco-friendly and reduce technological emissions (Fig. 6).

A CAD system typically consists of three basic modules: mammography, which determines if a particular breast tumor is “round,” “oval,” “lobular,” “irregular,” or “architectural distortion.” Circumscribed oval and rounded masses are significant indicators of a benign lesion. In contrast, breast detection, segmentation, and classification boundaries, are often raised when masses of irregular form are present. Although mass detection is a difficult problem, it is important in the diagnosis of BC. If a lesion is present, the detection task is locating it on a mammogram. In general, detection consists of three modules: (1) suspicious region detection (by density, microcalcifications, and mass), (2) feature extraction, and (3) false positive region elimination.

The next step is mass segmentation, which involves dividing mammography pictures into sections with similar properties. The final step is mass classification, which labels regions of interest input image as either normal or mass based on abnormalities. Following that, Mass lesions are determined to be either benign or malignant. For the data training step, the classification of breast mass might be grouped. BC treatment would be considerably aided by breast tumor detection and breast density classification. Since normal dense tissue seem similar to masses on a mammogram, the high rate of false positives becomes the major challenge associated with using CAD systems for mass identification.

3 Literature review

The use of machine learning approaches for breast cancer classification on a variety of medical images, including pathology images, ultrasound, mammograph, and other medical imaging modalities, has transformed the area of oncology [52]. Techniques such as Support Vector Machines (SVM), Random Forest, Fuzzy cognitive map, and others have demonstrated outstanding accuracy in identifying and categorizing various types of breast lesions [53].

Machine learning algorithms can give useful insights into early detection, therapy planning, and prognosis prediction in breast cancer patients by evaluating subtle patterns and characteristics in these pictures. SVM’s capacity to establish optimal decision boundaries and Random Forest’s ensemble learning skills make them ideal for dealing with the complexity and unpredictability of imaging data [54]. The fuzzy cognitive map is a technology that not only automates the process of diagnosing but also serves as a background for openly expressing the data analysis process and the method of generating the outcome [55]. The use of these sophisticated approaches in breast cancer imaging analysis has significant potential for improving patient outcomes and advancing the discipline of oncology. The limitations of employing ML in BC classification when manipulating large datasets include computational complexity, the potential for overfitting, reduced interpretability, and scalability challenges.

In addition, several automated methods for classifying medical images have appeared; these systems employ various DL methodologies. DL models have been shown to be more accurate than traditional methods for medical diagnosis such as cancers and COVID-19 from X-rays and other types of medical scans.

J. Mozaffari et al. [56] discussed a wide range of DL models, such as VGGNet, GoogleNet, ResNet, DenseNet, CapsNet, MobileNet, and EfficientNet, that have been employed for COVID-19 identification. Data augmentation, transfer learning, and model architectural modifications have been performed to these models to increase their accuracy. The authors also highlight some of the difficulties in applying deep DL to medical diagnosis, such as the scarcity of data, processing time, and computational cost. These insights provide new avenues for investigating these issues in the context of future DL medical diagnosis models and taking steps toward sustainability.

Different approaches were also explored for BC diagnosis. To classify BC, distinguishing characteristics must be extracted and then classified [57, 58]. The next paragraphs address cutting-edge techniques for staging BC that have been proposed.

It was utilized as a pre-screening stage by Samala et al. [59] to detect suspicious grouped calcifications, followed by deep CNN was designed to distinguish true calcifications from false positives, leading to better performance than using a deep learning CNN solely. As a result of many studies [59,60,61], it appears that, while next-generation deep learning CNNs outperform conventional CAD, combining the knowledge gathered from the two results in an even better-performing system. Another important strategy for improving the performance of a single classifier is to use an ensemble of classifiers [62]. In ensemble-based classifiers, the predictions of a single classifier are merged using several approaches, which enhances the total prediction as well as generates predictions with higher accuracy than a single classifier [63].

The most common voting method is to use a plurality to aggregate the findings of the base-level classifiers. This strategy, however, does not employ metal fetching, and all training sets and classifiers employ the same voting technique [64]. The one-dimensional signature is then subdivided to obtain local contour information. Finally, these characteristics are loaded into an SVM classifier. Laroussi et al. [65] proposed two CAD systems for mammography breast density classification for two and four “BI-RADS” classes comprised of features calculated using various Law filters of varied lengths. The feature vectors are then passed into classifiers such as ‘PNN,’ ‘NFC,’ and ‘SVM’ to determine tissue density.

J. S. Cardoso et al. [66] developed a novel method for computing closed contours in the original coordinate system. They create a directed acyclic graph (DAG) that reflects the various pathways that can be taken between the image boundary and the seed point. We then use a shortest path algorithm to find the optimal path in the DAG. This approach is fast, reliable, and does not suffer from loss of resolution. The results showed that our algorithm can accurately and efficiently compute closed contours in mammograms. However, this approach has some limitations. For example, it can be computationally expensive, and it can lose resolution.

Dhungel, N., et al. [67,68,69], proposed new approaches to breast mass segmentation in mammograms using structured SVMs. The approaches combine various types of potential functions, which include one that uses DL to classify image regions. In addition, a novel quantitative analytic approach for evaluating the efficiency of breast masses segmentation methodologies was developed. Their methods depend on broadly agreed accuracy and running time measures on public datasets. Specifically, they used two public datasets (INbreast and DDSM-BCRP) to evaluate the approach. However, there are many challenges faced by the approaches, such as the time consumed and the calculations’ complexity.

Furthermore, Li, H., et al. [76] introduced an innovative complete DL model for mammography image processing that computes mass segmentation while also predicting diagnosis findings. Their solution is built on a dual-path architecture which handles the mapping as a dual-problem while also taking key form and boundary knowledge into account. One route, known as the Locality Preserving Learner (LPL), extracts and uses intrinsic properties of the input in a hierarchical manner. The second path called the Conditional Graph Learner, is a different approach that creates geometrical features by modeling pixel-by-pixel images to mask correlations. Experimental results on the benchmark DDSM dataset show that DualCoreNet outperforms other related works in both segmentation and classification tasks, achieving an AUC score of 0.85 and DI coefficient of 92.27%. DualCoreNet has competitive classification performance with AUC score of 0.93, and segmentation DI coefficient of 93.69% according to the benchmark INbreast dataset.

However, looking at all the proposed models [62,63,64,65,66,67,68,69,70,71,72,73,74,75,76,77], we found that they all share the following challenges: time and computational cost, accuracy, and efficiency for BC diagnosis. Table 1 presents a summary of all related works with all techniques, datasets, and corresponding accuracy.

Finally, previous research utilizing several classifiers yielded encouraging results. However, past research has shown that utilizing an ensemble of classifiers enhances the outcomes. All the past studies don’t consider the computational cost or any of the green AI goals. In this paper, we address this gap by presenting a strategy that employs many classification architectures to enhance BC detection outcomes with the lowest time and computational costs.

4 Methodology

In this study, an appropriate classification modeling framework for BC mammography images is proposed. The primary objective of this study is to accurately identify the mammography images with minimal computational complexity to fulfill green AI goals, which helps in implementing the proposed model into medical devices with limited resources.

BC disease can take many different pathological forms, and this pathological diversity appears in the mammography images, as shown in Fig. 7 some pathological forms have infected nearby cells and gathered in one place; whereas, others contain infected cells dispersed in irregular locations, as shown in Fig. 8. Therefore, the proposed methodology developed a revolutionary DL architecture based on Recurrent Neural Networks (RNN) and Convolutional Neural Networks (CNN); it takes into consideration the various characteristics of the pathological variety of BC disease in mammography images.

As shown in Fig. 9, the proposed model is a six-stage process; the first stage uses masks and minimal padding to extract the Region of Interest (ROI). The second one is based on CNN layers to extract spatial features. In the third stage, three concatenated Xception blocks linked by Inception block are built to simplify computations and extract the useful characteristics of pathological types that have infected nearby cells in a concentrated area. In the fourth stage, attention layers are used to simplify the computational complexity of the suggested model by focusing only on the most significant infected cells in succession. In addition, the relationships between infected cells that are far apart in some pathological types of BC are also focused. In the fifth stage by using Bi-directional Long Short-Term Memory (Bi-LSTM) layer. In the sixth stage, dropout and fully connected layers are used to weigh the extracted features and overcome overfitting. Then, a sigmoid node is used to classify the mammography image. The detailed explanation of our proposed model Bi-xBCNet-96 is presented in Fig. 10.

4.1 First stage: obtaining region of interest

In this stage, the data were pre-processed to obtain ROI in format 96*96*3 image from input image x*y*3. In addition, data augmentation was also applied to the extracted ROI images. The ROIs were retrieved using masks with a tiny bit of padding to provide context. Then, each ROI was then arbitrarily cropped three times with random flips and rotations, before being enlarged and resized to 96*96*3. Data Augmentation was also used to artificially increase the diversity and the size of the dataset. Transformations such as cropping, flipping, rotating, and adding noise to the images were applied to ROI images. This helps to improve the model’s performance by reducing the cost of data collection and labeling, improving model robustness as models are less likely to be affected by noise or outliers in the data. This aids the proposed model’s capacity to adapt to unforeseen alterations.

4.2 Second stage: spatial features extraction

The second stage is based on two blocks of CNN layers to extract spatial features from the input image. It obtained the spatial features that are relevant to breast malignant tumor classification. It has the ability to extract spatial features at different scales, which makes the proposed model able to recognize the tumor objects of different sizes. It is also able to extract spatial features that are invariant to changes in illumination, rotation, and translation.

At this stage, each block has a convolutional, batch normalization, and activation layers. The first block is built on 32 kernels; whereas, the second block is built on 64 kernels. Strides are equivalent to 2*2 and the kernel size is 3*3 to get more details. Batch Normalization is used to normalize each convolutional layer’s output before passing it into the next layer to speed up the training process. To add nonlinearity, the activation function is used to the output of layers, which couldn’t understand intricate connections between the input and output data.

4.3 Third stage: xception blocks

To extract the effective characteristics of the pathological forms that have infected neighboring cells in a concentrated area, the proposed model constructed three concatenated Xception blocks linked by Inception block at this stage.

Xception block is a deep convolutional neural network structure that is mainly based on: (1) Depthwise Separable Convolutions (DSCs) and (2) shortcuts between DSCs blocks, as shown in Fig. 11. Inception block is mainly based on only depthwise separable convolutions as shown in Fig. 12. DSCs reduce the computational complexity of the proposed model by performing two separate convolutions: a depthwise convolution and a pointwise convolution.

4.3.1 Depthwise/separable convolutions (DSCs)

A depthwise separable convolution comprises two parts: a depthwise and a pointwise convolution as shown in Fig. 11. The depthwise convolution is a spatial convolution that is conducted individually on each input channel. A regular convolution applies a single filter to all of the input channels at once. This can be computationally expensive, especially for deep neural networks with many input channels. Depthwise convolution avoids this by applying a separate filter to each input channel. This reduces the number of parameters in the convolution, makes it more efficient. In depthwise convolution, instead of having a single filter that has M channels wide, it has N filters, each of which is 1 channel wide. This means that they can only learn to extract features from a single input channel. However, by having multiple filters, depthwise convolution can still learn to extract a variety of features from the input data.

Table 2 shows the number of trainable parameters of standard convolution and depthwise separable convolution. Where N is the number filters, Dk is the size of filter, M is the number of channels, and Dp is the size of output. To show the impact of depthwise convolution networks in reducing the computational complexity, if we assume the input of regular convolution is (45 × 45x64) thus, Dp = 45, M = 64, and filter size Dk equals 3, number of filters N equals 128.

-

No. of Multiplications of Regular/Standard Conv. = 149,299,200

-

No. of Multiplications of Depthwise Separable = 1,166,400 + 5,120,000 = 6,286,400

-

Complexity Loss Ratio (CLR) = 95.79%

The computational complexity is reduced by 95.79%, which shows the influence of using the depthwise separable convolution in the proposed approach.

4.3.2 Shortcuts between DSCs blocks

Xception block is an Inception architectural enhancement, which it is based on two main points: (1) depthwise separable convolution as in Inception struct; (2) shortcuts between convolution blocks as in ResNet. As illustrated in Fig. 11, the shortcuts between DSCs blocks are utilized to learn residual functions, where the output of the current layer integrated with the output of the preceding layer. These residual connections contribute to the stabilization of the training of DSCs blocks, in order to keep the gradients from disappearing or bursting. This is due to the shortcuts’ ability to let the network bypass some of the layers. In addition, the shortcuts can also help to make DSCs blocks more efficient, which permit the CCN layers to learn more intricate features without adding more parameters. This is because the residual connections allow the network to reuse the features that it has previously learned from preceding layers to reflect distant relationships more accurately in the data.

4.4 Fourth stage: attention layer

Attention layers are also employed to lessen the computational complexity of the proposed model by concentrating only on the most crucial infected cells in a sequence. Using this attention layer increases the accuracy of the model as it selects the most effective features. It also reduces the complexity of the proposed model because of selecting the most important parameters, thus reducing the cost of calculations. The proposed model uses multi-head attention to extract the most crucial infected cells in a sequence, as shown in Fig. 13. The attention mechanism works by first computing alignment scores between the query and key vectors. These scores are then used to calculate the attention weights, which are used to weighted sum the values vectors to produce the context vector.

Multi-head attention mechanism gets headi (that is, one representation for each head) of various representations of (Q, K, V), computes self-attention for each tuple, and concatenates the results as expressed in Eq. (1). Each tuple is multiplied by a set of weights for the keys (denoted as K), queries (denoted as Q), and values (denoted as V), where W stands for learnable parameter matrices in the same notation as Eq. (2).

4.5 Fifth stage: BI-LSTM layer

In addition, the relationships between infected cells that are far apart in some pathological types of BC are also focused using the Bi-directional Long Short-Term Memory (Bi-LSTM) layer. Bi-LSTM is the act of storing sequence features in both directions, backward (future to past) and forwards (past to future). A bi-directional LSTM structure is depicted in Fig. 14.

The bi-directional LSTM network’s hidden layer stores two values. The forward computation involves A; while, the reverse calculation involves A transposed. A and A transpose affect the output value Yi, where x, h, h′, Wlh, Wh′h, and b represent input state, current state, previous state, weight at recurrent neuron, weight at input neuron, and the bias vector, respectively. The hidden layer output depends on hidden state forward, and hidden state backward as expressed in Eq. (3). It regulates how the network is taught to process data sequences in both forward and reverse directions. A higher theta value θh will give the prior hidden state more weight; whereas, a lower theta value will give the present input more weight as computed in Eq. (4).

4.6 Sixth stage: sigmoid function

In this stage, a dropout layer is used to overcome the overfitting, followed by fully connected layers to weigh the extracted features and get a one-dimension matrix to be used in the classification process. For the classification process, a sigmoid node is used to classify the mammography images. The sigmoid function is defined by Eq. 5, where σ(x) represents the sigmoid function’s output for input x, and e is the base of the natural logarithm, approximately 2.71828.

Further, Dropout is a regularization technique used in neural networks to prevent overfitting. The equation for dropout involves masking a portion of the neurons during training. Mathematically, it can be represented as Eq. 6, where y, x, and m represent the output after dropout, input to the dropout layer, and binary mask, respectively.

The idea behind using dropout is that during training, only a subset of neurons is used, making the network more robust and reducing overfitting. At test time, the dropout is usually turned off or scaled by the dropout rate to ensure the expected output magnitudes are retained. The dropout layer helps to prevent overfitting by forcing the CNN to learn to rely on all of its neurons and also help to improve the generalization performance of the CNN by making it less likely to memorize the training data.

4.7 Handling the issue of unbalanced data

The backpropagation of the error algorithm is used to modify the weights of neural networks during training, considering the errors that the neural network makes on the training dataset. However, this approach treats all samples from each class equally, which may cause issues with datasets that are imbalanced and include a class with a much smaller sample size than the others.

To solve this imbalanced data problem, cost-sensitive learning is utilized to handle this problem [78], which allows the model to focus more on samples from the minority class by weighing misclassification mistakes according to the significance of the class. A high weight is allotted to instances from the minority class; while, instances from the majority class are given a small weight.

The proposed model is based on the Adam optimizer for implementing cost-sensitive learning for imbalanced classification; it computes individual adaptive learning rates for different parameters from estimates of the first and second moments of the gradients [79]. The mathematical equations for the Adam optimizer in the context of training a weighted neural network are given in Eqs. (7–9).

where mt and vt are the first and second-moment estimates. gt equals ∇(L(w_t, x_t, y_t)), which is the gradient at time step t based on the weighted loss function. β1 and β2 are the exponential decay rates for the moment estimates, their default values are 0.9 and 0.999, respectively. The update-biased estimates of mt and vt are called m_t and v_t, respectively. α is the learning rate, which is the step size. ε is the small value to prevent division by zero, its default value is 1e-8. w_t is the model parameters (weights) at time step t.

The previous equations are used to update the weights of the neural network during training with the Adam optimizer. The adaptive learning rates and momentum provided by the Adam optimizer can help improve the convergence of the training process based on the weighted version of the binary cross-entropy loss function, which was used to deal with class imbalances as in Eq. (10).

where L_w(y, y′) is the weighted binary cross-entropy loss. w is the weight associated with the sample, which is greater for the minority class and lower for the majority class. y is the true label (0 or 1). y′ is the predicted probability of the positive class (between 0 and 1).

5 Experimental results and analysis

A new model for classifying mammograms of BC has been proposed. In order to achieve green AI aims, the key objective of the proposed model is to accurately identify the mammography images with minimal computational complexity. To test and assess the suggested model and its stages, several experiments were conducted. The first set of experiments assessed the proposed model’s structural effectiveness and its stages to study its impact on the classification performance. In the second series of experiments, the effectiveness of the suggested model’s overall structure was also assessed and compared to cutting-edge algorithms.

5.1 Datasets

The experiment was carried out using a benchmark datasets DDSM Mammography [68] and INbreast [69]. The images of both datasets are in 16-bit grayscale format. The Digital Database for Screening Mammography (DDSM) is a large database of digitized mammograms of BC. It was created by a team of researchers at the University of South Florida, the Massachusetts General Hospital, and Sandia National Laboratories. There are 55,890 mammography images in the database, 14% of which are positive; while, the remaining 86% are negative as shown in Fig. 15. The images are 299 × 299 pixels in size. The images are accompanied by patient information, such as age, breast density, and ACR (American College of Radiology) breast density rating. The database also includes ground truth information about the locations and types of suspicious regions in the images.

The INbreast dataset is a public database of full-field digital mammograms (FFDMs). It was created by a team of researchers at the Centro Hospitalar de Sao Joao, Breast Center, Porto, Portugal. The database contains 115 cases, 80% of which are positive; while, the remaining 20% are negative as shown in Fig. 16. Each case of The INbreast dataset includes four images: two craniocaudal (CC) views and two mediolateral oblique (MLO) views. The images are accompanied by patient information, such as age, breast density, and BI-RADS (Breast Imaging Reporting and Data System) assessment. The database also includes ground truth information about the locations and types of suspicious regions in the images.

5.2 Evaluation metrics

In this study, Accuracy, Precision, Recall, and F1 Score are used to assess the model’s performance. Equation (11) gives the BC image recognition accuracy. Equation (12) shows how the precision was used to calculate the ratio of properly identified images. Equation (13) shows how the proposed system's accuracy is expressed as a percentage of all classified photos. Equation (14) shows how the F1-score, which stands for precision and recall, are related. It is a reliable sign of imbalanced data.

where TN, FN, FP, and TP refer to “true negative,” “false negative,” “false positive,” and “true positive,” respectively.

5.3 Evaluation of proposed model’s structural effectiveness and its stages

On a PC with an Intel Intel(R) Core(TM) i7-4790 CPU @ 3.60 GHz CPU and a GPU 1xTesla P100, the entire set of experiments is carried out. The algorithms were developed using Python 3.7.12 and TensorFlow 2.11.0.

The proposed model consists of six stages as shown in Fig. 10. The first of which uses masks and minimal padding to extract the Region of Interest (ROI). The second stage is based on CNN layers to extract spatial features. In the third stage, three concatenated Xception blocks connected by Inception block are constructed to simplify computations and extract the useful features. In the fourth stage, an attention layer is used. In the fifth stage, the relationships between infected cells are extracted using the Bi-directional Long Short-Term Memory (Bi-LSTM). In the sixth stage, dropout and fully connected layers are used to weigh the extracted features. Then, a sigmoid node is used to classify the mammography image.

To evaluate the effect of the proposed model stages on the overall performance, six experiments were developed. Figure 17 shows a detailed explanation of these experiments.

-

Structures No. one, two, and three were constructed to evaluate the effectiveness of the Xception blocks and determine the best number of these blocks, which have the ability to extract the useful features of pathological types that have infected nearby cells in a concentrated area.

-

Structure No. four was created to assess how well the attention layer worked for selecting the most significant infected cells.

-

Structure No. five was developed to test the efficiency of the BiLSTM layer for discovering the relationships between the infected cells that are far apart in some pathological types of BC.

-

Structure number six was built in order to assess the dropout layer to enhance the classification accuracy.

Experiments were carried out using DDSM dataset on the several architectures to ensure the efficiency of each layer of the proposed model and to study its impact on the accuracy of the model. The results obtained show that the overall structure of the proposed model Bi-xBCNet-96 (Structure No. 6) has the highest accuracy 96.13% with the lowest number of trainable parameters 2,748,023 compared to the other structures as shown in Table 3. Structure No. one, two and four obtained number of trainable parameters equaled 25,825, 989,017 and 2,253,559, respectively. These values are lower than the number of trainable parameters of the proposed structure, but these structures did not achieve a high classification accuracy compared to structure No. 6. The results also demonstrated the effectiveness of structure No. 6 “Bi-xBCNet-96” to improve the proposed system’s performance, which achieved the highest accuracy with the lowest computational complexity, where contributed to making it reliable and fulfill green AI goals.

Three experiments were also implemented to determine the best number of fully connected units in stage No. 6 of the proposed model. The results obtained show that the best number of fully connected units in the 6th stage of the proposed model is 96, which achieved the highest accuracy 96.13% compared to the other different number of units as shown in Table 4.

Furthermore, three experiments were also developed to determine the best rate of dropout layer of the proposed model. The results obtained show that the value of dropout rate in the 6th stage of the proposed model is 0.5, which achieved the highest accuracy 96.13% compared to the dropout rate values as shown in Table 5.

5.4 Addressing the unbalanced data issue

The proposed model obtained 96.13% of classification accuracy in the DSSM dataset, as shown in Table 5. In order to obtain higher accuracy, the imbalanced data in this dataset is handled; in the DSSM dataset, there’s 14% positive class and 86% negative class, it comes with a highly skewed class distribution. The proposed model utilized the cost-sensitive learning Eq. (10), which allows the model to focus more on samples from the minority class by weighing misclassification mistakes according to the significance of the class.

Four experiments were implemented to determine the best rate of weight values for the cost-sensitive learning function. The obtained results show that the values in the 2nd experiment of the proposed model achieved the highest accuracy, which obtained 98.88% compared to the other weight values as shown in Table 6 and Fig. 18.

5.5 Comparison with DL models

The purpose of the proposed model is to achieve high accuracy and take into account green artificial intelligence, which helps in implementing the proposed model into medical devices with limited resources. Therefore, we constructed a new DL model called Bi-xBCNet-96 with low computational complexity to fulfill green AI goals. To evaluate the performance of Bi-xBCNet-96 model, literature comparisons were implemented to compare the performance of the proposed model with the DL models: Xception, MobileNetV2, InceptionV3, DenseNet201, ResNet50V2, VGG16, and VGG19. The DDSM dataset was used in this experiment to train and evaluate our proposed approach alongside other existing ones.

The results obtained show that Bi-xBCNet-96 has the highest accuracy 98.88% and its parameters on the DDSM dataset compared to the other DL algorithms as shown in Table 7 and Figs. 19 and 20. Additionally, the outcomes showed how effective the suggested stages were at enhancing the functionality of the suggested approach, which achieved the lowest computational complexity represented in the number of trainable parameters 2,748,023 as shown in Table 7 and the time in Fig. 21. The achieved enhancement of the proposed model stages contributed on its reliability and the fulfillment of the green AI goals:

-

Three concatenated Xception blocks connected by Inception block are constructed in the third stage to decrease computations and extract the main features of BC pathological types.

-

Attention layers are also employed to reduce the computational complexity by successively focusing only on the most significant infected cells in succession.

5.6 Comparison with state of-the-art algorithms

In this experiment, the results of state-of-the-art algorithms of BC detection are presented across DDSM and INbreast datasets in Table 7, with accuracy serving as the common evaluation metric. The experiment provides evidence that Bi-xBCNet-96 has a greater ability to discover the BC. The results obtained using the two datasets show that Bi-xBCNet-96 has the highest accuracy on the DDSM and INbreast datasets, (98.88% and 100%), respectively, as shown in Table 8.

The authors in [62] presented a new approach to closed contour computation in the original coordinate space. However, this approach has some limitations. For example, it can be computationally expensive, and it can lose resolution. [63,64,65], proposed new approaches to breast mass segmentation in mammograms using structured SVMs. However, there are many challenges faced by the approaches, such as the time consumed and the calculations’ complexity. In addition, [72] proposed a new end-to-end DL framework for processing mammography. This framework segments the masses in the image and predicts the diagnosis results. The method is built on a dual-path architecture to solve the mapping in a two-step process, while also considering important shape and boundary information. However, looking at all the proposed models [62,63,64,65,66,67,68,69,70,71,72,73], we found that they all share the following challenges: time and computational cost, accuracy, and efficiency for BC diagnosis.

In addition, they ignored the pathological diversity that appears in the mammography images, where some pathological forms have infected nearby cells and gathered in one place, and the others contain infected cells dispersed in irregular locations, as shown in Figs. 7 and 8. Therefore, the proposed methodology developed a revolutionary DL architecture called Bi-xBCNet-96; it takes into consideration the various characteristics of the pathological variety of BC disease in mammography images.

Table 8 demonstrates that Bi-xBCNet-96 performs comparably to cutting-edge techniques and is robust for differentiating between benign and malignant tumors on two separate datasets. Our model shows the highest level of accuracy for each of the two datasets. This experiment illustrates the reliability of the conventional classification approach Bi-xBCNet-96 across various BC datasets compared to other cutting-edge approaches, where the proposed model’s entire stages contributed to making it reliable and robust:

-

Extraction of spatial features in the second stage.

-

Detecting the important features of pathological types that have infected nearby cells in a concentrated area in the third stage.

-

Focusing only on the most significant infected cells in succession in the fourth stage.

-

The relationships between infected cells that are far apart in some pathological types of BC are also focused in the fifth stage.

-

Dropout and fully connected layers are used in the six stage to weigh the extracted features and overcome overfitting.

6 Conclusion

In this study, we offer Bi-xBcNet-96, a novel CNN architecture for BC classification problems. It utilizes the convolution and recurrent neural networks to acquire high detection accuracy by extracting the key features of the various pathological forms of BC disease from mammography images. Bi-xBcNet-96 works in effective and green way to concurrently learn classification. This model can be utilized end-to-end in autonomous detection practice with minimal overhead. Our method was found to be superior to other methods in terms of accuracy, speed, complexity and has achieved competitive performances in mammography diagnosis with 98.88% and 100% accuracy on DDSM and InBrest datasets, respectively. Also, we achieved 95.79% lower computational cost, and that makes our proposed model fits green AI goals. Furthermore, the network’s generalization ability is validated using both the benchmarks DDSM and INbreast datasets.

Data availability

The datasets analyzed during the current study are available in the Kaggle repository, https://www.kaggle.com/datasets/skooch/ddsm-mammography, https://www.kaggle.com/datasets/martholi/inbreast/.

References

Masud M, Hossain MS, Alhumyani H, Alshamrani SS, Cheikhrouhou O, Ibrahim S, Gupta BB (2021) Pre-trained convolutional neural networks for breast cancer detection using ultrasound images. ACM Trans Internet Technol (TOIT) 21(4):1–17

Giaquinto AN, Miller KD, Tossas KY, Winn RA, Jemal A, Siegel RL (2022) Cancer statistics for African American/black people 2022. CA A Cancer J Clin. 72(3):202–229

Kumar N, Gupta R, Gupta S (2020) Whole slide imaging (WSI) in pathology: current perspectives and future directions. J Digit Imag 33(4):1034–1040

Hotko YS (2013) Male breast cancer: clinical presentation, diagnosis, treatment. Experiment Oncol. 35(4):303–310

Breastcanser.org’s Community. What Is Breast Cancer? [Internet]. c2018 [cited 9 December 2021]. Avaliable from: https://www.breastcancer.org/symptoms/understand_bc/what_is_bc.

World health organization. Cancer country profiles 2014; 2018. http://www.who.int/cancer/country-profiles/en/#P

Kirubakaran R, Jia TC, Aris NM (2017) Awareness of breast cancer among surgical patients in a tertiary hospital in Malaysia. Asian Pacific J Cancer Prev APJCP 18(1):115

Stalin MS, Kalaimagal R (2016) Breast Cancer Diagnosis from Low Intensity Asymmetry Thermogram Breast Images using Fast Support Vector Machine. I-manager’s J Image Process 3(3):17

Caplan L (2014) Delay in breast cancer: implications for stage at diagnosis and survival. Front Public Health 2:87

Ayon SI, Islam MM, Hossain MR (2020) (2020) Coronary artery heart disease prediction: a comparative study of computational intelligence techniques. IETE J Res. https://doi.org/10.1080/03772063.2020.1713916

Naeem S, Ali A, Qadri S, Khan Mashwani W, Tairan N, Shah H, Anam S (2020) Machine-learning based hybrid-feature analysis for liver cancer classification using fused (MR and CT) images. Appl Sci 10(9):3134

Rajesh S, Choudhury NA, Moulik S (2020). Hepatocellular carcinoma (HCC) liver cancer prediction using machine learning algorithms. In: 2020 IEEE 17th India council international conference (INDICON) IEEE, pp 1–5

Islam MZ, Islam MM, Asraf A (2020) A combined deep CNN-LSTM network for the detection of novel coronavirus (COVID-19) using X-ray images. Informatics in medicine unlocked 20:100412

Wang D, Khosla A, Gargeya R, Irshad H, Beck AH (2016). Deep learning for identifying metastatic breast cancer. arXiv preprint arXiv:1606.05718.

Khan S, Islam N, Jan Z, Din IU, Rodrigues JJC (2019) A novel deep learning based framework for the detection and classification of breast cancer using transfer learning. Pattern Recognit Lett 125:1–6

Xia K, Yin H, Qian P, Jiang Y, Wang S (2019) Liver semantic segmentation algorithm based on improved deep adversarial networks in combination of weighted loss function on abdominal CT images. IEEE Access 7:96349–96358

Nasser M, Yusof UK (2023) Deep learning based methods for breast cancer diagnosis: a systematic review and future direction. Diagnostics 13(1):161

Zhou Z, Adrada BE, Candelaria RP, Elshafeey NA, Boge M, Mohamed RM, Ma J (2023) Prediction of pathologic complete response to neoadjuvant systemic therapy in triple negative breast cancer using deep learning on multiparametric MRI. Sci Rep 13(1):1171

Juhong A, Li B, Yao CY, Yang CW, Agnew DW, Lei YL, Qiu Z (2023) Super-resolution and segmentation deep learning for breast cancer histopathology image analysis. Biomed Opt Express 14(1):18–36

Mo Y, Han C, Liu Y, Liu M, Shi Z, Lin J, Liang C (2023) Hover-trans: Anatomy-aware hover-transformer for roi-free breast cancer diagnosis in ultrasound images. IEEE transactions on medical imaging.deep convolutional neural networks

LeCun Y, Bengio Y, Hinton G (2015) Deep learning. Nature 521(7553):436–444

Wei B, Han Z, He X, Yin Y (2017) Deep learning model based breast cancer histopathological image classification. In: 2017 IEEE 2nd international conference on cloud computing and big data analysis (ICCCBDA) IEEE, pp 348–353

Vesal S, Ravikumar N, Davari A, Ellmann S, Maier A (2018) Classification of breast cancer histology images using transfer learning. In: Image analysis and recognition: 15th international conference, ICIAR 2018, Póvoa de Varzim, Portugal, June 27–29, 2018, Proceedings 15. Springer International Publishing, pp 812–819

Kassani SH, Kassani PH, Wesolowski MJ, Schneider KA, Deters R (2019) Breast cancer diagnosis with transfer learning and global pooling. In: 2019 International conference on information and communication technology convergence (ICTC). IEEE, pp 519–524

Pereyra G, Tucker G, Chorowski J, Kaiser Ł, Hinton G (2017) Regularizing neural networks by penalizing confident output distributions. arXiv preprint arXiv:1701.06548

Krizhevsky A, Sutskever I, Hinton GE (2017) International conferenceImagenet classification with deep convolutional neural networks. Commun ACM 60(6):84–90

Szegedy C, Liu W, Jia Y, Sermanet P, Reed S, Anguelov D, Rabinovich A (2015) Going deeper with convolutions. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 1–9

K He, et al. (2016) Deep residual learning for image recognition. In: Proc Int Conf Comput Vis Pattern Recognit, Pp 770–778

Simonyan K, Zisserman A (2014) Very deep convolutional networks for large-scale image recognition. arXiv preprint arXiv:1409.1556.

Arora S, Bhaskara A, Ge R, Ma T (2014) Provable bounds for learning some deep representations. In: International conference on machine learning. PMLR, pp: 584–592

Mehta C, Cherney MA, Nellis S (2023) Nvidia adds jet fuel to AI optimism with record results, $25 billion buyback. Reuters. August 23, 2023 [Internet]. Avalaiable at: https://www.reuters.com/technology/nvidia-forecaststhird-quarter-revenue-above-wall-streetexpectations-2023-08-23/

de Vries A (2023) The growing energy footprint of artificial intelligence. Joule 7(10):2191–2194

Verdecchia R, Sallou J, Cruz L (2023) A systematic review of green AI. wiley interdisciplinary reviews: data mining and knowledge discovery, e1507

Selvan R, Bhagwat N, Wolff Anthony LF, Kanding B, Dam EB (2022) Carbon footprint of selecting and training deep learning models for medical image analysis. In Raghavendra Selvan, Nikhil Bhagwat, LF. Wolff Anthony, Benjamin Kanding, EB. Dam (eds). International Conference on medical image computing and computer-assisted intervention. Springer Nature Switzerland, Cham, pp: 506–516

Gaur L, Afaq A, Arora GK, Khan N (2023) Artificial intelligence for carbon emissions using system of systems theory. Ecological Informatics, 102165

Chen Z, Wu M, Chan A, Li X, Ong YS (2023) Survey on AI sustainability: emerging trends on learning algorithms and research challenges. IEEE Comput Intell Mag 18(2):60–77

Everman B. (2022) Improving carbon, cost, and energy efficiency of large scale systems via workload analysis.

Himeur Y, Sayed A, Alsalemi A, Bensaali F, Amira A (2023) Edge AI for internet of energy: challenges and perspectives. Internet Things, 101035

Lukasik M, Bhojanapalli S, Menon A, Kumar S (2020) Does label smoothing mitigate label noise? In international conference on machine learning. PMLR, pp. 6448–6458.

Gupta A, Zhang H, Huang J (2019) The recent research and care of benign breast fibroadenoma. Yangtze Med 3(2):135–141

Kumar A, Ahluwalia R (2021) Breast cancer detection using machine learning and its classification. In Cancer prediction for industrial IoT 4.0: A machine learning perspective. Chapman and Hall/CRC, pp. 65–78

Castellino RA (2005) Computer aided detection (CAD): an overview. Cancer Imaging 5(1):17

Darcey E, McCarthy N, Moses EK, Saunders C, Cadby G, Stone J (2021) Is mammographic breast density an endophenotype for breast cancer? Cancers 13(15):3916

Wei J, Chan HP, Wu YT, Zhou C, Helvie MA, Tsodikov A, Sahiner B (2011) Association of computerized mammographic parenchymal pattern measure with breast cancer risk: a pilot case-control study. Radiology 260(1):42–49

Elsholtz FH, Asbach P, Haas M, Becker M, Beets-Tan RG, Thoeny HC, Hamm B (2021) Introducing the node reporting and data system 1.0 (Node-RADS): a concept for standardized assessment of lymph nodes in cancer. European radiology, 1–9

Shah A, Mushtaq A, Mandokhail F (2021) A review on breast cancer, risk factors, symptoms and some common treatme. SBK J Basic Sci Innov Res 1(1):34–41

BREASTCANCER.ORG. HER2 Status [Internet]. c2020 [cited 11 December 2021] Avaliable from: https://www.breastcancer.org/symptoms/diagnosis/her2.

Whitaker K (2020) Earlier diagnosis: the importance of cancer symptoms. Lancet Oncol 21(1):6–8

Asri H, Mousannif H, Al Moatassim H (2019) A hybrid data mining classifier for breast cancer prediction. In: Ezziyyani M (ed) International Conference on Advanced Intelligent Systems for Sustainable Development. Springer, Cham, pp 9–16

Sadhukhan S, Upadhyay N, Chakraborty P (2020) Breast cancer diagnosis using image processing and machine learning. In: Bhattacharya D, Mandal JK (eds) Emerging technology in modelling and graphics. Springer, Singapore, pp 113–127

Yue W, Wang Z, Chen H, Payne A, Liu X (2018) Machine learning with applications in breast cancer diagnosis and prognosis. Designs 2(2):13

Rasheed MEH, Youseffi M (2024) Breast cancer and medical imaging. IOP Science. https://doi.org/10.1088/978-0-7503-5709-8

Mukadam SB, Patil HY (2024) Machine learning and computer vision based methods for cancer classification: a systematic review. Arch Comput Method Eng. https://doi.org/10.1007/s11831-024-10065-y

Saidani O, Umer M, Alshardan A, Alturki N, Nappi M, Ashraf I (2024) Student academic success prediction in multimedia-supported virtual learning system using ensemble learning approach. Multimed Tools Appl. https://doi.org/10.1007/s11042-024-18669-z

Amirkhani A, Mosavi MR, Mohammadizadeh F, Shokouhi SB (2014) Classification of intraductal breast lesions based on the fuzzy cognitive map. Arab J Sci Eng 39:3723–3732. https://doi.org/10.1007/s13369-014-1012-z

Mozaffari J, Amirkhani A, Shokouhi SB (2023) A survey on deep learning models for detection of COVID-19. Neural Comput Appl 1–29

Amrane M, Oukid S, Gagaoua I, Ensari T (2018) Breast cancer classification using machine learning. In: 2018 Electric electronics, computer science, biomedical engineerings’ Meeting (EBBT) IEEE. pp 1–4

Al Bataineh A (2019) A comparative analysis of nonlinear machine learning algorithms for breast cancer detection. Int J Mach Learn Comput 9(3):248–254

Samala RK, Chan HP, Hadjiiski LM, Cha K, Helvie MA (2016) Deep-learning convolution neural network for computer-aided detection of microcalcifications in digital breast tomosynthesis. In medical imaging 2016: computer-aided diagnosis (Vol. 9785). International society for optics and photonics, pp: 97850Y

Ricciardi R, Mettivier G, Staffa M, Sarno A, Acampora G, Minelli S, Russo P (2021) A deep learning classifier for digital breast tomosynthesis. Phys Med 83:184–193

Teuwen J, Moriakov N, Fedon C, Caballo M, Reiser I, Bakic P, Sechopoulos I (2021) Deep learning reconstruction of digital breast tomosynthesis images for accurate breast density and patient-specific radiation dose estimation. Med Image Anal 71:102061

Rokach L (2010) Ensemble-based classifiers. Artif Intell Rev 33:1–39

Dietterich TG (1997) Machine-learning research. AI Mag 18(4):97

Breiman L (1996) Bagging predictors. Mach Learn 24:123–140

Laroussi MG, Ayed NGB, Masmoudi AD, Masmoudi DS (2013) Diagnosis of masses in mammographic images based on Zernike moments and Local binary attributes. In: 2013 world congress on computer and information technology (WCCIT) IEEE. pp 1–6

Cardoso JS, Domingues I, Oliveira HP (2015) Closed shortest path in the original coordinates with an application to breast cancer. Int J Pattern Recognit Artif Intell 29(01):1555002

Dhungel N, Carneiro G, Bradley A P (2015) Deep structured learning for mass segmentation from mammograms. In: 2015 IEEE international conference on image processing (ICIP) IEEE. pp 2950–2954

Dhungel N, Carneiro G, Bradley AP (2015) Deep learning and structured prediction for the segmentation of mass in mammograms In: Medical image computing and computer-assisted intervention–MICCAI 18th international conference, Munich, Germany, October 5–9, 2015, Proceedings Part I Springer International Publishing. Cham.pp 605–612

Dhungel N, Carneiro G, Bradley AP (2017) A deep learning approach for the analysis of masses in mammograms with minimal user intervention. Med Image Anal 37:114–128

Altae-Tran H, Ramsundar B, Pappu AS, Pande V (2017) Low data drug discovery with one-shot learning. ACS Cent Sci 3(4):283–293

Al-Antari MA, Al-Masni MA, Choi MT, Han SM, Kim TS (2018) A fully integrated computer-aided diagnosis system for digital X-ray mammograms via deep learning detection, segmentation, and classification. Int J Med Inform 117:44–54

Al-Antari MA, Al-Masni MA, Kim TS (2020) Deep learning computer-aided diagnosis for breast lesion in digital mammogram. Deep Learning in Medical Image Analysis: Challenges and Applications, 59–72

Singh VK, Rashwan HA, Romani S, Akram F, Pandey N, Sarker MMK, Torrents-Barrena J (2020) Breast tumor segmentation and shape classification in mammograms using generative adversarial and convolutional neural network. Expert Syst Appl 139:112855

Zhu W, Xiang X, Tran TD, Hager GD, Xie X (2018) Adversarial deep structured nets for mass segmentation from mammograms. In: 2018 IEEE 15th international symposium on biomedical imaging (ISBI 2018). IEEE, pp 847–850

El Houby EM, Yassin NI (2021) Malignant and nonmalignant classification of breast lesions in mammograms using convolutional neural networks. Biomed Signal Process Control 70:102954

Li H, Chen D, Nailon WH, Davies ME, Laurenson DI (2021) Dual convolutional neural networks for breast mass segmentation and diagnosis in mammography. IEEE Trans Med Imaging 41(1):3–13

Zahoor S, Shoaib U, Lali IU (2022) Breast cancer mammograms classification using deep neural network and entropy-controlled whale optimization algorithm. Diagnostics 12(2):557

Lin J, Zhong SH, Fares A (2022) Deep hierarchical LSTM networks with attention for video summarization. Comput Electr Eng 97:107618

Yi D, Ahn J, Ji S (2020) An effective optimization method for machine learning based on ADAM. Appl Sci 10(3):1073

Funding

Open access funding provided by The Science, Technology & Innovation Funding Authority (STDF) in cooperation with The Egyptian Knowledge Bank (EKB).

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors have no affiliation with any organization with a direct or indirect financial interest in the subject matter discussed in the manuscript.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

El-Mawla, N.A., Berbar, M.A., El-Fishawy, N.A. et al. A novel deep learning approach (Bi-xBcNet-96) considering green AI to discover breast cancer using mammography images. Neural Comput & Applic (2024). https://doi.org/10.1007/s00521-024-09815-7

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s00521-024-09815-7