Abstract

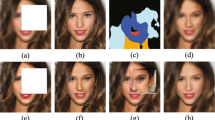

Recovering facial details from dark images has attracted increasing attention because of its potential in applications such as video surveillance. We propose the first approach to detect and enhance human faces in extremely low-light images. We first propose an attention module (AM) that detects facial skin, which is relatively robust to low-quality conditions. The AM further locates facial landmarks, which serve as prior knowledge to facilitate reconstruction. Then, given the detected face position, our face hallucination module (FHM) can focus on enhancing the resolution and quality of the face. We also introduce a low-light enhancement module that enhances the global image, which is then merged with the hallucinated face from the FHM to produce the final image. Extensive experiments show that our method is quantitatively and qualitatively superior to state-of-the-art methods in terms of enhancement quality and face hallucination.
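The data flow described above (locate the face, enhance the face and the global image separately, then merge) can be illustrated with a minimal NumPy sketch. Everything in it is an assumption made for illustration: `box_from_mask` stands in for the attention module's output, gamma correction stands in for the low-light enhancement module, nearest-neighbour upsampling stands in for the learned hallucination, and the paste-based merge is only one plausible way to combine the two branches. None of these are the networks or the merging rule used in the paper.

```python
import numpy as np


def gamma_enhance(image, gamma=0.4):
    """Hypothetical stand-in for low-light enhancement: plain gamma correction."""
    return np.clip(image, 0.0, 1.0) ** gamma


def upsample_nearest(image, scale):
    """Hypothetical stand-in for resolution enhancement: nearest-neighbour upsampling."""
    return image.repeat(scale, axis=0).repeat(scale, axis=1)


def box_from_mask(mask):
    """Hypothetical stand-in for the attention module: tight box around a binary mask.

    Returns (top, bottom, left, right) with exclusive bottom/right, or None if
    the mask contains no face pixels.
    """
    rows = np.flatnonzero(mask.any(axis=1))
    cols = np.flatnonzero(mask.any(axis=0))
    if rows.size == 0:
        return None
    return int(rows[0]), int(rows[-1]) + 1, int(cols[0]), int(cols[-1]) + 1


def enhance_and_merge(dark_image, face_mask, scale=2):
    """Enhance the whole image, enhance the face crop separately, then paste the face back."""
    # Global branch: brighten and upsample the full frame.
    global_out = upsample_nearest(gamma_enhance(dark_image), scale)

    box = box_from_mask(face_mask)
    if box is None:
        return global_out  # no face found, return the global result only

    top, bottom, left, right = box
    # Face branch: the crop gets its own (here slightly stronger) enhancement.
    face = dark_image[top:bottom, left:right]
    face_out = upsample_nearest(gamma_enhance(face, gamma=0.35), scale)

    # Merge (assumed): overwrite the face region of the global result.
    merged = global_out.copy()
    merged[top * scale:bottom * scale, left * scale:right * scale] = face_out
    return merged


if __name__ == "__main__":
    dark = np.full((4, 4, 3), 0.04)        # uniformly dark RGB image in [0, 1]
    mask = np.zeros((4, 4), dtype=bool)
    mask[1:3, 1:3] = True                  # pretend the face occupies the centre
    result = enhance_and_merge(dark, mask)
    print(result.shape)
```

The sketch keeps the two branches independent so that either stand-in could be swapped for a learned model without changing the merge step.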

Funding

This work was supported by the National Natural Science Foundation of China (Nos. U1803262, U1736206, 61872282, 61701194) and the Application Foundation Frontier Special Project of the Wuhan Science and Technology Plan Project (No. 2020010601012288).

Ethics declarations

Conflict of interest

We declare that we have no financial or personal relationships with other people or organizations that could inappropriately influence our work, and that there is no professional or other personal interest of any nature or kind in any product, service and/or company that could be construed as influencing the position presented in, or the review of, the manuscript.

About this article

Cite this article

Ding, X., Hu, R. & Wang, Z. Face enhancement and hallucination in the wild. Neural Comput & Applic 35, 2399–2412 (2023). https://doi.org/10.1007/s00521-022-07713-4

DOI: https://doi.org/10.1007/s00521-022-07713-4