Abstract

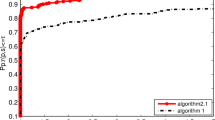

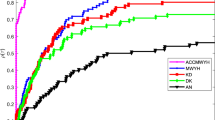

In this paper, we present a three-term conjugate gradient algorithm that combines three techniques: (i) a modified weak Wolfe-Powell (MWWP) line search is introduced to obtain the step size \(\alpha _k\); (ii) the search direction \(d_k\) is generated by a symmetric Perry matrix containing two positive parameters, and the sufficient descent property of the generated directions holds independently of the MWWP line search; (iii) a parabola is proposed as the projection surface, and the next point \(x_{k+1}\) is generated by a new projection technique. The global convergence of the new algorithm under the MWWP line search is established for general functions. Numerical experiments show that the proposed algorithm is promising.
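The overall structure of such a method (line search, three-term direction update, next iterate) can be illustrated with a generic three-term conjugate gradient loop. The following is a minimal Python sketch under explicitly stated assumptions, not the authors' algorithm: it uses the standard weak Wolfe-Powell (WWP) conditions instead of the paper's MWWP variant; it replaces the two-parameter Perry-matrix direction with a well-known three-term PRP-type direction \(d_k = -g_k + \beta_k d_{k-1} - \theta_k y_{k-1}\), where \(y_{k-1} = g_k - g_{k-1}\), \(\beta_k = g_k^T y_{k-1}/\|g_{k-1}\|^2\) and \(\theta_k = g_k^T d_{k-1}/\|g_{k-1}\|^2\); and it omits the parabolic projection step. All function names and parameter values (`delta`, `sigma`, `tol`) are hypothetical. This stand-in direction was chosen because it has the same kind of property claimed in (ii): \(g_k^T d_k = -\|g_k\|^2\) holds for any step size, so sufficient descent does not depend on the line search.

```python
import math
from typing import Callable, List, Tuple

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    """Euclidean inner product."""
    return sum(x * y for x, y in zip(a, b))


def axpy(alpha: float, x: Vector, y: Vector) -> Vector:
    """Return y + alpha * x."""
    return [yi + alpha * xi for xi, yi in zip(x, y)]


def wwp_line_search(f: Callable[[Vector], float], grad: Callable[[Vector], Vector],
                    x: Vector, d: Vector, delta: float = 1e-4,
                    sigma: float = 0.9, max_iter: int = 60) -> float:
    """Bracketing search for a step alpha satisfying the standard (unmodified) WWP conditions:
    f(x + alpha d) <= f(x) + delta * alpha * g^T d  and  grad(x + alpha d)^T d >= sigma * g^T d.
    Assumes 0 < delta < sigma < 1 and that d is a descent direction (g^T d < 0)."""
    fx, gd = f(x), dot(grad(x), d)
    lo, hi, alpha = 0.0, math.inf, 1.0
    for _ in range(max_iter):
        x_new = axpy(alpha, d, x)
        if f(x_new) > fx + delta * alpha * gd:
            hi = alpha                      # too long: sufficient decrease fails
        elif dot(grad(x_new), d) < sigma * gd:
            lo = alpha                      # too short: curvature condition fails
        else:
            return alpha                    # both WWP conditions hold
        # Bisect once an upper bound exists, otherwise keep doubling.
        alpha = 0.5 * (lo + hi) if hi < math.inf else 2.0 * lo
    return alpha                            # fallback after max_iter trials


def three_term_direction(g_new: Vector, g_old: Vector, d_old: Vector) -> Vector:
    """Three-term PRP-type direction (a stand-in, NOT the paper's Perry-matrix direction).
    By construction g_new^T d = -||g_new||^2, independent of the step size."""
    y = [a - b for a, b in zip(g_new, g_old)]
    gg_old = dot(g_old, g_old)
    beta = dot(g_new, y) / gg_old           # PRP parameter
    theta = dot(g_new, d_old) / gg_old      # weight of the third term
    return [-gn + beta * di - theta * yi for gn, di, yi in zip(g_new, d_old, y)]


def three_term_cg(f: Callable[[Vector], float], grad: Callable[[Vector], Vector],
                  x0: Vector, tol: float = 1e-6,
                  max_iter: int = 1000) -> Tuple[Vector, int]:
    """Minimize f from x0; stop when ||g|| <= tol. No projection step is applied."""
    x, g = list(x0), grad(list(x0))
    d = [-gi for gi in g]                   # first step: steepest descent
    iters = 0
    while iters < max_iter and math.sqrt(dot(g, g)) > tol:
        alpha = wwp_line_search(f, grad, x, d)
        x = axpy(alpha, d, x)               # plain update x_{k+1} = x_k + alpha_k d_k
        g_new = grad(x)
        d = three_term_direction(g_new, g, d)
        g = g_new
        iters += 1
    return x, iters


if __name__ == "__main__":
    def quad(v: Vector) -> float:
        # Hypothetical test problem with minimizer (1, -2)
        return (v[0] - 1.0) ** 2 + 10.0 * (v[1] + 2.0) ** 2

    def quad_grad(v: Vector) -> Vector:
        return [2.0 * (v[0] - 1.0), 20.0 * (v[1] + 2.0)]

    x_star, n_iter = three_term_cg(quad, quad_grad, [0.0, 0.0])
    print(x_star, n_iter)
```

In this sketch, swapping in an MWWP-type line search or the paper's Perry-matrix direction would only change `wwp_line_search` or `three_term_direction`. The projection technique in (iii) would presumably replace the plain update `x = axpy(alpha, d, x)`. The exact forms are given in the paper and are not reproduced here.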

Data Availability

The data used to support the findings of this study are included within the article.

Acknowledgements

Our deepest gratitude goes to the reviewers and editors for their careful work and thoughtful suggestions, which have helped improve this paper substantially. This work was supported by the National Natural Science Foundation of China (Grant No. 11661009), the High Level Innovation Teams and Excellent Scholars Program in Guangxi institutions of higher education (Grant No. [2019]52), the Guangxi Natural Science Key Fund (No. 2017GXNSFDA198046), the Special Funds for Local Science and Technology Development Guided by the Central Government (No. ZY20198003), and the Special Foundation for Guangxi Ba Gui Scholars.

Ethics declarations

Conflict of interest

The authors declare that there are no conflicts of interest regarding the publication of this paper.

About this article

Cite this article

Luo, D., Li, Y., Lu, J. et al. A conjugate gradient algorithm based on double parameter scaled Broyden–Fletcher–Goldfarb–Shanno update for optimization problems and image restoration. Neural Comput & Applic 34, 535–553 (2022). https://doi.org/10.1007/s00521-021-06383-y

DOI: https://doi.org/10.1007/s00521-021-06383-y