Abstract

Image classification tasks arise in many real-world settings, including medicine, security, manufacturing and finance. A major problem that degrades the performance of image classification algorithms is class imbalance in the training datasets, which is caused by the difficulty of collecting minority-class samples. Current methods address class imbalance in three ways: data resampling, cost-sensitive loss functions and ensemble learning. However, the average accuracy of these common methods is about 95%, and performance degrades dramatically when the training datasets are extremely imbalanced. We propose an image classification method for class-imbalanced datasets that uses a multi-scale convolutional neural network and two-stage transfer learning. The proposed method extracts multi-scale image features using convolutional kernels with different receptive fields, and it reuses image knowledge from other classification tasks through a two-stage transfer strategy to improve the model's representation capability. Comparison experiments are carried out to verify the performance of the proposed method on the DAGM texture dataset, the MURA medical dataset and an industrial dataset. The average accuracy of the proposed method reaches about 99%, which is 2.32% higher than that of commonly used methods across all tested imbalance ratios, and an accuracy increase of 4.0% is achieved when some datasets are extremely imbalanced. The proposed method also achieves its best accuracy of more than 99% on the industrial dataset, which contains only a few negative samples. In addition, a visualization technique is applied to show that the accuracy boost comes from the proposed architecture and training strategy.
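To make the idea of kernels with different receptive fields concrete, the following is a minimal sketch in plain Python and not the network described in this paper. It runs the same image through parallel branches whose kernels differ in size and stacks the resulting feature maps. The kernel sizes (1, 3, 5), the averaging filters that stand in for learned weights, and all function names are assumptions chosen for illustration. The two-stage transfer strategy is not covered here.

```python
"""Illustrative multi-scale feature extraction; all names are hypothetical."""


def conv2d_same(image, kernel):
    """Cross-correlate a 2-D image with a square, odd-sized kernel.

    Zero padding keeps the output the same height and width as the input.
    """
    k = len(kernel)
    pad = k // 2
    h, w = len(image), len(image[0])
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = 0.0
            for di in range(k):
                for dj in range(k):
                    y, x = i + di - pad, j + dj - pad
                    # Positions outside the image count as zero padding.
                    if 0 <= y < h and 0 <= x < w:
                        acc += image[y][x] * kernel[di][dj]
            out[i][j] = acc
    return out


def mean_kernel(size):
    """Assumed stand-in for a learned kernel: a size x size averaging filter."""
    weight = 1.0 / (size * size)
    return [[weight] * size for _ in range(size)]


def multi_scale_features(image, kernel_sizes=(1, 3, 5)):
    """Run one branch per kernel size and stack the feature maps.

    The result is indexed as result[branch][row][col]. A larger kernel size
    gives that branch a larger receptive field over the input.
    """
    return [conv2d_same(image, mean_kernel(s)) for s in kernel_sizes]


# Toy 6 x 6 image whose pixel value is its row-major index.
image = [[float(r * 6 + c) for c in range(6)] for r in range(6)]
features = multi_scale_features(image)
```

Each branch sees the same pixels at a different spatial extent: the 1 x 1 branch passes the image through unchanged, while the 3 x 3 and 5 x 5 branches summarize progressively wider neighbourhoods. A trained network would learn the kernel weights and typically combine the stacked maps in later layers.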

References

Masud M, Eldin Rashed AE, Hossain MS (2020) Convolutional neural network-based models for diagnosis of breast cancer. Neural Comput Appl. https://doi.org/10.1007/s00521-020-05394-5

Ramya J, Rajakumar MP, Uma Maheswari B (2021) HPWO-LS-based deep learning approach with S-ROA-optimized optic cup segmentation for fundus image classification. Neural Comput Appl. https://doi.org/10.1007/s00521-021-05732-1

Wang J, Ma Y, Zhang L et al (2018) Deep learning for smart manufacturing: methods and applications. J Manuf Syst 48:144–156

Verma AK, Nagpal S, Desai A, Sudha R (2021) An efficient neural-network model for real-time fault detection in industrial machine. Neural Comput Appl 33:1297–1310

Kamilaris A, Prenafeta-Boldú FX (2018) Deep learning in agriculture: a survey. Comput Electron Agric 147:70–90

Uğuz S, Uysal N (2020) Classification of olive leaf diseases using deep convolutional neural networks. Neural Comput Appl. https://doi.org/10.1007/s00521-020-05235-5

Buda M, Maki A, Mazurowski MA (2018) A systematic study of the class imbalance problem in convolutional neural networks. Neural Netw 106:249–259

Tao Y, Jiang B, Xue L et al (2021) Evolutionary synthetic oversampling technique and cocktail ensemble model for warfarin dose prediction with imbalanced data. Neural Comput Appl. https://doi.org/10.1007/s00521-020-05568-1

Ren F, Cao P, Li W et al (2017) Ensemble based adaptive over-sampling method for imbalanced data learning in computer aided detection of microaneurysm. Comput Med Imaging Graph 55:54–67

Wang T, Chen Y, Qiao M, Snoussi H (2018) A fast and robust convolutional neural network-based defect detection model in product quality control. Int J Adv Manuf Technol 94:3465–3471

Zou X, Zhou L, Li K et al (2020) Multi-task cascade deep convolutional neural networks for large-scale commodity recognition. Neural Comput Appl 32:5633–5647

Ali-Gombe A, Elyan E (2019) MFC-GAN: class-imbalanced dataset classification using multiple fake class generative adversarial network. Neurocomputing 361:212–221

Thai-Nghe N, Gantner Z, Member, et al (2010) Cost-sensitive learning methods for imbalanced data. In: The 2010 international joint conference on neural networks (IJCNN). IEEE, pp 1–8.

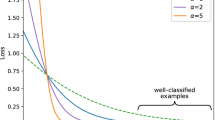

Lin T-Y, Goyal P, Girshick R, et al (2017) Focal Loss for Dense Object Detection. IEEE Trans Pattern Anal Mach Intell:2999–3007

Sahin Y, Bulkan S, Duman E (2013) A cost-sensitive decision tree approach for fraud detection. Expert Syst Appl 40:5916–5923

Arar ÖF, Ayan K (2015) Software defect prediction using cost-sensitive neural network. Appl Soft Comput 33:263–277

Khan SH, Hayat M, Bennamoun M et al (2017) Cost-sensitive learning of deep feature representations from imbalanced data. IEEE Trans neural networks Learn Syst 29:3573–3587

Díez-Pastor JF, Rodríguez JJ, García-Osorio CI, Kuncheva LI (2015) Diversity techniques improve the performance of the best imbalance learning ensembles. Inf Sci (Ny) 325:98–117

Yuan X, Xie L, Abouelenien M (2018) A regularized ensemble framework of deep learning for cancer detection from multi-class, imbalanced training data. Pattern Recognit 77:160–172

Jerhotová E, Švihlík J, Procházka A (2011) Biomedical image volumes denoising via the wavelet transform. Appl Biomed Eng Gargiulo, GD, McEwan, A, Eds 435–458

Langari B, Vaseghi S, Prochazka A et al (2016) Edge-guided image gap interpolation using multi-scale transformation. IEEE Trans Image Process 25:4394–4405

Shi C, Pun C-M (2019) Adaptive multi-scale deep neural networks with perceptual loss for panchromatic and multispectral images classification. Inf Sci (Ny) 490:1–17

Procházka A, Kuchyňka J, Vyšata O et al (2018) Multi-class sleep stage analysis and adaptive pattern recognition. Appl Sci 8:697

Dong L, Zhang H, Ji Y, Ding Y (2020) Crowd counting by using multi-level density-based spatial information: a multi-scale CNN framework. Inf Sci (Ny) 528:79–91

Ji Y, Zhang H, Wu QMJ (2018) Salient object detection via multi-scale attention CNN. Neurocomputing 322:130–140

Simonyan K, Zisserman A (2014) Very deep convolutional networks for large-scale image recognition. arXiv Prepr arXiv14091556

Srivastava N, Hinton G, Krizhevsky A et al (2014) Dropout: a simple way to prevent neural networks from overfitting. J Mach Learn Res 15:1929–1958

Pan SJ, Yang Q (2009) A survey on transfer learning. IEEE Trans Knowl Data Eng 22:1345–1359

Wu H, Jones GJF, Pitié F, Lawless S (2018) A Two-stage Transfer Learning Approach for Storytelling Linking. In: TRECVID

Shan W, Sun G, Zhou X, Liu Z (2017) Two-stage transfer learning of end-to-end convolutional neural networks for webpage saliency prediction. In: international conference on intelligent science and big data engineering. Springer, pp 316–324

Huang S, Guo Y, Liu D et al (2019) A two-stage transfer learning-based deep learning approach for production progress prediction in iot-enabled manufacturing. IEEE Internet Things J 6:10627–10638

Deng J, Dong W, Socher R, et al (2009) Imagenet: A large-scale hierarchical image database. In: 2009 IEEE conference on computer vision and pattern recognition. IEEE , pp 248–255

Kingma DP, Ba J (2014) Adam: A method for stochastic optimization. arXiv Prepr arXiv14126980

Wong SC, Gatt A, Stamatescu V, McDonnell MD (2016) Understanding data augmentation for classification: when to warp? In: 2016 international conference on digital image computing: techniques and applications (DICTA). IEEE, pp 1–6

DAGM 2007. https://hci.iwr.uni-heidelberg.de/node/3616. Accessed 10 Dec 2019

Rajpurkar P, Irvin J, Bagul A, et al (2017) Mura: Large dataset for abnormality detection in musculoskeletal radiographs. arXiv Prepr arXiv171206957

Weimer D, Scholz-Reiter B, Shpitalni M (2016) Design of deep convolutional neural network architectures for automated feature extraction in industrial inspection. CIRP Ann 65:417–420

Abadi M, Agarwal A, Barham P, et al (2016) TensorFlow: large-scale machine learning on heterogeneous distributed systems. arXiv preprint arXiv:1603.04467

Lever J, Krzywinski M, Altman N (2016) Classification evaluation. Nat Methods 13:603–604

Zhang X, Wu D (2019) On the vulnerability of CNN classifiers in EEG-based BCIs. IEEE Trans Neural Syst Rehabil Eng 27:814–825

Cui Y, Wu D, Huang J (2020) Optimize TSK fuzzy systems for classification problems: mini-batch gradient descent with uniform regularization and batch normalization. IEEE Trans Fuzzy Syst 28:3065–3075

He K, Zhang X, Ren S, Sun J (2016) Deep residual learning for image recognition. In: Proceedings of the IEEE conference on computer vision and pattern recognition. pp 770–778

Chollet, François (2015) Keras. In: GitHub Repos. https://github.com/fchollet/keras

Lu Y-W, Liu K-L, Hsu C-Y (2019) Conditional Generative Adversarial Network for Defect Classification with Class Imbalance. In: 2019 IEEE international conference on smart manufacturing, industrial & logistics engineering (SMILE). IEEE, pp 146–149

Selvaraju RR, Cogswell M, Das A, et al (2017) Grad-cam: Visual explanations from deep networks via gradient-based localization. In: Proceedings of the IEEE international conference on computer vision. pp 618–626

Acknowledgement

The authors would like to acknowledge financial support from the National Program on Key Basic Research Project (Grant No. 2019YFB1704900), the National Natural Science Foundation Council of China (Grant No. 51675199) and the Key Basic Research Project of Guangdong Province (Grant No. 2019B090918001).

Ethics declarations

Conflict of interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Cite this article

Liu, J., Guo, F., Gao, H. et al. Image classification method on class imbalance datasets using multi-scale CNN and two-stage transfer learning. Neural Comput & Applic 33, 14179–14197 (2021). https://doi.org/10.1007/s00521-021-06066-8

DOI: https://doi.org/10.1007/s00521-021-06066-8