Abstract

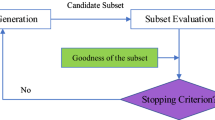

Feature selection is an important and difficult problem in classification. Particle swarm optimization (PSO) is an efficient evolutionary computing technique that has been widely used for feature selection. However, the traditional initialization and the personal best and global best updating mechanisms in PSO often limit its performance for feature selection, and they need further exploration to realize the full potential of PSO for this task. This paper proposes two new efficient initialization and updating mechanisms in PSO with the goal of minimizing the number of features and maximizing classification performance in less computational time. The proposed algorithms are compared with six existing feature selection methods: two traditional PSO-based methods and four PSO-based methods with different initialization strategies and updating mechanisms. Experiments on eight benchmark datasets show that the proposed algorithms can automatically evolve a feature subset that is smaller and yields higher classification performance than using all features. The proposed algorithms also outperform the six existing feature selection algorithms in terms of classification accuracy, number of features, and computational cost.
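For orientation, the baseline that such mechanisms modify can be sketched as a standard binary PSO over feature masks, with uniform random initialization and the usual personal best and global best updates. This is a minimal illustration only, not the initialization or updating mechanisms proposed in this paper, which the abstract does not specify. The names `binary_pso`, `toy_fitness`, and `RELEVANT`, the parameter values, and the fitness function are all assumptions; in a wrapper setting the fitness would typically be a classifier's accuracy on the selected features.

```python
import math
import random

# Hypothetical set of informative feature indices, used only by the toy fitness.
RELEVANT = {0, 3, 5}


def toy_fitness(mask):
    """Toy stand-in for classifier accuracy: reward relevant features, penalize subset size."""
    hits = sum(mask[i] for i in RELEVANT)
    return hits / len(RELEVANT) - 0.1 * sum(mask) / len(mask)


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def binary_pso(fitness, n_features, n_particles=20, n_iters=50,
               w=0.7, c1=1.5, c2=1.5, v_max=6.0, seed=0):
    """Standard binary PSO (maximization) with traditional random initialization."""
    rng = random.Random(seed)
    # Traditional initialization: each bit is set uniformly at random.
    pos = [[rng.randint(0, 1) for _ in range(n_features)] for _ in range(n_particles)]
    vel = [[rng.uniform(-1.0, 1.0) for _ in range(n_features)] for _ in range(n_particles)]
    pbest = [p[:] for p in pos]
    pbest_fit = [fitness(p) for p in pos]
    g = max(range(n_particles), key=lambda i: pbest_fit[i])
    gbest, gbest_fit = pbest[g][:], pbest_fit[g]

    for _ in range(n_iters):
        for i in range(n_particles):
            for d in range(n_features):
                r1, r2 = rng.random(), rng.random()
                v = (w * vel[i][d]
                     + c1 * r1 * (pbest[i][d] - pos[i][d])
                     + c2 * r2 * (gbest[d] - pos[i][d]))
                vel[i][d] = max(-v_max, min(v_max, v))  # clamp velocity
                # The sigmoid of the velocity is the probability that the bit is 1.
                pos[i][d] = 1 if rng.random() < sigmoid(vel[i][d]) else 0
            f = fitness(pos[i])
            # Traditional updating: replace pbest/gbest only on strictly better fitness.
            if f > pbest_fit[i]:
                pbest[i], pbest_fit[i] = pos[i][:], f
                if f > gbest_fit:
                    gbest, gbest_fit = pos[i][:], f
    return gbest, gbest_fit


best_mask, best_fit = binary_pso(toy_fitness, n_features=8)
selected = [i for i, bit in enumerate(best_mask) if bit]
print("selected features:", selected, "fitness:", round(best_fit, 4))
```

Because the traditional update compares fitness alone, a particle that reaches the same fitness with fewer features does not replace its personal best in this sketch, which is the kind of limitation the abstract refers to.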

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

About this article

Cite this article

Huda, R.K., Banka, H. New efficient initialization and updating mechanisms in PSO for feature selection and classification. Neural Comput & Applic 32, 3283–3294 (2020). https://doi.org/10.1007/s00521-019-04395-3

DOI: https://doi.org/10.1007/s00521-019-04395-3