Abstract

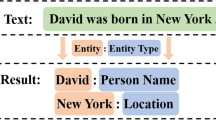

The joint extraction task aims to construct entity-relation triples, each comprising two entities and the relation between them. Existing joint models struggle to process the large number of overlapping relations in Chinese patent texts (CPT). To address this problem, this article introduces a joint entity and relation extraction model for CPT based on a directed-relation graph attention network (DGAT). First, word-character tokens are obtained from CPT using BERT and serve as the DGAT model input. A BiLSTM network then expands them into global tokens, enhancing the contextual connections of the model input. Second, the DGAT model encodes the global tokens as a fully connected graph whose nodes represent the global tokens and whose edges denote the relations between them. The DGAT model assigns weights to the edges that carry a directed relation and prunes the other edges, resulting in a directed-relation-connected graph. Finally, the entity-relation triples are decoded from the directed-relation-connected graph using conditional random fields (CRF). Experimental results show that the proposed model achieves high accuracy on the CPT dataset.
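As a rough illustration of the second step (weighting directed edges and ignoring pruned ones), the following sketch applies softmax attention only along a given set of kept directed edges. It is not the authors' implementation: the function name `directed_attention`, the dot-product scoring function, and the toy vectors are all assumptions made for illustration, and the set of kept edges is passed in directly because the abstract does not state how the model decides which edges to prune.

```python
import math


def directed_attention(nodes, directed_edges):
    """Attend only along kept directed edges (i -> j) and aggregate neighbours.

    nodes: list of equal-length feature vectors, one per global token.
    directed_edges: set of (source, target) index pairs that survive pruning.
    Returns (weights, updated): weights[(i, j)] is the attention node i pays
    to node j, and updated[i] is the re-encoded feature vector of node i.
    """
    n = len(nodes)
    weights = {}
    updated = []
    for i in range(n):
        # Outgoing neighbours of node i in the pruned, directed graph.
        targets = [j for j in range(n) if (i, j) in directed_edges]
        if not targets:
            # No outgoing directed relation: keep the node unchanged.
            updated.append(list(nodes[i]))
            continue
        # Assumed scoring function: plain dot product of node features.
        scores = [sum(a * b for a, b in zip(nodes[i], nodes[j])) for j in targets]
        # Softmax over the surviving edges only; pruned edges get no weight.
        peak = max(scores)
        exps = [math.exp(s - peak) for s in scores]
        total = sum(exps)
        alphas = [e / total for e in exps]
        for j, alpha in zip(targets, alphas):
            weights[(i, j)] = alpha
        # Weighted sum of the neighbours' features.
        dim = len(nodes[i])
        updated.append([
            sum(alpha * nodes[j][d] for j, alpha in zip(targets, alphas))
            for d in range(dim)
        ])
    return weights, updated


# Toy example: three token nodes, with only two directed edges kept.
nodes = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
kept = {(0, 1), (0, 2)}
weights, updated = directed_attention(nodes, kept)
```

In this toy graph, node 0 distributes its attention over nodes 1 and 2, while nodes 1 and 2 have no outgoing edges and keep their original features. A trained model would use learned projections and a learned scoring function rather than the raw dot product used here.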

Data availability

The data cannot be made available for privacy reasons.

Funding

This work was supported by the Anhui University Postgraduate Scientific Research Project (Grant No. YJS20210368) and the National Natural Science Foundation of China (Grant Nos. 62076006 and 60973050).

Ethics declarations

Conflict of interest

The authors Yushan Zhao, Kuan-Ching Li, Tengke Wang, and Shunxiang Zhang declare that they have no conflict of interest. All authors have approved this manuscript for publication. Yushan Zhao declares on behalf of all co-authors that the work described is original research that has not been published previously.

Ethical approval

This article does not contain any studies with human participants or animals performed by any of the authors.

Informed consent

No human or other individual participants were involved in this study.

About this article

Cite this article

Zhao, Y., Li, KC., Wang, T. et al. Joint entity and relation extraction model based on directed-relation GAT oriented to Chinese patent texts. Soft Comput (2024). https://doi.org/10.1007/s00500-024-09629-8

DOI: https://doi.org/10.1007/s00500-024-09629-8