Abstract

Predicting volatility is a critical activity for taking risk- adjusted decisions in asset trading and allocation. In order to provide effective decision-making support, in this paper we investigate the profitability of a deep Long Short-Term Memory (LSTM) Neural Network for forecasting daily stock market volatility using a panel of 28 assets representative of the Dow Jones Industrial Average index combined with the market factor proxied by the SPY and, separately, a panel of 92 assets belonging to the NASDAQ 100 index. The Dow Jones plus SPY data are from January 2002 to August 2008, while the NASDAQ 100 is from December 2012 to November 2017. If, on the one hand, we expect that this evolutionary behavior can be effectively captured adaptively through the use of Artificial Intelligence (AI) flexible methods, on the other, in this setting, standard parametric approaches could fail to provide optimal predictions. We compared the volatility forecasts generated by the LSTM approach to those obtained through use of widely recognized benchmarks models in this field, in particular, univariate parametric models such as the Realized Generalized Autoregressive Conditionally Heteroskedastic (R-GARCH) and the Glosten–Jagannathan–Runkle Multiplicative Error Models (GJR-MEM). The results demonstrate the superiority of the LSTM over the widely popular R-GARCH and GJR-MEM univariate parametric methods, when forecasting in condition of high volatility, while still producing comparable predictions for more tranquil periods.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Asset management decisions made by investors with global portfolios of equities, bonds and other asset management instruments are driven by the fundamental principle of maximizing the expected return on a given level of risk. These decisions include the allocation of assets through trading in asset management instruments subject to price changes in order to obtain the best combination of risk and reward (known as the risk-reward trade-off) that takes into account the investor’s specific assets and objectives.

There is no doubt that the decision on how to invest heritage lies primarily in the ability of human judgment to recognize opportunities for return based on the economic, social and political context and the events that condition its evolution. The altered context translates into a change in the attractiveness of asset management assets, i.e., in their value and in turn in the price that can lead to gains or losses.

Another element that helps to change the price is those factors that specifically affect a company, a sector or even a country. Prudent management relies on decisions to diversify investments between assets with a negative price correlation, i.e., assets whose price tends to move in opposite directions. In this way, any losses in value on certain assets can be offset at least in part by returns on other assets. Finally, trading activity and the additional element contribute significantly to the price fluctuations of an asset. It reflects the ability to cross supply and demand on the asset management markets.

Therefore, risk, return and asset correlation are the key measures used in asset management models. Quantifying the potential loss of assets is a major part of risk management, trading in asset management markets and asset allocation. To be able to measure these losses and make informed investment decisions, investors need to estimate risks (Blume 1971). Since volatility has some well-known statistical regularities that make it inherently forecastable, it is among one of the most accepted and used measures of risk in the financial market. These regularities include the volatility clustering effect, leading to positive and persistent auto-correlations of volatility measures, the leverage effect, which is related to the negative correlation between past returns and current volatility values, and the dynamic cross-correlation between the volatilities of different assets that give rise to the well-known phenomenon of volatility spillovers. In addition, it is worth remembering that volatility is a key ingredient for computing more refined risk measures such as the Value at Risk or the Expected Shortfall.

Predicting volatility is challenging task, where modern artificial intelligence can come at rescue. Conventional techniques, mostly based on GARCH modeling and its variant, are not able to consider a market as a whole, thus volatility spillovers. In this paper, we aim to show that deep learning can help to build models of volatility forecasting, discussing properties and experimental results. In particular, the paper focuses on LSTM as an effective tool for the purpose of estimating the forecasting volatility.

In the evaluation of volatility forecasts, identifying the underlying market regime is of fundamental importance, as generating accurate predictions is more important in periods of higher volatility of the stock markets rather than in more tranquil periods. In fact, in periods of greater price variability, such as those that accompany and follow periods of economic and financial crisis, the timing risk in trading operations exposes to a greater risk of large losses. It is, at this stage, important to be aware that the forecasting performance of different approaches to volatility forecasting can be highly dependent on the market regime, as identified in terms of the corresponding long-run volatility level. An obvious consequence is that the (ex-ante) identification of the best performing model could, and should, take into account the underlying volatility level.

Despite the significant progresses in the field of financial econometrics, see, e.g., Hamilton and Susmel (1994) and Kim and Kim (1996), and artificial intelligence, this task is still very complex and expert judgment still plays a fundamental role in recognizing the factors that anticipate and characterize moments of high uncertainty in the markets. This is due to the fact that, adequately supported by the outcome provided by quantitative forecasting models, human intervention is able to convey heterogeneous sources of information, of both quantitative and qualitative nature, that would be otherwise hardly embedded in any formalized quantitative forecasting approach.

In this paper we propose a model for volatility prediction that is based on LSTM. Comparison to existing approaches, namely R-GARCH and GJR-MEM, together with conventional RNN, is performed with respect to different volatility regimes, in order to point out how the identification of the market volatility regime is important for identification of the optimal forecasting model.

LSTM effectiveness is showcased by using a panel of 28 assets representative of the Dow Jones Industrial Average index combined with the market factor proxied by the SPY and, separately, a panel of 92 assets belonging to the NASDAQ 100 index. The Dow Jones plus SPY data are from January 2002 to August 2008, while the NASDAQ 100 is from December 2012 to November 2017. Both markets, in the periods considered, were affected by extremely critical events as the global financial crisis (GFC) of 2007–2008 and the consequences of the European debt crisis 2011–2012, which led to changes in the level and dynamics of market volatility during and after the crisis.

The remainder of the paper is structured as follows. An overview of related literature is given in Sect. 2. The data used in the empirical application and the investigated techniques are described in Sect. 3.1. The LSTM approach and the related experimental setup are briefly discussed in Sects. 3.2 and 3.3. The two parametric competitors used as benchmarks in our empirical analysis, i.e., the R-GARCH and GJR-MEM, are presented in Sects. 3.4 and 3.5, respectively, while Sect. 3.6 introduces the two loss functions used for training the models and the metrics for out-of-sample forecast evaluation. The empirical results of the out-of-sample forecasting comparison are presented and discussed in Sect. 4, followed by the comparison with Recurrent Neural Networks in Sect. 5. Conclusions are given in Sect. 6.

2 Related work

As the prediction of volatility is a major factor in risk analysis, many efforts have been made to implement parametric as well as nonparametric predictive methods for forecasting future volatility. Depending on the reference information set, the proposed approaches can be classified into two broad categories. Of these, the first includes approaches fitted to time series of daily log-returns. In the parametric world, this class of methods includes the Generalized Autoregressive Conditionally Heteroskedastic (GARCH) models (Bollerslev 1986) and their numerous univariate and multivariate recent extensions (Bauwens et al. 2006; Gerlach and Wang 2016; Huang et al. 2017; Wang et al. 2018). In the nonparametric world, we recall a consistent number of papers applying nonparametric approximators of squared returns (such as smoothing splines Langrock et al. 2015; Zhang and Teo 2015 or neural networks to time series Fischer and Krauss 2018; Kourentzes et al. 2014; Wang et al. 2015) which provides an unbiased but noisy volatility proxy.

The second and more recent class of approaches for volatility forecasting replaces this noisy volatility proxy with more efficient realized volatility measures (Andersen and Teräsvirta 2009) built from time series of high-frequency asset prices. Notable examples of parametric models falling into this second class are the Multiplicative Error Models (MEM) Engle and Russell (1998) and the Realized GARCH (R-GARCH) Hansen et al. (2012) techniques. The main structural difference between R-GARCH and MEM models is that R-GARCH uses bivariate information on log-returns and realized volatility, whereas MEM are directly fitted to a univariate realized volatility series—log-returns are eventually used only as external regressors for capturing leverage effects. Similarly, in a nonparametric environment, neural networks (McAleer and Medeiros 2011) or other nonparametric filters (Chen et al. 2018) can be applied to time series of realized volatility measures to forecast future volatility. See also Han and Zhang (2012) nonparametric volatility modeling for non-stationary time series.

Several extensions have been proposed to parametric models. For instance, in Andersen et al. (2007) the authors suggest that the total return variation process can be separated in jumps and non-jumps movements, where almost all of the predictability in daily, weekly, and monthly return volatilities comes from the non-jump component. Building on this idea, Maciel et al. (2017) investigated evolving possibilistic fuzzy modeling to forecast realized volatility with jumps. Their possibilistic model improves robustness to noisy data and outliers, which is an essential requirement in financial markets volatility modeling and forecasting.

All above-discussed approaches are univariate. Nevertheless, the presence of phenomena such as common features and volatility spillovers make the analysis of multivariate volatility panels potentially very profitable. In a parametric setting, however, the number of required model parameters explodes rapidly as the cross-sectional dimension of the panel increases, making the estimation unfeasible even for moderately large dimensions, unless some heavy, and untested parametric restrictions are imposed.

Typically, it is often assumed that all the volatilities in the panel share the same dynamic dependence structure and volatility spillovers are not present (Pakel et al. 2011). Those assumptions are clearly unrealistic and they greatly reduce the ability of the parametric models, albeit multivariate, to describe the complexity of the dynamic structure which is observed in financial time series. These considerations contribute to the scarce attention that multivariate models for volatility panels have received in the literature on parametric modeling of financial time series.

Feed-forward neural networks are a favorite class of multivariate, nonparametric models used to study dependencies and trends in the data, e.g., using multiple inputs from the past to predict the future time step (Chakraborty et al. 1992). However, when using traditional neural network models, much effort is devoted to making sure that what is presented for training in the input layer is already in a format that allows the network to recognize the significant patterns (“feature engineering”). This process usually requires some ad-hoc procedures and soon becomes one of the most time-consuming parts of neural network modeling. In a deep learning framework instead, by adding more and more layers between input and output (hence “deep”), the model allows richer intermediate representations to be built and most of the feature engineering process can be achieved by the algorithm itself, in an almost automatic fashion. This latter point improves prediction accuracy and strongly widens the domains of applications. As a drawback, deep learning models require a large amount of data to outperform other approaches and are computationally expensive to train. However, in financial applications, a large amount of data can be quickly gathered, making deep learning applications appropriate and viable.

The main focus of this paper is on proving the effectiveness of using deep learning techniques for multivariate volatility forecasting. In particular, a deep Long Short-Term Memory (LSTM) Neural Network (Hochreiter and Schmidhuber 1997) is applied. LSTMs can have several advantages compared to the modeling approaches used so far in the literature. The application of this class of models to multivariate volatility forecasting is particularly appealing since it allows to overcome the curse of dimensionality typically limiting the application of complex multivariate parametric models.

Firstly, they can be seen as nonparametric statistical models, and consequently, they do not suffer from the misspecification problems which typically affect parametric modeling strategies. Secondly, they can overcome the curse of dimensionality problem which affects both standard nonparametric estimation techniques and several multivariate parametric models (Poggio et al. 2017). This makes the use of LSTMs feasible even for high dimensional temporal datasets. Moreover, they do not require any undesirable reduction of the parameter space through untestable, unrealistic restrictions, which, otherwise, might significantly reduce the ability of the model to reveal well-known stylized facts about multivariate financial time series (such as spillovers and complex dynamics). Finally, they can benefit from modeling complex nonlinear and long-term dependencies, leading to improved accurate predictions (Gers et al. 2002).

One of the main benefits of using a recurring network such as LSTM is the ability to make the prediction adaptive to time. The concept of internal state/memory translates into an implicit stretching or warping of the time axis. This detail is well known to those involved in speech analysis, since the correspondence between patterns must necessarily take into account an inevitable misalignment along the time axis. In the financial domain we are dealing with in this paper, this translates into the need to consider a misalignment of the time series of asset prices or volatility in a market, due to market inefficiencies or propagation between sectors. To solve this problem, one of the most popular techniques is dynamic time warping (DWT) (Itakura 1975). This aspect has been very recently taken up and generalized by Rivest and Kohar (2020). In their work, they propose squared timing error (STE) as a new cost function of timing error that incorporates the principles of DWT. Among the various experiments, they also consider a price prediction problem in finance. Their method focuses on binary time series. In this case, they try to determine when a price exceeds a certain threshold. In the experiment they assume the closing prices of the shares that make up the NASDAQ-100 index in the period from April 1, 2013 until March 31, 2014. They calculate the 14-day moving average, assuming as threshold 0.7 of historical volatility in the same period, i.e., the 14-day moving standard deviation.

However, the advantage of using a recurring network, whether LSTM or any other architecture, is precisely in incorporating propagation effects into hidden state units. The question of how to make the internal memory of a recurring network effective has been the focus of many recent developments. Yu et al. (2019) offer an extensive overview of them. Besides the pioneering work of Hochreiter and Schmidhuber (1997) that led to the definition of LSTM, it is worth to mention the contributions given by Cho et al. (2014) regarding the Gated Recurrent Unit (GRU), by Kalchbrenner et al. (2015) for Grid LSTM, by Shi et al. (2015) for Convolutional LSTM. A different approach is fostered by Graves et al. (2014) for their Neural Turin Machine, suggesting to make explicit into the architecture an external addressable memory. Following this idea, one of the latest developments in this area is offered by Quan et al. (2020), where they propose to equip a RNN with an external addressable working memory (EAWM). This makes short and long-term information explicit and directly manipulable, letting SGD to train the network end-to-end.

Following a similar approach, Nápoles et al. (2020) propose long-term cognitive network (LTCN) as a neural cognitive mapping technique able to store long-term dependencies between input and output sequences, especially in a context where values of several dependent variables have to be predicted. The approach consists in preserving the knowledge of the expert encoded in a matrix of weights, trying to optimize the nonlinear relationship offered by the activation function of each neuron. In this sense, the training algorithm is called non-synaptic back-propagation.

Most of the above work focuses on sequence analysis in natural language processing problems, in particular machine translation, text comprehension and text generation. Although LSTM is now a tool made available to finance (e.g., see Troiano et al. 2018), at the best of our knowledge the method proposed in this paper is novel as no attempt to specify and estimate a comprehensive nonparametric volatility forecasting model for the whole market has been made so far. In particular, nobody approached the problem using Deep Learning (i.e., LSTM).

3 Materials and methods

As mentioned above, the methodology proposed in this work is based on the application of Long Short-Term Memory (LSTM) Neural Network for the forecast of the volatility of the assets at a particular time, given its past values.

3.1 Data

Two datasets are used for the empirical experimentation. The first dataset used by Hansen et al. (2012), includes 28 assets from the Dow Jones Industrial Average (DJI 500) index plus one exchange-traded index fund SPY, that tracks the S &P 500 index. The sample spans the period from 1st January 2002 to 31st August 2008, including 1549 trading days. The second dataset is related to 92 stocks belonging to the NASDAQ 100 index within the period 1st December 2012 to 29th November 2017, for a total of 1256 trading days. Further detail on the two datasets can be found in Tables 1 and 2, respectivelyFootnote 1.

Each asset is represented by two different time series, the realized measure, namely volatility, and the related open–close return. The realized measure \(v_t\) is given by a realized kernel estimator computed using the Parzen kernel function. This estimator is similar to the realized variance, and more importantly, it is robust to market micro-structure noise and is more accurate than the quadratic variation estimator. The implementation of the realized kernel follows the method proposed by Barndorff-Nielsen et al. (2011) that guarantees a positive estimate.

3.2 Long short-term memory network

Long Short-Term Memory (LSTM) Network (Gers et al. 1999; Hochreiter and Schmidhuber 1997) is a Recurrent Neural Network (RNN) architecture that acts as a Universal Turing Machine learner: given enough units to capture the state and a proper weighting matrix to control its evolution, the model can replicate the output of any computable function.

Because of this noticeable characteristic, LSTM is largely employed in tasks of sequence processing, e.g., in natural language processing (Sundermeyer et al. 2015; Tran et al. 2016; Yao et al. 2014), speech recognition (Graves et al. 2013; Han et al. 2017; Suwajanakorn et al. 2017), automatic control (Gers et al. 2002; Hirose and Tajima 2017), omics sciences (Lee et al. 2016; Leifert et al. 2016), and others. The LSTM networks are gaining increasing interest and popularity in time series modeling and prediction, as they can model long and short range dependencies (Bianchi et al. 2017; Zaytar and El Amrani 2016).

LSTM architecture as presented by Graves (2013). a shows the internals of a specific cell, while b shows how the sequence is propagated through the LSTM cells

There are several variations (Graves 2013; Wang 2017; Wang and Niepert 2019) of the original model proposed by Hochreiter and Schmidhuber (1997). In this paper we adopt the model presented by Graves (2013) (Fig. 1a) which is governed by the set of equations given below:

The core of the LSTM is represented by the \(c_t\) which acts as a memory accumulator of the state information at time t. The state evolves according to Eq. (3), subject to two elements—the “forget gate” and the “input gate”, represented at time t by the variables \(f_t\) and \(i_t\), respectively. The role of \(f_t\) is to erase the memory \(c_{t-1}\) according to the current input \(x_t\) (comprising open-close return and volatility), the state \(l_{t-1}\) and the memory \(c_{t-1}\) (Eq. (2)). The forget gate is counterbalanced by the input gate (Eq. (1)) that making use of the same information has instead the role of reinforcing or replacing the memory by activating a combination \(x_t\) and \(l_{t-1}\) (Eq. (3)). These last functions, as those governing the activation of \(f_t\) and \(i_t\) are learned as single-layer perceptrons using the logistic function \(\sigma \) (Eq. (1) and Eq. (2)), or the \(\tanh \) function (Eq. (3)) as activation, where \(b_i\), \(b_f\) and \(b_c\) are the respective biases. Once the memory is recomputed at time t, the LSTM emits the output \(o_t\) as a function of \(x_t\), \(l_{t-1}\) and the memory \(c_t\) (Eq. (4)). This latter function is also learned as a single-layer perceptron and finally, the LSTM computes the state \(l_t\) as given by Eq. (5). Figure 1b shows how a sequence is propagated through the LSTM.

The main advantage of this architecture is that the memory \(c_t\) is refreshed under the control of gates so that the gradient is limited to the last stage (also known as constant error carousels Gers and Schmidhuber 2001; Gers et al. 1999) and prevented from vanishing too quickly. This latter issue is a critical one and a well-known limitation of RNN based on older architectures, such as the Elman’s and Jordan’s reference models (Jozefowicz et al. 2015; Pascanu et al. 2013).

Because the LSTM learning function can be decomposed into multiple intermediate steps, LSTMs can be “stacked” such that the information produced by one LSTM step becomes the input to another. This “stacked” architecture has been applied to many real-world sequence modeling problems (Sutskever et al. 2014; Xu et al. 2015).

3.3 Experimental setup

The proposed model is a 2-layer stacked LSTM made of 2n input and n output units, respectively, with n being the number of assets. This means 58/29 for DJI 500 and 184/92 for NASDAQ 100. The output of the top LSTM is given as input to a dense activation layer designed to provide the model’s output (see Fig. 2 for a schematic description of the model). The hidden activation function is a hyperbolic tangent, while the recurrent activation is a hard sigmoid (default activation functions for LSTM, as advised in Hochreiter and Schmidhuber 1997). To avoid negative forecasts (the realized volatility is always continuous positive), a softplus function is used in the output layer.

Two topologies of LSTM are tested and evaluated: univariate and multivariate.

LSTM-1 has a univariate architecture (one model independently trained for each asset) so takes as input only one asset at a time (open-close return and volatility for a chosen past window \((r_{t-k},..,r_{t-1}, v_{t-k},..,v_{t-1} )\)) and produces as output the one-step-ahead volatility (\(v_{t}\)).

LSTM-n has a multivariate architecture, where a single model is trained using all the assets. This version takes as input the daily returns and volatilities for a given past window \((r^i_{t-k},..,r^i_{t-1}, v^i_{t-k},..,v^i_{t-1} )\), \(i=1, \ldots , n\) and outputs the n one-step-ahead volatility (\(v^i_{t}\), \(i=1,\ldots , n\)), where \(n=29\) for DJI 500 and \(n=92\) for NASDAQ 100.

The model is pre-trained by using the first 300 days of our dataset with a look-back window of 20 days. Subsequently, a rolling forecast is applied from day 301 onward. In particular, every time the one-step-ahead volatility is predicted, its observed features (realized return and volatility) are used to refine the network state, before moving the time window one step. This procedure allows having an up-to-date network, every time new information is available.

To avoid the look-ahead bias, the walk-forward testing technique (Ladyzynski et al. 2013) has been applied on the initial year of trading data and a grid search used to optimize the hyper-parameters of the network. The validation is performed by scanning the data using a sliding window of length m (i.e., the look-back) on which the model is trained on, and subsequently predicting on the following n samples (in our case, with one-step-ahead prediction, n = 1). When the end of this subset is reached the optimization window is shifted forward by n. The grid search boundaries and optimal values are reported in Table 3. The optimal number of training epochs on the initial data was found to be in line with the optimal number of days used for pre-training (i.e., 300 days), while the number of epochs for the rolling period had its best value consistent with the size of the look-back (i.e., 20 days, equivalent to four weeks of trading data). We observed that a smaller number of epochs would produce an under-fitted network (smooth forecast trending with the average of the last few data points), while a longer training would produce an over-fitting network (giving a lot of weight to the most recently shown data point). Furthermore, a larger look-back would impact the convergence (probably due to the vanishing gradient problem). In addition, dropout, a standard regularization technique used for deep learning models, was used to avoid over-fitting, and its best value was found to be around 0.20, as also suggested in Wan et al. (2013). Lastly, we employed two loss functions to train our model, which are described in Sect. 3.6.

3.3.1 LSTM computational complexity

In this section we report the computational complexity of our proposed univariate and multivariate models.

The LSTM computational complexity can be estimated by calculating the number of operations and number of trainable parameters of the model.

Considering a network taking input vectors of size m and giving output vectors of size n, the LSTM has a set of 2 matrices: U of dimension nm and W of dimension nn for each of the three gates and one set for updating the cell state. Lastly, there is a set of n biases.

The memory complexity (number of trainable weights) of the LSTM can be calculated with the following formula: \(4(nm + n^2 + n)\).

The time complexity (i.e., number of operations required to perform one training step) is calculated as follows. A matrix multiplication requires mn multiplications and \(m(n-1)\) additions, this needs to be done for mn elements, so the complexity is \(mn(n + (m-1))\) = \(n^2m+nm^2-nm\)

This operation is done for each gate (3), for the cell state, and for the number of time steps (k = 20), \(4k(n^2m+nm^2-nm)\).

While the number of operations required for one epoch grows with the size of the inputs and outputs of the model, the computational need is still negligible when compared to the available computational power when employing a GPU card. For instance, the biggest multivariate model trained in the empirical experimentation, requires roughly 744 million operations for one epoch, while the graphic card we used (i.e., NVIDIA GeForce 1070) has a computational power of 6.5 TFLOPS, which allows to run 9 epochs per second.

Table 4 reports the total number of weights and number of operations for each of the models trained in the empirical experimentation.

3.3.2 Data standardization

Before training the model, the data are standardized with 0-mean and 1-variance. Since our features are in \(\mathrm{I\!R}^+\), for each sample (\(r^2_t\), \(v_t\)), its negative (\(-r^2_t\), \(-v_t\)) is added to the data (to have a perfect bell-shaped distribution). The resulting distribution is already mean-centered, hence the values are divided by their standard deviation, and finally, the added negative values are dropped to restore the original set of observations.

3.4 Realized GARCH (R-GARCH)

The Realized GARCH introduced by Hansen et al. (2012) has extended the class of GARCH models by replacing, in the volatility dynamics, the squared returns with a much more efficient proxy such as a realized volatility measure. The structure of the R-GARCH(1, 1) in its linear formulation is given by:

where \(z_t \mathtt {\sim } i.i.d.(0,1)\) and \(u_t \mathtt {\sim } i.i.d.(0,\sigma ^2_u)\) with \(z_t\) and \(u_t\) being mutually independent. The first two equations are the return equation and the volatility equation that define a class of GARCH-X models, including those estimated in Visser (2011), Engle (2002), and Barndorff-Nielsen and Shephard (2005). The GARCH-X acronym refers to the fact that \(v_t\) is treated as an exogenous variable. It is worth noting that most variants of ARCH and GARCH models are nested in the R-GARCH framework. The measurement equation is justified by the fact that any consistent estimator of the Integrated Variance can be written as the sum of the conditional variance plus a random innovation, where the latter is captured by \(\tau (z_t) + u_t\). The function \(\tau (z_t)\) can accommodate leverage effects, because it captures the dependence between returns and future volatility. A common choice (Hansen et al. 2012) that has been found to be empirically satisfactory is to use the specification:

Substituting the measurement equation into the volatility equation, it can be easily shown that the model implies an AR(1) representation of \(h_t\):

where \( w_t = \tau (z_t) + u_t\). Furthermore, it is assumed that the expectation of \(E(w_t) = 0\). The coefficient \((\beta + \varphi \gamma )\) reflects the persistence of volatility, whereas \(\gamma \) summarizes the impact of the past realized measure on future volatility.

The general conditions required to ensure that the volatility process \(h_t\) is stationary and the unconditional variance of \(r_t\) is finite and positive are given by:

If the conditions in Eq. (11) are fulfilled, the unconditional variance of \(r_t\), taking expectations of both sides in Eq. (10), can be easily shown to be equal to \((\omega + \xi \gamma )/[1-(\beta + \varphi \gamma )]\). Finally, as for standard GARCH models, the positivity of \(h_t\) (\(\forall t\)) is achieved under the general condition that \(\omega \), \(\gamma \) and \(\beta \) are all positive.

3.5 GJR-MEM

Multiplicative Error Models (MEM) were first proposed by Engle (2002) as a generalization to non-negative variables of the Autoregressive Conditional Duration (ACD) models of Engle and Russell (1998). Namely, let \(v_t\) be a discrete time process on \([0,\infty )\) (e.g., a realized measure). A general formulation of the MEM is

where \((\epsilon _t|{\mathcal {I}}_{t-1}){\mathop {\sim }\limits ^{iid}} D^+(1,\sigma ^2)\). It can be easily seen that

where the conditional expectation of the realized measure (\(\mu _t\)) provides an estimate of the latent conditional variance \(h_t\).

The GJR-MEM model is obtained by borrowing from the GARCH literature (Engle 2002; Glosten et al. 1993) the following dynamic equation for \(\mu _t\):

which allows the reproduction of volatility clustering as well as leverage effects.

Coming to the specification of the distribution of \(\epsilon _t\), any unit mean distribution with positive support could be used. Possible choices include Gamma, Log-Normal, Weibull, Inverted-Gamma and mixtures of them. In this paper we consider the Gamma distribution which is a flexible choice able to fit a variety of empirical settings. If \((\epsilon _{t}|{\mathcal {I}}_{t-1})\sim \Gamma (\theta ,\phi )\), its density is given by

However, since \(E(\epsilon _{t}|{\mathcal {I}}_{t-1})=\theta \phi \), to ensure unit mean, it is needed to impose the constraint \(\phi =1/\theta \) giving rise to the following density

Model parameters can then be estimated maximizing the likelihood function implied by the unit mean Gamma assumption. It is worth noting that these estimates have a quasi-maximum likelihood interpretation since it can be shown that, given that \(\mu _t\) is correctly specified, they are still consistent and asymptotic normal even if the distribution of \(\epsilon _t\) is misspecified.

3.6 Evaluation metrics

We have considered both online and offline evaluations. In the online evaluation case, two alternative loss functions have been used to train the LSTM models: the widely accepted Mean Squared Error (MSE) for regression and forecasting tasks; and the QLIKE function, particularly suitable for volatility forecasting (Patton 2011). For the offline evaluation case, we have considered a test data set (not used for training) for out-of-sample evaluation using MSE, QLIKE and the Pearson correlation index.

Given a vector \({{\hat{Y}}}\) of N forecasts and the vector Y of observed values, the MSE and QLIKE are defined as follows:

These measures are proposed in our evaluation framework since they are considered to be robust for assessing volatility forecast performance (Patton 2011). A robust measure must ensure that using a proxy for the volatility (the realized kernel in our case) gives the same ranking as using the true (unobservable) volatility of an asset.

Moreover, the Pearson correlation coefficient is computed between the forecast and realized volatility of each estimated model, to assess the models ability to follow the assets trends.

Also, statistical test, namely Diebold-Mariano (DM), is used to assess models’ Conditional Predictive Ability (CPA). The one-tail DM (Diebold and Mariano 2002) is used with squared error, predictive horizon equal to 1 (for one step ahead forecast) and a significance threshold at 0.05, to test the following NULL hypothesis ‘Model \(M_i\) has better predictive ability than model \(M_j\) with a size level equal to \(\alpha = 0.05\)’.

Lastly, the results are evaluated in terms of Value At Risk (VaR) and Expected Shortfall (ES) estimation which are widely adopted by practitioners and regulators as standard measures of market risk for financial assets. The VaR encapsulates in a point-wise fashion the potential market value loss of a financial asset over a time horizon h, at a significance or coverage level \(\alpha _{VaR}\). In our case we consider h = 1 and \(\alpha _{VaR}\) = 0.05. Its performance is evaluated using two metrics: the violation ratio (VR) and the average square magnitude function (ASFM). The VR is the percentage occurrence of an actual loss that is greater than the estimated maximum loss in the VaR framework while the ASFM considers the amount of possible default measuring the average squared cost of exceptions. The ES (Acerbi and Tasche 2002) is often referred to as the conditional VaR (cVaR). The predictive performance of the models under comparison in forecasting the pair (VaR, ES) is assessed by computing the Asymmetric Laplace Score (ALS), as defined in Taylor (2019): more accurate models are expected to return lower values of the ALS criterion. The VR and ASFM metrics are defined as in Dunis et al. (2010) and Maciel et al. (2017).

4 Results and discussion

In this section, the proposed approach is implemented in two variants: univariate (LSTM-1) and multivariate (LSTM-29 and LSTM-92). The method is compared with two state-of-the art methodologies, namely R-GARCH (Hansen et al. 2012) and GJR-MEM (Glosten et al. 1993). We discuss empirical results from an out-of-sample forecasting comparison, using returns and realized measures for the 28 Dow Jones Industrial Average stocks plus one exchange-traded index fund, SPY (which tracks the S &P 500 index) over a period of 1250 days and for the 92 stocks belonging to the NASDAQ 100 index over a period of 956 days.

4.1 Dow Jones industrial average 500

A detailed comparison of the methods performance is given in Table 5 with respect to each asset. The LSTM-29 approach reaches the lowest MSE error for 18 out of the 29 assets when compared with LSTM-1, R-GARCH and GJR-MEM methods. In particular, the LSTM-29 has a lower error compared to our univariate model for 25 out of 29 assets, equal in 2 and worse in 2 cases. Compared to R-GARCH, the LSTM-29 is better again in 25 out of 29 cases, equal for 1, and worse for 3 assets. Lastly, our proposed approach is better, equal and worse than GJR-MEM in 16, 3 and 10 cases, respectively. An example of the one step ahead prediction given by the LSTM-29 for the BAC asset is presented in Fig. 3.

Having a closer look at the MSE values from Table 5, the LSTM-29 is not better than the other benchmarks on assets with very low errors and hence volatility, in the considered period (e.g., JNJ, KO, PG and SPY).

Next, the forecast models are employed in risk management applications using Value At Risk (VaR). With the VaR estimates, the models are evaluated using the VR, CVR and the ASMF. Table 6 shows the values of VR, CVR and ASMF for VaR estimation using the four models for all DJI 500 assets. The LSTM-29 achieved better VR (values closer to our selected VaR confidence level—5%) compared to LSTM-1 in 15 out of 29 assets, equal for 3 and worse for 11; compared to the R-GARCH is better in 6 cases, equal in 2 and worse in 21, while compared to the GJR-MEM is better, equal and worse in 23, 1 and 5 cases, respectively.

For the CVR , the LSTM-29 achieved better results (the lower the value, the better) compared to LSTM-1 in 11 out of 29 assets, equal for 3 and worse for 15; compared to the R-GARCH is better in 21 cases, equal in 2 and worse in 6, while compared to the GJR-MEM is better, equal and worse in 5, 1 and 23 cases, respectively.

When considering the ASMF measure, the LSTM-29 is better than the LSTM-1, R-GARCH and GJR-MEM in 21, 17 and 17 cases; and worse in 8, 12 and 12 cases, respectively.

Figure 4 is a scatter plot illustration comparing for each asset, the performance of the LSTM-29 and the other models measured in terms of MSE difference (i.e., positive values representing smaller errors and better LSTM-29 performance) versus asset volatility in terms of its variance (i.e., higher values of variance representing stronger fluctuation in daily volatilities) over the out of sample period (1250 days).

As can be seen from Fig. 4, the LSTM-29 is generally comparable with the other models at lower volatility, while outperforming the LSTM-1 and the two state-of-the-art R-GARCH and GJR-MEM approaches in higher volatility regimes. This result is confirmed by the Pearson’s correlation index with values 0.825 against LSTM-1, 0.800 against R-GARCH, and 0.608 against GJR-MEM over the 29 assets.

To verify whether the proposed approach has statistically superior predictive ability, a Diebold-Mariano test is performed using a predictive horizon equal to 1 (one-step-ahead forecast). As it can be observed from the results reported in Table 7, the LSTM-29 has a better predictive ability for 10 out of 29 assets compared to the LSTM-1, 16 over 29 against the R-GARCH, and 6 out of 29 assets for the GJR-MEM, when considering a p-value strictly lower than 0.05. It is also worth noticing that in the remaining cases LSTM-29 is never worse than the compared models.

Furthermore, to test the dependence of forecasting accuracy on volatility conditions, we evaluated the errors (mean, median, standard deviation (std) and median absolute deviation (MAD)) for four volatility clusters: very low (VL); low (L); high (H); and very high (VH) (Table 8). The clusters are calculated taking the 50, 75 and 95 percentiles of the smoothed volatility over time, using a 10-day centered moving average and moving variance of all the assets. Specifically, for the moving average, we consider the following ranges: 0 to 0.50 (up to 50%) for VL; 0.50 to 0.85 (up to 75%) for L; 0.85 to 2.80 (up to 95%) for H; and 2.80 to 13.92 (up to 100%) for VH. For the moving variance the ranges are: 0 to 1.18 (up to 25%) for VL; 1.18 to 1.79 (up to 75%) for L; 1.79 to 4.38 (up to 95%) for H; and 4.38 to 15.60 (up to 100%) for VH. As can be seen from the DM test results in Table 9, the LSTM-1 performs better than the multivariate counterpart for relatively low volatility periods, while having inferior performance for higher volatility ones. The LSTM-29 is never worse than the R-GARCH, slightly worse than the GJR-MEM for low volatilities and always statistically better in high volatility settings.

However, it is worth noticing that the difference between LSTM-1 and LSTM-29 seen from Table 8 is in practice negligible, valuing 0.010 (VL) and 0.024 (L) for the mean, 0.014 (VL) and 0.030 (L) for the median. The real impact is made by the LSTM-29 within the VH volatility regime, where the difference to LSTM-1, R-GARCH, GJR-MEM is, respectively, 10.61, 7.17, and 2.40 for the mean and 0.59, 1.35, and 0.92 for the median. Considering that the risk in trading assets is considerable at higher volatility, the VH cluster is also the most important to pay attention to.

In all regimes, we observed the tendency of LSTM-29 to provide larger values of volatility when compared to R-GARCH and GJR-MEM estimates, and to be more conservative from a risk management perspective.

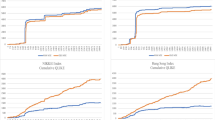

Figure 5 outlines the cumulative MSE recorded by the considered models in the four different volatility regimes. These curves are plotted by sorting the errors in decreasing order so that larger errors come first, which is the reason of the up-sloped shapes of the curves: they outline the tendency of models to accumulate larger errors along the experimentation. One can observe that the LSTM-29 performance is always better than the other models in regimes of H and VH volatility. In VL and L volatility regimes, the LSTM-29 is a little worse than GJR-MEM, but still better than R-GARCH in all considered regimes. This is not surprising as the R-GARCH and GJR-MEM are econometric models of volatility, while the LSTM is unaware of the underlying stochastic process. Instead, the LSTM-1 achieved a better accuracy for VL and L regimes, but performed poorly for the VH volatility regime. For completeness, the LSTM-29 was also trained without the index fund SPY, showing consistent results.

To investigate whether the proposed model is able to accurately predict volatility level throughout extreme market events, we also considered its predictive performance during the 2007–2008 crisis (in particular, focusing on the time span of 200 trading days starting from the 1st July 2007).

Table 10 shows the MSE scored by each model in the initial 1050 days (pre-crisis) and the last 200 days (in-crisis). As already shown in Fig. 5, the LSTM-1 and GJR-MEM are slightly better in the forecast within low volatility regimes (pre-crisis), closely followed by the LSTM-29. On the other hand, during the crisis period, the LSTM-29 performed better than LSTM-1 and R-GARCH in 28 out of 29 cases, and in 20 out of 29 cases when compared to GJR-MEM. Furthermore, R-GARCH was never able to achieve the best forecasting performance for any of the assets during both the pre-crisis and in-crisis periods.

In order to evaluate how much model A is better than model B, we compute their MSE ratio as:

which gives a value greater than 1, if model B has better accuracy than model A, and smaller than 1 otherwise. This metric has been applied in order to compare the four models performance during the pre-crisis and in-crisis periods.

As can be seen from Fig. 6a, the LSTM-29 is performing better than LSTM-1 for 28 out of 29 assets (i.e., all except for the MCD—the only point below the reference line) during the in-crisis period, with up to 1.8 MSE ratio.

When compared to the R-GARCH model (Fig. 6b), the LSTM-29 is showing similar performance. Again, the MCD is better predicted by the R-GARCH in both periods and three other assets are with worsened MSE ratio but are still better predicted by the LSTM-29. The remaining 25 assets showed an improved performance of LSTM-29 during the in-crisis period.

Lastly, the comparison with GJR-MEM (Fig. 6c), shows the LSTM-29 with increased accuracy on 15 assets during the 200 high risk days. Five assets are with slightly worsened prediction during the in-crisis period, five assets have close prediction accuracy (at near 1 ratio), and three assets (i.e., INTC, MSFT, and SPY) are better predicted by the GJR-MEM for both before and during the crisis.

Overall, the above discussed empirical results suggest that the use of the LSTM approach for volatility forecasting can be particularly profitable in turbulent periods where the economic pay-off derived from generation of more accurate volatility forecasts is potentially more substantial than those in more tranquil periods.

4.2 NASDAQ 100

Results for the NASDAQ 100 dataset are reported in Tables 11, 12 and Fig. 7. The latter shows the models’ cumulative MSE profile in the four volatility regimes for the NASDAQ 100 dataset. As already observed with the DJI 500 (Fig. 5), the proposed method generally achieves better accuracy when compared to the R-GARCH and GJR-MEM. In this experiment, the univariate LSTM-1 not only outperforms the state-of-the-art methods, but also the multivariate counterpart (LSTM-92) in all volatility regimes.

Table 11 reports the errors (mean, median, standard deviation (std) and median absolute deviation (MAD)) for the four volatility regimes. As it can be seen from Fig. 7, the LSTM-1 has smaller errors when compared to all other methods, for both moving average and moving variance volatilities over time. The mean/std are particularly high due to the difference in magnitudes across the 92 assets, which is also evident when using the more robust median/MAD metrics.

Furthermore, the DM test (Table 12) is used to statistically assess the difference in errors between the best model (LSTM-1) and the others (LSTM-92, R-GARCH and GJR-MEM). The DM test shows the LSTM-1 to be statistically better than the R-GARCH in all volatility regimes (p-value \(<0.05\)), better than LSTM-92 in three volatility regimes (i.e., VL, H and VH) for moving variance, and in another three regimes (i.e., L, H and VH) for the moving variance. In the case of GJR-MEM, the LSTM-1 results are statistically better in two volatility settings (i.e., VL and H).

As it can be seen, the LSTM-1 outperforms the multivariate model for the NASDAQ data. This limitation could be due to the fact that while we are increasing the number of assets, the number of samples in the time series is shorter (i.e., curse of dimensionality). To allow the multivariate model to better learn the assets’ interactions, there is the need for more data points.

5 Comparison with recurrent neural networks (RNN)

Eventually, we compare the proposed deep model with the classic Elman Network (also known as Simple Recurrent Network) (Elman 1990) on both DJI 500 and NASDAQ 100. The Elman RNN topology only stores the previous values of the hidden units, thus being only able to exploit information from the most recent past. This comparison is carried out to further justify the use of the more complex LSTM model. Table 13 presents the mean/std and median/MAD of the two methods (both univariate and multivariate) for the two datasets. As can be seen, the LSTMs performances are generally better than the RNN counterparts, achieving lower estimate errors for all analyzed volatility regimes and across all metrics (with only few exceptions for RNN-29 with moving variance). Furthermore, Fig. 8 illustrates the cumulative errors for the DJI 500 (Fig. 8a) and NASDAQ 100 (Fig. 8b) datasets. As can be observed, the error profiles of both univariate and multivariate LSTM are better (lower cumulative error) than those achieved by the two compared RNN models. This result further acknowledges the ability of more complex time series models to exploit both short- and long-term dependencies in the available data.

6 Conclusion

In this paper, we investigated the profitability of using LSTM for forecasting daily stock market volatility in order to support decision making in risk management applications. We applied the model to a panel of 29 assets representative of the Dow Jones Industrial Average index over the period 2002–2008, in addition to the market factor proxied by the SPY, and to 92 assets belonging to the NASDAQ 100 index within the period December 2012 to November 2017.

Both periods entail different market regimes related the outrise of two extremely critical events: the global asset management crisis (2007–2008) and the European debt crisis (2011–2012).

Our findings confirmed the superiority of the LSTM over widely popular univariate parametric benchmarks, such as the R-GARCH and GJR-MEM, when forecasting in regimes of high volatility, while still producing comparable predictions for the low/medium volatility periods. These conclusions are result of performance evaluation, using the MSE, QLIKE and the Pearson correlation index in addition to the Diebold-Mariano statistical test.

An attractive feature of the LSTM is that it easily allows taking into account volatility spillover phenomena which are dynamic dependence relationships among the volatilities of different stocks. Such dependency is hard to identify with conventional parametric approaches, due to the need of large number of parameters to be handled by the models. Furthermore, even simple models such as standard vector auto-regressive techniques are easily affected by the curse of dimensionality. On the other hand, the LSTM (belonging to an emerging class of deep learning approaches) demonstrates yet again its capability to cope with complex and highly nonlinear dependencies among the considered variables and in particular, shows superior performance in predicting and forecasting especially in high turbulence and entropy conditions for the considered high volatility stock market periods.

Results of the experiments show the ability of deep learning models to capture cross-volatility dependencies using the whole market raw data, with no background knowledge of the distribution of values and the dependency across assets over time. Therefore, application of LSTM in this framework is beneficial, and, from the perspective of practitioners in Finance, it has the relevant advantage of being almost completely data driven and model-blind from a statistical point of view. Overall, it should be remarked that the degree of complexity of the relationships linking individual asset volatilities within a market is such to prevent the specification and estimation of feasible multivariate parametric models. In this perspective, deep learning models offer a highly valuable tool for making robust and accurate inference on complex phenomena such as volatility spillovers, contagion effects and volatility co-movements and, in general, for accurate risk and volatility forecasting.

Notes

The data are publicly available on the following Zenodo repository https://zenodo.org/record/2540818.

References

Acerbi C, Tasche D (2002) Expected shortfall: a natural coherent alternative to value at risk. Econ Notes 31(2):379–388 https://doi.org/10.1111/1468-0300.00091

Andersen TG, Bollerslev T, Diebold FX (2007) Roughing it up: Including jump components in the measurement, modeling, and forecasting of return volatility. Rev Econ Stat 89(4):701–720

Andersen TG, Teräsvirta T (2009) Realized volatility. In: Handbook of financial time series. Springer, pp 555–575

Barndorff-Nielsen OE, Hansen PR, Lunde A, Shephard N (2011) Multivariate realised kernels: consistent positive semi-definite estimators of the covariation of equity prices with noise and non-synchronous trading. J Econometr 162(2):149–169

Barndorff-Nielsen OE, Shephard N (2005) Variation, jumps, market frictions and high frequency data in financial econometrics. Nuffield College Economics Working Paper, vol 1, no 1, pp 1–5

Bauwens L, Laurent S, Rombouts JV (2006) Multivariate garch models: a survey. J Appl Economet 21(1):79–109

Bianchi FM, Maiorino E, Kampffmeyer MC, Rizzi A, Jenssen R (2017) Recurrent neural networks for short-term load forecasting: an overview and comparative analysis. Springer

Blume ME (1971) On the assessment of risk. J Financ 26(1):1–10

Bollerslev T (1986) Generalized autoregressive conditional heteroskedasticity. J Econometr 31:307–327

Chakraborty K, Mehrotra K, Mohan CK, Ranka S (1992) Forecasting the behavior of multivariate time series using neural networks. Neural Netw 5(6):961–970

Chen XB, Gao J, Li D, Silvapulle P (2018) Nonparametric estimation and forecasting for time-varying coefficient realized volatility models. J Business Econ Stat 36(1):88–100

Cho K, van Merrienboer B, Gulcehre C, Bahdanau D, Bougares F, Schwenk H, Bengio Y (2014) Learning phrase representations using rnn encoder-decoder for statistical machine translation

Diebold FX, Mariano RS (2002) Comparing predictive accuracy. J Business Econ Stat 20(1):134–144. https://doi.org/10.1198/073500102753410444

Dunis CL, Laws J, Sermpinis G (2010) Modelling commodity value at risk with higher order neural networks. Appl Finan Econ 20(7):585–600

Elman JL (1990) Finding structure in time. Cogn Sci 14(2):179–211

Engle R (2002) New frontiers for arch models. J Appl Econometr 17(5):425–446

Engle RF, Russell JR (1998) Autoregressive conditional duration: a new model for irregularly spaced transaction data. Econometrica 1127–1162

Fischer T, Krauss C (2018) Deep learning with long short-term memory networks for financial market predictions. Eur J Oper Res 270(2):654–669

Gerlach R, Wang C (2016) Forecasting risk via realized garch, incorporating the realized range. Quanti Finance 16(4):501–511

Gers FA, Eck D, Schmidhuber J (2002) Applying lstm to time series predictable through time-window approaches. In: Neural nets WIRN Vietri-01. Springer, pp 193–200

Gers FA, Schmidhuber J (2001) Long short-term memory learns context free and context sensitive languages. In: Artificial neural nets and genetic algorithms. Springer, pp 134–137

Gers FA, Schmidhuber J, Cummins F (1999) Learning to forget: Continual prediction with lstm. Neural Comput 850–855

Gers FA, Schraudolph NN, Schmidhuber J (2002) Learning precise timing with lstm recurrent networks. J Mach Learn Res 3(1):115–143

Glosten LR, Jagannathan R, Runkle DE (1993) On the relation between the expected value and the volatility of the nominal excess return on stocks. J Financ 48(5):1779–1801

Graves A (2013) Generating sequences with recurrent neural networks. arXiv preprint arXiv:1308.0850

Graves A, Jaitly N, Mohamed AR (2013) Hybrid speech recognition with deep bidirectional lstm. In: 2013 IEEE workshop on automatic speech recognition and understanding (ASRU). IEEE. pp 273–278. IEEE

Graves A, Wayne G, Danihelka I (2014) Neural turing machines

Hamilton J, Susmel R (1994) Autoregressive conditional heteroskedasticity and changes in regime. J Econometr 64(1):307–333. https://doi.org/10.1016/0304-4076(94)90067-1

Han H, Zhang S (2012) Non-stationary non-parametric volatility model. Economet J 15(2):204–225

Han S, Kang J, Mao H, Hu Y, Li X, Li Y, Xie D, Luo H, Yao S, Wang Y et al (2017) Ese: Efficient speech recognition engine with sparse lstm on fpga. In: Proceedings of the 2017 ACM/sigda international symposium on field-programmable gate arrays. ACMM, pp 75–84

Hansen PR, Huang Z, Shek HH (2012) Realized garch: a joint model for returns and realized measures of volatility. J Appl Economet 27(6):877–906

Hirose N, Tajima R (2017) Modeling of rolling friction by recurrent neural network using lstm. In: 2017 IEEE International conference on robotics and automation (ICRA). IEEE, pp. 471–6478

Hochreiter S, Schmidhuber J (1997) Long short-term memory. Neural Comput 9(8):1735–1780

Huang Z, Wang T, Hansen PR (2017) Option pricing with the realized garch model: an analytical approximation approach. J Futur Mark 37(4):328–358

Itakura F (1975) Minimum prediction residual principle applied to speech recognition. IEEE Trans Acoust Speech Signal Process 23(1):67–72

Jozefowicz R, Zaremba W, Sutskever I (2015) An empirical exploration of recurrent network architectures. In: Proceedings of the 32nd international conference on machine learning (ICML-15), pp 2342–2350

Kalchbrenner N, Danihelka I, Graves A (2015) Grid long short-term memory

Kim CJ, Kim MJ (1996) Transient fads and the crash of ’87. J Appl Economet 11(1):41–58. https://doi.org/10.1002/(SICI)1099-1255(199601)11:1<41::AID-JAE364>3.0.CO;2-R

Kourentzes N, Barrow DK, Crone SF (2014) Neural network ensemble operators for time series forecasting. Expert Syst Appl 41(9):4235–4244

Ladyzynski P, Zbikowski K, Grzegorzewski P (2013) Stock trading with random forests, trend detection tests and force index volume indicators. In: International conference on artificial intelligence and soft computing. Springer, pp 441–452

Langrock R, Michelot T, Sohn A, Kneib T (2015) Semiparametric stochastic volatility modelling using penalized splines. Comput Stat 30(2):517–537

Lee B, Baek J, Park S, Yoon S (2016) deeptarget: end-to-end learning framework for microrna target prediction using deep recurrent neural networks. In: Proceedings of the 7th ACM international conference on bioinformatics, computational biology, and health informatics. ACM, pp 434–442

Leifert G, Strauß T, Grüning T, Wustlich W, Labahn R (2016) Cells in multidimensional recurrent neural networks. J Mach Learn Res 17(1):3313–3349

Maciel L, Ballini R, Gomide F (2017) Evolving possibilistic fuzzy modeling for realized volatility forecasting with jumps. IEEE Trans Fuzzy Syst 25(2):302–314

McAleer M, Medeiros MC (2011) Forecasting realized volatility with linear and nonlinear univariate models. J Econ Surv 25(1):6–18

Nápoles G, Vanhoenshoven F, Falcon R, Vanhoof K (2020) Nonsynaptic error backpropagation in long-term cognitive networks. IEEE Trans Neural Netw Learn Syst 31(3):865–875

Pakel C, Shephard N, Sheppard K (2011) Nuisance parameters, composite likelihoods and a panel of garch models. Statistica Sinica, pp 307–329

Pascanu R, Mikolov T, Bengio Y (2013) On the difficulty of training recurrent neural networks. In: International conference on machine learning, pp 1310–1318

Patton AJ (2011) Volatility forecast comparison using imperfect volatility proxies. J Econometr 160(1):246–256

Poggio T, Mhaskar H, Rosasco L, Miranda B, Liao Q (2017) Why and when can deep-but not shallow-networks avoid the curse of dimensionality: a review. Int J Autom Comput 14(5):503–519

Quan Z, Zeng W, Li X, Liu Y, Yu Y, Yang W (2020) Recurrent neural networks with external addressable long-term and working memory for learning long-term dependences. IEEE Trans Neural Netw Learn Syst 31(3):813–826

Rivest F, Kohar R (2020) A new timing error cost function for binary time series prediction. IEEE Trans Neural Netw Learn Syst 31(1):174–185

Shi X, Chen Z, Wang H, Yeung DY, Wong Wk, Woo Wc (2015) Convolutional lstm network: a machine learning approach for precipitation nowcasting. In: Proceedings of the 28th international conference on neural information processing systems—volume 1, NIPS’15. MIT Press, Cambridge, MA, USA, pp 802–810

Sundermeyer M, Ney H, Schlüter R (2015) From feedforward to recurrent lstm neural networks for language modeling. IEEE Trans Audio Speech Lang Process 23(3):517–529

Sutskever I, Vinyals O, Le QV (2014) Sequence to sequence learning with neural networks. In: Advances in neural information processing systems, pp 3104–3112

Suwajanakorn S, Seitz SM, Kemelmacher-Shlizerman I (2017) Synthesizing obama: learning lip sync from audio. ACM Trans Graph (TOG) 36(4):95

Taylor JW (2019) Forecasting value at risk and expected shortfall using a semiparametric approach based on the asymmetric laplace distribution. J Business Econ Stat 37(1):121–133. https://doi.org/10.1080/07350015.2017.1281815

Tran NT, Luong VT, Nguyen NLT, Nghiem MQ (2016) Effective attention-based neural architectures for sentence compression with bidirectional long short-term memory. In: Proceedings of the seventh symposium on information and communication technology. ACM, pp 123–130

Troiano L, Villa E, Loia V (2018) Replicating a trading strategy by means of lstm for financial industry applications. IEEE Trans Industr Inf 14(7):3226–3234. https://doi.org/10.1109/TII.2018.2811377

Visser MP (2011) Garch parameter estimation using high-frequency data. J Financ Economet 9(1):162–197

Wan L, Zeiler M, Zhang S, Le Cun Y, Fergus R (2013) Regularization of neural networks using dropconnect. In: International conference on machine learning, pp 1058–1066

Wang C (2017) Rra: Recurrent residual attention for sequence learning. arXiv preprint arXiv:1709.03714

Wang C, Chen Q, Gerlach R (2018) Bayesian realized-garch models for financial tail risk forecasting incorporating the two-sided weibull distribution. Quant Finance 1–26

Wang C, Niepert M (2019) State-regularized recurrent neural networks. arXiv preprint arXiv:1901.08817

Wang L, Zeng Y, Chen T (2015) Back propagation neural network with adaptive differential evolution algorithm for time series forecasting. Expert Syst Appl 42(2):855–863

Xu K, Ba J, Kiros R, Cho K, Courville A, Salakhudinov R, Zemel R, Bengio Y (2015) Show, attend and tell: neural image caption generation with visual attention. In: International conference on machine learning, pp 2048–2057

Yao K, Peng B, Zhang Y, Yu D, Zweig G, Shi Y (2014) Spoken language understanding using long short-term memory neural networks. In: Spoken language technology workshop (SLT), 2014 IEEE. IEEE, pp 189–194

Yu Y, Si X, Hu C, Zhang J (2019) A review of recurrent neural networks: Lstm cells and network architectures. Neural Comput 31(7):1235–1270. https://doi.org/10.1162/neco_a_01199

Zaytar MA, El Amrani C (2016) Sequence to sequence weather forecasting with long short-term memory recurrent neural networks. Int J Comput Appl 143(11)

Zhang K, Teo KL (2015) A penalty-based method from reconstructing smooth local volatility surface from American options. J Ind Manag Optim 11:631–644

Funding

The authors did not receive support from any organization for the submitted work.

Author information

Authors and Affiliations

Contributions

AP contributed to software, investigation, validation and writing (original draft, and review and editing); LT contributed to methodology, validation and writing (review and editing); AS contributed to software and investigation; IJ performed supervision and writing (review and editing); GS performed formal analysis, validation and writing (original draft); RT performed conceptualization, methodology and writing (original draft, and review and editing); MR performed conceptualization, methodology and writing (original draft).

Corresponding author

Ethics declarations

Conflict of interest

The authors have no conflicts of interest to declare that are relevant to the content of this article.

Human and animal rights

The research did not involve human participants and/or animals.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Petrozziello, A., Troiano, L., Serra, A. et al. Deep learning for volatility forecasting in asset management. Soft Comput 26, 8553–8574 (2022). https://doi.org/10.1007/s00500-022-07161-1

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00500-022-07161-1