Abstract

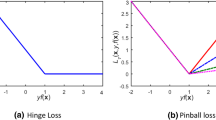

The K-nearest neighbor-weighted multi-class twin support vector machine (KWMTSVM) is an effective multi-classification algorithm that utilizes the local information of all training samples. However, because it uses the hinge loss function, it is easily affected by noise and outliers: an outlier incurs a large loss and becomes a support vector, which shifts the separating hyperplane inappropriately. To reduce the negative influence of outliers, we replace the hinge loss function in KWMTSVM with the ramp loss function and propose a novel sparse and robust multi-classification algorithm named the ramp loss K-nearest neighbor-weighted multi-class twin support vector machine (RKWMTSVM). Firstly, RKWMTSVM restricts the loss of an outlier to a fixed value, so the outlier's negative influence on the construction of the hyperplane is suppressed and the classification performance is improved. Secondly, since outliers do not become support vectors, RKWMTSVM is a sparser algorithm, particularly in comparison with KWMTSVM. Thirdly, because RKWMTSVM is a non-differentiable, non-convex optimization problem, we use the concave–convex procedure (CCCP) to solve it. In each iteration of CCCP, RKWMTSVM solves a series of KWMTSVM-like problems. Consequently, RKWMTSVM inherits the merits of KWMTSVM: it exploits intra-class local information to improve generalization ability, and it uses inter-class information to remove redundant constraints and accelerate the solution process. Finally, the clipping dual coordinate descent (clipDCD) algorithm is employed in RKWMTSVM to further speed up computation. Numerical experiments on twenty-four benchmark datasets verify the validity and effectiveness of our algorithm.
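To illustrate why a bounded loss limits the influence of outliers, the following minimal sketch compares the hinge loss with a ramp loss written as the difference of two convex hinge functions, which is the kind of decomposition CCCP relies on. The parameterization used here (cap parameter `s`, margin threshold 1, and the function names `hinge` and `ramp`) is a common textbook form and an assumption for illustration; the abstract does not state the exact formulation used in RKWMTSVM.

```python
def hinge(z, a=1.0):
    """Shifted hinge H_a(z) = max(0, a - z); convex in the margin z."""
    return max(0.0, a - z)


def ramp(z, s=-1.0):
    """Ramp loss as a difference of two convex hinges: H_1(z) - H_s(z).

    Assumed form for illustration: with s < 1 the loss is capped at 1 - s,
    so a point with a very negative margin contributes a bounded amount.
    """
    return hinge(z, 1.0) - hinge(z, s)


# Margins from well classified (2.0) to a gross outlier (-10.0).
margins = [2.0, 0.5, -1.0, -10.0]
hinge_losses = [hinge(z) for z in margins]
ramp_losses = [ramp(z) for z in margins]

for z, h, r in zip(margins, hinge_losses, ramp_losses):
    print(f"margin={z:6.1f}  hinge={h:5.1f}  ramp={r:5.1f}")
```

In this sketch the hinge loss of the outlier grows linearly with its distance from the margin, whereas the ramp loss stays at the cap `1 - s`. Because the ramp loss is a convex function minus a convex function, each CCCP iteration can linearize the subtracted term and solve a convex subproblem.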

Data availability

Enquiries about data availability should be directed to the authors.

Acknowledgements

The authors gratefully acknowledge the helpful comments and suggestions of the reviewers, which have improved the presentation.

Funding

This work was supported in part by the Fundamental Research Funds for the Central Universities (No. 2021ZY92, BLX201928), National Natural Science Foundation of China (No. 11671010) and Beijing Natural Science Foundation (No. 4172035).

Ethics declarations

Conflict of interest

Author Huiru Wang declares that she has no conflict of interest. Author Yitian Xu declares that he has no conflict of interest. Author Zhijian Zhou declares that she has no conflict of interest.

Ethical approval

This article does not contain any studies with human participants or animals performed by any of the authors.

Informed consent

Informed consent was obtained from all individual participants included in the study.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Wang, H., Xu, Y. & Zhou, Z. Ramp loss KNN-weighted multi-class twin support vector machine. Soft Comput 26, 6591–6618 (2022). https://doi.org/10.1007/s00500-022-07040-9

DOI: https://doi.org/10.1007/s00500-022-07040-9