Abstract

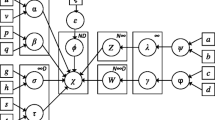

Data clustering is a fundamental unsupervised learning approach that impacts several domains such as data mining, computer vision, information retrieval, and pattern recognition. In this work, we develop a statistical framework for data clustering that uses Dirichlet processes and asymmetric Gaussian distributions. The parameters of this framework are learned using Markov chain Monte Carlo (MCMC) inference. We also integrate a feature selection technique that chooses the most informative features, so as to construct a model that is appropriate in terms of clustering accuracy. We report experimental results on dynamic texture clustering and scene categorization.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Ethical approval

This article does not contain any studies with human participants or animals performed by any of the authors.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix

Based on the hyperparameter settings chosen in Section 4, we derive the posteriors of all parameters. For the parameter \(\alpha \), the posterior depends only on the number of observations N and the number of components M, and not on how the observations are distributed among the mixture components:
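As a minimal sketch of such a posterior, assume the prior on \(\alpha \) that is common in infinite Gaussian mixture models, \(p(\alpha ) \propto \alpha ^{-3/2}\exp (-1/(2\alpha ))\); the prior actually set in Section 4 may differ. Under that assumption the posterior is

\[ p(\alpha \mid M, N) \propto \alpha ^{M-3/2}\, \exp \left( -\frac{1}{2\alpha }\right) \frac{\Gamma (\alpha )}{\Gamma (N+\alpha )}, \]

which indeed involves only M and N. The Python function below evaluates its logarithm up to an additive constant; the function name and the grid search are illustrative, not part of the paper.

```python
import math


def log_posterior_alpha(alpha: float, n_components: int, n_obs: int) -> float:
    """Unnormalized log p(alpha | M, N) under the ASSUMED prior
    p(alpha) proportional to alpha^(-3/2) * exp(-1 / (2 * alpha))."""
    if alpha <= 0:
        return float("-inf")  # alpha must be strictly positive
    return (
        (n_components - 1.5) * math.log(alpha)
        - 1.0 / (2.0 * alpha)
        + math.lgamma(alpha)          # log Gamma(alpha)
        - math.lgamma(n_obs + alpha)  # log Gamma(N + alpha)
    )


def grid_mode(n_components: int, n_obs: int) -> float:
    """Locate the approximate posterior mode on a coarse grid over (0, 10]."""
    grid = [0.1 * i for i in range(1, 101)]
    return max(grid, key=lambda a: log_posterior_alpha(a, n_components, n_obs))


# More occupied components for the same N favour a larger alpha.
mode_few = grid_mode(n_components=2, n_obs=200)
mode_many = grid_mode(n_components=20, n_obs=200)
```

Because the density is only known up to a constant, a sampler for \(\alpha \) would typically use it inside a Metropolis-Hastings or adaptive rejection step rather than draw from it directly.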

The complete posteriors for \(\mu \), \(\mu _{irr}\), \(\lambda \) and r are obtained as follows:

The complete posteriors for \(s_{ljk}\), \(s_{rjk}\), \(s_{jk}^{irr}\), \(\beta \) and w are obtained as follows:
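The roles of the left and right precisions can be seen in the asymmetric Gaussian density itself. The sketch below assumes the usual one-dimensional form with mode \(\mu \), left precision \(s_{l}\) and right precision \(s_{r}\),

\[ p(x \mid \mu , s_{l}, s_{r}) = \sqrt{\frac{2}{\pi }}\, \frac{1}{s_{l}^{-1/2} + s_{r}^{-1/2}} \begin{cases} \exp \left( -\frac{s_{l}(x-\mu )^{2}}{2}\right) , &amp; x &lt; \mu \\ \exp \left( -\frac{s_{r}(x-\mu )^{2}}{2}\right) , &amp; x \ge \mu \end{cases} \]

applied independently to each feature k of component j. The function name is hypothetical.

```python
import math


def asymmetric_gaussian_pdf(x: float, mu: float, s_left: float, s_right: float) -> float:
    """Density of a 1-D asymmetric Gaussian with mode mu and
    left/right precisions s_left, s_right (ASSUMED parameterization)."""
    if s_left <= 0 or s_right <= 0:
        raise ValueError("precisions must be positive")
    # The shared normalizer makes the two half-Gaussians integrate to 1 together.
    norm = math.sqrt(2.0 / math.pi) / (s_left ** -0.5 + s_right ** -0.5)
    precision = s_left if x < mu else s_right
    return norm * math.exp(-0.5 * precision * (x - mu) ** 2)


# With equal precisions the density reduces to an ordinary Gaussian.
symmetric_peak = asymmetric_gaussian_pdf(0.0, 0.0, 1.0, 1.0)

# A larger left precision gives a lighter left tail than right tail.
left_value = asymmetric_gaussian_pdf(0.0, 1.0, 4.0, 0.25)
right_value = asymmetric_gaussian_pdf(2.0, 1.0, 4.0, 0.25)
```

Using precisions rather than standard deviations keeps the parameterization aligned with the symbols \(s_{ljk}\) and \(s_{rjk}\) used in this appendix.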

\(N_{jk}^{re}\) and \(N_{jk}^{irr}\) are the numbers of observations allocated to mixture component j for which feature k is considered relevant and irrelevant, respectively.
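These counts can be tallied directly from the current state of the sampler. The sketch below assumes that the state holds one component label per observation and one binary relevance indicator per observation and feature; both data layouts and the function name are assumptions made for illustration.

```python
from typing import List, Sequence, Tuple


def relevance_counts(
    assignments: Sequence[int],
    relevant: Sequence[Sequence[int]],
    n_components: int,
) -> Tuple[List[List[int]], List[List[int]]]:
    """Return (N_re, N_irr), where N_re[j][k] counts observations in
    component j whose feature k is flagged relevant, and N_irr[j][k]
    counts those flagged irrelevant.

    assignments[i] is the component label of observation i (ASSUMED layout);
    relevant[i][k] is 1 if feature k of observation i is relevant, else 0.
    """
    n_features = len(relevant[0]) if relevant else 0
    n_re = [[0] * n_features for _ in range(n_components)]
    n_irr = [[0] * n_features for _ in range(n_components)]
    for j, flags in zip(assignments, relevant):
        for k, flag in enumerate(flags):
            if flag:
                n_re[j][k] += 1
            else:
                n_irr[j][k] += 1
    return n_re, n_irr


# Toy state: five observations, two components, two features.
assignments = [0, 0, 1, 1, 1]
relevant = [[1, 0], [1, 1], [0, 1], [1, 1], [0, 0]]
n_re, n_irr = relevance_counts(assignments, relevant, n_components=2)
```

For every component j and feature k, the two counts sum to the number of observations allocated to component j, which is a convenient consistency check between sampling sweeps.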

The complete posterior for the feature saliency \(\phi \), with gamma parameters a and b and with \(n_{jk}\) the number of times feature k is relevant for component j, can then be expressed as:

Cite this article

Song, Z., Ali, S. & Bouguila, N. Bayesian inference for infinite asymmetric Gaussian mixture with feature selection. Soft Comput 25, 6043–6053 (2021). https://doi.org/10.1007/s00500-021-05598-4

DOI: https://doi.org/10.1007/s00500-021-05598-4