Abstract

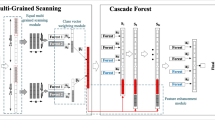

Recent research has shown that deep forest ensembles achieve a considerable increase in classification accuracy over general ensemble learning methods, especially when the training set is small. In this paper, we build on the deep forest ensemble and introduce the dense adaptive cascade forest (daForest). Our model outperforms the original cascade forest through three major features. First, we apply the SAMME.R boosting algorithm, which guarantees improvement as the number of layers increases. Second, our model connects each layer to the subsequent ones in a feed-forward fashion, which enhances its ability to resist performance degeneration. Third, we add a hyper-parameter optimization layer before the first classification layer, so the model takes less time to set up and to find the optimal hyper-parameters. Experimental results show that daForest performs well and, in some cases, even outperforms neural networks and achieves state-of-the-art results.
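To make the first two features more concrete, the following is a minimal sketch and not the authors' implementation. It assumes that "dense" wiring means each layer receives the raw features concatenated with the class-probability vectors of all earlier layers, and it uses the standard SAMME.R sample-weight update from the multi-class AdaBoost literature; how daForest applies that update between layers is not specified in the abstract. The function names `dense_layer_input` and `samme_r_reweight` are hypothetical.

```python
import math


def dense_layer_input(raw_features, previous_outputs):
    """Build the input of the next layer under the assumed dense wiring:
    raw features followed by the class-probability vector of every
    earlier layer, in order."""
    augmented = list(raw_features)
    for layer_probs in previous_outputs:
        augmented.extend(layer_probs)
    return augmented


def samme_r_reweight(weights, probas, labels, n_classes, eps=1e-12):
    """Apply one standard SAMME.R sample-weight update and renormalize.

    weights: current sample weights
    probas:  per-sample class-probability estimates from the current learner
    labels:  true class index of each sample
    """
    k = n_classes
    updated = []
    for w, p, y in zip(weights, probas, labels):
        # Labels are coded as 1 for the true class and -1/(K-1) otherwise.
        inner = 0.0
        for c in range(k):
            code = 1.0 if c == y else -1.0 / (k - 1)
            # Clip probabilities away from zero before taking the log.
            inner += code * math.log(max(p[c], eps))
        updated.append(w * math.exp(-(k - 1) / k * inner))
    total = sum(updated)
    return [w / total for w in updated]


# Hypothetical toy values: two raw features, two earlier layers, two classes.
next_input = dense_layer_input([5.1, 3.5], [[0.7, 0.3], [0.8, 0.2]])

# Sample 0 is classified correctly with confidence 0.9, sample 1 is not.
new_weights = samme_r_reweight(
    weights=[0.5, 0.5],
    probas=[[0.9, 0.1], [0.1, 0.9]],
    labels=[0, 0],
    n_classes=2,
)
```

In this toy run the misclassified sample ends up with the larger weight, which is the mechanism by which a boosted layer focuses on the examples that earlier learners got wrong.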

Acknowledgement

This research was sponsored by the National Key R&D Plan of China (Nos. 2017YFB1400300 and 2017YFB1400303).

Ethics declarations

Conflict of interest

The authors declare that there is no conflict of interest regarding the publication of this paper.

Ethical approval

This article does not contain any studies with human participants or animals performed by any of the authors.

Additional information

Communicated by V. Loia.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Cite this article

Wang, H., Tang, Y., Jia, Z. et al. Dense adaptive cascade forest: a self-adaptive deep ensemble for classification problems. Soft Comput 24, 2955–2968 (2020). https://doi.org/10.1007/s00500-019-04073-5

DOI: https://doi.org/10.1007/s00500-019-04073-5