Abstract

The paper addresses the problem of static state feedback linearization for nonlinear control systems defined on homogeneous time scales. Necessary and sufficient conditions for generic local linearizability of the considered systems by static state feedback and state transformation are presented in terms of a sequence of subspaces of differential one-forms related to the system.

1 Introduction

There is an ongoing need to make the knowledge base of control theory more compact so that the field can continue to grow. Integrating existing results by providing a more abstract structure is, therefore, always necessary, even more so in the fragmented world of today. Time scale calculus is a general framework that allows unification of the study of continuous- and discrete-time systems [8]. The term “time scale” refers to the time domain on which a dynamic system evolves. Most engineering applications assume time to be either continuous or (uniformly) discrete; the time scale formalism merges both into a single framework, from which they follow as special cases. The main concept of time scale calculus, the delta derivative, generalizes both the time derivative and the difference operator. Though time scale calculus accommodates more possibilities, the most important special cases for control theory are continuous- and (uniformly) discrete-time systems, which are examples of systems defined on homogeneous time scales.

The description of the dynamics that relies on the difference operator is often called the delta-domain description; see, for instance, [13, 17, 18, 22, 30, 37, 39]. The delta-domain approach has been promoted as an effective tool for dynamic system modeling and control. Compared to models based on the shift operator, delta-domain models are less sensitive to round-off errors and do not become ill-conditioned when signals are sampled at a high sampling rate, see [23, 31, 33]. In [1], the authors demonstrate that the numerical properties of structure detection are improved by a delta-domain model and that such models are closely linked to the continuous-time systems. The delta-domain approach makes it possible to address the question of preservation of system properties under the Euler discretization scheme, since it treats the discretized system as a special case of a system defined on a time scale.
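
The conditioning remark can be illustrated on a scalar example. The sketch below is not taken from [23, 31, 33]; it assumes the hypothetical test system dx/dt = -a x sampled exactly with period h, and the function names are ours. As h decreases, the shift-operator coefficient exp(-a h) approaches 1 for every a, whereas the delta-domain coefficient (exp(-a h) - 1)/h approaches the continuous-time value -a.

```python
import math

def shift_coefficient(a: float, h: float) -> float:
    """Coefficient q of the shift model x(t + h) = q * x(t) for dx/dt = -a x."""
    return math.exp(-a * h)

def delta_coefficient(a: float, h: float) -> float:
    """Coefficient d of the delta model (x(t + h) - x(t)) / h = d * x(t)."""
    # expm1(y) evaluates exp(y) - 1 without cancellation for small y
    return math.expm1(-a * h) / h

# Tabulate both coefficients for two hypothetical decay rates
table = [(a, h, shift_coefficient(a, h), delta_coefficient(a, h))
         for a in (1.0, 2.0) for h in (1e-1, 1e-3, 1e-6)]
for row in table:
    print(row)
```

For small h the two shift coefficients become nearly indistinguishable, while the delta coefficients remain close to -1 and -2, respectively.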

Though the literature on time scale calculus is rich (see the references in [3, 6, 9, 16, 19, 20, 32, 34, 38]), there are not many papers addressing control problems for nonlinear systems defined on time scales [7, 12, 28]. In the earlier papers [4, 5], an algebraic formalism was developed that makes it possible to address various analysis and control problems for nonlinear systems defined on homogeneous time scales. This approach has been successfully applied to address irreducibility and reduction [28] and the realization of input–output equations in state-space form [12].

The theories of continuous- and discrete-time dynamical systems as presented in the literature are different, but analysis on time scales is nowadays recognized as the right tool to unify the seemingly separate fields of discrete dynamical systems (i.e., difference equations) and continuous dynamical systems (i.e., differential equations), and also to present both continuous- and discrete-time theories in the same language. The goal of this paper is to study the iconic problem of static state feedback linearization for nonlinear systems defined on homogeneous time scales, using the state transformation method based on one-forms. The presented approach covers the continuous- and discrete-time cases as special cases of the formalism. Since the delta derivative (used in our paper to describe the dynamical systems) coincides with the time derivative in the continuous-time case, the earlier results for continuous-time systems, see for instance [15, 25], follow from our results as a special case, namely the case in which the time scale is the set of real numbers. Note, however, that our results do not recover those given in [2, 21, 26, 29] for discrete-time systems defined in terms of the shift operator, since the latter is not a delta derivative. Instead, our formalism includes the description of a discrete-time system based on the difference operator (delta-domain approach), for which the results shown in the paper are new. Using the difference operator in the discrete case makes it possible to unify the theories of static state feedback linearization for continuous- and discrete-time nonlinear control systems.

Static state feedback linearization has been a much-studied research topic since the 1980s, for both the continuous- and discrete-time cases; see [24, 26, 27] and the references therein. Many different techniques, expressed in different mathematical languages (including differential geometry and algebra), have been used in linearization studies, which makes their comparison a difficult task. The paper [35] establishes the explicit relationship between the two sets of necessary and sufficient solvability conditions, given in terms of integrability of certain vector spaces of differential one-forms and involutivity of distributions of vector fields. In particular, it has been demonstrated that the distributions, when they are involutive, are the maximal annihilators of the corresponding codistributions (vector spaces of one-forms). Moreover, in [35], the two methods have been compared from the point of view of computational complexity, and it was shown that, as regards the computation of the state transformation, the method based on one-forms is simpler. For this reason, we decided to rely on this method in the unification. Finally, note that the results of [11] are claimed there to be dual to those of [2], though this is not shown in detail.

The paper is organized as follows. Section 2 recalls the notions from time scale calculus and the algebraic formalism used in the paper, while Sect. 3 presents the properties of the sequences of subspaces of differential one-forms in terms of which our main results are formulated. The basic results given in Sect. 3, together with the facts presented in Sect. 4, contain the key contribution of the paper. Section 4 gives the main results, i.e., the conditions for generic local static state feedback linearization of control systems defined on homogeneous time scales. Finally, some illustrative examples of our results are presented.

2 Time scale calculus and algebraic formalism

For a general introduction to the calculus on time scales, see [8]. Here, we recall only those notions and facts that will be used later.

A time scale \({\mathbb {T}}\) is a nonempty closed subset of \({\mathbb {R}}\). We assume that the topology of \({\mathbb {T}}\) is induced by \({\mathbb {R}}\). The forward jump operator \(\sigma :{\mathbb {T}}\rightarrow {\mathbb {T}}\) is defined as \(\sigma (t):=\inf \{ s\in {\mathbb {T}}\mid \ s>t\}\), with \(\sigma (\max {\mathbb {T}})=\max {\mathbb {T}}\) if there exists a finite \(\max {\mathbb {T}}\). The backward jump operator \(\rho :{\mathbb {T}}\rightarrow {\mathbb {T}}\) is defined as \(\rho (t):=\sup \{ s\in {\mathbb {T}}\mid \ s<t\}\), with \(\rho (\min {\mathbb {T}})=\min {\mathbb {T}}\) if there exists a finite \(\min {\mathbb {T}}\). The graininess functions \(\mu : {\mathbb {T}}\rightarrow [0,\infty )\) and \(\nu :{\mathbb {T}}\rightarrow [0,\infty )\) are defined by \(\mu (t):=\sigma (t)-t\) and \(\nu (t):=t-\rho (t)\), respectively. A time scale is called homogeneous if \(\mu \) and \(\nu \) are constant functions. Homogeneous time scales are either of the form \(t_0+\mu {\mathbb {Z}}\), where \(t_0\in {\mathbb {R}}\) and \(\mu >0\), or are closed intervals, bounded or unbounded, including in particular \({\mathbb {R}}\).

From now on, we assume that \({\mathbb {T}}\) is a homogeneous time scale.

Remark 1

In [5], we called a time scale homogeneous if only \(\mu \) is a constant function. The reason is that when one only needs the forward shift and delta derivative, it is not necessary to have constant \(\nu \). However, if we also need the backward shift and nabla derivative, we have to assume additionally that \(\nu \) is constant, since constancy of \(\mu \) does not imply it. For example, in the set of natural numbers \(\mu \equiv 1\), but \(\nu (1)=0\) whereas \(\nu (k)=1\) for \(k\ge 2\); so \(\nu \) is not constant.
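
The jump operators and graininess functions can be evaluated directly on a finite set of isolated points. The following minimal sketch (the helper names are ours, and the approach does not cover time scales containing intervals) reproduces the example of the natural numbers on the window {1, ..., 10}; the right end of the window is artificial, so it is excluded when evaluating the forward graininess.

```python
def sigma(T, t):
    """Forward jump: inf{s in T | s > t}, with sigma(max T) = max T."""
    later = [s for s in T if s > t]
    return min(later) if later else t

def rho(T, t):
    """Backward jump: sup{s in T | s < t}, with rho(min T) = min T."""
    earlier = [s for s in T if s < t]
    return max(earlier) if earlier else t

def mu(T, t):
    """Forward graininess mu(t) = sigma(t) - t."""
    return sigma(T, t) - t

def nu(T, t):
    """Backward graininess nu(t) = t - rho(t)."""
    return t - rho(T, t)

naturals = list(range(1, 11))                        # finite window of N
mu_values = [mu(naturals, t) for t in naturals[:-1]]  # skip the artificial maximum
nu_values = [nu(naturals, t) for t in naturals]
print(mu_values)
print(nu_values)
```

The forward graininess is constant on the window, while the backward graininess vanishes at the minimal point and equals one elsewhere.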

Let us now recall the definition of the delta derivative \(f^{\varDelta }\) of a real valued function f.

Definition 1

The delta derivative of a function \(f:{\mathbb {T}}\rightarrow {\mathbb {R}}\) at \(t \in {\mathbb {T}}\) is the real number \(f^{\varDelta }(t)\) (provided it exists) such that for each \(\varepsilon >0\) there exists a neighborhood \(U(\varepsilon )\) of t, \(U(\varepsilon )\subset {\mathbb {T}}\) such that for all \(\tau \in U(\varepsilon )\), \(|(f(\sigma (t))-f(\tau ))-f^{\varDelta }(t)(\sigma (t)-\tau )| \leqslant \varepsilon |\sigma (t)-\tau |\). Moreover, we say that f is delta differentiable on \({\mathbb {T}}\) provided \(f^{\varDelta }(t)\) exists for all \(t\in {\mathbb {T}}\).

Remark 2

The delta derivative is a natural extension of time derivative in the continuous-time case and forward difference operator in the discrete-time case. Therefore, for \({\mathbb {T}}={\mathbb {R}}\), \(f^{\varDelta }(t)=\lim \limits _{s \rightarrow t}\frac{f(t)-f(s)}{t-s}=f'(t)\) and for \({\mathbb {T}}={\mathbb {Z}}\), \(f^{\varDelta }(t)=\frac{f(\sigma (t))-f(t)}{\mu (t)}=f(t+1)-f(t)=:\triangle f(t)\), where \(\triangle \) is the usual forward difference operator.
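
For \({\mathbb {T}}=h{\mathbb {Z}}\) with \(h>0\), the same formula gives the forward difference quotient with step h. The sketch below (the function name and the test function \(f(t)=t^2\) are our assumptions) uses exact rational arithmetic to show that the delta derivative of \(t^2\) is \(2t+h\), which tends to the ordinary derivative as h decreases.

```python
from fractions import Fraction

def delta_derivative(f, t, h):
    """Delta derivative on T = hZ, h > 0: (f(sigma(t)) - f(t)) / mu(t)."""
    return (f(t + h) - f(t)) / h

def square(t):
    """Hypothetical test function f(t) = t^2."""
    return t * t

h = Fraction(1, 4)
value = delta_derivative(square, Fraction(3), h)
print(value)  # equals 2*3 + h
```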

For a function \(f: {\mathbb {T}}\rightarrow {\mathbb {R}}\), we can define the second delta derivative \(f^{[2]}:=(f^{\varDelta })^{\varDelta }\) provided that \(f^{\varDelta }\) is delta differentiable with derivative \(f^{[2]}: {\mathbb {T}} \rightarrow {\mathbb {R}}\). Similarly, we define higher-order delta derivatives \(f^{[n]}: {\mathbb {T}} \rightarrow {\mathbb {R}}\), \(f^{[n]}:=(f^{[n-1]})^{\varDelta }\). Note that for a homogeneous time scale, there is no left-scattered maximal point in \({\mathbb {T}}\), so \(f^{[n]}\), \(n\geqslant 1\), are uniquely defined for all \(t\in {\mathbb {T}}\).

For \(f:{\mathbb {T}} \rightarrow {\mathbb {R}}\), define \(f^\sigma :=f \circ \sigma \). Denote \(f^{\varDelta \sigma }:=(f^\varDelta )^\sigma \) and \(f^{\sigma \varDelta }:=(f^\sigma )^\varDelta \).

If f and \(f^\varDelta \) are delta differentiable functions, then for a homogeneous time scale one has \(f^{\sigma \varDelta }=f^{\varDelta \sigma }\).
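
For \({\mathbb {T}}=h{\mathbb {Z}}\), \(h>0\), where \(\sigma (t)=t+h\), this identity can be checked directly. The following short computation is a sketch for that special case only:

\[
\begin{aligned}
f^{\sigma \varDelta }(t)&=\frac{f^{\sigma }(t+h)-f^{\sigma }(t)}{h}=\frac{f(t+2h)-f(t+h)}{h},\\
f^{\varDelta \sigma }(t)&=f^{\varDelta }(t+h)=\frac{f(t+2h)-f(t+h)}{h}.
\end{aligned}
\]

For \({\mathbb {T}}={\mathbb {R}}\) one has \(\sigma ={\mathrm {id}}\), so both sides reduce to \(f'\).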

Now, we recall some definitions and facts given in [4, 5] that will be used in the paper.

2.1 Differential field and space of one-forms

Consider now the control system, defined on a homogeneous time scale \({\mathbb {T}}\),

\[x^{\varDelta }(t)=f(x(t),u(t)), \tag{1}\]

where \((x(t),u(t)) \in {\mathcal {X}}\times {\mathcal {U}}\), \({\mathcal {X}}\times {\mathcal {U}}\) is an open subset of \({\mathbb {R}}^n\times {\mathbb {R}}^m\), \(m\leqslant n\), and \(f:{\mathcal {X}}\times {\mathcal {U}}\rightarrow {\mathbb {R}}^n\) is analytic. We assume that the input applied to system (1) is infinitely many times delta differentiable, i.e., \(u^{[k]}\) exists for all \(k\geqslant 0\). Let us define \(\widetilde{f}(x,u):=x+\mu f(x,u)\) and assume that there exists a map \(\varphi : {\mathcal {X}}\times {\mathcal {U}}\rightarrow {\mathbb {R}}^m\) such that \(\varPhi =(\widetilde{f},\varphi )^T\) is an analytic diffeomorphism (see Footnote 1) from the set \({\mathcal {X}}\times {\mathcal {U}}\) onto \(\varPhi ({\mathcal {X}}\times {\mathcal {U}})\). This means that from \((\bar{x},z)=(\widetilde{f}(x,u),\varphi (x,u))=\varPhi (x,u)\), we can uniquely compute (x, u) as an analytic function of \((\bar{x},z)\). For \(\mu =0\), this condition is always satisfied with \(\varphi (x,u)=u\).

Note that for \({\mathbb {T}}={\mathbb {R}}\), Eq. (1) becomes an ordinary differential equation, and for \({\mathbb {T}}=h{\mathbb {Z}}\) with \(h>0\), it becomes a difference equation. Both \({\mathbb {T}}={\mathbb {R}}\) and \({\mathbb {T}}=h{\mathbb {Z}}\) are homogeneous time scales.
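
On \({\mathbb {T}}=h{\mathbb {Z}}\) the delta derivative is \((x(t+h)-x(t))/h\), so the system is iterated as \(x(t+h)=x(t)+h f(x(t),u(t))\), which is exactly the map \(\widetilde{f}\) introduced above. A minimal simulation sketch follows; the dynamics \(f(x,u)=-x+u\), the step and the input sequence are hypothetical choices made only for illustration.

```python
from fractions import Fraction

def simulate(f, x0, inputs, h):
    """Iterate x(t + h) = x(t) + h * f(x(t), u(t)) on T = hZ."""
    trajectory = [x0]
    for u in inputs:
        x = trajectory[-1]
        trajectory.append(x + h * f(x, u))
    return trajectory

def f_example(x, u):
    """Hypothetical scalar dynamics f(x, u) = -x + u."""
    return -x + u

trajectory = simulate(f_example, Fraction(1), [0, 0, 0], Fraction(1, 2))
print(trajectory)
```

With zero input and h = 1/2 the state is halved at every step.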

For notational convenience, \((x_1,\ldots ,x_n)\) will simply be written as x, and \((u_1^{[k]},\ldots ,u_m^{[k]})\) as \(u^{[k]}\), for \(k\geqslant 0\). For \(i \leqslant k\), let \(u^{[i..k]}:=(u^{[i]},\ldots ,u^{[k]})\). Consider the infinite set of real (independent) indeterminates

\[{\mathcal {C}}=\{x_i,\ i=1,\ldots ,n;\ u_j^{[k]},\ j=1,\ldots ,m,\ k\geqslant 0\}\]

and let \({\mathcal {K}}\) be the (commutative) field of meromorphic functions in a finite number of the variables from the set \({\mathcal {C}}\). Let \(\sigma _f : {\mathcal {K}} \rightarrow {\mathcal {K}}\) be an operator defined by

where \(F\in {\mathcal {K}}\), \(\sigma _f(u^{[0..k]}) := u^{[0..k]}+\mu u^{[1..k+1]}\), for \(k\geqslant 0\) and by (1) \(\sigma _f(x):= x+\mu x^\varDelta =x+\mu f(x,u)\). Under the assumption about the existence of \(\varphi \) such that \(\varPhi \) is an analytic diffeomorphism, \(\sigma _f\) is an injective endomorphism.

The field \({\mathcal {K}}\) can be equipped with an operator \(\varDelta _f: {\mathcal {K}} \rightarrow {\mathcal {K}}\) defined by

Remark 3

Note that in both the continuous-time and discrete-time cases, the operator \(\varDelta _f\) corresponds to the total delta derivative of a function with respect to the dynamics of the system, i.e., for a control \(u(\cdot )\) applied to system (1), for the solution \(x(\cdot )\) of (1) corresponding to the control u, and for \(F\in {\mathcal {K}}\), we get

On the right-hand side of (4), the operator \(\varDelta _f\) is applied to the function F, which depends on indeterminates from the set \({\mathcal {C}}\); substituting \(x(\cdot )\) and \(u^{[l]}(\cdot )\) for x and \(u^{[l]}\), respectively, yields relation (4), in which time appears explicitly.

The operator \(\varDelta _f\) satisfies, for all \(F,G \in {\mathcal {K}}\), the conditions

(i) \(\varDelta _f(F + G)= \varDelta _f(F) +\varDelta _f(G)\),

(ii) \(\varDelta _f (FG) = \varDelta _f(F) G+ \sigma _f(F)\varDelta _f(G)\) (generalized Leibniz rule).
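
The generalized Leibniz rule can be checked numerically for \(\mu \ne 0\). The sketch below is an illustration under stated assumptions, not the construction of [4, 5]: elements of \({\mathcal {K}}\) are modeled as Python functions of x and a tuple \((u,u^{[1]},u^{[2]},\ldots )\), the dynamics \(f(x,u)=xu\) and the value \(\mu =1/2\) are hypothetical, and \(\varDelta _f\) is obtained from the relation \(\sigma _f(F)=F+\mu \varDelta _f(F)\).

```python
from fractions import Fraction

MU = Fraction(1, 2)  # assumed graininess of T = MU * Z

def f(x, u):
    """Hypothetical scalar dynamics x^Delta = f(x, u)."""
    return x * u

def sigma_f(F):
    """Forward shift: x -> x + MU*f(x, u), u^[k] -> u^[k] + MU*u^[k+1]."""
    def shifted(x, us):
        new_us = tuple(us[k] + MU * us[k + 1] for k in range(len(us) - 1))
        return F(x + MU * f(x, us[0]), new_us)
    return shifted

def delta_f(F):
    """Delta derivative for MU != 0, from sigma_f(F) = F + MU * delta_f(F)."""
    shifted = sigma_f(F)
    return lambda x, us: (shifted(x, us) - F(x, us)) / MU

# Two sample elements; us = (u, u^[1], u^[2], ...)
F = lambda x, us: x * x + us[0]
G = lambda x, us: x * us[1]
FG = lambda x, us: F(x, us) * G(x, us)

point = (Fraction(2), (Fraction(1), Fraction(3), Fraction(5), Fraction(7)))
lhs = delta_f(FG)(*point)
rhs = delta_f(F)(*point) * G(*point) + sigma_f(F)(*point) * delta_f(G)(*point)
print(lhs == rhs)
```

Exact rational arithmetic is used so that both sides can be compared with equality rather than with a tolerance.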

An operator satisfying the generalized Leibniz rule is called a “\(\sigma _f\)-derivation” and a commutative field endowed with a \(\sigma _f\)-derivation is called a \(\sigma _f\)-differential field [14]. Therefore, under the assumption about the existence of \(\varphi \) such that \(\varPhi \) is an analytic diffeomorphism, \({\mathcal {K}}\) endowed with the delta derivative \(\varDelta _f\) is a \(\sigma _f\)-differential field. The \(\sigma _f\)-differential field \({\mathcal {K}}\) is called inversive, if every element of \({\mathcal {K}}\) has a pre-image in \({\mathcal {K}}\) with respect to \(\sigma _f\), i.e., \(\sigma _f^{-1}(F)\) is defined for all \(F\in {\mathcal {K}}\), see [14]. For \(\mu =0\), \(\sigma _f=\sigma _f^{-1}={\mathrm {id}}\) and \({\mathcal {K}}\) is inversive. Though \({\mathcal {K}}\) is not inversive in general, it is always possible to embed \({\mathcal {K}}\) into an inversive \(\sigma _f\)-differential overfield \({\mathcal {K}}^*\), called the inversive closure of \({\mathcal {K}}\) [14]. Since \(\sigma _f\) is an injective endomorphism, it can be extended to \({\mathcal {K}}^*\) such that \(\sigma _f : {\mathcal {K}}^* \rightarrow {\mathcal {K}}^*\) is an automorphism. For \(\mu \ne 0\), the overfield \({\mathcal {K}}^*\) consists of meromorphic functions in a finite number of the independent variables \( {\mathcal {C}}^*={\mathcal {C}}\cup \{z_s^{\langle -\ell \rangle }, \ s=1,\ldots ,m, \ \ell \geqslant 1\}\), where \(z_i^{\langle -k\rangle }=\sigma _f(z_i^{\langle -k-1 \rangle })\) for \(k\geqslant 1\), and \(z_i=\varphi _i(x,u)=\sigma _f(z_i^{\langle -1 \rangle })\), see [4, 5]. Let \(z:=(z_1,\ldots ,z_m)\). Then, \(\sigma _f^{-1} ( x , u )= \psi (x, z^{\langle -1\rangle })\), where \(\psi \) is a certain vector valued function, determined by f in (1) and the extension \(z=\varphi (x,u)\). Although the choice of variables z is not unique, all possible choices yield isomorphic field extensions. 
We extend the operator \(\varDelta _f\) to new variables by

The extension of the operator \(\varDelta _f\) to \({\mathcal {K}}^*\) can be made in analogy to (3). The operator \(\varDelta _f\) so extended is a \(\sigma _f\)-derivation of \({\mathcal {K}}^*\). A practical procedure for the construction of \({\mathcal {K}}^*\) (for \(\mu \ne 0\)) is given in [5]; the resulting inversive \(\sigma _f\)-differential overfield \({\mathcal {K}}^*\) is unique up to an isomorphism.

Note that \({\mathcal {K}}^*={\mathcal {K}}\) for \(\mu =0\).
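
As an illustration of the backward shift (a hypothetical example chosen here, not one from [4, 5]), consider the scalar system \(x^{\varDelta }=u\) with \(\mu \ne 0\) and take \(\varphi (x,u)=u\), so that \(\varPhi (x,u)=(x+\mu u,u)\) is an analytic diffeomorphism and \(z=u\). Under these assumptions, the pre-images of the original variables are

\[
\sigma _f^{-1}(u)=z^{\langle -1\rangle },\qquad \sigma _f^{-1}(x)=x-\mu z^{\langle -1\rangle },
\]

since \(\sigma _f(z^{\langle -1\rangle })=z=u\) and \(\sigma _f(x-\mu z^{\langle -1\rangle })=x+\mu u-\mu u=x\). In this case \(\psi (x,z^{\langle -1\rangle })=(x-\mu z^{\langle -1\rangle },z^{\langle -1\rangle })\).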

We assume that for system (1) the assumption of independence of controls

holds (see Footnote 2). We will consider regular state feedbacks, which are defined as follows:

Definition 2

A regular static state feedback is an analytic mapping \(\phi : {\mathcal {X}}\times {\mathcal {V}} \rightarrow {\mathcal {U}}\),

satisfying \(\mathrm{rank}\, \frac{\partial \phi }{\partial v}(x,v)=m\) for all \((x,v)\in {\mathcal {X}}\times {\mathcal {V}}\) and \(\phi ({\mathcal {X}}\times {\mathcal {V}})={\mathcal {U}}\), where \({\mathcal {V}}\subset {\mathbb {R}}^m\).

Remark 4

The regular static state feedback defined above need not be globally invertible, but the condition \(\mathrm{rank}\, \frac{\partial \phi }{\partial v}=m\) guarantees local invertibility with respect to v for each fixed point \(x\in {\mathcal {X}}\). Moreover, the map \(\vartheta : ({\mathcal {X}},{\mathcal {V}})\ni (x,v)\mapsto (x,\phi (x,v)) \in ({\mathcal {X}},{\mathcal {U}})\) is a local analytic diffeomorphism.

From now on

Consider the infinite set of differentials of indeterminates \(\mathrm{d}{\mathcal {C}}^*=\{\mathrm{d}\zeta _i,\ \zeta _i\in {\mathcal {C}}^*\}\). Then, \(\mathrm{d} x_i\), \(i=1,\ldots ,n\), \(\mathrm{d}u_j^{[k]}\), \(j=1,\ldots , m\), \(k\geqslant 0\), can be treated as differentials of coordinate functions which select \(x_i\) or \(u_j^{[k]}\) from the set of all variables, respectively. Define \({\mathcal {E}}:=\mathrm{span}_{{\mathcal {K}}^*}\mathrm{d}{\mathcal {C}}^*\). Any element of \({\mathcal {E}}\) is a vector of the form

where only a finite number of coefficients \(B_{jk}\) and \(C_{s\ell }\) are nonzero elements of \({\mathcal {K}}^*\). Since every \(F\in {\mathcal {K}}^*\) is a meromorphic function in a finite number of variables from the set \({\mathcal {C}}^*\), an operator \(\mathrm{d}:{\mathcal {K}}^* \rightarrow {\mathcal {E}}\) can be defined in the standard manner:

The elements of \({\mathcal {E}}\) are called differential one-forms. One says that \(\omega \in {\mathcal {E}}\) is an exact one-form if \(\omega =\mathrm{d}F\) for some \(F\in {\mathcal {K}}^{*}\). We will refer to \(\mathrm{d}F\) as the total differential (or simply the differential) of F.

If \(\omega = \sum \limits _{i} A_i {\mathrm {d}} \zeta _i \) is a one-form, where \(A_i\in {\mathcal {K}}^*\) and \(\zeta _i\in {\mathcal {C}}^*\), one can define the operators \(\varDelta _f : {\mathcal {E}} \rightarrow {\mathcal {E}}\) and \(\sigma _f : {\mathcal {E}} \rightarrow {\mathcal {E}}\) by

and

The operator \(\sigma _f:{\mathcal {E}}\rightarrow {\mathcal {E}}\) is invertible and the inverse operator \(\sigma _f^{-1}:{\mathcal {E}}\rightarrow {\mathcal {E}}\) is given by

for \(A_i\in {\mathcal {K}}^*\) and \(\zeta _i\in {\mathcal {C}}^*\).

Since \(\sigma _f(A_i)=A_i +\mu \varDelta _f(A_i) \) (see [5]),

For the homogeneous time scale \({\mathbb {T}}\), we have for \(F\in {\mathcal {K}}^{*}\)

Note that the following relation between operators \(\varDelta _f\) and \(\sigma _f\) holds

where \(\mathrm{id}\) denotes the identity operator.

We will sometimes use the more compact notations \(F^{\varDelta _f}\), \(\omega ^{\varDelta _f}\), \(F^{\sigma _f}\) and \(\omega ^{\sigma _f}\) instead of \(\varDelta _f(F)\), \(\varDelta _f(\omega )\), \(\sigma _f(F)\) and \(\sigma _f(\omega )\).

2.2 Vector fields and p-forms

Let \({\mathcal {E}}'\) be the dual vector space of \({\mathcal {E}}\), i.e., the space of linear mappings from \({\mathcal {E}}\) to \({\mathcal {K}}^*\). The elements of \({\mathcal {E}}'\) are of the form

where \(a_i,b_{jk},c_{s\ell } \in {\mathcal {K}}^*\) and are called vector fields. Taking \(\omega =\sum _{i=1}^n A_i\mathrm{d}x_i+\sum _{k=0}^p \sum _{j=1}^m B_{jk}\mathrm{d}u_j^{[k]} +\sum _{\ell =1}^q \sum _{s=1}^m C_{s\ell }\mathrm{d}z_s^{\langle -\ell \rangle }\in {\mathcal {E}}\) and the vector field \(X\in {\mathcal {E}}'\) of the form (9), we get

The delta-derivative \(X^{\varDelta _f}\) and the forward-shift \(X^{\sigma _f}\) of \(X\in {\mathcal {E}}'\) may be defined uniquely by the equations

and

respectively, where \(\omega \) is an arbitrary one-form. Note that \(\langle X, \sigma _f^{-1}(\omega ) \rangle \in {\mathcal {K}}^*\), so \(\langle X, \sigma _f^{-1}(\omega ) \rangle ^{\sigma _f}\) and \(\langle X, \sigma _f^{-1}(\omega ) \rangle ^{\varDelta _f}\) are well defined.

Proposition 1

[4] Let \(X\in {\mathcal {E}}'\). Then for arbitrary \(\omega \in {\mathcal {E}}\)

Let \({\mathcal {E}}^{p}\) be the set of differential p-forms, see [4]. Every p-form \(\alpha \in {\mathcal {E}}^p\) has a unique representative of the form

where \(A_{i_1\cdots i_p} \in {\mathcal {K}}^*\).

The exterior product (alternatively called the wedge product) of a p-form \(\omega _1= \sum _{i_1<\cdots <i_p} F_{i_1\cdots i_p}\mathrm{d}\zeta _{i_1}\wedge \cdots \wedge \mathrm{d}\zeta _{i_p}\) and a q-form \(\omega _2=\sum _{j_1<\cdots <j_q} G_{j_1\cdots j_q}\mathrm{d}\zeta _{j_1}\wedge \cdots \wedge \mathrm{d}\zeta _{j_q}\), denoted by \(\omega _1\wedge \omega _2\), is the \((p+q)\)-form defined in the following way

where \(F_{i_1\cdots i_p}, G_{j_1\cdots j_q}\in {\mathcal {K}}^*\) and \(\zeta _{i_l},\zeta _{j_s}\in {\mathcal {C}}^*\), \(l=1,\ldots ,p\), \(s=1,\ldots ,q\), see [4].

Let \({\mathbb {E}}\) be the space of all forms, i.e., \({\mathbb {E}}=\biguplus _{p\geqslant 0} {\mathcal {E}}^p,\) where \({\mathcal {E}}^0:={\mathcal {K}}^*\), \({\mathcal {E}}^1:={\mathcal {E}}\).

Exterior differential \(\mathrm{d}\) is an operator \(\mathrm{d}: {\mathbb {E}} \rightarrow {\mathbb {E}}\) that satisfies the following properties:

(i) \(\mathrm{d}({\mathcal {E}}^p)\subset {\mathcal {E}}^{p+1}\) and \(\mathrm{d}: {\mathcal {E}}^p\rightarrow {\mathcal {E}}^{p+1}\) is an \({\mathbb {R}}\)-linear operator,

(ii) \(\mathrm{d}(\alpha \wedge \beta )= \mathrm{d}\alpha \wedge \beta +(-1)^s \alpha \wedge \mathrm{d}\beta \), where \(\alpha \in {\mathcal {E}}^s\) and \(\beta \in {\mathcal {E}}^{p-s}\),

(iii) if \(F\in {\mathcal {K}}^*\), then \(\mathrm{d}F\) coincides with the ordinary differential, see (7),

(iv) \(\mathrm{d}^2 =0\), where \(\mathrm{d}^2=\mathrm{d}\circ \mathrm{d}:{\mathbb {E}}\rightarrow {\mathbb {E}}\).

Properties (i)–(iv) uniquely define the operator \(\mathrm{d}\).

The operators \(\varDelta _f : {\mathcal {K}}^* \rightarrow {\mathcal {K}}^*\) and \(\sigma _f: {\mathcal {K}}^* \rightarrow {\mathcal {K}}^*\), related to system (1), induce the operators \(\varDelta _f : {\mathcal {E}}^p \rightarrow {\mathcal {E}}^p\) and \(\sigma _f : {\mathcal {E}}^p \rightarrow {\mathcal {E}}^p \) by

and

where \(\zeta _{i_1},\ldots , \zeta _{i_p} \in {\mathcal {C}}^*\) and \(A_{i_1\cdots i_p}\in {\mathcal {K}}^*\).

Proposition 2

[4] Let \(\omega \in {\mathcal {E}}^p,\) \(p\geqslant 1\). Then, for a homogeneous time scale \({\mathbb {T}}{:}\)

Now let us consider an operator \(i_X : {\mathbb {E}} \rightarrow {\mathbb {E}}\) associated with a vector field \(X\in {\mathcal {E}}'\) that satisfies the following properties:

(i) \(i_X ({\mathcal {E}}^{p+1})\subset {\mathcal {E}}^{p}\), for \(p\geqslant 0\), and \(i_X: {\mathcal {E}}^{p+1}\rightarrow {\mathcal {E}}^{p}\) is a \({\mathcal {K}}^{*}\)-linear operator,

(ii) \(i_X (\omega _1 \wedge \omega _2)= i_X (\omega _1) \wedge \omega _2+(-1)^p \omega _1 \wedge i_X(\omega _2)\), where p is the degree of \(\omega _1\),

(iii) \(i_X (F)=0\), for all \(F\in {\mathcal {K}}^*\),

(iv) \(i_X (\mathrm{d}x_i)=X_i\), where \(X_i\) denotes the ith component of X.

The operator \(i_X\) is called the interior product with respect to X. Note that \(i_X (\mathrm{d}\zeta _i)=\langle X,\mathrm{d}\zeta _i\rangle \) so that for a differential 2-form \(\theta =\sum \limits _{i,j} a_{ij} \mathrm{d} \zeta _i\wedge \mathrm{d}\zeta _j\) we have

3 Sequence of subspaces of one-forms

In this section, we introduce the sequence of subspaces of \({\mathcal {E}}\) being the main tool for characterizing solvability/solution of numerous problems in control theory, for example accessibility (irreducibility), realization and feedback linearization.

Introduce, in analogy with [15], the sequence of subspaces \(({\mathcal {H}}_k)\) of \({\mathcal {E}}\) defined by

\[{\mathcal {H}}_0 := \mathrm{span}_{{\mathcal {K}}^*} \{\mathrm{d}x,\mathrm{d}u\}, \qquad {\mathcal {H}}_{k} := \{\omega \in {\mathcal {H}}_{k-1} \mid \omega ^{\varDelta _f}\in {\mathcal {H}}_{k-1}\}, \quad k\geqslant 1. \tag{15}\]

Then, \( {\mathcal {H}}_1 = \mathrm{span}_{{\mathcal {K}}^*} \{\mathrm{d}x\}\). Note that \(\dim _{{\mathcal {K}}^*} {\mathcal {H}}_0 = n+m\) and \(\dim _{{\mathcal {K}}^*} {\mathcal {H}}_1= n\).

Let \(\varDelta _f^k:= \underbrace{\varDelta _f \circ \varDelta _f \circ \cdots \circ \varDelta _f}_{k\text {-times}}\). The relative degree of a one-form \(\omega \in {\mathcal {H}}_0\) is defined as:

If such integer does not exist, set \(r=\infty \). The relative degree of a meromorphic function A(x, u) is defined to be the relative degree of the one-form \(\mathrm{d}A (x,u)\).

The following proposition is a simple consequence of the construction of \({\mathcal {H}}_k\).

Proposition 3

(i) For \(k\geqslant 0,{\mathcal {H}}_k\) is the space of one-forms that have relative degree greater than or equal to k.

(ii) There exists an integer \(0< k^* \leqslant n\) such that, for \(0\leqslant k \leqslant k^*,\) \({\mathcal {H}}_{k+1} \varsubsetneq {\mathcal {H}}_k\) and \({\mathcal {H}}_{k^*+1}={\mathcal {H}}_{\ell },\) \(\ell \geqslant k^*+1.\)

Proof

Point (i) is a simple consequence of definition (15). Concerning (ii), the existence of the integer \(k^*\) comes from the fact that each \({\mathcal {H}}_k\) is a finite-dimensional \({\mathcal {K}}^*\)-vector space so that, at each step, either its dimension decreases or \({\mathcal {H}}_{k+1}={\mathcal {H}}_k\). Moreover, \(k^*\leqslant n=\dim _{{\mathcal {K}}^*} {\mathcal {H}}_1\).
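
For intuition, the dimensions of the subspaces can be computed explicitly in the linear special case \(x^{\varDelta }=Ax+Bu\) with constant matrices; this specialization and the function names below are our assumptions, made only for illustration. There, a one-form \(c\,\mathrm{d}x\) with constant row c belongs to \({\mathcal {H}}_k\), \(k\geqslant 2\), exactly when \(cB=cAB=\cdots =cA^{k-2}B=0\), so \(\dim {\mathcal {H}}_k=n-\mathrm{rank}\,[B,AB,\ldots ,A^{k-2}B]\). A sketch with exact rational arithmetic:

```python
from fractions import Fraction

def mat_mul(A, B):
    """Product of two matrices given as lists of rows."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))] for i in range(len(A))]

def rank(M):
    """Rank by exact Gaussian elimination over the rationals."""
    M = [[Fraction(v) for v in row] for row in M]
    r = 0
    for c in range(len(M[0])):
        pivot = next((i for i in range(r, len(M)) if M[i][c] != 0), None)
        if pivot is None:
            continue
        M[r], M[pivot] = M[pivot], M[r]
        for i in range(r + 1, len(M)):
            factor = M[i][c] / M[r][c]
            M[i] = [a - factor * b for a, b in zip(M[i], M[r])]
        r += 1
        if r == len(M):
            break
    return r

def h_dimensions(A, B, k_max):
    """Return [dim H_0, ..., dim H_{k_max}] (k_max >= 1) for x^Delta = A x + B u."""
    n, m = len(A), len(B[0])
    dims = [n + m, n]                 # dim H_0 and dim H_1
    ctrb, block = None, B             # ctrb collects the columns [B, AB, ...]
    for _ in range(2, k_max + 1):
        ctrb = block if ctrb is None else [r1 + r2 for r1, r2 in zip(ctrb, block)]
        dims.append(n - rank(ctrb))
        block = mat_mul(A, block)
    return dims

# Hypothetical system: a two-step chain plus one state not influenced by u
A = [[0, 1, 0], [0, 0, 0], [0, 0, 1]]
B = [[0], [1], [0]]
dims = h_dimensions(A, B, 4)
print(dims)
```

In this example the dimensions stop decreasing at one, so the limit subspace is nonzero, reflecting the state that the input cannot influence.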

Define \({\mathcal {H}}_{\infty }:={\mathcal {H}}_{k^*+1}\). Then from the construction of \({\mathcal {H}}_k\), we get

Proposition 4

\({\mathcal {H}}_{\infty }\) is the largest subspace of \({\mathcal {H}}_1\) that is invariant under the \(\sigma _f\)-derivation \(\varDelta _f\).

Now, we will show that the coordinate transformation yields an inversive closure isomorphic to the original one. Consequently, we have some correspondence between the constructed subspaces of one-forms.

Let \(\hat{x}=\xi (x)\), \(\xi =(\xi _1,\ldots , \xi _n)\) and \(\xi :{\mathcal {X}} \rightarrow \widehat{{\mathcal {X}}}\subset {\mathbb {R}}^n\) be an analytic diffeomorphism. Then,

where \(t\in {\mathbb {T}}\) and \(\hat{f}(\hat{x},u):=\left. \xi ^{\varDelta _f}(x,u)\right| _{x=\xi ^{-1}(\hat{x})}\).

Note that x and \(z^{\langle -\ell \rangle }\), \(\ell \geqslant 1\), belong to some open subsets of \({\mathbb {R}}^n\) and \({\mathbb {R}}^m\), respectively. Let \(\widehat{{\mathcal {K}}}^*\) be the field of functions in variables from the set \(\widehat{{\mathcal {C}}}:=\{\hat{x}, u^{[k]},z^{\langle -\ell \rangle } \mid \ k\geqslant 0, \ell \geqslant 1\}\), where \(z=\varphi (x,u)=\varphi (\xi ^{-1}(\hat{x}),u)=:\hat{\varphi }(\hat{x},u)\) and \(\hat{x}\in \xi (U)\). Moreover, \(\widehat{{\mathcal {K}}}^*\) is equipped with operators \(\widehat{\varDelta }_{\hat{f}}\) and \(\widehat{\sigma }_{\hat{f}}\) defined in a similar manner as operators \(\varDelta _f\) and \(\sigma _f\) (see definitions (3) and (2), respectively). Observe that if \({\mathcal {U}}={\mathbb {R}}^n\), then \({\mathcal {K}}^*=\widehat{{\mathcal {K}}}^*\). It is easy to see that the relation similar to (8) holds also for the operators \(\widehat{\varDelta }_{\hat{f}}\) and \(\widehat{\sigma }_{\hat{f}}\), i.e.,

Let \(\widehat{{\mathcal {E}}}:=\mathrm{span}_{\widehat{{\mathcal {K}}}^*} \{\mathrm{d}\hat{x}, \mathrm{d}u^{[k]}, \mathrm{d}z^{\langle -\ell \rangle },\ k\geqslant 0, \ell \geqslant 1\}\). Then, \(\xi \) induces the isomorphisms \(\xi ^*: \widehat{{\mathcal {K}}}^* \rightarrow {\mathcal {K}}^*\) and \(\xi ^* :\widehat{{\mathcal {E}}} \rightarrow {\mathcal {E}}\) by

and

respectively, where \(\hat{x}_i=\xi _i(x)\), \(i=1,\ldots ,n\).

Remark 5

Note that if \(\mu =0\), then the variables \(z_s^{\langle -\ell \rangle }\), \(s=1,\ldots ,m\), \(\ell \geqslant 1\) do not appear and \(\sigma _f=\widehat{\sigma }_{\hat{f}}=\mathrm{id}\).

If \(\widehat{G}\in \widehat{{\mathcal {K}}}^*\) and \(\widehat{G}\) depends only on \(u^{[0..k]},{z}^{\langle -1\rangle },\ldots ,{z}^{\langle -\ell \rangle }\) and does not depend on x, then \(\xi ^*(\widehat{G})=\widehat{G}\).

In general, we have

and

Moreover,

and for \(\ell \geqslant 2\)

Therefore, we get

Note that \(u^{[k]}\), \(k\geqslant 0\) and \(z^{\langle -\ell \rangle }\), \(\ell \geqslant 1\) are the same for \({\mathcal {K}}^*\) and \(\widehat{{\mathcal {K}}}^*\). Consequently, \(\widehat{\sigma }_{\hat{f}}(z^{\langle -\ell \rangle })=z^{\langle -\ell +1\rangle }={\sigma }_f(z^{\langle -\ell \rangle })\).

Proposition 5

For \(\widehat{A}\in \widehat{{\mathcal {K}}}^*\)

and

Proof

Let \(\widehat{A}\in \widehat{{\mathcal {K}}}^*\). Then, \(\xi ^*(\widehat{A})\in {\mathcal {K}}^*\). Since, by (3), for \(\mu \ne 0\)

and for \(\mu =0\)

we get

Using the relations (8), (16) and (19), one gets

Hence the proposition holds.

Proposition 6

Let \(\widehat{\omega }\in \widehat{{\mathcal {E}}}\). Then,

The proof is given in the Appendix.

In \(\widehat{{\mathcal {E}}}\), we have the following sequence of subspaces \((\widehat{{\mathcal {H}}}_k)\):

Proposition 7

For subspaces \({\mathcal {H}}_k,\widehat{{\mathcal {H}}}_k\) defined by (15) and (22), respectively, we have, for \(k\geqslant 0\)

Proof

The proof is by induction. The diffeomorphism \(\xi \) induces the isomorphism \(\xi ^* : \widehat{{\mathcal {E}}} \rightarrow {\mathcal {E}}\), and for arbitrary \(\widehat{\omega }_x=\sum _{i=1}^{n} \widehat{A}_i \mathrm{d}\hat{x}_i\in \widehat{{\mathcal {H}}}_1\subset \widehat{{\mathcal {H}}}_0\) and \(\widehat{\omega }_u=\sum _{k\geqslant 0}\sum _{j=1}^{m} \widehat{B}_{jk} \mathrm{d}\hat{u}_j^{[k]}\in \widehat{{\mathcal {H}}}_0\) we have, by (18),

and \(\xi ^*(\widehat{\omega }_x)= \sum \limits _{i=1}^{n}\sum \limits _{j=1}^{n} \xi ^*(\widehat{A}_i)\frac{\partial \xi _i}{\partial x_j} \mathrm{d}x_j\in {\mathcal {H}}_1\,\), so (23) holds for \(k=0,1\). Assume that (23) is true for \(k=n\), and let \(\widehat{\omega }\in \widehat{{\mathcal {H}}}_{n+1}\). From definition (15), we get \(\widehat{\omega }\in \widehat{{\mathcal {H}}}_{n} \ \text {and} \ \widehat{\omega }^{\widehat{\varDelta }_{\hat{f}}}\in \widehat{{\mathcal {H}}}_{n}\). Since \(\xi ^*(\widehat{{\mathcal {H}}}_n)={\mathcal {H}}_n\),

By (21), we get

which is equivalent to \(\xi ^*(\widehat{\omega })\in {\mathcal {H}}_{n+1}\). Hence, by induction, (23) holds for all \(k\geqslant 0\).

Remark 6

The dimension of the subspace \({\mathcal {H}}_k\), \(k\geqslant 0\), is invariant under diffeomorphism \(\xi \), i.e., \(\dim _{{\mathcal {K}}^*} {\mathcal {H}}_k =\dim _{{\mathcal {K}}^*} \widehat{{\mathcal {H}}}_k\), for \({\mathcal {H}}_k=\xi ^*(\widehat{{\mathcal {H}}}_k)\).

Let \(u=\phi (x,v)\) be a static state feedback such that the map \(\vartheta : ({\mathcal {X}},{\mathcal {V}})\ni (x,v)\mapsto (x,\phi (x,v))\in ({\mathcal {X}},{\mathcal {U}})\) is an analytic diffeomorphism. Then, the field \(\widetilde{{\mathcal {K}}}^*\) of meromorphic functions in a finite number of variables from the set \(\widetilde{{\mathcal {C}}}:=\{x, v^{[k]},z^{\langle -\ell \rangle } \mid \ k\geqslant 0, \ell \geqslant 1\}\), where \(z=\varphi (x,\phi (x,v))\), and the space \(\widetilde{{\mathcal {E}}}\) can be constructed similarly to \(\widehat{{\mathcal {K}}}^*\) and \(\widehat{{\mathcal {E}}}\), respectively. Then, \(\vartheta \) induces the isomorphisms \(\vartheta ^*: \widetilde{{\mathcal {K}}}^*\rightarrow {{\mathcal {K}}}^*\) and \(\vartheta ^*: \widetilde{{\mathcal {E}}}\rightarrow {{\mathcal {E}}}\) by formulas similar to (17) and (18). Moreover, the sequence \((\widetilde{{\mathcal {H}}}_k)\) can be defined by analogy with (22). The subspaces \({\mathcal {H}}_k\) and \(\widetilde{{\mathcal {H}}}_k\), for \(k\geqslant 0\), are isomorphic, as in Proposition 7. In particular,

Since \(\vartheta \) is a diffeomorphism, one gets

Note that the relative degrees of the one-forms are invariant under regular static state feedback. This fact is well known for continuous-time systems, see Proposition 8.8 in [36], and carries over easily to systems defined on homogeneous time scales. Then, the following property characterizes the subspaces \({\mathcal {H}}_k\):

Corollary 1

Let \(k\geqslant 0\). The subspaces \({\mathcal {H}}_k\) and \(\widetilde{{\mathcal {H}}}_k\) are isomorphic, i.e., \(\mathrm{dim}\, {\mathcal {H}}_k=\mathrm{dim}\, \widetilde{{\mathcal {H}}}_k,\) for \({\mathcal {H}}_k=\vartheta ^*(\widetilde{{\mathcal {H}}}_k)\). Moreover, for \(k\geqslant 1\) the subspaces \({\mathcal {H}}_k\) are invariant under regular static state feedback, so \({\mathcal {H}}_k=\widetilde{{\mathcal {H}}}_k\). Feedback invariance comes from the fact that the relative degrees are invariant under regular static state feedback and from the definition of the subspaces \({\mathcal {H}}_k\) and \(\widetilde{{\mathcal {H}}}_k,\) \(k\geqslant 1\).

Theorem 1

Suppose \({\mathcal {H}}_{\infty }=\{0\}.\) Then, there exists a list of integers \(r_1,\ldots ,r_m\) and one-forms \(\omega _1,\ldots ,\omega _m\in {\mathcal {H}}_1\) whose relative degrees are, respectively, \(r_1,\ldots ,r_m\) such that

(i) \(\mathrm{span}_{{\mathcal {K}}^*} \{\omega _i^{\varDelta _f^j}, \ 1\leqslant i \leqslant m, 0\leqslant j \leqslant r_i-k \} = {\mathcal {H}}_k,\) \(k\geqslant 0,\) in particular

\(\mathrm{span}_{{\mathcal {K}}^*} \{\omega _i^{\varDelta _f^j}, \ 1\leqslant i \leqslant m, 0\leqslant j \leqslant r_i-1 \} = \mathrm{span}_{{\mathcal {K}}^*} \{\mathrm{d}x\}={\mathcal {H}}_1,\)

\(\mathrm{span}_{{\mathcal {K}}^*} \{\omega _i^{\varDelta _f^j}, \ 1\leqslant i \leqslant m, 0\leqslant j \leqslant r_i \} = \mathrm{span}_{{\mathcal {K}}^*} \{\mathrm{d}x,\mathrm{d}u\}={\mathcal {H}}_0,\)

(ii) the one-forms \(\{\omega _i^{\varDelta _f^j}, \ 1\leqslant i \leqslant m, j \geqslant 0\}\) are linearly independent over the field \({\mathcal {K}}^*;\) in particular \(\sum _{i=1}^{m} r_i =n.\)

Proof

Let \({\mathcal {W}}_{k^*}=\{\eta _1,\ldots ,\eta _{r_{k^*}}\}\) be a basis for \({\mathcal {H}}_{k^*}\) (see Footnote 3). Then by definition (15), the elements of \({\mathcal {W}}_{k^*}\) and \({\mathcal {W}}_{k^*}^{\varDelta _f}=\{\eta _1^{\varDelta _f},\ldots ,\eta _{r_{k^*}}^{\varDelta _f}\}\) belong to \({\mathcal {H}}_{k^*-1}\). We prove by contradiction that the one-forms in \({\mathcal {W}}_{k^*}\cup {\mathcal {W}}_{k^*}^{\varDelta _f}\) are linearly independent. Suppose that \({\mathcal {W}}_{k^*}\cup {\mathcal {W}}_{k^*}^{\varDelta _f}\) is linearly dependent. Then, there exist coefficients \(a_i,b_i\), \(1\leqslant i\leqslant r_{k^*}\), not all zero, such that \(\sum _{i}(a_i\eta _i + b_i\eta _i^{\varDelta _f})=0\). The linear independence of the \(\eta _i\)’s implies that not all the \(b_i\)’s vanish. Denote \(\sigma _f^{-1}(b_i)\) by \(b_i^{\rho _f}\) and consider the nonzero one-form \(\omega =\sum _{i} b_i^{\rho _f} \eta _i\in {\mathcal {H}}_{k^*}\) whose delta derivative is \(\omega ^{\varDelta _f}=\sum _{i} ((b_i^{\rho _f})^{\varDelta _f} \eta _i+b_i\eta _i^{\varDelta _f})=\sum _{i} (b_i^{\rho _f})^{\varDelta _f} \eta _i-\sum _{i} a_i \eta _i\). The right-hand side belongs to \({\mathcal {H}}_{k^*}\), hence \(\omega \in {\mathcal {H}}_{k^*+1}={\mathcal {H}}_{\infty }\), which contradicts the assumption \({\mathcal {H}}_{\infty }=\{0\}.\) Therefore, \({\mathcal {W}}_{k^*}\cup {\mathcal {W}}_{k^*}^{\varDelta _f}\) is linearly independent. Hence it is always possible to choose a set (possibly empty) \({\mathcal {W}}_{k^*-1}\) such that \({\mathcal {W}}_{k^*} \cup {\mathcal {W}}_{k^*}^{\varDelta _f} \cup {\mathcal {W}}_{k^*-1}\) is a basis for \({\mathcal {H}}_{k^*-1}\). Repeating this procedure \(k^*-1\) times, we obtain

It can be proved by induction that, for \(0\leqslant k\leqslant k^*\), the set

is linearly independent. \({\mathcal {H}}_1=\mathrm{span}_{{\mathcal {K}}^*} \{\mathrm{d}x\}\) and assumption (5) imply \({\mathcal {W}}_0=\emptyset \). Note that \({\mathcal {H}}_0={\mathcal {H}}_1\oplus \mathrm{span}_{{\mathcal {K}}^*}\{\mathrm{d}u\}\). By (24), we get

Since \(\dim \mathrm{span}_{{\mathcal {K}}^*}\{\mathrm{d}u\}=m\), there exist \(\omega _1,\ldots ,\omega _m\) such that

\(r_i\) is the relative degree of the one-form \(\omega _i\) for \(i=1,\ldots ,m\), and

Consequently, \({\mathcal {H}}_1=\mathrm{span}_{{\mathcal {K}}^*}\{\omega _i,\ldots ,\omega _i^{\varDelta _f^{r_i-1}},i=1,\ldots ,m\}\, \) and by \(\dim {\mathcal {H}}_1=n\), we get \(\sum \limits _{i=1}^{m} r_i=n\).

Corollary 2

Suppose \({\mathcal {H}}_\infty =\{0\}\). Then, there exists a basis \(\{\omega _{i,j}, \ 1\leqslant i\leqslant m, 1\leqslant j\leqslant r_i\}\) of \({\mathcal {H}}_1\) such that

where \(a_{s,j}^i, b_j^i\in {\mathcal {K}}^*\) and \([b_j^i]\) has the inverse in the ring of \(m\times m\) matrices with entries in \({\mathcal {K}}^*\).

Proof

For \(1\leqslant i\leqslant m\) and \(1\leqslant j\leqslant r_i\), we take \(\omega _{i,j}=\omega _i^{\varDelta _f^{j-1}}\).

Proposition 8

For \(1\leqslant k\leqslant k^*+1,\) there exist \(n_k\) one-forms \(\alpha _1,\ldots ,\alpha _{n_k}\) that depend on variables \(\{x,u^{[i]} \mid \ i\geqslant 0\}\) for \(k=1\) and variables \(\{x,u^{[i]},z^{\langle -j\rangle } \mid \ i\geqslant 0, 1\leqslant j\leqslant k-1\}\) for \(k\geqslant 2\) and constitute a basis for \({\mathcal {H}}_k\).

Proof

The proof is by induction. Proposition 8 is evidently true for \(k=1\) and \(\dim {\mathcal {H}}_1=n_1=n\). Suppose it is true for some integer \(k\geqslant 1\). Let \(\{\eta _1,\ldots ,\eta _{n_k},\vartheta _1,\ldots ,\vartheta _{n_{k-1}-n_k}\}\) and \(\{\eta _1,\ldots ,\eta _{n_k}\}\) be, respectively, bases of \({\mathcal {H}}_{k-1}\) and \({\mathcal {H}}_k\). An arbitrary element \(\alpha =\sum \limits _{i} a_i\eta _i \in {\mathcal {H}}_k\) belongs to \({\mathcal {H}}_{k+1}\) if and only if \(\alpha ^{\varDelta _f}=\sum \limits _{i} \left( a_i^{\varDelta _f}\eta _i + a_i^{\sigma _f} \eta _i^{\varDelta _f}\right) \in {\mathcal {H}}_k\). Since \(\eta _i\in {\mathcal {H}}_k\), it follows that \(\eta _i,\eta _i^{\varDelta _f}\in {\mathcal {H}}_{k-1}\). Hence

Thus, \(\alpha \in {\mathcal {H}}_{k+1}\) if and only if the coefficients \(a_i^{\sigma _f}\) satisfy the following system of linear equations:

System (26) has \(n_k - \mathrm{rank}_{{\mathcal {K}}^*} [c_{i\ell }]=:s\) linearly independent solutions and \(s=\dim _{{\mathcal {K}}^*} {\mathcal {H}}_{k+1} \). Observe also that \(s=n_{k+1}\) by the definition of \({\mathcal {H}}_{k+1}\).

Note that, by the induction assumption, the coefficients \(a_i^{\sigma _f}\) may be chosen to depend only on the variables \(\{x, u^{[i]}, z^{\langle -j\rangle }\mid j\leqslant k-1, i\geqslant 0\}\). Since \(a_i=\sigma _f^{-1}(a_i^{\sigma _f})\), each \(a_i\) depends only on the variables \(\{x, u^{[i]}, z^{\langle -j\rangle }\mid j\leqslant k, i\geqslant 0\}\).
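The dimension count in this proof can be illustrated numerically. The Python sketch below is only an illustration under stated assumptions: it takes system (26) to have the form \(\sum _i a_i^{\sigma _f} c_{i\ell }=0\) for every \(\ell \) (the form used in Example 2), and it evaluates the coefficients \(c_{i\ell }\) at a single sample point, so the rank is computed over the rationals rather than over \({\mathcal {K}}^*\). The helper names `rank` and `solves` are hypothetical, and the numbers are those of Example 2, where \((c_{11},c_{21})^\mathrm{T}=(x_2^2,x_2)^\mathrm{T}\).

```python
from fractions import Fraction


def rank(matrix):
    """Rank of a matrix (list of rows) over the rationals, by Gaussian elimination."""
    m = [[Fraction(v) for v in row] for row in matrix]
    r = 0
    for c in range(len(m[0])):
        pivot = next((i for i in range(r, len(m)) if m[i][c] != 0), None)
        if pivot is None:
            continue
        m[r], m[pivot] = m[pivot], m[r]
        for i in range(len(m)):
            if i != r and m[i][c] != 0:
                factor = m[i][c] / m[r][c]
                m[i] = [a - factor * b for a, b in zip(m[i], m[r])]
        r += 1
    return r


def solves(a_sigma, c):
    """True if sum_i a_sigma[i] * c[i][l] == 0 for every column index l."""
    return all(
        sum(a * row[l] for a, row in zip(a_sigma, c)) == 0
        for l in range(len(c[0]))
    )


x2 = Fraction(3)                 # arbitrary sample value with x2 != 0
c = [[x2 ** 2], [x2]]            # coefficients of vartheta in eta_1^Delta, eta_2^Delta
n_k = len(c)                     # here n_k = dim H_1 = 2
s = n_k - rank(c)                # expected dimension of H_2 at this sample point
candidate = [Fraction(-1), x2]   # (a_1^sigma, a_2^sigma) proposed in Example 2
```

At this sample point the count gives a one-dimensional solution space, and `solves(candidate, c)` confirms that the proposed coefficients annihilate the \(\vartheta \)-component.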

The proof of Proposition 8 provides a procedure to compute bases of the subspaces \({\mathcal {H}}_k\), \(k\geqslant 2\). Let \(\omega _{i,j}\), \(1\leqslant i\leqslant m\), \(1\leqslant j\leqslant r_i\) be a basis of \({\mathcal {H}}_1\) satisfying (25). Observe that there exists an integer number M such that one-forms \(\omega _{i,j}\) depend on \((x,u,u^{[1]},\ldots ,u^{[\iota ]},z^{\langle -1\rangle },\ldots ,z^{\langle -\kappa \rangle })\in {\mathcal {X}}\times {\mathcal {U}}\times {\mathbb {R}}^{\iota +\kappa }\subset {\mathbb {R}}^M\). Let \({\mathcal {S}}\) be an open and dense subset of \({\mathcal {X}}\times {\mathcal {U}}\times {\mathbb {R}}^{\iota +\kappa }\) such that the forms \(\omega _i^{\varDelta _f^j}\), \(1\leqslant i \leqslant k^*\), \(0\leqslant j\leqslant r_i-1\) evaluated at \((x,u,u^{[1]},\ldots ,u^{[\iota ]},z^{\langle -1\rangle },\ldots ,z^{\langle -\kappa \rangle })\in {\mathcal {S}}\) are linearly independent over \({\mathbb {R}}\).

Let us recall that a codistribution on \(V\subset {\mathbb {R}}^M\) is a map \(H: V\ni p \mapsto H_p\), where \(H_p\) is a linear subspace of \(T_p^{*}{\mathbb {R}}^M\). The codistribution H is locally integrable if for each point \(p\in V\) there exist some neighbourhood \({\mathcal {V}}\) of p and exact one-forms defined on \({\mathcal {V}}\) such that for every \(q\in {\mathcal {V}}\) the one-forms evaluated at q form the basis of \(H_q\). If \(p\mapsto \mathrm{dim}H_p\) is constant and H is generated by one-forms \(\omega _1,\ldots ,\omega _r\) (i.e., at each \(p\in V\), \(H_p\) is spanned by \(\omega _1(p),\ldots ,\omega _r(p)\)), then local integrability of H is equivalent to the Frobenius condition, i.e., \(\mathrm{d}\omega _k \wedge \omega _1 \wedge \cdots \wedge \omega _{r} =0\), for \( 1\le k\le r\), see for instance [10].
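As a small illustration of the Frobenius condition for \(r=1\), consider the two one-forms below. They are chosen here only as examples; the first one reappears in Example 2, the second is a standard non-integrable form on \({\mathbb {R}}^3\).

```latex
\begin{aligned}
\omega &= -x_2\,\mathrm{d}x_1 + x_1\,\mathrm{d}x_2: &
\mathrm{d}\omega &= 2\,\mathrm{d}x_1\wedge \mathrm{d}x_2, &
\mathrm{d}\omega \wedge \omega &= 0,\\
\omega &= \mathrm{d}x_1 + x_2\,\mathrm{d}x_3: &
\mathrm{d}\omega &= \mathrm{d}x_2\wedge \mathrm{d}x_3, &
\mathrm{d}\omega \wedge \omega &= \mathrm{d}x_1\wedge \mathrm{d}x_2\wedge \mathrm{d}x_3 \ne 0.
\end{aligned}
```

The first codistribution is locally integrable: where \(x_2\ne 0\) it is spanned by the exact form \(\mathrm{d}(x_1 x_2^{-1})\), since \(\omega =-x_2^2\,\mathrm{d}(x_1x_2^{-1})\). The second one is not, because the Frobenius condition fails at every point.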

Observe that the spaces \({\mathcal {H}}_k\) are not codistributions in the sense defined above. To associate with \({\mathcal {H}}_k\) a codistribution on \({\mathcal {S}}\), for \(p\in {\mathcal {S}}\), we set \(H_p\) to be the space of \(\omega (p)\), where \(\omega \in {\mathcal {H}}_k\) is well defined at p. This codistribution is generated by the one-forms given in Proposition 8 and \({\mathcal {S}}\) is the subset of \({\mathcal {X}}\times {\mathcal {U}}\times {\mathbb {R}}^{\iota +\kappa }\) on which these one-forms are well defined and linearly independent at every point. We say that \({\mathcal {H}}_k\) is locally integrable if the codistribution associated with \({\mathcal {H}}_k\) is locally integrable.

Assumption 1

Assume that \({\mathcal {H}}_{\infty }=\{0\}\) and for each \((x,u,u^{[1]},\ldots ,u^{[\iota ]},z^{\langle -1\rangle },\ldots ,z^{\langle -\kappa \rangle })\in {\mathcal {S}}\) the one-forms \(\omega _i^{\varDelta _f^j}\), \(1\leqslant i \leqslant k^*\), \(0\leqslant j\leqslant r_i-1\) evaluated at \((x,u,u^{[1]},\ldots ,u^{[\iota ]},z^{\langle -1\rangle },\ldots ,z^{\langle -\kappa \rangle })\) are linearly independent over \({\mathbb {R}}\).

Observe that under Assumption 1, all \({\mathcal {H}}_k\), \(k\geqslant 2\), define constant-dimensional codistributions on \({\mathcal {S}}\).

Remark 7

Later, we shall restrict the variables x and u to a neighbourhood of some \((\bar{x},\bar{u})\in {\mathcal {X}}\times {\mathcal {U}}\). This will result in \({\mathcal {H}}_k\) and \({\mathcal {H}}_\infty \) being restricted to such a neighbourhood. Let us notice that \({\mathcal {H}}_\infty =\{0\}\) if and only if \({\mathcal {H}}_\infty \) restricted to every such neighbourhood is equal to \(\{0\}\).

4 Feedback linearization

Definition 3

System \(\varSigma \) of the form (1) is said to be linearizable by static state feedback if there exist a state analytic diffeomorphism \(\xi :{\mathcal {X}}\rightarrow \widehat{{\mathcal {X}}}\)

and a static state feedback (6) such that \(\vartheta : ({\mathcal {X}},{\mathcal {V}})\ni (x,v)\mapsto (x,\phi (x,v)) \in ({\mathcal {X}},{\mathcal {U}})\) is an analytic diffeomorphism and, in new coordinates, we have

where the pair (A, B) is controllable, i.e., \(\mathrm{rank}\, [B\ AB\ \ldots \ A^{n-1}B]=n\).

The variables \((\hat{x},v)\) of system (28) belong to some open subset of \({\mathbb {R}}^n\times {\mathbb {R}}^m\).
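The rank condition in Definition 3 can be checked mechanically. The following Python sketch uses exact rational arithmetic; the pair `A`, `B` is a hypothetical Brunovsky pair with controllability indices 2 and 1, chosen only for illustration, and all function names are assumptions of this sketch.

```python
from fractions import Fraction


def mat_mul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def rank(matrix):
    """Rank over the rationals, by Gaussian elimination."""
    m = [[Fraction(v) for v in row] for row in matrix]
    r = 0
    for c in range(len(m[0])):
        pivot = next((i for i in range(r, len(m)) if m[i][c] != 0), None)
        if pivot is None:
            continue
        m[r], m[pivot] = m[pivot], m[r]
        for i in range(len(m)):
            if i != r and m[i][c] != 0:
                factor = m[i][c] / m[r][c]
                m[i] = [x - factor * y for x, y in zip(m[i], m[r])]
        r += 1
    return r


def controllability_matrix(a, b):
    """Return [B, AB, ..., A^(n-1) B] as a list of rows."""
    n = len(a)
    blocks, block = [], b
    for _ in range(n):
        blocks.append(block)
        block = mat_mul(a, block)
    return [sum((blk[i] for blk in blocks), []) for i in range(n)]


def is_controllable(a, b):
    """Kalman rank test: rank [B AB ... A^(n-1) B] == n."""
    return rank(controllability_matrix(a, b)) == len(a)


# Hypothetical Brunovsky pair with indices r_1 = 2, r_2 = 1 (n = 3, m = 2).
A = [[0, 1, 0],
     [0, 0, 0],
     [0, 0, 0]]
B = [[0, 0],
     [1, 0],
     [0, 1]]
```

For this pair `is_controllable(A, B)` holds, whereas a pair such as the identity matrix with a single input column of ones fails the test.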

Definition 4

System \(\varSigma \) of the form (1) is said to be generically locally linearizable by static state feedback if there is an open and dense subset T of \({\mathcal {X}}\times {\mathcal {U}}\) such that for every \((\bar{x},\bar{u})\in T\) there is a neighborhood V of \((\bar{x},\bar{u})\) contained in \({\mathcal {X}}\times {\mathcal {U}}\) such that \(\varSigma \) restricted to V is linearizable by static state feedback.

Observe that the static state feedback (6) used in Definitions 3 and 4 is regular.

Proposition 9

If system (1) is generically locally linearizable by static state feedback, then \({\mathcal {H}}_{\infty }=\{0\}\).

Proof

From the controllability of the pair (A, B) we get \(\widehat{{\mathcal {H}}}_{\infty }=\{0\}\). Since \(\widehat{{\mathcal {H}}}_{\infty }=\{0\}\), we also have \({\mathcal {H}}_{\infty }=\{0\}\), as it is the image of \(\{0\}\) under a linear map.

Remark 8

The property \({\mathcal {H}}_{\infty }=\{0\}\) corresponds to accessibility of system (1). See [2, 15] for more details.

Theorem 2

Assume that Assumption 1 holds. Then, system (1) is generically locally linearizable by static state feedback if and only if \({\mathcal {H}}_{k}\) is locally integrable for \(1\leqslant k\leqslant k^*.\)

Proof

\(\Leftarrow \): Since \({\mathcal {H}}_{\infty }=\{0\}\) by Assumption 1, Corollary 2 provides a basis \(\{\omega _{i,j}, \ 1\leqslant i\leqslant m, 1\leqslant j\leqslant r_i\}\) of \({\mathcal {H}}_1=\mathrm{span}_{{\mathcal {K}}^*}\{\mathrm{d} x\}\) such that in this basis the first-order approximation of (1), i.e.,

locally takes the form (25). Note that “locally” means in some neighborhood of an arbitrary point \((\bar{x},\bar{u})\in T\). By the Frobenius theorem, there is no loss of generality if we assume that the basis \(\{\omega _{i,j}, \ 1\leqslant i\leqslant m, 1\leqslant j\leqslant r_i\}\) consists of exact one-forms. Thus, every \(\omega _{i,j}\) can be integrated, i.e., there exist \(\xi _{i,j}\) such that \(\omega _{i,j}=\mathrm{d}\xi _{i,j}(x)\). Since \(\mathrm{d}\hat{x}_{i,j}^{\varDelta }=[\mathrm{d}\xi _{i,j}(x)]^{\varDelta _f}=\omega _{i,j}^{\varDelta _f}=\omega _{i,j+1}=\mathrm{d}\xi _{i,j+1}(x)=\mathrm{d}\hat{x}_{i,j+1}\) for \(j=1,\ldots ,r_i-1\), in coordinates \(\hat{x}_{i,j}=\xi _{i,j}(x)\), \( 1\leqslant i\leqslant m\), \(1\leqslant j\leqslant r_i\), system (1) can be written as:

where \(\frac{\partial \hat{f}_i}{\partial \hat{x}_{s,j}}=a_{s,j}^i\) and \(\frac{\partial \hat{f}_i}{\partial {u}_{j}}=b_{j}^i\). Under the feedback \(u(t)=\phi (\hat{x}(t), v(t))\), where

the system has the Brunovsky canonical form (28) with controllability indices \(r_1,\ldots ,r_m\), where the pair (A, B) is controllable.

\(\Rightarrow \): For a linear system, the \(\widehat{{\mathcal {H}}}_k\)’s are integrable and this property is invariant under both regular static state feedback and state diffeomorphism, so for \(1\leqslant k\leqslant k^*\) the \({{\mathcal {H}}}_k\)’s are locally integrable.

Remark 9

For the continuous-time case, Theorem 2 yields Theorem 9.1 in [15], which is a differential-form version of the vector-field characterization presented in [24, 27]. For discrete-time systems, the results of Theorem 2 are new because they are stated in terms of the difference operator, while the shift operator is used for discrete time in the literature, see for instance [2].

Now let us present examples that illustrate our results. The first example has been suggested by one of the reviewers.

Example 1

Consider the following nonlinear control system defined on a homogeneous time scale \({\mathbb {T}}\) with \(\mu \equiv \mathrm{const}\geqslant 0\):

where \((x_1,x_2)\in {\mathcal {X}}={\mathbb {R}}^2\) and \(u\in {\mathcal {U}}={\mathbb {R}}\).

By formula (24), \({\mathcal {H}}_0 = \mathrm{span}_{{\mathcal {K}}^*}\{\mathrm{d}x_1,\mathrm{d}x_2,\mathrm{d}u\}\). From Proposition 3 (i), it easily follows that \({\mathcal {H}}_1 = \mathrm{span}_{{\mathcal {K}}^*}\{\mathrm{d}x_1,\mathrm{d}x_2\}\), because the relative degrees of \(\mathrm{d}x_1\) and \(\mathrm{d}x_2\) are obviously greater than or equal to 1, and the relative degree of \(\mathrm{d}u\) equals 0. Since \(\left( \mathrm{d}x_1\right) ^{\varDelta _f}=2x_2 \mathrm{d}x_2\in {\mathcal {H}}_1\), \(\left( \mathrm{d}x_1\right) ^{\varDelta _f^2}=2u \mathrm{d}x_2+2 (x_2+\mu u) \mathrm{d}u\not \in {\mathcal {H}}_1\) and \(\left( \mathrm{d}x_2\right) ^{\varDelta _f}=\mathrm{d}u\not \in {\mathcal {H}}_1\), the relative degrees of \(\mathrm{d}x_1\) and \(\mathrm{d}x_2\) are equal to 2 and 1, respectively. Then, \({\mathcal {H}}_2= \mathrm{span}_{{\mathcal {K}}^*}\{\mathrm{d}x_1\}\) and \({\mathcal {H}}_3={\mathcal {H}}_\infty = \{0\}\).
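The second delta derivative quoted above follows from the product rule \((a\eta )^{\varDelta _f}=a^{\varDelta _f}\eta +a^{\sigma _f}\eta ^{\varDelta _f}\) used in the proofs of Theorem 1 and Proposition 8. The derivation below is a sketch under the assumption that the system satisfies \(x_1^{\varDelta _f}=x_2^2\) and \(x_2^{\varDelta _f}=u\), which is inferred from the first delta derivatives of \(\mathrm{d}x_1\) and \(\mathrm{d}x_2\) stated in this example.

```latex
\begin{aligned}
\left( \mathrm{d}x_1\right) ^{\varDelta _f^2}
  &= \left( 2x_2\,\mathrm{d}x_2\right) ^{\varDelta _f}
   = (2x_2)^{\varDelta _f}\,\mathrm{d}x_2 + (2x_2)^{\sigma _f}\left( \mathrm{d}x_2\right) ^{\varDelta _f} \\
  &= 2u\,\mathrm{d}x_2 + 2\,(x_2+\mu u)\,\mathrm{d}u ,
  \qquad \text{since } x_2^{\sigma _f}=x_2+\mu x_2^{\varDelta _f}=x_2+\mu u .
\end{aligned}
```

For \(\mu =0\) the forward jump is the identity and the expression reduces to the usual continuous-time derivative \(2u\,\mathrm{d}x_2+2x_2\,\mathrm{d}u\).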

From Theorem 1, there exists a one-form \(\omega _1=\mathrm{d}x_1\) whose relative degree is equal to 2 and

Note that all \({\mathcal {H}}_k\), \(k\geqslant 0\), are integrable, but they do not satisfy Assumption 1 at \(x_2=0\), \(x_2+\mu u=0\). But for \(x_2\ne 0\) and \(x_2+\mu u\ne 0\), we have (in terms of Corollary 2) the one-forms \(\omega _{1,1} = \mathrm{d}x_1\), \(\omega _{1,2} = 2x_2\mathrm{d}x_2\) and

Since for \({\mathcal {S}}=\{(x_1,x_2,u): x_2\ne 0 \ \wedge \ x_2+\mu u \ne 0\}\subset {\mathbb {R}}^3\) the assumptions of Theorem 2 are satisfied, the new local state variables can be defined as follows: \(\hat{x}_1:=x_1\) and \(\hat{x}_2 := x_2^2\). In these coordinates, the system takes the linearized form:

where \(v = 2u\sqrt{\hat{x}_2}+\mu u^2\).
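The change of coordinates in Example 1 can be checked with exact arithmetic for \(\mu >0\). The Python sketch below assumes that the system reads \(x_1^{\varDelta }=x_2^2\), \(x_2^{\varDelta }=u\) (an assumption inferred from the delta derivatives computed in the example) and that the linearized form is \(\hat{x}_1^{\varDelta }=\hat{x}_2\), \(\hat{x}_2^{\varDelta }=v\); the function names are hypothetical. The feedback is written as \(v=2ux_2+\mu u^2\), which agrees with the expression above when \(x_2=\sqrt{\hat{x}_2}>0\).

```python
from fractions import Fraction


def step(x1, x2, u, mu):
    """Forward jump of the state, x^sigma = x + mu * x^Delta, for mu > 0."""
    return x1 + mu * x2 ** 2, x2 + mu * u


def delta(f, x1, x2, u, mu):
    """Delta derivative of f(x1, x2) along the assumed system, for mu > 0."""
    y1, y2 = step(x1, x2, u, mu)
    return (f(y1, y2) - f(x1, x2)) / mu


def xhat1(x1, x2):
    return x1


def xhat2(x1, x2):
    return x2 ** 2


def check(x1, x2, u, mu):
    """True if xhat1^Delta == xhat2 and xhat2^Delta == 2*u*x2 + mu*u**2."""
    v = 2 * u * x2 + mu * u ** 2
    return (delta(xhat1, x1, x2, u, mu) == xhat2(x1, x2)
            and delta(xhat2, x1, x2, u, mu) == v)


# Arbitrary sample points (x1, x2, u, mu) with mu > 0.
samples = [
    (Fraction(1, 2), Fraction(3), Fraction(-2), Fraction(1)),
    (Fraction(-4), Fraction(5, 7), Fraction(1, 3), Fraction(1, 10)),
]
results = [check(*sample) for sample in samples]
```

Because rational arithmetic is exact, every entry of `results` being true confirms the identities at the sampled points; it is a sanity check, not a proof.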

Example 2

Consider the following nonlinear control system defined on \({\mathbb {T}}= {\mathbb {Z}}\) (with \(\mu \equiv 1\)):

where \((x_1,x_2)\in {\mathcal {X}}=\{x \mid \ x_i\ne 0, i=1,2\}\subset {\mathbb {R}}^2\) and \(u\in {\mathcal {U}}={\mathbb {R}}{\setminus }\{0\}\).

By formula (24), \({\mathcal {H}}_0 = \mathrm{span}_{{\mathcal {K}}^*}\{\mathrm{d}x_1,\mathrm{d}x_2,\mathrm{d}u\}\). From Proposition 3 (i), it easily follows that \({\mathcal {H}}_1 = \mathrm{span}_{{\mathcal {K}}^*}\{\mathrm{d}x_1,\mathrm{d}x_2\}\), since the relative degrees of \(\mathrm{d}x_1\) and \(\mathrm{d}x_2\) are obviously greater than or equal to 1, and the relative degree of \(\mathrm{d}u\) equals 0. To compute the next elements of the sequence \({\mathcal {H}}_k\), the algorithm in the proof of Proposition 8 has to be applied repeatedly.

To find \({\mathcal {H}}_2\), we take \(k=1\), \(n_k=2\) and \(n_{k-1}-n_k=1\). The basis vectors may be chosen as \(\eta _1 = \mathrm{d}x_1\), \(\eta _2 = \mathrm{d}x_2\) and \(\vartheta =\mathrm{d}u\). The next step is to find \(\eta _i^{\varDelta _f}\) in terms of \(\eta _1\), \(\eta _2\) and \(\vartheta \) for \(i=1,2\), i.e., \(\eta _1^{\varDelta _f}=-\mathrm{d}x_1 + 2x_2 u\mathrm{d}x_2 + x^2_2\mathrm{d}u=-\eta _1 + 2x_2 u\eta _2 + x^2_2\vartheta \) and \(\eta _2^{\varDelta _f}=-\mathrm{d}x_2 + u\mathrm{d}x_2 + x_2\mathrm{d}u=(u-1)\eta _2 + x_2\vartheta \). The coefficients of \(\vartheta \) are \((c_{11},c_{21})^\mathrm{T} =(x_2^2, x_2)^\mathrm{T}\) and thus one possible solution of (26) is \((a_1^{\sigma _f},a_2^{\sigma _f})^\mathrm{T} = (-1,x_2)^\mathrm{T}\). To find \(a_2\), we have to express \(x_2^{\sigma _f^{-1}}\) from (31). By (31), \(x_1^{\sigma _f}=x_1+x_1^{\varDelta _f} = u x_2^2\) and \(x_2^{\sigma _f}=x_2+x_2^{\varDelta _f}= u x_2\). Dividing the first equation by the second gives \((x_1\cdot x_2^{-1})^{\sigma _f} = x_2\), which yields, after application of the backward jump operator, \(x_2^{\sigma _f^{-1}}= x_1\cdot x_2^{-1}\). Hence \((a_1,a_2)^\mathrm{T} = (-1,x_1\cdot x_2^{-1})^\mathrm{T}\) and \({\mathcal {H}}_2 = \mathrm{span}_{{\mathcal {K}}^*}\{a_1\eta _1+a_2\eta _2\}=\mathrm{span}_{{\mathcal {K}}^*}\{-\mathrm{d}x_1 + x_1\cdot x_2^{-1}\mathrm{d}x_2\} =\mathrm{span}_{{\mathcal {K}}^*}\{x_2^{-1}\mathrm{d}x_1 - x_1\cdot x_2^{-2}\mathrm{d}x_2\} = \mathrm{span}_{{\mathcal {K}}^*}\{\mathrm{d}(x_1\cdot x_2^{-1})\}\).

For computing \({\mathcal {H}}_3\), one may choose \(\eta _1 = -x_2\mathrm{d}x_1 + x_1\mathrm{d}x_2\) and \(\vartheta = \mathrm{d}x_1\). Then, \(\eta _1^{\varDelta _f} = x_2\mathrm{d}x_1 - (x_1+u^2x_2^2)\mathrm{d}x_2 = -[1+(u^2x_2^2 x_1^{-1})]\eta _1-(u^2x_2^3x_1^{-1})\vartheta \). From here \(c_{11} = -u^2x_2^3 x_1^{-1}\ne 0\). Since the system \(a_1^{\sigma _f} c_{11}=0\) does not admit a non-zero solution, the subspace \({\mathcal {H}}_3 = \{0\}\).

According to Proposition 3, \(k^*=3\). Since Assumption 1 is satisfied and all the subspaces \({\mathcal {H}}_k\), \(1\leqslant k \leqslant 3\), are locally integrable, the conditions of Theorem 2 are fulfilled and thus, system (31) is generically locally linearizable by static state feedback. One may choose (see Footnote 4) new state variables as \(\hat{x}_1:=x_1 x_2^{-1}, \hat{x}_2:=x_2-x_1 x_2^{-1}\). In these variables, the system takes the linearized form:

where \(v=(u-1)\hat{x}_1 + (u-2)\hat{x}_2\). We could consider the system on \({\mathbb {R}}^2\), but in order to guarantee the constant dimensions of codistributions \({\mathcal {H}}_k\), \(k\geqslant 2\) the state space has to be reduced to \(\{(x_1,x_2): \ x_1\ne 0, \ x_2\ne 0\}\).
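The coordinates and feedback of Example 2 can be verified with exact rational arithmetic. The Python sketch below assumes that system (31) on \({\mathbb {T}}={\mathbb {Z}}\) satisfies \(x_1^{\sigma _f}=ux_2^2\) and \(x_2^{\sigma _f}=ux_2\), as stated in the computation of \({\mathcal {H}}_2\), and that the linearized form is \(\hat{x}_1^{\varDelta }=\hat{x}_2\), \(\hat{x}_2^{\varDelta }=v\); the function names are hypothetical.

```python
from fractions import Fraction


def sigma(x1, x2, u):
    """Forward shift on T = Z (mu = 1): x^sigma = x + x^Delta."""
    return u * x2 ** 2, u * x2


def xhat(x1, x2):
    """New state variables proposed in Example 2."""
    return x1 / x2, x2 - x1 / x2


def check(x1, x2, u):
    """True if xhat1^Delta == xhat2 and xhat2^Delta == v, with f^Delta = f^sigma - f."""
    z1, z2 = xhat(x1, x2)
    s1, s2 = xhat(*sigma(x1, x2, u))
    v = (u - 1) * z1 + (u - 2) * z2
    return s1 - z1 == z2 and s2 - z2 == v


def backward_shift_identity(x1, x2, u):
    """True if (x1 / x2)^sigma == x2, i.e. x2^{sigma^{-1}} = x1 / x2."""
    y1, y2 = sigma(x1, x2, u)
    return y1 / y2 == x2


# Arbitrary sample points with x1, x2 and u all nonzero.
samples = [
    (Fraction(2), Fraction(3), Fraction(5)),
    (Fraction(-1, 4), Fraction(7, 2), Fraction(-3)),
]
results = [check(*s) and backward_shift_identity(*s) for s in samples]
```

The check covers both the linearizing coordinates and the identity \(x_2^{\sigma _f^{-1}}=x_1x_2^{-1}\) used to compute \({\mathcal {H}}_2\); it requires \(x_2\ne 0\) and \(u\ne 0\), in line with the restrictions on the state and input sets.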

Remark 10

Note that for the systems defined on the homogeneous time scale with \(\mu >0\), by (8) and (15), we get \(\omega \in {\mathcal {H}}_{k+1}\) if and only if \(\omega \in {\mathcal {H}}_{k}\) and \(\sigma _f(\omega )\in {\mathcal {H}}_{k}\). Hence

and, consequently, \({\mathcal {H}}_{k+1}\) in (15) can be alternatively defined as:

as in [2], where the shift operator is used in the description of the subspaces of differential one-forms. Therefore, for discrete-time systems the subspaces \({\mathcal {H}}_{k}\), \(k\geqslant 0\), defined by (15), are the same as in [2], but the formalism used to describe them is different: we rely on the difference operator, which is a special case of the delta derivative, whereas in [2] the shift operator formalism is used. Additionally, the delta-domain approach gives a single description that works for both continuous- and discrete-time systems. Though the computation of the delta derivative is different in the continuous- and discrete-time cases, the results obtained by means of it are the same for both time domains.

Example 3

Consider a nonlinear control system defined on a homogeneous time scale \({\mathbb {T}}\) with \(\mu \geqslant 0\):

where \((x_1,\ldots ,x_6)\in {\mathcal {X}}=\{x \mid \ x_3\ne 0 \text { and } x_6\ne 0\}\subset {\mathbb {R}}^6\) and \((u_1,u_2)\in {\mathcal {U}}={\mathbb {R}}^2\).

The algorithm given in the proof of Proposition 8 allows one to find the subspaces \({\mathcal {H}}_k\):

The conditions of Theorem 2 are satisfied for (32), thus (32) is generically locally linearizable by static state feedback and can be represented in the form (29). Note that the computations below can be done even if some of \({\mathcal {H}}_k\)’s are non-integrable. Namely, one may always find the linearized system equations in terms of one-forms, not necessarily exact, as in (25).

Following the proof of Theorem 1, we construct the sets \({\mathcal {W}}_k\), \(k=k^*,\ldots ,1\), necessary to find the state transformation that linearizes the equations. Obviously, \({\mathcal {W}}_{k^*} = {\mathcal {W}}_5=\emptyset \). Then, one has to choose \({\mathcal {W}}_{k^*-1}={\mathcal {W}}_4\) in such a manner that the elements of \({\mathcal {W}}_5 \cup {\mathcal {W}}_5^{\varDelta _f} \cup {\mathcal {W}}_4\) form a basis for \({\mathcal {H}}_4\). Obviously, \({\mathcal {W}}_4 =\{\mathrm{d}x_5\}\). As a next step, one has to find \({\mathcal {W}}_3\) such that \({\mathcal {W}}_5 \cup {\mathcal {W}}_5^{\varDelta _f } \cup {\mathcal {W}}_5^{\varDelta _f^2}\cup {\mathcal {W}}_4\cup {\mathcal {W}}_4^{\varDelta _f}\cup {\mathcal {W}}_3\) is a basis for \({\mathcal {H}}_3\). The sets \({\mathcal {W}}_4\) and \({\mathcal {W}}_4^{\varDelta _f} = \{\mathrm{d}x_5^{\varDelta _f}\} = \{\mathrm{d}(x_2-\frac{x_3}{x_6})\}\) span \({\mathcal {H}}_3\), hence \({\mathcal {W}}_3=\emptyset \). Next, we find \({\mathcal {W}}_2\) such that \({\mathcal {W}}_5 \cup \cdots \cup {\mathcal {W}}_5^{\varDelta _f^3}\cup {\mathcal {W}}_4\cup {\mathcal {W}}_4^{\varDelta _f}\cup {\mathcal {W}}_4^{\varDelta _f^2}\cup {\mathcal {W}}_3\cup {\mathcal {W}}_3^{\varDelta _f}\cup {\mathcal {W}}_2\) is a basis for \({\mathcal {H}}_2\). The set \({\mathcal {W}}_4^{\varDelta _f^2} = \{[\mathrm{d}(x_2-\frac{x_3}{x_6})]^{\varDelta _f}\} = \{\mathrm{d}x_6\}\), thus we may choose \({\mathcal {W}}_2 = \{\mathrm{d}x_1\}\). In the last step, we have to find \({\mathcal {W}}_1\); since \({\mathcal {W}}_4^{\varDelta _f^3}=\{\mathrm{d}x_6^{\varDelta _f}\}=\{\mathrm{d}(\frac{x_3}{x_6})\}\) and \({\mathcal {W}}_2^{\varDelta _f} = \{\mathrm{d}x_1^{\varDelta _f}\}=\{\mathrm{d}(\frac{x_4 x_6}{x_3})\}\), it follows that \({\mathcal {W}}_1 =\emptyset \). Finally, we may take \(\omega _1 = \mathrm{d}x_5\) and \(\omega _2 = \mathrm{d}x_1\), and their relative degrees are \(r_1=4\) and \(r_2 = 2\), respectively.

In terms of Corollary 2, the one-forms \(\omega _{1,1} = \mathrm{d}x_5\), \(\omega _{1,2} = \mathrm{d}(x_2-\frac{x_3}{x_6})\), \(\omega _{1,3} = \mathrm{d}x_6\), \(\omega _{1,4} = \mathrm{d}(\frac{x_3}{x_6})\), \(\omega _{2,1} = \mathrm{d}x_1\) and \(\omega _{2,2} = \mathrm{d}(\frac{x_4x_6}{x_3})\), and

Since the assumptions of Theorem 2 are satisfied, the new state variables can be defined as follows: \(\hat{x}_1:=x_5\), \(\hat{x}_2 := x_2-\frac{x_3}{x_6}\), \(\hat{x}_3:=x_6\), \(\hat{x}_4:=\frac{x_3}{x_6}\), \(\hat{x}_5:=x_1\) and \(\hat{x}_6:=\frac{x_4 x_6}{x_3}\). In these coordinates, the system takes the linearized form:

where \(v_1 = u_2+u_1\hat{x}_3\) and \(v_2=u_2\).

Let us now give an example in which the integrability of \({\mathcal {H}}_k\) depends on the time scale.

Example 4

Consider a nonlinear control system defined on a homogeneous time scale \({\mathbb {T}}\) with \(\mu \geqslant 0\):

where \((x_1,\ldots ,x_4)\in {\mathcal {X}}\subset {\mathbb {R}}^4\) and \((u_1,u_2)\in {\mathbb {R}}^2\).

Let \({\mathcal {S}}:=\{(x,u_1,u_2,x_3^{\langle -1\rangle })\mid \ x_1\ne 0, \ x_2\ne 0, \ x_3\ne 0 \text { and } 1+x_3-(1+\mu )x_3^{\langle -1\rangle }\ne 0\}\) be the subset of \({\mathcal {X}}\times {\mathcal {U}}\times {\mathbb {R}}\). Then, one gets the following subspaces:

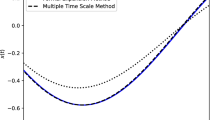

which define constant-dimensional codistributions on \({\mathcal {S}}\). Note that if \(\mu \ne 0\), then in general \({\mathcal {H}}_2\) is not integrable. For \(\mu = 0\), \(x_3^{\langle - 1\rangle }=\sigma _f^{-1}(x_3)=x_3\), \(1+x_3-(1+\mu )x_3^{\langle -1\rangle } = 1 \ne 0\) and \({\mathcal {H}}_2 =\mathrm{span}_{{\mathcal {K}}^*}\{\mathrm{d}x_2-\mathrm{d}x_4, -\mathrm{d}x_1 + x_1\mathrm{d}x_3\}\,\) is integrable. Therefore, for \(\mu =0\), the conditions of Theorem 2 are satisfied and one may define new state variables as:

Hence the coordinates \(x_i\) can be expressed in terms of \(\hat{x}_i\) as follows:

For \(\mu =0\), in the new coordinates system (33) takes the linearized form:

where \(v_1 = (1+e^{-\hat{x}_2/\hat{x}_1}u_2\hat{x}_1)\hat{x}_2 + \hat{x}_2^2/\hat{x}_1-u_2\hat{x}_1(\hat{x}_3+\hat{x}_4)\) and \(v_2 = u_1 - e^{-\hat{x}_2/\hat{x}_1} u_2(\hat{x}_1-\hat{x}_2)(e^{-\hat{x}_2/\hat{x}_1} \hat{x}_2-\hat{x}_3-\hat{x}_4)\).

5 Conclusions

In the paper, necessary and sufficient conditions for the generic local static state feedback linearizability of nonlinear control systems defined on homogeneous time scales are given. Our main contribution has been to show the properties of subspaces of differential one-forms that contain considerable structural information about the system. Then, one of the main results, i.e., necessary and sufficient conditions for generic local linearizability by static state feedback, is formulated in terms of these subspaces. Our future goal is to extend the results to non-homogeneous but regular time scales.

Notes

This assumption guarantees that the system \(x^{\sigma }=\tilde{f}(x,u)\) is submersive, that is generically \(\mathrm{rank}\, \frac{\partial \tilde{f}(x,u)}{\partial (x,u)}=n\).

This assumption, though natural, is not necessary for construction of \({\mathcal {K}}^*\).

Note that \(k^{*}\) is defined by Proposition 3.

The next example describes this process in detail.

References

Anderson SR, Kadirkamanathan V (2007) Modelling and identification of nonlinear deterministic systems in delta-domain. Automatica 43:1859–1868

Aranda-Bricaire E, Kotta Ü, Moog C (1996) Linearization of discrete-time systems. SIAM J Control Optim 34(6):1999–2023. doi:10.1137/S0363012994267315

Bartosiewicz Z (2013) Linear positive control systems on time scales; controllability. Math Control Signals Syst 25:327–343. doi:10.1007/s00498-013-0106-6

Bartosiewicz Z, Kotta Ü, Pawłuszewicz E, Tõnso M, Wyrwas M (2013) Algebraic formalism of differential \(p\)-forms and vector fields for nonlinear control systems on homogeneous time scales. Proc Estonian Acad Sci 62(4):215–226. doi:10.3176/proc.2013.4.02

Bartosiewicz Z, Kotta Ü, Pawłuszewicz E, Wyrwas M (2007) Algebraic formalism of differential one-forms for nonlinear control systems on time scales. Proc Estonian Acad Sci 56(3):264–282

Bartosiewicz Z, Pawłuszewicz E (2006) Realizations of linear control systems on time scales. Control Cybern 35:769–786

Bartosiewicz Z, Piotrowska E (2013) On stabilisability of nonlinear systems on time scales. Int J Control 86:139–145. doi:10.1080/00207179.2012.721563

Bohner M, Peterson A (2001) Dynamic equations on time scales. Birkhäuser, Boston

Briat C, Jönsson U (2011) Dynamic equations on time scale: application to stability analysis and stabilization of aperiodic sampled-data systems. In: Preprints of the 18th IFAC World Congress, pp 11374–11379

Bryant R, Chern S, Gardner R, Goldschmidt H, Griffiths P (1991) Exterior differential systems, vol 18. Springer, New York

Califano C, Monaco S, Normand-Cyrot D (1999) On the problem of feedback linearization. Syst Control Lett 36:61–67

Casagrande D, Kotta Ü, Tõnso M, Wyrwas M (2010) Transfer equivalence and realization of nonlinear input–output delta-differential equations on homogeneous time scales. IEEE Trans Autom Control 55:2601–2606. doi:10.1109/TAC.2010.2060251

Chadwick MA, Kadirkamanathan V, Billings SA (2006) Analysis of fast-sampled non-linear systems: generalised frequency response functions for \(\delta \)-operator models. Signal Process 86(11):3246–3257

Cohn R (1965) Difference algebra. Wiley-Interscience, New York

Conte G, Moog C, Perdon A (2007) Algebraic methods for nonlinear control systems, 2nd edn. Lecture notes in control and information sciences, vol 242. Springer, London

Doan TS, Kalauch A, Siegmund S, Wirth F (2010) Stability radii for positive linear time-invariant systems on time scales. Syst Control Lett 59:173–179. doi:10.1016/j.sysconle.2010.01.002

Fan H, De P (2001) High speed adaptive signal processing using the delta operator. Digit Signal Process 11:3–34. doi:10.1006/dspr.1999.0352

Goodwin GC, Graebe SF, Salgado ME (2001) Control system design. Prentice Hall, Englewood Cliffs

Girejko E, Malinowska AB, Torres DFM (2012) The contingent epiderivative and the calculus of variations on time scales. Optim Lett 61(3):251–264

Girejko E, Mozyrska D, Wyrwas M (2011) Contingent epiderivatives of functions on time scale. J Convex Anal 18(4):1047–1064

Grizzle J (1993) A linear algebraic framework for the analysis of discrete-time nonlinear systems. SIAM J Control Optim 31(4):1026–1044. doi:10.1137/0331046

Halaucǎ C, Lazǎr C (2008) Delta domain predictive control for fast, unstable and non-minimum phase systems. Control Eng Appl Inf 10(4):26–31

Hoagg JB, Santillo MA, Bernstein DS (2008) Internal model control in the shift and delta domains. IEEE Trans Autom Control 53(4):1066–1072. doi:10.1109/TAC.2008.921526

Hunt LR, Su R (1981) Linear equivalents of nonlinear time varying systems. In: International symposium MTNS, Santa Monica

Isidori A (1995) Nonlinear control systems, 3rd edn. Springer, Berlin

Jakubczyk B (1987) Feedback linearization of discrete-time systems. Syst Control Lett 9:411–416

Jakubczyk B, Respondek W (1980) On linearization of control systems. Bull Acad Polonaise Sci Ser Sci Math 28:517–522

Kotta Ü, Bartosiewicz Z, Pawłuszewicz E, Wyrwas M (2009) Irreducibility, reduction and transfer equivalence of nonlinear input–output equations on homogeneous time scales. Syst Control Lett 58:646–651. doi:10.1016/j.sysconle.2009.04.006

Kotta Ü, Tõnso M, Shumsky AY, Zhirabok AN (2013) Feedback linearization and lattice theory. Syst Control Lett 62(3):248–255. doi:10.1016/j.sysconle.2012.11.014

Lauritsen MB, Rostgaard M, Poulsen NK (1997) GPC using a delta-domain emulator-based approach. Int J Control 68(1):219–232. doi:10.1080/002071797223802

Li G, Gevers M (1993) Comparative study of finite word length effects in shift and delta operator parametrizations. IEEE Trans Autom Control 38(5):803–807

Malinowska AB, Torres DFM (2011) Euler-Lagrange equations for composition functionals in calculus of variations on time scales. Discrete Contin Dyn Syst 29(2):577–593. doi:10.3934/dcds.2011.29.577

Middleton RH, Goodwin GC (1990) Digital control and estimation: a unified approach. Prentice Hall, Englewood Cliffs

Mozyrska D, Torres DFM (2009) The natural logarithm on time scales. J Dyn Syst Geom Theor 7(1):41–48. doi:10.1080/1726037X.2009.10698561

Mullari T, Kotta Ü, Tõnso M (2011) Static state feedback linearizability: relationship between two methods. Proc Estonian Acad Sci 60(2):121–135. doi:10.3176/proc.2011.2.07

Nijmeijer H, van der Schaft AJ (1990) Nonlinear dynamical control systems. Springer, New York

Suchomski P (2001) Robust PI and PID controller design in delta domain. Proc Inst Electr Eng 148(5):350–354

Wyrwas M, Girejko E, Mozyrska D (2011) Subdifferentials of convex functions on time scales. Discrete Contin Dyn Syst 29(2):671–691. doi:10.3934/dcds.2011.29.671

Yuz JI, Goodwin GC (2005) On sampled-data models for nonlinear systems. IEEE Trans Autom Control 50(10):1477–1489. doi:10.1109/TAC.2005.856640

Acknowledgments

We are grateful to the anonymous referees for their valuable suggestions and comments, which improved the quality of the paper. The work of Ü. Kotta and M. Tõnso was supported by the European Regional Development Fund and by the Estonian Research Council grant PUT481. The work of Z. Bartosiewicz and M. Wyrwas was supported by the Bialystok University of Technology Grant No. S/WI/2/2011.

Appendix: The proof of Proposition 6

Proof

Let \(\widehat{\omega }=\widehat{\omega }_x+\widehat{\omega }_u+\widehat{\omega }_z\in \widehat{{\mathcal {E}}}\), where \(\widehat{\omega }_x=\sum \limits _{i}\widehat{A}_i \mathrm{d}\hat{x}_i\), \(\widehat{\omega }_u=\sum \limits _{k\geqslant 0}\sum \limits _{j=1}^{m}\widehat{B}_{jk} \mathrm{d}u_j^{[k]}\) and \(\widehat{\omega }_z=\sum \limits _{\ell \geqslant 1}\sum \limits _{s=1}^{m}\widehat{C}_{s\ell } \mathrm{d}z_s^{\langle -\ell \rangle }\). Then, \(\xi ^*\left( \widehat{\omega }\right) \in {\mathcal {E}}\) and by (19) and (20) we get

and similarly

Hence

Therefore (21) holds.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Bartosiewicz, Z., Kotta, Ü., Tõnso, M. et al. Static state feedback linearization of nonlinear control systems on homogeneous time scales. Math. Control Signals Syst. 27, 523–550 (2015). https://doi.org/10.1007/s00498-015-0150-5

DOI: https://doi.org/10.1007/s00498-015-0150-5