Abstract

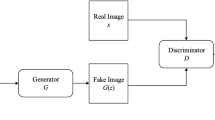

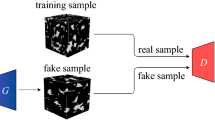

Porous media reconstruction is important in environmental monitoring, oil and natural gas engineering, biomedicine, and materials engineering. Traditional numerical reconstruction methods such as multiple-point statistics (MPS) rely on the statistical characteristics of training images (TIs), but their reconstruction quality can be unsatisfactory and the process is time-consuming. With the rapid development of deep learning, its strong capability for feature prediction has recently been applied to porous media reconstruction. The generative adversarial network (GAN) is a deep generative model based on a two-player zero-sum game in which a generator and a discriminator are trained against each other. However, the traditional GAN cannot focus specifically on the informative features during learning, and it easily suffers from the degradation problem as the number of layers increases. Moreover, high resolution (HR) and a large field of view (FOV) are usually conflicting requirements for physical imaging equipment. Consequently, owing to the limited resolution of imaging equipment and the limited sample size, it is difficult to obtain large-scale HR images of porous media physically in practical experiments. In this situation, numerical super-resolution (SR) reconstruction is a cost-efficient alternative. In this paper, residual networks and attention mechanisms are combined with the single-image GAN (SinGAN) to learn the structural characteristics of porous media from a single low-resolution (LR) 3D image and then reconstruct 3D HR or large-scale images of porous media. Comparisons with several other numerical methods show that the proposed method can reconstruct high-quality HR images and is practical and effective.
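The attention mechanism referred to in the title is the convolutional block attention module (CBAM) of Woo et al. (2018). To illustrate how such a module reweights a 3D feature volume, the following NumPy sketch implements a generic CBAM forward pass: channel attention (a shared two-layer MLP applied to average- and max-pooled channel descriptors), followed by spatial attention (a convolution over the channel-wise mean and max maps). It is only an illustration and not the authors' SASGAN implementation. The function names, the untrained random weights, the reduction ratio r and the 3×3×3 spatial kernel are all assumptions chosen for brevity.

```python
import numpy as np


def sigmoid(x):
    # Numerically stable logistic function: 1 / (1 + exp(-x)) == 0.5 * (1 + tanh(x / 2))
    return 0.5 * (1.0 + np.tanh(0.5 * x))


def channel_attention(feat, w0, w1):
    """Channel weights for feat of shape (C, D, H, W).

    w0: (C // r, C) and w1: (C, C // r) form the shared two-layer MLP.
    """
    def mlp(v):
        return w1 @ np.maximum(w0 @ v, 0.0)

    avg = feat.mean(axis=(1, 2, 3))  # average-pooled descriptor, shape (C,)
    mx = feat.max(axis=(1, 2, 3))    # max-pooled descriptor, shape (C,)
    return sigmoid(mlp(avg) + mlp(mx))


def conv3d_same(x, kernel):
    """Single-output-channel 3D cross-correlation with zero 'same' padding.

    x: (C_in, D, H, W); kernel: (C_in, k, k, k) with odd k.
    """
    k = kernel.shape[1]
    p = k // 2
    xp = np.pad(x, ((0, 0), (p, p), (p, p), (p, p)))
    d, h, w = x.shape[1:]
    out = np.zeros((d, h, w))
    for i in range(k):
        for j in range(k):
            for m in range(k):
                window = xp[:, i:i + d, j:j + h, m:m + w]
                # Contract the input-channel axis: (C_in,) x (C_in, D, H, W) -> (D, H, W)
                out += np.tensordot(kernel[:, i, j, m], window, axes=1)
    return out


def spatial_attention(feat, kernel):
    """Spatial weights of shape (D, H, W) from the channel-wise mean and max maps."""
    pooled = np.stack([feat.mean(axis=0), feat.max(axis=0)])  # (2, D, H, W)
    return sigmoid(conv3d_same(pooled, kernel))


def cbam3d(feat, w0, w1, kernel):
    """Channel attention first, then spatial attention (sequential CBAM order)."""
    refined = channel_attention(feat, w0, w1)[:, None, None, None] * feat
    return spatial_attention(refined, kernel)[None] * refined


rng = np.random.default_rng(0)
c, r, k = 8, 2, 3
feat = rng.standard_normal((c, 6, 6, 6))
w0 = 0.1 * rng.standard_normal((c // r, c))
w1 = 0.1 * rng.standard_normal((c, c // r))
kernel = 0.1 * rng.standard_normal((2, k, k, k))
out = cbam3d(feat, w0, w1, kernel)
print(out.shape)  # (8, 6, 6, 6)
```

Because both attention maps lie in (0, 1), the module only rescales features and never changes the tensor shape, so it can be inserted between convolution layers of a generator without altering the surrounding architecture.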

Code and data availability

The code and data used in this study are available on GitHub (https://github.com/vevvve/SASGAN).

Funding

This work is supported by the National Natural Science Foundation of China (Nos. 41672114, 41702148).

Author information

Contributions

TZ: Conceptualization, Methodology, Writing – original draft. NB: Software, Writing – review and editing. QL: Conceptualization, Methodology, Software, Writing – original draft. YD: Investigation, Writing – review and editing.

Ethics declarations

Conflict of interest

The authors declare that they have no conflicts of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix A: Derivation of the identity mapping in ResNets

The data flow in a ResNet residual unit is designed as follows: the input x passes successively through the first weight layer (the first convolution), the first ReLU activation, the second weight layer (the second convolution), the second ReLU activation, and so on, until the residual term F(x) is obtained. At the end of the residual learning unit, the input x is passed directly to the output through a “shortcut” connection and added to F(x), which gives H(x) = F(x) + x. By introducing shortcut connections, the degradation problem that arises as the network deepens is largely alleviated.

As mentioned previously, a ResNet only needs to learn F(x) = 0 to realize the identity mapping. Driving F(x) toward 0 is easier than fitting H(x) = x directly with a stack of nonlinear layers, so the parameters of each layer converge faster during learning. The construction of the identity mapping is derived in this appendix.
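The data flow and the identity-mapping argument above can be illustrated with a toy residual unit. In this NumPy sketch, dense weight matrices stand in for the convolution layers and no activation is applied after the addition (both simplifications are assumptions); `residual_term`, `residual_unit` and `plain_unit` are hypothetical names. Setting the last weight layer to zero makes F(x) = 0, so the residual unit reduces exactly to H(x) = x, whereas a plain unit built from the same layers collapses to zero.

```python
import numpy as np


def relu(x):
    return np.maximum(x, 0.0)


def residual_term(x, w1, w2):
    """F(x): weight layer -> ReLU -> weight layer (dense stand-ins for convolutions)."""
    return w2 @ relu(w1 @ x)


def residual_unit(x, w1, w2):
    """H(x) = F(x) + x: the shortcut connection adds the input back."""
    return residual_term(x, w1, w2) + x


def plain_unit(x, w1, w2):
    """The same layers without a shortcut: H(x) = F(x)."""
    return residual_term(x, w1, w2)


rng = np.random.default_rng(0)
x = rng.standard_normal(8)
w1 = rng.standard_normal((8, 8))
w2_zero = np.zeros((8, 8))  # driving the last weight layer to zero gives F(x) = 0

print(np.allclose(residual_unit(x, w1, w2_zero), x))  # True: identity mapping
print(np.allclose(plain_unit(x, w1, w2_zero), x))     # False: plain unit outputs zeros
```

In the residual unit the "do nothing" solution corresponds to all-zero weights in the last layer, which is a simple target for gradient descent; the plain unit would instead have to reproduce x through its nonlinear layers.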

Assume that the input and output dimensions of a nonlinear unit in the neural network are the same. Then the function H(∙) to be fitted by the unit can be divided into two parts, namely:

$$H(a^{(l-1)}) = a^{(l-1)} + F(a^{(l-1)}) \tag{A.1}$$

where l is the layer number and a is the input; F(∙) is the residual function; \(a^{(l-1)}\) is the input of the (l−1)-th layer; \(F(a^{(l-1)})\) is the residual term of the (l−1)-th layer; and \(H(a^{(l-1)})\) is the output of the (l−1)-th layer.

In ResNets, learning an identity mapping is equivalent to driving the residual part toward 0, i.e., \(F(a^{(l-1)}) \to 0\). If F(x) = 0, or equivalently H(x) = x, an identity mapping is constructed. In general, the output of one layer in the network is the input of the next layer. Consider any two layers l2 > l1 and expand Eq. (A.1) recursively. The input of the l2-th layer \({a}^{({l}_{2})}\) can then be written as follows:

$$a^{(l_2)} = H(a^{(l_2-1)}) = a^{(l_2-1)} + F(a^{(l_2-1)}) \tag{A.2}$$

$$a^{(l_2)} = a^{(l_2-2)} + F(a^{(l_2-2)}) + F(a^{(l_2-1)}) = \cdots \tag{A.3}$$

Finally, \({a}^{({l}_{2})}\) can be formulated as follows:

$$a^{(l_2)} = a^{(l_1)} + \sum_{i=l_1}^{l_2-1} F(a^{(i)}) \tag{A.4}$$

Eq. (A.4) shows that the input of the l2-th layer \({a}^{({l}_{2})}\) equals the input of the l1-th layer \({a}^{({l}_{1})}\) plus the sum of the residual terms from the l1-th to the (l2−1)-th layer. Thus, in forward propagation, the input data can propagate directly from a lower layer to a higher layer. In back-propagation, by the chain rule, the gradients with respect to the intermediate outputs are passed backward and used to compute the gradients of the weights. Accordingly, the gradient of the final loss δ with respect to the input of a lower layer, \({a}^{({l}_{1})}\), can be expressed as:

$$\frac{\partial \delta}{\partial a^{(l_1)}} = \frac{\partial \delta}{\partial a^{(l_2)}} \frac{\partial a^{(l_2)}}{\partial a^{(l_1)}} \tag{A.5}$$

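Substituting Eq. (A.4) into Eq. (A.5) gives Eq. (A.6):

$$\frac{\partial \delta}{\partial a^{(l_1)}} = \frac{\partial \delta}{\partial a^{(l_2)}} \cdot \mathrm{SG} = \frac{\partial \delta}{\partial a^{(l_2)}} \left(1 + \frac{\partial}{\partial a^{(l_1)}} \sum_{i=l_1}^{l_2-1} F(a^{(i)})\right) \tag{A.6}$$
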
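where SG (sum of gradients plus one) stands for \(\frac{\partial {a}^{({l}_{2})}}{\partial {a}^{({l}_{1})}}\). Finally, Eq. (A.6) can be written as follows:

$$\frac{\partial \delta}{\partial a^{(l_1)}} = \frac{\partial \delta}{\partial a^{(l_2)}} + \frac{\partial \delta}{\partial a^{(l_2)}} \frac{\partial}{\partial a^{(l_1)}} \sum_{i=l_1}^{l_2-1} F(a^{(i)}) \tag{A.7}$$
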
There may be several layers between the l1-th and the l2-th layers. In a plain CNN, gradients are computed with the chain rule from each layer to its adjacent layer. As shown in Eq. (A.5), \(\frac{\partial \delta }{\partial {a}^{({l}_{1})}}\) is the product of two partial derivatives, and expanding \(\frac{\partial {a}^{({l}_{2})}}{\partial {a}^{({l}_{1})}}\) layer by layer turns it into a product over all intermediate layers. Therefore, when the derivatives between adjacent layers are consistently much larger or much smaller than 1, \(\frac{\partial \delta }{\partial {a}^{({l}_{1})}}\) grows or shrinks exponentially as the CNN gets deeper, leading to gradient explosion or gradient vanishing.

According to Eq. (A.6), SG equals one plus a sum of gradients. The first term of SG is the constant 1, and the second term is the gradient of the sum of residual terms, \(\frac{\partial }{\partial {a}^{({l}_{1})}}{\sum }_{i={l}_{1}}^{{l}_{2}-1}F({a}^{(i)})\). The second term is unlikely to be exactly \(-1\) for all samples, so SG is unlikely to be 0. Moreover, even if the second term is very small, the constant 1 in the first term prevents the overall gradient from vanishing. From Eq. (A.5) to Eq. (A.7), the gradient is computed by addition rather than multiplication, so it grows relatively slowly, which helps avoid gradient explosion. In short, the gradient of a plain CNN is computed according to Eq. (A.5), whereas in ResNets it is computed through the residual terms according to Eq. (A.6). Residual connections therefore let information propagate forward and backward more smoothly, which alleviates the degradation problem.
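The contrast between the plain chain-rule product behind Eq. (A.5) and the residual form in Eqs. (A.6) and (A.7) can be checked numerically on a scalar toy network. This sketch assumes each layer is a scalar map, with residual term F(a) = w·tanh(a) and plain layer a^(i+1) = w·tanh(a^(i)); the helper names `forward` and `chain_gradient` are hypothetical. `chain_gradient` multiplies the local derivatives layer by layer; for the residual network the result is SG = ∂a^(l2)/∂a^(l1), which a central finite difference confirms. With w = 0.5, every plain factor is at most 0.5, so the plain gradient vanishes, while every residual factor 1 + w(1 − tanh²a) is at least 1.

```python
import numpy as np


def forward(a0, w, depth, residual):
    """Propagate a scalar input through `depth` layers and return the final output."""
    a = a0
    for _ in range(depth):
        f = w * np.tanh(a)            # residual term F(a^(i)) in the residual case
        a = a + f if residual else f
    return a


def chain_gradient(a0, w, depth, residual):
    """d a^(depth) / d a^(0) via the chain rule: the product of local derivatives."""
    a, grad = a0, 1.0
    for _ in range(depth):
        df = w * (1.0 - np.tanh(a) ** 2)  # derivative of w * tanh(a)
        grad *= (1.0 + df) if residual else df
        a = a + w * np.tanh(a) if residual else w * np.tanh(a)
    return grad


a0, w, depth = 0.5, 0.5, 30
plain = chain_gradient(a0, w, depth, residual=False)
res = chain_gradient(a0, w, depth, residual=True)

eps = 1e-6
fd = (forward(a0 + eps, w, depth, True) - forward(a0 - eps, w, depth, True)) / (2 * eps)

print(f"plain gradient:    {plain:.3e}")  # below 0.5**30, about 9.3e-10
print(f"residual gradient: {res:.4f} (finite difference: {fd:.4f})")
```

The toy settings (positive w, tanh activation) are chosen so that the effect is easy to see; with other weights the residual factors need not all exceed 1, but the identity term still keeps them away from the uniformly small values that make the plain product vanish.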

Appendix B: Pseudocode for MPC calculation

As shown in the pseudocode above, “j” is a variable representing the current number of pixels/voxels along one direction. The function indicator_function(u + j * h) returns 1 or 0: when the voxel at the specific position u + j*h is pore space, indicator_function(…) = 1; otherwise, indicator_function(…) = 0. The function calculate_expected_value(…) calculates the expected value of its input.
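Assuming MPC here denotes the multiple-point connectivity of Krishnan and Journel (2003), it is commonly written as \(p(h; n) = E\{\prod_{j=1}^{n} I(u + j h)\}\), i.e., the probability that n successive voxels along the lag vector h are all pore space. The NumPy sketch below follows that definition for unit steps along one coordinate axis. It assumes pore voxels are encoded as 1, and `mpc_along_axis` is a hypothetical name: the boolean mask plays the role of indicator_function(…), and `mean()` plays the role of calculate_expected_value(…).

```python
import numpy as np


def mpc_along_axis(volume, max_steps, axis=0, pore_value=1):
    """Multiple-point connectivity for unit steps along one axis of a binary volume.

    Returns values[n - 1] = E[ prod_{j=1..n} I(u + j*h) ] for n = 1..max_steps
    (truncated if the volume is too short), where I = 1 for pore voxels, else 0.
    """
    # Indicator function: 1 for pore space, 0 otherwise (pore encoding assumed).
    pore = (np.asarray(volume) == pore_value).astype(float)
    pore = np.moveaxis(pore, axis, 0)  # put the chosen direction first
    size = pore.shape[0]

    values = []
    running = np.ones_like(pore)  # n = 0: empty product for every start voxel u
    for n in range(1, min(max_steps, size - 1) + 1):
        # Extend each window by one voxel: multiply in I(u + n*h) for u = 0..size-1-n.
        running = running[: size - n] * pore[n:]
        values.append(running.mean())  # expected value over all valid start voxels u
    return np.array(values)


rng = np.random.default_rng(0)
vol = (rng.random((32, 32, 32)) < 0.3).astype(int)  # synthetic volume, about 30% pore
print(mpc_along_axis(vol, 5, axis=2))  # MPC for n = 1..5 voxels along the third axis
```

The running product reuses the window of length n − 1 to build the window of length n, so each additional step costs one elementwise multiplication instead of recomputing the whole product over j.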

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Zhang, T., Bian, N., Liu, Q. et al. 3D super-resolution reconstruction of porous media based on GANs and CBAMs. Stoch Environ Res Risk Assess 38, 1475–1504 (2024). https://doi.org/10.1007/s00477-023-02639-2
