Abstract

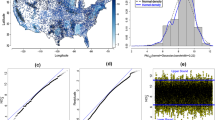

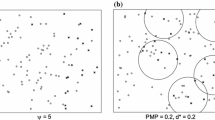

Spatial regression models are often used to analyze ecological and environmental data sets over a continuous spatial support. Collinearity among covariates has been widely discussed in the modeling literature, but the relationship between covariates and unobserved spatial random processes has received much less attention. Past research has shown that ignoring this relationship (known as spatial confounding) can significantly affect the estimation of regression parameters. To overcome this problem, restricted spatial regression has been used to constrain the unobserved spatial random process to be orthogonal to the covariates, but the related inference has been developed mainly within Bayesian frameworks. In this paper, an adjusted generalized least squares method is proposed to estimate the regression coefficients, and the resulting estimators perform better than those of conventional methods. Under the frequentist framework, statistical inference for the proposed methodology is justified both theoretically and through simulation studies. Finally, an application to a water acidity data set from the Blue Ridge region of the eastern U.S. is presented for illustration.
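The restriction step in restricted spatial regression can be illustrated with a short Python/NumPy sketch: a spatial process \(\varvec{W}\) is simulated and replaced by \((\varvec{I}-\varvec{P}_{\varvec{X}})\varvec{W}\), which is orthogonal to every column of \(\varvec{X}\). The simulated sites, the exponential correlation function (the Matérn case \(\nu =1/2\)), and the parameter values are illustrative assumptions, not the settings used in this paper.

```python
import numpy as np

def exponential_corr(coords, phi):
    """Exponential correlation exp(-d / phi): the Matern family with nu = 1/2."""
    d = np.linalg.norm(coords[:, None, :] - coords[None, :, :], axis=-1)
    return np.exp(-d / phi)

rng = np.random.default_rng(0)
n = 50
coords = rng.uniform(0.0, 1.0, size=(n, 2))        # hypothetical sampling sites
X = np.column_stack([np.ones(n), coords[:, 0]])    # intercept and a spatially smooth covariate

# Simulate the unobserved spatial process W ~ N(0, sigma_w^2 R_W)
sigma_w, phi_w = 1.0, 0.2
R_W = exponential_corr(coords, phi_w)
W = sigma_w * np.linalg.cholesky(R_W) @ rng.standard_normal(n)

# Restriction: project W onto the orthogonal complement of col(X)
P_X = X @ np.linalg.solve(X.T @ X, X.T)
W_restricted = (np.eye(n) - P_X) @ W

print(np.abs(X.T @ W).max())             # typically nonzero
print(np.abs(X.T @ W_restricted).max())  # zero up to rounding error
```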

References

Besag J, York JC, Mollié A (1991) Bayesian image restoration, with two applications in spatial statistics (with discussion). Ann Inst Stat Math 43:1–59

Brunton LA, Alexander N, Wint W, Ashton A, Broughan JM (2017) Using geographically weighted regression to explore the spatially heterogeneous spread of bovine tuberculosis in England and Wales. Stoch Environ Res Risk Assess 31:339–352

Clayton DG, Bernardinelli L, Montomoli C (1993) Spatial correlation in ecological analysis. Int J Epidemiol 22:1193–1202

Cressie N (1993) Statistics for spatial data, revised edn. Wiley, New York

Cressie N, Johannesson G (2008) Fixed rank kriging for very large spatial data sets. J R Stat Soc Ser B Stat Methodol 70:209–226

Gelman A, Tuerlinckx F (2000) Type S error rates for classical and Bayesian single and multiple comparison procedures. Comput Stat 15:373–390

Gu C (2002) Smoothing spline ANOVA models. Springer, New York

Hanks EM, Schliep EM, Hooten MB, Hoeting JA (2015) Restricted spatial regression in practice: geostatistical models, confounding, and robustness under model misspecification. Environmetrics 26:243–254

Harville DA (1997) Matrix algebra from a statistician’s perspective. Springer, New York

Hodges JS, Reich BJ (2010) Adding spatially-correlated errors can mess up the fixed effect you love. Am Stat 64:325–334

Hoeting JA, Davis RA, Merton AA, Thompson SE (2006) Model selection for geostatistical models. Ecol Appl 16:87–98

Huang HC, Chen CS (2007) Optimal geostatistical model selection. J Am Stat Assoc 102:1009–1024

Hughes J (2015) copCAR: a flexible regression model for areal data. J Comput Graph Stat 24:733–755

Hughes J, Haran M (2013) Dimension reduction and alleviation of confounding for spatial generalized linear mixed models. J R Stat Soc Ser B Stat Methodol 75:139–159

Matérn B (2013) Spatial variation. Springer, Berlin

Nikoloulopoulos AK (2016) Efficient estimation of high-dimensional multivariate normal copula models with discrete spatial responses. Stoch Environ Res Risk Assess 30:493–505

Paciorek CJ (2010) The importance of scale for spatial-confounding bias and precision of spatial regression estimators. Stat Sci 25:107–125

Page GL, Liu Y, He Z, Sun D (2017) Estimation and prediction in the presence of spatial confounding for spatial linear models. Scand J Stat 44:780–797

Reich BJ, Hodges JS, Zadnik V (2006) Effects of residual smoothing on the posterior of the fixed effects in disease-mapping models. Biometrics 62:1197–1206

Tzeng S, Huang HC (2018) Resolution adaptive fixed rank kriging. Technometrics 60:198–208

Wood SN (2003) Thin plate regression splines. J R Stat Soc Ser B Stat Methodol 65:95–114

Zadnik V, Reich BJ (2006) Analysis of the relationship between socioeconomic factors and stomach cancer incidence in Slovenia. Neoplasma 53:103–110

Acknowledgements

We thank the Editor, an associate editor, and two anonymous referees for their insightful and constructive comments, which have greatly improved the presentation of the article. This work was supported by the Ministry of Science and Technology of Taiwan under Grants MOST 106-2118-M-018-003-MY2 and MOST 106-2811-M-018-005.

Appendix

Proof of Theorem 1

Let \(\varvec{A}=\sigma ^2_{\varepsilon }\varvec{I}\), \(\varvec{U}=\sigma ^2_w(\varvec{I}-\varvec{P}_{\varvec{X}})\), \(\varvec{C}=\varvec{R}_{\varvec{W}}(\phi _w,\nu _w)\), and \(\varvec{V}=(\varvec{I}-\varvec{P}_{\varvec{X}})'\); then \(\varvec{\Phi }=\varvec{A}+\varvec{U}\varvec{C}\varvec{V}\). Applying the Sherman–Morrison–Woodbury formula (see, e.g., Harville 1997), we have \(\varvec{\Phi }^{-1}=\varvec{A}^{-1}-\varvec{A}^{-1}\varvec{U}\left( \varvec{C}^{-1} +\varvec{V}\varvec{A}^{-1}\varvec{U}\right) ^{-1}\varvec{V}\varvec{A}^{-1}\). Because \(\varvec{X}^{\prime }(\varvec{I}-\varvec{P}_{\varvec{X}})=\varvec{0}\), we have \(\varvec{X}^{\prime }\varvec{A}^{-1}\varvec{U}=\varvec{0}\), and this expression for \(\varvec{\Phi }^{-1}\) gives

\[ \varvec{X}^{\prime }\varvec{\Phi }^{-1}=\frac{1}{\sigma ^2_{\varepsilon }}\varvec{X}^{\prime }. \]

It implies that

\[ \hat{\varvec{\beta }}_{RSR}=\left( \varvec{X}^{\prime }\varvec{\Phi }^{-1}\varvec{X}\right) ^{-1}\varvec{X}^{\prime }\varvec{\Phi }^{-1}\varvec{Y}=(\varvec{X}^{\prime }\varvec{X})^{-1}\varvec{X}^{\prime }\varvec{Y}. \]

This completes the proof.
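The identity \(\varvec{X}^{\prime }\varvec{\Phi }^{-1}=\sigma ^{-2}_{\varepsilon }\varvec{X}^{\prime }\) can also be seen directly from \(\varvec{\Phi }\varvec{X}=\sigma ^2_{\varepsilon }\varvec{X}\), which holds because \((\varvec{I}-\varvec{P}_{\varvec{X}})'\varvec{X}=\varvec{0}\). The NumPy sketch below checks it numerically, together with the Sherman–Morrison–Woodbury form of \(\varvec{\Phi }^{-1}\) and the fact that the GLS estimator \((\varvec{X}^{\prime }\varvec{\Phi }^{-1}\varvec{X})^{-1}\varvec{X}^{\prime }\varvec{\Phi }^{-1}\varvec{Y}\) then coincides with OLS. The simulated sites, the exponential correlation standing in for \(\varvec{R}_{\varvec{W}}(\phi _w,\nu _w)\), and the parameter values are assumptions made only for illustration.

```python
import numpy as np

rng = np.random.default_rng(1)
n, p = 40, 3
coords = rng.uniform(size=(n, 2))
X = np.column_stack([np.ones(n), rng.standard_normal((n, p - 1))])

# Illustrative stand-in for R_W(phi_w, nu_w): exponential correlation (Matern, nu = 1/2)
d = np.linalg.norm(coords[:, None, :] - coords[None, :, :], axis=-1)
R_W = np.exp(-d / 0.3)
sigma2_eps, sigma2_w = 0.5, 2.0

I = np.eye(n)
P_X = X @ np.linalg.solve(X.T @ X, X.T)
Q = I - P_X                                    # symmetric, so Q' = Q
Phi = sigma2_eps * I + sigma2_w * Q @ R_W @ Q.T
Phi_inv = np.linalg.inv(Phi)

# Sherman-Morrison-Woodbury with A = sigma_eps^2 I, U = sigma_w^2 Q, C = R_W, V = Q'
A_inv = I / sigma2_eps
U, C, V = sigma2_w * Q, R_W, Q.T
Phi_inv_smw = A_inv - A_inv @ U @ np.linalg.inv(np.linalg.inv(C) + V @ A_inv @ U) @ V @ A_inv

# Key identity: X' Phi^{-1} = X' / sigma_eps^2
identity_gap = np.abs(X.T @ Phi_inv - X.T / sigma2_eps).max()

# Hence the GLS estimate under Phi equals the OLS estimate for any response Y
Y = rng.standard_normal(n)
beta_gls = np.linalg.solve(X.T @ Phi_inv @ X, X.T @ Phi_inv @ Y)
beta_ols = np.linalg.solve(X.T @ X, X.T @ Y)

print(np.abs(Phi_inv - Phi_inv_smw).max(), identity_gap, np.abs(beta_gls - beta_ols).max())
```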

Proof of Theorem 2

From Theorem 1, we have \(\hat{\varvec{\beta }}_{RSR}=(\varvec{X}^{\prime }\varvec{X})^{-1}\varvec{X}^{\prime }\varvec{Y}\). Therefore,

where the fourth equality follows from the independence of the measurement errors \(\varvec{\varepsilon }\) and \(\varvec{X}\). This completes the proof.

Proof of Theorem 3

Let \(\varvec{A}=\left( \varvec{X}^{\prime }\varvec{\Phi }^{-1}\varvec{X}\right) ^{-1}\varvec{X}^{\prime }\varvec{\Phi }^{-1}\). Then

This shows that \(\hat{\varvec{\beta }}_{Adj}\) is a linear transformation of \(\varvec{Y}\). Thus, conditional on the covariates \(\varvec{X}\), the sampling distribution of \(\hat{\varvec{\beta }}_{Adj}\) is Gaussian, with mean vector and covariance matrix

and

respectively. In the derivation of \(E\left[ \hat{\varvec{\beta }}_{Adj}|\varvec{X}\right] \), the third equality follows from the identity \(\varvec{X}^{\prime }\varvec{\Phi }^{-1}= \frac{1}{\sigma ^2_{\varepsilon }}\varvec{X}^{\prime }\) from Theorem 1, and the fourth equality follows from \(\varvec{X}^{\prime }(\varvec{I}-\varvec{P}_{\varvec{X}})=\varvec{0}\). In the derivation of \(Var\left[ \hat{\varvec{\beta }}_{Adj}|\varvec{X}\right] \), the last equality follows from \(\varvec{A}=\left( \varvec{X}^{\prime }\varvec{\Phi }^{-1}\varvec{X}\right) ^{-1}\varvec{X}^{\prime } \varvec{\Phi }^{-1}=(\varvec{X}^{\prime }\varvec{X})^{-1}\varvec{X}^{\prime }\) and \(\varvec{A}\left( \varvec{I}-\varvec{P}_{\varvec{X}}\right) =(\varvec{X}^{\prime }\varvec{X})^{-1} \varvec{X}^{\prime }\left( \varvec{I}-\varvec{P}_{\varvec{X}}\right) =\varvec{0}\). This completes the proof.
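A minimal NumPy sketch of the two identities used in the last step, \(\varvec{A}=(\varvec{X}^{\prime }\varvec{X})^{-1}\varvec{X}^{\prime }\) and \(\varvec{A}(\varvec{I}-\varvec{P}_{\varvec{X}})=\varvec{0}\). The matrix `K` is a hypothetical positive semi-definite stand-in for \(\sigma ^2_w\varvec{R}_{\varvec{W}}\), and the sandwich \(\varvec{A}\varvec{\Phi }\varvec{A}^{\prime }\) is used only as an example of how every term pre- and post-multiplied by \(\varvec{I}-\varvec{P}_{\varvec{X}}\) drops out, leaving \(\sigma ^2_{\varepsilon }(\varvec{X}^{\prime }\varvec{X})^{-1}\).

```python
import numpy as np

rng = np.random.default_rng(2)
n, p = 30, 3
X = np.column_stack([np.ones(n), rng.standard_normal((n, p - 1))])
sigma2_eps = 0.8

I = np.eye(n)
XtX_inv = np.linalg.inv(X.T @ X)
Q = I - X @ XtX_inv @ X.T                  # I - P_X

# Hypothetical positive semi-definite stand-in for sigma_w^2 R_W
L = rng.standard_normal((n, n))
K = L @ L.T / n
Phi = sigma2_eps * I + Q @ K @ Q.T
Phi_inv = np.linalg.inv(Phi)

# A = (X' Phi^{-1} X)^{-1} X' Phi^{-1}, as defined in the proof
A = np.linalg.solve(X.T @ Phi_inv @ X, X.T @ Phi_inv)

gap_ols = np.abs(A - XtX_inv @ X.T).max()                     # A = (X'X)^{-1} X'
gap_null = np.abs(A @ Q).max()                                # A (I - P_X) = 0
gap_var = np.abs(A @ Phi @ A.T - sigma2_eps * XtX_inv).max()  # Q-terms vanish in A Phi A'
print(gap_ols, gap_null, gap_var)
```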

Proof of Theorem 4

From Theorem 3 and (12), we have

and

Because \(\varvec{W}\) in the above equation is a random vector, the bias of \(\hat{\varvec{\beta }}_{Adj}\) is given by

where the fifth equality follows from (13). This completes the proof.

Proof of Corollary 1

Because \(Bias\left[ \hat{\varvec{\beta }}_{Adj}|\varvec{X}\right] =\rho \frac{\sigma _w}{\sigma _x}\left( \varvec{I}-\varvec{M}_{adj} \right) \left( \varvec{X}^{\prime }\varvec{X}\right) ^{-1}\varvec{X}^{\prime } \varvec{R}^{1/2}_{\varvec{W}}\varvec{R}^{-1/2}_{\varvec{x}}\varvec{x}_p\), the result for \(\rho =0\) is immediate. Moreover, if \(\varvec{R}_{\varvec{x}}=\varvec{R}_{\varvec{W}}\), then \(\varvec{R}^{1/2}_{\varvec{W}}\varvec{R}^{-1/2}_{\varvec{x}}=\varvec{I}\), and thus \(Bias\left[ \hat{\varvec{\beta }}_{Adj}|\varvec{X}\right] =\rho \frac{\sigma _w}{\sigma _x}\left( \varvec{I}-\varvec{M}_{adj}\right) \left( \varvec{X}^{\prime }\varvec{X}\right) ^{-1}\varvec{X}^{\prime }\varvec{x}_p\). Because \(\varvec{I}-\varvec{M}_{adj}=\hbox {diag}(1,\dots ,1,0)\) and \(\left( \varvec{X}^{\prime }\varvec{X}\right) ^{-1}\varvec{X}^{\prime }\varvec{x}_p=(0,\dots ,0,1)'\), their product is \(\varvec{0}\), so the bias vanishes. This completes the proof.
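The bias expression above can be evaluated numerically. The sketch takes \(\varvec{I}-\varvec{M}_{adj}=\hbox {diag}(1,\dots ,1,0)\) as stated in the proof and additionally assumes that \(\varvec{x}_p\) is the last column of \(\varvec{X}\); `adj_bias`, `sym_sqrt`, and `exp_corr` are hypothetical helper names, and the exponential correlations and parameter values are illustrative. With \(\varvec{R}_{\varvec{x}}=\varvec{R}_{\varvec{W}}\) the bias is zero up to rounding; with \(\varvec{R}_{\varvec{x}}\ne \varvec{R}_{\varvec{W}}\) its last entry is still exactly zero, but the other entries are generally not.

```python
import numpy as np

def sym_sqrt(R, inverse=False):
    """Symmetric square root (or inverse square root) of a positive-definite matrix."""
    vals, vecs = np.linalg.eigh(R)
    power = -0.5 if inverse else 0.5
    return (vecs * vals**power) @ vecs.T

def exp_corr(coords, phi):
    """Exponential correlation exp(-d / phi), i.e. Matern with nu = 1/2 (illustrative)."""
    d = np.linalg.norm(coords[:, None, :] - coords[None, :, :], axis=-1)
    return np.exp(-d / phi)

def adj_bias(X, R_W, R_x, rho, sigma_w, sigma_x):
    """Bias expression from the proof of Corollary 1.

    Assumes I - M_adj = diag(1, ..., 1, 0) and that x_p is the last column of X."""
    p = X.shape[1]
    I_minus_M = np.diag(np.r_[np.ones(p - 1), 0.0])
    x_p = X[:, -1]
    g = sym_sqrt(R_W) @ sym_sqrt(R_x, inverse=True) @ x_p
    return rho * sigma_w / sigma_x * I_minus_M @ np.linalg.solve(X.T @ X, X.T @ g)

rng = np.random.default_rng(3)
n = 30
coords = rng.uniform(size=(n, 2))
X = np.column_stack([np.ones(n), rng.standard_normal((n, 2))])
R_W = exp_corr(coords, 0.2)

bias_same = adj_bias(X, R_W, R_W, rho=0.6, sigma_w=1.5, sigma_x=1.0)
bias_diff = adj_bias(X, R_W, exp_corr(coords, 0.1), rho=0.6, sigma_w=1.5, sigma_x=1.0)
print(bias_same)   # zero up to rounding when R_x = R_W
print(bias_diff)   # last entry exactly zero, others generally nonzero
```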

Proof of Corollary 2

Since \(MSE\left[ \hat{\varvec{\beta }}_{Adj}|\varvec{X}\right] =tr\left\{ Bias \left[ \hat{\varvec{\beta }}_{Adj}|\varvec{X}\right] Bias^{'}\left[ \hat{\varvec{\beta }}_{Adj}|\varvec{X}\right] \right\} + tr\left\{ Var\left[ \hat{\varvec{\beta }}_{Adj}|\varvec{X}\right] \right\}, \) it follows from (18) and (19) that

where \(\varvec{B}=\varvec{R}^{1/2}_{\varvec{W}}\varvec{R}^{-1/2}_{\varvec{x}} \varvec{x}_p\varvec{x}'_p\varvec{R}^{-1/2'}_{\varvec{x}}\varvec{R}^{1/2'}_{\varvec{W}}.\) This completes the proof.
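To make the role of \(\varvec{B}\) concrete, the sketch below checks the algebraic identity \(tr\{Bias\,Bias'\}=\rho ^2\frac{\sigma ^2_w}{\sigma ^2_x}\,tr\{(\varvec{I}-\varvec{M}_{adj})(\varvec{X}^{\prime }\varvec{X})^{-1}\varvec{X}^{\prime }\varvec{B}\varvec{X}(\varvec{X}^{\prime }\varvec{X})^{-1}(\varvec{I}-\varvec{M}_{adj})'\}\) for the \(\varvec{B}\) defined above, under the same illustrative assumptions as for Corollary 1 (\(\varvec{x}_p\) taken as the last column of \(\varvec{X}\), \(\varvec{I}-\varvec{M}_{adj}=\hbox {diag}(1,\dots ,1,0)\), exponential correlations). The hypothetical helper `mse` encodes the decomposition \(MSE=tr\{Bias\,Bias'\}+tr\{Var\}\); the covariance passed to it in the test is a placeholder, not the expression in (19).

```python
import numpy as np

def sym_sqrt(R, inverse=False):
    """Symmetric square root (or inverse square root) of a positive-definite matrix."""
    vals, vecs = np.linalg.eigh(R)
    return (vecs * vals ** (-0.5 if inverse else 0.5)) @ vecs.T

def mse(bias_vec, cov):
    """MSE = tr{Bias Bias'} + tr{Var} for a given bias vector and covariance matrix."""
    return float(bias_vec @ bias_vec + np.trace(cov))

rng = np.random.default_rng(4)
n, p = 30, 3
coords = rng.uniform(size=(n, 2))
d = np.linalg.norm(coords[:, None, :] - coords[None, :, :], axis=-1)
R_W, R_x = np.exp(-d / 0.2), np.exp(-d / 0.1)     # illustrative correlations
X = np.column_stack([np.ones(n), rng.standard_normal((n, p - 1))])
x_p = X[:, -1]                                    # assumption: x_p is the last column of X
rho, sigma_w, sigma_x = 0.6, 1.5, 1.0

I_minus_M = np.diag(np.r_[np.ones(p - 1), 0.0])   # I - M_adj as in the proof of Corollary 1
H = np.linalg.solve(X.T @ X, X.T)                 # (X'X)^{-1} X'
g = sym_sqrt(R_W) @ sym_sqrt(R_x, inverse=True) @ x_p

bias = rho * sigma_w / sigma_x * I_minus_M @ H @ g
B = np.outer(g, g)                                # R_W^{1/2} R_x^{-1/2} x_p x_p' R_x^{-1/2'} R_W^{1/2'}
bias_term = (rho * sigma_w / sigma_x) ** 2 * np.trace(I_minus_M @ H @ B @ H.T @ I_minus_M.T)

print(np.trace(np.outer(bias, bias)), bias_term)  # the two values agree
```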

Proof of \((\varvec{X}^{\prime }\varvec{\Sigma }^{-1}_{\varvec{Y}}\varvec{X})^{-1}=\sigma ^2_{\varepsilon } \left( \varvec{X}^{\prime }\varvec{X}\right) ^{-1}+\sigma ^2_w\left( \varvec{X}^{\prime }\varvec{X}\right) ^{-1} \varvec{X}^{\prime }\varvec{R}_{\varvec{W}}\varvec{X}\left( \varvec{X}^{\prime }\varvec{X}\right) ^{-1}\)

Let \(\varvec{A}=\sigma ^2_{\varepsilon }\varvec{I}\), \(\varvec{U}=\varvec{V}=\varvec{I}\), and \(\varvec{C}=\sigma ^2_w\varvec{R}_{\varvec{W}}\); then \(\varvec{\Sigma }_{\varvec{Y}}=\varvec{A}+\varvec{U}\varvec{C}\varvec{V}\). Applying the Sherman–Morrison–Woodbury formula (see, e.g., Harville 1997), we obtain

Similarly, let \(\varvec{A}^*= \frac{1}{\sigma ^2_{\varepsilon }}\varvec{X}^{\prime }\varvec{X}\), \(\varvec{U}^*=- \frac{1}{\sigma ^4_{\varepsilon }}\varvec{X}^{\prime }\), \(\varvec{C}^*=\left[ \left( \sigma ^2_w\varvec{R}_{\varvec{W}}\right) ^{-1} +\left( \sigma ^2_{\varepsilon }\varvec{I}\right) ^{-1}\right] ^{-1}\), and \(\varvec{V}^*=\varvec{X}\); then \(\varvec{X}^{\prime }\varvec{\Sigma }_{\varvec{Y}}^{-1}\varvec{X}=\varvec{A}^*+\varvec{U}^*\varvec{C}^*\varvec{V}^*\). Applying the Sherman–Morrison–Woodbury formula again, we obtain

This completes the proof.
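The two Sherman–Morrison–Woodbury steps used above can be checked numerically: the expression \(\varvec{\Sigma }^{-1}_{\varvec{Y}}=\sigma ^{-2}_{\varepsilon }\varvec{I}-\sigma ^{-4}_{\varepsilon }\varvec{C}^*\) from the first application, and the representation \(\varvec{X}^{\prime }\varvec{\Sigma }^{-1}_{\varvec{Y}}\varvec{X}=\varvec{A}^*+\varvec{U}^*\varvec{C}^*\varvec{V}^*\) used in the second. The sites, the exponential correlation used for \(\varvec{R}_{\varvec{W}}\), and the variance parameters in this NumPy sketch are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(5)
n, p = 25, 3
coords = rng.uniform(size=(n, 2))
d = np.linalg.norm(coords[:, None, :] - coords[None, :, :], axis=-1)
R_W = np.exp(-d / 0.2)                      # illustrative exponential correlation
X = np.column_stack([np.ones(n), rng.standard_normal((n, p - 1))])
s2_eps, s2_w = 0.7, 1.3
I = np.eye(n)

Sigma_Y = s2_eps * I + s2_w * R_W
Sigma_inv = np.linalg.inv(Sigma_Y)

# First application (A = s2_eps I, U = V = I, C = s2_w R_W):
# Sigma_Y^{-1} = I / s2_eps - C_star / s2_eps^2,
# with C_star = [(s2_w R_W)^{-1} + (s2_eps I)^{-1}]^{-1}
C_star = np.linalg.inv(np.linalg.inv(s2_w * R_W) + I / s2_eps)
Sigma_inv_smw = I / s2_eps - C_star / s2_eps**2

# Representation used in the second application: X' Sigma_Y^{-1} X = A* + U* C* V*
A_star = X.T @ X / s2_eps
U_star = -X.T / s2_eps**2
V_star = X
lhs = X.T @ Sigma_inv @ X
rhs = A_star + U_star @ C_star @ V_star

print(np.abs(Sigma_inv - Sigma_inv_smw).max(), np.abs(lhs - rhs).max())
```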

Cite this article

Chiou, YH., Yang, HD. & Chen, CS. An adjusted parameter estimation for spatial regression with spatial confounding. Stoch Environ Res Risk Assess 33, 1535–1551 (2019). https://doi.org/10.1007/s00477-019-01716-9

DOI: https://doi.org/10.1007/s00477-019-01716-9