Abstract

The theory of intrinsic volumes of convex cones has recently found striking applications in areas such as convex optimization and compressive sensing. This article provides a self-contained account of the combinatorial theory of intrinsic volumes for polyhedral cones. Direct derivations of the general Steiner formula, the conic analogues of the Brianchon–Gram–Euler and the Gauss–Bonnet relations, and the principal kinematic formula are given. In addition, a connection between the characteristic polynomial of a hyperplane arrangement and the intrinsic volumes of the regions of the arrangement, due to Klivans and Swartz, is generalized and some applications are presented.

1 Introduction

The theory of conic intrinsic volumes (or solid/internal/external/Grassmann angles) has a rich and varied history, with origins dating back at least to the work of Sommerville [32]. This theory has recently found renewed interest, owing to newly found connections with measure concentration and resulting applications in compressive sensing, optimization, and related fields [3, 5, 11, 14, 24]. Despite this recent surge in interest, the theory remains somewhat inaccessible to a general public in applied areas; this is, in part, due to the fact that many of the results are found using varying terminology (cf. Sect. 2.3), or are available as special cases of a more sophisticated theory of spherical integral geometry [13, 30, 34] that treats the subject in a level of generality (involving curvature/support measures or relying on differential geometry) that is usually more than what is needed from the point of view of the above-mentioned applications. In addition, some results, such as the relation to the theory of hyperplane arrangements, have so far not been connected to the existing body of research.

One aim of this article is therefore to provide the practitioner with a self-contained account of the basic theory of intrinsic volumes of polyhedral cones that requires little more background than some elementary polyhedral geometry and properties of the Gaussian distribution. While some of the material is classic (see, for example, [25]), we blend into the presentation a generalization of a formula of Klivans and Swartz [22], with a streamlined proof and some applications.

The focus of this text is on simplicity rather than generality, on finding the most natural relations between different results that may be derived in different orders from each other, and on highlighting parallels between different results. Despite this, the text does contain some generalizations of known results, provided these can be derived with little additional effort. In the interest of brevity, this article does not discuss the probabilistic properties of intrinsic volumes, such as their moments and concentration properties, nor does it go into related geometric problems such as random projections of polytopes [1, 35].

Section 2 is devoted to some preliminaries from the theory of polyhedral cones including a discussion of conic intrinsic volumes, a section devoted to clarifying the connections between different notation and terminology used in the literature, and a section introducing some concepts and techniques from the theory of partially ordered sets. In Sect. 3 we present a modern interpretation of the conic Steiner formula that underlies the recent developments in [5, 14, 24], and in Sect. 4, which is based on the influential work of McMullen [25], we derive and discuss the Gauss–Bonnet relation for intrinsic volumes. Section 5 contains a crisp proof of the Principal Kinematic Formula for polyhedral cones, and Sect. 6 is devoted to a generalization of a result by Klivans and Swartz [22] and some applications thereof.

1.1 Notation and Conventions

Throughout, we use boldface letters for vectors and linear transformations. To lighten the notation we denote the set consisting solely of the zero vector by \(\mathbf {0}\). We use calligraphic letters for families of sets. We use the notation \(\subseteq \) for set inclusion and \(\subset \) for strict inclusion.

2 Preliminaries

General references for basic facts about convex cones that are stated here are, for example, [9, 28, 38]. More precise references will be given when necessary. A convex cone \(C\subseteq \mathbbm {R}^d\) is a convex set such that \(\lambda C=C\) for all \(\lambda >0\). A convex cone is polyhedral if it is a finite intersection of closed half-spaces. In particular, linear subspaces are polyhedral, and polyhedral cones are closed. In what follows, unless otherwise stated, all cones are assumed to be polyhedral and non-empty. A supporting hyperplane of a convex cone C is a linear hyperplane H such that C lies entirely in one of the closed half-spaces induced by H (unless explicitly stated otherwise, all hyperplanes will be linear, i.e., linear subspaces of codimension one). A proper face of C is a set of the form \(F=C\cap H\), where H is a supporting hyperplane. A set F is called a face of C if it is either a proper face or C itself. The linear span \({\text {lin}}(C)\) of a cone C is the smallest linear subspace containing C and is given by \({\text {lin}}(C)=C+(-C)\), where \(A+B=\{\varvec{x}+\varvec{y}\mathrel {\mathop {:}}\varvec{x}\in A, \varvec{y}\in B\}\) denotes the Minkowski sum of two sets A and B. The dimension of a face F is \(\dim F:=\dim {\text {lin}}(F)\), and the relative interior \({\text {relint}}(F)\) is the interior of F in \({\text {lin}}(F)\). A cone is pointed if the origin \(\mathbf {0}\) is a zero-dimensional face, or equivalently, if it does not contain a linear subspace of dimension greater than zero. If C is not pointed, then it contains a nontrivial linear subspace of maximal dimension \(k>0\), given by \(L=C\cap (-C)\), and L is contained in every supporting hyperplane (and thus, in every face) of C. Denoting by C / L the orthogonal projection of C on the orthogonal complement of L, the projection C / L is pointed, and \(C=L+C/L\) is an orthogonal decomposition of C; we call this the canonical decomposition of C.

We denote by \(\mathcal {F}(C)\) the set of faces, \(\mathcal {F}_k(C)\) the set of k-dimensional faces, and let \(f_k(C)=|\mathcal {F}_k(C)|\) denote the number of k-faces of C. The tuple \(\varvec{f}(C)=(f_0(C),\dots ,f_d(C))\) is called the f-vector of C. Note that if \(C=L+C/L\) is the canonical decomposition, then \(\varvec{f}(C)\) is a shifted version of \(\varvec{f}(C/L)\). The most fundamental property of the f-vector is the Euler relation.

Theorem 2.1

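(Euler) Let \(C\subseteq \mathbbm {R}^d\) be a polyhedral cone. Then

$$\begin{aligned} \sum _{i=0}^d (-1)^i f_i(C) = {\left\{ \begin{array}{ll} (-1)^{\dim C} &{} \text {if } C \text { is a linear subspace,}\\ 0 &{} \text {otherwise.} \end{array}\right. } \end{aligned}$$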

This relation is usually stated and proved in terms of polytopes [38, Chap. 8], but intersecting a pointed cone with a suitable affine hyperplane yields a polytope with a face structure equivalent to that of the cone; the general case can be reduced to the pointed case through the canonical decomposition. A short proof of the Euler relation along with remarks on the history of this result can be found in [23].
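As a quick sanity check of the Euler relation, the following sketch evaluates the alternating sum of the f-vector for two simple cones. It assumes the form of the relation in which the sum is \(0\) for a cone that is not a linear subspace and \((-1)^{\dim C}\) for a linear subspace, and it uses the fact that the non-negative orthant in \(\mathbbm {R}^d\) has \(\binom{d}{k}\) faces of dimension k. The function names are illustrative only.

```python
from math import comb


def euler_sum(f_vector):
    """Alternating sum  sum_i (-1)^i f_i  of an f-vector (f_0, ..., f_d)."""
    return sum((-1) ** i * f for i, f in enumerate(f_vector))


def orthant_f_vector(d):
    # A face of the non-negative orthant is obtained by forcing a subset of
    # the coordinates to vanish, so there are C(d, k) faces of dimension k.
    return [comb(d, k) for k in range(d + 1)]


def subspace_f_vector(d, k):
    # A k-dimensional linear subspace of R^d has itself as its only face.
    return [1 if i == k else 0 for i in range(d + 1)]


for d in range(1, 6):
    # Pointed cone that is not a subspace: the alternating sum vanishes.
    print(d, euler_sum(orthant_f_vector(d)))

# Linear subspace of dimension 3 in R^4: the alternating sum is (-1)^3.
print(euler_sum(subspace_f_vector(4, 3)))
```

For the orthant the check is just the binomial identity \(\sum _k(-1)^k\binom{d}{k}=0\) for \(d\ge 1\).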

2.1 Duality

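The polar cone of a cone \(C\subseteq \mathbbm {R}^d\) is defined as

$$\begin{aligned} C^{\circ } = \{\varvec{y}\in \mathbbm {R}^d\mathrel {\mathop {:}}\langle \varvec{x},\varvec{y}\rangle \le 0 \text { for all } \varvec{x}\in C\}. \end{aligned}$$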

If \(C=L\) is a linear subspace, then \(C^{\circ }=L^{\perp }\) is just the orthogonal complement, and the polar cone of the polar cone is again the original cone, as will be shown below. To any face \(F\in \mathcal {F}_k(C)\) we can associate the normal face \(N_FC\in \mathcal {F}_{d-k}(C^{\circ })\) defined as \(N_FC=C^\circ \cap {\text {lin}}(F)^\bot \). To ease notation we will sometimes use \(F^{\diamond }=N_FC\) when the cone is clear. The resulting map \(\mathcal {F}_k(C)\rightarrow \mathcal {F}_{d-k}(C^\circ )\) is a bijection, which satisfies \(N_{F^\diamond }(C^\circ )=F\). This relation is easily deduced from the mentioned involution property of the polarity map, cf. Proposition 2.3 below. The polar operation is order reversing, \(C\subseteq D\) implies \(C^\circ \supseteq D^\circ \), as follows directly from the definition; more properties will be presented below.

Central to convex geometry and optimization are a variety of theorems of the alternative, the most prominent of which is known as Farkas’ Lemma (among the countless references, see for example [38, Chap. 2]). All versions of Farkas’ Lemma follow from a special case of the Hahn–Banach theorem, the separating hyperplane theorem. In what follows we need a conic version of this result.

Theorem 2.2

(Separating hyperplane for cones) Let \(C,D\subset \mathbbm {R}^d\) be non-empty, closed convex cones. Then \({\text {relint}}(C)\cap {\text {relint}}(D)=\emptyset \) if and only if there exists a linear hyperplane H, not containing both C and D, such that \(C\subseteq H_+\) and \(D\subseteq H_-\), where \(H_+,H_-\) denote the closed half-spaces defined by H.

This theorem is usually stated for closed convex sets and affine hyperplanes H (see, e.g., [28, Thm. 11.3]). Theorem 2.2 then follows from this more general version by noting that the relative interior of any non-empty, closed convex cone contains points arbitrarily close to \(\mathbf {0}\), which implies \(\mathbf {0}\in H\).

The separating hyperplane theorem can be used to derive some interesting results involving the polar cone. The first such result states that polarity is an involution on the set of closed convex cones. We write \(C^{\circ \circ }:=(C^{\circ })^{\circ }\) for the polar of the polar.

Proposition 2.3

Let C be a non-empty, closed convex cone. Then \(C^{\circ \circ } = C\).

Proof

Let \(\varvec{x}\in C\). Then, by definition of the polar, for all \(\varvec{y}\in C^{\circ }\) we have \(\langle {\varvec{x}}, {\varvec{y}} \rangle \le 0\). This, in turn, implies that \(\varvec{x}\in C^{\circ \circ }\). Now let \(\varvec{x}\in C^{\circ \circ }\) and assume that \(\varvec{x}\not \in C\). In particular, \(\varvec{x}\ne \mathbf {0}\), and by closedness of C there exists \(\varepsilon >0\) such that the \(\varepsilon \)-cone around \(\varvec{x}\), \(B_\varepsilon :=\{\varvec{y}\mathrel {\mathop {:}}\langle \varvec{x},\varvec{y}\rangle \ge (1-\varepsilon )\Vert \varvec{x}\Vert \Vert \varvec{y}\Vert \}\), satisfies \({\text {relint}}(C)\cap {\text {relint}}(B_\varepsilon )=\emptyset \). By Theorem 2.2, there exists a hyperplane separating C and \(B_\varepsilon \), and thus a non-zero \(\varvec{h}\in \mathbbm {R}^d\) such that

The first condition implies \(\varvec{h}\not \in C^{\circ }\), while the second one implies \(\varvec{h}\in C^{\circ }\). It follows that \(\varvec{x}\in C\). \(\square \)

The following variation of Farkas’ Lemma for convex cones, which is slightly more general than the usual one, is taken from [4].

Lemma 2.4

(Farkas) Let C, D be closed convex cones. Then

In particular, if \(D=L\) is a linear subspace, then

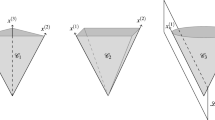

The situation in which \(D=L\) is a hyperplane is best visualised as in Fig. 1.

Proof

If \({\text {relint}}(C)\cap D=\emptyset \), then by Theorem 2.2 there exists a separating hyperplane \(H=\varvec{h}^\bot \), \(\varvec{h}\ne \mathbf {0}\), such that \(\langle \varvec{h},\varvec{x}\rangle \le 0\) for all \(\varvec{x}\in C\) and \(\langle \varvec{h},\varvec{y}\rangle \ge 0\) for all \(\varvec{y}\in D\). But this means \(\varvec{h}\in C^\circ \cap (-D^\circ )\). On the other hand, if \(\varvec{x}\in {\text {relint}}(C)\cap D\) then only in the case \(C=\mathbbm {R}^d\), for which the claim is trivial, can \(\varvec{x}=\mathbf {0}\) hold. If \(\varvec{x}\ne \mathbf {0}\), then \(C^\circ {\setminus }\mathbf {0}\) lies in the open half-space \(\{\varvec{h}\mathrel {\mathop {:}}\langle \varvec{h},\varvec{x}\rangle <0\}\) and \(-D^\circ \) lies in the closed half-space \(\{\varvec{h}\mathrel {\mathop {:}}\langle \varvec{h},\varvec{x}\rangle \ge 0\}\), and thus \(C^\circ \cap (-D^\circ )=\mathbf {0}\). The case \(D=L\) follows immediately. \(\square \)

In view of some of the later developments, it is important to understand the behaviour of duality under intersections. The following is a conic variant of [28, Cor. 23.8.1] (see also [38, Chap. 7] for a similar theme).

Proposition 2.5

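For cones \(C,D\subseteq \mathbbm {R}^d\), the polar of the intersection is the Minkowski sum of the polars,

$$\begin{aligned} (C\cap D)^{\circ } = C^{\circ }+D^{\circ }. \end{aligned}$$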

Moreover, every face of \(C\cap D\) is of the form \(F\cap G\) for some \(F\in \mathcal {F}(C),G\in \mathcal {F}(D)\), and the polar face satisfies

If additionally \({\text {relint}}(F)\cap {\text {relint}}(G)\ne \emptyset \), then (2.3) holds with equality.

Proof

For the first claim, note that

where in the first equality we used Proposition 2.3; the third equality is easily verified by noting that \(\langle {\varvec{z}}, {\varvec{x}+\varvec{0}} \rangle =\langle {\varvec{z}}, {\varvec{x}} \rangle \) and \(\langle {\varvec{z}}, {\varvec{0}+\varvec{y}} \rangle =\langle {\varvec{z}}, {\varvec{y}} \rangle \). The first claim then follows by polarity and another application of Proposition 2.3.

For the second claim, note that a face \(\bar{F}\in \mathcal {F}(C\cap D)\) can be written as \(\bar{F} = \{\varvec{x}\in C\cap D\mathrel {\mathop {:}}\langle \varvec{x},\varvec{h}\rangle =0\}\) for some \(\varvec{h}\in (C\cap D)^\circ \). By the first claim, we can write the normal vector in the form \(\varvec{h}=\varvec{h}_C+\varvec{h}_D\) with \(\varvec{h}_C\in C^\circ \) and \(\varvec{h}_D\in D^\circ \). Denoting \(F:=\{\varvec{x}\in C\mathrel {\mathop {:}}\langle \varvec{x},\varvec{h}_C\rangle =0\}\in \mathcal {F}(C)\), \(G:=\{\varvec{y}\in D\mathrel {\mathop {:}}\langle \varvec{y},\varvec{h}_D\rangle =0\}\in \mathcal {F}(D)\), we obtain

where the second equality follows from the fact that \(\langle \varvec{x},\varvec{h}_C\rangle \le 0\) and \(\langle \varvec{x},\varvec{h}_D\rangle \le 0\) if \(\varvec{x}\in C\cap D\).

Finally, for the claim about the polar face, note that, by what we have just shown and using double polarity,

so that

The claim (2.3) follows by invoking polarity again.

To show that the inclusion in the above display is an equality if \({\text {relint}}(F)\cap {\text {relint}}(G)\ne \emptyset \), note first that if \(\varvec{x}\in {\text {relint}}(F)\), then for every \(\varvec{y}\in C+(-F)\) we have \(\varvec{y}+\lambda \varvec{x}\in C\) for \(\lambda >0\) large enough. Indeed, if \(\varvec{y}=\varvec{y}_C-\varvec{y}_F\) with \(\varvec{y}_C\in C\), \(\varvec{y}_F\in F\), then \(\varvec{y}+\lambda \varvec{x} = \varvec{y}_C+\lambda (\varvec{x}-\frac{1}{\lambda }\varvec{y}_F)\), and \(\varvec{x}-\frac{1}{\lambda }\varvec{y}_F\in F\) for \(\lambda >0\) large enough. Now, if \(\varvec{x}\in {\text {relint}}(F)\cap {\text {relint}}(G)\) and \(\varvec{y}\in (C+(-F))\cap (D+(-G))\), then for \(\lambda >0\) large enough, \(\varvec{y}+\lambda \varvec{x}\in C\cap D\). Hence,

which shows that (2.4), and thus (2.3), hold with equality. \(\square \)

Two faces \(F\in \mathcal {F}(C)\) and \(G\in \mathcal {F}(D)\) are said to intersect transversely, written \(F\pitchfork G\), if their relative interiors have a non-empty intersection, \({\text {relint}}(F)\cap {\text {relint}}(G)\ne \emptyset \), and \(\dim F\cap G=\dim F+\dim G-d\).

Corollary 2.6

Let C, D be cones and \(F\in \mathcal {F}(C)\), \(G\in \mathcal {F}(D)\) be faces that intersect transversely. Then \(N_FC+N_GD=N_{F\cap G}(C\cap D)\), and is a face of \(C^{\circ }+D^{\circ }\) of dimension \((d-\dim F)+(d-\dim G)\).

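For a polyhedral cone \(C\subseteq \mathbbm {R}^d\), denote by \(\varvec{\Pi }_C\) the Euclidean projection,

$$\begin{aligned} \varvec{\Pi }_C(\varvec{x}) = {\text {arg\,min}}\{\Vert \varvec{x}-\varvec{y}\Vert \mathrel {\mathop {:}}\varvec{y}\in C\}. \end{aligned}$$

The Moreau decomposition of a point \(\varvec{x}\in \mathbbm {R}^d\) is the sum representation

$$\begin{aligned} \varvec{x} = \varvec{\Pi }_C(\varvec{x})+\varvec{\Pi }_{C^{\circ }}(\varvec{x}), \end{aligned}$$

where \(\varvec{\Pi }_C(\varvec{x})\) and \(\varvec{\Pi }_{C^{\circ }}(\varvec{x})\) are orthogonal. A direct consequence is the disjoint decomposition

$$\begin{aligned} \mathbbm {R}^d = \bigsqcup _{F\in \mathcal {F}(C)} \big ({\text {relint}}(F)+N_FC\big ), \end{aligned}$$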

see also [25, Lem. 3].
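For the non-negative orthant the Moreau decomposition can be made completely explicit, which the following sketch illustrates. It assumes that the projection onto the orthant clips negative coordinates to zero and that the polar cone is the non-positive orthant; the helper names are hypothetical.

```python
import random


def project_orthant(x):
    """Euclidean projection onto the non-negative orthant (clip negatives)."""
    return [max(t, 0.0) for t in x]


def project_polar_orthant(x):
    """Euclidean projection onto the polar cone, the non-positive orthant."""
    return [min(t, 0.0) for t in x]


def moreau_check(x):
    """Return (reconstruction error, inner product, face dimension) for x."""
    p = project_orthant(x)
    q = project_polar_orthant(x)
    # x should equal p + q coordinate by coordinate.
    residual = max(abs(a - (b + c)) for a, b, c in zip(x, p, q))
    # The two projections should be orthogonal.
    inner = sum(b * c for b, c in zip(p, q))
    # p lies in the relative interior of the face spanned by the coordinates
    # in which x is positive; its dimension is the number of such coordinates.
    face_dim = sum(1 for b in p if b > 0)
    return residual, inner, face_dim


rng = random.Random(0)
x = [rng.gauss(0.0, 1.0) for _ in range(5)]
print(moreau_check(x))
```

The third return value records which term of the disjoint decomposition the point falls into: the face containing the projection in its relative interior.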

2.2 Intrinsic Volumes

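For \(C\subseteq \mathbbm {R}^d\) a polyhedral cone and for two faces \(F,G\in \mathcal {F}(C)\), define

$$\begin{aligned} v_F(G) = \mathbbm {P}\{\varvec{\Pi }_G(\varvec{g})\in {\text {relint}}(F)\}, \end{aligned}$$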

where \(\varvec{g}\sim \mathcal {N}(\mathbbm {R}^d)\) is a standard Gaussian vector in \(\mathbbm {R}^d\). If \(F\subseteq G\), it follows from (2.7) that

On the other hand, since the relative interiors of faces of C are disjoint, we have \(v_F(G)=0\) if \(F\not \subseteq G\). For the most part we will consider the case \(G=C\). Define the k-th intrinsic volume of C, \(0\le k\le d\), to be

$$\begin{aligned} v_k(C) = \sum _{F\in \mathcal {F}_k(C)} v_F(C). \end{aligned}$$

For a fixed cone, the intrinsic volumes form a probability distribution on \(\{0,1,\ldots ,d\}\). Note that if \(F\in \mathcal {F}_k(C)\) then, by the decomposition (2.6),

For later reference, we note that in combination with Corollary 2.6, we get for cones C, D and faces \(F\in \mathcal {F}_k(C)\), \(G\in \mathcal {F}_{\ell }(D)\) that intersect transversely, with \(j=k+\ell -d\),

Example 2.7

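Let \(C=L\subseteq \mathbbm {R}^d\) be a linear subspace of dimension i. Then

$$\begin{aligned} v_k(C) = {\left\{ \begin{array}{ll} 1 &{} \text {if } k=i,\\ 0 &{} \text {otherwise.} \end{array}\right. } \end{aligned}$$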

Example 2.8

Let \(C=\mathbbm {R}^d_{\ge 0}\) be the non-negative orthant, i.e., the cone consisting of points with non-negative coordinates. A vector \(\varvec{x}\) projects orthogonally to the relative interior of a k-dimensional face of C if and only if exactly k of its coordinates are positive. By symmetry considerations and the invariance of the Gaussian distribution under permutations of the coordinates, it follows that

$$\begin{aligned} v_k(\mathbbm {R}^d_{\ge 0}) = \left( {\begin{array}{c}d\\ k\end{array}}\right) 2^{-d}. \end{aligned}$$

The following important properties of the intrinsic volumes, which are easily verified in the setting of polyhedral cones, will be used frequently:

(a) Orthogonal invariance. For an orthogonal transformation \(\varvec{Q}\in O(d)\),

$$\begin{aligned} v_k(\varvec{Q}C) = v_k(C); \end{aligned}$$

(b) Polarity.

$$\begin{aligned} v_k(C) = v_{d-k}(C^{\circ }); \end{aligned}$$

(c) Product rule.

$$\begin{aligned} v_k(C\times D) = \sum _{i+j=k} v_i(C)v_j(D). \end{aligned}$$(2.9)

Note that the product rule implies \(v_i(C\times L)=v_{i-k}(C)\) if \(i\ge k\) and L is a subspace of dimension k. We will sometimes be working with the intrinsic volume generating polynomial,

The product rule then states that the generating polynomial is multiplicative with respect to direct products. A direct consequence of the orthogonal invariance and the polarity rule is that the intrinsic volume sequence is symmetric for self-dual cones (i.e., cones such that \(C=-C^{\circ }\)).
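The product rule (2.9) is a discrete convolution of the two intrinsic volume sequences, which is easy to carry out numerically. The sketch below builds the non-negative orthant as a d-fold product of the half-line \(\mathbbm {R}_{\ge 0}\), whose intrinsic volumes are \(v_0=v_1=1/2\), and compares the result with the binomial weights \(\binom{d}{k}2^{-d}\). The function name `product_rule` is illustrative.

```python
from math import comb


def product_rule(v_c, v_d):
    """Intrinsic volumes of C x D from those of C and D (discrete convolution)."""
    out = [0.0] * (len(v_c) + len(v_d) - 1)
    for i, a in enumerate(v_c):
        for j, b in enumerate(v_d):
            out[i + j] += a * b
    return out


half_line = [0.5, 0.5]  # (v_0, v_1) of the ray R_{>=0} in R^1
d = 4
v = [1.0]               # intrinsic volumes of the trivial cone {0} in R^0
for _ in range(d):
    v = product_rule(v, half_line)

print(v)                                            # orthant in R^4
print([comb(d, k) / 2 ** d for k in range(d + 1)])  # binomial weights

# Taking the product with a line (v_0, v_1) = (0, 1) shifts the sequence by one.
print(product_rule(half_line, [0.0, 1.0]))
```

In terms of the generating polynomial, each convolution step is a multiplication by \((1+t)/2\).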

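An important summary parameter is the expected value of the distribution associated to the intrinsic volumes, the statistical dimension, which coincides with the expected squared norm of the projection of a Gaussian vector on the cone,

$$\begin{aligned} \delta (C) = \sum _{k=0}^d k\, v_k(C) = {\mathbb {E}}\big [\Vert \varvec{\Pi }_C(\varvec{g})\Vert ^2\big ]. \end{aligned}$$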

The statistical dimension reduces to the usual dimension for linear subspaces. The coincidence of the two expected values is a special case of the generalized Steiner formula 3.1, and is crucial in applications of the statistical dimension. More on the statistical dimension and its properties and applications can be found in [5, 14, 24].
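The coincidence of the two expected values can be checked numerically for the non-negative orthant. The sketch below assumes the binomial intrinsic volumes \(v_k=\binom{d}{k}2^{-d}\) of the orthant and the coordinatewise clipping formula for the projection; it compares \(\sum _k k\,v_k(C)\) with a Monte Carlo estimate of the expected squared norm of the projection. The sample size and seed are arbitrary choices.

```python
import random
from math import comb


def orthant_intrinsic_volumes(d):
    """Intrinsic volumes of the non-negative orthant in R^d (binomial weights)."""
    return [comb(d, k) / 2 ** d for k in range(d + 1)]


def statistical_dimension(v):
    """Expected value of the distribution given by the intrinsic volumes."""
    return sum(k * vk for k, vk in enumerate(v))


def mc_squared_projection_norm(d, samples, seed=0):
    """Monte Carlo estimate of E ||Pi_C(g)||^2 for the orthant C in R^d."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(samples):
        # Projecting onto the orthant clips negative coordinates to zero.
        total += sum(max(rng.gauss(0.0, 1.0), 0.0) ** 2 for _ in range(d))
    return total / samples


d = 6
print(statistical_dimension(orthant_intrinsic_volumes(d)))  # equals d / 2
print(mc_squared_projection_norm(d, samples=20000))         # close to d / 2
```

Both quantities equal \(d/2\) for the orthant, in line with its self-duality.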

2.3 Angles

In the classical works on polyhedral cones, intrinsic volumes were viewed as polytope angles, see [12] for some perspective. Polyhedral cones arise as tangent or normal cones of polyhedra \(K\subseteq \mathbbm {R}^d\). Given such a polyhedron K and a face \(F\subseteq K\), with \(\varvec{x}_0\in {\text {relint}}(F)\), the tangent cone \(T_FK\) is defined as

The normal cone to K at F is the polar of the tangent cone. To clarify the relations to the terminology used in this paper and to facilitate a translation of the results of some of the referenced papers, we provide the following list.

2.3.1 Solid Angle

When speaking about the solid angle of a cone \(C\subseteq \mathbbm {R}^d\), sometimes denoted \(\alpha (C)\), one usually assumes that C has non-empty interior, and one defines \(\alpha (C)\) as the Gaussian volume of C (or equivalently, the relative spherical volume of \(C\cap S^{d-1}\), where \(S^{d-1}\) is the \((d-1)\)-dimensional unit sphere); we extend this definition to also cover lower-dimensional cones, and define for \(\dim C=k\),

2.3.2 Internal/External Angle

The internal and external angle of a polyhedral set \(K\subseteq \mathbbm {R}^d\) at a face F are defined as the solid angle of the tangent and normal cone of K at F, respectively,

$$\begin{aligned} \beta (F,K) = \alpha (T_FK),\qquad \gamma (F,K) = \alpha (N_FK). \end{aligned}$$

Note that we have \(v_F(C) = \beta (\mathbf {0},F)\gamma (F,C)\). Furthermore, conic polarity swaps between internal and external angles:

where we use the notation \(F^\diamond :=N_FC\) for the face of \(C^\circ \), which is polar to the face F of C. This shows that any formula involving the internal and external angles of a cone C has a polar version in terms of the internal and external angles of \(C^\circ \) where the roles of internal and external have been exchanged. (Some of the formulas in [25] are stated in this polar version.)

Remark 2.9

The Brianchon–Gram–Euler relation [27, Thm. (1)] of a convex polytope K translates in the above notation as

Replacing the bounded polytope by an unbounded cone makes this relation invalid. However, there exists a closely related conic version, which is called Sommerville’s Theorem [27, Thm. (37)]. This in turn can be used to derive a Gauss–Bonnet relation, cf. Sect. 4.

2.3.3 Grassmann Angle

The Grassmann angles of a cone C, which have been introduced and analyzed by Grünbaum [15], are defined through the probability that a uniformly random linear subspace of a specific (co)dimension intersects the cone nontrivially. The kinematic/Crofton formulae express this probability in terms of the intrinsic volumes, cf. Sect. 5. More precisely, we have

where \(L_k\subseteq \mathbbm {R}^d\) denotes a uniformly random linear subspace of codimension k. Notice that when considering the intrinsic volumes and the Grassmann angles as vectors, \((v_0,v_1,\ldots ,v_d)\) and \((h_0,h_1,\ldots ,h_d)\), then these are related through a nonsingular linear transformation. Hence, any formula in the intrinsic volumes of a cone has an equivalent form in terms of Grassmann angles and vice versa; in this paper we prefer the intrinsic volume versions.

Remark 2.10

The preference of intrinsic volumes over Grassmann angles has an odd effect on the logic behind Corollary 4.3 below, which is attributed to Grünbaum. This result is originally stated and proved in [15, Thm. 2.8] in terms of the Grassmann angles. So in order to rewrite Corollary 4.3 in its original form, one needs to apply Crofton’s formula (2.10) whose proof, given in Sect. 5, uses Gauss–Bonnet (4.4), which in turn is a direct consequence of Corollary 4.3. The resulting proof of the original result [15, Thm. 2.8] (in terms of Grassmann angles) is thus much less direct than the original one given by Grünbaum.

2.4 Some Poset Techniques

In this section we recall some notions from the theory of partially ordered sets (posets) that we will need in Sect. 6. We only recall those properties that we will directly use, see [33, Chap. 3] for more details and context.

A lattice is a poset with the property that any two elements have both a least upper bound and a greatest lower bound. We will only consider finite lattices; in particular, for these lattices the greatest and the least elements \(\hat{1},\hat{0}\) both exist. More precisely, we will consider the following two (types of) finite lattices.

Example 2.11

(Face lattice) Let \(C\subseteq \mathbbm {R}^d\) be a polyhedral cone. Then the set of faces \(\mathcal {F}(C)\) with partial order given by inclusion is a finite lattice. The elements \(\hat{1},\hat{0}\) are given by \(\hat{1}=C\) and \(\hat{0}=C\cap (-C)\).

Example 2.12

(Intersection lattice of a hyperplane arrangement) Let \(\mathcal {A}=\{H_1,\ldots ,H_n\}\) be a set of (linear) hyperplanes \(H_i\subset \mathbbm {R}^d\), \(i=1,\ldots ,n\). The set of all intersections \(\mathcal {L}(\mathcal {A})=\{\bigcap _{i\in I} H_i\mathrel {\mathop {:}}I\subseteq \{1,\ldots ,n\}\}\), endowed with the partial order given by reverse inclusion, is called the intersection lattice of the hyperplane arrangement \(\mathcal {A}\). This lattice has a disjoint decomposition into \(\mathcal {L}_0(\mathcal {A}),\dots ,\mathcal {L}_d(\mathcal {A})\), where \(\mathcal {L}_j(\mathcal {A})=\{L\in \mathcal {L}(\mathcal {A})\mathrel {\mathop {:}}\dim L=j\}\). The minimal and maximal elements are given by \(\hat{0}=\mathbbm {R}^d\) and \(\hat{1}=\bigcap _{i=1}^n H_i\).

One can define the (real) incidence algebra of a (locally) finite poset \((P,\preceq )\) as the set of all functions \(\xi :P\times P\rightarrow \mathbbm {R}\), which besides having the usual vector space structure also possesses the multiplication

$$\begin{aligned} (\xi \nu )(x,y) = \sum _{x\preceq z\preceq y} \xi (x,z)\,\nu (z,y), \end{aligned}$$

defined for two functions \(\xi ,\nu :P\times P\rightarrow \mathbbm {R}\). The identity element in this algebra is the Kronecker delta, \(\delta (x,y)=1\) if \(x=y\) and \(\delta (x,y)=0\) else. Another important element is the characteristic function of the partial order, \(\zeta (x,y)=1\) if \(x\preceq y\) and \(\zeta (x,y)=0\) else. This function is invertible, and its inverse \(\mu \), called the Möbius function on P, can be recursively defined by \(\mu (x,y)=0\) if \(x\not \preceq y\), and

$$\begin{aligned} \mu (x,x) = 1,\qquad \mu (x,y) = -\sum _{x\preceq z\prec y} \mu (x,z)\quad \text {if } x\prec y. \end{aligned}$$

The incidence algebra acts on the set of functions \(f:P\rightarrow \mathbbm {R}\) on the right by

$$\begin{aligned} (f\xi )(y) = \sum _{x\preceq y} f(x)\,\xi (x,y). \end{aligned}$$

The Möbius inversion is the simple fact that for two functions \(f,g:P\rightarrow \mathbbm {R}\) one has \(f\zeta =g\) if and only if \(f=g\mu \). Explicitly, this equivalence can be written out as follows:

$$\begin{aligned} g(y) = \sum _{x\preceq y} f(x)\ \text { for all } y\in P \quad \Longleftrightarrow \quad f(y) = \sum _{x\preceq y} g(x)\,\mu (x,y)\ \text { for all } y\in P. \end{aligned}$$

The Möbius function of the face lattice from Example 2.11 is given by \(\mu (F,G)=(-1)^{\dim G-\dim F}\). For a whole range of techniques for computing Möbius functions we refer to [6, 33].
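The recursive definition of the Möbius function translates directly into a short program. The sketch below uses the standard recursion \(\mu (x,x)=1\), \(\mu (x,y)=-\sum _{x\preceq z\prec y}\mu (x,z)\), and applies it to the face lattice of the non-negative orthant in \(\mathbbm {R}^3\). We assume here that a face of the orthant is encoded by the set of coordinates that are allowed to be non-zero, so that inclusion of faces is inclusion of index sets and the dimension is the size of the set. The function name `mobius` is illustrative.

```python
from itertools import combinations


def mobius(elements, leq):
    """Moebius function of a finite poset, as a dict keyed by pairs (x, y)."""
    mu = {}

    def compute(x, y):
        if (x, y) in mu:
            return mu[(x, y)]
        if not leq(x, y):
            val = 0
        elif x == y:
            val = 1
        else:
            # Sum over all z with x <= z < y; these values are determined
            # recursively because each such z lies strictly below y.
            val = -sum(compute(x, z) for z in elements
                       if leq(x, z) and leq(z, y) and z != y)
        mu[(x, y)] = val
        return val

    for x in elements:
        for y in elements:
            compute(x, y)
    return mu


d = 3
# Faces of the orthant in R^3, encoded as sets of free coordinates.
faces = [frozenset(s) for k in range(d + 1) for s in combinations(range(d), k)]
mu = mobius(faces, lambda a, b: a <= b)

for F in faces:
    for G in faces:
        if F <= G:
            # Agrees with (-1)^(dim G - dim F).
            assert mu[(F, G)] == (-1) ** (len(G) - len(F))
print(mu[(frozenset(), frozenset(range(d)))])
```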

2.4.1 Some Elementary Facts About Hyperplane Arrangements

The last concept we need to introduce is that of a characteristic polynomial, which can be defined for any finite graded lattice; we only introduce the characteristic polynomial for hyperplane arrangements, as we will only use it in this context. We use the notation from Example 2.12. The characteristic polynomial of a hyperplane arrangement \(\mathcal {A}\) in \(\mathbbm {R}^d\) is defined as [33, Sect. 3.11.2]

$$\begin{aligned} \chi _\mathcal {A}(t) = \sum _{L\in \mathcal {L}(\mathcal {A})} \mu (\mathbbm {R}^d,L)\, t^{\dim L}. \end{aligned}$$

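More generally, we introduce the jth-level characteristic polynomial of \(\mathcal {A}\) as follows,

$$\begin{aligned} \chi _{\mathcal {A},j}(t) = \sum _{M\in \mathcal {L}_j(\mathcal {A})}\ \sum _{L\in \mathcal {L}(\mathcal {A}),\, L\subseteq M} \mu (M,L)\, t^{\dim L}, \end{aligned}$$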

so that \(\chi _\mathcal {A}=\chi _{\mathcal {A},d}\), and we also introduce the bivariate polynomial

The jth level characteristic polynomial can be written in terms of characteristic polynomials by considering restrictions of \(\mathcal {A}\): If \(L\subseteq \mathbbm {R}^d\) is a linear subspace, then the arrangement \(\mathcal {A}^L=\{H\cap L\mathrel {\mathop {:}}H\in \mathcal {A}, L\not \subseteq H\}\) is a hyperplane arrangement relative to L. It is easily seen that we obtain

The Möbius function of the intersection lattice alternates in sign [33, Prop. 3.10.1], and so do the coefficients of the (jth-level) characteristic polynomial. Note that \(\chi _{\mathcal {A},j}(t)\) (is either zero or) has degree j and the leading coefficient is given by \(|\mathcal {L}_j(\mathcal {A})|=:\ell _j(\mathcal {A})\). For future reference we also note that in the cases \(j=0,1\) we have

The complement of the hyperplanes of an arrangement \(\mathcal {A}\), \(\mathbbm {R}^d{\setminus } \bigcup _{H\in \mathcal {A}} H\), decomposes into open convex cones. We denote by \(\mathcal {R}(\mathcal {A})\) the set of polyhedral cones given by the closures of these regions, and we denote \(r(\mathcal {A}):=|\mathcal {R}(\mathcal {A})|\). More generally, we define

so that \(\mathcal {R}(\mathcal {A})=\mathcal {R}_d(\mathcal {A})\) and \(r(\mathcal {A})=r_d(\mathcal {A})\). The following theorem by Zaslavsky [37] lies at the heart of the result by Klivans and Swartz [22] that we will present in Sect. 6.

Theorem 2.13

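(Zaslavsky) Let \(\mathcal {A}\) be an arrangement of linear hyperplanes in \(\mathbbm {R}^d\). Then

$$\begin{aligned} r_j(\mathcal {A}) = (-1)^j\,\chi _{\mathcal {A},j}(-1),\qquad j=0,\ldots ,d. \end{aligned}$$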

Note that since the coefficients of the characteristic polynomial alternate in sign, the number of j-dimensional regions, \(r_j(\mathcal {A})\), is given by the sum of the absolute values of the coefficients of the jth-level characteristic polynomial.
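To make the region count concrete, the following sketch computes the characteristic polynomial of a small central arrangement from its intersection lattice and evaluates the count of full-dimensional regions. It assumes the standard definition \(\chi _\mathcal {A}(t)=\sum _{L}\mu (\mathbbm {R}^d,L)\,t^{\dim L}\) and Zaslavsky's count in the form \(r(\mathcal {A})=(-1)^d\chi _\mathcal {A}(-1)\). Hyperplanes are given by non-zero integer normal vectors, and each element of the intersection lattice is encoded by the set of hyperplanes containing it; all function names are hypothetical.

```python
from fractions import Fraction
from itertools import combinations


def rank(rows):
    """Rank of a list of integer vectors via exact Gaussian elimination."""
    m = [[Fraction(a) for a in r] for r in rows]
    rk = 0
    ncols = len(m[0]) if m else 0
    for col in range(ncols):
        pivot = next((i for i in range(rk, len(m)) if m[i][col] != 0), None)
        if pivot is None:
            continue
        m[rk], m[pivot] = m[pivot], m[rk]
        for i in range(rk + 1, len(m)):
            factor = m[i][col] / m[rk][col]
            m[i] = [a - factor * b for a, b in zip(m[i], m[rk])]
        rk += 1
    return rk


def flats(normals):
    """Map each intersection (set of hyperplanes containing it) to its dimension."""
    n, d = len(normals), len(normals[0])
    result = {}
    for k in range(n + 1):
        for subset in combinations(range(n), k):
            rows = [normals[i] for i in subset]
            r = rank(rows)
            # Hyperplane j contains the intersection iff its normal lies in
            # the span of the chosen normals, i.e. adding it keeps the rank.
            closure = frozenset(j for j in range(n)
                                if rank(rows + [normals[j]]) == r)
            result[closure] = d - r
    return result


def characteristic_polynomial(normals):
    """Coefficients c[k] of t^k in chi_A(t)."""
    fl = flats(normals)
    d = len(normals[0])
    order = sorted(fl, key=len)  # the empty set (the whole space) comes first
    mu = {}
    for L in order:
        # mu(R^d, L) = -sum of mu(R^d, M) over intersections M strictly containing L.
        mu[L] = 1 if not L else -sum(mu[M] for M in order if M < L)
    coeffs = [0] * (d + 1)
    for L, dim in fl.items():
        coeffs[dim] += mu[L]
    return coeffs


def count_regions(normals):
    """Number of full-dimensional regions, (-1)^d chi_A(-1)."""
    d = len(normals[0])
    coeffs = characteristic_polynomial(normals)
    return (-1) ** d * sum(c * (-1) ** k for k, c in enumerate(coeffs))


braid = [(1, -1, 0), (1, 0, -1), (0, 1, -1)]  # x1=x2, x1=x3, x2=x3 in R^3
print(characteristic_polynomial(braid))       # coefficients of t^0, ..., t^3
print(count_regions(braid))
```

For the braid arrangement in \(\mathbbm {R}^3\) the polynomial is \(t^3-3t^2+2t\); the sum of the absolute values of its coefficients is 6, the number of orderings of three coordinates.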

3 The Conic Steiner Formula

A classic result in integral geometry is the Steiner Formula: the d-dimensional measure of the \(\varepsilon \)-neighbourhood of a convex body \(K\subset \mathbbm {R}^d\) (compact, convex) can be expressed as a polynomial in \(\varepsilon \) of degree at most d, with the intrinsic volumes as coefficients:

$$\begin{aligned} {{\mathrm{vol}}}_d(K+\varepsilon B^d) = \sum _{i=0}^d \omega _{d-i}\, V_i(K)\, \varepsilon ^{d-i}, \end{aligned}$$(3.1)

where \(B^d\) denotes the unit ball, \(\omega _{d-i}={{\mathrm{vol}}}(B^{d-i})=\frac{\pi ^{(d-i)/2}}{\Gamma ((d-i)/2+1)}\), and the \(V_i(K)\) are the Euclidean intrinsic volumes (see, e.g., [21, Thm. 9.2.3]). For example, in the two-dimensional case, we have the situation of Fig. 2.

In order to state an analogous result for convex cones or spherically convex sets (intersections of convex cones with the unit sphere), we have to agree on a notion of distance. A natural choice here is the capped angle \(\sphericalangle (C,\varvec{x})=\arccos (\Vert \varvec{\Pi }_C(\varvec{x})\Vert /\Vert \varvec{x}\Vert )\). Note that with this definition, \(\sphericalangle (C,\varvec{x})\le \pi /2\), and is equal to \(\pi /2\) if and only if \(\varvec{x}\in C^{\circ }\). Note also that for \(\varvec{x}\) with \(\Vert {\varvec{x}}\Vert =1\) and \(\alpha \le \pi /2\), we have \(\sphericalangle (C,\varvec{x})\le \alpha \) if and only if \(\Vert {\Pi _C(\varvec{x})}\Vert ^2\ge \cos ^2\alpha \). Using this notion of distance, one obtains a formula similar to the Euclidean Steiner formula (3.1), which is usually called spherical/conic Steiner formula, see for example [34, Chap. 6.5] and the references given there, or the formula below.

It turns out that, when working with cones rather than spherically convex sets, it is convenient to work with the squared length of the projection on C rather than with the angle. Moreover, it turns out quite useful to also consider the squared length of the projection on the polar cone \(C^\circ \). The following general Steiner formula in the conic setting is due to McCoy and Tropp [24, Thm. 3.1]; its formulation in probabilistic terms, as suggested by Goldstein, Nourdin and Peccati [14], is remarkably elegant. We sketch their proof (in the polyhedral case) below.

Theorem 3.1

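Let \(C\subseteq \mathbbm {R}^d\) be a convex polyhedral cone, let \(\varvec{g}\in \mathbbm {R}^d\) be a standard Gaussian vector, and let the discrete random variable V on \(\{0,1,\ldots ,d\}\) be given by \(\mathbbm {P}\{V=k\}=v_k(C)\). Then

$$\begin{aligned} \big (\Vert \varvec{\Pi }_C(\varvec{g})\Vert ^2,\Vert \varvec{\Pi }_{C^\circ }(\varvec{g})\Vert ^2\big ) \mathop {=}\limits ^{d} \big (X_V,Y_{d-V}\big ), \end{aligned}$$(3.2)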

where \(\mathop {=}\limits ^{d}\) denotes equality in distribution, and \(X_k,Y_k\) are independent \(\chi ^2\)-distributed random variables with k degrees of freedom.

A geometric interpretation of this form of the conic Steiner formula is readily obtained by considering moments of the two sides in (3.2). Indeed, the expectation of \(f\big ( \Vert {\Pi _C(\varvec{g})}\Vert ^2,\Vert {\Pi _{C^\circ }(\varvec{g})}\Vert ^2\big )\) equals the Gaussian volume of the angular neighbourhood around C of radius \(\alpha \le {\pi }/{2}\), i.e., of the set \(T_{\alpha }(C):=\{\varvec{x}\mathrel {\mathop {:}}\sphericalangle (C,\varvec{x})\le \alpha \}\), if one sets \(f(x,y)=1\) if \(x/(x+y)\ge \cos ^2\alpha \), and \(f(x,y)=0\) otherwise. For this choice of f the expectation of \(f\big ( X_V,Y_{d-V}\big )\) becomes a finite sum \(\sum _{k=0}^d v_k(C) \mathbbm {P}\{\varvec{g}\in T_\alpha (L_k)\}\), where \(T_\alpha (L_k)\) denotes the angular neighbourhood of radius \(\alpha \) around a k-dimensional linear subspace. These Gaussian volumes of angular neighborhoods of linear subspaces replace the monomials in the Euclidean Steiner formula (3.1). By taking a suitable moment of (3.2) we obtain the usual conic Steiner formula.

Proof sketch of Theorem 3.1

In order to show the claimed equality in distribution (3.2) it suffices to show that the moments coincide. Let \(f:\mathbbm {R}^2_+\rightarrow \mathbbm {R}\) be a Borel measurable function. In view of the decomposition (2.5) we can express the expectation of \(f\big ( \Vert {\Pi _C(\varvec{g})}\Vert ^2,\Vert {\Pi _{C^\circ }(\varvec{g})}\Vert ^2\big )\) as

Notice now that for \(\varvec{g}\in ({\text {relint}}\, F)+N_FC\) we have \(\varvec{\Pi }_C(\varvec{g}) = \varvec{\Pi }_{{\text {lin}}(F)}(\varvec{g})\) and \(\varvec{\Pi }_{C^{\circ }}(\varvec{g})=\varvec{\Pi }_{{\text {lin}}(N_FC)}(\varvec{g})\). This implies

Using spherical coordinates and the orthogonal invariance of Gaussian vectors, one can deduce that the above expectation equals

where \(L_k\) denotes an arbitrary k-dimensional linear subspace. Summing up the terms gives rise to the claimed coincidence of moments, which shows equality of the distributions. \(\square \)

A useful consequence of the general Steiner formula is that the moment generating functions of the discrete random variable V from Theorem 3.1 and the continuous random variable \(\Vert \Pi _C(\varvec{g})\Vert ^2\) coincide up to reparametrization:

which directly follows from (3.2) by the well-known formula for the moment generating function of \(\chi ^2\)-distributed random variables, \({\mathbb {E}}[e^{sX_k}]=(1-2s)^{-k/2}\). This result is from [24], where it is used to derive a concentration result for the random variable V, and it also underlies the argument in [14], where a central limit theorem for V is derived.
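One way to spell out the reparametrization is the following short computation. The parameter names \(\xi \) and \(\eta \) are labels chosen for this sketch, and the computation assumes \(\xi <1/2\) so that the \(\chi ^2\) moment generating function is finite.

$$\begin{aligned} {\mathbb {E}}\big [e^{\xi \Vert \Pi _C(\varvec{g})\Vert ^2}\big ]&= {\mathbb {E}}\big [e^{\xi X_V}\big ] = \sum _{k=0}^d v_k(C)\,(1-2\xi )^{-k/2} \\&= \sum _{k=0}^d v_k(C)\, e^{\eta k} = {\mathbb {E}}\big [e^{\eta V}\big ] , \qquad \eta :=-\tfrac{1}{2}\log (1-2\xi ) . \end{aligned}$$

Equivalently, \(\xi =\frac{1}{2}(1-e^{-2\eta })\), so each moment generating function determines the other.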

4 Gauss–Bonnet and the Face Lattice

The Gauss–Bonnet Theorem is a celebrated result in differential geometry connecting curvature with the Euler characteristic. In the setting of polyhedral cones, this theorem asserts that the alternating sum of the intrinsic volumes equals the alternating sum of the f-vector,

$$\begin{aligned} \sum _{i=0}^d (-1)^i v_i(C) = \sum _{i=0}^d (-1)^i f_i(C) . \end{aligned}$$

The main goal of this section is to show the connections between the Gauss–Bonnet relation, a result by Sommerville [32], which can be seen as a conic version of the Brianchon–Euler–Gram relation for polytopes [16, 14.1], and a result by Grünbaum [15, Thm. 2.8]. More precisely, we will provide an elementary proof of the result by Sommerville, which is basically an application of Farkas’ Lemma, and show how the other relations are easily deduced from this. The derivation of the Gauss–Bonnet relation from the Sommerville relation presented here follows McMullen [25], who used the language of internal and external angles (see Sect. 2.3.2).

Theorem 4.1

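(Sommerville) For any polyhedral cone \(C\subseteq \mathbbm {R}^d\),

$$\begin{aligned} v_0(C) = \sum _{F\in \mathcal {F}(C)} (-1)^{\dim F}\, v_0(F) . \end{aligned}$$(4.1)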

Proof

Both sides in (4.1) are zero if C contains a nonzero linear subspace. So we assume in the following that C is pointed, that is, \(C\cap (-C)=\mathbf {0}\). Let \(\varvec{g}\) be a random Gaussian vector and \(H=\varvec{g}^{\perp }\) the orthogonal complement, which is almost surely a hyperplane. By Farkas’ Lemma 2.4,

$$\begin{aligned} \mathbbm {P}\{C\cap H\ne \mathbf {0}\} = 1-2v_0(C) . \end{aligned}$$(4.2)

Note that with probability 1, the intersection \(C\cap H\) is either \(\mathbf {0}\) or has dimension \(\dim C-1\). Setting \(\overline{\chi }=\sum _{i=0}^{d-1}(-1)^if_i(C\cap H)\), the Euler relation (2.1) implies \(\overline{\chi }=0\) if \(C\cap H\ne \mathbf {0}\) and \(\overline{\chi }=1\) if \(C\cap H= \mathbf {0}\). Using (4.2) we get the expected value

$$\begin{aligned} {\mathbb {E}}[\overline{\chi }] = \mathbbm {P}\{C\cap H=\mathbf {0}\} = 2v_0(C) . \end{aligned}$$(4.3)

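On the other hand, for \(0<i<d\) and using (4.2),

$$\begin{aligned} {\mathbb {E}}[f_i(C\cap H)] = \sum _{F\in \mathcal {F}_{i+1}(C)} \mathbbm {P}\{F\cap H\ne \mathbf {0}\} = f_{i+1}(C) - 2\sum _{F\in \mathcal {F}_{i+1}(C)} v_0(F) , \end{aligned}$$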

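where in the first step we used the fact that almost surely every i-dimensional face of \(C\cap H\) is of the form \(F\cap H\), with \(F\in \mathcal {F}_{i+1}(C)\), and for every \(F\in \mathcal {F}_{i+1}(C)\) the intersection \(F\cap H\) is either an i-dimensional face of \(C\cap H\) or \(\mathbf {0}\). Alternating the sum and using linearity of expectation,

$$\begin{aligned} {\mathbb {E}}[\overline{\chi }]&= 1 + \sum _{i=1}^{d-1} (-1)^i\, {\mathbb {E}}[f_i(C\cap H)] = 1 - \sum _{j=2}^{d} (-1)^j f_j(C) + 2\sum _{j=2}^{d} (-1)^j \sum _{F\in \mathcal {F}_j(C)} v_0(F) \\&= 2\sum _{F\in \mathcal {F}(C)} (-1)^{\dim F}\, v_0(F) , \end{aligned}$$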

where in the final step we used the Euler relation (2.1), the fact that \(f_1(C)=2\sum _{\dim F=1} v_0(F)\) (because each \(F^{\circ }\) is a halfspace), and \(f_0(C)=v_0(\varvec{0})=1\). Combining this with (4.3) yields the claim. \(\square \)

The following theorem is a simple generalization of Sommerville’s Theorem. Recall from Sect. 2.2 that \(v_{G}(F)=0\) if G is not contained in F.

Theorem 4.2

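Let \(C\subseteq \mathbbm {R}^d\) be a polyhedral cone. Then for any face \(G\subseteq C\),

$$\begin{aligned} v_G(C) = \sum _{F\in \mathcal {F}(C)} (-1)^{\dim F-\dim G}\, v_G(F) . \end{aligned}$$(4.4)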

Proof

If \(G=\mathbf {0}\) then we obtain Sommerville’s Theorem 4.1. Let \(G\ne \mathbf {0}\) and let C / G denote the orthogonal projection of C onto the orthogonal complement of the linear span of G. It follows from the Gaussian distribution that \(v_G(C)=v_{G}(G)\,v_0(C/G)\), which can be expressed as

where in the first step we used Sommerville’s Theorem, and in the second step we used that \(v_G(F)=0\) if G is not a face of F, and \(\dim F/G=\dim F-\dim G\). This shows the claim. \(\square \)

The following corollary is [15, Thm. 2.8], cf. Sect. 2.3.3.

Corollary 4.3

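Let \(C\subseteq \mathbbm {R}^d\) be a closed convex cone. Then

$$\begin{aligned} v_k(C) = (-1)^k \sum _{F\in \mathcal {F}(C)} (-1)^{\dim F}\, v_k(F) . \end{aligned}$$(4.5)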

Proof

Follows by summing in (4.4) over all k-dimensional faces and noting that for every face F of C we have \(\mathcal {F}_k(F)\subseteq \mathcal {F}_k(C)\). \(\square \)

Corollary 4.4

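(Gauss–Bonnet) For a polyhedral cone C,

$$\begin{aligned} \sum _{i=0}^d (-1)^i v_i(C) = \sum _{i=0}^d (-1)^i f_i(C) = {\left\{ \begin{array}{ll} (-1)^{\dim C} &{} \text {if } C \text { is a linear subspace,} \\ 0 &{} \text {otherwise.} \end{array}\right. } \end{aligned}$$(4.6)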

Proof

Summing the terms in (4.5) over k and using \(\sum _{k=0}^{d} v_k(C)=1\) yields

The rest follows from the Euler relation (2.1). \(\square \)
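The relations of this section can be checked exactly on a simple example. The sketch below takes the nonnegative orthant in \(\mathbbm {R}^d\): it has \(\binom{d}{m}\) faces of dimension m, each an m-dimensional orthant with intrinsic volumes \(v_k=\binom{m}{k}2^{-m}\). The relations are used in the form \(v_k(C)=(-1)^k\sum _F(-1)^{\dim F}v_k(F)\) (with \(k=0\) giving Sommerville's relation) and \(\sum _k(-1)^kv_k(C)=\sum _k(-1)^kf_k(C)\), as read from the surrounding text. All function and variable names are chosen for this illustration.

```python
from fractions import Fraction
from math import comb

def v(k, m):
    """k-th intrinsic volume of an m-dimensional orthant face (zero for k > m)."""
    return Fraction(comb(m, k), 2**m)

def face_count(d, m):
    """Number of m-dimensional faces of the nonnegative orthant in R^d."""
    return comb(d, m)

def signed_face_sum(d, k):
    """(-1)^k times the sum over all faces F of (-1)^dim(F) * v_k(F)."""
    total = sum(face_count(d, m) * (-1) ** m * v(k, m) for m in range(d + 1))
    return (-1) ** k * total

d = 5
# Sommerville's relation is the case k = 0.
sommerville_ok = signed_face_sum(d, 0) == v(0, d)
# The same signed sum recovers every intrinsic volume of the full cone.
all_k_ok = all(signed_face_sum(d, k) == v(k, d) for k in range(d + 1))
# Gauss-Bonnet: both alternating sums vanish, since the orthant is not a subspace.
alt_volumes = sum((-1) ** k * v(k, d) for k in range(d + 1))
alt_faces = sum((-1) ** m * face_count(d, m) for m in range(d + 1))
```

Exact rational arithmetic is used so that the identities hold with equality rather than up to rounding.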

If C is not a linear subspace, then the Gauss–Bonnet relation can be interpreted as saying that the random variable V on \(\{0,1,\ldots ,d\}\) given by \(\mathbbm {P}\{V=k\}=v_k(C)\), actually decomposes into two random variables \(V^0,V^1\) on \(\{0,2,4,\ldots ,2\lfloor {d}/{2}\rfloor \}\) and \(\{1,3,5,\ldots ,2\lfloor ({d-1})/{2}\rfloor +1\}\), respectively, such that

In fact, the same argument that gives the general Steiner formula (3.2) also shows that

where \(\varvec{g}^0\) and \(\varvec{g}^1\) denote Gaussian vectors conditioned on their projection on C falling in an even- or odd-dimensional face, respectively, and \(X_k,Y_k\) are independent \(\chi ^2\)-distributed random variables with k degrees of freedom. We can paraphrase (4.5) in terms of the moments of these random variables.

Corollary 4.5

Let \(f:\mathbbm {R}_+^2\rightarrow \mathbbm {R}\) be a Borel measurable function, and for \(C\subseteq \mathbbm {R}^d\) a polyhedral cone, which is not a linear subspace, let \(\varphi _f(C),\varphi _f^0(C),\varphi _f^1(C)\) denote the moments

Then we have

Proof

The first equation is obtained by invoking the general Steiner formula and applying (4.5):

The second equation is obtained by using Möbius inversion (2.12) and noting that the Möbius function of the face lattice is \(\mu (F,C)=(-1)^{\dim C-\dim F}\). \(\square \)

We list a few more corollaries, the usefulness of which may yet need to be established. The proofs are variations of the proof of Corollary 4.4.

Corollary 4.6

For the statistical dimension \(\delta (C)\) we obtain

In particular, if \(\dim C\) is odd, then

and if \(\dim C\) is even, then

Corollary 4.7

Let \(V_C\) be the random variable on \(\{0,1,\dots ,d\}\) defined by \(\mathbbm {P}\{V_C=k\} = v_k(C)\). The alternating sum of the exponential generating function satisfies

Remark 4.8

The Gauss–Bonnet relation can also be written out as \(\sum _{F\in \mathcal {F}(C)} (-1)^{\dim F}v_F(C)=0\), if C is not a linear subspace. If \(G\in \mathcal {F}(C)\) is a proper face, i.e., \(G\ne C\), then one can apply Gauss–Bonnet to the projected cone C / G, as in the deduction of Theorem 4.2 from Sommerville’s Theorem 4.1, to obtain

Rewriting this formula in terms of internal/external angles, and extending this to include also the case \(G=C\), one obtains

where \(\le \) denotes the order relation in the face lattice, i.e., the inclusion relation. In [25] McMullen observed that this relation means that the internal and external angle functions (one of them multiplied by the Möbius function) are mutual inverses in the incidence algebra of the face lattice, cf. Sect. 2.4. More precisely, the Gauss–Bonnet relation only shows that one of them is the left-inverse of the other (and of course the other is a right-inverse of the first), but since left-inverse, right-inverse, or two-sided inverse are equivalent in the incidence algebra [33, Prop. 3.6.3] one obtains the following additional relation “for free”:

This is [25, Thm. 3].

The relation (4.2) used in the proof of Sommerville’s Theorem 4.1 is a special case of the principal kinematic formula, to be derived in more detail next.

5 Elementary Kinematics for Polyhedral Cones

The principal kinematic formulae of integral geometry relate the intrinsic volumes, or certain measures that localize these quantities, of the intersection of two or more randomly moved geometric objects to those of the individual objects. This section presents a direct derivation of the principal kinematic formula in the setting of two polyhedral cones. The results of this section are special cases of Glasauer’s Kinematic Formula for spherically convex sets [13, 34], though in the spirit of the rest of this article, our proof is combinatorial, based on the facial decomposition of the cone, and uses probabilistic terminology.

In what follows, when we say that \(\varvec{Q}\) is drawn uniformly at random from the orthogonal group O(d), we mean that it is drawn from the Haar probability measure \(\nu \) on O(d). This is the unique regular Borel measure on O(d) that is left and right invariant (\(\nu (\varvec{Q}A)=\nu (A\varvec{Q})=\nu (A)\) for \(\varvec{Q}\in O(d)\) and a Borel measurable \(A\subseteq O(d)\)) and satisfies \(\nu (O(d))=1\). Moreover, for measurable \(f:O(d)\rightarrow \mathbbm {R}_+\), we write

for the integral with respect to the Haar probability measure, and we will occasionally omit the subscript \(\varvec{Q}\in O(d)\), or just write \(\varvec{Q}\) in the subscript, when there is no ambiguity. More information on invariant measures in the context of integral geometry can be found in [34, Chap. 13].

Theorem 5.1

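(Kinematic Formula) Let \(C,D\subseteq \mathbbm {R}^d\) be polyhedral cones. Then, for \(\varvec{Q}\in O(d)\) uniformly at random, and \(k>0\),

$$\begin{aligned} {\mathbb {E}}_{\varvec{Q}}[v_k(C\cap \varvec{Q}D)] = v_{k+d}(C\times D) , \qquad {\mathbb {E}}_{\varvec{Q}}[v_0(C\cap \varvec{Q}D)] = \sum _{j=0}^d v_j(C\times D) . \end{aligned}$$(5.1)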

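If \(D=L\) is a linear subspace of dimension \(d-m\), then

$$\begin{aligned} {\mathbb {E}}_{\varvec{Q}}[v_k(C\cap \varvec{Q}L)] = v_{k+m}(C) , \qquad {\mathbb {E}}_{\varvec{Q}}[v_0(C\cap \varvec{Q}L)] = \sum _{j=0}^m v_j(C) . \end{aligned}$$(5.2)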

Implicit in the statement of the theorem is the integrability of \(v_k(C\cap \varvec{Q}D)\) as a function of \(\varvec{Q}\). This will be established in the proof. Recall that the intrinsic volumes of \(C\times D\) are obtained by convolving the intrinsic volumes of C and D, cf. Sect. 2.2. The second equation in (5.1) follows from the first and from \(\sum _k v_k(C)=1\), and statement (5.2) follows from (5.1) by applying the product rule (2.9). Note also that using polarity (Proposition 2.5) on both sides of (5.1) we obtain the polar kinematic formulas

and similarly for (5.2). Combining Theorem 5.1 with the Gauss–Bonnet relation (4.6) yields the so-called Crofton formulas, which we formulate in the following corollary. They relate the Grassmann angles (see Sect. 2.3.3) to the intrinsic volumes.

Corollary 5.2

Let \(C,D\subseteq \mathbbm {R}^{d}\) be polyhedral cones such that not both of C and D are linear subspaces. Then, for \(\varvec{Q}\in O(d)\) uniformly at random,

In particular, if \(D=L\) is a linear subspace of dimension \(d-m\),

For the derivation of this corollary, and for later use, we need the following genericity lemma. Recall from Sect. 2.1 that two cones \(C,D\subseteq \mathbbm {R}^d\) are said to intersect transversely, written \(C\pitchfork D\), if \({\text {relint}}(C)\cap {\text {relint}}(D)\ne \emptyset \) and \(\dim (C\cap D) = \dim (C)+\dim (D)-d\). For the rest of this section, we use the notation \(L_C:={\text {lin}}(C)=C+(-C)\) for the linear span of a convex cone C.

Lemma 5.3

Let \(C,D\subseteq \mathbbm {R}^{d}\) be polyhedral cones. Then for \(\varvec{Q}\in O(d)\) uniformly at random, almost surely either \(C\cap \varvec{Q}D=\mathbf {0}\) or \(C\pitchfork \varvec{Q}D\) holds. In particular, if not both of C and D are linear subspaces, then almost surely either \(C\cap \varvec{Q}D=\mathbf {0}\), or \(C\cap \varvec{Q}D\) is not a linear subspace.

Proof

The set \(\mathcal {S}\) of \(\varvec{Q}\in O(d)\) with \(\dim L_F\cap \varvec{Q}L_G\ne \max \{0,\dim L_F+\dim L_G-d\}\) for some \((F,G)\in \mathcal {F}(C)\times \mathcal {F}(D)\) has measure zero, see for example [34, Lem. 13.2.1].

Assume \(\varvec{Q}\not \in \mathcal {S}\) and \(C\cap \varvec{Q}D\ne \mathbf {0}\). If \({\text {relint}}(C)\cap {\text {relint}}(\varvec{Q}D)\ne \emptyset \), then \(\varvec{Q}\not \in \mathcal {S}\) implies \(\dim C\cap \varvec{Q}D=\dim C+\dim D-d\), and hence \(C\pitchfork \varvec{Q}D\). If \({\text {relint}}(C)\cap {\text {relint}}(\varvec{Q}D)=\emptyset \), then by the Separating Hyperplane Theorem 2.2 there exists a hyperplane H such that \(C\subseteq H_+\) and \(\varvec{Q}D\subseteq H_-\). Let \(F=C\cap H\) and \(G=D\cap \varvec{Q}^{T}H\). By the assumption \(C\cap \varvec{Q}D\ne \mathbf {0}\), we have \(F\ne \mathbf {0}\) and \(G\ne \mathbf {0}\). Since \(L_F\) and \(\varvec{Q}L_G\) are in H and \(\dim H=d-1\), we get \(\dim L_F\cap \varvec{Q}L_G \ge \dim L_F+\dim L_G-d+1\) and therefore \(\varvec{Q}\in \mathcal {S}\), which contradicts our assumption. We thus established \(\{\varvec{Q}\in O(d)\mathrel {\mathop {:}}C\cap \varvec{Q}D\ne \mathbf {0}\text { and } C\not \pitchfork \varvec{Q}D\}\subseteq \mathcal {S}\).

For the second claim, assume that C is not a linear subspace. The lineality space of C, \(C\cap (-C)\), is contained in every supporting hyperplane of C, and therefore does not intersect \({\text {relint}}(C)\). If \(C\pitchfork \varvec{Q}D\), then there exists nonzero \(\varvec{x}\in {\text {relint}}(C)\cap \varvec{Q}D\). In particular, \(\varvec{x}\) does not lie in the lineality space of C. Since the lineality space of the intersection \(C\cap \varvec{Q}D\) is the intersection of the lineality spaces of C and of \(\varvec{Q}D\), it follows that \(\varvec{x}\) is in the complement of the lineality space of \(C\cap \varvec{Q}D\) in \(C\cap \varvec{Q}D\), which shows that \(C\cap \varvec{Q}D\) is not a linear subspace.\(\square \)

Proof of Corollary 5.2

Denoting \(\chi (C):=\sum _{i=0}^d (-1)^i v_i(C)\), the Gauss–Bonnet relation (4.6) says that \(\chi (C)=0\) if C is not a linear subspace, and \(\chi (\mathbf {0})=1\). By Lemma 5.3 we see that \(\chi (C\cap \varvec{Q}D)\) is almost surely the indicator function of the event that C and \(\varvec{Q}D\) intersect only at the origin. We can therefore conclude,

The second claim follows by replacing D with L. \(\square \)
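The simplest instance, a random hyperplane \(H=\varvec{g}^{\perp }\) and the relation \(\mathbbm {P}\{C\cap H\ne \mathbf {0}\}=1-2v_0(C)\) as it is used in Sect. 6, can be illustrated by simulation. The sketch assumes the nonnegative orthant in \(\mathbbm {R}^3\), where \(v_0(C)=2^{-3}\); the test for a nontrivial intersection is elementary for this cone. Function names, sample size and seed are choices made for this example.

```python
import random

def hyperplane_hits_orthant(g):
    """True if the hyperplane orthogonal to g meets the orthant in a nonzero point.

    A nonzero x >= 0 with <g, x> = 0 exists exactly when g has a zero entry
    or entries of both signs.
    """
    has_pos = any(x > 0 for x in g)
    has_neg = any(x < 0 for x in g)
    has_zero = any(x == 0 for x in g)
    return has_zero or (has_pos and has_neg)

def hit_frequency(d, samples, seed=1):
    """Fraction of Gaussian normals g whose hyperplane hits the orthant."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(samples):
        g = [rng.gauss(0.0, 1.0) for _ in range(d)]
        hits += hyperplane_hits_orthant(g)
    return hits / samples

d = 3
v0 = 0.5 ** d                 # v_0 of the nonnegative orthant in R^d
predicted = 1 - 2 * v0
observed = hit_frequency(d, samples=20000)
```

The observed frequency agrees with the prediction only up to sampling error.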

Our proof of Theorem 5.1 is based on a classic “double counting” argument; to illustrate this, we first consider an analogous situation with finite sets. We note that Proposition 5.4 generalizes without difficulties to the setting of compact groups acting on topological spaces, as in [34, Thm. 13.1.4].

Proposition 5.4

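Let \(\Omega \) be a finite set and G be a finite group acting transitively on \(\Omega \). Let \(M,N\subseteq \Omega \) be subsets. Then for uniformly random \(\gamma \in G\),

$$\begin{aligned} {\mathbb {E}}_{\gamma \in G}\big [|M\cap \gamma N|\big ] = \frac{|M|\cdot |N|}{|\Omega |} . \end{aligned}$$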

Proof

Taking \(\xi \in \Omega \) uniformly at random, we obtain the cardinality of M as \(|\Omega |\cdot \mathbbm {P}\{\xi \in M\}\). Introduce the indicator function \(1_M(\xi )\) for the event \(\xi \in M\) and note that \(1_{\gamma N}(x)=1_N(\gamma ^{-1}x)\) and \({\mathbb {E}}_{\gamma \in G}[1_N(\gamma ^{-1}x)] = |N|/|\Omega |\) for any \(x\in \Omega \). It follows that the random variables \(1_M(\xi ),1_{\gamma N}(\xi )\) are uncorrelated:

\(\square \)
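A minimal instance of this double counting, assuming the cyclic group \(\mathbbm {Z}_n\) acting on itself by translation (a transitive action), can be computed exactly. The sets M and N below are arbitrary choices for the illustration.

```python
from fractions import Fraction

def average_intersection(n, M, N):
    """Average of |M ∩ (s + N)| over all shifts s in Z_n."""
    total = 0
    for s in range(n):
        shifted = {(x + s) % n for x in N}   # the translate s + N
        total += len(M & shifted)
    return Fraction(total, n)

n = 12
M = {0, 1, 5, 7}
N = {2, 3, 4, 8, 11}
lhs = average_intersection(n, M, N)
rhs = Fraction(len(M) * len(N), n)           # |M| |N| / |Omega|
```

Each pair \((x,y)\in M\times N\) is matched by exactly one shift, which is why the total count equals \(|M|\cdot |N|\).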

Lemma 5.5 uses the same idea to establish the kinematic formula for the Gaussian measure of cones of different dimensions, and Theorem 5.1 then follows by applying this to the pairwise intersection of faces.

Lemma 5.5

Let \(C,D\subseteq \mathbbm {R}^d\) be polyhedral cones with \(\dim C=j\) and \(\dim D=\ell \), and assume \(0<k\le d\). If \(j+\ell =k+d\), then for \(\varvec{Q}\in O(d)\) uniformly at random,

If \(j+\ell = d-k\), then for \(\varvec{Q}\in O(d)\) uniformly at random,

The proof of Lemma 5.5 relies crucially on the left and right invariance of the Haar measure, which implies that for any measurable \(f:O(d)\rightarrow \mathbbm {R}_+\) and fixed \(\varvec{Q}_0,\varvec{Q}_1\in O(d)\),

For a linear subspace \(L\subseteq \mathbbm {R}^d\), we can (and will) naturally identify the group O(L) of orthogonal transformations of L with the subgroup of O(d) consisting of those \(\varvec{Q}\in O(d)\) for which \(\varvec{Q}\varvec{x}=\varvec{x}\) for \(\varvec{x}\in L^{\perp }\). The group O(L) carries its own Haar probability measure. We also use the following characterization of the Gaussian volume of a convex cone \(C\subseteq \mathbbm {R}^d\):

where \(\varvec{x}\ne \mathbf {0}\) is arbitrary. This characterization follows from the fact that for \(\varvec{Q}\in O(d)\) uniformly at random, the point \(\varvec{Q}\varvec{x}\) is uniformly distributed on the sphere of radius \(\Vert \varvec{x}\Vert \).

Proof of Lemma 5.5

For illustration purposes we first prove (5.5) in the case \(k=d\), as it is almost a carbon copy of the proof of Proposition 5.4 and [34, Thm. 13.1.4]. We need to show that

Note that the map \(\varvec{Q}\mapsto v_d(C\cap \varvec{Q}D)\) is in fact measurable; this follows from the characterization

the measurability of \((\varvec{x},\varvec{Q})\mapsto 1_C(\varvec{x})1_D(\varvec{Q}^T\varvec{x})\), and the fact that the integral \({\mathbb {E}}_{\varvec{g}\sim \mathcal {N}(\mathbbm {R}^d)}[1_C(\varvec{g})\,1_{D}(\varvec{Q}^T\varvec{g})]\) is then measurable in \(\varvec{Q}\), see for example [29, Thm. 8.5]. Fubini’s Theorem and (5.8) then yield

We proceed with the general case of (5.5). By Lemma 5.3, almost surely \(\dim L_C\cap \varvec{Q}L_D = k\) and \(\dim (C\cap \varvec{Q}D)=k\) or \(C\cap \varvec{Q}D =\mathbf {0}\). For generic \(\varvec{Q}\) we can therefore write

We thus need to show that

To see that the map \(\varvec{Q}\mapsto v_k(C\cap \varvec{Q}D)\) is measurable, note that, using the fact that the orthogonal projection of a Gaussian vector to a subspace is again Gaussian, we have

It is enough to verify that the projection \(\varvec{\Pi }_{L_C\cap \varvec{Q}L_D}(\varvec{x})\) is continuous in \(\varvec{x}\) and \(\varvec{Q}\) outside a set of measure zero; the measurability of \(v_k(C\cap \varvec{Q}D)\) then follows from the same considerations as in the case \(k=d\). If \(C\pitchfork \varvec{Q}D\), then \(L_C\cap \varvec{Q}L_D\) is the kernel of a matrix of rank \(d-k\) whose rows depend continuously on \(\varvec{Q}\). The projection \(\varvec{\Pi }_{L_C\cap \varvec{Q}L_D}(\varvec{x})\) depends continuously on \(\varvec{x}\) and on this matrix, and therefore also on \(\varvec{Q}\).

We now proceed to show identity (5.9). Let \(\varvec{Q}_0\in O(L_D)\). By the orthogonal invariance (5.7),

Since this holds for any \(\varvec{Q}_0\in O(L_D)\), we can choose \(\varvec{Q}_0\in O(L_D)\) uniformly at random to obtain

where in (1) we used \(\varvec{Q}_0L_D=L_D\), in (2) we used Fubini’s Theorem, and in (3) we used (5.8). For the remaining part, replacing \(\varvec{Q}\) with \(\varvec{Q}_1\varvec{Q}\) for \(\varvec{Q}_1\in O(L_C)\) uniformly at random, and applying (5.7) again,

where the last equality follows again from (5.8).

We now derive (5.6). By Lemma 5.3, for generic \(\varvec{Q}\), \(L_C\cap \varvec{Q}L_D=\mathbf {0}\) and \(\dim L_C+\varvec{Q}L_D = j+\ell = d-k\). Using the fact that an orthogonal projection of a Gaussian vector is Gaussian, we get

The integrability of this expression in \(\varvec{Q}\) follows, as above, from the fact that the projection map to \(L_C+\varvec{Q}L_D\) is continuous for almost all \(\varvec{Q}\) and \(\varvec{g}\). For generic \(\varvec{Q}\), any \(\varvec{g}\in \mathbbm {R}^d\) has a unique decomposition \(\varvec{g}=\varvec{g}_C+\varvec{g}_D+\varvec{g}^{\bot }\), with \(\varvec{g}_C\in L_C\), \(\varvec{g}_D\in \varvec{Q}L_D\), \(\varvec{g}^{\bot }\in (L_C+\varvec{Q}L_D)^{\bot }\). Note that \(\varvec{g}_C\) and \(\varvec{g}_D\) are not orthogonal projections, and that the decomposition (even \(\varvec{g}_C\)) depends on \(\varvec{Q}\).

From the uniqueness of this decomposition we get the equivalence

and therefore

Now let \(\varvec{Q}_0\in O(L_C)\) be fixed. By orthogonal invariance of the Haar measure and of the Gaussian distribution we can replace \(\varvec{Q}\) with \(\varvec{Q}_0\varvec{Q}\) and \(\varvec{g}\) with \(\varvec{g}':=\varvec{Q}_0\varvec{g}\). We next determine the decomposition \(\varvec{g}'=\varvec{g}'_C+\varvec{g}'_D+\varvec{g}'^{\bot }\) in \(L_C+\varvec{Q}_0\varvec{Q}L_D+(L_C^{\bot }\cap \varvec{Q}_0\varvec{Q}L_D^{\bot })\). Note that under this substitution,

with \(\varvec{Q}_0\varvec{g}_C\in L_C\) (by the fact that \(\varvec{Q}_0\in O(L_C)\)), \(\varvec{Q}_0\varvec{g}_D\in \varvec{Q}_0\varvec{Q}L_D\) (by definition), and \(\varvec{Q}_0\varvec{g}^{\bot }\in \varvec{Q}_0(L_C^{\bot }\cap \varvec{Q}L_D^{\bot }) = (L_C^{\bot }\cap \varvec{Q}_0\varvec{Q}L_D^{\bot })\) (since \(\varvec{Q}_0\) is the identity on \(L_C^{\bot }\)). By uniqueness of the decomposition,

We therefore have

where we used Fubini in the second and (5.8) in the last equality. Note that \(\varvec{Q}^T\varvec{g}_D\in L_D\). Repeating the argument above by replacing \(\varvec{Q}\) with \(\varvec{Q}\varvec{Q}_1^T\) for \(\varvec{Q}_1\in O(L_D)\), we get

where again we used (5.8). This finishes the proof. \(\square \)
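The case \(k=d\) can be made concrete in the plane, where it reads \({\mathbb {E}}[v_2(C\cap \varvec{Q}D)]=v_2(C)\,v_2(D)\) for two wedges C and D. The sketch below is an illustration under stated assumptions: the wedges have opening angles a and b (both at most \(\pi \)), \(v_2\) of a wedge is its angle divided by \(2\pi \), and averaging over rotations is enough because a reflection maps D to another wedge with the same angle. The average is approximated by a midpoint rule; all names are hypothetical.

```python
from math import pi

def arc_overlap(a, b, phi):
    """Length of the overlap of the arcs [0, a] and [phi, phi + b] on the unit circle."""
    total = 0.0
    for shift in (-2 * pi, 0.0, 2 * pi):     # account for wrap-around
        lo = max(0.0, phi + shift)
        hi = min(a, phi + b + shift)
        total += max(0.0, hi - lo)
    return total

def mean_top_volume(a, b, steps=20000):
    """Average of v_2(C ∩ QD) over rotation angles, by the midpoint rule."""
    acc = 0.0
    for i in range(steps):
        phi = 2 * pi * (i + 0.5) / steps
        acc += arc_overlap(a, b, phi) / (2 * pi)
    return acc / steps

a, b = 1.0, 2.0                              # opening angles of C and D
lhs = mean_top_volume(a, b)
rhs = (a / (2 * pi)) * (b / (2 * pi))        # v_2(C) * v_2(D)
```

The overlap length is piecewise linear in the rotation angle, so the midpoint rule is accurate up to a small error at the kinks.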

Proof of Theorem 5.1

We first note that it suffices to prove the first equality in (5.1), as we can deduce the second from the fact that the intrinsic volumes sum up to one,

The equations in (5.2) follow directly from (5.1) as a special case, since

if L is a linear subspace of dimension \(d-m\).

The genericity Lemma 5.3 implies that the k-dimensional faces of \(C\cap \varvec{Q}D\) are generically of the form \(F\cap \varvec{Q}G\) with \((F,G)\in \mathcal {F}(C)\times \mathcal {F}(D)\) and \(\dim F+\dim G=k+d\). If we have shown that for \(F\in \mathcal {F}_j(C)\), \(G\in \mathcal {F}_{\ell }(D)\), with \(j+\ell >d\), one has

then the kinematic formula follows by noting that \(v_F(C)v_G(D)=v_{F\times G}(C\times D)\) and

It remains to show (5.11). By (2.8) and Lemma 5.3, almost surely

The integrability of these terms has been shown in the proof of Lemma 5.5, which shows the integrability in (5.1). In order to prove (5.11) we proceed as in the proof of Lemma 5.5. Let \(\varvec{Q}_0\in O(L_F)\) be uniformly at random. Note that the normal cone \(N_FC\) lies in the orthogonal complement of \(L_F\), so that \(\varvec{Q}_0\) leaves the normal cone invariant. Using the invariance of the Haar measure as in the proof of Lemma 5.5,

where in (1) we used the orthogonal invariance of the intrinsic volumes and in (2) we applied Lemma 5.5 to the inner expectation (note that the dimensions match). Comparing the first line with the last line we see that the term \(v_j(F)\) could be extracted by replacing F with \(L_F\). Repeating the same trick by replacing \(\varvec{Q}\) with \(\varvec{Q}\varvec{Q}_1\) for \(\varvec{Q}_1\in O(L_G)\), we get

where in the second equation we used that \(v_k(L_F\cap \varvec{Q}L_G) = 1\), and the last equality follows from (5.6). \(\square \)

Remark 5.6

In the literature there are roughly two different strategies used to derive kinematic formulas:

(1) Use a characterisation theorem for the intrinsic volumes (or a suitable localisation thereof) that shows that certain types of functions in a cone must be linear combinations of the intrinsic volumes. This approach is common in integral geometry [21, 34], see [2, 13] for the spherical/conic setting.

(2) Assume that the boundary of the cone intersected with a sphere is a smooth hypersurface; then argue over the curvature of the intersection of the boundaries. For a general version of this approach, with references to related work, see [17].

The second approach is usually also based on a double-counting argument that involves the co-area formula. Our proof can be interpreted as a piecewise-linear version of this approach.

6 The Klivans–Swartz Relation for Hyperplane Arrangements

While the most natural lattice structure associated to a polyhedral cone is arguably its face lattice, there is also the intersection lattice generated by the hyperplanes that are spanned by the facets of the cone (assuming that the cone has non-empty interior; otherwise one can argue within the linear span of the cone). In this section we present a deep and useful relation between this intersection lattice and the intrinsic volumes of the regions of the hyperplane arrangement, which is due to Klivans and Swartz [22], and which we will generalize to also include the faces of the regions. We finish this section with some applications of this result.

Let \(\mathcal {A}\) be a hyperplane arrangement in \(\mathbbm {R}^d\). Recall from (2.17) the notation \(\mathcal {R}_j(\mathcal {A})\) and \(r_j(\mathcal {A})\) for the set of j-dimensional regions of the arrangement and for their cardinality, respectively. Also recall Zaslavsky’s Theorem 2.13, which in brief states the identity \(r_j(\mathcal {A}) = (-1)^j\,\chi _{\mathcal {A},j}(-1)\), where \(\chi _{\mathcal {A},j}\) denotes the jth-level characteristic polynomial of the arrangement. Expressing this polynomial in the form

$$\begin{aligned} \chi _{\mathcal {A},j}(t) = \sum _{k=0}^j a_{jk}\, t^k \end{aligned}$$

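and using the identity \(\sum _k v_k(C)=1\), we can rewrite Zaslavsky’s result in the form

$$\begin{aligned} \sum _{k=0}^j \sum _{F\in \mathcal {R}_j(\mathcal {A})} v_k(F) = \sum _{k=0}^j (-1)^{j-k} a_{jk} . \end{aligned}$$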

Klivans and Swartz [22] have proved that in the case \(j=d\) this equality of sums is in fact an equality of the summands. We will extend this and show that for all j the summands are equal. In particular, taking the sum of intrinsic volumes of all regions of a certain dimension j in a hyperplane arrangement yields a quantity that can be expressed solely in terms of the lattice structure of the hyperplane arrangement. So while the intrinsic volumes of a single region are in general not invariant under nonsingular linear transformations, the sum of the intrinsic volumes over all regions of a fixed dimension is.

Theorem 6.1

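Let \(\mathcal {A}\) be a hyperplane arrangement in \(\mathbbm {R}^d\). Then for \(0\le j\le d\),

$$\begin{aligned} \sum _{F\in \mathcal {R}_j(\mathcal {A})} P_F(t) = (-1)^j\, \chi _{\mathcal {A},j}(-t) , \end{aligned}$$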

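where \(P_F(t) = \sum _k v_k(F)t^k\). In terms of the intrinsic volumes, for \(0\le k\le j\),

$$\begin{aligned} \sum _{F\in \mathcal {R}_j(\mathcal {A})} v_k(F) = (-1)^{j-k}\, a_{jk} , \end{aligned}$$(6.1)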

where \(a_{jk}\) is the coefficient of \(t^k\) in \(\chi _{\mathcal {A},j}(t)\).

Note that in the special case \(j=k\) we obtain \(\sum _{F\in \mathcal {R}_j(\mathcal {A})} v_j(F) = \ell _j(\mathcal {A})\), which is easily verified directly. We derive a concise proof of Theorem 6.1 by combining Zaslavsky’s Theorem with the kinematic formula. A similar, though slightly different, proof strategy using the kinematic formula was recently employed in [20] to derive Klivans and Swartz’s result.

The cases \(j=0,1\) will be shown directly; in the case \(j\ge 2\) we prove (6.1) by induction on k. This proof by induction naturally consists of two steps:

(1) For the case \(k=0\) we need to show

$$\begin{aligned} \sum _{F\in \mathcal {R}_j(\mathcal {A})} v_0(F) = (-1)^j a_{j0}. \end{aligned}$$

Let H be a hyperplane in general position relative to \(\mathcal {A}\), that is, H intersects all subspaces in \(\mathcal {L}(\mathcal {A})\) transversely. In H consider the restriction \(\mathcal {A}^H=\{H'\cap H\mathrel {\mathop {:}}H'\in \mathcal {A}\}\). The number of \((j-1)\)-dimensional regions in \(\mathcal {A}^H\) is given by the number of j-dimensional regions in \(\mathcal {A}\) that are hit by the hyperplane H. With the simplest case of the Crofton formula (4.2), we obtain for a uniformly random hyperplane H,

$$\begin{aligned} {\mathbb {E}}\big [r_{j-1}(\mathcal {A}^H)\big ]= & {} \sum _{F\in \mathcal {R}_j(\mathcal {A})} \mathbbm {P}\{F\cap H \ne \mathbf {0}\} \\= & {} \sum _{F\in \mathcal {R}_j(\mathcal {A})} (1-2v_0(F)) = r_j(\mathcal {A}) - 2\sum _{F\in \mathcal {R}_j(\mathcal {A})} v_0(F), \end{aligned}$$

and therefore,

$$\begin{aligned} \sum _{F\in \mathcal {R}_j(\mathcal {A})} v_0(F) = \frac{1}{2}(r_j(\mathcal {A}) - {\mathbb {E}}[r_{j-1}(\mathcal {A}^H)]) . \end{aligned}$$(6.2)

We will see below that \(r_{j-1}(\mathcal {A}^H)\) is almost surely constant (so that the expectation in (6.2) can be dropped) and is in fact expressible in terms of \(\chi _{\mathcal {A},j}\). This will give the basis step in a proof by induction on k of (6.1).

(2) For the induction step we use the kinematic formula (5.2) with \(m=1\), which gives for a uniformly random hyperplane H,

$$\begin{aligned} \sum _{F\in \mathcal {R}_j(\mathcal {A})} v_1(F)&= \sum _{F\in \mathcal {R}_j(\mathcal {A})} \big ({\mathbb {E}}[v_0(F\cap H)]-v_0(F)\big ) \nonumber \\&= {\mathbb {E}}\Big [\sum _{F\in \mathcal {R}_j(\mathcal {A})} v_0(F\cap H)\Big ] - \sum _{F\in \mathcal {R}_j(\mathcal {A})} v_0(F) , \end{aligned}$$(6.3)

$$\begin{aligned} \sum _{F\in \mathcal {R}_j(\mathcal {A})} v_k(F)&= \sum _{F\in \mathcal {R}_j(\mathcal {A})} {\mathbb {E}}[v_{k-1}(F\cap H)] \nonumber \\&= {\mathbb {E}}\Big [\sum _{F\in \mathcal {R}_j(\mathcal {A})} v_{k-1}(F\cap H)\Big ] , \quad \text {if } k\ge 2 . \end{aligned}$$(6.4)

Notice that if the summation were over the regions of \(\mathcal {A}^H\), then we could (and in fact can if \(k\ge 2\)) apply the induction hypothesis and express \(\sum v_{k-1}(F\cap H)\) in terms of the characteristic polynomial of \(\mathcal {A}^H\), which, as we will see below, is the same for all generic H and expressible in terms of the characteristic polynomial of \(\mathcal {A}\). Since the summation is over the regions of \(\mathcal {A}\), we need to be a bit careful in the case \(k=1\).

To implement this idea we need to understand how the characteristic polynomial of a hyperplane arrangement changes when adding a hyperplane in general position.

Lemma 6.2

Let \(\mathcal {A}\) be a hyperplane arrangement in \(\mathbbm {R}^d\), and let \(j\ge 2\). If \(H\subset \mathbbm {R}^d\) is a linear hyperplane in general position relative to \(\mathcal {A}\), then the \((j-1)\)th-level characteristic polynomial of the reduced arrangement \(\mathcal {A}^H\) and the number of \((j-1)\)-dimensional regions of \(\mathcal {A}^H\) are given by

In terms of coefficients, if \(\chi _{\mathcal {A},j}(t)=\sum _k a_{jk}\, t^k\), then

Proof

Note first that if \(\tilde{L},L\in \mathcal {L}(\mathcal {A})\), with \(\dim \tilde{L},\dim L\ge 2\), then \(\tilde{L}\supseteq L\) if and only if \(\tilde{L}\cap H\supseteq L\cap H\). Indeed, if \(\tilde{L}\cap H\supseteq L\cap H\), then

where we used the assumption that H intersects all subspaces in \(\mathcal {L}(\mathcal {A})\) transversely. Hence, \(\dim L=\dim (\tilde{L}\cap L)\), and \(\tilde{L}\supseteq L\). In other words, the map \(L\mapsto L\cap H\) is a bijection between \(\mathcal {L}_j(\mathcal {A})\) and \(\mathcal {L}_{j-1}(\mathcal {A}^H)\) for all \(j\ge 2\) that is compatible with the partial orders on \(\mathcal {L}(\mathcal {A})\) and \(\mathcal {L}(\mathcal {A}^H)\). Of course, all elements in \(\mathcal {L}_0(\mathcal {A})\cup \mathcal {L}_1(\mathcal {A})\) are mapped to \(\mathbf {0}\).

Now, recall the form of the jth-level characteristic polynomial (2.13)

and also recall the recursive definition of the Möbius function (2.11), \(\mu (\tilde{L},L)=0\) if \(\tilde{L}\not \supseteq L\), and

From the above observation about the sets \(\mathcal {L}_j(\mathcal {A})\) and \(\mathcal {L}_{j-1}(\mathcal {A}^H)\) for \(j\ge 2\) we obtain

where \(\bar{\mu }\) shall denote the Möbius function on \(\mathcal {L}(\mathcal {A}^H)\). This shows the claimed formula for the nonconstant coefficients of \(\chi _{\mathcal {A}^H,j-1}\). We obtain the claim for the constant coefficient by noting that for \(L\in \mathcal {L}(\mathcal {A})\), \(\dim L\ge 2\), and \(\bar{L}:=L\cap H\),

so that the constant coefficient of \(\chi _{\mathcal {A}^H,j-1}\) is given by

The constant and linear coefficients of \(\chi _{\mathcal {A},j}\) are given by

which shows that indeed \(\bar{a}_{j-1,0}=a_{j0}+a_{j1}\). As for the claimed formula for \(r_{j-1}(\mathcal {A}^H)\) we use Zaslavsky’s Theorem 2.13 to obtain

which finishes the proof. \(\square \)
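The effect of Lemma 6.2 on the coefficients can be traced on a small example. The sketch assumes the coordinate (Boolean) arrangement in \(\mathbbm {R}^d\), whose top-level characteristic polynomial is \((t-1)^d\), and reads the lemma as follows: the constant coefficient of the reduced polynomial is \(a_{j0}+a_{j1}\), and every other coefficient of \(t^k\) is \(a_{j,k+1}\). The second part is an interpretation of the bijection \(L\mapsto L\cap H\) described in the proof, and the helper names are chosen for this example.

```python
from math import comb

def reduce_coefficients(a):
    """Coefficients of the reduced polynomial from those of chi_{A,j}.

    a[k] is the coefficient of t^k (degree j >= 2). The constant term becomes
    a[0] + a[1]; every other coefficient moves down by one degree.
    """
    return [a[0] + a[1]] + a[2:]

def evaluate(coeffs, t):
    """Value of the polynomial with the given coefficient list at t."""
    return sum(c * t**k for k, c in enumerate(coeffs))

def region_count(coeffs):
    """Zaslavsky's identity: (-1)^j * chi(-1), with j the degree."""
    j = len(coeffs) - 1
    return (-1) ** j * evaluate(coeffs, -1)

d = 4
boolean = [comb(d, k) * (-1) ** (d - k) for k in range(d + 1)]   # (t - 1)^d
reduced = reduce_coefficients(boolean)
r_full = region_count(boolean)        # number of orthants
r_reduced = region_count(reduced)     # regions of the restriction to a generic H
```

For \(d=4\) this gives 16 orthants, of which 14 are hit by a generic hyperplane, in agreement with \(r_{j-1}(\mathcal {A}^H)=r_j(\mathcal {A})-(-1)^j2\chi _{\mathcal {A},j}(0)\).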

Proof of Theorem 6.1

We first verify the cases \(j=0,1\) directly. Recall from (2.16) that \(\chi _{\mathcal {A},0}(t) = \ell _0(\mathcal {A})\) and \(\chi _{\mathcal {A},1}(t) = \ell _1(\mathcal {A}) (t-\ell _0(\mathcal {A}))\), where \(\ell _j(\mathcal {A})=|\mathcal {L}_j(\mathcal {A})|\). In a linear hyperplane arrangement we have at most one 0-dimensional region, and \(\mathcal {R}_0(\mathcal {A})=\mathcal {L}_0(\mathcal {A})\) (possibly both empty). Therefore,

As for the case \(j=1\), note first that if \(r_0(\mathcal {A})=0\), then \(\mathcal {R}_1(\mathcal {A})=\mathcal {L}_1(\mathcal {A})\) and the claim follows as in the case \(j=0\). If on the other hand \(r_0(\mathcal {A})=1\), then every line \(L\in \mathcal {L}_1(\mathcal {A})\) corresponds to two rays \(F_+,F_-\in \mathcal {R}_1(\mathcal {A})\), that is, \(r_1(\mathcal {A})=2\ell _1(\mathcal {A})\). Since \(v_1(F_\pm )=v_0(F_\pm )={1}/{2}\), and \(\ell _0(\mathcal {A})=1\), we obtain

We now assume \(j\ge 2\) and proceed by induction on k starting with \(k=0\). In (6.2) we have seen that

From Lemma 6.2 we obtain that \(r_{j-1}(\mathcal {A}^H)\) is almost surely constant and given by \(r_j(\mathcal {A}) - (-1)^j 2\chi _{\mathcal {A},j}(0)\). Therefore,

This settles the case \(k=0\). For \(k>0\) we need to distinguish between \(k=1\) and \(k\ge 2\). From (6.3), we obtain, using the case \(k=0\) and Lemma 6.2,

This settles the case \(k=1\). Finally, in the case \(k\ge 2\) we argue similarly, using that \(v_i(\mathbf {0})=0\) if \(i>0\),

\(\square \)

Remark 6.3

It was pointed out to us by Rolf Schneider that for \(k>0\), \(j>0\) and a subspace L of dimension \(\dim L=d-m\), in general position relative to \(\mathcal {A}\), one can (as we did in the case \(k=0\)) use the identity

to express the sum of the Grassmann angles in terms of the number of regions of the reduced arrangement. One can then derive the expression (for example, by applying Lemma 6.2 iteratively),

to express the number of regions of the reduced arrangement in terms of the characteristic polynomial of \(\mathcal {A}\). Via the Crofton formulas 5.2, we can use this to recover the expressions for the intrinsic volumes.

6.1 Applications

In this section we compute some examples and present some applications of Theorem 6.1.

6.1.1 Product Arrangements

Let \(\mathcal {A},\mathcal {B}\) be two hyperplane arrangements in \(\mathbbm {R}^d\) and \(\mathbbm {R}^e\), respectively. The product arrangement in \(\mathbbm {R}^{d+e}\) is defined as

The characteristic polynomial is multiplicative, \(\chi _{\mathcal {A}\times \mathcal {B}}(t)=\chi _\mathcal {A}(t)\chi _\mathcal {B}(t)\), and so is the bivariate polynomial (2.14), \(X_{\mathcal {A}\times \mathcal {B}}(s,t) = X_\mathcal {A}(s,t)X_\mathcal {B}(s,t)\). This can either be shown directly [26, Chap. 2], or deduced from Theorem 6.1, as the intrinsic volumes polynomial satisfies \(P_{C\times D}(t)=P_C(t) P_D(t)\).
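The multiplicativity of the characteristic polynomial can be checked numerically on a small example. The following Python sketch computes \(\chi \) by brute force from Whitney's formula \(\chi _{\mathcal {A}}(t)=\sum _{\mathcal {B}\subseteq \mathcal {A}}(-1)^{|\mathcal {B}|}t^{d-\rho (\mathcal {B})}\). It assumes that every hyperplane is represented by a normal vector and that the product arrangement consists of the hyperplanes \(H\times \mathbbm {R}^e\), \(H\in \mathcal {A}\), and \(\mathbbm {R}^d\times H\), \(H\in \mathcal {B}\). The helper names (`rank`, `char_poly`, `product_normals`, `poly_mul`) are illustrative and not taken from any library.

```python
from fractions import Fraction
from itertools import combinations

def rank(rows):
    """Rank of a list of rational vectors via exact Gaussian elimination."""
    m = [[Fraction(x) for x in r] for r in rows]
    ncols = len(m[0]) if m else 0
    rk = 0
    for c in range(ncols):
        piv = next((i for i in range(rk, len(m)) if m[i][c] != 0), None)
        if piv is None:
            continue
        m[rk], m[piv] = m[piv], m[rk]
        for i in range(rk + 1, len(m)):
            f = m[i][c] / m[rk][c]
            m[i] = [a - f * b for a, b in zip(m[i], m[rk])]
        rk += 1
    return rk

def char_poly(normals, d):
    """Coefficients [c_0, ..., c_d] of chi(t) = sum_B (-1)^|B| t^(d - rank B)."""
    coeffs = [0] * (d + 1)
    for k in range(len(normals) + 1):
        for subset in combinations(normals, k):
            coeffs[d - rank(list(subset))] += (-1) ** k
    return coeffs

def product_normals(normals_a, d, normals_b, e):
    """Normals of the product arrangement in R^(d+e) (assumed definition: zero padding)."""
    return ([tuple(a) + (0,) * e for a in normals_a]
            + [(0,) * d + tuple(b) for b in normals_b])

def poly_mul(p, q):
    """Product of two polynomials given as ascending coefficient lists."""
    out = [0] * (len(p) + len(q) - 1)
    for i, a in enumerate(p):
        for j, b in enumerate(q):
            out[i + j] += a * b
    return out

A = [(1, -1)]                   # one line in R^2
B = [(1, 0), (0, 1), (1, 1)]    # three lines in R^2
chi_A = char_poly(A, 2)
chi_B = char_poly(B, 2)
chi_AB = char_poly(product_normals(A, 2, B, 2), 4)
print(chi_A, chi_B, chi_AB, chi_AB == poly_mul(chi_A, chi_B))
```

The enumeration over all subsets is exponential in the number of hyperplanes, so this is only meant for toy examples.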

6.1.2 Generic Arrangements

A hyperplane arrangement \(\mathcal {A}\) in \(\mathbbm {R}^d\) is said to be in general position if the corresponding normal vectors are in general position, that is, if every subset of at most d of them is linearly independent (see Notes). Combinatorial properties of such arrangements have been studied by Cover and Efron [10], who generalize results of Schläfli [31] and Wendel [36] to get expressions for, among other things, the average number of j-dimensional faces of a region in the arrangement. We set out to compute the characteristic polynomial of an arrangement of hyperplanes in general position, and in the process recover the formulas of Cover and Efron and a formula of Hug and Schneider [18] for the expected intrinsic volumes of the regions.

Lemma 6.4

Let \(\mathcal {A}=\{H_1,\ldots ,H_n\}\) be a generic hyperplane arrangement in \(\mathbbm {R}^d\) with \(n\ge d\). Then for \(0<j\le d\),

Proof

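Assume first that \(j=d\). The proof in this case relies on Whitney’s theorem [33, Prop. 3.11.3],
\[ \chi _{\mathcal {A}}(t) = \sum _{\mathcal {B}\subseteq \mathcal {A}} (-1)^{|\mathcal {B}|}\, t^{d-\rho (\mathcal {B})}, \]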

where \(\rho \) denotes the rank of the arrangement \(\mathcal {B}\). We can subdivide the sum into two parts:

Since \(\mathcal {A}\) is in general position, \(\rho (\mathcal {B})=|\mathcal {B}|\) if \(|\mathcal {B}|\le d\), and \(\rho (\mathcal {B})=d\) if \(|\mathcal {B}|\ge d\). Collecting terms with equal rank, we obtain

An easy induction proof shows that \(\sum _{k=d}^n \left( {\begin{array}{c}n\\ k\end{array}}\right) (-1)^k=\left( {\begin{array}{c}n-1\\ d-1\end{array}}\right) (-1)^d\), which settles the case \(j=d\).
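The alternating binomial identity used in this last step can be checked numerically. The Python sketch below is a sanity check for small parameters rather than a proof; the induction itself rests on Pascal's rule \(\left( {\begin{array}{c}n\\ k\end{array}}\right) =\left( {\begin{array}{c}n-1\\ k\end{array}}\right) +\left( {\begin{array}{c}n-1\\ k-1\end{array}}\right) \), which makes the sum telescope. The function names are illustrative.

```python
from math import comb

def alternating_tail(n, d):
    """Left-hand side: sum_{k=d}^{n} (-1)^k * C(n, k)."""
    return sum((-1) ** k * comb(n, k) for k in range(d, n + 1))

def closed_form(n, d):
    """Right-hand side: (-1)^d * C(n-1, d-1), for 1 <= d <= n."""
    return (-1) ** d * comb(n - 1, d - 1)

# Compare both sides for all 1 <= d <= n <= 12.
mismatches = [(n, d) for n in range(1, 13) for d in range(1, n + 1)
              if alternating_tail(n, d) != closed_form(n, d)]
print(mismatches)
```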

For the case \(0<j<d\) note that if \(L\in \mathcal {L}_j(\mathcal {A})\), then L is the intersection of \(d-j\) uniquely determined hyperplanes, and the restriction \(\mathcal {A}^L\) is a generic hyperplane arrangement in L consisting of \(n-d+j\) hyperplanes. Furthermore, there are exactly \(\left( {\begin{array}{c}n\\ d-j\end{array}}\right) \) linear subspaces of dimension j in \(\mathcal {L}(\mathcal {A})\). Therefore, using the characterisation (2.15) of the jth-level characteristic polynomial, we obtain

where the second equality follows from the case \(j=d\). \(\square \)

From Zaslavsky’s Theorem 2.13 we obtain from (6.6) the number of j-dimensional regions in a generic hyperplane arrangement, \(r_j(\mathcal {A})\), by setting \(t=-1\). Using the simplification

we recognize the right-hand side as Schläfli’s formula [10, (1.1)] for the number of regions of a generic arrangement of \(n-d+j\) hyperplanes in j-dimensional space. The resulting formula for \(r_j(\mathcal {A})\) allows us to recover the formula of Cover and Efron [10, Thm. 1] for the sum of the \(f_j(C)\) over all regions.
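The passage from the characteristic polynomial to Schläfli's count can be made concrete. The following Python sketch assembles the jth-level characteristic polynomial of a generic arrangement from the ingredients of the proof of Lemma 6.4: Whitney's theorem with \(\rho (\mathcal {B})=\min \{|\mathcal {B}|,d\}\), the alternating binomial identity, and the factor \(\left( {\begin{array}{c}n\\ d-j\end{array}}\right) \) counting the j-dimensional subspaces. It then evaluates \(r_j(\mathcal {A})=(-1)^j\chi _{\mathcal {A},j}(-1)\) and compares the result with \(\left( {\begin{array}{c}n\\ d-j\end{array}}\right) \) times Schläfli's count \(2\sum _{i=0}^{j-1}\left( {\begin{array}{c}n-d+j-1\\ i\end{array}}\right) \). The closed form coded here is a reconstruction from those proof steps, so its arrangement of terms is an assumption and may differ from (6.6); the function names are illustrative.

```python
from math import comb

def generic_level_poly(n, d, j):
    """Ascending coefficients of chi_{A,j} for n generic hyperplanes in R^d, 0 < j <= d <= n.

    Reconstructed from the proof steps (an assumption, not a quoted formula).
    """
    m = n - d + j                      # hyperplanes in each j-dimensional restriction
    coeffs = [0] * (j + 1)
    for k in range(j):                 # subsets of size k < j have rank k
        coeffs[j - k] = (-1) ** k * comb(m, k)
    coeffs[0] = (-1) ** j * comb(m - 1, j - 1)   # all subsets of size >= j, collected
    return [comb(n, d - j) * c for c in coeffs]

def regions(n, d, j):
    """r_j = (-1)^j * chi_{A,j}(-1)."""
    c = generic_level_poly(n, d, j)
    return (-1) ** j * sum(a * (-1) ** i for i, a in enumerate(c))

def schlaefli(m, j):
    """Number of regions of m generic linear hyperplanes in R^j."""
    return 2 * sum(comb(m - 1, i) for i in range(j))

# Three generic lines in the plane: 6 two-dimensional regions and 6 rays.
print(generic_level_poly(3, 2, 2), regions(3, 2, 2), regions(3, 2, 1))
```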

If one takes one of these j-dimensional regions uniformly at random, then one also recovers the expression for the average number of j-dimensional faces from [10, Thm. 3’]. Moreover, (6.6) and Theorem 6.1 together yield a closed formula for the expected intrinsic volumes of the regions. In particular, the d-dimensional regions have expected intrinsic volumes of

This is [18, Thm. 4.1].

6.1.3 Braid and Coxeter Arrangements

Finally, we compute the jth-level characteristic polynomial for the three families of arrangements

These arrangements are particularly nice to work with as the d-dimensional regions are all isometric; these chambers are indeed given by

The characteristic polynomials of these arrangements are well known, see for example [6, Sect. 6.4],

The bivariate polynomial \(X_{\mathcal {A}_A}(s,t)\) (along with affine generalizations) has been computed in [7, Thm. 8.3.1]. We derive this again, along with polynomials for the other two arrangements, from the known characteristic polynomials.

Lemma 6.5

The jth-level characteristic polynomials for the above defined hyperplane arrangements are given by

where \({\big \{\begin{array}{l}{d}\\ {j}\end{array}\big \}}\) denote the Stirling numbers of the second kind.

Proof

We first discuss the case \(\mathcal {A}=\mathcal {A}_A\). From the formula for the chambers of \(\mathcal {A}\) it is seen that an element in \(\mathcal {L}(\mathcal {A})\) is of the form

where \(k_1\le \ell _1< k_2\le \ell _2 < \dots \). More precisely, for \(L\in \mathcal {L}_j(\mathcal {A})\) there exists a unique partition \(I_1,\ldots ,I_j\), each non-empty, of \(\{1,\ldots ,d\}\) such that \(L=\{\varvec{x}\in \mathbbm {R}^d\mathrel {\mathop {:}}\forall i=1,\ldots ,j, \forall a,b\in I_i, x_a=x_b\}\). The corresponding reduction \(\mathcal {A}^L\) is easily seen to be a nonsingular linear transformation of the j-dimensional braid arrangement, so that \(\chi _{\mathcal {A}^L}(t) = \prod _{i=0}^{j-1} (t-i)\). Since the number of partitions of \(\{1,\ldots ,d\}\) into j non-empty sets is given by \({\big \{\begin{array}{l}{d}\\ {j}\end{array}\big \}}\), cf. [33], and by the characterisation (2.15) of \(\chi _{\mathcal {A},j}(t)\), we obtain the claim in the case \(\mathcal {A}=\mathcal {A}_A\).
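The counting argument for \(\mathcal {A}_A\) can be cross-checked by brute force. The Python sketch below assumes that \(\mathcal {A}_A\) consists of the hyperplanes \(x_a=x_b\), \(a<b\), and uses the level polynomial \({\big \{\begin{array}{l}{d}\\ {j}\end{array}\big \}}\prod _{i=0}^{j-1}(t-i)\) obtained from the argument above. It enumerates the faces of the arrangement through the sign vectors of integer points and compares the number of j-dimensional faces with \((-1)^j\chi _{\mathcal {A},j}(-1)={\big \{\begin{array}{l}{d}\\ {j}\end{array}\big \}}\,j!\). All function names are illustrative.

```python
from functools import lru_cache
from itertools import product
from math import factorial

@lru_cache(maxsize=None)
def stirling2(n, k):
    """Stirling numbers of the second kind via S(n,k) = k S(n-1,k) + S(n-1,k-1)."""
    if n == k:
        return 1
    if k == 0 or k > n:
        return 0
    return k * stirling2(n - 1, k) + stirling2(n - 1, k - 1)

def level_poly_at(d, j, t):
    """Value of S(d,j) * prod_{i<j} (t - i) at t."""
    val = stirling2(d, j)
    for i in range(j):
        val *= (t - i)
    return val

def braid_faces_by_dim(d):
    """Brute-force count of the faces of {x_a = x_b} in R^d, indexed by dimension."""
    faces = {}
    for x in product(range(d), repeat=d):
        # A face is identified by the signs of x_a - x_b over all pairs a < b.
        sign = tuple((x[a] > x[b]) - (x[a] < x[b])
                     for a in range(d) for b in range(a + 1, d))
        faces[sign] = len(set(x))   # dimension = number of distinct coordinate values
    counts = [0] * (d + 1)
    for dim in faces.values():
        counts[dim] += 1
    return counts

d = 4
counts = braid_faces_by_dim(d)
formula = [0] + [(-1) ** j * level_poly_at(d, j, -1) for j in range(1, d + 1)]
print(counts, formula)
```

Every face contains an integer point with coordinates in \(\{0,\ldots ,d-1\}\), which is why the finite enumeration suffices.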

In the case \(\mathcal {A}=\mathcal {A}_{BC}\) we can argue similarly, but we need to keep in mind the extra role of the origin. For every element \(L\in \mathcal {L}(\mathcal {A})\) there exists a subset I of \(\{1,\ldots ,d\}\) of cardinality \(|I|\ge j\), and a partition \(I_1,\ldots ,I_j\) of I such that \(L=\{\varvec{x}\in \mathbbm {R}^d\mathrel {\mathop {:}}\forall a\not \in I, x_a=0 \text { and } \forall i=1,\ldots ,j, \forall a,b\in I_i, x_a=x_b\}\). The same argument as in the case \(\mathcal {A}=\mathcal {A}_A\), along with the identity \(\sum _{i=j}^d \left( {\begin{array}{c}d\\ i\end{array}}\right) {\big \{\begin{array}{l}{i}\\ {j}\end{array}\big \}} = {\big \{\begin{array}{l}{d+1}\\ {j+1}\end{array}\big \}}\), then settles the case \(\mathcal {A}=\mathcal {A}_{BC}\).
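The Stirling number identity invoked here can be verified numerically for small parameters. One way to see it combinatorially: in a partition of \(\{1,\ldots ,d+1\}\) into \(j+1\) blocks, the block containing \(d+1\) determines the complement of I, and the remaining blocks partition I into j sets. The Python sketch below checks only this identity; it uses the explicit inclusion-exclusion formula for the Stirling numbers, and the function names are illustrative.

```python
from math import comb, factorial

def stirling2(n, k):
    """Stirling numbers of the second kind via the explicit alternating sum."""
    total = sum((-1) ** (k - i) * comb(k, i) * i ** n for i in range(k + 1))
    return total // factorial(k)   # the sum is always divisible by k!

def lhs(d, j):
    """sum_{i=j}^{d} C(d, i) * S(i, j)."""
    return sum(comb(d, i) * stirling2(i, j) for i in range(j, d + 1))

def rhs(d, j):
    """S(d+1, j+1)."""
    return stirling2(d + 1, j + 1)

# Compare both sides for all 0 <= j <= d <= 10.
failures = [(d, j) for d in range(0, 11) for j in range(0, d + 1)
            if lhs(d, j) != rhs(d, j)]
print(failures)
```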

In the case \(\mathcal {A}=\mathcal {A}_D\) we have two types of linear subspaces:

For the first type of linear subspace we obtain a reduction \(\mathcal {A}^{L_1}\) that is isomorphic to the arrangement \(\mathcal {A}_D\), while for the second type we obtain a reduction \(\mathcal {A}^{L_2}\) that is isomorphic to the arrangement \(\mathcal {A}_{BC}\) (each, of course, of the corresponding dimension). The number of subspaces of type \(L_1\) is given by \({\big \{\begin{array}{l}{d}\\ {j}\end{array}\big \}}\) (as in the case \(\mathcal {A}=\mathcal {A}_A\)), while the number of subspaces of type \(L_2\) is given by \({\big \{\begin{array}{l}{d+1}\\ {j+1}\end{array}\big \}}-{\big \{\begin{array}{l}{d}\\ {j}\end{array}\big \}}\) (as in the case \(\mathcal {A}=\mathcal {A}_{BC}\), but noting that \(|I|=d\) does not give a BC-type reduction). The same argument as before now yields the formula

which settles the case \(\mathcal {A}=\mathcal {A}_D\). \(\square \)

As before in the case of generic hyperplanes in Sect. 6.1.2, we finish by considering resulting formulas for uniformly random j-dimensional regions of the arrangement. We restrict to the arrangements \(\mathcal {A}_A\) and \(\mathcal {A}_{BC}\), and we restrict the formulas to the statistical dimensions. These statistical dimensions are particularly interesting for applications as seen in [5], where only the d-dimensional regions were considered. (Here, of course, taking the expectation is superfluous since all d-chambers of these arrangements are isometric; for the lower-dimensional regions this is no longer true.)
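For the d-dimensional chambers, the statistical dimension can be read off directly from the characteristic polynomial. The Python sketch below rests on several assumptions that are stated here rather than derived: the Klivans–Swartz relation (the case \(j=d\) of Theorem 6.1) in the form that the kth intrinsic volumes of all chambers sum to the absolute value of the coefficient of \(t^k\) in \(\chi _{\mathcal {A}}(t)\); the standard factorizations \(\chi _{\mathcal {A}_A}(t)=\prod _{i=0}^{d-1}(t-i)\) and \(\chi _{\mathcal {A}_{BC}}(t)=\prod _{i=0}^{d-1}(t-2i-1)\); and the chamber counts \(d!\) and \(2^d d!\). Since the chambers are isometric, each one then has intrinsic volumes \(|a_k|\) divided by the number of chambers, and the statistical dimension is computed as \(\sum _k k\,v_k\). The function names are illustrative.

```python
from fractions import Fraction
from math import factorial

def poly_from_roots(roots):
    """Ascending coefficients of prod (t - r) over the given roots."""
    coeffs = [Fraction(1)]
    for r in roots:
        new = [Fraction(0)] * (len(coeffs) + 1)
        for i, c in enumerate(coeffs):
            new[i + 1] += c        # contribution of t * c t^i
            new[i] -= r * c        # contribution of -r * c t^i
        coeffs = new
    return coeffs

def chamber_intrinsic_volumes(roots, n_chambers):
    """v_k of a single chamber, assuming isometric chambers and Klivans-Swartz."""
    return [abs(c) / n_chambers for c in poly_from_roots(roots)]

def statistical_dimension(v):
    """delta = sum_k k * v_k."""
    return sum(k * vk for k, vk in enumerate(v))

def harmonic(n):
    """nth harmonic number as an exact fraction."""
    return sum(Fraction(1, i) for i in range(1, n + 1))

d = 4
v_A = chamber_intrinsic_volumes(range(d), factorial(d))
v_BC = chamber_intrinsic_volumes([2 * i + 1 for i in range(d)], 2 ** d * factorial(d))
delta_A = statistical_dimension(v_A)
delta_BC = statistical_dimension(v_BC)
print(delta_A, harmonic(d), delta_BC, harmonic(d) / 2)
```

Under these assumptions the two chambers have statistical dimensions \(H_d\) and \(\tfrac{1}{2}H_d\), respectively, which the sketch reproduces for \(d=4\).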

Recall that the statistical dimension is given by \(\delta (C)=v_C'(1)\). Using again \(r_j(\mathcal {A})=(-1)^j\chi _{\mathcal {A},j}(-1)\), we obtain

We thus obtain:

where \(H_j\) denotes the jth harmonic number. We have thus derived the following application.

Proposition 6.6

Let \(F_A\in \mathcal {R}_j(\mathcal {A}_A)\) and \(F_{BC}\in \mathcal {R}_j(\mathcal {A}_{BC})\) be chosen uniformly at random among all elements in \(\mathcal {R}_j(\mathcal {A}_A)\) and \(\mathcal {R}_j(\mathcal {A}_{BC})\), respectively. Then their expected statistical dimensions are given by

Notes

We only discuss linear hyperplane arrangements; for generic affine hyperplane arrangements see for example [6].

References

Affentranger, F., Schneider, R.: Random projections of regular simplices. Discrete Comput. Geom. 7(3), 219–226 (1992)

Amelunxen, D.: Measures on polyhedral cones: characterizations and kinematic formulas. http://arxiv.org/abs/1412.1569 (2014)

Amelunxen, D., Bürgisser, P.: Intrinsic volumes of symmetric cones and applications in convex programming. Math. Program. 149(1–2), 105–130 (2013)

Amelunxen, D., Lotz, M.: Gordon’s inequality and condition numbers in conic optimization. http://arxiv.org/abs/1408.3016 (2014)

Amelunxen, D., Lotz, M., McCoy, M.B., Tropp, J.A.: Living on the edge: phase transitions in convex programs with random data. Inf. Inference 3(3), 224–294 (2014)

Ardila, F.: Algebraic and geometric methods in enumerative combinatorics. http://arxiv.org/abs/1409.2562 (2014)

Athanasiadis, C.A.: Algebraic combinatorics of graph spectra, subspace arrangements and Tutte polynomials. PhD thesis, Massachusetts Institute of Technology, Cambridge (1996). https://dspace.mit.edu/handle/1721.1/38401

Athanasiadis, C.A.: Characteristic polynomials of subspace arrangements and finite fields. Adv. Math. 122(2), 193–233 (1996)

Barvinok, A.: A Course in Convexity. Graduate Studies in Mathematics, vol. 54. American Mathematical Society, Providence, RI (2002)

Cover, T.M., Efron, B.: Geometrical probability and random points on a hypersphere. Ann. Math. Stat. 38, 213–220 (1967)

Donoho, D.L., Tanner, J.: Counting faces of randomly projected polytopes when the projection radically lowers dimension. J. Am. Math. Soc. 22(1), 1–53 (2009)

Feldman, D.V., Klain, D.A.: Angles as probabilities. Am. Math. Monthly 116(8), 732–735 (2009)

Glasauer, S.: Integralgeometrie konvexer Körper im sphärischen Raum. PhD thesis, Albert-Ludwigs-Universität, Freiburg im Breisgau (1995). http://www.hs-augsburg.de/~glasauer/publ/diss.pdf

Goldstein, L., Nourdin, I., Peccati, G.: Gaussian phase transitions and conic intrinsic volumes: steining the Steiner formula. http://arxiv.org/abs/1411.6265 (2014)

Grünbaum, B.: Grassmann angles of convex polytopes. Acta Math. 121, 293–302 (1968)

Grünbaum, B.: Convex Polytopes. Graduate Texts in Mathematics, vol. 221, 2nd edn. Prepared and with a preface by V. Kaibel, V. Klee, G.M. Ziegler. Springer, New York (2003)

Howard, R.: The Kinematic Formula in Riemannian Homogeneous Spaces. Memoirs of the American Mathematical Society, vol. 106(509). American Mathematical Society, Providence (1993)

Hug, D., Schneider, R.: Random conical tessellations. Discrete Comput. Geom. 56(2), 395–426 (2016)

Jurrius, R.: Relations between Möbius and coboundary polynomials. Math. Comput. Sci. 6(2), 109–120 (2012)

Kabluchko, Z., Vysotsky, V., Zaporozhets, D.: Convex hulls of random walks, hyperplane arrangements, and Weyl chambers. http://arxiv.org/abs/1510.04073 (2015)

Klain, D.A., Rota, G.-C.: Introduction to Geometric Probability. Lezioni Lincee. Cambridge University Press, Cambridge (1997)

Klivans, C.J., Swartz, E.: Projection volumes of hyperplane arrangements. Discrete Comput. Geom. 46(3), 417–426 (2011)

Lawrence, J.: A short proof of Euler’s relation for convex polytopes. Canad. Math. Bull. 40(4), 471–474 (1997)

McCoy, M.B., Tropp, J.A.: From Steiner formulas for cones to concentration of intrinsic volumes. Discrete Comput. Geom. 51(4), 926–963 (2014)

McMullen, P.: Non-linear angle-sum relations for polyhedral cones and polytopes. Math. Proc. Camb. Philos. Soc. 78(2), 247–261 (1975)

Orlik, P., Terao, H.: Arrangements of Hyperplanes. Grundlehren der Mathematischen Wissenschaften, vol. 300. Springer, Berlin (1992)

Perles, M.A., Shephard, G.C.: Angle sums of convex polytopes. Math. Scand. 21, 199–218 (1967)

Rockafellar, R.T.: Convex Analysis. Princeton Mathematical Series, vol. 28. Princeton University Press, Princeton (1970)