Abstract

Given two polygonal curves in the plane, there are many ways to define a notion of similarity between them. One popular measure is the Fréchet distance. Since it was proposed by Alt and Godau in 1992, many variants and extensions have been studied. Nonetheless, even more than 20 years later, the original \(O(n^2 \log n)\) algorithm by Alt and Godau for computing the Fréchet distance remains the state of the art (here, n denotes the number of edges on each curve). This has led Helmut Alt to conjecture that the associated decision problem is 3SUM-hard. In recent work, Agarwal et al. show how to break the quadratic barrier for the discrete version of the Fréchet distance, where one considers sequences of points instead of polygonal curves. Building on their work, we give a randomized algorithm to compute the Fréchet distance between two polygonal curves in time \(O(n^2 \sqrt{\log n}(\log \log n)^{3/2})\) on a pointer machine and in time \(O(n^2(\log \log n)^2)\) on a word RAM. Furthermore, we show that there exists an algebraic decision tree for the decision problem of depth \(O(n^{2-\varepsilon })\), for some \(\varepsilon > 0\). We believe that this reveals an intriguing new aspect of this well-studied problem. Finally, we show how to obtain the first subquadratic algorithm for computing the weak Fréchet distance on a word RAM.

1 Introduction

Shape matching is a fundamental problem in computational geometry, computer vision, and image processing. A simple version can be stated as follows: given a database \({\mathcal {D}}\) of shapes (or images) and a query shape S, find the shape in \({\mathcal {D}}\) that most resembles S. However, before we can solve this problem, we first need to address an issue: what does it mean for two shapes to be “similar”? In the mathematical literature, one can find many different notions of distance between two sets, a prominent example being the Hausdorff distance. Informally, the Hausdorff distance is defined as the maximal distance between two elements when every element of one set is mapped to the closest element in the other. It has the advantage of being simple to describe and easy to compute for discrete sets. In the context of shape matching, however, the Hausdorff distance often turns out to be unsatisfactory: it does not take the continuity of the shapes into account. There are well-known examples where the distance fails to capture the similarity of shapes as perceived by human observers [6].

In order to address this issue, Alt and Godau introduced the Fréchet distance into the computational geometry literature [8, 45]. They argued that the Fréchet distance is better suited as a similarity measure, and they described an \(O(n^2\log n)\) time algorithm to compute it on a real RAM or pointer machine.Footnote 1 Since Alt and Godau’s seminal paper, there has been a wealth of research in various directions, such as extensions to higher dimensions [7, 23, 26, 28, 33, 46], approximation algorithms [9, 10, 37], the geodesic and the homotopic Fréchet distance [29, 34, 38, 48], and much more [2, 22, 25, 35, 36, 51, 54, 55]. Most known approximation algorithms make further assumptions on the curves, and only an \(O(n^2)\)-time approximation algorithm is known for arbitrary polygonal curves [24]. The Fréchet distance and its variants, such as dynamic time-warping [13], have found various applications, with recent work particularly focusing on geographic applications such as map-matching tracking data [15, 63] and moving objects analysis [19, 20, 47].

Despite the large amount of published research, the original algorithm by Alt and Godau has not been improved, and the quadratic barrier on the running time of the associated decision problem remains unbroken. If we cannot improve on a quadratic bound for a geometric problem despite many efforts, a possible culprit may be the underlying 3SUM-hardness [44]. This situation induced Helmut Alt to make the following conjecture.Footnote 2

Conjecture 1.1

(Alt’s conjecture) Let P, Q be two polygonal curves in the plane. Then it is 3SUM-hard to decide whether the Fréchet distance between P and Q is at most 1.

Here, 1 can be considered as an arbitrary constant, which can be changed to any other bound by scaling the curves. So far, the best unconditional lower bound for the problem is \(\Omega (n \log n)\) steps in the algebraic computation tree model [21].

Recently, Agarwal et al. [1] showed how to compute the discrete version of the Fréchet distance in subquadratic time, namely in \(O\big (n^2 \, \frac{\log \log n}{\log n}\big )\) time. Their approach relies on reusing small parts of the solution. We follow a similar approach based on the so-called Four-Russians trick, which precomputes small recurring parts of the solution and uses table lookup to speed up the whole computation.Footnote 3 The result by Agarwal et al. is stated in the word RAM model of computation. They ask whether their result can be generalized to the case of the original (continuous) Fréchet distance.

1.1 Our Contribution

We address the question by Agarwal et al. and show how to extend their approach to the Fréchet distance between two polygonal curves. Our algorithm requires total expected time \(O(n^2 \sqrt{\log n} (\log \log n)^{3/2})\). This is the first algorithm with a running time of \(o(n^2 \log n)\) and constitutes the first improvement for the general case since the original paper by Alt and Godau [8]. To achieve this running time, we give the first subquadratic algorithm for the decision problem of the Fréchet distance. We emphasize that these algorithms run on a real RAM/pointer machine and do not require any bit-manipulation tricks. Therefore, our results are more in the line of Chan’s recent subcubic-time algorithms for all-pairs-shortest paths [30, 31] or recent subquadratic-time algorithms for min-plus convolution [16] than the subquadratic-time algorithms for 3SUM due to Baran et al. [12]. If we relax the model to allow constant time table-lookups, the running time can be improved to be almost quadratic, up to \(O(\log \log n)\) factors. As in Agarwal et al., our results are achieved by first giving a faster algorithm for the decision version, and then performing an appropriate search over the critical values to solve the optimization problem.

In addition, we show that non-uniformly, the Fréchet distance can be computed in subquadratic time. More precisely, we prove that the decision version of the problem can be solved by an algebraic decision tree [11] of depth \(O(n^{2-\varepsilon })\), for some fixed \(\varepsilon > 0\). It is, however, not clear how to implement this decision tree in subquadratic time, which hints at a discrepancy between the decision tree and the uniform complexity of the Fréchet problem.

Finally, we consider the weak Fréchet distance, where we are allowed to walk backwards along the curves. In this case, our framework allows us to achieve a subquadratic algorithm on the word RAM. Refer to Table 1 for a comprehensive summary of our results.

1.2 Recent Developments

Recently, Ben Avraham et al. [14] presented a subquadratic algorithm for the discrete Fréchet distance with shortcuts that runs in \(O(n^{4/3}\log ^3 n)\) time. This running time resembles, at least superficially, our result on algebraic computation trees for the general discrete Fréchet distance.

When we initially announced our results, we believed that they provided strong evidence that Alt’s conjecture is false. Indeed, for a long time it was conjectured that no subquadratic decision tree exists for 3SUM [57] and an \(\Omega (n^2)\) lower bound is known in a restricted linear decision tree model [4, 40]. However, in a recent–and in our opinion quite astonishing–result, Grønlund and Pettie showed that if we allow only slightly more powerful algebraic decision trees than in the previous lower bounds, one can decide 3SUM non-uniformly in \(O(n^{2-\varepsilon })\) steps, for some fixed \(\varepsilon > 0\) [52]. They also show that this leads to a general subquadratic algorithm for 3SUM, a situation very similar to the Fréchet distance as described in the present paper. Thus, despite some interesting developments, the status of Alt’s conjecture remains as open as before. However, we can now see that there exists a wide variety of efficiently solvable problems such as (in addition to 3SUM and the Fréchet distance) Sorting \(X+Y\) [41], Min-Plus-Convolution [16], or finding the Delaunay triangulation for a point set that has been sorted in two orthogonal directions [27], for which there seems to be a noticeable gap between the decision tree complexity and the uniform complexity.

In our initial announcement, we also asked whether, besides 3SUM-hardness, there may be other reasons to believe that the quadratic running time for the Fréchet distance cannot be improved. Karl Bringmann provided an interesting answer to this question by showing that any algorithm for the Fréchet distance with running time \(O(n^{2-\varepsilon })\), for some fixed \(\varepsilon > 0\), would violate the strong exponential time hypothesis (SETH) [17]. These results were later refined and improved to show that the lower bound holds in basically all settings (with the notable exception of the one-dimensional continuous Fréchet distance, which is still unresolved) [18]. We believe that these developments show that the Fréchet distance still holds many interesting aspects to be discovered and remains an intriguing object of further study.

2 Preliminaries and Basic Definitions

Let P and Q be two polygonal curves in the plane, defined by their vertices \(p_0, p_1, \dots , p_n\) and \(q_0, q_1, \dots , q_n\). Depending on the context, we interpret P and Q either as sequences of n edges each, or as continuous functions \(P:[0, n] \rightarrow {{\mathbb {R}}}^2\) and \(Q:[0, n] \rightarrow {{\mathbb {R}}}^2\). In the latter case, we have \(P(i + \lambda ) = (1-\lambda )p_i + \lambda p_{i+1}\) for \(i = 0, \dots , n-1\) and \(\lambda \in [0,1]\), and similarly for Q. Let \(\Psi \) be the set of all continuous and nondecreasing functions \(\alpha :[0,1] \rightarrow [0,n]\) with \(\alpha (0) = 0\) and \(\alpha (1) = n\). The Fréchet distance between P and Q is defined as

\[d_F(P, Q) :=\inf _{\alpha , \beta \in \Psi } \; \max _{x \in [0,1]} \Vert P(\alpha (x)) - Q(\beta (x)) \Vert ,\]

where \({\Vert }{\cdot }{\Vert }\) denotes the Euclidean distance.

The classic approach to computing \(d_F(P, Q)\) uses the free-space diagram \({{\mathrm{FSD}}}(P, Q)\). It is defined as

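\[{{\mathrm{FSD}}}(P, Q) :=\{ (x, y) \in [0,n] \times [0,n] \mid \Vert P(x) - Q(y) \Vert \le 1 \}.\]

In other words, \({{\mathrm{FSD}}}(P, Q)\) is the subset of the joint parameter space for P and Q where the corresponding points on the curves have distance at most 1, see Fig. 1.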

Fig. 1 Two polygonal curves P and Q, together with their associated free-space diagram. The reachable region \({{\mathrm{reach}}}(P,Q)\) is shown in blue. For example, the white area in C(2, 1), denoted F(2, 1), corresponds to all points on the third edge of P and the second edge of Q that have distance at most 1. As \((5,5) \in {{\mathrm{reach}}}(P,Q)\), we have \(d_F(P,Q) \le 1\).

The structure of \({{\mathrm{FSD}}}(P,Q)\) is easy to describe. Let \(R :=[0,n] \times [0, n]\) be the ground set. We subdivide R into \(n^2\) cells \(C(i,j) = [i, i+1] \times [j, j+1]\), for \(i,j = 0, \dots , n-1\). The cell C(i, j) corresponds to the edge pair \(e_{i+1}\) and \(f_{j+1}\), where \(e_{i+1}\) is the \((i+1)\)th edge of P and \(f_{j+1}\) is the \((j+1)\)th edge of Q. Then the set \(F(i,j) :={{\mathrm{FSD}}}(P,Q) \cap C(i,j)\) represents all pairs of points on \(e_{i+1} \times f_{j+1}\) with distance at most 1. Elementary geometry shows that F(i, j) is the intersection of C(i, j) with an ellipse [8]. In particular, the set F(i, j) is convex, and the intersection of \({{\mathrm{FSD}}}(P,Q)\) with the boundary of C(i, j) consists of four (possibly empty) intervals, one on each side of \({\partial }C(i,j)\). We call these intervals the doors of C(i, j) in \({{\mathrm{FSD}}}(P,Q)\). A door is said to be closed if the interval is empty, and open otherwise.

A path \(\pi \) in \({{\mathrm{FSD}}}(P,Q)\) is bimonotone if it is both x- and y-monotone, i.e., every vertical and every horizontal line intersects \(\pi \) in at most one connected component. Alt and Godau observed that it suffices to decide whether there exists a bimonotone path from (0, 0) to (n, n) inside \({{\mathrm{FSD}}}(P,Q)\). We define the reachable region \({{\mathrm{reach}}}(P,Q)\) as the set of points in \({{\mathrm{FSD}}}(P,Q)\) that are reachable from (0, 0) on a bimonotone path. Then, \(d_F(P,Q) \le 1\) if and only if \((n,n) \in {{\mathrm{reach}}}(P,Q)\), see Fig. 1. It is not necessary to compute all of \({{\mathrm{reach}}}(P,Q)\): since \({{\mathrm{FSD}}}(P,Q)\) is convex inside each cell, we only need the intersections \({{\mathrm{reach}}}(P,Q) \cap {\partial }C(i,j)\). The sets defined by \({{\mathrm{reach}}}(P,Q) \cap {\partial }C(i,j)\) are subintervals of the doors of the free-space diagram, and they are defined by endpoints of doors in the free-space diagram in the same row or column. We call the intersection of a door with \({{\mathrm{reach}}}(P,Q)\) a reach-door. The reach-doors can be found in \(O(n^2)\) time through a simple breadth-first-traversal of the cells [8]. In the next sections, we show how to obtain the crucial information, i.e., whether \((n,n) \in {{\mathrm{reach}}}(P,Q)\), in \(o(n^2)\) time instead.
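The quadratic-time propagation of reach-doors can be sketched as follows. This is a minimal illustration under stated assumptions, not the implementation from [8]: curves are lists of `(x, y)` tuples, the threshold is fixed to 1, floating-point degeneracies are ignored, and the names `door`, `propagate` and `frechet_at_most_one` are hypothetical.

```python
import math

def door(p, a, b):
    """Door of vertex p on segment ab: the interval (lo, hi) in [0, 1] of
    parameters t with |a + t*(b - a) - p| <= 1, or None if it is closed."""
    dx, dy = b[0] - a[0], b[1] - a[1]
    fx, fy = a[0] - p[0], a[1] - p[1]
    A = dx * dx + dy * dy
    B = 2.0 * (fx * dx + fy * dy)
    C = fx * fx + fy * fy - 1.0
    if A == 0.0:                                  # degenerate edge
        return (0.0, 1.0) if C <= 0.0 else None
    disc = B * B - 4.0 * A * C
    if disc < 0.0:
        return None
    r = math.sqrt(disc)
    lo = max(0.0, (-B - r) / (2.0 * A))
    hi = min(1.0, (-B + r) / (2.0 * A))
    return (lo, hi) if lo <= hi else None

def propagate(perpendicular, opposite, target):
    """Reach-door on an exit side of a cell whose door is `target`.
    `perpendicular` is the reach-door on the adjacent entry side,
    `opposite` the reach-door on the parallel entry side."""
    if target is None:
        return None
    if perpendicular is not None:                 # whole door is reachable
        return target
    if opposite is None:                          # cell cannot be entered
        return None
    lo = max(opposite[0], target[0])              # must stay monotone
    return (lo, target[1]) if lo <= target[1] else None

def frechet_at_most_one(P, Q):
    n, m = len(P) - 1, len(Q) - 1
    if math.dist(P[0], Q[0]) > 1 or math.dist(P[n], Q[m]) > 1:
        return False
    # L[i][j]: reach-door on the left side of cell (i, j);
    # B[i][j]: reach-door on the bottom side of cell (i, j).
    L = [[None] * m for _ in range(n + 1)]
    B = [[None] * (m + 1) for _ in range(n)]
    for j in range(m):                            # left boundary x = 0
        d = door(P[0], Q[j], Q[j + 1])
        if d is None or d[0] > 0.0:
            break
        L[0][j] = d
        if d[1] < 1.0:
            break
    for i in range(n):                            # bottom boundary y = 0
        d = door(Q[0], P[i], P[i + 1])
        if d is None or d[0] > 0.0:
            break
        B[i][0] = d
        if d[1] < 1.0:
            break
    for i in range(n):
        for j in range(m):
            right = door(P[i + 1], Q[j], Q[j + 1])
            top = door(Q[j + 1], P[i], P[i + 1])
            L[i + 1][j] = propagate(B[i][j], L[i][j], right)
            B[i][j + 1] = propagate(L[i][j], B[i][j], top)
    # (n, m) is free, so any nonempty reach-door on the last cell contains it.
    return L[n][m - 1] is not None or B[n - 1][m] is not None
```

The sketch visits every cell once, which gives the \(O(n^2)\) bound mentioned above; only the intervals on cell boundaries are stored, never the reachable region itself.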

2.1 Basic Approach and Intuition

In our algorithm for the decision problem, we basically want to compute \({{\mathrm{reach}}}(P,Q)\). But instead of propagating the reachability information cell by cell, we always group \(\tau \times \tau \) cells (with \(1 \ll \tau \ll n\)) into an elementary box of cells. When processing a box, we can assume that we know which parts of the left and the bottom boundary of the box are reachable. That is, we know the reach-doors on the bottom and left boundary, and we need to compute the reach-doors on the top and right boundary of the elementary box. These reach-doors are determined by the combinatorial structure of the box. More specifically, suppose we know for every row and column the order of the door endpoints (including for the reach-doors on the left and bottom boundary). Then, we can deduce which of these door boundaries determine the reach-doors on the top and right boundary. We call the sequence of these orders the (full) signature of the box.

The total number of possible signatures is bounded by a function of \(\tau \) alone (namely \(\tau ^{O(\tau ^2)}\), see Sect. 3). Thus, if we pick \(\tau \) sufficiently small compared to n, we can pre-compute for all possible signatures the reach-doors on the top and right boundary, and build a data structure to query these quickly (Sect. 3). Since the reach-doors on the bottom and left boundary are required to make the signature, we initially have only incomplete signatures. In Sect. 4, we describe how to compute these efficiently. The incomplete signatures are then used to preprocess the data structure such that we can quickly find the full signature once we know the reach-doors of an elementary box. After building and preprocessing the data structure, it is possible to decide whether \(d_F(P,Q) \le 1\) efficiently by traversing the free-space diagram elementary box by elementary box, as explained in Sect. 5.

3 Building a Lookup Table

3.1 Preprocessing an Elementary Box

Before it considers the input, our algorithm builds a lookup table. As mentioned above, the purpose of this table is to speed up the computation of small parts of the free-space diagram.

Let \(\tau \in {\mathbb {N}}\) be a parameter.Footnote 4 The elementary box is a subdivision of \([0,\tau ]^2\) into \(\tau \) columns and rows, thus \(\tau ^2\) cells.Footnote 5 For \(i,j = 0, \dots , \tau -1\), we denote the cell \([i,i+1] \times [j, j+1]\) with D(i, j). We denote the left side of the boundary \({\partial }D(i,j)\) by l(i, j) and the bottom side by b(i, j). Note that l(i, j) coincides with the right side of \({\partial }D(i-1, j)\) and b(i, j) with the top of \({\partial }D(i, j-1)\). Thus, we write \(l(\tau , j)\) for the right side of \(D(\tau - 1, j)\) and \(b(i, \tau )\) for the top side of \(D(i, \tau - 1)\). Figure 2 shows the elementary box.

The door-order \(\sigma _j^r\) for a row j is a permutation of \(\{s_0, t_0, \dots , s_{\tau }, t_{\tau }\}\), having \(2\tau \,{+}\,2\) elements. For \(i = 1, \dots , \tau \), the element \(s_i\) represents the lower endpoint of the door on l(i, j), and \(t_i\) represents the upper endpoint. The elements \(s_0\) and \(t_0\) are an exception: they describe the reach-door on the boundary l(0, j) (i.e., its intersection with \({{\mathrm{reach}}}(P,Q)\)). The door-order \(\sigma _j^r\) represents the combinatorial order of these endpoints, as projected onto a vertical line, i.e., they are sorted into their vertical order. Some door-orders may encode the same combinatorial structure. In particular, when door i is closed, the exact position of \(s_i\) and \(t_i\) in a door-order is irrelevant, as long as \(t_i\) comes before \(s_i\). For a closed door i (\(i > 0\)), we assign \(s_i\) to the upper endpoint of l(i, j) and \(t_i\) to the lower endpoint. The values of \(s_0\) and \(t_0\) are defined by the reach-door and their relative order is thus a result of computation. We break ties between \(s_i\) and \(t_{i'}\) by placing \(s_i\) before \(t_{i'}\), and any other ties are resolved by index. A door-order \(\sigma _i^c\) is defined analogously for a column i. We write \(x <_i^c y\) if x comes before y in \(\sigma _i^c\), and \(x <_j^r y\) if x comes before y in \(\sigma _j^r\). An incomplete door-order is a door-order in which \(s_0\) and \(t_0\) are omitted (i.e. the intersection of \({{\mathrm{reach}}}(P,Q)\) with the door is still unknown); see Fig. 3.

Fig. 3 The door-order of a row (the vertical order of the points) encodes the combinatorial structure of the doors. The door-order for the row in the figure is \(s_1 s_3 s_4 t_5 t_3 t_0 s_2 t_4 s_0 s_5 t_1 t_2\). Note that \(s_0\) and \(t_0\) represent the reach-door, which is empty in this case. These are omitted in the incomplete door-order.
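To make the conventions for closed doors and ties concrete, here is a small sketch that sorts the door endpoints of one row into an incomplete door-order. It assumes that the doors are already given as parameter intervals in \([0,1]\) (or `None` for a closed door); the function name and the string labels are hypothetical.

```python
def incomplete_door_order(doors):
    """doors maps an index i >= 1 to the door on l(i, j), given as an
    interval (lo, hi) with 0 <= lo <= hi <= 1, or None if the door is closed.
    Returns the labels s_i, t_i in door-order (s_0 and t_0 are omitted)."""
    events = []
    for i, d in doors.items():
        # Closed door: s_i sits at the upper endpoint of l(i, j) and t_i at
        # the lower endpoint, so that t_i comes before s_i.
        lo, hi = d if d is not None else (1.0, 0.0)
        events.append((lo, 0, i, f"s{i}"))
        events.append((hi, 1, i, f"t{i}"))
    # Sort by position; on ties an s comes before a t, then smaller index first.
    events.sort()
    return [label for *_, label in events]
```

For instance, with door 1 equal to \([0.2, 0.9]\), door 2 closed, and door 3 equal to \([0, 0.5]\), the sketch produces \(s_3\, t_2\, s_1\, t_3\, t_1\, s_2\).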

We can now define the (full) signature of the elementary box as the aggregation of the door-orders of its rows and columns. Therefore, a signature \(\Sigma \,{=}\, (\sigma _1^c, \dots , \sigma _\tau ^c, \sigma _1^r, \dots , \sigma _\tau ^r)\) consists of \(2\tau \) door-orders: one door-order \(\sigma _i^c\) for each column i and one door-order \(\sigma _j^r\) for each row j of the elementary box. Similarly, an incomplete signature is the aggregation of incomplete door-orders.

For a given signature, we define the combinatorial reachability structure of the elementary box as follows. For each column i and for each row j, the combinatorial reachability structure indicates which door boundaries in the respective column or row define the reach-door of \(b(i, \tau )\) or \(l(\tau , j)\).

Lemma 3.1

Let \(\Sigma \) be a signature for the elementary box. Then we can determine the combinatorial reachability structure of the box in total time \(O(\tau ^2)\).

Proof

We use dynamic programming, very similar to the algorithm by Alt and Godau [8]. For each vertical edge l(i, j) we define a variable \({\widehat{l}}(i,j)\), and for each horizontal edge b(i, j) we define a variable \({\widehat{b}}(i,j)\). The \({\widehat{l}}(i,j)\) are pairs of the form \((s_u, t_v)\), representing the reach-door \({{\mathrm{reach}}}(P,Q) \cap l(i,j)\). If this reach-door is closed, then \(t_v <_j^r s_u\) holds. If the reach-door is open, then it is bounded by the lower endpoint of the door on l(u, j) and by the upper endpoint of the door on l(v, j). (Note that in this case we have \(v = i\).) Once again \(s_0\) and \(t_0\) are special and represent the reach-door on l(0, j). The variables \({\widehat{b}}(i,j)\) are defined analogously.

Now we can compute \({\widehat{l}}(i,j)\) and \({\widehat{b}}(i,j)\) recursively as follows: first, we set

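\[{\widehat{l}}(0,j) :=(s_0, t_0) \quad \text {and} \quad {\widehat{b}}(i,0) :=(s_0, t_0), \quad \text {for } i,j = 0, \dots , \tau -1.\]

Next, we describe how to find \({\widehat{l}}(i,j)\) given \({\widehat{l}}(i-1, j)\) and \({\widehat{b}}(i-1, j)\), see Fig. 4.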

Fig. 4 The three cases for the recursive definition of \({\widehat{l}}(i,j)\). If the lower boundary is reachable, we can reach the whole right door (left). If neither the lower nor the left boundary is reachable, the right door is not reachable either (middle). Otherwise, the lower boundary is the maximum of \({\widehat{l}}(i-1,j)\) and the lower boundary of the right door (right).

Case 1: Suppose \({\widehat{b}}(i-1, j)\) is open. This means that \(b(i-1, j)\) intersects \({{\mathrm{reach}}}(P,Q)\), so \({{\mathrm{reach}}}(P,Q) \cap l(i,j)\) is limited only by the door on l(i, j), and we can set \({\widehat{l}}(i,j) :=(s_i, t_i)\).

Case 2: If both \({\widehat{b}}(i-1, j)\) and \({\widehat{l}}(i-1, j)\) are closed, it is impossible to reach l(i, j) and thus we set \({\widehat{l}}(i,j) :={\widehat{l}}(i-1,j)\).

Case 3: If \({\widehat{b}}(i-1, j)\) is closed and \({\widehat{l}}(i-1, j)\) is open, we may be able to reach l(i, j) via \(l(i-1,j)\). Let \(s_u\) be the lower endpoint of \({\widehat{l}}(i-1, j)\). We need to pass l(i, j) above \(s_u\) and \(s_i\) and below \(t_i\), and therefore set \({\widehat{l}}(i,j) :=(\max (s_u, s_i), t_i)\), where the maximum is taken according to the order \(<_j^r\).

The recursion for the variable \({\widehat{b}}(i,j)\) is defined similarly. We can implement the recursion in time \(O(\tau ^2)\) for any given signature, for example by traversing the elementary box column by column, while processing each column from bottom to top. \(\square \)
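The three cases can be written down purely combinatorially. The following sketch handles a single row and assumes that the door-order is given as a whitespace-separated string of labels and that it is already known, for each cell of the row, whether \({\widehat{b}}\) is open; all function names are hypothetical, and the full procedure of Lemma 3.1 interleaves this with the analogous column recursion.

```python
def rank_of(order):
    """Position of each label in a door-order such as 's1 s3 t3 t1'."""
    return {label: pos for pos, label in enumerate(order.split())}

def is_open(pair, rank):
    """A pair (u, v) stands for (s_u, t_v); it is open iff s_u precedes t_v."""
    u, v = pair
    return rank[f"s{u}"] < rank[f"t{v}"]

def next_l(l_prev, b_prev_open, i, rank):
    """Compute l_hat(i, j) from l_hat(i-1, j) and the state of b_hat(i-1, j)."""
    if b_prev_open:                      # Case 1: limited only by door i
        return (i, i)
    if not is_open(l_prev, rank):        # Case 2: nothing reachable, stay closed
        return l_prev
    u, _ = l_prev                        # Case 3: enter from the left
    u_new = u if rank[f"s{u}"] > rank[f"s{i}"] else i
    return (u_new, i)

def row_reach(order, b_open):
    """b_open[k] tells whether b_hat(k, j) is open, for k = 0, 1, ...
    Returns l_hat on the right side of the last cell and whether it is open."""
    rank = rank_of(order)
    l = (0, 0)                           # initial value (s_0, t_0)
    for i, b in enumerate(b_open, start=1):
        l = next_l(l, b, i, rank)
    return l, is_open(l, rank)
```

Each step uses a constant number of comparisons in the door-order, so one row of \(\tau \) cells costs \(O(\tau )\) and the whole box \(O(\tau ^2)\), in line with the lemma.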

There are at most \(((2\tau +2)!)^{2\tau } = \tau ^{O(\tau ^2)}\) distinct signatures for the elementary box. We choose \(\tau = \lambda \sqrt{\log n/\log \log n}\) for a sufficiently small constant \(\lambda >0\), so that this number becomes o(n). Thus, during the preprocessing stage we have time to enumerate all possible signatures and determine the corresponding combinatorial reachability structure inside the elementary box. This information is then stored in an appropriate data structure.
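The choice of \(\tau \) can be checked with a short calculation; this is a sketch with unoptimized constants, and the base of the logarithm is immaterial. Using \(k! \le k^k\), we get

\[\log \big ( ((2\tau +2)!)^{2\tau } \big ) \le 2\tau (2\tau +2) \log (2\tau +2) = O(\tau ^2 \log \tau ).\]

For \(\tau = \lambda \sqrt{\log n/\log \log n}\) with \(\lambda \le 1\) and n large enough, we have \(\tau ^2 = \lambda ^2 \log n / \log \log n\) and \(\log \tau \le \frac{1}{2} \log \log n\), hence

\[\tau ^2 \log \tau \le \frac{\lambda ^2}{2} \log n.\]

The number of signatures is therefore \(n^{O(\lambda ^2)}\), which is o(n) once \(\lambda \) is a sufficiently small constant.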

3.2 Building the Data Structure

Before we describe this data structure, we first explain how the door-orders are represented. This depends on the computational model. By our choice of \(\tau \), there are o(n) distinct door-orders. On the word RAM, we represent each door-order and incomplete door-order by an integer between 1 and \((2\tau )!\). This fits into a word of \(\log n\) bits. On the pointer machine, we create a record for each door-order and incomplete door-order; we represent an order by a pointer to the corresponding record.

The data structure has two stages. In the first stage, we assume we know the incomplete door-order for each row and for each column of the elementary box,Footnote 6 and we wish to determine the incomplete signature. In the second stage we have obtained the reach-doors for the left and bottom sides of the elementary box, and we are looking for the full signature. The details of our method depend on the computational model. One way uses table lookup and requires the word RAM; the other way works on the pointer machine, but is a bit more involved.

3.2.1 Word RAM

We organize the lookup table as a large tree T. In the first stage, each level of T corresponds to a row or column of the elementary box. Thus, there are \(2\tau \) levels. Each node has \((2\tau )!\) children, representing the possible incomplete door-orders for the next row or column. Since we represent door-orders by positive integers, each node of T may store an array for its children; we can choose the appropriate child for a given incomplete door-order in constant time. Thus, determining the incomplete signature for an elementary box requires \(O(\tau )\) steps on a word RAM.
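The first stage on the word RAM can be illustrated as follows. The sketch assumes one particular encoding of an order as an integer (the lexicographic rank of the permutation, 0-based rather than 1-based as in the text) and a tree whose nodes are plain arrays of children; the text does not prescribe a specific encoding, and the names `perm_rank`, `build` and `lookup` are hypothetical.

```python
def perm_rank(perm):
    """Lexicographic rank in [0, k!) of a permutation of 0, ..., k-1."""
    remaining = list(range(len(perm)))
    rank = 0
    for x in perm:
        idx = remaining.index(x)
        rank = rank * len(remaining) + idx   # mixed-radix (factorial) digits
        remaining.pop(idx)
    return rank

def build(depth, fanout, prefix=()):
    """Complete tree with `depth` levels; every inner node is an array of
    `fanout` children. A leaf stores its path as a stand-in payload."""
    if depth == 0:
        return prefix
    return [build(depth - 1, fanout, prefix + (c,)) for c in range(fanout)]

def lookup(root, ids):
    """Follow one array access per level, i.e. O(len(ids)) steps in total."""
    node = root
    for c in ids:
        node = node[c]
    return node
```

In the setting of the text, `depth` would be \(2\tau \) and `fanout` would be \((2\tau )!\), and each leaf would store the identifier of an incomplete signature instead of the path.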

For the second stage, we again use a tree structure. Now the tree has \(O(\tau )\) layers, each with \(O(\log \tau )\) levels. Again, each layer corresponds to a row or column of the elementary box. The levels inside each layer then implement a balanced binary search tree that allows us to locate the endpoints of the reach-door within the incomplete signature. Since there are \(2\tau \) endpoints, this requires \(O(\log \tau )\) levels. Thus, it takes \(O(\tau \log \tau )\) time to find the full signature of a given elementary box.
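One layer of the second stage amounts to locating \(s_0\) and \(t_0\) among the sorted endpoints of a row or column. The sketch below mimics this with a binary search on numeric sort keys `(position, kind, index)`, where `kind` is 0 for an s-endpoint and 1 for a t-endpoint. This representation and the function name are assumptions made for illustration; the data structure in the text walks a precomputed search tree instead of storing coordinates.

```python
import bisect

def full_door_order(incomplete_keys, s0, t0):
    """incomplete_keys: sorted list of keys (position, kind, index) for the
    endpoints s_i, t_i with i >= 1. Inserts the reach-door endpoints s_0 and
    t_0 at positions s0 and t0 and returns the labels of the full door-order."""
    keys = list(incomplete_keys)
    for key in ((s0, 0, 0), (t0, 1, 0)):
        # The search takes O(log tau) comparisons; the list insertion itself
        # is linear here and only serves the illustration.
        bisect.insort(keys, key)
    return [("s" if kind == 0 else "t") + str(i) for _, kind, i in keys]
```

Because the keys compare by position first, then by kind, then by index, the tie-breaking rules for door-orders are respected automatically.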

3.2.2 Pointer machine

Unlike in the word RAM model, we are not allowed to store a lookup table on every level of the tree T, and there is no way to quickly find the appropriate child for a given door-order. Instead, we must rely on batch processing to achieve a reasonable running time.

Thus, suppose that during the first stage we want to find the incomplete signatures for a set B of m elementary boxes, where again for each box in B we know the incomplete door-order for each row and each column. Recall that we represent the door-order by a pointer to the corresponding record. With each such record, we store a queue of elementary boxes that is empty initially.

We now simultaneously propagate the boxes in B through T, proceeding level by level. In the first level, all of B is assigned to the root of T. Then, we go through the nodes of one level of T, from left to right. Let v be the current node of T. We consider each elementary box b assigned to v. We determine the next incomplete door-order for b, and we append b to the queue for this incomplete door-order. The queue is addressed through the corresponding record, so all elementary boxes with the same next incomplete door-order end up in the same queue. Next, we go through the nodes of the next level, again from left to right. Let \(v'\) be the current node. The node \(v'\) corresponds to a next incomplete door-order \(\sigma \) that extends the known signature of its parent. We consider the queue stored at the record for \(\sigma \). By construction, the elementary boxes that should be assigned to \(v'\) appear consecutively at the beginning of this queue. We remove these boxes from the queue and assign them to \(v'\). After this, all the queues are empty, and we can continue by propagating the boxes to the next level. During this procedure, we traverse each node of T a constant number of times, and in each level of T we consider all the boxes in B. Since T has o(n) nodes, the total running time is \(O(n + m\tau )\).
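The batch propagation can be emulated as follows. This is only a sketch: Python lists and deques stand in for pointer-machine structures, the classes `Record`, `Node` and `Box` and the helper `build_tree` are hypothetical, and a box recognizes its parent node by identity rather than by a counter.

```python
from collections import deque

class Record:                      # one record per incomplete door-order
    def __init__(self, name):
        self.name = name
        self.queue = deque()       # boxes waiting for this door-order

class Node:                        # node of the tree T
    def __init__(self, path):
        self.path = path           # door-order names on the way from the root
        self.children = []         # (record, child) pairs, left to right
        self.boxes = []

class Box:
    def __init__(self, orders):
        self.orders = orders       # one Record per level of T
        self.node = None

def build_tree(records, depth, path=()):
    node = Node(path)
    if depth > 0:
        node.children = [(r, build_tree(records, depth - 1, path + (r.name,)))
                         for r in records]
    return node

def propagate_boxes(root, boxes, depth):
    root.boxes = list(boxes)
    for b in boxes:
        b.node = root
    level = [root]
    for d in range(depth):
        # Distribute: nodes left to right, each box goes to the queue of its
        # next door-order (reached through the record, not through an array).
        for v in level:
            for b in v.boxes:
                b.orders[d].queue.append(b)
        # Collect: each child removes its boxes from the front of the queue.
        next_level = []
        for v in level:
            for rec, child in v.children:
                while rec.queue and rec.queue[0].node is v:
                    b = rec.queue.popleft()
                    b.node = child
                    child.boxes.append(b)
                next_level.append(child)
        level = next_level
```

The left-to-right order in both passes is what guarantees that the boxes belonging to a child sit at the front of the shared queue when that child is visited.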

For the second stage, the data structure works just as in the word RAM case, because no table lookup is necessary. Again, we need \(O(\tau \log \tau )\) steps to process one box. After the second stage, we obtain the combinatorial reachability structure of the box in constant time since we precomputed this information for each box (Lemma 3.1). Thus, we have shown the following lemma, independently of the computational model.

Lemma 3.2

For \(\tau = \lambda \sqrt{\log n/\log \log n}\), with a sufficiently small constant \(\lambda >0\), we can construct in o(n) time a data structure of size o(n) such that

- given a set of m elementary boxes where the incomplete door-orders are known, we can find the incomplete signature of each box in total time \(O(n + m \tau )\);

- given the incomplete signature and the reach-doors on the bottom and left boundary of an elementary box, we can find the full signature in \(O(\tau \log \tau )\) time;

- given the full signature of an elementary box, we can find the combinatorial reachability structure of the box in constant time.

4 Preprocessing a Given Input

Next, we perform a second preprocessing phase that considers the input curves P and Q. Our eventual goal is to compute the intersection of \({{\mathrm{reach}}}(P,Q)\) with the cell boundaries, taking advantage of the data structure from Sect. 3. For this, we aggregate the cells of \({{\mathrm{FSD}}}(P,Q)\) into (concrete) elementary boxes consisting of \(\tau \times \tau \) cells. There are \(n^2/\tau ^2\) such boxes. We may avoid rounding issues by either duplicating vertices or handling a small part of \({{\mathrm{FSD}}}(P,Q)\) without lookup tables.

The goal is to determine the signature for each elementary box S. At this point, this is not quite possible yet, since the signature depends on the intersection of \({{\mathrm{reach}}}(P,Q)\) with the lower and left boundary of S. Nonetheless, we can find the incomplete signature, in which the positions of \(s_0, t_0\) (the reach-door) in the (incomplete) door-orders \(\sigma _i^r\), \(\sigma _j^c\) are still to be determined.

Fig. 5 The free-space diagram \({{\mathrm{FSD}}}(P,Q)\) is subdivided into \(n^2/\tau ^2\) elementary boxes of size \(\tau \times \tau \). A strip is a column of elementary boxes: it corresponds to a subcurve of P with \(\tau \) edges.

We aggregate the columns of \({{\mathrm{FSD}}}(P,Q)\) into vertical strips, each corresponding to a single column of elementary boxes (i.e., \(\tau \) consecutive columns of cells in \({{\mathrm{FSD}}}(P,Q)\)). See Fig. 5.

Let A be such a strip. It corresponds to a subcurve \(P'\) of P with \(\tau \) edges. The following lemma implies that we can build a data structure for A such that, given any segment of Q, we can efficiently find its incomplete door-order within the elementary box in A.

Lemma 4.1

Given a subcurve \(P^{\prime }\) with \(\tau \) edges, we can compute in \(O(\tau ^6)\) time a data structure that requires \(O(\tau ^6)\) space and that allows us to determine the incomplete door-order of any line segment on Q in time \(O(\log \tau )\).

Proof

Consider the arrangement \({\mathcal {A}}\) of unit circles whose centers are the vertices of \(P'\) (see Fig. 6). The incomplete door-order of a line segment s is determined by the intersections of s with the arcs of \({\mathcal {A}}\) (and for a circle not intersecting s by whether s lies inside or outside of the circle). Let \(\ell _s\) be the line spanned by line segment s. Suppose we wiggle \(\ell _s\). The order of intersections of \(\ell _{s}\) and the arcs of \({\mathcal {A}}\) changes only when \(\ell _s\) moves over a vertex of \({\mathcal {A}}\) or if \(\ell _s\) leaves or enters a circle.

Fig. 6 By using the arrangement \({\mathcal {A}}\) defined by unit circles centered at vertices of \(P'\), we can determine the incomplete door-order of each segment s on Q. This is done by locating the dual point of \(\ell _s\) in the dual arrangement \({\mathcal {B}}\). The dual arrangement also contains pseudolines to determine when \(\ell _s\) leaves a circle of \({\mathcal {A}}\).

We use the standard duality transform that maps a line \(\ell : y = ax + b\) to the point \(\ell ^* : (a, -b)\), and vice versa. Consider a unit circle C in \({\mathcal {A}}\) with center \((c_x, c_y)\). Elementary geometry shows that the set of all lines that are tangent to C from above dualizes to the curve \(t_a^*(C): y = c_x x - c_y - \sqrt{1+x^2}\). Similarly, the lines that are tangent to C from below dualize to the curve \(t_b^*(C): y = c_x x - c_y + \sqrt{1+x^2}\). Define \(C^* :=\{t_a^*(C), t_b^*(C) \mid C \in {\mathcal {A}}\}\). Since any pair of distinct circles \(C_1\), \(C_2\) has at most four common tangents, one for each choice of above/below \(C_1\) and above/below \(C_2\), it follows that any two curves in \(C^*\) intersect at most once.
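The two dual curves can be checked numerically. In the sketch below (function names are hypothetical), a non-vertical line \(y = ax + b\) meets the unit circle around c exactly when its dual point lies between \(t_a^*(C)\) and \(t_b^*(C)\); this follows from comparing the distance between c and the line with 1.

```python
import math

def dual_point(a, b):
    """Dual of the line y = a*x + b under the transform used in the text."""
    return (a, -b)

def tangent_above(c, x):
    """Dual curve of the lines touching the unit circle around c from above."""
    return c[0] * x - c[1] - math.sqrt(1.0 + x * x)

def tangent_below(c, x):
    """Dual curve of the lines touching the unit circle around c from below."""
    return c[0] * x - c[1] + math.sqrt(1.0 + x * x)

def line_meets_unit_circle(a, b, c):
    """True iff the line y = a*x + b intersects or touches the circle."""
    x, y = dual_point(a, b)
    return tangent_above(c, x) <= y <= tangent_below(c, x)

def line_meets_unit_circle_direct(a, b, c):
    """Reference test in the primal plane: distance from c to the line."""
    return abs(a * c[0] - c[1] + b) / math.sqrt(1.0 + a * a) <= 1.0
```

Moving the dual point across one of the two curves corresponds to the line entering or leaving the circle, which is one of the events that change the order of intersections.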

Let V be the set of vertices in \({\mathcal {A}}\), and let \(V^*\) be the lines dual to the points in V (note that \(|V| = O(\tau ^2)\)). Since for any vertex \(v \in V\) and any circle \(C \in {\mathcal {A}}\) there are at most two tangents through v on C, each line in \(V^*\) intersects each curve in \(C^*\) at most once. Thus, the arrangement \({\mathcal {B}}\) of the curves in \(V^* \cup C^*\) is an arrangement of pseudolines with complexity \(O(\tau ^4)\). Furthermore, it can be constructed in the same expected time, together with a point location structure that finds the containing cell in \({\mathcal {B}}\) of any given point in time \(O(\log \tau )\) [60, Chap. 6.6.1].

Now consider a line segment s and the supporting line \(\ell _s\). As observed in the first paragraph, the combinatorial structure of the intersection between \(\ell _s\) and \({\mathcal {A}}\) is completely determined by the cell of \({\mathcal {B}}\) that contains the dual point \(\ell _s^*\). Thus, for every cell \(f(s) \in {\mathcal {B}}\), we construct a list \(L_{f(s)}\) that represents the combinatorial structure of \(\ell _s \cap {\mathcal {A}}\). There are \(O(\tau ^4)\) such lists, each having size \(O(\tau )\). We can compute \(L_{f(s)}\) by traversing the zone of \(\ell _s\) in \({\mathcal {A}}\). Since circles intersect at most twice and since a line intersects any circle at most twice, the zone has complexity \(O(\tau 2^{\alpha (\tau )})\), where \(\alpha (\cdot )\) denotes the inverse Ackermann function [60, Thm. 5.11]. Since \(O(\tau 2^{\alpha (\tau )}) \subset O(\tau ^2)\), we can compute all lists in \(O(\tau ^{6})\) time.

Given the list \(L_{f(s)}\), the incomplete door-order of s is determined by the position of the endpoints of s in \(L_{f(s)}\). There are \(O(\tau ^2)\) possible ways for this, and we build a table \(T_{f(s)}\) that represents them. For each entry in \(T_{f(s)}\), we store a representative for the corresponding incomplete door-order. As described in the previous section, the representative is a positive integer in the word RAM model and a pointer to the appropriate record on a pointer machine.

The total size of the data structure is \(O(\tau ^{6})\) and it can be constructed in the same time. A query works as follows: given s, we can compute \(\ell _s^*\) in constant time. Then we use the point location structure of \({\mathcal {B}}\) to find f(s) in \(O(\log \tau )\) time. Using binary search on \(T_{f(s)}\) (or an appropriate tree structure in the case of a pointer machine), we can then determine the position of the endpoints of s in the list \(L_{f(s)}\) in \(O(\log \tau )\) time. This bound holds both on the word RAM and on the pointer machine. \(\square \)

Lemma 4.2

Given the data structure of Lemma 3.2, the incomplete signature for each elementary box can be determined in time \(O(n\tau ^{5} + n^2 (\log \tau ) / \tau )\).

Proof

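By building and using the data structure from Lemma 4.1, we determine the incomplete door-order for each row in each vertical \(\tau \)-strip in total time proportional to

\[ \frac{n}{\tau } \bigl (\tau ^{6} + n \log \tau \bigr ) = n\tau ^{5} + \frac{n^2 \log \tau }{\tau }. \]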

We repeat the procedure with the horizontal strips. Now we know for each elementary box in \({{\mathrm{FSD}}}(P,Q)\) the incomplete door-order for each row and each column. We use the data structure of Lemma 3.2 to combine these. As there are \(n^2 / \tau ^2\) boxes, the number of steps is \(O(n^2/\tau + n) = O(n^2/\tau )\). Hence, the incomplete signature for each elementary box is found in \(O(n\tau ^{5} + n^2 (\log \tau ) / \tau )\) steps. \(\square \)

5 Solving the Decision Problem

With the data structures and preprocessing from the previous sections, we have all ingredients in place to determine whether \(d_F(P,Q) \le 1\). We know for each elementary box its incomplete signature and we have a data structure to derive its full signature (and with it, the combinatorial reachability structure) when its reach-doors are known. What remains to be shown is that we can efficiently process the free-space diagram to determine whether \((n,n) \in {{\mathrm{reach}}}(P,Q)\). This is captured in the following lemma.

Lemma 5.1

If the incomplete signature for each elementary box is known, we can determine whether \((n,n) \in {{\mathrm{reach}}}(P,Q)\) in time \(O(n^2(\log \tau )/\tau )\).

Proof

We go through all elementary boxes of \({{\mathrm{FSD}}}(P,Q)\), processing them one column at a time, going from bottom to top in each column. Initially, we know the full signature for the box S in the lower left corner of \({{\mathrm{FSD}}}(P,Q)\). We use the signature to determine the intersections of \({{\mathrm{reach}}}(P,Q)\) with the upper and right boundary of S. There is a subtlety here: the signature gives us only the combinatorial reachability structure, and we need to map the resulting \(s_i, t_j\) back to the corresponding vertices on the curves. On the word RAM, this can be done easily through table lookups. On the pointer machine, we use representative records for the \(s_i, t_i\) elements and use \(O(\tau )\) time before processing the box to store a pointer from each representative record to the appropriate vertices on P and Q.

We proceed similarly for the other boxes. By the choice of the processing order of the elementary boxes we always know the incoming reach-doors on the bottom and left boundary when processing a box. Given the incoming reach-doors, we can determine the full signature and find the structure of the outgoing reach-doors in total time \(O(\tau \log \tau )\), using Lemma 3.2. Again, we need \(O(\tau )\) additional time on the pointer machine to establish the mapping from the abstract \(s_i\), \(t_i\) elements to the concrete vertices of P and Q. In total, we spend \(O(\tau \log \tau )\) time per box. Thus, it takes time \(O(n^2(\log \tau )/\tau )\) to process all boxes, as claimed. \(\square \)

As a result, we obtain the following theorem for the pointer machine (and, by extension, for the real RAM model). For the word RAM model, we can obtain an even faster algorithm (see Sect. 6).

Theorem 5.2

There is an algorithm that solves the decision version of the Fréchet problem in \(O(n^2 (\log \log n)^{3/2}/\sqrt{\log n})\) time on a pointer machine.

Proof

Set \(\tau = \lambda \sqrt{\log n/\log \log n}\), for a sufficiently small constant \(\lambda >0\). The theorem follows by applying Lemmas 3.2, 4.2, and 5.1 in sequence. \(\square \)
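For orientation, the arithmetic behind this choice of \(\tau \) can be sketched as follows. Constants depending on \(\lambda \) are suppressed, and the preprocessing cost of Lemma 3.2 is not restated here. Since \(\log \tau = O(\log \log n)\), the terms from Lemmas 4.2 and 5.1 become

\[ \frac{n^2 \log \tau }{\tau } = O\Bigl (n^2 \log \log n \cdot \sqrt{\frac{\log \log n}{\log n}}\Bigr ) = O\Bigl (\frac{n^2 (\log \log n)^{3/2}}{\sqrt{\log n}}\Bigr ), \qquad n\tau ^{5} = O\bigl (n \log ^{5/2} n\bigr ) = o\Bigl (\frac{n^2}{\log n}\Bigr ). \]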

6 Improved Bound on Word RAM

We now explain how the running time of our algorithm can be improved if our computational model allows for constant time table-lookup. We use the same \(\tau \) as above (up to a constant factor). However, we change a number of things. “Signatures” are represented differently and the data structure to obtain combinatorial reachability structures is changed accordingly. Furthermore, we aggregate elementary boxes into clusters and determine “incomplete door-orders” for multiple boxes at the same time. Finally, we walk the free-space diagram based on the clusters to decide \(d_F(P,Q) \le 1\).

6.1 Clusters and Extended Signatures

We introduce a second level of aggregation in the free-space diagram (see Fig. 7): a cluster is a collection of \(\tau \times \tau \) elementary boxes, that is, \(\tau ^2 \times \tau ^2\) cells in \({{\mathrm{FSD}}}(P,Q)\). Let R be a row of cells in \({{\mathrm{FSD}}}(P,Q)\) of a certain cluster. As before, the row R corresponds to an edge e on Q and a subcurve \(P'\) of P with \(\tau ^2\) edges. We associate with R an ordered set \(Z = \langle e_0, z_0', z_1, z_1', z_2, z_2', \dots , z_{k}, z_{k}', e_1 \rangle \) with \(2 \cdot k + 3\) elements. Here k is the number of intersections of e with the unit circles centered at the \(\tau ^2\) vertices of \(P'\) (all but the very first). Hence, k is bounded by \(2 \tau ^2\) and |Z| is bounded by \(4 \tau ^2 + 3\). The order of Z indicates the order of these intersections with e directed along Q. Elements \(e_0\) and \(e_1\) represent the endpoints of e and take a special role. In particular, these are used to represent closed doors and snap open doors to the edge e. The elements \(z_i'\) are placeholders for the positions of the endpoints of the reach-doors: \(z_0'\) represents a possible reach-door endpoint between \(e_0\) and \(z_1\), the element \(z_1'\) represents an endpoint between \(z_1\) and \(z_2\), etc.

Consider a row \(R'\) of an elementary box inside the row R of a cluster, corresponding to an edge e of Q. The door-index of \(R'\) is an ordered set \(\langle s_0, t_0, \ldots , s_\tau , t_\tau \rangle \) of size \(2 \tau + 2\). Similar to a door-order, elements \(s_0\) and \(t_0\) represent the reach-door at the leftmost boundary of \(R'\); the elements \(s_i\) and \(t_i\) (\(1 \le i \le \tau \)) represent the door at the right boundary of the ith cell in \(R'\). However, instead of rearranging the set to indicate relative positions, the elements \(s_i\) and \(t_i\) simply refer to elements in Z. If the door is open, they refer to the corresponding intersections with e (possibly snapped to \(e_0\) or \(e_1\)). If the door is closed, \(s_i\) is set to \(e_1\) and \(t_i\) is set to \(e_0\). The elements \(s_0\) and \(t_0\) are special, representing the reach-door, and they refer to one of the elements \(z_i'\). An incomplete door-index is a door-index without \(s_0\) and \(t_0\). The advantage of a door-index over a door-order is that the reach-door is always at the start. Hence, completing an incomplete door-index to a full door-index can be done in constant time. Since a door-index has size \(2 \tau + 2\), the number of possible door-indices for \(R'\) is \(\tau ^{O(\tau )}\).

We define the door-indices for the columns analogously. We concatenate the door-indices for the rows and the columns to obtain the indexed signature for an elementary box. Similarly, we define the incomplete indexed signature. The total number of possible indexed signatures remains \(\tau ^{O(\tau ^2)}\).

For each possible incomplete indexed signature \(\Sigma \) we build a lookup table \(T_\Sigma \) as follows: the input is a word with \(4\tau \) fields of \(O(\log \tau )\) bits each. Each field stores the positions in Z of the endpoints of the ingoing reach-doors for the elementary box: \(2\tau \) fields for the left side, \(2\tau \) fields for the lower side. The output consists of a word that represents the indices for the elements in Z that represent the outgoing reach-doors for the upper and right boundary of the box. Thus, the input of \(T_\Sigma \) is a word of \(O(\tau \log \tau )\) bits, and \(T_\Sigma \) has size \(\tau ^{O(\tau )}\). Hence, for all incomplete indexed signatures combined, the size is \(\tau ^{O(\tau ^2)} = o(n)\) by our choice of \(\tau \).

6.2 Preprocessing a Given Input

During the preprocessing for a given input P, Q, we use superstrips consisting of \(\tau \) strips. That is, a superstrip is a column of clusters and consists of \(\tau ^2\) columns of the free-space diagram. Lemma 4.1 still holds, albeit with a larger constant c in place of 6. The data structure gets as input a query edge e, and it returns in \(O(\log \tau )\) time a word that contains \(\tau \) fields. Each field represents the incomplete door-index for e in the corresponding elementary box and thus consists of \(O(\tau \log \tau )\) bits. Hence, the word size is \(O(\tau ^2 \log \tau ) = O(\log n)\) by our choice of \(\tau \). Thus, the total time for building a data structure for each superstrip and for processing all rows is \(O(n/\tau ^2\;(\tau ^c + n \log \tau )) = O(n^2(\log \tau )/\tau ^2)\). We now have parts of the incomplete indexed signature for each elementary box packed into different words. To obtain the incomplete indexed signature, we need to rearrange the information such that the incomplete door-indices of the rows in one elementary box are in a single word. This corresponds to computing a transpose of a matrix, as is illustrated in Fig. 8. For this, we need the following lemma, which can be found—in slightly different form—in Thorup [62, Lem. 9].

Lemma 6.1

Let X be a sequence of \(\tau \) words that contain \(\tau \) fields each, so that X can be interpreted as a \(\tau \times \tau \) matrix. Then we can compute in time \(O(\tau \log \tau )\) on a word RAM a sequence Y of \(\tau \) words with \(\tau \) fields each that represents the transpose of X.

Proof

The algorithm is recursive and solves a more general problem: let X be a sequence of a words that represents a sequence M of b different \(a\times a\) matrices, such that the ith word in X contains the fields of the ith row of each matrix in M from left to right. Compute a sequence of words Y that represents the sequence \(M'\) of the transposed matrices in M.

The recursion works as follows: if \(a=1\), there is nothing to be done. Otherwise, we split X into the sequence \(X_1\) of the first a / 2 words and the sequence \(X_2\) of the remaining words. \(X_1\) and \(X_2\) now represent a sequence of 2b \((a/2) \times (a/2)\) matrices, which we transpose recursively. After the recursion, we put the \((a/2) \times (a/2)\) submatrices back together in the obvious way. To finish, we need to transpose the off-diagonal submatrices. This can be done simultaneously for all matrices in time O(a), by using appropriate bit-operations (or table lookup). Hence, the running time obeys a recursion of the form \(T(a) = 2T(a/2) + O(a)\), giving \(T(a) = O(a \log a)\), as desired. \(\square \)
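The recursion can be sketched in a few lines. The code below is an illustration under stated assumptions, not the implementation from [62]: Python integers stand in for machine words, field c of a word occupies bits \([cw, (c+1)w)\), a is a power of two, and the function names are hypothetical. The mask is rebuilt on every call for brevity; on a word RAM it would be a precomputed constant.

```python
def pack(fields, w):
    """Pack a list of w-bit values into one word, field 0 in the lowest bits."""
    return sum(v << (c * w) for c, v in enumerate(fields))

def unpack(word, num_fields, w):
    """Inverse of pack."""
    return [(word >> (c * w)) & ((1 << w) - 1) for c in range(num_fields)]

def low_half_mask(num_fields, a, w):
    """Mask selecting every field c with (c mod a) < a // 2."""
    ones = (1 << w) - 1
    mask = 0
    for c in range(num_fields):
        if c % a < a // 2:
            mask |= ones << (c * w)
    return mask

def transpose_packed(X, lo, a, num_fields, w):
    """Transpose in place the a x a matrices stored side by side in the
    words X[lo], ..., X[lo + a - 1] (num_fields must be a multiple of a)."""
    if a == 1:
        return
    h = a // 2
    # Each half is a sequence of twice as many (a/2) x (a/2) matrices.
    transpose_packed(X, lo, h, num_fields, w)
    transpose_packed(X, lo + h, h, num_fields, w)
    mask = low_half_mask(num_fields, a, w)
    shift = h * w
    for i in range(lo, lo + h):
        top, bot = X[i], X[i + h]
        # Swap the upper-right blocks (in top) with the lower-left blocks (in bot).
        t = ((top >> shift) ^ bot) & mask
        X[i] = top ^ (t << shift)
        X[i + h] = bot ^ t
```

Each level of the recursion touches every word a constant number of times, which mirrors the recurrence \(T(a) = 2T(a/2) + O(a)\) from the proof.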

By applying the lemma to the words that represent \(\tau \) consecutive rows in a superstrip, we obtain the incomplete door-indices of the rows for each elementary box. This takes total time proportional to

\[ \frac{n}{\tau ^2} \cdot \frac{n}{\tau } \cdot \tau \log \tau = \frac{n^2 \log \tau }{\tau ^2}. \]

We repeat this procedure for the horizontal superstrips. By using an appropriate lookup table to combine the incomplete door-indices of the rows and columns, we obtain the incomplete indexed signature for each elementary box in total time \(O(n^2(\log \tau )/\tau ^2)\).

6.3 The Actual Computation

We traverse the free-space diagram cluster by cluster (recall that a cluster consists of \(\tau \times \tau \) elementary boxes). The clusters are processed column by column from left to right, and inside each column from bottom to top. Before processing a cluster, we walk along the left and lower boundary of the cluster to determine the incoming reach-doors. This is done by performing a binary search for each box on the boundary, and determining the appropriate elements \(z_i'\) which correspond to the incoming reach-doors. Using this information, we assemble the appropriate words that represent the incoming information for each elementary box. Since there are \(n^2/\tau ^4\) clusters, this step requires time \(O((n^2/\tau ^4)\tau ^2\log \tau ) = O(n^2 (\log \tau ) / \tau ^2)\). We then process the elementary boxes inside the cluster, in a similar fashion. Now, however, we can process each elementary box in constant time through a single table lookup, so the total time is \(O(n^2/\tau ^2)\). Hence, the total running time of our algorithm is \(O(n^2(\log \tau )/\tau ^2)\). By our choice of \(\tau = \lambda \sqrt{\log n/ \log \log n}\) for a sufficiently small \(\lambda >0\), we obtain the following theorem.

Theorem 6.2

The decision version of the Fréchet problem can be solved in \(O(n^2 (\log \log n)^{2}/\log n)\) time on a word RAM.

7 Computing the Fréchet Distance

The optimization version of the Fréchet problem, i.e., computing the Fréchet distance, can be done in \(O(n^2 \log n)\) time using parametric search with the decision version as a subroutine [8]. We showed that the decision problem can be solved in \(o(n^2)\) time. However, this does not directly yield a faster algorithm for the optimization problem: if the running time of the decision problem is T(n), parametric search gives an \(O((T(n)+n^2) \log n)\) time algorithm [8]. There is an alternative randomized algorithm by Har-Peled and Raichel [49]. Their algorithm also needs \(O((T(n)+n^2) \log n)\) time, but below we adapt it to obtain the following lemma.

Lemma 7.1

The Fréchet distance of two polygonal curves with n vertices each can be computed by a randomized algorithm in \(O(n^2 2^{\alpha (n)} + T(n) \log n)\) expected time, where T(n) is the time for the decision problem.

Before we prove the lemma, we recall that possible values of the Fréchet distance are limited to a certain set of critical values [8]:

1. the distance between a vertex of one curve and a vertex of the other curve (vertex–vertex);

2. the distance between a vertex of one curve and an edge of the other curve (vertex–edge); and

3. for two vertices of one curve and an edge e of the other curve, the distance between one of the vertices and the intersection of e with the bisector of the two vertices (if this intersection exists) (vertex–vertex–edge).
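The third type is the least obvious one, so a small sketch may help. The function below is a hypothetical helper, assuming floating-point coordinates: it intersects the bisector of two vertices p and q with the edge from u to v and returns the resulting critical value, or `None` if the intersection does not exist.

```python
import math

def vertex_vertex_edge_value(p, q, u, v):
    """Distance from p (equivalently from q) to the point where the bisector
    of p and q meets the segment uv, or None if there is no such single point."""
    ex, ey = v[0] - u[0], v[1] - u[1]          # direction of the edge
    wx, wy = q[0] - p[0], q[1] - p[1]          # normal of the bisector
    denom = 2.0 * (ex * wx + ey * wy)
    if denom == 0.0:
        return None  # edge parallel to the bisector, or p == q
    # A point x = u + t*e is on the bisector iff 2 x.(q - p) = |q|^2 - |p|^2.
    numer = (q[0] ** 2 + q[1] ** 2) - (p[0] ** 2 + p[1] ** 2) \
        - 2.0 * (u[0] * wx + u[1] * wy)
    t = numer / denom
    if not 0.0 <= t <= 1.0:
        return None  # the bisector misses the edge
    x, y = u[0] + t * ex, u[1] + t * ey
    return math.hypot(x - p[0], y - p[1])
```

Returning `None` corresponds to a vertex–vertex–edge tuple with no intersection.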

If we also include vertex–vertex–edge tuples with no intersection, we can sample a critical value uniformly at random in constant time. The algorithm now works as follows (see Har-Peled and Raichel [49] for more details): first, we sample a set S of \(K=4n^2\) critical values uniformly at random. Next, we find \(a', b' \in S\) such that the Fréchet distance lies between \(a'\) and \(b'\) and such that \([a', b']\) contains no other value from S. In the original algorithm this is done by sorting S and performing a binary search using the decision version. Using median-finding instead, this step can be done in \(O(K + T(n) \log K)\) time. Alternatively, the running time of this step could be reduced by picking a smaller K. However, this does not improve the final bound, since it is dominated by an \(O(n^2 2^{\alpha (n)})\) term. The interval \([a',b']\) with high probability contains only a small number of the remaining critical values. More precisely, for \(K=4n^2\) the probability that \([a', b']\) has more than \(2 c n \ln n\) critical values is at most \(1/n^c\) [49, Lem. 6.2].
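The bracketing step can be sketched as follows. This is a minimal illustration of the sorting-based variant attributed above to the original algorithm, not of the median-finding variant; the function name and the callable `decide` (which should return `True` exactly when the Fréchet distance is at most its argument) are assumptions made for the example.

```python
def bracket_by_binary_search(samples, decide):
    """Return (a, b): neighbouring values of the sorted sample with decide(a)
    False and decide(b) True. decide must be monotone (False...False True...True).
    a is None if decide holds for every sample, b is None if it holds for none."""
    S = sorted(samples)
    lo, hi = 0, len(S)  # invariant: decide is False on S[:lo] and True on S[hi:]
    while lo < hi:
        mid = (lo + hi) // 2
        if decide(S[mid]):
            hi = mid
        else:
            lo = mid + 1
    a = S[lo - 1] if lo > 0 else None
    b = S[lo] if lo < len(S) else None
    return a, b
```

The loop calls `decide` only \(O(\log K)\) times; the sorting step is what the median-finding variant avoids.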

The remainder of the algorithm proceeds as follows: first, we find all critical values of type vertex–vertex and vertex–edge that lie inside the interval \([a', b']\). This can be done in \(O(n^2)\) time by checking all vertex–vertex and vertex–edge pairs. Among these values, we again use median-finding to determine the interval \([a, b] \subseteq [a',b']\) that contains the Fréchet distance in \(O(K' + T(n) \log K')\) time, where \(K'\) denotes the number of critical values in \([a', b']\). It remains to determine the critical values corresponding to vertex–vertex–edge tuples that lie in [a, b].

For this, take an edge e of P and the vertices of Q. Conceptually, we start with circles of radius a around the vertices of Q, and we increase the radii until b. During this process, we observe the evolution of the intersection points between the circle arcs and e. Because all vertex–vertex and vertex–edge events have been eliminated, each circle intersects e in either 0 or 2 points, and this does not change throughout the process. A critical value of vertex–vertex–edge type corresponds to the event that two different circles intersect e in the same point, i.e., that two intersection points meet while growing the circles. Two intersection points can meet at most once, and when they do, they exchange their order along e.

This suggests the following algorithm: let \({\mathcal {A}}_a\) be the arrangement of circles with radius a around the vertices of Q, and let \({\mathcal {A}}_b\) be the concentric arrangement of circles with radius b. We determine the ordered sequence \(I_a\) of the intersection points of the circles in \({\mathcal {A}}_a\) with e, and we number them in their order along e. Next, we find the ordered sequence of intersection points \(I_b\) between e and the circles in \({\mathcal {A}}_b\). We assign to each point in \(I_b\) the number of the corresponding intersection point in \(I_a\). Since \(|I_a| = |I_b|\), this gives a permutation of \(\{1, \dots , |I_a|\}\). Two intersection points change their order from \(I_a\) to \(I_b\) exactly if there is a vertex–vertex–edge event in [a, b], so these events correspond to the inversions of the resulting permutation. Given that there are k such inversions, we can find them in time \(O(|I_a| + k)\) using insertion sort. Thus, the overall running time to find the critical events in [a, b], ignoring the time for computing \(I_a\) and \(I_b\), is \(O(n^2 + K')\).
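The inversion-reporting step can be sketched as follows, assuming the permutation is given as a Python list (the function name is hypothetical). Every swap performed by insertion sort removes exactly one inversion, so the number of steps is linear in the length of the list plus the number of reported pairs.

```python
def inversions_by_insertion_sort(perm):
    """Return all pairs (small, large) such that large precedes small in perm."""
    a = list(perm)
    pairs = []
    for i in range(1, len(a)):
        j = i
        # Move a[j] to the left; each swap resolves one inversion.
        while j > 0 and a[j - 1] > a[j]:
            pairs.append((a[j], a[j - 1]))
            a[j - 1], a[j] = a[j], a[j - 1]
            j -= 1
    return pairs
```

In the setting above, each reported pair identifies two intersection points that exchange their order along e, that is, one vertex–vertex–edge event in [a, b].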

It remains to show that we can quickly find \(I_a\) and \(I_b\). We describe the algorithm for \(I_a\). First, compute the arrangement \({\mathcal {A}}_a\) of circles with radius a around the vertices of Q. This takes \(O(n^2)\) time [32]. To find the intersection order, traverse in \({\mathcal {A}}_a\) the zone of the line \(\ell \) spanned by e. The time for the traversal is bounded by the complexity of the zone. Since the circles pairwise intersect at most twice and \(\ell \) intersects each circle only twice, the complexity of the zone is \(O(n 2^{\alpha (n)})\) [60, Thm. 5.11]. Summing over all edges e, this adds a total of \(O(n^2 2^{\alpha (n)})\) to the running time. To find \(I_b\), we proceed similarly with \({\mathcal {A}}_b\). Thus the overall time is \(O(T(n) \log (n) + n^2 2^{\alpha (n)} + K')\). The event \(K' > 8 n \ln n\) has probability less than \(1/n^4\), and we always have \(K' = O(n^3)\). Thus, this case adds o(1) to the expected running time. Given \(K' \le 8 n \ln n\), the \(K'\) term is \(O(n \log n)\) and is dominated by the other terms. Lemma 7.1 follows. Theorem 7.2 now results from Lemma 7.1, Theorem 5.2, and Theorem 6.2.

Theorem 7.2

The Fréchet distance of two polygonal curves with n edges each can be computed by a randomized algorithm in time \(O(n^2 \sqrt{\log n}(\log \log n)^{3/2})\) on a pointer machine and in time \(O(n^2(\log \log n)^2)\) on a word RAM.

8 Discrete Fréchet Distance on the Pointer Machine

As mentioned in the introduction, Agarwal et al. [1] give a subquadratic algorithm for finding the discrete Fréchet distance between two point sequences, using the word RAM. In this section, we explain how their algorithm for the decision version of the problem can be adapted to the pointer machine. This shows that, at least for the decision version, the speed-up does not come from bit-manipulation tricks but from a deeper understanding of the underlying geometric structure. Our presentation is slightly different from Agarwal et al. [1], in order to allow for a clearer comparison with our continuous algorithm.

We recall the problem definition: we are given two sequences \(P = \langle p_1, p_2, \dots , p_n\rangle \) and \(Q = \langle q_1, q_2, \dots , q_n\rangle \) of n points in the plane. For \(\delta > 0\), we define a directed graph \(G_\delta \) with vertex set \(P \times Q\). In \(G_\delta \), there is an edge between two vertices \((p_i, q_j)\), \((p_i, q_{j+1})\) if and only if both \(d(p_i, q_j) \le \delta \) and \(d(p_i, q_{j+1}) \le \delta \). The condition is similar for an edge between vertices \((p_i, q_j)\) and \((p_{i+1}, q_j)\), and vertices \((p_i, q_j)\) and \((p_{i+1}, q_{j+1})\). There are no further edges in \(G_\delta \). The discrete Fréchet distance between P and Q is the smallest \(\delta \) for which \(G_\delta \) has a path from \((p_1, q_1)\) to \((p_n, q_n)\). In the decision version of the problem, we are given \(\delta > 0\), and we need to decide whether there is a path from \((p_1, q_1)\) to \((p_n, q_n)\) in \(G_\delta \).

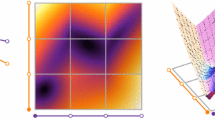

Fig. 9. The discrete Fréchet distance: (left) two point sequences P (disks) and Q (crosses) with 5 points each; (middle) the associated free-space matrix F (\(\text {white }= 1, \text {gray } = 0\)); (right) the resulting reachability matrix M. Since \(M_{55} = 1\), the discrete Fréchet distance is at most 1.

We now describe a subquadratic pointer machine algorithm for the decision version. Thus, let point sequences P, Q be given, and suppose without loss of generality that \(\delta = 1\). The discrete analogue of the free-space diagram is an \(n \times n\) Boolean matrix F where \(F_{ij} = 1\), if \(d(p_i, q_j) \le 1\), and \(F_{ij} = 0\), otherwise, for \(i, j = 1, \dots , n\). We call F the free-space matrix. Similarly, the discrete analogue of the reachable region is an \(n \times n\) Boolean matrix M that is defined recursively as follows: \(M_{11} = F_{11}\), and for \(i,j = 1, \dots , n\), \((i,j) \ne (1,1)\), we have \(M_{ij} = 1\) if and only if \(F_{ij} = 1\) and at least one of \(M_{i-1,j}\), \(M_{i,j-1}\) or \(M_{i-1, j-1}\) equals 1 (we set \(M_{i,0} = M_{0, j} = 0\), for \(i, j = 0, \dots , n\)). Then the discrete Fréchet distance between P and Q is at most 1 if and only if \(M_{nn} = 1\). We call M the reachability matrix; see Fig. 9.
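The recursive definition of M translates directly into the straightforward quadratic-time dynamic program. The sketch below is this baseline, not the subquadratic algorithm developed in this section; it uses 0-based indices, represents points as coordinate pairs, and the function name is an assumption for the example.

```python
import math

def discrete_frechet_at_most(P, Q, delta=1.0):
    """Decide whether the discrete Frechet distance of P and Q is at most delta."""
    n, m = len(P), len(Q)
    # Free-space matrix: F[i][j] is True iff d(p_i, q_j) <= delta.
    F = [[math.hypot(p[0] - q[0], p[1] - q[1]) <= delta for q in Q] for p in P]
    # Reachability matrix, filled row by row.
    M = [[False] * m for _ in range(n)]
    for i in range(n):
        for j in range(m):
            if not F[i][j]:
                continue
            if i == 0 and j == 0:
                M[i][j] = True
            else:
                M[i][j] = ((i > 0 and M[i - 1][j])
                           or (j > 0 and M[i][j - 1])
                           or (i > 0 and j > 0 and M[i - 1][j - 1]))
    return M[n - 1][m - 1]
```

The lookup tables described next replace the inner loops by one table traversal per elementary box.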

Adapting the method of Agarwal et al. [1], we show how to use preprocessing and table lookup in order to decide whether \(M_{nn} = 1\) in \(o(n^2)\) steps on a pointer machine. Let \(\tau = \lambda \log n\), for a suitable constant \(\lambda > 0\). We subdivide the rows of M into \(k = O(n/\tau )\) strips, each consisting of \(\tau \) consecutive rows: the first strip \(L_1\) consists of rows \(1, \dots , \tau \), the second strip \(L_2\) consists of rows \(\tau + 1, \dots , 2\tau \), and so on. Each strip \(L_i\), \(i = 1,\dots , k\), corresponds to a contiguous subsequence \(P_i\) of \(\tau \) points on P. Let \({\mathcal {A}}_i\) be the arrangement of disks obtained by drawing a unit disk around each vertex in \(P_i\). The arrangement \({\mathcal {A}}_i\) has \(O(\tau ^2)\) faces.

Next, let \(\rho = \lambda \log n/\log \log n\), with \(\lambda > 0\) as above. We subdivide each strip \(L_i\), \(i = 1, \dots , k\) into \(l = O(n/\rho )\) elementary boxes, each consisting of \(\rho \) consecutive columns in \(L_i\). We label the elementary boxes as \(B_{ij}\), for \(i = 1, \dots , k\) and \(j = 1, \dots , l\). As above, an elementary box \(B_{ij}\) has corresponding contiguous subsequences \(P_i\) of \(\tau \) vertices on P and \(Q_j\) of \(\rho \) vertices on Q. Now, the incomplete signature of an elementary box \(B_{ij}\) consists of (i) the index i of the strip that contains it; and (ii) the sequence \(f_1, f_2, \dots , f_\rho \) of faces in the disk arrangement \({\mathcal {A}}_i\) that contain the \(\rho \) vertices of \(Q_j\), in that order. The full signature of an elementary box \(B_{ij}\) consists of its incomplete signature plus a sequence of \(\rho + \tau \) bits that represent the entries in the reachability matrix M directly above and to the left of \(B_{ij}\). We call these bits the reach bits. As in the continuous case, the information in the full signature suffices to determine how the reachability information propagates through the elementary box; see Fig. 10.

Fig. 10. (left) We subdivide the reachability matrix M into strips of \(\tau \) rows. Each strip is subdivided into elementary boxes of \(\rho \) columns. Each elementary box corresponds to a subsequence of length \(\tau \) on P and a subsequence of length \(\rho \) on Q. (right) The incomplete signature \(\sigma \) of an elementary box \(B_{ij}\) consists of the index of the containing strip and the face sequence for \(Q_j\) in \({\mathcal {A}}_i\). The full signature \(\sigma ^f\) additionally contains \(\rho + \tau \) reach bits that represent the bits in M directly above and to the left of \(B_{ij}\).

The preprocessing phase proceeds as follows: first, we enumerate all possible incomplete signatures. For this, we need to compute all strips \(L_i\) and the corresponding disk arrangements \({\mathcal {A}}_i\), for \(i = 1, \dots , k\). Furthermore, we also compute a suitable point location structure for each \({\mathcal {A}}_i\). Since there are \(O(n/\tau )\) strips, each of which consists of \(\tau \) rows, this takes time \(O((n/\tau ) \cdot \tau ^2 \log \tau ) = O(n \tau \log \tau ) = O(n \log n \log \log n)\). For each strip \(L_i\), since \({\mathcal {A}}_i\) has \(O(\tau ^2)\) faces, the number of possible face sequences \(f_1, \dots , f_\rho \) is \(\tau ^{O(\rho )} \le n^{1/3}\), by our choice of \(\tau \) and \(\rho \) and for \(\lambda \) small enough. Thus, there are \(O(n^{4/3}/\log n)\) incomplete signatures, and they can be enumerated in the same time. Now, for each incomplete signature \(\sigma = (i, \langle f_1, \dots , f_\rho \rangle )\) we build a lookup table that encodes for each possible setting of the reach bits the resulting reach bits at the bottom and the right boundary of the elementary box. There are \(2^{\rho + \tau } \le n^{1/3}\) possible settings of the reach bits, by our choice of \(\tau \) and \(\rho \) and for \(\lambda \) small enough. We enumerate all of them and organize them as a complete binary tree of depth \(\rho + \tau \). For each setting of the reach bits, we use the information of the incomplete signature to determine the result through a straightforward dynamic programming algorithm [1, 18, 39] in \(O(\tau \cdot \rho ) = O(\log ^2 n)\) time, and we store the result as a linked list of length \(\rho + \tau - 1\) at the leaf for the corresponding reach bits; see Fig. 11. Thus, the total time for this part of the preprocessing phase is \(O(n^{5/3}\log n)\).
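The two counting bounds used in this paragraph can be checked with a short calculation. The sketch below assumes logarithms to base 2, \(\lambda \le 1\), and n large enough that \(\log \log n \ge 1\) (so that \(\rho \le \tau \)); the symbol c is a name introduced here for the constant hidden in the exponent \(O(\rho )\).

\[ \tau ^{c\rho } = 2^{c \rho \log \tau } = 2^{c\lambda \log n \cdot \frac{\log (\lambda \log n)}{\log \log n}} \le 2^{c\lambda \log n} = n^{c\lambda }, \qquad 2^{\rho + \tau } \le 2^{2\tau } = n^{2\lambda }. \]

Both quantities are at most \(n^{1/3}\) once \(\lambda \le \min \{1/(3c), 1/6\}\).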

Fig. 11. For each incomplete signature, we create a lookup table organized as a complete binary tree. Each leaf corresponds to a setting of the reach bits for the elementary box. In the leaves, we store a linked list of length \(\rho + \tau -1\) that represents the contents of the reachability matrix at the bottom and at the right of the elementary box.

Next, we determine for each elementary box \(B_{ij}\) its incomplete signature. For this, we use the point location structure for \({\mathcal {A}}_i\) to determine for each vertex in \(Q_j\) the face of \({\mathcal {A}}_i\) that contains it. There are \(O(n^2/\tau \rho )\) elementary boxes, each \(Q_j\) has \(\rho \) vertices, and one point location query takes \(O(\log \tau )\) time, so the total time for this step is \(O((n^2/\tau \rho )\cdot \rho \cdot \log \tau ) = O((n^2/\log n)\log \log n)\). Using this information, we can store with each elementary box a pointer to the lookup table for the corresponding incomplete signature.

Finally, we can now use the lookup tables to propagate the reachability information through M, one elementary box at a time, as in the continuous case. The time to process one elementary box is \(O(\rho + \tau )\), because we need to traverse the corresponding lookup table to find the reach bits for the adjacent boxes. Thus, the total running time is \(O((n^2/\tau \rho ) \cdot (\rho + \tau )) = O(n^2/\rho ) = O((n^2/\log n) \log \log n)\). This gives the following pointer machine version of the result by Agarwal et al. [1].

Theorem 8.1

There is an algorithm that solves the decision version of the discrete Fréchet problem in \(O((n^2/\log n) \log \log n)\) time on a pointer machine.

Remark

Agarwal et al. [1] further describe how to get a faster algorithm for the decision version by aggregating the elementary boxes into larger clusters, similar to the method given in Sect. 6. This improved algorithm finally leads to a subquadratic algorithm for computing the discrete Fréchet distance. Unfortunately, as in Sect. 6, it seems that this improvement crucially relies on constant time table lookup, so it does not directly translate to the pointer machine.

The reader may also notice that in this section we could choose \(\tau , \rho \approx \log n\), whereas in the previous sections we had \(\tau \approx \sqrt{\log n}\). This is due to the slightly different definition of signature: in the discrete case, once the subsequence \(P_i\) is fixed, there are only \(\tau ^{O(\rho )}\) possible ways in which the subsequence \(Q_j\) might interact with \(P_i\). In the continuous case, this does not seem to be so clear, and we work with the weaker bound of \(\tau ^{O(\tau ^2)}\) possible interactions.

9 Decision Trees

Our results also have implications for the decision-tree complexity of the Fréchet problem. Since in that model we account only for comparisons between input elements, the preprocessing comes for free, and hence the size of the elementary boxes can be increased. Before we consider the continuous Fréchet problem, we first note that a similar result can be obtained easily for the discrete Fréchet problem.

Theorem 9.1

The discrete Fréchet problem has an algebraic computation tree of depth \({\widetilde{O}}(n^{4/3})\).

Proof

First, we consider the decision version: we are given two sequences \(P \,{=}\, p_1, \dots , p_n\) and \(Q = q_1, \dots , q_n\) of n points in the plane, and we would like to decide whether the discrete Fréchet distance between P and Q is at most 1. Katz and Sharir [53] showed that we can compute a representation of the set of pairs \((p_i, q_j)\) with \(\Vert p_i - q_j\Vert \le 1\) in \({\widetilde{O}}(n^{4/3})\) steps. This information suffices to complete the reachability matrix without further comparisons. As shown by Agarwal et al. [1], one can then solve the optimization problem at the cost of another \(O(\log n)\)-factor, which is absorbed into the \({\widetilde{O}}\)-notation. \(\square \)

Given our results above, we prove an analogous statement for the continuous Fréchet distance.

Theorem 9.2

There exists an algebraic decision tree for the Fréchet problem (decision version) of depth \(O(n^{2 - \varepsilon })\), for a fixed constant \(\varepsilon > 0\).

Proof

We reconsider the steps of our algorithm. The only phases that actually involve the input are the second preprocessing phase and the traversal of the elementary boxes. We chose \(\tau \) as we did in order to keep the time for the first preprocessing phase small; in the decision-tree model, this is no longer a concern. By Lemmas 4.2 and 5.1, the remaining cost is bounded by \(O(n\tau ^{5} + n^2 (\log \tau )/\tau )\). Choosing \(\tau = n^{1/6}\), we get a decision tree of depth \(O(n \cdot n^{5/6} + n^{2-1/6}\log n)\). This is \(O(n^{2-(1/6)}\log n) = O(n^{2 - \varepsilon })\), for any fixed \( 0< \varepsilon < 1/6\). \(\square \)
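
The choice \(\tau = n^{1/6}\) balances the two terms of the bound. The short Python sketch below checks this with exact rational arithmetic; it ignores the logarithmic factor, and the function names are hypothetical.

```python
from fractions import Fraction


def depth_exponents(a):
    """Exponents of n in the two cost terms for tau = n**a (log factors ignored)."""
    preprocessing = 1 + 5 * a  # n * tau^5
    traversal = 2 - a          # n^2 / tau
    return preprocessing, traversal


def balanced_exponent():
    """Solve 1 + 5a = 2 - a for a."""
    return Fraction(2 - 1, 5 + 1)


a = balanced_exponent()
print(a, depth_exponents(a))
```

Any other exponent makes one of the two terms larger than \(n^{11/6}\): a smaller \(\tau \) increases the traversal term, a larger \(\tau \) increases the preprocessing term.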

10 Weak Fréchet Distance

The weak Fréchet distance is a variant of the Fréchet distance where we are allowed to walk backwards along the curves [8]. More precisely, let P and Q be two polygonal curves, each with n edges, and let \(\Psi '\) be the set of all continuous functions \(\alpha :[0,1] \rightarrow [0,n]\) with \(\alpha (0) = 0\) and \(\alpha (1) = n\). The weak Fréchet distance between P and Q is defined as

\[ d_\text {wF}(P, Q) = \inf _{\alpha , \beta \in \Psi '} \max _{x \in [0,1]} \Vert P(\alpha (x)) - Q(\beta (x))\Vert . \]

Compared to the regular Fréchet distance, the set \(\Psi '\) now also contains non-monotone functions. The weak Fréchet distance was also introduced by Alt and Godau [8], who showed how to compute it in \(O(n^2 \log n)\) worst-case time. We will now use our framework to obtain an algorithm that runs in \(o(n^2)\) expected time on a word RAM.

10.1 A Decision Algorithm for the Pointer Machine

As usual, we start with the decision version: given two polygonal curves P and Q, each with n edges, decide whether \(d_\text {wF}(P, Q) \le 1\). This has an easy interpretation in terms of the free-space diagram. Define an undirected graph \(G = (V, E)\) with vertex set \(V = \{0, 1, \dots , n-1\}^2\). The vertex \((i,j) \in V\) corresponds to the cell C(i, j), and there is an edge between two vertices (i, j) and \((i', j')\) if and only if the two cells C(i, j) and \(C(i', j')\) are neighboring (i.e., if \(|i - i'| + |j - j'| = 1\)) and the door between them is open. Then, \(d_\text {wF}(P, Q) \le 1\) if and only if (i) \(|P(0) - Q(0)| \le 1\); (ii) \(|P(n) - Q(n)| \le 1\); and (iii) the vertices (0, 0) and \((n-1, n-1)\) are in the same connected component of G, see Fig. 12.

The polygonal curves P and Q have weak Fréchet distance at most 1, but Fréchet distance larger than 1: the point (n, n) is not in \({{\mathrm{reach}}}(P, Q)\), but the vertices for the cells C(0, 0) and C(4, 4) are in the same connected component of G. The reachable region is shown in dark blue, the edges of G are shown in light blue
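
The graph characterization above translates directly into a quadratic-time baseline. The following Python sketch is only an illustration of conditions (i) to (iii), not the algorithm developed in this section; the function names are hypothetical, curves are assumed to be given as lists of \(n+1\) vertices, and floating-point distances are compared with 1 without any tolerance.

```python
from math import hypot


def point_segment_distance(p, a, b):
    """Euclidean distance from the point p to the segment ab."""
    dx, dy = b[0] - a[0], b[1] - a[1]
    length_sq = dx * dx + dy * dy
    if length_sq == 0.0:
        return hypot(p[0] - a[0], p[1] - a[1])
    t = ((p[0] - a[0]) * dx + (p[1] - a[1]) * dy) / length_sq
    t = max(0.0, min(1.0, t))  # clamp the projection to the segment
    return hypot(p[0] - (a[0] + t * dx), p[1] - (a[1] + t * dy))


def weak_frechet_at_most_one(P, Q):
    """Decide d_wF(P, Q) <= 1 by searching the graph G on the cells C(i, j)."""
    n = len(P) - 1
    assert n >= 1 and len(Q) - 1 == n
    # Conditions (i) and (ii): the start points and the end points are close.
    if hypot(P[0][0] - Q[0][0], P[0][1] - Q[0][1]) > 1:
        return False
    if hypot(P[n][0] - Q[n][0], P[n][1] - Q[n][1]) > 1:
        return False
    # Condition (iii): (0, 0) and (n-1, n-1) lie in the same component of G.
    seen = {(0, 0)}
    stack = [(0, 0)]
    while stack:
        i, j = stack.pop()
        if (i, j) == (n - 1, n - 1):
            return True
        neighbors = []
        # Doors on vertical cell edges: a vertex of P against edge j of Q.
        if i + 1 < n and point_segment_distance(P[i + 1], Q[j], Q[j + 1]) <= 1:
            neighbors.append((i + 1, j))
        if i > 0 and point_segment_distance(P[i], Q[j], Q[j + 1]) <= 1:
            neighbors.append((i - 1, j))
        # Doors on horizontal cell edges: a vertex of Q against edge i of P.
        if j + 1 < n and point_segment_distance(Q[j + 1], P[i], P[i + 1]) <= 1:
            neighbors.append((i, j + 1))
        if j > 0 and point_segment_distance(Q[j], P[i], P[i + 1]) <= 1:
            neighbors.append((i, j - 1))
        for cell in neighbors:
            if cell not in seen:
                seen.add(cell)
                stack.append(cell)
    return False
```

The search visits each of the \(n^2\) cells at most once and tests O(1) doors per cell, so this baseline takes \(O(n^2)\) time.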

Let \(\tau , \rho \in {\mathbb {N}}\) be parameters, to be determined later. We subdivide the cells into \(k = O(n/\tau )\) vertical strips \(L_1, \dots , L_k\), each consisting of \(\tau \) consecutive columns. Each strip \(L_i\) corresponds to a subcurve \(P_i\) of P with \(\tau \) edges. For each such subcurve \(P_i\), we define two arrangements \({\mathcal {A}}_i\) and \({\mathcal {B}}_i\). To obtain \({\mathcal {A}}_i\), we take for each edge e of \(P_i\) the “stadium” \(c_e\) of points with distance exactly 1 from e, and we compute the resulting arrangement. Since two distinct curves \(c_e, c_{e'}\) cross in O(1) points, the complexity of \({\mathcal {A}}_i\) is \(O(\tau ^2)\), see Fig. 13. The arrangement \({\mathcal {B}}_i\) is the arrangement \({\mathcal {B}}\) described in the proof of Lemma 4.1, i.e., the arrangement of the curves dual to the tangent lines for the unit circles around the vertices of \(P_i\).

Next, we subdivide each strip into \(\ell = O(n/\rho )\) elementary boxes, each consisting of \(\rho \) consecutive rows. We label the elementary boxes as \(B_{ij}\), with \(1 \le i \le k\), \(1 \le j \le \ell \). The rows of an elementary box \(B_{ij}\) correspond to a subcurve \(Q_j\) of Q with \(\rho \) edges. The signature of \(B_{ij}\) consists of (i) the index i of the corresponding strip; (ii) for each vertex of \(Q_j\) the face of \({\mathcal {A}}_i\) that contains it; and (iii) for each edge e of \(Q_j\) the face of \({\mathcal {B}}_i\) that contains the point that is dual to the supporting line of e, plus two indices \(a,b \in \{1, \dots , \tau \}\) that indicate the first and the last unit circle around a vertex of \(P_i\) that e intersects, as we walk from one endpoint to the other.

Given an elementary box B, the connection graph \(G_B\) of B has \(\tau \rho \) vertices, one for each cell in B, and an edge between two cells C, \(C'\) of B if and only if C and \(C'\) share a (horizontal or vertical) edge with an open door. The connectivity list of B is a linked list with \(2\tau + 2\rho - 4\) entries that stores for each cell C on the boundary of B a pointer to a record that represents the connected component of the connection graph \(G_B\) that contains C.

Lemma 10.1

There are \(O(n\tau ^{8\rho + 1})\) different signatures. The connection graph of an elementary box \(B_{ij}\) depends only on its signature, and the connectivity list can be computed in \(O(\tau \rho )\) time on a pointer machine, given the signature.

Proof

First, we count the signatures. There are \(O(n/\tau )\) strips. Once the strip index i is fixed, a signature consists of \(\rho +1\) faces of \({\mathcal {A}}_i\), \(\rho \) faces of \({\mathcal {B}}_i\), and \(\rho \) pairs of indices \(a,b \in \{1, \dots , \tau \}\). The arrangement \({\mathcal {A}}_i\) has \(O(\tau ^2)\) faces, and the arrangement \({\mathcal {B}}_i\) has \(O(\tau ^4)\) faces, as explained in the proof of Lemma 4.1. Finally, there are \(\tau ^2\) pairs of indices. Thus, the number of possible signatures in one strip is \(\tau ^{8\rho + 2}\). In total, we get \(O(n\tau ^{8\rho +1})\) signatures.

Next, the connection graph of an elementary box \(B_{ij}\) is determined solely by which doors are open and which doors are closed. We explain how to deduce this information from the signature. A horizontal edge of \(B_{ij}\) corresponds to an edge e of \(P_i\) and a vertex q of \(Q_j\). The door is open if and only if q has distance at most 1 from e. This is determined by the face of \({\mathcal {A}}_i\) containing q. Similarly, a vertical edge of \(B_{ij}\) corresponds to a vertex p of \(P_i\) and an edge e of \(Q_j\). The door is open if and only if e intersects the unit circle with center p. As in Lemma 4.1, this can be inferred from the face of \({\mathcal {B}}_i\) that contains the point dual to the supporting line of e, together with the indices (a, b) of the first and last circle intersected by e.

Finally, given the signature, we can build the connection graph \(G_{B_{ij}}\) in \(O(\tau \rho )\) time, assuming that the arrangements \({\mathcal {A}}_i\) and \({\mathcal {B}}_i\) provide suitable data structures. With \(G_{B_{ij}}\) at hand, the connectivity list can be found in \(O(\tau \rho )\) steps, using breadth-first search. \(\square \)
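
The last step of the proof can be sketched in Python as follows. The sketch assumes that the open doors inside the box have already been decoded from the signature into two Boolean arrays; the array layout, the function name, and the use of integer component labels instead of pointers to records are assumptions made for illustration.

```python
from collections import deque


def connectivity_list(tau, rho, h_open, v_open):
    """Label the boundary cells of a tau x rho box by connected component.

    h_open[c][r] tells whether the door between cells (c, r) and (c, r + 1)
    is open; v_open[c][r] does the same for cells (c, r) and (c + 1, r).
    Returns a list of (cell, component_id) pairs for the boundary cells.
    """
    component = {}
    next_id = 0
    for c0 in range(tau):
        for r0 in range(rho):
            if (c0, r0) in component:
                continue
            # Breadth-first search from an unlabeled cell.
            component[(c0, r0)] = next_id
            queue = deque([(c0, r0)])
            while queue:
                c, r = queue.popleft()
                neighbors = []
                if r + 1 < rho and h_open[c][r]:
                    neighbors.append((c, r + 1))
                if r > 0 and h_open[c][r - 1]:
                    neighbors.append((c, r - 1))
                if c + 1 < tau and v_open[c][r]:
                    neighbors.append((c + 1, r))
                if c > 0 and v_open[c - 1][r]:
                    neighbors.append((c - 1, r))
                for cell in neighbors:
                    if cell not in component:
                        component[cell] = next_id
                        queue.append(cell)
            next_id += 1
    return [
        ((c, r), component[(c, r)])
        for c in range(tau)
        for r in range(rho)
        if c in (0, tau - 1) or r in (0, rho - 1)
    ]
```

Each cell is labeled once and has at most four doors, which gives the \(O(\tau \rho )\) bound. Two boundary cells may be connected only through interior cells, which is why the search runs over the whole box and not just over its boundary.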

As usual, our strategy now is to preprocess all possible signatures and to determine the signature of each elementary box. Using this information, we can then process the elementary boxes quickly in our main algorithm. The next lemma describes the preprocessing steps.

Lemma 10.2

We can determine for each elementary box \(B_{ij}\) a pointer to its connectivity list in total time \(O(n\tau ^{8\rho + 2}\rho + (n^2/\tau )\log \tau )\) on a pointer machine.

Proof

First, we compute the arrangements \({\mathcal {A}}_i\) and \({\mathcal {B}}_i\) for each vertical strip. By Lemma 4.1, this takes \(O(\tau ^6)\) steps per strip, for a total of \(O(\tau ^6 \cdot n/\tau ) = O(n\tau ^5)\). Then, we enumerate all possible signatures, and we compute the connectivity list for each of them. By Lemma 10.1, this needs \(O(n\tau ^{8\rho + 2} \rho )\) time. We store the signatures and their connectivity lists in a data structure for later lookup.

Next, we determine the signature for each elementary box. Fix a strip \(L_i\). For each vertex q and each edge e of Q, we determine the containing faces of \({\mathcal {A}}_i\) and \({\mathcal {B}}_i\) and the pair (a, b) that represents the first and last intersection of e with the unit circles around the vertices of \(P_i\). This takes \(O(n\log \tau )\) steps in total, using appropriate point location structures for the arrangements. Now, we use the data structure from the preprocessing to connect each elementary box to its connectivity list. A simple pointer-based structure supports one lookup in \(O(\rho \log \tau )\) time. Since there are \(O(n/\rho )\) elementary boxes in one strip, we get a total running time of \(O(n \log \tau )\) per strip. Since there are \(O(n/\tau )\) strips, the resulting running time is \(O((n^2/\tau )\log \tau )\). \(\square \)

With the information from the preprocessing phase, we can easily solve the decision problem with a union-find data structure.

Lemma 10.3

Suppose that each elementary box has a pointer to its connectivity list. Then we can decide whether \(d_\text {wF}(P, Q) \le 1\) in time \(O\big (\frac{n^2(\tau + \rho )\alpha (n)}{\tau \rho }\big )\) on a pointer machine, where \(\alpha (\cdot )\) denotes the inverse Ackermann function.

Proof

Let S be the set that contains the boundary cells of all elementary boxes. Then, \(|S| = O\big (\frac{n^2(\tau + \rho )}{\tau \rho }\big )\). We create a Union-Find data structure for S. Then, we go through all elementary boxes \(B_{ij}\), and for each \(B_{ij}\), we use the connectivity list to connect those subsets of boundary cells that are connected inside \(B_{ij}\). This can be done with \(O(\tau + \rho )\) Union-operations. Hence, the total running time for this step is \(O\big (\frac{n^2(\tau + \rho )}{\tau \rho }\big )\). (We do not need any Find-operations yet, because we can store the representatives of the sets with the representatives of the connected components in the connectivity list as we walk along the boundary of an elementary box.)

Next, we iterate over the boundary cells of all elementary boxes. For each boundary cell C, we determine the neighboring boundary cells on neighboring elementary boxes that share an open door with C. For each such cell D, we perform Find-operations on C and D, followed by a Union-operation. This takes \(O\big (\frac{n^2(\tau + \rho )\alpha (n)}{\tau \rho }\big )\) time [61]. Finally, we return True if and only if (i) the pairs of start and end vertices of P and Q have distance at most 1, and (ii) C(0, 0) and \(C(n-1, n-1)\) are in the same set of the resulting partition of S. The running time follows. \(\square \)
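
The two passes of this proof can be sketched in Python as follows. This is a simplified illustration under stated assumptions: cells are identified by global coordinates, each connectivity list is a list of `(cell, component_id)` pairs, the open doors between neighboring boxes are given explicitly as pairs of cells, and the class and function names are hypothetical. The sketch also calls Find during the first pass, which the proof avoids.

```python
class UnionFind:
    """Union-find with union by rank and path compression."""

    def __init__(self):
        self.parent = {}
        self.rank = {}

    def find(self, x):
        if x not in self.parent:
            self.parent[x] = x
            self.rank[x] = 0
        root = x
        while self.parent[root] != root:
            root = self.parent[root]
        # Path compression: point every node on the path to the root.
        while self.parent[x] != root:
            next_x = self.parent[x]
            self.parent[x] = root
            x = next_x
        return root

    def union(self, x, y):
        rx, ry = self.find(x), self.find(y)
        if rx == ry:
            return
        if self.rank[rx] < self.rank[ry]:
            rx, ry = ry, rx
        self.parent[ry] = rx
        if self.rank[rx] == self.rank[ry]:
            self.rank[rx] += 1


def same_component(box_lists, open_cross_doors, source, target):
    """Merge boundary cells inside boxes, then across open doors between boxes."""
    uf = UnionFind()
    for entries in box_lists:
        first_in_component = {}
        for cell, component_id in entries:
            uf.find(cell)  # registers the cell
            if component_id in first_in_component:
                uf.union(first_in_component[component_id], cell)
            else:
                first_in_component[component_id] = cell
    for c, d in open_cross_doors:
        uf.union(c, d)
    return uf.find(source) == uf.find(target)
```

For the decision problem, `source` and `target` would be the cells C(0, 0) and \(C(n-1, n-1)\), and the distance test for the start and end vertices of P and Q is performed separately.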

The following theorem summarizes our algorithm for the decision problem.

Theorem 10.4

Let P and Q be two polygonal curves with n edges each. We can decide whether \(d_\text {wF}(P, Q) \le 1\) in \(O((n^2/\log n)\alpha (n)\log \log n)\) time on a pointer machine, where \(\alpha (\cdot )\) denotes the inverse Ackermann function.

Proof

We set \(\tau = \log n\) and \(\rho = \lambda \log n/\log \log n\), for a suitable constant \(\lambda > 0\). If \(\lambda \) is small enough, then \(n\tau ^{8\rho + 2}\rho = O(n^{4/3})\), and the algorithm from Lemma 10.2 runs in time \(O((n^2/\log n)\log \log n)\). After the preprocessing is finished, we can use Lemma 10.3 to obtain the final result in time \(O((n^2/\log n)\alpha (n)\log \log n)\). \(\square \)
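
The effect of this choice of \(\rho \) can be verified by a short calculation. Assuming that logarithms are taken to base 2, we have

\[ \tau ^{8\rho } = (\log n)^{8\lambda \log n/\log \log n} = 2^{(\log \log n) \cdot 8\lambda \log n/\log \log n} = 2^{8\lambda \log n} = n^{8\lambda }. \]

Hence, the first term in the bound of Lemma 10.2 is \(n\tau ^{8\rho + 2}\rho \le n^{1 + 8\lambda }\log ^3 n\). For \(\lambda < 1/24\), this is \(O(n^{4/3})\), so it is dominated by the second term \((n^2/\tau )\log \tau = (n^2/\log n)\log \log n\).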

10.2 A Faster Decision Algorithm on the Word RAM

Theorem 10.4 is too weak to obtain a subquadratic algorithm for the weak Fréchet distance. This requires the full power of the word RAM.

Theorem 10.5

Let P and Q be two polygonal curves with n edges each. We can decide whether \(d_\text {wF}(P, Q) \le 1\) in \(O((n^2/\log ^2 n)(\log \log n)^5)\) time on a word RAM.

Proof

We set \(\tau = \lambda \log ^2 n / \log \log n\) and \(\rho = \lambda \log n/ \log \log n\). If \(\lambda \) is small enough, then \(n\tau ^{8\rho + 2}\rho = O(n^{4/3})\), and we can perform the algorithm from Lemma 10.2 in time \(O((n^2/\log ^2 n)(\log \log n)^2)\).

We modify the algorithm from Lemma 10.2 slightly. Instead of a pointer-based connectivity list, we compute a packed connectivity list. It consists of \(O(\log n)\) words of \(\log n\) bits each. Each word stores \(\Theta (\log n / \log \log n)\) entries of \(O(\log \log n)\) bits. An entry consists of O(1) fields, each with \(O(\log \log n)\) bits. As in Lemma 10.2, the entries in the packed connectivity list represent the connected components of the connection graph \(G_B\) for the boundary cells of a given elementary box B. This is done as follows: each entry of the connectivity list corresponds to a boundary cell C of B. In the first field, we store a unique identifier from \(\{1, \dots , 2\tau + 2\rho -4\}\) that identifies C. In the second field, we store the smallest identifier of any boundary cell of B that lies in the same connected component as C. The remaining fields of the entry are initialized to 0. Furthermore, we compute for each elementary box B a sequence of \(O((\tau / \log n)\log \log n)\) words that indicates for each boundary cell of B whether the corresponding door to the neighboring elementary box is open. This information can be obtained in the same time by adapting the algorithm from Lemma 10.2.
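
The layout of a packed connectivity list can be illustrated with a small Python sketch. It is a simplification under stated assumptions: each entry has exactly two fields (the identifier of the boundary cell and the smallest identifier in its connected component), Python integers stand in for machine words, and the function names and parameters are hypothetical.

```python
def pack_entries(entries, field_bits, word_bits):
    """Pack (identifier, representative) pairs into words of word_bits bits."""
    entry_bits = 2 * field_bits
    per_word = word_bits // entry_bits
    assert per_word >= 1
    words = []
    for start in range(0, len(entries), per_word):
        word = 0
        for slot, (ident, rep) in enumerate(entries[start:start + per_word]):
            assert 0 <= ident < (1 << field_bits)
            assert 0 <= rep < (1 << field_bits)
            # First field: identifier. Second field: component representative.
            word |= ((ident << field_bits) | rep) << (slot * entry_bits)
        words.append(word)
    return words


def unpack_entries(words, count, field_bits, word_bits):
    """Inverse of pack_entries for the first `count` entries."""
    entry_bits = 2 * field_bits
    per_word = word_bits // entry_bits
    mask = (1 << field_bits) - 1
    entries = []
    for index in range(count):
        chunk = words[index // per_word] >> ((index % per_word) * entry_bits)
        entries.append(((chunk >> field_bits) & mask, chunk & mask))
    return entries
```

With fields of \(O(\log \log n)\) bits and words of \(\log n\) bits, each word holds \(\Theta (\log n/\log \log n)\) entries, which matches the word count stated above.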

Next, we group the elementary boxes into clusters. A cluster consists of \(\log n\) vertically adjacent elementary boxes from a single strip. The first set of clusters comes from the bottommost \(\log n\) elementary boxes, the second set of clusters from the following \(\log n\) elementary boxes, etc. The boundary and the connectivity list of a cluster are defined analogously to those of an elementary box. Below, in Lemma 10.6, we show that we can compute the (pointer-based) connectivity list of a cluster in time \(O((\tau \,{+}\, \rho ) (\log \log n)^4)\). Then, the theorem follows: there are \(O(n^2/(\tau \rho \log n))\) clusters, so the total time to find connectivity lists for all clusters is \(O(n^2/(\rho \log n)(\log \log n)^4 + n^2/(\tau \log n)(\log \log n)^4) = O((n^2/\log ^2 n) (\log \log n)^{5})\). After that, we can solve the decision problem in time

\[ O\Big (\frac{n^2(\tau + \rho \log n)\alpha (n)}{\tau \rho \log n}\Big ) = O\Big (\frac{n^2}{\log ^2 n}\,\alpha (n)\log \log n\Big ) \]

as in Lemma 10.3. \(\square \)

Lemma 10.6

Given a cluster, we can compute the connectivity list for its boundary in total time \(O((\tau + \rho )(\log \log n)^4)\) on a word RAM.

Proof