Abstract

We investigate the mathematical properties of event bound functions as they are used in worst-case response time analysis and utilization tests. We identify the differences and similarities between the two approaches. Based on this analysis, we derive a more general form to describe events and event bounds. This new unified approach gives clear new insights into the investigation of real-time systems, simplifies the models and will support algebraic proofs in future work. In the end, we present a unified analysis which allows the algebraic definition of any scheduler. Introducing such functions to real-time scheduling theory will lead to a more systematic way to integrate new concepts and applications into the theory. Last but not least, we show how the response time analysis in dynamic scheduling can be improved.

1 Introduction

A careful review of existing work in real-time scheduling theory shows mainly two different approaches to verify the real-time capability of an embedded system: the bound test or, more generally, the utilization based approach (Footnote 1), and the response time analysis. The bound tests compute the utilization of a hardware resource, whereas the response time analysis focuses on the behaviour of tasks. In system analysis, both approaches are helpful. However, looking at related work, the two approaches differ in a tiny detail: while the utilization based computations build on the floor operator, the response time analysis uses the ceiling operator. Nevertheless, a closer look at previous work reveals problems in formulating a utilization-based test for static scheduling and a response time analysis for dynamic scheduling. However, in the practical use of scheduling theory, the analysis of static scheduling prefers the response time analysis, while the analysis of dynamic scheduling prefers the utilization based test. The observation is that the mathematical expressiveness of both functions is limited: the floor and the ceiling operators do not support algebraic properties such as distributivity and commutativity. Besides, these operators are not analytical in the sense that calculus is not well supported. The works [12] and [43] show the limitations of the floor and ceiling operators in the context of real-time scheduling theory. Both papers postulate new analysis techniques if an event count with more mathematical expressiveness is known. From a practical perspective, real-time analysis work always covers one concrete problem, and the published algorithms solve just this particular problem. Combining different ideas is difficult because the task models often change. Sometimes different algorithms are used to address different problems in the application of the theory. Ref. [5] discusses different real-time analysis methods to compute task response times to cover multiple issues in the automotive industry and finds that different approaches are necessary to cover all aspects needed.

This paper presents an approach to address both problems directly. In other domains of science and engineering, the problem of combining discrete and continuous behaviour has already been addressed. In digital signal processing and digital control theory, both worlds, the discrete and the continuous nature of systems, are combined. The idea of this paper is to adapt mathematical models used in physics, signal theory and control theory to the problem of real-time scheduling analysis. As a result, we present

- A new universal mathematical framework, which allows replacing the purely geometric proofs, given by diagrams and known from previous work, with new algebraic and analytical methods in an intuitive way.

- A new generic approach to formulate interfering tasks in different scheduling policies,

- and therefore a unified formulation of the bound tests and the response time analysis in static and dynamic scheduling based on just one equation.

- Additionally, we adopt assumptions from the analysis of arbitrary deadlines for the analysis of response times in dynamically scheduled systems and find a deterministic and tighter analysis than in previous work.

2 Related work

Real-time systems are computer systems whose software must complete calculations within fixed deadlines. For this purpose, the algorithms of an application are split into individual tasks, and these tasks are independently executable. A system requires an operating system that generates predictable execution sequences to ensure a time-related response. If an operating system delivers predictable schedules, a mathematical model can be derived and deadline compliance can be calculated. During the Apollo missions to the moon, the first real-time computer in today’s sense was used for guidance and navigation (Apollo Guidance Computer, AGC, [33], p. 221 ff). At that time, software engineers expected that a task utilization of 80% would guarantee correct real-time behaviour of the AGC. However, on the 20th of July 1969, during the first human-crewed landing, the computer of the lunar module Eagle raised a program alarm during the descent to the moon’s surface. The computer had to be reset three times during the whole landing, and the mission was close to being aborted. A later analysis at NASA found that incorrect real-time behaviour and the missed deadline of a flight-critical task led to the problem. Later on, the mathematical analysis of [32] showed that assuming a utilization of 80% in static real-time scheduling (rate monotonic scheduling, RMS) can result in missed deadlines. [32] showed that the utilization limit of a static real-time task set depends on the number of tasks and, in the limit of a large number of tasks, is only 69%. However, because this limit is only sufficient and not necessary, it was necessary to develop further real-time tests. While [32] considered the utilization of a task set in static and dynamic scheduling, other researchers followed a different approach, computing the response times of all tasks of a task set, as given by [27]. Since both [32] and [27] assumed implicit deadlines defined by the period of events, [30] showed that deadline monotonic scheduling (DMS) is the optimal priority assignment when the deadline is smaller than the period, and [29] introduced a schedulability test on given checkpoints for DMS.
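The dependence of the utilization limit on the number of tasks can be made concrete. The following minimal sketch assumes the commonly cited form of the bound attributed to [32], \(n(2^{1/n}-1)\), which tends to \(\ln 2 \approx 0.69\) for large \(n\). The function names and the example task set are illustrative assumptions and are not taken from the cited work.

```python
import math

def rms_utilization_bound(n):
    """Sufficient utilization limit n * (2^(1/n) - 1) for n tasks under RMS."""
    return n * (2 ** (1 / n) - 1)

def rms_sufficient_test(tasks):
    """tasks: list of (c, p) pairs, execution time c and period p.

    Returns True if the total utilization stays below the bound.
    A False result is inconclusive, because the bound is only sufficient.
    """
    utilization = sum(c / p for c, p in tasks)
    return utilization <= rms_utilization_bound(len(tasks))

# Hypothetical task set with a total utilization of 0.65.
example = [(1, 4), (1, 5), (2, 10)]
print(rms_sufficient_test(example))
print(rms_utilization_bound(1000), math.log(2))
```

A task set that fails this test may still be schedulable, which is why the exact tests discussed next were developed.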

This first work in real-time scheduling theory was limited to uni-processor systems. An extension to distributed systems is given by [49], which introduces a jitter based periodic event model. Later on, the response time analysis was generalized by [40] to integrate more complex event models. The response time analysis as given by [29] is limited to systems with static priorities. The extension to dynamically scheduled (earliest deadline first, EDF) real-time systems [38, 39] needs to distinguish between different dynamic cases during analysis. This makes the approach complex. Real-time analysis distinguishes between load analysis (processor load) [32] and response time analysis [29]. Therefore, both directions are discussed independently in the literature. The utilization based approach was extended and improved by [9], who introduced deadlines shorter than periods to the bound test analysis of dynamic scheduling. However, this work supports only the periodic and sporadic event model, which does not allow the formulation of bursty events. A more general approach to model different and complex worst-case event patterns was first introduced by [23]. This event stream model can be combined very easily with Baruah’s approach, as shown by [3]. Because the analysis algorithm has a bad run-time complexity, approximations were introduced by [3] and [4] for dynamic scheduling and by [21] for static scheduling. Because the work of [23] does not model event bursts in an appropriate way, [1] introduce hierarchical event streams. Additionally, [24] use Baruah’s utilization based scheduling test to design a novel response time analysis for dynamic scheduling. Other extensions are the multiframe [34], the generalized multiframe [8] and the recurring real-time task model [6]. These techniques allow the modeling of periodic task sequences with jobs with different execution times and extend real-time scheduling theory to the domain of stream processing systems [7, 35], up to the most powerful model of [42].

In addition to these works, which belong to the classical theory of real-time systems (scheduling theory), the real-time behaviour of task systems can also be verified with the real-time calculus (RTC). The real-time calculus is based on the network calculus [13, 18, 19], which describes a mathematical framework for analyzing the flow of data in networks. [36, 46] introduce the real-time calculus and apply their work [16, 44, 45] to the analysis of network processors. It was shown that the classical methods can be replaced by the real-time calculus. In contrast to prior work, the real-time calculus allows the calculation of systems with many different scheduling strategies such as static (DMS) and dynamic scheduling (EDF), time-division multiple access (TDMA) and others. While the approach is modular, it also allows hierarchical scheduling. Finally, in [28, 40] the response time analysis as given by the classical theory was combined with the real-time calculus to build an analysis that highlights the strengths of each technique. The disadvantage of this work is that the modelling is not generic and must be redefined for each system to be modelled.

However, the existing work is split into utilization based techniques, response time analysis and the real-time calculus. Each approach has its advantages and disadvantages. Sometimes authors would like to combine the different works, but they often lack event bound functions with properties different from those given by the established theory. The need for new approaches is stated in [12, 24, 43]. Other authors prefer an analysis technique independent from the application structure [28, 40].

A way to introduce analytical proofs in real-time scheduling theory is presented in [14]. That work is limited to the busy window approach, while the goal of the work presented here is to combine utilization based tests with the response time analysis. Because our new approach uses advanced techniques from theoretical physics and signal theory, it is more compact and expressive than previous work in the real-time domain. Because of its expressiveness, it allows the formulation of a closed algebraic method. This method is open to different problems in real-time analysis. Such an approach leads to an easy formulation of utilization based and response-time based analysis in static as well as in dynamic scheduling. The idea allows a straightforward combination of both scheduling techniques without the overhead of formulating different equations and algorithms. It combines different event models and gives a new approach to the response time analysis of dynamic task systems. For the first time in the literature, we present an approach that allows an explicit function to describe different schedulers.

3 Model of computation

Different computational models to analyze real-time systems exist. In this work, we consider the bounded execution time model. We assume that the execution flow in real-time systems is separated into different tasks. A task is a kind of programming function assigned to an external or internal interrupt - an event - of the system. The tasks are periodically time- or event-controlled. Each event requests a task, and the concrete instance which occurs is called a job. Each job must be executed within a limited time interval: the deadline. In the bounded execution time model, tasks are preempted by higher priority tasks. The priority of the execution of a job can be assigned statically or dynamically. Bounding the jobs of a task by a deadline allows any scheduling permutation without any sophisticated scheduling algorithm. In static scheduling, like rate monotonic/deadline monotonic (RMS/DMS) scheduling, the priorities are assigned statically to each task depending on the request rate of the triggering events. In dynamic scheduling, like the earliest deadline first (EDF) policy, the priority of each job depends on the next approaching deadline. Therefore, the scheduling priority is not strictly assigned to tasks. In the classical scheduling theory by [32], the bound test of a task set, in the sense that all deadlines are met, is carried out by computing the utilization of resources like processors or the maximal response time of any worst-case job.

3.1 Events

A timing relationship between events is needed to compute a task set’s utilization or the response time of the worst-case job or of all other jobs. The established model defines a sequence of periodic events, and the distance in time between events is denoted by a single value: the period \(p\in \mathbb {R}{^+_0}\). Because each task has a different period, a function \(p_{\tau }:=p(\tau )\) always returns the period of the considered task. This event model has been extended to the sporadic event model, where the period is interpreted as the minimal inter-arrival time. The periodic event model with jitter allows considering distributed systems in holistic real-time analysis [47, 49]. This model was extended to include task offsets [51] and arbitrary deadlines in the response time analysis [48]. However, the more general event model first introduced by [23] has limitations in expressing bursty event patterns. Hierarchical event streams give a shorthand formulation to solve this problem. In this paper, we consider the periodic or sporadic event model and the event stream model in parallel. The periodic model is used in this work to give the reader a simple link to previous work, while the event stream model is more general and includes all derivatives like the sporadic, the bursty, or the periodic model with jitter.

Definition 1

(Event stream) An event stream is an array of event tuples or an event list. Each event tuple \(\epsilon \) describes a periodic sequence of events \(\epsilon '\):

The event stream must be valid, which means the order of the time intervals \(\phi _{\epsilon }\) must be subadditive or superadditive. If the event list does not fulfil the requirement of subadditivity, we call it an event sequence.

An event tuple consists of the period \(p\) of an event sequence and a minimal distance \(\phi \) to another event. The position of the event tuple in the stream array has a meaning: The first tuple initializes the stream. It always has \(\phi =0\). The second tuple describes the minimal distance between two events, the third between three events and so on. This means the interval given in each list element contains all previous events. Therefore each tuple represents the minimal distance of the related number of events and its periodic repetition (Footnote 2). In this work, each event sequence is indexed by \(\epsilon \). Therefore, \(p_{\epsilon }\) denotes the period of event sequence \(\epsilon \) and \(\phi _{\epsilon }\) the minimal distance \(\phi \) between \(n\) events. The number \(n\) of events is given by the position of the event tuple in the event stream. Note that in this model sporadic events can be described easily: an event which occurs only once has an infinite period.

Example 1

(Event model: periodic) Assume an event which occurs periodically every \(p\) time units:

The minimal distance of the first initial event is \(\phi =0\). The event recurs with the period \(p\).

Finally, to formulate schedulability tests, a bound function on event streams is required:

Definition 2

(Right-continuous event bound) Assume any event stream as given by Definition 1. The event bound function, or event bound, of any task is given by

Finally, the event bound of a task set is the sum of all independent task bounds (Footnote 3).
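Definitions 1 and 2 can be illustrated with a small sketch. It assumes the form of the bound commonly used in the event stream literature [23], in which every tuple \((p, \phi )\) with \(\phi \le t\) contributes \(\lfloor (t-\phi )/p + 1 \rfloor \) events. The tuple layout, the function names and the example streams are assumptions made for illustration only.

```python
from fractions import Fraction
from math import floor, inf, isinf

def event_bound(stream, t):
    """Right-continuous event bound of one event stream at time t.

    stream: list of (p, phi) tuples, period p and minimal distance phi.
    A period of inf marks an event that occurs only once.
    """
    count = 0
    for p, phi in stream:
        if t < phi:
            continue                      # this tuple has not released an event yet
        if isinf(p):
            count += 1                    # single, non-repeating event
        else:
            count += floor(Fraction(t - phi) / Fraction(p)) + 1
    return count

def task_set_bound(streams, t):
    """Event bound of a task set: the sum of the independent task bounds."""
    return sum(event_bound(stream, t) for stream in streams)

periodic = [(10, 0)]                      # one event every 10 time units
burst = [(10, 0), (10, 1), (10, 2)]       # three events within 2 time units, repeated every 10
once = [(inf, 0)]                         # an event that occurs only once

print(event_bound(periodic, 10), event_bound(burst, 2), event_bound(once, 1000))
```

Exact rational arithmetic is used so that the step positions at multiples of the period are not blurred by floating-point rounding.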

3.2 Tasks

The inter-arrival pattern of events only describes the occurrence of events. At each event, an independent part of a program is executed by the operating system. Such an execution unit is called a task \(\tau \). A real-time application is separated into several tasks. Therefore each task is an element of a task set: \(\varGamma :=\{\tau _1, \tau _2,\ldots , \tau _n\}\). All tasks must be scheduled on the given processor in such a way that all deadlines are met. A scheduler is optimal if no algorithm exists which produces a better valid schedule. In [32] it was proven that RMS is optimal for static, and EDF is optimal for dynamic scheduling. Therefore an execution time must be added to the model. Because the execution time of a task’s jobs varies, we are only interested in worst-case bounds [32]. In real-time analysis, a task is defined by an inter-arrival pattern of events and the two execution times. In the bounded execution model, the relative deadline specifies the time by which a task has to finish after being requested. If all tasks are independent, it is not necessary to consider the best-case execution time. This parameter is only needed if tasks with data dependencies are running on different processors [22].

Definition 3

(Execution time) The execution time of a task is the time the execution of the task needs if a processor exclusively executes the task with no interruption by other tasks. The execution time may depend on data attributes given to the task. Therefore we distinguish between the worst-case or maximal (\(c^{+}\), WCET) and best-case or minimal execution time (\(c^{-}\), BCET).

As we consider the bounded execution model, a deadline must be assigned to each task. The deadline is a time interval in which the execution of a task must finish. We distinguish between a relative deadline (\(d\)) and an absolute deadline (\(D\)).

Definition 4

(Relative Deadline) The relative deadline \(d_{\tau }\) of a task bounds the execution of any job relative to the request time \(t^r\) of this job.

Definition 5

(Absolute Deadline) The absolute deadline \(D^n_{\tau ,\epsilon }\) of the n’th job is related to \(t=0\). Therefore the n’th absolute deadline of the job is

During the execution of the task set, the operating system has to schedule the jobs of the task set. The operating system determines the execution order of the jobs based on the relative or absolute deadline assigned to each job. In some cases, fixed priority numbers given by the programmer replace deadline-based scheduling.

Definition 6

(Static priority) Let \(\pi \in \mathbb {N}\) and assume two independent tasks \(\tau \) and \(\tau '\). Task \(\tau '\) has a higher assigned priority than task \(\tau \) if \(\pi _{\tau '} > \pi _{\tau }\), and a task with higher priority preempts tasks with lower priority. The set of all higher priority tasks of task \(\tau \) is

Therefore, a task can be specified formally:

Definition 7

(Task) A task \(\tau \in \varGamma \) is a quadruple including the inter-arrival pattern of events \({\mathcal {E}}\), the worst-case and best-case execution time of the task and a relative deadline \(d\) by which the task execution is bounded:

Note that the relative deadline can be replaced or amended by a static priority \(\pi \). Access to the data structure of a task can be granted by task dependent functions: \(p_{\tau } = p(\tau )\), \(c_{\tau }^{+} = c^{+}(\tau )\) (Footnote 4), etc.

In some work, different execution times are assigned to each job of a task. In such a case, the execution times of a task are specified by a vector. Job-related execution times are introduced by the multi-frame task model [34]. If job-related deadlines are added, this is called the generalized multi-frame model [8]. Therefore jobs must be introduced into the task model:

Definition 8

(Job) A job is the instance of a task \(\tau _{\epsilon } \in \tau \) triggered by any event of the event stream related to a task.

4 Motivation to a unified theory

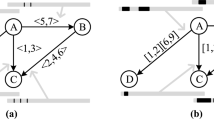

Figure 1 summarizes the two approaches for the analysis of real-time systems. The demand bound test has to check whether each value given by the demand bound is smaller than the given processor resource. On the left side of Fig. 1, we check for an intersection of the demand bound with the bisecting line to the left of the demand; for this, we must consider each left-bounded step of the demand bound function. In contrast to that approach, the response-time analysis checks for the intersection of the request bound with the processor resource. As seen on the right side of Fig. 1, only the left points must be checked for an intersection. Assuming a positive worst-case execution time of the considered task, this is the rightmost point of the request bound of all interfering tasks. Therefore, it is clear that both bounds need different mathematical properties.

4.1 Event bound approaches to real-time systems analysis

The demand bound function (dbf) is a composition of the deadline-shifted request bound function (rbf). On a given event bound function, the request bound is just the event bound multiplied by the worst-case execution time, while to construct the demand bound each deadline shifts this function to the right [9], as shown on the left side of Fig. 1:

It is necessary to count the number of interfering events of a task’s job to compute the utilization of a resource or the worst-case response time. In other words, this means that the analysis adds the execution time of a job to the response time of the considered task if both interfere in the same time interval. Related work formulates variations of such a request bound to model the interfering of higher priority tasks.
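The relation between the request bound and the demand bound described above can be sketched for the periodic case. The sketch assumes the right-continuous event bound \(\lfloor t/p + 1 \rfloor \), integer periods, deadlines not larger than the periods, and the test condition that the summed demand never exceeds \(t\) up to the hyper-period. All function names and the task tuples `(p, c, d)` are hypothetical.

```python
from fractions import Fraction
from math import floor, lcm  # math.lcm requires Python 3.9 or newer

def event_bound(t, p):
    """Right-continuous periodic event bound floor(t/p + 1), zero for t < 0."""
    if t < 0:
        return 0
    return floor(Fraction(t) / p) + 1

def rbf(t, p, c):
    """Request bound: event bound multiplied by the worst-case execution time."""
    return event_bound(t, p) * c

def dbf(t, p, c, d):
    """Demand bound: the request bound shifted to the right by the deadline d."""
    return rbf(t - d, p, c)

def demand_bound_test(tasks):
    """tasks: list of (p, c, d). Checks every step of the demand bound up to the hyper-period."""
    hyper = lcm(*(p for p, _, _ in tasks))
    checkpoints = sorted({d + n * p
                          for p, _, d in tasks
                          for n in range(hyper // p + 1)
                          if d + n * p <= hyper})
    return all(sum(dbf(t, p, c, d) for p, c, d in tasks) <= t
               for t in checkpoints)

print(demand_bound_test([(4, 1, 4), (6, 2, 6)]))
print(demand_bound_test([(4, 3, 4), (6, 3, 6)]))
```

Only the absolute deadlines are used as checkpoints, because the demand bound changes its value only at these left-bounded steps.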

In contrast to the event bound used in the bound test, the event bound in response time analysis is given by a left-continuous function [29]:

What is the reason for the difference? As mentioned, the demand bound test has to check the left points of the demand bound, while the busy window approach looks for an intersection on the right side of the request bound with the intersecting line. The initial value of the response time analysis is the worst-case execution time of the considered task. In contrast to the demand bound test, the time point \(t=0\) does not matter, because the minimal response time occurs when a task is not interrupted and then always equals the best- or worst-case execution time. As this time interval is the starting point of the fixed-point iteration, 0 never occurs in the equation. However, to find the intersection with the resource function, the bound must be left-continuous. Therefore, at the end of the busy interval, an event should not be counted. If a task finishes its execution and at that exact moment a new task is requested, this request is superfluous for the analysis.

It is obvious that the right-continuous event bound is not equivalent to the left-continuous event bound, since \(\left\lfloor \frac{0}{p_{\tau }}+ 1 \right\rfloor = 1 \ne 0 = \left\lceil \frac{0}{p_{\tau }} \right\rceil \). However, in this work we want to consider the different properties of both functions to extend the theory, and therefore we discuss them in more detail:

Lemma 1

(Identity) The right-continuous and the left-continuous event bound functions are not identical.

Proof

To keep the proof simple, we only prove the lemma in the periodic case. Consider \(t_n,t' \in \mathbb {R}{^+}\) and \(\forall \tau \in \varGamma : 0 \le t \le p_{\tau }\). We investigate the three periodically repeating intervals \(\varDelta _{n-1,n} = (\{n-1\}p_{\tau }, np_{\tau }]\), \(\varDelta _{n,n+1} = [n p_{\tau }, \{n+1\}p_{\tau }]\) and \(\varDelta _{n+1,n+2} = (\{n+1\} p_{\tau }, \{n+2\}p_{\tau }]\) to find the properties of the bounds given in scheduling theory. Additionally assume \(\forall n \in \mathbb {N}{_0}: t_n := np_{\tau }+t'\); then the right-continuous event bound function has the following properties:

The properties of the left-continuous event bound are given by

Both functions are unequal at all time points \(t := np_{\tau }\). The event bound considered for only one task can easily be extended to the whole task set: because the event bound of a task set is the sum of the individual task bounds, the inequation also holds for the task set. \(\square \)

To conclude, the right-continuous event bound and the left-continuous event bound differ at all time points \(t_n=np_\tau \).
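The statement of Lemma 1 can be checked numerically for the periodic case. The sketch below assumes the two periodic bounds \(\lfloor t/p_{\tau } + 1 \rfloor \) and \(\lceil t/p_{\tau } \rceil \) that appear in the comparison at \(t=0\) above. The period and the sampling grid are arbitrary choices for illustration.

```python
from fractions import Fraction
from math import ceil, floor

def right_continuous_bound(t, p):
    """Periodic event bound as used in the bound test: floor(t/p + 1)."""
    return floor(Fraction(t) / p + 1)

def left_continuous_bound(t, p):
    """Periodic event bound as used in response time analysis: ceil(t/p)."""
    return ceil(Fraction(t) / p)

p = 5
samples = [Fraction(k, 2) for k in range(41)]   # 0, 0.5, 1, ..., 20
differ = [t for t in samples
          if right_continuous_bound(t, p) != left_continuous_bound(t, p)]

# The two bounds disagree only at integer multiples of the period.
print([int(t) for t in differ])
```

On this grid the bounds disagree at 0, 5, 10, 15 and 20 and agree everywhere else, which matches the conclusion of the lemma.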

4.2 Problem formulation

Is it necessary to use two different functions? The goal of this work is to find a function which is right-continuous in all \(t_n=np_\tau \) except the last one, which should be left-continuous. Therefore, the utilization test and the response time analysis should use the same function except at the end of the considered timing interval. Remember, the demand bound test evaluates all event requests until the hyper-period \({\mathcal {P}}\), and the response-time analysis counts all events until the result of the last iteration. In the response time analysis, in contrast, only the last time point must necessarily be considered, and it should be left-continuous. The left- and the right-continuous event bounds only differ in their left and right-hand limits of the investigated timing interval. If we extend the event bound function to \(\mathbb {E}_\tau : \mathbb {R}^2 \rightarrow \mathbb {R}\), we can specify a bound time interval \(\varDelta _{a,b} := [t_a,t_b)=[a,b)\) which restricts the time in which the event bound counts. If we integrate the hyper-period as a bounding restriction into the demand bound function, it is possible to formulate a general unified event bound. Let us discuss this idea more generally:

Problem 1

(Unified event bound function or unified event bound, ueb) Investigate whether a function with the following properties exists: Assume the time interval \(\varDelta _{a,b} = [t_a, t_b)\) and each time point is given by \(\forall n \in \mathbb {N}{_0}: t_n := np_{\tau }+t'\). In the interval \(\varDelta _{a,b}\) a unified event bound function \(\mathbb {E}: \varGamma \times t^2 \rightarrow \mathbb {N}\) of any task must fulfill the following properties:

Such a function is equivalent to the right-continuous event bound except at \(t=t_b\). Note that for the response time analysis only this point in time is relevant, and it is not necessary that all other points of the function are left-continuous. Therefore this function can be used for bound tests as well as for response time analysis. A function with these properties is postulated in [12, 43]. Both papers accepted an over-approximation by using the right-continuous request bound. These properties follow directly from Lemma 1.
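One possible reading of Problem 1 for the periodic case is to count the release times \(np_{\tau }\) that lie in the half-open interval \([t_a, t_b)\) and do not exceed \(t\). The sketch below follows this reading. It is an illustrative assumption and not the function derived later in the paper; the name `unified_bound` and its argument order are hypothetical.

```python
from fractions import Fraction
from math import ceil, floor

def unified_bound(t, p, t_a, t_b):
    """Count releases n*p (n >= 0) with t_a <= n*p <= t and n*p < t_b."""
    if t < t_a or t_b <= t_a:
        return 0
    first = max(0, ceil(Fraction(t_a) / p))      # smallest n with n*p >= t_a
    if t < t_b:
        end = floor(Fraction(t) / p) + 1         # inside the interval: right-continuous
    else:
        end = ceil(Fraction(t_b) / p)            # at the end t_b: left-continuous
    return max(0, end - first)

p = 5
print(unified_bound(5, p, 0, 10))    # inside [0, 10): the release at t = 5 is counted
print(unified_bound(10, p, 0, 10))   # at t_b = 10: the release at t = 10 is not counted
```

Inside the interval the value equals \(\lfloor t/p_{\tau } + 1 \rfloor \), while at \(t=t_b\) it equals \(\lceil t_b/p_{\tau } \rceil \) for \(t_a=0\), which is the behaviour asked for above.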

Problem 2

(Postulated demand bound test) Assume the existence of a unified event bound function. Then the demand bound test in the periodic event model can be written as:

Problem 3

(Postulated Response Time Analysis) If a unified event bound function exists, the response time in the periodic event model can be written as:

Assume the following definition for interfering tasks: \(\tau ' \in \tau \cup \overline{\varGamma }_{\tau }\), then the response time analysis can be reformulated as the well known fixed-point iteration. In other words, the response time analysis will become a root-finding problem:

The idea to integrate the request of the considered task and all higher priority tasks in just one function simplifies the mathematical framework. As we will see later in this paper, the concept of a unified event bound allows the integration of different task models developed during the past decades into only one analysis approach. This opens a way to transfer a lot of existing work on bound tests to the busy-window approach and vice versa. If the unified event bound function exists for event streams as well, the new framework is applicable to each event model published during the last decades.
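The well-known fixed-point iteration referred to in Problem 3 can be sketched in its classical form for static priorities, \(R = c^{+}_{\tau } + \sum \lceil R/p_{\tau '} \rceil c^{+}_{\tau '}\) over all higher priority tasks \(\tau '\), starting from the worst-case execution time of the considered task. This is the established formulation with the left-continuous bound, not the unified equation of this paper. The function name, the `limit` parameter and the task values are assumptions for illustration.

```python
from fractions import Fraction
from math import ceil

def response_time(c, higher, limit):
    """Worst-case response time of a task with execution time c.

    higher: list of (p, c) pairs of all higher priority tasks.
    limit:  abort threshold, for example the deadline of the task.
    Returns the fixed point, or None if the iteration exceeds the limit.
    """
    r = c                                           # start value: own execution time
    while r <= limit:
        interference = sum(ceil(Fraction(r) / p_j) * c_j for p_j, c_j in higher)
        nxt = c + interference
        if nxt == r:                                # fixed point reached
            return r
        r = nxt
    return None

# Hypothetical task set: (p, c) = (5, 1), (8, 2), and a task with c = 5.
print(response_time(5, [(5, 1), (8, 2)], limit=20))
```

For the lowest priority task of this example the iteration visits 5, 8, 9, 11 and 12 and stops at 12, where the request of all interfering tasks intersects the processor resource.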

4.2.1 Goals and organization

The goal of this work is to find a unified event bound and to investigate its mathematical properties. As shown in the previous section, the right- and left-continuous event bounds only differ in their limits at the integer times \(t_n = n \cdot p_{\tau }\). If we treat this as a limit value problem, calculus should be used to solve it. Digital signal theory and theoretical physics already handle discrete events or objects in a continuous environment. Therefore these methods are adapted to real-time analysis. Digital signal processing describes discrete signals by a series of Dirac pulses. This idea can be used to count events as well. Because digital signal processing builds upon a rich mathematical framework, these methods become applicable to real-time systems. This mathematical framework has one significant advantage compared with approaches in related work: Computation is not limited to bound functions. By using Dirac deltas to count events, it is possible to apply algebraic operations directly on events before computing the bound function. This advantage allows the construction of interference bounds that model all kinds of different real-time schedulers. Therefore, problems in real-time analysis can be expressed more simply and expressively than in the established work. As we will see later in the paper, the response time of static and dynamic scheduling is computed by only one expression. This new method is so powerful that other priority schemes are easily described in the same way.

The paper is organized as follows: First, we derive a unified event bound function using methods from calculus and distribution theory. Second, we show how hierarchical event streams can be easily described and computed by using the Dirac delta function. Based on this idea, we develop a unified real-time scheduling theory considering static and dynamic priorities in one holistic approach for bound tests as well as response time analysis. For the first time, we derive both analysis techniques from only one axiom, the average load of a processor. We then prove past results just by using the new theory. Applying the new theory to past results shows how the unified theory gives an algebraic toolbox for proofs. It is no longer necessary to argue geometrically about task and job requests in time-based Gantt diagrams, as widely done in related work. The new approach is more straightforward and leads to accessible computational models. As a special treat, at the end we develop a tighter response time analysis for dynamic scheduling than given in related work, just by adding the same assumption to dynamic scheduling that is already used to model tasks with arbitrary deadlines in static scheduling. In the end, we compute the response times of some interesting task sets in static, dynamic and hierarchical (Footnote 5) scheduling. The paper ends with Appendix A, which summarizes the mathematical symbols used and explains special notations borrowed from theoretical physics. An additional Appendix B discusses a complete example calculated by a computer algebra system (CAS).

5 The unified event bound function

In this section, we develop a strictly formal view of events as known from signal theory. The idea is to express all needed mathematical properties explicitly in the model without any informal or hidden assumptions. First, we introduce events, and then we show how they can be counted in an alternative way compared to the floor and ceiling operations. We discuss the mathematical properties and show how the new method is related to previous work.

5.1 A mathematical view on events and tasks

In real-time systems analysis or scheduling theory, events and jobs are introduced semi-formally. Tasks, or rather jobs, are often given as geometrical objects such as rectangles in Gantt charts. The length of the rectangle then models the execution demand of the job, and the position of the rectangle gives its placement in time. The height of the rectangles does not matter and is most often set to 1, as seen in Fig. 2a. The goal of the following section is to formalize release times and time durations appropriately. Therefore we transform informal geometric proofs into analytical descriptions which are computed algebraically.

5.1.1 Modeling jobs

In each computer system, a computational activity has a duration or, in other words, an execution time. The span between the release of a job and the end of its non-preempted execution starts at a defined point in time \(t_a\) and ends later at a second point in time \(t_b\). If the job is not interrupted by any other activity, this time is called the worst-case execution time \(c^{+}\). If we assume independent tasks on a single processor, we can concentrate on \(c^{+}\). Calling \(t_a\) the request time, each job of a task ends after \(c^{+}\) if no other job interrupts the execution. Therefore the job finishes at \(t_b=t_a + c^{+}\). Figure 2a shows such a simple behaviour as it is described by a Gantt chart in most of the previous work. During the execution of a job, the processor is busy and has a utilization of one. In contrast to related work, we first look for an algebraic formulation of this behaviour. Formally, the geometric Gantt chart description of a job can be replaced by a composition of Heaviside functions.

Definition 9

(Heaviside function) Assume \(s \in [0,1]\). The Heaviside or step function \({\mathbb {H}}: \mathbb {R}\rightarrow \{0,1\}\) is defined (Footnote 6) as

\[{\mathbb {H}}(t) = \begin{cases} 0 & t < 0 \\ s & t = 0 \\ 1 & t > 0 \end{cases}\]

Based on this definition, it is easy to introduce the concept of the Dirac delta function, or delta function for short, which becomes our basis for defining events formally:

Definition 10

(Dirac delta) The Dirac delta function \(\delta (t)\) is given by:

This equation does not define a function in the traditional sense. Therefore it is correctly called a distribution. It was first introduced by Paul Dirac in the early 1930s and is a well-established mathematical tool in theoretical physics and signal theory [15]. As we will see later, the idea of Paul Dirac can be applied to find and define the unified event bound. It is very important to keep in mind that \(\delta (t) = 0\) for all \(t \ne 0\), which follows directly from the definition.

Let us next consider how any job of task \(\tau \) with execution time \(c_{\tau }^{+}\) requested at time \(t_{\epsilon '}\) can be modeled. Let us first assume that all jobs have the same execution demand. We call such a task homogeneous.

Lemma 2

(Dirac job) A job requested at time \(t_{\epsilon '}\) is modeled by

Proof

A non-preemptive real-time task instance or job needs two Heaviside functions for its algebraic description: one to represent the request \({\mathbb {H}}(t-t_a)\) and one to model the completion of the task \({\mathbb {H}}(t_b-t)\). Consider Fig. 2b. Multiplying both functions builds a rectangle of height one defined by the execution function \(c: \mathbb {R}\rightarrow \{0,1\}\):

Alternatively, it is possible to use

It is important to note that such a description does not consider preemption, and therefore, it does not support the bounded execution model completely. As a consequence, it is necessary to model the behaviour of interfering computational loads such as interrupts and higher priority jobs explicitly. The idea of the following is to describe the occurrence frequency of jobs and their requested load concerning the available computation time in a given time interval \([t_a,t]\). Let us first rewrite the equation for the computational load without changing anything (Footnote 7):

The complete non-preempted execution starting at \(t_a\) is given by

The release of each job needs a context switch at the beginning and end of execution, and this context switch takes some time \(\varepsilon \). It is common practice in scheduling theory not to consider this time (Footnote 8). However, to catch the value limitation problem, we first introduce this overhead and later remove it mathematically:

For our first event \(\epsilon '\) we are not interested when it starts so let us move it to the origin \(t_a=0\):

Let us now modify Eq. 27 by describing the computational load not by its horizontal time:

Modelling preemptive jobs by applying Eq. 31

In real-time analysis, we are interested only in the load given by the real-time tasks themselves. Such an assumption is permissible because the job’s execution time is much longer than the interruption time caused by the operating system, so the latter can be ignored. Therefore we look at what happens if the operating system overhead approaches 0. Mathematically the request span can be simplified under the assumption that \(\int \limits _{-\infty }^{\infty } \! \frac{1}{2 \varepsilon } {\mathbb {H}}(t-\varepsilon )\cdot {\mathbb {H}}(\varepsilon -t) \, \mathrm {d}t=1\). The obvious solution, eliminating the time \(2\varepsilon \) in Eq. 28 by setting \(\varepsilon =0\), does not work in general. If we assume a Heaviside function with \(s=0\), then \({\mathbb {H}}(t-\varepsilon ){\mathbb {H}}(\varepsilon -t)=0\) and not 1. Therefore, we choose \(\varepsilon \) in such a way that all possible Heaviside functions with \(s \in [0,1]\) are supported as well. Mathematically we apply a limit analysis to the problem: If the term addressed by the integral is divided by \(2 \varepsilon \) and \(\varepsilon \rightarrow 0\) we get

Assume the substitution \(\delta (0) = \lim \nolimits _{\varepsilon \rightarrow 0}\frac{1}{2 \varepsilon } \cdot {\mathbb {H}}(t-\varepsilon ) \cdot {\mathbb {H}}(\varepsilon -t)\) to rewrite Eq. 28. Consider Fig. 2c for illustration. Therefore,

And if we like to consider a job requested at time \(t_{\epsilon '}\):

\(\square \)
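The construction used in this proof can be checked numerically. The following is a minimal sketch, not the paper's own formulation: it assumes that the normalised pulse is a rectangle of width \(2\varepsilon \) and height \(1/(2\varepsilon )\) centred on the request time, and the helper names `heaviside`, `job`, `pulse` and `integrate` are hypothetical. It illustrates that the area under the job rectangle equals the execution time and that the area under the pulse stays 1 while \(\varepsilon \) shrinks.

```python
def heaviside(x: float, s: float = 1.0) -> float:
    """Step function: 0 for x < 0, 1 for x > 0 and the chosen value s at x == 0."""
    if x < 0:
        return 0.0
    if x > 0:
        return 1.0
    return s


def job(t: float, t_a: float, c: float) -> float:
    """Execution function of a non-preempted job: 1 while it runs, 0 otherwise."""
    return heaviside(t - t_a) * heaviside(t_a + c - t)


def pulse(t: float, eps: float) -> float:
    """Assumed pulse shape: width 2*eps, height 1/(2*eps), centred on t = 0."""
    return heaviside(t + eps) * heaviside(eps - t) / (2.0 * eps)


def integrate(f, lo: float, hi: float, steps: int = 20_000) -> float:
    """Midpoint-rule approximation of the integral of f over [lo, hi]."""
    dt = (hi - lo) / steps
    return sum(f(lo + (i + 0.5) * dt) for i in range(steps)) * dt


if __name__ == "__main__":
    # Area of a job released at t_a = 2 with execution time 3.
    print(integrate(lambda t: job(t, 2.0, 3.0), 0.0, 10.0))
    # The pulse area does not depend on eps.
    for eps in (0.1, 0.01, 0.001):
        print(eps, integrate(lambda t: pulse(t, eps), -1.0, 1.0))
```

Multiplying the pulse by an execution time therefore yields a peak whose integral is exactly that execution time, which is the property the Dirac job relies on.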

5.1.2 Events as Dirac delta

Let us now apply the delta function by defining events as needed in real-time systems analysis in a strictly formal way:

Definition 11

(Event) An event \(\epsilon ': \mathbb {R}\rightarrow [0,1]\) is a request at a point in time \(t_{\epsilon '} \in \mathbb {R}\) with infinitely short time span:

The time point \(t_{\epsilon '}\) is called the request time of the event.

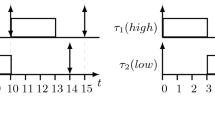

In other words, an event is a timeless state change in any system. Computing only the area bounded by a given Heaviside function does not allow us to consider preemption as needed in the bounded execution model. Multiplying a Dirac delta with any given WCET results in a peak with the amplitude of the execution time at the request time of the event, as shown in Fig. 3a. As time t progresses, the value of these peaks is reduced by exactly t. Because the model considers the release time of events, we can add a peak of execution time at any time an interfering job of higher priority interrupts the execution of the considered job. Consider Fig. 3, which illustrates the idea. At time \(t=0\) tasks \(\tau _1\) and \(\tau _2\) are requested. After the specified period \(p_{1}\) task \(\tau _1\) is requested again. Figure 3b shows the behaviour of the resulting function. Note that such a sawtooth function is equal to the well-known request bound function of these two tasks minus t. Changing the point of view transforms the established fixed-point iteration of the busy window approach into finding the roots of the equivalent sawtooth wave.
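The equivalence between the busy window iteration and the first root of the sawtooth can be illustrated with a short sketch. It assumes the classical ceiling-based request bound function for strictly periodic tasks released at \(t=0\) and integer time units; the task parameters and the function names `rbf`, `sawtooth` and `busy_window` are hypothetical and not taken from the paper.

```python
def rbf(t: int, tasks) -> int:
    """Classical request bound: sum of ceil(t / p) * c over all (c, p) tasks."""
    return sum(-(-t // p) * c for c, p in tasks)


def sawtooth(t: int, tasks) -> int:
    """Requested work not yet served at time t (request bound minus t)."""
    return rbf(t, tasks) - t


def busy_window(tasks, limit: int = 10_000) -> int:
    """Fixed-point iteration t <- rbf(t), started at the sum of execution times."""
    t = sum(c for c, _ in tasks)
    while t <= limit:
        nxt = rbf(t, tasks)
        if nxt == t:
            return t
        t = nxt
    raise ValueError("no fixed point below the limit (overload?)")


if __name__ == "__main__":
    tasks = [(2, 5), (4, 20)]  # (execution time, period), illustrative values
    end = busy_window(tasks)
    print("busy window ends at", end)
    print("sawtooth values:", [sawtooth(t, tasks) for t in range(1, end + 1)])
```

For this task set the iteration stops at the first time point where the sawtooth reaches zero, and the sawtooth is strictly positive before that point.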

5.1.3 Event models

The definition of only one event does not support the modelling of tasks as a sequence of jobs. Therefore a formal description of a series or sequence of events is required to model sequential jobs. Mathematically this is expressed by a series of Dirac deltas called a Dirac comb in the case all events are strictly periodic. However, the general way to describe any sequence of requesting events is to describe event streams. An event stream can be described by a Dirac comb as well:

Definition 12

(Event density) A sequence of k events is given by:

which is called an event stream or event density (Footnote 9). Therefore an event sequence (Footnote 10) \(\epsilon \) specifying k periodic events \(\epsilon '\) with offset can be written with the event tuple:

Moreover, as a short form notation a set of corresponding event tuples defines the event density formally:

We choose the notation \(<a,b>_k\) to distinguish the new approach clearly from the event stream notation.

This definition introduces a new perspective and insight into event streams. A mathematical equation with precisely defined mathematical properties now describes an event stream, instead of only a weak set of tuples. To describe event densities which model valid event streams, we assume a maximal event density and a minimal event density. Both are event densities which have the mathematical property of sub- or super-additivity. Additionally, it is very easy to bound the number of events. In contrast to previous models, the term event density allows specifying a fixed number of events as a sporadic or bursty event stream.

Example 2

(Periodic event model) The periodic event model describes an infinite number of periodic events. Assume \(\phi _{\epsilon }=0\), therefore \(k=\infty \) and the sequence of events is given by

Assume a sporadic event which occurs only once. In the event stream model, the definition of the event bound requires setting the period of the event tuple of the sporadic event to \(p_\tau =\infty \). Now the period is zero if an event occurs only once, and the sum limits the occurrence:

Example 3

(Sporadic event) An event which is sporadic and which occurs only at \(t_\epsilon = 10\,\text {ms}\) is described by

as originally defined by [23].

Example 4

(Periodic event model with jitter) First assume the established periodic event model with jitter:

Now suppose we want to describe only four events in this model:

Such a description is natural, easier to understand, and more expressive than the original form.
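The event models of the examples above can be represented directly as data. The sketch below is an illustration under assumptions: it encodes one event tuple as offset, period and number of events, following the description of Definition 12, and leaves jitter out. The class `EventTuple` and the function `event_times` are hypothetical names.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class EventTuple:
    """One component of an event density: offset, period and event count."""
    offset: float
    period: float
    count: Optional[int] = None  # None models an unbounded number of events


def event_times(stream: List[EventTuple], horizon: float) -> List[float]:
    """Request times of all events of the stream that occur before the horizon."""
    times = []
    for e in stream:
        if e.count is None and e.period <= 0:
            raise ValueError("an unbounded event tuple needs a positive period")
        n = 0
        while e.count is None or n < e.count:
            t = e.offset + n * e.period
            if t >= horizon:
                break
            times.append(t)
            n += 1
    return sorted(times)


if __name__ == "__main__":
    sporadic = [EventTuple(offset=10, period=0, count=1)]  # single event at 10 ms
    four_events = [EventTuple(offset=0, period=5, count=4)]
    periodic = [EventTuple(offset=0, period=5)]
    print(event_times(sporadic, 100))
    print(event_times(four_events, 100))
    print(event_times(periodic, 12))
```

The single sporadic event uses a period of zero and a count of one, so the bound on the sum, not the period, limits its occurrence.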

5.1.4 To count or not to count

After defining the event density, we have to consider how to count the events. As we have seen in Lemma 2, the execution time of a job is computed by integrating a couple of Dirac deltas. Therefore, we find the number of events by integrating over a series of Dirac deltas or events, called the event density. The integral also gives us the freedom to sum over event densities in any given time interval. As we will see, this is a significant advantage compared to the counting of events in related work.

5.1.5 Counting by integrating Dirac deltas

To compute the execution demand of a processor, events and a series of events must be counted during a given time. This number of events then is multiplied by the specified execution time of the task. By changing the event model to Dirac deltas, we have to count the number of deltas in a given timing interval. Integrating the series of Dirac deltas results in the number of events given in the time bounded by the limits of the integral:

Lemma 3

(Finite event bound) Assume any time interval \(\varDelta _{a,b} := [t_a,t_b] \subset \mathbb {R}\). The number of events can then be counted by a function \(\mathbb {E}: \varGamma \times \mathbb {R}\times \mathbb {N}\rightarrow \mathbb {N}\)

Also, in the particular case of the periodic event model with infinitely many events, this simplifies to:

Proof

We have to sum all events of an event density:

According to the definition of an event \(\epsilon (t_{\epsilon }) = \int \nolimits _{-\infty }^{\infty }{\delta (t'-t_{\epsilon })} \, \mathrm {d}t'\), we get for any event density in \(\varDelta _{a,b}\)

By definition the number of event tuples is limited and the series given by equation 43 converges absolutely for \(k \in [0,\infty )\), because of the bound \(\varDelta _{a,b}\) and \(n \in \mathbb {N}\). In the case of a finite number of events, the convergence of the sum is trivial. Therefore, the integral and the sum can be switched:

Note that the first assumption does not hold if \(\varDelta _{a,b} = (-\infty ,\infty )\). However, as we will see later, the sum or event density is always limited. \(\square \)

By definition of the unified event bound, we do not want to count events at \(t_b\). However, by definition in calculus, the Riemann integral is bounded by the interval \([t_a, t_b]\), and this is not the postulated interval \(\varDelta _a^b = [t_a, t_b)\). Transforming the limits of the integral into a right-open interval can be done by masking the desired interval with the help of Heaviside functions. In this case, two variants of the infinite set of Heaviside functions as defined in Definition 9 are needed:

Definition 13

(Upper Heaviside function) Assume the general Heaviside function with \(s \in [0,1]\) and let \(s = 1\). Then the upper Heaviside function \(\overline{{\mathbb {H}}}: \mathbb {R}\rightarrow \{0,1\}\) is

\[\overline{{\mathbb {H}}}(t) = \begin{cases} 1 & t \ge 0 \\ 0 & t < 0 \end{cases}\]

Definition 14

(Lower Heaviside function) Assume the general Heaviside function with \(s \in [0,1]\) and let \(s = 0\). Then the lower Heaviside function \(\underline{{\mathbb {H}}}: \mathbb {R}\rightarrow \{0,1\}\) is

\[\underline{{\mathbb {H}}}(t) = \begin{cases} 1 & t > 0 \\ 0 & t \le 0 \end{cases}\]

By applying Definitions 13 and 14 to Lemma 3 we can formulate the unified event bound as illustrated in Fig. 4:

Theorem 1

(Unified event bound function, ueb) Assume \(\mathbb {E}: \varGamma \times \mathbb {R}^2 \times \mathbb {N}\rightarrow \mathbb {N}\), then the number of events in any bounded interval \(\varDelta _{a,b} = [t_a, t_b)\) can be counted by

in the special case of a periodic event model this becomes

Proof

Assume we count the events in a bounded interval \([t_a,t_b]\). Instead of the infinite interval given in Eq. 40, we bound the integral by its limits:

The limits of the integration include \(t_a\) and \(t_b\) by definition. Since \(\overline{{\mathbb {H}}}\left( t'-t_a\right) \cdot \overline{{\mathbb {H}}}\left( t_b-t'\right) =1\) only if \(t_a \le t' \le t_b\) and 0 in all other cases, equation (51) can be rewritten as:

Changing the term \(\overline{{\mathbb {H}}}\left( t_b-t'\right) \) to \(\underline{{\mathbb {H}}}\left( t_b-t'\right) \) excludes \(t_b\) from the bound (Footnote 11). Therefore, the integration over \(\varDelta _{a,b} = [t_a,t_b)\) can be formulated as

We observe that this integral is not only bounded by \(\varDelta _{a,b}\). Assume \(t_a \le t < t_b\), then this function is also bounded by t, and therefore we can write (Footnote 12)

The proof for the periodic or sporadic model is obvious.\(\square \)
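The counting argument of the proof can be sketched in code. The example assumes a single periodic event tuple with offset `phi`, period `p` and at most `k` events, and it uses the sifting property of the Dirac delta: integrating a delta at \(t_n\) against the mask simply evaluates the mask at \(t_n\). The function names are hypothetical, and the additional bound by t discussed at the end of the proof is left out.

```python
def upper_h(x: float) -> int:
    """Upper Heaviside function: the boundary x == 0 is included."""
    return 1 if x >= 0 else 0


def lower_h(x: float) -> int:
    """Lower Heaviside function: the boundary x == 0 is excluded."""
    return 1 if x > 0 else 0


def unified_event_count(phi: float, p: float, k: int, t_a: float, t_b: float) -> int:
    """Number of events phi + n*p with 0 <= n < k inside the interval [t_a, t_b)."""
    total = 0
    for n in range(k):
        t_n = phi + n * p
        # Sifting property: the integral over delta(t' - t_n) * mask(t') is mask(t_n).
        total += upper_h(t_n - t_a) * lower_h(t_b - t_n)
    return total


if __name__ == "__main__":
    print(unified_event_count(0, 5, 10, 0, 10))   # the event at t_b = 10 is excluded
    print(unified_event_count(0, 5, 10, 0, 11))
    print(unified_event_count(0, 5, 2, 0, 100))   # the count is limited by k
    print(unified_event_count(3, 5, 10, 3, 13))   # shifted stream, shifted interval
```

Shifting the offset and the interval by the same amount leaves the count unchanged, which reflects the time invariance of the bound.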

In worst case response time analysis and the demand bound test, the starting time of the analysis interval is implicitly set to \(t_a = 0\) by definition. Therefore, \(\varDelta _{0,b} = [0,t_b)\). Then the unified event bound function can be written as

Compare Eq. 57 with Eq. 41 given by Lemma 3, which shows the limitation of the scheduling theory: In contrast to previous work, the unified event bound allows computing the number of events in any time interval. Therefore the computation of the bound can be moved to any time point \(t \in \mathbb {R}\), and the unified event bound is invariant in time. However, Theorem 1 has additional properties useful in real-time scheduling analysis: Because the event bound is defined by integrating over Dirac pulses and limiting this integral by two different Heaviside functions, the upper and lower Heaviside function, we find several different bounds if we combine different descriptions for the integral limits. This results in four different cases:

- i. The bound of the number of events k.
- ii. The lower timing bound \(t_a\) defined by the lower Heaviside mask or the lower limit of the integral.
- iii. The timing bound t given by the limitation of the Dirac comb or the upper limit of the integral.
- iv. The upper timing bound \(t_b\) as defined by the upper Heaviside mask.

The first result of this paper shows that previous work defined different event bound functions because it did not consider the limits of time intervals as is done in calculus. We have proven that a unified function has to consider the limits of a well-defined integration problem, as we can see in Fig. 4. Additionally, we know from distribution theory that the Dirac delta is the derivative of the Heaviside function [15]. As a consequence, it is natural to call an event stream an event density: The event count in the real-time analysis is equal to an integral over a dense series of Dirac deltas.

Definition 15

(Heaviside mask) The pair of Heaviside functions limits the integration interval by masking bounds:

With \({\mathbb {H}}(t-t_a)\) the left or early mask and \({\mathbb {H}}(t_b-t)\) the right or late mask. Note, that both Heaviside functions can be upper or lower Heaviside functions. Therefore four different masks exist: \({\mathbb {M}}(t,\varDelta _{\overline{a}}^{\overline{b}})\), \({\mathbb {M}}(t,\varDelta _{\overline{a}}^{\underline{b}})\), \({\mathbb {M}}(t,\varDelta _{\underline{a}}^{\overline{b}})\) and \({\mathbb {M}}(t,\varDelta _{\underline{a}}^{\underline{b}})\).
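The effect of the four mask variants is easiest to see at the interval boundaries. The following sketch assumes that the mask is the product of the left and the right Heaviside function and that the parameter s selects the upper (s = 1) or lower (s = 0) variant; the function names and the sample events are hypothetical.

```python
def heaviside(x: float, s: int) -> int:
    """Step function with value s at x == 0 (s = 1: upper, s = 0: lower)."""
    if x < 0:
        return 0
    if x > 0:
        return 1
    return s


def mask(t: float, t_a: float, t_b: float, s_a: int, s_b: int) -> int:
    """Product of the left (early) and the right (late) Heaviside function."""
    return heaviside(t - t_a, s_a) * heaviside(t_b - t, s_b)


def count(events, t_a: float, t_b: float, s_a: int, s_b: int) -> int:
    """Number of events selected by the mask."""
    return sum(mask(t, t_a, t_b, s_a, s_b) for t in events)


if __name__ == "__main__":
    events = [0, 5, 10]  # events on both boundaries of the interval from 0 to 10
    for s_a, s_b, name in [(1, 1, "[0, 10]"), (1, 0, "[0, 10)"),
                           (0, 1, "(0, 10]"), (0, 0, "(0, 10)")]:
        print(name, count(events, 0, 10, s_a, s_b))
```

Only events that coincide with a boundary are affected by the choice of the mask; all interior events are counted by every variant.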

5.1.6 Traditional unified event bound

The previous section concludes that the unified event bound is a problem of finding the correct limitations or bounds to count the events. Let us investigate whether it is possible to reformulate the standard tests as given by equation 7 in a unified way. In this section, we study if it is possible to express the floor and ceil function by the unified approach as well.

Lemma 4

(Right-continuous event bound) If \(t \in \mathbb {R}\), the right-continuous event bound function is equal to a sum of Heaviside functions bounded by \(\overline{{\mathbb {H}}}\left( 0\right) \) and \(\overline{{\mathbb {H}}}\left( \infty \right) \) which is equal to the limits of an integral:

Proof

Assume any time point \(t=np+t''\) in the periodic event model. The event bound only changes at the points \(t_n=np\). Therefore, we only have to check what happens when the time t approaches np from the left and from the right side:

Now, we apply Lemma 3 at the last step (Footnote 13). Let \(t'' \in (0,p_{\tau })\), then the number of events is computed by

Note, that if \(t'' \in (0,p_{\tau })\) no event is counted in this interval. Last we have to prove the equality for \(t=np_{\tau }+p_{\tau }\):

In all three cases the number of counted events is equal to the number of events given in the proof of Lemma 1. \(\square \)

After showing that the new approach can express the right-continuous event bound, we check how the left-continuous event bound function can be described:

Lemma 5

(Left-continuous event bound function) Assume \(t, t', t'' \in \mathbb {R}^{+}\). The left-continuous event bound is equal to an integral over Dirac deltas bounded by \(\underline{{\mathbb {H}}}\left( t'-0\right) =\underline{{\mathbb {H}}}\left( t'\right) \) and \(\underline{{\mathbb {H}}}\left( t-t'\right) \):

Proof

Assume any time point \(t=np_{\tau }+t''\) in the periodic event model. Again we check how many events are counted at \(t=np_{\tau }\), \(t=np_{\tau }+t''\) and \(t=np_{\tau }+p_{\tau }\). If \(t=np_{\tau }\) then \(t''= 0\) and the number of events is given by

Now let \(t'' \in (0,p_{\tau })\), then the number of events is computed by

Last, we have to prove the equality for \(t=np_{\tau }+p_{\tau }\):

In all three cases the number of counted events is equal to the number of events given in the proof of Lemma 1. \(\square \)
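The case distinction used in these proofs (exactly at a multiple of the period, strictly between two multiples, and at the next multiple) can be checked by brute force. The sketch below is not the paper's equation; it only verifies two elementary identities for events at \(n \cdot p\) with \(n \ge 0\), zero offset and integer time: counting on the closed interval \([0,t]\) gives \(\lfloor t/p \rfloor + 1\), and counting on the right-open interval \([0,t)\) gives \(\lceil t/p \rceil \). All function names are hypothetical.

```python
def count_closed(t: int, p: int) -> int:
    """Brute-force number of events n*p in the closed interval [0, t]."""
    return sum(1 for n in range(t // p + 2) if n * p <= t)


def count_right_open(t: int, p: int) -> int:
    """Brute-force number of events n*p in the right-open interval [0, t)."""
    return sum(1 for n in range(t // p + 2) if n * p < t)


def floor_form(t: int, p: int) -> int:
    """Closed form floor(t / p) + 1."""
    return t // p + 1


def ceil_form(t: int, p: int) -> int:
    """Closed form ceil(t / p), written with integer floor division."""
    return -(-t // p)


if __name__ == "__main__":
    p = 5
    for t in (0, 3, 5, 7, 10):
        print(t, count_closed(t, p), floor_form(t, p),
              count_right_open(t, p), ceil_form(t, p))
```

The two counts differ by one exactly when t is a multiple of the period, which is where the choice between the upper and the lower Heaviside function matters.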

We can conclude from the previous considerations that it should be possible to formulate the unified event bound by using the ceil or floor operators. As the unified event bound has to replace the left-continuous event bound in most timing points, we construct the unified event bound with the floor operator:

Theorem 2

(Unified event bound with floor operator) Assume the interval \(\varDelta _{a,b}=[t_a, t_b)\):

and in the periodic model

is equal, in and only in the interval \(\varDelta _{a,b}=[t_a,t_b)\), to the unified event bound function given in Eq. 47. Note that if \(t_a < 0\) and \(t > t_b\), Eqs. 47 and 87 are not equal.

Proof

For simplification and compatibility with related work we prove this only for the periodic event model. If \(t_a \le t < t_b\) then \(\overline{{\mathbb {H}}}\left( t-t_a\right) =1\) and \(\underline{{\mathbb {H}}}\left( t_b-t\right) =1\). Then

and if \(t \ge t_b\) then \(\overline{{\mathbb {H}}}\left( t-t_a\right) =1\) and \(\underline{{\mathbb {H}}}\left( t_b-t\right) =0\)

According to Lemma 4, Eq. 59 is equal to Eq. 89 if \(t < t_b\) and Eq. 74 is equivalent to 90 if \(t \ge t_b\). \(\square \)

If we want to express the traditional event bound used in the demand bound test, we can write \(t'=\varDelta _{0,t_b}=[0, t_b)\), and therefore

One may remark that Theorem 1 is hard to compute numerically. However, Theorem 2 shows that this can easily be done in the traditional way, just by modifying the well-known event bound. Therefore the new approach is a theoretical improvement of the previous related work and extends the theory. It is important to recognize that Eq. 91 does not hold for \(t \ge t_b + p\). In this case, both functions are not equal anymore. It should be easy to show that a fully compatible unified event bound with the floor operation exists. However, the traditional related work cannot limit the number of events by a given number of events k. In traditional notation, we always assume \(k=\infty \). Table 1 summarizes the results (Footnote 14).

6 Unified analysis of real-time systems

In this section, we consider how the unified event bound can be used to solve real-time analysis problems. As the new event model is more expressive than the traditional model, we will present some impressive results. For the first time in real-time analysis, it is possible to formulate the bound tests and the response time analysis as different expressions of the same mathematical approach, in static as well as in dynamic scheduling. Furthermore, it is possible to model additional conditions on task scheduling without modifying the structure of our analysis equation. Therefore it is easy to derive variants to model bursty or hierarchical event patterns or hierarchical schedulers (Footnote 15). The first step in this section is to investigate the relationship between digital signal processing and real-time analysis. Then we consider some useful definitions and preliminaries to derive the analysis equations for static and dynamic bound tests and response time analysis from only two axioms. In the end, we discuss the results in relation to previous work.

6.1 A general event model: The event spectrum

Simple event models become complex for bursty events. Different solutions address this problem [2, 48]. However, both approaches are not intuitive and require different models to describe the synchronization of events. The two papers solve the problem in different ways but fail to give mathematical or formal foundations for their solutions. Applying the mathematical toolset developed in Sect. 5, a hierarchical event stream as described by [2] can be derived mathematically. Assume two independent event densities: one event density with a small period and a second one with a much larger one. Both densities together form a new bursty event stream if they are synchronized. The convolution of Dirac combs computes the composition of two event densities. Therefore, synchronization in real-time scheduling can be modelled by

Theorem 3

(Hierarchical event density composition) Any hierarchical event stream is a composition of two flat event densities and can be computed by the convolution of the two event densities:

Proof

To make the proof easy to follow, we assume \(\delta (\tau -t)_{\epsilon } = \sum \limits _{\epsilon \in {\mathcal {E}}} \! \quad \sum \nolimits _{n=0}^{k-1}{\delta (t - \phi _{{\epsilon }} - np_{{\epsilon }})}\) and \(t_n=\phi _{{\epsilon _1}} + np_{{\epsilon _1}}\) and \(t_m=\phi _{{\epsilon _2}} + mp_{{\epsilon _2}}\):

Substitute \(\xi = \tau -t_n\) and \(d\tau = d\xi \):

For \(\xi \ne 0\), we obtain the trivial solution. The only nontrivial solution of the last equation is for \(\xi =0\): We get \(\int \limits _{-\infty }^{\infty } \! \delta (0)_{\epsilon _1} \, \mathrm {d}\xi = 1\) and therefore

In other words, the product of the Dirac deltas becomes nonzero exactly if \(\tau -t_n = 0\) and \(\tau +t_m = t\). Therefore the theorem holds. \(\square \)
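The result of the proof, that convolving a delta at \(t_n\) with a delta at \(t_m\) yields a delta at \(t_n + t_m\), can be illustrated for finite combs. The sketch assumes integer time and two finite event tuples; the names `comb`, `convolve_combs` and `discrete_convolution` are hypothetical. A plain discrete convolution on a unit time grid serves as a cross-check.

```python
def comb(phi: int, p: int, k: int):
    """Request times of a finite Dirac comb: phi + n*p for 0 <= n < k."""
    return [phi + n * p for n in range(k)]


def convolve_combs(outer, inner):
    """Convolution of two combs: one delta at every pairwise sum of positions."""
    return sorted(t_n + t_m for t_n in outer for t_m in inner)


def discrete_convolution(x, y):
    """Textbook discrete convolution of two sequences, used as a cross-check."""
    out = [0] * (len(x) + len(y) - 1)
    for i, xi in enumerate(x):
        for j, yj in enumerate(y):
            out[i + j] += xi * yj
    return out


def to_grid(times, length: int):
    """Unit-step grid with a 1 at every request time."""
    grid = [0] * length
    for t in times:
        grid[t] += 1
    return grid


if __name__ == "__main__":
    outer = comb(0, 100, 2)  # two bursts, 100 time units apart
    inner = comb(0, 5, 3)    # three events per burst, 5 time units apart
    burst_stream = convolve_combs(outer, inner)
    print(burst_stream)
    grid = discrete_convolution(to_grid(outer, 101), to_grid(inner, 11))
    print([i for i, v in enumerate(grid) if v])
```

The outer comb fixes where the bursts start and the inner comb fixes the pattern inside each burst, which is how a hierarchical event stream arises from two flat ones.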

Definition 16

(Event spectrum) As a result of the previous theorem, the following 3-tuple describes hierarchical and synchronized event densities:

with the hierarchical event density or event spectrum

The event spectrum is the most general form of an event model. An event spectrum can express all other known event models. According to Lemma 2, the event bound is calculated only by integrating the event spectrum density. Additionally, Theorem 3 gives us the possibility to compute composite event models during analysis. To the best of our knowledge, no previous work in any known real-time analysis technique covers this aspect.

6.2 Task model: the request bound

The previously presented mathematical framework allows the formulation of advanced analysis techniques. Next, we discuss how to integrate the generalized multiframe model and how easily interfering request bounds can be constructed to describe different scheduling policies.

6.2.1 Generalized request bound

The new approach of describing events with Dirac deltas is compelling: The advantage compared to established techniques is that the Dirac comb of Definition 12 addresses each event separately, and therefore each event may have different properties. As a result, the model allows assigning different execution times to different events without any additional effort. In the established analysis, the request bound is given by multiplying the event bound by the worst-case execution time of the task. However, because the unified event bound makes it easy to address each event separately, the multiframe model [34] and the generalized multiframe model [8] integrate easily into the new approach. Formulating the event bound and the request bound in a unified way allows addressing each job with a separate execution time. Therefore, it is possible to model task sets with complex execution time behaviour. Often, however, it is not necessary to assign an individual execution time to each job. In this case, the execution time vector contains fewer elements than the task has events. The execution time is then addressed by restricted access to the given vector: The length of the vector bounds the access, which can be described by \(n \mod |{\mathcal {C}}^n_{\tau ,\epsilon }|\) as also given in the multiframe model.
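The restricted access to the execution time vector can be sketched as follows. The example is an illustration under assumptions: a single periodic event tuple, integer time, the analysis interval \([0,t)\) and the cyclic index \(n \bmod |{\mathcal {C}}|\) described above. The function name `request_bound` and the sample values are hypothetical.

```python
def request_bound(t: int, phi: int, p: int, k: int, exec_times) -> int:
    """Execution time requested in [0, t) by the events phi + n*p, 0 <= n < k.

    Job n takes its execution time from exec_times[n mod len(exec_times)].
    """
    total = 0
    for n in range(k):
        if 0 <= phi + n * p < t:
            total += exec_times[n % len(exec_times)]
    return total


if __name__ == "__main__":
    # Two alternating execution times, as in a multiframe task.
    print(request_bound(25, phi=0, p=10, k=5, exec_times=[3, 1]))
    # A vector of length one reproduces the classical product of count and WCET.
    print(request_bound(25, phi=0, p=10, k=5, exec_times=[2]))
```

A vector of length one corresponds to the homogeneous task of Sect. 5.1.1, so the classical request bound appears as a special case of this sketch.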

Definition 17

(Execution time vector) The execution time vector introduced by [34] of k different execution times of a task is given by

Note the style of the notation: The idea is to address each component of the vector by n. If we want to address each event separately, it is no longer possible to use the notation given in related work for defining request and demand bound functions. Addressing different events and jobs in one equation requires writing an integral and two sum symbols every time. Therefore, it is necessary to introduce a short-form notation to simplify the writing and reading of event and request bounds. Based on Einstein’s well-known shorthand notation [20] (Footnote 16), it is possible to define a shorthand notation for an event and request bound that allows us to address each event or job of a given task separately:

Definition 18

(Short form notation for request bounds) Assuming \(\delta (t'-\phi _{\epsilon }-np_{\epsilon })^{n \le k}_{\tau ,\epsilon } \cdot {\mathcal {C}}_{\tau ,\epsilon }[n]\) is a short-hand notation for the corresponding double sum over the event tuples and the event index n, and \({\mathcal {C}}\) is a vector that contains different execution times for different jobs, it is possible to write

To complete the integration of the generalized multiframe model introduced by [8] in this work, we need to redefine the concept of deadlines:

Definition 19

(Deadline Vector) The deadline vector is given by

6.2.2 The request bound of interfering jobs

The request bound function, as defined in general, does not distinguish between task priorities. Therefore it sums the requested execution times of all tasks. It is necessary to compute the interference of jobs in order to differentiate between the requests of higher-priority jobs, which interrupt and interfere with a given job, and other jobs that have no impact on the final response. The following section considers static as well as dynamic priorities. We look at how the same approach can solve both problems. Additionally, we find a unified solution for hierarchical scheduling of both algorithms, which can be used in general to describe one of the two algorithms as well as a combination of them. Finally, the functions given in this section solve the problems stated by [43] and [12]. First, we formulate an abstract interfering request bound which can easily be adapted to different scheduling criteria:

Theorem 4

(Interference request bound) Assume any criterion \(\square \), where \(\blacksquare \ge \square \) denotes a higher or equal priority, so that any job of \(\tau '_\blacksquare \) interferes with \(\tau _\square \). The execution time of the interfering jobs is selected by masking the request bound:

Proof

The Heaviside function as given by Definition 13 returns 1 if \(\blacksquare _{\tau '} - \square _{\tau } \ge 0\), therefore

If the priority criterion of task \(\tau '\) is higher than the criterion of task \(\tau \), then the task \(\tau '\) interrupts \(\tau \), the Heaviside function becomes 1, and the request of the higher priority task is added to the request bound. If the criterion of task \(\tau '\) is smaller than the one of task \(\tau \), the Heaviside function is equal to 0, modelling no interrupt. \(\square \)

The idea of describing the interference of jobs is generalized to a set of different relations \({\mathbb {H}}: \mathbb {R}\rightarrow \{0,1\}\) mapping any difference of real or integer numbers to boolean values:

Definition 20

(Heaviside relation) Again, assume any criterion \(\square \). The main relations can be computed by the following Heaviside functions:

The Kronecker delta \(\delta _{{\blacksquare },{\square }}\) is a well known short-form writing if criteria are equal.

Definition 21

(Task scheduler) Any Boolean equation of Heaviside relations models a scheduler in real-time analysis because it defines whether two tasks interfere or not. For any Heaviside relation \(\phi \in \{{\mathbb {H}}_{\blacksquare = \square }, {\mathbb {H}}_{\blacksquare \le \square }, {\mathbb {H}}_{\blacksquare \ge \square }, {\mathbb {H}}_{\blacksquare < \square }, {\mathbb {H}}_{\blacksquare > \square }\}\), the function \({\mathbb {S}}: \varGamma ^2 \rightarrow \{0,1\}\) represents a task scheduler which describes the interference of two tasks:

Note that the operations \(\max \) and \(\min \) represent the logical or and the logical and on the integers 0 and 1.
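To make the role of \(\max \) and \(\min \) concrete, the following sketch composes a scheduler predicate from 0/1-valued relations. It is an illustration under assumptions: the helper names (`h_eq`, `h_gt`, `h_ge`, `static_scheduler`) are hypothetical, a larger number is assumed to mean a higher priority, and ties are assumed to be broken in favour of the job that is requested no later than the considered job.

```python
def h_eq(a, b):
    """Relation a == b as an integer in {0, 1}."""
    return 1 if a == b else 0


def h_gt(a, b):
    """Relation a > b as an integer in {0, 1}."""
    return 1 if a > b else 0


def h_ge(a, b):
    """Relation a >= b as an integer in {0, 1}."""
    return 1 if a >= b else 0


def static_scheduler(prio_other, prio, req_other, req):
    """Return 1 if the other task interferes with the considered task.

    max acts as logical or, min acts as logical and:
    (prio_other > prio) or (prio_other == prio and req_other <= req).
    """
    return max(h_gt(prio_other, prio),
               min(h_eq(prio_other, prio), h_ge(req, req_other)))
```

Because every relation returns 0 or 1, nesting `max` and `min` is enough to express any Boolean combination without leaving integer arithmetic.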

Example 5

(Static task scheduler) Assume static priorities as given in Definition 6. Two jobs interfere if

In deadline monotonic scheduling the priority is not needed, and it is possible to write directly

\({\mathbb {H}}_{d_{\tau '} < d_{\tau }}\) and \({\mathbb {H}}_{d_{\tau '} = d_{\tau }}\) are disjoint, therefore

If the upcoming analysis using interfering request bounds should support arbitrary deadlines, we have to consider jobs with the same static priority. In that case, jobs with the same priority interfere if the requested job starts later than the interfering job.Footnote 17

Example 6

(Dynamic task scheduler) In dynamic scheduling the job with the earliest absolute deadline is scheduled. Therefore we only have to change the relative deadline to the absolute deadline in Definition 5. In this case, the consideration of the request time is mandatory because system designers and programmers cannot guarantee distinct absolute deadlines even if the specified relative deadlines are different.

Note that, in contrast to the static task scheduler, the absolute deadline of each job must be considered.Footnote 18 \({\mathbb {H}}_{D^n_{\tau '} < D^n_{\tau }}\) and \({\mathbb {H}}_{D^n_{\tau '} = D^n_{\tau }}\) are disjoint, therefore

The first step in the discussion is the formulation of the interfering request bound for static schedulers and task priorities specified by fixed numbers:

Corollary 1

(Interference request bound in static scheduling) Assume any static scheduler with a priority \(\pi _{\tau }\) assigned to each task. If task \(\tau ' \) has a higher priority than task \(\tau \) and this is expressed by a larger number, \(\pi _{\tau '} > \pi _{\tau }\), then the interference request bound \(\mathbb {R}^{\pi _{\tau '} \ge \pi _{\tau }}: \varGamma ^2 \times \mathbb {R}^2 \rightarrow \mathbb {R}\) is given by

Conversely, if task \(\tau '\) has a higher priority than task \(\tau \) and this is expressed by a smaller number, \(\pi _{\tau '} < \pi _{\tau }\), then the priority difference in the equation changes its sign. If we assume deadline monotonic scheduling, the interfering request bound can express this directly:

Proof

Consider Theorem 4: For \(\pi _{\tau '} > \pi _{\tau }\) the Heaviside function \(\underline{{\mathbb {H}}}\left( \pi _{\tau '} - \pi _{\tau }\right) =1\), and the execution request of task \(\tau '\) is added to the interference task set of \(\tau \). If two priorities are equal, the job with the earliest request is scheduled. The interference mask becomes one if \(t^r_{\tau } - t^r_{\tau '} \ge 0\). The proof of the other DMS equation is obvious. \(\square \)

According to this well-known definition of absolute deadlines, the interfering request bound in dynamic scheduling can be formulated by:

Corollary 2

(Interference request bound in dynamic scheduling) The interfering request bound \(\mathbb {R}^{D^n_{\tau '} \ge D^n_{\tau }}: \varGamma ^2 \times \mathbb {R}^2 \rightarrow \mathbb {R}\) of higher priority tasks in dynamic scheduling is

Proof

Assume dynamic scheduling and a given job \(\tau _{\epsilon ,n}\). The request bound of this job is the sum of all execution times of jobs \(\tau '_{\epsilon ,n}\) with an absolute deadline shorter than the deadline of job \(\tau _{\epsilon ,n}\). According to Theorem 4, the difference \(D^n_{\tau } - D^n_{\tau '}\) is positive if \(D^n_{\tau '} < D^n_{\tau }\), and in this case \(\underline{{\mathbb {H}}}\left( D^n_{\tau } - D^n_{\tau '}\right) =1\). If \(D^n_{\tau '} > D^n_{\tau }\), the function \(\underline{{\mathbb {H}}}\left( D^n_{\tau } - D^n_{\tau '}\right) =0\). This means that \(\underline{{\mathbb {H}}}\left( D^n_{\tau } - D^n_{\tau '}\right) \) selects the higher-priority jobs in dynamic scheduling. This approach models a scheduling where any task instance with an absolute deadline smaller than the absolute deadline of the considered task instance interferes with the considered task. However, what happens if two instances have the same absolute deadline? In this case, a tie-breaking condition is needed. The instance with the earlier request is scheduled to avoid scheduling overhead.Footnote 19 This behaviour is modelled by \(\delta _{{D^n_{\tau '}},{D^n_{\tau }}} \cdot \overline{{\mathbb {H}}}\left( t^r_{\tau } - t^r_{\tau '}\right) \). \(\square \)
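The selection logic of this proof can be written down as a small predicate. This is a sketch under stated assumptions: \(\underline{{\mathbb {H}}}\) is taken to be 0 at the origin and \(\overline{{\mathbb {H}}}\) to be 1 at the origin, which is what the proof implies but is not restated here from Definition 13. The function names and the argument order are hypothetical.

```python
def h_lower(x):
    """Lower Heaviside function: 1 for x > 0, else 0 (assumed convention)."""
    return 1 if x > 0 else 0


def h_upper(x):
    """Upper Heaviside function: 1 for x >= 0, else 0 (assumed convention)."""
    return 1 if x >= 0 else 0


def delta(a, b):
    """Kronecker delta: 1 if a == b, else 0."""
    return 1 if a == b else 0


def edf_mask(d_other, d, req_other, req):
    """Return 1 if the other job interferes under earliest deadline first.

    First term: the other job has a strictly earlier absolute deadline.
    Second term: equal deadlines, and the other job is requested no later.
    The two terms exclude each other, so the sum is always 0 or 1.
    """
    return h_lower(d - d_other) + delta(d_other, d) * h_upper(req - req_other)
```

Multiplying the result by the execution request of the other job yields that job's contribution to the interfering request bound.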

In real-time scheduling theory, the structure of the equations usually changes with every new problem. As we demonstrated, in the unified theory the request bound of interfering jobs can be expressed by just one equation by choosing the correct parameters of the Heaviside mask. It does not matter whether we consider static or dynamic scheduling. Having just one theoretical approach is a decisive advantage compared to related work: because the structure of the equation does not change with the scheduling, it is possible to construct combined schedulers and to formulate their analysis by constructing new equations. As an example, let us consider a hierarchical static and dynamic scheduler, that is, a mixed scheduler.

Theorem 5

(Interference request bound in hierarchical scheduling) Assume a task set and assign a static priority to each task. Tasks with different priorities are then scheduled statically, and tasks with equal priority are scheduled dynamically according to their deadlines. In the case of such hierarchical scheduling, the interfering request bound \(\mathbb {R}^{\tau ' \ge \tau } : \varGamma ^2 \times \mathbb {R}^2 \rightarrow \mathbb {R}\) is

Proof

If a task has a higher priority than the considered task, the function \(\underline{{\mathbb {H}}}\left( \pi _{\tau '} - \pi _{\tau }\right) =1\), else it is 0. If the priorities of the tasks are equal and the absolute deadline of the interfering task is smaller than the absolute deadline of the considered task, the scheduling is described by \(\delta _{{\tau },{\tau '}} \cdot [\;\underline{{\mathbb {H}}}\left( D^n_{\tau } - D^n_{\tau '}\right) + \delta _{{D^n_{\tau '}},{D^n_{\tau }}} \cdot \underline{{\mathbb {H}}}\left( t^r_{\tau '} - t^r_{\tau }\right) \;]\), as we already know from Corollary 2. This function is 1 only if the absolute deadline of a potential interfering task \(\tau '\) is shorter than the deadline of the considered task \(\tau \), or if both deadlines are equal and the request time of the interfering task \(\tau '\) is earlier than the request time of the considered task \(\tau \). Therefore, the selection criterion that identifies an interfering task evaluates to 0 or 1 depending on the priority or the absolute deadline of the task. The function \(\max (\underline{{\mathbb {H}}}\left( \pi _{\tau '} - \pi _{\tau }\right) , \overline{{\mathbb {H}}}\left( D^n_{\tau } - D^n_{\tau '}\right) )=1\) for the argument combinations \(\max (0,1)\), \(\max (1,0)\) or \(\max (1,1)\). This implements an or-operation between static and dynamic scheduling. \(\square \)
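The or-combination of the static and the dynamic criterion can be sketched as follows. The sketch rests on several assumptions: a larger number means a higher priority, the lower Heaviside function is 0 at the origin and the upper one is 1 at the origin, and the tie-breaking term on the request times follows the form stated in the proof of Corollary 2. All function names are hypothetical.

```python
def h_lower(x):
    """Lower Heaviside function: 1 for x > 0, else 0 (assumed convention)."""
    return 1 if x > 0 else 0


def h_upper(x):
    """Upper Heaviside function: 1 for x >= 0, else 0 (assumed convention)."""
    return 1 if x >= 0 else 0


def delta(a, b):
    """Kronecker delta: 1 if a == b, else 0."""
    return 1 if a == b else 0


def hierarchical_mask(prio_other, prio, d_other, d, req_other, req):
    """Return 1 if the other job interferes under the hierarchical scheduler.

    Static level: the other task has a strictly higher priority.
    Dynamic level: equal priority, then earlier absolute deadline, with
    ties on the deadline broken by the request time.
    max combines both levels as a logical or.
    """
    static_part = h_lower(prio_other - prio)
    dynamic_part = delta(prio_other, prio) * (
        h_lower(d - d_other) + delta(d_other, d) * h_upper(req - req_other)
    )
    return max(static_part, dynamic_part)
```

A pure static scheduler results if all priorities are distinct, and a pure earliest deadline first scheduler results if all priorities are equal.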

6.3 Analysis preliminaries

We need some additional assumptions to derive bound tests or a response time analysis based on the interfering request bound. In this section, we introduce the concept of the remaining load to compute the backlog, that is, the load which is not processed by a processor during a given time interval. Besides, we give some useful definitions related to a generalized analysis framework.

Theorem 6

(Remaining load) The remaining load of a job that is interfered with by other jobs is the computational demand of a given time interval [0, t) which cannot be computed by the processor during this time interval. Assume that the timing interval is \(\varDelta ^{t}_{0}=t\); then the remaining load \(\mathbb {L}^{\tau ' \ge \tau } : \varGamma ^2 \times \mathbb {R}\rightarrow \mathbb {R}\) is:

Proof

If \(\forall t \in \mathbb {R}: \mathbb {L}^{\tau ' \ge \tau }_{\tau ,\tau '}(t) \ge 0\) and \(\mathbb {L}^{\tau ' \ge \tau }_{\tau ,\tau '}(t) = \mathbb {R}^{\tau ' \ge \tau }_{\tau , \tau '} (t, t)-t\), then

Because \(\mathbb {L}^{\tau ' \ge \tau }_{\tau ,\tau '}(t)-\mathbb {L}^{\tau ' \ge \tau }_{\tau ,\tau '}(s) \le \mathbb {L}^{\tau ' \ge \tau }_{\tau ,\tau '}(t-s)\) ([13], p. 7) the following equation holds:

At this point, we can carefully review the properties of the unified event bound as given by Theorem 1. Note that the domain of this function is a compact space: because we defined the limits of the integral as an open interval, the domain t is compact. If we define any analysis in a bounded domain, then the unified event bound, and therefore the request and demand bound, are compact. Bounding periodic task sets to their hyper-period bounds the timing interval as well. Therefore, the supremum of the function is equal to its maximum: \(\sup = \max \). Then

\(\square \)

The proof builds on the leaky bucket algorithm. If no load is requested from a processor, the remaining load is equal to 0. Only if service is requested does the processor execute it with the rate of t. The above proof is directly adapted from the network calculus as given by ([13], p. 10 f.). Applying the leaky bucket approach from network calculus to the problem of the remaining load in real-time analysis is elegant and leads to a compact result. Because we defined a compact unified event, request and demand bound by using an integral, and because we model its limits by a Heaviside function, we can now combine the results of the network calculus and the real-time calculus with the work done in established scheduling theory. If supremum and infimum become maximum and minimum in all cases, we can further use effective maximization and minimization techniques supported by numerical mathematics, and therefore it is easy to apply this theory in computer algebra systems or numerical math tools.
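The leaky bucket idea can be checked numerically in discrete time. The sketch below is not the formula of Theorem 6; it assumes unit-length time slots, a processor that serves one unit of load per slot, and a hypothetical list `arrivals` that holds the load requested in each slot. It compares the step-by-step backlog recursion with the maximum form known from network calculus, in which the supremum is taken over finitely many points and is therefore a maximum.

```python
from itertools import accumulate


def backlog_recursive(arrivals):
    """Backlog after each slot: add the arriving load, drain one unit, clip at 0."""
    backlog = 0.0
    trace = []
    for load in arrivals:
        backlog = max(0.0, backlog + load - 1.0)
        trace.append(backlog)
    return trace


def backlog_max_form(arrivals):
    """Backlog after n slots as max over s in 0..n of A(n) - A(s) - (n - s).

    A(k) is the cumulative load requested in the first k slots.
    """
    cumulative = [0.0] + list(accumulate(arrivals))
    trace = []
    for n in range(1, len(cumulative)):
        trace.append(max(cumulative[n] - cumulative[s] - (n - s)
                         for s in range(n + 1)))
    return trace


# Hypothetical request pattern: bursts of 3 and 2 units of load.
arrivals = [3.0, 0.0, 0.0, 2.0, 0.0]
recursive = backlog_recursive(arrivals)
max_form = backlog_max_form(arrivals)
```

Both functions return the same sequence for this input, which illustrates why a remaining load defined through a maximum behaves like the backlog of a leaky bucket.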

Definition 22

(Average load) Given any time interval [a, b]Footnote 20, the average load \(U: \varGamma \times \mathbb {R}\rightarrow \mathbb {R}\) is the mean value of the requested load related to the interval. The average load of a task set is the sum of the average loads of each task.

Lemma 6

(Average load by the unified request bound) The average load in any time interval [a, b) of a task set on a processor is given by

and in the special case in the interval [0, t)

If only tasks with the same or a higher priority should be considered, we use

and

for the interval [0, t).

Proof

The average load is the load of the requested jobs in any interval related to the duration of this interval. In some cases, there is some load left from previous intervals. This results in an additional load:

Note that the computation of the utilization requires a summation during [a, b). Therefore, the request bound is given by \(\mathbb {R}^{}_{\varGamma } (\varDelta ^{b}_{a}, \varDelta ^{b}_{a})\). Let us now consider the utilization in the interval [0, t):

\(\square \)
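As a numerical illustration of the average load, the following sketch divides the requested load of an interval, plus any load left over from earlier intervals, by the interval length. It uses the classic ceiling-based request bound of periodic tasks as a stand-in for the unified request bound, which is an assumption made only to keep the example self-contained. The names `request_bound` and `average_load` and the task tuples are hypothetical.

```python
import math


def request_bound(tasks, length):
    """Classic periodic request bound: sum of ceil(length / period) * wcet.

    `tasks` is a list of (period, worst_case_execution_time) pairs.
    This stands in for the unified request bound of the paper.
    """
    return sum(math.ceil(length / period) * wcet for period, wcet in tasks)


def average_load(tasks, a, b, remaining=0.0):
    """Mean requested load in [a, b), including load remaining from before a."""
    length = b - a
    if length <= 0:
        raise ValueError("interval must have positive length")
    return (remaining + request_bound(tasks, length)) / length


# Two hypothetical tasks: (period 4, wcet 1) and (period 6, wcet 2).
tasks = [(4, 1.0), (6, 2.0)]
load = average_load(tasks, 0, 12)                      # 7 units of load in 12 time units
load_with_backlog = average_load(tasks, 0, 12, remaining=1.0)
```

An average load above 1 over some interval means that more load is requested than the processor can serve in that interval.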

6.4 Unified bound tests analysis

Bound tests build on utilization bounds. Therefore we have to check whether the utilization of a task set never exceeds 1, that is, \(100\%\). A utilization bound that is given for any time interval and is based on the interference request bound and the average load allows bound tests for static, dynamic, and hierarchical scheduling.

Theorem 7

(Bound test analysis for static and dynamic scheduling) Assume a task set and a hierarchical scheduler. If a task has a higher priority than another task, it executes first, and if two tasks have the same priority, they are scheduled by earliest deadline first. A given independent task set executed by one computing resource with a hierarchical static and dynamic scheduler passes the bound test if and only if

Proof

The bound test can be derived from the utilization boundFootnote 21

and in the special case in the interval [0, t)

A task set is feasible if, for every task and for all \(t \in [0,{\mathcal {P}}_{\varGamma }]\), the utilization satisfies \(u_{\tau }(t) \le 1\). If \(t_a=t_0=0\) then \(\mathbb {L}(0) = 0\) and thereforeFootnote 22

Now we separate all higher priority tasks from tasks with the same priority and multiply by t:

or

Note that we consider tasks with the same priority and tasks with higher priorities in independent terms because we want to derive a bound test for static as well as dynamic scheduling. Therefore we have to consider two cases:

A. If \(\pi _{\tau '}=\pi _{\tau }\) then \(\delta _{{\pi _{\tau '}},{\pi _{\tau }}}=1\) and \(\underline{{\mathbb {H}}}\left( \pi _{\tau '}-\pi _{\tau }\right) =0\). The bound test for dynamic scheduling is then given by

$$\begin{aligned}&\forall t \in {\mathcal {P}}_{\varGamma }, \forall \tau \in \varGamma : \qquad \quad \sum \limits _{\tau ' \in \varGamma } \! \mathbb {E}_{\tau '} (t-d_{\tau '}, {\mathcal {P}}_{\varGamma }) \cdot c_{\tau '}^{+} \nonumber \\&\qquad = \sum \limits _{\tau ' \in \varGamma } \! \mathbb {R}_{\tau '} (t-d_{\tau '}, {\mathcal {P}}_{\varGamma }) \le t \end{aligned}$$(144)

In this case \(\tau ' = \tau \) and therefore

$$\begin{aligned}&\forall t \in {\mathcal {P}}_{\varGamma }: \sum \limits _{\tau \in \varGamma } \! \mathbb {E}_{\tau } (t-d_{\tau }, {\mathcal {P}}_{\varGamma }) \cdot c_{\tau }^{+} \nonumber \\&\quad = \sum \limits _{\tau \in \varGamma } \! \mathbb {R}_{\tau } (t-d_{\tau }, {\mathcal {P}}_{\varGamma }) \le t \end{aligned}$$(145)

which is equal to the processor demand test or Problem 2.

B. Consider \(\pi _{\tau '} \ne \pi _{\tau }\): Now \(\delta _{{\pi _{\tau '}},{\pi _{\tau }}}=1\) only for the considered task \(\tau \) itself, in all other cases \(\delta _{{\pi _{\tau '}},{\pi _{\tau }}}=0\), and \(\underline{{\mathbb {H}}}\left( \pi _{\tau '}-\pi _{\tau }\right) =1\) if a task \(\tau '\) has a higher priority than the considered task \(\tau \). Then we get