Abstract

We study biased random walk on subcritical and supercritical Galton–Watson trees conditioned to survive in the transient, sub-ballistic regime. By considering offspring laws with infinite variance, we extend previously known results for the walk on the supercritical tree and observe new trapping phenomena for the walk on the subcritical tree which, in this case, always yield sub-ballisticity. This is contrary to the walk on the supercritical tree which always has some ballistic phase.

1 Introduction

In this paper, we investigate biased random walks on subcritical and supercritical Galton–Watson trees. These are a natural setting for studying trapping phenomena as dead-ends, caused by leaves in the trees, slow the walk. These models can be used to approach more difficult problems concerning biased random walks on percolation clusters (as studied in [11, 13, 24]) and random walk in random environment (see for example [18, 25]). For a recent review of trapping phenomena and random walk in random environment we direct the reader to [3] which covers recent developments in a range of models of directionally transient and reversible random walks on underlying graphs such as supercritical GW-trees and supercritical percolation clusters.

For supercritical GW-trees with leaves, it has been shown in [21] that, for a suitably large bias away from the root, the dead-ends in the environment create a sub-ballistic regime. In this case, it has further been observed in [4] that, if the offspring distribution has finite variance, then the walker follows a polynomial escape regime but cannot be rescaled properly due to a certain lattice effect. (In [5, 15] it is shown that, in a related model where the conductance along each edge is chosen randomly according to a distribution satisfying a certain non-lattice assumption, the tail of the trapping time obeys a pure power law and the rescaled walk converges in distribution.) Here we show that, when the offspring law has finite variance, the walk on the subcritical GW-tree conditioned to survive experiences similar trapping behaviour to the walk on the supercritical GW-tree shown in [4]. However, the main focus of the article concerns offspring laws belonging to the domain of attraction of some stable law with index \(\alpha \in (1,2)\). In this setting, although the distribution of time spent in individual traps has polynomial tail decay in both cases, the exponent varies with \(\alpha \) in the subcritical case and not in the supercritical case. This results in a polynomial escape of the walk which is always sub-ballistic in the subcritical case, unlike the supercritical case, which always has some ballistic phase.

We now describe the model of a biased random walk on a subcritical GW-tree conditioned to survive which will be the main focus of the article. Let \(f(s):=\sum _{k=0}^\infty p_ks^k\) denote the probability generating function of the offspring law of a GW-process with mean \(\mu >0\) and variance \(\sigma ^2>0\) (possibly infinite) and let \(Z_n\) denote the nth generation size of a process with this law started from a single individual, i.e. \(Z_0=1\). Such a process gives rise to a random tree where individuals in the process are represented by vertices and undirected edges connect individuals with their offspring.

For a fixed tree T let \(\rho \) denote its root, \(Z_n^T\) the size of the nth generation, \(\overleftarrow{x}\) the parent of \(x \in T\), c(x) the set of children of x, \(d_x:=|c(x)|\) the out-degree of x, d(x, y) the graph distance between vertices x, y, \(|x|:=d(\rho ,x)\) the graph distance between x and the root and \(T_x\) the descendant tree of x. A \(\beta \)-biased random walk on a fixed, rooted tree T is a random walk \((X_n)_{n \ge 0}\) on T which is \(\beta \) times more likely to move to a given child of the current vertex than to its parent (these being the only options). That is, the random walk is the Markov chain started from \(X_0=z\) defined by the transition probabilities

$$\begin{aligned} P^T_z(X_{n+1}=y\,|\,X_n=x)={\left\{ \begin{array}{ll} \frac{1}{1+\beta d_x}, &{} \text {if } y=\overleftarrow{x},\ x\ne \rho ,\\ \frac{\beta }{1+\beta d_x}, &{} \text {if } y\in c(x),\ x\ne \rho ,\\ \frac{1}{d_\rho }, &{} \text {if } y\in c(x),\ x=\rho ,\\ 0, &{} \text {otherwise.} \end{array}\right. } \end{aligned}$$

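As an illustration, the following minimal Python sketch encodes one step of the \(\beta \)-biased walk on a fixed finite tree. The dictionaries `parent` and `children` and the function names are hypothetical choices made for this example, not notation from the paper. From a vertex \(x\ne \rho \) the walk moves to \(\overleftarrow{x}\) with probability \(1/(1+\beta d_x)\) and to each child with probability \(\beta /(1+\beta d_x)\). At the root, which has no parent, it moves to a uniformly chosen child.

```python
import random

def transition_probs(x, parent, children, beta):
    """Return {y: P(X_{n+1} = y | X_n = x)} for the beta-biased walk.

    parent maps every non-root vertex to its parent (the root has no entry);
    children maps a vertex to the list of its children (missing key = leaf).
    """
    kids = children.get(x, [])
    if x not in parent:
        # Root: no parent, so the walk moves to a uniformly chosen child.
        return {y: 1.0 / len(kids) for y in kids}
    d = len(kids)
    probs = {parent[x]: 1.0 / (1.0 + beta * d)}   # step towards the root
    for y in kids:
        probs[y] = beta / (1.0 + beta * d)        # beta times likelier per child
    return probs

def step(x, parent, children, beta, rng=random):
    """Sample X_{n+1} given X_n = x."""
    probs = transition_probs(x, parent, children, beta)
    targets = list(probs)
    return rng.choices(targets, weights=[probs[y] for y in targets])[0]

# Example: root 0 with children 1, 2; vertex 1 has children 3, 4.
parent = {1: 0, 2: 0, 3: 1, 4: 1}
children = {0: [1, 2], 1: [3, 4]}
print(transition_probs(1, parent, children, beta=2.0))  # parent 0.2, each child 0.4
```

Storing the tree as parent and child maps keeps each step proportional to the local degree, which is all the walk needs.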
We use \(\mathbb {P}_\rho (\cdot ):=\int P ^T_\rho (\cdot )\mathbf {P}(\text {d}T)\) for the annealed law obtained by averaging the quenched law \( P ^T_\rho \) over a law \(\mathbf {P}\) on random trees with a fixed root \(\rho \). In general we will drop the superscript T and subscript \(\rho \) when it is clear to which tree we are referring and we start the walk at the root.

We will mainly be interested in GW-trees \(\mathcal {T}\) which survive, that is \(\mathcal {H}(\mathcal {T}):=\sup \{n\ge 0: Z_n>0\}=\infty \). It is classical (e.g. [2]) that when \(\mu >1\) there is some strictly positive probability \(1-q\) that \(\mathcal {H}(\mathcal {T})=\infty \) whereas when \(\mu \le 1\) we have that \(\mathcal {H}(\mathcal {T})\) is almost surely finite. However, it has been shown in [17] that there is some well defined probability measure \(\mathbf {P}\) over f-GW trees conditioned to survive for infinitely many generations which arises as a limit of probability measures over f-GW trees conditioned to survive at least n generations. Henceforth, we assume \(\mathbf {P}\) is this law and \(X_n\) is a random walk on an f-GW-tree conditioned to survive.

The main object of interest is \(|X_n|\), that is, how the distance from the root changes over time. Since the finite branches of the tree are typically small and the walk does not backtrack too far, we shall see that \(|X_n|\) has a strong inverse relationship with the first hitting times \(\Delta _n:=\inf \{m\ge 0:X_m \in \mathcal {Y}, \; |X_m|=n\}\) of levels along the backbone \(\mathcal {Y}:=\{x \in \mathcal {T}:\mathcal {H}(\mathcal {T}_x)=\infty \}\). For much of the paper we will therefore consider these hitting times instead. It will be convenient to consider the walk as a trapping model. To this end we define the underlying walk \((Y_k)_{k\ge 0}\) by \(Y_k:=X_{\eta _k}\), where \(\eta _0:=0\) and \(\eta _k:=\inf \{m>\eta _{k-1}: \; X_m,X_{m-1} \in \mathcal {Y}\}\) for \(k\ge 1\).

When \(X_n\) is a walk on an f-GW tree conditioned to survive for f supercritical (\(\mu >1\)), it has been shown in [21] that \(\nu (\beta ):=\lim _{n \rightarrow \infty }|X_n|/n\) exists \(\mathbb {P}\)-a.s. and is positive if and only if \(\mu ^{-1}<\beta <f'(q)^{-1}\), in which case we call the walk ballistic. Furthermore, although no explicit expression for the speed \(\nu \) is known, [1] uses a description of the invariant distribution of the environment seen from the particle to express the speed in terms of the annealed expectation. This expression coincides with the speed of the walk on a certain regular tree where each vertex has some number of children \(m_\beta \). In particular, it can be seen that \(m_\beta \le \mu \), so the randomness of the tree slows the walk. If \(\beta \le \mu ^{-1}\) then the walk is recurrent because the average drift of Y acts towards the root. When \(\beta \ge f'(q)^{-1}\) the walker expects to spend an infinite amount of time in the finite trees which hang off \(\mathcal {Y}\) (see Fig. 5 in Sect. 10). This slows the walk enough to make it sub-ballistic. In this case, the correct scaling for some non-trivial limit is \(n^{\gamma }\), where \(\gamma \) will be defined later in (1.1). In particular, it has been shown in [4] that, when \(\sigma ^2<\infty \), the laws of \(|X_n|n^{-\gamma }\) are tight. Although \(|X_n|n^{-\gamma }\) does not converge in distribution, \(\Delta _nn^{-1/\gamma }\) converges in distribution under \(\mathbb {P}\) along certain subsequences to some infinitely divisible law. In Sect. 10 we extend this result by relaxing the condition that the offspring law has finite variance and instead requiring only that it belongs to the domain of attraction of some stable law of index \(\alpha >1\).

Recall that the offspring law of the process is given by \(\mathbf {P}(\xi =k):=p_k\). We then define the size-biased distribution by the probabilities \(\mathbf {P}(\xi ^*=k):=kp_k\mu ^{-1}\). It can be seen (e.g. [16]) that the subcritical (\(\mu <1\)) GW-tree conditioned to survive coincides with the following construction. Start with a single special vertex. At each generation every normal vertex gives birth to normal vertices according to independent copies of the original offspring distribution. Every special vertex gives birth to vertices according to independent copies of the size-biased distribution, and one of its children is chosen uniformly at random to be special. Unlike the supercritical tree, which has infinitely many infinite paths, the backbone of the subcritical tree conditioned to survive consists of a unique semi-infinite path from the initial vertex \(\rho \). We call the vertices not on \(\mathcal {Y}\) which are children of vertices on \(\mathcal {Y}\) buds, and the finite trees rooted at the buds traps (see Fig. 2 in Sect. 3). In this paper we consider walks with bias \(\beta >1\), so the walk is transient and only returns to the starting vertex \(\rho \) finitely often. Moreover, we are interested in the case where the trapping times are heavy tailed. Since the traps are i.i.d., the walk then closely resembles a one-dimensional directed trap model as studied in [26].

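The construction above can be sketched in Python for an offspring law with finite support, passed as a list `pmf` with `pmf[k]` equal to \(p_k\). This input format and the function names are hypothetical choices made for illustration. For each of the first `n` backbone vertices the sketch samples \(\xi ^*\) from the size-biased law and attaches \(\xi ^*-1\) buds. For each bud it then records the total size of the independent f-GW trap rooted there. When \(\mu <1\) every trap is almost surely finite, so the loop terminates.

```python
import random

def size_biased_pmf(pmf):
    """P(xi* = k) = k p_k / mu for an offspring pmf given as a list p_0, p_1, ..."""
    mu = sum(k * p for k, p in enumerate(pmf))
    return [k * p / mu for k, p in enumerate(pmf)]

def gw_tree_size(pmf, rng):
    """Total number of vertices of an f-GW tree (almost surely finite when mu < 1)."""
    alive, total = 1, 1
    while alive:
        births = sum(rng.choices(range(len(pmf)), weights=pmf, k=alive))
        total += births
        alive = births
    return total

def sample_branches(pmf, n, rng):
    """Trap sizes at each of the first n backbone vertices rho_0, ..., rho_{n-1}."""
    sb = size_biased_pmf(pmf)
    branches = []
    for _ in range(n):
        xi_star = rng.choices(range(len(sb)), weights=sb)[0]
        buds = xi_star - 1  # one child of a special vertex is itself special
        branches.append([gw_tree_size(pmf, rng) for _ in range(buds)])
    return branches

# Example law with mu = 3/4; the number of buds per backbone vertex is 0 or 1.
print(sample_branches([0.5, 0.25, 0.25], 5, random.Random(1)))
```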
Briefly, the phenomena that can occur in the subcritical case are as follows. When \(\mathbf {E}[\xi \log ^+(\xi )]<\infty \) and \(\mu <1\) there exists a limiting speed \(\nu (\beta )\) such that \(|X_n|/n\) converges almost surely to \(\nu (\beta )\) under \(\mathbb {P}\). Moreover, the walk is ballistic (\(\nu (\beta )>0\)) if and only if \(1<\beta <\mu ^{-1}\) and \(\sigma ^2<\infty \). This essentially follows from the argument used in [21] (to show the corresponding result on the supercritical tree), combined with the fact that, by (2.1) and (5.2), the conditions given are precisely the assumptions needed for the expected time spent in a branch to be finite (see [8] or [9] for further details). The sub-ballistic regime has four distinct phases. When \(\beta \le 1\) the walk is recurrent, and we are not concerned with this case here. When \(1<\beta <\mu ^{-1}\) and \(\sigma ^2=\infty \) the expected time spent in a trap is finite, and the slowing of the walk is due to the large number of buds. When \(\beta \mu >1\) and \(\sigma ^2<\infty \), the expected time spent in a subcritical GW-tree forming a trap is infinite. This is because the strong bias forces the walk deep into traps, and long sequences of movements against the bias are required to escape. In the final case for the subcritical tree (\(\beta \mu >1\), \(\sigma ^2=\infty \)) slowing effects are caused both by the strong bias and by the large number of buds.

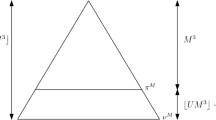

Figure 1 is the phase diagram for the almost sure limit of \(\log (|X_n|)/\log (n)\) as a function of \(\beta \) and \(\mu \). This limit is the leading order polynomial exponent in the scaling of \(|X_n|\). Here the offspring law has stability index \(\alpha \) (which is 2 when \(\sigma ^2<\infty \)), and we define

$$\begin{aligned} \gamma :={\left\{ \begin{array}{ll} \frac{\log (f'(q)^{-1})}{\log (\beta )}, &{} \mu >1,\\ \frac{\log (\mu ^{-1})}{\log (\beta )}, &{} \mu <1, \end{array}\right. } \end{aligned}\tag{1.1}$$

where we note that \(f'(q)\) and \(\mu \) are the mean numbers of offspring of vertices in traps of the supercritical and subcritical trees respectively. Strictly, \(f'(q)\) is not a function of \(\mu \) alone, so the line \(\beta =f'(q)^{-1}\) is not well defined in general. Fig. 1 shows the particular case in which the offspring distribution belongs to the geometric family. It is always the case that \(f'(q)<1\), so such a region always exists; however, its parametrisation depends on the family of distributions.

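The quantities entering the exponent \(\gamma \) are easy to compute numerically. \(\gamma =\log (m^{-1})/\log (\beta )\), where \(m=\mu \) in the subcritical case and \(m=f'(q)\) in the supercritical case. The sketch below finds the extinction probability q of a supercritical law by iterating f from 0, then evaluates \(f'(q)\) and \(\gamma \). The geometric law \(\mathbf {P}(\xi =k)=(1-p)p^k\) is our own example. For it one can check by hand that, when \(\mu >1\), \(q=f'(q)=\mu ^{-1}\). In that family the line \(\beta =f'(q)^{-1}\) is therefore simply \(\beta =\mu \).

```python
import math

def extinction_prob(f, iters=10_000):
    """Smallest fixed point q of the p.g.f. f on [0, 1], via q_{k+1} = f(q_k) from q_0 = 0."""
    q = 0.0
    for _ in range(iters):
        q = f(q)
    return q

def gamma_exponent(m, beta):
    """gamma = log(1/m) / log(beta); m = mu (subcritical) or m = f'(q) (supercritical)."""
    return math.log(1.0 / m) / math.log(beta)

# Example: geometric law P(xi = k) = (1 - p) p^k, mean mu = p / (1 - p) = 2.
p = 2.0 / 3.0
f = lambda s: (1 - p) / (1 - p * s)
f_prime = lambda s: p * (1 - p) / (1 - p * s) ** 2
q = extinction_prob(f)
print(q, f_prime(q))                         # both close to 1/mu = 0.5
print(gamma_exponent(f_prime(q), beta=4.0))  # close to log(2)/log(4) = 0.5
```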
When the offspring law has finite variance, the limiting behaviour of \(|X_n|\) on the supercritical and subcritical trees is very similar. Both have a regime with linear scaling (which is, in fact, almost sure convergence of \(|X_n|/n\)). Both also have a regime with polynomial scaling caused by the same phenomenon of deep traps (which results in \(|X_n|n^{-\gamma }\) not converging). When the offspring law has infinite variance, the bud distribution of the subcritical tree has infinite mean. This causes an extra slowing effect which is not seen on the supercritical tree, and it accounts for the different exponents observed in the two models, as shown in Fig. 1. The walk on the critical (\(\mu =1\)) tree experiences a similar trapping mechanism to the subcritical tree. However, the slowing there is more extreme and belongs to a different universality class, which has been shown in [10] to yield a logarithmic escape rate.

2 Statement of main theorems and proof outline

In this section we introduce the three sub-ballistic regimes in the subcritical case and the one further regime for the infinite variance supercritical case that we consider here. We then state the main theorems of the paper.

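The subcritical tree has bud distribution \(\xi ^*-1\), where \(\mathbf {P}(\xi ^*=k)=kp_k\mu ^{-1}\). This yields the following important property relating the size-biased and offspring distributions: for any function \(\varphi :\mathbb {Z}_+\rightarrow [0,\infty )\),

$$\begin{aligned} \mathbf {E}[\varphi (\xi ^*)]=\frac{\mathbf {E}[\xi \varphi (\xi )]}{\mu }. \end{aligned}\tag{2.1}$$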

Choosing \(\varphi \) to be the identity, we see that the size-biased distribution has finite mean if and only if the offspring distribution has finite variance. This causes a phase transition for the walk that is not seen on the supercritical tree. In the corresponding decomposition of the supercritical tree the traps are subcritical GW-trees, but the number of buds is exponentially tilted and therefore maintains its moment properties.

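The size-biasing relation \(\mathbf {E}[\varphi (\xi ^*)]=\mathbf {E}[\xi \varphi (\xi )]/\mu \) follows from \(\mathbf {P}(\xi ^*=k)=kp_k\mu ^{-1}\) by summing over k. The sketch below checks it with exact rational arithmetic for one arbitrary finite-support offspring law, chosen only as an example. With \(\varphi \) the identity it gives \(\mathbf {E}[\xi ^*]=\mathbf {E}[\xi ^2]/\mu \). This is why \(\xi ^*\) has finite mean exactly when \(\sigma ^2<\infty \).

```python
from fractions import Fraction as F

def expect(pmf, phi):
    """E[phi(X)] for X with P(X = k) = pmf[k]."""
    return sum(p * phi(k) for k, p in enumerate(pmf))

pmf = [F(1, 2), F(1, 4), F(1, 8), F(1, 8)]        # example offspring law, mu = 7/8
mu = expect(pmf, lambda k: k)
size_biased = [k * p / mu for k, p in enumerate(pmf)]

# Check E[phi(xi*)] = E[xi phi(xi)] / mu for a few test functions phi.
for phi in (lambda k: k, lambda k: k * k, lambda k: F(1, k + 1)):
    assert expect(size_biased, phi) == expect(pmf, lambda k: k * phi(k)) / mu

print(expect(size_biased, lambda k: k))  # E[xi*] = E[xi^2] / mu = 15/7
```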
If the offspring law belongs to the domain of attraction of some stable law of index \(\alpha \in (1,2)\), then taking \(\varphi (x)=x\mathbf {1}_{\{x\le t\}}\) shows that the size-biased distribution belongs to the domain of attraction of some stable law with index \(\alpha -1\). This also allows us to obtain properties of the scaling sequences (see for example [12, IX.8]).

The first case we consider is when \(\beta \mu <1\) but \(\sigma ^2=\infty \); we refer to this as the infinite variance, finite excursion case:

Definition 1

(IVFE) The offspring distribution has mean \(\mu \) satisfying \(1<\beta <\mu ^{-1}\) and belongs to the domain of attraction of a stable law of index \(\alpha \in (1,2)\).

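Under this assumption we let L vary slowly at \(\infty \) such that, as \(x\rightarrow \infty \),

$$\begin{aligned} \mathbf {E}\left[ \xi ^2\mathbf {1}_{\{\xi \le x\}}\right] \sim x^{2-\alpha }L(x), \end{aligned}\tag{2.2}$$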

and choose \((a_n)_{n\ge 1}\) to be some scaling sequence for the size-biased law such that for any \(x>0\), as \(n \rightarrow \infty \) we have \(\mathbf {P}(\xi ^*\ge xa_n) \sim x^{-(\alpha -1)}n^{-1}\). Moreover for some slowly varying function \(\tilde{L}\) we have that \(a_n=n^{\frac{1}{\alpha -1}}\tilde{L}(n)\).

In this case we have that the slowing is caused by the number of excursions in traps. Since \(\beta \) is small (i.e. less than \(\mu ^{-1}\)) we have that the expected time spent in a trap is finite. The number of excursions the walk takes into a branch is of the same order as the number of buds; since the size-biased law has infinite mean there are a large number of buds and therefore a large number of excursions. The main result for IVFE is Theorem 1 which reflects that \(\Delta _n\) scales similarly to the sum of independent copies of \(\xi ^*\).

Theorem 1

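For IVFE, the laws of the process

$$\begin{aligned} \left( \frac{\Delta _{\lfloor nt\rfloor }}{a_n}\right) _{t\ge 0} \end{aligned}$$

converge weakly as \(n \rightarrow \infty \) under \(\mathbb {P}\) with respect to the Skorohod \(J_1\) topology on \(D([0,\infty ),\mathbb {R})\) to the law of an \(\alpha -1\) stable subordinator \(R_t\) with Laplace transform

$$\begin{aligned} \mathbb {E}\left[ e^{-sR_t}\right] =\exp \left( -C_{\alpha ,\beta ,\mu }\,t\,s^{\alpha -1}\right) ,\qquad s\ge 0, \end{aligned}$$

where \(C_{\alpha ,\beta ,\mu }\) is a constant which we shall determine during the proof (see 9.1).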

We refer to the second \((\sigma ^2<\infty , \;\beta \mu >1)\) and third \((\sigma ^2=\infty , \;\beta \mu >1)\) cases as the finite variance, infinite excursion and infinite variance, infinite excursion cases respectively.

Definition 2

(FVIE) The offspring distribution has mean \(\mu \) satisfying \(1<\mu ^{-1}<\beta \) and variance \(\sigma ^2<\infty \).

Definition 3

(IVIE) The offspring distribution has mean \(\mu \) satisfying \(1<\mu ^{-1}<\beta \) and belongs to the domain of attraction of a stable law of index \(\alpha \in (1,2)\).

As for IVFE, in IVIE we let L vary slowly at \(\infty \) such that (2.2) holds and \((a_n)_{n\ge 1}\) be some scaling sequence for the size-biased law such that for any \(x>0\), as \(n \rightarrow \infty \) we have \(\mathbf {P}(\xi ^*\ge xa_n) \sim x^{-(\alpha -1)}n^{-1}\). It then follows that \(a_n=n^{\frac{1}{\alpha -1}}\tilde{L}(n)\) for some slowly varying function \(\tilde{L}\). In FVIE, \(a_n=n\) will suffice.

In FVIE and IVIE the slowing is caused by excursions into deep traps, because the walk must make long sequences of movements against the bias in order to escape. We shall see that only the depth H of a trap, and not its foliage, is important to the scaling. To see this, compare with the model in which the branch is stripped down to the unique self-avoiding path to its deepest point. By transience, the walk reaches the deepest point with positive probability. It then makes a geometric number of short excursions, each escaping with probability of order \(\beta ^{-H}\). In particular, this means that the time spent in a branch of height H clusters around \(\beta ^{H}\).

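The \(\beta ^{-H}\) escape rate can be seen from a gambler's-ruin computation on the stripped-down model, a single path of length H below a backbone vertex. Let k denote the distance from the bottom of the path. At interior vertices \(d_x=1\), so the walk steps towards the backbone with probability \(1/(1+\beta )\) and towards the bottom with probability \(\beta /(1+\beta )\). Started one step above the bottom, it reaches the top before the bottom with probability \((\beta -1)/(\beta ^H-1)\), which is of order \(\beta ^{-H}\). The sketch below checks this closed form against an exact solution of the harmonic equations; the function names are our own.

```python
from fractions import Fraction as F

def escape_prob_closed_form(beta, H):
    """P(reach the top before the bottom | start one step above the bottom)."""
    return (beta - 1) / (beta ** H - 1)

def escape_prob_shooting(beta, H):
    """Same probability from h(k) = p h(k+1) + q h(k-1), h(0) = 0, h(H) = 1, solved exactly."""
    p = 1 / (1 + beta)          # step towards the backbone (up)
    q = beta / (1 + beta)       # step towards the bottom (down)
    u_prev, u = F(0), F(1)      # u(0) = 0, u(1) = 1; the solution is h = u / u(H)
    for _ in range(1, H):
        u_prev, u = u, (u - q * u_prev) / p
    return 1 / u

beta = F(3)
for H in range(1, 12):
    assert escape_prob_shooting(beta, H) == escape_prob_closed_form(beta, H)
# Rescaled by beta^H, the escape probability tends to beta - 1 = 2.
print(float(escape_prob_closed_form(beta, 30) * beta ** 30))
```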
Intuitively, we observe different scalings in these two cases because of the way the number of buds affects the height of the branch. The height of a GW-tree is approximately geometric; in particular, the tallest of n independent trees will typically have height close to \(\log (n)/\log (\mu ^{-1})\). In FVIE the number of buds has finite mean. We therefore see order n buds by level n, and hence the tallest trap will have height close to \(\log (n)/\log (\mu ^{-1})\). In IVIE the number of buds has infinite mean but belongs to the domain of attraction of some stable law. In particular, the number of buds seen by level n is equal in distribution to the sum of n independent copies of \(\xi ^*-1\), which scales with \(a_n\). It therefore follows that, in IVIE, the tallest tree up to level n will have height close to \(\log (a_n)/\log (\mu ^{-1})\). Only the deepest trees are significant, and the time spent in a large branch clusters around \(\beta ^H\). Hence the natural scaling is \(\beta ^{\log (n)/\log (\mu ^{-1})}=n^{1/\gamma }\) in FVIE and \(\beta ^{\log (a_n)/\log (\mu ^{-1})}=a_n^{1/\gamma }\) in IVIE.

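The heuristic that the tallest of n independent traps has height close to \(\log (n)/\log (\mu ^{-1})\) can be checked numerically. The height of an f-GW tree is at most h exactly when \(Z_{h+1}=0\), and \(\mathbf {P}(Z_{h+1}=0)\) is the \((h+1)\)-fold iterate of f at 0. The sketch below computes the median of the maximum height of n independent trees. It uses a geometric offspring law with \(\mu =1/2\), chosen purely as an example, and the function names are hypothetical.

```python
import math

def prob_extinct_by(f, m):
    """P(Z_m = 0), the m-fold iterate of f at 0; equals P(height of an f-GW tree <= m - 1)."""
    s = 0.0
    for _ in range(m):
        s = f(s)
    return s

def median_max_height(f, n):
    """Smallest h such that P(max height of n i.i.d. f-GW trees <= h) >= 1/2."""
    h = 0
    while prob_extinct_by(f, h + 1) ** n < 0.5:
        h += 1
    return h

p = 1 / 3                                  # geometric law, mu = p / (1 - p) = 1/2
f = lambda s: (1 - p) / (1 - p * s)
for n in (10 ** 3, 10 ** 6):
    print(n, median_max_height(f, n), math.log(n) / math.log(2))  # vs log(n)/log(1/mu)
```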
Since H is approximately geometric, \(\beta ^H\) does not belong to the domain of attraction of any stable law. For this reason, as in [4], we only see convergence along specific increasing subsequences. These are \(n_l(t):=\lfloor t \mu ^{-l}\rfloor \) for \(t>0\) in FVIE, and \(n_l(t)\) such that \(a_{n_l(t)} \sim t\mu ^{-l}\) in IVIE. Such a sequence exists for any \(t>0\): choosing \(n_l(t):=\sup \{m\ge 0:a_m< t\mu ^{-l}\}\), we have that \(a_{n_l}< t\mu ^{-l} \le a_{n_l+1}\). Therefore, since \((a_n)_{n\ge 1}\) is regularly varying,

$$\begin{aligned} 1\le \frac{t\mu ^{-l}}{a_{n_l}}\le \frac{a_{n_l+1}}{a_{n_l}}\rightarrow 1 \quad \text {as } l\rightarrow \infty . \end{aligned}$$

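The choice \(n_l(t):=\sup \{m\ge 0:a_m< t\mu ^{-l}\}\) is easy to compute for any increasing sequence by exponential search followed by bisection. In the sketch below we take, purely as a hypothetical example, \(a_n=n^{1/(\alpha -1)}\) (that is, \(\tilde{L}\equiv 1\)) with \(\alpha =3/2\). We then check that \(a_{n_l(t)}/(t\mu ^{-l})\) approaches 1, which is the property \(a_{n_l(t)}\sim t\mu ^{-l}\) used in IVIE.

```python
def n_l(a, t, mu, l):
    """n_l(t) = sup{m >= 0 : a(m) < t mu^(-l)} for an increasing a with a(0) < t mu^(-l)."""
    target = t * mu ** (-l)
    lo, hi = 0, 1
    while a(hi) < target:          # exponential search; afterwards a(lo) < target <= a(hi)
        lo, hi = hi, 2 * hi
    while hi - lo > 1:             # bisection preserving the same invariant
        mid = (lo + hi) // 2
        if a(mid) < target:
            lo = mid
        else:
            hi = mid
    return lo

alpha, mu, t = 1.5, 0.5, 1.0
a = lambda m: m ** (1 / (alpha - 1))   # hypothetical example: a_n = n^{1/(alpha-1)}
for l in (10, 20, 30):
    n = n_l(a, t, mu, l)
    print(l, n, a(n) / (t * mu ** (-l)))  # ratio tends to 1
```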
Recalling (1.1), the main results for FVIE and IVIE are Theorems 2 and 3, which reflect slowing due to deep excursions.

Theorem 2

In FVIE, for any \(t>0\) we have that as \(l\rightarrow \infty \)

$$\begin{aligned} \frac{\Delta _{n_l(t)}}{n_l(t)^{1/\gamma }}\rightarrow R_t \end{aligned}$$

in distribution under \(\mathbb {P}\), where \(R_t\) is a random variable with an infinitely divisible law.

Theorem 3

In IVIE, for any \(t>0\) we have that as \(l\rightarrow \infty \)

$$\begin{aligned} \frac{\Delta _{n_l(t)}}{a_{n_l(t)}^{1/\gamma }}\rightarrow R_t \end{aligned}$$

in distribution under \(\mathbb {P}\), where \(R_t\) is a random variable with an infinitely divisible law.

We write \(r_n\) for \(a_n\) in IVFE, \(n^{1/\gamma }\) in FVIE and \(a_n^{1/\gamma }\) in IVIE. Letting \(\overline{r}_n:=\max \{m\ge 0:r_m\le n\}\), we will also prove Theorem 4. This shows that, although the laws of \(|X_n|/\overline{r}_n\) do not converge in general (for FVIE and IVIE), the suitably scaled sequence is tight and we can determine the leading order polynomial exponent explicitly.

Theorem 4

In IVFE, FVIE or IVIE we have that

1. The laws of \((\Delta _n/r_n)_{n\ge 0}\) under \(\mathbb {P}\) are tight on \((0,\infty )\);
2. The laws of \((|X_n|/\overline{r}_n)_{n\ge 0}\) under \(\mathbb {P}\) are tight on \((0,\infty )\).

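Moreover, in IVFE, FVIE and IVIE respectively, we have that \(\mathbb {P}\)-a.s.

$$\begin{aligned} \lim _{n\rightarrow \infty }\frac{\log (|X_n|)}{\log (n)}=\alpha -1, \qquad \lim _{n\rightarrow \infty }\frac{\log (|X_n|)}{\log (n)}=\gamma , \qquad \lim _{n\rightarrow \infty }\frac{\log (|X_n|)}{\log (n)}=\gamma (\alpha -1). \end{aligned}$$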

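Since \(\overline{r}_n\) is the generalised inverse of \(r_n\), and \(r_n\) is regularly varying with index \(1/(\alpha -1)\) (IVFE), \(1/\gamma \) (FVIE) or \(1/(\gamma (\alpha -1))\) (IVIE), the polynomial exponent of \(|X_n|\) is the reciprocal of that index. The following sketch is a hypothetical helper, not part of the paper. It uses \(\gamma =\log (\mu ^{-1})/\log (\beta )\) for the subcritical tree and encodes the finite-variance case as \(\alpha =2\). It returns this exponent for given parameters, and 1 in the ballistic regime \(1<\beta <\mu ^{-1}\), \(\sigma ^2<\infty \). Equivalently, the exponent is \((\alpha -1)\min (1,\gamma )\).

```python
import math

def escape_exponent(alpha, beta, mu):
    """Leading polynomial exponent of |X_n| on the subcritical tree (0 < mu < 1, beta > 1).

    alpha = 2 encodes a finite-variance offspring law; 1 < alpha < 2 a stable domain of attraction.
    """
    if not (0 < mu < 1 and beta > 1 and 1 < alpha <= 2):
        raise ValueError("parameters outside the transient subcritical setting")
    gamma = math.log(1 / mu) / math.log(beta)
    if beta * mu < 1:
        return alpha - 1          # ballistic (alpha = 2) or IVFE
    return gamma * (alpha - 1)    # FVIE (alpha = 2) or IVIE

for params in [(2, 1.5, 0.5), (1.5, 1.5, 0.5), (2, 4.0, 0.5), (1.5, 4.0, 0.5)]:
    print(params, escape_exponent(*params))
```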
The final case we consider is an extension of a result of [4] for the walk on the supercritical tree. The argument used for the infinite variance case is generally the same as in the finite variance case but needs some technical input. This is provided by three lemmas which we put aside until Sect. 10. For the same reason as in FVIE, we only see convergence along specific subsequences \(n_l(t):=\lfloor t f'(q)^{-l}\rfloor \) for \(t>0\).

Theorem 5

(Infinite variance supercritical case) Suppose the offspring law belongs to the domain of attraction of some stable law of index \(\alpha \in (1,2)\), has mean \(\mu >1\), and the bias satisfies \(\beta >f'(q)^{-1}\). Then,

$$\begin{aligned} \frac{\Delta _{n_l(t)}}{n_l(t)^{1/\gamma }}\rightarrow R_t \end{aligned}$$

in distribution as \(l\rightarrow \infty \) under \(\mathbb {P}\), where \(R_t\) is a random variable with an infinitely divisible law whose parameters are given in [4]. Moreover, the laws of \((\Delta _nn^{-\frac{1}{\gamma }})_{n\ge 0}\) and \((|X_n|n^{-\gamma })_{n\ge 0}\) under \(\mathbb {P}\) are tight on \((0,\infty )\) and \(\mathbb {P}\)-a.s.

$$\begin{aligned} \lim _{n\rightarrow \infty }\frac{\log (|X_n|)}{\log (n)}=\gamma . \end{aligned}$$

The proofs of Theorems 1, 2 and 3 follow a similar structure to the corresponding proof in [4], which, for the walk on the supercritical tree, only considers the case in which the variance of the offspring distribution is finite. Since we allow infinite variance, the proofs of Theorems 1 and 3 become more technical in some places, specifically with regard to the number of traps in a large branch. The proof can be broken down into a sequence of stages which investigate different aspects of the walk and the tree. This is ideal for extending the result to the supercritical tree, because many of these behavioural properties will be very similar for the walk on the subcritical tree due to the similarity of the traps.

In all cases it will be important to decompose large branches. In Sect. 3 we show a decomposition of the number of deep traps in any deep branch. This is only important for FVIE and IVIE, since there the depth of the branch plays a key role in decomposing the time spent in large branches. In Sect. 4 we determine conditions for labelling a branch as large in each of the regimes. These conditions ensure that large branches are far enough apart that, with high probability, the underlying walk does not backtrack from one large branch to the previous one. In Sect. 5 we justify the choice of label by showing that the time spent outside these large branches is negligible. From this we then have that \(\Delta _n\) can be approximated by a sum of i.i.d. random variables whose distribution depends on n. In Sect. 6 we consider only IVFE and show that, under a suitable scaling, these variables converge in distribution. This allows us to show the convergence of their sum. Similarly, in Sect. 7 we show that the random variables, suitably scaled, converge in distribution for FVIE and IVIE. We then show convergence of their sum in Sect. 8. In Sect. 9 we prove Theorem 4, which is standard given Theorems 1, 2 and 3. Finally, in Sect. 10, we prove three short lemmas which extend the main result of [4] and yield Theorem 5. We require a lot of notation, much of which is very similar; a glossary following Sect. 10 includes most of the notation used repeatedly throughout.

3 Number of traps

In this section we show asymptotics for the probability that the height of a branch is large and use it to determine the distribution over the number of large traps in a large branch. Unless stated otherwise we assume \(\mu <1\).

In the construction of the subcritical GW-tree conditioned to survive \(\mathcal {T}\) described in the introduction, the special vertices form the infinite backbone \(\mathcal {Y}=\{\rho _0,\rho _1,\ldots \}\). This consists of all vertices with an infinite line of descent, where \(\rho _i\) is the vertex in generation i. Each vertex \(\rho _i\) on the backbone is connected to buds \(\rho _{i,j}\) for \(j=1,\ldots ,d_{\rho _i}-1\) (which are the normal vertices that are offspring of special vertices in the construction). Each of these is then the root of an f-GW tree \(\mathcal {T}_{\rho _{i,j}}\). We call each \(\mathcal {T}_{\rho _{i,j}}\) a trap. We call \(\mathcal {T}^{*-}_{\rho _i}\), the collection of traps from a single backbone vertex combined with that vertex, a branch. Figure 2 shows an example of the first five generations of a tree \(\mathcal {T}\). The solid line represents the backbone and the two dotted ellipses identify a sample branch and trap. The dashed ellipse indicates the children of \(\rho _1\); since \(\rho _1\) is on the backbone, their number is distributed according to the size-biased law. It will be helpful throughout to work on a dummy branch which is equal in distribution to \(\mathcal {T}^{*-}_{\rho _i}\) for any i; we therefore define the following random tree.

Definition 4

(Dummy branch) Define \(\mathcal {T}^{*-}\) to be a random tree rooted at \(\rho \) with first generation vertices \(\rho _1,\ldots ,\rho _{\xi ^*-1}\), which are roots of independent f-GW-trees \((\mathcal {T}_i^\circ )_{i= 1}^{\xi ^*-1}\), where \(\xi ^*\) is a size-biased random variable independent of the rest of the tree. Define \(\mathcal {T}^\circ \) to be a dummy f-GW-tree.

The structure of the large traps will have an important role in determining the convergence of the scaled process. In this section we determine the distribution over the number of deep traps rooted at backbone vertices with at least one deep trap. We will show that there is only a single deep trap at any backbone vertex when the offspring law has finite variance whereas, when the offspring law belongs to the domain of attraction of a stable law with index \(\alpha <2\) we have that the number of deep traps converges in distribution to a certain heavy tailed law.

A fundamental result for branching processes (see, for example, [20]) is that, for \(\mu <1\) and \(Z_n\) an f-GW process, the sequence \(\mathbf {P}(Z_n>0)/\mu ^n\) is decreasing. Moreover, \(\mathbf {E}[\xi \log (\xi )]<\infty \) if and only if the limit of \(\mathbf {P}(Z_n>0)\mu ^{-n}\) as \(n \rightarrow \infty \) exists and is strictly positive. This moment condition holds under each of our hypotheses, so throughout the paper we assume it and let \(c_\mu \) be the constant such that

$$\begin{aligned} \lim _{n\rightarrow \infty }\frac{\mathbf {P}(Z_n>0)}{\mu ^n}=c_\mu . \end{aligned}\tag{3.1}$$

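For the geometric law \(\mathbf {P}(\xi =k)=(1-p)p^k\), chosen purely as an example, the iterates of f are explicit. With \(p=1/3\), so that \(\mu =1/2\) and \(f(s)=2/(3-s)\), the sketch below computes \(\mathbf {P}(Z_n>0)/\mu ^n\) exactly using \(\mathbf {P}(Z_n=0)=f^{\circ n}(0)\). In this example the ratio equals \(2^n/(2^{n+1}-1)\), which decreases to \(c_\mu =1-\mu =1/2\).

```python
from fractions import Fraction as F

def survival_ratios(f, mu, n_max):
    """[P(Z_n > 0) / mu^n for n = 1, ..., n_max], using P(Z_n = 0) = n-fold iterate of f at 0."""
    s, ratios = F(0), []
    for n in range(1, n_max + 1):
        s = f(s)                      # s = P(Z_n = 0)
        ratios.append((1 - s) / mu ** n)
    return ratios

p = F(1, 3)                           # geometric law with mu = 1/2
mu = p / (1 - p)
r = survival_ratios(lambda s: (1 - p) / (1 - p * s), mu, 30)
print(float(r[0]), float(r[-1]))      # decreasing from 2/3 towards c_mu = 1/2
```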
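Recall that \(\mathcal {H}(T)\) denotes the height of a tree T rooted at \(\rho \). Denote

$$\begin{aligned} s_m:=\mathbf {P}(\mathcal {H}(\mathcal {T}^\circ )<m)=1-\mathbf {P}(Z_m>0) \end{aligned}\tag{3.2}$$

to be the probability that a given trap is of height at most \(m-1\) (although in general we shall write s for convenience). Write \(N(m):=\sum _{j=1}^{\xi ^*-1}\mathbf {1}_{\{\mathcal {H}(\mathcal {T}_j^\circ )\ge m\}}\) for the number of traps of height at least m in the dummy branch. We are then interested in the limit as \(m \rightarrow \infty \) of

$$\begin{aligned} \mathbf {P}(N(m)=l\,|\,N(m)\ge 1) \end{aligned}$$

for \(l \ge 1\). Recall that f is the p.g.f. of the offspring distribution, and write \(f^{(k)}\) for its \(k{\text {th}}\) derivative. Conditionally on \(\xi ^*\), N(m) is binomial with \(\xi ^*-1\) trials and success probability \(1-s\). Hence

$$\begin{aligned} \mathbf {P}(N(m)=l)=\sum _{k\ge l+1}\frac{kp_k}{\mu }\binom{k-1}{l}(1-s)^ls^{k-1-l}=\frac{(1-s)^lf^{(l+1)}(s)}{l!\,\mu }. \end{aligned}\tag{3.3}$$

In particular, we have that

$$\begin{aligned} \mathbf {P}(N(m)\ge 1)=1-\frac{f'(s)}{\mu }. \end{aligned}\tag{3.4}$$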

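Conditionally on \(\xi ^*\), N(m) is binomial with \(\xi ^*-1\) trials and success probability \(1-s\), because the traps are independent and each has height at least m with probability \(1-s\). Averaging over the size-biased law gives \(\mathbf {P}(N(m)=l)=(1-s)^lf^{(l+1)}(s)/(l!\mu )\), and in particular \(\mathbf {P}(N(m)\ge 1)=1-f'(s)/\mu \). The sketch below checks this identity exactly with rational arithmetic. It uses an arbitrary finite-support offspring law and an arbitrary value of s, and the polynomial helpers are our own.

```python
from fractions import Fraction as F
from math import comb, factorial

def pgf_derivative(pmf, j, s):
    """j-th derivative of f(s) = sum_k p_k s^k, evaluated at s."""
    total = F(0)
    for k, p in enumerate(pmf):
        if k >= j:
            falling = 1
            for i in range(j):
                falling *= k - i          # k (k-1) ... (k-j+1)
            total += p * falling * s ** (k - j)
    return total

def prob_N_direct(pmf, s, l):
    """P(N(m) = l) by conditioning on xi*: N(m) ~ Binomial(xi* - 1, 1 - s)."""
    mu = sum(k * p for k, p in enumerate(pmf))
    return sum(k * p / mu * comb(k - 1, l) * (1 - s) ** l * s ** (k - 1 - l)
               for k, p in enumerate(pmf) if k >= 1 and k - 1 >= l)

def prob_N_pgf(pmf, s, l):
    """P(N(m) = l) = (1 - s)^l f^{(l+1)}(s) / (l! mu)."""
    mu = sum(k * p for k, p in enumerate(pmf))
    return (1 - s) ** l * pgf_derivative(pmf, l + 1, s) / (factorial(l) * mu)

pmf = [F(1, 2), F(1, 4), F(1, 8), F(1, 16), F(1, 16)]
s = F(9, 10)
print([prob_N_pgf(pmf, s, l) for l in range(4)])
```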
Lemma 3.1 shows that, when \(\sigma ^2<\infty \), with high probability there will only be a single deep trap in any deep branch.

Lemma 3.1

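When \(\sigma ^2<\infty \),

$$\begin{aligned} \lim _{m\rightarrow \infty }\mathbf {P}(N(m)=1\,|\,N(m)\ge 1)=1. \end{aligned}$$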

Proof

Using (3.3) and (3.4) we have that

By monotonicity in s we have that

which is finite since \(\sigma ^2<\infty \). Each summand in the denominator is increasing in s for \(s\in (0,1)\), and by L'Hôpital's rule \(1-s^{k-1} \sim (k-1)(1-s)\) as \(s\rightarrow 1^- \). Therefore, by monotone convergence, the denominator in the final term of (3.6) converges to the same limit. \(\square \)

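For a concrete finite-variance example, take the geometric law \(f(s)=(1-p)/(1-ps)\), chosen by us for illustration. Dividing \(\mathbf {P}(N(m)=1)=(1-s)f''(s)/\mu \) by \(\mathbf {P}(N(m)\ge 1)=1-f'(s)/\mu \) gives \(\mathbf {P}(N(m)=1\,|\,N(m)\ge 1)=(1-s)f''(s)/(\mu -f'(s))\). Since \(s=s_m\uparrow 1\) as \(m\rightarrow \infty \), the sketch below evaluates this ratio for s increasing to 1 and shows it increasing towards 1, in line with Lemma 3.1.

```python
def prob_single_deep_trap(s, p):
    """P(N(m) = 1 | N(m) >= 1) = (1 - s) f''(s) / (mu - f'(s)) for f(s) = (1 - p) / (1 - p s)."""
    mu = p / (1 - p)
    f1 = p * (1 - p) / (1 - p * s) ** 2           # f'(s)
    f2 = 2 * p ** 2 * (1 - p) / (1 - p * s) ** 3  # f''(s)
    return (1 - s) * f2 / (mu - f1)

p = 1 / 3                                          # mu = 1/2, finite variance
for k in range(1, 7):
    s = 1 - 10.0 ** (-k)                           # s = s_m increases to 1 as m grows
    print(s, prob_single_deep_trap(s, p))
```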
In order to determine the correct threshold for labelling a branch as large we will need to know the asymptotic form of \(\mathbf {P}(N(m)\ge 1)\). Corollary 3.2 gives this for the finite variance case.

Corollary 3.2

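Suppose \(\sigma ^2<\infty \); then, as \(m\rightarrow \infty \),

$$\begin{aligned} \mathbf {P}(N(m)\ge 1)\sim c_\mu \mathbf {E}[\xi ^*-1]\mu ^m. \end{aligned}$$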

Proof

Let \(f_*\) denote the p.g.f. of \(\xi ^*\); then \(\mathbf {P}(N(m)\ge 1) = 1-s^{-1}f_*(s)\). Since \(\sigma ^2<\infty \), \(f_*'(s)\) exists and is continuous for \(s\le 1\). Thus, as \(s \rightarrow 1^- \), we have that \(f_*(1)-f_*(s) \sim (1-s)f_*'(1) = (1-s)\mathbf {E}[\xi ^*]\). It therefore follows that

$$\begin{aligned} \mathbf {P}(N(m)\ge 1)=\frac{s-f_*(s)}{s}=\frac{(1-f_*(s))-(1-s)}{s}\sim (1-s)\left( \mathbf {E}[\xi ^*]-1\right) . \end{aligned}$$

The result then follows by the definitions of \(c_\mu \) (3.1) and s (3.2). \(\square \)

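The proof of Corollary 3.2 shows that \(\mathbf {P}(N(m)\ge 1)\sim (1-s)(\mathbf {E}[\xi ^*]-1)\) with \(1-s=\mathbf {P}(Z_m>0)\sim c_\mu \mu ^m\). Hence \(\mathbf {P}(N(m)\ge 1)/\mu ^m\) should approach \(c_\mu \mathbf {E}[\xi ^*-1]\). For the geometric law with \(p=1/3\) (so \(\mu =1/2\)), an example chosen by us, one can check that \(c_\mu =1-\mu \) and \(\mathbf {E}[\xi ^*-1]=2\mu \), so the limit is \(1/2\). The sketch below computes \(\mathbf {P}(N(m)\ge 1)=1-f'(s_m)/\mu \) exactly.

```python
from fractions import Fraction as F

def prob_deep_branch(m, p):
    """P(N(m) >= 1) = 1 - f'(s_m)/mu for the geometric law f(s) = (1 - p) / (1 - p s)."""
    mu = p / (1 - p)
    s = F(0)
    for _ in range(m):
        s = (1 - p) / (1 - p * s)            # s_m = P(Z_m = 0) by iterating f
    f1 = p * (1 - p) / (1 - p * s) ** 2     # f'(s_m)
    return 1 - f1 / mu

p = F(1, 3)                                 # mu = 1/2, c_mu = 1/2, E[xi* - 1] = 1
mu = p / (1 - p)
for m in (5, 10, 20):
    print(m, float(prob_deep_branch(m, p) / mu ** m))  # approaches c_mu E[xi* - 1] = 1/2
```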
We now consider the case when \(\sigma ^2=\infty \) but \(\xi \) belongs to the domain of attraction of a stable law of index \(\alpha \in (1,2)\). The following lemma, concerning the form of the probability generating function of the offspring distribution, will be fundamental in determining the distribution of the number of large traps rooted at a given backbone vertex. The case \(\mu =1\) appears in [7]; the proof of Lemma 3.3 is a simple extension of this, so we omit it.

Lemma 3.3

Suppose the offspring distribution belongs to the domain of attraction of a stable law with index \(\alpha \in (1,2)\) and mean \(\mathbf {E}[\xi ]=\mu \).

1. If \(\mu \le 1\) then as \(s\rightarrow 1^- \)

$$\begin{aligned} \mathbf {E}\left[ s^\xi \right] -s^\mu \sim \frac{\Gamma (3-\alpha )}{\alpha (\alpha -1)}(1-s)^\alpha L((1-s)^{-1}) \end{aligned}$$where \(\Gamma (t)=\int _0^\infty x^{t-1}e^{-x}\mathrm {d}x\) is the usual gamma function.

2. If \(\mu >1\) then

$$\begin{aligned} 1-\mathbf {E}\left[ s^\xi \right] = \mu (1-s)- \frac{\Gamma (3-\alpha )}{\alpha (\alpha -1)}(1-s)^\alpha \overline{L}\left( (1-s)^{-1}\right) \end{aligned}$$where \(\overline{L}\) varies slowly at \(\infty \).

When \(\mu <1\) it follows that there exists a function \(L_1\), varying slowly at \(\infty \), such that \(\mathbf {E}[s^\xi ]-s^\mu = (1-s)^\alpha L_1((1-s)^{-1})\) and

$$\begin{aligned} L_1(x)\sim \frac{\Gamma (3-\alpha )}{\alpha (\alpha -1)}L(x)\quad \text {as } x\rightarrow \infty . \end{aligned}$$

Write \(g(x)=x^\alpha L_1(x^{-1})\) so that \(f(s)=s^\mu +g(1-s)\); then it follows that

$$\begin{aligned} f^{(l)}(s)=(\mu )_ls^{\mu -l}+(-1)^lg^{(l)}(1-s) \end{aligned}$$

when this exists, where \((\mu )_l:=\prod _{j=0}^{l-1}(\mu -j)\) is the Pochhammer symbol. Write \(L_2(x):=L_1(x^{-1})\), which is slowly varying at 0. Using Theorem 2 of [19], we see that \(xg'(x)\sim \alpha g(x)\) as \(x \rightarrow 0\). Moreover, using an inductive argument in the proof of this result, it is straightforward to show that for all \(l \in \mathbb {N}\) we have that \(xg^{(l+1)}(x) \sim (\alpha -l)g^{(l)}(x)\) as \(x\rightarrow 0\). Therefore, for any integer \(l\ge 0\),

$$\begin{aligned} x^lg^{(l)}(x)\sim (\alpha )_lg(x)=(\alpha )_lx^\alpha L_2(x)\quad \text {as } x\rightarrow 0. \end{aligned}\tag{3.8}$$

Proposition 3.4 is the main result of this section and determines the limiting distribution of the number of traps of height at least m in a branch of height greater than m.

Proposition 3.4

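In IVIE, for \(l\ge 1\) as \(m \rightarrow \infty \)

$$\begin{aligned} \mathbf {P}(N(m)=l\,|\,N(m)\ge 1)\rightarrow \frac{(-1)^{l+1}(\alpha -1)_l}{l!}=\frac{(\alpha -1)\Gamma (l+1-\alpha )}{\Gamma (2-\alpha )\,l!}. \end{aligned}$$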

Proof

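Recall that by (3.3) and (3.4) we want to determine the asymptotics of \(1-f'(s)/\mu \) and \((1-s)^lf^{(l+1)}(s)/(l!\mu )\) as \(s \rightarrow 1^- \). We have that \(1-f'(s)/\mu =1-s^{\mu -1}+g'(1-s)/\mu \) and \(g'(1-s) \sim \alpha (1-s)^{\alpha -1}L_2(1-s)\) as \(s\rightarrow 1\). Since \(\alpha <2\), we have that \(\lim _{s \rightarrow 1^- }(1-s^{\mu -1})(1-s)^{1-\alpha } =0\), hence

$$\begin{aligned} \mathbf {P}(N(m)\ge 1)=1-\frac{f'(s)}{\mu }\sim \frac{\alpha }{\mu }(1-s)^{\alpha -1}L_2(1-s). \end{aligned}\tag{3.9}$$

For derivatives \(l \ge 1\) we have that

$$\begin{aligned} \frac{(1-s)^lf^{(l+1)}(s)}{l!\,\mu }=\frac{(\mu )_{l+1}(1-s)^ls^{\mu -l-1}}{l!\,\mu }+\frac{(-1)^{l+1}(1-s)^lg^{(l+1)}(1-s)}{l!\,\mu }. \end{aligned}$$

By (3.8) we have that \((1-s)^lg^{(l+1)}(1-s)\sim (\alpha )_{l+1}(1-s)^{\alpha -1}L_2(1-s)\). For \(l\ge 1\) we have that \(l+1-\alpha >0\), hence

$$\begin{aligned} \frac{(1-s)^lf^{(l+1)}(s)}{l!\,\mu }\sim \frac{(-1)^{l+1}(\alpha )_{l+1}}{l!\,\mu }(1-s)^{\alpha -1}L_2(1-s). \end{aligned}\tag{3.10}$$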

Combining (3.3) with (3.9) and (3.10) gives the desired result. \(\square \)

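Dividing (3.10) by (3.9) and using \((\alpha )_{l+1}=\alpha (\alpha -1)_l\), the limiting law of the number of deep traps has weights \(p_l=(-1)^{l+1}(\alpha -1)_l/l!=(\alpha -1)\Gamma (l+1-\alpha )/(\Gamma (2-\alpha )\,l!)\) for \(l\ge 1\). These weights sum to 1, by the binomial series for \(1-(1-1)^{\alpha -1}\), and decay like \(l^{-\alpha }\), which is the heavy tail referred to at the start of this section. The sketch below (our own helper) generates them via the recursion \(p_{l+1}=p_l(l+1-\alpha )/(l+1)\) and compares against the gamma-function form.

```python
import math

def limit_law(alpha, l_max):
    """Weights p_l = (-1)^{l+1} (alpha-1)_l / l! for l = 1..l_max, via p_{l+1} = p_l (l+1-alpha)/(l+1)."""
    p, out = alpha - 1, []
    for l in range(1, l_max + 1):
        out.append(p)
        p *= (l + 1 - alpha) / (l + 1)
    return out

alpha = 1.5
w = limit_law(alpha, 100_000)
print(w[:4])   # [0.5, 0.125, 0.0625, 0.0390625]
print(sum(w))  # slightly below 1: the tail beyond l_max decays like l_max^{-(alpha-1)}
```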
Proposition 3.4 will be useful for determining the number of large traps in a large branch but equally important is the asymptotic relation (3.9) which gives the tail behaviour of the height of a branch \(\mathcal {T}^{*-}\). By the assumption on \(\xi \) that (2.2) holds we have that

as \(t\rightarrow \infty \). Using (3.2), (3.5), (3.7), (3.9) and (3.11), we then have that

4 Large branches are far apart

In this section we introduce the conditions for a branch to be large. These differ between the cases; however, since many of the proofs generalise to all three cases, we use the same notation for some aspects.

In IVFE we will have that the slowing is caused by the large number of traps. In particular, we will be able to show that the time spent outside branches with a large number of buds is negligible.

Definition 5

(IVFE large branch) For \(\varepsilon \in (0,1)\) write

then we have that \(\mathbf {P}(\xi ^*\ge l_{n,\varepsilon } ) \sim n^{-(1-\varepsilon )}\). We will call a branch large if the number of buds is at least \(l_{n,\varepsilon }\) and write \(\mathcal {D}^{(n)}:=\{x \in \mathcal {Y}:d_x>l_{n,\varepsilon }\}\) to be the collection of backbone vertices which are the roots of large branches.

In FVIE the slowdown is caused by excursions into deep traps.

Definition 6

(FVIE large branch) For \(\varepsilon \in (0,1)\) write \(C_\mathcal {D}:=c_\mu \mathbf {E}[\xi ^*-1]\),

then by Corollary 3.2 we have that

We will call a branch large if there exists a trap within it of height at least \(h_{n,\varepsilon }\) and write \(\mathcal {D}^{(n)}:=\{x \in \mathcal {Y}:\mathcal {H}(\mathcal {T}_x^{*-})> h_{n,\varepsilon }\} \) to be the collection of backbone vertices which are the roots of large branches. By a large trap we mean any trap of height at least \(h_{n,\varepsilon }\).

In IVIE the slowdown is caused by a combination of the effects present in the other two cases. The height and the number of buds of a branch are strongly linked, as we show more precisely later; this allows us to classify branches as large according to their height, which will be necessary when decomposing the time spent in large branches.

Definition 7

(IVIE large branch) For \(\varepsilon \in (0,1)\) write

then by (3.12), for \(C_\mathcal {D}:=\Gamma (2-\alpha )c_\mu ^{\alpha -1}\), we have that

We will call a branch large if there exists a trap of height at least \(h_{n,\varepsilon }\) and write \(\mathcal {D}^{(n)}:=\{x \in \mathcal {Y}:\mathcal {H}(\mathcal {T}_x^{*-})> h_{n,\varepsilon }\}\) to be the collection of backbone vertices which are the roots of large branches. By a large trap we mean any trap of height at least \(h_{n,\varepsilon }\).

We want to show that, asymptotically, the large branches are sufficiently far apart to ignore any correlation and therefore approximate \(\Delta _n\) by the sum of i.i.d. random variables representing the time spent in a large branch. Much of this is very similar to [4] so we only give brief details.

Write \(\mathcal {D}_m^{(n)}:=\{x \in \mathcal {D}^{(n)}:|x|\le m\}\) for the set of roots of large branches up to level m, let \(q_n:=\mathbf {P}(\rho \in \mathcal {D}^{(n)})\) be the probability that a branch is large and write

to be the event that the number of large branches by level \(\lfloor nT\rfloor \) does not differ too much from its expected value. Notice that in all three cases \(q_n\) is of order \(n^{-(1-\varepsilon )}\); thus we expect to see \(nq_n \approx Cn^\varepsilon \) large branches by level n.

Lemma 4.1

For any \(T>0\)

Proof

For each \(n \in \mathbb {N}\) write

where \(B_k\) are independent Bernoulli random variables with success probability \(q_n\). Then \(\mathbf {E}[M_m^n]=0\) and \(Var_{\mathbf {P}}(M_m^n)=mq_n(1-q_n)\); therefore, by Kolmogorov's maximal inequality,

Since \(\left| \lfloor nt\rfloor q_n-\lfloor ntq_n\rfloor \right| \le 1\) we have that

which proves the statement. \(\square \)
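The proof of Lemma 4.1 rests on Kolmogorov's maximal inequality \(\mathbf {P}(\max _{m\le N}|M_m^n|\ge \lambda )\le Var_{\mathbf {P}}(M_N^n)/\lambda ^2\) for the centred Bernoulli sums. A minimal Monte Carlo sketch follows, assuming \(M_m^n=\sum _{k\le m}(B_k-q_n)\), which matches the stated mean and variance; the values of N, \(q_n\) and the threshold (called `lam` in the code) are illustrative only.

```python
import random


def max_abs_centered_sum(m, q, rng):
    """Return max_{k <= m} |M_k| with M_k = sum_{i <= k} (B_i - q), B_i ~ Bernoulli(q)."""
    s, best = 0.0, 0.0
    for _ in range(m):
        s += (1.0 if rng.random() < q else 0.0) - q
        best = max(best, abs(s))
    return best


def kolmogorov_check(m=500, q=0.04, lam=10.0, reps=1000, seed=1):
    """Empirical P(max_k |M_k| >= lam) and Kolmogorov's bound Var(M_m) / lam^2."""
    rng = random.Random(seed)
    hits = sum(max_abs_centered_sum(m, q, rng) >= lam for _ in range(reps))
    return hits / reps, m * q * (1 - q) / lam**2


empirical, bound = kolmogorov_check()
print(f"empirical {empirical:.3f} <= bound {bound:.3f}")
```

The bound is far from tight here; in the lemma it only needs to be small after choosing the threshold of larger order than \((nq_n)^{1/2}\).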

We want to show that all of the large branches are sufficiently far apart that the walk does not backtrack from one to another. For \(t>0\) and \(\kappa \in (0,1-2\varepsilon )\) write

to be the event that all large branches up to level \(\lfloor nt\rfloor \) are of distance at least \(n^\kappa \) apart and the root of the tree is not the root of a large branch. A union bound shows that \(\mathbf {P}(\mathcal {D}(n,t)^c)\rightarrow 0\) as \(n \rightarrow \infty \) uniformly over t in compact sets.
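The union bound behind the last claim can be written out as follows. This is a sketch assuming only that branches rooted at distinct backbone vertices are independent, that \(\mathbf {P}(\rho _i\in \mathcal {D}^{(n)})=q_n\) for \(i\ge 1\), and that \(q_n\le Cn^{-(1-\varepsilon )}\); it also shows where the restriction \(\kappa <1-2\varepsilon \) comes from:

$$\begin{aligned} \mathbf {P}\big (\mathcal {D}(n,t)^c\big ) &\le \mathbf {P}\big (\rho \in \mathcal {D}^{(n)}\big )+\sum _{\substack{1\le i<j\le \lfloor nt\rfloor \\ j-i<n^\kappa }}\mathbf {P}\big (\rho _i\in \mathcal {D}^{(n)}\big )\,\mathbf {P}\big (\rho _j\in \mathcal {D}^{(n)}\big )\\ &\le q_n+(nt+1)\,n^\kappa q_n^2\le q_n+C(1+t)\,n^{\kappa +2\varepsilon -1}, \end{aligned}$$

which tends to 0, uniformly over t in compact sets, precisely because \(\kappa +2\varepsilon -1<0\).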

We want to show that, with high probability, once the walk reaches a large branch it never backtracks to the previous one. For \(t>0\) write

to be the event that the walk never backtracks distance \(\overline{C}\log (n)\) (where \(\Delta _n^Y:=\min \{m\ge 0:Y_m=\rho _n\}\)). For \(x \in \mathcal {T}\) write \(\tau _x^+=\inf \{n>0:X_n=x\}\) for the first hitting time of x after time 0 (the first return time when the walk starts at x). Comparison with a biased random walk on \(\mathbb {Z}\) shows that, for \(k\ge 1\), the probability of ever stepping back from \(\rho _k\) to \(\rho _{k-1}\) is \( P _{\rho _k}\left( \tau _{\rho _{k-1}}^+<\infty \right) =\beta ^{-1}\); hence, using the strong Markov property,

Using a union bound we see that

for \(\overline{C}\) sufficiently large. Combining this with \(\mathcal {D}(n,t)\) we have that with high probability the walk never backtracks from one large branch to a previous one.
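Iterating \(P_{\rho _k}(\tau ^+_{\rho _{k-1}}<\infty )=\beta ^{-1}\) with the strong Markov property, the backbone walk ever drops k levels below a given vertex with probability \(\beta ^{-k}\); a union bound over the \(\lfloor nt\rfloor +1\) backbone vertices up to level \(\lfloor nt\rfloor \) then gives \((nt+1)\beta ^{-\overline{C}\log (n)}=(nt+1)n^{-\overline{C}\log \beta }\rightarrow 0\) once \(\overline{C}>1/\log \beta \). The Monte Carlo sketch below checks the first claim, assuming that, with trap excursions removed, the backbone walk steps up with probability \(\beta /(\beta +1)\) and down with probability \(1/(\beta +1)\); the cap used to declare escape is an artefact of the simulation.

```python
import math
import random


def drops_k_levels(beta, k, rng, cap=20):
    """Walk on Z from 0: +1 w.p. beta/(beta+1), -1 otherwise.

    Returns True if the walk reaches -k before +cap.  Treating +cap as escape
    ignores an event of probability about beta**-(cap + k), negligible here.
    """
    up = beta / (beta + 1.0)
    pos = 0
    while -k < pos < cap:
        pos += 1 if rng.random() < up else -1
    return pos == -k


def estimate_drop(beta, k, reps=10000, seed=0):
    """Monte Carlo estimate of the probability of ever dropping k levels."""
    rng = random.Random(seed)
    return sum(drops_k_levels(beta, k, rng) for _ in range(reps)) / reps


def backtrack_union_bound(n, t, c_bar, beta):
    """(nt + 1) * beta^(-c_bar log n): union bound over backbone vertices up to level nt."""
    return (n * t + 1) * beta ** (-c_bar * math.log(n))


beta = 2.0
for k in (1, 2, 3):
    print(k, estimate_drop(beta, k), beta ** -k)  # empirical vs beta^{-k}
# With c_bar = 3 > 1/log(2), the bound decays like n^(1 - 3 log 2).
print([backtrack_union_bound(n, 1.0, 3.0, beta) for n in (10**2, 10**4, 10**6)])
```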

5 Time is spent in large branches

In this section we show that the time spent up to time \(\Delta _n\) outside large branches is negligible. Combined with Sect. 4 this allows us to approximate \(\Delta _n\) by the sum of i.i.d. random variables. We begin with some general results concerning the number of excursions into traps and the expected time spent in a trap of height at most m.

Recall that \(\rho _{i,j}\) are the buds connected to the backbone vertex \(\rho _i\), that \(c(\rho _i):=\{\rho _{i,j}\}_j \cup \{\rho _{i+1}\}\) is the collection of all offspring of \(\rho _i\) and that \(d_{\rho _i}=|c(\rho _i)|\) is the number of offspring. We write \(W^{i,j}:=|\{m \ge 1: X_{m-1}=\rho _i, \; X_m=\rho _{i,j}\}|\) for the number of excursions into the \(j{\text {th}}\) trap of the \(i{\text {th}}\) branch, where we set \(W^{i,j}:=0\) if \(\rho _{i,j}\) does not exist in the tree. Lemma 5.1 shows that, conditional on the number of buds, the number of excursions follows a geometric law.

Lemma 5.1

For any \(i,k \in \mathbb {N}\) and \(A \subset \{1,\ldots ,d_{\rho _i}-1\}\), when \(\beta >1\)

with respect to \( P ^{\mathcal {T}}\). In particular for any \(j \le k\) we have that \(W^{i,j}\sim Geo(p)\) where \(p=(\beta -1)/(2\beta -1)\).

Moreover, under this law, \((W^{i,j})_{j \in A}\) have a negative multinomial distribution with one failure until termination and probabilities

that from \(\rho _i\) the next excursion will be into the \(j{\text {th}}\) trap (where \(j=0\) denotes escaping).

Proof

From \(\rho _{i,j}\) the walk must return to \(\rho _i\) before escaping; therefore, since \( P _{\rho _{i,j}}(\tau ^+_{\rho _i}<\infty )=1\), any traps not in A can be ignored and it suffices to assume that \(A = \{1,\ldots ,k\}\). By comparison with a biased random walk on \(\mathbb {Z}\) we have that \( P _{\rho _{i+1}}(\tau ^+_{\rho _i}=\infty )=1-\beta ^{-1}.\) If \(d_{\rho _i}=k+1\) then \( P _{\rho _i}(\tau ^+_x=\min _{y \in c(\rho _i)}\tau ^+_y)=(k+1)^{-1}\) for any \(x \in c(\rho _i)\). The probability of never entering a trap in the branch \(\mathcal {T}^{*-}_{\rho _i}\) is, therefore,

Each excursion ends with the walker at \(\rho _i\); thus the walk takes a geometric number of excursions into traps with escape probability \((\beta -1)/((k+1)\beta -1)\). The second statement then follows from the fact that the walker is equally likely to enter any of the traps. \(\square \)
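The reduction used in the proof of Lemma 5.1 can be simulated directly. The sketch below assumes that \(Geo(p)\) counts the number of excursions before escape, so that \(P(W=w)=(1-p)^wp\) and \(E[W]=(1-p)/p\); it simulates only the reduced chain from the proof (first child visited uniform, escape from \(\rho _{i+1}\) with probability \(1-\beta ^{-1}\)), not the full walk inside the traps.

```python
import random


def excursion_counts(beta, k, rng):
    """Simulate the reduced chain from the proof of Lemma 5.1 at one backbone vertex.

    Children are indexed 0..k: index 0 is the next backbone vertex, 1..k are buds.
    Each round the first child visited is uniform over the k + 1 children (steps to
    the parent are skipped because the walk returns a.s.).  From the backbone child
    the walk escapes w.p. 1 - 1/beta, otherwise it comes back and a new round starts.
    Returns [W^1, ..., W^k], the numbers of excursions into each bud.
    """
    w = [0] * (k + 1)
    while True:
        c = rng.randrange(k + 1)
        if c == 0:
            if rng.random() < 1.0 - 1.0 / beta:
                return w[1:]
        else:
            w[c] += 1


def sample_means(beta, k, reps=20000, seed=0):
    """Monte Carlo means of W^1 and of the total number of trap excursions."""
    rng = random.Random(seed)
    first = total = 0
    for _ in range(reps):
        w = excursion_counts(beta, k, rng)
        first += w[0]
        total += sum(w)
    return first / reps, total / reps


beta, k = 2.0, 3
p = (beta - 1) / (2 * beta - 1)        # Geo(p) parameter of a single W^{i,j}
s = (beta - 1) / ((k + 1) * beta - 1)  # Geo parameter of the total over all k buds
m1, mt = sample_means(beta, k)
print(m1, (1 - p) / p)  # Geo(p) counting failures has mean (1-p)/p = beta/(beta-1)
print(mt, (1 - s) / s)  # and the total has mean k*beta/(beta-1)
```

Note that the mean of a single \(W^{i,j}\) does not depend on k, in line with the lemma.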

For a fixed tree T with \(n{\text {th}}\) generation size \(Z_n^T\) where \(Z_1^T>0\) it is classical (e.g. [22]) that

Let \(\mathcal {T}^\leftarrow \) be an f-GW tree rooted at \(\overleftarrow{\rho }\) conditioned to have a single first generation vertex which we label \(\rho \). Notice that this has the same distribution as an f-GW-tree \(\mathcal {T}^\circ \) to which we append a single ancestor of the root. From (5.1) it follows that

For any \(m\ge 1\) we have that \(\mathbf {P}(\mathcal {H}(\mathcal {T}^\leftarrow )\le m)\ge p_0\) therefore, for some constant C,

Recall that \(\Delta ^Y_n\) is the first hitting time of \(\rho _n\) for the underlying walk Y and write

to be the event that level n is reached by time \(C_1n\) by the walk on the backbone. Standard large deviation estimates yield that \(\lim _{n \rightarrow \infty }\mathbb {P}(A_3(n)^c)=0\) for \(C_1>(\beta +1)/(\beta -1)\).
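The threshold \((\beta +1)/(\beta -1)\) is the reciprocal of the speed of the backbone walk. A minimal simulation sketch, assuming that with trap excursions removed the backbone walk moves up with probability \(\beta /(\beta +1)\), down with probability \(1/(\beta +1)\), and is reflected at the root:

```python
import random


def backbone_hitting_time(n, beta, rng):
    """Number of steps for a walk on {0, 1, 2, ...} started at 0 to first reach n.

    Assumed law of the backbone walk Y with trap excursions removed: from k >= 1 it
    moves up w.p. beta/(beta+1) and down otherwise; from 0 it must move up.
    """
    up = beta / (beta + 1.0)
    pos = steps = 0
    while pos < n:
        if pos == 0 or rng.random() < up:
            pos += 1
        else:
            pos -= 1
        steps += 1
    return steps


beta, n = 2.0, 20000
ratio = backbone_hitting_time(n, beta, random.Random(3)) / n
# The walk has speed (beta-1)/(beta+1), so the ratio concentrates near (beta+1)/(beta-1) = 3;
# any C_1 > (beta+1)/(beta-1) therefore makes A_3(n) likely for large n.
print(ratio, (beta + 1) / (beta - 1))
```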

For the remainder of this section we mainly consider the case in which \(\xi \) belongs to the domain of attraction of a stable law of index \(\alpha \in (1,2)\). The finite-variance case proceeds similarly; since the corresponding estimates are much simpler there, we omit the proofs.

In IVIE and IVFE, for \(t>0\), let the event that there are at most \(\log (n)a_n\) buds by level \(\lfloor nt\rfloor \) be

The variables \(d_{\rho _k}\) are i.i.d. with the law of \(\xi ^*\); therefore the laws of \(a_{\lfloor nt\rfloor }^{-1}\sum _{k=1}^{\lfloor nt\rfloor }(\xi ^*_k-1)\) converge to some stable law \(G^*\), where \(\lim _{n \rightarrow \infty }\overline{G}^*(Ct^{\alpha -1}\log (n))=0\) uniformly over \(t\le T\). Hence \(\lim _{n \rightarrow \infty }\mathbf {P}(A_4(n,t)^c)=0\).

In FVIE write

then Markov’s inequality gives that \(\lim _{n \rightarrow \infty }\mathbf {P}(A_4(n,t)^c)=0\).

Write

to be the event that no trap is entered more than \(C_2\log (n)\) times. By Lemma 5.1 the number of entrances into \(\rho _{i,j}\) has the law of a geometric random variable with parameter \(p=(\beta -1)/(2\beta -1)\); hence, using a union bound, we have that

where \(\overline{L}\) varies slowly hence the final term converges to 0 for \(C_2\) large and \(\lim _{n \rightarrow \infty }\mathbb {P}(A_5(n,t)^c)=0\).

Propositions 5.2, 5.3 and 5.4 show that any time spent outside large traps is negligible. In FVIE and IVIE we only consider the large traps in large branches. Recall that \(\mathcal {D}^{(n)}\) is the set of roots of large branches and write

to be the vertices in large traps. In IVFE we require the entire large branch and write

to be the vertices in large branches. In either case we write \(\chi _{t,n}:=|\{1\le i\le \Delta _{\lfloor nt\rfloor }: \;X_{i-1},X_i \in K(n)\}|\) to be the time spent up to \(\Delta _{\lfloor nt \rfloor }\) in large traps.

Proposition 5.2

In IVIE, for any \(t,\epsilon >0\) we have that as \(n \rightarrow \infty \)

Proof

On \(A_4(n,t)\) there are at most \(a_n\log (n)\) traps by level \(\lfloor nt\rfloor \). Ordering these traps, we write \(T^{(l,k)}\) for the duration of the \(k{\text {th}}\) excursion into the \(l{\text {th}}\) trap and \(\rho (l)\) for the root of this trap (that is, the unique bud in the trap). Here we consider an excursion to start from the bud and end at the last hitting time of the bud before returning to the backbone. Recall that on \(A_3(n)\) the walk Y reaches level n by time \(C_1n\) and on \(A_5(n)\) no trap up to level n is entered more than \(C_2\log (n)\) times. Using the estimates on \(A_3,A_4\) and \(A_5\) we have that

Since \(a_n^{\frac{1}{\gamma }}\gg a_n\log (n)^2 \gg n\), for n sufficiently large we have that, using Markov’s inequality and (5.2) with \(m= h_{n,\varepsilon }\), the second term can be bounded above by

Combining constants and slowly varying functions into a single function \(L_{t,\epsilon }\) such that for any \(\tilde{\varepsilon }>0\) we have that \(L_{t,\epsilon }(n)\le n^{\tilde{\varepsilon }}\) for n sufficiently large we then have that

which converges to 0 since \(\alpha ,\frac{1}{\gamma }>1\). \(\square \)

Using \(A_3,A_5\) and the form of \(A_4\) for FVIE, the technique used to prove Proposition 5.2 extends straightforwardly to prove Proposition 5.3 therefore we omit the proof.

Proposition 5.3

In FVIE, for any \(t, \epsilon >0\) we have that as \(n \rightarrow \infty \)

Similarly, we can show a corresponding result for IVFE.

Proposition 5.4

In IVFE, for any \(t, \epsilon >0\), as \(n \rightarrow \infty \)

Proof

We begin by bounding the total number of traps in small branches. Recall from Definition 5 that \(l_{n,\varepsilon }\le a_{n^{1-\varepsilon }}\). Let \(c \in (0,2-\alpha )\) then, by Markov’s inequality and the truncated first moment asymptotic:

as \(x\rightarrow \infty \) for some constant C (see for example [12], IX.8), for n large

where \(L_t(n)\) varies slowly at \(\infty \). This converges to 0 as \(n \rightarrow \infty \). We can order the traps in small branches and write \(T^{(l,k)}\) to be the duration of the \(k{\text {th}}\) excursion in the \(l{\text {th}}\) trap not in a large branch where we consider an excursion to start and end at the backbone. Using \(A_3\) and \(A_5\) to bound the time taken by Y to reach level nt and the number of entrances into traps up to level nt we have that for n suitably large

Using Markov’s inequality on the final term yields

for some \(L_{t,\epsilon }\) varying slowly at \(\infty \). This converges to 0 as \(n \rightarrow \infty \) hence the result holds. \(\square \)

Recall that \(r_n\) denotes \(a_n\) in IVFE, \(n^{1/\gamma }\) in FVIE and \(a_n^{1/\gamma }\) in IVIE. Since \(\Delta _{\lfloor nt \rfloor }-\chi _{t,n}\) is non-negative and non-decreasing in t, we have that \(\sup _{0\le t\le T}|\Delta _{\lfloor nt \rfloor }-\chi _{t,n}|=|\Delta _{\lfloor nT \rfloor }-\chi _{T,n}|\); therefore Corollary 5.5 follows from Propositions 5.2, 5.3 and 5.4.

Corollary 5.5

In each of IVFE, FVIE and IVIE, for any \(T>0\)

converges in \(\mathbb {P}\)-probability to 0.

Let \(\Lambda \) be the set of strictly increasing continuous functions mapping [0, T] onto itself and let I be the identity map on [0, T]; we consider the Skorohod \(J_1\) metric

Write \(\chi ^i_n\) to be the total time spent in large traps of the \(i{\text {th}}\) large branch; that is

where \(\rho _i^+\) is the element of \(\mathcal {D}^{(n)}\) which is \(i{\text {th}}\) closest to \(\rho \). Notice that, whereas \(\chi _{t,n}\) only accumulates time up to reaching \(\rho _{\lfloor nt\rfloor }\), each \(\chi _n^{i}\) may have contributions at arbitrarily large times. Recall that \(A_2^{(0)}(n,t)\) is the event that the walk never backtracks distance \(\overline{C}\log (n)\) along the backbone from a backbone vertex up to level \(\lfloor nt\rfloor \). On \(A_2^{(0)}(n,T)\) we therefore have that for all \(t\le T\)

where, on \(\mathcal {D}(n,t)\), the \(J_1\) distance between the two sums in the above expression can be bounded above by \(\overline{C}\log (n)\). In particular, since \(A_2^{(0)}(n,T)\) and \(\mathcal {D}(n,t)\) occur with high probability, together with the tightness result proved in Sect. 9 it suffices, in order to prove Theorems 1, 2 and 3, to consider the time spent in large traps up to level \(\lfloor nt\rfloor \) under the appropriate scaling.

Let \((X_n^{(i)})_{i\ge 1}\) be independent walks on \(\mathcal {T}\) with the law of \(X_n\) and \((Y_n^{(i)})_{i\ge 1}\) the corresponding backbone walks. For \(i\ge 1\) let \(\tilde{\chi }_n^i\) be the time spent by \(X_n^{(i)}\) in the large traps of the \(i{\text {th}}\) large branch and

The random variables \(\tilde{\chi }_n^i\) are independent copies (under \(\mathbb {P}\)) of times spent in large branches; moreover, on \(\mathcal {D}(n,t)\), \(\rho \notin \mathcal {D}^{(n)}\), so they are identically distributed. We also write \(\mathbb {E}\) for expectation on the enlarged probability space.

Lemma 5.6

In each of IVFE, FVIE and IVIE,

1. as \(n \rightarrow \infty \)

$$\begin{aligned} d_{J_1}\left( \left( \sum _{i=1}^{\left| \mathcal {D}_{\lfloor nt\rfloor }^{(n)}\right| }\frac{\chi ^i_n}{r_n}\right) _{t \in [0,T]},\left( \sum _{i=1}^{\lfloor tnq_n \rfloor }\frac{\chi ^i_n}{r_n}\right) _{t \in [0,T]}\right) \end{aligned}$$
converges to 0 in probability;

2. for any bounded \(H:D([0,T],\mathbb {R})\rightarrow \mathbb {R}\) continuous with respect to the Skorohod \(J_1\) topology, as \(n \rightarrow \infty \)

$$\begin{aligned} \left| \mathbb {E}\left[ H\left( \left( \sum _{i=1}^{\lfloor tnq_n \rfloor }\frac{\chi ^i_n}{r_n}\right) _{t \in [0,T]}\right) \right] -\mathbb {E}\left[ H\left( \left( \sum _{i=1}^{\lfloor tnq_n \rfloor }\frac{\tilde{\chi }^i_n}{r_n}\right) _{t \in [0,T]}\right) \right] \right| \rightarrow 0. \end{aligned}$$

Proof

By definition of \(d_{J_1}\), the distance in statement 1 is equal to

For \(m \in \mathbb {N}\) let \(\lambda _n(m/n):=|\mathcal {D}_{m}^{(n)}|(nq_n)^{-1}\) then define \(\lambda _n(t)\) by the usual linear interpolation. It follows that \(|\mathcal {D}_{\lfloor nt\rfloor }^{(n)}|=\lfloor \lambda _n(t)nq_n \rfloor \) and the above expression can be bounded above by

which converges to 0 by Lemma 4.1 since \(n^{2\varepsilon /3}(nq_n)^{-1} \rightarrow 0\).

For \(i\ge 1\) let

be the analogue of \(A^{(0)}_2(n,t)\) for the \(i{\text {th}}\) copy and

be the event that \(\rho \) is not the root of a large branch, that on each of the first \(\lceil ntq_n\rceil \) copies the walk never backtracks distance \(\overline{C}\log (n)\), and that large branches are at distance at least \(n^\kappa \) apart.

therefore

which converges to 0 as \(n \rightarrow \infty \) for \(\overline{C}\) large by the same argument as (4.3) together with the fact that \(\mathbf {P}(\mathcal {D}(n,T)^c)\rightarrow 0\). \(\square \)

Using Corollary 5.5 and Lemma 5.6, in order to show the convergence of \(\Delta _{\lfloor nt\rfloor }/r_n\), it suffices to show the convergence of the scaled sum of independent random variables \(\tilde{\chi }_{t,n}/r_n\).

6 Excursion times in dense branches

In this section we only consider IVFE. The main tool will be Theorem 6, which is Theorem 10.2 in [4], and is itself a consequence of Theorem IV.6 in [23].

Theorem 6

Let \(n(t):[0,\infty )\rightarrow \mathbb {N}\) and for each t let \(\{R_k(t)\}_{k=1}^{n(t)}\) be a sequence of i.i.d. random variables. Assume that for every \(\epsilon >0\) it is true that

Now let \(\mathcal {L}(x):\mathbb {R}{\setminus }\{0\}\rightarrow \mathbb {R}\) be a real, non-decreasing function satisfying \(\lim _{x\rightarrow \infty }\mathcal {L}(x)=0\) and \(\int _0^ax^2\mathrm {d}\mathcal {L}(x)<\infty \) for all \(a>0\). Suppose \(d \in \mathbb {R}\) and \(\sigma \ge 0\), then the following statements are equivalent:

1. As \(t\rightarrow \infty \)

$$\begin{aligned} \sum _{k=1}^{n(t)}R_k(t) \mathop {\rightarrow }\limits ^{{\mathrm{d}}}R_{d,\sigma ,\mathcal {L}} \end{aligned}$$
where \(R_{d,\sigma ,\mathcal {L}}\) has the law \(\mathcal {I}(d,\sigma ,\mathcal {L})\), that is,

$$\begin{aligned} \mathbb {E}\left[ e^{itR_{d,\sigma ,\mathcal {L}}}\right] :=\exp \left( idt-\frac{\sigma ^2t^2}{2}+\int _0^\infty \left( e^{itx}-1-\frac{itx}{1+x^2}\right) \mathrm {d}\mathcal {L}(x)\right) . \end{aligned}\tag{6.1}$$

2. For \(\tau >0\) let \(\overline{R}_\tau (t):=R_1(t)\mathbf {1}_{\{|R_1(t)| \le \tau \}}\); then for every continuity point x of \(\mathcal {L}\)

$$\begin{aligned} d&= \lim _{t\rightarrow \infty }n(t) \mathbb {E}[\overline{R}_\tau (t)]+\int _{|x|>\tau } \frac{x}{1+x^2}\mathrm {d}\mathcal {L}(x)-\int _{0<|x|\le \tau }\frac{x^3}{1+x^2}\mathrm {d}\mathcal {L}(x), \\ \sigma ^2&= \lim _{\tau \rightarrow 0}\limsup _{t\rightarrow \infty }n(t)Var(\overline{R}_\tau (t)), \\ \mathcal {L}(x)&= {\left\{ \begin{array}{ll} \lim _{t\rightarrow \infty }n(t)\mathbb {P}(R_1(t)\le x) &{} x<0 \\ -\lim _{t\rightarrow \infty }n(t)\mathbb {P}(R_1(t)>x) &{} x>0 \end{array}\right. } \end{aligned}$$

In our case, n(t) will be the number of large branches up to level \(\lfloor nt \rfloor \) and \(\{R_k\}_{k=1}^{n(t)}\) independent copies of the time spent in a large branch.

Since we are now working with i.i.d. random variables, we simplify notation by considering the dummy branch \(\mathcal {T}^{*-}\) defined in Definition 4, which has root \(\rho \) and first generation vertices \(\rho _1,\ldots ,\rho _{\xi ^*-1}\) which are roots of f-GW-trees \((\mathcal {T}_j^\circ )_{j=1}^{\xi ^*-1}\) (Fig. 3). We then let \((W^j)_{j=1}^{\xi ^*-1}\) have the negative multinomial distribution determined in Lemma 5.1; that is, \(W^j\) represents the number of excursions into the \(j{\text {th}}\) trap of \(\mathcal {T}^{*-}\). For the biased random walk \(X_n\) on \(\mathcal {T}^{*-}\) started from \(\rho \), let \(T^{j,k}\) denote the duration of the \(k{\text {th}}\) excursion in the \(j{\text {th}}\) trap, where we recall that in IVFE the excursion starts and ends at the root \(\rho \). We then have that

is equal in distribution under \(\mathbb {P}(\cdot |\xi ^*>l_{n,\varepsilon })\) to \(\tilde{\chi }^i_n\) under \(\mathbb {P}\) for any i.

For \(K \ge l_{n,\varepsilon }-l_{n,0}\) write \(\overline{L}_{\scriptscriptstyle {K}}:=l_{n,0}+K\) then denote \(\mathbb {P}^{\scriptscriptstyle {K}}(\cdot ):=\mathbb {P}\left( \cdot |\xi ^*-1=\overline{L}_{\scriptscriptstyle {K}}\right) \) and \(\mathbf {P}^{\scriptscriptstyle {K}}(\cdot ) :=\mathbf {P}\left( \cdot |\xi ^*-1=\overline{L}_{\scriptscriptstyle {K}}\right) \). We now proceed to show that under \(\mathbb {P}^{\scriptscriptstyle {K}}\)

converges in distribution to some random variable \(Z_\infty \) whose distribution doesn’t depend on K.

We start by showing that the excursion times \(T^{j,k}\) do not differ greatly from \( E ^{\mathcal {T}^{*-}}[T^{j,k}]\). To do this we require moment bounds on \(T^{j,k}\); however, since \(\mathbf {E}[\xi ^2]=\infty \), the excursion times do not have finite variance, so a more subtle treatment is needed. Recall that for a tree T we denote by \(Z_n^T\) the size of the \(n{\text {th}}\) generation. Excursion times are first return times \(\tau _\rho ^+\) conditioned on the first step; therefore, pruning buds and using (5.1), the expected excursion time in a trap \(\mathcal {T}_j^\circ \) is

Using that \(\mathbf {P}(Z_n^{\mathcal {T}^\circ }>0)\sim c_\mu \mu ^n\) (from (3.1)), we see that, for some constant C and n large, with high probability there are no traps of height greater than \(C\log (n)\); thus for our purposes it suffices to study \(\sup _nZ_n^{\mathcal {T}^\circ }\beta ^n\).

Lemma 6.1

Let \(Z_n\) be a subcritical Galton–Watson process with mean \(\mu \) and offspring \(\xi \) satisfying \(\mathbf {E}[\xi ^{1+\tilde{\varepsilon }}]<\infty \) for some \(\tilde{\varepsilon }>0\). Suppose \(1<\beta <\mu ^{-1}\), then there exists \(\kappa >0\) such that for all \(\epsilon \in (0,\kappa )\) we have that \((Z_n\beta ^n)^{1+\epsilon }\) is a supermartingale.

Proof

Let \(\mathcal {F}_n:=\sigma (Z_k; \; k \le n)\) denote the natural filtration of \(Z_n\) and \((\xi _k)_{k\ge 1}\) be independent copies of \(\xi \).

where the inequality follows by convexity of \(f(x)=x^{1+\epsilon }\). From this it follows that for \(\epsilon \in (0,\alpha -1)\)

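Fix \(\lambda =(\mu /\beta )^{1/2}\). Then \(\mu <\lambda \) (this is equivalent to \(\beta \mu <1\)) and, since \(\lambda \beta =(\beta \mu )^{1/2}<1\), we have \(\lambda \beta ^{1+\epsilon }<1\) for \(\epsilon >0\) sufficiently small. By dominated convergence \(\mathbf {E}[\xi ^{1+\epsilon }]\rightarrow \mu <\lambda \) as \(\epsilon \rightarrow 0\), so \(\mathbf {E}[\xi ^{1+\epsilon }]<\lambda \) for all \(\epsilon \) small. In particular, \(\beta ^{1+\epsilon }\mathbf {E}[\xi ^{1+\epsilon }]<\lambda \beta ^{1+\epsilon }<1\) for \(\epsilon \) suitably small and therefore \((Z_n\beta ^n)^{1+\epsilon }\) is a supermartingale. \(\square \)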
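The convexity bound in the proof reduces the supermartingale property to \(\beta ^{1+\epsilon }\mathbf {E}[\xi ^{1+\epsilon }]<1\). For a concrete, hypothetical, finitely supported subcritical offspring law, the sketch below finds by bisection the largest \(\epsilon \) for which this factor stays below 1 (an admissible \(\kappa \) in Lemma 6.1) and checks the two inequalities satisfied by \(\lambda =(\mu /\beta )^{1/2}\).

```python
import math


def supermartingale_factor(eps, beta, law):
    """beta^(1+eps) * E[xi^(1+eps)] for a finitely supported law {k: P(xi = k)}."""
    return beta ** (1 + eps) * sum(pk * k ** (1 + eps) for k, pk in law.items())


def largest_admissible_eps(beta, law, hi=5.0, tol=1e-10):
    """Bisection for the eps where the factor crosses 1 (the factor increases in eps here)."""
    lo = 0.0
    if supermartingale_factor(hi, beta, law) < 1:
        return hi
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if supermartingale_factor(mid, beta, law) < 1:
            lo = mid
        else:
            hi = mid
    return lo


law = {0: 0.5, 1: 0.3, 2: 0.2}  # hypothetical subcritical offspring law, mean 0.7
mu = sum(k * pk for k, pk in law.items())
beta = 1.2                       # satisfies 1 < beta < 1/mu
kappa = largest_admissible_eps(beta, law)
lam = math.sqrt(mu / beta)       # lambda from the proof
print(mu, kappa)                 # factor < 1 for every eps in (0, kappa)
print(mu < lam, lam * beta < 1)  # both hold because beta * mu < 1
```

At \(\epsilon =0\) the factor is exactly \(\beta \mu \), which is why the condition \(\beta <\mu ^{-1}\) is what makes some \(\kappa >0\) exist.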

Lemma 6.2

In IVFE, we can choose \(\varepsilon >0\) such that for any \(t>0\) there exists a constant \(C_t\) such that

Proof

Write \(E_m:=\bigcap _{j=1}^m \left\{ \mathcal {H}(\mathcal {T}_j^\circ ) \le C\log (m)\right\} \) to be the event that none of the first m trees have height greater than \(C\log (m)\). From (3.1) \(\mathbf {P}(\mathcal {H}(\mathcal {T}_j^\circ )\ge m)\sim c_\mu \mu ^m\) hence we can choose \(\tilde{C}>c_\mu \) such that

Thus choosing \(C>1/\log (\mu ^{-1})\) and \(c=C\log (\mu ^{-1})-1>0\) we have that \(\mathbf {P}(E^c_m)\le \tilde{C}m^{-c}\) for m sufficiently large. By Lemma 6.1 we have that \((Z_k\beta ^k)^{1+\epsilon }\) is a supermartingale for \(\epsilon >0\) sufficiently small where \(Z_n\) is the process associated to \(\mathcal {T}^\circ \) therefore by Doob’s supermartingale inequality

Using the expression (6.4) for the expected excursion time it follows that

In particular, for some slowly varying function \(\overline{L}\)

Let \(\kappa =\epsilon /(2(1+\epsilon ))\) then write \(\overline{E}_m:=E_m \cap \bigcap _{j=1}^m\{ E ^{\mathcal {T}_j^\circ }[T^{j,1}]\le m^{1-\kappa }\}\) to be the event that no trap is of height greater than \(C\log (m)\) and the expected excursion time in any trap is at most \(m^{1-\kappa }\). For m sufficiently large by (6.5) we have that

Write \(\overline{\overline{E}}_m:=\overline{E}_m\cap \bigcap _{j=1}^m\{W^j\le C'\log (m)\}\) for \(C'>(2\beta -1)/(\beta -1)\) to be the event that no trap is of height greater than \(C\log (m)\), entered more than \(C'\log (m)\) times or has expected excursion time greater than \(m^{1-\kappa }\). Then, by a union bound and the geometric distribution of \(W^j\) from Lemma 5.1

for m large. Since \((1\,-\,\kappa )(1\,+\,\epsilon )>1\) we can choose \(\varepsilon <\frac{1}{2}\min \left\{ (1-\kappa )(1+\epsilon )-1, \; c, C'\frac{\beta -1}{2\beta -1}-1 \right\} \) then we have that \(\mathbb {P}\left( \overline{\overline{E}}_m^c\right) \le \tilde{C}m^{-2\varepsilon }\) and

for some slowly varying function \(\overline{L}\). Here the first inequality follows from Chebyshev's inequality and the second from (6.6). Since \(\epsilon >0\), we can choose \(\varepsilon \in (0,\epsilon /2)\); then

In particular, this holds for \(m=\overline{L}_{\scriptscriptstyle {K}}\ge a_{n^{1-\varepsilon }}\) thus since \(\alpha <2\)

which is bounded above by \(C_tn^{-2\varepsilon }\) for n large whenever \(\varepsilon <(2-\alpha )/(\alpha -1)\). \(\square \)
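For reference, the three elementary estimates that drive the choice of C, \(C'\), \(\kappa \) and \(\varepsilon \) in the proof above are, for m large (the first uses \(\tilde{C}>c_\mu \) and (3.1), the second uses \(\log (1-p)\le -p\) with \(p=(\beta -1)/(2\beta -1)\)):

$$\begin{aligned} \mathbf {P}(E_m^c)&\le m\,\tilde{C}\mu ^{C\log (m)}=\tilde{C}\,m^{1-C\log (\mu ^{-1})}=\tilde{C}\,m^{-c},\\ \mathbf {P}\Big (\bigcup _{j=1}^m\{W^j> C'\log (m)\}\Big )&\le m\,(1-p)^{C'\log (m)}=m^{1+C'\log (1-p)}\le m^{-(C'p-1)},\\ (1-\kappa )(1+\epsilon )&=1+\epsilon -\frac{\epsilon }{2}=1+\frac{\epsilon }{2}>1\qquad \text {for } \kappa =\frac{\epsilon }{2(1+\epsilon )}, \end{aligned}$$

so \(c>0\) exactly when \(C>1/\log (\mu ^{-1})\), and \(C'p-1>0\) exactly when \(C'>(2\beta -1)/(\beta -1)\), matching the choices made above.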

Using this we can now show that the average time spent in a trap indeed converges to its expectation.

Lemma 6.3

In IVFE, we can find \(\varepsilon >0\) such that for sufficiently large n we have that

uniformly over \(t\ge 0\) where \(r(n)=o(1)\).

Proof

We continue using the notation defined in Lemma 6.2 and also define the event

that the \(j{\text {th}}\) trap is not tall, is not entered many times and does not have a large expected excursion time.

Since \(\mathbb {E}[ E ^{\mathcal {T}_j^\circ }[T^{j,1}\mathbf {1}_{E_m^j}]]=\mathbb {E}[T^{j,1}\mathbf {1}_{E_m^j}] \ne \mathbb {E}[\mathbb {E}[T^{1,1}]\mathbf {1}_{E_m^j}]\), the summand on the right-hand side does not have zero mean, so we split it as follows:

By Chebyshev's inequality and the truncated second-moment bound \(\mathbb {E}[ E ^{\mathcal {T}_j^\circ }[T^{j,1}]^2\mathbf {1}_{\{E_m^j\}}]\le Cm^{1-\epsilon }L(m)\) from (6.6), the first term is bounded above by

for some slowly varying function \(\overline{L}\). The second term is equal to

by (6.7). The final term can be written as

which converges to 0 as \(m \rightarrow \infty \) by dominated convergence since, by (5.2), \(\mathbb {E}[T^{1,1}]<\infty \). We therefore have that the statement holds by setting \(m=\overline{L}_{\scriptscriptstyle {K}}\). \(\square \)

Recall from (6.3) that, under \(\mathbb {P}^{\scriptscriptstyle {K}}\), \(\zeta ^{(n)}\) is the average time spent in a trap of a branch with \(\xi ^*-1=\overline{L}_{\scriptscriptstyle {K}}\) buds. From Lemmas 6.2 and 6.3 we have that as \(n\rightarrow \infty \)

Using (5.1) we have that \(\mathbb {E}[T^{1,1}]=2/(1-\beta \mu )\). Write \(\theta =(\beta -1)(1-\beta \mu )/(2\beta )\) and let \(Z^\infty \sim \exp (\theta )\).
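The value \(\mathbb {E}[T^{1,1}]=2/(1-\beta \mu )\) can be traced through a short computation. Assuming the usual biased walk inside a trap (from a vertex with d children, step to the parent with probability \(1/(1+\beta d)\) and to each child with probability \(\beta /(1+\beta d)\)), first-step analysis gives the expected time g(x) to return from x to its parent as \(g(x)=1+\beta d_x+\beta \sum _{c}g(c)\). The sketch below checks, in exact rational arithmetic on small hypothetical trees, that this recursion reproduces the generation-size formula \(E _\rho [\tau _\rho ^+]=2\sum _{n\ge 1}Z_n\beta ^{n-1}/Z_1\), which we assume is the classical identity (5.1); taking expectations over an f-GW tree then gives \(\mathbb {E}[g]=(1+\beta \mu )/(1-\beta \mu )\), and so \(\mathbb {E}[T^{1,1}]=1+\mathbb {E}[g]=2/(1-\beta \mu )\).

```python
from fractions import Fraction


def time_to_parent(children, x, beta):
    """Expected time for the biased walk started at x to hit the parent of x.

    From x with d children: parent w.p. 1/(1 + beta d), each child w.p. beta/(1 + beta d).
    First-step analysis gives g(x) = 1 + beta d + beta * sum_c g(c); leaves have g = 1.
    """
    kids = children.get(x, [])
    return 1 + beta * len(kids) + beta * sum(time_to_parent(children, c, beta) for c in kids)


def return_time_recursive(children, root, beta):
    """E_root[tau_root^+] when the root steps uniformly to one of its children."""
    kids = children[root]
    return 1 + sum(time_to_parent(children, c, beta) for c in kids) / len(kids)


def return_time_generations(children, root, beta):
    """2 * sum_{n >= 1} Z_n beta^(n - 1) / Z_1 (edge from level n-1 to n has conductance beta^(n-1))."""
    total, level, n = 0, list(children[root]), 1
    while level:
        total += len(level) * beta ** (n - 1)
        level = [c for v in level for c in children.get(v, [])]
        n += 1
    return 2 * total / len(children[root])


def mean_trap_excursion(beta, mu):
    """1 + E[g(bud)] with E[g] = (1 + beta mu) / (1 - beta mu); requires beta * mu < 1."""
    return 1 + (1 + beta * mu) / (1 - beta * mu)


tree = {0: [1, 2], 1: [3, 4], 3: [5]}  # hypothetical small tree rooted at 0
b = Fraction(3, 2)
print(return_time_recursive(tree, 0, b), return_time_generations(tree, 0, b))  # 29/4 29/4
print(mean_trap_excursion(2.0, 0.3), 2 / (1 - 2.0 * 0.3))  # both equal 5 up to rounding
```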

Corollary 6.4

In IVFE, we can find \(\varepsilon >0\) such that for sufficiently large n we have that

uniformly over \(t\ge 0\) where \(\tilde{r}(n)=o(1)\).

Proof

By Lemma 5.1 the sum of the \(W^j\) has a geometric law. In particular,

for some constant C independent of K. It therefore follows that the laws of \(\zeta ^{(n)}\) converge under \(\mathbb {P}^{\scriptscriptstyle {K}}\) to an exponential law. In particular, using Lemmas 6.2 and 6.3 with the bound

with \(\epsilon =r(n)^{1/2}t\), we have the result since \(\overline{L}_{\scriptscriptstyle {K}}\ge l_{n,\varepsilon } \gg n^\varepsilon \). \(\square \)
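The exponential limit in Corollary 6.4 can be seen directly from the geometric law of \(\sum _jW^j\). The sketch below isolates that step only: it assumes the excursion times have already been replaced by their mean \(\mathbb {E}[T^{1,1}]=2/(1-\beta \mu )\) (the replacement that Lemmas 6.2 and 6.3 justify) and computes the exact tail of \(\mathbb {E}[T^{1,1}]\sum _jW^j/k\) for a branch with k buds, comparing it with \(e^{-\theta x}\) for \(\theta =(\beta -1)(1-\beta \mu )/(2\beta )\). The parameter values are illustrative.

```python
import math


def theta(beta, mu):
    """Rate of the limiting exponential law: (beta - 1)(1 - beta mu) / (2 beta)."""
    return (beta - 1) * (1 - beta * mu) / (2 * beta)


def scaled_tail(x, k, beta, mu):
    """Exact P(W * E[T] / k > x) for W ~ Geo(s), s = (beta - 1)/((k + 1) beta - 1).

    Geo(s) counts failures, so P(W >= w) = (1 - s)^w; E[T] = 2 / (1 - beta mu).
    """
    s = (beta - 1) / ((k + 1) * beta - 1)
    mean_t = 2 / (1 - beta * mu)
    w = math.floor(k * x / mean_t) + 1  # smallest integer w with w * E[T] / k > x
    return (1 - s) ** w


def gap(k, beta=2.0, mu=0.3, xs=(0.5, 1.0, 2.0, 5.0)):
    """Largest distance between the exact tail and exp(-theta x) over a few points."""
    return max(abs(scaled_tail(x, k, beta, mu) - math.exp(-theta(beta, mu) * x)) for x in xs)


for k in (10, 1000, 10**6):
    print(k, gap(k))  # shrinks as the number of buds k grows
```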

Corollary 6.5

In IVFE, for any \(\tau >0\) fixed

Lemma 6.6 shows that the product of an exponential random variable with an independent heavy-tailed random variable has, up to a constant factor, the same tail as the heavy-tailed variable.

Lemma 6.6

Let \(X \sim exp(\theta )\) and \(\xi \) be an independent variable which belongs to the domain of attraction of a stable law of index \(\alpha \in (0,2)\). Then \(\mathbf {P}(X\xi>x) \sim \theta ^{-\alpha }\Gamma (\alpha +1)\mathbf {P}(\xi >x)\) as \(x \rightarrow \infty \).

Proof

For some slowly varying function L we have that \(\mathbf {P}(\xi \ge x) \sim x^{-\alpha }L(x)\) as \(x \rightarrow \infty \).

Fix \(0<u<1<v<\infty \). For all \(y \le u\) we have \(x/y>x\), so \(\mathbf {P}(\xi \ge x/y)\le \mathbf {P}(\xi \ge x)\); it therefore follows that

For \(y \in [u,v]\) we have that \(\mathbf {P}(\xi \ge x/y)/\mathbf {P}(\xi \ge x)\rightarrow y^\alpha \) uniformly over y (by the uniform convergence theorem for regularly varying functions); therefore

Moreover, since this holds for all \(u>0\) and \(1-e^{-\theta u} \rightarrow 0\) as \(u \rightarrow 0\), we have that

Since \(0<\mathbf {P}(\xi \ge x)\le 1\) for all \(x<\infty \), L is bounded away from \(0,\infty \) on any compact interval and thus satisfies the requirements of Potter's theorem (see for example [6], 1.5.4): if L is slowly varying and bounded away from \(0,\infty \) on any compact subset of \([0,\infty )\), then for any \(\epsilon >0\) there exists \(A_\epsilon >1\) such that for \(x,y>0\)

Moreover, there exist \(c_1,c_2>0\) such that \(c_1t^{-\alpha }L(t) \le \mathbf {P}(\xi \ge t) \le c_2t^{-\alpha }L(t)\); hence for all \(y >v\) we have \(\mathbf {P}(\xi \ge x/y)/\mathbf {P}(\xi \ge x) \le Cy^{\alpha +\epsilon }\). By dominated convergence we therefore have that

Combining this with (6.8) we have that

\(\square \)
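Lemma 6.6 can be checked numerically in a case where everything is explicit. Taking, as a hypothetical example, \(\xi \) Pareto with \(\mathbf {P}(\xi >y)=y^{-\alpha }\) for \(y\ge 1\), conditioning on X gives, for \(x\ge 1\), \(\mathbf {P}(X\xi >x)/\mathbf {P}(\xi >x)=x^\alpha e^{-\theta x}+\int _0^x y^\alpha \theta e^{-\theta y}\,\mathrm {d}y\), which should approach \(\theta ^{-\alpha }\Gamma (\alpha +1)\). The sketch evaluates the integral with a midpoint rule.

```python
import math


def tail_ratio(x, alpha, theta, n=100000):
    """P(X xi > x) / P(xi > x) for X ~ Exp(theta) and Pareto xi, P(xi > y) = y^(-alpha) (y >= 1).

    For x >= 1, conditioning on X gives
    P(X xi > x) = e^(-theta x) + x^(-alpha) * int_0^x y^alpha theta e^(-theta y) dy;
    the integral is approximated with the midpoint rule on n cells.
    """
    h = x / n
    integral = h * sum(
        ((i + 0.5) * h) ** alpha * theta * math.exp(-theta * (i + 0.5) * h) for i in range(n)
    )
    return x**alpha * math.exp(-theta * x) + integral


alpha, theta = 1.5, 2.0
limit = theta ** (-alpha) * math.gamma(alpha + 1)  # the constant in Lemma 6.6
for x in (2.0, 10.0, 50.0):
    print(x, tail_ratio(x, alpha, theta), limit)
```

The limiting constant is just \(\mathbf {E}[X^\alpha ]\) for \(X\sim \exp (\theta )\), which is the heuristic behind the lemma: for large x the event \(\{X\xi >x\}\) is driven by \(\xi \) being large.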

We write \(\mathbb {P}^>(\cdot ):=\mathbb {P}(\cdot | \xi ^*>l_{n,\varepsilon })\) and \(\mathbf {P}^>(\cdot ):=\mathbf {P}(\cdot | \xi ^*>l_{n,\varepsilon })\) for the laws conditioned on the branch \(\mathcal {T}^{*-}\) being large. From (6.2) we have that (under \(\mathbb {P}\)) \(\tilde{\chi }^i_n\) are independent copies of the time spent in a branch \(\tilde{\chi }_n\) with respect to \(\mathbb {P}^>\). Define \(\tilde{\chi }^\infty _n:= (\xi ^*-1)Z^\infty \), where \(Z^\infty \) is the exponential random variable used in Corollaries 6.4 and 6.5, taken independent of \(\xi ^*\). Recall that \(R_{d,\sigma ,\mathcal {L}}\) has the infinitely divisible law given by (6.1). Fix a sequence \((\lambda _n)_{n\ge 1}\) converging to some \(\lambda >0\) and denote \(M_n^\lambda :=\lfloor \lambda _nn^\varepsilon \rfloor \).

Proposition 6.7

In IVFE, for any \(\lambda >0\), as \(n \rightarrow \infty \)

where

Proof

Let \(\epsilon >0\). Then by Markov's inequality

which converges to 0 as \(n \rightarrow \infty \). Thus, by Theorem 6, it suffices to show that

1. $$\begin{aligned} \lim _{\tau \rightarrow 0^+}\limsup _{n \rightarrow \infty } M_n^\lambda Var_{\mathbb {P}^>}\left( \frac{\tilde{\chi }_n}{a_n} \mathbf {1}_{\{\frac{\tilde{\chi }_n}{a_n} \le \tau \}}\right) =0, \end{aligned}$$

2. $$\begin{aligned} \mathcal {L}_\lambda (x)={\left\{ \begin{array}{ll} \lim _{n \rightarrow \infty } M_n^\lambda \mathbb {P}^>\left( \frac{\tilde{\chi }_n}{a_n} \le x\right) &{} x<0, \\ -\lim _{n \rightarrow \infty } M_n^\lambda \mathbb {P}^>\left( \frac{\tilde{\chi }_n}{a_n}>x\right) &{} x>0, \\ \end{array}\right. } \end{aligned}$$

3. $$\begin{aligned} d_\lambda= & {} \lim _{n \rightarrow \infty } M_n^\lambda \mathbb {E}^>\left[ \frac{\tilde{\chi }_n}{a_n} \mathbf {1}_{\{\frac{\tilde{\chi }_n}{a_n} \le \tau \}}\right] +\int _{|x|>\tau }\frac{x}{1+x^2} d\mathcal {L}_\lambda (x)\\&-\int _{0<|x|\le \tau }\frac{x^3}{1+x^2}d\mathcal {L}_\lambda (x) \end{aligned}$$

where \(d_\lambda \) and \(\mathcal {L}_\lambda \) are as stated above.

We start with the first condition. Since \(\lambda _n \rightarrow \lambda \), there exists a constant C such that

By the definition of \(a_n\) we have that

Conditional on the number of buds \(\xi ^*\), the numbers of excursions \(W^j\) into the \(j{\text {th}}\) trap are independent of the excursion times \(T^{j,k}\), and both the numbers of excursions and the excursion times have finite mean; hence

where the asymptotic holds as \(n \rightarrow \infty \) by (5.4). In particular, combining this with (6.10) in (6.9), we have that \(M_n^\lambda Var_{\mathbb {P}^>}(\frac{\tilde{\chi }_n}{a_n} \mathbf {1}_{\{\frac{\tilde{\chi }_n}{a_n} \le \tau \}}) \le C(\tau ^2+\tau )\) for some constant C depending on \(\lambda \); hence this bound converges to 0 as \(\tau \rightarrow 0^+\) and the first condition holds.

We now move on to the Lévy spectral function \(\mathcal {L}_\lambda \). Clearly for \(x<0\) we have that \(\mathcal {L}_\lambda (x)=0\) since \(\tilde{\chi }_n\) is a positive random variable. It therefore suffices to consider \(x>0\). By Corollary 6.4 we have that the scaled time spent in a large trap \(\zeta ^{(n)}\) [from (6.3)] converges in distribution to an exponential random variable \(Z^\infty \) with parameter \(\theta \) (which is independent of K) therefore, since \(M_n^\lambda \sim \lambda n^\varepsilon \) and \(\tilde{\chi }^\infty _n=(\xi ^*-1)Z^\infty \) we have that

where the final asymptotic holds by Lemma 6.6 and because

which converges to 0 as \(n \rightarrow \infty \) since \(l_{n,\varepsilon }=a_{\lfloor n^{1-\varepsilon }\rfloor }\) and \(a_n\) is regularly varying with index \(1/(\alpha -1)>1\), so that \(a_n/l_{n,\varepsilon }\gg n^\varepsilon \). It now suffices to show that \(n^\varepsilon \left( \mathbb {P}^>\left( \frac{\tilde{\chi }^\infty _n}{a_n}>x\right) -\mathbb {P}^>\left( \frac{\tilde{\chi }_n}{a_n}>x\right) \right) \) converges to 0 as \(n \rightarrow \infty \). To do this, we condition on the number of buds:

We consider positive and negative K separately. For \(K \ge 0\) we have that

By (6.10), \(n^\varepsilon \mathbf {P}^>(\xi ^*-1\ge a_n)\) converges as \(n \rightarrow \infty \); hence, using Corollary 6.4, (6.11) converges to 0. For \(K\le 0\), by Corollary 6.4 we have that

For some constant C we have \(\mathbf {P}(\xi ^*-1\ge l_{n,\varepsilon })\sim Cn^{-(1-\varepsilon )}\); thus, by (5.4),

In particular, since \(\tilde{r}(n)=o(1)\), we indeed have that this converges to zero and thus we have the required convergence for \(\mathcal {L}_\lambda \).
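The passage from \(\tilde{\chi }_n\) to \(\tilde{\chi }^\infty _n=(\xi ^*-1)Z^\infty \) multiplies a heavy-tailed count by an independent exponential variable. A standard fact in this direction is Breiman's lemma: if X has a regularly varying tail of index \(\kappa \) and Z is independent with a finite moment of order slightly above \(\kappa \), then \(\mathbf {P}(XZ>x)\sim \mathbf {E}[Z^\kappa ]\mathbf {P}(X>x)\). The Python sketch below checks this numerically for a Pareto stand-in for \(\xi ^*-1\) with \(\kappa =\alpha -1\) and Z exponential with parameter \(\theta \), for which \(\mathbf {E}[Z^\kappa ]=\Gamma (\kappa +1)\theta ^{-\kappa }\). The Pareto law, the function names and the parameter values are illustrative assumptions, and we do not claim that this is how Lemma 6.6 is proved.

```python
import math


def tail_ratio(x, kappa, theta, steps=100_000):
    """Return x**kappa * P(X * Z > x), where X is Pareto with P(X > t) = t**(-kappa)
    for t >= 1 (a hypothetical stand-in for xi^* - 1) and Z ~ Exp(theta) is independent.

    Conditioning on Z = z gives P(X > x / z) = min(1, (z / x)**kappa), hence
    x**kappa * P(XZ > x) = x**kappa * exp(-theta x) + theta * int_0^x z**kappa exp(-theta z) dz.
    """
    upper = min(x, 60.0 / theta)  # the integrand is negligible beyond z = 60 / theta
    h = upper / steps
    # Midpoint rule for the integral.
    integral = h * sum(
        ((i + 0.5) * h) ** kappa * math.exp(-theta * (i + 0.5) * h) for i in range(steps)
    )
    return x ** kappa * math.exp(-theta * x) + theta * integral


def breiman_constant(kappa, theta):
    """E[Z**kappa] for Z ~ Exp(theta), i.e. Gamma(kappa + 1) * theta**(-kappa)."""
    return math.gamma(kappa + 1) * theta ** (-kappa)


if __name__ == "__main__":
    kappa, theta = 0.5, 2.0  # kappa plays the role of alpha - 1 with alpha = 1.5
    for x in (1.0, 10.0, 1e4):
        print(x, tail_ratio(x, kappa, theta), breiman_constant(kappa, theta))
```

The ratio increases towards \(\mathbf {E}[Z^\kappa ]\) as x grows, so the product keeps the tail index of the heavy-tailed factor and only changes the constant. This matches the phrase "up to a constant factor" used for \((\xi ^*-1)Z^\infty \) in the drift computation.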

Finally, we consider the drift term \(d_\lambda \). Since \(\int _{0<x\le \tau }x\mathrm {d}\mathcal {L}_\lambda (x)<\infty \), we have that

We want to show that \(d_\lambda =\int _0^\infty \frac{x}{1+x^2}\mathrm {d}\mathcal {L}_\lambda (x)\), so it suffices to show that the remaining terms cancel. By the definition of \(\mathbb {P}^>\) we have that

By Lemma 6.6, \((\xi ^*-1)Z^\infty \) belongs to the domain of attraction of a stable law of index \(\alpha -1\) and satisfies the scaling properties of \(\xi ^*\) (up to a constant factor). Therefore, using that \(a_n \gg l_{n,\varepsilon }\), we have that

Using the form of the Lévy spectral function we have that

thus it remains to show that

As in the previous parts, we condition on \(\xi ^*-1=\overline{L}_{\scriptscriptstyle {K}}\) and consider the sums over positive and negative K separately. For \(K \le 0\),

By the definition of \(l_{n,\varepsilon }\) and properties of stable laws, \(n^\varepsilon \mathbb {E}\left[ (\xi ^*-1)/a_n\mathbf {1}_{\{\xi ^*\le a_n\}}\right] /\mathbf {P}(\xi ^*\ge l_{n,\varepsilon })\) converges to some constant as \(n \rightarrow \infty \). By Corollary 6.5, this expression therefore converges to 0. Similarly, for \(K\ge 0\), we have that

We have that \(n^\varepsilon \mathbf {P}(\xi ^*\ge l_{n,0})/\mathbf {P}(\xi ^*\ge l_{n,\varepsilon })\) converges to some constant as \(n \rightarrow \infty \). The result then follows by Corollary 6.5. \(\square \)

This establishes the convergence in Theorem 1 in the sense of finite-dimensional distributions. In Sect. 9 we prove a tightness result, which completes the proof.

7 Excursion times in deep branches

In this section we decompose the time spent in large branches. In FVIE this decomposition is very similar to the one used in [4], so we do not consider the argument in great detail. The decomposition required in IVIE, however, calls for greater delicacy.

In Lemmas 7.1 and 7.2 and Proposition 7.3, we use a construction from [14] of a GW-tree conditioned on its height to show that the time spent in deep traps essentially consists of a geometric number of excursions from the deepest point in the trap to itself. That is, as in [4], excursions that do not reach the deepest point are negligible, as are the time taken for the walk to reach the deepest point from the root of the trap and the time taken to return from the deepest point to the root when this happens before the walk returns to the deepest point.

In the remainder of the section, we show that, conditional on the exact height \(\overline{H}\) of the branch, the time spent in the branch scaled by \(\beta ^{\overline{H}}\) converges in distribution along the given subsequences. In Lemma 7.5 we determine an important asymptotic relation for the distribution of the number of buds conditional on the height of the branch. In Lemmas 7.6–7.9 we provide various bounds which allow us, in Proposition 7.10, to show that the excursion time in a large branch is close to the random variable \(Z_\infty ^n\) (defined in (7.23)), which removes some of the dependence on n.

The main result of the section is Proposition 7.14, which shows that the scaled time spent in a large branch converges in distribution along the given subsequences. As a prelude, we prove Lemmas 7.11–7.13, which show that we can reintroduce small traps into the branch and that the height of a trap is sufficiently close to a geometric random variable. We conclude the section by showing that the scaled excursion times are dominated by a random variable with a certain moment property, which will be important in Sect. 8.

Recall that \(\mathcal {T}^\circ \) is an f-GW-tree with height \(\mathcal {H}(\mathcal {T}^\circ )\). Following the notation of [4], let \((\phi _{n+1},\psi _{n+1})_{n \ge 0}\) be a sequence of independent pairs with joint law

for \(k = 1,2,\ldots \) and \(j=1,\ldots ,k\). Under this law, \(\psi _{n+1}\) has the law of the degree of the root of an f-GW-tree conditioned to have height \(n+1\), and \(\phi _{n+1}\) has the law of the index of the first child of the root whose subtree has height exactly n. We then construct a sequence of trees recursively as follows: set \(\mathcal {T}^\prec _0=\{\delta \}\) and, for \(n \ge 0\),

1. Let the first generation of \(\mathcal {T}^\prec _{n+1}\) be of size \(\psi _{n+1}\).

2. Attach \(\mathcal {T}^\prec _n\) to the \(\phi _{n+1}{\text {th}}\) first-generation vertex of \(\mathcal {T}^\prec _{n+1}\).

3. Attach f-GW-trees conditioned to have height at most \(n-1\) to the first \(\phi _{n+1}-1\) vertices of the first generation of \(\mathcal {T}^\prec _{n+1}\).

4. Attach f-GW-trees conditioned to have height at most n to the remaining \(\psi _{n+1}-\phi _{n+1}\) first-generation vertices of \(\mathcal {T}^\prec _{n+1}\).

Under this construction, \(\mathcal {T}^\prec _{n+1}\) has the distribution of an f-GW-tree conditioned to have height exactly \(n+1\). Write \(\delta _0=\delta \) for the deepest point of the tree and, for \(n=1,2,\ldots \), write \(\delta _n\) for the ancestor of \(\delta \) at distance n. The sequence \(\delta _0,\delta _1,\ldots \) forms a ‘spine’ from the deepest point to the root of the tree. We denote by \(\mathcal {T}^\prec \) the tree asymptotically attained. By a subtrap of \(\mathcal {T}^\prec \) we mean a vertex x on the spine together with a child y of x off the spine and all of the descendants of y. This is itself a tree with root x, and we write \(\mathcal {S}_x\) for the collection of subtraps rooted at x. Figure 4 shows a construction of \(\mathcal {T}^\prec _4\), where the solid line represents the spine and the dashed lines represent subtraps.
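The law of \((\phi _{n+1},\psi _{n+1})\) can be read off from the construction itself. The Python sketch below computes \(s_n=\mathbf {P}(\mathcal {H}(\mathcal {T}^\circ )<n)\) through the recursion \(s_0=0\), \(s_{n+1}=f(s_n)\), with f the offspring generating function, and then evaluates \(\mathbf {P}(\phi _{n+1}=j,\psi _{n+1}=k)\) by following steps 1–4 and normalizing by \(\mathbf {P}(\mathcal {H}(\mathcal {T}^\circ )=n+1)\). This expression is our own derivation from the construction rather than a transcription of (7.1), and the offspring law (with mean 0.7) is a hypothetical example. The sketch also checks that summing over j gives \(\mathbf {P}(Z_1=k|\mathcal {H}(\mathcal {T}^\circ )=n+1)\), as used in the proof of Lemma 7.1.

```python
# Hypothetical offspring law f of the trap trees, with mean mu = 0.3 + 2 * 0.2 = 0.7 < 1.
P = {0: 0.5, 1: 0.3, 2: 0.2}


def pgf(s, p=P):
    """Offspring generating function f(s) = sum_k p_k s**k."""
    return sum(pk * s ** k for k, pk in p.items())


def height_cdf(n_max, p=P):
    """Return [s_0, ..., s_{n_max}] with s_n = P(H < n), via s_0 = 0 and s_{n+1} = f(s_n)."""
    s = [0.0]
    for _ in range(n_max):
        s.append(pgf(s[-1], p))
    return s


def joint_law(n, p=P):
    """Return {(j, k): P(phi_{n+1} = j, psi_{n+1} = k)} derived from steps 1-4: the first
    j - 1 children carry trees of height <= n - 1 (probability s_n each), the j-th carries a
    tree of height exactly n (s_{n+1} - s_n), the other k - j carry trees of height <= n
    (s_{n+1} each); normalize by P(H = n + 1) = s_{n+2} - s_{n+1}."""
    s = height_cdf(n + 2, p)
    norm = s[n + 2] - s[n + 1]
    return {(j, k): pk * s[n] ** (j - 1) * (s[n + 1] - s[n]) * s[n + 1] ** (k - j) / norm
            for k, pk in p.items() for j in range(1, k + 1)}


if __name__ == "__main__":
    law = joint_law(5)
    print(round(sum(law.values()), 10))  # 1.0
    s = height_cdf(41)
    # Ratio of successive tails; it approaches mu, in line with 1 - s_{n+1} ~ c mu^n.
    print((1 - s[41]) / (1 - s[40]))
```

Summing the product over j telescopes to \(p_k(s_{n+1}^k-s_n^k)\), which is exactly the probability that the root has k children and the tree has height \(n+1\); this is why the marginal of \(\psi _{n+1}\) is the conditioned root degree.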

Let \(\mathcal {S}^{n,j,1}\) denote the \(j{\text {th}}\) subtrap attached to \(\delta _n\) that is conditioned to have height at most \(n-1\), and \(\mathcal {S}^{n,j,2}\) the \(j{\text {th}}\) subtrap attached to \(\delta _n\) that is conditioned to have height at most n. Recall that d(x, y) denotes the graph distance between vertices x and y. For \(k=1,2\), let

denote the weight of \(\mathcal {S}^{n,j,k}\) under the invariant measure associated with the conductance model with conductances \(\beta ^{i+1}\) between levels i and \(i+1\), where the root of \(\mathcal {S}^{n,j,k}\) (a spinal vertex) is at level 0. We then write

to denote the total weight of the subtraps of \(\delta _n\). Then

is the expected time \(\mathcal {R}_\infty \) taken for a walk on \(\mathcal {T}^\prec \) started from \(\delta \) to return to \(\delta \).
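The quantity \(\mathcal {R}_\infty \) is an expected return time for a reversible walk, and the factor \(2\sum _n\beta ^{-n}\) in the proof of Lemma 7.1 appears consistent with Kac's formula: for a reversible walk on a finite connected graph with conductances c(e), the expected return time to a vertex v equals \(\sum _u\pi (u)/\pi (v)\), where \(\pi (u)\) is the total conductance at u. Since (7.2) is not reproduced here, this connection is our reading rather than a statement from the text. The Python sketch below evaluates Kac's formula on a small hypothetical tree in which the edge from depth \(d-1\) to depth d has conductance \(\beta ^d\) (so the walk is pushed towards the deepest vertex), and cross-checks it by iterating the first-step equations for hitting times. All names and the toy tree are assumptions for illustration.

```python
from collections import defaultdict


def conductances(parent, depth, beta):
    """Conductance beta**d on the edge joining a depth-(d - 1) vertex to its depth-d child
    (hypothetical convention mirroring the level-dependent weights in the text)."""
    return {(parent[v], v): beta ** depth[v] for v in parent}


def neighbour_weights(cond):
    """Map each vertex to a dict {neighbour: conductance of the joining edge}."""
    nbrs = defaultdict(dict)
    for (u, v), c in cond.items():
        nbrs[u][v] = c
        nbrs[v][u] = c
    return dict(nbrs)


def total_conductance(nbrs):
    """pi(v) = sum of conductances at v (an unnormalized reversible measure)."""
    return {v: sum(w.values()) for v, w in nbrs.items()}


def return_time_kac(nbrs, target):
    """Kac's formula: E_target[tau_target^+] = sum_v pi(v) / pi(target)."""
    pi = total_conductance(nbrs)
    return sum(pi.values()) / pi[target]


def return_time_iterative(nbrs, target, iters=5000):
    """Cross-check by first-step analysis: iterate h(v) = 1 + sum_w P(v, w) h(w) with
    h(target) = 0 and P(v, w) = c(v, w) / pi(v); then return 1 + sum_w P(target, w) h(w)."""
    pi = total_conductance(nbrs)
    h = {v: 0.0 for v in nbrs}
    for _ in range(iters):
        h = {v: 0.0 if v == target else 1 + sum(c / pi[v] * h[w] for w, c in nbrs[v].items())
             for v in nbrs}
    return 1 + sum(c / pi[target] * h[w] for w, c in nbrs[target].items())


if __name__ == "__main__":
    # Toy tree: root r with children a, e; a has children b, c; b has the deepest vertex d.
    parent = {"a": "r", "e": "r", "b": "a", "c": "a", "d": "b"}
    depth = {"r": 0, "a": 1, "e": 1, "b": 2, "c": 2, "d": 3}
    nbrs = neighbour_weights(conductances(parent, depth, beta=2.0))
    print(return_time_kac(nbrs, "d"))                  # 5.0
    print(round(return_time_iterative(nbrs, "d"), 6))  # 5.0
```

On the toy tree the total conductance weight is 40 and the deepest vertex carries weight 8, so both methods give 5. Heavier subtraps raise the numerator, which is the role played by \(\Lambda _n\) in the bound on \(\mathbb {E}[\mathcal {R}_\infty ]\).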

Lemma 7.1

Suppose that \(\xi \) belongs to the domain of attraction of a stable law of index \(\alpha \in (1,2]\) and that \(\beta \mu >1\). Then

Proof

Since \(\beta >1\), we have \(2\sum _{n=0}^\infty \beta ^{-n}=2/(1-\beta ^{-1})\); thus, by (7.2), it suffices to find an appropriate bound on \(\mathbf {E}[\Lambda _n]\).

We have \(\mathbf {E}[\Pi ^{n,j,1}]\le \mathbf {E}[\Pi ^{n,j,2}]\), since conditioning the height of the trap to be smaller can only reduce the weight; therefore, by the independence of \(\psi _n\) and \(\Pi ^{n,j,2}\),

Using that conditioning the height of the GW-tree \(\mathcal {T}^\circ \) to be small only decreases the expected generation sizes, and that \(\mu \beta >1\), we have by (5.1)

for some constant c, where \(Z_k\) denotes the size of the kth generation of \(\mathcal {T}^\circ \). Summing over j in (7.1) shows that \(\mathbf {P}(\psi _{n+1}=k) = \mathbf {P}(Z_1=k|\mathcal {H}(\mathcal {T}^\circ )=n+1)\). Recalling that \(s_n=\mathbf {P}(\mathcal {H}(\mathcal {T}^\circ )< n)\), we have

By (3.1), \(1-s_{n+1} \sim c\mu ^n\) for some positive constant c. Let \(\epsilon >0\) be such that \(1-\epsilon -\mu (1+\epsilon )>0\); then, for all n sufficiently large, \((1-\epsilon )c\mu ^n\le 1-s_{n+1}\le (1+\epsilon )c\mu ^n\). Therefore,

for some positive constant C. In particular, when \(\sigma ^2<\infty \), there exists some constant c such that

where the final inequality holds because, for any \(k\ge 1\), \((1-s^k)(1-s)^{-1}=\sum _{i=0}^{k-1}s^i\) is increasing in s and converges to k as \(s\rightarrow 1^-\). It therefore follows that \(\mathbf {E}[\Lambda _n]\le C(\beta \mu )^n\), so indeed

When \(\xi \) has infinite variance but belongs to the domain of attraction of a stable law

hence, by (3.9), \( \mathbf {E}[\psi _{n+1}] \sim c\mu ^{n(\alpha -2)}L_2(\mu ^n)\) as \(n \rightarrow \infty \). Combining this with (7.3) and (7.4), we have

therefore, using (7.2), for C chosen sufficiently large we have that

\(\square \)

We therefore have that the expected time taken for a walk started from the deepest point in a trap (of height H) to return to the deepest point is bounded above by \(\mathbb {E}[\mathcal {R}_\infty ]<\infty \), uniformly in the height. Recall that \(\tau ^+_x\) is the first return time to x. The following lemma gives the probability of reaching the deepest point in a trap, the probability of escaping the trap from the deepest point, and the transition probabilities of the walk in the trap conditional on reaching the deepest point before escaping. The proof is straightforward by comparison with the biased walk on \(\mathbb {Z}\) with nearest-neighbour edges, so we omit it.

Lemma 7.2

For any tree T of height \(H+1\) (with \(H \ge 1\)), root \(\rho \) and deepest vertex \(\delta \) we have that

is the probability of reaching the deepest point without escaping and

is the probability of escaping from the deepest point before returning. Moreover,

is the probability that the walk restricted to the spine, conditioned on reaching \(\delta \) before returning to \(\rho \), moves towards \(\delta \).

Since the first two probabilities depend on the tree only through its height, we write

to be the probability that the walk reaches the deepest vertex in the tree before returning to the root starting from the bud and

to be the probability of escaping from the tree.
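Because excursions off the spine return to the same spinal vertex, the walk restricted to the spine only sees the spinal conductances and steps towards \(\delta \) with probability \(\beta /(1+\beta )\); the probabilities in Lemma 7.2 are then gambler's-ruin probabilities. The Python sketch below assumes that levels are labelled 0 (the root \(\rho \)), 1 (the bud), up to \(H+1\) (the deepest vertex \(\delta \)); under this labelling, which is our assumption, it gives closed forms for reaching \(\delta \) from the bud before \(\rho \) and for escaping from \(\delta \) before returning, and checks them by simulation. The function names are hypothetical.

```python
import random


def p_reach_deepest(beta, H):
    """P(hit level H + 1 before level 0), starting from level 1, for the walk on
    {0, ..., H + 1} that steps deeper with probability beta / (1 + beta)."""
    return (1 - 1 / beta) / (1 - beta ** -(H + 1))


def p_escape_from_deepest(beta, H):
    """From delta (level H + 1, a leaf) the walk first moves to level H; this is the
    probability that it then hits level 0 before returning to level H + 1."""
    return beta ** -H * (1 - 1 / beta) / (1 - beta ** -(H + 1))


def mean_returns(beta, H):
    """Returns to delta before escaping are geometric: mean (1 - p) / p, p = escape probability."""
    p = p_escape_from_deepest(beta, H)
    return (1 - p) / p


def simulate_hit_top(beta, H, start, trials, rng):
    """Monte Carlo estimate of P(hit level H + 1 before level 0) from level `start`."""
    p_deeper = beta / (1 + beta)
    hits = 0
    for _ in range(trials):
        x = start
        while 0 < x < H + 1:
            x += 1 if rng.random() < p_deeper else -1
        hits += x == H + 1
    return hits / trials


if __name__ == "__main__":
    rng = random.Random(0)
    beta, H = 2.0, 5
    print(p_reach_deepest(beta, H), simulate_hit_top(beta, H, 1, 50_000, rng))
    print(p_escape_from_deepest(beta, H), 1 - simulate_hit_top(beta, H, H, 50_000, rng))
    print(mean_returns(beta, H))
```

The reaching probability stays bounded away from 0 uniformly in H, while the escape probability decays like \(\beta ^{-H}\), so the mean number of returns to \(\delta \) grows like \(\beta ^H\). This is one way to see why branch times are scaled by \(\beta ^{\overline{H}}\).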

For the remainder of the section, we consider only the case where the offspring distribution belongs to the domain of attraction of some stable law of index \(\alpha \in (1,2)\). Our first aim is to prove Proposition 7.3, which shows that the time spent on excursions in deep traps essentially consists of a geometric number of excursions from the deepest point to itself. We then conclude this part with Corollary 7.4, an adaptation of this result to FVIE whose proof we omit.
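The excursion quantities \(W^{i,j}\), \(\omega ^{(i,j,k)}\) and \(T^{(i,j,k)}\) defined in the next paragraph are simple functionals of a sample path. The Python sketch below computes them for a finite path whose vertices are arbitrary hashable labels. A finite path has no excursion after the last one, so the final duration is counted up to the end of the path; this truncation, the function name and the toy path are our assumptions.

```python
def trap_excursions(path, entry, bud, trap):
    """Decompose a finite path into excursions into a trap.

    path  -- visited vertices X_0, X_1, ..., X_M
    entry -- the vertex from which the trap is entered (rho_i^+ in the text)
    bud   -- the bud of the trap (rho_{i,j}^+)
    trap  -- set of vertices of the trap, containing `bud`
    Returns (W, starts, durations): the number of entries, the entry times omega^(k)
    and the number of steps T^(k) spent in the trap during [omega^(k), omega^(k+1)).
    """
    # omega^(k): successive times m >= 1 with X_{m-1} = entry and X_m = bud.
    starts = [m for m in range(1, len(path)) if path[m - 1] == entry and path[m] == bud]
    durations = []
    for k, start in enumerate(starts):
        # The last excursion is truncated at the end of the (finite) path.
        end = starts[k + 1] if k + 1 < len(starts) else len(path)
        durations.append(sum(1 for m in range(start, end) if path[m] in trap))
    return len(starts), starts, durations


if __name__ == "__main__":
    # Toy path: 'r' is the entry vertex, 'b' the bud, 'c' a child of 'b'; 'r1' lies elsewhere.
    path = ["r", "b", "c", "b", "r", "r1", "r", "b", "r", "r1"]
    print(trap_excursions(path, "r", "b", {"b", "c"}))  # (2, [1, 7], [3, 1])
```

Here the two excursions last 3 and 1 steps, and their sum, 4, is the total number of steps this path spends in the trap.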

Recall that \(\rho _i^+\) is the root of the \(i{\text {th}}\) large branch and \(\tilde{\chi }_n^i\) is the time spent in this branch by the \(i{\text {th}}\) walk \(X^{(i)}_n\). This branch has some number \(N^i\) of buds which are roots of large traps, where, by Proposition 3.4, \(N^i\) converges in distribution to a heavy-tailed law. Let \(\rho ^+_{i,j}\) be the bud of the \(j{\text {th}}\) large trap \(\mathcal {T}_{i,j}^+\) in this branch; then \(W^{i,j}:=|\{m\ge 1:X_{m-1}^{(i)}=\rho _i^+, X_m^{(i)}=\rho ^+_{i,j}\}| \) is the number of times that the \(j{\text {th}}\) large trap in the \(i{\text {th}}\) large branch is entered by the \(i{\text {th}}\) copy of the walk. Let \(\omega ^{(i,j,0)}:=0\) and, for \(k\le W^{i,j}\), let \(\omega ^{(i,j,k)}:=\min \{m > \omega ^{(i,j,k-1)}:X_{m-1}^{(i)}=\rho _i^+,X_m^{(i)}=\rho ^+_{i,j}\}\) be the start time of the \(k{\text {th}}\) excursion into \(\mathcal {T}_{i,j}^+\) and \(T^{(i,j,k)}:=|\{m \in [\omega ^{(i,j,k)}, \omega ^{(i,j,k+1)}): X_m^{(i)} \in \mathcal {T}_{i,j}^+\}|\) its duration. We can then write the time spent in large traps of the \(i{\text {th}}\) large branch as

For \(0\le k\le \mathcal {H}(\mathcal {T}_{i,j}^+)\), write \(\delta ^{(i,j)}_k\) for the spinal vertex at distance k from the deepest point in \(\mathcal {T}_{i,j}^+\). Let \(T^{*(i,j,k)}:=0\) if there does not exist \(m\in [\omega ^{(i,j,k)},\omega ^{(i,j,k+1)}]\) such that \(X_m=\delta _0^{(i,j)}=:\delta ^{(i,j)}\), and

otherwise to be the duration of the \(k{\text {th}}\) excursion into \(\mathcal {T}_{i,j}^+\) without the first passage to the deepest point and the final passage from the deepest point to the exit. We can then define

to be the time spent in large traps of the \(i{\text {th}}\) large branch, excluding the first passage to and the last passage from \(\delta ^{(i,j)}\) on each excursion. We want to show that the difference between this and \(\tilde{\chi }^i_n\) is negligible. In particular, recalling that \(\mathcal {D}_n^{(n)}\) is the collection of large branches by level n, we will show that, for all \(t>0\), as \(n \rightarrow \infty \)

For \(\epsilon >0\) denote