Abstract

Estimating the effects of variants found in disease driver genes opens the door to personalized therapeutic opportunities. Clinical associations and laboratory experiments can characterize only a tiny fraction of all known variants, leaving the majority as variants of unknown significance (VUS). In silico methods bridge this gap by providing instant, large-scale estimates, most often based on the numerous genetic differences between species. Despite concerns that these methods may lack reliability for individual subjects, their numerous practical applications across cohorts suggest they are already helpful and have a role to play in genome interpretation when used at the proper scale and in the proper context. In this review, we aim to provide insights into the training and validation of these variant effect prediction methods and illustrate representative types of experimental and clinical applications. Objective performance assessments on datasets that were unpublished at the time of assessment reveal the strengths and limitations of each method. These assessments show that cautious use of in silico variant impact predictors is essential for addressing genome interpretation challenges.

Introduction

The need for estimating variant impact

The drastic reduction in the cost of genome sequencing over the last decade (DNA Sequencing Costs: Data 2020) has led to a proliferation of large-scale next-generation sequencing (NGS) datasets (Pereira et al. 2020). The NCBI dbGaP database (Mailman et al. 2007) hosts a vast collection of genotype–phenotype data in a common repository, but many other datasets of broad interest reside elsewhere, and not all are easily accessible (Gutierrez-Sacristan et al. 2021). Currently, dbGaP contains more than 500 NGS case–control studies, including studies of diseases such as Alzheimer's (Beecham et al. 2017), Parkinson's (Rosenthal et al. 2016), autism spectrum disorder (ASD) (Fischbach and Lord 2010), and others (Taliun et al. 2021). Additional case–control studies on the same or similar traits are available through other independent initiatives (Marek et al. 2018; Petersen et al. 2010). Other sources, called biobanks, contain sequencing data and clinical diagnostics from individuals representing a population, without a focus on any particular disease. Examples include the UK Biobank, which currently holds genetic data from about 500,000 individuals (Backman et al. 2021; Bycroft et al. 2018), and the All of Us Research Program (All of Us Research Program et al. 2019), among others (Wichmann et al. 2011). Some datasets focus on ethnic differences, such as The 1000 Genomes Project (The 1000 Genomes Project Consortium 2015) and various population-specific cohorts (Genome of the Netherlands Consortium 2014; Jeon et al. 2020; Kim et al. 2018; Nagasaki et al. 2015). Each of these sources contains rich data on human genetic variation with which we can address questions of disease etiology for mechanistic understanding, risk for prevention and early screening, personalized therapy for precision medicine, epistatic effects for complex epidemiology, pharmacogenomics for patient stratification, and ethnic diversity for health equity. Accurate metrics for the impact of individual variants are critical to answering these questions.

There are many types of genetic variants, broadly grouped by the region in which the variant occurs and the number of nucleotides affected. Variants can occur in protein coding or non-coding regions of the genome. Although the protein coding region represents only 1.2% of the human genome (Encode Project Consortium 2012), past variant interpretation efforts focused on coding variants because of their effects on protein synthesis. However, a large proportion of the non-coding genome is functional and harbors variants that drive diseases by influencing regulatory regions controlling gene expression and untranslated regions affecting mRNA translation (French and Edwards 2020). Variants can also encompass single or multiple nucleotides. Variants that add or remove nucleotides are called insertions or deletions, respectively, and they are rare compared to single nucleotide variants (SNVs), which account for about 90% of variants (1000 Genomes Project Consortium et al. 2010). Protein coding insertion and deletion (indel) variants may be pathogenic when they shift the reading frame of the mRNA or map to functionally important sites (Lin et al. 2017; Mullaney et al. 2010). Protein coding SNVs may truncate the protein (stop gain and start loss), cause no change to the protein sequence (synonymous), or alter one amino acid (non-synonymous/missense). Stop gain and start loss variants exert profound effects on protein function, resulting in strong selection against them (Bartha et al. 2015). Synonymous variants are assumed to be benign, although they can cause various pre-translational changes (Zeng and Bromberg 2019) and affect codon usage bias (Plotkin and Kudla 2011). The impact of non-synonymous variants is challenging to predict, since they may affect a number of protein characteristics, such as folding (Wang and Moult 2001), protein interactions (Teng et al. 2009), dynamics (Uversky et al. 2008), post‐translational modifications (Yang et al. 2019), solubility (Monplaisir et al. 1986), and others (Stefl et al. 2013), with approximately 30% of variants having a strong impact (Chasman and Adams 2001). Purifying selection acts on all of the above effects and reduces diversity within species (Cvijovic et al. 2018), allowing only beneficial and nearly neutral variants to spread and become fixed (Fu and Akey 2013; Patwa and Wahl 2008). Assuming that variant effects differ little between homologous proteins, the genetic differences between species offer valuable information for estimating the overall effect of a variant (Ng and Henikoff 2001). The 3D structures of proteins can provide additional, complementary insights (Orengo et al. 1999; Ramensky et al. 2002). Consequently, homology and structure information have been the two main types of input for estimating the effects of coding variants on protein function.

Available methods for predicting variant effects

Many computational methods estimate variant effects (Hu et al. 2019), but their aims may differ. Some methods focus on specific aspects of protein function, such as the folding stability of the mutated protein (Capriotti et al. 2005, 2008; Cheng et al. 2006; Dehouck et al. 2011; Fariselli et al. 2015; Guerois et al. 2002; Parthiban et al. 2006; Pires et al. 2014; Quan et al. 2016; Worth et al. 2011; Zhou and Zhou 2002) or a combination of folding stability and binding affinity (Berliner et al. 2014). Typically, these stability prediction methods estimate the change in folding free energy upon mutation (∆∆G) from 3D structures, combined with scores derived from force fields or evolutionary information. Encouragingly, about three-fourths of variants that cause Mendelian disorders affect protein stability (Wang and Moult 2001; Yue et al. 2014), suggesting that folding stability prediction methods can prioritize candidate disease drivers (Bocchini et al. 2016; Pey et al. 2007; Siekierska et al. 2012) for chaperone treatment (Chaudhuri and Paul 2006). However, these methods are partially limited by the availability of protein structure data. Although the Research Collaboratory for Structural Bioinformatics Protein Data Bank (RCSB PDB) currently contains more than 50,000 human protein structures, many are redundant, and 30% of human proteins have no PDB structure of their own sequence or of any homolog sharing at least 30% sequence identity (Somody et al. 2017). Alternatively, stability prediction methods may take advantage of recently developed high-quality protein structure predictors, such as AlphaFold (Jumper et al. 2021) and RoseTTAFold (Baek et al. 2021), which broaden the number of available protein structures. Notably, evolution-based stability prediction methods perform competitively even though they use no structure information (Fariselli et al. 2015; Montanucci et al. 2019).
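As a worked illustration of the quantity these stability predictors estimate, ∆∆G can be written as the difference between the folding free energies of the mutant and wild-type proteins. This sketch assumes one common sign convention, in which ∆G_fold is the free energy of folding (negative for a stably folded protein), so that a positive ∆∆G marks a destabilizing mutation; individual tools differ, and some report the opposite sign, so each tool's definition should be checked before scores are compared.

```latex
\Delta\Delta G = \Delta G_{\mathrm{fold}}^{\mathrm{mut}} - \Delta G_{\mathrm{fold}}^{\mathrm{wt}},
\qquad
\Delta G_{\mathrm{fold}} = G_{\mathrm{folded}} - G_{\mathrm{unfolded}}
```

For example, with hypothetical values of ∆G_fold = −8.0 kcal/mol for the wild type and −5.5 kcal/mol for the mutant, ∆∆G = −5.5 − (−8.0) = +2.5 kcal/mol, meaning the mutation destabilizes the fold by 2.5 kcal/mol under this convention.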

Toward broader genome interpretation of variant effects, other prediction methods rely primarily on protein sequence homology, supported when available by function annotations and structural information. Table 1 lists well-established representatives of these methods, grouped according to the type of input data they use and whether the input includes scores from available prediction methods (Ensemble). Homology-based methods hypothesize that substitutions frequently observed across clades are benign, under the implicit assumption that homologous proteins share identical functions. Conversely, substitutions that do not occur across phylogenetic branches indicate negative selection and possible pathogenicity (Ng and Henikoff 2001). To overcome limitations that stem from this hypothesis, homology-based prediction methods employ various techniques, such as pseudocounts (Henikoff and Henikoff 1996), phylogenetic distances (Davydov et al. 2010; Katsonis and Lichtarge 2014; Reva et al. 2011), Hidden Markov Models (Garber et al. 2009; Rogers et al. 2018; Shihab et al. 2013, 2015; Siepel et al. 2005; Thomas et al. 2003), similarity scores (Choi et al. 2012), normalized probabilities in localized sequence segments (Capriotti et al. 2006), and restricting sequence alignments to mostly orthologous proteins (Mathe et al. 2006). These homology-based prediction methods differ from methods that estimate residue importance (see a selected list in Table 2), which provide a "conservation" score for each protein residue rather than for each amino acid substitution.
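The core homology idea can be sketched in a few lines. The example below is a simplified, SIFT-like illustration, not the published implementation of any tool in Table 1: it estimates position-specific amino acid probabilities from a single alignment column with a uniform pseudocount (published methods use sequence weighting and more elaborate pseudocount schemes) and scales the probability of the substituted residue by that of the most frequent residue. The alignment column and pseudocount value are hypothetical.

```python
from collections import Counter

AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"

def column_probabilities(column, pseudocount=1.0):
    """Estimate amino acid probabilities for one alignment column.

    Gaps and non-standard characters are ignored. A uniform pseudocount is
    added to every amino acid so that substitutions never seen in the
    alignment still receive a small, non-zero probability.
    """
    counts = Counter(aa for aa in column if aa in AMINO_ACIDS)
    total = sum(counts.values()) + pseudocount * len(AMINO_ACIDS)
    return {aa: (counts[aa] + pseudocount) / total for aa in AMINO_ACIDS}

def substitution_score(column, alt, pseudocount=1.0):
    """Probability of the substituted residue, scaled by the most frequent one.

    Scores lie in (0, 1]; values near 0 suggest the substitution is rarely
    tolerated at this position across homologs.
    """
    probs = column_probabilities(column, pseudocount)
    return probs[alt] / max(probs.values())

# Hypothetical column: the residue observed at one position in ten homologs.
column = "LLLLLLLLIV"
for alt in "LIP":
    print(alt, round(substitution_score(column, alt), 3))
```

This prints 1.0 for L, 0.222 for the observed alternative I, and 0.111 for proline, which never occurs in the column and scores above zero only because of the pseudocount. Deeper alignments sharpen these differences.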

To further improve variant effect predictions, additional insights into molecular functions (Calabrese et al. 2009) and physicochemical characteristics (Stone and Sidow 2005) can be considered, posing the problem of how to weigh the contributions of heterogeneous information sources. For this reason, machine learning techniques are routinely used to select and combine the numerous features that may indicate pathogenic or benign effects. Developers may select different techniques that work best for their purpose, including Support Vector Machines (Kircher et al. 2014; Yue et al. 2006), Random Forests and other Decision Trees (Carter et al. 2013; Raimondi et al. 2016, 2017; Ramensky et al. 2002; Zhou et al. 2018a), Neural Networks (Hecht et al. 2015; Qi et al. 2021; Quang et al. 2015; Sundaram et al. 2018), Naïve Bayes (Adzhubei et al. 2010), Logistic Regression (Baugh et al. 2016), or combinations of these (Li et al. 2009).
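To illustrate how heterogeneous features can be weighed automatically, the following sketch fits a logistic regression, one of the techniques listed above, by plain batch gradient descent so that it runs without external libraries. The three features (conservation, burial, and physicochemical change, each scaled to 0–1), the six training variants, and the learning-rate and epoch settings are all hypothetical; in practice a library implementation such as scikit-learn's `LogisticRegression` and much larger labeled sets would be used.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(weights, bias, x):
    """Probability that a variant with feature vector x is pathogenic."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)

def train_logistic(features, labels, lr=0.5, epochs=3000):
    """Fit logistic regression weights by batch gradient descent on log-loss."""
    n_samples, n_features = len(features), len(features[0])
    weights, bias = [0.0] * n_features, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * n_features, 0.0
        for x, y in zip(features, labels):
            # Prediction error for this variant: predicted probability minus label.
            error = predict(weights, bias, x) - y
            for j in range(n_features):
                grad_w[j] += error * x[j]
            grad_b += error
        weights = [w - lr * g / n_samples for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / n_samples
    return weights, bias

# Hypothetical features per variant: [conservation, burial, physicochemical change];
# label 1 = pathogenic, 0 = benign.
training = [
    ([0.90, 0.80, 0.70], 1), ([0.80, 0.90, 0.60], 1), ([0.95, 0.70, 0.90], 1),
    ([0.20, 0.10, 0.30], 0), ([0.30, 0.20, 0.10], 0), ([0.10, 0.30, 0.20], 0),
]
X = [x for x, _ in training]
y = [label for _, label in training]
weights, bias = train_logistic(X, y)
print(round(predict(weights, bias, [0.85, 0.80, 0.80]), 2))
print(round(predict(weights, bias, [0.15, 0.20, 0.20]), 2))
```

The learned weights express how much each feature contributes to the pathogenicity estimate, which is the weighting problem described above solved from labeled data instead of by hand.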

Some methods pool pre-existing prediction methods to estimate the protein function effects of variants with better accuracy. In Table 1, we labeled as "Ensemble" the meta-methods that combine multiple pre-existing methods to obtain a single overall protein function impact score. Machine learning approaches are typically used for such purposes. However, some of these methods used pre-existing prediction method scores together with additional features in their training, so we labeled them "Ensemble +". The method DANN (Quang et al. 2015) used the same feature set and training data as CADD (Kircher et al. 2014) but a different learning approach, so we also labeled it "Ensemble +". Figure 1 reflects the popularity of a large collection of available methods, indicated by the citations of their original articles as a function of publication year.
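A minimal way to pool predictors without any training labels is to convert each predictor's scores to ranks, which removes differences in scale, and then average the ranks per variant. This is a hypothetical illustration of the ensemble idea, not how the Ensemble methods in Table 1 work; those typically learn how to weight each input score from annotated variants. The sketch assumes every predictor has been oriented so that higher means more damaging (for a tool where lower scores mean more damaging, such as SIFT, the scores would be negated first); predictor names and values are placeholders.

```python
def rank_normalize(scores):
    """Map raw scores to (0, 1] by rank; tied scores share their average rank."""
    order = sorted(range(len(scores)), key=lambda i: scores[i])
    ranks = [0.0] * len(scores)
    i = 0
    while i < len(order):
        j = i
        # Extend j over a run of tied scores.
        while j + 1 < len(order) and scores[order[j + 1]] == scores[order[i]]:
            j += 1
        avg_rank = (i + j) / 2 + 1  # 1-based average rank of the tie group
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        i = j + 1
    n = len(scores)
    return [r / n for r in ranks]

def ensemble_score(predictor_scores):
    """Average rank-normalized scores across predictors (dict: name -> scores)."""
    normalized = [rank_normalize(s) for s in predictor_scores.values()]
    n_variants = len(normalized[0])
    return [sum(col[v] for col in normalized) / len(normalized) for v in range(n_variants)]

# Hypothetical scores for three variants from three predictors on different scales.
scores = {
    "predictor_A": [0.9, 0.1, 0.5],
    "predictor_B": [3.0, 1.0, 2.0],
    "predictor_C": [0.7, 0.2, 0.8],
}
print([round(s, 3) for s in ensemble_score(scores)])
```

Rank averaging lets predictors with very different score ranges contribute equally; its drawback, compared with trained meta-predictors, is that it cannot learn that some inputs are more reliable than others.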

Figure 1. Number of citations to the primary paper of each variant prediction method as a function of the year it was published. Citation counts were obtained from a Google Scholar search on 7 March 2022. When a method could be matched to multiple primary papers or newer versions were introduced, the paper with the most citations was used. Methods are classified as (i) analytical models not trained on available variant annotations (red), (ii) machine learning approaches trained on variant annotations (blue), (iii) ensemble models that integrate scores from available predictors (purple), and (iv) models that combine scores from available predictors with additional features (black).

The aim of this review

In view of the diversity and importance of variant impact prediction methods, several reviews discuss the most common tools for predicting pathogenic variation, focusing on their underlying principles (Cline and Karchin 2011; Hassan et al. 2019; Katsonis et al. 2014; Tang and Thomas 2016), their clinical value (Ghosh et al. 2017; Yazar and Ozbek 2021; Zhou et al. 2018b), their mutual agreement (Castellana and Mazza 2013), or the integration of large-scale data (Cardoso et al. 2015; Chakravorty and Hegde 2018). Here, we assess the agreement of variant effect prediction methods with experimental and clinical data, summarize their performance in objective blind challenges, and present some of their successful applications to interpret variant impact and link genes to phenotypes. We find that, despite common concerns regarding performance (Castellana and Mazza 2013; Flanagan et al. 2010; Mahmood et al. 2017), numerous practical applications show that variant effect prediction methods are reliable when used cautiously in the proper context. Objective assessment exercises (Andreoletti et al. 2019; Hoskins et al. 2017) are therefore critical to define appropriate use cases for each method, to set expectations for accuracy, and to evaluate performance improvements due to methodological refinements. Together with the interpretation of other variant types (Liu et al. 2019; Spurdle et al. 2008; Zeng and Bromberg 2019) and integration with multi-omics data (Gomez-Cabrero et al. 2014; Huang et al. 2017a; Subramanian et al. 2020), variant effect prediction methods can help in understanding the genotype–phenotype relationship.

Main text

Method training and validation

Most methods estimate variant impact by weighing homology and/or structural information in unique ways. Homology-based predictors use multiple sequence alignments (MSA), which may be either narrow sets of orthologous sequences or larger sets that include distant homologs and paralogs. The choice of MSA affects the performance of prediction methods, and using the MSA provided by each method does not guarantee optimal performance (Hicks et al. 2011). MSA are also useful in predictors that go beyond basic homology and infer additional properties. Distinct properties are captured by conservation scores (Sunyaev et al. 1999), phylogenetic correlations of residues (Lichtarge et al. 1996), amino acid substitution frequencies (Henikoff and Henikoff 1992), biochemical properties at the position of interest (Grantham 1974), and whole substitution profiles. These properties may define the context in which the mutation is observed, together with protein structure variables when a protein structure is available. Solvent accessibility (Ahmad et al. 2004; Lee and Richards 1971), secondary structure (Kabsch and Sander 1983), B-factors (Sun et al. 2019), intrinsic disorder (Dunker et al. 2002), and ligand binding sites (Hendlich et al. 1997; Yang et al. 2013) provide insights into changes occurring in the protein that homology-based methods can infer only minimally. Given the plethora of features to choose from and combine, differences between variant impact predictors are not surprising. In addition, many methods use various training data to refine predictions and select the most important features. The type of data chosen to train a method will likely make the predicted impact more relevant to experimental, clinical, or evolutionary effects.
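As an example of one such MSA-derived property, the sketch below computes a per-position conservation score from Shannon entropy, a common textbook choice; it is not necessarily the measure used by Sunyaev et al. (1999) or by any method in Table 2. Like the residue importance methods, it scores positions rather than specific substitutions. The alignment is hypothetical, and sequence weighting, which real methods use to correct for redundant homologs, is omitted.

```python
import math
from collections import Counter

MAX_ENTROPY = math.log2(20)  # entropy of a column using all 20 amino acids equally

def column_entropy(column, gap="-"):
    """Shannon entropy (bits) of the residue distribution in one MSA column.

    A column with no residues (all gaps) is treated as maximally uninformative.
    """
    residues = [aa for aa in column if aa != gap]
    if not residues:
        return MAX_ENTROPY
    n = len(residues)
    return -sum((c / n) * math.log2(c / n) for c in Counter(residues).values())

def conservation_profile(msa):
    """Per-position conservation, 1 - entropy / log2(20): 0 = variable, 1 = invariant."""
    return [1.0 - column_entropy([seq[i] for seq in msa]) / MAX_ENTROPY
            for i in range(len(msa[0]))]

# Hypothetical alignment of four homologous segments (equal length, gaps as '-').
msa = ["MKLV", "MRLI", "MKLA", "MELV"]
print([round(c, 2) for c in conservation_profile(msa)])
```

The invariant first and third positions score 1.0, while the second and fourth positions, each with three residue types in a 2:1:1 ratio (1.5 bits of entropy), score about 0.65.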

Experimental data to train and validate prediction methods

To validate, benchmark, and compare methods, developers may turn to the experimental outcomes of large mutagenesis studies. For this purpose, four readily accessible datasets gained special popularity and were frequently used: (i) the repression activity of 4041 lac repressor mutations in E. coli (Markiewicz et al. 1994), (ii) the transactivation activity of 2314 human p53 mutations (Kato et al. 2003), (iii) the lysis of host cells by bacteriophage T4 carrying 2015 lysozyme mutations (Rennell et al. 1991), and (iv) the cleavage of Gag and Gag-Pol by 336 HIV-1 protease mutants (Loeb et al. 1989). More broadly, some dedicated databases curate experimental variation data for benchmarking (Kawabata et al. 1999; Sasidharan Nair and Vihinen 2013). In addition, many more large mutagenesis studies are now available and could be used for further benchmarking (Gray et al. 2017; Livesey and Marsh 2020; Sruthi et al. 2020). Overall, according to developer benchmark analyses, variant impact prediction methods perform well on these large datasets, if not superbly. Pearson correlations were nearly perfect when the experimental data were binned (Katsonis and Lichtarge 2014), showing excellent agreement with the overall trend despite point-by-point fluctuations. Accuracy was about 70% for SNAP (Bromberg and Rost 2007), 68–80% for SIFT (Ng and Henikoff 2001), and slightly better for MAPP (Stone and Sidow 2005). Areas under the ROC curve (AUC) have been reported to be 81–87% for PROVEAN (Choi et al. 2012) and 86–89% for Evolutionary Action (Katsonis and Lichtarge 2014).
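The effect of binning noted above can be reproduced on simulated data. The sketch below uses invented data rather than any of the datasets listed: each variant receives a noisy experimental activity that decreases with its predicted impact, and the raw Pearson correlation is compared with the correlation between per-bin means after sorting variants by score. The noise level, number of variants, and number of bins are assumptions.

```python
import math
import random

def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)

def binned_pearson(scores, activities, n_bins=10):
    """Sort variants by predicted impact, split them into equal-size bins, and
    correlate the per-bin mean score with the per-bin mean activity.
    Variants left over after equal division are dropped."""
    pairs = sorted(zip(scores, activities))
    size = len(pairs) // n_bins
    bin_scores, bin_activities = [], []
    for b in range(n_bins):
        chunk = pairs[b * size:(b + 1) * size]
        bin_scores.append(sum(s for s, _ in chunk) / size)
        bin_activities.append(sum(a for _, a in chunk) / size)
    return pearson(bin_scores, bin_activities)

# Simulated data: activity falls as predicted impact rises, plus large
# per-variant noise standing in for point-by-point fluctuations.
random.seed(0)
scores = [random.random() for _ in range(2000)]
activities = [1.0 - s + random.gauss(0.0, 0.4) for s in scores]
raw_r = pearson(scores, activities)
binned_r = binned_pearson(scores, activities)
print(round(raw_r, 2), round(binned_r, 2))
```

With this noise level the raw correlation is only moderate (roughly −0.6), while the binned correlation approaches −1 because averaging about 200 variants per bin cancels most point-by-point noise. A near-perfect binned correlation therefore reflects agreement with the overall trend, not accuracy on individual variants.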

Clinical data to train and validate prediction methods

For further training and validation, prediction methods can also draw on databases of clinical annotations. A well-known source of clinical associations is the ClinVar repository, which reports associations between genetic variation and clinical phenotypes (Landrum et al. 2018). Variants in this database were found in patient samples and annotated according to the guidelines of the American College of Medical Genetics and Genomics (ACMG) and the Association for Molecular Pathology (AMP) (Richards et al. 2015), with the goal of providing clinicians with the most robust consensus assessment. The ClinGen group convened Variant Curation Expert Panels to validate genetic annotations for specific genes or sets of genes and update variant annotations in ClinVar (Rehm et al. 2015; Rivera-Munoz et al. 2018). An alternative dataset frequently used to train variant impact prediction methods is the Human Gene Mutation Database (HGMD) (Stenson et al. 2020). HGMD is a manually curated, proprietary collection of published germline mutations in nuclear genes associated with human inherited disease. Also, HumSavar is an open access collection of missense variant reports, curated from the literature according to ACMG/AMP guidelines (Richards et al. 2015), and it is available through UniProtKB/Swiss-Prot (Wu et al. 2006). Moreover, cancer-specific sources are available for somatic and germline variants, such as COSMIC (Tate et al. 2019), the International Agency for Research on Cancer (IARC) TP53 Database (Petitjean et al. 2007), the Database of Curated Mutations (DoCM) (Ainscough et al. 2016), CanProVar (Li et al. 2010), and BRCA Exchange (Cline et al. 2018). Other sources include LOVD (Fokkema et al. 2005), HuVarBase (Ganesan et al. 2019), and disease-specific collections, such as those for Pompe disease (Kroos et al. 2008), Wilson disease (Kumar et al. 2020), and others (Gout et al. 2007; Peltomaki and Vasen 2004). The mouse-specific database Mutagenetix (Wang et al. 2018, 2015) also contains genotype–phenotype correlations, using various phenotype assays. Using these databases, many studies showed that disease driver variants are strongly enriched for pathogenic predictions. Performance, however, varies with the choice of reference dataset and the confidence level of the clinical association, and AUCs typically range between 70% and 90% (Choi et al. 2012; Dong et al. 2015; Ioannidis et al. 2016; Niroula et al. 2015; Pejaver et al. 2020; Qi et al. 2021). Nearly perfect AUCs are occasionally reported (Alirezaie et al. 2018; Ghosh et al. 2017), but such outstanding performances may be overly optimistic and prone to indirect circularity (Grimm et al. 2015).
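The AUC values quoted here can be computed directly from a predictor's scores for pathogenic and benign reference variants. The sketch below uses the rank interpretation of the AUC (equivalent to the normalized Mann–Whitney U statistic) with hypothetical scores. Because the statistic depends only on how the two sets are ordered, it cannot detect circularity: if evaluation variants, or closely related ones, were used in training, the AUC will be inflated however it is computed.

```python
def roc_auc(pathogenic_scores, benign_scores):
    """Area under the ROC curve via its rank interpretation: the probability
    that a randomly chosen pathogenic variant scores higher than a randomly
    chosen benign one, counting ties as one half. O(n*m), for clarity."""
    wins = 0.0
    for p in pathogenic_scores:
        for b in benign_scores:
            if p > b:
                wins += 1.0
            elif p == b:
                wins += 0.5
    return wins / (len(pathogenic_scores) * len(benign_scores))

# Hypothetical predictor scores (higher = more likely pathogenic).
pathogenic = [0.9, 0.8, 0.4]
benign = [0.3, 0.5, 0.1]
print(roc_auc(pathogenic, benign))
```

Here 8 of the 9 pathogenic–benign pairs are ordered correctly, giving an AUC of about 0.89; a random predictor would score about 0.5.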

Population fitness effect data to train and validate prediction methods

Population-wide sequencing efforts are also used to train or validate predictions. The pioneering 1000 Genomes Project ran from 2008 to 2015 and sequenced more than 2500 individuals across 26 populations from five continental groups (The 1000 Genomes Project Consortium 2015). Since 2015, the International Genome Sample Resource (IGSR) has maintained The 1000 Genomes Project (Clarke et al. 2017) and has since added samples from sources such as The Gambian Genome Variation Project (Malaria Genomic Epidemiology Network 2019), The Simons Genome Diversity Project (Mallick et al. 2016), and The Human Genome Diversity Project (Bergstrom et al. 2020). Larger population-wide sequencing datasets, such as the Exome Aggregation Consortium (ExAC) (Karczewski et al. 2017; Lek et al. 2016) and the more recent Genome Aggregation Database (gnomAD) (Karczewski et al. 2020), aggregate variants from various projects, mostly disease-specific studies, and may therefore contain more disease driver variants than expected in healthy individuals. ExAC and gnomAD provide allele frequency information rather than individual genomes. Other databases describing population-wide variation include the UK Biobank (Sudlow et al. 2015), dbSNP (Markiewicz et al. 1975), the Exome Variant Server (NHLBI Exome Sequencing Project 2011), HapMap (The International HapMap Consortium 2003), and various population-specific datasets (All of Us Research Program et al. 2019; Ameur et al. 2017; GenomeAsia 100K Consortium 2019; Jain et al. 2021; John et al. 2018; Jung et al. 2020). These sources of human polymorphisms often provide the benign set of variants for training many predictors (such as FATHMM-MKL and FATHMM-XF, M-CAP, MISTIC, MPC, MutationTaster, PON-P, PrimateAI, REVEL, and VEST). The underlying hypothesis is that human polymorphisms have been under negative selection pressure that may eliminate or prevent the spread of many pathogenic variants.
Therefore, most of the observed variants, especially those with high allele frequency, should have nearly neutral or positive effects on protein function (Kimura 1979). Prediction scores that were not trained on human polymorphisms supported this hypothesis, showing that human polymorphisms were enriched in low pathogenicity scores, with the enrichment becoming stronger for variants with higher minor allele frequency (Katsonis and Lichtarge 2014). Another study used VEST to show that sources based on complete genomes, such as The 1000 Genomes Project, contain fewer variants predicted to be pathogenic than sources of compiled clinical data, such as SwissProt (Carter et al. 2013). However, pathogenic variants do exist within clinical genomes, and studies suggest that predictors of protein function effects can prioritize them to identify candidate disease variants (Chennen et al. 2020; Ioannidis et al. 2016; Jagadeesh et al. 2016).
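The allele-frequency analysis described above amounts to stratifying predicted pathogenicity by minor allele frequency (MAF). The sketch below groups hypothetical (MAF, score) pairs into MAF bins and reports the mean score per bin; the bin edges are arbitrary choices for illustration. As the text notes, the comparison is only meaningful when the predictor was not trained on the same polymorphisms; otherwise the trend is partly built in.

```python
def mean_score_by_maf(variants, edges=(0.0, 0.001, 0.01, 0.05, 0.5)):
    """Mean predicted pathogenicity per minor allele frequency (MAF) bin.

    variants: iterable of (maf, score) pairs. Bins are [edges[i], edges[i+1]),
    except the last bin, which also includes its upper edge (MAF = 0.5).
    Returns one mean per bin, or None for an empty bin.
    """
    n_bins = len(edges) - 1
    sums, counts = [0.0] * n_bins, [0] * n_bins
    for maf, score in variants:
        for i in range(n_bins):
            last = i == n_bins - 1
            if edges[i] <= maf < edges[i + 1] or (last and maf == edges[i + 1]):
                sums[i] += score
                counts[i] += 1
                break
    return [s / c if c else None for s, c in zip(sums, counts)]

# Hypothetical (MAF, predicted pathogenicity) pairs from a predictor that
# was not trained on these polymorphisms.
variants = [(0.0001, 0.9), (0.0005, 0.7), (0.005, 0.6),
            (0.02, 0.4), (0.2, 0.1), (0.5, 0.2)]
print([round(m, 2) for m in mean_score_by_maf(variants)])
```

For these invented values the mean score falls from 0.8 in the rarest bin to 0.15 in the most common bin, the pattern expected if common polymorphisms are mostly nearly neutral.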

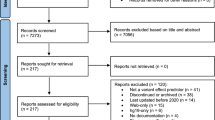

Performance assessment of variant impact prediction methods – CAGI challenges

The performance of variant impact prediction methods is hard to assess unambiguously. Independent studies (Chan et al. 2007; Dong et al. 2015; Ghosh et al. 2017; Gunning et al. 2020; Leong et al. 2015; Li et al. 2018; Livesey and Marsh 2020; Michels et al. 2019; Miosge et al. 2015; Suybeng et al. 2020; Tian et al. 2019; Yadegari and Majidzadeh 2019) have each compared a limited number of methods, using specific sets of variants and evaluation tests, while ignoring potential training circularity and making cutoff assumptions that may not fit each method equally well. In contrast, efforts to systematically and objectively assess variant impact prediction methods come from the Critical Assessment of Genome Interpretation (CAGI) community. CAGI has so far organized five assessment experiments that include many different challenges, with a sixth assessment currently in progress. The challenges may evaluate prediction performance with respect to the impact of variants on a specific protein function (Clark et al. 2019; Kasak et al. 2019a; Xu et al. 2017), clinical sequelae (Carraro et al. 2017; Cline et al. 2019; Voskanian et al. 2019), or global fitness, such as in yeast competition assays (Zhang et al. 2019, 2017a). Critically, all CAGI challenges use new and unpublished data, developer groups make predictions blind to pathogenic associations, and independent assessors use multiple criteria to score success blind to developer identity. These challenges aim to recognize advantageous strategies used by developers, as well as bottlenecks that prevent the field from advancing, through direct comparison of each method's performance and the features it uses.
In our view, performance on the CAGI challenges did not point to obvious links between the type of predictor and the type of challenge, because it was subject to several confounding factors (including input data availability, participation, predictor adjustments and approximations, assessor choices, and assay or clinical data interpretation). Some methods clearly performed better than others according to multiple assessment metrics, but different metrics often indicated different top methods for the same challenge, highlighting the need to combine multiple metrics. Consistently top-ranked predictions came from Evolutionary Action (Katsonis and Lichtarge 2017, 2019), MutPred (Pejaver et al. 2017), SNAP (Kasak et al. 2019a), and ensemble methods (Yin et al. 2017). Notably, many less well-known participating methods performed better than PolyPhen2 and SIFT, which are very popular and widely used variant impact prediction methods (Katsonis and Lichtarge 2017). Very simple predictive models, such as baseline sequence conservation predictors, may perform on par with or better than sophisticated methods (Zhang et al. 2019, 2017a). Also, in submissions from the Yang & Zhou lab predicting cell proliferation rates for CDKN2A variants, gradually adding features to the prediction model led to gradual performance improvements (Carraro et al. 2017), indicating that future development should focus on the aggregation, refinement, and validation of new features. In the PCM1 challenge, which evaluated 38 human missense mutations implicated in schizophrenia using a zebrafish model assay (Monzon et al. 2019), all submitted predictions performed poorly, yielding a nearly random distribution of balanced accuracy (Katsonis and Lichtarge 2019) and leaving open questions about the source of disagreement (Miller et al. 2019).
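The disagreement between metrics can be illustrated with balanced accuracy, the metric mentioned for the PCM1 assessment, and plain accuracy. In the hypothetical example below, with 2 damaging variants among 10, a method that calls every variant neutral wins on accuracy, while a method that finds both damaging variants at the cost of three false positives wins on balanced accuracy, which averages sensitivity and specificity and so is not dominated by the majority class. Labels and predictions are invented.

```python
def accuracy(y_true, y_pred):
    """Fraction of correct binary calls."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

def balanced_accuracy(y_true, y_pred):
    """Mean of sensitivity and specificity (1 = damaging, 0 = neutral)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    return (tp / (tp + fn) + tn / (tn + fp)) / 2

# Hypothetical assay labels: 2 damaging variants among 10.
y_true = [1, 1, 0, 0, 0, 0, 0, 0, 0, 0]
method_a = [0] * 10                          # calls everything neutral
method_b = [1, 1, 1, 1, 1, 0, 0, 0, 0, 0]    # finds both damaging variants, 3 false positives
for name, pred in (("A", method_a), ("B", method_b)):
    print(name, accuracy(y_true, pred), balanced_accuracy(y_true, pred))
```

This prints accuracy 0.8 and balanced accuracy 0.5 for method A, and 0.7 and 0.8125 for method B, so the ranking of the two methods flips with the choice of metric.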
In the CALM1 gene challenge, the performance of all submitted predictions improved when the yeast complementation assay data points were restricted to those with progressively smaller experimental standard deviations (Katsonis and Lichtarge 2019), indicating that experimental errors may cause performance in CAGI challenges to be underestimated.
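The kind of analysis described for the CALM1 challenge can be sketched as recomputing the correlation between predictions and measurements on subsets of increasingly precise data points. The simulation below is hypothetical and does not use the CALM1 data: every variant has a true effect, a replicate standard deviation drawn at random, and a measurement with that noise, while the predictor sees the true effect with small noise of its own. Keeping only low-variance measurements raises the correlation even though the predictor is unchanged.

```python
import math
import random

def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    return cov / math.sqrt(sum((a - mx) ** 2 for a in x) * sum((b - my) ** 2 for b in y))

def correlation_by_sd_cutoff(predicted, measured, measured_sd, cutoffs):
    """Pearson's r between predictions and measurements, recomputed using only
    data points whose experimental standard deviation is below each cutoff.
    Cutoffs that keep fewer than three points are skipped."""
    results = {}
    for cutoff in cutoffs:
        kept = [(p, m) for p, m, sd in zip(predicted, measured, measured_sd) if sd < cutoff]
        if len(kept) >= 3:
            xs, ys = zip(*kept)
            results[cutoff] = pearson(xs, ys)
    return results

# Simulated assay with heterogeneous measurement precision.
random.seed(1)
true_effect = [random.random() for _ in range(1000)]
sds = [random.uniform(0.05, 0.6) for _ in true_effect]
measured = [t + random.gauss(0.0, sd) for t, sd in zip(true_effect, sds)]
predicted = [t + random.gauss(0.0, 0.1) for t in true_effect]
res = correlation_by_sd_cutoff(predicted, measured, sds, (0.7, 0.4, 0.2))
for cutoff, r in res.items():
    print(f"SD < {cutoff}: r = {r:.2f} on {sum(sd < cutoff for sd in sds)} variants")
```

Because the predictor is identical at every cutoff, the rise in correlation comes entirely from removing noisy measurements, which is why experimental error can make a predictor look worse than it is.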

In addition to these variant-level challenges, exome-level CAGI challenges have suggested that variant effect prediction methods can help in estimating the disease risk of individuals (Cai et al. 2017; Chandonia et al. 2017; Daneshjou et al. 2017). However, addressing such challenges requires weighing the contribution of each gene to a trait and combining the effects of multiple variants, neither of which is straightforward, so success was limited. Three CAGI challenges involved predicting the risk of Crohn's disease; two of them yielded unreliable performance evaluations due to sample stratification issues, and the third showed AUCs of up to 0.7 (Giollo et al. 2017). Performance was slightly worse for predicting the risk of venous thromboembolism, with AUCs up to 0.65 and accuracies up to 0.63 (McInnes et al. 2019). In a more complex challenge, two methods performed significantly better than chance at matching the clinical descriptions of undiagnosed patients (Kasak et al. 2019b). However, it proved harder to distinguish between individuals with different intellectual disability phenotypes (Carraro et al. 2019). Overall, predicting the risk of individuals for complex diseases remains challenging, and methods predicting variant effects may complement current efforts to resolve the etiology of more cases.
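To make these two difficulties concrete, the sketch below combines variant-level predictions into a naive individual score: each variant above an impact cutoff contributes its predicted impact multiplied by a per-gene weight, and contributions are summed. Everything here is hypothetical, including the genes, weights, and cutoff, and the additive form ignores zygosity, interactions between variants, and non-coding variation; choosing the gene weights is exactly the part that, as noted above, is not straightforward.

```python
def individual_risk_score(variants, gene_weights, impact_threshold=0.5):
    """Naive additive burden score for one individual.

    variants: list of (gene, predicted_impact) pairs, impact scaled to 0-1.
    gene_weights: hypothetical per-gene contribution to the trait.
    Variants below impact_threshold, or in genes without a weight, add nothing.
    """
    score = 0.0
    for gene, impact in variants:
        if impact >= impact_threshold:
            score += gene_weights.get(gene, 0.0) * impact
    return score

gene_weights = {"GENE_A": 2.0, "GENE_B": 0.5}  # hypothetical genes and weights
person_1 = [("GENE_A", 0.9), ("GENE_B", 0.3)]
person_2 = [("GENE_B", 0.8), ("GENE_C", 0.95)]
print(individual_risk_score(person_1, gene_weights),
      individual_risk_score(person_2, gene_weights))
```

Person 2 carries the variant with the highest predicted impact, yet receives the lower score (0.4 versus 1.8) because that variant falls in a gene with no assigned weight, showing how strongly such scores depend on the gene weighting.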

Applications of variant impact prediction methods

Methods predicting variant impact are already used in numerous practical applications, as indicated by the thousands of citations of PolyPhen2 (Adzhubei et al. 2010), SIFT (Kumar et al. 2009), and CADD (Kircher et al. 2014), among other methods. Typically, they are used to assess the impact of new variants of unknown significance, narrow down driver candidates, and support evidence for pathogenic effects. The ACMG and AMP guidelines encourage using multiple lines of computational evidence to support pathogenic or benign classification (Richards et al. 2015). In addition, variant impact prediction methods have been used to guide mutagenesis studies and associate genes with phenotypes. Next, we discuss representative practical applications of prediction methods that illustrate their value in genome interpretation. Although each application involves a specific prediction method, the CAGI experiments suggest that other methods could be equally or more successful in addressing the same scientific questions.
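As a sketch of what "multiple lines of computational evidence" can mean in practice, the function below reports evidence only when several predictors agree on a variant. The ACMG/AMP guidelines list such agreement as supporting evidence (criteria PP3 for a deleterious effect and BP4 for no impact), but the unanimity rule, the predictor names, and the thresholds here are assumptions for illustration, not the guideline's procedure or calibrated cutoffs.

```python
def computational_evidence(scores, thresholds):
    """Summarize several predictors for one variant.

    scores / thresholds: dicts keyed by predictor name, with every score
    oriented so that higher means more damaging. Evidence is reported only
    when all predictors agree; otherwise the result is uninformative.
    """
    calls = [scores[name] >= thresholds[name] for name in scores]
    if all(calls):
        return "supports pathogenic"
    if not any(calls):
        return "supports benign"
    return "uninformative"

# Hypothetical predictors and cutoffs, for illustration only.
thresholds = {"predictor_A": 0.5, "predictor_B": 0.5, "predictor_C": 0.6}
print(computational_evidence({"predictor_A": 0.92, "predictor_B": 0.81, "predictor_C": 0.77}, thresholds))
print(computational_evidence({"predictor_A": 0.92, "predictor_B": 0.81, "predictor_C": 0.30}, thresholds))
print(computational_evidence({"predictor_A": 0.12, "predictor_B": 0.20, "predictor_C": 0.30}, thresholds))
```

Requiring unanimity is conservative: a single discordant predictor turns the second variant into "uninformative", trading sensitivity for fewer misleading calls.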

Guiding mutagenesis studies

Targeted mutagenesis studies can take advantage of variant impact prediction methods to reduce experimental cost and effort while maximizing return without missing key results (Sruthi and Prakash 2020). However, such applications are rare and typically complement other prioritization strategies that account for available protein structures and for methods that predict protein residue importance, such as the methods in Table 2. For example, evolutionarily important residues were used to identify functional motifs in the DNA-dependent protein kinase catalytic subunit, and the analysis of variant impact unveiled functional insights and implications (Lees-Miller et al. 2021). Variant effect prediction methods identified human NAGK polymorphisms that reduced NAGK binding to the dynein subunit DYNLRB1, an interaction that promotes cellular growth and other functions (Dash et al. 2021). Mutation impact and important residues also guided mutagenesis studies aiming to uncover the interaction between the RecA and LexA proteins in E. coli, which controls antibiotic resistance (Adikesavan et al. 2011; Marciano et al. 2014). In G-protein-coupled receptors, variant impact scores correlated with phenotypic change (Gallion et al. 2017; Schonegge et al. 2017), and they were used to select targeted mutations that recode allosteric pathway specificity (Peterson et al. 2015; Rodriguez et al. 2010; Schonegge et al. 2017). More recently, variant impact score analysis guided the development of a mutant esterase that gained stereospecificity while maintaining a 53-substrate repertoire (Cea-Rama et al. 2021). These examples show that variant effect prediction methods can effectively prioritize mutations and reduce the workload of mutagenesis studies.

Supporting clinical associations

Methods predicting variant impact can help associate variants with traits, a task that currently relies on observational statistics in family studies (Borecki and Province 2008) and genome-wide association studies (GWAS) (Bush and Moore 2012; MacArthur et al. 2017). Because of linkage disequilibrium (Pritchard and Przeworski 2001), GWAS variants do not necessarily indicate causal effects (Cooper and Shendure 2011), highlighting the need for a transition to functional associations (Gallagher and Chen-Plotkin 2018). Currently, prediction methods are used for both monogenic and polygenic traits, supporting pathogenic effects for variants observed in cases and showing that candidate disease drivers, collectively, are enriched in pathogenic scores. Any prediction method, or the consensus of multiple methods, can serve this supporting role. A few examples are variants causing FARS2 deficiency (Almannai et al. 2018), ANO5 variants causing gnathodiaphyseal dysplasia (Otaify et al. 2018), DHCR24 variants causing desmosterolosis (Schaaf et al. 2011), and variants in multiple genes causing ALS (Gibson et al. 2017; Kenna et al. 2016), Parkinson's disease (Oluwole et al. 2020), and Alzheimer's disease (Vardarajan et al. 2015). Similar analyses of somatic mutations in tumors revealed strong selection patterns, either across numerous candidate genes (Bailey et al. 2018) or in specific genes such as PTPN12 (Nair et al. 2018), BAP1 (Sharma et al. 2019), and TP53 (Li et al. 2016). Therefore, computational approaches can prioritize somatic variants for their role in cancer, using variant effect predictors and gene features (Kaminker et al. 2007). Prediction methods may also prioritize germline variants of trait-associated genes for further examination, such as in cardiovascular diseases (Rababa'h et al. 2013; Suryavanshi et al. 2018; Wang et al. 2021b).
During the spread of SARS-CoV-2, computational prediction methods provided an overview of functional sites across all SARS-CoV-2 proteins (Wang et al. 2021a) and suggested that mutational hotspots in these proteins can alter protein stability and binding affinity (Teng et al. 2021; Wu et al. 2021; Zou et al. 2020). These studies show that prediction methods have practical value for a variety of clinical associations, in both Mendelian and complex diseases, including cancer.

Informing diagnoses and clinical decision making

Variant impact predictors may also extend from the bench to the bedside. First-tier clinical tests typically use chromosomal microarrays, with a reported diagnostic yield of 15–20% in patients with developmental disabilities or congenital anomalies (Miller et al. 2010). Unexplained cases may proceed to whole exome sequencing (WES) or whole genome sequencing (WGS) to close this diagnostic gap. Variant impact predictors can aid in interpreting the sequenced variants and offer a significant increase in diagnostic yield (Grunseich et al. 2021; Stavropoulos et al. 2016). Pharmacogenomics studies may use prediction methods to interpret the impact of amino acid variations on drug metabolism (Isvoran et al. 2017; Matimba et al. 2009). Additionally, the predicted pathogenicity of somatic mutations in cancer was incorporated into a classification system that may inform patient management (Sukhai et al. 2016). In a study of how TP53 variants affect the outcomes of head and neck cancer patients, Evolutionary Action stratified overall survival and time to metastasis (Neskey et al. 2015), indicated resistance to cisplatin therapy (Osman et al. 2015b), and prompted suggestions for personalized treatment (Osman et al. 2015a). Similarly, survival stratification was obtained in two independent studies of patients with colorectal liver metastases (Chun et al. 2019) and with myelodysplastic syndrome (Kanagal-Shamanna et al. 2021). Therefore, prediction methods contribute to clinical diagnosis and can open paths toward precision medicine.

Associating genes to phenotype

Variant impact scores may also lead to associations of genes with traits. Typically, gene-trait associations rely on detecting selection patterns in a group of individuals who share the trait (cases) compared with unaffected individuals (controls). These patterns arise because trait driver genes harbor several pathogenic variants in cases, in addition to non-pathogenic variants that may appear in either cases or controls. Current gene discovery methods may quantify patterns such as whether a gene carries more mutations than expected (Lawrence et al. 2013), whether the mutations cluster in the protein structure or sequence rather than being spread uniformly (Tamborero et al. 2013), and whether the nucleotide context of the mutations differs from that of all other mutations (Dietlein et al. 2020). Since driver variants have higher predicted pathogenicity than random nucleotide substitutions, methods that predict protein function effects offer an additional pattern for pointing to candidate trait-driver genes. This selection pattern is orthogonal and complementary to the measures above, which makes variant impact prediction methods valuable for gene discovery. Next, we note such applications to somatic, de novo, and inherited variants.
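The enrichment pattern described above can be illustrated with a simple permutation test. The sketch below is a minimal, hypothetical example, not the procedure of any cited method. It assumes that impact scores on an arbitrary 0–100 scale are available both for the variants observed in a gene across cases and for every possible substitution in that gene. It then estimates how often random draws of the same number of substitutions reach the observed mean score.

```python
import random

def score_enrichment_pvalue(observed_scores, background_scores, n_perm=10000, seed=0):
    """One-sided empirical p-value that the variants observed in a gene have a
    higher mean predicted impact than random substitutions in the same gene.

    observed_scores: impact scores of variants seen in cases (hypothetical 0-100 scale)
    background_scores: scores of all possible substitutions in the gene (null pool)
    """
    rng = random.Random(seed)  # fixed seed for reproducibility
    k = len(observed_scores)
    # With the same number of variants k, comparing sums is equivalent to comparing means
    observed_sum = sum(observed_scores)
    hits = 0
    for _ in range(n_perm):
        # Draw k distinct substitutions uniformly (a simplifying assumption)
        if sum(rng.sample(background_scores, k)) >= observed_sum:
            hits += 1
    # Add-one correction keeps the estimate above zero
    return (hits + 1) / (n_perm + 1)

background = list(range(101))      # hypothetical scores of all possible substitutions
observed = [90, 95, 99, 85, 92]    # hypothetical scores of variants seen in cases
print(score_enrichment_pvalue(observed, background))  # well below 0.01 for this skewed toy example
```

A small p-value suggests that the gene's variants are skewed toward high predicted impact. Real analyses would usually weight the null pool by mutational context rather than sampling it uniformly, as assumed here, and would correct for testing many genes.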

Genes under selection in somatic mutations

Many cancer studies use variant impact prediction methods either as supporting evidence for the pathogenicity of gene variants (Bailey et al. 2018; Cancer Genome Atlas Research Network 2011) or as the main evidence for establishing a gene-cancer link through an automated discovery process (Davoli et al. 2013; Gonzalez-Perez and Lopez-Bigas 2012; Hsu et al. 2022; Parvandeh et al. 2022). The underlying hypotheses are that most somatic mutations are passengers (i.e., they do not contribute to oncogenesis) and that driver mutations (i.e., those that contribute to the development of cancer) occur selectively in specific genes (Greenman et al. 2007; Stratton et al. 2009). Because driver variants affect protein function, prediction methods should, statistically, score driver variants as more pathogenic than passenger variants (Carter et al. 2009; Chen et al. 2020; Cline et al. 2019; Mullany et al. 2015; Reva et al. 2011) and thereby point to cancer driver genes. Moreover, protein effect prediction methods can indicate the role of each gene in cancer: tumor suppressor genes carry mostly loss-of-function variants with high impact scores, whereas oncogenes carry mostly gain-of-function variants with intermediate to high scores (Hsu et al. 2022; Shi and Moult 2011). Gene pathway information may complement variant impact prediction methods in finding cancer driver genes (Cancer Genome Atlas Research Network 2017), even for small patient sets, such as 29 patients with sporadic parathyroid cancer (Clarke et al. 2019). These applications suggest that variant impact prediction methods can help find candidate driver genes both across entire cancer cohorts and within individual cancer types.

Genes under selection in de novo mutations

Variant impact prediction methods are commonly used to prioritize de novo variants by their functional effects (Hu et al. 2016; Pejaver et al. 2020; Wang et al. 2019; Willsey et al. 2017). However, de novo variants are typically absent from the general population, and each individual harbors fewer than two coding de novo variants (Iossifov et al. 2012; Sevim Bayrak et al. 2020). This sparsity limits gene-level analysis of de novo variants, even in large datasets such as the Simons Simplex Collection (SSC) (Fischbach and Lord 2010), which contains sequencing data from more than 2500 families with at least one child diagnosed with autism spectrum disorder (ASD) (Iossifov et al. 2012; Lord et al. 2020, 2018). Typically, such data are analyzed in the context of known gene-phenotype associations and the human interactome network (Chen et al. 2018). Variant impact prediction methods, such as MutPred2 (Pejaver et al. 2020) and VIPUR (Buja et al. 2018), have shown that de novo variants in ASD cases include a higher fraction of predicted pathogenic variants than those in healthy siblings. Going one step further, a study using the Evolutionary Action (EA) method and gene pathway information, without prior knowledge of phenotype associations, identified 398 genes (representing 23 pathways) as candidate ASD drivers, based on the enrichment of de novo variants with pathogenic scores (Koire et al. 2021). The same study proposed polygenic risk scores based on the EA scores of either de novo or rare inherited variants in candidate genes and showed that these scores correlated with the intelligence quotient (IQ) of patients. These correlations were stronger when the contribution of each gene was weighted by the Residual Variation Intolerance Score (RVIS), a measure of genic intolerance to mutations (Petrovski et al. 2013). Similar analyses can be done for other phenotypes, such as congenital heart disease (Jin et al. 2017), where cases appear to have a higher fraction of predicted pathogenic variants than healthy controls (Qi et al. 2021). In such large family datasets, de novo mutations can be analyzed with variant impact predictions, together with other information, to discover new genes and help decode the genotype–phenotype relationship.
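To make the idea of a weighted, impact-based risk score concrete, the sketch below sums the predicted impact of an individual's variants in candidate genes and scales each gene's contribution by a per-gene weight. It then correlates the resulting scores with a quantitative phenotype. This is a hypothetical illustration under stated assumptions, not the scoring scheme of Koire et al. (2021). The 0–100 score scale, the gene names, the phenotype values, and the weights are all placeholders; the weights could, for instance, be derived from RVIS so that more intolerant genes count more.

```python
from math import sqrt

def weighted_burden(variants, gene_weights, candidate_genes):
    """Weighted sum of predicted impact over one individual's variants.

    variants: list of (gene, score) pairs, score on an assumed 0-100 scale
    gene_weights: hypothetical per-gene weights (e.g. larger for genes that
                  RVIS ranks as more intolerant); genes without a weight get 1.0
    candidate_genes: only variants in these genes contribute
    """
    return sum(score / 100.0 * gene_weights.get(gene, 1.0)
               for gene, score in variants if gene in candidate_genes)

def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)

candidates = {"GENE_A", "GENE_B"}               # hypothetical candidate genes
weights = {"GENE_A": 2.0, "GENE_B": 0.5}        # hypothetical intolerance weights
cohort = [[], [("GENE_B", 80)], [("GENE_A", 50)],
          [("GENE_A", 90), ("GENE_OTHER", 99)]]  # GENE_OTHER is not a candidate
iq = [110, 104, 95, 82]                         # hypothetical phenotype values
scores = [weighted_burden(v, weights, candidates) for v in cohort]
print(pearson(scores, iq))  # close to -1 for this toy data
```

In this toy example, individuals with a higher weighted burden of predicted-damaging variants have lower phenotype values, so the correlation is negative.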

Genes under selection in inherited mutations

Case–control studies are routinely designed to discover genes associated with a particular trait. For Mendelian traits, these associations are relatively straightforward, and methods predicting protein function effects can help (Carter et al. 2013; Hu et al. 2013). For complex traits, the standard is gene-based GWAS, which aggregates all variants within a gene rather than testing each variant individually (Huang et al. 2011; Liu et al. 2010), but phenome-wide association studies can serve the same purpose (Denny et al. 2010). However, spurious associations arising from correlations with the true risk factors can lead to false-positive results (Risch 2000). Mendelian randomization may be used to overcome confounding (Grover et al. 2017), and complementary analyses, including but not limited to literature text mining (Bhasuran and Natarajan 2018; Zhou and Fu 2018) and gene co-expression analyses (van Dam et al. 2018), can also help. Additionally, variant impact predictors can help deprioritize variants predicted to have low functional impact, thus reducing such false-positive discoveries (Lee et al. 2014; Wei et al. 2011). For example, FATHMM-XF, SIFT, PolyPhen2, and CADD were used to prioritize 190 candidate genes affecting neuroticism (Belonogova et al. 2021), and similar approaches were applied to other traits (Bacchelli et al. 2016; Zhang et al. 2018). In CAGI challenges, many participants predicted the risk of individuals from genomic data and matched genotypes to phenotypes better than random (Kasak et al. 2019b; Katsonis and Lichtarge 2019; Pal et al. 2017, 2020; Wang and Bromberg 2019). The imputed Deviation in Evolutionary Action Load (iDEAL) approach used protein function predictions to discover trait drivers (Kim et al. 2021). Specifically, it compared late-onset Alzheimer's disease (AD) patients who paradoxically carried the AD-protective APOE ɛ2 allele with healthy individuals who carried the AD-risk APOE ɛ4 allele. 
This study identified 216 genes with differential Evolutionary Action load between the two populations. These genes showed robust predictive power even in an independent set of APOE ɛ3 homozygous individuals and are potential drug targets. Therefore, there is strong evidence that methods predicting protein function effects have the potential to help with genome interpretation of complex diseases in a post-GWAS era.
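A simplified, hypothetical comparison of gene-level load between two groups is sketched below. It does not reproduce the iDEAL approach. It assumes only that an individual's load for a gene is the plain sum of the impact scores of their variants in that gene (0 if they have none). Significance of the group difference is then estimated by permuting group labels. Gene names and scores are placeholders.

```python
import random

def gene_load(variants, gene):
    """Sum of predicted impact scores (assumed 0-100 scale) of one
    individual's variants in `gene`; 0 if the individual has none."""
    return sum(score for g, score in variants if g == gene)

def differential_load(group_a, group_b, gene, n_perm=5000, seed=0):
    """Difference in mean per-individual load of `gene` between two groups,
    with a two-sided p-value from permuting group labels.

    group_a, group_b: lists of individuals; each individual is a list of
    (gene, score) pairs.
    """
    loads_a = [gene_load(v, gene) for v in group_a]
    loads_b = [gene_load(v, gene) for v in group_b]
    n_a = len(loads_a)

    def mean_diff(values):
        # The first n_a entries play the role of group A, the rest group B
        return sum(values[:n_a]) / n_a - sum(values[n_a:]) / (len(values) - n_a)

    pooled = loads_a + loads_b
    observed = mean_diff(pooled)
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_perm):
        rng.shuffle(pooled)  # reassign individuals to groups at random
        if abs(mean_diff(pooled)) >= abs(observed):
            hits += 1
    return observed, (hits + 1) / (n_perm + 1)

cases = [[("GENE_X", 90)], [("GENE_X", 80), ("GENE_X", 70)],
         [("GENE_X", 95)], [("GENE_X", 85)], [("GENE_X", 88)]]
controls = [[], [("GENE_Y", 90)], [], [("GENE_X", 10)], [], []]
diff, p = differential_load(cases, controls, "GENE_X")
print(diff, p)  # diff is about 99.9; p is small because few relabelings are as extreme
```

In a genome-wide setting, this test would be repeated for every gene, and the resulting p-values would need multiple-testing correction.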

Discussion

This review of current computational estimates of protein function effects due to variants illustrates several practical applications. These estimates routinely guide experimental studies of protein structure and function, as well as clinical studies of variants of unknown significance that are candidate disease drivers. Most recently, they have played a major role in identifying new genes associated with traits, based on somatic, de novo, or inherited variants. This ability to translate genomic data into quantitative traits raises hope for improved diagnostic tests with polygenic risk scores that account for functional effects rather than relying only on observational statistics. A caveat is that most methods remain rooted in homology information, so their scores tend to assess long-term "evolutionary" effects. Generally, and depending on the prediction method and the test data, these effects tend to align with clinical or experimental impact, as shown by strong correlations in extensive validation studies and objective assessments. In other words, the fitness landscape may appear similar at different scales.

Criticism and value

In the past, variant impact prediction methods drew pointed criticism (Flanagan et al. 2010; Mahmood et al. 2017; Tchernitchko et al. 2004), which curtailed their use as prognostic tools. Most often, the criticism was fueled on the one hand by a demand for nearly perfect accuracy in clinical diagnostics (Walters-Sen et al. 2015), and on the other hand by disagreements, first, between different methods (Chun and Fay 2009), and second, between prediction methods and experimental data or clinical annotations (Mahmood et al. 2017; Miller et al. 2019). To some degree, these discrepancies stem from misalignments between the hypotheses adopted by method developers and those of data analysts: a key is useful only when it is applied to the right lock. Given epistatic interactions, some inaccuracy is expected for single-variant estimates, since each individual has a unique genetic, epigenetic, and environmental background that may modify the impact of a given variant. These factors may result in incomplete penetrance, where two individuals carrying the same variant can present with either a benign or a disease phenotype (Cooper et al. 2013; Waalen and Beutler 2009; Zlotogora 2003). Predictors can explicitly capture dependencies between residue positions to improve accuracy (Hopf et al. 2017), and focused methods can detect covariation signals in multiple sequence alignments to identify residue pairs with epistatic effects (Jones et al. 2012; Morcos et al. 2011; Salinas and Ranganathan 2018; Shen and Li 2016). However, most predictors of protein function effects provide population-level estimates, in which individual background effects are averaged out over cohorts, suggesting that they are most informative for high-penetrance genes and disorders. 
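The covariation signal mentioned above can be illustrated with mutual information (MI) between two alignment columns, the simplest covariation measure. This is a minimal sketch for intuition only, and it is not the algorithm of any cited method. Raw MI is confounded by phylogenetic relatedness and by indirect couplings, which approaches such as direct coupling analysis are designed to correct. Skipping sequences with a gap at either position and using natural logarithms are simplifying choices made here. The toy alignment is hypothetical.

```python
from collections import Counter
from math import log

def column_mutual_information(msa, i, j):
    """Mutual information (in nats) between columns i and j of an alignment.

    msa: list of equal-length aligned sequences (strings). Sequences with a
    gap ('-') at either position are skipped.
    """
    pairs = [(s[i], s[j]) for s in msa if s[i] != '-' and s[j] != '-']
    n = len(pairs)
    if n == 0:
        return 0.0
    joint = Counter(pairs)                  # counts of residue pairs (a, b)
    left = Counter(a for a, _ in pairs)     # marginal counts in column i
    right = Counter(b for _, b in pairs)    # marginal counts in column j
    mi = 0.0
    for (a, b), c in joint.items():
        p_ab = c / n
        mi += p_ab * log(p_ab / ((left[a] / n) * (right[b] / n)))
    return mi

toy_msa = ["AKE", "AKE", "DRE", "DRE"]   # hypothetical alignment
print(column_mutual_information(toy_msa, 0, 1))  # about 0.693 (log 2): columns 0 and 1 covary
print(column_mutual_information(toy_msa, 0, 2))  # 0.0: column 2 is invariant
```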
Strictly speaking, homology-based prediction methods ignore context and answer whether a specific variant is pathogenic in an "evolutionary sense," which at best matches the human population at large rather than capturing the context-dependent effects of the variant (DiGiammarino et al. 2002). The choice of multiple sequence alignment input defines the "average context" of the computation, and the alignment's biases and errors will affect prediction accuracy (Hicks et al. 2011). Each algorithmic approach also weighs input features differently from other methods, which may influence prediction accuracy dramatically. Since both the alignment input and the algorithmic approach affect accuracy, we should avoid generalizing performance conclusions from a single analysis. Moreover, assessors should ensure that their hypotheses do not conflict with those underlying each prediction method. The CAGI challenges offer useful insights into the performance of different methods, since method developers can adapt their approach to the needs of each challenge and independent assessors ensure objectivity. These assessments demonstrate progress in the field of variant impact prediction and the need to adjust predictors to specific tasks. Newer approaches achieve strong correlations with experimental assay data and perform consistently better than well-known methods (Katsonis and Lichtarge 2017). Such correlations may improve when experimental noise is reduced, for example by using only data points with small standard deviations (Katsonis and Lichtarge 2019) or by combining multiple experimental assays (Gallion et al. 2017). This suggests that even systematic assessments may underestimate the performance of prediction methods.
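The effect of filtering noisy measurements on a prediction–assay correlation can be sketched as follows. The example computes Spearman's rank correlation between predicted impact and measured activity. It can optionally keep only variants whose replicate standard deviation falls below a chosen cutoff. The data, the cutoff, and the sign convention are assumptions for illustration. Under that convention, higher predicted impact should accompany lower activity, so a good predictor yields a negative correlation.

```python
from math import sqrt

def average_ranks(values):
    """1-based ranks; tied values receive the mean of the ranks they span."""
    order = sorted(range(len(values)), key=lambda k: values[k])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over a run of tied values
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks

def pearson(x, y):
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)

def spearman(x, y):
    """Spearman's rho computed as the Pearson correlation of average ranks."""
    return pearson(average_ranks(x), average_ranks(y))

def filtered_spearman(predicted, assay_mean, assay_sd, max_sd=None):
    """Correlate predictions with assay means, optionally dropping noisy points.

    Returns (rho, number_of_variants_used)."""
    rows = list(zip(predicted, assay_mean, assay_sd))
    if max_sd is not None:
        rows = [r for r in rows if r[2] <= max_sd]
    return spearman([r[0] for r in rows], [r[1] for r in rows]), len(rows)

predicted = [10, 20, 30, 40, 50, 60]          # hypothetical impact scores
activity = [1.0, 0.9, 0.3, 0.7, 0.4, 0.1]     # hypothetical assay means (wild type = 1.0)
sd = [0.05, 0.05, 0.60, 0.05, 0.05, 0.05]     # hypothetical replicate SDs
print(filtered_spearman(predicted, activity, sd))              # rho about -0.83 on 6 variants
print(filtered_spearman(predicted, activity, sd, max_sd=0.1))  # (-1.0, 5)
```

Here, removing the single noisy measurement turns an imperfect ranking into a perfect one. This mirrors, in miniature, how noise in the reference data can make a predictor look worse than it is.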

Predicting the impact of other variant types

Whole genome sequencing shows that non-synonymous variants make up less than 0.3% of all variant calls (Shen et al. 2013), so there is growing interest in methods that predict the impact of other variant types. Stop-gain and frameshift insertion and deletion (fs-indel) variants truncate the protein sequence and are traditionally viewed as pathogenic, yet many of them appear frequently in human genomes, even in a homozygous state (MacArthur and Tyler-Smith 2010). Non-frameshifting insertion and deletion (indel) variants are also of interest because of their links to diverse clinical effects and their substantial genetic load in most humans (Mullaney et al. 2010). Methods such as SIFT Indel (Hu and Ng 2012), DDIG-in (Folkman et al. 2015), VEST-Indel (Douville et al. 2016), and MutPred-LOF/-Indel (Pagel et al. 2017, 2019) may use homology, structure, intrinsic disorder predictions, and gene importance features to prioritize nonsense and indel variants, with reported balanced accuracies of 80–90% (Douville et al. 2016). PROVEAN (Choi et al. 2012) and MutationTaster2 (Schwarz et al. 2014) also provide predictions for non-frameshifting indels, following the same framework they use for missense variants. CADD (Kircher et al. 2014) is designed to predict the impact of all classes of genetic variation, including splice-site (Rentzsch et al. 2021) and non-coding variants. Methods that focus on splicing effects take the genomic sequence of pre-mRNA transcripts as input and include SpliceAI (Jaganathan et al. 2019), MutPred Splice (Mort et al. 2014), Human Splicing Finder (Desmet et al. 2009), SPiCE (Leman et al. 2018), and Skippy (Woolfe et al. 2010). Methods that focus on noncoding variant effects rely on features such as sequence conservation and constraint scores (Dousse et al. 2016; Garber et al. 2009; Siepel et al. 2005), in silico predictions of transcription factor binding sites, enhancer regions, and long noncoding RNAs (lncRNAs) (Abugessaisa et al. 2021; Fu et al. 2014; Loots and Ovcharenko 2004; Pachkov et al. 2013), and experimental evidence from the Encyclopedia of DNA Elements (ENCODE) (Davis et al. 2018; Encode Project Consortium 2012), including transcription factor ChIP-seq, DNA methylation array, and small RNA-seq data. Non-coding functional impact predictors include, but are not limited to, LINSIGHT (Huang et al. 2017b), GenoCanyon (Lu et al. 2015), FATHMM-MKL (Shihab et al. 2015), FATHMM-XF (Rogers et al. 2018), PAFA (Zhou and Zhao 2018), DIVAN (Chen et al. 2016), and GWAVA (Ritchie et al. 2014). Synonymous variants, despite often being assumed benign, are implicated in many diseases (Zeng and Bromberg 2019). SiVA (Buske et al. 2013), TraP (Gelfman et al. 2017), DDIG-SN (Livingstone et al. 2017), regSNPs-splicing (Zhang et al. 2017b), IDSV (Shi et al. 2019), and synVep (Zeng et al. 2021) have used conservation, RNA, DNA, splicing, and protein features to prioritize synonymous variants, with typical reported AUCs of 0.85–0.90 (Zeng and Bromberg 2019). Although these methods still need objective assessment, they may be useful for a transition to whole-genome interpretation.
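Balanced accuracy and the area under the ROC curve (AUC), the metrics quoted above, can be computed from a predictor's scores and a set of labeled variants as in the sketch below. It assumes binary labels (1 for deleterious, 0 for benign) and a user-chosen score threshold for balanced accuracy. Published evaluations differ in their benchmark sets and protocols, so figures reported by different studies should be compared with care. The toy scores and labels are hypothetical.

```python
def balanced_accuracy(scores, labels, threshold):
    """Mean of sensitivity and specificity; a variant is called deleterious
    when its score is at or above `threshold`. labels: 1 = deleterious, 0 = benign."""
    tp = sum(1 for s, y in zip(scores, labels) if y == 1 and s >= threshold)
    fn = sum(1 for s, y in zip(scores, labels) if y == 1 and s < threshold)
    tn = sum(1 for s, y in zip(scores, labels) if y == 0 and s < threshold)
    fp = sum(1 for s, y in zip(scores, labels) if y == 0 and s >= threshold)
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    return (sensitivity + specificity) / 2

def roc_auc(scores, labels):
    """Probability that a random deleterious variant outscores a random benign
    one (ties count one half); equivalent to the area under the ROC curve."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))

toy_scores = [0.9, 0.8, 0.7, 0.4, 0.6, 0.3, 0.2, 0.1]  # hypothetical predictor output
toy_labels = [1, 1, 1, 1, 0, 0, 0, 0]                  # hypothetical truth labels
print(balanced_accuracy(toy_scores, toy_labels, 0.5))  # 0.75
print(roc_auc(toy_scores, toy_labels))                 # 0.9375
```

Balanced accuracy averages sensitivity and specificity, so it is not inflated when one class dominates the benchmark. AUC is threshold-free. The pairwise loop is quadratic and is meant for clarity; large benchmarks would use a rank-based formula instead.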

Significance in personalized therapy

Genome interpretation relies on classifying genetic variants as pathogenic or benign, which requires estimating the impact of every single variant. Clinical associations and experimental data are too limited to characterize all variants, since more than 98% of the variants in human exomes have a frequency below 1% (Karczewski et al. 2020; Van Hout et al. 2020) and over 40% of ClinVar entries are catalogued as variants of unknown significance (Henrie et al. 2018). Protein function effect prediction methods have shown strong correlations with established associations and may be used cautiously to start bridging this gap in genome interpretation. With the advent of less costly sequencing technologies, clinicians can read patients’ genomes and search for precise therapies tailored to the genetic etiology of the disease. The insights provided by variant impact prediction methods may assist clinicians in selecting beneficial treatments.

Availability of data and material

Not applicable.

Code availability

Not applicable.

References

1000 Genomes Project Consortium, Abecasis GR, Altshuler D, Auton A, Brooks LD, Durbin RM, Gibbs RA, Hurles ME, McVean GA (2010) A map of human genome variation from population-scale sequencing. Nature 467:1061–1073. https://doi.org/10.1038/nature09534

Abugessaisa I, Ramilowski JA, Lizio M, Severin J, Hasegawa A, Harshbarger J, Kondo A, Noguchi S, Yip CW, Ooi JLC, Tagami M, Hori F, Agrawal S, Hon CC, Cardon M, Ikeda S, Ono H, Bono H, Kato M, Hashimoto K, Bonetti A, Kato M, Kobayashi N, Shin J, de Hoon M, Hayashizaki Y, Carninci P, Kawaji H, Kasukawa T (2021) FANTOM enters 20th year: expansion of transcriptomic atlases and functional annotation of non-coding RNAs. Nucleic Acids Res 49:D892–D898. https://doi.org/10.1093/nar/gkaa1054

Adikesavan AK, Katsonis P, Marciano DC, Lua R, Herman C, Lichtarge O (2011) Separation of recombination and SOS response in Escherichia coli RecA suggests LexA interaction sites. PLoS Genet 7:e1002244. https://doi.org/10.1371/journal.pgen.1002244

Adzhubei IA, Schmidt S, Peshkin L, Ramensky VE, Gerasimova A, Bork P, Kondrashov AS, Sunyaev SR (2010) A method and server for predicting damaging missense mutations. Nat Methods 7:248–249. https://doi.org/10.1038/nmeth0410-248

Ahmad S, Gromiha M, Fawareh H, Sarai A (2004) ASAView: database and tool for solvent accessibility representation in proteins. BMC Bioinform 5:51. https://doi.org/10.1186/1471-2105-5-51

Ainscough BJ, Griffith M, Coffman AC, Wagner AH, Kunisaki J, Choudhary MN, McMichael JF, Fulton RS, Wilson RK, Griffith OL, Mardis ER (2016) DoCM: a database of curated mutations in cancer. Nat Methods 13:806–807. https://doi.org/10.1038/nmeth.4000

Alirezaie N, Kernohan KD, Hartley T, Majewski J, Hocking TD (2018) ClinPred: prediction tool to identify disease-relevant nonsynonymous single-nucleotide variants. Am J Hum Genet 103:474–483. https://doi.org/10.1016/j.ajhg.2018.08.005

All of Us Research Program Investigators, Denny JC, Rutter JL, Goldstein DB, Philippakis A, Smoller JW, Jenkins G, Dishman E (2019) The “All of Us” Research Program. N Engl J Med 381:668–676. https://doi.org/10.1056/NEJMsr1809937

Almannai M, Wang J, Dai H, El-Hattab AW, Faqeih EA, Saleh MA, Al Asmari A, Alwadei AH, Aljadhai YI, AlHashem A, Tabarki B, Lines MA, Grange DK, Benini R, Alsaman AS, Mahmoud A, Katsonis P, Lichtarge O, Wong LC (2018) FARS2 deficiency; new cases, review of clinical, biochemical, and molecular spectra, and variants interpretation based on structural, functional, and evolutionary significance. Mol Genet Metab 125:281–291. https://doi.org/10.1016/j.ymgme.2018.07.014

Ameur A, Dahlberg J, Olason P, Vezzi F, Karlsson R, Martin M, Viklund J, Kahari AK, Lundin P, Che H, Thutkawkorapin J, Eisfeldt J, Lampa S, Dahlberg M, Hagberg J, Jareborg N, Liljedahl U, Jonasson I, Johansson A, Feuk L, Lundeberg J, Syvanen AC, Lundin S, Nilsson D, Nystedt B, Magnusson PK, Gyllensten U (2017) SweGen: a whole-genome data resource of genetic variability in a cross-section of the Swedish population. Eur J Hum Genet 25:1253–1260. https://doi.org/10.1038/ejhg.2017.130

Andreoletti G, Pal LR, Moult J, Brenner SE (2019) Reports from the fifth edition of CAGI: the critical assessment of genome interpretation. Hum Mutat 40:1197–1201. https://doi.org/10.1002/humu.23876

Armon A, Graur D, Ben-Tal N (2001) ConSurf: an algorithmic tool for the identification of functional regions in proteins by surface mapping of phylogenetic information. J Mol Biol 307:447–463. https://doi.org/10.1006/jmbi.2000.4474

Bacchelli E, Cainazzo MM, Cameli C, Guerzoni S, Martinelli A, Zoli M, Maestrini E, Pini LA (2016) A genome-wide analysis in cluster headache points to neprilysin and PACAP receptor gene variants. J Headache Pain 17:114. https://doi.org/10.1186/s10194-016-0705-y

Backman JD, Li AH, Marcketta A, Sun D, Mbatchou J, Kessler MD, Benner C, Liu D, Locke AE, Balasubramanian S, Yadav A, Banerjee N, Gillies CE, Damask A, Liu S, Bai X, Hawes A, Maxwell E, Gurski L, Watanabe K, Kosmicki JA, Rajagopal V, Mighty J, Regeneron Genetics Center, DiscovEhr JM, Mitnaul L, Stahl E, Coppola G, Jorgenson E, Habegger L, Salerno WJ, Shuldiner AR, Lotta LA, Overton JD, Cantor MN, Reid JG, Yancopoulos G, Kang HM, Marchini J, Baras A, Abecasis GR, Ferreira MAR (2021) Exome sequencing and analysis of 454,787 UK Biobank participants. Nature 599:628–634. https://doi.org/10.1038/s41586-021-04103-z

Baek M, DiMaio F, Anishchenko I, Dauparas J, Ovchinnikov S, Lee GR, Wang J, Cong Q, Kinch LN, Schaeffer RD, Millan C, Park H, Adams C, Glassman CR, DeGiovanni A, Pereira JH, Rodrigues AV, van Dijk AA, Ebrecht AC, Opperman DJ, Sagmeister T, Buhlheller C, Pavkov-Keller T, Rathinaswamy MK, Dalwadi U, Yip CK, Burke JE, Garcia KC, Grishin NV, Adams PD, Read RJ, Baker D (2021) Accurate prediction of protein structures and interactions using a three-track neural network. Science 373:871–876. https://doi.org/10.1126/science.abj8754

Bailey MH, Tokheim C, Porta-Pardo E, Sengupta S, Bertrand D, Weerasinghe A, Colaprico A, Wendl MC, Kim J, Reardon B, Ng PK, Jeong KJ, Cao S, Wang Z, Gao J, Gao Q, Wang F, Liu EM, Mularoni L, Rubio-Perez C, Nagarajan N, Cortes-Ciriano I, Zhou DC, Liang WW, Hess JM, Yellapantula VD, Tamborero D, Gonzalez-Perez A, Suphavilai C, Ko JY, Khurana E, Park PJ, Van Allen EM, Liang H, MC3 Working Group, Cancer Genome Atlas Research Network, Lawrence MS, Godzik A, Lopez-Bigas N, Stuart J, Wheeler D, Getz G, Chen K, Lazar AJ, Mills GB, Karchin R, Ding L (2018) Comprehensive characterization of cancer driver genes and mutations. Cell 173:371–385.e18. https://doi.org/10.1016/j.cell.2018.02.060

Bartha I, Rausell A, McLaren PJ, Mohammadi P, Tardaguila M, Chaturvedi N, Fellay J, Telenti A (2015) The characteristics of heterozygous protein truncating variants in the human genome. PLoS Comput Biol 11:e1004647. https://doi.org/10.1371/journal.pcbi.1004647

Baugh EH, Simmons-Edler R, Muller CL, Alford RF, Volfovsky N, Lash AE, Bonneau R (2016) Robust classification of protein variation using structural modelling and large-scale data integration. Nucleic Acids Res 44:2501–2513. https://doi.org/10.1093/nar/gkw120

Beecham GW, Bis JC, Martin ER, Choi SH, DeStefano AL, van Duijn CM, Fornage M, Gabriel SB, Koboldt DC, Larson DE, Naj AC, Psaty BM, Salerno W, Bush WS, Foroud TM, Wijsman E, Farrer LA, Goate A, Haines JL, Pericak-Vance MA, Boerwinkle E, Mayeux R, Seshadri S, Schellenberg G (2017) The Alzheimer’s disease sequencing project: study design and sample selection. Neurol Genet 3:e194. https://doi.org/10.1212/NXG.0000000000000194

Belonogova NM, Zorkoltseva IV, Tsepilov YA, Axenovich TI (2021) Gene-based association analysis identifies 190 genes affecting neuroticism. Sci Rep 11:2484. https://doi.org/10.1038/s41598-021-82123-5

Bendl J, Stourac J, Salanda O, Pavelka A, Wieben ED, Zendulka J, Brezovsky J, Damborsky J (2014) PredictSNP: robust and accurate consensus classifier for prediction of disease-related mutations. PLoS Comput Biol 10:e1003440. https://doi.org/10.1371/journal.pcbi.1003440

Bendl J, Musil M, Stourac J, Zendulka J, Damborsky J, Brezovsky J (2016) PredictSNP2: a unified platform for accurately evaluating SNP effects by exploiting the different characteristics of variants in distinct genomic regions. PLoS Comput Biol 12:e1004962. https://doi.org/10.1371/journal.pcbi.1004962

Bergstrom A, McCarthy SA, Hui R, Almarri MA, Ayub Q, Danecek P, Chen Y, Felkel S, Hallast P, Kamm J, Blanche H, Deleuze JF, Cann H, Mallick S, Reich D, Sandhu MS, Skoglund P, Scally A, Xue Y, Durbin R, Tyler-Smith C (2020) Insights into human genetic variation and population history from 929 diverse genomes. Science. https://doi.org/10.1126/science.aay5012

Berliner N, Teyra J, Colak R, Garcia Lopez S, Kim PM (2014) Combining structural modeling with ensemble machine learning to accurately predict protein fold stability and binding affinity effects upon mutation. PLoS One 9:e107353. https://doi.org/10.1371/journal.pone.0107353

Bhasuran B, Natarajan J (2018) Automatic extraction of gene-disease associations from literature using joint ensemble learning. PLoS One 13:e0200699. https://doi.org/10.1371/journal.pone.0200699

Bocchini CE, Nahmod K, Katsonis P, Kim S, Kasembeli MM, Freeman A, Lichtarge O, Makedonas G, Tweardy DJ (2016) Protein stabilization improves STAT3 function in autosomal dominant hyper-IgE syndrome. Blood 128:3061–3072. https://doi.org/10.1182/blood-2016-02-702373

Borecki IB, Province MA (2008) Genetic and genomic discovery using family studies. Circulation 118:1057–1063. https://doi.org/10.1161/CIRCULATIONAHA.107.714592

Bromberg Y, Rost B (2007) SNAP: predict effect of non-synonymous polymorphisms on function. Nucleic Acids Res 35:3823–3835. https://doi.org/10.1093/nar/gkm238

Buja A, Volfovsky N, Krieger AM, Lord C, Lash AE, Wigler M, Iossifov I (2018) Damaging de novo mutations diminish motor skills in children on the autism spectrum. Proc Natl Acad Sci U S A 115:E1859–E1866. https://doi.org/10.1073/pnas.1715427115

Bush WS, Moore JH (2012) Chapter 11: Genome-wide association studies. PLoS Comput Biol 8:e1002822. https://doi.org/10.1371/journal.pcbi.1002822

Buske OJ, Manickaraj A, Mital S, Ray PN, Brudno M (2013) Identification of deleterious synonymous variants in human genomes. Bioinformatics 29:1843–1850. https://doi.org/10.1093/bioinformatics/btt308

Bycroft C, Freeman C, Petkova D, Band G, Elliott LT, Sharp K, Motyer A, Vukcevic D, Delaneau O, O’Connell J, Cortes A, Welsh S, Young A, Effingham M, McVean G, Leslie S, Allen N, Donnelly P, Marchini J (2018) The UK Biobank resource with deep phenotyping and genomic data. Nature 562:203–209. https://doi.org/10.1038/s41586-018-0579-z

Cai B, Li B, Kiga N, Thusberg J, Bergquist T, Chen YC, Niknafs N, Carter H, Tokheim C, Beleva-Guthrie V, Douville C, Bhattacharya R, Yeo HTG, Fan J, Sengupta S, Kim D, Cline M, Turner T, Diekhans M, Zaucha J, Pal LR, Cao C, Yu CH, Yin Y, Carraro M, Giollo M, Ferrari C, Leonardi E, Tosatto SCE, Bobe J, Ball M, Hoskins RA, Repo S, Church G, Brenner SE, Moult J, Gough J, Stanke M, Karchin R, Mooney SD (2017) Matching phenotypes to whole genomes: Lessons learned from four iterations of the personal genome project community challenges. Hum Mutat 38:1266–1276. https://doi.org/10.1002/humu.23265

Calabrese R, Capriotti E, Fariselli P, Martelli PL, Casadio R (2009) Functional annotations improve the predictive score of human disease-related mutations in proteins. Hum Mutat 30:1237–1244. https://doi.org/10.1002/humu.21047

Cancer Genome Atlas Research Network (2011) Integrated genomic analyses of ovarian carcinoma. Nature 474:609–615. https://doi.org/10.1038/nature10166

Cancer Genome Atlas Research Network (2017) Comprehensive and integrative genomic characterization of hepatocellular carcinoma. Cell 169:1327–1341.e23. https://doi.org/10.1016/j.cell.2017.05.046

Capriotti E, Fariselli P, Casadio R (2005) I-Mutant2.0: predicting stability changes upon mutation from the protein sequence or structure. Nucleic Acids Res 33:W306–W310. https://doi.org/10.1093/nar/gki375

Capriotti E, Calabrese R, Casadio R (2006) Predicting the insurgence of human genetic diseases associated to single point protein mutations with support vector machines and evolutionary information. Bioinformatics 22:2729–2734. https://doi.org/10.1093/bioinformatics/btl423

Capriotti E, Fariselli P, Rossi I, Casadio R (2008) A three-state prediction of single point mutations on protein stability changes. BMC Bioinform 9(Suppl 2):S6. https://doi.org/10.1186/1471-2105-9-S2-S6

Capriotti E, Altman RB, Bromberg Y (2013) Collective judgment predicts disease-associated single nucleotide variants. BMC Genom 14(Suppl 3):S2. https://doi.org/10.1186/1471-2164-14-S3-S2

Cardoso JG, Andersen MR, Herrgard MJ, Sonnenschein N (2015) Analysis of genetic variation and potential applications in genome-scale metabolic modeling. Front Bioeng Biotechnol 3:13. https://doi.org/10.3389/fbioe.2015.00013

Carraro M, Minervini G, Giollo M, Bromberg Y, Capriotti E, Casadio R, Dunbrack R, Elefanti L, Fariselli P, Ferrari C, Gough J, Katsonis P, Leonardi E, Lichtarge O, Menin C, Martelli PL, Niroula A, Pal LR, Repo S, Scaini MC, Vihinen M, Wei Q, Xu Q, Yang Y, Yin Y, Zaucha J, Zhao H, Zhou Y, Brenner SE, Moult J, Tosatto SCE (2017) Performance of in silico tools for the evaluation of p16INK4a (CDKN2A) variants in CAGI. Hum Mutat 38:1042–1050. https://doi.org/10.1002/humu.23235

Carraro M, Monzon AM, Chiricosta L, Reggiani F, Aspromonte MC, Bellini M, Pagel K, Jiang Y, Radivojac P, Kundu K, Pal LR, Yin Y, Limongelli I, Andreoletti G, Moult J, Wilson SJ, Katsonis P, Lichtarge O, Chen J, Wang Y, Hu Z, Brenner SE, Ferrari C, Murgia A, Tosatto SCE, Leonardi E (2019) Assessment of patient clinical descriptions and pathogenic variants from gene panel sequences in the CAGI-5 intellectual disability challenge. Hum Mutat 40:1330–1345. https://doi.org/10.1002/humu.23823

Carter H, Chen S, Isik L, Tyekucheva S, Velculescu VE, Kinzler KW, Vogelstein B, Karchin R (2009) Cancer-specific high-throughput annotation of somatic mutations: computational prediction of driver missense mutations. Cancer Res 69:6660–6667. https://doi.org/10.1158/0008-5472.CAN-09-1133

Carter H, Douville C, Stenson PD, Cooper DN, Karchin R (2013) Identifying Mendelian disease genes with the variant effect scoring tool. BMC Genom 14(Suppl 3):S3. https://doi.org/10.1186/1471-2164-14-S3-S3

Castellana S, Mazza T (2013) Congruency in the prediction of pathogenic missense mutations: state-of-the-art web-based tools. Brief Bioinform 14:448–459. https://doi.org/10.1093/bib/bbt013

Cea-Rama I, Coscolin C, Katsonis P, Bargiela R, Golyshin PN, Lichtarge O, Ferrer M, Sanz-Aparicio J (2021) Structure and evolutionary trace-assisted screening of a residue swapping the substrate ambiguity and chiral specificity in an esterase. Comput Struct Biotechnol J 19:2307–2317. https://doi.org/10.1016/j.csbj.2021.04.041

Chakravorty S, Hegde M (2018) Inferring the effect of genomic variation in the new era of genomics. Hum Mutat 39:756–773. https://doi.org/10.1002/humu.23427

Chan PA, Duraisamy S, Miller PJ, Newell JA, McBride C, Bond JP, Raevaara T, Ollila S, Nystrom M, Grimm AJ, Christodoulou J, Oetting WS, Greenblatt MS (2007) Interpreting missense variants: comparing computational methods in human disease genes CDKN2A, MLH1, MSH2, MECP2, and tyrosinase (TYR). Hum Mutat 28:683–693. https://doi.org/10.1002/humu.20492

Chandonia JM, Adhikari A, Carraro M, Chhibber A, Cutting GR, Fu Y, Gasparini A, Jones DT, Kramer A, Kundu K, Lam HYK, Leonardi E, Moult J, Pal LR, Searls DB, Shah S, Sunyaev S, Tosatto SCE, Yin Y, Buckley BA (2017) Lessons from the CAGI-4 Hopkins clinical panel challenge. Hum Mutat 38:1155–1168. https://doi.org/10.1002/humu.23225

Chasman D, Adams RM (2001) Predicting the functional consequences of non-synonymous single nucleotide polymorphisms: structure-based assessment of amino acid variation. J Mol Biol 307:683–706. https://doi.org/10.1006/jmbi.2001.4510

Chaudhuri TK, Paul S (2006) Protein-misfolding diseases and chaperone-based therapeutic approaches. FEBS J 273:1331–1349. https://doi.org/10.1111/j.1742-4658.2006.05181.x

Chen L, Jin P, Qin ZS (2016) DIVAN: accurate identification of non-coding disease-specific risk variants using multi-omics profiles. Genome Biol 17:252. https://doi.org/10.1186/s13059-016-1112-z

Chen S, Fragoza R, Klei L, Liu Y, Wang J, Roeder K, Devlin B, Yu H (2018) An interactome perturbation framework prioritizes damaging missense mutations for developmental disorders. Nat Genet 50:1032–1040. https://doi.org/10.1038/s41588-018-0130-z

Chen H, Li J, Wang Y, Ng PK, Tsang YH, Shaw KR, Mills GB, Liang H (2020) Comprehensive assessment of computational algorithms in predicting cancer driver mutations. Genome Biol 21:43. https://doi.org/10.1186/s13059-020-01954-z

Cheng J, Randall A, Baldi P (2006) Prediction of protein stability changes for single-site mutations using support vector machines. Proteins 62:1125–1132. https://doi.org/10.1002/prot.20810

Chennen K, Weber T, Lornage X, Kress A, Bohm J, Thompson J, Laporte J, Poch O (2020) MISTIC: A prediction tool to reveal disease-relevant deleterious missense variants. PLoS One 15:e0236962. https://doi.org/10.1371/journal.pone.0236962

Choi Y, Sims GE, Murphy S, Miller JR, Chan AP (2012) Predicting the functional effect of amino acid substitutions and indels. PLoS One 7:e46688. https://doi.org/10.1371/journal.pone.0046688

Chun S, Fay JC (2009) Identification of deleterious mutations within three human genomes. Genome Res 19:1553–1561. https://doi.org/10.1101/gr.092619.109

Chun YS, Passot G, Yamashita S, Nusrat M, Katsonis P, Loree JM, Conrad C, Tzeng CD, Xiao L, Aloia TA, Eng C, Kopetz SE, Lichtarge O, Vauthey JN (2019) Deleterious effect of RAS and evolutionary high-risk TP53 double mutation in colorectal liver metastases. Ann Surg 269:917–923. https://doi.org/10.1097/SLA.0000000000002450

Clark WT, Kasak L, Bakolitsa C, Hu Z, Andreoletti G, Babbi G, Bromberg Y, Casadio R, Dunbrack R, Folkman L, Ford CT, Jones D, Katsonis P, Kundu K, Lichtarge O, Martelli PL, Mooney SD, Nodzak C, Pal LR, Radivojac P, Savojardo C, Shi X, Zhou Y, Uppal A, Xu Q, Yin Y, Pejaver V, Wang M, Wei L, Moult J, Yu GK, Brenner SE, LeBowitz JH (2019) Assessment of predicted enzymatic activity of alpha-N-acetylglucosaminidase variants of unknown significance for CAGI 2016. Hum Mutat 40:1519–1529. https://doi.org/10.1002/humu.23875

Clarke L, Fairley S, Zheng-Bradley X, Streeter I, Perry E, Lowy E, Tasse AM, Flicek P (2017) The international genome sample resource (IGSR): A worldwide collection of genome variation incorporating the 1000 genomes project data. Nucleic Acids Res 45:D854–D859. https://doi.org/10.1093/nar/gkw829

Clarke CN, Katsonis P, Hsu TK, Koire AM, Silva-Figueroa A, Christakis I, Williams MD, Kutahyalioglu M, Kwatampora L, Xi Y, Lee JE, Kopetz ES, Busaidy NL, Perrier ND, Lichtarge O (2019) Comprehensive genomic characterization of parathyroid cancer identifies novel candidate driver mutations and core pathways. J Endocr Soc 3:544–559. https://doi.org/10.1210/js.2018-00043

Cline MS, Karchin R (2011) Using bioinformatics to predict the functional impact of SNVs. Bioinformatics 27:441–448. https://doi.org/10.1093/bioinformatics/btq695

Cline MS, Liao RG, Parsons MT, Paten B, Alquaddoomi F, Antoniou A, Baxter S, Brody L, Cook-Deegan R, Coffin A, Couch FJ, Craft B, Currie R, Dlott CC, Dolman L, den Dunnen JT, Dyke SOM, Domchek SM, Easton D, Fischmann Z, Foulkes WD, Garber J, Goldgar D, Goldman MJ, Goodhand P, Harrison S, Haussler D, Kato K, Knoppers B, Markello C, Nussbaum R, Offit K, Plon SE, Rashbass J, Rehm HL, Robson M, Rubinstein WS, Stoppa-Lyonnet D, Tavtigian S, Thorogood A, Zhang C, Zimmermann M, BRCA Challenge Authors, Burn J, Chanock S, Ratsch G, Spurdle AB (2018) BRCA challenge: BRCA exchange as a global resource for variants in BRCA1 and BRCA2. PLoS Genet 14:e1007752. https://doi.org/10.1371/journal.pgen.1007752

Cline MS, Babbi G, Bonache S, Cao Y, Casadio R, de la Cruz X, Diez O, Gutierrez-Enriquez S, Katsonis P, Lai C, Lichtarge O, Martelli PL, Mishne G, Moles-Fernandez A, Montalban G, Mooney SD, O'Conner R, Ootes L, Ozkan S, Padilla N, Pagel KA, Pejaver V, Radivojac P, Riera C, Savojardo C, Shen Y, Sun Y, Topper S, Parsons MT, Spurdle AB, Goldgar DE, ENIGMA Consortium (2019) Assessment of blind predictions of the clinical significance of BRCA1 and BRCA2 variants. Hum Mutat 40:1546–1556. https://doi.org/10.1002/humu.23861

Cooper GM, Shendure J (2011) Needles in stacks of needles: finding disease-causal variants in a wealth of genomic data. Nat Rev Genet 12:628–640. https://doi.org/10.1038/nrg3046

Cooper DN, Krawczak M, Polychronakos C, Tyler-Smith C, Kehrer-Sawatzki H (2013) Where genotype is not predictive of phenotype: towards an understanding of the molecular basis of reduced penetrance in human inherited disease. Hum Genet 132:1077–1130. https://doi.org/10.1007/s00439-013-1331-2

Cvijovic I, Good BH, Desai MM (2018) The effect of strong purifying selection on genetic diversity. Genetics 209:1235–1278. https://doi.org/10.1534/genetics.118.301058

Daneshjou R, Wang Y, Bromberg Y, Bovo S, Martelli PL, Babbi G, Di Lena P, Casadio R, Edwards M, Gifford D, Jones DT, Sundaram L, Bhat RR, Li X, Pal LR, Kundu K, Yin Y, Moult J, Jiang Y, Pejaver V, Pagel KA, Li B, Mooney SD, Radivojac P, Shah S, Carraro M, Gasparini A, Leonardi E, Giollo M, Ferrari C, Tosatto SCE, Bachar E, Azaria JR, Ofran Y, Unger R, Niroula A, Vihinen M, Chang B, Wang MH, Franke A, Petersen BS, Pirooznia M, Zandi P, McCombie R, Potash JB, Altman RB, Klein TE, Hoskins RA, Repo S, Brenner SE, Morgan AA (2017) Working toward precision medicine: predicting phenotypes from exomes in the critical assessment of genome interpretation (CAGI) challenges. Hum Mutat 38:1182–1192. https://doi.org/10.1002/humu.23280

Dash R, Mitra S, Munni YA, Choi HJ, Ali MC, Barua L, Jang TJ, Moon IS (2021) Computational insights into the deleterious impacts of missense variants on N-acetyl-D-glucosamine kinase structure and function. Int J Mol Sci 22:8048. https://doi.org/10.3390/ijms22158048

Davis CA, Hitz BC, Sloan CA, Chan ET, Davidson JM, Gabdank I, Hilton JA, Jain K, Baymuradov UK, Narayanan AK, Onate KC, Graham K, Miyasato SR, Dreszer TR, Strattan JS, Jolanki O, Tanaka FY, Cherry JM (2018) The encyclopedia of DNA elements (ENCODE): data portal update. Nucleic Acids Res 46:D794–D801. https://doi.org/10.1093/nar/gkx1081

Davoli T, Xu AW, Mengwasser KE, Sack LM, Yoon JC, Park PJ, Elledge SJ (2013) Cumulative haploinsufficiency and triplosensitivity drive aneuploidy patterns and shape the cancer genome. Cell 155:948–962. https://doi.org/10.1016/j.cell.2013.10.011

Davydov EV, Goode DL, Sirota M, Cooper GM, Sidow A, Batzoglou S (2010) Identifying a high fraction of the human genome to be under selective constraint using GERP++. PLoS Comput Biol 6:e1001025. https://doi.org/10.1371/journal.pcbi.1001025

Dehouck Y, Kwasigroch JM, Gilis D, Rooman M (2011) PoPMuSiC 2.1: a web server for the estimation of protein stability changes upon mutation and sequence optimality. BMC Bioinform 12:151. https://doi.org/10.1186/1471-2105-12-151

Denny JC, Ritchie MD, Basford MA, Pulley JM, Bastarache L, Brown-Gentry K, Wang D, Masys DR, Roden DM, Crawford DC (2010) PheWAS: demonstrating the feasibility of a phenome-wide scan to discover gene-disease associations. Bioinformatics 26:1205–1210. https://doi.org/10.1093/bioinformatics/btq126

Desmet FO, Hamroun D, Lalande M, Collod-Beroud G, Claustres M, Beroud C (2009) Human splicing finder: an online bioinformatics tool to predict splicing signals. Nucleic Acids Res 37:e67. https://doi.org/10.1093/nar/gkp215

Dietlein F, Weghorn D, Taylor-Weiner A, Richters A, Reardon B, Liu D, Lander ES, Van Allen EM, Sunyaev SR (2020) Identification of cancer driver genes based on nucleotide context. Nat Genet 52:208–218. https://doi.org/10.1038/s41588-019-0572-y

DiGiammarino EL, Lee AS, Cadwell C, Zhang W, Bothner B, Ribeiro RC, Zambetti G, Kriwacki RW (2002) A novel mechanism of tumorigenesis involving pH-dependent destabilization of a mutant p53 tetramer. Nat Struct Biol 9:12–16. https://doi.org/10.1038/nsb730

DNA Sequencing Costs: Data (2020) National Human Genome Research Institute

Dong C, Wei P, Jian X, Gibbs R, Boerwinkle E, Wang K, Liu X (2015) Comparison and integration of deleteriousness prediction methods for nonsynonymous SNVs in whole exome sequencing studies. Hum Mol Genet 24:2125–2137. https://doi.org/10.1093/hmg/ddu733

Dousse A, Junier T, Zdobnov EM (2016) CEGA: a catalog of conserved elements from genomic alignments. Nucleic Acids Res 44:D96–D100. https://doi.org/10.1093/nar/gkv1163

Douville C, Masica DL, Stenson PD, Cooper DN, Gygax DM, Kim R, Ryan M, Karchin R (2016) Assessing the pathogenicity of insertion and deletion variants with the variant effect scoring tool (VEST-Indel). Hum Mutat 37:28–35. https://doi.org/10.1002/humu.22911

Dunker AK, Brown CJ, Lawson JD, Iakoucheva LM, Obradovic Z (2002) Intrinsic disorder and protein function. Biochemistry 41:6573–6582. https://doi.org/10.1021/bi012159+

ENCODE Project Consortium (2012) An integrated encyclopedia of DNA elements in the human genome. Nature 489:57–74. https://doi.org/10.1038/nature11247

Fariselli P, Martelli PL, Savojardo C, Casadio R (2015) INPS: predicting the impact of non-synonymous variations on protein stability from sequence. Bioinformatics 31:2816–2821. https://doi.org/10.1093/bioinformatics/btv291

Fischbach GD, Lord C (2010) The Simons Simplex Collection: a resource for identification of autism genetic risk factors. Neuron 68:192–195. https://doi.org/10.1016/j.neuron.2010.10.006

Flanagan SE, Patch AM, Ellard S (2010) Using SIFT and PolyPhen to predict loss-of-function and gain-of-function mutations. Genet Test Mol Biomarkers 14:533–537. https://doi.org/10.1089/gtmb.2010.0036

Fokkema IF, den Dunnen JT, Taschner PE (2005) LOVD: easy creation of a locus-specific sequence variation database using an “LSDB-in-a-box” approach. Hum Mutat 26:63–68. https://doi.org/10.1002/humu.20201

Folkman L, Yang Y, Li Z, Stantic B, Sattar A, Mort M, Cooper DN, Liu Y, Zhou Y (2015) DDIG-in: detecting disease-causing genetic variations due to frameshifting indels and nonsense mutations employing sequence and structural properties at nucleotide and protein levels. Bioinformatics 31:1599–1606. https://doi.org/10.1093/bioinformatics/btu862

French JD, Edwards SL (2020) The role of noncoding variants in heritable disease. Trends Genet 36:880–891. https://doi.org/10.1016/j.tig.2020.07.004

Fu W, Akey JM (2013) Selection and adaptation in the human genome. Annu Rev Genom Hum Genet 14:467–489. https://doi.org/10.1146/annurev-genom-091212-153509

Fu Y, Liu Z, Lou S, Bedford J, Mu XJ, Yip KY, Khurana E, Gerstein M (2014) FunSeq2: a framework for prioritizing noncoding regulatory variants in cancer. Genome Biol 15:480. https://doi.org/10.1186/s13059-014-0480-5

Gallagher MD, Chen-Plotkin AS (2018) The post-GWAS era: from association to function. Am J Hum Genet 102:717–730. https://doi.org/10.1016/j.ajhg.2018.04.002

Gallion J, Koire A, Katsonis P, Schoenegge AM, Bouvier M, Lichtarge O (2017) Predicting phenotype from genotype: Improving accuracy through more robust experimental and computational modeling. Hum Mutat 38:569–580. https://doi.org/10.1002/humu.23193

Ganesan K, Kulandaisamy A, Binny Priya S, Gromiha MM (2019) HuVarBase: A human variant database with comprehensive information at gene and protein levels. PLoS One 14:e0210475. https://doi.org/10.1371/journal.pone.0210475

Garber M, Guttman M, Clamp M, Zody MC, Friedman N, Xie X (2009) Identifying novel constrained elements by exploiting biased substitution patterns. Bioinformatics 25:i54–i62. https://doi.org/10.1093/bioinformatics/btp190