Abstract

“Power of instructions” originally referred to automatic response activation associated with instructed rules, but previous examination of the power of instructed rules in actual task implementation has been limited. Typical tasks involve both explicit aspects (e.g., instructed stimulus–response mapping rules) and implied, yet easily inferred, aspects (e.g., be ready, attend to error beeps), and it is unknown whether inferred aspects also become readily executable like their explicitly instructed counterparts. In each mini-block of our paradigm, we introduced a novel two-choice task. In the instructions phase, one stimulus was explicitly mapped to a response, whereas the other stimulus’ response mapping had to be inferred. Results show that, in most cases, explicitly instructed rules were implemented more efficiently than inferred rules, but this advantage was observed only in the first trial following instructions (though not in the first implementation of the rules), which suggests that the entire task set was implemented in the first trial. Theoretical implications are discussed.

Notes

In this paper, we adopt the term ‘RITL’ without commitment to its specific instantiation in Cole’s papers; instead, we use it more broadly to denote a class of paradigms, all of which examine instructions-based performance.

Originally, the NEXT phase was introduced to measure automatic effects of instructions (Meiran et al., 2017), which was not the focus of the current work (though it is involved in Experiments 1 and 2, but not in Experiment 3). This additional process (responding to a NEXT target) might raise the question of whether RITL in its original meaning is measured in this task. We argue that it is. Specifically, although early works concerning RITL may have focused only on direct and immediate instructions (e.g., Cole, Bagic, Kass, & Schneider, 2010), recent developments in this literature (Cole et al., 2017) consider additional forms of RITL tasks, including those involving delayed implementation. In addition, instructions in real life are often performed with some delay and not immediately (reconsider the driving directions example). In our view, the broad definition of RITL (“the ability to rapidly perform novel instructed procedures”, Cole et al., 2017, p. 2) qualifies the GO phase of the NEXT paradigm as measuring RITL (see also Cole et al., 2013, Fig. 1b for a non-verbal RITL example that closely resembles our NEXT instructions). More specifically, Cole et al. (2013) defined different forms of RITL, of which the NEXT paradigm should be considered as measuring concrete and simple non-verbal RITL, while a later elaboration of this definition suggests that S–R mappings in the NEXT paradigm could be completely proactively reconfigured (Cole et al., 2017), relative to Cole et al.’s (2013) RITL paradigm. Another consideration for including a NEXT phase relates to as yet unpublished experiments showing that performance in the GO phase approached ceiling when the NEXT phase was omitted (i.e., participants reached an excellent level of performance shortly after the onset of the experiment), suggesting that delaying implementation might prove crucial for studying RITL in this task if one wishes to avoid near-ceiling levels of performance. Nonetheless, in this study we directly measure the importance of the delaying NEXT phase in influencing the efficiency of explicitly instructed/inferred rules (comparing Experiment 2 with Experiment 3).

Another important comment is that, in contrast to null-hypothesis testing, Bayesian inference generally avoids alpha inflation toward accepting H1 in exploratory analyses, since there is no bias in favor of H1, as both H1 and H0 can be accepted (Rouder et al., 2009).

NEXT errors refer to trials in which participants erroneously executed the GO instructions during the NEXT phase (e.g., pressing “left” instead of the spacebar). We did not remove mini-blocks involving a NEXT error, since such an error does not reflect a problem with rule encoding, but rather the opposite (i.e., a reflexive activation of the instructions in an inappropriate context).

We thank anonymous Reviewer 1 for suggesting this analysis.

It should be noted that the RT interaction between Inference Difficulty and Rule-Type was robust when using a different RT cutoff of 3.5 SD per condition and participant, suggesting that the effect may be larger in the high percentiles of the RT distribution.
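As an illustration of an SD-based RT cutoff applied per condition and participant, the following is a minimal sketch only. It assumes symmetric exclusion around the mean of each participant-by-condition cell and uses the sample SD; the field names (`participant`, `condition`, `rt`) and the function `trim_rts` are hypothetical and do not reflect the actual preprocessing pipeline.

```python
from collections import defaultdict
from statistics import mean, stdev


def trim_rts(trials, cutoff_sd=3.5):
    """Keep trials whose RT lies within cutoff_sd sample SDs of the mean
    of their participant-by-condition cell (hypothetical field names)."""
    # Collect RTs per (participant, condition) cell.
    cells = defaultdict(list)
    for trial in trials:
        cells[(trial["participant"], trial["condition"])].append(trial["rt"])

    # Cell mean and SD; the SD is undefined for cells with fewer than 2 trials.
    cell_stats = {
        key: (mean(rts), stdev(rts)) for key, rts in cells.items() if len(rts) >= 2
    }

    kept = []
    for trial in trials:
        key = (trial["participant"], trial["condition"])
        if key not in cell_stats:
            kept.append(trial)  # too few trials to define a cutoff; keep as-is
            continue
        cell_mean, cell_sd = cell_stats[key]
        if abs(trial["rt"] - cell_mean) <= cutoff_sd * cell_sd:
            kept.append(trial)
    return kept


# Made-up data: 19 ordinary trials and one extreme RT in the same cell.
example = [{"participant": 1, "condition": "inferred", "rt": 500} for _ in range(19)]
example.append({"participant": 1, "condition": "inferred", "rt": 5000})
print(len(trim_rts(example)))
```

Note that with very few trials per cell no observation can exceed a 3.5 SD criterion, so a cutoff of this kind is only informative for reasonably large cells.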

We thank Bernard Hommel for raising this important issue and inspiring Experiments 2 and 3.

Direct and indirect instructions refer to instructed and inferred rules, respectively.

References

Allon, A., & Luria, R. (2016). Prepdat-an R package for preparing experimental data for statistical analysis. Journal of Open Research Software. https://doi.org/10.5334/jors.134.

Brass, M., Liefooghe, B., Braem, S., & De Houwer, J. (2017). Following new task instructions: Evidence for a dissociation between knowing and doing. Neuroscience & Biobehavioral Reviews, 81, 16–28. https://doi.org/10.1016/j.neubiorev.2017.02.012.

Cohen-Kdoshay, O., & Meiran, N. (2007). The representation of instructions in working memory leads to autonomous response activation: Evidence from the first trials in the flanker paradigm. The Quarterly Journal of Experimental Psychology, 60(8), 1140–1154.

Cohen-Kdoshay, O., & Meiran, N. (2009). The representation of instructions operates like a prepared reflex. Experimental Psychology, 56(2), 128–133. https://doi.org/10.1027/1618-3169.56.2.128.

Cole, M. W., Bagic, A., Kass, R., & Schneider, W. (2010). Prefrontal dynamics underlying rapid instructed task learning reverse with practice. Journal of Neuroscience, 30(42), 14245–14254. https://doi.org/10.1523/JNEUROSCI.1662-10.2010.

Cole, M. W., Laurent, P., & Stocco, A. (2013). Rapid instructed task learning: A new window into the human brain’s unique capacity for flexible cognitive control. Cognitive, Affective, & Behavioral Neuroscience, 13(1), 1–22. https://doi.org/10.3758/s13415-012-0125-7.

Cole, M. W., Braver, T. S., & Meiran, N. (2017). The task novelty paradox: Flexible control of inflexible neural pathways during rapid instructed task learning. Neuroscience & Biobehavioral Reviews, 81, 4–15. https://doi.org/10.1016/j.neubiorev.2017.02.009.

De Houwer, J., Hughes, S., & Brass, M. (2017). Toward a unified framework for research on instructions and other messages: An introduction to the special issue on the power of instructions. Neuroscience & Biobehavioral Reviews, 81, 1–3. https://doi.org/10.1016/j.neubiorev.2017.04.020.

Dreisbach, G., & Haider, H. (2008). That’s what task sets are for: Shielding against irrelevant information. Psychological Research Psychologische Forschung, 72(4), 355–361. https://doi.org/10.1007/s00426-007-0131-5.

Duncan, J., Parr, A., Woolgar, A., Thompson, R., Bright, P., Cox, S., et al. (2008). Goal neglect and Spearman’s g: Competing parts of a complex task. Journal of Experimental Psychology: General, 137(1), 131–148. https://doi.org/10.1037/0096-3445.137.1.131.

Faul, F., Erdfelder, E., Buchner, A., & Lang, A.-G. (2009). Statistical power analyses using G*Power 3.1: Tests for correlation and regression analyses. Behavior Research Methods, 41(4), 1149–1160. https://doi.org/10.3758/BRM.41.4.1149.

Hommel, B. (1998). Event files: Evidence for automatic integration of stimulus-response episodes. Visual Cognition, 5(1/2), 183–216. https://doi.org/10.1080/713756773.

Hommel, B., & Colzato, L. S. (2004). Visual attention and the temporal dynamics of feature integration. Visual Cognition, 11(4), 483–521. https://doi.org/10.1080/13506280344000400.

Jacoby, L. L. (1978). On interpreting the effects of repetition: Solving a problem versus remembering a solution. Journal of Verbal Learning and Verbal Behavior, 17, 649–668.

Jeffreys, H. (1961). Theory of probability (3rd ed.). Oxford: Oxford University Press.

Katzir, M., Ori, B., & Meiran, N. (2018). “Optimal suppression” as a solution to the paradoxical cost of multitasking: Examination of suppression specificity in task switching. Psychological Research Psychologische Forschung, 82(1), 24–39. https://doi.org/10.1007/s00426-017-0930-2.

Koechlin, E., Basso, G., Pietrini, P., Panzer, S., & Grafman, J. (1999). The role of the anterior prefrontal cortex in human cognition. Nature, 399(6732), 148–151.

Kühn, S., Keizer, A. W., Colzato, L. S., Rombouts, S. A. R. B., & Hommel, B. (2011). The neural underpinnings of event-file management: Evidence for stimulus-induced activation of and competition among stimulus–response bindings. Journal of Cognitive Neuroscience, 23(4), 896–904.

Liefooghe, B., Wenke, D., & De Houwer, J. (2012). Instruction-based task-rule congruency effects. Journal of Experimental Psychology: Learning, Memory, and Cognition, 38(5), 1325–1335. https://doi.org/10.1037/a0028148.

Liefooghe, B., De Houwer, J., & Wenke, D. (2013). Instruction-based response activation depends on task preparation. Psychonomic Bulletin & Review, 20(3), 481–487. https://doi.org/10.3758/s13423-013-0374-7.

Meiran, N. (1996). Reconfiguration of processing mode prior to task performance. Journal of Experimental Psychology: Learning, Memory, and Cognition, 22(6), 1423–1442. https://doi.org/10.1037/0278-7393.22.6.1423.

Meiran, N., Pereg, M., Kessler, Y., Cole, M. W., & Braver, T. S. (2015). The power of instructions: Proactive configuration of stimulus–response translation. Journal of Experimental Psychology: Learning, Memory, and Cognition, 41(3), 768–786. https://doi.org/10.1037/xlm0000063.

Meiran, N., Pereg, M., Givon, E., Danieli, G., & Shahar, N. (2016). The role of working memory in rapid instructed task learning and intention-based reflexivity: An individual differences examination. Neuropsychologia, 90, 180–189. https://doi.org/10.1016/j.neuropsychologia.2016.06.037.

Meiran, N., Liefooghe, B., & De Houwer, J. (2017). Powerful instructions: Automaticity without practice. Current Directions in Psychological Science, 26(6), 509–514.

Pereg, M., & Meiran, N. (2018). Evidence for instructions-based updating of task-set representations: The informed fadeout effect. Psychological Research Psychologische Forschung, 82(3), 549–569. https://doi.org/10.1007/s00426-017-0842-1.

Pereg, M., Shahar, N., & Meiran, N. (2019). Can we learn to learn? The influence of procedural working-memory training on rapid instructed-task-learning. Psychological Research Psychologische Forschung, 83(1), 132–146.

Rogers, R. D., & Monsell, S. (1995). Costs of a predictable switch between simple cognitive tasks. Journal of Experimental Psychology: General, 124(2), 207–231.

Rouder, J. N., Speckman, P. L., Sun, D., Morey, R. D., & Iverson, G. (2009). Bayesian t tests for accepting and rejecting the null hypothesis. Psychonomic Bulletin & Review, 16(2), 225–237.

Ruge, H., & Wolfensteller, U. (2010). Rapid formation of pragmatic rule representations in the human brain during instruction-based learning. Cerebral Cortex, 20(7), 1656–1667. https://doi.org/10.1093/cercor/bhp228.

Schönbrodt, F. D., & Wagenmakers, E.-J. (2018). Bayes factor design analysis: Planning for compelling evidence. Psychonomic Bulletin & Review, 25(1), 128–142. https://doi.org/10.3758/s13423-017-1230-y.

Shahar, N., Pereg, M., Teodorescu, A. R., Moran, R., Karmon-Presser, A., & Meiran, N. (2018). Formation of abstract task representations: Exploring dosage and mechanisms of working memory training effects. Cognition, 181, 151–159. https://doi.org/10.1016/j.cognition.2018.08.007.

JASP Team. (2017). JASP (Version 0.8.1.2) [Computer software]. https://jasp-stats.org/

Thomas, J. (1995). Meaning in interaction: An introduction to pragmatics. Abingdon: Routledge.

Treisman, A. (1996). The binding problem. Current Opinion in Neurobiology, 6(2), 171–178. https://doi.org/10.1016/S0959-4388(96)80070-5.

van Dam, W. O., & Hommel, B. (2010). How object-specific are object files? Evidence for integration by location. Journal of Experimental Psychology: Human Perception and Performance, 36(5), 1184–1192. https://doi.org/10.1037/a0019955.

Verbruggen, F., McLaren, R., Pereg, M., & Meiran, N. (2018). Structure and implementation of novel task rules: A cross-sectional developmental study. Psychological Science, 29(7), 1113–1125. https://doi.org/10.1177/0956797618755322.

Vogel, S., & Schwabe, L. (2018). Tell me what to do: Stress facilitates stimulus-response learning by instruction. Neurobiology of Learning and Memory, 151, 43–52. https://doi.org/10.1016/j.nlm.2018.03.022.

Acknowledgements

This work was supported by a research grant from the US–Israel Binational Science Foundation (Grant #2015186) to Nachshon Meiran, Todd S. Braver and Michael W. Cole.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Ethical approval

The study was approved by the departmental ethics committee. Informed consent was obtained from all individual participants included in the study.

Appendix

This appendix reports a few additional tests that were conducted post hoc to address the issues of (1) encoding during the instructions phase; and (2) learning throughout the experiment, given that the same inference is repeatedly needed. Importantly, we note that these analyses were raised in the review process and were not hypothesized in advance (this is especially important for the pre-registered Experiments 2 and 3) (Fig. 6).

1) Examining study times

As mentioned, each screen in the instructions phase had a 2 s delay, after which participants could advance to the following screen via a self-paced spacebar response, thus introducing some inaccuracy in the measurement of study times.

To test whether participants used the instructions phase to differentially encode the instructed/inferred rules, under the assumption that longer study times imply greater encoding efforts, we performed two sets of Bayesian ANOVAs in each of the three experiments. The first set of ANOVAs was performed for total study times (i.e., the sum of both instruction screens, compared to the single instruction screen that included both instructed rules). This analysis was intended as a first exploration, closer to our original hypotheses (that difficult inference should take longer than easy inference, and longer than when both rules were explicitly instructed). Thus, the independent variable was Block Type (3 levels: both rules explicitly instructed; one rule explicitly instructed and one inferred, with difficult vs. easy inference).

In Experiment 1, the results supported a main effect for Block Type [F(2,78) = 9.56, p < 0.001, η2p = 0.20, BF10 = 123.39], in line with our hypothesis: study times were 3.52 s for two explicit rules, 3.79 s for the easy inference condition, and 3.95 s for the difficult inference condition (these times include the 2 s delay). The same pattern was observed in Experiment 2 [F(2,78) = 6.79, p < 0.01, η2p = 0.15, BF10 = 16.21], although the results indicated a smaller difference between the condition where both rules were explicitly instructed and the easy inference condition (5.77, 5.80, and 6.15 s in the both explicitly instructed, easy inference and difficult inference conditions, respectively). Finally, Experiment 3 showed the same pattern [F(2,78) = 7.81, p < 0.001, η2p = 0.17, BF10 = 34.48], with results that more clearly demonstrate the difference between the three conditions (4.63, 4.82, and 5.08 s for the both explicitly instructed, easy inference and difficult inference conditions, respectively).
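For readers who wish to relate the reported effect sizes to the test statistics, partial eta squared can be recovered from an F value and its degrees of freedom through the standard identity η2p = (F × df_effect) / (F × df_effect + df_error). The sketch below applies this identity to the three Block Type main effects reported above; the function name is illustrative only and is not part of the original analysis, which was run in JASP.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# Block Type main effects on total study times, all with F(2,78).
for label, f_value in [("Experiment 1", 9.56), ("Experiment 2", 6.79), ("Experiment 3", 7.81)]:
    print(f"{label}: {partial_eta_squared(f_value, 2, 78):.2f}")
```

The rounded values agree with the effect sizes reported for these three tests (0.20, 0.15, and 0.17).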

The second set of ANOVAs involved only the “one explicitly instructed S–R mapping and one inferred S–R mapping” condition. This analysis was performed with the within-subjects independent variables Rule-Type (instructed vs. inferred) and Inference Difficulty (difficult vs. easy; in this analysis, this variable codes for whether the explicit instruction appeared first (easy inference) or second (difficult inference)). This analysis was meant to better differentiate the encoding processes within this condition.

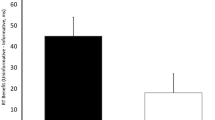

Unlike in the previous analysis, these results point to differences between the experiments. In Experiment 1, the results indicated a robust interaction between Rule-Type and Inference Difficulty [F(1,39) = 11.05, p < 0.01, η2p = 0.22, BF10 = 3,337.53], whereas neither main effect was significant (ps > 0.06, BF10 < 0.38). The results demonstrate that participants took longer to study whichever stimulus appeared first, regardless of whether it was explicitly instructed or inferred (Fig. 6): both simple effects for Rule-Type were robust (BF10 = 3.41 for difficult inference, and BF10 = 40.56 for easy inference).

A similar pattern was observed in Experiment 2 [F(1,39) = 5.80, p = 0.02, η2p = 0.13, BF10 = 68.76 for the interaction]. In this experiment, the simple effect of Rule-Type was only robust for easy inference mini-blocks (BF10 = 0.66 for difficult inference, and BF10 = 20.84 for easy inference), suggesting that when the inference was easy (i.e., the instructed rule was presented first), participants took less time to study the inferred rule, but when the inference was difficult, study times did not robustly differ between rule types.

In Experiment 3, however, the results did not indicate a robust interaction [F(1,39) = 0.10, p = 0.76, η2p < 0.01, BF10 = 0.25] or any robust main effect (BFs < 2.60). Here, the descriptive results indicate that participants studied explicitly instructed rules somewhat longer than inferred ones (main effect: F(1,39) = 4.25, p = 0.046, η2p = 0.10, BF10 = 2.53, which is considered an indecisive effect). We note that this experiment did not involve a NEXT phase, and while we do not completely understand this effect, which was not the focus of the current study, it could be that this (lack of) effect partly reflects the lower task demands in this experiment.

2) Learning to infer during the experiment

The next set of analyses aims to test whether the superiority of instructions changes with the progress of the experiment, given that participants are required to repeat the same general inference. To perform this analysis, we added a Progress variable that divides mini-blocks into three equal parts with 50 mini-blocks each. To focus on the main result, we tested the first-trial RT instructions superiority effect above and beyond other variables. In Experiment 1, the results showed a main effect for Progress [F(2,78) = 85.78, p < 0.001, η2p = 0.49, BF10 = 1.44e+29], demonstrating RT acceleration throughout the experiment. In addition, the results suggest a robust interaction between Rule-Type and Progress [F(2,78) = 9.33, p < 0.001, η2p = 0.19, BF10 = 6.83], showing that the superiority effect decreased with progression in the experiment (47.4 ms in the first 50 mini-blocks, 19.3 ms in the next 50 mini-blocks, and 11.5 ms in the final 50 mini-blocks).

In Experiments 2 and 3, although the Progress main effect was robust [F(2,78) = 38.14, p < 0.001, η2p = 0.49, BF10 = 3.58e+14 (Exp. 2); F(2,78) = 43.60, p < 0.001, η2p = 0.53, BF10 = 2.04e+16 (Exp. 3)], the interaction between Rule-Type and Progress was not significant [F(2,78) = 2.52, p = 0.09, η2p = 0.06, BF10 = 0.31 (Exp. 2); F(2,78) = 0.31, p = 0.73, η2p < 0.01, BF10 = 0.09 (Exp. 3)] and allowed accepting H0.

Therefore, the results do not support the hypothesis that participants learned, over the course of the experiment, how to cope with the required inference by capitalizing on the constant abstract structure of the instructions. Specifically, while a supporting pattern was observed in Experiment 1, it was not robust across experiments.
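The Progress variable and the first-trial superiority effect described in this section can be sketched as follows. This is a minimal illustration under stated assumptions: 150 mini-blocks indexed from 0, one first-trial RT per mini-block, and hypothetical field and function names (`mini_block`, `rule_type`, `rt`, `progress_part`, `superiority_by_progress`). The toy RTs are made up and are not the experimental data.

```python
from collections import defaultdict
from statistics import mean


def progress_part(mini_block, n_mini_blocks=150, n_parts=3):
    """Map a 0-based mini-block index to its Progress level (1, 2, or 3)."""
    part_size = n_mini_blocks // n_parts  # 50 mini-blocks per part
    return mini_block // part_size + 1


def superiority_by_progress(first_trials):
    """Return {Progress level: mean inferred RT minus mean instructed RT}, in ms.

    Assumes every Progress level contains both rule types.
    """
    cells = defaultdict(list)
    for trial in first_trials:
        key = (progress_part(trial["mini_block"]), trial["rule_type"])
        cells[key].append(trial["rt"])
    parts = sorted({part for part, _ in cells})
    return {
        part: mean(cells[(part, "inferred")]) - mean(cells[(part, "instructed")])
        for part in parts
    }


# Toy data (made up): the inferred-rule cost shrinks across the three parts.
toy_cost = {1: 40, 2: 20, 3: 10}
toy_trials = []
for mb in range(150):
    rule = "instructed" if mb % 2 == 0 else "inferred"
    rt = 600 + (toy_cost[progress_part(mb)] if rule == "inferred" else 0)
    toy_trials.append({"mini_block": mb, "rule_type": rule, "rt": rt})

print(superiority_by_progress(toy_trials))
```

A positive value at a given Progress level corresponds to slower first-trial responses for inferred than for explicitly instructed rules, i.e., an instructions superiority effect.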

Cite this article

Pereg, M., Meiran, N. Power of instructions for task implementation: superiority of explicitly instructed over inferred rules. Psychological Research 85, 1047–1065 (2021). https://doi.org/10.1007/s00426-020-01293-5

DOI: https://doi.org/10.1007/s00426-020-01293-5