Abstract

Negative correlations in the sequential evolution of interspike intervals (ISIs) are a signature of memory in neuronal spike-trains. They provide coding benefits including firing-rate stabilization, improved detectability of weak sensory signals, and enhanced transmission of information by improving signal-to-noise ratio. Primary electrosensory afferent spike-trains in weakly electric fish fall into two categories based on the pattern of ISI correlations: non-bursting units have negative correlations that remain negative but decay to zero with increasing lags (Type I ISI correlations), and bursting units have oscillatory (alternating sign) correlations that damp to zero with increasing lags (Type II ISI correlations). Here, we predict and match observed ISI correlations in these afferents using a stochastic dynamic threshold model. We derive the ISI correlation function in terms of an arbitrary discrete noise correlation function \({{\,\mathrm{\mathbf {R}}\,}}_k\), where k is a multiple of the mean ISI. The resulting expression permits both forward and inverse calculations of the correlation function. Both types of correlation functions can be generated by adding colored noise to the spike threshold, with Type I correlations generated by slow noise and Type II correlations generated by fast noise. A first-order autoregressive (AR) process with a single parameter is sufficient to predict and accurately match both types of afferent ISI correlation functions, with the type being determined by the sign of the AR parameter. The predicted and experimentally observed correlations are in geometric progression. The theory predicts that the limiting sum of ISI correlations is \(-0.5\), yielding a perfect DC-block in the power spectrum of the spike train. Observed ISI correlations from afferents have a limiting sum that is slightly larger, at \(-0.475 \pm 0.04\) (\(\text {mean} \pm \text {s.d.}\)). 
We conclude that the underlying process for generating ISIs may be a simple combination of low-order AR and moving average processes and discuss the results from the perspective of optimal coding.



1 Introduction

The spiking activity of many neurons exhibits memory, which stabilizes the neurons’ firing rate and makes it less variable than that of a renewal process. In spontaneously active neurons, a signature of these memory effects can be found in the serial correlations of interspike intervals (ISIs), which display a prominent negative correlation between adjacent ISIs. This arises because long intervals tend to follow short intervals (and vice versa), so that fluctuations from the mean ISI are damped over long time-scales, thereby stabilizing the firing rate (Ratnam and Nelson 2000; Goense and Ratnam 2003). The negative correlation between adjacent ISIs, which is the first serial correlation coefficient (\(\rho _1\)), can assume a range of values (Farkhooi et al. 2009) from near-zero [close to a renewal spike train, e.g., Lowen and Teich (1992); Fisch et al. (2012)] to values close to \(-0.9\) (Ratnam and Nelson 2000). While more negative values may suggest a stronger memory effect, the relationship between the extent of memory in a spike train and its ISI correlations is by no means clear, in part due to the difficulty in determining joint interval distributions of arbitrarily high orders (van der Heyden et al. 1998; Ratnam and Nelson 2000).

Negative correlations in spontaneous spike trains, or in spike trains obtained under quiescent conditions, have been known for many years. Early reports came from lateral line units of Japanese eel (Katsuki et al. 1950), the retina of the cat (Kuffler et al. 1957), and subsequently from several neuronal systems (Farkhooi et al. 2009; Neiman and Russell 2001; Johnson et al. 1986; Lowen and Teich 1992; Hagiwara and Morita 1963; Amassian et al. 1964; Bullock and Chichibu 1965; Calvin and Stevens 1968; Nawrot et al. 2007; Fisch et al. 2012) (see Avila-Akerberg and Chacron 2011; Farkhooi et al. 2009, for tabulations of negative correlations). In the active sense of some wave-type weakly electric fish (Hagiwara and Morita 1963; Bullock and Chichibu 1965), primary electrosensory afferents exhibit the strongest known correlations in their adjacent ISIs (Ratnam and Nelson 2000). These electrosensory neurons are an excellent model system for studying memory effects and regularity in firing due to their high spontaneous spike-rates (Chacron et al. 2001; Ratnam and Nelson 2000; van der Heyden et al. 1998). It is likely that spike encoders which demonstrate negative ISI correlations have adaptive value because they can facilitate optimal detection of weak sensory signals (Goense and Ratnam 2003; Goense et al. 2003; Ratnam and Nelson 2000; Ratnam and Goense 2004; Chacron et al. 2001; Hagiwara and Morita 1963; Nesse et al. 2010; Farkhooi et al. 2011) and enhance information transmission (Chacron et al. 2005, 2004b, a).

Negative ISI correlations are a characteristic feature of spike frequency adaptation where a constant DC-valued input to a neuron is gradually forgotten, possibly due to an intrinsic high-pass filtering mechanism in the encoder (Liu and Wang 2001; Benda and Herz 2003; Benda et al. 2010). Two commonly used models of spike frequency adaptation are based on: (1) a dynamic threshold, and (2) an adaptation current, both of which cause increased refractoriness following an action potential. In the case of a dynamic threshold, the increased refractoriness is due to an elevation in firing threshold, historically referred to as “accommodation” (Hill 1936). This is usually modeled as an abrupt increase in spike threshold immediately after a spike is output, followed by a relaxation of the threshold to its normative value without reset (Buller 1965; Hagiwara 1954; Goldberg et al. 1964; Geisler and Goldberg 1966; Ten Hoopen 1964; Brandman and Nelson 2002; Chacron et al. 2000, 2001; Schwalger et al. 2010; Schwalger and Lindner 2013). Thus, if two spikes are output in quick succession with an interval smaller than the mean ISI, the upward threshold shift will be cumulative, making the membrane more refractory, and so a third spike in the sequence will occur with an ISI that is likely to be longer than the mean. In this way, a dynamic time-varying threshold serves as a moving barrier, carrying with it a memory of prior spiking activity. In the case of an adaptation current, outward potassium currents, including voltage gated potassium currents and calcium-dependent or AHP currents, can give rise to increased refractoriness and lead to spike frequency adaptation (e.g., Benda and Herz 2003; Prescott and Sejnowski 2008; Liu and Wang 2001; Benda et al. 2010; Jolivet et al. 2004; Schwalger et al. 2010; Fisch et al. 2012). 
Adaptation currents are usually modeled by explicitly introducing a hyperpolarizing outward current with a time-varying conductance in the equation for the membrane potential. The conductance (or a gating variable) is elevated following a spike and, as with a dynamic threshold, decays back to its normative value. Both models reproduce nearly identical spike frequency adaptation in response to a step input current, as well as similar values of the first ISI correlation coefficient (\(\rho _1\)), although they differ in other details (Benda et al. 2010).

In this report, we focus on a dynamic threshold model. A simple dynamic threshold model can accurately predict spike-times in cortical neurons (Kobayashi et al. 2009; Gerstner and Naud 2009; Jones et al. 2015) and peripheral sensory neurons (Jones et al. 2015). Further, we recently proposed that spike trains encoded with a dynamic threshold are optimal, in the sense that they provide an optimal estimate of the input by minimizing coding error (estimation error) for a fixed long-term spike-rate (energy consumption) (Jones et al. 2015; Johnson et al. 2015, 2016). These results did not incorporate noise in the model, and so here we extend our earlier model by incorporating noise to model spike timing variability and serial ISI correlations.

In previous work, colored noise, Gaussian noise, or both have been added to a dynamic threshold, to an adaptation current, or to the input signal so that negative correlations can be observed (Brandman and Nelson 2002; Chacron et al. 2001, 2003; Prescott and Sejnowski 2008). In these models the first ISI correlation coefficient \(\rho _1\) (between adjacent ISIs) is close to or equal to \(-0.5\), and all remaining correlation coefficients \(\rho _i\), \(i \ge 2\), are identically zero. In another report (Benda et al. 2010) \(\rho _1\) is parameterized, and can assume values between 0 and \(-0.5\). Experimental spike trains demonstrate broader trends, where \(\rho _1\) can assume values smaller or greater than \(-0.5\), and the remaining coefficients can be nonzero for several lags, sometimes with damped oscillations and sometimes monotonically increasing to zero (Ratnam and Nelson 2000). In several types of integrate-and-fire models with adaptation currents (Schwalger et al. 2010; Schwalger and Lindner 2013), colored and Gaussian noise fluctuations of different time-scales (slow and fast, respectively) determine the various patterns of ISI correlations, including positive correlations. All of these patterns had a geometric structure (i.e., \(\rho _k/\rho _{k-1} = \text {constant}\)). Urdapilleta (2011) also obtained a geometric structure with a monotonically decaying correlation pattern with \(\rho _k < 0\). These latter studies show that noise fluctuations, in particular their time-scale, are important in determining patterns of ISI correlations. So far, models with an adaptation current have successfully predicted patterns of ISI correlations, but models with dynamic thresholds have been investigated much less, and it is not known whether they can accurately predict observed ISI patterns. 
Models that can accurately predict experimentally observed correlation coefficients for all lags have the potential to isolate mechanisms responsible for ISI fluctuations and negative correlations and provide insights into neural coding. This is the goal of the current work.

Serial interspike interval correlations observed in primary P-type afferents of weakly electric fish Apteronotus leptorhynchus are modeled using a dynamic threshold with noise. The model is analytically tractable and permits an explicit closed-form expression for ISI correlations in terms of an arbitrary correlation function \({{\,\mathrm{\mathbf {R}}\,}}_k\), where k is a multiple of the mean ISI. This allows us to solve the inverse problem where we can determine \({{\,\mathrm{\mathbf {R}}\,}}_k\) given a sequence of observed correlation coefficients. Theoretically, the limiting sum of ISI correlation coefficients is \(-0.5\) (a perfect DC-block), and experimental correlation coefficients are close to this sum. This model is parsimonious, and in addition to predicting spike-times as shown earlier, it reproduces observed ISI correlations. Finally, the model provides a fast method for generating surrogate spike trains that match a mean firing rate with prescribed ISI distribution, joint-ISI distribution, and ISI correlations.

2 Methods

2.1 Experimental procedures

Spike data from P-type primary electrosensory afferents were collected in a previous in vivo study in Apteronotus leptorhynchus, a wave-type weakly electric fish (Ratnam and Nelson 2000). All animal procedures including animal handling, anesthesia, surgery, and euthanasia received institutional ethics (IACUC) approval from the University of Illinois at Urbana-Champaign and were carried out as previously reported (Ratnam and Nelson 2000). No new animal procedures were performed during the course of this work. Of relevance here are some details on the electrophysiological recordings. Briefly, action potentials (spikes) were isolated quasi-intracellularly from P-type afferent nerve fibers in quiescent conditions under ongoing electric organ discharge (EOD) activity (this is the so-called baseline spiking activity of P-type units). An artificial threshold was applied to determine spike onset times, which were reported at the ADC sampling rate of \(16.67~\text {kHz}\) (sampling period of \(60~\mu \text {s}\)). The fish’s ongoing quasi-sinusoidal EOD was captured whenever possible to determine the EOD frequency from the power spectrum, or the EOD frequency was estimated from the power spectrum of the baseline spike train. Both methods yielded almost identical EOD frequencies. EOD frequencies typically ranged from 750 to 1000 Hz (see Ratnam and Nelson 2000, for more details).

2.2 Data analysis

P-type units fire at most once every EOD cycle, and this forms a convenient time-base to resample the spike train (Ratnam and Nelson 2000). Spike times resampled at the EOD rate are reported as increasing integers. Resampling removes the phase jitter in spike-timing but retains long-term correlations due to memory effects in the spike train. Spike-times were converted to a sequence of interspike intervals, \(X_1,\,X_2,\,\ldots ,\,X_k,\ldots , X_N\).
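The resampling step can be sketched as follows. This is a minimal illustration, assuming spike times in seconds, a single constant EOD frequency, and EOD cycles counted from t = 0 rather than aligned to the recorded EOD phase. The helper `resample_to_eod` is hypothetical, and assigning each spike to the EOD cycle that contains it (a floor operation) is our assumption, not a documented detail of Ratnam and Nelson (2000).

```python
import numpy as np

def resample_to_eod(spike_times_s, eod_freq_hz):
    """Map spike times (s) to integer EOD-cycle indices and return the ISIs in EOD cycles.

    Assumes a constant EOD frequency, cycles counted from t = 0, and at most one spike
    per cycle (as for P-type units); the floor-based binning is an illustrative choice.
    """
    t = np.asarray(spike_times_s, dtype=float)
    cycles = np.floor(t * eod_freq_hz).astype(np.int64)  # EOD cycle containing each spike
    if np.any(np.diff(cycles) <= 0):
        raise ValueError("spike times unsorted, or more than one spike in an EOD cycle")
    isis = np.diff(cycles)  # X_1, X_2, ... as integer multiples of the EOD period
    return cycles, isis

# Example with a hypothetical 1 kHz EOD: spikes fall in cycles 1, 3 and 5.
cycles, isis = resample_to_eod([0.0012, 0.0033, 0.0055], 1000.0)
print(cycles, isis)
```

Working in integer EOD cycles discards the within-cycle phase jitter while keeping the interval sequence, which is the property the resampling is meant to preserve.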

2.2.1 Autocorrelation function

The normalized autocorrelation function of the ISI sequence is given by the serial correlation coefficients (SCCs), \(\rho _1,\,\rho _2,\,\rho _3,\,\ldots \), with \(\rho _0 = 1\) by definition. These were estimated from time-stamps at the original ADC rate of \(16.67~\text {kHz}\) and at the resampled EOD frequency (Ratnam and Nelson 2000). In some afferents, there is a small difference between the two estimates, particularly in the estimates of \(\rho _1\), but this has negligible effect on the results. The normalized ISI correlation function (i.e., the correlation coefficients) was estimated from the resampled spike trains as follows:

where \(T_1\) is the mean ISI, and M is the number of spikes in a block, typically between 1000 and 3000. Correlation coefficients were estimated in non-overlapping blocks and then averaged over [N/M] blocks. There was no drift in the mean ISI within a block. We usually had about \(2.5 \times 10^5\) spikes per afferent.
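The block-averaging procedure can be sketched as follows, assuming a standard estimator that may differ in normalization details from Eq. (1): within each non-overlapping block, deviations from the block mean (used as an estimate of \(T_1\)) are multiplied at lag k, averaged over the available pairs, and divided by the block variance; the per-block coefficients are then averaged. The function name `scc_blocks` and its defaults (15 lags, blocks of 2000 ISIs rather than spikes) are illustrative assumptions.

```python
import numpy as np

def scc_blocks(isis, max_lag=15, block=2000):
    """Block-averaged serial correlation coefficients rho_1..rho_max_lag.

    Assumed estimator: in each non-overlapping block of `block` ISIs, deviations from the
    block mean are multiplied at lag k, averaged over the block - k available pairs, and
    divided by the block variance; the results are averaged over blocks.
    """
    x = np.asarray(isis, dtype=float)
    n_blocks = len(x) // block                 # [N/M] complete blocks; a partial tail is dropped
    if n_blocks == 0:
        raise ValueError("need at least one full block of ISIs")
    rho = np.zeros(max_lag)
    for b in range(n_blocks):
        d = x[b * block:(b + 1) * block]
        d = d - d.mean()                       # deviations from the block mean ISI
        var = np.mean(d * d)
        for k in range(1, max_lag + 1):
            rho[k - 1] += np.mean(d[:-k] * d[k:]) / var
    return rho / n_blocks

# A perfectly alternating short/long sequence gives rho_1 = -1, rho_2 = +1, ...
print(scc_blocks(np.tile([1.0, 3.0], 2000), max_lag=3))
```

Summing the returned vector gives a quantity of the same kind as the 15-lag sums \(\Sigma \) reported in Figs. 1 and 2.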

Throughout this work, we will use the term covariance to refer to the mean-subtracted cross-correlation. If the two random variables in question are identical, then we will simply refer to covariance as variance, and correlation as autocorrelation. If the correlation is normalized by the variance, then we will refer to it as the correlation coefficient. The abbreviation SCCs stands for ISI serial correlation coefficients, which are the same as the normalized ISI autocorrelation function (ACF).

2.2.2 Partial autocorrelation function

In addition to the normalized autocorrelation (SCCs), we compute the normalized partial autocorrelation \(\phi _{k,\,k}\) between \(X_i\) and \(X_{i+k}\) by removing the linear influence of the intervening variables \(X_{i+1},\,\ldots ,\,X_{i+k-1}\) (Box and Jenkins 1970). The notation \(\phi _{k,\,j}\) means that the process is purely autoregressive (AR) of order k, and \(\phi _{k,\,j}\) is the jth coefficient in the AR model. Partial autocorrelations provide a convenient way to identify an AR process, just as the autocorrelation function, Eq. (1), provides a way to identify a moving average (MA) process. When the ISIs are AR of order p, the partial autocorrelation function (PACF) is finite, with \(\phi _{k,\,k} = 0\) for \(k > p\), whereas the autocorrelation function is infinite in extent (it decays but does not cut off). Conversely, when the process is MA of order m, the autocorrelation function is finite, with \(\rho _k = 0\) for \(k > m\), whereas the partial autocorrelation function is infinite in extent. When the partial autocorrelation and autocorrelation functions are both infinite in extent, the underlying process is neither purely AR nor purely MA, but is an autoregressive moving average (ARMA) process of some unknown order \((p,\,m)\). The partial autocorrelations \(\phi _{1,\,1},\,\phi _{2,\,2},\,\phi _{3,\,3},\,\ldots \), can be obtained for \(k = 1,\,2,\,3,\,\ldots \), by solving the Yule-Walker equations. A more efficient method is to solve Durbin’s recursive equations. Durbin’s formula is (Box and Jenkins 1970)

\[ \phi _{1,\,1} = \rho _1, \tag{2} \]
\[ \phi _{k+1,\,k+1} = \frac{\rho _{k+1} - \sum _{j=1}^{k} \phi _{k,\,j}\,\rho _{k+1-j}}{1 - \sum _{j=1}^{k} \phi _{k,\,j}\,\rho _{j}}, \tag{3} \]
\[ \phi _{k+1,\,j} = \phi _{k,\,j} - \phi _{k+1,\,k+1}\,\phi _{k,\,k+1-j}, \quad j = 1,\,\ldots ,\,k, \tag{4} \]

where the \(\rho _j\) are SCCs obtained from Eq. (1). The \(\phi _{k,\,j}\) in the formula are estimates with some mean and standard deviation over the population. To reduce the estimation error, we can follow the same procedure as for serial correlations by averaging over blocks.
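Durbin’s recursion translates directly into a few lines of code. The sketch below is a standard Durbin-Levinson implementation (the function name `pacf_durbin` is hypothetical) that takes SCCs \(\rho _1,\,\ldots ,\,\rho _K\) and returns \(\phi _{1,\,1},\,\ldots ,\,\phi _{K,\,K}\).

```python
import numpy as np

def pacf_durbin(rho):
    """Partial autocorrelations phi_{k,k}, k = 1..K, from SCCs rho_1..rho_K (Durbin-Levinson)."""
    rho = np.asarray(rho, dtype=float)
    K = len(rho)
    if K == 0:
        return np.zeros(0)
    r = np.concatenate(([1.0], rho))          # r[j] = rho_j, with rho_0 = 1
    pacf = np.zeros(K)
    phi = np.array([r[1]])                    # phi_{1,1} = rho_1
    pacf[0] = r[1]
    for k in range(2, K + 1):
        # phi holds phi_{k-1, j}, j = 1..k-1
        num = r[k] - np.dot(phi, r[k - 1:0:-1])   # rho_k - sum_j phi_{k-1,j} rho_{k-j}
        den = 1.0 - np.dot(phi, r[1:k])           # 1 - sum_j phi_{k-1,j} rho_j
        phi_kk = num / den
        # phi_{k,j} = phi_{k-1,j} - phi_{k,k} phi_{k-1,k-j}, then append phi_{k,k}
        phi = np.append(phi - phi_kk * phi[::-1], phi_kk)
        pacf[k - 1] = phi_kk
    return pacf

print(pacf_durbin(0.5 ** np.arange(1, 6)))    # AR(1) with rho_k = 0.5^k
print(pacf_durbin([-0.5, 0.0, 0.0]))          # Type III pattern (an MA(1) process)
```

For an AR(1) sequence with \(\rho _k = a^k\) the PACF is \(a,\,0,\,0,\,\ldots \), truncating after the first lag as described above. For the Type III pattern (\(\rho _1 = -0.5\), all other \(\rho _k = 0\)), which is MA(1), the recursion returns \(-1/2,\,-1/3,\,-1/4\) for the first three lags and does not truncate.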

Representative normalized interspike interval (ISI) correlation functions from two P-type primary electrosensory afferent spike trains from two fish. For each column, from top to bottom, panels depict a sample stretch of spikes, sequence of ISIs for the sample spikes (normalized to mean ISI), and the pattern of ISI correlations. A Type I (\(\rho _1 > -0.5\)): non-bursting unit with first ISI correlation coefficient \(\rho _1 = -0.36\). Remaining \(\rho _k < 0\) diminish to 0. Sum of correlation coefficients (\(\Sigma \)) over 15 lags is \(-0.48\). B Type II (\(\rho _1 < -0.5\)): Strongly bursting unit with \(\rho _1 = -0.7\) with marked alternating positive and negative correlations. Sum of correlation coefficients (\(\Sigma \)) over 15 lags is \(-0.49\). Spike trains sampled at \(60~\mu \text {s}\). Mean ISI ± SD (in ms): \(2.42 \pm 0.72\) (A), and \(6.04 \pm 3.59\) (B). Electric organ discharge (EOD) frequency: 948 Hz (A), and 990 Hz (B)

Population summaries of normalized ISI correlation function for P-type primary afferent spike-trains (\(N = 52\)). A Summaries of first and second ISI correlation coefficients. A1 Histogram of correlation coefficient between adjacent ISIs (\(\rho _1\)), \(\bar{\rho }_1 = -0.52 \pm 0.14\) (\(\text {mean} \pm \text {s.d.}\)), range \(-0.82\) to \(-0.25\). A2 Histogram of correlation coefficient (rotated counter-clockwise by \(90^{\circ }\)) between every other ISI (\(\rho _2\)), \(\bar{\rho }_2 = 0.10 \pm 0.18\), range \(-0.18\) to 0.57. A3 Anti-diagonal relationship between observed \(\rho _1\) (abscissa) and \(\rho _2\) (ordinate) (filled circles). Line describes best fit, \(\rho _2 = -1.18\rho _1 -0.51\) with \(\text {Pearson's } r = -0.94\). B \(\rho _1\) as a function of firing rate. Filled black circles are the three exemplar neurons considered in this work, and the filled red circles are their matched models. C \(\rho _1\) as a function of coefficient of variation (CV) of ISIs with \(\text {Pearson's } r = -0.67\). D Mean sum of correlation coefficients for the population over 15 lags, \(\sum \,{\rho _k} = -0.475 \pm 0.04\). The population histograms in panels A1 and A2 were reported earlier with a different bin width (see Ratnam and Nelson 2000, Figs. 7A and B therein, respectively)

3 Results

3.1 Experimental results

ISI serial correlation coefficients (SCCs) were estimated from the baseline activity of 52 P-type primary electrosensory afferents in the weakly electric fish Apteronotus leptorhynchus (see Methods). SCCs from two example afferents (Fig. 1) demonstrate the patterns of negative SCCs observed in spike trains, and motivate this work. Statistical properties of these and other spike trains were reported earlier (Ratnam and Nelson 2000), with a qualitative description of SCCs and some descriptive statistics. A complete analytical treatment is undertaken here. Two types of serial interspike interval statistics can be identified according to the value taken by \(\rho _1\) (the first SCC, between adjacent ISIs).

1. Type I: \(-0.5< \rho _1 < 0\). Subsequent \(\rho _k\) are negative and diminish to 0 with increasing k (Fig. 1A). The ISI distributions of these afferents are unimodal (shown later), and their spike trains do not exhibit bursting activity.

2. Type II: \(\rho _1 < -0.5\). Subsequent \(\rho _k\) alternate between positive (\(\rho _{2k}\)) and negative (\(\rho _{2k+1}\)) values, are progressively damped, and diminish to zero (Fig. 1B). The ISI distributions of these spike trains are bimodal, with a prominent peak at an ISI equal to about one period of the electric organ discharge (EOD) (shown later). These spike trains exhibit strong bursting.

Afferent fibers sampled from individual fish were a mix of Types I and II. Additionally, we identify a third type that has not been observed in experimental data (at least by these authors) but is fairly common in some models with adaptation (e.g., Brandman and Nelson 2002; Chacron et al. 2001, see also Discussion). We call this Type III; for this pattern of SCCs, \(\rho _1 = -0.5\) and subsequent \(\rho _k\) are identically zero (Fig. 1). The Type III pattern is a singleton (i.e., there is only one SCC pattern in this class).

The baseline spike-train statistics of 52 P-type afferents reported earlier (Ratnam and Nelson 2000) were analyzed in detail in this work. All observed units showed a negative correlation between succeeding ISIs (\(\rho _1 < 0\)) (Fig. 2A, panels A1 and A3). Experimental spike-trains demonstrated an average \(\bar{\rho }_1 = -0.52 \pm 0.14\) (\(\text {mean} \pm \text {s.d.}\)) (\(N = 52\)). Nearly half of these fibers had \(\rho _1 > -0.5\) (\(N = 24\)) while the remaining fibers had \(\rho _1 < -0.5\) (\(N = 28\)). The second SCC (\(\rho _2\)), between every other ISI (Fig. 2A, panels A2 and A3) assumed positive (\(N = 36\)) or negative (\(N = 16\)) values with an average of \(\bar{\rho }_2 = 0.10 \pm 0.18\). The correlation coefficient at the second lag (\(\rho _2\)) is linearly dependent on \(\rho _1\) with a positive \(\rho _2\) more likely if \(\rho _1 < - 0.5\) (Fig. 2A, panel A3). The correlation between \(\rho _1\) and \(\rho _2\) is \(-0.94\). The linear relationship is described by the equation \(\rho _2 = -1.18 \rho _1 - 0.51\), with a standard error (SE) of 0.06 (slope) and 0.03 (intercept), and significance \(p = 1.11 \times 10^{-25}\) (slope) and \(p = 1.42 \times 10^{-21}\) (intercept). This is close to the line \(\rho _2 = -\rho _1 - 0.5\), the significance of which is discussed further below. For all afferents across the population, Fig. 2B depicts \(\rho _1\) as a function of the average firing rate of the neuron, and Fig. 2C depicts \(\rho _1\) as a function of the coefficient of variation (CV) of the ISIs. Finally, the sum of the SCCs for each fiber taken over the first fifteen lags (excluding zeroth lag) has a population mean \(\sum _k\,\rho _k = -0.475 \pm 0.042\) (\(N = 52\)) which is just short of \(-0.5\) (Fig. 2D). Fifty out of 52 afferents had sums larger than \(-0.5\), whereas the remaining two afferents had a sum smaller than \(-0.5\) (\(-0.504\) and \(-0.502\), respectively). 
However, for these two fibers, the difference from \(-0.5\) is small. We are not able to state with confidence that the trailing digits of these two estimates are significant.

Schematic of neuron with deterministic dynamic threshold. A v is a constant bias voltage that generates spontaneous activity, \(r\left( t\right) \) is a dynamic threshold, \(\gamma \) is a spike threshold such that a spike is emitted when \(v-r\left( t\right) = \gamma \). Following a spike, the dynamic threshold suffers a jump of fixed magnitude A. The membrane voltage is non-resetting. Spike times are \(t_0,\,t_1,\,t_2,\,\ldots \). Historically, the spike threshold was set to zero, i.e., \(\gamma = 0\) and a spike is fired when \(v = r\left( t\right) \). Although it makes no difference to the results presented here, the more general form with \(\gamma \) is considered for reasons given in the Discussion. B The spike encoder with dynamic threshold can be viewed as a feedback control loop where the spike-train \(\delta \left( t - t_i\right) \) is filtered by \(h\left( t\right) \) to generate the time-varying dynamic threshold \(r\left( t\right) \) which can be viewed as an estimate of the membrane voltage v(t). The comparator generates the estimation error \(e(t) = v-r\left( t\right) \) (see also Panel A). The estimation or coding error drives the spike generator, and a spike is fired whenever \(e(t) \ge \gamma \). The simplest form for the dynamic threshold (the estimation filter) \(h\left( t\right) \) mimics an RC membrane whose filter function is low-pass and given by \(h\left( t\right) = A\exp \left( -t/\tau \right) \). This is the form shown in panel A

3.2 Deterministic dynamic threshold model

In the simplest form of the dynamic threshold model (Jones et al. 2015), spike initiation is governed by two time-varying functions: the sub-threshold membrane potential v(t) and a time-varying, or dynamic, threshold \(r\left( t\right) \) (Fig. 3A). In the sub-threshold regime, \(v(t) < r\left( t\right) \). Most models (e.g., Kobayashi et al. 2009) assume that a spike is emitted when v(t) exceeds \(r\left( t\right) \) from below. To induce memory, the dynamic threshold is never reset (Fig. 3A); instead, it jumps whenever a spike is generated and then gradually relaxes to its quiescent value. This “jump and decay” is a fixed function, the dynamic threshold element, which usually takes the form \(h\left( t\right) = A\exp \left( -t/\tau \right) \), where A is the instantaneous jump in threshold following a spike at \(t = 0\), and \(\tau \) is the time-constant of relaxation. Between two spikes, \(t_k < t \le t_{k+1}\), the dynamic threshold is \(r\left( t\right) = r(t_k^-)\exp \left( -(t - t_k)/\tau \right) + h(t - t_k)\), where \(r(t_k^-)\) is the value assumed immediately before the spike at \(t = t_k\). Unrolling this recursion gives \(r\left( t\right) = \sum _{j \le k} h\left( t - t_j\right) \), so the dynamic threshold captures the sum over the history of spiking activity.
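The history dependence can be made concrete with a short sketch that evaluates \(r\left( t\right) \) for arbitrary spike times in two equivalent ways: as the explicit sum \(\sum _j h\left( t - t_j\right) \) with \(h\left( t\right) = A\exp \left( -t/\tau \right) \), and recursively, by letting the previous value decay and adding A at each spike. The function names are hypothetical, and r is evaluated from spikes strictly before t (i.e., just before any spike at t itself).

```python
import math

def threshold_history_sum(t, spikes, A, tau):
    """r(t) as the explicit sum of h(t - t_j) = A*exp(-(t - t_j)/tau) over spikes t_j < t."""
    return sum(A * math.exp(-(t - tj) / tau) for tj in spikes if tj < t)

def threshold_recursive(t, spikes, A, tau):
    """The same r(t), built spike by spike: decay the previous value, then add the jump A."""
    r, t_prev = 0.0, None
    for tj in sorted(s for s in spikes if s < t):
        if t_prev is not None:
            r *= math.exp(-(tj - t_prev) / tau)   # r(t_j^-): decay since the previous spike
        r += A                                     # r(t_j^+) = r(t_j^-) + A (no reset)
        t_prev = tj
    if t_prev is None:
        return 0.0                                 # no spikes yet: quiescent value 0
    return r * math.exp(-(t - t_prev) / tau)       # decay from the last spike to t

spikes = [0.0, 1.0, 1.5, 4.0]                      # illustrative spike times
print(threshold_history_sum(5.0, spikes, 0.3, 2.0), threshold_recursive(5.0, spikes, 0.3, 2.0))
```

The two functions agree to rounding error, which is the equivalence between the recursive and history-sum forms of the dynamic threshold.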

Most neuron models in the literature, including models incorporating a dynamic threshold, typically integrate the input using a low-pass filter (i.e., pass it through a leaky integrator) or a perfect integrator and then reset the membrane voltage to a constant \(v_r\) following a spike (e.g., Chacron et al. 2001; Schwalger and Lindner 2013). Some leaky integrators have been assumed to be non-resetting (e.g., Kobayashi et al. 2009). In the form of the model considered in this work and earlier (Jones et al. 2015; Johnson et al. 2015, 2016), the voltage v(t) is not integrated (i.e., there is no filtering), and it is not reset following a spike. These assumptions remove the effects of filtering in the soma and dendrites and remove all the nonlinearities except for the spike-generator, which we assume generates a sequence of Dirac-delta functions.

For modeling spontaneous activity, we consider a steady DC-level bias voltage \(v > 0\). In its most general form, a spike is fired whenever \(v - r\left( t\right) = \gamma \), where \(\gamma \) is a constant spike-threshold (Fig. 3A). Historically, and in much of the literature, \(\gamma = 0\) (e.g., Kobayashi et al. 2009; Chacron et al. 2001; Schwalger and Lindner 2013). We use the more general form with a constant, nonzero \(\gamma \). The specific value assumed by \(\gamma \) plays a role in optimal estimation of the stimulus (Jones et al. 2015; Johnson et al. 2016) but does not influence serial correlation coefficients. This is addressed in the Discussion. The major advantage of these simplifications is that they allow us to focus on the role of the dynamic threshold element \(h\left( t\right) \) in generating SCCs.

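To make the presence of memory explicit, we note that the condition for firing the kth spike at \(t_k\) is met when

\[ v - \sum _{j=0}^{k-1} h\left( t_k - t_j\right) = v - \sum _{j=0}^{k-1} A\exp \left( -\frac{t_k - t_j}{\tau }\right) = \gamma . \tag{5} \]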

It is evident that memory builds up because of a summation over history of spiking activity. The spike encoder with dynamic threshold implicitly incorporates a feedback loop (Fig. 3B) and so a different view of the above model is to think of the dynamic threshold \(r\left( t\right) \) as an ongoing estimate of the membrane voltage \(v\left( t\right) \). Here, the dynamic threshold \(h\left( t\right) = A\exp \left( -t/\tau \right) \) acts as a linear low-pass filter. It filters the spike train to form an ongoing estimate \(r\left( t\right) \) of the voltage \(v\left( t\right) \). The instantaneous error in the estimate (the coding error) is then \(e\left( t\right) = v\left( t\right) - r\left( t\right) \) (Fig. 3B). When the error exceeds \(\gamma \), a spike is output and the threshold increases by A to reduce the error. The time-varying dynamic threshold tracks \(v\left( t\right) \) much like a home thermostat tracks a temperature set-point (Fig. 3A). Viewed in this way, it is the estimation error, and not the signal \(v\left( t\right) \), which directly drives the spike generator and determines when the next spike should be generated (Fig. 3B).

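From Fig. 3A, the exponential decay can be approximated by a piecewise-linear function when the ISI is small compared with \(\tau \). If \(t_{i-1}\) and \(t_{i}\), \(i \ge 1\), are successive spike-times (Fig. 3A), then the time evolution of the dynamic threshold \(r\left( t\right) \) is given by

\[ r\left( t\right) = \left( v - \gamma + A\right) \left( 1 - \frac{t - t_{i-1}}{\tau }\right) , \quad t_{i-1} < t \le t_i . \tag{6} \]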

Note that v, \(\gamma \), A, \(\tau \) are constant, and so we can define \(m = \left( v-\gamma +A\right) /\tau \) so that the slope of the decaying dynamic threshold is \(-m\). The ISI can be obtained directly as (see Appendix for details)

\[ t_i - t_{i-1} = \frac{A}{m} = \frac{A\tau }{v - \gamma + A}, \tag{7} \]

which is the deterministic firing rule for a spike generator with a constant, DC-level input voltage.
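A minimal event-driven sketch of the noiseless model shows how good the piecewise-linear approximation is, assuming the exponential kernel \(h\left( t\right) = A\exp \left( -t/\tau \right) \). Because \(r\left( t\right) \) decays exponentially between spikes and a spike is fired when \(r\left( t\right) \) has decayed to \(v - \gamma \), the exact steady-state ISI is \(\tau \ln \left( 1 + A/(v - \gamma )\right) \), which the linearization replaces with \(A/m\). The function name, the initial condition `r0`, and the parameter values are illustrative assumptions.

```python
import math

def simulate_deterministic(v, gamma, A, tau, n_spikes, r0):
    """Event-driven simulation of the noiseless dynamic threshold model, h(t) = A exp(-t/tau).

    Between spikes r(t) decays exponentially; a spike is fired when v - r(t) = gamma,
    i.e. when r has decayed to v - gamma, after which r jumps by A (no reset).
    r0 > v - gamma is the threshold value at t = 0 (an assumed initial condition).
    """
    assert v - gamma > 0 and r0 > v - gamma
    t, r, spikes = 0.0, r0, []
    for _ in range(n_spikes):
        t += tau * math.log(r / (v - gamma))   # time for r to decay from r to v - gamma
        spikes.append(t)
        r = (v - gamma) + A                    # value just before the spike plus the jump A
    return spikes

v, gamma, A, tau = 1.0, 0.2, 0.02, 10e-3       # illustrative values (tau in seconds)
spikes = simulate_deterministic(v, gamma, A, tau, n_spikes=5, r0=v)
isis = [b - a for a, b in zip(spikes, spikes[1:])]
m = (v - gamma + A) / tau                      # slope of the linearized threshold decay
print(isis[0], A / m)                          # exact steady-state ISI vs approximation A/m
```

With these values the exact ISI is about 0.247 ms and \(A/m\) about 0.244 ms. The two agree to first order in \(A/(v - \gamma )\), consistent with the requirement that the ISI be small compared with \(\tau \).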

3.3 Stochastic extension of the dynamic threshold model

In the schematic shown in Fig. 3B, noise injected in the body of the feedback loop will reverberate around the loop and generate memory. In the literature, a stochastic extension of this model is usually \(v -r\left( t\right) + w\left( t\right) = 0\), where w is independent Gaussian noise; that is, the noise is continuous in time. Here, we instead consider a discrete, noisy threshold \(\gamma \). Subsequently, we will provide some results for a noisy time-constant \(\tau \) in the dynamic threshold element.

Figure 4 depicts the stochastic dynamic threshold model where the spike threshold (\(\gamma \)) is a stochastic process. All other aspects of the model are unchanged from the deterministic model (Fig. 3). Let \(\gamma \) be a discrete wide-sense stationary process with mean \({{\,\mathrm{\mathbf {E}}\,}}[\gamma ]\), discrete autocorrelation function \({{\,\mathrm{\mathbf {R}}\,}}_k\) and power \({{\,\mathrm{\mathbf {E}}\,}}\left[ \gamma ^2\right] = {{\,\mathrm{\mathbf {R}}\,}}_0\). The spike threshold with additive noise assumes the random value \(\gamma _i\), \(i \ge 1\), immediately after the \((i-1)\)th spike and remains constant in the time interval \(t_{i-1} < t \le t_{i}\) (Fig. 4A) (Chacron et al. 2004a; Gestri et al. 1980). Thus, the ith spike is emitted when the error satisfies the condition

\[ v - r\left( t_i\right) = \gamma _i . \tag{8} \]

Subsequently, the instantaneous value of the dynamic threshold jumps to a higher value specified by \(v - \gamma _i + A\), and the noisy spike threshold assumes a new value \(\gamma _{i+1}\). From Fig. 4A, proceeding as before,

\[ t_i - t_{i-1} = \frac{A + \gamma _i - \gamma _{i-1}}{m}. \tag{9} \]

Schematic of a neuron with dynamic threshold and stochastic firing threshold. Panel descriptions follow Fig. 3 and only differences are noted. A The spike threshold is \(\gamma _i = v - r\left( t\right) \) where \(\gamma _i\) is a random value generated at \(t_{i-1}^+\), and held constant until the next spike at \(t_i\). The discrete noise sequence \(\gamma _i\) is generated by a discrete wide-sense stationary process with mean \(\gamma \) and unknown discrete autocorrelation function \({{\,\mathrm{\mathbf {R}}\,}}_k\). The goal is to determine \({{\,\mathrm{\mathbf {R}}\,}}_k\) which will generate a prescribed sequence of ISI serial correlation coefficients \(\rho _k\). To reduce clutter, the spike threshold \(v - \gamma _i\) is depicted as \(\gamma _i\). B Block diagram showing the stochastic modification of the spike threshold. Symbols and additional description as in text and Fig. 3

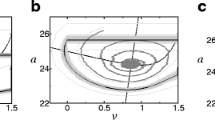

Threshold noise, \(\gamma _k\): Normalized autocorrelation (top row, \({{\,\mathrm{\mathbf {R}}\,}}_k\)) and partial autocorrelation functions (bottom row, calculated from \({{\,\mathrm{\mathbf {R}}\,}}_k\) and Eqs. 2, 3, and 4). A Generates Type I ISI SCCs matched to the afferent depicted in Fig. 1A with no bursting activity. B Generates Type II ISI SCCs from an afferent with moderate bursting activity. C Generates Type II ISI SCCs matched to the afferent depicted in Fig. 1B with strong bursting activity. Autocorrelation function that matches Type III SCCs will be zero for all nonzero lags (not shown). Panels D–F show partial autocorrelation functions of the corresponding noise process shown in panels (A–C), respectively. The partial autocorrelations are nonzero for the first lag, and zero for all lags \(k > 1\). By design, the noise generator is a first-order autoregressive (AR) process (see Eqs. 18 and 22 for \({{\,\mathrm{\mathbf {R}}\,}}_k\))

The mean ISI is therefore \({{\,\mathrm{\mathbf {E}}\,}}\left[ t_{i}-t_{i-1}\right] = A/m\), as in the deterministic case given by Eq. (7). From the assumption of wide-sense stationarity, the autocorrelation function \({{\,\mathrm{\mathbf {E}}\,}}\left[ \gamma _i\, \gamma _j\right] \) can be written as \({{\,\mathrm{\mathbf {R}}\,}}\left( t_i - t_j\right) \). This autocorrelation function is discrete, as the following argument shows (see also Appendix). Denote the mean of the kth-order interval by \(T_k = {{\,\mathrm{\mathbf {E}}\,}}[t_{i+k} - t_i]\); then the mean ISI is \(T_1\) \((=A/m)\), and \(T_k = kT_1\). Because a realization of the random variable \(\gamma \) is generated once every ISI, \({{\,\mathrm{\mathbf {R}}\,}}\) takes discrete values at successive multiples of the mean ISI, i.e., \({{\,\mathrm{\mathbf {R}}\,}}\left( T_1\right) \), \({{\,\mathrm{\mathbf {R}}\,}}\left( T_2\right) \), etc., which will be denoted by \({{\,\mathrm{\mathbf {R}}\,}}_1\), \({{\,\mathrm{\mathbf {R}}\,}}_2\), etc., respectively. As noted before, \({{\,\mathrm{\mathbf {R}}\,}}_0\) is the noise power. That is, we can write

where k is the number of intervening ISIs. The serial correlation coefficients at lag k are defined as (Cox and Lewis 1966)

For a wide-sense stationary process, the covariances depend only on the lag k and not on the spike index i, so the subscript i can be dropped. From the relations and definitions in Eqs. (9), (10), and (11), and after some routine algebra (see Appendix), we obtain

Further below we determine \({{\,\mathrm{\mathbf {R}}\,}}_k\) from experimental data. The serial correlation coefficients given by Eqs. (12) and (13) are independent of the slope m of the linearized dynamic threshold and of its gain A. Thus, for a constant input, the observed correlation structure of the spike train is determined solely by the noise added to the deterministic firing threshold \(\gamma \).
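The independence of the SCCs from A and m can be checked numerically. The sketch below assumes Eq. (9) in the form \(t_i - t_{i-1} = (\gamma_i - \gamma_{i-1} + A)/m\) and an AR(1) threshold noise with coefficient 0.5; all parameter values and the helper names (`ar1_noise`, `linearized_isis`, `serial_correlations`) are hypothetical. Driving two different (A, m) settings with the same noise changes the mean ISI (A/m) but leaves the sample SCCs unchanged, because the two ISI sequences differ only by an affine rescaling. For this noise the SCCs should be close to the geometric series \(-(1-a)a^{k-1}/2\) with \(a = 0.5\).

```python
import numpy as np

def ar1_noise(n, coef, mean, sd, rng):
    """Stationary AR(1) sequence: lag-1 coefficient `coef` (|coef| < 1),
    mean `mean`, marginal standard deviation `sd` (hypothetical helper)."""
    innov = rng.normal(0.0, sd * np.sqrt(1.0 - coef**2), size=n)  # keeps variance at sd**2
    x = np.empty(n)
    x[0] = rng.normal(0.0, sd)              # start in the stationary distribution
    for i in range(1, n):
        x[i] = coef * x[i - 1] + innov[i]
    return mean + x

def linearized_isis(gamma, A, m):
    """ISI_i = (gamma_i - gamma_{i-1} + A) / m for the linearized dynamic threshold."""
    return (np.diff(gamma) + A) / m

def serial_correlations(isi, max_lag):
    """Sample serial correlation coefficients rho_1, ..., rho_max_lag."""
    x = isi - isi.mean()
    c0 = np.dot(x, x)
    return np.array([np.dot(x[:-k], x[k:]) / c0 for k in range(1, max_lag + 1)])

rng = np.random.default_rng(0)
gamma = ar1_noise(200_000, coef=0.5, mean=0.1, sd=0.03, rng=rng)  # threshold noise (V)

# The same noise sequence drives two different dynamic-threshold settings.
isi_1 = linearized_isis(gamma, A=0.15, m=0.05)   # A/m = 3.0 ms
isi_2 = linearized_isis(gamma, A=0.30, m=0.20)   # A/m = 1.5 ms

print(isi_1.mean(), isi_2.mean())                # close to 3.0 and 1.5
print(serial_correlations(isi_1, 3))             # close to -0.25, -0.125, -0.0625
print(np.allclose(serial_correlations(isi_1, 10),
                  serial_correlations(isi_2, 10)))   # True
```

The mean of \(\gamma\) (0.1 here) plays no role, since only differences \(\gamma_i - \gamma_{i-1}\) enter the ISIs.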

3.3.1 Limiting sum of ISI correlation coefficients and power spectra

We assume that the process \(\gamma \) is aperiodic and that its autocorrelation function satisfies \({{\,\mathrm{\mathbf {R}}\,}}_N \rightarrow 0\) as \(N \rightarrow \infty \); that is, the noise process decorrelates over long time-scales. From this assumption and Eq. (13), it follows that (see Appendix)

\[ \sum _{k \ge 1}\,\rho _k = -\frac{1}{2}. \qquad (14) \]

This is the limiting sum of ISI serial correlation coefficients. Let the mean and variance of ISIs be denoted by \(T_1\) and \(V_1\), respectively, and the coefficient of variation of ISIs as \(C = \sqrt{V_1}/T_1\). If \(P\left( \omega \right) \) is the power spectrum of the spike train, then the DC-component of the power is given by (Cox and Lewis 1966)

Introducing Eq. (14) into Eq. (15), we obtain

yielding a perfect DC block.
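The limiting sum in Eq. (14) and the resulting DC block can be checked with a short telescoping argument. The sketch below is our own reconstruction from Eq. (9) under wide-sense stationarity, with \(\mathbf{R}_k\) treated as the autocovariance of \(\gamma\) and \(\mathbf{R}_0 > \mathbf{R}_1\); it is intended to be equivalent to Eqs. (12)–(13), which are not reproduced here. The last line uses the standard point-process result that the zero-frequency spectral density is proportional to \(C^2(1 + 2\sum_{k\ge1}\rho_k)\), with normalization constants omitted.

\[
\begin{aligned}
\operatorname{Cov}\left(t_{i}-t_{i-1},\,t_{i+k}-t_{i+k-1}\right) &= \frac{2\mathbf{R}_k-\mathbf{R}_{k-1}-\mathbf{R}_{k+1}}{m^2}, \qquad k \ge 1,\\
\operatorname{Var}\left(t_{i}-t_{i-1}\right) &= \frac{2\left(\mathbf{R}_0-\mathbf{R}_1\right)}{m^2},\\
\rho_k &= \frac{2\mathbf{R}_k-\mathbf{R}_{k-1}-\mathbf{R}_{k+1}}{2\left(\mathbf{R}_0-\mathbf{R}_1\right)},\\
\sum_{k=1}^{N}\left(2\mathbf{R}_k-\mathbf{R}_{k-1}-\mathbf{R}_{k+1}\right) &= \sum_{k=1}^{N}\left(\mathbf{R}_k-\mathbf{R}_{k-1}\right)-\sum_{k=1}^{N}\left(\mathbf{R}_{k+1}-\mathbf{R}_k\right) = \left(\mathbf{R}_N-\mathbf{R}_0\right)-\left(\mathbf{R}_{N+1}-\mathbf{R}_1\right),\\
\sum_{k\ge 1}\rho_k &= \lim_{N\to\infty}\frac{\mathbf{R}_N-\mathbf{R}_{N+1}-\left(\mathbf{R}_0-\mathbf{R}_1\right)}{2\left(\mathbf{R}_0-\mathbf{R}_1\right)} = -\frac{1}{2},\\
1+2\sum_{k\ge 1}\rho_k &= 0 \;\Rightarrow\; P(0) \propto C^2\Bigl(1+2\sum_{k\ge 1}\rho_k\Bigr) = 0.
\end{aligned}
\]

Only the decay \(\mathbf{R}_N \to 0\) is needed; the detailed shape of the noise correlation function cancels in the telescoping sum.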

The limiting sums of SCCs from experimental spike trains, calculated up to 15 lags, are depicted in Fig. 2D. The population of afferents showed a range of limiting sums, with a mean of \(-0.475 \pm 0.04\) (\(\text {mean} \pm \text {s.d.}\)). As noted earlier, the limiting sum was less than \(-0.5\) in only two afferents, where the sums were estimated as \(-0.505\) and \(-0.502\).

3.3.2 Predicting Type I and Type II serial correlation coefficients

In the relationships for the SCCs given by Eqs. (12) and (13), the noise process that drives the spike generator has an unknown correlation function \({{\,\mathrm{\mathbf {R}}\,}}\) which must be determined so as to predict experimentally observed SCCs. Here, we are interested in identifying the simplest process that can satisfactorily capture observed SCCs.

Consider a Gauss–Markov, i.e., Ornstein–Uhlenbeck (OU), process whose autocorrelation relaxes as \(\exp \left( -t/\tau _\gamma \right) \) with relaxation time \(\tau _\gamma \), where the subscript \(\gamma \) signifies the spike threshold. We are interested in a discrete-time realization of the process. We first convert the decaying exponential to a geometric series whose time-base is in integer multiples of the mean ISI (see Sect. 3.3 and Eq. 10). Thus, the mean ISI becomes the “sampling period”, and the exponential is sampled at multiples of \(T_1\), i.e., at \(t = kT_1\), with \(k = 1,\,2,\,3,\ldots \). Following this procedure, the continuous-time relaxation \(\exp \left( -kT_1/\tau _\gamma \right) \) becomes the discrete-time (sampled) geometric series \(a^{k} = \exp \left( -kT_1/\tau _\gamma \right) \), where the parameter \(a = \exp \left( -T_1/\tau _\gamma \right) < 1\). In the discrete formalism, the continuous-time first-order Gauss–Markov process (OU process) with relaxation time \(\tau _\gamma \) thus becomes a first-order autoregressive (AR) process with parameter a. We show this below, where we define two first-order AR processes which generate Type I and Type II SCCs, respectively.
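To make the sampling argument concrete, the sketch below draws an OU process exactly at multiples of an assumed mean ISI (\(T_1 = 3\) ms, \(\tau_\gamma = 4\) ms, unit variance; all values hypothetical) and checks that its normalized autocorrelation at lag k is close to \(a^k\) with \(a = \exp(-T_1/\tau_\gamma)\). The helper names `sampled_ou` and `sample_autocorr` are ours.

```python
import numpy as np

def sampled_ou(n, T1, tau_gamma, sd, rng):
    """Zero-mean OU process with relaxation time tau_gamma, observed every T1.

    Exact discretization: x_k = a x_{k-1} + e_k with a = exp(-T1 / tau_gamma)
    and e_k ~ N(0, sd**2 (1 - a**2)), i.e. a first-order AR process whose
    stationary standard deviation is sd.
    """
    a = np.exp(-T1 / tau_gamma)
    e = rng.normal(0.0, sd * np.sqrt(1.0 - a**2), size=n)
    x = np.empty(n)
    x[0] = rng.normal(0.0, sd)          # first sample drawn from the stationary law
    for k in range(1, n):
        x[k] = a * x[k - 1] + e[k]
    return x, a

def sample_autocorr(x, k):
    """Normalized sample autocorrelation at lag k >= 1."""
    x = x - x.mean()
    return np.dot(x[:-k], x[k:]) / np.dot(x, x)

rng = np.random.default_rng(1)
x, a = sampled_ou(100_000, T1=3.0, tau_gamma=4.0, sd=1.0, rng=rng)
for k in (1, 2, 3):
    print(k, round(sample_autocorr(x, k), 3), round(a**k, 3))   # sample vs a**k
```

Because the OU transition over one sampling period is itself Gaussian with decay factor a, no approximation beyond sampling is involved.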

Type I serial correlation coefficients Type I afferent spiking activity demonstrates serial correlations where \(-0.5< \rho _1 < 0\), and subsequent \(\rho _k\) are negative and diminish to 0 with increasing k (Fig. 1A). These spike trains have a unimodal ISI distribution and do not display bursting activity. They can be generated from the ansatz, a first-order AR process

where \(\gamma _k\) is the noise added to the threshold at the kth spike (Fig. 4), a is the AR parameter, which is set by the relaxation time of the noise process through \(a = \exp \left( -T_1/\tau _\gamma \right) \) (see above), and \(w_k\) is white noise input to the AR process. The output \(\gamma \) is wide-sense stationary, and its discrete autocorrelation function \({{\,\mathrm{\mathbf {R}}\,}}_k\) is

Equations (13) and (18) yield the geometric series

From Eq. (19), and noting that \(0< a < 1\), we conclude that \(\rho _1 > -0.5\), \(\rho _k < 0\) for all k, \(\mid \rho _{k-1}\mid > \mid \rho _k\mid \), and \(\rho _k \rightarrow 0\) as \(k \rightarrow \infty \). This is the observed Type I pattern of SCCs. Further, summing the geometric series Eq. (19) yields \(\sum _{k \ge 1}\,\rho _k = -0.5\) as stated in Eq. (14). The AR parameter a can be estimated from experimentally determined SCCs. From Eq. (19) this is

In practice, a better estimate is often obtained from the ratio \(a = \rho _2/\rho _1\), where \(\rho _k\) are available from experimental data.
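As a concrete forward calculation, the sketch below maps a noise autocovariance sequence to ISI SCCs. It uses \(\rho_k = (2\mathbf{R}_k - \mathbf{R}_{k-1} - \mathbf{R}_{k+1})/(2(\mathbf{R}_0 - \mathbf{R}_1))\), which we obtained from Eq. (9) under wide-sense stationarity and which should agree with Eqs. (12)–(13); the helper name `sccs_from_noise_acf` and the value \(a = 0.5\) are illustrative assumptions. With \(\mathbf{R}_k = a^k\) it reproduces the geometric progression \(\rho_k = -(1-a)a^{k-1}/2\), so that in this idealized setting both \(1 + 2\rho_1\) and \(\rho_2/\rho_1\) return a; white noise (Type III) gives \(\rho_1 = -0.5\) and zeros at all later lags.

```python
import numpy as np

def sccs_from_noise_acf(R, max_lag):
    """ISI serial correlations rho_1..rho_max_lag from noise autocovariances
    R[0], R[1], ... (requires len(R) >= max_lag + 2)."""
    R = np.asarray(R, dtype=float)
    k = np.arange(1, max_lag + 1)
    return (2 * R[k] - R[k - 1] - R[k + 1]) / (2 * (R[0] - R[1]))

a = 0.5                              # assumed AR parameter, 0 < a < 1 (Type I)
R = a ** np.arange(62)               # R_k = R_0 a^k with R_0 = 1
rho = sccs_from_noise_acf(R, 60)

print(rho[:4])                       # -0.25, -0.125, -0.0625, -0.03125
print(1 + 2 * rho[0], rho[1] / rho[0])   # both recover a = 0.5
print(rho.sum())                     # close to -0.5 (truncated at 60 lags)

R_white = np.r_[1.0, np.zeros(11)]   # white threshold noise (Type III)
print(sccs_from_noise_acf(R_white, 10))  # -0.5 at lag 1, zeros afterwards
```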

We model the Type I P-type primary electrosensory afferent depicted in Fig. 1A using a noisy threshold with correlation function \({{\,\mathrm{\mathbf {R}}\,}}_k\) specified by Eq. (18) and shown in Fig. 5A. The dynamic threshold parameters were determined from the experimental data and tuned to match the afferent SCCs, ISI, and joint ISI distributions (Fig. 6). By design, the noise process is first-order autoregressive, and this is reflected in the partial autocorrelation function calculated from the noise samples (Fig. 5D). The top row (Fig. 6A–C) depicts the ISI distribution, joint ISI distribution, and serial correlation coefficients of the afferent, respectively. Type I spike trains do not display bursting activity, and their ISI distribution is unimodal. The bottom row (Fig. 6D–F) shows the same quantities for a matched model using the noise correlation function given by Eq. (18). The adjacent-ISI SCCs \(\rho _1\) are \(-0.39\) (data) and \(-0.40\) (model), and the mean sums of SCCs are \(-0.48\) (data) and \(-0.5\) (model). Thus, the observed pattern of Type I SCCs is reproduced.

Type II serial correlation coefficients Type II afferent spiking activity demonstrates serial correlations where \(\rho _1 < -0.5\) and successive \(\rho _k\) alternate between positive (\(\rho _{2k}\)) and negative (\(\rho _{2k+1}\)) values, are progressively damped, and diminish to zero (Fig. 1B). These spike trains have bimodal ISI distributions and display bursting activity. Proceeding as before, they can be generated from the ansatz, a first-order AR process

with discrete autocorrelation function

Equations (13) and (22) yield the geometric series

From Eq. (23), and noting that \(0< a < 1\), we conclude that \(\rho _1 < -0.5\), \(\rho _{2k} > 0\), \(\rho _{2k+1} < 0\), \(\mid \rho _{2k}\mid > \mid \rho _{2k+1}\mid \), and \(\rho _k \rightarrow 0\) as \(k \rightarrow \infty \). This is the observed Type II pattern of SCCs. Further, summing the geometric series (Eq. 23) yields \(\sum _{k \ge 1}\,\rho _k = -0.5\) as stated in Eq. (14). The AR parameter a can be estimated from experimentally determined SCCs. From Eq. (23) this is

As noted for Type I SCCs, a better estimate is often obtained from the ratio \(a = -\rho _2/\rho _1\). Finally, we note that the Type I formalism extends naturally to the Type II formalism; the only difference is that the coefficient of the first-order process becomes negative (a is replaced by \(-a\)). This simple substitution in Eqs. (18), (19), and (20) yields Eqs. (22), (23), and (24), respectively.
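For the inverse direction, a small helper can classify an afferent and estimate the AR parameter from measured SCCs. The sketch below is ours and assumes the first-order AR model exactly: writing the signed lag-1 noise coefficient as c (\(c = a\) for Type I, \(c = -a\) for Type II, \(c = 0\) for Type III), the geometric series with limiting sum \(-0.5\) gives \(\rho_1 = -(1-c)/2\), so \(c = 1 + 2\rho_1\), while the ratio \(\rho_2/\rho_1\) also equals c. The name `classify_and_estimate` and the test values are hypothetical; on real data the two estimates need not agree.

```python
def classify_and_estimate(rho1, rho2=None):
    """Hypothetical helper: classify an SCC pattern and estimate the AR parameter.

    Assumes first-order AR threshold noise with signed coefficient c, for which
    rho_1 = -(1 - c) / 2 and rho_{k+1} / rho_k = c. Returns (type, |c|).
    """
    if rho2 is not None:
        c = rho2 / rho1          # ratio estimate (often the more robust one)
    else:
        c = 1.0 + 2.0 * rho1     # estimate from the adjacent-ISI correlation alone
    if c > 0:
        kind = "Type I"          # slow noise, decaying correlation
    elif c < 0:
        kind = "Type II"         # fast noise, alternating correlation
    else:
        kind = "Type III"        # white threshold noise, rho_1 = -0.5
    return kind, abs(c)

# Idealized inputs generated from the geometric series for c = 0.4, -0.6, 0.
for c_true in (0.4, -0.6, 0.0):
    rho1 = -(1.0 - c_true) / 2.0
    rho2 = c_true * rho1
    print(c_true, classify_and_estimate(rho1), classify_and_estimate(rho1, rho2))
```

The exact-zero test for Type III is only meaningful for idealized inputs; with measured SCCs, \(1 + 2\rho_1\) will essentially never be exactly zero.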

Type I neuron with no bursting activity. Top row depicts experimental spike-train from a P-type primary electrosensory afferent. A The spike train has a unimodal interspike interval (ISI) distribution and does not display bursting activity. Abscissa is ISI in multiples of electric organ discharge (EOD) period and ordinate is probability. B Joint interspike interval distribution showing probability of observing successive intervals \(\text {ISI}\left( i\right) \) (abscissa) and \(\text {ISI}\left( i+1\right) \) (ordinate). The size of the circle is proportional to the probability of jointly observing the adjacent ISIs, i.e., \(P\left( i, \, i+1\right) \). C Normalized correlation (ordinate) as a function of lag measured in multiples of mean ISI (abscissa). Spike-train sampled at EOD period. ISI correlations for this afferent are shown in Fig. 1A at a different sampling rate (see Methods). Bottom row depicts results for matched model using colored noise with correlation function given by Eq. (18) and shown in Fig. 5A. Panel descriptions as in top row, except in F where open circles denote experimental data taken from (C). EOD period: 1.06 ms, mean ISI: 2.42 ms. To generate model results, \(v = 1.845\) V, adaptive threshold parameters: \(A = 0.15\) and \(\tau = 30\) ms, AR parameter: \(a = 0.4\) (\(\tau _\gamma = 2.09\) ms), and \({{\,\mathrm{\mathbf {R}}\,}}_0 = 1.07 \times 10^{-3}~\text {V}^2\) (\(\text {SNR} = 35~\text {dB}\))

Type II neuron showing moderate bursting activity. Description is identical to Fig. 6. Top row depicts experimental spike train from a P-type primary electrosensory afferent. Bottom row depicts results for matched model using colored noise with correlation function given by Eq. (22) and shown in Fig. 5B. EOD period: 1.31 ms, mean ISI: 3.77 ms. To generate model results, \(v = 1.845\) V, adaptive threshold parameters: \(A = 0.28\) and \(\tau = 26\) ms, AR parameter: \(a = 0.29\) (\(\tau _\gamma = 3.18\) ms), and \({{\,\mathrm{\mathbf {R}}\,}}_0 = 9.22 \times 10^{-3}~\text {V}^2\) (\(\text {SNR} = 26~\text {dB}\))

Type II neuron showing strong bursting activity. Description is identical to Fig. 6. Top row depicts experimental spike train from a P-type primary electrosensory afferent. SCCs for this afferent are shown in Fig. 1B at a different sampling rate (see Methods). Bottom row depicts results for matched model using colored noise with correlation function given by Eq. (22) and shown in Fig. 5C. EOD period: 1.04 ms, mean ISI: 6.04 ms. To generate model results, \(v = 1.845~\text {V}\), adaptive threshold parameters: \(A = 0.19\) and \(\tau = 60\) ms, AR parameter: \(a = 0.69\) (\(\tau _\gamma = 16.89\) ms), and \({{\,\mathrm{\mathbf {R}}\,}}_0 = 6.4 \times 10^{-3}~\text {V}^2\) (\(\text {SNR} = 27~\text {dB}\))

We model Type II P-type primary electrosensory afferents using a noisy threshold with correlation function \({{\,\mathrm{\mathbf {R}}\,}}_k\) specified by Eq. (22). The noise correlation function and dynamic threshold parameters were determined from the experimental data and tuned to match the afferent SCCs, ISI, and joint ISI distributions. We demonstrate this with two examples: (i) moderate bursting activity and (ii) strong bursting activity. The moderately bursting neuron has a broad bimodal ISI distribution (Fig. 7A–C); its ISI and joint ISI distributions and its SCCs are captured by the matched model (Fig. 7D–F). The noise correlation function used to generate the model spike-train is depicted in Fig. 5B, and the noise partial autocorrelation function in Fig. 5E. The adjacent-ISI SCCs \(\rho _1\) are \(-0.59\) (data) and \(-0.62\) (model), and the mean sums of SCCs are \(-0.49\) (data) and \(-0.5\) (model). The strongly bursting neuron has a well-defined bimodal ISI distribution (Fig. 8A–C); its ISI and joint ISI distributions and its SCCs are captured by the matched model (Fig. 8D–F). The noise correlation function used to generate the model spike-train is depicted in Fig. 5C, and the noise partial autocorrelation function in Fig. 5F. The adjacent-ISI SCCs \(\rho _1\) are \(-0.7\) (data) and \(-0.7\) (model), and the mean sums of SCCs are \(-0.49\) (data) and \(-0.5\) (model). Thus, in both cases (Figs. 7 and 8), the observed patterns of Type II SCCs are reproduced. All observed afferent spike trains were either Type I or Type II.

We mention in passing that the noise correlation functions depicted in Fig. 5A–C should not be confused with the SCCs depicted in panels C and F in Figs. 6, 7, and 8.

Type III serial correlation coefficients Type III afferent spiking activity demonstrates serial correlations where \(\rho _1 = -0.5\) and all \(\rho _k = 0\) for \(k \ge 2\). This is a degenerate case, resulting in a singleton with a unique set of SCCs. Such spike trains can be generated by a process where spike threshold noise is uncorrelated, and hence white. In this case, \({{\,\mathrm{\mathbf {R}}\,}}_0 = \sigma _\gamma ^2\) is noise power, and \({{\,\mathrm{\mathbf {R}}\,}}_k = 0\) for \(k \ge 1\). We see immediately from Eqs. (12) and (13) that the \(\rho _k\) have the prescribed form. Further, we note that trivially \(\sum _{k \ge 1}\,\rho _k = -0.5\) as stated in Eq. (14). We mention in passing, and for later discussion, that white noise added to the spike threshold is equivalent to setting \(a = 0\) (\(\tau _\gamma = 0\)) in the first-order AR process defined in Eq. (17). A spike-train with exactly Type III SCCs has not been observed in the experimental data presented here.

3.3.3 Partial autocorrelation functions

The autocorrelation function (ACF) at lag k includes both the direct influence of \(X_{i+k}\) on \(X_i\) and the indirect influence transmitted through all the intervening variables; the ACF alone therefore does not isolate the direct effect. In contrast, the partial autocorrelation (PAC) between two variables \(X_i\) and \(X_{i+k}\) in a sequence removes the linear influence of the intervening variables \(X_{i+1},\,\ldots ,\,X_{i+k-1}\) (Box and Jenkins 1970). The PAC as a function of k is the PAC function, or PACF. Section 2.2.2 in Methods discusses the differences between the ISI PACF and the ISI ACF with regard to AR and MA processes. We estimated the PACF from the experimental data and the modeled spike trains using Eqs. (2), (3), and (4); these are the same model spike trains used to calculate the ACFs. The PACFs are shown in Fig. 9A–C for the afferents shown in Figs. 6, 7, and 8, respectively. By definition, the first partial autocorrelation \(\phi _{1,\,1} = \rho _1\). In contrast to the SCCs, where the correlation coefficients \(\rho _k\) can be positive or negative for \(k \ge 2\), all partial autocorrelations are negative irrespective of the type of afferent.
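The PACF can be obtained from a normalized ACF with the Durbin–Levinson recursion, a standard method; we have not checked that it matches the exact form of Eqs. (2)–(4) in Methods, so treat it as an independent sketch. The helper name `pacf_from_acf` and the inputs are our assumptions. Applied to the AR(1) noise ACF \(a^k\), it returns a at lag 1 and zeros afterwards (as in Fig. 5D–F); applied to ISI SCCs it returns nonzero, negative values at every lag shown, for example \(-1/(k+1)\) when the threshold noise is white.

```python
import numpy as np

def pacf_from_acf(rho, max_lag):
    """Partial autocorrelations phi_{k,k}, k = 1..max_lag, from a normalized
    ACF rho[0..max_lag] (rho[0] = 1) via the Durbin-Levinson recursion."""
    rho = np.asarray(rho, dtype=float)
    pacf = np.empty(max_lag)
    phi = np.zeros(0)                                  # phi_{k-1, j}, j = 1..k-1
    for k in range(1, max_lag + 1):
        num = rho[k] - np.dot(phi, rho[k - 1:0:-1])    # rho_k - sum_j phi_{k-1,j} rho_{k-j}
        den = 1.0 - np.dot(phi, rho[1:k])              # 1 - sum_j phi_{k-1,j} rho_j
        phi_kk = num / den
        phi = np.append(phi - phi_kk * phi[::-1], phi_kk)
        pacf[k - 1] = phi_kk
    return pacf

# AR(1) threshold noise: PACF equals a at lag 1 and vanishes afterwards.
a = 0.4
print(pacf_from_acf(a ** np.arange(11), 5))

# ISI SCCs for white threshold noise (rho_1 = -0.5, rho_k = 0 for k >= 2).
print(pacf_from_acf(np.r_[1.0, -0.5, np.zeros(9)], 4))   # -1/2, -1/3, -1/4, -1/5

# Type I ISI SCCs for a = 0.4: rho_k = -0.3 * 0.4**(k - 1).
print(pacf_from_acf(np.r_[1.0, -0.3 * 0.4 ** np.arange(10)], 3))   # -0.3, -3/13, -0.1875
```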

3.3.4 Dynamic threshold with a random time-constant

The dynamic threshold has three parameters A, \(\gamma \), and \(\tau \). We have so far described a stochastic model based on a noisy \(\gamma \) which is formally equivalent to a noisy A. We now consider a stochastic dynamic threshold model based on a noisy time-constant \(\tau \). We can transform the adaptive threshold model with a random spike threshold from the previous section and Fig. 4 so that the time-constant of the adaptive threshold filter \(h\left( t\right) \) is a random variable with mean \(\tau \) (Fig. 10). From Eq. (9) the random variate \(\gamma _i - \gamma _{i-1}\) which appears in the time-base can be transformed into the random variate \(m_i\) which is the slope of the linearized adaptive threshold (green line, Fig. 10). From

we obtain

and so, \({{\,\mathrm{\mathbf {E}}\,}}\left[ \tau _i\right] = \tau \). As noted, this is the mean value of the dynamic threshold filter time-constant. It is immediately apparent from Eqs. (9) and (26) that the covariance of the sequence \(\tau _i\) (sampled at the ISIs) is the same as the covariance of the ISI sequence up to a scale-factor, and therefore the serial correlations of \(\tau \) (\(\rho _{\tau ,\,k}\), \(k \ge 0\)) are the same as the serial correlations of the ISIs. Therefore (see Appendix for details)

The right-hand sides of Eqs. (27) and (28) are the same as Eqs. (12) and (13), the expressions for the SCCs of a spike train generated with a noisy adaptive threshold. Thus, the random filter time-constants have the same serial correlation coefficients as the interspike intervals, \(\rho _{\tau ,\,k} = \rho _k\). This is a “pass-through” effect, in which the correlations of the time-constant are directly reflected in the ISI correlations.

In summary, a noisy threshold \(\gamma \) or a noisy filter time-constant \(\tau \) can be used to generate spike trains which have prescribed ISI SCCs. We have generated spike trains using a noisy threshold and will not duplicate the results for a noisy time-constant.

Normalized ISI partial autocorrelation functions (PACFs) estimated from afferent ISI sequences (open circles) and models (red line). A–C depict the PACFs for the three example afferents shown in Figs. 6, 7, and 8, respectively. These PACFs should be compared with the normalized ISI autocorrelation functions (ACFs) generated for the same data (Figs. 6F, 7F, and 8F, respectively). A. Type I, non-bursting (ACFs shown in Fig. 6F). B. Type II, moderately bursting (ACFs shown in Fig. 7F). C. Type II, strongly bursting (ACFs shown in Fig. 8F). The first partial correlation coefficient \(\phi _{1,\,1}\) is reported in each panel (data: black text, model: red text) and is the same as \(\rho _1\) (Eq. 4). The long tails observed in the PACFs and ACFs suggest that the afferent and model spike-trains are not generated by a pure AR process, but may be generated by an autoregressive moving average (ARMA) process of unknown order.

Dynamic threshold with a stochastic filter time-constant. A. Geometry of the spiking mechanism. The input is a deterministic piece-wise constant signal (v, here shown to be DC-valued). Two equivalent spike-generation processes are possible: Case (i) The spike threshold \(\gamma \) is noisy, while the dynamic threshold filter h(t) has fixed time-constant \(\tau \). This is the same as Fig. 4A. The spike threshold is a constant term \(v - \gamma \) plus a random perturbation \(x_i\) generated at \(t_{i-1}^+\), and held constant until the next spike at \(t_i\) (black). The time-varying dynamic threshold h(t) is shown in red. Case (ii) The function h(t) shown in green has a random time-constant with mean \(\tau \), while the spike threshold \(v - \gamma \) is constant. Threshold noise \(x_i\) from Case (i) can be transformed into a noisy dynamic threshold time-constant \(\tau _i\), which is fixed between spikes and takes correlated values over successive ISIs. Spike-times for both cases are identical. B Block diagram illustrating the feedback from the estimator that generates optimal spike times. The time-constant \(\tau \) is a random variable that is constant between spikes, and is correlated over interspike intervals (ISIs). A question of interest is how the correlation functions in the two cases are related. Symbols and additional description as in text

4 Discussion

4.1 Experimental observations

All experimentally observed P-type spike-trains from Apteronotus leptorhynchus demonstrated negative correlations between adjacent ISIs (Figs. 1, 2A). Thus, negative SCCs between adjacent ISIs may be an obligatory feature of neural coding, at least in this species. The implications for coding are discussed further below. A broad experimental observation is the roughly equal division of spike-trains into units with \(\rho _1 > -0.5\) (non-bursting or Type I units, Fig. 1A, \(N = 24\)) and units with \(\rho _1 < -0.5\) (bursting or Type II units, Fig. 1C, \(N = 28\)). Using a different method of classification, Xu et al. (1996) reported 31% of 117 units as bursting.

As a function of the firing rate, the first SCC, \(\rho _1\) (Fig. 2C), shows a minimum at a firing rate of about 190 spikes/s, with a V-shaped envelope that appears to set a lower bound on \(\rho _1\) at low and high firing rates. At high firing rates, the mean ISI is small, and there is limited room for variability below the mean ISI because an ISI cannot be less than zero. This possibly increases \(\rho _1\) (toward zero) as the firing rate increases, and creates the effect shown in Fig. 2C. Supporting this finding is the inverse relationship between \(\rho _1\) and the coefficient of variation (CV) of the ISI (Fig. 2C). Although the correlation is only moderate (\(r = -0.67\)), a large \(\rho _1\) is more likely when the ISI CV is small. An explanation for the shape of the envelope on the low-firing-rate flank in Fig. 2C is not readily apparent.

For Type I units \(\rho _2 < 0\), whereas for Type II units \(\rho _2 > 0\) (Fig. 2C). The former give rise to over-damped SCCs, which remain negative and diminish to zero, while the latter give rise to under-damped or oscillatory SCCs, which also diminish to zero. The dependence of \(\rho _2\) on \(\rho _1\) is largely linear and follows \(\rho _2 = -1.18\rho _1 - 0.51\) (Fig. 2C). The limiting sum of SCCs \(\sum _{k \ge 1}\rho _k\) is close to \(-0.5\) irrespective of the type of SCC pattern (Fig. 2D) (Ratnam and Goense 2004). With the exception of two afferent fibers, the sum of SCCs was never smaller than \(-0.5\), i.e., it was almost always true that \(\sum _{k \ge 1}\rho _k \ge -0.5\). In those two cases the sums were slightly below the limit (\(-0.505\) and \(-0.502\)), possibly due to estimation error. Although the dominant SCCs are \(\rho _1\) and \(\rho _2\), their sum \(\rho _1 + \rho _2\) is not close to \(-0.5\) (i.e., \(\rho _2 \ne -\rho _1 - 0.5\)); instead, \(\rho _2\) follows the linear relationship given above. Thus, more terms (lags) are needed to bring the sum of SCCs close to the limiting value, and this results in significant correlations extending over multiple lags (time-scales). As discussed in an earlier work (Ratnam and Nelson 2000), long-short (short-long) ISI pairs create memory in the spike-train and keep track of the deviations of successive ISIs from the mean ISI (\(T_1\)). These deviations are a series of “credits” and “debits” which may not balance over adjacent ISIs, but eventually balance, so that a given observation of k successive ISIs returns to the mean with \(t_k - t_0 \approx kT_1\). Such a process will exhibit long-range dependencies that may not be captured by SCCs.

That the dependencies may extend over multiple ISIs is confirmed from an analysis of joint dependencies of intervals extending to high orders (van der Heyden et al. 1998; Ratnam and Nelson 2000). All 52 units in Apteronotus leptorhynchus were at least second-order Markov processes, with about half (\(N = 24\)) being fifth-order or higher (Ratnam and Nelson 2000). Further, SCCs were not correctly predicted when only the adjacent ISI dependencies were preserved, i.e., when the spike trains were treated as first-order Markov (Poggio and Viernstein 1964; Rodieck 1967; Ratnam and Nelson 2000). Indeed, an examination of the sequence of SCCs provides no indication of the extent of memory. For instance, short-duration correlations do not necessarily imply that ISI dependencies are limited to a few adjacent intervals. Long-duration dependencies may be present even when the correlation time is short (van der Heyden et al. 1998). Conversely, a first-order Markov process produces ringing in the serial correlogram (\(\rho _k = \rho _1^k\)) that can persist over many lags, well beyond two adjacent ISIs (Cox and Lewis 1966; Nakahama et al. 1972). In fact, for some P-type electrosensory afferent spike trains, the observed ISIs exhibited SCCs whose magnitudes were smaller than the SCCs for the matched first-order Markov model even though the experimental data were at least second-order or higher (see Fig. 8, Ratnam and Nelson 2000).

The stochastic process which generates ISIs may be more complex than a simple autoregressive (AR) or moving average (MA) process, because both the afferent ISI correlation functions (Figs. 6F, 7F, and 8F) and the partial autocorrelation functions (Fig. 9) extend over many lags rather than cutting off after a finite lag (see Methods for the distinction between the two functions; Box and Jenkins 1970). A pure AR process of order p has a PACF that is zero beyond lag p, and a pure MA process of order q has an ACF that is zero beyond lag q; tails in both functions therefore suggest that a more general ARMA process may be responsible for the generation of ISIs. The source of the mix of AR and MA processes is discussed further below when we consider the generating model.
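One simple way a mix of AR and MA structure can arise in the linearized model is sketched below, under assumptions that are ours: if the zero-mean threshold fluctuations follow a first-order AR process with signed coefficient c, then their first difference, which by Eq. (9) is proportional to the ISI fluctuation, satisfies an ARMA(1,1) recursion whose MA coefficient is \(-1\). The snippet checks that identity numerically for an assumed \(c = 0.4\); it is an illustration only, not the generating model discussed below.

```python
import numpy as np

rng = np.random.default_rng(2)
c = 0.4                                # assumed signed AR(1) coefficient of the noise
n = 10_000
w = rng.normal(size=n)                 # white innovations w_k

gamma = np.empty(n)                    # zero-mean threshold fluctuations, AR(1)
gamma[0] = w[0] / np.sqrt(1.0 - c**2)  # stationary start
for k in range(1, n):
    gamma[k] = c * gamma[k - 1] + w[k]

x = np.diff(gamma)                     # gamma_k - gamma_{k-1}, i.e. m * ISI_k - A

# ARMA(1,1) identity: x_k - c x_{k-1} = w_k - w_{k-1}
lhs = x[1:] - c * x[:-1]
rhs = w[2:] - w[1:-1]
print(np.allclose(lhs, rhs))           # True
```

Because the MA part has its root on the unit circle, the ISI sequence in this sketch has no finite-order pure AR representation, so its PACF does not cut off.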

4.2 The dynamic threshold model

The experimental observations and dependency analyses motivated us to ask whether we could develop a stochastic model to reproduce a prescribed sequence of SCCs \(\rho _1\,,\rho _2\,,\ldots \,,\rho _k\). We adopt a widely used and physiologically plausible model with a time-varying, i.e., dynamic, threshold. The model is simple and has few parameters. It allows us to probe patterns of SCCs as a function of these parameters, and it tests the extent to which we can describe experimental data with a simple model. Dynamic threshold models typically have three components: (1) dynamics of membrane voltage v(t) in response to an input signal, (2) a spike or impulse generator, and (3) a time-varying dynamic threshold r(t) which is elevated following a spike and subsequently relaxes monotonically. A spike is fired when the membrane voltage meets the (relaxing) threshold, thus forming a feedback loop (Fig. 3B). Models with an adaptation current are broadly similar but, instead of altering the threshold directly, they use an outward current to induce refractoriness (see, e.g., Benda et al. 2010; Benda and Herz 2003; Schwalger and Lindner 2013). In many models, the feedback loop is implicitly defined (as in conductance-based models), but it may also be explicitly defined, as is done here, so that \(v(t) - r(t)\) reaches a fixed spike-initiation threshold. This threshold is usually taken to be zero, i.e., \(v(t) = r(t)\) (Kobayashi et al. 2009), but here we assume it to be a nonzero value (\(\gamma = A/2\)) following Jones et al. (2015). This choice does not alter model behavior or the analyses presented here (see Methods) but has relevance to optimal coding and is discussed further below.

Models that do not utilize a dynamic threshold or an adaptation current are outside the scope of this work and are not discussed here. The reader may consult Longtin et al. (2003), Lindner and Schwalger (2007), Farkhooi et al. (2009), and Urdapilleta (2011) for more details and references to such models. Most dynamic threshold models that address negative correlations assume some form of perfect or leaky integrate-and-fire dynamics for the first two components listed above (Chacron et al. 2000, 2001, 2004b; Brandman and Nelson 2002; Benda et al. 2010), and an exponentially decaying dynamic threshold without spike reset. Noise is added to the time-varying threshold or to the input current, and this results in negative serial correlations. Model complexity has been a major drawback in determining the precise role of noise in shaping ISI correlations (see, for instance, observations made in Chacron et al. 2004b; Lindner et al. 2005). Resets, hard refractory periods, sub-threshold dynamics due to synaptic filtering, and sometimes multiple sources of noise obscure both signal propagation through the system and signal dependencies. Thus, with few exceptions (see below), dynamic threshold models and models with adaptation currents have been qualitative. They demonstrate some features of experimentally observed ISI distributions, and at best correlations between adjacent ISIs (i.e., \(\rho _1\)) (Geisler and Goldberg 1966; Chacron et al. 2000, 2003). On the other hand, reduced model complexity can result in a lack of biophysical plausibility. Thus, a judicious choice of model should expose the desired mechanisms while retaining the important features of the phenomena.

4.3 Model results

In recent years, deterministic dynamic threshold models with an exponential kernel have been used to predict spike-times from cortical and peripheral neurons (Kobayashi et al. 2009; Fontaine et al. 2014; Jones et al. 2015) (see Gerstner and Naud 2009, for an early review) and predict peri-stimulus time histograms (Jones et al. 2015; Johnson et al. 2016) with good accuracy. Capturing spike-times accurately is perhaps the first requirement in our analysis, and this gives confidence that the model may tell us something about ISI correlations. We eliminated sub-threshold dynamics and resets so that there is only one nonlinear element, the spike generator (Jones et al. 2015; Johnson et al. 2016). These are not serious restrictions, and they make the analysis tractable. We follow the usual practice of representing the dynamic threshold element with an exponential decay with time-constant \(\tau \). The absence of reset implies that the time-varying dynamic threshold which carries memory is a simple convolution of the spike train with an exponential kernel \(Ae^{-t/\tau }\) (Fig. 3B). We inject noise precisely in one of two places, either to perturb the spike threshold \(\gamma \) (Fig. 4) or perturb the time-constant \(\tau \) (Fig. 10). The two forms of perturbation are formally equivalent (see Appendix). We linearize the exponential so that we can obtain analytical solutions of SCCs. This is applicable at asymptotically high spike-rates (Jones et al. 2015; Johnson et al. 2015) and applies well to P-type afferent spike trains because of their high baseline firing rates, about 250-300 spikes/s (Bastian 1981; Xu et al. 1996; Ratnam and Nelson 2000). To fit ISI and joint-ISI distributions of individual P-type afferents, the parameters of the dynamic threshold element \(h\left( t\right) \) (A and \(\tau \)) are obtained from the afferent spike-train (Jones et al. 2015). A single noise parameter, a, independent of the dynamic threshold, is obtained from the observed SCCs. 
These model elements and procedures allow us to determine how ISI correlations are shaped. The major results are as follows:

-

1.

ISI correlations are determined by the autocorrelation function, \({{\,\mathrm{\mathbf {R}}\,}}\), of the noise process (Eqs. 12–13).

-

2.

Non-bursting units and bursting units are described by the same functional relationship between ISI SCCs and \({{\,\mathrm{\mathbf {R}}\,}}\) (Eqs. 12–13).

-

3.

Non-bursting spike trains (with unimodal ISI distribution) are generated by slow noise with a decaying (positive) correlation function \({{\,\mathrm{\mathbf {R}}\,}}\), which in its simplest form is given by Eq. (18) (e.g., Fig. 6).

-

4.

Bursting spike trains (with bi-modal ISI distribution) are generated by fast noise with an oscillating correlation function \({{\,\mathrm{\mathbf {R}}\,}}\), which in its simplest form is given by Eq. (22) (e.g., Figs. 7 and 8).

-

5.

The two types of correlation functions are described by the sign of a single parameter, the parameter a of a first-order autoregressive process (Eqs. 21 and 17). The AR parameter is directly related to noise bandwidth, i.e., the low or high cut-off frequencies and can be uniquely determined from the correlation, \(\rho _1\), between adjacent ISIs (Eqs. 20 and 24). More robust estimates are obtained from the ratio \(\rho _2/\rho _1\). SCCs at subsequent lags are related to a as terms in a geometric progression (Eqs. 19 and 23).

-

6.

While more complex patterns of SCCs can be produced by other types of noise correlation functions, only Type I and Type II SCC patterns are observed in P-type afferent spike-trains. Type III SCC patterns are mentioned here because they are commonly reported in modeling studies and are discussed further below.

-

7.

The expression for ISI SCCs is independent of the adaptive threshold parameters (A and \(\tau \)), the signal (v), and the firing threshold (\(\gamma \)). It is dependent only on the noise correlation function, including noise power \({{\,\mathrm{\mathbf {R}}\,}}_0\).

-

8.

The model fits ISI and joint-ISI distributions.

-

9.

For both non-bursting and bursting units (slow and fast noise, respectively) the theoretical prediction of the sum of ISI SCCs is exactly \(-0.5\). The sum of SCCs over all afferent spike trains is close to this limit: \(-0.475 \pm 0.04.\) (\(N = 52\)).

-

10.

SNR (\(10\,\log _{10}(v^2/{{\,\mathrm{\mathbf {R}}\,}}_0\)) is generally larger than \(20~\text {dB}\), i.e., fluctuations in threshold (noise) are small compared to the input signal. This is in keeping with the hypothesis that spike-time jitter is small in comparison with the mean ISI.
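The forward and inverse calculations in items 5 and 9 can be sketched as follows. This is our own illustration, not the paper's code. It assumes first-order AR threshold noise with a signed coefficient c (c = a for Type I, c = −a for Type II) and uses the closed form \(\rho_k = -\tfrac{1}{2}(1-c)\,c^{k-1}\), which we derived from the linearized ISI expression of Eq. (9). We assume this form agrees with Eqs. (19) and (23); it reproduces \(\rho_1 = -1/2 \pm a/2\) as stated in the text. All function names are hypothetical.

```python
def scc_ar1(c, k):
    """Forward calculation: ISI SCC at lag k >= 1 for AR(1) threshold noise
    with signed coefficient c in (-1, 1) (c = a: Type I, c = -a: Type II)."""
    return -0.5 * (1.0 - c) * c ** (k - 1)


def coef_from_rho1(rho1):
    """Inverse calculation from the adjacent-ISI SCC, using rho_1 = -(1 - c)/2."""
    return 1.0 + 2.0 * rho1


def coef_from_ratio(rho1, rho2):
    """Inverse calculation from the common ratio of the geometric progression."""
    return rho2 / rho1


def scc_type(c):
    """Classify the SCC pattern by the sign of the AR coefficient."""
    if c > 0:
        return "Type I (non-bursting, slow noise)"
    if c < 0:
        return "Type II (bursting, fast noise)"
    return "Type III (white noise)"


if __name__ == "__main__":
    c_true = -0.4                                   # hypothetical bursting unit
    rhos = [scc_ar1(c_true, k) for k in (1, 2, 3)]  # alternating signs
    print(rhos)
    print(coef_from_rho1(rhos[0]), coef_from_ratio(rhos[0], rhos[1]))
    print(scc_type(coef_from_rho1(rhos[0])))
```

With noiseless SCCs both inverse estimates recover c exactly. With measured SCCs they differ, and the text reports that the ratio \(\rho_2/\rho_1\) gives the more robust estimate.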

There are two components to ISI serial correlations, as is apparent from Eq. (9), where the ith ISI is given by \(t_{i} - t_{i-1} = \left( \gamma _{i} - \gamma _{i-1} + A\right) /m\). The first component is due to the difference \(\gamma _{i} - \gamma _{i-1}\), which is coupled to the next (adjacent) interval \(t_{i+1} - t_{i}\) through the common term \(\gamma _i\). This term appears with opposite signs in adjacent ISIs and hence produces a negative correlation that does not extend beyond them. If the \(\gamma _i\) are uncorrelated, it can be shown that the adjacent ISI correlation is \(\rho _1 = -0.5\) and \(\rho _k = 0\) for all \(k \ge 2\). Thus, for independent random variables, this result follows from the properties of the differencing operation and does not indicate memory beyond adjacent ISIs. The second component of ISI correlations is due to long-range correlations \({{\,\mathrm{\mathbf {R}}\,}}\) in the random process \(\gamma \), which extend beyond adjacent ISIs. These correlations are endogenous, possibly biophysical in origin, and could be shaped by coding requirements. The two components of ISI correlations are made clear by restating Eq. (13) for \(\rho _1\) as

\[\rho _1 = -\frac{1}{2} + \frac{{{\,\mathrm{\mathbf {R}}\,}}_1 - {{\,\mathrm{\mathbf {R}}\,}}_2}{2\left( {{\,\mathrm{\mathbf {R}}\,}}_0 - {{\,\mathrm{\mathbf {R}}\,}}_1\right) }.\]

Thus, the two components are separable. For a wide-sense stationary process that is not perfectly correlated from one ISI to the next, \({{\,\mathrm{\mathbf {R}}\,}}_0 > {{\,\mathrm{\mathbf {R}}\,}}_1\), and so the denominator of the second term is always positive. Thus, the deviation of \(\rho _1\) from \(-0.5\) is determined by the sign of \({{\,\mathrm{\mathbf {R}}\,}}_1-{{\,\mathrm{\mathbf {R}}\,}}_2\). This term is positive for non-bursting units (Type I SCCs) and negative for bursting units (Type II SCCs). In the Type III case the noise is uncorrelated, so the term vanishes and only the first component is present. For an Ornstein–Uhlenbeck or Gauss–Markov process, i.e., a first-order AR process with coefficient \(a > 0\), \({{\,\mathrm{\mathbf {R}}\,}}_1 > {{\,\mathrm{\mathbf {R}}\,}}_2\) (Fig. 5A), which produces a non-bursting Type I pattern. When the AR coefficient is negative, i.e., \(-a\), \({{\,\mathrm{\mathbf {R}}\,}}_1 < {{\,\mathrm{\mathbf {R}}\,}}_2\) (Fig. 5B, C), which produces a bursting Type II pattern with a bimodal ISI distribution. Thus, a single parameter (the sign of the first-order AR coefficient) can create the observed patterns of negatively correlated SCCs. Type III SCCs, where \(\rho _1 = -0.5\) and \(\rho _k = 0\) for \(k \ge 2\), are trivially generated by perturbing the spike threshold with uncorrelated white noise, i.e., by setting \(a = 0\) in the first-order AR process. We have not observed Type III neurons experimentally, although some spike trains have \(\rho _1\) values close to \(-0.5\).
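The three SCC types can be checked numerically with a short Monte Carlo sketch. This is an illustration under assumptions, not the authors' simulation. It draws first-order AR threshold noise (one sample per ISI) and forms ISIs with the linearized relation of Eq. (9). It then compares sample SCCs with the general relation \(\rho_k = (2\mathbf{R}_k - \mathbf{R}_{k-1} - \mathbf{R}_{k+1})/\bigl(2(\mathbf{R}_0 - \mathbf{R}_1)\bigr)\), which we derived from Eq. (9) for wide-sense stationary \(\gamma\) and assume to be equivalent to Eq. (13). The noise scale, A, m, the coefficients, and the helper names are hypothetical.

```python
import random


def ar1_threshold_noise(c, n, sigma=0.02, seed=0):
    """Threshold noise gamma_i = c*gamma_{i-1} + eps_i, one sample per ISI,
    started from its stationary distribution (variance sigma^2/(1 - c^2))."""
    rng = random.Random(seed)
    g = [rng.gauss(0.0, sigma / (1.0 - c * c) ** 0.5)]
    for _ in range(n - 1):
        g.append(c * g[-1] + rng.gauss(0.0, sigma))
    return g


def isis(gammas, A=1.0, m=250.0):
    """Linearized ISIs, Eq. (9): t_i - t_{i-1} = (gamma_i - gamma_{i-1} + A)/m.
    A and m are placeholder values; they do not affect the SCCs."""
    return [(gammas[i] - gammas[i - 1] + A) / m for i in range(1, len(gammas))]


def sample_scc(x, k):
    """Sample serial correlation coefficient of the sequence x at lag k."""
    n = len(x)
    mu = sum(x) / n
    d = [xi - mu for xi in x]
    var = sum(di * di for di in d) / n
    cov = sum(d[i] * d[i + k] for i in range(n - k)) / (n - k)
    return cov / var


def theory_scc(R, k):
    """rho_k = (2R_k - R_{k-1} - R_{k+1}) / (2(R_0 - R_1)); R is even, so R_{-1} = R_1."""
    return (2 * R(k) - R(abs(k - 1)) - R(k + 1)) / (2 * (R(0) - R(1)))


if __name__ == "__main__":
    for c in (0.6, -0.6, 0.0):          # Type I, Type II, Type III (illustrative values)
        x = isis(ar1_threshold_noise(c, 100_000))
        R = lambda k, c=c: c ** abs(k)  # normalized AR(1) autocorrelation
        print(c, [round(sample_scc(x, k), 3) for k in (1, 2, 3)],
              [round(theory_scc(R, k), 3) for k in (1, 2, 3)])
```

Changing A or m rescales and shifts every ISI by the same affine map, so the sample SCCs are unchanged. This is consistent with the statement that the SCCs do not depend on the threshold parameters.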

The sign of the AR coefficient determines the time-scale of noise fluctuations in the spike threshold, and hence the pattern of SCCs. Recall that \(\tau _\gamma = -T_1/\ln \left( a\right) \). For Type I SCCs, the AR process produces slow noise whose power is concentrated at frequencies \(\omega < 2\pi \tau _\gamma ^{-1}\) (i.e., low-pass). For Type II SCCs, the generating noise is dominated by frequencies \(\omega > 2\pi \tau _\gamma ^{-1}\) (i.e., high-pass). In the latter case, the characteristic ringing of the correlation function is distinctive, with the degree of damping controlled by \(\tau _\gamma \).
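A small numerical sketch shows the low-pass versus high-pass distinction. It assumes a first-order AR noise sampled once per mean ISI \(T_1\) and uses the standard AR(1) power spectral density \(S(\omega) = \sigma^2/(1 - 2c\cos\omega + c^2)\) at normalized frequency \(\omega \in [0, \pi]\) radians per sample. Here \(\omega = \pi\) corresponds to \(1/(2T_1)\) Hz. This per-ISI frequency axis is our framing and is not necessarily the convention behind the cut-offs quoted above. The values of \(T_1\) and a, and the function names, are hypothetical.

```python
import math


def tau_gamma(a, T1):
    """Noise correlation time from the AR magnitude: tau_gamma = -T1/ln(a), 0 < a < 1."""
    if not 0.0 < a < 1.0:
        raise ValueError("a must lie in (0, 1)")
    return -T1 / math.log(a)


def ar1_psd(c, omega, sigma2=1.0):
    """Power spectral density of x_i = c*x_{i-1} + eps_i (Var eps = sigma2)
    at normalized frequency omega in [0, pi] radians per sample (one sample per ISI)."""
    return sigma2 / (1.0 - 2.0 * c * math.cos(omega) + c * c)


if __name__ == "__main__":
    T1 = 0.004          # hypothetical mean ISI (s), roughly 250 spikes/s
    a = 0.5             # hypothetical AR magnitude
    print(f"tau_gamma = {1e3 * tau_gamma(a, T1):.2f} ms")
    for c in (a, -a):   # Type I (c = a) and Type II (c = -a)
        low, high = ar1_psd(c, 0.0), ar1_psd(c, math.pi)
        print(f"c = {c:+.1f}: S(0) = {low:.3f}, S(pi) = {high:.3f}")
```

The same magnitude a yields the same \(\tau_\gamma\) for both types. Only the sign of the coefficient moves the spectral peak from zero frequency (slow, Type I) to the Nyquist frequency (fast, Type II).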

From the above observations, we suggest that the ARMA process leading to the generation of ISIs is the result of two processes: (1) an MA component due to a differencing operation, which contributes a value of \(-1/2\) to \(\rho _1\), i.e., to the finite portion of the autocorrelation function (and the infinite tail of the partial autocorrelation function); and (2) an AR component due to a feedback loop (Fig. 4), which contributes a value of \(-1/2\) to \(\phi _{1,\,1}\), i.e., to the finite portion of the partial autocorrelation function (and the infinite tail of the autocorrelation function). For the first-order noise processes used here, \(\rho _1 = -1/2 \pm a/2\) (Eqs. 20 and 24 for non-bursting and bursting afferents, respectively), and so the residual contribution of the autoregressive component to \(\rho _1\) is \(\pm a/2\). The goodness of fit to the experimental data with a single parameter \(\pm a\) leads us to believe that the underlying ARMA model is first order in both the AR and MA components.
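One way to see the first-order ARMA structure is to substitute AR(1) threshold noise \(\gamma_i = c\,\gamma_{i-1} + \varepsilon_i\) into Eq. (9). This gives \(I_i - c\,I_{i-1} = \bigl(\varepsilon_i - \varepsilon_{i-1} + (1-c)A\bigr)/m\), where \(I_i = t_i - t_{i-1}\). In this sketch, the AR coefficient is the noise coefficient c and the MA part is the differencing operator (MA coefficient exactly −1, i.e., a non-invertible MA component). This is our own derivation offered as a consistency check, not the authors' procedure. The code verifies the recursion on a simulated run and checks that the textbook ARMA(1,1) autocorrelation with MA coefficient −1 reproduces \(\rho_1 = -(1-c)/2\) and the geometric decay. The noise scale, A, m, and the function names are hypothetical.

```python
import random


def arma11_acf(phi, theta, k):
    """Theoretical ACF at lag k >= 1 of x_t = phi*x_{t-1} + e_t + theta*e_{t-1}."""
    rho1 = (1 + phi * theta) * (phi + theta) / (1 + 2 * phi * theta + theta ** 2)
    return rho1 * phi ** (k - 1)


def check_arma_identity(c, n=1000, A=1.0, m=250.0, seed=0):
    """Largest violation of I_i - c*I_{i-1} = (eps_i - eps_{i-1} + (1-c)*A)/m
    over a simulated run (should be at floating-point round-off level)."""
    rng = random.Random(seed)
    eps = [rng.gauss(0.0, 0.02) for _ in range(n)]
    gam = [eps[0]]
    for e in eps[1:]:
        gam.append(c * gam[-1] + e)          # AR(1) threshold noise
    # I[j] is the ISI built from gam[j + 1] and gam[j] (Eq. 9, linearized)
    I = [(gam[i] - gam[i - 1] + A) / m for i in range(1, n)]
    worst = 0.0
    for j in range(1, len(I)):
        i = j + 1                            # noise index belonging to I[j]
        lhs = I[j] - c * I[j - 1]
        rhs = (eps[i] - eps[i - 1] + (1 - c) * A) / m
        worst = max(worst, abs(lhs - rhs))
    return worst


if __name__ == "__main__":
    for c in (0.6, -0.6):
        print(c, check_arma_identity(c), [arma11_acf(c, -1.0, k) for k in (1, 2, 3)])
```

Setting c = 0 leaves only the MA part, whose ACF is −1/2 at lag 1 and zero beyond, matching the Type III pattern.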

Farkhooi et al. (2009) reported that in the extrinsic neurons of the mushroom body in the honeybee, the ISI partial autocorrelation function is zero for all lags \(k > 1\). Thus, their ISI process is first-order AR, i.e., AR(1). As remarked above, our data do not establish the order of the AR or ARMA process, although we think that it is possibly ARMA\((1,\,1)\). The threshold noise process (whose autocorrelation and partial autocorrelation are depicted in Fig. 5) is first-order AR (Figs. 5D–F). However, the threshold noise correlations (Eq. 18 for Type I, and Eq. 22 for Type II) influence the ISI correlations according to Eq. (13). This is illustrated by the closed-form expressions for ISI SCCs under a first-order noise process (Eqs. 19 and 23), in which the ISI SCC \(\rho _k\) involves powers of the AR coefficient at both k and \(k-1\). For a first-order noise process at least, our data suggest that the ISI process is a mix of AR and MA processes and not a simple AR(1) process.
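The contrast with an AR(1) ISI process can be made explicit by computing partial autocorrelations from an autocorrelation function with the Durbin–Levinson recursion. The sketch below is an illustration under assumptions. It uses the ISI SCCs \(\rho_k = -\tfrac{1}{2}(1-c)\,c^{k-1}\) that we derived for AR(1) threshold noise with signed coefficient c, and compares them with a genuine AR(1) ISI process having the same lag-1 correlation. The function names and parameter values are hypothetical.

```python
def pacf_from_acf(rho, kmax):
    """Partial autocorrelations phi_{k,k}, k = 1..kmax, from an ACF rho(k)
    (k >= 1) via the Durbin-Levinson recursion."""
    pacf, phi_prev = [], []
    for k in range(1, kmax + 1):
        num = rho(k) - sum(phi_prev[j - 1] * rho(k - j) for j in range(1, k))
        den = 1.0 - sum(phi_prev[j - 1] * rho(j) for j in range(1, k))
        phi_kk = num / den
        # phi_{k,j} = phi_{k-1,j} - phi_kk * phi_{k-1,k-j}, then append phi_{k,k}
        phi_prev = [phi_prev[j - 1] - phi_kk * phi_prev[k - j - 1] for j in range(1, k)] + [phi_kk]
        pacf.append(phi_kk)
    return pacf


def isi_acf(c):
    """ISI SCCs for AR(1) threshold noise with signed coefficient c (lags k >= 1)."""
    return lambda k: -0.5 * (1.0 - c) * c ** (k - 1)


def ar1_acf(phi):
    """ACF of an AR(1) process, rho_k = phi**k."""
    return lambda k: phi ** k


if __name__ == "__main__":
    print(pacf_from_acf(isi_acf(0.5), 5))    # ISIs driven by AR(1) threshold noise
    print(pacf_from_acf(ar1_acf(-0.25), 5))  # AR(1) ISIs with the same rho_1 = -0.25
    print(pacf_from_acf(isi_acf(0.0), 5))    # white threshold noise (Type III)
```

The AR(1) ISI process has a partial autocorrelation that vanishes beyond lag 1, as in the honeybee data. The threshold-noise model does not. With white threshold noise, the partial autocorrelation is \(-1/(k+1)\), an infinite tail produced by the differencing (MA) component.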

4.4 Comparison with other adaptation models

These results can be directly compared with those of an earlier study that modeled adaptation currents (Schwalger et al. 2010). In that study, patterns of SCCs were generated by a perfect integrate-and-fire neuron under two conditions: (i) a deterministic adaptation current with fast, white Gaussian noise input, and (ii) a stochastic adaptation current with slow, exponentially correlated (channel) noise. A deterministic adaptation current with fast noise produced the patterns of SCCs that we report here as Types I and II. These patterns were characterized by a parameter \(\vartheta \), which is analogous to the AR parameter a used here: the Type I pattern (positive coefficient, a) corresponds to \(\vartheta >0\), and the Type II pattern (negative coefficient, \(-a\)) corresponds to \(\vartheta < 0\). A pattern similar to the Type III SCC pattern reported here resulted when \(\vartheta = 0\). This corresponds to \(a = 0\), although we have not observed such neurons experimentally. While we define Type III SCCs to have only one pattern (\(\rho _1 = -0.5\), and \(\rho _k = 0\) for \( k \ge 2\)), Schwalger et al. (2010) report that \(\rho _k = 0\) for \(k \ge 2\) but that \(\rho _1\) is governed by an additional parameter and can take a range of values, with \(\rho _1 = -0.5\) as a limiting case. Stochastic adaptation currents produced only positive ISI correlations, which we do not observe or model here. In a subsequent report, Schwalger and Lindner (2013) extended the model to more general integrate-and-fire models and obtained a relationship between ISI SCCs and the phase-response curve (PRC). The patterns of SCCs reported here most likely correspond to their Type I PRC.

The model presented here for first-order noise processes, given by Eqs. (17) and (21), does not generate positive correlations. Note that the parameter a in these equations is bounded such that \(0< a = \exp (-T_1/\tau _\gamma ) < 1\). Thus, the AR(1) coefficients a in Eq. (17) and \(-a\) in Eq. (21) are restricted to the open interval \((-1,\,1)\); otherwise the noise process is unstable (i.e., unbounded). Suppose we require the first ISI SCC to satisfy \(\rho _1 > 0\). Then from Eq. (19) we must have \(a > 1\) (Type I neuron), and from Eq. (23) we must have \(a < -1\), i.e., an AR coefficient \(-a > 1\) (Type II neuron). In either case the coefficient lies outside the stable range of AR(1) coefficients, and so the model cannot generate positive ISI SCCs. For second-order or higher-order noise processes \(\text {AR}(p)\) with \(p > 1\), positive ISI SCCs are possible if the noise correlation \({{\,\mathrm{\mathbf {R}}\,}}_k\) satisfies \({{\,\mathrm{\mathbf {R}}\,}}_0 < 2{{\,\mathrm{\mathbf {R}}\,}}_1 - {{\,\mathrm{\mathbf {R}}\,}}_2\) (from Eq. 13). It should be possible to use Eq. (13) to construct noise correlation sequences that satisfy this inequality and thereby create prescribed sequences of ISI correlations. We have not explored these ideas.
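The inequality \(\mathbf{R}_0 < 2\mathbf{R}_1 - \mathbf{R}_2\) can be illustrated with a second-order AR noise. The sketch below is an illustration, not an analysis from the paper. It uses the general relation \(\rho_k = (2\mathbf{R}_k - \mathbf{R}_{k-1} - \mathbf{R}_{k+1})/\bigl(2(\mathbf{R}_0 - \mathbf{R}_1)\bigr)\), which we derived from Eq. (9) and assume is equivalent to Eq. (13), together with the Yule–Walker recursion for a normalized AR(2) autocorrelation. The AR(2) coefficients (1.8, −0.9) are hypothetical and were chosen only because they lie inside the stationarity region and satisfy the inequality. The code also checks that every stable AR(1) coefficient gives \(\rho_1 < 0\).

```python
def scc_from_R(R, k):
    """rho_k = (2R_k - R_{k-1} - R_{k+1}) / (2(R_0 - R_1)); R is even in k."""
    return (2 * R(k) - R(abs(k - 1)) - R(k + 1)) / (2 * (R(0) - R(1)))


def ar2_acf(phi1, phi2, kmax):
    """Normalized ACF r_0..r_kmax of a stationary AR(2) process via the
    Yule-Walker recursion r_k = phi1*r_{k-1} + phi2*r_{k-2}, r_1 = phi1/(1 - phi2)."""
    r = [1.0, phi1 / (1.0 - phi2)]
    for _ in range(2, kmax + 1):
        r.append(phi1 * r[-1] + phi2 * r[-2])
    return r


if __name__ == "__main__":
    r = ar2_acf(1.8, -0.9, 10)          # hypothetical slow, oscillatory AR(2) noise
    R = lambda k: r[abs(k)]
    print("R0 < 2R1 - R2:", r[0] < 2 * r[1] - r[2], " rho_1 =", scc_from_R(R, 1))
    # Every stable AR(1) coefficient in (-1, 1) gives a negative rho_1
    print(all(scc_from_R(lambda k, c=i / 100: c ** abs(k), 1) < 0 for i in range(-99, 100)))
```

This shows only that positive SCCs are reachable in principle with higher-order noise. Whether such noise is biophysically plausible is a separate question.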

The SCCs reported here follow a geometric progression with the filter pole a as the ratio parameter (see Eqs. 19 and 23). Schwalger et al. (2010) and Schwalger and Lindner (2013) reported that the patterns of negative correlations follow a geometric progression with ratio parameter \(\vartheta \). A geometric progression was also reported by Urdapilleta (2011). Note that a first-order Markov process also follows a geometric progression with ratio parameter \(\rho _1\) (Cox and Lewis 1966; Nakahama et al. 1972). A geometric progression of SCCs is not surprising given that the ISI sequence is discrete, and thus feedback will result in a (discrete) recurrence relation. For example, all the first-order AR processes used to predict SCCs have correlation functions in geometric progression (Eqs. 19, 22). Taken together with the analysis of partial correlation coefficients, and as noted above, it is likely that the underlying ARMA process generating the ISI sequence is low-order. The limiting sum of SCCs reported here (Eq. 14) is exactly \(-0.5\), whereas Schwalger and Lindner (2013) report a sum that asymptotically approaches a value slightly larger than \(-0.5\). The average sum of SCCs in our experimental data (Fig. 2D) is also slightly larger than \(-0.5\) (\(-0.475 \pm 0.04\)), and this merits further investigation.
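Why the limiting sum is exactly \(-0.5\) can be seen by telescoping. If \(\rho_k = (2\mathbf{R}_k - \mathbf{R}_{k-1} - \mathbf{R}_{k+1})/\bigl(2(\mathbf{R}_0 - \mathbf{R}_1)\bigr)\) (our derivation from Eq. 9, assumed equivalent to Eq. 13), then \(\sum_{k=1}^{K}\rho_k = -\tfrac{1}{2} - (\mathbf{R}_{K+1} - \mathbf{R}_K)/\bigl(2(\mathbf{R}_0 - \mathbf{R}_1)\bigr)\). This tends to \(-0.5\) whenever \(\mathbf{R}_k \to 0\), not only for AR(1) noise. The sketch checks the telescoped form against direct summation for Type I and Type II AR(1) correlations and for a hypothetical damped-cosine correlation, and confirms the geometric ratio for AR(1) noise. The parameter values and function names are illustrative assumptions.

```python
import math


def scc_from_R(R, k):
    """ISI SCC at lag k from the noise autocorrelation R (R even in k)."""
    return (2 * R(k) - R(abs(k - 1)) - R(k + 1)) / (2 * (R(0) - R(1)))


def scc_sum(R, K):
    """Partial sum rho_1 + ... + rho_K computed term by term."""
    return sum(scc_from_R(R, k) for k in range(1, K + 1))


def scc_sum_telescoped(R, K):
    """The same partial sum after telescoping: -1/2 - (R_{K+1} - R_K)/(2(R_0 - R_1))."""
    return -0.5 - (R(K + 1) - R(K)) / (2 * (R(0) - R(1)))


if __name__ == "__main__":
    examples = {
        "Type I, AR(1) c = 0.6": lambda k: 0.6 ** abs(k),
        "Type II, AR(1) c = -0.6": lambda k: (-0.6) ** abs(k),
        "damped cosine (hypothetical)": lambda k: 0.9 ** abs(k) * math.cos(0.5 * k),
    }
    for name, R in examples.items():
        print(f"{name}: sum to K=10 is {scc_sum(R, 10):.4f}, to K=400 is {scc_sum(R, 400):.6f}")
```

Any residual departure from \(-0.5\) in a finite sum comes only from the noise correlation that has not yet decayed at lag K.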

In considering the results presented here using a dynamic threshold model, and those from the more general models using an adaptation current, it appears that the presence of the feedback loop (the coupling between the membrane voltage and the dynamic threshold; see Figs. 3B, 4B, and 10B) may account for almost all the properties of SCCs if the noise fluctuations are shaped appropriately. These fluctuations reverberate around the feedback loop, creating memory and introducing negative correlations that extend over multiple ISIs. However, the source of this noise is moot because it can appear in the input or in the model parameters (see Eq. 31, where noise can be distributed over the input v, the threshold decay function r, or the spike threshold \(\gamma \)). The dynamic threshold model used here does not suggest a biophysical mechanism, but we suggest that a noisy threshold is due to an endogenous source of noise which perturbs the spiking threshold \(\gamma \) or the time-constant \(\tau \), i.e., noise is not passed through the input. This is an assumption, and input noise will of course shape SCCs. However, several biophysical mechanisms can account for endogenous sources of noise, including probabilistic transitions between conformational states in voltage-gated ion channels (White et al. 1998, 2000; Schneidman et al. 1998; Van Rossum et al. 2003; Fontaine et al. 2014), leading to perturbations in gating characteristics (Benda et al. 2010; Chacron et al. 2007). These can introduce cumulative refractoriness in firing. It should be noted that the amount of noise to be added to the threshold is small, with an SNR generally greater than \(20~\text {dB}\) (see Figs. 6, 7, and 8). That only weak noise may be necessary has been reported earlier by Schwalger and Lindner (2013), and in a study on threshold shifts by Fontaine et al. (2014).