Abstract

Purpose

The aim of this prospective cohort study was twofold: (1) to have medical professionals assess the factual accuracy, the completeness of medical information, and the potential harmfulness of incorrect conclusions in texts of varying complexity generated automatically with ChatGPT, and (2) to have patients without a medical background evaluate comprehensibility, information density, and their ability to draw conclusions from these texts.

Methods

For each of three levels of complexity (A: simple, B: moderate, C: complex), five simplified versions of knee MRI findings were created using ChatGPT. The simplified reports were then evaluated by a group of four medical professionals (two orthopedic surgeons and two radiologists) and a group of 20 consecutive patients. All participants received the reports one complexity group at a time (simple, moderate, and complex), at intervals of 1 week, and evaluated them using a specific questionnaire. Each questionnaire consisted of the original report, the simplified report, and a series of statements assessing the quality of the simplified report. Participants rated their level of agreement with each statement on a five-point Likert scale.
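Results below are reported as average ratings expressed as Likert labels (e.g. "Agree"). As a minimal sketch, assuming the scale is coded 1 (strongly disagree) to 5 (strongly agree) and that a mean score is reported as the label of the nearest scale point, such an average could be computed as follows. The coding, the round-half-up rule, the function names `mean_score` and `nearest_label`, and the example ratings are all hypothetical illustrations, not the study's actual procedure or data.

```python
from statistics import mean

# Hypothetical coding of the five-point Likert scale (assumption, not from the study).
LIKERT_LABELS = {
    1: "Strongly disagree",
    2: "Disagree",
    3: "Neutral",
    4: "Agree",
    5: "Strongly agree",
}


def mean_score(ratings: list[int]) -> float:
    """Return the mean of integer Likert ratings, rejecting out-of-range values."""
    if not ratings:
        raise ValueError("no ratings given")
    for r in ratings:
        if r not in LIKERT_LABELS:
            raise ValueError(f"rating {r} is outside the 1-5 scale")
    return mean(ratings)


def nearest_label(score: float) -> str:
    """Map a mean score to the label of the nearest scale point."""
    # Clamp to the 1-5 range, then round half up (e.g. 3.5 -> 4).
    clamped = min(5.0, max(1.0, score))
    return LIKERT_LABELS[int(clamped + 0.5)]


# Hypothetical ratings from four reviewers for one statement (illustration only).
ratings = [4, 4, 5, 3]
score = mean_score(ratings)
print(score, nearest_label(score))
```

Rounding to the nearest scale point is only one way to summarize ordinal data; medians or full response distributions are common alternatives.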

Results

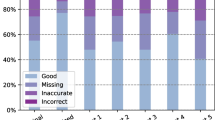

The evaluation by the medical specialists showed that the simplified reports were of consistent quality at each level of complexity. Factual correctness, reproduction of relevant information, and comprehensibility for patients were rated "Agree" on average. The question of possible harm was rated "Disagree" on average. The patients' evaluation likewise showed consistent report quality at each level of complexity. Simplicity of word choice and sentence structure was rated "Agree" on average, with significant differences between simple and complex findings (p = 0.0039) and between moderate and complex findings (p = 0.0222). The simpler the report, the significantly better participants understood what the text was about (p = 0.001) and the better they were able to draw the correct conclusions (p = 0.0138). Whether the text informed them as well as a healthcare professional would was rated "Neutral" across all findings.

Conclusion

ChatGPT can simplify MRI reports automatically and with consistent quality, making the relevant information understandable to patients. However, a report generated in this way does not replace a thorough discussion between specialist and patient.


Data availability

Raw data were generated at Orthopädische Klinik Paulinenhilfe, Diakonieklinikum, Rosenbergstrasse 38, 70192 Stuttgart, Germany. Derived data supporting the findings of this study are available from the corresponding author on request.

Funding

None.

Author information

Contributions

SS and LN conceived the study and developed the survey. SS, LN, and TC were responsible for data collection, contributed ongoing results, figures, and tables, and wrote the manuscript. AZ and MF revised the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Ethical approval and consent to participate

Not applicable; no patient data were involved.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Electronic supplementary material accompanies the online version of this article.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Schmidt, S., Zimmerer, A., Cucos, T. et al. Simplifying radiologic reports with natural language processing: a novel approach using ChatGPT in enhancing patient understanding of MRI results. Arch Orthop Trauma Surg 144, 611–618 (2024). https://doi.org/10.1007/s00402-023-05113-4

DOI: https://doi.org/10.1007/s00402-023-05113-4