Abstract

Purpose

Our aim was to assess alignment in timing of ‘highest caloric intake’ with individual chronotype and its association with body composition in adolescents.

Methods

We used repeatedly collected data from n = 196 adolescents (age 9–16 years, providing N = 401 yearly questionnaires) of the DONALD open cohort study. Chronotype was assessed by the Munich Chronotype Questionnaire from which midpoint of sleep (MSFsc) was derived. A sex- and age-specific diet-chrono-alignment score (DCAS) was calculated as the difference in hours between the chronotype-specific median timing of highest caloric intake of the studied population and the individual timing of ‘highest caloric intake’ or vice versa. Repeated-measures regression models were applied to study cross-sectional and longitudinal associations between the DCAS and body composition, e.g., Fat Mass Index (FMI) or Fat Free Mass Index (FFMI).

Results

DCAS ranged from −6:42 h to +8:01 h and was not associated with body composition in the total sample. Among adolescents with a later chronotype (N = 201), a 1 h increase in DCAS (later consumption of ‘highest caloric intake’ in comparison to the median intake time of that group) was associated with an increase in FFMI of 1.92 kg/m2 (95% CI: 0.15, 3.69, p value = 0.04) over a median follow-up of 0.94 years.

Conclusion

Alignment of energy intake with individual chronotype appears beneficial for FFMI among those with a late chronotype.

Introduction

Chrono-nutrition is a novel research field focusing on three dimensions: timing, frequency, and regularity of dietary intake [1]. We investigate the first of these dimensions, which has rarely been studied in adolescents [2]. Those aged 9–16 years constitute a vulnerable group for the development of increasing body weight and unfavorable changes in other body composition measures, e.g., Fat Mass Index (FMI), which are likely to track into adulthood [3,4,5].

Interest in the timing of energy intake was first sparked by studies in rodents indicating particularly detrimental cardio-metabolic effects when energy intake was scheduled to occur before or during the inactive period, i.e., periods corresponding to the evening in humans. Correcting the timing of food intake could prevent such effects [6, 7]. Subsequently, studies among humans followed with the perspective on the optimal timing of caloric intake. Observational studies linked the preferred consumption of habitual energy intake in the evening to higher total energy intake also during adolescence [8,9,10]. In consequence, higher evening energy intake is discussed to predispose to increases in BMI among adolescents [11].

Taken together, current evidence, albeit preliminary, suggests that substantial health benefits, e.g., lower BMI [12], with high BMI being an important risk factor for many chronic diseases such as diabetes, cardiovascular diseases, or cancer, may arise from the consumption of energy at earlier times of the day. However, recent studies that address food timing in the context of the personal chronotype, i.e., individual preference in sleep timing, challenge this hypothesis [12, 13]. While emerging evidence suggests that those with a later chronotype are at higher risk for weight gain [14] and higher BMI [15, 16], shifting energy intake to earlier times of the day, against their underlying chronotype, may not be associated with the same benefit as seen among persons with an earlier chronotype. One explanation could be that a timing of food intake that diverges from the individual chronotype constitutes a mismatch between the inner clock, i.e., chronotype, and the outer clock, i.e., timing of energy intake. Conversely, aligning eating time with one's chronotype may benefit metabolic health [13]. Of note, chronotype itself is determined by genetics, age, sex, and environment [17]. During adolescence, a shift from an earlier to a later chronotype occurs naturally. Around the age of 20 years, the chronotype is most delayed and then tends to become earlier again [18].

Thus far, no studies have addressed whether timing of energy intake in accordance with the biological clock (i.e., early vs. late chronotype) is associated with beneficial body compositional developments during adolescence (age 9–16 years). For this purpose, we defined alignment as an earlier individual timing of ‘highest caloric intake’ in comparison to the median timing of ‘highest caloric intake’ among persons with an earlier chronotype, and vice versa among persons with a later chronotype. We defined ‘highest caloric intake’ as consuming at least 20% of total daily energy intake, which is 10 percentage points above the threshold used to define a ‘meal’ [9, 10].

We hypothesized that alignment between the circadian timing of ‘highest caloric intake’ and the individual chronotype among adolescents is associated with decreases in Body Mass Index-(Standard deviation score) [BMI (-SDS)] and FMI and/or increases in Fat Free Mass Index (FFMI).

Materials and methods

Study design

The DONALD study is an ongoing, prospectively designed open cohort study conducted in Dortmund, Germany. Since 1985, data on diet, growth, and developmental and metabolic factors have been collected continuously from infancy (age 3 months) to adulthood. Approximately 30–35 healthy infants from Dortmund and surrounding communities, whose mothers and/or fathers have a sufficient command of the German language, are recruited every year via personal contacts, maternity wards, or pediatric clinics. The examination schedule includes quarter-yearly examinations in infancy, half-yearly examinations in the second year of life, and annual examinations thereafter until young adulthood. Among others, examinations include anthropometric measurements, lifestyle questionnaires, and a 3-day food record. Chronotype assessment in the DONALD study started in 2014 for participants from 9 years onwards by use of the Munich Chronotype Questionnaire (MCTQ) [17, 18]. Detailed information regarding the study design can be found elsewhere [19].

Study sample

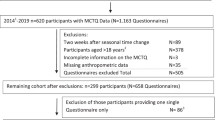

Until July 2020, n = 652 study participants had completed the MCTQ (N = 1,237 questionnaires). Questionnaires collected during the first 2 weeks after the time change in Germany from standard winter to summer time or vice versa [10] (N = 95) and questionnaires with missing values (N = 38) were excluded. The sample was further reduced due to missing information on dietary data collected in parallel, leaving a total of n = 259 participants (N = 461 questionnaires). Of those, n = 196 participants (from 172 families) were adolescents (aged 9–16 years) [20] and provided N = 401 questionnaires (Appendix Fig. 3).

Dietary assessment

Dietary intake in the DONALD study is assessed by use of 3-day weighed dietary records. The participants are free to choose the consecutive days, meaning that both weekdays and weekend days can be recorded. All foods and beverages consumed, as well as leftovers, are weighed and recorded over three consecutive days by the parents or by the older participants themselves with the use of regularly calibrated electronic food scales [initially Soehnle Digita 8000 (Leifheit AG, Nassau, Germany), now WEDO digi 2000 (Werner Dorsch GmbH, Muenster/Dieburg, Germany)]. When exact weighing is not possible, household measures (e.g., spoons and cups) are allowed for semi-quantitative recording. Information on recipes and on the types and brands of food items consumed is also requested. Additionally, participants record the time of every eating occasion. Energy and macronutrient intakes were calculated using the continuously updated in-house nutrient database LEBTAB [21], which is based on German standard food composition tables. Energy and nutrient contents of commercial food products are calculated by recipe simulation using labeled nutrient contents and ingredients. Macronutrients were considered as percentages of total energy intake (TEI). Subsequently, the individual means of TEI and macronutrient intakes were calculated from the three record days. The validity of dietary recording was previously evaluated by Bokhof et al. [22].

Chronotype

The MCTQ [17, 18] includes questions regarding sleep and wake times during the week and weekend. The individual chronotype (continuously in h:min) was calculated as the Midpoint of Sleep, i.e., the half-way point between sleep-onset and sleep-end on free days (MSF) [17]. If applicable, the MSF is corrected for “oversleep” on free days to account for sleep-debt accumulated over the week (MSFsc) [18].
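The calculation described above can be sketched as follows. This is a minimal illustration, not the study's SAS code: it assumes the commonly cited MCTQ correction (subtracting half the difference between free-day sleep duration and the weekly average sleep duration, applied only when free-day sleep is longer than workday sleep) and a 5-day work or school week. The function names and the decimal-hour input format are hypothetical.

```python
def hours_between(start_h: float, end_h: float) -> float:
    """Duration in hours from start to end on a 24-h clock, wrapping past midnight."""
    return (end_h - start_h) % 24.0


def msf_sc(onset_free: float, end_free: float,
           onset_work: float, end_work: float,
           n_work_days: int = 5) -> float:
    """Midpoint of sleep on free days, corrected for oversleep (decimal clock hours).

    Assumption: the standard MCTQ correction formula; the number of work days
    per week is a hypothetical default of 5.
    """
    sd_free = hours_between(onset_free, end_free)   # sleep duration on free days
    sd_work = hours_between(onset_work, end_work)   # sleep duration on work/school days
    sd_week = (sd_work * n_work_days + sd_free * (7 - n_work_days)) / 7.0

    msf = (onset_free + sd_free / 2.0) % 24.0       # uncorrected midpoint of sleep (MSF)
    if sd_free > sd_work:                           # correct only if there is oversleep
        msf = (msf - (sd_free - sd_week) / 2.0) % 24.0
    return msf


# Example: free days 00:00-10:00, school days 22:00-06:00
print(round(msf_sc(0.0, 10.0, 22.0, 6.0), 2))
```

In this example the uncorrected midpoint is 05:00, and the correction moves it earlier because sleep on free days is longer than the weekly average.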

Boys and girls differ in their individual level of lateness; girls tend to be earlier than boys [23]. To account for age and sex differences in chronotype, we derived MSFsc residuals independent of age and sex for all observations and ranked them into two groups (PROC RANK in SAS®). Afterward, we calculated median MSFsc hours stratified by sex for adolescents with earlier and later chronotype (Fig. 1).
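As a rough illustration of the residual method, the sketch below regresses MSFsc on age and sex by ordinary least squares and splits the residuals at their median. The median split is only an approximation of ranking into two groups with PROC RANK, and the helper names, the 0/1 coding of sex, and the toy data are assumptions made for this example.

```python
from statistics import median


def ols_residuals(y, X):
    """Residuals of an ordinary least-squares fit of y on the columns of X plus an intercept."""
    rows = [[1.0] + list(x) for x in X]
    k = len(rows[0])
    # Build the normal equations (A^T A) beta = A^T y as an augmented matrix
    m = [[sum(r[i] * r[j] for r in rows) for j in range(k)]
         + [sum(r[i] * yi for r, yi in zip(rows, y))] for i in range(k)]
    # Gauss-Jordan elimination with partial pivoting
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(k):
            if r != col:
                f = m[r][col] / m[col][col]
                m[r] = [a - f * b for a, b in zip(m[r], m[col])]
    beta = [m[i][k] / m[i][i] for i in range(k)]
    return [yi - sum(b * v for b, v in zip(beta, r)) for r, yi in zip(rows, y)]


def chronotype_group(msfsc, age, sex):
    """Label each observation 'earlier' or 'later' by a median split of the residuals."""
    resid = ols_residuals(msfsc, list(zip(age, sex)))
    cut = median(resid)
    return ["later" if r > cut else "earlier" for r in resid]


# Toy data (hypothetical): MSFsc in decimal hours, age in years, sex coded 0/1
age = [10, 12, 14, 16, 10, 12, 14, 16]
sex = [0, 0, 0, 0, 1, 1, 1, 1]
noise = [0.5, -0.5, -0.5, 0.5, -0.3, 0.3, 0.3, -0.3]
msfsc = [2.0 + 0.2 * a + 0.5 * s + e for a, s, e in zip(age, sex, noise)]
print(chronotype_group(msfsc, age, sex))
```

Because the grouping is based on residuals, a 16-year-old with a late absolute MSFsc can still be classified as an earlier chronotype relative to peers of the same age and sex.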

Fig. 1 Diet-chrono-alignment scoring (DCAS). MSFsc: midpoint of sleep (chronotype) corrected for sleep debt accumulated over the workweek; h: hours; m: minutes. Alignment between chronotype and time of ‘highest caloric intake’ was expressed as the number of hours diverging from the chronotype-specific median eating time of ‘highest caloric intake’. Diet data were assessed by 3-day weighed dietary records, from which we derived the average timing of ‘highest caloric intake’. Earlier eating resulted in positive divergence from the chronotype-specific reference eating time (defined as the median eating time of the specific chronotype), whereas later eating resulted in negative divergence from the reference for early chronotypes and vice versa for late chronotypes. MSFsc was regressed on age and sex. We ranked the residuals into two groups and show in this figure the sex-specific MSFsc hours for early and late chronotypes.

Diet-chrono-alignment score (DCAS)

In a first step, we calculated the sum of energy intake per eating occasion: all foods and beverages consumed within a 30-min time period were combined into one eating occasion, and all eating occasions < 10 kcal were added to the previous eating occasion [10]. From this, we derived the time of the day at which the greatest amount of energy (> 20% of total daily energy intake) was consumed (timing of ‘highest caloric intake’ per day). Then, these individual eating times were averaged over the three record days to obtain the individual timing of ‘highest caloric intake’.
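The first step can be sketched in a few lines. This is an illustrative reading of the description, with several assumptions: times are given as minutes since midnight, the 30-min window is anchored at the start of each eating occasion, and days on which no occasion exceeds 20% of daily energy are skipped when averaging. All function names are hypothetical.

```python
def eating_occasions(records, window_min=30, min_kcal=10.0):
    """Group (minute_of_day, kcal) records of one day into eating occasions."""
    occasions = []  # each entry: [start_minute, kcal]
    for t, kcal in sorted(records):
        # Assumption: the 30-min window is measured from the start of the occasion
        if occasions and t - occasions[-1][0] < window_min:
            occasions[-1][1] += kcal
        else:
            occasions.append([t, kcal])
    merged = []
    for start, kcal in occasions:
        if merged and kcal < min_kcal:      # tiny occasions join the previous one
            merged[-1][1] += kcal
        else:
            merged.append([start, kcal])
    return merged


def time_of_highest_intake(records, share=0.20):
    """Start time of the occasion with the most energy, if it exceeds `share` of the day."""
    occ = eating_occasions(records)
    total = sum(k for _, k in occ)
    if not occ or total <= 0:
        return None
    start, kcal = max(occ, key=lambda o: o[1])
    return start if kcal / total > share else None


def mean_time_of_highest_intake(days):
    """Average the daily timing over the record days (days without a qualifying occasion are skipped)."""
    times = [t for t in (time_of_highest_intake(d) for d in days) if t is not None]
    return sum(times) / len(times) if times else None


day1 = [(450, 300), (460, 100), (600, 5), (780, 700), (1140, 500)]
day2 = [(480, 200), (1140, 800)]
print(mean_time_of_highest_intake([day1, day2]))  # minutes since midnight
```

On `day1`, the two breakfast items and the 5 kcal snack collapse into one occasion, and the 13:00 meal is the one with the highest caloric intake.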

In a second step, age- and sex-adjusted median eating times, for which we applied the residual method, were derived for adolescents with earlier and later chronotype. The median eating time was defined based on the sample population and served as the reference point for the definition of diet-chrono-alignment. Third, individual diet-chrono-alignment score (DCAS) was calculated as the difference in hours between the chronotype-specific median eating time of ‘highest caloric intake’ and the individual eating time of ‘highest caloric intake’. Hence, earlier eating in comparison to the population median eating time of ‘highest caloric intake’ resulted in positive alignment for adolescents with an earlier chronotype measured in hours, whereas for adolescents with a later chronotype, later eating resulted in positive alignment (Fig. 1).
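The sign convention of the score can be made concrete with a short sketch. It is a simplification: plain group medians stand in for the age- and sex-adjusted medians obtained with the residual method, eating times are decimal clock hours, and the function names and toy values are hypothetical.

```python
from statistics import median


def group_medians(eating_times, groups):
    """Median eating time of 'highest caloric intake' per chronotype group."""
    return {g: median(t for t, gg in zip(eating_times, groups) if gg == g)
            for g in set(groups)}


def dcas(eating_time, chronotype, reference):
    """Diet-chrono-alignment score in hours.

    Earlier chronotype: eating earlier than the group median gives a positive score.
    Later chronotype: eating later than the group median gives a positive score.
    """
    diff = reference[chronotype] - eating_time   # > 0 when eating earlier than the median
    return diff if chronotype == "earlier" else -diff


times = [12.0, 13.0, 14.0, 18.0, 19.0, 20.0]
groups = ["earlier"] * 3 + ["later"] * 3
ref = group_medians(times, groups)
print([dcas(t, g, ref) for t, g in zip(times, groups)])
```

Folding both chronotype groups into a single signed score is what allows one model term to represent alignment, rather than a separate early or late eating indicator within each chronotype stratum.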

Anthropometric measures

Adolescents were measured annually by trained nurses according to standard procedures, dressed in underwear and barefoot. Standing height was measured to the nearest 0.1 cm using a digital stadiometer. Weight was measured to the nearest 0.1 kg with an electronic scale (model 753 E; Seca, Hamburg, Germany). Skinfold thickness was measured on the right side of the body at the biceps, triceps, subscapular, and suprailiac sites to the nearest 0.1 mm with a Holtain caliper (Holtain Ltd., Crymych, UK) [19]. Sex- and age-independent body mass index (BMI, kg/m2) standard deviation scores (SDS) were calculated using the German national reference data according to the LMS Method [24]. Percent body fat (%BF) was estimated from two skinfolds (triceps, subscapular) using age-specific Slaughter equations [25], for the subsequent calculation of FMI (fat mass/m2; where fat mass = body weight × %BF/100) and FFMI (fat free mass/m2; where fat free mass = body weight − fat mass). Since the distribution of FMI was skewed, log10-transformed values were used in the analyses.
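The index definitions above translate directly into code. The sketch below takes %BF as an input rather than implementing the Slaughter equations, whose coefficients are not given here; the function name and example values are hypothetical.

```python
import math


def body_composition(weight_kg: float, height_m: float, percent_bf: float) -> dict:
    """BMI, FMI, FFMI (all kg/m2) and log10(FMI) from weight, height, and percent body fat."""
    fat_mass = weight_kg * percent_bf / 100.0      # fat mass = body weight x %BF / 100
    fat_free_mass = weight_kg - fat_mass           # fat free mass = body weight - fat mass
    h2 = height_m ** 2
    fmi = fat_mass / h2
    return {
        "BMI": weight_kg / h2,
        "FMI": fmi,
        "FFMI": fat_free_mass / h2,
        "log10_FMI": math.log10(fmi),              # transformed because FMI was skewed
    }


print(body_composition(50.0, 1.60, 20.0))
```

By construction FMI and FFMI sum to BMI, which is why a change in BMI alone cannot distinguish a gain in fat mass from a gain in fat free mass.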

Assessment of potential covariates

Parents are interviewed regarding family characteristics (i.e., parental education, smoking, and persons in the household). Every 4 years, maternal body weight and height were measured with the same equipment as for the children on the child’s admission to the study center.

Adolescents’ pubertal status was defined in accordance with the onset and the end of the pubertal growth spurt. Age at Take-Off (ATO) is the age of minimal growth velocity. Age at Peak Height Velocity (APHV) is the age of maximal height velocity. ATO and APHV were derived from the parametric Preece and Baines Model 1 [26]; details are explained elsewhere [26, 27]. Under- and over-reporting of dietary intake was assigned if TEI was unrealistic in relation to the estimated basal metabolic rate (according to age- and sex-specific equations of Schofield [28]). Based on the pediatric cut-offs by Sichert-Hellert et al. [29], we detected 64 under-reported dietary records (15.9%) and no over-reported records.

Statistical analyses

All statistical analyses of the present evaluation were performed using SAS® procedures (version 9.4; Cary, NC, USA). The significance level was set at p < 0.05.

We used linear mixed-effects regression models (PROC MIXED in SAS), including both fixed and random effects, to account for the nested nature of our data (children within families in the random statement) and the lack of independence between repeated observations on the same person. A further advantage is that all measurements can be included even when data are missing for a specific point in time [30].

Repeated-measures regression models (PROC MIXED) were used to examine (a) the cross-sectional and (b) the longitudinal associations between the DCAS at first assessment and (Δ) body composition, as well as (c) the change-on-change associations between (Δ) DCAS and (Δ) BMI(-SDS), (Δ) FMI, and (Δ) FFMI over time [30, 31]. Variables of change were calculated by subtracting the baseline value from the value at each year of assessment, with a difference of 0 at the first assessment.
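How the variables of change are constructed can be shown with a minimal sketch, assuming each participant's repeated measurements are available as (age, value) pairs; the function name and example values are hypothetical.

```python
def change_from_baseline(measurements):
    """Return (time since first assessment, change from baseline) for one participant.

    `measurements` is a list of (age_in_years, value) pairs in any order;
    the earliest measurement is treated as the baseline.
    """
    ordered = sorted(measurements)
    base_age, base_value = ordered[0]
    return [(age - base_age, value - base_value) for age, value in ordered]


# Hypothetical FFMI values (kg/m2) of one participant at ages 10, 11, and 12 years
ffmi = [(11.0, 16.5), (10.0, 16.0), (12.0, 17.25)]
print(change_from_baseline(ffmi))
```

The first row is always (0, 0), which matches the description of a difference of 0 at the first assessment; the elapsed time corresponds to the "time between first and subsequent measurements" used in the basic model.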

Covariates for model adjustment were selected according to known predictors of BMI, body composition, and timing of energy intake [16, 32, 33]. From here, we identified minimally sufficient adjustment sets (msas) using a diagram (Appendix Fig. 4) representing the relationships among the identified variables [34]. Besides age at baseline and time between first and subsequent measurements (basic model) [31], sex, energy intake (g/d), physical activity (high vs. low), age at take-off and age at peak height velocity as puberty markers, smoking in the household, social jetlag, season of record (spring/summer/autumn/winter), number of questionnaires, and underreporting of dietary intake were important covariates.

Model building was driven by the log-likelihood criterion to define the final crude model. The Akaike Information Criterion (AIC) and the Bayesian Information Criterion (BIC) were used to select the correlation structure best describing the correlated nature of the data. Tests for effect modification were performed by the inclusion of interaction terms between DCAS and MSFsc or sex.

To manage missing data, we explored the pattern of missingness and undertook multiple imputation using the MI procedure in SAS. We generated an imputed database containing five imputed versions to predict missing values for ATO (n = 72, 18%) and physical activity (n = 67, 17%). Final models were tested regarding multicollinearity, heteroscedasticity, and normal distribution of residuals. Finally, we excluded participants who used an alarm clock during the weekend (n = 59, 15%) in a sensitivity analysis and, in a further analysis, assessed the influence of ‘eveningness in energy intake’ on body compositional measures. ‘Eveningness in energy intake’ was defined as the percentage of energy intake in the evening (after 6 pm until 11:15 pm) minus the percentage of energy intake in the morning (from 5:15 am until before 11 am) [9].
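The definition of ‘eveningness in energy intake’ can be illustrated with a small sketch. Times are assumed to be minutes since midnight, and the handling of the window boundaries (whether 6 pm and 11:15 pm themselves count) is an assumption of this example; the function name is hypothetical.

```python
MORNING = (5 * 60 + 15, 11 * 60)    # from 5:15 am until before 11 am
EVENING = (18 * 60, 23 * 60 + 15)   # after 6 pm until 11:15 pm


def eveningness(records):
    """Percentage of daily energy eaten in the evening minus the percentage eaten in the morning.

    `records` is a list of (minute_of_day, kcal) pairs for one day.
    """
    total = sum(kcal for _, kcal in records)
    if total <= 0:
        return None
    morning = sum(k for t, k in records if MORNING[0] <= t < MORNING[1])
    evening = sum(k for t, k in records if EVENING[0] < t <= EVENING[1])
    return 100.0 * (evening - morning) / total


day = [(420, 400), (780, 600), (1140, 800), (1350, 200)]
print(eveningness(day))
```

Positive values indicate that more of the day's energy was consumed in the evening than in the morning; unlike the DCAS, this measure does not take the individual chronotype into account.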

Results

Sample characteristics of the first and last assessment, stratified by adolescents with an earlier and later chronotype, are presented in Table 1. Of the participants included in the analyses, 41% were female (n = 81). All body compositional measures were slightly higher in adolescents with a later chronotype. Mean values of MSFsc differed between adolescents with earlier and later chronotype by more than 1 h at the first and last measurement. Sleep duration did not differ by chronotype at the first measurement but differed by almost 0:30 h at the last measurement.

Dietary characteristics of the first and last assessment, stratified by adolescents with an earlier and later chronotype, are presented in Table 2. The DCAS ranged from −6:42 to +8:01 h. While adolescents with a later chronotype showed a negative alignment of 7 min (earlier eating) at first assessment, they adapted toward later eating by the last assessment. Macronutrient intake did not differ by chronotype. In contrast to those with a late chronotype, adolescents with an earlier chronotype were more likely to have breakfast (93% vs. 96% having breakfast, considering the last assessment). No one with a later chronotype skipped dinner.

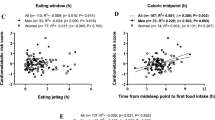

Table 3 shows no associations of DCAS with BMI-SDS or FMI in any of the adjusted models (a–c), neither in the total sample nor when stratified by earlier and later chronotype (interactions between DCAS and chronotype: for BMI-SDS, p value < 0.05; for FMI, p value > 0.05). However, there was a positive longitudinal association between DCAS and FFMI development in adolescents with a later chronotype (model b). A 1 h increase in later eating, compared to the median intake time of late chronotypes, was associated with a longitudinal increase of ∆ FFMI by 1.92 kg/m2 (p = 0.04) over time (model b, Table 3). We found no significant interaction by sex. The sensitivity analysis in which we excluded participants who used an alarm clock during the weekend yielded similar results (data not shown). Figure 2 visualizes the longitudinal relationships between ‘eveningness in energy intake’ and long-term ∆ BMI-SDS, ∆ FFMI, and ∆ FMI (model b) for adolescents with a later chronotype. A 10% larger energy intake in the evening rather than in the morning was associated with a prospective increase in FFMI of 0.2 kg/m2 (95% CI: 0.1, 0.3).

Fig. 2 Regression coefficients and 95% confidence intervalsᵃ,ᵇ of the longitudinal association (model b) between ‘eveningness in energy intake’ and body composition in later chronotypes (N = 201 questionnaires, n = 110 participants). FFMI: Fat Free Mass Index (kg/m2); FMI: Fat Mass Index (kg/m2); BMI-SDS: Body Mass Index-Standard Deviation Score (kg/m2). *Statistically significant (p value < 0.05): per 1% more energy intake in the evening, FFMI increased by 0.02 kg/m2 in late chronotypes. ᵃ Models contain a random intercept with an unstructured covariance (UN). ᵇ All models were adjusted for energy intake (g/day), number of questionnaires, physical activity level (high/low, based on the median), age at take-off and age at peak height velocity as puberty markers, season of record (spring/summer/autumn/winter), smoking in the household, social jetlag, and underreporting of energy intake.

Discussion

In this longitudinally designed cohort study, we examined the cross-sectional, longitudinal, and change-on-change associations of the alignment between dietary energy intake timing and chronotype with BMI(-SDS), FMI, and FFMI during adolescence. We found no overall associations between the DCAS and anthropometrics. However, adolescents who had a late chronotype and ate their meal with ‘highest caloric intake’ later in the day, in comparison to the median eating time in that group, showed an increase in FFMI over time. To the best of our knowledge, this is the first study that has defined a score representing the alignment between dietary intake timing and chronotype.

Later and earlier eating

Studying BMI as the outcome of interest should be accompanied by additional measures of body composition, such as FMI or FFMI, especially during adolescence [35]. However, most previous studies did not consider body compositional measures alongside BMI, which limits comparability with previous research. Nevertheless, Eng et al. [36] reported a paradox in their analyses of the National Health and Nutrition Examination Survey (NHANES) 1999–2004 (N = 11,072 participants aged 2–18 years). In children aged 2–11 years, later eating in the time between 4 pm and midnight was associated with increasing overweight, defined as a BMI between the 85th and 94th percentile, and obesity (≥ 95th percentile), applying the Centers for Disease Control and Prevention (CDC) BMI-for-age and sex-specific growth charts. Results similar to those of Eng et al. for children below the age of 11 years were presented by Martínez-Lozano et al. [37] for the age range 8–12 years. However, the opposite was true in NHANES for adolescents aged 12–18 years. Late-eating adolescents had 16 times lower odds of being overweight (ß: −15.9, p value = 0.01). This difference may partly be attributable to chronobiological changes toward a later chronotype starting around the age of 12 years [38]. In line with this, Muñoz et al. [13] reported that adults aged 18–65 years with a late chronotype and a higher %TEI at dinner were also of normal weight, whereas evening types who had more %TEI in the morning were more likely to be overweight. Coulthard et al. [39] found no association between eating after 8 pm and excess weight or increased energy intake in 1,620 UK children aged 4–10 years. Also, no effect was observed in the CHOP trial, which included 729 healthy children to investigate the association of the distribution of eating occasions with zBMI [40]. These findings support our null findings for BMI-SDS.

Eveningness in energy intake

Results of the CRO-PALS Longitudinal Study including 607 adolescents showed no associations between ‘eveningness in energy intake’, based on a single 24-h recall and BMI or waist–hip-ratio [41]. This is in line with our results on ‘eveningness in energy intake’ and BMI-SDS. The association between ‘eveningness in energy intake’ and FFMI supported our results of the main analyses. The weaker association of our sensitivity analyses for FFMI may suggest that not solely the amount of energy intake eaten earlier or later during the day, but especially the balance with the underlying chronotype, hence the internal clock, may be of particular interest regarding beneficial longitudinal developments in body composition. The importance of dietary intake timing as an independent risk factor besides the amount of food intake is supported by an intervention trial based on 110 participants aged 18–22 years in which timing of food consumption relative to clock hour and endogenous circadian time was assessed in relation to body composition [42].

Biological background

The timing of food intake is important in harmonizing the central and peripheral clocks. A desynchrony between both may lead to metabolic distortion and unbeneficial body compositional developments [43]. We have previously shown in DONALD that children shift their energy intake to later times of the day as they age [9]. Such an observation requires a supportive family environment enabling later eating times. In line with this, Morgan et al. [44] reported earlier on the benefits in autonomous decisions by emerging adults regarding eating regulation styles by parents on body fat. The adaptation toward later eating hours in adolescents who naturally develop toward later chronotypes may contribute to a better alignment between their internal and external clock. The benefits of such an alignment have been elaborated on in other studies [13, 43] and are supported by our findings.

To summarize, a large body of evidence suggests that ‘later’ eating is unbeneficial for metabolic health, especially in adults [42]. However, this does not seem to apply to everybody [13, 43]. The current study suggests that the best time for food consumption depends on a person’s individual chronotype. Not considering chronotype in the analyses may partly explain the large controversy found in the current literature [45, 46]. Another possible reason for the discrepancies in results on ‘later’ energy intake and body composition could be the definition of eating time. For instance, ‘late’ energy intake 2 h before bedtime [12] or closer to melatonin onset (i.e., biological night) [42] represents more extreme ‘lateness’ and shows positive associations with overweight in adult later chronotypes.

Strength and limitations

A major advantage of the DONALD Study is the longitudinal study design, enabling the examination of longitudinal associations. Other studies had to rely mainly on cross-sectional data [39]. Further strengths are the dietary assessment method, which avoids recall bias, and the assessment of chronotype using the validated MCTQ [18, 47], which has previously been applied among adolescents in other studies [23, 48]. In addition, the use of sleep/wake cycles, on which the chronotype is based, has been shown to be a practical approach for the definition of circadian timing of food intake [12, 42].

Generalizability of our results may be limited, since the DONALD study is characterized by a homogeneous sample of German participants with a high socioeconomic status (SES) [19]. Also, females were slightly underrepresented in the current analyses. Regarding body compositional measures, we would like to add that despite the high SES of the DONALD population, the prevalence of overweight (15.5%) is comparable to that of the overall German population (15.4%) [49]. Another disadvantage is the small sample size. However, by generating the DCAS, we avoided an additional level of stratification by early and late timing of ‘highest caloric intake’.

Public health impact and future directions

This is the first analysis performed in adolescence showing longer-term consequences of circadian alignment with energy intake timing. Supporting adolescents in following their individual clock appears to be most beneficial for a healthy body compositional development [35]. General practitioners should prepare parents for, and explain to them, the naturally occurring changes in the chronobiology of their children that they can expect during adolescence, and enable parents to accept changes in the timing of sleep onset [18] as well as food intake [9]. Of note, it is not the later chronotype that increases the risk for future metabolic disease development, but the attached individual behavior [43]. The application of our approach to a larger and more heterogeneous sample of adolescents, which may also include participants not enabled by their parents to follow their internal clock, would enhance this field of research.

In conclusion, changes in chronobiology and eating time accompany the transition from childhood to adulthood. Chronotype as well as eating time shift naturally to later times of the day during adolescence. The alignment between dietary intake timing and chronotype appears to encourage favorable developments in body composition in adolescents with a later chronotype.

Data availability

Data of the DONALD study are available upon request from epi@uni-bonn.de.

References

Almoosawi S, Vingeliene S, Gachon F et al (2019) Chronotype: implications for epidemiologic studies on chrono-nutrition and cardiometabolic health. Adv Nutr 10:30–42. https://doi.org/10.1093/advances/nmy070

Alberga AS, Sigal RJ, Goldfield G et al (2012) Overweight and obese teenagers: why is adolescence a critical period? Pediatr Obes 7:261–273. https://doi.org/10.1111/j.2047-6310.2011.00046.x

Siervogel RM, Demerath EW, Schubert C et al (2003) Puberty and body composition. Horm Res Paediatr 60(suppl 1):36–45. https://doi.org/10.1159/000071224

Patton GC, Coffey C, Carlin JB et al (2011) Overweight and obesity between adolescence and young adulthood: a 10-year prospective cohort study. J Adolesc Health 48:275–280. https://doi.org/10.1016/j.jadohealth.2010.06.019

Cheng G, Bolzenius K, Joslowski G et al (2015) Velocities of weight, height and fat mass gain during potentially critical periods of growth are decisive for adult body composition. Eur J Clin Nutr 69:262–268. https://doi.org/10.1038/ejcn.2014.131

Arble DM, Bass J, Laposky AD et al (2009) Circadian timing of food intake contributes to weight gain. Obesity (Silver Spring) 17:2100–2102. https://doi.org/10.1038/oby.2009.264

Sherman H, Genzer Y, Cohen R et al (2012) Timed high-fat diet resets circadian metabolism and prevents obesity. FASEB J 26:3493–3502. https://doi.org/10.1096/fj.12-208868

de Castro JM (2004) The time of day of food intake influences overall intake in humans. J Nutr 134:104–111

Diederichs T, Perrar I, Roßbach S et al (2018) In adolescence a higher ‘eveningness in energy intake’ is associated with higher total daily energy intake. Appetite 128:159–166. https://doi.org/10.1016/j.appet.2018.05.142

Roßbach S, Diederichs T, Nöthlings U et al (2018) Relevance of chronotype for eating patterns in adolescents. Chronobiol Int 35:336–347. https://doi.org/10.1080/07420528.2017.1406493

Thompson OM, Ballew C, Resnicow K et al (2006) Dietary pattern as a predictor of change in BMI z-score among girls. Int J Obes (Lond) 30:176–182. https://doi.org/10.1038/sj.ijo.0803072

Xiao Q, Garaulet M, Scheer FAJL (2019) Meal timing and obesity: interactions with macronutrient intake and chronotype. Int J Obes (Lond) 43:1701–1711. https://doi.org/10.1038/s41366-018-0284-x

Muñoz JSG, Cañavate R, Hernández CM et al (2017) The association among chronotype, timing of food intake and food preferences depends on body mass status. Eur J Clin Nutr 71:736–742. https://doi.org/10.1038/ejcn.2016.182

Garaulet M, Esteban Tardido A, Lee Y-C et al (2012) SIRT1 and CLOCK 3111T> C combined genotype is associated with evening preference and weight loss resistance in a behavioral therapy treatment for obesity. Int J Obes (Lond) 36:1436–1441. https://doi.org/10.1038/ijo.2011.270

Roenneberg T, Allebrandt KV, Merrow M et al (2012) Social jetlag and obesity. Curr Biol 22:939–943. https://doi.org/10.1016/j.cub.2012.03.038

Malone SK, Zemel B, Compher C et al (2016) Social jet lag, chronotype and body mass index in 14–17-year-old adolescents. Chronobiol Int 33:1255–1266. https://doi.org/10.1080/07420528.2016.1196697

Roenneberg T, Wirz-Justice A, Merrow M (2003) Life between clocks: daily temporal patterns of human chronotypes. J Biol Rhythms 18:80–90. https://doi.org/10.1177/0748730402239679

Roenneberg T, Kuehnle T, Juda M et al (2007) Epidemiology of the human circadian clock. Sleep Med Rev 11:429–438. https://doi.org/10.1016/j.smrv.2007.07.005

Kroke A, Manz F, Kersting M et al (2004) The DONALD study. Eur J Nutr 43:45–54. https://doi.org/10.1007/s00394-004-0445-7

Penczynski KJ, Herder C, Krupp D et al (2019) Flavonoid intake from fruit and vegetables during adolescence is prospectively associated with a favorable risk factor profile for type 2 diabetes in early adulthood. Eur J Nutr 58:1159–1172. https://doi.org/10.1007/s00394-018-1631-3

Sichert-Hellert W, Kersting M, Chahda C et al (2007) German food composition database for dietary evaluations in children and adolescents. J Food Compos Anal 20:63–70. https://doi.org/10.1016/j.jfca.2006.05.004

Bokhof B, Günther ALB, Berg-Beckhoff G et al (2010) Validation of protein intake assessed from weighed dietary records against protein estimated from 24 h urine samples in children, adolescents and young adults participating in the Dortmund Nutritional and Longitudinally Designed (DONALD) Study. Public Health Nutr 13:826–834. https://doi.org/10.1017/S136898000999317X

Roenneberg T, Kuehnle T, Pramstaller PP et al (2004) A marker for the end of adolescence. Curr Biol 14:R1038–R1039. https://doi.org/10.1016/j.cub.2004.11.039

Kromeyer-Hauschild K, Wabitsch M, Kunze D et al (2001) Perzentile für den Body-mass-Index für das Kindes- und Jugendalter unter Heranziehung verschiedener deutscher Stichproben. Monatsschr Kinderheilk 149:807–818. https://doi.org/10.1007/s001120170107

Slaughter MH, Lohman TG, Boileau RA et al (1988) Skinfold equations for estimation of body fatness in children and youth. Hum Biol 60:709–723

Preece MA, Baines MJ (1978) A new family of mathematical models describing the human growth curve. Ann Hum Biol 5:1–24. https://doi.org/10.1080/03014467800002601

Buyken AE, Karaolis-Danckert N, Remer T (2008) Association of prepubertal body composition in healthy girls and boys with the timing of early and late pubertal markers. Am J Clin Nutr 89:221–230. https://doi.org/10.3945/ajcn.2008.26733

Schofield WN (1985) Predicting basal metabolic rate, new standards and review of previous work. Hum Nutr Clin Nutr 39(Suppl 1):5–41

Sichert-Hellert W, Kersting M, Schöch G (1998) Underreporting of energy intake in 1 to 18 year old German children and adolescents. Eur J Nutr 37:242–251. https://doi.org/10.1007/s003940050023

Jacobs DR Jr, Hannan PJ, Wallace D et al (1999) Interpreting age, period and cohort effects in plasma lipids and serum insulin using repeated measures regression analysis: the CARDIA study. Stat Med 18:655–679. https://doi.org/10.1002/(SICI)1097-0258(19990330)18:6%3c655:AID-SIM62%3e3.0.CO;2-U

Libuda L, Alexy U, Buyken AE et al (2009) Consumption of sugar-sweetened beverages and its association with nutrient intakes and diet quality in German children and adolescents. Br J Nutr 101:1549–1557. https://doi.org/10.1017/S0007114508094671

Arora T, Taheri S (2015) Associations among late chronotype, body mass index and dietary behaviors in young adolescents. Int J Obes (Lond) 39:39–44. https://doi.org/10.1038/ijo.2014.157

Fischer D, Lombardi DA, Marucci-Wellman H et al (2017) Chronotypes in the US—influence of age and sex. PLoS ONE 12:e0178782. https://doi.org/10.1371/journal.pone.0178782

Greenland S, Pearl J, Robins JM (1999) Causal diagrams for epidemiologic research. Epidemiology 10:37

Eissa MA, Dai S, Mihalopoulos NL et al (2009) Trajectories of fat mass index, fat free-mass index, and waist circumference in children: Project HeartBeat! Am J Prev Med 37:S34–S39. https://doi.org/10.1016/j.amepre.2009.04.005

Eng S, Wagstaff DA, Kranz S (2009) Eating late in the evening is associated with childhood obesity in some age groups but not in all children: the relationship between time of consumption and body weight status in US children. Int J Behav Nutr Phys Act 6:27. https://doi.org/10.1186/1479-5868-6-27

Martínez-Lozano N, Tvarijonaviciute A, Ríos R et al (2020) Late eating is associated with obesity, inflammatory markers and circadian-related disturbances in school-aged children. Nutrients 12:2881. https://doi.org/10.3390/nu12092881

Randler C (2011) Age and gender differences in morningness–eveningness during adolescence. J Genet Psychol 172:302–308. https://doi.org/10.1080/00221325.2010.535225

Coulthard JD, Pot GK (2016) The timing of the evening meal: how is this associated with weight status in UK children? Br J Nutr 115:1616–1622. https://doi.org/10.1017/S0007114516000635

Jaeger V, Koletzko B, Luque V et al (2022) Time of dietary energy and nutrient intake and body mass index in children: compositional data analysis from the childhood obesity project (CHOP) trial. Nutrients. https://doi.org/10.3390/nu14204356

Rešetar J, Pfeifer D, Mišigoj-Duraković M et al (2020) Eveningness in energy intake among adolescents with implication on anthropometric indicators of nutritional status: the CRO-PALS Longitudinal study. Nutrients. https://doi.org/10.3390/nu12061710

McHill AW, Phillips AJ, Czeisler CA et al (2017) Later circadian timing of food intake is associated with increased body fat. Am J Clin Nutr 106:1213–1219. https://doi.org/10.3945/ajcn.117.161588

Lopez-Minguez J, Gómez-Abellán P, Garaulet M (2019) Timing of breakfast, lunch, and dinner. Effects on obesity and metabolic risk. Nutrients 11:2624. https://doi.org/10.3390/nu11112624

Morgan AZ, Keiley MK, Ryan AE et al (2012) Eating regulation styles, appearance schemas, and body satisfaction predict changes in body fat for emerging adults. J Youth Adolesc 41:1127–1141. https://doi.org/10.1007/s10964-012-9757-8

Fong M, Caterson ID, Madigan CD (2017) Are large dinners associated with excess weight, and does eating a smaller dinner achieve greater weight loss? A systematic review and meta-analysis. Br J Nutr 118:616–628. https://doi.org/10.1017/S0007114517002550

Raynor HA, Li F, Cardoso C (2018) Daily pattern of energy distribution and weight loss. Physiol Behav 192:167–172. https://doi.org/10.1016/j.physbeh.2018.02.036

Zavada A, Gordijn MCM, Beersma DGM et al (2005) Comparison of the Munich chronotype questionnaire with the Horne-Östberg’s morningness–eveningness score. Chronobiol Int 22:267–278

Fleig D, Randler C (2009) Association between chronotype and diet in adolescents based on food logs. Eat Behav 10:115–118. https://doi.org/10.1016/j.eatbeh.2009.03.002

Schienkiewitz A, Brettschneider A-K, Damerow S et al (2018) Übergewicht und Adipositas im Kindes- und Jugendalter in Deutschland—Querschnittergebnisse aus KiGGS Welle 2 und Trends. J Health Monit. https://doi.org/10.17886/RKI-GBE-2018-005.2

Acknowledgements

The participation of all children and their families in the DONALD Study is gratefully acknowledged. The authors would also like to thank the DONALD staff for carrying out the anthropometric measurements, for administering the questionnaires, and for collecting and coding the dietary records.

Funding

Open Access funding enabled and organized by Projekt DEAL. The DONALD Study is financially supported by the Ministry of Science and Research of North Rhine-Westphalia, Germany. This analysis was funded by the Deutsche Forschungsgemeinschaft (DFG, German Research Foundation), grant AL 1794/1-2.

Contributions

The authors alone are responsible for the content and writing of the paper. SS, AB, UA, and NJ conceived and designed the work that led to the submission. All authors played an important role in interpreting the results. NJ drafted the manuscript and all coauthors revised and approved the current manuscript. The corresponding author confirms that she has had full access to the data in the study and final responsibility for the decision to submit for publication. The analyzed datasets and SAS Syntax used for the current study are available from the corresponding author on reasonable request.

Ethics declarations

Conflict of interest

The authors declare that they have no conflicts of interest.

Ethical standards

The DONALD Study has been approved by the Ethics Committee of the University of Bonn, Germany (ethics numbers: 098/06 and 185/20), and has therefore been performed in accordance with the ethical standards laid down in the 1964 Declaration of Helsinki and its later amendments. Participants themselves or their parents gave written informed consent prior to their inclusion in the study. Details that might disclose the identity of the subjects under study are omitted.

Appendix

Directed acyclic graph (footnote a) for the association between the DCAS and body composition in adolescents. The minimally sufficient adjustment set comprised the following covariates: age at take-off and age at peak height velocity (puberty markers), age, energy intake (main meal), count of questionnaires, puberty status, season, sex, smoking in the household, social jetlag, and underreporting of dietary intake.

Footnote a: Schipf S, Knüppel S, Hardt J, Stang A. Directed Acyclic Graphs (DAGs) - Die Anwendung kausaler Graphen in der Epidemiologie [Directed acyclic graphs (DAGs) - the application of causal diagrams in epidemiology]. Gesundheitswesen. 2011 Dec; 73(12):888–92. German. https://doi.org/10.1055/s-0031-1291192. Epub 2011 Dec 22. PMID: 22193898

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Jankovic, N., Schmitting, S., Stutz, B. et al. Alignment between timing of ‘highest caloric intake’ and chronotype in relation to body composition during adolescence: the DONALD Study. Eur J Nutr 63, 253–265 (2024). https://doi.org/10.1007/s00394-023-03259-w

DOI: https://doi.org/10.1007/s00394-023-03259-w