Abstract

Extended exposure to virtual reality displays has been linked to the emergence of cybersickness, characterized by symptoms such as nausea, dizziness, fatigue, and disruptions in eye movements. The main objective of our study is to examine the effects of real-time fine-tuning of stereo parameters and blurriness in virtual reality on the discomfort level of users who are experiencing motion sickness triggered by the display. Our hypothesis proposes that by dynamically correcting the rendering settings, the symptoms of motion sickness can be relieved and the overall VR user experience can be improved. Our methodology commences with a prediction model for the comfort level of the viewer based on their gaze parameters, such as pupil diameter, blink count, gaze position, and fixation duration. We then propose a method to dynamically adapt the stereoscopic rendering parameters by considering the predicted comfort level of the viewer.

1 Introduction

Virtual reality (VR) environments experienced through head-mounted displays are becoming increasingly common in both everyday and professional settings. However, the rise of such technology is not without its drawbacks. Long-term use of VR headset displays can lead to various stereoscopic issues with potentially severe consequences. These problems can lead to a type of motion sickness referred to as cybersickness.

This condition, a result of exposure to virtual environments, poses significant health and safety problems that could hinder the progress of virtual reality technology. Previous research [1] has shown that cybersickness can cause users to experience both emotional and psychological adverse effects such as disorientation, nausea, headaches, dizziness, and sweating. These side effects can also potentially lead to a range of long-term health issues. As VR technology continues to evolve, researchers and developers must understand and mitigate the potential health risks associated with prolonged use. There is a need for a better understanding of the long-term effects of these risks, especially given the unpredictability of their impact on the human body.

The purpose of this research is to evaluate the effects of stereoscopic rendering parameters on the viewers’ cybersickness level during VR experience. In the first part of our work, we propose a model to predict the cybersickness level of the viewer based on gaze information obtained from an eye tracker integrated into the VR display, which includes pupil size, frequency of blinks, fixation location, fixation frequency, and gaze position. In the second part of our work, we use the proposed cybersickness prediction model to optimize the stereoscopic rendering parameters, namely the inter-axial separation of stereo cameras, as well as the blur level for depth-of-field rendering. Finally, we present an experimental evaluation to investigate the effects of stereo parameters on user experiences using our approach.

We have used the following three questions as a basis for our work:

- RQ1: How significantly can viewers’ level of discomfort be predicted based on their physiological reactions, particularly gaze movements, when engaging with virtual reality?

- RQ2: To what extent does real-time adjustment of VR stereo parameters based on user feedback lead to a reduction in cybersickness and an improvement in overall comfort in the VR experience?

- RQ3: To what degree does the level of immersion and interactivity within a virtual environment impact the comfort level of users in VR experiences?

2 Background

2.1 Stereopsis

Stereopsis, the phenomenon that occurs when both eyes work together to create a sense of depth, plays a crucial role in virtual reality technology. Stereopsis allows users to perceive objects with a heightened sense of depth, improving the overall quality of 3D visualizations.

However, it is important to note that there are also potential disadvantages associated with the use of stereopsis in VR. Some users may experience symptoms such as eye fatigue and dizziness as a result of prolonged exposure to the technology, especially when stereoscopic parameters are used incorrectly. VR developers must exercise caution in implementing stereopsis correctly and take into account the potential drawbacks to ensure a safe and comfortable user experience (Fig. 1).

2.2 Inter-axial separation

The two primary factors in a stereoscopic rendering setup are the inter-axial separation, \((I_c)\), between the virtual stereoscopic camera pair and their convergence distance, \((Z_c)\) [2] (Fig. 2). Each virtual camera captures the scene with slight variations from the other, enabling the rendering of a distinct view for each eye. These variations are caused by the inter-camera separation, facilitating the fusion of retinal images to create binocular depth perception. The inter-axial distance \((I_c)\) between the centers of the virtual cameras in the virtual environment plays a crucial role in delivering distinct visuals to each eye for an immersive VR experience. Inter-axial separation directly influences the image’s perceived depth by altering the disparity, showing a positive correlation with both disparity and perceived depth. On the other hand, the convergence distance exhibits a negative correlation with disparity. An increase in the convergence distance leads to a forward shift in perceived depth and a decrease in screen parallax, while a decrease has the opposite effect.

The perceived depth of a scene point is determined by the distance between its corresponding points in the two rendered views, known as parallax or on-screen disparity, shown as d in Fig. 2. The two cameras converge at a point where there is zero parallax in the rendered views. The distance to this convergence point, where the viewing directions of the cameras intersect, is referred to as the convergence distance \((Z_c)\) or zero parallax distance, measured from the center of the axis between the camera pair. Objects situated beyond this distance demonstrate positive parallax and appear behind the display, whereas objects closer than this distance necessitate eye convergence and are perceived as being in front of the display, indicating negative parallax.

Thus, the resulting image disparity d of an object between the left and right images depends on the object’s scene distance Z, inter-axial separation \(I_c\), convergence distance \(Z_c\) and focal length f of the cameras.
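A commonly used form of this relation for a parallel stereo rig with shifted image planes is sketched here. It is given as an assumption that is consistent with the dependencies described in this section, and it may differ from the authors' exact expression and sign convention:

\[
d = f \, I_c \left( \frac{1}{Z_c} - \frac{1}{Z} \right)
\]

Under this form, \(d = 0\) for \(Z = Z_c\), \(d > 0\) (positive parallax) for \(Z > Z_c\), and \(d < 0\) (negative parallax) for \(Z < Z_c\). The disparity grows linearly with \(I_c\) and shrinks as \(Z_c\) increases.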

During stereoscopic viewing, the viewer’s eyes naturally adjust their focus to align with the display (accommodation) while simultaneously rotating toward the point they are looking at (vergence). In everyday natural viewing, accommodation and vergence are cross-coupled, meaning that the eyes both converge and accommodate at the same position. However, in stereoscopic display systems, this coupling between accommodation and vergence is disrupted, leading to Vergence-Accommodation Conflict (VAC). In VAC, the viewer’s eyes converge or diverge based on the depth of objects in the scene while they maintain focus on the display. To address this issue, it is necessary to keep the focus object within the stereoscopic comfort zone as much as possible. This zone extends to a distance of 1/3 diopter from each side (negative disparity and positive disparity) to the accommodation distance. To ensure that prominent Virtual Environment (VE) contents remain within this stereoscopic comfort zone, it is common practice to limit the perceived depth [3], particularly for environments that cause a high level of discomfort.
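As a worked example of the comfort zone described above, the following sketch converts the 1/3 diopter tolerance into near and far distance limits. The accommodation distance of 1.5 m and the function name `comfort_zone` are illustrative assumptions, since the focal distance of the display is not stated here.

```python
import math


def comfort_zone(accommodation_distance_m, tolerance_diopters=1.0 / 3.0):
    """Return (near, far) limits in metres of the stereoscopic comfort zone.

    The zone spans +/- tolerance_diopters around the accommodation distance,
    where optical power in diopters is the reciprocal of distance in metres.
    """
    focal_power = 1.0 / accommodation_distance_m
    near = 1.0 / (focal_power + tolerance_diopters)
    far_power = focal_power - tolerance_diopters
    # A non-positive far power means the zone extends to infinity.
    far = 1.0 / far_power if far_power > 0 else math.inf
    return near, far


# Assumed accommodation distance of 1.5 m (illustrative only).
near, far = comfort_zone(1.5)
print(f"comfort zone: {near:.2f} m to {far:.2f} m")
```

Because the tolerance is expressed in diopters, the zone is asymmetric in metres: it reaches much further behind the accommodation distance than in front of it.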

3 Previous work

Cybersickness has been studied using various forms of biometric feedback, such as electroencephalograms (EEG), electrocardiograms (ECG), and skin conductance, to examine the correlation between physiological signals and the intensity of discomfort experienced [4, 5]. Naqvi et al. [6] observed a significant increase in subjective discomfort scores along with a notable decrease in the low-frequency/high-frequency ratio within ECG signals in users exposed to 3D stimuli compared to 2D. Dennison et al. [7] used a variety of physiological responses including ECG, heart rate, and electro-oculogram (EOG) and demonstrated a significant association with SSQ scores.

Eye tracking has also been used to evaluate cybersickness, though to a lesser extent compared to other biometric measures such as ECG or EEG [8, 9]. However, the increasing availability of VR HMDs with integrated eye-tracking technology, coupled with its non-intrusive nature, makes eye tracking a particularly valuable tool for detecting cybersickness. Lopes et al. have shown that pupil position and blink rate are indicative of cybersickness [10]. Relying on these indicators could therefore prove effective for identifying cybersickness. Similarly, a study by Cebeci et al. [1] investigated the physiological responses and emotional effects induced by various VEs. Their findings indicate that unfavorable VR environments result in heightened pupil dilation and heart rate, frequently accompanied by symptoms associated with cybersickness. This underscores the importance of considering physiological reactions in VR experience design.

A proposed method by Islam et al. [11] introduced a deep fusion approach utilizing eye-tracking and head-tracking data to forecast cybersickness severity, achieving an 87% accuracy. This study serves as a source of inspiration for our research, where we seek to improve user comfort by dynamically adjusting VR stereo parameters based on user feedback and physiological responses. Nam et al. [12] have recently conducted a study focusing on eye movement patterns in relation to cybersickness, demonstrating that different VR experience modes can result in varying degrees of discomfort. The findings highlight the link between eye movements and motion sickness, aligning with our efforts to leverage physiological data for optimizing user comfort in VR environments. Our research builds upon these foundations, aiming to contribute by dynamically adapting VR stereo parameters to alleviate motion sickness and enhance the overall user experience.

Numerous factors hold promise for mitigating discomfort within VEs. Concerning VR hardware, attributes such as high resolution, low latency, and high refresh rate are recognized for their capacity to alleviate cybersickness. Similarly, within VR content design, employing slow movements, minimizing motion sickness triggers, and facilitating user control are identified strategies for diminishing cybersickness. Moreover, individual characteristics, such as youthful age, non-smoking status and prior VR exposure have been empirically linked to reduced cybersickness susceptibility [13].

Stereo camera control methods have become an important tool to reduce visual discomfort. In particular, methods used to determine the user’s focal point by tracking eye movements and dynamically adjusting the stereoscopic image with this information can improve the users’ experience. Recent studies show that eye-tracking-based methods can provide a more natural experience by directing the user’s attention to the point of focus [14]. The use of eye tracking can enhance the user experience by offering insights into the cognitive load [15]. Studies indicate that metrics such as pupil position, eye movements, and eye-tracking data correlate with cognitive load. In a more recent study, Cebeci et al. compared two visual-attention-based methods, eye-tracking and salience-guided methods, for stereoscopic camera control in interactive VR [14], where the eye-tracking-based method automatically adjusts the rendering based on the user’s attention, providing a more natural experience.

Depth of Field (DoF) rendering serves as an effective method for mitigating visual discomfort in 3D stereoscopic images [16]. Gaze-contingent DoF helps to enhance the perception of depth in the image, resulting in a more natural and immersive visual experience [17]. Empirical studies have shown that DoF blur effects facilitate the fusion of stereoscopic content, leading to increased viewing comfort. Furthermore, DoF blurring effectively conceals the disparity between accommodation and vergence cues. The utilization of DoF blur effects assists in correcting focal cues, reducing visual fatigue and enhancing the overall quality of the 3D viewing experience. On the other hand, improper utilization of DoF parameters, such as inaccurately adjusting the blur level, can result in a poor user experience. Precise use of DoF parameters during the viewing of stereoscopic content has the potential to alleviate visual fatigue and discomfort by directly addressing the accommodation-vergence conflict.

4 High-level methodology

The hypotheses proposed in our study are as follows:

- H1: The cybersickness level of the viewer can be predicted experimentally by measured gaze parameters (pupil size, blink count, saccade/fixation count, view direction) using an eye tracker.

- H2: Adjusting VR stereoscopic rendering parameters in real-time based on user feedback enhances overall comfort and minimizes the cybersickness of viewers.

Figure 1 gives the overall methodology of our approach. In the first part of our approach, we develop a model to predict the cybersickness level of the users from their gaze parameters (H1). These gaze parameters, including gaze point, fixation duration and frequency, pupil diameter, and pupil dilation/contraction count, as well as the viewers’ subjective rating of cybersickness, are collected in an experiment utilizing eye trackers. The collected data is used to train a regression model to predict the reported cybersickness rating from the gaze parameters. Then, in the second part, we propose a method that adjusts the rendering parameters to avoid vergence-accommodation conflicts as much as possible, taking into consideration the viewer’s stereoscopic limits and current comfort level, which is predicted by the model (H2). Sections 5 and 6 detail our approach.

5 Cybersickness prediction model

For cybersickness prediction, we first implement a system to collect gaze data from the participants using a VR head-mounted display (HMD) equipped with eye trackers. We then extract a set of gaze-related features from the collected data to train a Multilayer Perceptron (MLP) model that predicts the viewer’s comfort level on a scale from 1 to 7, where 1 indicates low comfort (severe cybersickness) and 7 indicates high comfort.

5.1 Gaze parameters

Our cybersickness model takes the following gaze-related parameters as input:

- Blink count and frequency per unit time serve as an indicator of cognitive workload. A change in blink count may indicate heightened cognitive demands experienced by the user, potentially increasing the risk of cybersickness [18].

- Gaze position (2D coordinate) indicates the direction of the user’s gaze within the left or right eye image while experiencing the VE. This data can be utilized to assess the viewer’s attention level and engagement with the VE. Frequent movements in gaze position close to the center of the view image may indicate difficulty in maintaining focus within the VE, potentially increasing the susceptibility to cybersickness [19].

- Fixation denotes the act of sustaining gaze on a specific point within the VR environment. It is well-known that fixation and saccades are two distinct eye movements in the field of vision research. Saccades refer to rapid eye movements, whereas fixation denotes intervals during which the eyes remain relatively steady and concentrate on a particular point or area within the visual field. Analyzing the duration and frequency of fixations enables the assessment of the user’s visual workload and cognitive processing demands. If the user displays frequent and prolonged fixations, it may indicate challenges in processing visual information within the VE, potentially increasing the risk of cybersickness [20].

- Pupil diameter reflects the amount of light entering the pupil. It is influenced by a variety of factors, including the brightness of the VE, the viewer’s attention level, and cognitive workload. Changes in pupil diameter in a given VE with a stable brightness level can be used to identify early signs of cybersickness. For example, changes in pupil size in response to different visual tasks may provide insights into visual processing and potential fatigue, which is a common precursor to cybersickness [20].

5.2 Multilayer perceptron (MLP)

Our MLP model is designed to accept gaze parameters, including blink count, fixation count, pupil diameter (as scalar values), and gaze position (as a 2D vector), and produce a scalar comfort level ranging from 1 to 7. To address the complexity of cybersickness, we opted for the Multilayer Perceptron (MLP) among various artificial neural network models. We argue that MLPs offer a potentially effective tool for cybersickness prediction because they can perform well even with limited and variable-rich data sets. The MLP architecture is well suited to this set of features because it can handle diverse types of parameters and nonlinear relationships among multidimensional parameters. MLPs also offer valuable capabilities for dimensionality reduction and noise mitigation, which is particularly advantageous when processing raw eye-tracking data. This functionality enhances processing efficiency by reducing data size while retaining pertinent information and guarding against overfitting.
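To make the input and output shapes concrete, the following is a minimal forward-pass sketch in plain Python. The layer sizes (5 → 16 → 8 → 1), the ReLU activations, the clamping of the output to [1, 7], and all function names are assumptions for illustration; the actual architecture and training setup are not specified here, and the weights below are random rather than trained.

```python
import math
import random


def init_layer(n_in, n_out, rng):
    """Create random weights and zero biases for one fully connected layer."""
    scale = 1.0 / math.sqrt(n_in)
    weights = [[rng.uniform(-scale, scale) for _ in range(n_in)]
               for _ in range(n_out)]
    return weights, [0.0] * n_out


def dense(x, layer):
    """Apply one fully connected layer: y = W x + b."""
    weights, biases = layer
    return [sum(w * v for w, v in zip(row, x)) + b
            for row, b in zip(weights, biases)]


def predict_comfort(features, layers):
    """Forward pass: ReLU hidden layers, linear output clamped to [1, 7]."""
    h = features
    for layer in layers[:-1]:
        h = [max(0.0, v) for v in dense(h, layer)]
    raw = dense(h, layers[-1])[0]
    return min(7.0, max(1.0, raw))


rng = random.Random(0)
# Hypothetical layer sizes: 5 inputs -> 16 -> 8 -> 1 output.
layers = [init_layer(5, 16, rng), init_layer(16, 8, rng), init_layer(8, 1, rng)]

# Feature order (assumed): blink count, fixation count, pupil diameter,
# gaze x, gaze y. The values are made up for illustration.
sample = [3.0, 12.0, 4.2, 0.48, 0.55]
comfort = predict_comfort(sample, layers)
print(f"predicted comfort level (untrained weights): {comfort:.2f}")
```

With untrained weights the printed value carries no meaning; the sketch only shows how four scalar-valued features and one 2D gaze vector map to a single comfort estimate.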

5.3 Setup

Our study utilizes a high-end HTC Vive Pro virtual reality setup paired with Tobii Pro eye trackers. This system provides essential data regarding users’ gaze in the virtual space and offers a smooth tracking experience with an output frequency of 120 Hz. Furthermore, our eye trackers demonstrate a notable accuracy of 0.5 degrees, enabling detailed analysis of gaze behavior. Our setup is powered by a high-performance desktop computer, with an Intel Core i7-10700 CPU. The computer is equipped with 16 GB of RAM and an MSI GeForce RTX 2060 Ti graphics card, ensuring optimal performance for processing complex data and rendering high-resolution visuals in the VR environment. The Tobii Pro eye trackers provide real-time streams of participant gaze behavior, capturing gaze parameters such as blink count, gaze position, fixation count, and pupil diameter. This comprehensive data set was instrumental in uncovering patterns of visual attention, cognitive engagement, and perceptual processing in the virtual environment. Section 5.1 presents the collected gaze parameters.

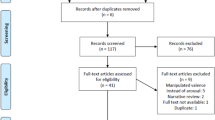

5.4 Participants

The study was completed with 14 participants (8 females, 6 males) of ages \(23.71 \pm 2.27\). The participants were asked to grade their level of VR experience on a scale from 0 to 4. The participants’ overall level of VR experience was mostly low with an average of 0.76 years and 1–3 short-term experiences in the past year. Before starting the experiment, a demographic survey was conducted to collect necessary information from the participants. This survey aimed to gather data on age, gender, and familiarity with the content. It provided important background information that could potentially influence the participants’ susceptibility to cybersickness. All subjects voluntarily participated in the study by providing signed consent prior to testing and received no compensation. The consent form stated that the data collected would be used anonymously (Fig. 3).

Comparison of the verbally observed and predicted Comfort Level using MLP for two random test participants, for a random sequence of frames. The top row shows an experienced participant (VR experience = 8 years), and the bottom row is for an inexperienced participant (no prior VR experience). The first column is for the street scene, the second for roller coaster, and the third for the classroom scene

5.5 Pretests

The experimental procedure started with two pretests: a Monoyer chart test to assess general vision acuity and a random dot stereogram test to evaluate stereo vision acuity. Participants who scored 9 or 10 out of 10 on the Monoyer test and passed the random dot stereogram test were considered eligible to proceed with the experiment. Prior to the evaluation, the interpupillary distance of each participant was measured using a digital pupilometer, and the corresponding setting of the VR headset was adjusted to the measured value. The eye tracker was also re-calibrated per subject. Participants were subsequently briefed on the study’s context and the overall procedure of the upcoming experiment.

5.6 Virtual environments

The following virtual environments (VEs) were employed for both stages of the experiment.

Street: The VE was designed as a city street scene featuring heightened realism through the addition of sound effects and greater depth. The task involved collecting randomly placed white spheres, which had to be collected within a specific time frame of 300 s before the scene automatically closed. This VE was created to enhance the immersive experience, allowing users to engage with the environment through more immersive interactions using the control devices. The participants employed VR controllers to navigate and gather virtual spheres, and they remained in a standing position in an empty laboratory environment throughout the experiment.

Classroom: The VE was designed to imitate a classroom, complete with desks, a whiteboard, appropriate lighting, instructors, and participants. The experiment entailed participants seated in a swivel chair, and they were able to move freely in the VE using the HTC Vive controllers and freely move their viewing direction with their heads. This scene runs for a total of 150 s.

Roller coaster: The VE depicted a roller coaster, with participants passively observing their surroundings without any interactive engagement. During the experiment, the participants remained seated in a 360-degree rotating chair. The VE followed a predetermined route of the roller coaster in two passes, a slow and a fast ride, taking a total of 150 s. The participants could rotate their heads to change their view direction (Fig. 4).

5.7 Procedure

During the experiment, each participant was exposed to the three VEs. Each scene was treated as an individual phase. The default rendering parameters of the HMD were used for this experiment. A Latin squares counterbalanced design was implemented to control for potential order effects in the study. This design involved three experimental conditions (Street, Classroom, Roller Coaster). As a result, each participant experienced each VE once, with the order of VEs varied across participants to ensure a balanced presentation.
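The counterbalancing described above can be sketched with a cyclic Latin square. The assignment rule (participant index modulo the number of rows) and the function names are assumptions for illustration, not the authors' documented procedure.

```python
def latin_square(conditions):
    """Cyclic Latin square: row i is the condition list rotated left by i."""
    n = len(conditions)
    return [[conditions[(i + j) % n] for j in range(n)] for i in range(n)]


def assign_orders(num_participants, conditions):
    """Assign each participant one row of the square, cycling through rows."""
    square = latin_square(conditions)
    return [square[p % len(square)] for p in range(num_participants)]


orders = assign_orders(14, ["Street", "Classroom", "Roller coaster"])
for participant, order in enumerate(orders, start=1):
    print(participant, " -> ".join(order))
```

Each condition appears exactly once in every row and every position of the square. With 14 participants and three rows, the rows cannot be used equally often (5, 5, and 4 participants under this assignment rule).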

In the experiment, a supervisor collected verbal cybersickness ratings from the subjects at five-second intervals using a 7-point Likert scale. This real-time assessment enabled the researchers to continuously monitor the participants’ comfort levels and promptly identify any signs of discomfort or cybersickness symptoms. This method differs from prior approaches, such as the SSQ questionnaire, which lacks real-time detail because it evaluates the entire sequence after the experiment. Verbal feedback was chosen over interactive input methods, such as the use of controllers or a keyboard, to minimize interference with the participants’ focus while interacting with the scene.

5.8 Results

From the available 14-participant (6 female, 8 male) \(\times \) 3-scene dataset, we randomly selected 741 frames for training the neural network, and 318 frames as the test data set.

The MLP model was evaluated using the root mean square error (RMSE), mean absolute error (MAE), and correlation coefficient (R) global statistics (Table 1). Furthermore, Fig. 5 shows the histogram of prediction errors (i.e., verbally reported cybersickness level minus predicted value) across all participants and scenes, for a random selection of test frame sequences. The histogram revealed a bell-shaped curve, indicating that the majority of prediction errors clustered around zero, suggesting a relatively accurate model overall. Together with this observation, the MAE value of 1.13 indicates that, on average, the model’s predictions deviate from the verbally reported values by 1.13 units.
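For reference, the three global statistics can be computed as in the sketch below. The `observed` and `predicted` lists are made-up illustrative values and are not data from the study; the function name `regression_metrics` is likewise an assumption.

```python
import math


def regression_metrics(observed, predicted):
    """Return RMSE, MAE, and Pearson correlation R for paired values."""
    n = len(observed)
    errors = [o - p for o, p in zip(observed, predicted)]
    mae = sum(abs(e) for e in errors) / n
    rmse = math.sqrt(sum(e * e for e in errors) / n)
    mean_o = sum(observed) / n
    mean_p = sum(predicted) / n
    cov = sum((o - mean_o) * (p - mean_p) for o, p in zip(observed, predicted))
    var_o = sum((o - mean_o) ** 2 for o in observed)
    var_p = sum((p - mean_p) ** 2 for p in predicted)
    r = cov / math.sqrt(var_o * var_p)
    return {"rmse": rmse, "mae": mae, "r": r}


# Illustrative values only (7-point comfort ratings vs. model outputs).
observed = [7, 6, 5, 4, 3]
predicted = [6.5, 6.0, 4.0, 4.5, 3.5]
metrics = regression_metrics(observed, predicted)
print(metrics)
```

RMSE penalizes large individual errors more strongly than MAE, so a gap between the two suggests that a few frames are predicted poorly, whereas R only measures how well the predictions track the trend of the reported ratings.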

Figure 4 shows the comparison of the MLP prediction with the observations, for two random participants and a random sequence of frames. The top row shows an experienced participant, and the bottom row is for an inexperienced participant. Note that the reported comfort levels were collected every 5 s. The figure shows that the MLP model provides a satisfactory prediction of cybersickness levels, in different contexts (level of viewer’s experience, interactive vs. non-interactive scenes, standing vs. sitting use, etc.).

Figure 6 presents the results of our initial ablation study to evaluate the contribution of several aspects of our MLP model. To this end, we designed a series of experiments where we selectively removed certain gaze parameters of the model, and changed the hidden layers. The experimental results show that the use of all obtained gaze parameters (pupil size, fixation count, blink count, and gaze positions) yields a smaller error, compared to using a subset of these features. A notable result is that the blink count feature contributes less than the other parameters, and the average \(x\)-\(y\) coordinates of the gaze point and the fixation frequency contribute more than other factors.

The regression results and prediction errors revealed promising performance, as shown by the overall MAE value of 1.13, validating hypothesis H1: The proposed model captured complex relationships between multiple input variables and the target cybersickness output. Furthermore, the model’s adaptability shows promise in its ability to handle diverse VEs and interaction scenarios, as shown with different test setups.

6 Comfort-based stereoscopic rendering

In the second part of our study, we propose a method to optimize the comfort level of stereoscopic rendering, by adjusting stereoscopic rendering settings based on the predicted comfort level of the viewer. Our method is based on a feedback-loop approach, where the current comfort level of the viewer is predicted using our proposed cybersickness metric based on the gaze parameters captured in real-time (Fig. 1). Our method then continuously updates the two major stereoscopic rendering parameters, the inter-axial separation of the stereo camera pair (\(I_{c}\)), and the strength of the depth-of-field rendering, based on the predicted comfort level.

Inter-axial distance \(I_{c}\) as a function of convergence distance \(Z_{c}\), based on results obtained by Kim et al. [21]

6.1 Cybersickness prediction

During VR interaction, the gaze parameters are obtained in real-time and used as input features for the MLP regression module to predict the viewer’s cybersickness level. Our regressor runs in the background, in parallel with the VE interaction and rendering. To avoid excessive and abrupt changes in the user experience, our method computes the predicted cybersickness value every 5 s. We use the PyTorch library and multithreading to implement the regression subsystem.

6.2 Updating stereo camera parameters

To minimize discomfort, it is crucial to first position the convergence distance \(Z_{c_i}\) as closely as possible to the viewer’s focal point [22]. To accomplish this objective, we employ the viewer’s 2D gaze location in real-time. The 3D gaze position is then determined by identifying the point along the axis from the center of the stereo camera pair to the 2D gaze position data provided by the eye-tracker. The distance between this gaze position and the stereo camera pair serves as the camera convergence distance \(Z_{c_i}\).

Based on the computed camera convergence distance, we set the inter-axial separation of the stereo camera pair (\(I_{c}\)). For this purpose, we adopt Kim et al.’s experimentally obtained inter-axial separation parameters as a function of the convergence distance [21]. We consider the experimentally obtained optimum \(I_{c}\) values in Kim et al.’s work [21] to correspond to the optimally comfortable condition, i.e., \(CL=7\), and scale these values linearly based on the currently obtained comfort level of the viewer (Fig. 7).

6.3 Depth-of-field rendering

Foveated rendering has been shown to potentially mitigate cybersickness [23, 24]. While various foveated rendering algorithms are available, we have opted to utilize a conventional depth-of-field rendering implementation because of its accessibility and its ability to be easily parameterized. For depth-of-field blur implementation, we used the Unity engine Post Processing Stack V2.

The focus distance of DoF rendering is dynamically matched to the convergence distance \(Z_c\) obtained in the previous step. The focal length and aperture values for the average comfort level of 4 were set to 75 mm and f/5.6, respectively, in our tests. The blur effect is controlled by the aperture value, and its strength increases with decreasing comfort levels. The aperture is interpolated linearly using the comfort level CL, where the smallest aperture (f/9.8) and the largest aperture (f/1.4) correspond to the highest and lowest comfort levels, respectively.
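The linear aperture mapping can be sketched as follows. The sketch assumes that f/1.4 is used at CL = 1 and f/9.8 at CL = 7, which reproduces the stated f/5.6 at the average comfort level of 4; the function name and the clamping of out-of-range inputs are illustrative assumptions.

```python
def aperture_for_comfort(comfort_level, f_min=1.4, f_max=9.8,
                         cl_min=1.0, cl_max=7.0):
    """Linearly map a comfort level to an f-number.

    Low comfort gives a small f-number (wide aperture, stronger blur);
    high comfort gives a large f-number (narrow aperture, weaker blur).
    """
    cl = min(cl_max, max(cl_min, comfort_level))
    t = (cl - cl_min) / (cl_max - cl_min)
    return f_min + t * (f_max - f_min)


for cl in (1, 4, 7):
    print(f"CL={cl}: f/{aperture_for_comfort(cl):.1f}")
```

Under these assumptions each comfort step changes the f-number by 1.4, so the midpoint of the scale lands exactly on the f/5.6 setting reported for CL = 4.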

Note that different users may have varying sensitivities to the comfortable disparity ranges and depth-of-field effects. Providing options for users to customize the minimum and maximum disparity values and the depth-of-field settings is not considered in this study, and is left as a future work.

6.4 Temporal smoothing

Updating the stereo camera parameters from frame to frame can potentially cause excessive fluctuations, resulting in abrupt and undesirable effects where viewers must quickly adjust their focus between nearby and distant regions. These sudden changes can significantly degrade viewing comfort and the overall VR experience. Therefore, the computed parameters \(x_{c^*}\) (i.e., \(I_c\) and the f-stop) for a frame generated at n frames per second are adjusted based on \(x_p\), the value set for the previous frame, using the pair \(\{x_a,\,x_b\}\), which is \(\{-0.3,\,0.3\}\) for DoF and \(\{-0.02,\,0.02\}\) for \(I_c\).
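One plausible reading of this adjustment is a rate-limited update, sketched below. It assumes that \(x_a\) and \(x_b\) bound the change per second, so that the change per frame is limited to \([x_a/n,\,x_b/n]\); this interpretation, the function name, the frame rate of 90, and the example values are assumptions rather than the authors' stated formula.

```python
def smooth_parameter(target, previous, x_a, x_b, fps):
    """Move from `previous` toward `target`, limiting the change per frame.

    The per-frame change is clamped to [x_a / fps, x_b / fps]; if the target
    is within that range, it is reached in this frame.
    """
    delta = target - previous
    lower, upper = x_a / fps, x_b / fps
    return previous + min(upper, max(lower, delta))


# Illustrative values: I_c moving from 0.060 toward 0.065 at 90 frames/s,
# using the stated pair {-0.02, 0.02} for I_c.
ic = 0.060
history = []
for _ in range(90):
    ic = smooth_parameter(0.065, ic, -0.02, 0.02, 90)
    history.append(ic)
print(f"I_c after 1 s: {ic:.4f}")
```

In this reading, a large jump in the computed parameter is spread over several frames, while small corrections are applied immediately.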

6.5 Stereoscopic rendering

As the final step, the temporally smoothed parameters \(I_c\) and \(Z_c\) are used to update the stereoscopic view of the head-mounted display (HMD). The projection matrices of the stereo camera pair are adjusted using the asymmetric projection matrix adjustment method for stereoscopic 3D viewing [25]. Using this method instead of the default HTC Vive HMD projection matrix setup allowed us to dynamically change the view projection based on the computed parameters.
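The following sketch shows how asymmetric (off-axis) frustum bounds can be derived from \(I_c\) and \(Z_c\) in the standard off-axis formulation. The field of view, aspect ratio, near-plane distance, and function names are illustrative assumptions and do not reflect the HTC Vive's actual projection parameters or the exact method of [25].

```python
import math


def stereo_frustums(interaxial, convergence, fov_y_deg, aspect, near):
    """Return (left, right, bottom, top) near-plane bounds for each eye."""
    half_h = near * math.tan(math.radians(fov_y_deg) / 2.0)
    half_w = half_h * aspect
    # Horizontal frustum shift that places zero parallax at `convergence`.
    shift = 0.5 * interaxial * near / convergence
    left_eye = (-half_w + shift, half_w + shift, -half_h, half_h)
    right_eye = (-half_w - shift, half_w - shift, -half_h, half_h)
    return left_eye, right_eye


def ndc_x(point_x, point_z, camera_x, frustum, near):
    """Horizontal normalized device coordinate of a point for one eye."""
    left, right = frustum[0], frustum[1]
    x_near = (point_x - camera_x) * near / point_z
    return (2.0 * x_near - (right + left)) / (right - left)


# Illustrative values: 64 mm separation, 2 m convergence, 90 degree FOV.
i_c, z_c, near = 0.064, 2.0, 0.1
left_eye, right_eye = stereo_frustums(i_c, z_c, 90.0, 1.0, near)

# A point on the central axis at the convergence distance has zero parallax.
at_zc = (ndc_x(0.0, z_c, -i_c / 2, left_eye, near),
         ndc_x(0.0, z_c, +i_c / 2, right_eye, near))
# A point beyond the convergence distance has positive parallax.
beyond = (ndc_x(0.0, 4.0, -i_c / 2, left_eye, near),
          ndc_x(0.0, 4.0, +i_c / 2, right_eye, near))
print(at_zc, beyond)
```

Both cameras keep parallel viewing directions; only the frustum bounds are shifted, which avoids the vertical parallax that a toed-in camera pair would introduce.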

7 Evaluation

We employed the same virtual reality and desktop computer setup, and the participants also experienced the same three VEs as in the first step.

7.1 Participants

A sample of participants, different from the first phase, participated in the evaluation experiment. The study was completed with 18 participants (11 females, 7 males) of ages \(24.00 \pm 5.\) All subjects voluntarily participated in the study and received no compensation. The participants were asked to grade their level of VR experience on a scale from 0 to 4. The participants’ average level of VR experience was 1.27 years.

7.2 Procedure

For the comparative evaluation, each of the three VEs was presented in two different VR experience settings. The first baseline setting was the default stereo configuration of the HTC Vive VR setup, where subjects experienced each VE with the default stereo camera parameters, which are kept fixed throughout the experiments. In the second setting, the VE was experienced dynamically by adapting the stereo camera and DoF rendering parameters using the proposed method. The three VEs were experienced within comparable time frames as the first phase. Thus, subjects were administered a total of six sessions (three VEs with two VR experience settings). To prevent any order effects, a \(6 \times 6\) Latin square design was used, with the rendering method and the VE as the experimental condition.

Following each session, participants assessed the session using a questionnaire based on a methodology widely adopted in similar previous studies [26, 27]. In the questionnaire, participants rated the session in terms of image quality, perceived depth, visual comfort, and overall quality on a Likert scale ranging from one to seven. Ratings of one, four, and seven corresponded to "very bad", "neither good nor bad", and "very good", respectively. These evaluation criteria align with the common parameters employed for perceptual evaluations of stereoscopic content as outlined in the ITU-R recommendation [28]. For the image quality, the subjects were advised to consider ghosting (crosstalk) and blurriness in the focused area as major indicators of poor image quality. For the perceived depth, they were asked to consider how much the overall depth feeling distinguishes the experience from flat two-dimensional imagery and how noticeable the depth sensation is between the region of interest and its surroundings. To assess visual comfort, they were instructed to consider feelings of visual fatigue, eye strain, onset of headache, nausea, and dizziness. Furthermore, they were told that the overall quality rating expresses how much they like and enjoy the experience in general.

At the end of each scene, the participants were asked to fill out the Simulator Sickness Questionnaire (SSQ) [29], totaling six SSQ responses per participant. SSQ is widely utilized in cybersickness studies due to the similarity of symptoms to simulator sickness [30, 31]. Bruck and Watters [30] examined the usability of SSQ to assess cybersickness in virtual reality and found that the SSQ is a reliable and valid tool for this purpose.

Fig. 8 Violin plots illustrating the distribution of ratings provided by the study participants across four metrics: (a) image quality, (b) perceived depth, (c) visual comfort, and (d) overall quality. The averages for each rating are denoted by horizontal lines (CLS: Classroom scene, RCS: Roller-Coaster scene, STR: Street scene, CSAR: proposed method)

7.3 Results

Table 2 presents the mean and standard deviation of the ratings for image quality, depth perception, visual comfort, and overall quality across all experiments. The table also reports the results of the Wilcoxon Matched-Pairs Signed-Rank Test, which compares the two methods (independent variable) in terms of these four ratings (dependent variables). The analysis revealed a statistically significant difference (\(p < 0.05\)) in perceived image quality (Z = \(-\)2.71, p = 0.007), depth perception (Z = \(-\)1.96, p = 0.05), and overall quality assessment (Z = \(-\)2.68, p = 0.007) between the proposed method and the baseline method, and showed that the proposed method has a medium-level effect on these ratings. However, no statistically significant difference was observed in the visual comfort ratings (Z = \(-\)1.38, p = 0.168). The average visual comfort rating for the proposed method was higher than that of the baseline method, but the effect size was small. The lack of statistical significance may be attributed to the low level of HMD experience among many of the viewers. Further research involving participants with higher levels of experience could provide more conclusive findings.
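For readers who want to reproduce this kind of analysis, the sketch below computes the signed-rank Z statistic with the normal approximation, a two-sided p value, and the effect size \(r = |Z| / \sqrt{N}\). It is a minimal illustration, not the authors' analysis script. It assumes that zero differences are dropped, that ties receive average ranks, that no continuity correction is applied, and that \(N\) is the number of non-zero pairs (conventions for \(N\) vary). The ratings in the usage lines are made-up values.

```python
import math
from statistics import NormalDist

def wilcoxon_signed_rank(x, y):
    """Return (z, p, r) for paired samples x and y (normal approximation)."""
    diffs = [a - b for a, b in zip(x, y) if a != b]   # drop zero differences
    n = len(diffs)
    if n == 0:
        raise ValueError("all paired differences are zero")

    # Rank the absolute differences, giving tied values their average rank.
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    tie_term = 0.0
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        average_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = average_rank
        t = j - i + 1
        tie_term += t ** 3 - t
        i = j + 1

    w_plus = sum(rank for rank, d in zip(ranks, diffs) if d > 0)
    mean = n * (n + 1) / 4
    variance = n * (n + 1) * (2 * n + 1) / 24 - tie_term / 48
    z = (w_plus - mean) / math.sqrt(variance)
    p = 2 * (1 - NormalDist().cdf(abs(z)))
    r = abs(z) / math.sqrt(n)
    return z, p, r

# Made-up 7-point ratings for six sessions (proposed vs. baseline).
proposed = [5, 6, 4, 7, 5, 6]
baseline = [4, 4, 4, 5, 6, 3]
z, p, r = wilcoxon_signed_rank(proposed, baseline)
```

The sign of Z depends on which rank sum is used; here it is positive when the first sample tends to be larger, whereas some statistics packages report the negative value, as in the text above.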

Figure 8 illustrates the distribution of evaluation ratings for the three scenes, for both the baseline and the proposed method, along with their respective mean ratings. The data in the figure consistently show that the majority of ratings for the proposed method were higher than those for the default baseline setting across all scenes. Furthermore, the distribution of ratings appears to be skewed, most noticeably in the roller coaster scene. This result may be attributed to the fact that this scene was added to induce a higher level of cybersickness, which was experienced by a higher ratio of participants compared to the other two scenes. However, contrary to our expectations, the image quality and comfort levels for this scene did not exhibit a statistically significant change compared to the other two scenes.

A detailed factor analysis performed by Kennedy et al. [29] revealed that cybersickness symptoms can be placed into three general categories: Oculomotor (eyestrain, difficulty focusing, blurred vision, headache, difficulty concentrating, fatigue, sweating), Disorientation (dizziness, vertigo, fullness of the head), and Nausea (nausea, stomach awareness, increased salivation, burping). Table 3 shows the average SSQ (Simulator Sickness Questionnaire) scores for the three test environments. Note that the SSQ asks participants to score 16 symptoms on a four-point scale (0: none to 3: severe) [29]. Table 3 suggests that our method demonstrated an advantage in reducing the general severity of symptoms. Furthermore, the Wilcoxon Matched-Pairs Signed-Rank Test on specific symptoms revealed a statistically significant difference (\(p < 0.05\)) in the symptoms General Discomfort (Z = \(-\)2.76, p = 0.006, r = 0.41), Fatigue (Z = \(-\)2.56, p = 0.011, r = 0.38), and Difficulty Focusing (Z = \(-\)2.27, p = 0.023, r = 0.33), while the difference in Vertigo (Z = \(-\)1.90, p = 0.058, r = 0.28) fell just short of significance. The results showed that the proposed method has a medium-level effect on the three significantly affected symptoms.
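To make the scoring concrete, the sketch below turns one set of 16 item ratings into subscale and total scores. The paper does not spell out its scoring procedure, so the item-to-subscale key and the weights (9.54, 7.58, 13.92, and 3.74) are assumptions taken from the commonly used scoring attributed to Kennedy et al. [29]; in that key some items count toward two subscales, and its grouping may differ in detail from the category list given above. The example ratings are made up.

```python
SYMPTOMS = [
    "general discomfort", "fatigue", "headache", "eyestrain",
    "difficulty focusing", "increased salivation", "sweating", "nausea",
    "difficulty concentrating", "fullness of head", "blurred vision",
    "dizziness (eyes open)", "dizziness (eyes closed)", "vertigo",
    "stomach awareness", "burping",
]

# Assumed scoring key: indices into SYMPTOMS for each subscale.
NAUSEA = {0, 5, 6, 7, 8, 14, 15}
OCULOMOTOR = {0, 1, 2, 3, 4, 8, 10}
DISORIENTATION = {4, 7, 9, 10, 11, 12, 13}

def ssq_scores(ratings):
    """Score one questionnaire; `ratings` holds 16 integers in 0..3."""
    if len(ratings) != len(SYMPTOMS):
        raise ValueError("expected 16 item ratings")
    if any(r not in (0, 1, 2, 3) for r in ratings):
        raise ValueError("each rating must be 0, 1, 2, or 3")
    raw_n = sum(ratings[i] for i in NAUSEA)
    raw_o = sum(ratings[i] for i in OCULOMOTOR)
    raw_d = sum(ratings[i] for i in DISORIENTATION)
    return {
        "nausea": raw_n * 9.54,
        "oculomotor": raw_o * 7.58,
        "disorientation": raw_d * 13.92,
        "total": (raw_n + raw_o + raw_d) * 3.74,
    }

# Made-up example: slight fatigue and eyestrain, moderate nausea.
example = [0] * 16
example[SYMPTOMS.index("fatigue")] = 1
example[SYMPTOMS.index("eyestrain")] = 1
example[SYMPTOMS.index("nausea")] = 2
scores = ssq_scores(example)
```

Under this key, a participant rating every symptom as severe would reach the maximum total score of 235.62.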

8 Conclusion

The initial part of our study, focusing on the cybersickness prediction model, yielded promising results across various parameters, thus confirming the hypothesis H1. The proposed model, with a test MAE value of 1.13, successfully captured relationships among multiple gaze input variables and the target cybersickness prediction output. In the second part of our study, our proposed method outperformed the baseline setting regarding image quality, overall quality, and depth perception ratings across three VEs. Additionally, the proposed method demonstrated generally better visual comfort results than the baseline setting. Overall, the integration of the cybersickness-aware method enhanced overall favorability, as indicated by the overall quality rating, thereby validating the hypothesis H2.

Our work is subject to several limitations that need attention in future studies. Firstly, our exploration focused solely on the relatively short-term effects of dynamic stereo camera control with VR head-mounted displays (HMDs); the interaction took up to five minutes in the street scene. Subsequent research should comprehensively investigate the impact of such approaches on visual comfort and cybersickness during extended VR experiences. Secondly, although our study sample comprised individuals with varying levels of prior VR experience, the sample size was insufficient to categorize them into groups based on their VR experience level for separate analysis of their responses. Therefore, future studies should consider enlarging the sample size to enable between-subject evaluation of different experience levels and other VR-related aspects. Furthermore, longitudinal studies that track individuals’ experiences and symptoms over extended periods are needed to investigate the long-term effects of VR device usage experience on cybersickness.

Data availability statement

No datasets were generated or analyzed during the current study.

References

Cebeci, B., Celikcan, U., Capin, T.K.: A comprehensive study of the affective and physiological responses induced by dynamic virtual reality environments. Comput. Anim. Virtual Worlds 30(3–4), e1893 (2019)

Wann, J.P., Rushton, S., Mon-Williams, M.: Natural problems for stereoscopic depth perception in virtual environments. Vision. Res. 35(19), 2731–2736 (1995)

Hoffman, D.M., Girshick, A.R., Akeley, K., Banks, M.S.: Vergence-accommodation conflicts hinder visual performance and cause visual fatigue. J. Vision 8, 33 (2008)

Kim, Y.Y., Kim, E.N., Park, M.J., Park, K.S., Ko, H.D., Kim, H.T.: The application of biosignal feedback for reducing cybersickness from exposure to a virtual environment. Presence: Teleoper Virtual Environ 17(1), 1–16 (2008)

Ozkan, A., Uyan, U., Celikcan, U.: Effects of speed, complexity and stereoscopic vr cues on cybersickness examined via eeg and self-reported measures. Displays 78, 102415 (2023)

Naqvi, S.A.A., Badruddin, N., Malik, A.S., Hazabbah, W., Abdullah, B.: Does 3d produce more symptoms of visually induced motion sickness? In: 2013 35th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), pp. 6405–6408. IEEE (2013)

Dennison, M.S., Wisti, A.Z., D’Zmura, M.: Use of physiological signals to predict cybersickness. Displays 44, 42–52 (2016)

Ozkan, A., Celikcan, U.: The relationship between cybersickness and eye-activity in response to varying speed, scene complexity and stereoscopic vr parameters. Int. J. Hum Comput Stud. 176, 103039 (2023)

Wibirama, S., Nugroho, H.A., Hamamoto, K.: Depth gaze and ecg based frequency dynamics during motion sickness in stereoscopic 3d movie. Entertain. Comput. 26, 117–127 (2018)

Lopes, P., Tian, N., Boulic, R.: Eye thought you were sick! exploring eye behaviors for cybersickness detection in VR. In: Proceedings of the 13th ACM SIGGRAPH Conference on Motion, Interaction and Games, pp. 1–10 (2020)

Islam, R., Desai, K., Quarles, J.: Cybersickness prediction from integrated hmd’s sensors: a multimodal deep fusion approach using eye-tracking and head-tracking data. In: 2021 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), pp. 31–40 (2021)

Nam, Y., Hong, U., Chung, H., Noh, S.R.: Eye movement patterns reflecting cybersickness: evidence from different experience modes of a virtual reality game. Cyberpsychol. Behav. Soc. Netw. 25(2), 135–139 (2022)

Saredakis, D., Szpak, A., Birckhead, B., Keage, H.A.D., Rizzo, A., Loetscher, T.: Factors associated with virtual reality sickness in head-mounted displays: a systematic review and meta-analysis. Front. Hum. Neurosci. 14, 96 (2020)

Cebeci, B., Askin, M.B., Capin, T.K., Celikcan, U.: Gaze-directed and saliency-guided approaches of stereo camera control in interactive virtual reality. Comput. Graph. 118, 23–32 (2024)

Joseph, A.W., Murugesh, R.: Potential eye tracking metrics and indicators to measure cognitive load in human–computer interaction research. J. Sci. Res. 64(1), 168–175 (2020)

Carnegie, K., Rhee, T.: Reducing visual discomfort with hmds using dynamic depth of field. IEEE Comput. Graph. Appl. 35(5), 34–41 (2015)

Duchowski, A.T., House, D.H., Gestring, J., Wang, R.I., Krejtz, K., Krejtz, I., Mantiuk, R., Bazyluk, B.: Reducing visual discomfort of 3d stereoscopic displays with gaze-contingent depth-of-field. In: Proceedings of the ACM Symposium on Applied Perception, pp. 39–46 (2014)

Ledger, H.: The effect cognitive load has on eye blinking. Plymouth Stud. Sci. 6, 206–223 (2013)

Chang, E., Kim, H.-T., Yoo, B.: Predicting cybersickness based on user’s gaze behaviors in hmd-based virtual reality. J. Comput. Des. Eng. 8, 728–739 (2021)

Kourtesis, P., Papadopoulou, A., Roussos, P.: Examination of cybersickness in virtual reality: the role of individual differences, effects on cognitive functions and motor skills, and intensity differences during and after immersion. arXiv preprint arXiv:2310.17344 (2023)

Kim, H., Lee, G., Billinghurst, M.: Adaptive interpupillary distance adjustment for stereoscopic 3d visualization. In: Proceedings of the 14th Annual ACM SIGCHI_NZ Conference on Computer–human Interaction, pp. 1–4 (2013)

Hussain, R., Chessa, M., Solari, F.: Modelling foveated depth-of-field blur for improving depth perception in virtual reality. In: 2020 IEEE 4th International Conference on Image Processing, Applications and Systems (IPAS), pp. 71–76. IEEE (2020)

Liu, J., Mantel, C., Forchhammer, S.: Perception-driven hybrid foveated depth of field rendering for head-mounted displays. In: 2021 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), pp. 1–10. IEEE (2021)

McIntosh, L., Riecke, B.E., DiPaola, S.: Efficiently simulating the bokeh of polygonal apertures in a post-process depth of field shader. Comput. Graph. Forum 31(6), 1810–1822 (2012)

Avan, E., Capin, T.K., Gurcay, H., Celikcan, U.: Enhancing vr experience with rbf interpolation based dynamic tuning of stereoscopic rendering. Comput. Graph. 102, 390–401 (2022)

Conti, J., Ozell, B., Paquette, E., Renaud, P.: Adjusting stereoscopic parameters by evaluating the point of regard in a virtual environment. Comput. Graph. 69, 24–35 (2017)

Martin-Gomez, A., Weiss, J., Keller, A., Eck, U., Roth, D., Navab, N.: The impact of focus and context visualization techniques on depth perception in optical see-through head-mounted displays. IEEE Trans. Visual Comput. Graph. 28(12), 4156–4171 (2021)

International Telecommunication Union. Subjective methods for the assessment of stereoscopic 3dtv systems. Technical Report Recommendation BT.2021, ITU-R (2012)

Kennedy, R.S., Lane, N.E., Berbaum, K.S., Lilienthal, M.G.: Simulator sickness questionnaire: An enhanced method for quantifying simulator sickness. Int. J. Aviat. Psychol. 3(3), 203–220 (1993)

Bruck, S., Watters, P.A.: Estimating cybersickness of simulated motion using the simulator sickness questionnaire (ssq): a controlled study. In: 2009 Sixth International Conference on Computer Graphics, Imaging and Visualization, pp. 486–488. IEEE (2009)

Hirzle, T., Cordts, M., Rukzio, E., Gugenheimer, J., Bulling, A.: A critical assessment of the use of ssq as a measure of general discomfort in vr head-mounted displays. In: Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems, pp. 1–14 (2021)

Funding

Open access funding provided by the Scientific and Technological Research Council of Türkiye (TÜBİTAK).

Author information

Contributions

B.T., U.C., T.C. wrote the main manuscript text. B.T. prepared the figures and the supplementary video. All authors reviewed the manuscript.

Ethics declarations

Conflict of interest

The authors declare no conflict of interest.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Terzioğlu, B., Celikcan, U. & Capin, T.K. Gaze-contingent adaptation of VR stereo parameters for cybersickness prevention. Vis Comput (2024). https://doi.org/10.1007/s00371-024-03505-0
