Abstract

This paper proposes an approach for training visuo-haptic object recognition models for robots using synthetic datasets generated by 3D virtual simulations. In robotics, visual object recognition has made considerable progress thanks to an abundance of image datasets. The scarcity of diverse haptic samples, however, has left a noticeable gap in machine learning research that incorporates the haptic sense. Our methodology addresses this challenge by using 3D virtual simulations to create realistic synthetic datasets. This offers a scalable and cost-effective way to seamlessly integrate haptic and visual cues for object recognition. Multimodal perception is particularly important in robotic applications. Our research helps close the existing gap and envisions a future where intelligent agents possess a holistic understanding of their environment derived from both visual and haptic senses. Our experiments show that synthetic datasets can be used to train haptic and visual object recognition when combined with noise injection, image preprocessing, data augmentation, or domain adaptation. This work contributes to the advancement of multimodal machine learning toward more nuanced and comprehensive robotic perception.

1 Introduction

In machine learning and robotics, the integration of visual and haptic modalities stands at a critical frontier for advancing the capabilities of intelligent agents. This paper introduces a practical approach to this challenge by advocating the use of synthetic datasets derived from 3D simulations to train models for visuo-haptic object recognition in robotic systems. While significant strides have been made in visual object recognition, driven by the accessibility of abundant image datasets, the scarcity of diverse haptic samples has resulted in a notable gap in haptic object recognition (Fig. 1).

The primary novelty of our project lies in its dedicated focus on addressing this imbalance by harnessing the power of 3D virtual simulations to generate realistic synthetic datasets. This approach not only mitigates the challenges associated with acquiring diverse haptic samples but also offers a scalable and cost-effective solution to train models capable of seamlessly integrating visual and haptic cues for object recognition. The emphasis on 3D virtual simulations marks a departure from conventional methodologies, presenting an innovative avenue for more comprehensive model training.

The importance of our project is underscored by the growing realization that the fusion of visual and haptic sensing is imperative for enhancing the perceptual capabilities of intelligent agents, especially in robotic applications. While extensive work has been devoted to visual object recognition, the intricate nature of generating haptic samples has resulted in a relative scarcity of research in machine learning incorporating the haptic sense. Our research addresses this gap and envisions a future where robots possess a holistic understanding of their environment derived from visual and haptic senses.

We built a real three-finger end effector and attached it to a Universal Robots (UR3) arm to obtain haptic samples. We also mounted a camera on the arm to obtain visual samples. To complement this, we implemented a virtual simulation of the robot. It uses basic ray tracing to compute where the fingers’ trajectories intersect the object surface, thereby simulating touches. We also take screen captures of the object in the simulation to emulate the images taken by the camera.

The following sections detail our methodology and demonstrate the efficacy of training visuo-haptic object recognition models using synthetic datasets from 3D simulations. We explain how we adapt to the synthetic data by incorporating noise, preprocessing the images, augmenting the data, and performing domain adaptation. Through empirical evidence and practical insights, we show how our work can contribute to the burgeoning field of multimodal machine learning and move toward more nuanced and comprehensive robotic perception.

2 Related work

Object recognition is essential for many robotic tasks and is extensively studied with various proposed techniques, primarily focusing on visual methods [1, 2]. However, integrating touch sensors presents challenges due to the tedious process of obtaining haptic samples, hindering research progress [3].

In haptic object recognition, methodologies fall into two main categories based on input type. Some methods utilize the spatial coordinates of touch points to recognize 3D shapes, often employing sensors on a robot’s end effector [4,5,6,7]. Alternatively, others use high-resolution tactile arrays, such as GelSight, which has been applied in robotic tasks [8, 9]. Additionally, hybrid approaches combining these methods aim to enhance model robustness [10, 11].

These approaches, however, require large training datasets to achieve high accuracy with modern machine learning algorithms. Obtaining haptic data for training robots remains tedious and time-consuming, which makes synthetic data generation increasingly important. MAT [12] proposed a sim-to-real transfer method in which an end-to-end tactile grasping policy trained in simulation could be transferred to a real-world setup. Similarly, [13] generated synthetic GelSight tactile images for Sim2Real learning.

In contrast, there is already an abundance of work on synthetic image generation, especially for visual object recognition. State-of-the-art approaches to generating visual datasets are surveyed in [14, 15]. Some works have even combined the haptic sense with visual recognition systems to improve results [16]. Our paper focuses on a less-explored domain: synthetic haptic sample generation. It uses a simpler haptic feature than other approaches, such as the one in [17]. Furthermore, we present a straightforward approach for obtaining image training sets for object recognition in our robot’s well-controlled environment, and we combine it with the haptic recognition model.

3 Stimuli

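The objects (stimuli) were generated using Gielis’ parametric model, also known as Supershapes [18, 19], which has been used in previous studies in a similar context [20, 21]. It is based on the Superformula, which for an angle \(\theta \) is defined as

\[ r(\theta ) = \left( \left| \frac{\cos (m\theta /4)}{a} \right| ^{n_2} + \left| \frac{\sin (m\theta /4)}{b} \right| ^{n_3} \right) ^{-1/n_1}. \]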

To create 3D shapes, we combine two Superformulas, \(r_1\) for longitude (\(\theta \)) and \(r_2\) for latitude (\(\phi \)), through a spherical product: \( x=r_1(\theta )\cos \theta \, r_2(\phi )\cos \phi \), \(y= r_1(\theta )\sin \theta \, r_2(\phi )\cos \phi \), and \(z=r_2(\phi ) \sin \phi \).

We set the parameters \(a = b = 1\) and \(m = 4\). For the longitude Superformula we use \(n_1 = n_2 = n_3 = 10\), and for the latitude Superformula we use \(n_1 = n_3 = 10\). We vary the latitude \(n_2\) from 0 to 20 in steps of 5 (objects Lat00 to Lat20) to generate six-faced polyhedra with convex, flat, and concave surfaces (shown in Fig. 2). For the haptic experiments with the robot arm, these objects were 3D printed at a scale of 15 cm \(\times \) 15 cm. They were also exported as 3D meshes (.off/.obj files) for the virtual simulations.
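The Superformula and its spherical product can be made concrete with a short Python sketch, assuming NumPy. It evaluates \(r(\theta )\) and samples each stimulus on a longitude-latitude grid with the parameters above. The grid resolution (64 by 32) and the function names are illustrative choices. This is not the authors’ mesh-generation code, whose tessellation the paper does not describe.

```python
import numpy as np


def superformula(angle, a=1.0, b=1.0, m=4.0, n1=10.0, n2=10.0, n3=10.0):
    """Gielis' Superformula r(angle) for scalar or array angles."""
    t = m * angle / 4.0
    term = np.abs(np.cos(t) / a) ** n2 + np.abs(np.sin(t) / b) ** n3
    return term ** (-1.0 / n1)


def supershape_points(lat_n2, n_lon=64, n_lat=32):
    """Sample a 3D supershape on a (longitude, latitude) grid.

    Longitude: n1 = n2 = n3 = 10. Latitude: n1 = n3 = 10 and the varied n2.
    """
    theta = np.linspace(-np.pi, np.pi, n_lon)          # longitude
    phi = np.linspace(-np.pi / 2, np.pi / 2, n_lat)    # latitude
    th, ph = np.meshgrid(theta, phi, indexing="ij")
    r1 = superformula(th, n1=10, n2=10, n3=10)
    r2 = superformula(ph, n1=10, n2=lat_n2, n3=10)
    x = r1 * np.cos(th) * r2 * np.cos(ph)
    y = r1 * np.sin(th) * r2 * np.cos(ph)
    z = r2 * np.sin(ph)
    return np.stack([x, y, z], axis=-1)                # shape (n_lon, n_lat, 3)


# One point grid per stimulus, Lat00 ... Lat20.
shapes = {f"Lat{n2:02d}": supershape_points(n2) for n2 in (0, 5, 10, 15, 20)}
```

With \(m = 4\), the longitude term produces four side faces, and the latitude term over \([-\pi /2, \pi /2]\) adds the top and bottom faces. This yields the six-faced shapes.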

4 Real capture system

This work evaluates how well models trained in virtual scenarios perform on real samples gathered through robotic exploration. Here, we briefly explain both the robotic haptic and visual data capture systems, covering hardware setup details and the data acquisition process.

4.1 Haptic capture system

The haptic capture system consists of a custom end effector, designed from the ground up in both hardware and software. It works in tandem with a UR3 robotic arm from Universal Robots [22], which is responsible for the system’s movement. The key components of our real haptic capture system are the robotic arm, the haptic sensing hardware, and the software.

The UR3 robotic arm maneuvers the end effector, approaching objects to collect samples. Although the UR3 has six rotating joints, our system uses only three, controlled by the user to ensure precision. To instruct the robot’s movement and position, the computer communicates with the robotic arm through sockets.
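A minimal sketch of socket-based motion commands follows. It assumes the controller’s secondary client interface on TCP port 30002, which accepts URScript text such as `movej`. The paper does not specify its command format. The port, the acceleration and velocity values, the joint configuration, and the IP address are therefore illustrative assumptions.

```python
import socket

UR_SCRIPT_PORT = 30002  # assumed: secondary client interface, accepts URScript text


def movej_command(joints_rad, acc=0.5, vel=0.2):
    """Format a URScript movej command for the six UR3 joint angles (radians)."""
    if len(joints_rad) != 6:
        raise ValueError("the UR3 has six joints")
    q = ", ".join(f"{j:.4f}" for j in joints_rad)
    return f"movej([{q}], a={acc}, v={vel})\n"


def send_command(host, command, port=UR_SCRIPT_PORT, timeout=2.0):
    """Send one URScript line to the controller over a TCP socket."""
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall(command.encode("ascii"))


# The operator changes only three joints; the other three keep fixed values.
pose = [0.0, -1.57, 1.57, -1.57, -1.57, 0.0]
cmd = movej_command(pose)
# send_command("192.168.0.10", cmd)  # hypothetical robot IP address
```

Formatting is kept separate from sending, so commands can be checked without a robot connected.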

The hardware comprises the end-effector structure, the sensors that enable sample collection, and a microcontroller housing the sampling logic. The end-effector structure is designed to fit the UR3 (see Fig. 3a). It houses the electronic components and the fingers used to obtain haptic samples. Optical sensors were chosen for their precision and autonomy (see Fig. 3c). Other sensor types, including microswitches and variable resistors, were also tested and are included in the hardware electronics. These electronics connect to an Arduino Nano 33 BLE microcontroller (see Fig. 3b), which is responsible for the logic and data sampling.

The software consists of two primary scripts. The first is an Arduino script embedded in the microcontroller. It monitors the sensor states and extracts a descriptor for each sample. The descriptor is a 1 \(\times \) 3 numeric array. For each finger, it holds the time in microseconds at which that finger touched the object, measured from the first touch. One value, corresponding to the first finger, is therefore always 0. The second script is a Python 3 program running on the computer. It sends movement commands to the robotic arm via socket communication, handles user commands through the command line, and receives the data descriptors from the microcontroller over the UART channel.
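The descriptor logic is sketched below in Python for readability, although on the robot it runs on the microcontroller. The sketch also includes a parser for the computer side. The UART wire format (three comma-separated integers per line) is a hypothetical choice, since the paper does not describe its command protocol.

```python
def descriptor_from_touch_times(touch_us):
    """Return (t_l, t_m, t_r) relative to the first contact, which becomes 0."""
    first = min(touch_us)
    return [t - first for t in touch_us]


def parse_descriptor_line(line):
    """Parse one descriptor received over UART.

    Assumed wire format (hypothetical): three comma-separated integers in
    microseconds, newline-terminated, e.g. "300,0,420".
    """
    values = [int(v) for v in line.strip().split(",")]
    if len(values) != 3:
        raise ValueError(f"expected 3 values, got {line!r}")
    return values


# Absolute touch timestamps (µs) for the left, middle, and right fingers.
print(descriptor_from_touch_times([1500, 1200, 1620]))  # [300, 0, 420]
print(parse_descriptor_line("300,0,420\n"))             # [300, 0, 420]
```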

In addition, a Python program was created to preprocess the data, handling calibration and data conversion. This is necessary because the systems used for data comparison use different reference variables (velocity, time, and distance). The software converts between time units, from distance to time, and vice versa. Calibration is needed to apply offsets, because the haptic samples are not taken on a perfectly flat surface. To facilitate communication between the two scripts, a protocol of commands was established for the UART channel; these commands determine the hardware actions.
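The conversion and calibration steps can be sketched as follows. This assumes a constant finger velocity in mm/s and per-finger offsets measured once on a reference surface. The units and the offset procedure are assumptions; the paper only states that offsets are applied.

```python
US_PER_S = 1_000_000  # microseconds per second


def times_to_distances(times_us, velocity_mm_s):
    """Relative contact times (µs) -> extra finger travel (mm) at constant velocity."""
    return [t / US_PER_S * velocity_mm_s for t in times_us]


def distances_to_times(dists_mm, velocity_mm_s):
    """Inverse conversion: extra finger travel (mm) -> relative times (µs)."""
    return [d / velocity_mm_s * US_PER_S for d in dists_mm]


def calibrate(times_us, offsets_us):
    """Subtract per-finger offsets (hypothetical: measured on a reference surface),
    then re-reference so the earliest corrected contact is 0 again."""
    corrected = [t - o for t, o in zip(times_us, offsets_us)]
    first = min(corrected)
    return [t - first for t in corrected]
```

Re-zeroing after subtracting offsets keeps the calibrated triple in the same relative-time form as the raw descriptor.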

4.2 Real haptic samples

Our tactile sampling uses this specially designed three-fingered end effector for discerning the local curvature features of objects. Each finger, equipped with a tactile sensor at its tip, is strategically positioned to detect contact during the examination of an object. The system records the contact timing of each sensor as the fingers approach the object at a consistent velocity.

These recorded timings, denoted by the triple \((t_l, t_m,t_r)\), describe the object’s local curvature. The curvature is deduced from the time intervals between successive sensor touches, taking the first sensor’s contact as the zero-time reference. The result is analogous to a second derivative, whose sign indicates whether the local surface is convex or concave.
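One way to turn a triple into a signed curvature value is a discrete second difference of the fingers’ travel distances. The sketch below assumes equally spaced fingers. It is an illustrative assumption, because the paper does not give its exact formula. The sign convention matches the one used here, with convex faces positive.

```python
def curvature_feature(t_l, t_m, t_r, velocity=1.0, spacing=1.0):
    """Second-difference curvature proxy from one haptic sample.

    Contact times are converted to relative travel distances d = velocity * t.
    A finger that travels farther touched later, so:
      > 0  middle finger first  -> convex face
      < 0  middle finger last   -> concave face
      = 0  simultaneous touches -> flat face
    """
    d_l, d_m, d_r = (velocity * t for t in (t_l, t_m, t_r))
    return (d_l - 2.0 * d_m + d_r) / spacing ** 2
```

For example, `curvature_feature(0.2, 0.0, 0.2)` returns 0.4. Here the middle finger touched first, as on a convex face.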

Samples are acquired on all faces of every object. With four rounds for each face (totaling 32 samples per face), this procedure yields 192 samples per object. The histograms of the objects’ curvatures are shown in Fig. 8. The bin width for the histograms is chosen to optimally represent the data. These histograms are not used directly in the classification process; they are shown here only to provide insight into the nature of this curvature feature.

Figure 8 highlights key observations: Lat00 and Lat05 curvatures are mainly positive, indicating convex faces, with Lat00 having greater curvatures. Conversely, Lat15 and Lat20 curvatures are predominantly negative, suggestive of concave faces, with Lat20 exhibiting greater curvatures. Lat10 curvatures are nearly zero, with a slightly positive trend due to noise in real-world sampling, a phenomenon further explored in Sect. 6 across various samples and objects.

4.3 Visual capture system

In the next phase, a visual capture system was mounted on the same robotic arm that previously carried the haptic capture system, replacing the end effector. An Intel RealSense 2 (RS2) camera served as the end effector, enabling portable image capture. A Python 3 program was developed using the RS2 and UR3 SDKs to coordinate camera movement and image capture. Figure 4 illustrates the proposed setup. A black background is placed on the table to enhance contrast, and a fixed white light source illuminates the scene from above.

4.4 Real visual samples

We perform two main processes for obtaining the visual samples. First, we conduct a real image capture session, and second, we apply image processing techniques for normalizing the real images.

The real image capture system is built on the hardware described above (UR3 + RealSense 2). The UR3 was programmed to move through a sequence of fixed positions. When each position was reached, the system triggered the camera to capture an image (see Fig. 4E). The system captures one image per predefined position and saves all images to a folder. Once the sequence is finished, the object is manually rotated and the capture process is repeated.

The preprocessing of the images (see Fig. 5) has two main stages. First, adaptive binarization separates the objects from the background, which also removes shadows. Second, we invert the colors and subtract the background to obtain a dark object on a white background. This matches the appearance of the virtually generated images.
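The two preprocessing stages can be sketched with NumPy. One caveat applies: the paper uses adaptive binarization, whereas this sketch substitutes Otsu’s global threshold. Otsu’s method is a reasonable stand-in under the fixed top lighting and black background described above, but it is not necessarily the authors’ method.

```python
import numpy as np


def otsu_threshold(gray):
    """Otsu's global threshold for an 8-bit grayscale image."""
    hist = np.bincount(gray.ravel(), minlength=256).astype(np.float64)
    prob = hist / hist.sum()
    omega = np.cumsum(prob)                    # weight of the class <= k
    mu = np.cumsum(prob * np.arange(256))      # cumulative first moment
    mu_total = mu[-1]
    with np.errstate(divide="ignore", invalid="ignore"):
        between = (mu_total * omega - mu) ** 2 / (omega * (1.0 - omega))
    between[~np.isfinite(between)] = 0.0       # thresholds leaving a class empty
    return int(np.argmax(between))


def preprocess(gray):
    """Binarize a real frame and invert it: dark object on a white background."""
    k = otsu_threshold(gray)
    foreground = gray > k                      # lit object over a black background
    return np.where(foreground, 0, 255).astype(np.uint8)
```

Binarization and inversion are merged into one `np.where`. Pixels classified as object become 0 (black), and everything else, including shadows below the threshold, becomes 255 (white).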

5 Virtual capture system

Obtaining haptic and visual training samples from a physical robot setup is time-consuming due to calibration, setup complexities, and hardware vulnerabilities, particularly in haptic mode. Similarly, amassing a large number of images in visual mode demands considerable effort.

To address these challenges, we developed a virtual capture system that efficiently produces both haptic and visual datasets for training our machine learning models. Our initial simulation used the Unity game engine. Although faster than the physical robot setup, it still required a significant amount of time to collect the necessary samples and explore different parameters.

Consequently, we developed the current system in Visual Studio 2022 using C, OpenGL, and GLUT. It employs basic ray tracing techniques to simulate the robot’s movements within a virtual environment. The virtual representation of the robot, shown in Fig. 6, is used to acquire both haptic samples and visual images. Screen captures from different camera viewpoints serve as visual training samples.

5.1 Parameters

Initially, the user has to set some parameters of the virtual system to match the real-world configuration of the robot. These parameters are as follows:

1. \(P_{f\_\textrm{dist}}\): the distance between the end effectors (fingers) of the robot.
2. \(P_{\textrm{scale}}\): a multiplier applied to the size of the 3D mesh so that it matches the actual dimensions of the physical object relative to the finger distance.
3. \(P_{\textrm{velocity}}\): the constant velocity of the robot’s fingers as they approach the object.
4. \(P_{\textrm{step}\_\textrm{angle}}\): the angular increment used when generating the random angles \(\theta \) and \(\varphi \).
5. \(P_{\textrm{num}\_\textrm{sample}}\): the number of virtual haptic samples generated by the system.
6. \(P_{\textrm{sigma}}\): the standard deviation of the Gaussian noise added to the samples.

Histograms of haptic samples: (a) the first column shows the samples taken on each object in an ideal virtual simulation without noise; (b) the second column shows the samples taken on each object in a virtual simulation with noise incorporated; (c) the last column shows the samples taken on each object in a real-world setting

5.2 Synthetic haptic dataset generation

The primary objective of the system is to generate specific line segments, namely \(\overline{F_lT_l}\), \(\overline{F_mT_m}\), and \(\overline{F_rT_r}\), representing the trajectories of the robot’s fingers as they approach the object (refer to Fig. 6). Here, \(F_l\), \(F_m\), and \(F_r\) denote the initial locations or points of the end effectors of the fingers, while \(T_l\), \(T_m\), and \(T_r\) signify the respective target points of the fingers. With these line segments, we can use basic ray tracing techniques to find the intersections with the object simulating the robot’s touch.

Since the object is a triangular 3D mesh, we test each line segment against every triangle in the mesh to identify potential intersections. When more than one triangle intersects a segment, the algorithm keeps the intersection point nearest to the segment’s starting point. The intersection test is based on Ericson’s algorithm [23], which uses barycentric coordinates to find the intersection point between a line segment and a triangle with a given normal.
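The intersection step is sketched below in Python with NumPy. It follows the barycentric segment-triangle test from Ericson [23], plus a nearest-hit search over all triangles. As in that formulation, the test is one-sided: it only reports triangles whose counterclockwise normal faces the segment start. This is an illustration; the authors’ implementation is in C with OpenGL.

```python
import numpy as np


def intersect_segment_triangle(p, q, a, b, c):
    """Segment pq vs. triangle abc, following Ericson's barycentric formulation.

    Returns (t, point) with t in [0, 1] along p -> q, or None if there is no hit.
    Only triangles wound counterclockwise as seen from p (normal facing p) are hit.
    """
    ab, ac, qp = b - a, c - a, p - q
    n = np.cross(ab, ac)              # unnormalized triangle normal
    d = np.dot(qp, n)
    if d <= 0.0:                      # segment parallel to or pointing away from the face
        return None
    ap = p - a
    t = np.dot(ap, n)
    if t < 0.0 or t > d:              # the plane is not crossed between p and q
        return None
    e = np.cross(qp, ap)
    v = np.dot(ac, e)                 # scaled barycentric coordinate v
    if v < 0.0 or v > d:
        return None
    w = -np.dot(ab, e)                # scaled barycentric coordinate w
    if w < 0.0 or v + w > d:
        return None
    t /= d
    return t, p + t * (q - p)


def nearest_hit(p, q, triangles):
    """Intersection closest to the finger's start point p, or None."""
    best = None
    for a, b, c in triangles:
        hit = intersect_segment_triangle(p, q, a, b, c)
        if hit is not None and (best is None or hit[0] < best[0]):
            best = hit
    return best
```

Here `p` plays the role of a finger start point \(F\) and `q` its target \(T\). The returned point corresponds to \(I\), and `t` times the segment length gives the distance \(d\).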

After obtaining the intersection points (\(I_l\), \(I_m\), \(I_r\)), we compute the length of each segment from its starting point to its intersection. This gives the distances (\(d_l\), \(d_m\), \(d_r\)) (see Fig. 6). We assume that the robot’s fingers move toward the object at a constant velocity \(P_{\textrm{velocity}}\). Distance and time are then related by \(d = P_{\textrm{velocity}}\, t\), so the time each finger takes to touch the object is \(t = d / P_{\textrm{velocity}}\).

We then add Gaussian noise with standard deviation \(P_{sigma}\), set from the actual noise measured by the haptic sensors of the real robot. The real robot records the relative times at which the fingers touch the object. We therefore take the earliest time (\(t_{lowest}\)) and subtract it from all three finger times, so the first finger to touch has a time of 0.0. This yields the three contact times of one haptic sample (\(t_l\), \(t_m\), and \(t_r\)), analogous to the samples derived from the real robot.
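The conversion from hit distances to a relative-time sample can be sketched in a few lines of NumPy. The sketch assumes that `sigma` is expressed in the same time units as \(d / P_{\textrm{velocity}}\). The function name is hypothetical.

```python
import numpy as np


def synthetic_haptic_sample(distances, velocity, sigma, rng):
    """Convert ray-hit distances (d_l, d_m, d_r) into a noisy relative-time triple.

    Assumption: sigma is expressed in the same time units as d / velocity.
    """
    times = np.asarray(distances, dtype=float) / velocity   # t = d / P_velocity
    times = times + rng.normal(0.0, sigma, size=3)          # Gaussian sensor noise
    return times - times.min()                              # earliest contact -> 0.0


rng = np.random.default_rng(0)
sample = synthetic_haptic_sample([2.0, 1.0, 2.5], velocity=10.0, sigma=0.05, rng=rng)
```

Noise is added before re-referencing to the earliest contact, so the first finger to touch always ends at exactly 0.0, as in the real descriptors.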

5.3 Synthetic visual dataset generation

To generate the virtual visual dataset, we capture an image of the object from the viewpoint of the camera mounted on the robot arm. In the virtual system, this means reconfiguring the main camera of the OpenGL viewport to match the real camera’s location and orientation (see Fig. 7). In OpenGL, this is done with the lookAt function to position the camera, combined with a perspective projection.

In our case, the eye vector (camera position) is set to point \(F_m\), and the at vector (camera target) is set to \(T_m\). The up vector \(up_{cam}\) is set to \(\textbf{S} \times \overrightarrow{F_mT_m}\). The setup of the camera is shown in Fig. 7. The projection is perspective, with a field of view (fov) of 45 degrees, a near plane at 0.1, and a far plane at 100.0. Simple white point lights, positioned similarly to the real robot setup, illuminate the scene without shadows. This is sufficient because the real robot operates in a well-lit environment. We save each screenshot to a bitmap file that serves as a virtual visual sample.
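The camera configuration corresponds to the standard view and projection matrices. The sketch below builds NumPy equivalents of `gluLookAt` and `gluPerspective`, using the eye, target, up, fov, near, and far values given above. The concrete coordinates of \(F_m\), \(T_m\), and \(\textbf{S}\) and the 4:3 aspect ratio are hypothetical, because they depend on the robot pose and the capture resolution.

```python
import numpy as np


def look_at(eye, target, up):
    """4x4 view matrix equivalent to gluLookAt(eye, target, up)."""
    eye, target, up = (np.asarray(v, dtype=float) for v in (eye, target, up))
    f = target - eye
    f /= np.linalg.norm(f)                # forward
    s = np.cross(f, up)
    s /= np.linalg.norm(s)                # side (camera x axis)
    u = np.cross(s, f)                    # recomputed up (camera y axis)
    m = np.identity(4)
    m[0, :3], m[1, :3], m[2, :3] = s, u, -f
    m[:3, 3] = -m[:3, :3] @ eye           # move the eye to the origin
    return m


def perspective(fovy_deg, aspect, near, far):
    """4x4 projection matrix equivalent to gluPerspective."""
    f = 1.0 / np.tan(np.radians(fovy_deg) / 2.0)
    return np.array([
        [f / aspect, 0.0, 0.0, 0.0],
        [0.0, f, 0.0, 0.0],
        [0.0, 0.0, (far + near) / (near - far), 2.0 * far * near / (near - far)],
        [0.0, 0.0, -1.0, 0.0],
    ])


# Hypothetical geometry: F_m, T_m, and S come from the robot pose (Fig. 7).
F_m = np.array([0.0, 0.0, 5.0])
T_m = np.array([0.0, 0.0, 0.0])
S = np.array([1.0, 0.0, 0.0])
view = look_at(F_m, T_m, np.cross(S, T_m - F_m))
proj = perspective(45.0, 4.0 / 3.0, 0.1, 100.0)  # aspect ratio assumed
```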

These steps are repeated until \(P_{num\_sample}\) samples have been generated. If not all three rays hit the object, the sample is discarded and a new set of rays is generated.
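The outer sampling loop with rejection can be sketched as follows. Two assumptions are made: \(\theta \) and \(\varphi \) are both drawn over a full turn in multiples of \(P_{\textrm{step}\_\textrm{angle}}\), and `cast_three_rays` is a hypothetical callback standing in for the ray tracing described above.

```python
import math
import random


def random_angle(step_deg, rng):
    """Random angle in radians, restricted to multiples of step_deg (P_step_angle)."""
    steps = int(round(360.0 / step_deg))
    return math.radians(rng.randrange(steps) * step_deg)


def generate_samples(num_samples, step_deg, cast_three_rays, rng=None):
    """Collect num_samples (P_num_sample) poses where all three finger rays hit.

    cast_three_rays(theta, phi) is a hypothetical callback returning a tuple of
    three hits, with None for any ray that misses the object.
    """
    if rng is None:
        rng = random.Random()
    samples = []
    while len(samples) < num_samples:
        theta = random_angle(step_deg, rng)
        phi = random_angle(step_deg, rng)
        hits = cast_three_rays(theta, phi)
        if any(h is None for h in hits):
            continue  # discard this pose and draw a new set of rays
        samples.append(hits)
    return samples
```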

6 Classification

To validate the effectiveness of our proposed methodology, we conducted training on the datasets generated by the virtual simulation and subsequently assessed the model’s performance using real-world data collected from the robot’s sensors. For haptic classification, classical machine learning techniques were explored, incorporating noise into the training sets. In the visual domain, convolutional neural networks (CNNs) were employed to classify images captured by the robot arm’s camera, with training data sourced from the virtual simulation. To improve accuracy, we implemented data augmentation and a form of domain adaptation.

6.1 Haptic classification

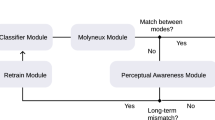

To determine the optimal machine learning algorithm for haptic object classification, we evaluated several classical machine learning techniques: Naive Bayes, support vector machines (SVM), and XGBoost. XGBoost, an open-source gradient-boosting library, was particularly notable. It builds an ensemble of decision trees iteratively, with each new tree correcting the errors of the previous ones. In this way, it combines multiple weak models into a more robust one (Fig. 8).

Figure 9 presents a comparative analysis of the three machine learning algorithms, varying the number of haptic samples for each feature set. It is important to remember that each haptic sample, generated by both the robot and the virtual system, comprises a triple (\(t_l,t_m,t_r\)), representing the normalized contact times of the end effectors that we can use to derive the local curvature of an object. While employing a greater number of haptic samples tends to enhance performance, it necessitates multiple touches of the robotic arm. Therefore, the challenge lies in identifying an optimal sample size that ensures high recognition accuracy while minimizing the number of robot interactions.

The comparison was conducted by training and testing exclusively within the virtual simulation environment, using only 640 virtual haptic samples for training. This deliberate choice kept real haptic data and its characteristic noise from influencing the selection of the classification technique. As illustrated in Fig. 9, XGBoost consistently outperforms the other methods across sample sizes. Notably, it achieves satisfactory recognition with as few as 5 or 10 samples, underscoring its efficacy for haptic object classification with limited robotic interactions.

When moving to the real world, it is important to emphasize that we incorporated noise into the synthetic haptic dataset during the virtual simulation. Attempting to train with ideal haptic samples and subsequently testing on the haptic samples from the real robot yields suboptimal results, as illustrated in Fig. 10a.

A plausible explanation for these results can be seen in the histograms of the curvatures of various objects in both the virtual simulation and the real-world scenario. In Fig. 8, we observe significant differences in the curvature histograms of models from the virtual ideal simulation and those from the real robot. Specifically, virtual simulations show the ideal curvatures, while the real robot’s sensors introduce some noise. For instance, flat surfaces should ideally exhibit a curvature of zero, a characteristic evident in the virtual world. In contrast, real-world curvatures are distributed across multiple bins rather than concentrated in a single bin.

We therefore incorporated Gaussian noise with a standard deviation of 0.04779 into the simulation. This value is based on the spread observed in the real haptic samples taken on flat surfaces, which should ideally have zero curvature. Training with these noisy virtual haptic samples significantly improves the performance of the system, as shown in Fig. 10b.
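The paper does not state whether this spread was measured about the observed mean or about the ideal value of zero. The distinction matters because the real flat-face (Lat10) curvatures show a slightly positive trend. The minimal sketch below computes both quantities from a list of measured flat-face curvatures.

```python
import math
import statistics


def sigma_about_mean(curvatures):
    """Sample standard deviation around the observed mean."""
    return statistics.stdev(curvatures)


def sigma_about_zero(curvatures):
    """Root-mean-square deviation from the ideal flat-face curvature of 0."""
    return math.sqrt(sum(c * c for c in curvatures) / len(curvatures))
```

When the measurements have a nonzero mean, `sigma_about_zero` also absorbs that bias. In that case it yields a larger noise level than `sigma_about_mean`.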

As a form of domain adaptation, we have also tried combining the virtual and real haptic datasets to train the machine learning model. Using 640 virtual and 160 real haptic samples for training (80:20 ratio) has led to improved accuracy, even outperforming the models trained with only real data, as shown in Fig. 11.
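Building such a mixed training set is straightforward. The sketch keeps every virtual sample and draws enough real samples to reach the requested real fraction; for 640 virtual samples and a 20% real share, that is 160 real samples. Sampling the real subset at random is an assumption; the paper does not say how the 160 real samples were chosen.

```python
import random


def mix_datasets(virtual, real, real_fraction, rng):
    """Keep every virtual sample and add enough real ones to reach real_fraction."""
    n_real = round(len(virtual) * real_fraction / (1.0 - real_fraction))
    if n_real > len(real):
        raise ValueError("not enough real samples for the requested ratio")
    mixed = list(virtual) + rng.sample(list(real), n_real)
    rng.shuffle(mixed)
    return mixed
```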

The impact of adjusting the virtual-to-real training sample ratio is shown in Fig. 12. While training with ample real haptic data yields high results, our goal is to reduce reliance on a large number of real samples due to their time-consuming acquisition. The graph also demonstrates virtual data’s effectiveness as a form of data augmentation, thereby minimizing acquisition time using the robot. Additionally, purely virtual data still yields relatively satisfactory results, especially when the classifier incorporates more local curvatures for each feature set.

Graph of the accuracy for different haptic models. The first two models (green and blue) were trained with 640 virtual samples. The third model (yellow) was trained with 640 virtual (80%) and 160 real samples (20%). Finally, the last model (black) was trained with 768 real samples. All models were tested with real haptic samples

6.2 Visual classification

Initially, we attempted visual classification using hand-crafted features like local curvature, coupled with classical machine learning techniques, to classify the real images. However, these methods produced suboptimal results. Additionally, we experimented with augmenting synthetic images by introducing Gaussian and salt-and-pepper noise, yet these adjustments failed to improve accuracy. Ultimately, we turned to convolutional neural networks (CNNs), a prevalent technique in computer vision. CNNs, being a deep-learning approach, eliminate the need for explicit feature selection. Instead, we input grayscale images into the network and allow the model to autonomously learn important features for accurate classification.

6.2.1 Model architecture

Our recognition model architecture begins with a pre-trained CNN, followed by two additional trainable layers, leading to the output layer tailored specifically to our classification task. Leveraging a pre-trained CNN, such as VGG, ResNet, or Inception, enables us to harness robust feature extraction capabilities honed through extensive training on vast and diverse image datasets.

We have used the following network structure, which is represented in Fig. 13:

1. The initial layer of our network is ResNet-50, a 50-layer deep convolutional network. We configured this layer as non-trainable so that it acts purely as a feature extractor, transferring the features it learned on large image datasets to our model.
2. Following the ResNet-50 layer, a flatten layer transforms the 2D feature maps into a 1D feature vector. This is necessary to connect the convolutional layers to the dense layers, and it adds no parameters to the model.
3. After the flatten layer, a fully connected layer with 128 nodes further processes the features extracted by ResNet-50.
4. The final layer of our model is a dense layer with 5 nodes, one for each class being predicted.

The trainable layers enable the network to fine-tune the features specific to our dataset, enhancing the model’s ability to distinguish between different classes effectively.
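The size of the trainable head can be worked out, and its forward pass sketched, with NumPy. The sketch assumes 224 by 224 inputs (grayscale replicated to three channels) and ResNet-50 without its classification top, which yields a 7 by 7 by 2048 feature map. It also assumes a ReLU on the 128-node layer and a softmax output. The paper does not state these details.

```python
import numpy as np

# Assumptions: 224x224 inputs (grayscale replicated to 3 channels) and ResNet-50
# without its classification top, whose final feature map is 7 x 7 x 2048.
FEATURE_SHAPE = (7, 7, 2048)
HIDDEN = 128
NUM_CLASSES = 5


def dense_params(n_in, n_out):
    """Weights plus biases of a fully connected layer."""
    return n_in * n_out + n_out


def head_param_count(feature_shape=FEATURE_SHAPE):
    """Trainable parameters of Flatten -> Dense(128) -> Dense(5)."""
    flat = int(np.prod(feature_shape))  # Flatten itself has no parameters
    return dense_params(flat, HIDDEN) + dense_params(HIDDEN, NUM_CLASSES)


def head_forward(features, w1, b1, w2, b2):
    """Forward pass of the trainable head on frozen backbone features."""
    x = features.reshape(features.shape[0], -1)        # Flatten
    h = np.maximum(x @ w1 + b1, 0.0)                    # Dense(128), ReLU assumed
    logits = h @ w2 + b2                                # Dense(5)
    logits = logits - logits.max(axis=1, keepdims=True)
    p = np.exp(logits)
    return p / p.sum(axis=1, keepdims=True)             # softmax over the 5 classes


print(head_param_count())  # 12845829
```

Under these assumptions, nearly all trainable weights sit in the 100352-to-128 dense layer. This is why freezing ResNet-50 keeps training feasible on a few thousand images.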

6.2.2 Model training and performance evaluation

In our study, the neural network model was initially trained for four epochs using the Adam optimizer with a learning rate of 0.001 and categorical cross-entropy as the loss function. The training dataset comprised 400 synthetic images for each of the five objects generated by the virtual system, totaling 2000 images. 20% of this dataset was held out for validation to monitor overfitting during training.

Confusion matrices of two different models tested with real images: (a) a model trained only with virtual images, with an accuracy of 58.26%, and (b) a model trained with both virtual and real images, with three augmented images per real image (virtual-to-real ratio of 84:16), with an accuracy of 80.59%

Following the training phase, the model was tested on two distinct sets of images. The first test set comprised 80 synthetic images for each object from the virtual simulation, totaling 400 images. Here the model performed notably well (97%), showing that it classifies images from its training domain effectively. The second test set posed a more challenging scenario: 75 real images captured by the robot’s camera. The model’s performance on this set was considerably lower (58.2%; see Fig. 14a). This reflects the greater diversity and complexity of real-world images compared with synthetic ones.

To improve the model’s classification of real-world images, we adopted a modified training strategy that uses a mixed training set as a form of domain adaptation. This dataset comprised 2000 synthetic images from the virtual system and 296 real images (74 originals plus 222 augmented copies), with 20% allocated for validation. The amount of augmentation was chosen by analyzing classification accuracy as a function of the number of augmented images per real image (Fig. 15). This analysis favored two or three augmented images per real image; more augmented images did not yield better results.

For the augmentation, we applied random scaling transformations to generate three additional images for each real image, producing 222 augmented images from 74 original real images. The test set consisted of the same 75 real images used to test the previous model. These were distinct from the real images used in training, ensuring an unbiased evaluation. The results, illustrated in Fig. 14b, affirm the effectiveness of our approach: leveraging real images and data augmentation raised the model’s accuracy on real images to 80.6%.
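A random-scaling augmentation of this kind can be sketched with NumPy. The sketch zooms each grayscale image about its center by a random factor, using nearest-neighbour sampling, and fills uncovered pixels with the white background. The scale range of 0.8 to 1.2 and the interpolation method are assumptions; the paper specifies only random scaling with three copies per image.

```python
import numpy as np


def random_scale(img, rng, low=0.8, high=1.2, fill=255):
    """Zoom a grayscale image about its center by a random factor (nearest neighbour).

    The output keeps the input size; uncovered pixels take the background value `fill`.
    """
    s = rng.uniform(low, high)
    h, w = img.shape
    ys, xs = np.indices((h, w))
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    src_y = np.rint(cy + (ys - cy) / s).astype(int)   # inverse mapping
    src_x = np.rint(cx + (xs - cx) / s).astype(int)
    valid = (src_y >= 0) & (src_y < h) & (src_x >= 0) & (src_x < w)
    out = np.full_like(img, fill)
    out[valid] = img[src_y[valid], src_x[valid]]
    return out


def augment(images, rng, copies=3):
    """Return the originals plus `copies` randomly scaled versions of each."""
    return list(images) + [random_scale(im, rng) for im in images for _ in range(copies)]
```

With 74 real images and three copies each, `augment` returns 74 + 222 = 296 images, matching the counts above.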

7 Multimodal fusion

In the preceding sections, we evaluated our approach independently for the visual and haptic modalities. This section summarizes their individual performances and examines the potential synergies of combining them.

In the haptic modality, initial attempts using raw synthetic haptic samples for training yielded unsatisfactory recognition results. Upon closer examination of the disparities between haptic samples generated by the virtual system and those obtained from the robot’s sensors, we identified noise as the primary culprit. Consequently, upon incorporating noise in the virtual simulation, the accuracies for haptic object recognition experienced a notable improvement.

In contrast, in the visual mode, adding noise to the images generated by the virtual system did not improve the training of the recognition model. Instead, we integrated a small number of real images, with data augmentation, into the training dataset. This strategy yielded significantly better results on real test images than training on virtual images alone (80.6% vs. 58.2%).

To create a cohesive and effective system that incorporates both visual and haptic modalities, we employed a multimodal approach. We tested various combinations of haptic and visual classifiers trained on different types of datasets. We also considered different ways of combining their predictions, e.g., a product of probabilities and a weighted sum. As shown in Table 1, the best results came from training with a substantial number of real haptic samples and including some real images with data augmentation. However, even with only synthetic haptic data, the system achieved comparable accuracy when combined with the visual classifier. Using purely virtual haptic and visual data achieved an accuracy of 89.3%.
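The two fusion rules can be sketched for per-class probability vectors from the haptic and visual classifiers. The small `eps` in the product rule, which keeps one classifier’s zero from vetoing a class, and the default weight of 0.5 are illustrative assumptions. The paper does not report the weights it used.

```python
import numpy as np


def fuse_product(p_haptic, p_visual, eps=1e-12):
    """Product-of-probabilities fusion, renormalized to sum to 1."""
    p = (np.asarray(p_haptic) + eps) * (np.asarray(p_visual) + eps)
    return p / p.sum()


def fuse_weighted(p_haptic, p_visual, w_haptic=0.5):
    """Weighted-sum fusion with weight w_haptic on the haptic classifier."""
    return w_haptic * np.asarray(p_haptic) + (1.0 - w_haptic) * np.asarray(p_visual)


p_h = [0.6, 0.3, 0.1, 0.0, 0.0]   # hypothetical haptic output over the 5 objects
p_v = [0.2, 0.7, 0.1, 0.0, 0.0]   # hypothetical visual output
predicted = int(np.argmax(fuse_product(p_h, p_v)))
```

The product rule strongly favors classes on which both classifiers agree. The weighted sum lets the more reliable modality dominate by raising its weight.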

Figure 16 shows the confusion matrix for the following combination. The haptic model is trained on 80% virtual and 20% real data. The visual model is trained on virtual images plus some real images, with three augmented images per real image. This combination leverages the complementary strengths of each modality while limiting the number of real samples required. The fused system reaches an accuracy of 95.7%, higher than either modality in isolation (93.3% haptic and 80.6% visual). This underscores the value of integrating information from multiple modalities in classification tasks.

Confusion matrix for a multimodal testing scenario combining the visual and haptic modalities. The haptic model was trained with 80% virtual samples with noise and 20% real samples, using 15 test samples per feature set. The visual model was trained with virtual and real images with augmentation (3 images generated per real training image, with a virtual-to-real ratio of 84:16)
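The accuracy reported for a confusion matrix is its diagonal sum divided by the total number of test samples. The sketch below uses hypothetical helper names and toy labels rather than the paper's data. It builds a confusion matrix with true classes as rows, then computes overall accuracy and per-class recall.

```python
from typing import List, Sequence


def confusion_matrix(y_true: Sequence[int], y_pred: Sequence[int],
                     n_classes: int) -> List[List[int]]:
    """cm[i][j] counts test samples of true class i predicted as class j."""
    cm = [[0] * n_classes for _ in range(n_classes)]
    for t, p in zip(y_true, y_pred):
        cm[t][p] += 1
    return cm


def accuracy(cm: List[List[int]]) -> float:
    """Overall accuracy: correct predictions (diagonal) over all predictions."""
    total = sum(map(sum, cm))
    return sum(cm[i][i] for i in range(len(cm))) / total if total else 0.0


def per_class_recall(cm: List[List[int]]) -> List[float]:
    """Fraction of each true class recognised correctly (row-normalised diagonal)."""
    return [row[i] / sum(row) if sum(row) else 0.0 for i, row in enumerate(cm)]


cm = confusion_matrix([0, 0, 1, 1, 2, 2], [0, 1, 1, 1, 2, 0], n_classes=3)
print(cm)                    # [[1, 1, 0], [0, 2, 0], [1, 0, 1]]
print(per_class_recall(cm))  # [0.5, 1.0, 0.5]
```

Per-class recall shows which objects drive the remaining errors. The overall accuracy alone would hide this.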

8 Conclusions and future work

This paper presents a method for training visuo-haptic object recognition models for robots using synthetic datasets generated by 3D virtual simulations. While visual object recognition has thrived thanks to abundant image datasets, the scarcity of diverse haptic samples remains a challenge for machine learning with haptic sensing. Our approach uses 3D virtual simulations to create realistic synthetic datasets, helping to bridge this gap and to integrate visual and haptic cues for object recognition. Our experiments support the efficacy of the method and underscore the importance of multimodal perception for improving robotic understanding of the environment.

Selected models from the Princeton Mesh Benchmark [24]: cup, martini, glass, table, teddy, pliers, bust, mechanical part, vase, teapot

Incorporating noise into the virtual simulation makes the synthetic haptic samples more faithful to real sensor data and thus improves haptic recognition accuracy. In the visual modality, by contrast, adding a small number of real images and their augmented versions to the training data yields better results than those of the haptic recognition models.

To further improve performance, we proposed a multimodal approach that integrates the visual and haptic modalities. By combining their complementary strengths, the multimodal system achieves a modest improvement in accuracy over either modality alone.

In conclusion, our research contributes to multimodal machine learning and moves toward robots with a holistic understanding of their environment derived from both visual and haptic senses. Using synthetic training data also makes it possible to run experiments in virtual simulation before real-world deployment. This facilitates rapid iteration, algorithm refinement, and parameter optimization without the constraints and costs of physical experimentation. Virtual simulations provide a controlled environment for testing algorithms in various scenarios, supporting thorough validation. This approach holds promise for numerous applications in robotics and artificial intelligence.

In the future, we plan to apply our method to more complex models, including everyday objects, to confirm our hypothesis. Figure 17 displays the 3D models used in our initial experiments, in which only virtual simulated data were used for both training and testing, with some noise added to the test samples. The results of the multimodal classification for these 10 models from the Princeton Mesh Benchmark are shown in Fig. 18. These results are comparable to those obtained with the five simple objects, although notably higher, which is expected because the training and test data share the same (virtual) domain. This suggests that our method may be applicable to a wider array of objects and settings.

Finally, the current virtual simulation is designed for our specific robot hardware, although adjustable parameters allow users to modify end-effector settings and material properties. In future work, we plan to support diverse robot configurations, e.g., robots with different numbers of end effectors, different types of sensors, and robotic arm configurations capable of haptic and visual data acquisition.

Data availability

This research study used the dataset publicly available at https://segeval.cs.princeton.edu.

References

Fanello, S.R., Ciliberto, C., Noceti, N., Metta, G., Odone, F.: Visual recognition for humanoid robots. Robot. Auton. Syst. 91, 151–168 (2017)

Zhao, Z.-Q., Zheng, P., Xu, S.-T., Wu, X.: Object detection with deep learning: a review. IEEE Trans. Neural Netw. Learn. Syst. 30(11), 3212–3232 (2019)

Navarro-Guerrero, N., Toprak, S., Josifovski, J., Jamone, L.: Visuo-haptic object perception for robots: an overview. Auton. Robots (2023)

Allen, P.K., Roberts, K.S.: Haptic object recognition using a multi-fingered dextrous hand. Technical Report, Columbia University (1988)

Zhang, M.M., Kennedy, M.D., Hsieh, M.A., Daniilidis, K.: A triangle histogram for object classification by tactile sensing. In: 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). IEEE, pp. 4931–4938 (2016)

Gorges, N., Escaida Navarro, S., Wörn, H.: Haptic object recognition using statistical point cloud features. In: 2011 15th International Conference on Advanced Robotics (ICAR). IEEE, pp. 15–20 (2011)

Kulkarni, S., Funabashi, S., Schmitz, A., Ogata, T., Sugano, S.: Tactile object property recognition using geometrical graph edge features and multi-thread graph convolutional network. IEEE Robot. Autom. Lett. 4, 1–8 (2024)

Yuan, W., Dong, S., Adelson, E.H.: GelSight: high-resolution robot tactile sensors for estimating geometry and force. Sensors 17(12), 2762 (2017)

Dong, S., Yuan, W., Adelson, E.H.: Improved gelsight tactile sensor for measuring geometry and slip. In: 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). IEEE, pp. 137–144 (2017)

Spiers, A.J., Liarokapis, M.V., Calli, B., Dollar, A.M.: Single-grasp object classification and feature extraction with simple robot hands and tactile sensors. IEEE Trans. Haptics 9(2), 207–220 (2016)

Luo, S., Mou, W., Althoefer, K., Liu, H.: Iterative closest labeled point for tactile object shape recognition. In: 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). IEEE, pp. 3137–3142 (2016)

Wu, B., Akinola, I., Varley, J., Allen, P.: MAT: multi-fingered adaptive tactile grasping via deep reinforcement learning. arXiv preprint arXiv:1909.04787 (2019)

Gomes, D.F., Paoletti, P., Luo, S.: Generation of GelSight tactile images for Sim2Real learning. arXiv preprint arXiv:2101.07169 (2021)

Nikolenko, S.I.: Synthetic data for deep learning. arXiv preprint arXiv:1909.11512 (2019)

Schraml, D.: Physically based synthetic image generation for machine learning: a review of pertinent literature. In: Photonics and Education in Measurement Science 2019, Vol. 11144. SPIE, 111440J (2019)

Li, B., Bai, J., Qiu, S., Wang, H., Guo, Y.: VITO-transformer: a visual-tactile fusion network for object recognition. IEEE Trans. Instrum. Meas. 1, 1–1 (2023)

Erickson, Z., Chernova, S., Kemp, C.C.: Semi-supervised haptic material recognition for robots using generative adversarial networks (2017)

Nicolau, F., Gielis, J., Simeone, A.L., Lopes, D.S.: Exploring and Selecting Supershapes in Virtual Reality with Line, Quad, and Cube Shaped Widgets. In: 2022 IEEE Conference on Virtual Reality and 3D User Interfaces (VR). IEEE, pp. 21–28 (2022)

Gielis, J.: A generic geometric transformation that unifies a wide range of natural and abstract shapes. Am. J. Bot. 90(3), 333–338 (2003)

Fougerolle, Y.D., Gribok, A., Foufou, S., Truchetet, F., Abidi, M.A.: Rational supershapes for surface reconstruction. In: Eighth International Conference on Quality Control by Artificial Vision, Vol. 6356. SPIE, pp. 206–215 (2007)

Garrofé, G., Parés, C., Gutiérrez, A., Ruiz, C., Serra, G., Miralles, D.: Virtual haptic system for shape recognition based on local curvatures. In: Advances in Computer Graphics: 38th Computer Graphics International Conference, CGI 2021, Virtual Event, September 6–10, 2021, Proceedings 38. Springer, pp. 41–53 (2021)

Universal Robots: UR3e collaborative robot arm that automates almost anything. https://www.universal-robots.com/products/ur3-robot/ (n.d.)

Ericson, C.: Real-Time Collision Detection. CRC Press Inc, USA (2004)

Chen, X., Golovinskiy, A., Funkhouser, T.: A benchmark for 3D mesh segmentation. ACM Trans. Graph. 28(3), 73 (2009)

Funding

Open Access funding provided thanks to the CRUE-CSIC agreement with Springer Nature. The work has been partially supported by the CMES project (grant No. PID2020-119725GA-I00) funded by the Spanish Ministry of Science and Innovation.

Author information

Contributions

C.R. implemented the virtual system and was involved in writing the manuscript, supervision, and analysis of the experiments. O.dJ. implemented the machine learning algorithms; was involved in the haptic, visual, and multimodal classification experiments and in the hardware construction of the robot; and was involved in acquiring the haptic samples. C.S. was involved in implementing the machine learning algorithms for the visual and haptic classifiers and in performing the experiments. A.G. and P.N. performed the visual experiments, implemented the image-processing code, and were involved in acquiring the images from the robot. A.M. was involved in implementing the machine learning algorithms and the CNN, and in creating the video and figures. D.M. conceived the presented idea, supervised the entire project, and was involved in all the experiments and the review of the paper. All authors provided critical feedback and helped shape the research, analysis, and manuscript.

Ethics declarations

Conflict of interest

The authors declare no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Below is the link to the electronic supplementary material.

Supplementary file 2 (mp4 63492 KB)

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Ruiz, C., de Jesús, Ò., Serrano, C. et al. Bridging realities: training visuo-haptic object recognition models for robots using 3D virtual simulations. Vis Comput 40, 4661–4673 (2024). https://doi.org/10.1007/s00371-024-03455-7

DOI: https://doi.org/10.1007/s00371-024-03455-7