Abstract

Over the last decade, convolutional neural networks (CNNs) have allowed remarkable advances in single image super-resolution (SISR). In general, recovering high-frequency features is crucial for high-performance models. High-frequency features suffer more serious damages than low-frequency features during downscaling, making it hard to recover edges and textures. In this paper, we attempt to guide the network to focus more on high-frequency features in restoration from both channel and spatial perspectives. Specifically, we propose a high-frequency channel attention (HFCA) module and a frequency contrastive learning (FCL) loss to aid the process. For the channel-wise perspective, the HFCA module rescales channels by predicting statistical similarity metrics of the feature maps and their high-frequency components. For the spatial perspective, the FCL loss introduces contrastive learning to train a spatial mask that adaptively assigns high-frequency areas with large scaling factors. We incorporate the proposed HFCA module and FCL loss into an EDSR baseline model to construct the proposed lightweight high-frequency channel contrastive network (HFCCN). Extensive experimental results show that it can yield markedly improved or competitive performances compared to the state-of-the-art networks of similar model parameters.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Single image super-resolution (SISR) has applications in many fields, including surveillance [1], medical imaging [2], and remote sensing imaging [3]. The goal of SISR is to reconstruct a high-resolution (HR) image from its low-resolution (LR) observation by recovering the lost high-frequency information. However, this is an inherently ill-posed inverse problem, since multiple corresponding HR images can be generated from a given LR image.

In the last decade, deep learning (DL)-based methods have significantly improved the performance of many computer vision tasks compared to traditional methods. For example, DL-based traffic sign detectors can effectively detect small and fuzzy traffic signs in the wild, offering potential for autonomous driving. Zhang et al. [4] proposed ReYOLO to learn rich contextual information and senses scale variations, showing great performances on traffic sign dataset such as CCTSDB2021 [5].

Due to the powerful feature extraction capabilities of convolutional neural networks (CNNs), many CNN-based SR models [6,7,8,9,10,11,12] have been proposed to learn the mapping between LR and HR images from a large dataset. Dong et al. were the first to develop a CNN-based SR model (SRCNN) [6]. In order to improve the feature extraction ability of CNNs, Lim et al. further increased the network depth by stacking more than 30 residual blocks and proposed an enhanced deep SR (EDSR) network [7], yielding high reconstruction accuracy. To effectively extract channel and spatial features and capture global contextual information from high-resolution remote-sensing images, Zhou et al. [13] proposed a split depth-wise separable graph convolutional network (SGCN) and obtained significant performance for road extraction. ClassSR [14] combines classification and SR in a unified framework to accelerate SR network on large images. SR images generated by the aforementioned networks are still blurry at edges and textures. Based on the powerful generative adversarial network (GAN) [15], Ledig et al. [16] proposed SRGAN for photorealistic SR. To reduce the impact of inter-class similarities among feature maps, NIGAN [17] embedded a remote sensing road scene neighbourhood probability enhancement method into the conditional GAN to extract meaningful features. Inspired by fluid dynamics, Zhang et al. [18] delved into the mechanism of image evolution during the SR process and proposed FM-Net, to simulate the motions of pixels in the reconstruction process as the flow of fluid. Based on the consistency between thermal particles in the thermal field and pixels in the image domain, Zhang et al. [19] further proposed HTI-Net for image SR, and these methods improve the effectiveness of the hardware Trojan detection system, offering the potential for real-world applications [20].

Attention mechanisms aim at guiding the network to focus on important signals or features and to suppress irrelevant ones. Due to the high information redundancy between channels, a channel attention mechanism was proposed in SENet [21] to re-weigh the channels and obtained great success in image classification. In the same way, in RCAN [10] a first-order channel attention mechanism was introduced for SISR. The method in SAN [22] calculates the covariance of feature maps to predict second-order channel attention maps to improve final performance for SISR. Liu et al. [11] further enhanced the spatial attention and proposed the RFANet. In MFA [23], a frequency-channel attention mechanism was proposed to perform the attention across both channel and frequency dimensions to rescale the important frequency regions.

The aforementioned channel or spatial attention mechanisms generate attention maps by calculating the mean statistical information, which only uses the lowest frequency component while discarding some high-frequency information. However, capturing high-frequency information from feature maps is crucial for SISR in order to recover the lost high-frequency components such as fine details and textures. It has been demonstrated that CNNs are biased towards learning from low-frequency signals [24], making high-frequency signals hard to propagate through the network. To address this problem, FcaNet [25] transfers the feature maps from the spatial domain to the frequency domain and generates global descriptors for each channel based on its discrete cosine transform (DCT) coefficients. In a similar way, Magid et al. [24] proposed a matrix multi-spectral channel attention mechanism that re-weighs channels according to their frequency spectrum. These frequency-domain-based channel attention mechanisms integrate several artificially selected high-frequency components into their proposed attention mechanisms. While this method can capture high-frequency information when generating the global descriptors, it requires artificial selection of DCT coefficients and lacks guidance while generating channel-wise scaling factors for each channel.

Intrigued by these issues, we propose to apply a channel attention mechanism with contrastive learning to make the network focus more on learning high-frequency features. In particular, we treat this problem from both channel and spatial perspectives. Our channel-wise approach is to construct a high-frequency channel attention (HFCA) module. We conducted experiments to evaluate representations of high-frequency features in the network. Figure 1 shows the correlation coefficients between the feature maps and the output of the high-pass filter of the corresponding feature maps. The results show that the high-frequency signals of different channels vary a lot with respect to their relative feature maps. In general, channels with abundant high-frequency information should be assigned with high weight values. To achieve this design, the proposed HFCA module predicts the channel-wise global descriptors by calculating the similarity statistical metrics between the original feature maps and the high-frequency components. Compared to the previous channel attention mechanisms [10, 22, 24], this method captures high-frequency information using a more powerful statistical approach and adjusts adaptively the channel-wise scaling factor according to its high-frequency feature similarity. Our spatial approach is to introduce a contrastive learning loss to split high-frequency and low-frequency features in the spatial domain. Considering higher-frequency areas are expected to be assigned larger scaling factors on feature maps, we proposed a frequency contrastive learning (FCL) module to train a spatial mask by optimizing the contrastive learning loss constraint. This trained spatial mask can adaptively make the network focus on high-frequency areas and make high-frequency signals easier to propagate through the network.

We incorporate the proposed HFCA and FCL into a standard residual block and obtain the high-frequency channel contrastive block (HFCCB). By integrating the HFCCB in an EDSR baseline, we construct a lightweight high-frequency channel contrastive network (HFCCN) with only around 1M parameters. As shown in Fig. 2, compared to models with the same parameter scale, the proposed network achieves improved results. Main contributions of this paper are three-fold. (1) A HFCA module is proposed to rescale channel-wise features based on their high-frequency similarity, while introducing fewer additional parameters. (2) A FCL loss is used to train a spatial mask and rescale weights from high-frequency features. (3) A lightweight HFCCN is proposed for highly accurate image SR. HFCCN obtains improved performance compared to other CNN-based SR models on the same parameter scale.

2 Related work

2.1 CNN-based super-resolution models

For the past decade, CNN-based models have outperformed traditional methods in low-level computer vision tasks [26], due to their powerful nonlinear representation abilities. Dong et al. [6] first proposed a CNN with three convolutional layers by constructing a nonlinear mapping between HR and LR images. To increase the receptive field, Kim et al. proposed VDSR [27] with 20 layers by introducing residual learning to accelerate the convergence of the network. DRCN [28] and DRRN [29] incorporated recursive blocks to further improve the overall performance and reduce the number of model parameters. Shi et al. [8] proposed an efficient sub-pixel convolutional layer at the end of the network, to allow the learning operations to be performed on LR feature maps and to reduce computation time and model parameters. To obtain more accurate reconstruction results, Lim et al. [7] removed unnecessary modules in the residual block and stacked these modules to construct a very deep and wide network, EDSR. Utilizing the benefits of the dense structure, Tong et al. [30] proposed SRDenseNet, which fully utilizes the residual information from the previous layers. Zhang et al. [31] combined residual connection [32] and dense connection [33] in a dense residual block and then proposed RDN. These CNN-based SR models are biased towards constructing deeper networks. Although they gained good reconstruction performances, there are considerable increases in the model complexity and computation costs. MFFN [34] proposed a multi-level feature fusion network that fuses deep layer feature information with shallow layer feature information, and constructed a lightweight SR model. Similarly, we construct our lightweight CNN-based SR network based on an EDSR baseline model as a trade-off between number of parameters and restoration performance.

2.2 Attention mechanism

In recent years, attention mechanisms have been widely used to guide or rescale network resources towards important features. To explore the redundancy between different channels, SENet [21] was proposed to fully utilize relationships among channels, improving the performance on image classification. RCAN [10] then incorporated a channel attention mechanism into the SR network. In the channel attention block, a global average pooling (GAP) is placed after each input channel to produce a channel descriptor. These descriptors are then fed into several convolutional layers to further explore channel-wise relationships. By applying a sigmoid function, channel-wise scaling factors are calculated to rescale the input channels. SAN [22] was designed with a second-order channel attention (SOCA) module. The SOCA characterizes the correlation of channel-wise features by calculating covariance matrix. DRLN [35] was proposed with a Laplacian module, which adaptively rescales feature maps across multiple feature spaces. In RFANet [11] a residual feature aggregation block was designed to utilize the features on the residual branch and an enhanced spatial attention block to generate more powerful spatial attention maps. Considering interdependencies between pixels on each feature map, in SARN [36] a novel spatial attention block was proposed to rescale pixel-wise features. Hu et al. [9] combined channel attention and spatial attention mechanisms to propose the channel-wise and spatial feature modulation network (CSFM) for SISR. However, most of these channel attention methods do not generate global descriptors based on the frequency elements of the input features. To overcome this, Magid et al. [24] proposed a matrix multi-spectral channel attention mechanism that incorporates high-frequency components into their design. To reduce the impact of background information or distractors, Zhang et al. [37] proposed a novel Siamese anchor-free network based on criss-cross attention, obtaining more accurate and robust tracking results. Considering that most attention mechanisms are only processed at a single resolution, Liu et al. [38] proposed a cross-resolution feature attention mechanism to progressively reconstruct images at different scale factors. Similarly, we adapt and improve channel and spatial attention mechanisms to enhance reconstruction performance. In particular, we explicitly focus on the learning of high-frequency signals. The existing methods use only several artificially selected frequency components and cannot capture meaningful high-frequency components in an adaptive way, while our proposed model generates global descriptors by calculating the similarity between features and high-frequency components. In this way, channels with abundant high-frequency components are given large weights to improve the performance of the channel attention mechanisms.

2.3 Contrastive learning

Contrastive learning has been used for self-supervised learning recently and shown impressive performances. Contrastive learning aims to push anchors closer to positive samples and away from negative samples. Contrastive learning maximizes the mutual information in representation space and has been used previously in high-level tasks [39, 40]. However, there are few attempts to explore the performance of contrastive learning in SISR tasks. Wang et al. [41] proposed a contrastive self-distillation framework by introducing contrastive loss and knowledge transfer. Wu et al. [42] proposed a novel contrastive loss and demonstrated its effectiveness for improving the quality of reconstructed images. Xia et al. [12] applied contrastive learning to further separate relevant and irrelevant features. RLFN [43] re-selects the features used in the contrastive loss by replacing the ReLU activation function of a randomly initialized two-layer feature extractor with the Tanh function. CIPPSRNet [44] introduced contrastive learning to optimize a pretrained network and improve its adaptability and robustness. These models adapted contrastive learning to better extract feature representations by identifying similarities and differences of data without labels. Herein, we adapt contrastive learning to extract high-frequency representations as priors without supervision. It is to increase the gap between high-frequency and low-frequency features with the use of a spatial mask that adaptively assigns a scaling factor to every pixel. By incorporating contrastive learning, the network can focus more on learning of high-frequency areas of the feature maps, leading high-frequency signals to propagate through the network more easily.

3 Methodology

The proposed high-frequency channel contrastive network (HFCCN) consists of two novel modules that can be simply integrated into an existing SR architecture (e.g. EDSR [7]), including the high-frequency channel attention (HFCA) module (Sect. 3.1) and the frequency contrastive learning (FCL) module (Sect. 3.2). HFCA and FCL adopt channel and spatial approaches, respectively, to make the network focus on high-frequency information learning. HFCA rescales channels using similarity statistical metric between features and high-frequency details. FCL separates high- and low-frequency information to allow for better propagation of high-frequency components through the network.

3.1 High-frequency channel attention

Firstly, we revisit the residual blocks in EDSR [7]. The residual block contains two \(3 \times 3\) convolutional layers and a ReLU activation function. We denote \({x_{in}}\) and \({x_{res}}\) as the input and the output of the residual block, which can be formulated as:

where \({W_{3 \times 3}^1}\) and \({W_{3 \times 3}^2}\) are the weights of \(3 \times 3\) convolutional layers, and \(\phi \) denotes the ReLU function.

As shown in Fig. 3a, our proposed HFCA module and FCL module can be easily integrated into a normal residual block to construct the high-frequency channel contrastive block (HFCCB). \({x_{out}}\) represents the output of HFCCB, defined as:

where \({x_{hfa}}\) and \({x_{cl}}\) represent the outputs of HFCA module and FCL module, respectively.

In general, the challenge of SISR lies in restoring high-frequency components such as textures and edges. Previous channel attention mechanisms [10, 21, 22] average the features across the spatial domain by an average pooling layer, hence neglecting most high-frequency components that may be crucial for restoring textures and details. Since high-frequency signals are more difficult to recover in image reconstruction, focus should be placed more on learning high-frequency features. Considering the limited ability of the mean statistic to describe the network features, we propose to use a more powerful statistical metric to describe the high-frequency information of each channel more accurately. It has been demonstrated that channels with abundant high-frequency information are critical for SR performance. Based on this idea, we propose to use a high-pass filter to obtain high-frequency features and take the similarity statistical metric among them as the channel-wise global descriptor. In this way, channels with greater high-frequency features are allocated with larger weights.

As shown in Fig. 3b, we refine the generation process of channel scaling factors. Since most similarity statistical metrics, such as mean square error (MSE), L1 norm, root mean square (RMS), are only related to pixel-level differences, they often lead to poor performance in comparing details and structure similarities. In order to better represent the difference between high-frequency features, we refer to the SSIM [45] metric and extract 3 key features: luminance, contrast and structure, represented by three statistical metrics: mean, standard deviation and covariance. Each statistical metric is multiplied by a learnable scale factor, contributing to the adopted similarity statistical metric. The process of generating these three statistics and the similarity metric is formulated as follows.

where \({x_H}\) is the high-frequency feature maps passed from a Laplacian high-pass filter. \(\mu \) and \(\sigma \) denote mean and standard deviation, respectively. \({Cov({x_{res}},{x_H})}\) represents the covariance between original feature maps and high-frequency maps. \(\alpha \), \(\beta \) and \(\chi \) represent three learnable scaling factors.

We take \(Sim({x_{res}},{x_H})\) as the channel attention descriptor. To fully capture channel-wise dependencies form the similarity descriptor, similar with most channel attention mechanisms, we apply a two-layer convolution and a sigmoid function to generate the scaling factor, which is also the output of HFCA (\({x_{hfa}}\)), and it can be formulated as:

where \(f\left( \cdot \right) \) and \(\phi \left( \cdot \right) \) denote the sigmoid gating and ReLU function, respectively. \({W_{1 \times 1}^1}\) and \({W_{1 \times 1}^2}\) are the weights of a 1x1 convolutional layer. By adopting HFCA, the network can adaptively focus on channel information that contains more or abundant high-frequency features.

3.2 Frequency contrastive learning

CNNs are biased towards learning from low-frequency signals, making high-frequency components hard to propagate through the network [24]. To address this problem, high-frequency areas of feature maps are exploited to be assigned larger weights. Previous spatial attention mechanisms [11, 46] generate attention maps by average or max pooling, which cannot make network focus on high-frequency areas. We introduce contrastive learning to train a spatial mask that adaptively assigns a scaling factor to every pixel. The goal of adopting contrastive learning is to increase the gap between high-frequency and low-frequency features in the feature representation. The overview of the proposed frequency contrastive learning (FCL) module is shown in Fig. 4. The output of FCL, \({x_{cl}}\), is defined as

where \({M_{cl}}\) represents the spatial mask.

In each FCL module, we take the high-frequency features as the positive samples and the low-frequency features as the negative samples. The high-frequency and low-frequency features can be generated by a high-pass filter and a low-pass filter, respectively. In this case, we use the Laplacian high-pass filter and the Gaussian low-pass filter. The Laplacian operator is a second-order, linear differential operator and is isotropic in all directions. Therefore, using the Laplacian operator as high-pass filter does not require detection operation on separate X and Y direction, hence easily adoptable for extracting meaningful high-frequency features. In addition, the convolution kernel of the Gaussian low-pass filter assigns different weights to different regions according to the Gaussian distribution, which can retain the overall distribution of the feature maps. We have compared various other filters (i.e. mean low-pass filter, Sobel high-pass filter and Scharr high-pass filter), and results are shown in Sect. 4.4. The outputs of filters are shown below:

Given positive and negative samples, we can construct a frequency contrastive learning loss \({L_{cl}}\) for training the spatial mask as:

where N indicates the input size, C is the number of channels, \(\zeta \) is a small value to keep the training stable, and \(\tau \) is the temperature factor to control the penalty for negative samples. Here, we set the value of \(\zeta \) to \(1{e^{ - 6}}\) and \(\tau \) to 1. \(Sim({x_{cl}},{x_H})\) represents similarity (e.g. correlation coefficient) between the output of FCL (\(x_{cl}\)) and the output of high-pass filter (\(x_H\)), while \(Sim({x_{cl}},{x_L})\) represents similarity between the output of FCL (\(x_{cl}\)) and the output of low-pass filter (\(x_L\)).

There are several metrics to measure similarity between the anchor and samples, such as the L1 norm, L2 norm or cosine similarity. However, in order to better compare the structural similarity between high-frequency components and feature maps, we take correlation coefficient as the similarity metric. The ablation analysis of comparing different similarity statistical metrics is given in Sect. 4.4. We denote \({I^{HR}}\) and \({I^{SR}}\) as the high-resolution image and recovered super-resolution image, respectively. Finally, the overall loss function L of the HFCCN model is designed as:

where \({L_{rec}}\) is the L1 loss, which is used in most SR models, \(\lambda \) is a trade-off factor, and we set it as \(1{e^{ - 4}}\). By minimizing the contrastive learning loss constraint, the spatial mask is trained to assign high-frequency areas with larger weighs, hence improving the network’s performance of learning more difficult high-frequency details.

3.3 High-frequency channel contrastive attention network

To demonstrate the effectiveness of the HFCA and FCL modules, we integrate them into an EDSR baseline model, consisting of 24 residual blocks, to form a lightweight HFCCN. The overall structure of the proposed high-frequency channel contrastive network (HFCCN) is shown in Fig. 5. The HFCA and FCL modules are incorporated into a standard residual block to form the HFCCB. One residual block is replaced by HFCCB after every two residual blocks to ensure that our model parameters are comparable with other lightweight SR models. Finally, HFCCN consists of 16 residual blocks and 8 HFCCB, so the total number of blocks is 24.

4 Experiments

4.1 Datasets and metrics

The HFCCN has been trained on the DIV2K [47] dataset, which contains 800 HR images. The LR images were obtained by the bicubic downsampling of HR images. For evaluation, we compared the performances on four widely used benchmark datasets: Set5 [48], Set14 [49], BSD100 [50] and Urban100 [51], which have 5, 14, 100 and 100 images, respectively. The Set5, Set14 and BSD100 contain natural scenes, while the Urban100 dataset are urban scene images with more edges and higher resolutions. The SR results were evaluated with the peak signal-to-noise ratio (PSNR) and structural similarity index (SSIM) [45], popular reconstruction quality measures of super-resolution. PSNR is defined via the maximum pixel value L and the mean-squared error between images. Given the high-resolution (ground truth) image \({I^{HR}}\) with N pixels and the super-resolution image \({I^{SR}}\), as follows:

where L equals 255 when dealing with 8-bit images. As human visual perception is highly adapted for extracting structural information from a scene, SSIM metric compares luminance, contrast and structure between images. We denote \({\mu _I}\) and \({\sigma _I}\) as the mean and standard deviation of the image intensity, respectively. The comparisons on luminance \({C_l}\left( {{I^{SR}},{I^{HR}}} \right) \) and contrast \({C_c}\left( {{I^{SR}},{I^{HR}}} \right) \) are defined as follows:

where \({C_1} = {({k_1}L)^2}\) and \({C_2} = {({k_2}L)^2}\) are constants to ensure stability when the denominator is close to zero (\({k_1} \ll 1\) and \({k_2} \ll 1\)). The structure comparison \({C_s}\left( {{I^{SR}},{I^{HR}}} \right) \) is defined as:

where \({\sigma _{{I^{SR}}{I^{HR}}}}\) is the covariance between \({I^{HR}}\) and \({I^{SR}}\), and \({C_3} = {({k_3}L)^2}\). Finally, the SSIM is given by:

where \(\alpha \), \(\beta \), \(\gamma \) denote the relative importance of each metrics. Both PSNR and SSIM [45] metrics are measured on Y channel in the YCbCr space.

4.2 Implementation and training details

For a lightweight network, we set the number of residual blocks to 16 and the number of HFCCB to 8, giving the total number of convolution blocks of 24. The frequency contrastive learning loss was added to L1 loss after 50 epochs. We set the convolutional kernel size to \(3 \times 3\) and set the intermediate feature channels to 40. During training, we employed data augmentation by randomly rotating 90, 180, 270 and flipping horizontally. The mini-batch size was set to 32 and each patch size to \(64 \times 64\). The training optimizer was ADAM [52] with \({\beta _1} = 0.9\), \({\beta _2} = 0.999\) and \(\varepsilon = {10^{ - 8}}\). The learning rate was initialized to \(2 \times {10^{ - 4}}\) and then decayed by a factor of 2 for every 200 epochs. We implemented the HFCCN model with PyTorch [53] framework. It takes 177.3 s on average to train one epoch (1000 batches) on DIV2K with scale of 4. Usually, the model is trained up to 1000 epochs. The inference time of our method is 0.1088s on the Urban100 dataset for scale factor \(\times \)4 SR. All experiments were conducted and tested on a single RTX 3090 GPU (Tables 1-4 and Figs. 7-6).

4.3 Results

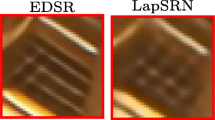

Comparisons with state-of-the-art methods: HFCCN was compared with 11 state-of-the-art lightweight models, including SRCNN [6], FSRCNN [54], VDSR [27], DRCN [28], LapSRN [55], DRRN [29], MemNet [56], CARN [57], IMDN [58], ACNet [59], RLFN [43] and DeFiAN [60].

Quantitative evaluation results in terms of average PSNR and SSIM on the four benchmark datasets are shown in Table 4. The size of our method is 1.08M, 1.13M, and 1.19M, respectively, for \(\times \)2, \(\times \)4, and \(\times \)8 SR. Under similar sizes or model parameters, the proposed HFCCN gained better performance by a marked margin on almost all benchmarks and on all up-sampling scales over other models. Adding additional HFCA and FCL modules to an EDSR-baseline model showed a significant improvement, also outperforming other lightweight SR models. In particular, the proposed HFCCN brought further improvement of 0.04 dB on Urban100 with scale of 4, when comparing with the second best SR model. The greater performance gain by HFCCN shown on larger datasets demonstrates its enhanced generalization ability. In addition to the PSNR metric, the proposed model achieved higher values of SSIM on all datasets, demonstrating improvement in perceptional quality. Higher SSIM shows that the proposed HFCCN can better recover more details and textures. Such improved performance attributes to the HFCA mechanism and frequency contrastive learning strategy, in making the network learn more high-frequency features. The table also shows the comparisons with models of similar number of parameters. In particular, even though HFCCN has about 300–600K fewer parameters than ACNet [59] and CARN [57], it achieved better results than these models on all datasets. Compared to RLFN [43], although it has slightly fewer number of parameters, the proposed model achieved marked improvements on most datasets, especially for more difficult scenarios, such as Urban100, with more noticeable improvements with scale of 2. As high-frequency details are highly discounted on large scales, we further conducted experiments on a larger scale (x8 SR), and the results are shown in Table 4. With such a large scale, HFCCN surpassed MemNet [56] by a marked margin of 0.48 dB on Urban100 benchmark, which further proves the effectiveness of the proposed method and its ability to reconstruct high-frequency details.

Examples of qualitative results on B100 and Urban100 are shown in Fig. 7. HFCCN was more effective for detail restoration with scale factor \(\times \)4. Most models could not recover much of the high-frequency fine details and textures and suffered from blurring and aliasing artefacts. In contrast, HFCCN yielded favourable recovered images. These results indicate that the effectiveness of HFCCN by using the proposed HFCA module and FCL loss.

Similar to DeFiAN [60], our method also processes the high-frequency and low-frequency information in a divide-and-conquer manner. This can effectively reconstruct high-frequency details while preserving low-frequency smoothness. The difference is that DeFiAN uses an interpretable multi-scale Hessian filtering to learn an adaptive operator to dividedly process high-frequency and low-frequency information, while our method applies comparative learning and attention mechanisms to separate high-frequency and low-frequency components. The experimental results, shown in Table 4, demonstrate marked improvements of the proposed method over DeFiAN on all datasets and on all up-sampling scales under similar model parameters.

Effectiveness analysis: HFCA and FCL were devised to assign greater scaling factors to areas or channels with more high-frequency details. To investigate the effect, we conducted an experiment to understand the behaviour of HFCA and FCL in the SR model. We constructed an EDSR baseline model and a HFCCN model with 24 residual blocks and calculated the correlation coefficient between the feature maps and the high-pass filtering results at the 12th block during training. The results are shown in Fig. 6. For the baseline model, the correlation coefficients between the intermediate layer and the high-frequency features varied little with increasing epochs, implying that high frequencies were difficult to pass through the network. With the HFCA and FCL, the correlation coefficients gradually increased. This verifies that HFCCN can help learn high-frequency signals better compared to the original EDSR. It makes easier for the high-frequency signals to propagate through the network.

4.4 Ablation studies

To examine the effectiveness of the proposed attention mechanism, we first utilized the EDSR-baseline to ablate the roles of different attention modules. We conducted experiments by replacing HFCA or FCL with other attention modules. We selected channel attention and spatial attention and used the same configurations in CBAM [61] for them. The results are shown in Table 1. In this experiment, CA and SA improved the PSNR by 0.022 dB and 0.013 dB, respectively. However, the proposed HFCA and FCL gained 0.079 dB and 0.029 dB performance boosts, respectively, compared to the baseline. The results demonstrate that the proposed HFCA and FCL are more effective than the original CA and SA. Moreover, combining HFCA and FCL can further elevate the performance by a larger margin, validating the advantage of HFCA and FCL.

To demonstrate the effectiveness of taking correlation coefficient as similarity metric in FCL loss, we compared four similarity metrics: L1 norm, L2 norm, Cosine similarity and correlation coefficient. These metrics are all widely used in CV and natural language processing. The experimental results indicate that Cosine similarity had the worst performance. Because Cosine similarity is more about distinguishing differences in direction than the absolute values, it may lead an obvious performance drop in feature map comparison. L1 and L2 are very commonly used loss functions in SR to determine similarities between SR reconstructed images and high-resolution images. However, both of them cannot effectively compare the structural similarity between images. As shown in Table 3, using the correlation coefficient performed slightly better than the other three metrics. As human visual perception is highly adapted for extracting structural information, taking correlation as similarity metric can reflect such information between high-frequency features and hence is arguably more suitable.

We further conducted a detailed ablation study on the high-pass and low-pass filters used in the HFCA and FCL modules. The results of HFCCN using different filters are reported in Table 2. Compared with the combination of mean low-pass filter and Laplacian high-pass filter, the combination of Gaussian low-pass filter and Laplacian high-pass filter gained 0.067dB improvement, which proves effective contributions to the network. The mean low-pass filter assigns the same weight to all regions of feature maps, while Gaussian low-pass filter can better retain overall distribution of feature maps. We also conducted experiments on three different high-pass filters: Scharr, Sobel and Laplacian. Both Scharr and Sobel high-pass filters require operation on separate X and Y direction while Laplacian operator is isotropic in all directions and can extract meaningful high-frequency information. The experimental results indicate that the combination of Gaussian low-pass filter and Laplacian high-pass filter is the best combination.

5 Conclusion

In this paper, a lightweight high-frequency channel contrastive network (HFCCN) is proposed for single image super-resolution. The HFCCN consists of a high-frequency channel attention module and a frequency contrastive learning module, making the network focus more on learning high-frequency signals from both channel and spatial perspectives. HFCA module can re-weigh channels with respect to their high-frequency abundance. The frequency contrast learning module introduces a contrastive learning loss to train a spatial mask that is expected to assign larger scaling factors to higher-frequency areas. Extensive experiments have been conducted, and results show that the proposed HFCCN obtained improved or highly competitive performance than the state-of-the-art SR models on a lightweight setting. To further enhance the ability of extracting high-frequency features, we will evaluate the effect of more adaptive filters and improve contrastive learning loss functions to achieve a more robust performance. In addition, we will introduce the proposed mechanisms to other low-level CV tasks such as image deblurring and denoising. Specially, noise and nature images have distinct features in the frequency domain, and the proposed mechanism of using contrastive learning to separate high-frequency and low-frequency information may offer promising potential for these low-level CV tasks and other applications.

Data availability

Datasets used in this work are publicly available and cited in the text.

References

Rasti, P., Uiboupin, T., Escalera, S., Anbarjafari, G.: Convolutional neural network super resolution for face recognition in surveillance monitoring. In: International Conference on Articulated Motion and Deformable Objects, pp. 175–184. Springer (2016)

Peled, S., Yeshurun, Y.: Superresolution in MRI: application to human white matter fiber tract visualization by diffusion tensor imaging. Mag. Reson. Med. 45(1), 29–35 (2001)

Thornton, M.W., Atkinson, P.M., Holland, D.: Sub-pixel mapping of rural land cover objects from fine spatial resolution satellite sensor imagery using super-resolution pixel-swapping. Int. J. Remote Sens. 27(3), 473–491 (2006)

Zhang, J., Zheng, Z., Xie, X., Gui, Y., Kim, G.-J.: ReYOLO: a traffic sign detector based on network reparameterization and features adaptive weighting. J. Ambient Intell. Smart Environ. (Preprint), 14(4), 317–334 (2022)

Zhang, J., Zou, X., Kuang, L., Wang, J., Sherratt, R., Yu, X.: CCTSDB 2021: a more comprehensive traffic sign detection benchmark. Human-Centric Comput. Inf. Sci. (2022). https://doi.org/10.22967/HCIS.2022.12.023

Dong, C., Loy, C.C., He, K., Tang, X.: Image super-resolution using deep convolutional networks. IEEE Trans. Pattern Anal. Mach. Intell. 38(2), 295–307 (2015)

Lim, B., Son, S., Kim, H., Nah, S., Mu Lee, K.: Enhanced deep residual networks for single image super-resolution. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, pp. 136–144 (2017)

Shi, W., Caballero, J., Huszár, F., Totz, J., Aitken, A.P., Bishop, R., Rueckert, D., Wang, Z.: Real-time single image and video super-resolution using an efficient sub-pixel convolutional neural network. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 1874–1883 (2016)

Hu, Y., Li, J., Huang, Y., Gao, X.: Channel-wise and spatial feature modulation network for single image super-resolution. IEEE Trans. Circuits Syst. Video Technol. 30(11), 3911–3927 (2019)

Zhang, Y., Li, K., Li, K., Wang, L., Zhong, B., Fu, Y.: Image super-resolution using very deep residual channel attention networks. In: Proceedings of the European Conference on Computer Vision (ECCV), pp. 286–301 (2018)

Liu, J., Zhang, W., Tang, Y., Tang, J., Wu, G.: Residual feature aggregation network for image super-resolution. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 2359–2368 (2020)

Xia, B., Hang, Y., Tian, Y., Yang, W., Liao, Q., Zhou, J.: Efficient non-local contrastive attention for image super-resolution. arXiv preprint arXiv:2201.03794 (2022)

Zhou, G., Chen, W., Gui, Q., Li, X., Wang, L.: Split depth-wise separable graph-convolution network for road extraction in complex environments from high-resolution remote-sensing images. IEEE Trans. Geosci. Remote Sens. 60, 1–15 (2021)

Kong, X., Zhao, H., Qiao, Y., Dong, C.: ClassSR: a general framework to accelerate super-resolution networks by data characteristic. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 12016–12025 (2021)

Goodfellow, I., Pouget-Abadie, J., Mirza, M., Xu, B., Warde-Farley, D., Ozair, S., Courville, A., Bengio, Y.: Generative adversarial nets. In: Advances in Neural Information Processing Systems, vol. 27 (2014)

Johnson, J., Alahi, A., Fei-Fei, L.: Perceptual losses for real-time style transfer and super-resolution. In: European Conference on Computer Vision, pp. 694–711. Springer (2016)

Chen, W., Zhou, G., Liu, Z., Li, X., Zheng, X., Wang, L.: NIGAN: a framework for mountain road extraction integrating remote sensing road-scene neighborhood probability enhancements and improved conditional generative adversarial network. IEEE Trans. Geosci. Remote Sens. 60, 1–15 (2022)

Zhang, M., Wu, Q., Zhang, J., Gao, X., Guo, J., Tao, D.: Fluid micelle network for image super-resolution reconstruction. IEEE Trans. Cybern. 53(1), 578–591 (2022)

Zhang, M., Wu, Q., Guo, J., Li, Y., Gao, X.: Heat transfer-inspired network for image super-resolution reconstruction. IEEE Trans. Neural Netw Learn.Syst. (2022). https://doi.org/10.1109/TNNLS.2022.3185529

Zhang, M., Xin, J., Zhang, J., Tao, D., Gao, X.: Curvature consistent network for microscope chip image super-resolution. IEEE Trans. Neural Netw. Learn. Syst. (2022). https://doi.org/10.1109/TNNLS.2022.3168540

Hu, J., Shen, L., Sun, G.: Squeeze-and-excitation networks. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 7132–7141 (2018)

Dai, T., Cai, J., Zhang, Y., Xia, S.-T., Zhang, L.: Second-order attention network for single image super-resolution. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 11065–11074 (2019)

Liu, T., Das, R.K., Lee, K.A., Li, H.: MFA: TDNN with multi-scale frequency-channel attention for text-independent speaker verification with short utterances. In: ICASSP 2022-2022 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pp. 7517–7521. IEEE (2022)

Magid, S.A., Zhang, Y., Wei, D., Jang, W.-D., Lin, Z., Fu, Y., Pfister, H.: Dynamic high-pass filtering and multi-spectral attention for image super-resolution. In: Proceedings of the IEEE/CVF International Conference on Computer Vision, pp. 4288–4297 (2021)

Qin, Z., Zhang, P., Wu, F., Li, X.: Fcanet: Frequency channel attention networks. In: Proceedings of the IEEE/CVF International Conference on Computer Vision, pp. 783–792 (2021)

Khan, A., Yin, H.: Arbitrarily shaped point spread function (PSF) estimation for single image blind deblurring. Vis. Comput. 37(7), 1661–1671 (2021)

Kim, J., Lee, J.K., Lee, K.M.: Accurate image super-resolution using very deep convolutional networks. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 1646–1654 (2016)

Kim, J., Lee, J.K., Lee, K.M.: Deeply-recursive convolutional network for image super-resolution. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 1637–1645 (2016)

Tai, Y., Yang, J., Liu, X.: Image super-resolution via deep recursive residual network. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 3147–3155 (2017)

Tong, T., Li, G., Liu, X., Gao, Q.: Image super-resolution using dense skip connections. In: Proceedings of the IEEE International Conference on Computer Vision, pp. 4799–4807 (2017)

Zhang, Y., Tian, Y., Kong, Y., Zhong, B., Fu, Y.: Residual dense network for image super-resolution. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 2472–2481 (2018)

He, K., Zhang, X., Ren, S., Sun, J.: Deep residual learning for image recognition. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 770–778 (2016)

Huang, G., Liu, Z., Van Der Maaten, L., Weinberger, K.Q.: Densely connected convolutional networks. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 4700–4708 (2017)

Chen, Y., Xia, R., Yang, K., Zou, K.: MFFN: image super-resolution via multi-level features fusion network. Vis. Comput. 1–16 (2023)

Anwar, S., Barnes, N.: Densely residual Laplacian super-resolution. IEEE Trans. Pattern Anal. Mach. Intell. 44, 1192–1204 (2020)

Shi, W., Du, H., Mei, W., Ma, Z.: (sarn) spatial-wise attention residual network for image super-resolution. Vis. Comput. 37, 1569–1580 (2021)

Zhang, J., Huang, H., Jin, X., Kuang, L.-D., Zhang, J.: Siamese visual tracking based on criss-cross attention and improved head network. Multimed. Tools Appl. 83(1), 1589–1615 (2024)

Liu, A., Li, S., Chang, Y.: Cross-resolution feature attention network for image super-resolution. Vis. Comput. 39(9), 3837–3849 (2023)

Chen, T., Kornblith, S., Norouzi, M., Hinton, G.: A simple framework for contrastive learning of visual representations. In: International Conference on Machine Learning, pp. 1597–1607. PMLR (2020)

Tian, Y., Krishnan, D., Isola, P.: Contrastive representation distillation. arXiv preprint arXiv:1910.10699 (2019)

Wang, Y., Lin, S., Qu, Y., Wu, H., Zhang, Z., Xie, Y., Yao, A.: Towards compact single image super-resolution via contrastive self-distillation. arXiv preprint arXiv:2105.11683 (2021)

Wang, Y., Lin, S., Qu, Y., Wu, H., Zhang, Z., Xie, Y., Yao, A.: Towards compact single image super-resolution via contrastive self-distillation. arXiv preprint arXiv:2105.11683 (2021)

Kong, F., Li, M., Liu, S., Liu, D., He, J., Bai, Y., Chen, F., Fu, L.: Residual local feature network for efficient super-resolution. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 766–776 (2022)

Wang, K., Sun, Q., Wang, Y., Wei, H., Lv, C., Tian, X., Liu, X.: CIPPSRNet: a camera internal parameters perception network based contrastive learning for thermal image super-resolution. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 342–349 (2022)

Wang, Z., Bovik, A.C., Sheikh, H.R., Simoncelli, E.P.: Image quality assessment: from error visibility to structural similarity. IEEE Trans. Image Process. 13(4), 600–612 (2004)

Choi, J.-S., Kim, M.: A deep convolutional neural network with selection units for super-resolution. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, pp. 154–160 (2017)

Timofte, R., Agustsson, E., Van Gool, L., Yang, M.-H., Zhang, L.: Ntire 2017 challenge on single image super-resolution: methods and results. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, pp. 114–125 (2017)

Bevilacqua, M., Roumy, A., Guillemot, C., Alberi-Morel, M.L.: Low-complexity single-image super-resolution based on nonnegative neighbor embedding (2012)

Zeyde, R., Elad, M., Protter, M.: On single image scale-up using sparse-representations. In: International Conference on Curves and Surfaces, pp. 711–730. Springer (2010)

Martin, D., Fowlkes, C., Tal, D., Malik, J.: A database of human segmented natural images and its application to evaluating segmentation algorithms and measuring ecological statistics. In: Proceedings Eighth IEEE International Conference on Computer Vision. ICCV 2001, vol. 2, pp. 416–423. IEEE (2001)

Huang, J.-B., Singh, A., Ahuja, N.: Single image super-resolution from transformed self-exemplars. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 5197–5206 (2015)

Kingma, D.P., Ba, J.: Adam: a method for stochastic optimization. arXiv preprint arXiv:1412.6980 (2014)

Paszke, A., Gross, S., Massa, F., Lerer, A., Bradbury, J., Chanan, G., Killeen, T., Lin, Z., Gimelshein, N., Antiga, L., et al.: PyTorch: an imperative style, high-performance deep learning library. In: Advances in Neural Information Processing Systems, vol. 32 (2019)

Dong, C., Loy, C.C., Tang, X.: Accelerating the super-resolution convolutional neural network. In: European Conference on Computer Vision, pp. 391–407. Springer (2016)

Lai, W.-S., Huang, J.-B., Ahuja, N., Yang, M.-H.: Deep Laplacian pyramid networks for fast and accurate super-resolution. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 624–632 (2017)

Tai, Y., Yang, J., Liu, X., Xu, C.: MemNet: a persistent memory network for image restoration. In: Proceedings of the IEEE International Conference on Computer Vision, pp. 4539–4547 (2017)

Ahn, N., Kang, B., Sohn, K.-A.: Fast, accurate, and lightweight super-resolution with cascading residual network. In: Proceedings of the European Conference on Computer Vision (ECCV), pp. 252–268 (2018)

Hui, Z., Gao, X., Yang, Y., Wang, X.: Lightweight image super-resolution with information multi-distillation network. In: Proceedings of the 27th ACM International Conference on Multimedia, pp. 2024–2032 (2019)

Tian, C., Xu, Y., Zuo, W., Lin, C.-W., Zhang, D.: Asymmetric CNN for image superresolution. IEEE Trans. Syst. Man Cybern. Syst. 52(6), 3718–3730 (2021)

Huang, Y., Li, J., Gao, X., Hu, Y., Lu, W.: Interpretable detail-fidelity attention network for single image super-resolution. IEEE Trans. Image Process. 30, 2325–2339 (2021)

Woo, S., Park, J., Lee, J.-Y., Kweon, I.S.: CBAM: convolutional block attention module. In: Proceedings of the European Conference on Computer Vision (ECCV), pp. 3–19 (2018)

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare no conflict of interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Yan, T., Yin, H. High-frequency channel attention and contrastive learning for image super-resolution. Vis Comput (2024). https://doi.org/10.1007/s00371-024-03276-8

Accepted:

Published:

DOI: https://doi.org/10.1007/s00371-024-03276-8