Abstract

In this paper, we propose an accurate and controllable rendering process for implicit surfaces with no or unknown analytic Lipschitz constants. Our process is built upon a ray-casting approach where we construct an adaptive Chebyshev proxy along each ray to perform an accurate intersection test via a robust and multi-stage searching method. By taking into account approximation errors and numerical conditions, our methods comprise several pre-conditioning and post-processing stages to improve the numerical accuracy, which potentially applied recursively. The intersection search is performed by evaluating a QR decomposition on the Chebyshev proxy function, which can be done in a numerically accurate way. Our process achieves comparable accuracy to other techniques that impose more constraints on the surface, e.g., knowledge of Lipschitz constants, and higher accuracy compared to approaches that impose similar constraints as our approach.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Implicit surfaces have a long tradition in computer graphics as they are a powerful abstraction of many geometric models [6, 23] and as they provide construction operations on the underlying shapes either directly, e.g., using metaballs [5], or indirectly, e.g., using skeleton-based representations [2]. Implicit surfaces are commonly defined as an iso-contour of a 3D scalar field \(s({\varvec{x}}),\; {\varvec{x}}\in {{\mathbb {R}}}^3\), where \({\varvec{x}}\) is inside and outside the object, if \(s({\varvec{x}}) < 0\) and \(s({\varvec{x}})>0\), respectively.

Direct rendering of implicit surfaces,e.g., without conversion into a mesh, is, however, a non-trivial task, and is commonly handled with ray-casting [26, 33, 44]. This involves finding the first root of \(s({\varvec{r}}(\lambda ))\) along a ray \({\varvec{r}}(\lambda ) ={\varvec{o}}+\lambda {\vec {\varvec{d}}}\) cast from a viewpoint \({\varvec{o}}\) in direction \({\vec {\varvec{d}}}\). For polynomial scalar fields s of degree \(\le 4\), several accurate or even analytic approaches for root finding exist, e.g., interval arithmetic [45], which can also be utilized by approximating s by polynomials of degree \(\le 4\) [28, 43] or Taylor expansions [45]. This often induces an approximation error that is hardly controllable, as finding a sufficiently accurate approximation of \(s({\varvec{r}}(\lambda ))\) is a very challenging task, especially when being restricted to low degree approximations. Alternatively, if the global or local Lipschitz constant for the scalar field is known, more accurate root finding algorithms can be used, e.g., LG-surfaces [29] and Sphere Tracing [26]. However, while Lipschitz constants are known when rendering, e.g., SDFs, they are generally not known a priori when rendering surfaces based on arbitrary implicit functions.

In this paper, we propose a rendering approach for implicit surfaces that approximates the scalar field along rays with controllable accuracy and does not require any knowledge of Lipschitz constants. The only technical requirement is, that the scalar field is globally \(C^0\)-continuous. The specific contributions of this paper are:

-

(a)

An efficient and accurate approach to find the first root along a ray, which involves an efficient Chebyshev approximation method, comprising several pre-conditioning and post-processing stages, as well as an optional, recursive root finding, to achieve as-optimal-as-possible scalar field approximations along rays,

-

(b)

a performance analysis of our method using scalar fields of varying types, e.g., polynomial and non-algebraic, regarding both, computational aspects and achievable numerical accuracy, which are compared to existing rendering approaches, and

-

(c)

an open source CPU-based C++ implementation of the Chebyshev proxy construction process, the rendering approach and an implicit surface rendering framework that also includes implementations of other common rendering approaches, namely LG-Surfaces [29], Sphere Tracing [26], Adaptive Marching Points [44], a local quartic polynomial approximation process [43], Segment Tracing [21] and a simple ray marching approach.

Limitations. Our method is not well-suited for functions that are defined piecewise, e.g., discrete Signed Distance Fields, due to their large number of discontinuities in some derivative terms, which involve many recursions and high polynomial degrees, yielding a significant drop in runtime performance.

2 Related work

Implicit surfaces are described based on a position in space \({\textbf{x}}\in \mathbb {R}^3\) and an arbitrary scalar field \(S({\textbf{x}})\) [6], where the surface is some iso-contour of S, commonly \(S({\textbf{x}}) = 0\). We distinguish three distinct ways to visualize implicit surfaces: (i) polynomial approximations that locally approximate the surface using piecewise polynomials of various degrees, (ii) rendering approaches that require prior knowledge of the Lipschitz constant of the scalar field, either locally or globally, and (iii) interval refinement methods using root isolation techniques.

Polynomial approximations. For polynomial scalar functions along rays up to degree 4 closed-form solutions can be applied [24], while for higher-degree polynomials no general analytic solutions can exist. Instead, for higher degree, and/or piecewise polynomial surfaces, numerical root finding approaches can be utilized, e.g., Bézier clipping [30, 38]. For arbitrary scalar fields, approximating polynomials can be constructed along a ray, to which various root finding methods are applied [28, 43], involving, e.g., Taylor expansion [45] or Bernstein polynomials [30]. However, increasing the degree of a monomial polynomial arbitrarily does not necessarily yield a better approximation due to Runge’s phenomenon [41], which is a fundamental limitation when constructing polynomial approximations using interpolation methods in existing rendering approaches. Our method addresses these limitations by utilizing Chebyshev polynomials that do not suffer from Runge’s phenomenon and which can scale to much greater numerical accuracy for arbitrary implicit surfaces.

Lipschitz constant-based approaches. Knowing the Lipschitz continuity of the scalar function and its Lipschitz constant k significantly simplifies the root finding procedure. Kalra and Barr [29] utilized the Lipschitz continuity of the surface function and of the gradient of the surface function to construct an approach in which surfaces are guaranteed to be intersected. If only a global Lipschitz constant is known, sphere tracing [26] can be applied, which provides a robust rendering approach that iterates along a ray based on the current function magnitude. Several extensions have been proposed to sphere tracing, e.g., using overrelaxation [32], locally defined Lipschitz constants [22], or nonlinearly deformed signed distance fields [42]. Sphere tracing-like approaches can, however, be arbitrarily slow for grazing rays, which can be diminished using local Lipschitz constant, e.g., using Segment tracing [21]. Our method circumvents these limitations by not relying on a known Lipschitz constant.

Interval refinement. For arbitrarily defined polynomial functions a robust ray tracing approach is to utilize interval-based approaches [14, 37], where the main goal is to perform a root isolation, i.e., finding an interval along the ray that contains a single root, notably the first root for surface rendering. This can be done in a variety of ways, e.g., using Budan’s theorem [16], Descartes’ rule [25] or Sturm’s theorem [48], but these approaches generally rely on the surface function being polynomial or monomial polynomial approximations of the surface function that may not be accurate for challenging surface functions. Several improvements over these approaches have been proposed in the past, e.g., using affine arithmetics to obtain tighter interval bounds [19], Taylor tests for root bracketing [40] or GPU-based algorithms [34]. Recently, Keeter [31] proposed an interval refinement approach that realizes a hierarchical approach to render implicit surfaces involving thousands of arithmetic operations efficiently on GPUs. Our method is able to work with traditional arithmetic operations, while preserving numerical robustness, by directly incorporating numerical error approximations into the rendering procedure.

3 Chebyshev proxy rendering

Rendering an implicit surface function \(s({\textbf{x}})\) can be formulated as finding intersections along a ray \({\varvec{r}}(\lambda ) ={\varvec{o}}+\lambda {\vec {\varvec{d}}}\), that are cast from a viewpoint \({\varvec{o}}\) in direction \({\vec {\varvec{d}}}\), where the intersections are the roots along r. Consequently, we can reformulate the problem of intersection testing along rays as a root search problem for an arbitrary unary function \(f:{\mathbb {R}}\rightarrow {\mathbb {R}}\). More specifically, for rendering problems we are only interested in intersections along a compact interval, i.e., intersections in front of the camera up to some maximum depth \(b<\infty \) with a minimum distance \(a\ge 0\), which means that we need to find roots of an arbitrary function \(f:[a,b]\rightarrow {\mathbb {R}}\). However, finding roots of arbitrary functions is a challenging problem and, accordingly, we aim to transform the problem into a form that can be readily handled.

Our approach comprises five separate steps as

-

(a)

Constructing a Chebyshev proxy \(f_p\), which allows for robust root finding, see Sect. 3.1.

-

(b)

Finding the roots of the Chebyshev proxy \(f_p\), see Sect. 3.2.

-

(c)

Improving the pre-conditions for the approximation accuracy of \(f_p\) to enhance the numerical accuracy of the root finding for f, see Sect. 3.3.

-

(d)

Post-processing steps to refine the estimated root, see Sect. 3.4.

-

(e)

A recursive process to improve numerical accuracy in cases where extreme numerical conditions lead to visual artifacts, see Sect. 3.5.

3.1 Chebyshev proxy construction

In \({\mathbb {R}}\) the Weierstrass Approximation Theorem [49] guarantees the existence of a proxy function \(f_p\) for every \(C^0\) continuous function f over a compact interval \([a,b]\subset {\mathbb {R}}\) with arbitrary precision \(\epsilon \), i.e., \( \left\| f-f_p \right\| _\infty \le \epsilon \), using a polynomial function of finite degree n, depending on \(\epsilon \). To construct \(f_p\), we utilize Chebyshev polynomials as these do not suffer from Runge’s phenomen [41], i.e., increasing the degree results in better approximations, and as they can be efficiently constructed using DFTs. In general, Chebyshev basis polynomials of the first kind \(T_n\), of degree n, are recursively defined via

Based on this orthogonal basis over \([-1,1]\), a Chebyshev polynomial \(p(x) = \sum _{i=0}^{n}c_i T_i(x)\), with Chebyshev coefficients \(C=\{c_0,\dots ,c_{n}\}\), can be constructed, which can be used for approximating a \(C^0\)-function f. The corresponding coefficients C are calculated by sampling f on a Chebyshev-Lobato and performing a discrete Fourier transform (DFT) with \({\mathcal {O}}(n\log n)\) time complexity [8]. Sampling at the Chebyshev-Lobato grid, defined over \([-1,1]\) as \(x_{k}=-\cos ({\frac{k\pi }{n}}),\, k=0,1,\dots , n\), guarantees uniform convergence for analytic functions with an approximation error \({\mathcal {O}}\left( e^{-n\mu }\right) \) [4] in the mathematical sense, with n being the polynomial degree and \(\mu \) being a positive constant. Note, that arbitrary compact finite intervals can be transformed to the interval \([-1,1]\) via an affine transformation.

While the Weierstrass Approximation Theorem prescribes the existence of a polynomial degree \(n^\text {opt}_{\epsilon _u}\) with an \(L_\infty \) approximation error of \(\epsilon \) for \(f:[a,b]\rightarrow {\mathbb {R}}\), there is no general approach to find this lower bound degree. Therefore, heuristic approaches, e.g., [1, 3, 7, 9, 15, 18, 27, 35, 36, 46], exist to determine \(n_\epsilon \approx n^\text {opt}_\epsilon \), e.g., using the interstitial error [12] as an approximation of the \(L_\infty \) error.

In our proposed method, we utilize the approach of Aurentz and Trefethen [1] that employs the round-off plateau behavior of the Chebyshev coefficients, and has been well tested for a large variety of functions and is integrated into the widely utilized Chebfun library [17]. Using \(n_\epsilon \), a uniquely determined proxy function \(f_p\) exists as

Machine Precision & Discretization. At this point, it is important to note that in computer science we always operate on the finite set \({\mathbb {M}}\) of machine numbers and that we deal with the machine approximation \(f_m:{\mathbb {M}}\rightarrow {\mathbb {M}}\) of the “real” function f given by the numerical implementation. As we do not address this level of approximation, we assume \(f_m=f\), i.e., \(f_m\) represents the “real” function whose nearest root we are looking for. We, therefore, use f and \(f_m\) for analytic aspects with infinite precision and numerical aspects with finite precision. Moreover, there are limits in transferring “ infinitely precise” analytical concepts to finite precision machine operators and representations, which we will address in the following. Specifically, there is always an underlying limitation relating to the achievable computational precision, e.g., based on the number and magnitude of coefficients.

Several heuristics exist to estimate this approximation accuracy limit \(\epsilon _a\) for Chebyshev proxys [12, 39], where we utilize the estimated proposed by Boyd [12] given as

where \({{\text {mmc}}(f_p)}\) is the maximum magnitude coefficient.

For our proxy construction process, we utilize the adaptive approach of Boyd [12] and the heuristic chopping process of Aurentz and Trefethen [1], which tries to achieve \( \left\| f_m-f_p \right\| _\infty \le \epsilon _u\) by appropriately setting the threshold for the round-off plateau detection. This round-off plateau is the result of the difference in magnitude of coefficients being limited by the machine precision \(\epsilon _m\), as any coefficient smaller than \({{\text {mmc}}(f_p)}/\epsilon _m\) will (most likely) not affect the result of machine precision operations. Therefore, \(\epsilon _u\) can not be chosen below a value that depends on \({{\text {mmc}}(f_p)}\) and the approximation error is bound on both sides

where both limits depend on \({{\text {mmc}}(f_p)}\) [1, 12]. \({\text {mmc}}(f_p)\) is considered as being proportional to the magnitude of the function [12]

where \({\text {mag}}\) denotes the absolute magnitude of the function over the given interval range. Under the assumption of Eq. 4, \(\epsilon _a\) and \(\epsilon _u\) have to strictly relate to \(\epsilon _m {\text {mag}}_{f_m}(a,b)\), i.e., \(\epsilon _u<\epsilon _m\cdot {\text {mag}}_{f_m}(a,b)\) can, in general, not be achieved [12].

Since this proportionality is semi-well understood for many cases, we evaluated the proportionality hypothesis for all test functions to ensure that this also applies to rendering problems. The determination of the maximum magnitude \({\text {mag}}_{f_m}(a,b)= \left\| f_m\vert _{(s,t]} \right\| _\infty \) is challenging for an arbitrary function, as it requires root finding for derivatives. We, therefore, evaluate the assumption indirectly using the maximum magnitude \({\text {mag}}_{f_p}(a,b)\) of the proxy \(f_p\) by applying QR-based root search on the Chebyshev proxy’s derivative (see Sect. 3.2). We evaluated \(\log _{10}({{\text {mmc}}(f_p)}/{{\text {mag}}_{f_p}(a,b)})\) for all 16 functions from Fig. 2 and found that the maximum difference of 0.2, i.e., a difference factor of 5, which occurs for the Bohemian Star test function (Fig. 2b), as shown in Fig. 1. Consequently, we assume that the proportionality is valid for ray casting polynomial surfaces, rational surfaces, analytic algebraic surfaces, algebraic surfaces, and non-algebraic surfaces, which we use for testing (see Sect. 4).

The logarithmic per-pixel ratio \(\log _{10}({{\text {mmc}}(f_p)}/{{\text {mag}}_{f_p}(a,b)})\) for the Bohemian star surface (Fig. 2b), estimated using the proxy

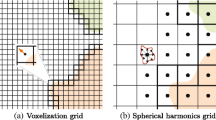

3.2 Root finding for chebyshev proxies

After constructing the proxy function \(f_p\), we now need to find the roots of \(f_p\) for the intersection tests. This can be achieved using the companion matrix M, which is the uniquely defined matrix that has \(f_p\) as its characteristic polynomial. Consequently, the real Eigenvalues of M are the real roots of p. Commonly, for Chebyshev polynomials the Eigenvalues of the companion matrix are computed via a QR algorithm [47], which estimates roots of the polynomial simultaneously in time complexity \({\mathcal {O}}(n^3)\) [11]. Due to this computational complexity and the limited numerical accuracy for large matrices, reducing the polynomial degree has clear benefits. However, simply excluding higher polynomial terms is not feasible, as it would negatively affect the approximation accuracy \(\epsilon _p\).

We utilize the subdivision approach proposed by Boyd [10, 11] that reduces the polynomial degree during the calculation. This approach relies on the concept that, in general, a function \(f_m\) on [a, b] approximated by \(f_p\) with degree n can be alternatively approximated on the intervals [a, d] and [d, b], with \(d\in (a,b)\), with lower degree and similar approximation accuracy. This subdivision process is especially useful for functions f that are \(C^\infty \) everywhere on [a, b] besides a few \(C^k\) points. In this case, the subdivision ultimately excludes the \(C^k\) points and yields intervals over which f is \(C^\infty \) and for which the polynomial degree for approximation is significantly reduced [12].

More precisely, \(f_m:[a,b]\rightarrow {\mathbb {M}}\) is transformed to the canonical interval \([-1,1]\) using an affine mapping [12, 17]. \([-1,1]\) is split at the heuristically chosen value \(d = -0.004849834917525\) [17], and two new approximation functions \(f_p^l\) and \(f_p^r\) are determined over \(\left. f_p\right| _{[-1,d]}\) and \(\left. f_p\right| _{[d,1]}\), respectively. The new proxies \(f_p^l\) and \(f_p^r\) are constructed by evaluating \(f_p\) on a Chebyshev-Lobatto grid of degree n, equal to the degree of \(f_p\), on each sub-interval, with time complexity \({\mathcal {O}}(n^2)\), and applying a DFT, with time complexity \({\mathcal {O}}(n\log n)\), to find the respective coefficients [17]. See, Boyd and Gally [13] for further numerical properties of Chebyshev root searching.

3.3 Improving pre-conditions

As discussed in Sect. 3.1, the error bounds \(\epsilon _a\) and \(\epsilon _u\) both depend on the maximum magnitude coefficient of the polynomial proxy \({\text {mmc}}(f_p)\) and, as \({\text {mmc}}(f_p) \propto {\text {mag}}_{f_m} (a,b)\), they both depend on the maximum magnitude of \(f_m\). It is important to keep in mind that uniformly scaling the function, e.g., searching for roots of \(g:x\rightarrow \frac{x}{1000}f(x)\), does not improve the root searching process as the errors are still bound in the same relative ratio to the maximum magnitudes. Consequently, to achieve smaller \(\epsilon _a\) and \(\epsilon _u\) we have to either exclude regions of large relative magnitude from the root search interval to reduce \({\text {mmc}}(f_p)\), or regions that are difficult to approximate using Chebyshev proxies, i.e., regions with \(C^0\) locations, that require high Chebyshev proxy degrees.

Using the fact that \(f_m:[a,b]\rightarrow {\mathbb {M}}\) has an (unknown) machine Lipschitz constant, yields that \({\text {mag}}_{f_m}(a,b)\) can be reduced by lowering \(f_m(a), f_m(b)\) and \(b-a\). Reducing \(f_m(a)\) and \(f_m(b)\) can be efficiently achieved by excluding segments on either end of the interval that do not contain the first root of the function. Moreover, reducing the root search to a sub-interval \([a_s,b_s] \subsetneq [a,b]\) always reduces the interval length \(b-a\).

As we aim to find the first root \(\xi _0\), we shift \(f_m\) by the approximation error \(\epsilon _a\)] of \(f_p\) to guarantee that \(\xi _0\) will not be missed when using \(f_p\) for root search. This also holds, if \(\xi _0\) is a double root of \(f_m\). The shifted function \(f_p^\epsilon :[a,b]\rightarrow {\mathbb {M}}\) is constructed by directly modifying the Chebyshev coefficients \(c_i^p\) of the proxy function \(f_p\) of \(f_m\):

Note that if \(|f_p(a)|\le \epsilon \) then a was already the approximated first root and the process can be stopped.

Performing this shift requires estimating \(\epsilon _a= \left\| f_m-f_p \right\| _\infty \), which we achieve using Eq. 2. Subsequently, we shift \(f_p\) by \(s\cdot \epsilon _a\), where empirical experiments reveal that \(s = 100\) is sufficient in all our test cases, i.e., no roots of \(f_m\) are missed.

However, this shift is not without drawbacks. The first root \({{\tilde{\xi }}}_0\) of \(f_p^\epsilon \) is an estimate for the actual first root \(\xi _0\) of \(f_m\) that is located left of \(\xi _0\). Depending on the shift value \(s\cdot \epsilon _a\), this offset may be large. For example, \(f(x) = e^{-x}-0.5, x\in [-100,100],\) yields an estimated approximation error of \(\epsilon _a\approx 5.9\cdot 10^{27}\). Moreover, the shift can generate a pseudo-root, i.e., \({{\tilde{\xi }}}_0\) does not correspond to \(\xi _0\). Consequently, we may have \({{\tilde{\xi }}}_0\ll \xi _0\) in specific cases. These problems are addressed in our post-processing (Sect. 3.4) and in our recursive approach (Sect. 3.5).

3.4 Post-processing

Given a root \({{\tilde{\xi }}}\) of the proxy \(f_p\), or \(f_p^\epsilon \), two main categories of errors exist that we try to diminish via post-processing: (i) errors on the proxy, i.e., \({{\tilde{\xi }}}\) may not be the exact first root of \(f_p\), and (ii) errors of the approximation, i.e., \({{\tilde{\xi }}}\) may not be the exact first root of \(f_m\). We utilize two separate approaches, i.e., iterative refinement using Newton’s method and an incremental shifting.

Iterative Refinement. Newton’s method is commonly used to polish estimated roots of polynomial functions [12]. This approach improves the estimate of the first root \({{\tilde{\xi }}}^0={{\tilde{\xi }}}\) by iteratively estimating a new approximate root \({{\tilde{\xi }}}_{n+1}\) for \(f_m\), as

until a convergence criterion has been reached, e.g., \(|f_m({{\tilde{\xi }}}_{n+1})|\le \epsilon \), which can be applied either to the proxy \(f_p\) or \(f_m\) itself.

Applying Newton’s method to \(f_p\) is straightforward [12] as the derivative of \(f_p\) can be calculated directly from the coefficients of \(f_p\), but it does not necessarily improve the root’s accuracy relative to \(f_m\) as the approximation accuracy for \(f_m\) by \(f_p\) remains unchanged (see also Sect. 4). Applying Newton’s method to \(f_m\) directly requires a derivative estimate, e.g., using finite differences, which may not be sufficiently accurate to achieve convergence. Moreover, there is no guarantee that starting the Newton process at \({{\tilde{\xi }}}\) will converge toward the corresponding root of \(f_m\), i.e., it may diverge or converge to a different root, potentially outside the search interval.

Considering the shifted function \(f_p^\epsilon \), the approximate root \({{\tilde{\xi }}}^\epsilon \) is generally left of the first root \(\xi \) of \(f_m\), if \({{\tilde{\xi }}}^\epsilon \) is no pseudo-root. Accordingly, Newton’s method can yield significant improvements, but it may, depending on the specific constellation, also reduce the accuracy considerably, see Sect. 4.

Incremental Shifting. This approach successively evaluates \({{\tilde{\xi }}}_+\) or \({{\tilde{\xi }}}_-\), i.e., the previous or next number in machine precision to \({{\tilde{\xi }}}\), to improve the root estimate of \(f_m\), or f, i.e.,

which is repeated until \({{\tilde{\xi }}}^{i+1}={{\tilde{\xi }}}^i\). This process is guaranteed to improve the error of the root estimate, but it may get stuck in a local minimum. Applying this process to f may incur significant computational costs, as the offset \(\xi -{{\tilde{\xi }}}\) can be large and, thus, may require \(\gg 10^6\) iterations, while applying it to \(f^\epsilon _p\) requires only a few iterations. Therefore, our root finding process applies incremental shifting to \(f_p\) only, see Sect. 3.5.

3.5 Recursive proxy root finding

The main objective in rendering implicit surfaces is the visual quality and correctness; see Sect. 4.2.2. It is, however, hardly possible to robustly determine qualitative failure cases by algorithmic or numerical means. Specifically, locally nearly flat or multiple roots of the per ray scalar function can cause strong visual artefacts; see Sect. 4.3.

We, therefore, propose a manually activatable root searching algorithm that recursively combines the pre-conditioning via shifting (Sect. 3.3), the proxy root finding using Chebyshev approximation (Sect. 3.1), and post-processing refinement procedures (Sect. 3.4), as depicted in Algorithm 1. This recursive procedure works by splitting the initial search interval into more granular intervals that can be processed at higher approximation accuracies. As we are only interested in finding the first root along a ray, we perform this recursion in a depth-first manner, i.e., we evaluate the left-most intervals first, as we can stop the entire process once the first root of \(f_m\) has been found.

We search for sub-intervals where \(f_p\) provides a more accurate approximation to \(f_m\) and that potentially contains a root. For example, given a function \(f_m\) over [a, b], with \(f_m(a) = 100\) and an approximation accuracy of \(\epsilon = 1\), then, instead of searching for roots of \(f_m\) directly, searching for intervals where \(f_m(x) \le \epsilon \) and subsequently re-approximating \(f_m\) over these intervals, yields a more accurate and efficient root search in most cases.

To perform this interval division, we shift \(f_m\) by the approximation error of \(f_p\) over the entire interval by

where \(\epsilon _a=n^2 \epsilon _m {\text {mmc}}(f_p)\) (see Eq. 2) is a lower bound for \( \left\| f_p-f_m \right\| _\infty \) and s is set to 100; see also Sect. 3.3. No root for \(f_p^\sigma \) is a root of f, but indicates a location at which \(|f_p|\) drops below the threshold \(|\sigma |\). The roots of \(f_p^\sigma \) define sub-intervals with lower magnitudes, and, thus, can increase the approximation accuracy. For now, we assume that \(f_m(a)>\sigma \).

If no root was found for \(f_p^\sigma \) then no root can exist for \(f_m\), as the function remains above the threshold \(\sigma \) everywhere in absolute magnitude and has no sign changes. If a single root \({\bar{\xi }}_0\) is found for \(f_p^\sigma \), this marks the transition from \( \left| f_p \right| > \sigma \) to \( \left| f_p \right| <\sigma \). In such cases, a new root search can be performed on the interval \([{\bar{\xi }}_0,b]\) as \([a,{\bar{\xi }}_0)\) can not contain a root of \(f_m\). If two roots \({\bar{\xi }}_0\) and \({\bar{\xi }}_1\) are found for \(f_p^\sigma \), \( \left| f_p \right| <\sigma \) is only valid between \({\bar{\xi }}_0\) and \({\bar{\xi }}_1\). Consequently, a root of \(f_m\) can only occur in this interval, and a new root search is performed on \([{\bar{\xi }}_0,{\bar{\xi }}_1]\). Finally, if there exist three or more roots \(\{{\bar{\xi }}_0,{\bar{\xi }}_1,{\bar{\xi }}_2,\dots \}\) for \(f_p^\sigma \) then, analogously, roots can occur on the intervals \([{\bar{\xi }}_0,{\bar{\xi }}_1]\) and \([{\bar{\xi }}_2,b]\), where a root search is performed on each sub-interval.

Overall, this process recursively narrows down onto the first root, as given in Algorithm 1. As the approximation gets more accurate with each subdivision, it would be possible to reduce the scaling parameter s in each iteration. However, there was no practical benefit in our experiments and, thus, we use \(s=100\) in all cases.

In the case of \(|f_m(a)|\le \sigma \), the argumentation above is inverted, e.g., if a single root \({\bar{\xi }}_0\) was found then a root exists in the interval \([a,{\bar{\xi }}_0]\) instead of \([{\bar{\xi }}_0,b]\). However, if no roots were found and \(f(a)\le \sigma \), then we assume that a already was a root of \(f_m\).

4 Evaluation

Implementation and system. We implemented our proposed approach, including the adaptive Chebyshev approximation, in an open source C++ program included in the supplementary material. We perform our evaluations on a system with an AMD Ryzen 3950X CPU, an Nvidia 3090 RTX GPU and 64 GiB of host memory. The actual rendering performed on the CPU using double precision arithmetic, while the GPU is utilized only to visualize the results. Note that there are no technical limitations that would prevent implementing our procedure on a GPU, however, due to limitations regarding double precision arithmetic performance on consumer GPUs, such an implementation would not be widely useful. All images are ray traced at a \(1920\times 1080\) pixel resolution with no antialiasing or subsampling applied.

Test functions. The 17 implicit surfaces used for the evaluation are shown in Figs. 2 and 7. Their definitions are given in Appendix B, and meta information for the functions is provided in Table 1. The test surface functions are of five different classes. The classes are (a) polynomial surfaces (\(C^\infty \) everywhere) that can be represented exactly using Chebyshev proxies, (b) rational surfaces (\(C^\infty \) outside sparse singularities), e.g., using division operations, (c) analytic algebraic surfaces (\(C^\infty \)), (d) algebraic surfaces (\(C^\infty \) outside sparse singularities), utilizing, e.g., the square root function, and (e) non-algebraic (transcendental) surfaces that may be only \(C^0\) in large regions of the image, e.g., using \({\text {max}}\) and \({\text {abs}}\).

Evaluation procedure. The evaluation consists of three distinct parts: (1) Evaluation of the proposed rendering approach on its own. This comprises the evaluation of (1.a) the required polynomial degrees and the resulting coefficient distributions (Sect. 4.1.1), and (1.b) the computational performance and effects of post-processing (Sect. 4.1.4). (2) Comparisons of the proposed rendering approach to existing rendering techniques, including (2.a) a discussion of the various visible failure modes occurring in rendering of implicit surfaces in Sect. 4.2.1, and, (2.b) a quantitative comparisons against baseline methods separated by the five categories of implicit surfaces (Sect. 4.2.2). (3) An evaluation of our recursive root searching approach applied to the Bohemian Star function in Sect. 4.3.

Precision control. Our proposed rendering technique depends on the user desired precision parameter \(\epsilon _u^*\), which transforms to \(\epsilon _u=10^{\epsilon _u^*}\epsilon _m\) (see Sect. 3.1). While \(\epsilon _u^*=0\) would be ideal in all cases, the process is bound by the approximation accuracy \(\epsilon _a\) (see Eq. 3). Thus, too small \(\epsilon _u^*\) enforces infeasible high polynomial degrees. We, therefore, apply a heuristic by choosing \(\epsilon _u^*\) based on the portion of the image that is not \(C^\infty \), as these regions require “allocate” the majority of computational resources. For surface functions that are \(C^\infty \) (besides a few points; Fig. 2j), we set \(\epsilon _u^*= 1\), for functions with discontinuities in small regions, we set \(\epsilon _u^*= 4\), and for functions with discontinuities in most of the image, i.e., the cube function (see Fig. 2p), we set \(\epsilon _u^*= 6\). Note that for polynomial functions of low degrees, e.g., the sphere function (Fig. 2a), the underlying function can be exactly represented using a Chebyshev proxy \(f_p\) and thus the choice of \(\epsilon _u^*\) does not matter.

4.1 Evaluation of the proposed method

We evaluate our method regarding different properties specific to our method, focusing on both numerical aspects, e.g., the polynomial degrees required for different functions, as well as computational aspects, e.g., the computational cost of constructing Chebyshev proxies.

4.1.1 Polynomial degree distributions

Regarding the polynomial degree of the proxy \(f_p\), we need to consider both, the maximum degree of all pixels, which requires the “highest approximation effort” for a pixel-ray, and the distribution of the degrees, which relates more to the average approximation effort for a surface. As mentioned before, using a Chebyshev-Lobato grid guarantees uniform convergence for analytic functions with an approximation error \({\mathcal {O}}\left( e^{-n\mu }\right) \) [4], with n being the polynomial degree and \(\mu \) being a positive scaling coefficient and approximating non analytic functions, i.e., functions that are not \(C^\infty \) everywhere, is more challenging [12]. Consequently, functions that are polynomial, e.g., a sphere, require only few coefficients to be approximated, whereas \(C^0\)-continuous non-algebraic functions require significantly more coefficients.

Table 1 describes the distribution of polynomial degrees across different surface functions, revealing the strong variation in the required polynomial degree. In general, we observe that the polynomial surfaces require only very low polynomial degrees, as they can be represented exactly using a Chebyshev proxy, which is in line with the expected behavior. Analytic surfaces require moderate polynomial degrees everywhere, with a relatively low ratio of the maximum polynomial degree to the median polynomial degree, which is in line with the expected behavior as the function is only difficult to approximate in some regions. Rational surfaces require higher polynomial degrees everywhere and exhibit a much higher ratio of maximum to median polynomial degree. This behavior is exacerbated for algebraic surfaces, e.g., the Morph surface (Fig. 2j) exhibits a relatively low median degree of 25, but requires at worst a degree 5755 polynomial, where the high degree polynomials occurs for rays close to the four points in which the function is \(C^0\)-continuous, see Fig. 2j. Finally, for non-algebraic surfaces the overall required polynomial degree increases significantly with similar outliers in the maximum polynomial degree, e.g., the Cut (min) surface (Fig. 2n) requires high degree approximations along the seam between the two input surfaces, shifting the overall median degree up to 508, while yielding a significantly lower approximation accuracy than the Morph surface Fig. 2j.

4.1.2 Proxy construction

The computational costs of the proxy construction are mostly determined by the sampling of the surface function on a Chebyshev–Lobatto grid and the subsequent DFT. In our proposed rendering approach, we utilize the adaptive Chebyshev proxy construction of Boyd [12], where the function is evaluated on Chebyshev-Lobatto grids of size \(2^k+1\), starting with \(k=4\), and performing a subsequent chopping of the coefficients using the approach of Aurentz and Trefethen [1]. Performing the DFT of the Chebyshev-Lobato has time complexity \({\mathcal {O}}(n\log n)\), independent of the computational complexity of f. Accordingly, the computational requirements of constructing the proxy function are tightly coupled to the polynomial degree of \(f_p\). Furthermore, as our test surface functions are computationally efficient to evaluate, the DFT is dominant in the overall computational requirements of the proxy construction in our experiments. For an overview of the computational requirements for each method see Fig. 3, and for more detailed results for each individual implicit function, please see the supplementary material.

4.1.3 Root finding

Regarding the computational performance of the root searching, we observe that the overall computational cost mainly depends on the polynomial degree of \(f_p\) and not on the underlying surface function. However, the overall computational costs are significantly higher due to the computational complexity of the QR decomposition of \({\mathcal {O}}(n^3)\), which is dominating the \({\mathcal {O}}(n\log n)\) for the DFT in the proxy construction. For an overview of the computational requirements for each method see Fig. 4, and for more detailed results for each individual implicit function, please see the supplementary material.

4.1.4 Post-processing

To assess the impact of the post-processing, we investigate three exemplary functions, i.e., the polynomial surface Sphere, the rational surface Blend II, and the algebraic surface Union (soft), by evaluating the implicit surface function f at the estimated roots \({{\tilde{\xi }}}\) and \({{\tilde{\xi }}}^\epsilon \) found for the proxy \(f_p\) and the shifted proxy \(f_p^\epsilon \) function, respectively, see Table 2. Note that the quartiles \(Q_1\) and \(Q_3\) indicate errors away from and toward the ray origin, respectively, as the functions are positive outside the surface. For the Sphere surface, the error without any shifting is very low, i.e., in the order of \(\epsilon _m\), where applying the post-processing yields a median error of exactly zero. Finding the root for the shifted function \(f_p^\epsilon \) yields a positive error, as the root is shifted toward the ray origin. Applying the post-processing yields errors identical to the non-shifted and post-processed function.

For the Blend II surface, the behavior is similar for both the non-shifted and shifted variant, i.e., the error for the non-shifted variant is close to machine precision whereas shifting the function yields a similar increase in error magnitude of about 6 orders of magnitude. We see that applying a Newton iteration based post-processing on the shifted function, yielding the root estimate \({{\tilde{\xi }}}_N\), does not yield the same accuracy as for the non-shifted variant of the Blend II surface, in fact, the median error magnitude increases by about an order of magnitude.

For the Union (soft) surface, the error of the non-shifted variant is significantly higher than for the previous two functions and can become \(f({{\tilde{\xi }}})=-8.76 \times 10^{-4}\) in outlier cases. The shifted variant yields a smaller relative absolute error as the non-shifted variant, i.e., the error only increases by 3 orders of magnitude regarding the median instead of 4 or 8. Similar to the other examples, the estimated root is also pushed toward the ray origin and applying the post-processing yields a significant improvement in the error magnitudes for both variants regarding \(Q_1\), median and \(Q_3\), but the maximum error magnitude increases significantly. This behavior indicates that for this specific function, the estimated root \({{\tilde{\xi }}}\) of \(f_p\) is not a good starting point for a Newton-based post-processing and generates outliers with significantly higher error.

Overall, applying the shift to the proxy function yields the expected behavior, i.e., the estimated intersection is pushed toward the ray origin, and applying a Newton iteration-based post-processing does significantly increase the accuracy in most cases. However, the post-processing, also in combination with shifting, does not robustly improve the accuracy in all cases. Accordingly, the post-processing should be combined with a sanity check, i.e., if the absolute magnitude of f actually decreased after applying the post-processing.

4.2 Comparison against baseline methods

Examples of the different qualitative errors observed in ray-surface intersections for the Bohemian Star function (see Fig. 2b). The left image (using Ray Marching) displays a jagged outline of the surface and a discontinuous surface. The middle image (using Ray Marching) displays large regions of missed surface. The right image (using AMP-Taylor) displays significant amounts of false surfaces

To compare our method against existing approaches, we distinguish existing methods based on their requirements regarding the prior knowledge of Lipschitz constants and derivatives, and the number of tuning parameters to be specified per surface function; see Table 3. We compare against two methods that do not require any prior knowledge, i.e., ray marching (Ray March) and Adaptive Marching Points (AMP-Sign) [44], that uses a sign-test to isolate roots. All other methods either require Lipschitz constants (Sphere Tracing (Sphere) [26] and Segment Tracing (Segment) [21]), derivatives (Adaptive Marching Points (AMP-Taylor) [44] using a Taylor-test to isolate roots and a piecewise polynomial approach (Sherstyuk) [43]), or both (LG Surfaces approach (LG) [29]).

In general, there is no direct option to estimate the Lipschitz constant for a given black-box function \(f:[a,b]\rightarrow {\mathbb {M}}\), i.e., finding the maximum derivative of f, besides finding all roots of \(f^{\prime \prime }\) on [a, b]. In other words, computing the Lipschitz constant of f is as expensive as computing all roots of f. Consequently, it is not possible to apply methods that require Lipschitz constants, such as sphere tracing, without a second method that does not require Lipschitz constants to estimate the same. Note that while approximations could be used for the Lipschitz constant, significantly underestimating the Lipschitz constant would lead to missing surface intersections and overestimating Lipschitz constants would increase computational costs. Accordingly, using an accurate value for the Lipschitz constants is important for a fair comparison with baseline methods that rely on this constant.

Exclusively for the purpose of comparing with methods that require Lipschitz constants, e.g., Sphere Tracing, we construct a Chebyshev proxy \(f_p\) of degree \(n_\epsilon \) for all surface functions f and estimate their Lipschitz constant using our methods for root finding. Our results indicate, that the high root finding accuracy of our approach yields Lipschitz constants with comparably marginal errors.

4.2.1 Qualitative evaluation

When evaluating the different surface functions, we identified three distinct categories of qualitative errors in the visualized surface (see Fig. 5): (i) Deformations, e.g., the surface was visible dilated or showed a jagged instead of a smooth boundary/silhouette, (ii) Holes in the surface, e.g., a rendering method missed the ray-surface intersection, and (iii) False Surfaces, e.g., the rendering method found false surface intersections. A good example of the various kinds of error modes is the Bohemian Star function, due to the large amount of multiple roots that comprise the surface of the function (see Fig. 6). When using the shifted function \(f_p^\sigma \) as described in Sect. 4.1.4, our method does not exhibit any noticeable qualitative errors in the surface rendering, however, if no shifting was applied then our method also misses some surface intersections (see Fig. 9a); see also the detailed discussion in Sect. 4.3.

As depicted in Fig. 6, the only other methods that do not display any noticeable errors for the Bohemian Star are the Sphere Tracing and Segment Tracing approaches, which require additional knowledge about the Lipschitz constant. Using a naïve ray marching approach with sign tests yields significant gaps in the surface, with similar behavior as AMP-sign. While the AMP-Taylor approach yields no holes in the surface, it generates a large amount of false surfaces close to the multiple roots of the surface. The approach by Sherstyuk [43], yields both, false surfaces and holes in the surface, especially close to the coordinate axis. Finally, LG surfaces exhibited some false surfaces, but this error is mainly due to difficulties in estimating the Lipschitz constant and the gradient of the function, and is not necessarily a conceptual problem of the method itself. The overall qualitative results are summarized in Table 4, which highlights that the only two methods not exhibiting any visually noticeable problems are our proposed method and Sphere Tracing, which requires a globally known Lipschitz constant and which can be arbitrarily slow for grazing rays.

4.2.2 Quantitative evaluation

Evaluate the quantitative accuracy of our method, and the baseline methods, is done by evaluating an intersection point \({{\tilde{\xi }}}\) using the absolute value of the actual surface function f, i.e., \(|f({{\tilde{\xi }}})|\). The resulting error distributions are visualized as box plots in Fig. 8. It is important to note that the actual magnitude of the error \(|f({{\tilde{\xi }}})|\) depends on the Lipschitz constant of the underlying surface function. The Bohemian Star (see Fig. 6) and Kiss (see Fig. 7) surfaces, for instance, have large Lipschitz constants (see Table 1), which means that a small error in the estimation of \({{\tilde{\xi }}}\) may yield a large error in the actual function magnitude. Furthermore, to evaluate the computational performance, we only measure the actual root searching process, i.e., for methods that require evaluating Lipschitz constants (either locally or globally), the execution time of these steps is excluded as there is no fair comparison possible as finding the Lipschitz constant of a black-box function is a broader problem that is beyond the scope of our paper. Moreover, as we evaluate the Lipschitz constant, and derivatives along rays, using a Chebyshev proxy (of high numerical accuracy), including this in the evaluation of baseline methods would lead to an unfair comparison regarding computational performance as our method does not require this additional root search and thus has to be necessarily faster.

General Observations. Considering the results in Fig. 8, we observe several effects across all surface categories, which we summarize here. Firstly, Sphere and Segment tracing exhibit a very tight spread on the achieved error in the rendering which is primarily based on the stopping criterion of these methods, i.e., they use an absolute threshold value \(\epsilon \) and make no further attempts in improving the results after achieving this threshold. Conversely, these methods tend to show large variations in computational performance, especially for Sphere Tracing, as their computational performance is directly coupled to the Lipschitz constant of the underlying surface and especially for grazing rays Sphere Tracing required up to \(10^{11}\) iterations. Secondly, while the LG surface approach also shows a similar thresholding behavior for the error, the observed accuracy can be much greater as this is an upper bound for the method and not a stopping criterion, i.e., the error can be much lower than the threshold value. Finally, the ray marching approaches (Ray-March, AMP-Sign, AMP-Taylor and Sherstyuk) show relatively consistent computational performance across the different surfaces as their performance only directly depends on the computational cost of evaluating f.

Polynomial Surfaces. Our proposed method achieves a numerical accuracy comparable to the best baseline methods, e.g., Sphere Tracing. Regarding the computational performance. our method is better than most methods, i.e., only Sphere Tracing and the adaptive marching point approaches occasionally outperform our method. For this surfaces category, the Lipschitz constants can be fairly large and, consequently, the computational performance of Sphere Tracing can vary greatly for different parts of the rendered image due to grazing rays. Finally, LG-Surfaces demonstrates the worst overall computational performance in this category.

Rational Surfaces. For this category, our proposed method outperforms all baseline methods considering the achieved numerical accuracy by several orders of magnitude. However, our methods has the second highest computational cost, i.e., only LG-Surfaces exhibits higher computational efforts.

Analytic Surfaces. In this category, the performance of all methods is comparable to the rational surfaces with a few notable differences. Our proposed method performs notably worse for the exponential cube function regarding numerical accuracy, as the function is approximated with a much lower polynomial degree, than the other analytic surfaces, see Table 1. Moreover, as this function also has a Lipschitz constant that is noticeably smaller than the other surfaces of this category, Sphere Tracing also performs notably worse, regarding computational performance. Finally, the computational performance of our proposed methods and of LG-Surfaces is comparable across the category with all other methods performing noticeably better.

Algebraic Surfaces. This category shows behavior analogous to the analytic surfaces in quantitative aspects and requires no further specialized discussion.

Non-Algebraic Surfaces. In this category, our method still yields a better numerical accuracy than the other non-Lipschitz methods, i.e., Ray Marching and adaptive marching points, while performing worse than all other baselines by relatively small margins, with the single exception of the Sherstyuk approach, which performs exceptionally well for the cube surface. Regarding computational complexity, our proposed rendering approach performs worse than most baselines methods besides Segment Tracing and LG-Surfaces. It also can be observed, that there is a wide spread of computational performance, specifically for Segment Tracing and LG for surface category.

4.3 Recursive root searching

The Bohemian Star is a challenging implicit surface function as it comprises axis aligned lines and diagonally oriented curves of roots with even multiplicity, see Sect. 3.5 and Fig. 9. Visualizing the bulk surface is straight forward as these regions have single roots, i.e., there is a change of sign with large enough magnitudes on either side of the surface. On \(y=0\), \(f^\text {star}(x,y,z)\) gets

which is 0 if x and/or z are 0, and all of these roots have an even multiplicity. Evaluating this function close to these axes yields discretization errors of 0, leading to root intervals for f. For \(x=\pm z\), \(f^\text {star}(x,y,z)\) gets

which is zero for \(y=\pm \sqrt{\sqrt{x^2\left( x^2+4\right) }-x^2}\), see the dilated surface in Fig. 9 for a visualization of these curves of roots with even multiplicity. These curves of roots are numerically rarely rounded to 0 in \({\mathbb {M}}\) if evaluated in proximity to these curves. Using our recursive root search approach, a few single pixels containing these surface segments can be identified. Consequently, very few, if any, rays hit these points making them difficult to visualize, even with knowledge of their existence. Applying a shift to the function ensures that even multiplicity roots are found, and the surface is closed in the main region (Fig. 9, bottom detail view). In this case the lines of roots along the main axes are clearly visible, whereas the diagonal curves are not clearly visible as no rays intersect these curves. Note, that applying Newton’s method to pseudo-roots as post-processing in case of roots with even multiplicity may diverge significantly, as, for instance, there are no roots along the ray to converge to.

5 Conclusions

In this paper we presented a novel rendering approach for black-box implicit surface functions based on Chebyshev proxy function. Our approach can handle a wide variety of surfaces, ranging from polynomial to non-algebraic surfaces, all without requiring any knowledge of the underlying function. Furthermore, by utilizing numerically accurate and fast to evaluate Chebyshev proxy functions, our method is able to outperform methods that require similar amounts of knowledge of the underlying implicit function regarding numerical accuracy. Moreover, our proposed rendering method achieves better visual results and numerical accuracy than methods with similar requirements on the implicit function being rendered. Overall, our proposed method can serve as a useful baseline method for the evaluation of the numerical accuracy of other methods and can be used to accurately visualize complex implicit surfaces, e.g., polynomial surfaces with large regions of very thin surfaces, without requiring any derivation of properties of the function, such as their local Lipschitz constants. In the future we intend to expand this approach, e.g., using beam tracing, to reliably find small features that no individual ray is likely to intersect.

Data availability

The full source code to reproduce the dataset will be available publically after publication at https://github.com/wi-re/chebyshevSurfaces, this also includes high resolution images of all analyzed rendered images for reference.

References

Aurentz, J.L., Trefethen, L.N.: Chopping a chebyshev series. ACM Trans. Math. Softw. 43(4), 1–21 (2017)

Aydinlilar, M., Zanni, C.: Fast ray tracing of scale-invariant integral surfaces. In: Computer Graphics Forum, Wiley Online Library (2021)

Battles, Z., Trefethen, L.N.: An extension of matlab to continuous functions and operators. SIAM J. Sci. Comput. 25(5), 1743–1770 (2004)

Bernstein, S.: Quelques remarques sur l’interpolation. Math. Ann. 79(1), 1–12 (1918)

Blinn, J.F.: A generalization of algebraic surface drawing. ACM Trans. Graph. 1(3), 235–256 (1982)

Bloomenthal, J., Bajaj, C., Blinn, J., Wyvill, B., Cani, M.-P., Rockwood, A., Wyvill, G.: Introduction to implicit surfaces. Morgan Kaufmann, (1997)

Boyd, J.P.: A chebyshev polynomial interval-searching method (“lanczos economization’’) for solving a nonlinear equation with application to the nonlinear eigenvalue problem. J. Comput. Phys. 118(1), 1–8 (1995)

Boyd, J.P.: Chebyshev and Fourier spectral methods. Courier Corporation (2001)

Boyd, J.P.: Computing zeros on a real interval through chebyshev expansion and polynomial rootfinding. SIAM J. Numer. Anal. 40(5), 1666–1682 (2002)

Boyd, J.P.: Computing real roots of a polynomial in chebyshev series form through subdivision. Appl. Numer. Math. 56(8), 1077–1091 (2006)

Boyd, J.P.: Computing real roots of a polynomial in chebyshev series form through subdivision with linear testing and cubic solves. Appl. Math. Comput. 174(2), 1642–1658 (2006)

Boyd, J.P.: Solving Transcendental Equations: The Chebyshev Polynomial Proxy and Other Numerical Rootfinders, Perturbation Series, and Oracles, vol. 139. SIAM (2014)

Boyd, J.P., Gally, D.H.: Numerical experiments on the accuracy of the chebyshev-frobenius companion matrix method for finding the zeros of a truncated series of chebyshev polynomials. J. Comput. Appl. Math. 205(1), 281–295 (2007)

Caprani, O., Hvidegaard, L., Mortensen, M., Schneider, T.: Robust and efficient ray intersection of implicit surfaces. Reliable Comput. 6(1), 9–21 (2000)

Clenshaw, C.W., Curtis, A.R.: A method for numerical integration on an automatic computer. Numer. Math. 2(1), 197–205 (1960)

Collins, G. E., Loos, R.: Real zeros of polynomials. In: Computer Algebra. Springer, pp. 83–94 (1983)

Driscoll, T. A., Hale, N., Trefethen, L. N., editors.: Chebfun guide, Pafnuty Publications, Oxford (2014)

Fraser, W., Wilson, M.: Remarks on the clenshaw-curtis quadrature scheme. SIAM Rev. 8(3), 322–327 (1966)

Fryazinov, O., Pasko, A., Comninos, P.: Fast reliable interrogation of procedurally defined implicit surfaces using extended revised affine arithmetic. Comput. Graph. 34(6), 708–718 (2010)

Galaad Team, U. o. N. S. A.: Implicit algebraic surfaces. Accessed on 25 Sep 2021

Galin, E., Guérin, E., Paris, A., Peytavie, A.: Segment tracing using local lipschitz bounds. Comput. Graph. Forum 39(2), 545–554 (2020)

Génevaux, J.-D., Galin, E., Peytavie, A., Guérin, E., Briquet, C., Grosbellet, F., Benes, B.: Terrain modelling from feature primitives. Comput. Graph. Forum 34(6), 198–210 (2015)

Gomes, A., Voiculescu, I., Jorge, J., Wyvill, B., Galbraith, C.: Implicit Curves and Surfaces: Mathematics, Data Structures and Algorithms. Springer Science & Business Media (2009)

Gourmel, O., Pajot, A., Paulin, M., Barthe, L., Poulin, P.: Fitted bvh for fast raytracing of metaballs. Comput. Graph. Forum 29(2), 281–288 (2010)

Hanrahan, P.: Ray tracing algebraic surfaces. In: Proceedings of the 10th annual conference on Computer graphics and interactive techniques, pp. 83–90 (1983)

Hart, J.C.: Sphere tracing: a geometric method for the antialiased ray tracing of implicit surfaces. Vis. Comput. 12(10), 527–545 (1996)

Jacobs, S.J.: A pseudospectral method for two-point boundary value problems. J. Comput. Phys. 88(1), 169–182 (1990)

Jin, X., Tai, C.-L., Zhang, H.: Implicit modeling from polygon soup using convolution. Vis. Comput. 25(3), 279–288 (2009)

Kalra, D., Barr, A.H.: Guaranteed ray intersections with implicit surfaces. ACM Siggraph Computer Graphics 23(3), 297–306 (1989)

Kanamori, Y., Szego, Z., Nishita, T.: Gpu-based fast ray casting for a large number of metaballs. Comput. Graph. Forum 27(2), 351–360 (2008)

Keeter, M.J.: Massively parallel rendering of complex closed-form implicit surfaces. ACM Trans. Graph. 39(4), 141–1 (2020)

Keinert, B., Schäfer, H., Korndörfer, J., Ganse, U., Stamminger, M.: Enhanced sphere tracing. STAG Smart Tools Graph. 8, 4 (2014)

Knoll, A., Hijazi, Y., Hansen, C., Wald, I., Hagen, H.: Interactive ray tracing of arbitrary implicits with simd interval arithmetic. In: 2007 IEEE Symposium on Interactive Ray Tracing, IEEE, pp. 11–18 (2007)

Knoll, A., Hijazi, Y., Kensler, A., Schott, M., Hansen, C., Hagen, H.: Fast ray tracing of arbitrary implicit surfaces with interval and affine arithmetic. Comput. Graph. Forum 28(1), 26–40 (2009)

Mason, J.C., Handscomb, D.C.: Chebyshev Polynomials. CRC Press (2002)

Mavriplis, C.: Adaptive mesh strategies for the spectral element method. Comput. Methods Appl. Mech. Eng. 116(1–4), 77–86 (1994)

Mitchell, D.P.: Robust ray intersection with interval arithmetic. Proc. Graph. Interface 90, 68–74 (1990)

Nishita, T., Nakamae, E.: A method for displaying metaballs by using bézier clipping. Comput. Graph. Forum 13(3), 271–280 (1994)

O’Hara, H., Smith, F.J.: Error estimation in the clenshaw-curtis quadrature formula. Comput. J. 11(2), 213–219 (1968)

Rawat, P. S., Sukumaran-Rajam, A., Rountev, A., Rastello, F., Pouchet, L.-N., Sadayappan, P.: Associative instruction reordering to alleviate register pressure. In: SC18: International conference for high performance computing, networking, storage and analysis, IEEE, pp. 590–602 (2018)

Runge, C.: Über empirische Funktionen und die Interpolation zwischen äquidistanten Ordinaten. Zeitschrift für Mathematik und Physik 46(224–243), 20 (1901)

Seyb, D., Jacobson, A., Nowrouzezahrai, D., Jarosz, W.: Non-linear sphere tracing for rendering deformed signed distance fields. ACM Trans. Graph. 38, 6 (2019)

Sherstyuk, A.: Fast ray tracing of implicit surfaces. Comput. Graph. Forum 18(2), 139–147 (1999)

Singh, J.M., Narayanan, P.: Real-time ray tracing of implicit surfaces on the GPU. IEEE Trans. Vis. Comput. Graph. 16(2), 261–272 (2009)

Singh, J.M., Wasnik, P., Ramachandra, R.: Hessian-based robust ray-tracing of implicit surfaces on gpu. In: SIGGRAPH Asia 2018 Technical Briefs. ACM, 1–4 (2018)

Sloan, I., Smith, W.: Product integration with the clenshaw-curtis quadrature scheme. SIAM Rev. 8, 322–327 (1980)

Trefethen, L.N., Bau, D., III.: Numerical Linear Algebra, vol. 50. Siam (1997)

Van Wijk, J.J.: Ray tracing objects defined by sweeping a sphere. Comput. Graph. 9(3), 283–290 (1985)

Weierstrass, K.: Über die analytische Darstellbarkeit sogenannter willkürlicher Functionen einer reellen Veränderlichen. Sitzungsberichte der Königlich Preußischen Akademie der Wissenschaften zu Berlin 2, 633–639 (1885)

Wikipedia. Implicit algebraic surfaces. Accessed 25 Sep 2021

Funding

Open Access funding enabled and organized by Projekt DEAL.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Source Code

The source code will be available publically after publication at https://github.com/wi-re/chebyshevSurfaces.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix A: Finite precision root search

In general, the set of all roots for a \(C^0\) continuous function \(f:[a,b]\subset {{\mathbb {R}}}^2\rightarrow {\mathbb {R}}\), is defined as

Our goal is to identify the first, i.e., leftmost root. Note that we ignore the cases of \(f(a)=0\) and \(f(b)=0\) as these can be readily detected and require no specific treatment. Assuming the cardinality of \({\mathcal {R}}(f)\), \(n>0\), we strictly order \({\mathcal {R}}(f)\) the roots \(\xi _i\) as \(a<\xi _0<\ldots \xi _{n-1}< b\) and partition [a, b] into sub-intervals \(\Xi _i\)

Consequently, the \(i-\)th root \(\xi _i\) has two adjacent intervals \(\Xi _i\) and \(\Xi _{i+1}\), which can be used to describe the behavior of the function around the root \(\xi _i\). We define the sign of the interval \({\text {sgn}}_f(\Xi )\) and the magnitude of the function \({\text {mag}}_f(\Xi )\) over the interval \(\Xi = (s,t]\) as

We furthermore define the dynamic range of a root \(\xi _i\) as

which describes the relative range of values before and after a root compared to the overall magnitude of the function. Boyd [12] shows that, a Chebyshev proxy \(f_p\) of f is bound in its precision relative to the supremum of the function over the entire interval [a, b], i.e., \( \left\| f_{p,(a,b]}-f_{(a,b]} \right\| _\infty \approx \left\| f_{(s,t]} \right\| _\infty \epsilon \), that is, the highest achievable dynamic range by an approximation is bound by \(\epsilon \), which for \(\epsilon = \epsilon _m\) in double precision arithmetic is approximately 16.5.

The explicit determination of \({\mathcal {R}}(f)\) for \(f:[a,b]\rightarrow {\mathbb {R}}, \;a,b\in {\mathbb {R}}\) is not generally possible within finite precision, i.e., over \({\mathbb {M}}\). Therefore, f is discretized using a machine implemented variant \(f_m:[a,b]\rightarrow {\mathbb {M}}\), assuming that \(a,b\in {\mathbb {M}}\), utilizing finite precision arithmetic over the machine numbers \({\mathbb {M}}\). Using \(f_m\) for root finding for f needs specific consideration.

Note, that in the following, all variables are assumed to be in \({\mathbb {M}}\). Discretizing f, yielding \(f_m\), and subsequently approximating \(f_m\) by \(f_p\) involves four types of errors:

-

1.

Domain discretization, when moving from \([a,b]\subset {{\mathbb {R}}}\) to \([a,b]\subset {\mathbb {M}}\)

-

2.

Image discretization, when outputting function image values in \({\mathbb {M}}\) instead of \({\mathbb {R}}\)

-

3.

Evaluation errors due to finite precision operations used during function evaluation

-

4.

Approximation errors from \(f_m\) to \(f_p\)

1.1 Appendix A.1: Domain discretization

Domain discretization of \([a,b]\subset {{\mathbb {R}}}\) gets \([a,b]\subset {\mathbb {M}}\), and requires handling any root \(\xi \in [a,b]{\setminus }{\mathbb {M}}\). To handle intervals of real numbers between adjacent machine numbers, we define the higher \(x_+\) and lower \(x_-\) of \(x\in {\mathbb {M}}\) as

Given the rounding operator \(\lfloor x_{\mathbb {R}}\rceil :{\mathbb {R}}\rightarrow {\mathbb {M}}\) that yields the closest value in \({\mathbb {M}}\) for \(x\in {\mathbb {R}}\), we define the machine precision interval \([x_l,x_r]\) for a real number \(x\in {\mathbb {R}}\) as

Operating on \({\mathbb {M}}\), a root \(\xi \in ]a, b[{\setminus }{\mathbb {M}}\) is not captured by the definition of \({\mathcal {R}}_f\) in Eq. A.1. More precisely, \(\xi \in ]a, b[\setminus {\mathbb {M}}\) can only be captured, if \({\text {sgn}}(f(\xi _l)) \ne {\text {sgn}}(f(\xi _r))\), for which the intermediate value theorem guarantees the existence of a root in \((\xi _l, \xi _r)\) for \(C^0\)-continuous functions. For all other cases, i.e., \(f(\xi _l)=0\), \(f(\xi _r)=0\), or \({\text {sgn}}(f(\xi _l)) = {\text {sgn}}(f(\xi _r))\), no conclusion can be made regarding roots within \((\xi _l, \xi _r)\). Accordingly, the definition in Eq. A.1 is expanded to

Lipschitz argument. While we focus on rendering functions without any knowledge about Lipschitz constants, some optimizations can be applied for Lipschitz continuous functions (see Sect. 3.3). Given that f is Lipschitz continuous with Lipschitz constant \(k<\infty \), i.e.,

a necessary condition for the existence of a root within the interval [s, t] can be deduced from \(f:[s,t]\rightarrow [ \max \{f(s),f(t)\} - k (t - s), \min \{f(s), f(t)\} + k (t - s)\}]\). Specifically, the condition

is required for f to have a root in [s, t].

1.2 Appendix A.2: Image discretization

Image discretization refers to the mapping of the output values of f from \({\mathbb {R}}\) to \({\mathbb {M}}\), yielding \(f_d(x) = \lfloor f(x)\rceil \). As \(0\in {\mathbb {M}}\), no zeroes get lost, but as the mapping is non-bijective, non-zero values can be mapped to zero. More precisely, any value \({0_-}/{2}< f(x) < {0_+}/{2}\) will be mapped to zero when using \(f_d\) instead of f, yielding a pointwise error function \(e_\text {round}:{\mathbb {M}}\rightarrow {\mathbb {R}}\):

Important note. It is not possible to distinguish if a value x with \(f_d(x)=0\), is an actual root, i.e., \(f(x)=0\), or only an apparent root due to rounding, using finite precision arithmetic. For example, consider \(f(x)=x^2\), for which any \(x \in (-\sqrt{|{0_-}|/{2}}, \sqrt{{0_+}/{2}})\) (\(\approx 10^{22}\) values) yields \(f_d(x)=0\). Practically, any value within such an interval is treated as the same root for our purpose.

1.3 Appendix A.3: Evaluation errors

Evaluation errors occur when performing operations \(x{\text {op}}_{\mathbb {M}}y,\;x,y\in {\mathbb {M}}\) in finite precision, which may not yield the exact result of the infinite precision operation \(x{\text {op}}_{\mathbb {R}}y\) in over \({{\mathbb {R}}}\), yielding the relative error z(x, y) with

\( \left| z(x,y) \right| \le \epsilon _m\) for the machine precision \(\epsilon _m\) (\(2^{-52}\) in double precision) for elementary operations (e.g., \(+,-\)). Complex operations and function implementations comprise several elementary operations and may lead to catastrophic cancelation, e.g., divisions of values at extreme different scale.

Important note. As evaluation errors induced by machine implementation \(f_m\) of a mathematical function f are outside our control and the scope of this paper, we assume the machine implementation \(f_m\) to be “correct,” i.e., we assume an ideal implementation \(f_m(x) = f_d(x) = \lfloor f(x)\rceil ,\;x\in {\mathbb {M}}\). Specifically, we assume \(f_m\) to be properly defined, i.e., it maps to \({\mathbb {M}}{\setminus }\{\pm \infty \}\). Therefore, while a (mathematical) function f may not have a Lipschitz constant \(k<\infty \), \(f_m\) has a machine Lipschitz constant due to the discrete representation. More precisely, any two adjacent machine representable numbers \(x,x'\in {\mathbb {M}}\) have a finite difference in function magnitude \( \left| f_m(x)-f_m(x') \right| \) and, thus, there is an upper bound for this local discrete change rate of \(f_m\). We will use the fact that a machine Lipschitz constant exists for \(f_m\), even though we do not require the specific constant.

1.4 Appendix A.4: Approximation errors

For the following section of this paper, we will make heavy use of approximations \(f_p\) to \(f_m\) using Chebyshev polynomials. Therefore, we discuss the impact of approximations to root finding in general. In combination with Eq. A.10, the approximation adds another pointwise error \(e_\text {approx}:{\mathbb {M}}\rightarrow {\mathbb {R}}\), yielding

As we assume \(f_m\) to be an ideal implementation and as \(e_\text {round}(x)\) is unknowable, but small, the approximation error \(e_\text {approx}(x)\) is dominant, and we consider

and we define the upper bound of the error as

Accordingly, the (ideal) machine implementation \(f_m\) of f lays in the interval \(f_p(x) - \epsilon \le f_m(x) \le f_p(x) + \epsilon \) and any value \(|f_p(x)|\le \epsilon \) potentially indicates a root for \(f_m(x)\). Considering the set of (potential) roots of \(f_m\) when using its approximation \(f_p\), we expand Eq. A.7 by incorporating the error bound \(\epsilon \)

\({\mathcal {R}}^\text {approx}(f_m)\) includes significantly more values than \({\mathcal {R}}(f)\).

Important note. Root searching methods commonly consider Eq. A.7 also in case they use a proxy function \(f_p\). As the root set in Eq. A.15 is “overcomplete,” our approach presented in Sect. 3 finds the leftmost root using \(f_p\) in combination with Eq. A.15 and optionally refines the root using \(f_m\) based on Eq. A.7.

Considering the task of finding the root \(\xi _i\) of \(f_m\) using the proxy \(f_p\) requires that \(f_m\) has a magnitude of at least \(\epsilon \) on either side of the root, i.e.,

In general, the approximation error \(\epsilon \) from Eq. A.14 steers the set of potential roots of \(f_m\) given \(f_p\); see Eq. A.15. If we specify \(f_p\) to be a Chebyshev proxy function to \(f_m\), it is possible to decrease \(\epsilon \) by increasing the polynomial degree of the Chebyshev polynomial \(f_p\) for infinite precision (Weierstrass Approximation Theorem). However, higher-degree Chebyshev polynomials do not necessarily improve the accuracy of the approximation in finite precision arithmetic due to the accumulation of numerical errors inherent to evaluating larger sets of operations.

Consequently, there is a lower bound of accuracy when constructing the Chebyshev proxy \(f_p\) that is independent of the polynomial degree of \(f_p\), and several heuristics estimate \(\epsilon \) [12, 39]. We utilize the bound estimate \(\epsilon _a\) proposed by Boyd [12], which is based on the coefficients \(c_i\) and degree n of the Chebyshev proxy, that, according to Boyd [12], can not provide a better approximation accuracy than \(\epsilon _a\), i.e.,

where \({{\text {mmc}}(f_p)}\) is the maximum magnitude coefficient.

Furthermore, within our proxy construction process we utilized the adaptive approach of Boyd [12] and the heuristic chopping process of Aurentz and Trefethen [1], which relies on a user provided parameter \(\epsilon _u\) that steers the accuracy of the proxy function that tries to achieve \( \left\| f_m-f_p \right\| _\infty \le \epsilon _u\). The approach of Aurentz and Trefethen [1] uses the parameter \(\epsilon _u\) to set the threshold for the round-off plateau detection. This round-off plateau is the result of the difference in magnitude of coefficients being limited by \(\epsilon _m\), as any coefficient smaller than \({{\text {mmc}}(f_p)}/\epsilon _m\) will (most likely) not affect the result of machine precision operations. Therefore, \(\epsilon _u\) can not be chosen below a value that depends on \({{\text {mmc}}(f_p)}\) and the approximation error is bound on both sides

where both limits depend on \({{\text {mmc}}(f_p)}\) [1, 12]. \({\text {mmc}}(f_p)\) is considered as being proportional to the magnitude of the function [12]

where the error terms are bound in terms of relative machine precision as \(\epsilon _a,\epsilon _u \le \epsilon _m {\text {mag}}_{f_m}(a,b)\) [12].

Appendix B: Function definitions

The blend functions used throughout the evaluation in Sect. 4 are given by:

The functions used throughout the evaluation of the paper:

Functions (B.8), (B.17), (B.18) and (B.19) were taken from [20], while (B.10) and (B.12) are from [50].

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Winchenbach, R., Möller, M. & Kolb, A. Lipschitz-agnostic, efficient and accurate rendering of implicit surfaces. Vis Comput (2024). https://doi.org/10.1007/s00371-023-03216-y

Accepted:

Published:

DOI: https://doi.org/10.1007/s00371-023-03216-y