Abstract

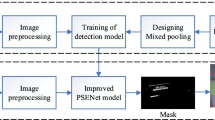

With the emergence and advancement of deep learning technologies, scene text detection is being applied in a growing range of fields. However, because of the complexity of distances, angles and backgrounds, the detection boxes predicted for adjacent texts in an image often lie too far from the texts themselves, i.e., the predicted positions are not accurate enough. In this paper, we propose a text detection method centered on a double ResNet-based (DRN) structure and a changed channels recursive feature pyramid (CCRFP). The DRN integrates ResNet50-Mish and Res2Net50-Mish, and the CCRFP is a recursive feature pyramid with changed channels. First, scene images are fed into the ResNet50-Mish and Res2Net50-Mish branches of the DRN, and their outputs are passed through a weight-based addition step to generate fused feature maps. Second, the fused feature maps are sent into the CCRFP to obtain feature maps with enhanced feature information, from which the segmentation results are obtained by concatenation and convolution. Finally, the segmentation results are passed to the progressive scale expansion algorithm, which outputs the locations of texts in the images. The proposed model is trained and tested on the ICDAR15 and CTW1500 benchmark datasets. In terms of precision, our method outperforms or is comparable to state-of-the-art methods: it achieves 91.53% precision on ICDAR15 and 84.89% precision on CTW1500.
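The weight-based addition and progressive scale expansion steps mentioned above can be illustrated with a small sketch. It is not the authors' implementation: the function names `weighted_add` and `progressive_scale_expansion`, the fusion weight, the list-of-lists representation of feature maps and masks, and the use of 4-connectivity are all assumptions made for illustration. The expansion follows the general idea of starting from the smallest predicted kernel and growing each text instance breadth-first into successively larger kernels.

```python
from collections import deque


def weighted_add(feat_a, feat_b, weight_a=0.5):
    """Fuse two same-shaped 2-D maps as weight_a * a + (1 - weight_a) * b.

    The weight value is a hypothetical choice, not taken from the paper.
    """
    weight_b = 1.0 - weight_a
    return [
        [weight_a * a + weight_b * b for a, b in zip(row_a, row_b)]
        for row_a, row_b in zip(feat_a, feat_b)
    ]


def progressive_scale_expansion(kernels):
    """Grow instance labels from the smallest kernel to the largest.

    `kernels` is a list of binary masks (lists of lists of 0/1), ordered
    from the smallest kernel to the largest. Returns a label map in which
    0 is background and 1..k are text instances.
    """
    height, width = len(kernels[0]), len(kernels[0][0])
    labels = [[0] * width for _ in range(height)]
    neighbours = ((1, 0), (-1, 0), (0, 1), (0, -1))  # assumed 4-connectivity

    def inside(y, x):
        return 0 <= y < height and 0 <= x < width

    # Step 1: label connected components of the smallest kernel.
    next_label = 0
    for r in range(height):
        for c in range(width):
            if kernels[0][r][c] and labels[r][c] == 0:
                next_label += 1
                labels[r][c] = next_label
                queue = deque([(r, c)])
                while queue:
                    y, x = queue.popleft()
                    for dy, dx in neighbours:
                        ny, nx = y + dy, x + dx
                        if (inside(ny, nx) and kernels[0][ny][nx]
                                and labels[ny][nx] == 0):
                            labels[ny][nx] = next_label
                            queue.append((ny, nx))

    # Step 2: expand the labels breadth-first into each larger kernel.
    # A contested pixel goes to whichever instance reaches it first.
    for mask in kernels[1:]:
        queue = deque(
            (r, c) for r in range(height) for c in range(width) if labels[r][c]
        )
        while queue:
            y, x = queue.popleft()
            for dy, dx in neighbours:
                ny, nx = y + dy, x + dx
                if inside(ny, nx) and mask[ny][nx] and labels[ny][nx] == 0:
                    labels[ny][nx] = labels[y][x]
                    queue.append((ny, nx))
    return labels


# Toy usage: two seeds at the ends of a row grow toward each other.
fused = weighted_add([[1.0, 2.0]], [[3.0, 4.0]], weight_a=0.25)
print(fused)  # [[2.5, 3.5]]
small_kernel = [[1, 0, 0, 0, 1]]
large_kernel = [[1, 1, 1, 1, 1]]
print(progressive_scale_expansion([small_kernel, large_kernel]))
# [[1, 1, 1, 2, 2]]
```

In the toy example the two seeds stay separate instances even though the largest kernel is a single connected region, which is the property that makes this kind of expansion useful for adjacent texts.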

Data Availability

The data that support this study are available from the corresponding author upon request.

Abbreviations

- BLSTM: Bidirectional long short-term memory network
- BN: Batch normalization
- Bottleneck A or BottleneckA: The first Bottleneck of LayerX
- Bottleneck B or BottleneckB: Bottlenecks except the first one of LayerX
- CBNet: Composite backbone network
- CCM: Changed channels module
- CCRFP: Changed channels recursive feature pyramid
- Conv1: The \(7\times 7\) convolution layers
- CTPN: Connectionist text proposal network
- DRN: Double ResNet-based
- DC-PSENet: A PSENet consisting of DRN and CCRFP
- Faster R-CNN: Toward real-time object detection with region proposal networks
- EAST: An efficient and accurate STD
- Fast R-CNN: Faster region-based convolutional neural network
- FPN: Feature pyramid network
- FCN: Fully convolutional network
- FCENet: Fourier contour embedding network
- LayerX: Modules after the \(7\times 7\) convolution layers
- LSTM: Long short-term memory network
- PAN: Pixel aggregation network
- PAN++: An extended version of PAN
- PSENet: Progressive scale expansion network
- ResNet: Residual network
- ResNet18: ResNet with 18 layers
- ResNet50: ResNet with 50 layers
- RFP: Recursive feature pyramid
- ReLU: Rectified linear units
- ResNet50-Mish: ResNet50 with Mish activation function
- Res2Net50-Mish: Res2Net50 with Mish activation function
- SegLink: Segment linking
- SLC: Same-level composition
- SSD: Single-shot multibox detector
- STD: Scene text detection
- VGG16: Very deep convolutional networks

Acknowledgements

This study was supported by the National Natural Science Foundation of China under Grant No. 12101289, the Natural Science Foundation of Fujian Province under Grant Nos. 2020J01821 and 2022J01891, the Institute of Meteorological Big Data-Digital Fujian, and Fujian Key Laboratory of Data Science and Statistics (Minnan Normal University), China.

Ethics declarations

Conflict of interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

About this article

Cite this article

Huang, L., Liao, S. & Yang, W. DC-PSENet: a novel scene text detection method integrating double ResNet-based and changed channels recursive feature pyramid. Vis Comput 40, 4473–4491 (2024). https://doi.org/10.1007/s00371-023-03093-5

DOI: https://doi.org/10.1007/s00371-023-03093-5