Abstract

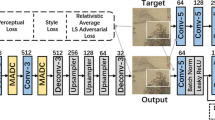

Image inpainting techniques have made rapid progress in recent years. Recent advances focus mainly on generating realistic and semantically plausible structure and texture features in missing regions. However, popular inpainting methods typically rely on a single encoder–decoder or on two separate encoder–decoders, which can lead to inconsistent contextual semantics and blurry textures. To address this issue, we propose a dual-feature encoder that extracts structure and texture features. Through skip connections, it guides the corresponding decoder to fill in structure information (from deep layers) and texture information (from shallow layers) in an attention-based latent space. Additionally, we design multi-scale receptive fields to further improve the consistency between contextual semantics and image details. Experimental results on three commonly used datasets show that our method effectively restores the structure and texture of missing regions and outperforms competing methods. Furthermore, we build a mural dataset from the Mogao Grottoes and use our network to successfully restore these murals.
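
The abstract does not specify how the attention-based latent space is implemented. As a rough illustration only, the sketch below assumes a contextual-attention-style scheme: each feature vector inside the hole is replaced by a softmax-weighted mix of the known (unmasked) feature vectors, with weights given by cosine similarity. The function `attention_fill`, its `scale` parameter, and the use of flat per-position vectors (instead of convolutional feature-map patches) are hypothetical simplifications, not the authors' method.

```python
import math
from typing import List, Sequence

Vector = List[float]


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity, with a small epsilon to avoid division by zero."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb + 1e-8)


def softmax(scores: Sequence[float], scale: float) -> List[float]:
    """Numerically stable softmax over scale-sharpened scores."""
    m = max(scores)
    exps = [math.exp(scale * (s - m)) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_fill(features: List[Vector], mask: List[bool],
                   scale: float = 10.0) -> List[Vector]:
    """Hypothetical sketch: fill hole features from known features via attention.

    features: one feature vector per spatial position (flattened).
    mask:     True where the position lies inside the hole.
    scale:    softmax sharpening factor; larger values focus on the best match.
    """
    known = [f for f, m in zip(features, mask) if not m]
    if not known:
        raise ValueError("attention_fill needs at least one known position")
    out: List[Vector] = []
    for f, m in zip(features, mask):
        if not m:
            out.append(list(f))  # known positions pass through unchanged
            continue
        # Attention weights: how similar this (coarse) hole feature is to each known feature.
        weights = softmax([cosine(f, k) for k in known], scale)
        # Weighted sum of known features, computed per channel.
        out.append([sum(w * k[d] for w, k in zip(weights, known))
                    for d in range(len(f))])
    return out


filled = attention_fill([[1.0, 0.0], [0.0, 1.0], [0.9, 0.1]], [False, False, True])
print(filled[2])  # dominated by the first known feature, [1.0, 0.0]
```

Here the hole feature `[0.9, 0.1]` is far more similar to the known feature `[1.0, 0.0]` than to `[0.0, 1.0]`, so the filled vector is drawn almost entirely from the former. In a real network this step would operate on encoder feature maps before decoding, and the hole features would come from a coarse prediction rather than being given directly.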

Data Availability

The data that support the findings of this study are openly available at https://github.com/zhangjiajunAlex/DEST.

Acknowledgements

The authors would like to thank the anonymous reviewers and the editor for their insightful comments and suggestions, which helped improve the quality of this work. This study was funded by the National Natural Science Foundation of China (Grant Nos. 62061023, 82260364 and 61941109), the Distinguished Young Scholars of Gansu Province of China (Grant No. 21JR7RA345), the Natural Science Foundation of Gansu Province of China (Grant Nos. 22JRJ5RA166, 21JR1RA024, 23JRRA1485 and 21JR1RA252), and the Science and Technology Plan of Gansu Province (Grant No. 20JR10RA273).

Ethics declarations

Conflict of interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Lian, J., Zhang, J., Liu, J. et al. Guiding image inpainting via structure and texture features with dual encoder. Vis Comput (2023). https://doi.org/10.1007/s00371-023-03083-7

DOI: https://doi.org/10.1007/s00371-023-03083-7