Abstract

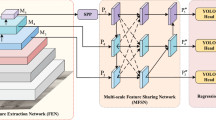

Wheelset tread images suffer from heavy noise interference and contain defects of many different forms, which leads to low detection accuracy and poor localization. We propose a new model for wheel tread defect detection. First, we propose a deformable residual attention network that combines deformable residual network modules with Channel-Fusion-Correct-Attention modules. The deformable residual network modules strengthen feature extraction for defects of various forms, while the Channel-Fusion-Correct-Attention modules weaken noise interference. Second, we adopt the path aggregation network feature fusion method, which combines top-down and bottom-up paths, to reduce the loss of defect features. Third, we construct a deformable double-branch detection head to further optimize the overall performance of the model. A dataset of train wheelset tread defects was built for experiments to verify the model's performance. The experimental results show that the model achieves an accuracy of 78.2% and a recall of up to 91% at an IoU threshold of 0.5. It detects 24.1 images per second on a single GPU, with 35.2 M parameters. The model outperforms classical models such as Faster-RCNN and YOLO. The proposed method can be applied to the detection of defects on the tread surface of the wheelset.
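The results above are reported at an IoU threshold of 0.5. The abstract does not say how detections were matched to ground truth, so the following is only a minimal sketch of one common convention: a predicted box counts as a true positive when its intersection over union with a not-yet-matched ground-truth box is at least the threshold. The function names, the box layout `(x_min, y_min, x_max, y_max)`, and the greedy matching order are assumptions made for illustration, not the authors' evaluation code.

```python
from typing import List, Tuple

# Assumed box layout: (x_min, y_min, x_max, y_max) in pixels.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def precision_recall(preds: List[Box], truths: List[Box],
                     thr: float = 0.5) -> Tuple[float, float]:
    """Greedy one-to-one matching; preds are assumed sorted by confidence."""
    matched = set()  # indices of ground-truth boxes already claimed
    tp = 0
    for p in preds:
        best_j, best_iou = -1, 0.0
        for j, t in enumerate(truths):
            if j in matched:
                continue
            v = iou(p, t)
            if v > best_iou:
                best_j, best_iou = j, v
        if best_j >= 0 and best_iou >= thr:
            matched.add(best_j)
            tp += 1
    fp = len(preds) - tp   # predictions with no matching ground truth
    fn = len(truths) - tp  # ground-truth defects that were missed
    precision = tp / (tp + fp) if preds else 0.0
    recall = tp / (tp + fn) if truths else 0.0
    return precision, recall


if __name__ == "__main__":
    # Hypothetical boxes, not data from the paper.
    preds = [(0, 0, 10, 10), (20, 20, 30, 30)]
    truths = [(0, 0, 10, 8), (50, 50, 60, 60)]
    print(precision_recall(preds, truths, thr=0.5))
```

Under this convention, raising the threshold demands tighter localization before a detection is counted, so figures reported at 0.5 are not directly comparable with figures reported at stricter thresholds.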

Data availability

The datasets generated during the current study are available from the corresponding author on reasonable request.

Acknowledgements

This work was supported by the Natural Science Foundation of China (52172403, 62173137), Hunan Provincial Natural Science Foundation of China (2021JJ50001).

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

About this article

Cite this article

Zhang, C., Xu, Y., Sheng, Z. et al. Deformable residual attention network for defect detection of train wheelset tread. Vis Comput 40, 1775–1785 (2024). https://doi.org/10.1007/s00371-023-02885-z

DOI: https://doi.org/10.1007/s00371-023-02885-z