Abstract

In workplaces or publicly accessible buildings, escape routes are signposted according to official norms or international standards that specify distances, angles and areas of interest for the positioning of escape-route signs. In homes for the elderly, where residents commonly have reduced mobility and suffer from vision impairments caused by age or eye diseases, the specifications of current norms and standards may be insufficient. Quantifying the effect of vision impairment symptoms such as reduced visual acuity on recognition distances is challenging, as it is cumbersome to find a large number of user study participants who suffer from exactly the same form of vision impairment. Hence, we propose a new methodology for such user studies: by conducting a user study in virtual reality (VR), we are able to recruit participants with normal or corrected sight and simulate vision impairments graphically. Using standardized medical eyesight tests in VR allows us to calibrate the visual acuity of all our participants to the same level, taking their respective visual acuity into account. Since we primarily focus on homes for the elderly, we account for the residents' often limited mobility by implementing a wheelchair simulation in our VR application.



1 Introduction

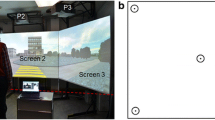

In our VR-based user study, participants indicate from which distance they can recognize escape-route signs. Top left: a simulated wheelchair reduces motion sickness. Top right: participant sees an escape-route sign in the upper right. Bottom left: participants are also asked to find an escape route through this building from the visual perspective and means of locomotion of an elderly person, using our virtual wheelchair. Bottom right: blurred vision caused by simulated vision impairments

In this work, we investigate the influence of vision impairments on the recognizability of escape-route signs. For this, we use a virtual reality (VR) application (see Fig. 1) to simulate specific levels of visual acuity loss. The results of our user study suggest that current norms for positioning escape-route signage should be adapted for certain buildings, such as homes for the elderly, where a larger average loss of visual acuity can be expected among the residents than in the general population.

Escape-route signs are planned during building design and construction so that people can easily find and follow predefined escape routes in an emergency. They are placed according to norms or standards that specify the maximum recognition distance (MRD) and viewing angles of a sign, as well as the important areas where signs must be placed. The planned signage is evaluated, and its compliance with the norms is checked manually on site. According to the international standard ISO 3864-1 [12], at least 85% of all people must be able to recognize the signs at the given distance and angle. However, in places like retirement homes, where a larger share of people can be expected to have impairments that reduce the distance from which they can recognize a sign, the standards provided by the norms might not be sufficient for a successful emergency response. To ensure safety in emergencies in such buildings, it may help to verify whether current standards still apply and, if they do not, to adjust them accordingly. This decreases the chances of casualties and also reduces the time and money needed for adaptations later on.

It is very challenging to properly investigate the effects of a vision impairment on recognition distances without conducting a user study with participants who all exhibit the same symptoms with the same severity. Since the severity of symptoms varies among people with vision impairments, finding a sufficiently large number of such participants is difficult. In this paper, we describe a new methodology for conducting a valid user study with enough participants who experience the same symptoms of vision impairment: we recruit people with normal vision (or vision corrected by glasses or contact lenses) and simulate the vision impairment in a VR application. This is made possible by calibrating all participants to the same level of visual acuity, taking into account both their measured visual acuity and the deficiencies of the display system. Using this methodology, we conducted a user study to measure the MRDs of participants for signs of various sizes and at different viewing angles. We also let participants perform interactive walkthroughs of building models so that they experience an emergency scenario from the perspective of an elderly person trying to follow an escape route out of the building. For this, we developed a new type of locomotion for VR environments that simulates a wheelchair, since this is a common form of movement in our chosen use case: a home for the elderly. The main contributions of this paper can be summarized as follows:

- A realistic simulation of vision impairments (based on scientific medical findings) that can be calibrated to any level of visual acuity and allows different symptoms to be combined into a certain disease pattern. Our simulation of loss of visual acuity is calibrated relative to the actual visual acuity of the user and takes display deficiencies into account, allowing, for the first time, the perceived visual acuity of different users to be adjusted to the same level.

- A user study based on this methodology to investigate recognition distances of escape-route signs. To provide a highly immersive environment, we introduce an interactive, controlled test environment that includes a wheelchair-based type of locomotion, and we use high-quality lighting simulation.

- Finally, an analysis of the data obtained from our user study in comparison with the values prescribed by international standards and European norms.

The remainder of this paper is organized as follows: In Sect. 2 we first give some background information on measurements of visual acuity in medicine and summarize the regulations given by international standards and European norms on escape-route signage. Then, we discuss related work regarding the simulation of vision impairments and locomotion techniques in VR. Section 3 describes our approach to simulate vision impairments and calibrate the simulated reduced visual acuity of each participant in our user study. Details about the user study we conducted are presented in Sect. 4, and the results of the study are listed in Sect. 5. In Sect. 6, we discuss and interpret these results and compare them to the regulations provided by international standards and European norms. Finally, Sect. 7 gives a conclusion based on the presented findings and an outlook on future work.

2 Background and related work

The National Eye Institute (NEI) provides data on the prevalence of vision impairments and age-related eye diseases in the United States in 2010 [19]. According to these data, the most common eye diseases in the US population above 40 years of age (142,648,393 people) are cataract (24,409,978 people), diabetic retinopathy (7,685,237 people), glaucoma (2,719,379 people), and age-related macular degeneration above age 50 (2,069,403 people). The NEI projects that the number of people suffering from one of these eye diseases will rise steadily and approximately double by 2050, which is not surprising given that the older US population (over 65) is predicted to more than double from 40.2 million in 2010 to 88.5 million in 2050 [30]. Since more people can be expected to have some form of vision impairment, studies on the effects of such impairments are needed. A complete analysis of the mentioned eye diseases requires extensive research and multiple studies. As a first step, we focus on the most common symptom associated with age-related vision impairments and eye diseases, which also strongly influences the maximum recognition distance: loss of visual acuity.

2.1 Measuring visual acuity

The ISO standard ISO 8596 [11] defines test symbols and procedures to determine a subject's visual acuity under daytime conditions. Among the test symbols is the so-called Landolt ring or Landolt C (see Fig. 2). To test for a visual acuity of 20/20 (1.0 decimal), the diameter of the ring is chosen such that the gap in the ring spans 1 angular minute when viewed from the selected test distance. The angular extent of the gap in angular minutes is the reciprocal of the decimal acuity value; thus, to test for other visual acuity levels, the rings can simply be scaled accordingly. The gap is positioned horizontally left or right, vertically up or down, or diagonally in between, for a total of eight possible positions. ISO 8596 suggests using at least five rings per tested acuity level, with random gap positions. A test subject has reached the limit of visual acuity when fewer than 60% of the rings can be correctly identified; the subject's actual visual acuity then corresponds to that of the previous, correctly identified row of test symbols in the Landolt chart. Besides decimal acuity, other common notations are the Snellen fraction [21] and LogMAR acuity [11]. The angular extent of the gap in the Landolt ring used for testing a certain visual acuity level can be converted directly into each of these three common measures of visual acuity and vice versa.
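The conversions described above can be made concrete with a short sketch. It assumes that the gap size of the Landolt ring equals the minimum angle of resolution (MAR) in angular minutes, and it uses the usual Landolt-ring proportion in which the outer diameter is five times the gap width. The function names are hypothetical and only illustrate the arithmetic.

```python
import math


def decimal_acuity(mar_arcmin: float) -> float:
    """Decimal acuity is the reciprocal of the gap size in angular minutes."""
    return 1.0 / mar_arcmin


def logmar(mar_arcmin: float) -> float:
    """LogMAR is the base-10 logarithm of the gap size in angular minutes."""
    return math.log10(mar_arcmin)


def snellen_denominator(mar_arcmin: float, numerator: float = 20.0) -> float:
    """Denominator of the Snellen fraction numerator/denominator (20/20 at 1 arcmin)."""
    return numerator * mar_arcmin


def landolt_gap_mm(mar_arcmin: float, distance_m: float) -> float:
    """Physical gap width (mm) that subtends mar_arcmin at the given viewing distance."""
    angle_rad = math.radians(mar_arcmin / 60.0)
    return 2.0 * distance_m * 1000.0 * math.tan(angle_rad / 2.0)


def landolt_outer_diameter_mm(mar_arcmin: float, distance_m: float) -> float:
    """Outer ring diameter, assuming the usual proportion: diameter = 5 x gap width."""
    return 5.0 * landolt_gap_mm(mar_arcmin, distance_m)


if __name__ == "__main__":
    for mar in (1.0, 2.5, 4.0):
        print(f"MAR {mar} arcmin -> decimal {decimal_acuity(mar):.2f}, "
              f"logMAR {logmar(mar):.2f}, Snellen 20/{snellen_denominator(mar):.0f}")
```

For example, a gap of 4 angular minutes corresponds to decimal 0.25, logMAR of about 0.60 and Snellen 20/80, and a 1-arcmin gap viewed from 4 m is roughly 1.16 mm wide.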

2.2 Legal regulations, standards and norms

Laws and regulations govern the use and placement of emergency and escape-route signs in buildings. The European norm EN 1838 [6] covers all aspects of emergency lighting: general emergency lighting, anti-panic lighting, emergency lighting for dangerous workplaces, and escape-route signs. Regarding vision conditions, EN 1838 notes that factors like eyesight, required illumination level, and adaptation of the eyes differ between individuals. Furthermore, elderly people in general are regarded as requiring a higher level of illumination and a longer time to adapt to the conditions present in emergency scenarios. For escape-route signs to be effective, EN 1838 states that they should not be mounted higher than 2 m above floor level and, where possible, not higher than \(20^{\circ }\) above the horizontal viewing direction at the maximum recognition distance of the sign. The MRD is defined as the maximum distance from which a sign should still be recognized by a normal-sighted observer. The norm specifies this distance as the sign's height times a distance factor, which is 100 for externally illuminated and 200 for internally illuminated signs. Let z be the distance factor and h the height of a sign; then the MRD l is calculated as \( l = z\times h \). According to ISO 3864-1 [12], distance factors are calculated based on the angle at which a sign is observed and its brightness. ISO 7010:2011 [9] provides specifications for standardized safety signs, which we also used in our user study. In relation to visual acuity, the informative annex of ISO 3864-1 suggests scaling the MRD by an observer's decimal visual acuity value: for a visual acuity of 20/20, the MRD is scaled by 1.0; for a visual acuity of 20/80, it is scaled by 0.25. The informative annex also states that the MRD (as described by EN 1838) is sufficient for at least 85% of observers.
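The distance rule \( l = z\times h \), the acuity scaling from the ISO 3864-1 annex, and the \(20^{\circ }\) elevation limit can be sketched as follows. This is a minimal illustration under stated assumptions: the function names are hypothetical, and `eye_height_m` is an assumed input, since the rule as summarized here does not fix the observer's eye height.

```python
import math


def max_recognition_distance(sign_height_m: float, z: float) -> float:
    """EN 1838 maximum recognition distance l = z * h (metres)."""
    return z * sign_height_m


def acuity_scaled_mrd(sign_height_m: float, z: float, decimal_acuity: float) -> float:
    """MRD scaled by the observer's decimal acuity, as the ISO 3864-1 annex suggests."""
    return max_recognition_distance(sign_height_m, z) * decimal_acuity


def elevation_ok(mount_height_m: float, eye_height_m: float, sign_height_m: float,
                 z: float, max_angle_deg: float = 20.0) -> bool:
    """True if, seen from the MRD, the sign is at most max_angle_deg above the
    horizontal viewing direction. eye_height_m is a hypothetical parameter."""
    mrd = max_recognition_distance(sign_height_m, z)
    elevation_deg = math.degrees(math.atan2(mount_height_m - eye_height_m, mrd))
    return elevation_deg <= max_angle_deg
```

For a 15 cm sign with z = 100, this gives l = 15 m; by the annex's rule, an observer with 20/80 acuity (decimal 0.25) would recognize the same sign only from about 3.75 m.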

2.3 Previous work on simulating vision impairments

Several approaches to evaluating signage and to simulating visual impairments have been proposed. Xie et al. [33] investigated the effectiveness of signs by determining the visibility catchment area (VCA) of a sign, i.e., the region of space from which a sign can be seen. The authors conducted a user study in which participants were asked to walk down a corridor and approach a sign until they were able to read at least 50% of the letters on it. In different runs, the sign was tilted to different angles, rather than having participants approach the sign from different directions. The results showed that the MRD depends on the viewing angle and decreases nonlinearly as the angle between observer and sign increases. Xie et al. [34] further investigated the influence of smoke on evacuation scenarios. However, since all participants in these studies had normal vision or wore glasses or contact lenses, the influence of vision impairments was not investigated. We designed our experiments according to the setup proposed by Xie et al. [33], but as a VR simulation with simulated vision impairments.

Based on the concept of serious games, Almeida et al. [1] used Unity3D [26] to develop a game for experiments that elicit human behavior in emergency situations. The authors claim that their game conveys a sense of realism due to elaborate 3D renderings. We also chose a game engine (Unreal Engine 4 [8]) to implement our application. However, to achieve a more realistic situation than a typical computer game played on a standard monitor could provide, we deemed it vital to use VR for our study, especially to be able to measure distances and angles that can be compared to real-world measurements. Cosma et al. [5] also used Unity3D, but in VR, to evaluate the impact of way-finding lighting systems in a rail-tunnel evacuation scenario. They only compared different types of luminescent stripes as a guidance system and did not include signage in their study, although workplaces and public buildings mostly rely on signage alone to signpost escape routes.

Apart from investigations of emergency scenarios, there has also been research on simulating eye diseases in recent years, albeit primarily for educational purposes. Zagar et al. [35] developed individual sets of (non-VR) goggles to simulate glaucoma, cataracts, macular degeneration, diabetic retinopathy, and retinitis pigmentosa, and used them to rate the presence and severity of disease-specific characteristics. Similarly, Wood et al. [32] used modified goggles in a study to investigate the effects of simulated visual impairments on nighttime driving performance and pedestrian recognition under real-road conditions. However, physical goggles restrict experiments to real-world environments. Lewis et al. used Unreal Engine 3 [15] and, in later work, the Microsoft XNA framework [16] to apply post-processing effects that simulate common eye diseases in a 3D game or explorable environment. The systems were evaluated by opticians, specialists and test users; even though the simulated symptoms are not fully accurate, they are still suitable for raising awareness and conveying a good understanding of the effects of visual impairments. However, the severity of the symptoms is not controllable, and the vision impairments are not adjusted to each individual user's visual acuity. Note that there is also much research on color vision deficiency, which we do not cover in this paper but plan to include in future work.

The goal of the study conducted by Hogervorst et al. [10] was to give unimpaired persons insight into the problems faced by visually impaired people by modifying 2D images according to a visual impairment. When measuring visual acuity with eyesight tests using the Landolt C, the authors found a linear correlation between visual acuity and the blur threshold at which an image is just recognizable. We build upon these findings in our calibration procedure, using a blur filter to adapt a user's vision to a certain level of visual acuity. Jin et al. [13] provide a complex eye anatomy model for simulating visual impairments in VR based on medical measurements. A scotoma texture, created from perimetry exam data of real patients, defines regions where vision is deteriorated. This texture is the same for every user and does not account for a user's vision capabilities. Furthermore, the authors used specialized hardware from 2002, a CAVE-derived, projection-based VR display combined with shutter glasses, rather than a modern VR HMD. Using the Oculus Rift HMD and a PlayStation 4 Camera as an AR setup, Ates et al. [2] conducted a user study focusing on the accessibility inspection of user interfaces. Their simulation of vision impairments is based on photographs from the NEI [19] and is implemented through a VR media player that renders stereoscopic video files. The intensity of the simulated impairments can be adjusted via keyboard. However, unlike in our approach, the existing visual acuity of the user is not taken into account when calibrating the visual impairment. Similarly, Väyrynen et al. [29] used the Oculus Rift and Unity3D to create a system for evaluating the effect of visual impairments on path-finding tasks in a 3D city model. They state that their approach is intended to give architectural designers a general idea of the challenges posed by visual impairments. Impairments are simulated based on images from online simulators or hardware-based simulations and implemented using standard effects in Unity3D. This is similar to our implementation of macular degeneration and cataract in Unreal Engine 4, which serves to add task variety between MRD tests rather than being used for the actual evaluation. However, by applying our calibration technique, we are able to adapt the reduced visual acuity, one of the major symptoms of these diseases, to the actual vision capacities of each individual user. Therefore, unlike previous work, we can provide every user with a consistent experience of reduced visual acuity.

In summary, the actual influence of vision impairments on the MRD has not yet been thoroughly investigated or quantified. Hence, legal regulations and norms only provide informal recommendations based on assumptions derived from medical definitions of visual acuity.

2.4 Previous work on locomotion techniques in VR

In order to measure MRDs in VR, a locomotion technique that allows continuous movement toward an escape-route sign in a large environment is vital. Hence, no form of teleportation is applicable for such measurements. Research on natural walking [28] in virtual environments has shown positive effects on immersion in VR. Redirected walking techniques [22] manipulate the mapping between physical and virtual motions to enable users to navigate through vast virtual environments. However, these techniques require significantly more physical space than the tracking space of a typical HTC Vive setup. Other walking approaches, like change blindness illusions [23], self-overlapping architecture [25] or flexible spaces [27], manipulate the architectural layout of a VR environment to fit it into the tracked space. Although these techniques work well to create an immersive experience [28], the need for specific layouts or manipulations of the VR environment prevents the evaluation of escape-route signage in models of real-world buildings. Locomotion devices [27] like shoe-based devices, omnidirectional treadmills or robotic elements allow navigation through arbitrary building models without manipulating the building architecture or requiring a large physical workspace, but acquiring such specialized hardware increases the cost of a project significantly. Other inexpensive locomotion techniques that require a lot of physical movement, like jumping up and down to run in VR, would be too tiring over a 30-min session. Techniques that simulate walking while the person does not move in the real world, such as pressing buttons on a controller or navigating via joystick, are known to cause motion sickness in many people due to the discrepancy between visual and vestibular cues. Therefore, we implemented a form of locomotion that provides continuous movement while minimizing this discrepancy: a wheelchair simulation.

Nybakke et al. [20] compared different locomotion techniques in a series of search tasks in VR. They found that people performed best with real walking, compared to virtual translation via joystick with real rotation while standing. Performance with real movement in a motorized wheelchair was intermediate and only slightly better than rotating on a swivel chair and using a joystick for translation. Chowdhury et al. [3] conducted a study on information recall in a VR disability simulation and concluded that their wheelchair interface (using a real, non-motorized wheelchair) with an Oculus HMD induced the highest sense of presence in the virtual environment compared to non-VR or gamepad navigation. Since real walking is not possible in VR environments that exceed the physical tracking space, and a real wheelchair adds cost, we designed our wheelchair simulation similar to the swivel-chair model of Nybakke et al. [20].

3 Simulation of vision impairments

The most common symptom present in vision impairments is the reduction of visual acuity. Hogervorst et al. [10] determined a relation between the \(\sigma \) parameter of a just recognizable Gaussian blur and the visual acuity of a person. Following these findings, we simulate a reduced visual acuity by applying a Gaussian blur to the image, the size of which we determine in a calibration phase. Similar to Lewis et al. [16], we can also apply post-processing effects to simulate common eye diseases like cataract or macular degeneration (see Fig. 3), but we combine these effects with our calibrated reduced visual acuity to adapt the simulation of these eye diseases for every user.
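As a rough illustration of the blur operation itself, the following sketch applies a separable Gaussian blur with standard deviation sigma (in pixels) to a grayscale image stored as nested lists. It is a CPU stand-in written under our own assumptions, not the post-processing effect used in Unreal Engine 4; the kernel radius of 3 sigma and the clamp-to-edge border handling are illustrative choices.

```python
import math


def gaussian_kernel(sigma: float) -> list:
    """Normalized 1D Gaussian weights covering +/- 3 sigma."""
    radius = max(1, math.ceil(3.0 * sigma))
    weights = [math.exp(-(x * x) / (2.0 * sigma * sigma)) for x in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def blur_line(values: list, kernel: list) -> list:
    """Convolve one row or column with the kernel, clamping indices at the borders."""
    r = len(kernel) // 2
    n = len(values)
    out = []
    for i in range(n):
        acc = 0.0
        for k, w in enumerate(kernel):
            j = min(max(i + k - r, 0), n - 1)  # clamp-to-edge sampling
            acc += w * values[j]
        out.append(acc)
    return out


def gaussian_blur(image: list, sigma: float) -> list:
    """Separable Gaussian blur: filter all rows, then all columns."""
    if sigma <= 0.0:
        return [list(row) for row in image]
    kernel = gaussian_kernel(sigma)
    rows = [blur_line(list(row), kernel) for row in image]
    cols = [blur_line(list(col), kernel) for col in zip(*rows)]
    return [list(row) for row in zip(*cols)]
```

Because a 2D Gaussian is separable, two 1D passes give the same result as one 2D convolution at much lower cost, which is also why GPU post-processing blurs are commonly implemented this way.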

3.1 Calibration for reduced visual acuity

Visual acuity differs between people, even among the normal-sighted. Furthermore, the display device may limit the maximum achievable visual acuity. Therefore, we devised a procedure to calibrate all users to the visual acuity intended for an experiment. The user first performs an eyesight test on the target display device, which allows us to calculate the blur strength required to achieve the desired visual acuity.

3.1.1 Eyesight test

For calibration, the user is placed in a virtual room with lines of a Landolt chart [11] on the wall at a distance of 4 m. The Landolt C optotype (see Fig. 2) is a ring with a gap at one of eight possible angles. Five Landolt Cs are displayed at a time. The user's task is to indicate the angle of the gap in each Landolt C using a controller, in our case by pressing the corresponding position on the Vive controller touch pad. The test shows successively smaller optotypes until a recognition threshold is reached (in our test, fewer than half of the optotypes in a row are identified correctly). The last optotype size recognized above the threshold determines the visual acuity in angular minutes.
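The stopping rule of this eyesight test can be sketched as a simple loop over rows of decreasing gap size. This is a minimal sketch under stated assumptions: `respond` is a hypothetical stand-in for the participant's touch-pad input, and gap positions are drawn uniformly at random from the eight possible angles. With five optotypes per row, "fewer than half correct" means at most two correct, which coincides with the 60% criterion of ISO 8596.

```python
import random
from typing import Callable, Optional, Sequence

N_GAP_POSITIONS = 8  # gap at 0, 45, ..., 315 degrees


def run_eyesight_test(row_gaps_arcmin: Sequence[float],
                      respond: Callable[[float, int], int],
                      per_row: int = 5,
                      rng: Optional[random.Random] = None) -> Optional[float]:
    """Show rows of per_row Landolt Cs with decreasing gap sizes and return the
    gap size (arcmin) of the last row in which at least half were identified.
    respond(gap, true_position) returns the position the participant reports.
    Returns None if even the first row fails."""
    if rng is None:
        rng = random.Random()
    last_passed = None
    for gap in row_gaps_arcmin:
        positions = [rng.randrange(N_GAP_POSITIONS) for _ in range(per_row)]
        correct = sum(respond(gap, p) == p for p in positions)
        if 2 * correct < per_row:  # fewer than half correct: threshold reached
            break
        last_passed = gap
    return last_passed
```

For instance, a simulated participant who resolves every gap of at least 3 angular minutes, and none below, ends the test with a measured acuity of 3 angular minutes.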

3.1.2 Determination of blur strengths

Next, we determine the parameters needed to calibrate the vision of a user u to a reduced level of visual acuity. Building upon the findings of Hogervorst et al. [10], we reduce the visual acuity by blurring the image. To determine the strength of the blur, we perform the eyesight test again, but this time with a fixed size of the Landolt C whose gap corresponds to the desired level of acuity a in angular minutes. We apply a Gaussian blur to the image and increase it in each iteration as long as the participant is able to correctly recognize more than half of the optotypes in a row. This yields a factor \(f_a^u\) for the width of the blur needed to calibrate the vision of user u to the reduced visual acuity a (in the case of a Gaussian blur, f is simply the standard deviation of the respective Gaussian).
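The blur-calibration loop can be sketched in the same style. The text does not specify the step size, an upper bound, or whether the last passing or the first failing blur is kept, so the `sigma_step` and `sigma_max` values and the choice to return the last sigma that still passed are assumptions; `count_correct` is a hypothetical stand-in for one row of the participant's answers.

```python
from typing import Callable, Optional


def calibrate_blur(target_gap_arcmin: float,
                   count_correct: Callable[[float, float, int], int],
                   sigma_step: float = 0.25,
                   sigma_max: float = 20.0,
                   per_row: int = 5) -> Optional[float]:
    """Increase the Gaussian sigma while more than half of a row of Landolt Cs
    with the fixed target gap is still identified correctly.
    Returns the last sigma that passed (a candidate for f_a^u), or None if the
    participant already fails without blur.
    count_correct(sigma, gap, n) returns how many of n optotypes were identified."""
    sigma = 0.0
    last_passed = None
    while sigma <= sigma_max:
        if 2 * count_correct(sigma, target_gap_arcmin, per_row) > per_row:
            last_passed = sigma
            sigma += sigma_step
        else:
            break
    return last_passed
```

Running this once per target acuity (for example, 5 and 8 angular minutes) yields one blur factor per condition for each participant.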

A number of factors affect our calibration procedure and the resulting blur factors. Even though our study participants stated that they had normal or corrected sight (wearing contact lenses or glasses), some might still have had a reduced visual acuity. The resolution of the display and the discretization of the images also influence a user's ability to perceive details shown at small sizes. Additionally, the HMD imposes a fixed focal distance on the eyes, which can create a vergence-accommodation conflict with negative effects on a user's vision [14]. A misplaced HMD can also reduce the perceived sharpness of the images and therefore the visual acuity. All these circumstances already reduce the user's visual acuity once she puts on the HMD. Starting from this unknown level of reduced visual acuity, caused by any combination of the factors mentioned above, we decrease the user's vision further by applying and increasing the Gaussian blur until a Landolt C of a certain size (at 4 m distance) can no longer be correctly recognized. The size and distance of the last recognizable row of optotypes directly correspond to a certain level of visual acuity in the real world, according to established medical eyesight tests. Note that because we use the HTC Vive with SteamVR in UE 4 in a room-scale setup, distances and sizes in VR match real-world measurements. This methodology allows people with different levels of visual acuity to participate in an experiment that assumes participants with similar levels of visual acuity. Furthermore, we can use the blur factors determined in the calibration phase in combination with other symptoms to simulate vision impairments like macular degeneration and cataract, creating a similar impression for each participant during the walkthroughs with these impairments. However, this impression will not be exactly the same for every user, since we only calibrate one of the symptoms (visual acuity) to the user's actual vision and combine it with a fixed level of the other symptoms (e.g., contrast loss) that is the same for everyone. In future work, further calibration steps could be added for other symptoms, for example those that influence contrast, color perception, or field of vision.

3.2 Hardware limitations of VR displays

While a user can be placed at an appropriate distance from a desktop display so that any desired visual acuity can be tested, the viewing distance of VR displays is fixed. Therefore, below a certain size, the significant details of the optotypes are smaller than a pixel and cannot be properly rendered and displayed. The HTC Vive HMD we use has a resolution of \(2160 \times 1200\) pixels (\(1080 \times 1200\) per eye). Even though our participants had corrected or normal sight, this resolution made it impossible for any of them to recognize a visual angle smaller than 2.5 angular minutes (\(\sim \) 0.4 logMAR). According to the International Council of Ophthalmology [4], a visual acuity of 0.1 logMAR or lower, corresponding to a minimum angle of resolution of about 1.25 angular minutes or smaller, is considered normal vision. This means that merely putting on the VR headset causes a person with normal sight to experience a loss of visual acuity that is already considered a mild vision impairment. Consequently, we were not able to measure MRDs with normal sight and had to use the specifications given by existing norms as the basis for our comparisons. However, it is still feasible to use an HTC Vive for our study, since most elderly people suffering from vision impairments have a more severe reduction in visual acuity than the one induced by the HMD.

4 User study

We chose to apply our new methodology to determining the maximum recognition distance of escape-route signs. In a user study, we present participants with two tasks of different complexity: first, indicating when an escape-route sign becomes recognizable while moving straight toward it, and second, finding a given escape route in a building in a simulated emergency situation. The first task constitutes the actual quantitative experiment, while the second task serves to make the study more interesting for participants and represents a first step toward studying participant behavior in simulated emergency situations in future work. We restrict the formal analysis to visual acuity, whereas in the second task we also include symptoms of other visual impairments. Since the more complex simulations of eye diseases used in the second task need further evaluation and consultation with experts (such as ophthalmologists) before meaningful measurements can be derived from them, we do not include data from the second task in our current statistical analysis. To avoid fatigue, which can be caused by the vergence-accommodation conflict when using an HMD, we designed our study not to exceed 30 min per participant.

4.1 Participants

For this work, we conducted a user study with 30 participants (10 female, 20 male) between 23 and 42 years of age. All but one participant had experience with computer games in general, and two thirds had tried a virtual reality headset before participating in our study. Half of our participants had normal vision. The others, mostly shortsighted (some with astigmatism), wore either glasses or contact lenses, with the exception of two shortsighted participants who did not wear any sight-correcting aid during the experiments. One participant got motion sick and could not complete the study. Some of the other participants reported minor dizziness after the study, but overall, feedback on our locomotion technique (gathered in informal interviews) was very positive. Most participants stated that they liked our wheelchair simulator and enjoyed using it to navigate through the building model.

4.2 Experiment protocol

Each participant starts with the calibration phase described in Sect. 3. We calibrated for two visual acuity conditions: weak blur, corresponding to 5 angular minutes, and strong blur, corresponding to 8 angular minutes. We then carry out two rounds of experiments in order to test for learning effects. In each round, we perform the actual MRD experiment under the no-blur, weak-blur and strong-blur conditions. For each condition, we show three escape-route signs of 15 cm height and three of 30 cm height; for each sign height, the angle between sign and observer is set to 0, 30 and 60 degrees, respectively. In total, we obtain 36 measurements for each observer (18 per round). Interspersed with the MRD experiment, the participant performs walkthroughs of the test environment with the task of finding the exit under different vision impairment symptoms. In the first round, the first two conditions (no blur, weak blur) serve to acquaint the participant with the experimental environment. The experiment protocol is as follows:

1. Calibration phase
   - Eyesight test
   - Determine blur factor: weak blur
   - Determine blur factor: strong blur
2. First round of test runs
   - Recognition distance measurements: no blur
   - Walkthrough: no blur
   - Recognition distance measurements: weak blur
   - Recognition distance measurements: strong blur
   - Walkthrough: weak blur
3. Second round of test runs
   - Recognition distance measurements: no blur
   - Walkthrough: cataract (with weak blur)
   - Recognition distance measurements: weak blur
   - Recognition distance measurements: strong blur
   - Walkthrough: macular degeneration (with weak blur)
-

4.3 Task description

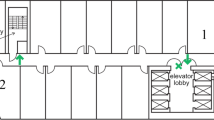

Corridor used for the measurements of MRDs. The luminaires and the lightmap for this scene have been exported from HILITE [31]

4.3.1 Maximum recognition distance measurements

In our study, we aim to determine the maximum distance from which a user can still recognize the direction an escape-route sign is pointing to. This is measured by placing the user at the beginning of a 40-m-long corridor (shown in Fig. 4) with an escape-route sign at the far end and asking her to advance toward the sign. As soon as she recognizes its label, the user indicates the recognized direction (up, down, left or right) by pressing the corresponding position on the Vive controller touch pad. If the input is wrong, the controller vibrates, and the user has to continue moving toward the escape-route sign until she correctly recognizes the displayed direction and presses the correct position on the touch pad. After a correct input, the scene is reset for the next sample.
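The recording logic of a single MRD trial can be sketched as a scan over the participant's inputs while she advances. This is a minimal sketch under stated assumptions: `samples` is a hypothetical stream of (distance to sign, pressed direction or None) pairs, and the vibration and scene reset are represented only by comments.

```python
from typing import Iterable, Optional, Tuple

DIRECTIONS = ("up", "down", "left", "right")


def run_mrd_trial(samples: Iterable[Tuple[float, Optional[str]]],
                  sign_direction: str) -> Tuple[Optional[float], int]:
    """Return the distance at the first correct input (the measured MRD) and
    the number of wrong inputs before it; (None, n) if no correct input came."""
    if sign_direction not in DIRECTIONS:
        raise ValueError(f"unknown direction: {sign_direction}")
    wrong_inputs = 0
    for distance_m, pressed in samples:
        if pressed is None:
            continue  # no input yet; the participant keeps moving forward
        if pressed == sign_direction:
            return distance_m, wrong_inputs  # correct: record MRD, scene resets
        wrong_inputs += 1  # wrong: the controller vibrates, the trial continues
    return None, wrong_inputs
```

Counting wrong inputs alongside the distance keeps premature guesses visible in the data instead of silently discarding them.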

4.3.2 Walkthroughs

Between the MRD measurements, the participant is presented with a more realistic escape scenario. The user moves through a large building consisting of multiple furnished rooms and corridors with luminaires and escape-route signs, as shown in Fig. 5. For each walkthrough, a different path is signposted with escape-route signs, and the user's task is to follow this path out of the virtual building. We aimed for a high level of realism in our VR environments, using realistic geometry and physically plausible lighting, as described in Sect. 4.4.2. Walkthroughs are performed under different conditions: with clear vision, with weak blur, and with simulated cataract and simulated macular degeneration (both combined with weak blur).

These walkthroughs serve two purposes. First, they provide a break between the numerous recognition distance tests and add variety to the tasks, which makes the experiment more interesting for the participants and keeps them motivated and focused. Second, they allow us to gather more information about the behavior of users in virtual escape scenarios, as we also measure the time participants take for each walkthrough and record their movements, which we plan to evaluate in future work.

4.4 Experiment implementation

4.4.1 Wheelchair simulation

A primary objective of our project is to improve escape-route signage in homes for the elderly, but simulating the navigation of elderly people in VR is a challenge in itself. Elderly people usually move more slowly than younger people and often have to use canes, walkers or wheelchairs. By simulating a wheelchair in VR, we target the most constraining form of movement for elderly people. At the same time, we keep the discrepancy between visual and vestibular motion cues low, while providing continuous movement (which allows measuring MRDs) in arbitrarily large virtual environments. We implemented a wheelchair simulation similar to that of Nybakke et al. [20], but with an HTC Vive, using a form of torso-directed travel [24]. Our setup consists of a swivel office chair with a Vive controller mounted on its back and a 3D model of a wheelchair that users see in VR (see Fig. 6). When the real-world office chair is turned, its rotation is tracked by the Vive controller on its back and translated into a rotation of the user and the virtual wheelchair in the VR environment. With the trigger of the other Vive controller, users control the speed of the forward movement. Typical mechanical wheelchairs have a maximum speed of about 1.8–2.2 m/s, so we restricted the maximum movement speed of our simulated wheelchair to 1.8 m/s. Although turning an office chair feels haptically different from counter-rotating the wheels of a real wheelchair, our simulator is a cheap and easy-to-build emulation that lets participants experience a VR environment from the visual perspective of a person in a wheelchair. Except for one participant, who became motion sick shortly after the start of the experiment and had to abort, none of the participants reported uncomfortable motion sickness or a need to take a break or terminate the experiment prematurely.
Some participants mentioned slight dizziness after the end of the experiment, which is not uncommon after using VR applications. Although our informal interviews already indicate that our wheelchair simulator is a suitable solution for the task at hand, providing continuous movement while avoiding severe motion sickness, we plan to conduct structured interviews in future research to further support this claim.
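The torso-directed travel described above can be sketched as a per-frame update: the yaw of the rear-mounted controller sets the heading, and the trigger sets the forward speed up to the 1.8 m/s cap. This is a hypothetical Python illustration, not the authors' code; the linear trigger-to-speed mapping, the trigger range of [0, 1] and the convention that yaw 0 faces +z are assumptions.

```python
import math
from typing import Tuple

MAX_SPEED_M_S = 1.8  # speed cap used for the simulated wheelchair


def step(position: Tuple[float, float], chair_yaw_rad: float,
         trigger: float, dt: float) -> Tuple[float, float]:
    """Advance the virtual wheelchair by one frame (hypothetical sketch).

    position      -- (x, z) on the floor plane in metres
    chair_yaw_rad -- yaw of the office chair, tracked by the controller on its back
    trigger       -- analog trigger value of the hand-held controller, assumed in [0, 1]
    dt            -- frame time in seconds
    """
    trigger = min(max(trigger, 0.0), 1.0)   # clamp out-of-range input
    speed = trigger * MAX_SPEED_M_S         # assumed linear trigger-to-speed mapping
    x, z = position
    # Torso-directed travel: always move along the chair's facing direction (yaw 0 = +z)
    x += math.sin(chair_yaw_rad) * speed * dt
    z += math.cos(chair_yaw_rad) * speed * dt
    return (x, z)


pos = (0.0, 0.0)
for _ in range(90):                         # one second at 90 frames per second
    pos = step(pos, 0.0, 1.0, 1.0 / 90.0)
print(pos)                                  # approximately (0.0, 1.8)
```

Because the heading comes only from the physical chair, users turn with their whole body, which is what keeps rotational visual and vestibular cues consistent; only the forward translation is purely virtual.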

4.4.2 Realistic environments

Simulating emergency situations in VR places high demands on the quality of the virtual environment, both in terms of modeling and realistic rendering. While the MRD task only requires a simple scene, even there the lighting simulation should be accurate enough to reproduce an illumination of the signs comparable to that specified by international standards and norms. The walkthrough scenario, on the other hand, additionally requires a realistically modeled building. To achieve high realism in both modeling and rendering, we implemented a tool chain consisting of 3D interior design software (pCon.planner [7]), light-planning software (HILITE [31]) and a game engine (Unreal Engine 4 [8]). Interior design software allows us to model rooms with realistic-looking furnishings. After importing these 3D scenes into HILITE, we insert luminaires and render the scenes with physically plausible lighting, using a realistic material model [18] and the many-light global-illumination solution of Luksch et al. [17].

5 Results

The results of our user study comprise data collected from MRD measurements as well as a questionnaire completed by each participant.

5.1 Measured recognition distances and angles

Table 1 shows the average maximum recognition distances measured over all observations for each test, together with the corresponding standard deviations (see Fig. 7 or the supplementary material for a boxplot visualization of these data). Doubling the size of an escape-route sign approximately doubles its average MRD. Increasing the angle between the surface normal of a sign and the viewing direction of a user decreases the MRD. Our data suggest that this decrease is nonlinear, which is consistent with the observations of Xie et al. [33]. However, more measurements at different angles would be necessary to determine the exact nature of this angle-dependent decrease in MRDs and the influence of reduced visual acuity on it.
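The per-test statistics reported in Table 1, and the size-doubling observation, can be reproduced from raw observations along the lines of the following sketch. The grouping key and function names are hypothetical, and the use of the sample standard deviation is an assumption, since the text does not state which estimator was used.

```python
from statistics import mean, stdev
from typing import Dict, List, Tuple

# Hypothetical key for one test: (blur condition, sign height in cm, viewing angle in degrees)
TestKey = Tuple[str, int, int]
Summary = Dict[TestKey, Tuple[float, float]]


def summarize(observations: Dict[TestKey, List[float]]) -> Summary:
    """Return (mean, sample standard deviation) of the MRDs in metres for each test."""
    summary: Summary = {}
    for key, values in observations.items():
        if len(values) < 2:
            raise ValueError(f"need at least two observations for {key}")
        summary[key] = (mean(values), stdev(values))
    return summary


def size_ratio(summary: Summary, blur: str, angle: int) -> float:
    """Ratio of the mean MRD of the 30 cm sign to that of the 15 cm sign."""
    return summary[(blur, 30, angle)][0] / summary[(blur, 15, angle)][0]
```

A `size_ratio` close to 2 for a given blur condition and angle corresponds to the observation that doubling the sign size roughly doubles the average MRD.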

Comparison of MRDs for 15 cm and 30 cm signs at different rotations according to (1) EN 1838 (not taking visual acuity into account), (2) ISO 3864-1 (directly scaled by the calculated visual acuity), which underestimates MRDs, and (3) ISO 3864-1 (calculated for an unknown percentage of normal-sighted people, reducing normal MRDs by 40%), which overestimates MRDs, each compared to (4) our measured results (with simulated reduced visual acuity), which are valid for 85% of our study participants. The distributions of our measurements are depicted as boxplots.

5.2 Outlier detection

To prevent technical errors from compromising our data, we need to find outliers in our measurements and remove them from the dataset. First, we look at the blur factors calculated for each participant during the calibration phase for weak blur (corresponding to a visual angle of 5.0 angular minutes) and strong blur (corresponding to a visual angle of 8.0 angular minutes). The data show one participant with very low blur factors for both weak and strong blur and correspondingly high recognition distances for all measurements, compared to other participants. We assume that the low blur factors were caused by a technical problem and removed all of this participant's data from the dataset. For another participant, the blur factors for weak and strong blur had the same value, which also indicates a technical error during the calibration phase; this participant's data were therefore removed as well. One of our participants had to stop the experiment after the first few measurements due to motion sickness, so we also excluded her data from our analysis.
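The two calibration checks above can be expressed as a simple filter. This is a hypothetical sketch: the paper identified the implausibly low blur factors by comparison with the other participants, so the `low_fraction` threshold relative to the cohort median is an assumption, whereas the identical-factors check follows the text directly.

```python
from statistics import median
from typing import Dict, Set, Tuple


def flag_calibration_errors(blur_factors: Dict[str, Tuple[float, float]],
                            low_fraction: float = 0.5) -> Set[str]:
    """Return IDs of participants whose calibration looks faulty.

    blur_factors -- participant ID -> (weak-blur factor, strong-blur factor)
    low_fraction -- hypothetical threshold: flag factors below this fraction of the median
    """
    weak_median = median(w for w, _ in blur_factors.values())
    strong_median = median(s for _, s in blur_factors.values())
    flagged: Set[str] = set()
    for pid, (weak, strong) in blur_factors.items():
        # Both factors implausibly low compared with the rest of the cohort
        too_low = weak < low_fraction * weak_median and strong < low_fraction * strong_median
        # Weak and strong blur calibrated to the same value
        identical = weak == strong
        if too_low or identical:
            flagged.add(pid)
    return flagged
```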

Although we asked our participants to avoid random guessing during the MRD measurements, some very high values suggest that some participants guessed correctly, producing outliers in the observations. Other outliers arise when participants were inattentive or accidentally pressed the wrong button. Pressing the wrong button causes a short vibration of the controller indicating a wrong input, and the participant then needs to advance further toward the sign until she can recognize the direction and press the correct button. However, if a participant accidentally presses the wrong button without noticing, she might think that she misread the direction, even though only her input was wrong, and might move much closer to the sign than necessary. To exclude individual outlier observations from the dataset, we compute the mean and standard deviation for each test and remove all observations that deviate from the mean by more than 3 standard deviations.
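The 3-standard-deviation rule above amounts to a single-pass filter per test, sketched below. The choice of the population standard deviation and the absence of repeated filtering passes are assumptions; the paper does not specify either.

```python
from statistics import mean, pstdev
from typing import Dict, Hashable, List


def remove_outliers(observations: Dict[Hashable, List[float]],
                    k: float = 3.0) -> Dict[Hashable, List[float]]:
    """Drop observations deviating from their test's mean by more than k standard deviations."""
    cleaned: Dict[Hashable, List[float]] = {}
    for key, values in observations.items():
        if not values:
            cleaned[key] = []
            continue
        m = mean(values)
        sd = pstdev(values)  # population SD (assumption; the paper does not say which SD)
        # Keep every value within k standard deviations; an SD of 0 keeps all values
        cleaned[key] = [x for x in values if abs(x - m) <= k * sd]
    return cleaned
```

With the population standard deviation, no observation can lie more than √(n − 1) standard deviations from its mean (Samuelson's inequality), so for tests with ten or fewer observations this filter cannot remove anything.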

5.3 Validity checks

We performed several tests to validate the correctness of our data. The resulting values of our statistical analyses can be found in the supplementary material.

5.3.1 Learning effect

Each participant performed our MRD tests with only the mild vision impairment introduced by the Vive headset (no blur), with weak blur and with strong blur. After some time (\(\sim\)10 to 15 min) spent navigating through a building in VR, the recognition tests were performed a second time. Using t-tests, we compared the measurements (see Table 1) of the first and second runs. Since all our p-values are above the standard cutoff of \(\alpha = 0.05\), we find no evidence of a learning effect.
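The text does not state which t-test variant was used for the learning-effect comparison. Because the same participants completed both rounds, a paired test is one natural choice; the sketch below computes the paired t statistic under that assumption, using only the standard library. `paired_t` is a hypothetical helper.

```python
import math
from statistics import mean, stdev
from typing import Sequence, Tuple


def paired_t(first: Sequence[float], second: Sequence[float]) -> Tuple[float, int]:
    """Paired t statistic and degrees of freedom for round-1 vs round-2 MRDs.

    Element i of both sequences must belong to the same participant and test.
    """
    if len(first) != len(second) or len(first) < 2:
        raise ValueError("need two equally long samples with at least two pairs")
    diffs = [a - b for a, b in zip(first, second)]
    std_err = stdev(diffs) / math.sqrt(len(diffs))
    if std_err == 0:
        raise ValueError("all differences are identical; t is undefined")
    return mean(diffs) / std_err, len(diffs) - 1
```

For p-values, `scipy.stats.ttest_rel` computes the same paired statistic together with its two-sided p-value.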

5.3.2 Comparison of normal sight and corrected sight

We compared the MRD measurements of people with normal sight to those of people wearing contact lenses or glasses to correct shortsightedness and/or astigmatism, in order to show that there are no significant differences and that all participants perform similarly when calibrated to the same level of visual acuity. We used Welch’s t-test to analyze our data. Note that we refrained from applying any correction for multiple comparisons (like the Bonferroni correction), because this would increase the probability of false negatives and could thus obscure our results by hiding potentially significant values that might indicate a problem with our method. Our analysis of the first run of measurements under weak and strong blur shows a significant difference between normal-sighted people and people with corrected sight for half of the tests with weak blur and for one of the tests with strong blur. However, for the measurements of our second run, the t-tests show no evidence of a significant difference in recognition distance and angle between people with normal sight and people with corrected sight. Therefore, we conclude that there is no systematic error in our system. A table with all p-values and a boxplot visualization of the similarities and differences of the compared distributions are provided in the supplementary material. The 4 (out of 12) t-tests that show significant differences between the compared distributions could be false positives, the consequence of a too small sample size, or the effect of other, yet unknown parameters. Further analyses and experiments are needed to identify the cause of these results in future work.
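Welch's t-test compares two groups without assuming equal variances, which suits the unequal sizes of the normal-sighted and corrected-sight groups. A minimal standard-library sketch of the statistic and the Welch–Satterthwaite degrees of freedom follows; `welch_t` is a hypothetical helper, not the authors' analysis code.

```python
import math
from statistics import mean, variance
from typing import Sequence, Tuple


def welch_t(a: Sequence[float], b: Sequence[float]) -> Tuple[float, float]:
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom."""
    if len(a) < 2 or len(b) < 2:
        raise ValueError("each group needs at least two observations")
    va = variance(a) / len(a)  # squared standard error of mean(a)
    vb = variance(b) / len(b)  # squared standard error of mean(b)
    t = (mean(a) - mean(b)) / math.sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df
```

`scipy.stats.ttest_ind(a, b, equal_var=False)` performs the same test and also returns the p-value.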

5.3.3 Influence of gender or previous VR experience

Statistical testing shows no evidence of a significant difference between the distributions of recognition distances of people with prior VR experience and people without. Similarly, we found no evidence of an influence of gender on performance in our test.

6 Discussion and recommendation for extensions of norms

According to the International Council of Ophthalmology [4], people with normal sight have a minimum recognizable visual angle of 1.25 angular minutes or less. Our weak blur represents a vision impairment corresponding to a minimum recognizable visual angle of 5.0 angular minutes, which is a reduction of visual acuity by a factor of 4. EN 1838:2013-07 [6] states that the maximum recognition distance of an escape-route sign of size 15 cm is 15 m, which according to ISO 3864-1 [12] holds for 85% of all people. Looking at the results of our study, as shown in Fig. 7 (see the supplementary material for numerical values), we observe that a visual acuity reduced by a factor of 4 translates to a reduction of the MRD by a factor of approximately 2.25 for 15 cm signs and 2.27 for 30 cm signs (calculated from the average of both test runs). Our strong blur, corresponding to a minimum recognizable visual angle of 8.0 angular minutes, represents a reduction of visual acuity by a factor of 6.4. The results show that this visual acuity reduces the MRD by a factor of 3.5 (for 15 cm signs) or 3.4 (for 30 cm signs).
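The acuity reduction factors quoted above follow from decimal acuity, which is the reciprocal of the minimum recognizable visual angle in angular minutes:

```latex
V_{\text{normal}} = \frac{1}{1.25} = 0.8, \qquad
V_{\text{weak}} = \frac{1}{5.0} = 0.2, \qquad
V_{\text{strong}} = \frac{1}{8.0} = 0.125

\frac{V_{\text{normal}}}{V_{\text{weak}}} = \frac{5.0}{1.25} = 4, \qquad
\frac{V_{\text{normal}}}{V_{\text{strong}}} = \frac{8.0}{1.25} = 6.4
```

Set against these factors, the measured MRD reductions of about 2.25–2.27 (weak blur) and 3.4–3.5 (strong blur) are clearly smaller than a reduction proportional to visual acuity.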

In future work, we would like to conduct a study with more levels of visual acuity to obtain a more detailed quantification of the influence of vision impairment on the recognizability of escape-route signs. However, our results already suggest that a reduced visual acuity has a significant impact on the MRD of escape-route signs, which differs from the recommendations or assumptions of current norms and standards. EN 1838 provides no guidelines on how to take vision impairments into account, nor on how to consider the dependency of the MRD on the viewing angle. Compared to the informative annex of ISO 3864-1, which assumes a reduction of the MRD by a factor equal to the decimal acuity of the observer, our results show a lower impact on the MRD. Figure 7 shows that ISO 3864-1 underestimates the MRDs recorded during our study, while EN 1838 generally overestimates them. The annex of ISO 3864-1 further states that if the proportion of normal-sighted people is unknown, the distance factor calculated for normal-sighted people for illuminated escape-route signs should be reduced by 40%. As our results show (see Fig. 7), this is insufficient for people whose minimum recognizable visual angle is 5.0 angular minutes or more. Considering that, according to the NEI [19], about half of the US population over the age of 75 suffered from some form of cataract in 2010, and that the total number of cases is expected to double by 2050, it is reasonable to assume that the informative recommendation of ISO 3864-1 (reducing the distance of escape-route signs by 40%) is insufficient. Therefore, we recommend further in-depth studies on the impact of vision impairments on the recognition distance to derive more specific information to be included in norms and standards.
Additionally, a more conservative recommendation for the distance between escape-route signs in places like homes for the elderly, where a high percentage of residents are expected to suffer from vision impairments, may be advisable.

7 Conclusion and future work

In this paper, we have presented the first step toward evaluating and quantifying the effects of vision impairments on recognition distances of escape-route signs, a topic that has received little attention in scientific research so far. We have found that informal recommendations for the placement of escape-route signs are insufficient for buildings with a larger number of residents with vision impairments, and we provide first steps toward adapting international standards and norms. To achieve this, we have introduced a new methodology for conducting user studies on the effects of vision impairments in VR. The key idea is to calibrate all participants to the same (reduced) visual acuity, which makes it much easier to find a suitable number of participants for experiments investigating vision impairments.

There are several avenues for future work in this direction. Many aspects we have already discussed could be studied in more depth: taking more levels of visual acuity into account, studying other symptoms of visual impairment, or investigating why some conditions show differences between participants with corrected and normal sight. While we have taken care to provide a realistic lighting simulation, we do not yet account for environmental conditions specific to emergency situations, like flickering light, smoke and haze. This is especially relevant for more formal studies of the walkthrough settings, where the additional question arises whether a sign is noticed by the user at all (independent of whether its content is recognized). Recently announced eye-tracking VR headsets could help evaluate this question. In the current study, we focused only on unlit signs, while many modern buildings feature internally illuminated emergency signs. We would also like to further investigate the influence of the viewing angle on recognizability, especially at grazing angles. Finally, we believe our general methodology can be used to investigate smart lighting systems in VR that are specifically designed to aid people with impaired vision, since we can provide vision impairment simulations and realistic environments with a physically plausible lighting simulation.

References

Almeida, J.E., Jacob, J.T.P.N., Faria, B.M., Rossetti, R.J., Coelho, A.L.: Serious games for the elicitation of way-finding behaviours in emergency situations. In: 2014 9th Iberian Conference on Information Systems and Technologies (CISTI), pp. 1–7. IEEE (2014)

Ates, H.C., Fiannaca, A., Folmer, E.: Immersive simulation of visual impairments using a wearable see-through display. In: Proceedings of the Ninth International Conference on Tangible, Embedded, and Embodied Interaction, pp. 225–228. ACM (2015)

Chowdhury, T.I., Ferdous, S.M.S., Quarles, J.: Information recall in a virtual reality disability simulation. In: Proceedings of the 23rd ACM Symposium on Virtual Reality Software and Technology, p. 37. ACM (2017)

Colenbrander, A.: Visual Standards: Aspects and Ranges of Vision Loss. http://www.icoph.org/downloads/visualstandardsreport.pdf (2002). Accessed 09 Sept 2017

Cosma, G., Ronchi, E., Nilsson, D.: Way-finding lighting systems for rail tunnel evacuation: a virtual reality experiment with Oculus Rift®. J. Transp. Saf. Secur. 8(sup1), 101–117 (2016)

DIN German Institute for Standardization: Lighting Applications—Emergency Lighting; EN 1838:2013. Standard, DIN German Institute for Standardization, Berlin (2013)

EasternGraphics GmbH: pCon.planner. http://pcon-planner.com/en/. Accessed 04 Sept 2017

Epic Games, Inc: Unreal Engine 4. https://www.unrealengine.com. Accessed 04 Sept 2017

European Committee for Standardization: Graphical Symbols—Safety Colours and Safety Signs—Registered Safety Signs (ISO 7010:2011). Standard, European Committee for Standardization, Brussels, Belgium (2012)

Hogervorst, M., Van Damme, W.: Visualizing visual impairments. Gerontechnology 5(4), 208–221 (2006)

International Organization for Standardization: ISO 8596:2009 Ophthalmic Optics—Visual Acuity Testing—Standard Optotype and Its Presentation. Standard, International Organization for Standardization, Geneva, CH (2009)

International Organization for Standardization: ISO 3864-1:2011 Graphical Symbols—Safety Colours and Safety Signs—Part 1: Design Principles for Safety Signs and Safety Markings. Standard, International Organization for Standardization, Geneva, CH (2011)

Jin, B., Ai, Z., Rasmussen, M.: Simulation of eye disease in virtual reality. In: 27th Annual International Conference of the Engineering in Medicine and Biology Society, 2005. IEEE-EMBS 2005, pp. 5128–5131. IEEE (2005)

Kramida, G.: Resolving the vergence-accommodation conflict in head-mounted displays. IEEE Trans. Vis. Comput. Gr. 22(7), 1912–1931 (2016)

Lewis, J., Brown, D., Cranton, W., Mason, R.: Simulating visual impairments using the Unreal Engine 3 game engine. In: 2011 IEEE 1st International Conference on Serious Games and Applications for Health (SeGAH), pp. 1–8. IEEE (2011)

Lewis, J., Shires, L., Brown, D.: Development of a visual impairment simulator using the Microsoft XNA Framework. In: Proceedings of 9th International Conference on Disability, Virtual Reality & Associated Technologies, Laval, France (2012)

Luksch, C., Tobler, R.F., Habel, R., Schwärzler, M., Wimmer, M.: Fast light-map computation with virtual polygon lights. In: ACM SIGGRAPH Symposium on Interactive 3D Graphics and Games, pp. 87–94 (2013)

Luksch, C., Tobler, R.F., Mühlbacher, T., Schwärzler, M., Wimmer, M.: Real-time rendering of glossy materials with regular sampling. Vis. Comput. 30(6–8), 717–727 (2014)

NEI Office of Science Communications, Public Liaison, and Education: Prevalence of Adult Vision Impairment and Age-Related Eye Diseases in America. https://nei.nih.gov/eyedata/adultvision_usa. Accessed 04 Sept 2017

Nybakke, A., Ramakrishnan, R., Interrante, V.: From virtual to actual mobility: Assessing the benefits of active locomotion through an immersive virtual environment using a motorized wheelchair. In: 2012 IEEE Symposium on 3D User Interfaces (3DUI), pp. 27–30. IEEE (2012)

Snellen, H.: Optotypi ad visum determinandum (letterproeven tot bepaling der gezichtsscherpte; probebuchstaben zur bestimmung der sehschaerfe). Weyers, Utrecht (1862)

Suma, E.A., Bruder, G., Steinicke, F., Krum, D.M., Bolas, M.: A taxonomy for deploying redirection techniques in immersive virtual environments. In: Virtual Reality Short Papers and Posters (VRW), 2012 IEEE, pp. 43–46. IEEE (2012)

Suma, E.A., Clark, S., Krum, D., Finkelstein, S., Bolas, M., Wartell, Z.: Leveraging change blindness for redirection in virtual environments. In: Virtual Reality Conference (VR), 2011 IEEE, pp. 159–166. IEEE (2011)

Suma, E.A., Finkelstein, S.L., Clark, S., Goolkasian, P., Hodges, L.F.: Effects of travel technique and gender on a divided attention task in a virtual environment. In: 2010 IEEE Symposium on 3D User Interfaces (3DUI), pp. 27–34. IEEE (2010)

Suma, E.A., Lipps, Z., Finkelstein, S., Krum, D.M., Bolas, M.: Impossible spaces: maximizing natural walking in virtual environments with self-overlapping architecture. IEEE Trans. Vis. Comput. Gr. 18(4), 555–564 (2012)

Unity Technologies: Unity. https://unity3d.com/. Accessed 04 Sept 2017

Vasylevska, K., Kaufmann, H., Bolas, M., Suma, E.A.: Flexible spaces: dynamic layout generation for infinite walking in virtual environments. In: 2013 IEEE Symposium on 3D User Interfaces (3DUI), pp. 39–42. IEEE (2013)

Vasylevska, K., Podkosova, I., Kaufmann, H.: Walking in virtual reality: flexible spaces and other techniques. In: L. Cocchiarella (ed.) The Visual Language of Technique, pp. 81–97. Springer (2015). https://doi.org/10.1007/978-3-319-05341-7. http://publik.tuwien.ac.at/files/PubDat_238656.pdf

Väyrynen, J., Colley, A., Häkkilä, J.: Head mounted display design tool for simulating visual disabilities. In: Proceedings of the 15th International Conference on Mobile and Ubiquitous Multimedia, pp. 69–73. ACM (2016)

Vincent, G.K., Velkoff, V.A.: The next four decades: the older population in the United States: 2010 to 2050. 1138. US Department of Commerce, Economics and Statistics Administration, US Census Bureau (2010)

VRVis Research Center: HILITE. http://www.vrvis.at/projects/hilite. Accessed 13 Feb 2017

Wood, J., Chaparro, A., Carberry, T., Chu, B.S.: Effect of simulated visual impairment on nighttime driving performance. Optom. Vis. Sci. 87(6), 379–386 (2010)

Xie, H., Filippidis, L., Gwynne, S., Galea, E.R., Blackshields, D., Lawrence, P.J.: Signage legibility distances as a function of observation angle. J. Fire Prot. Eng. 17(1), 41–64 (2007)

Xie, H., et al.: Investigation into the interaction of people with signage systems and its implementation within evacuation models. Ph.D. thesis, University of Greenwich (2011)

Zagar, M., Baggarly, S.: Low vision simulator goggles in pharmacy education. Am. J. Pharm. Educ. 74(5), 83 (2010)

Acknowledgements

Open access funding provided by TU Wien (TUW). We would like to thank the reviewers for their helpful suggestions, and all participants of the user study for their time and patience.

Funding

This work was enabled by the Doctoral College Computational Design, Center for Geometry and Computational Design, TU Wien and the Competence Centre VRVis. VRVis is funded by BMVIT, BMWFW, Styria, SFG and Vienna Business Agency in the scope of COMET—Competence Centers for Excellent Technologies (854174)—which is managed by FFG.


Ethics declarations

Conflict of interest

The authors declared that they have no conflict of interest.

Electronic supplementary material


Supplementary material 2 (mp4 39612 KB)

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Krösl, K., Bauer, D., Schwärzler, M. et al. A VR-based user study on the effects of vision impairments on recognition distances of escape-route signs in buildings. Vis Comput 34, 911–923 (2018). https://doi.org/10.1007/s00371-018-1517-7


DOI: https://doi.org/10.1007/s00371-018-1517-7